Abstract

Autism diagnosis through magnetic resonance imaging (MRI) has advanced significantly with the application of artificial intelligence (AI). This systematic review examines three computational paradigms: radiomics-based machine learning (ML), deep learning (DL), and hybrid models combining both. Across 49 studies (2011–2025), radiomics methods relying on classical classifiers (e.g., SVM, Random Forest) achieved moderate accuracies (61–89%) and offered strong interpretability. DL models, particularly convolutional and recurrent neural networks applied to resting-state functional MRI, reached higher accuracies (up to 98.2%) but were hampered by limited transparency and generalizability. Hybrid models combining handcrafted radiomic features with learned DL representations via dual or fused architectures demonstrated a promising balance of performance and interpretability but remain underexplored. A persistent limitation across all approaches is the lack of external validation and harmonization in multi-site studies, which affects robustness. Future pipelines should include standardized preprocessing, multimodal integration, and explainable AI frameworks to enhance clinical viability. This review underscores the complementary strengths of each methodological approach, with hybrid approaches appearing to be a promising middle ground between improved classification performance and enhanced interpretability.

1. Introduction

Autism is a neurodevelopmental condition characterized by atypical social communication, restricted and repetitive behaviors, focused interests, and distinct sensory processing. Cognitive functioning in autistic individuals varies from intellectual disability to high intelligence. These traits typically emerge in early childhood by 24 months of age and exhibit substantial heterogeneity in both presentation and intensity across individuals [1]. As of 2021, autism ranked among the top 10 non-fatal health conditions of individuals under 20 years of age, with an estimated prevalence of 61.8 million people worldwide [2]. Its complex etiology includes genetic, epigenetic, environmental, and immune factors, with heritability estimates of 60–90% [3,4]. Neuroimaging, especially magnetic resonance imaging (MRI), has become central in research on brain structure and function in autism.

Structural MRI enables detailed assessment of cortical thickness, gray matter volume, and brain morphology, revealing differences in regions associated with social cognition, communication, and sensory processing. These differences affect multiple brain regions such as the hippocampus, basal ganglia, cerebellum, and thalamus rather than being confined to a specific localized area [5]. Other findings include early brain overgrowth and altered gyrification patterns [6,7]. Functional MRI (fMRI), especially resting-state fMRI, reveals disrupted connectivity in brain networks like the default mode network (DMN), with increased local connectivity in children and decreased long-range coherence in adults [8]. Diffusion MRI and DTI show atypical white matter connectivity, especially reductions in long-range tracts affecting the DMN and other cognitive networks, reinforcing the concept that autism involves widespread network-level disruptions rather than isolated regional abnormalities [9]. Magnetic resonance spectroscopy (MRS) studies have reported neurotransmitter imbalances, such as in GABA and glutamate levels [10].

Despite these technological advancements, no single neuroimaging or biological marker has been validated for the definitive diagnosis of autism. Formal diagnosis remains reliant on behavioral criteria outlined in the Diagnostic and Statistical Manual of Mental Disorders, Fifth Edition (DSM-5). The gold-standard diagnostic instruments are the Autism Diagnostic Observation Schedule-2 (ADOS-2), a standardized series of tasks and social interactions designed to observe communication and repetitive behaviors [11], and the Autism Diagnostic Interview-Revised (ADI-R), a structured caregiver interview that gathers developmental history and symptom patterns [12]. Increasing criticism has also been directed toward deficit-based diagnostic frameworks, which are often inconsistent with the neurodiversity paradigm that conceptualizes autism as a natural variation in cognitive functioning [13,14]. The variability in symptomatology and neurobiological underpinnings complicates the development of a universal biomarker and supports the view of autism as a constellation of phenotypes rather than a singular entity [15].

Given these limitations of current diagnostic approaches, which are time-consuming, clinician-dependent, and structured around deficit-based behavioral criteria, there is increasing interest in objective, biologically grounded tools capable of capturing the neurodevelopmental heterogeneity of autism. In this context, quantitative MRI offers a means to detect reproducible brain-based differences in structure, connectivity, and neurochemistry, yet interpreting high-dimensional imaging data remains a challenge. Among the earliest quantitative approaches, voxel-based morphometry (VBM) provided voxel-wise assessments of gray matter volume and has been applied in some machine learning studies for autism. More recently, artificial intelligence (AI) and computer-aided diagnosis (CAD) systems have emerged as promising approaches for detecting subtle, distributed neurobiological patterns from MRI data that may align with phenotypic variability within the autism spectrum [16,17].

A notable advancement in this area is the emergence of radiomics, an application of advanced computational techniques that converts standard MRI scans into large sets of quantitative descriptors that capture tissue texture, shape, and spatial complexity [18]. Traditionally, this involves handcrafted features such as first-order statistics (i.e., intensity-based metrics), shape descriptors, and texture patterns derived from methods like the gray-level co-occurrence matrix (GLCM) and the gray-level run-length matrix (GLRLM) [19]. In more recent publications, the scope of radiomics has expanded to encompass a wider array of quantitative imaging biomarkers. Notably, features extracted from advanced MRI techniques, such as Quantitative Susceptibility Mapping (QSM) for brain iron content [20], Cerebral Blood Flow (CBF) from arterial spin labeling (ASL) [21], and Diffusion Kurtosis Imaging (DKI) metrics (e.g., mean kurtosis, radial kurtosis, and mean diffusivity) [22], are increasingly integrated into radiomics pipelines. While VBM is traditionally a group-wise morphometric tool, several studies have repurposed VBM-derived gray matter maps as high-dimensional features in machine learning pipelines, where they function like radiomic descriptors, particularly in studies aiming to characterize structural brain phenotypes at the voxel level [23]. These imaging biomarkers capture functional activity (CBF), microstructural integrity (diffusion metrics), molecular composition (iron, QSM), and the density and morphological properties of tissue (VBM). They offer complementary information to conventional structural descriptors and reflect a conceptual shift toward comprehensive quantitative imaging.

Machine learning algorithms have proven to be powerful tools for pattern recognition and classification using high-dimensional quantitative imaging data as inputs. In parallel, deep learning (DL) models, such as convolutional neural networks (CNNs) and transformer-based architectures, have enabled automatic feature learning directly from raw neuroimaging data, bypassing the need for handcrafted inputs and reducing manual bias. When applied to radiomic or raw MRI data (structural, diffusion, and/or functional), these models have demonstrated promising performance in distinguishing autistic from non-autistic groups, in some cases identifying subgroups within the spectrum [24]. These methods may reduce diagnostic delays by offering scalable, data-driven, and less subjective diagnostic support. This is an especially critical goal given the importance of early intervention in autism, where timely support can significantly influence developmental trajectories [25].

Related Work

Previous systematic reviews have assessed the role of artificial intelligence in autism classification, yet few have addressed the comparative performance of radiomics and deep learning. For example, the authors of [26] evaluated the diagnostic performance of 134 MRI-based machine and deep learning methods for identifying autism, with a focus on sensitivity, specificity, and heterogeneity across studies using different MRI modalities, but did not analyze ML and DL separately.

Machine learning applications to MRI have been reviewed in [27], which highlighted improved diagnostic performance in structural compared to functional modalities and noted methodological heterogeneity, but focused on machine learning without differentiating between deep learning architectures. Another study [28] focused on deep learning applied only to resting-state fMRI and reported consistently high classification accuracy across architectures. Others [29] have briefly reviewed AI-based imaging tools for early autism detection, referencing both radiomics and DL, but without a direct comparison. That review also addressed the types of imaging markers associated with autism and the relevance of parcellation strategies. The authors of [30] focused on the application of deep learning techniques for the automatic diagnosis and rehabilitation of autism. They also discussed how DL models like CNNs and autoencoders have been used to automatically extract and classify features, often outperforming traditional ML approaches. Finally, the authors of [31] reviewed the use of vision transformer architectures for autism classification from neuroimaging data, highlighting their potential to capture distributed neural patterns more effectively than traditional CNNs.

Despite increasing interest in AI-based neuroimaging for autism, the authors are not aware of prior reviews systematically comparing radiomics, deep learning, and hybrid (radiomics used in deep learning) models in terms of methodology, performance, interpretability, and modality-specific applications. A comparison of these approaches could be beneficial because previous reviews have focused on radiomics and deep learning in isolation without providing a cohesive framework for evaluating their relative strengths and limitations, while hybrid models have not been sufficiently highlighted, possibly limiting progress toward clinically applicable AI tools in autism diagnostics. Furthermore, the distribution of these computational approaches across structural, functional, and diffusion MRI has not been thoroughly mapped. Methodological robustness, cohort size, and clinical relevance are also inconsistently reported across studies. This review attempts to address these gaps by providing a structured, modality-stratified comparison of radiomics-based machine learning and deep learning studies in autism classification. In doing so, it aims to offer insights into their respective capabilities and potential as objective, neuroimaging AI-based diagnostic tools.

2. Materials and Methods

2.1. Selection Criteria

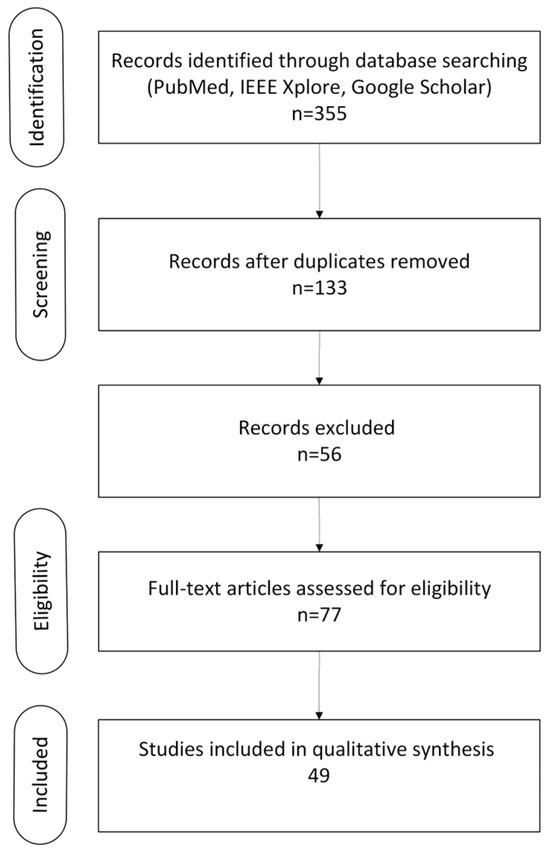

A systematic literature review was conducted across relevant databases (PubMed, IEEE Xplore, and Google Scholar) by two reviewers to identify peer-reviewed articles published between 2011 and 2025. The search aimed to capture studies on MRI-based classification of autism using radiomics, machine learning (ML), and deep learning (DL) methods. The search strategy used a combination of Medical Subject Heading (MeSH) terms and free-text keywords: (“autism” OR “autism spectrum disorder”) AND (“MRI” OR “magnetic resonance imaging”) AND (“classification” OR “diagnosis” OR “prediction”) AND (“radiomics” OR “machine learning” OR “deep learning” OR “artificial intelligence”). Filters were applied to include only original articles, conference proceedings, and systematic reviews and to exclude case reports, non-peer-reviewed material, and non-English publications. Additional references were identified through manual screening of relevant bibliographies. The initial database search identified n = 355 records relevant to “autism + MRI + classification + radiomics/ML/DL”. After removing duplicates (n = 222), n = 133 records remained for title and abstract screening. Of these, n = 56 were excluded based on irrelevance to MRI, autism, or AI methods. The full texts of 77 articles were assessed for eligibility, and 28 studies were excluded due to insufficient methodological detail, lack of quantitative data, or not meeting inclusion criteria, leaving a total of 49 included studies (Figure 1).
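The selection counts reported above can be checked with simple arithmetic. The following minimal sketch recomputes each stage from the numbers given in the text; the variable names are illustrative labels and are not taken from the PRISMA diagram itself.

```python
# Counts as reported in the text; variable names are illustrative labels.
identified = 355
duplicates_removed = 222
excluded_at_screening = 56
excluded_at_full_text = 28

# Each stage is the previous stage minus the records removed at that step.
screened = identified - duplicates_removed
full_text_assessed = screened - excluded_at_screening
included = full_text_assessed - excluded_at_full_text

print(screened, full_text_assessed, included)
```

The three derived values agree with the 133 screened records, 77 full-text articles, and 49 included studies stated in the text.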

Figure 1. PRISMA flow diagram illustrating the study selection process.

2.2. Overview of Included Studies

A total of 49 studies were included in this systematic review, published between 2011 and 2025, all investigating MRI-based classification of autism using radiomics-based machine learning, deep learning, or both. Publicly available datasets, especially ABIDE I and II, either in full or subsets, were frequently used, and several studies also used institutional or locally acquired cohorts. Sample sizes ranged from small single-site cohorts (n < 50) to large multi-center datasets exceeding 1000 participants. The studies included in this review investigated autism across a broad age range, from 2 to 57 years. The majority (~82%) of the studies focused on pediatric and adolescent populations (ages 2–18), reflecting the clinical emphasis on early diagnosis of autism. A smaller proportion (~10–15%) involved adult-only cohorts, while a few studies examined mixed-age or lifespan samples. However, in many cases, age-specific results were not reported, and some used datasets with mixed age groups without stratification. Classification performance varied, with reported accuracies typically ranging between 70% and 95%, depending on the modeling approach, data source, and validation design. The studies employed a diverse range of analytical strategies: 27 used deep learning (DL) architectures, 19 were based on radiomics or traditional machine learning (ML) pipelines, and 3 used hybrid models combining deep learning and radiomics.

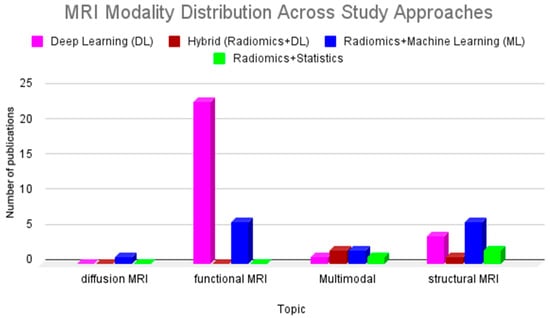

The MRI modalities examined across these studies include structural MRI (sMRI), predominantly T1-weighted sequences; functional MRI (fMRI), primarily resting-state (rs-fMRI); and diffusion-weighted imaging (DWI/DTI), which was less commonly used. Radiomics and handcrafted feature-based studies primarily relied on structural MRI to extract morphometric or texture-based features, while deep learning studies often leveraged functional data to model complex brain connectivity patterns. Figure 2 illustrates the distribution of MRI modalities used across these methodological approaches. Functional MRI was the most used modality in deep learning studies, whereas structural MRI was more frequently employed in radiomics-based approaches. Radiomics combined with statistical analysis appeared earlier, only in structural MRI studies, and remained steady but less dominant in the literature. In contrast, deep learning applied to functional MRI shows a rise in publication frequency after 2018, peaking in 2020. Hybrid models and multimodal pipelines remain limited, with only sparse representation in recent years.

Figure 2. Distribution of MRI modalities across study approaches in the reviewed literature.

Figure 3 presents a heatmap of the temporal distribution of included studies by MRI modality and analytical method. The temporal pattern highlights a shift in focus from handcrafted to automatically learned features as deep learning gained traction, enabled by advances in computing hardware.

Figure 3. Heatmap illustrating the temporal distribution of included studies by modality and methodological approach from 2011 to 2025. The color scale indicates the number of studies per category per year.

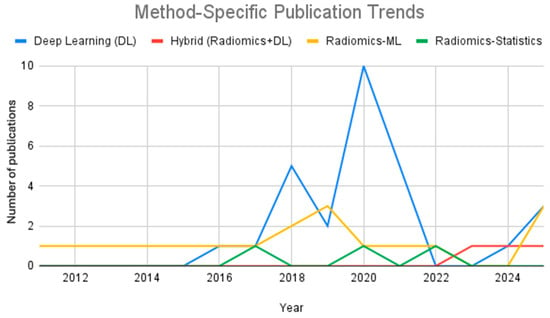

Figure 4 further emphasizes this trend through a line graph of method-specific publication frequency. Deep learning approaches exhibit a sharp rise beginning in 2018, reflecting growing interest in data-driven feature extraction for autism classification. Meanwhile, radiomics machine learning studies maintained a modest but consistent presence. Hybrid approaches emerged only after 2020, indicating a preliminary but evolving direction in the field. Radiomics combined with solely univariate statistical analysis remained marginal throughout the studied period.

Figure 4. Line graph showing method-specific publication trends from 2011 to 2025.

2.2.1. Preprocessing and Modeling Approaches

Preprocessing and feature extraction are critical steps in MRI-based texture analysis for machine learning classification. These steps not only shape the quality of the input data but also influence the reliability and generalizability of the analysis. Studies in this domain typically rely on structural MRI data and differ in how they handle scanner variability, subject demographics, and feature selection strategies. The preprocessing methodology and modeling approach of selected studies from this review are presented below. Table 1 presents the main differences between radiomics, deep learning, and hybrid approaches.

2.2.2. Radiomics-Based Studies

The authors of [32] used ABIDE-I data (1100 subjects, 20 sites) with standard preprocessing and Desikan–Killiany parcellation. A Random Forest model with 3-fold cross-validation achieved accuracies that varied across sites (0.70–0.96), suggesting site effects. No harmonization (e.g., ComBat) was applied, and the lack of normalization across scanners and acquisition protocols was a key limitation. Feature selection used a genetic algorithm. Demographics and scanning parameters showed no correlation with performance.

A diagnostic model has also been developed [33] for classifying autism in children aged 18–37 months using structural MRI-derived features from T1-weighted sequences. Cortical parcellation was based on the Desikan–Killiany atlas, and three types of structural features were extracted: regional cortical thickness, surface area, and volume. Cortical thickness was the most predictive feature, outperforming both volume- and surface-area-based models. The Random Forest (RF) classifier achieved the highest performance (accuracy: 80.9%, AUC: 0.88). Feature importance analysis identified the top 20 cortical regions (e.g., entorhinal cortex, cuneus, and paracentral gyrus), which were used to build the optimal RF model.

In ref. [34], the authors used 64 T1-weighted MRI scans from the ABIDE I database to study radiomic differences in the hippocampus and amygdala. The data consisted of two subsets drawn from different sites to account for age-related and site-related variability. Manual segmentation was performed by two radiologists, ensuring high inter-rater reliability, followed by intensity normalization to 32 gray levels. Gray-level co-occurrence matrices (GLCMs) were derived using four directions and a fixed offset, and eleven Haralick features were extracted for each slice. These features were averaged across slices and directions for a robust representation of each region. Z-score normalization was applied to standardize the feature distributions before classification. Feature selection was conducted using ANOVA with Holm–Bonferroni correction, followed by classification using support vector machines (SVMs) and Random Forest models. The hippocampus features, particularly GLCM correlation, showed strong discriminative power, while amygdala features were less consistent. No harmonization techniques were applied for scanner differences. Despite the small sample size and reliance on manual segmentation, the approach highlights the potential of radiomic texture analysis for autism classification based on hippocampal microstructural patterns.
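The texture step described for [34] can be illustrated with a minimal NumPy sketch: intensities are quantized to 32 gray levels, a symmetric co-occurrence matrix is built for each of four directions, and one Haralick feature (correlation) is averaged across directions. This is an illustration under stated assumptions rather than the authors' implementation: the one-pixel offset, the linear quantization, and all function names are hypothetical, and only one of the eleven features is shown.

```python
import numpy as np

# Four in-plane directions (0, 45, 90, 135 degrees) at an assumed distance of one pixel.
OFFSETS = [(0, 1), (-1, 1), (-1, 0), (-1, -1)]

def quantize(image, levels=32):
    """Linearly rescale intensities to integer gray levels 0..levels-1."""
    image = np.asarray(image, dtype=float)
    lo, hi = image.min(), image.max()
    if hi == lo:
        return np.zeros(image.shape, dtype=int)
    return np.round((image - lo) / (hi - lo) * (levels - 1)).astype(int)

def glcm(q, offset, levels=32):
    """Symmetric, normalized gray-level co-occurrence matrix for one (row, col) offset."""
    dr, dc = offset
    rows, cols = q.shape
    r0, r1 = max(0, -dr), min(rows, rows - dr)
    c0, c1 = max(0, -dc), min(cols, cols - dc)
    src = q[r0:r1, c0:c1].ravel()                      # reference pixels
    dst = q[r0 + dr:r1 + dr, c0 + dc:c1 + dc].ravel()  # their neighbors
    counts = np.zeros((levels, levels))
    np.add.at(counts, (src, dst), 1.0)
    counts += counts.T                                 # count each pair in both orders
    return counts / counts.sum()

def glcm_correlation(p):
    """Haralick correlation of a normalized co-occurrence matrix."""
    n = p.shape[0]
    i, j = np.meshgrid(np.arange(n), np.arange(n), indexing="ij")
    mu_i, mu_j = (i * p).sum(), (j * p).sum()
    sd_i = np.sqrt(((i - mu_i) ** 2 * p).sum())
    sd_j = np.sqrt(((j - mu_j) ** 2 * p).sum())
    if sd_i == 0 or sd_j == 0:
        return float("nan")                            # undefined for a constant region
    return float(((i - mu_i) * (j - mu_j) * p).sum() / (sd_i * sd_j))

def mean_correlation(image, levels=32):
    """Average the correlation feature over the four directions."""
    q = quantize(image, levels)
    return float(np.mean([glcm_correlation(glcm(q, o, levels)) for o in OFFSETS]))

# Toy inputs: a smooth gradient (neighbors similar) versus white noise (neighbors unrelated).
gradient = np.tile(np.arange(64), (64, 1))
noise = np.random.default_rng(0).random((64, 64))
print(mean_correlation(gradient), mean_correlation(noise))
```

On these toy inputs the smooth gradient yields a correlation close to 1 and the noise image a value close to 0, which is the contrast the feature is meant to capture. In a pipeline like the one described, the per-slice values would additionally be averaged across slices and z-scored before classification.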

In another study [35], the same authors investigated whether T1-weighted radiomic texture analysis could capture differences in brain tissue associated with autism, sex, and age. The preprocessing pipeline included standard steps such as skull-stripping, intensity normalization, atlas registration, and segmentation into 31 subcortical brain regions. For texture analysis, a Laplacian-of-Gaussian (LoG) filter was applied at multiple spatial scales to highlight fine, medium, and coarse textures. Within each region, statistical descriptors were calculated (mean, standard deviation, and entropy). These features were compared across groups using statistical tests (permutation testing and corrected p-values), but no machine learning models were used for classification or prediction purposes. Texture differences were noted in the hippocampus, choroid plexus, and cerebellar white matter. Sex- and age-related differences appeared in the amygdala and brain stem. No harmonization was applied.

Ref. [36] used multimodal quantitative MRI techniques to identify structural and physiological brain differences in young children with autism. The study included 60 children with autism and 60 without (ages 2–3). Radiomic features such as mean diffusivity (MD) and kurtosis were extracted from 38 brain regions using the AAL3 atlas. Postprocessing involved image registration, segmentation, and atlas-based parcellation using SPM12 and CAT12. The study found lower iron content (QSM), CBF, and kurtosis-based measures in brain regions linked to language, memory, and cognition. ROC analysis showed that combining QSM, CBF, and DKI features resulted in the highest classification accuracy (AUC = 0.917). However, the study has several limitations, including single-center data and a narrow age range, limiting generalizability. The study’s strengths lie in the integration of radiomics across multiple MRI contrasts.

2.2.3. Deep Learning Studies

In [37], the authors used resting-state fMRI data from ABIDE I, preprocessed with the Configurable Pipeline for the Analysis of Connectomes (CPAC), including motion correction, bandpass filtering, and MNI space normalization. Functional connectivity matrices (19,900 features) were input into a stacked denoising autoencoder and MLP, achieving 70% accuracy (sensitivity 74%, specificity 63%), outperforming SVM and RF. Despite no harmonization, the model showed modest generalization across sites. Identified patterns included anterior–posterior underconnectivity and posterior hyperconnectivity, supporting autism theories. However, a low positive predictive value (4.3%) limits clinical utility.

The authors of [38] trained a stacked autoencoder (SAE) on rs-fMRI from 84 ABIDE-NYU participants (age 6–13). After preprocessing, 20 frequency-enriched independent components (3400 features) were input into an SAE-SoftMax model, achieving 87.2% accuracy (sensitivity 92.9%, specificity 84.3%). Strengths included multi-band features and unsupervised learning; limitations included small, single-site data. The pipeline outperformed SVM and probabilistic neural networks.
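To make the connectivity input described for [37] concrete, the sketch below turns regional time series into a feature vector by computing pairwise Pearson correlations and keeping the upper triangle. The number of regions (200) is an assumption chosen because 200 × 199 / 2 = 19,900 matches the reported feature count; the atlas is not stated in the text, and the time series here are random placeholders.

```python
import numpy as np

def connectivity_features(timeseries):
    """Vectorize functional connectivity.

    timeseries: array of shape (n_timepoints, n_regions).
    Returns the strictly upper-triangular Pearson correlations as a 1D vector.
    """
    corr = np.corrcoef(timeseries, rowvar=False)  # (n_regions, n_regions)
    upper = np.triu_indices(corr.shape[0], k=1)   # skip the diagonal and duplicates
    return corr[upper]

# Placeholder data: 150 timepoints for an assumed 200 regions.
rng = np.random.default_rng(0)
timeseries = rng.standard_normal((150, 200))
features = connectivity_features(timeseries)
print(features.shape)
```

Because the correlation matrix is symmetric with a unit diagonal, only n(n − 1)/2 entries carry information, which is why the vector is much shorter than the full matrix.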

In ref. [39], a deep attention neural network (DANN) integrating rs-fMRI and personal characteristics (PC) data was proposed and evaluated on 809 ABIDE participants. Data were preprocessed with the CPAC pipeline. The architecture fused attention layers and multilayer perceptrons. Accuracy was 73.2% (F1 score: 0.736), outperforming SVM, RF, and standard DNNs. Leave-one-site-out validation confirmed robustness. Inclusion of PC data improved the results. A key limitation was a lack of harmonization across sites.
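Leave-one-site-out validation, as mentioned for [39], holds out all participants from one acquisition site in each fold and trains on the remaining sites. The following minimal sketch shows only the splitting logic; the function name and the toy site labels are illustrative and do not reproduce the authors' code or cohort.

```python
from collections import defaultdict

def leave_one_site_out(sites):
    """Yield (held_out_site, train_indices, test_indices), one fold per distinct site."""
    by_site = defaultdict(list)
    for index, site in enumerate(sites):
        by_site[site].append(index)
    for held_out in sorted(by_site):
        test_idx = by_site[held_out]
        train_idx = sorted(
            i for site, indices in by_site.items() if site != held_out for i in indices
        )
        yield held_out, train_idx, test_idx

# Toy site labels for seven hypothetical participants.
sites = ["NYU", "NYU", "UCLA", "UM", "UCLA", "UM", "NYU"]
folds = list(leave_one_site_out(sites))
for held_out, train_idx, test_idx in folds:
    print(held_out, train_idx, test_idx)
```

Because no participant from the held-out site appears in training, the test score of each fold reflects performance on an unseen scanner and protocol, which ordinary k-fold cross-validation on pooled data does not guarantee.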

In [40], rs-fMRI data from the ABIDE I and II datasets were preprocessed to reduce the inherent dimensionality of 4D volumes and enable their use in deep learning workflows. To achieve this, the temporal dimension was summarized using a series of voxel-wise transformations that preserved full spatial resolution, resulting in 3D feature volumes suitable for 3D convolutional neural networks (3D-CNNs). These features were designed to capture different aspects of local and global brain activity over time. The best configuration achieved a maximum accuracy of approximately 66%. Interestingly, combining all summary measures did not improve classification results, and support vector machine (SVM) models trained on the same features yielded comparable performance, suggesting limited added value from deep learning in this specific temporal transformation framework.
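The idea of collapsing the time axis of a 4D rs-fMRI volume into 3D maps can be sketched as follows. The text does not list the specific summary measures used in [40], so the three shown here (temporal mean, temporal standard deviation, and lag-1 autocorrelation) are illustrative stand-ins, and the random array replaces real data.

```python
import numpy as np

def temporal_summaries(volume_4d):
    """Collapse time (last axis) of an (x, y, z, t) array into voxel-wise 3D maps."""
    mean_map = volume_4d.mean(axis=-1)
    std_map = volume_4d.std(axis=-1)
    centered = volume_4d - mean_map[..., None]
    # Lag-1 autocorrelation per voxel; left at 0 where the signal is constant.
    numerator = (centered[..., 1:] * centered[..., :-1]).sum(axis=-1)
    denominator = (centered ** 2).sum(axis=-1)
    lag1 = np.divide(numerator, denominator,
                     out=np.zeros_like(numerator), where=denominator > 0)
    return {"mean": mean_map, "std": std_map, "lag1_autocorr": lag1}

# Placeholder volume: 4 x 5 x 6 voxels, 30 timepoints, with one constant voxel.
rng = np.random.default_rng(0)
volume = rng.standard_normal((4, 5, 6, 30))
volume[0, 0, 0, :] = 3.0
maps = temporal_summaries(volume)
print({name: m.shape for name, m in maps.items()})
```

Each map keeps the full spatial grid, so it can be passed to a 3D-CNN as a single channel or stacked with the other maps as a multi-channel input.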

The authors of [41] developed and compared 14 deep learning models, including 2D/3D CNNs, spatial transformer networks (STNs), RNNs, and RAMs, using structural MRI data from the ABIDE and Yonsei (YUM) datasets. Preprocessing differed by site (e.g., MNI registration for ABIDE). No harmonization was applied, limiting generalizability. 3D-CNNs performed best on ABIDE and RAMs on YUM. Attention maps highlighted basal ganglia involvement, consistent with repetitive behavior theories. Despite the absence of multimodal data and harmonization, the study’s model diversity and interpretability are notable.

Finally, in [42], the authors analyzed rs-fMRI from ABIDE I and II (2226 samples) using pretrained VGG-16 and ResNet-50. NIfTI images were converted into 2D JPEG axial slices and resized to 224 × 224. ResNet-50 achieved 87.0% accuracy, outperforming VGG-16 (63.4%). A GUI was developed for clinical deployment. However, the lack of 3D context and harmonization limits applicability.

A notable mention of radiomics integration in deep learning models is a recent study by Chaddad et al. [43], who introduced a deep radiomics framework for ASD diagnosis and age prediction that combines convolutional neural network (CNN) feature extraction with principal component analysis (PCA) for feature map dimensionality reduction. T1-weighted structural MRI data from ABIDE I was used across 17 international sites. CNN-derived features were extracted using both 3D-CNNs pretrained on Alzheimer’s datasets and transfer-learned 2D-CNNs. The reported results included AUC values between 79 and 85% at individual sites after leave-one-out cross-validation at the site level and five-fold cross-validation across combined sites. A key methodological strength is the explicit handling of multisite variability: the authors avoided texture bias by analyzing site-specific subcortical regions and brain areas minimally affected by scanner/site heterogeneity. Moreover, the PCA step enhanced interpretability, countering the “black box” nature of CNNs, by reducing CNN feature maps to dominant components reflecting subcortical texture patterns. Limitations include dataset imbalance (male over-representation in ABIDE), reliance on automated Freesurfer parcellation, and lack of external validation beyond ABIDE. Nevertheless, the work highlights how hybrid radiomics–deep learning approaches can improve predictive power while addressing transparency and generalization issues in autism classification.
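The dimensionality-reduction step described for [43] can be illustrated with a minimal PCA sketch based on the singular value decomposition. The input is assumed to be one flattened CNN feature map per subject; the array sizes, the number of components, and the function name are hypothetical, and the random matrix stands in for real network activations.

```python
import numpy as np

def pca_reduce(features, n_components):
    """Project (n_subjects, n_features) data onto its leading principal components.

    Returns the component scores and the fraction of variance each component explains.
    """
    centered = features - features.mean(axis=0)
    u, s, _ = np.linalg.svd(centered, full_matrices=False)
    scores = u[:, :n_components] * s[:n_components]
    explained = (s ** 2) / (s ** 2).sum()
    return scores, explained[:n_components]

# Placeholder: 30 subjects, each with a flattened 512-value feature map.
rng = np.random.default_rng(0)
feature_maps = rng.standard_normal((30, 512))
scores, explained = pca_reduce(feature_maps, n_components=5)
print(scores.shape, explained.round(3))
```

The explained-variance fractions indicate how much of the feature-map variation each retained component summarizes, which is one way such a step can support interpretation of otherwise opaque CNN features.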

2.3. Risk of Bias Assessment

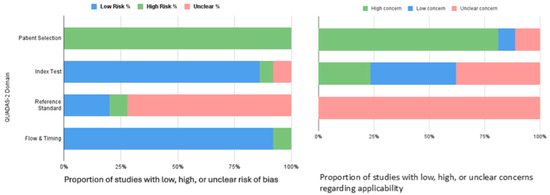

To evaluate the methodological quality and reproducibility of the included studies, the two reviewers conducted a structured risk of bias assessment using the QUADAS-2 criteria (Quality Assessment of Diagnostic Accuracy Studies-2) [44]. This framework is widely applied in systematic reviews of diagnostic accuracy and assesses studies across four domains: Patient Selection (appropriateness of recruitment and inclusion criteria), Index Tests (conduct and interpretation of the diagnostic method under review), Reference Standard (validity of the comparator or gold standard used), and Flow and Timing (completeness of data and consistency in applying the reference standard across participants). Each domain is evaluated for potential risk of bias and, where relevant, concerns regarding applicability to the review question. Studies are categorized as having low, high, or unclear risk in each domain. A study was scored as “unclear” when the relevant information was not provided or was insufficient to permit a judgment. The results of the bias risk and applicability assessments are summarized in Figure 5.

Figure 5. Summary results of QUADAS-2 tool on risk of bias and applicability concerns for the included studies.

Table 1. Comparison of radiomics, deep learning, and hybrid approaches for MRI-based autism classification.


| | Radiomics Studies [33,34,35,36,45,46,47,48,49,50,51,52,53,54,55] | Deep Learning Studies [37,39,40,41,42,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77] | Hybrid Studies [78,79,80] |
|---|---|---|---|
| Feature Type | Handcrafted (texture, shape, intensity) | Learned automatically from image tensors | Combination of handcrafted and learned features |
| Segmentation | Required for ROI definition | Optional (whole-brain or patch-based input) | Usually required for handcrafted features; optional for deep inputs |
| Interpretability | High | Low | Moderate |
| Preprocessing | Strict normalization, harmonization, segmentation | Variable; less dependent on site harmonization | Needed for handcrafted features; DL branches not as sensitive |
| Model Type | Classical ML (e.g., SVM, RF, XGBoost) | CNNs, 3D CNNs, ViTs | Dual or fused architectures (e.g., AE+CNN, GCN+ML) |
| Data Need | Small to moderate datasets | Large datasets, especially for training from scratch | Moderate to large; depends on model complexity and fusion strategy |
| Pipeline | Modular (multi-step) | End-to-end | Semi-modular; parallel or fused branches integrated in final layers |

3. Results

This systematic review included 49 studies conducted between 2011 and 2025, encompassing three major methodological paradigms in MRI-based classification of autism: radiomics-based machine learning, deep learning, and hybrid models integrating elements of both. Among these, 19 studies employed radiomics and traditional machine learning algorithms, 27 utilized deep learning architectures, and 3 applied hybrid approaches that combined handcrafted radiomic features with neural network frameworks. The Autism Brain Imaging Data Exchange (ABIDE I and II) datasets were the most commonly used sources, either in full or partially, with additional studies relying on locally acquired or institutional datasets. Sample sizes ranged considerably, from fewer than 50 to over 1000 participants, with a predominant focus on pediatric populations.

Performance metrics across studies varied by methodology and were typically reported in terms of accuracy; sensitivity; specificity; and, less frequently, AUC (Area Under the Curve). Reported accuracies ranged from moderate (around 70%) to high (>90%), though often within limited or homogeneous datasets.
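The relationship between these metrics can be made explicit with a short sketch. The confusion-matrix counts below are hypothetical and were chosen only to reproduce the sensitivity (74%) and specificity (63%) reported for [37]; the prevalence values are likewise assumptions used to show how the positive predictive value (PPV) depends on the population in which a classifier is applied.

```python
def diagnostic_metrics(tp, fp, tn, fn):
    """Accuracy, sensitivity, and specificity from confusion-matrix counts."""
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }

def ppv_at_prevalence(sensitivity, specificity, prevalence):
    """Positive predictive value via Bayes' rule for a given prevalence."""
    true_positive_rate = sensitivity * prevalence
    false_positive_rate = (1.0 - specificity) * (1.0 - prevalence)
    return true_positive_rate / (true_positive_rate + false_positive_rate)

# Hypothetical balanced sample of 100 autistic and 100 non-autistic participants.
metrics = diagnostic_metrics(tp=74, fp=37, tn=63, fn=26)
ppv_balanced = ppv_at_prevalence(0.74, 0.63, prevalence=0.50)  # case-control setting
ppv_screening = ppv_at_prevalence(0.74, 0.63, prevalence=0.02)  # assumed low prevalence
print(metrics, round(ppv_balanced, 3), round(ppv_screening, 3))
```

With identical sensitivity and specificity, the PPV is about two thirds in a balanced sample but falls below 5% at the assumed 2% prevalence. This illustrates why accuracies obtained on balanced research cohorts do not translate directly into clinical utility.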

Stratified by modality, deep learning models trained on resting-state functional MRI, e.g., [37,39], generally outperformed radiomics-based approaches and demonstrated higher classification accuracies, often exceeding 80% and in some cases reaching 94.7%. These models, including autoencoders and CNNs, were trained end-to-end on connectivity matrices or voxel data. Despite strong internal validation results, deep learning models were rarely validated externally and often lacked harmonization across imaging sites, leading to concerns regarding their robustness and clinical translatability. Structural MRI paired with radiomics features, e.g., [33], also yielded respectable performance (e.g., accuracy of 80.9%), especially when using Random Forest or SVM classifiers. Diffusion MRI-based models, such as [36], reported significance through correlation coefficients and permutation p-values rather than direct classification metrics, reflecting their exploratory nature. When considering datasets, the ABIDE I and II repositories were most frequently used and often associated with higher performance claims, though these were subject to site and scanner variability. Models trained on smaller, single-site cohorts tended to overfit and reported inflated accuracies without external validation. For instance, studies using personalized or statistical pipelines on restricted clinical populations, e.g., [56], often lacked generalization capabilities.

Notably, no single method demonstrated universal superiority across all settings. Deep learning models showed consistently strong results in internal cross-validation, especially when trained on rs-fMRI-derived connectivity matrices, but their ability to generalize was often poor or untested because external validation and demographic diversity were limited. Radiomics approaches, while less accurate on average, offered greater transparency and interpretability, particularly when paired with atlas-based parcellations and explainability frameworks like LIME. Thus, while DL methods tend to outperform in terms of raw metrics, hybrid models integrating multimodal data and interpretable features may offer more balanced clinical applicability.
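One way to approximate external validation inside a multi-site dataset is to hold out one acquisition site at a time. The sketch below is illustrative only: the site labels are hypothetical, and a leave-one-site-out split is not a substitute for testing on a truly independent cohort.

```python
def leave_one_site_out(sites):
    """Yield (held_out_site, train_indices, test_indices) for every site.
    sites: one site label per participant, in participant order."""
    for held_out in sorted(set(sites)):
        train = [i for i, s in enumerate(sites) if s != held_out]
        test = [i for i, s in enumerate(sites) if s == held_out]
        yield held_out, train, test

# Hypothetical site labels for six participants.
sites = ["A", "A", "B", "B", "B", "C"]
splits = list(leave_one_site_out(sites))
for held_out, train, test in splits:
    print(held_out, "train:", train, "test:", test)
```

Because no participant from the held-out site is seen during training, performance on that site reflects scanner and protocol shift more directly than a random k-fold split would.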

Hybrid studies combining radiomic features with deep learning architectures were sparse but reached accuracies of up to 89.47% and showed greater potential for interpretability. These models incorporated dual-branch autoencoders, graph convolutional networks, or feature-level fusion strategies to integrate anatomical and functional data. However, none of the included studies employed Vision Transformers (ViTs) within a radiomics-based hybrid framework. This absence may reflect the current architectural and computational limitations in integrating tabular, handcrafted radiomic features with the token-based, high-dimensional input structure required by ViTs. Structural MRI remained the dominant modality in radiomics studies, while deep learning approaches favored rs-fMRI. Multimodal studies incorporating both data types were limited, and diffusion MRI was comparatively underutilized.
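As a rough illustration of feature-level fusion, the sketch below standardizes a block of handcrafted radiomic features and a block of learned embeddings separately and then concatenates them per participant. This is a generic pattern under stated assumptions, not the method of any reviewed study; all names and values are hypothetical.

```python
import math

def zscore_columns(rows):
    """Standardize each column to zero mean and unit (population) SD.
    Constant columns are left at zero rather than divided by zero."""
    n = len(rows)
    cols = list(zip(*rows))
    means = [sum(c) / n for c in cols]
    sds = [
        math.sqrt(sum((v - m) ** 2 for v in c) / n) or 1.0
        for c, m in zip(cols, means)
    ]
    return [[(v - m) / s for v, m, s in zip(row, means, sds)] for row in rows]

def fuse(radiomic_rows, embedding_rows):
    """Feature-level fusion: scale each block, then concatenate per participant.
    In a real pipeline the scaling statistics would come from training data only."""
    r = zscore_columns(radiomic_rows)
    e = zscore_columns(embedding_rows)
    return [a + b for a, b in zip(r, e)]

# Hypothetical inputs: 3 participants, 2 radiomic features, 3 embedding dimensions.
radiomic = [[10.0, 0.2], [12.0, 0.4], [14.0, 0.6]]
embedding = [[0.1, 0.5, 0.9], [0.3, 0.5, 0.7], [0.5, 0.5, 0.5]]
fused = fuse(radiomic, embedding)
print(len(fused), len(fused[0]))
```

Scaling the two blocks separately keeps the larger-magnitude block from dominating a downstream classifier, although it does nothing about redundancy between the two feature sets.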

Preprocessing strategies across the reviewed literature varied greatly. While most studies employed standard steps such as motion correction, normalization, and segmentation, only a minority applied formal harmonization techniques to correct for site effects in multi-center datasets. The lack of harmonization emerged as a recurrent limitation across both radiomics and deep learning pipelines, contributing to reduced reproducibility and generalizability.
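To illustrate what correcting for site effects means at its simplest, the sketch below removes per-site location and scale from a single feature. It is deliberately naive and is an assumption of this example rather than a method reported by the reviewed studies: unlike dedicated harmonization methods such as ComBat, it does not preserve covariates like diagnosis or age, so real group differences that are confounded with site would be removed as well.

```python
import math
from collections import defaultdict

def site_standardize(values, sites):
    """Z-score one feature within each site, then map back to the pooled mean and SD."""
    n = len(values)
    pooled_mean = sum(values) / n
    pooled_sd = math.sqrt(sum((v - pooled_mean) ** 2 for v in values) / n)
    by_site = defaultdict(list)
    for v, s in zip(values, sites):
        by_site[s].append(v)
    stats = {}
    for s, vs in by_site.items():
        m = sum(vs) / len(vs)
        sd = math.sqrt(sum((v - m) ** 2 for v in vs) / len(vs)) or 1.0
        stats[s] = (m, sd)
    return [
        pooled_mean + pooled_sd * (v - stats[s][0]) / stats[s][1]
        for v, s in zip(values, sites)
    ]

# Hypothetical feature with a large offset between two sites.
values = [1.0, 2.0, 3.0, 11.0, 12.0, 13.0]
sites = ["A", "A", "A", "B", "B", "B"]
harmonized = site_standardize(values, sites)
print([round(v, 3) for v in harmonized])
```

After the adjustment both sites share the same mean and spread, so a classifier can no longer separate participants by scanner offset alone.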

4. Discussion

Several methodological strengths were noted, including the use of large multi-center datasets (ABIDE I/II); cross-validation strategies; and, in some cases, explainable AI techniques. However, the QUADAS-2 assessment revealed recurring risks of bias, particularly in patient selection and flow/timing, reflecting the reliance on convenience samples and incomplete reporting. Deep learning studies frequently reported higher accuracy, whereas radiomics studies offered greater interpretability, underscoring a trade-off between performance and transparency. These findings highlight both encouraging progress and persistent gaps in methodological rigor across the field.

4.1. Limitations

This review is not currently registered in a systematic review database such as PROSPERO (https://www.crd.york.ac.uk/prospero/, accessed on 15 July 2025), and only basic descriptive statistics were included. Each of the three approaches covered in this review (radiomics, deep learning, and hybrid) has distinct methodological limitations (Figure 6) that affect its reliability and clinical translation. The QUADAS-2 assessment (Figure 5) further revealed important sources of bias across the included studies. In particular, the patient selection domain was frequently rated as having high or unclear risk, reflecting the reliance on convenience samples from public repositories such as ABIDE I and II and limited external validation. The flow and timing domain also showed frequent risks due to insufficient reporting of subject inclusion/exclusion processes and handling of missing data. By contrast, the index test and reference standard domains were more often rated as low risk, reflecting clearer descriptions of imaging protocols and diagnostic criteria. Overall, these findings underscore the methodological heterogeneity and reporting gaps that may influence reproducibility.

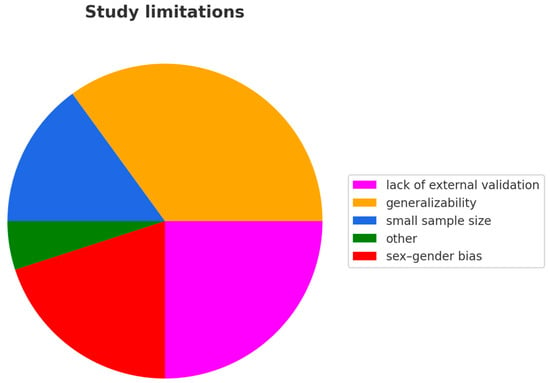

Figure 6. Distribution of methodological limitations across included studies. Generalizability (38.8%) and lack of external validation (24.5%) were the most frequently cited challenges. Small sample size (16.3%), sex–gender bias (14.3%), and other factors (6.1%) were also reported.

Across all methodologies, small sample sizes and class imbalance are recurring issues. Many studies rely on datasets with no more than a few hundred autistic participants, often skewed toward male or high-functioning individuals. This not only compromises the statistical power of classification models but also limits their generalizability to the broader autism spectrum, particularly among underrepresented subgroups (e.g., females, low-IQ individuals). Class imbalance, where the number of autism cases differs substantially from the number of non-autistic cases, can lead to biased model training and inflated accuracy if it is not addressed through techniques such as resampling or class weighting and through balanced evaluation metrics (e.g., AUC or F1 score). Figure 6 illustrates the distribution of methodological limitations across the included studies. Generalizability (38.8%) and lack of external validation (24.5%) were the most frequently cited limitations, followed by small sample size (16.3%), sex–gender bias (14.3%), and other factors (6.1%).
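The effect of class imbalance, and one common remedy, can be sketched in a few lines. The example below computes inverse-frequency class weights, n_samples / (n_classes × class_count), which is the same heuristic scikit-learn applies for `class_weight="balanced"`. The 20/80 split is a hypothetical cohort, not data from the reviewed studies.

```python
from collections import Counter

def balanced_class_weights(labels):
    """Inverse-frequency weights: n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {c: n / (k * m) for c, m in counts.items()}

# Hypothetical imbalanced cohort: 20 autism, 80 control.
labels = ["autism"] * 20 + ["control"] * 80
weights = balanced_class_weights(labels)

# Accuracy of a trivial model that always predicts the majority class.
baseline_accuracy = max(Counter(labels).values()) / len(labels)

print(weights)
print(baseline_accuracy)
```

In this toy cohort a model that never predicts autism already scores 80% accuracy, which is why accuracy alone can look respectable while sensitivity is zero. Weighting errors on the minority class more heavily is one way to counter this during training.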

Furthermore, most included studies reported only accuracy, with relatively few providing complementary metrics such as AUC or F1 score. This limited the possibility of conducting a balanced comparative analysis and underscores the need for more consistent and comprehensive performance reporting in future research.
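The complementary metrics mentioned above are inexpensive to compute alongside accuracy. The following sketch, with hypothetical labels and scores, derives sensitivity, specificity, balanced accuracy, and F1 from the confusion matrix, and computes AUC as the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative one.

```python
def binary_metrics(y_true, y_pred):
    """Confusion-matrix metrics for binary labels (1 = autism, 0 = control).
    Assumes both classes are present in y_true."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    denom = precision + sensitivity
    f1 = 2 * precision * sensitivity / denom if denom else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "balanced_accuracy": (sensitivity + specificity) / 2,
        "f1": f1,
    }

def roc_auc(y_true, scores):
    """AUC via pairwise comparison of positive and negative scores (ties count 0.5)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical example: 2 autism cases, 8 controls.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]
scores = [0.9, 0.4, 0.5, 0.3, 0.2, 0.1, 0.1, 0.2, 0.3, 0.1]
report = binary_metrics(y_true, y_pred)
auc = roc_auc(y_true, scores)
print(report, auc)
```

Here accuracy is 0.90 even though only half of the autism cases are detected. Balanced accuracy (0.75) and F1 (about 0.67) expose the gap that accuracy hides.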

4.2. Challenges

All three approaches mentioned in this study (radiomics, deep learning, and hybrid models) for autism classification using MRI present distinct methodological challenges (Figure 6) that impact their reliability and clinical translation.

In radiomics pipelines, segmentation sensitivity remains a critical challenge. The quality and reproducibility of radiomic features are heavily dependent on accurate brain segmentation, typically performed using tools like FreeSurfer or manual delineation. Variability in segmentation, whether due to differences in MRI acquisition, preprocessing, or atlas definitions, can introduce substantial bias into feature extraction, particularly for small or anatomically variable regions such as the amygdala or cerebellum. Additionally, the lack of standardization in feature definitions and extraction protocols (e.g., differences in texture calculation algorithms or intensity normalization) limits the comparability of radiomics studies across institutions and hinders reproducibility. Despite emerging efforts to define standardized radiomic feature sets (e.g., through the IBSI initiative), inconsistencies in preprocessing pipelines remain a concern.
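A small numerical example shows why extraction settings matter. The sketch below computes a first-order entropy feature from the same toy region twice, changing only the intensity bin width used for discretization. The function name and values are hypothetical and chosen for illustration.

```python
import math
from collections import Counter

def first_order_entropy(intensities, bin_width):
    """Shannon entropy (in bits) of intensities discretized with a fixed bin width."""
    bins = Counter(int(v // bin_width) for v in intensities)
    n = len(intensities)
    return -sum((c / n) * math.log2(c / n) for c in bins.values())

# Hypothetical region of interest with eight voxel intensities.
roi = [0, 1, 2, 3, 4, 5, 6, 7]
entropy_fine = first_order_entropy(roi, 1)    # eight bins, one voxel each
entropy_coarse = first_order_entropy(roi, 4)  # two bins, four voxels each
print(entropy_fine, entropy_coarse)
```

The same voxels yield 3 bits with the fine setting and 1 bit with the coarse one, so two studies reporting "entropy" are not comparable unless the discretization is also reported.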

Deep learning models are well known for their dependence on large datasets. These models require large volumes of labeled data to generalize effectively and avoid overfitting. In autism research, the available datasets, such as ABIDE I and II, are demographically imbalanced and affected by inter-site variability, posing a significant limitation for training robust deep architectures. Furthermore, few of the reviewed studies explicitly employed explainable AI techniques such as SHAP (SHapley Additive exPlanations) [57] or saliency maps. Most deep learning studies lacked a systematic evaluation of feature importance or brain region relevance, limiting their clinical trustworthiness. This weakness makes it difficult for clinicians to validate the biological plausibility of the learned features, particularly when predictions are not accompanied by transparent justifications.

Hybrid approaches remain underexplored in autism MRI classification, with only a small number of eligible studies identified. This scarcity is itself a challenge, as hybrid frameworks, which combine handcrafted radiomic features with deep learning-derived representations, have the potential to address weaknesses inherent in each individual approach. Radiomics contributes interpretability and clinically meaningful biomarkers, while deep learning captures high-dimensional, non-linear patterns that may be overlooked by predefined feature sets. Similar hybrid frameworks in other conditions, such as Parkinson’s disease [58] and lung cancer [59], have shown that combining radiomic features with deep learning representations can outperform either approach alone. These results reinforce the potential of hybrid designs as a promising “middle ground,” offering both predictive accuracy and improved interpretability, and highlight the need for further exploration of such approaches in autism MRI classification.

5. Conclusions

This review synthesizes state-of-the-art AI approaches to MRI-based classification of autism, noting a methodological divergence between radiomics and deep learning approaches. Tables 2–4 list the studies analyzed in this review. Our findings indicate that clinical translation of AI models for autism MRI classification is not yet feasible; the priorities outlined below are required to move the field toward clinical applicability. From a clinical perspective, radiomics-based models are better suited for interpretability and integration into diagnostic workflows, whereas deep learning models may capture more subtle and distributed neurobiological patterns. The lack of standardized pipelines, inconsistent reporting of validation strategies, and limited exploration of multimodal fusion remain critical limitations across the field. Most studies continue to rely heavily on the ABIDE dataset, which, despite its size, suffers from site variability and demographic imbalances that compromise model generalizability. Additionally, few studies report performance metrics beyond accuracy, such as AUC or F1 score, which are essential for evaluating models in imbalanced classification tasks. The few existing hybrid studies favor more traditional DL architectures that are easier to merge with handcrafted features. In contrast, combining ViT embeddings with radiomics would require custom fusion strategies, such as cross-modal transformers, attention-based feature gating, or mutual redundancy filtering, to avoid overlap and overfitting. Without these advanced integration methods, naïve hybridization could dilute model performance and hamper interpretability, possibly discouraging researchers from attempting this approach. To date, no study has combined radiomic features with Vision Transformer-based deep learning in a hybrid framework.

A possible explanation is that Vision Transformers (ViTs) and radiomics operate in different representational domains. ViTs operate on raw imaging data, which is divided into fixed-size 2D or 3D patches that are subsequently encoded as patch tokens. These patches are embedded and passed through a transformer encoder that captures global contextual relationships via self-attention. In contrast, radiomics generates handcrafted, tabular features from predefined regions of interest (ROIs), focusing on intensity, texture, shape, and statistical descriptors. These are not image tensors but feature vectors, which do not naturally integrate with the image-token-based ViT pipeline. Radiomic features are often low-dimensional and tabular, while ViT embeddings are high-dimensional and hierarchical. There is no standard practice for injecting external feature vectors into the transformer architecture, unlike in CNNs, where radiomic features can be concatenated at the fully connected layers. In addition, Vision Transformers require large-scale pretraining to generalize well, whereas most autism-related datasets have limited sample sizes. Researchers therefore usually choose either radiomics-based ML, which is suited to small datasets, or ViTs with transfer learning, but not both, because of architectural and training complexities. In autism imaging, radiomic and ViT-extracted features may also partially overlap in what they represent (e.g., shape, edge structure). Integrating both without handling feature redundancy (via feature selection, attention gating, or cross-modal transformers) could lead to overfitting or reduced performance, discouraging experimental attempts.
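The representational mismatch described above can be made concrete with simple arithmetic. The sketch below counts the patch tokens a ViT would produce for a 3D volume, assuming non-overlapping cubic patches. The volume size, patch size, and radiomic feature count are hypothetical values chosen for illustration.

```python
def vit_token_count(volume_shape, patch_shape):
    """Number of non-overlapping patch tokens, assuming exact divisibility."""
    if any(v % p for v, p in zip(volume_shape, patch_shape)):
        raise ValueError("volume dimensions must be divisible by the patch size")
    count = 1
    for v, p in zip(volume_shape, patch_shape):
        count *= v // p
    return count

def voxels_per_patch(patch_shape):
    """Length of the flattened patch vector that is projected to one token."""
    size = 1
    for p in patch_shape:
        size *= p
    return size

# Hypothetical structural MRI volume and patch size.
volume = (192, 192, 160)
patch = (16, 16, 16)
n_tokens = vit_token_count(volume, patch)
patch_len = voxels_per_patch(patch)

# Hypothetical radiomic descriptor: one flat vector per participant.
n_radiomic_features = 100

print(n_tokens, patch_len, n_radiomic_features)
```

Under these assumptions the transformer sees 1440 spatially ordered tokens of 4096 voxels each, whereas the radiomic descriptor is a single unordered vector of about 100 values with no patch position. Any fusion scheme has to decide where that vector enters the model, which is the design question the paragraph above raises.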

Table 2. Summary of reviewed publications on deep learning approaches.

Table 3. Summary of reviewed publications on hybrid approaches.

Table 4. Summary of reviewed publications on radiomics approaches.

To advance the field, future research should, therefore, prioritize larger and more balanced datasets, standardized evaluation protocols, external validation, and the systematic exploration of hybrid models, which together will shape the next steps toward clinical translation. Also, focus should be placed on developing explainable hybrid models that integrate radiomic features with deep learning and multimodal fusion strategies such as attention mechanisms, graph-based representations, or cross-modal transformers. Harmonization techniques are essential to reducing site effects, alongside external validation and stratified analysis by age and sex. Standardized preprocessing, open benchmarking datasets, and tailored explainability frameworks are also critical. These steps will support the clinical translation of AI tools for autism diagnosis, prognosis, and personalized subtype identification.
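Stratified analysis by age or sex, as recommended above, can be as simple as reporting the chosen metric per subgroup rather than only in aggregate. The following sketch uses hypothetical labels, predictions, and sex codes to show how an acceptable overall accuracy can mask poor performance in an underrepresented group.

```python
from collections import defaultdict

def stratified_accuracy(y_true, y_pred, groups):
    """Accuracy computed separately for each subgroup label."""
    hits, totals = defaultdict(int), defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        totals[g] += 1
        hits[g] += int(t == p)
    return {g: hits[g] / totals[g] for g in totals}

# Hypothetical cohort: five male and three female participants.
y_true = [1, 1, 1, 0, 0, 1, 0, 1]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0]
sex = ["M", "M", "M", "M", "M", "F", "F", "F"]

overall = sum(int(t == p) for t, p in zip(y_true, y_pred)) / len(y_true)
by_sex = stratified_accuracy(y_true, y_pred, sex)
print(overall, by_sex)
```

In this toy case the overall accuracy of 0.75 hides the fact that only one of three female participants is classified correctly.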

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders, 5th ed.; American Psychiatric Association Publishing: Washington, DC, USA, 2022. [Google Scholar] [CrossRef]

- Santomauro, D.; Erskine, H.; Mantilla Herrera, A.; Miller, P.A.; Shadid, J.; Hagins, H.; Addo, I.Y.; Adnani, Q.E.S.; Ahinkorah, B.O.; Ahmed, A.; et al. The global epidemiology and health burden of the autism spectrum: Findings from the Global Burden of Disease Study 2021. Lancet Psychiatry 2025, 12, 111–121. [Google Scholar] [CrossRef]

- Sandin, S.; Lichtenstein, P.; Kuja-Halkola, R.; Hultman, C.; Larsson, H.; Reichenberg, A. The heritability of autism spectrum disorder. J. Am. Med. Assoc. 2017, 318, 1182–1184. [Google Scholar] [CrossRef]

- Modabbernia, A.; Velthorst, E.; Reichenberg, A. Environmental risk factors for autism: An evidence-based review of systematic reviews and meta-analyses. Mol. Autism 2017, 8, 1–16. [Google Scholar] [CrossRef]

- Van Rooij, D.; Anagnostou, E.; Arango, C.; Auzias, G.; Behrmann, M.; Busatto, G.F.; Calderoni, S.; Daly, E.; Deruelle, C.; Di Martino, A.; et al. Cortical and subcortical brain morphometry differences between patients with autism spectrum disorder and healthy individuals across the lifespan: Results from the ENIGMA ASD working group. Am. J. Psychiatry 2018, 175, 359–369. [Google Scholar] [CrossRef]

- Emerson, R.W.; Adams, C.; Nishino, T.; Hazlett, H.C.; Zwaigenbaum, L.; Constantino, J.N.; Shen, M.D.; Swanson, M.R.; Elison, J.T.; Kandala, S.; et al. Functional neuroimaging of high-risk 6-month-old infants predicts a diagnosis of autism at 24 months of age. Sci. Transl. Med. 2017, 9, eaag2882. [Google Scholar] [CrossRef]

- Ecker, C.; Bookheimer, S.Y.; Murphy, D.G.M. Neuroimaging in autism spectrum disorder: Brain structure and function across the lifespan. Lancet Neurol. 2015, 14, 1121–1134. [Google Scholar] [CrossRef]

- Padmanabhan, A.; Lynch, C.J.; Schaer, M.; Menon, V. The Default Mode Network in Autism. Biol. Psychiatry Cogn. Neurosci. Neuroimaging 2017, 2, 476. [Google Scholar] [CrossRef] [PubMed]

- Ameis, S.H.; Catani, M. Altered white matter connectivity as a neural substrate for social impairment in Autism Spectrum Disorder. Cortex 2015, 62, 158–181. [Google Scholar] [CrossRef]

- Horder, J.; Petrinovic, M.M.; Mendez, A.A.; Bruns, A.; Takumi, T.; Spooren, W.; Barker, G.J.; Künnecke, B.; Murphy, D.G. Glutamate and GABA in autism spectrum disorder-a translational magnetic resonance spectroscopy study in man and rodent models. Transl. Psychiatry 2018, 8, 1–11. [Google Scholar] [CrossRef] [PubMed]

- McCrimmon, A.; Rostad, K. Test Review: Autism Diagnostic Observation Schedule, Second Edition (ADOS-2) Manual (Part II): Toddler Module. J. Psychoeduc. Assess. 2014, 32, 88–92. [Google Scholar] [CrossRef]

- Kim, S.H.; Hus, V.; Lord, C. Autism Diagnostic Interview-Revised. In Encyclopedia of Autism Spectrum Disorders; Springer: New York, NY, USA, 2013; pp. 345–349. [Google Scholar] [CrossRef]

- Happé, F.; Frith, U. Annual Research Review: Looking back to look forward—Changes in the concept of autism and implications for future research. J. Child. Psychol. Psychiatry 2020, 61, 218–232. [Google Scholar] [CrossRef]

- Kapp, S.K.; Gillespie-Lynch, K.; Sherman, L.E.; Hutman, T. Deficit, difference, or both? Autism and neurodiversity. Dev. Psychol. 2013, 49, 59–71. [Google Scholar] [CrossRef] [PubMed]

- Klin, A. Biomarkers in Autism Spectrum Disorder: Challenges, Advances, and the Need for Biomarkers of Relevance to Public Health. Focus J. Life Long. Learn. Psychiatry 2018, 16, 135. [Google Scholar] [CrossRef]

- Arbabshirani, M.R.; Plis, S.; Sui, J.; Calhoun, V.D. Single subject prediction of brain disorders in neuroimaging: Promises and pitfalls. Neuroimage 2017, 145 Pt. B, 137–165. [Google Scholar] [CrossRef]

- Bone, D.; Goodwin, M.S.; Black, M.P.; Lee, C.C.; Audhkhasi, K.; Narayanan, S. Applying Machine Learning to Facilitate Autism Diagnostics: Pitfalls and Promises. J. Autism Dev. Disord. 2015, 45, 1121–1136. [Google Scholar] [CrossRef]

- Lambin, P.; Rios-Velazquez, E.; Leijenaar, R.; Carvalho, S.; van Stiphout, R.G.P.M.; Granton, P.; Zegers, C.M.L.; Gillies, R.; Boellard, R.; Dekker, A.; et al. Radiomics: Extracting more information from medical images using advanced feature analysis. Eur. J. Cancer 2012, 48, 441–446. [Google Scholar] [CrossRef]

- Aerts, H.J.W.L.; Rios Velazquez, E.; Leijenaar, R.T.H.; Parmar, C.; Grossmann, P.; Carvalho, S.; Bussink, J.; Monshouwer, R.; Haibe-Kains, B.; Rietveld, D.; et al. Decoding tumour phenotype by noninvasive imaging using a quantitative radiomics approach. Nat. Commun. 2014, 5, 1–9. [Google Scholar] [CrossRef]

- Xiao, B.; He, N.; Wang, Q.; Cheng, Z.; Jiao, Y.; Haacke, E.M.; Yan, F.; Shi, F. Quantitative susceptibility mapping based hybrid feature extraction for diagnosis of Parkinson’s disease. Neuroimage Clin. 2019, 24, 102070. [Google Scholar] [CrossRef]

- Hashido, T.; Saito, S.; Ishida, T. A radiomics-based comparative study on arterial spin labeling and dynamic susceptibility contrast perfusion-weighted imaging in gliomas. Sci. Rep. 2020, 10, 1–10. [Google Scholar] [CrossRef] [PubMed]

- Su, C.; Chen, X.; Liu, C.; Li, S.; Jiang, J.; Qin, Y.; Zhang, S. T2-FLAIR, DWI and DKI radiomics satisfactorily predicts histological grade and Ki-67 proliferation index in gliomas. Am. J. Transl. Res. 2021, 13, 9182. Available online: https://pmc.ncbi.nlm.nih.gov/articles/PMC8430185/ (accessed on 23 June 2025). [PubMed]

- Tsai, M.-L.; Hsieh, K.L.-C.; Liu, Y.-L.; Yang, Y.-S.; Chang, H.; Wong, T.-T.; Peng, S.-J. Morphometric and radiomics analysis toward the prediction of epilepsy associated with supratentorial low-grade glioma in children. Cancer Imaging 2025, 25, 63. [Google Scholar] [CrossRef]

- Singh, A.P.; Jain, V.S.; Yu, J.P.J. Diffusion radiomics for subtyping and clustering in autism spectrum disorder: A preclinical study. Magn. Reson. Imaging 2022, 96, 116. [Google Scholar] [CrossRef] [PubMed]

- Zwaigenbaum, L.; Bauman, M.L.; Choueiri, R.; Kasari, C.; Carter, A.; Granpeesheh, D.; Mailloux, Z.; Smith Roley, S.; Wagner, S.; Fein, D.; et al. Early Intervention for Children With Autism Spectrum Disorder Under 3 Years of Age: Recommendations for Practice and Research. Pediatrics 2015, 136 (Suppl. 1), S60–S81. [Google Scholar] [CrossRef] [PubMed]

- Schielen, S.J.C.; Pilmeyer, J.; Aldenkamp, A.P.; Zinger, S. The diagnosis of ASD with MRI: A systematic review and meta-analysis. Transl. Psychiatry 2024, 14, 1–11. [Google Scholar] [CrossRef]

- Moon, S.J.; Hwang, J.; Kana, R.; Torous, J.; Kim, J.W. Accuracy of machine learning algorithms for the diagnosis of autism spectrum disorder: Systematic review and meta-analysis of brain magnetic resonance imaging studies. JMIR Ment. Health 2019, 6, e14108. [Google Scholar] [CrossRef] [PubMed]

- Huda, S.; Khan, D.M.; Masroor, K.; Warda; Rashid, A.; Shabbir, M. Advancements in automated diagnosis of autism spectrum disorder through deep learning and resting-state functional mri biomarkers: A systematic review. Cogn. Neurodyn. 2024, 18, 3585–3601. [Google Scholar] [CrossRef]

- Abdelrahim, M.; Khudri, M.; Elnakib, A.; Shehata, M.; Weafer, K.; Khalil, A.; Saleh, G.A.; Batouty, N.M.; Ghazal, M.; Contractor, S.; et al. AI-based non-invasive imaging technologies for early autism spectrum disorder diagnosis: A short review and future directions. Artif. Intell. Med. 2025, 161, 103074. [Google Scholar] [CrossRef]

- Khodatars, M.; Shoeibi, A.; Sadeghi, D.; Ghaasemi, N.; Jafari, M.; Moridian, P.; Khadem, A.; Alizadehsani, R.; Zare, A.; Kong, Y.; et al. Deep learning for neuroimaging-based diagnosis and rehabilitation of Autism Spectrum Disorder: A review. Comput. Biol. Med. 2021, 139, 104949. [Google Scholar] [CrossRef]

- Alharthi, A.G.; Alzahrani, S.M. Do it the transformer way: A comprehensive review of brain and vision transformers for autism spectrum disorder diagnosis and classification. Comput. Biol. Med. 2023, 167, 107667. [Google Scholar] [CrossRef]

- Ma, R.; Huang, Y.; Pan, Y.; Wang, Y.; Wei, Y. Meta-data Study in Autism Spectrum Disorder Classification Based on Structural MRI. In Proceedings of the 15th ACM International Conference on Bioinformatics, Computational Biology and Health Informatics, Shenzhen, China, 22–25 November 2024; p. 1. [Google Scholar] [CrossRef]

- Xiao, X.; Fang, H.; Wu, J.; Xiao, C.; Xiao, T.; Qian, L.; Liang, F.; Xiao, Z.; Chu, K.K.; Ke, X. Diagnostic model generated by MRI-derived brain features in toddlers with autism spectrum disorder. Autism Res. 2015, 10, 620–630. [Google Scholar] [CrossRef]

- Chaddad, A.; Desrosiers, C.; Hassan, L.; Tanougast, C. Hippocampus and amygdala radiomic biomarkers for the study of autism spectrum disorder. BMC Neurosci. 2017, 18, 52. [Google Scholar] [CrossRef]

- Chaddad, A.; Desrosiers, C.; Toews, M. Multi-scale radiomic analysis of sub-cortical regions in MRI related to autism, gender and age. Sci. Rep. 2017, 7, 1–17. [Google Scholar] [CrossRef] [PubMed]

- Tang, S.; Nie, L.; Liu, X.; Chen, Z.; Zhou, Y.; Pan, Z.; He, L. Application of Quantitative Magnetic Resonance Imaging in the Diagnosis of Autism in Children. Front. Med. 2022, 9, 818404. [Google Scholar] [CrossRef] [PubMed]

- Heinsfeld, A.S.; Franco, A.R.; Craddock, R.C.; Buchweitz, A.; Meneguzzi, F. Identification of autism spectrum disorder using deep learning and the ABIDE dataset. Neuroimage Clin. 2018, 17, 16–23. [Google Scholar] [CrossRef] [PubMed]

- Wang, C.; Xiao, Z.; Wang, B.; Wu, J. Identification of Autism Based on SVM-RFE and Stacked Sparse Auto-Encoder. IEEE Access 2019, 7, 118030–118036. [Google Scholar] [CrossRef]

- Niu, K.; Guo, J.; Pan, Y.; Gao, X.; Peng, X.; Li, N.; Li, H. Multichannel Deep Attention Neural Networks for the Classification of Autism Spectrum Disorder Using Neuroimaging and Personal Characteristic Data. Complexity 2020, 2020, 1357853. [Google Scholar] [CrossRef]

- Thomas, R.M.; Gallo, S.; Cerliani, L.; Zhutovsky, P.; El-Gazzar, A.; van Wingen, G. Classifying Autism Spectrum Disorder Using the Temporal Statistics of Resting-State Functional MRI Data With 3D Convolutional Neural Networks. Front. Psychiatry 2020, 11, 440. [Google Scholar] [CrossRef]

- Ke, F.; Choi, S.; Kang, Y.H.; Cheon, K.A.; Lee, S.W. Exploring the Structural and Strategic Bases of Autism Spectrum Disorders with Deep Learning. IEEE Access 2020, 8, 153341–153352. [Google Scholar] [CrossRef]

- Husna, R.N.S.; Syafeeza, A.R.; Hamid, N.A.; Wong, Y.C.; Raihan, R.A. Functional magnetic resonance imaging for autism spectrum disorder detection using deep learning. J. Teknol. 2021, 83, 45–52. [Google Scholar] [CrossRef]

- Chaddad, A. Deep Radiomics for Autism Diagnosis and Age Prediction. IEEE Trans. Hum. Mach. Syst. 2025, 55, 144–154. [Google Scholar] [CrossRef]

- Whiting, P.F.; Rutjes, A.W.S.; Westwood, M.E.; Mallett, S.; Deeks, J.J.; Reitsma, J.B.; Leeflang, M.M.G.; Sterne, J.A.C.; Bossuyt, P.M.M. QUADAS-2: A Revised Tool for the Quality Assessment of Diagnostic Accuracy Studies Evaluation of QUADAS, a tool for the quality assessment of diagnostic accuracy studies. Ann. Intern. Med. 2011, 155, 529–536. [Google Scholar] [CrossRef]

- Zheng, Q.; Nan, P.; Cui, Y.; Li, L. ConnectomeAE: Multimodal brain connectome-based dual-branch autoencoder and its application in the diagnosis of brain diseases. Comput. Methods Programs Biomed. 2025, 267, 108801. [Google Scholar] [CrossRef]

- Reiter, M.A.; Jahedi, A.; Fredo, A.R.J.; Fishman, I.; Bailey, B.; Müller, R.A. Performance of machine learning classification models of autism using resting-state fMRI is contingent on sample heterogeneity. Neural Comput. Appl. 2020, 33, 3299. [Google Scholar] [CrossRef] [PubMed]

- Anderson, J.S.; Nielsen, J.A.; Froehlich, A.L.; DuBray, M.B.; Druzgal, T.J.; Cariello, A.N.; Cooperrider, J.R.; Zielinski, B.A. Functional connectivity magnetic resonance imaging classification of autism. Brain 2011, 134, 3739. [Google Scholar] [CrossRef]

- Plitt, M.; Barnes, K.A.; Martin, A. Functional connectivity classification of autism identifies highly predictive brain features but falls short of biomarker standards. Neuroimage Clin. 2014, 7, 359. [Google Scholar] [CrossRef]

- Zhang, F.; Savadjiev, P.; Cai, W.; Song, Y.; Rathi, Y.; Tunç, B.; Parker, D.; Kapur, T.; Schultz, R.T.; Makris, N.; et al. Whole brain white matter connectivity analysis using machine learning: An application to autism. Neuroimage 2018, 172, 826–837. [Google Scholar] [CrossRef]

- Soussia, M.; Rekik, I. Unsupervised Manifold Learning Using High-Order Morphological Brain Networks Derived From T1-w MRI for Autism Diagnosis. Front. Neuroinform 2018, 12, 70. [Google Scholar] [CrossRef]

- Dekhil, O.; Mohamed, A.; El-Nakieb, Y.; Shalaby, A.; Soliman, A.; Switala, A.; Ali, M.; Ghazal, M.; Hajjdiab, H.; Casanova, M.F.; et al. A Personalized Autism Diagnosis CAD System Using a Fusion of Structural MRI and Resting-State Functional MRI Data. Front. Psychiatry 2019, 10, 392. [Google Scholar] [CrossRef] [PubMed]

- Spera, G.; Retico, A.; Bosco, P.; Ferrari, E.; Palumbo, L.; Oliva, P.; Muratori, F.; Calderoni, S. Evaluation of altered functional connections in male children with autism spectrum disorders on multiple-site data optimized with machine learning. Front. Psychiatry 2019, 10, 620. [Google Scholar] [CrossRef] [PubMed]

- Kazeminejad, A.; Sotero, R.C. Topological properties of resting-state FMRI functional networks improve machine learning-based autism classification. Front. Neurosci. 2019, 13, 414728. [Google Scholar] [CrossRef]

- Chaitra, N.; Vijaya, P.A.; Deshpande, G. Diagnostic prediction of autism spectrum disorder using complex network measures in a machine learning framework. Biomed. Signal Process Control 2020, 62, 102099. [Google Scholar] [CrossRef]

- Squarcina, L.; Nosari, G.; Marin, R.; Castellani, U.; Bellani, M.; Bonivento, C.; Fabbro, F.; Molteni, M.; Brambilla, P. Automatic classification of autism spectrum disorder in children using cortical thickness and support vector machine. Brain Behav. 2021, 11, e2238. [Google Scholar] [CrossRef]

- Sarovic, D.; Hadjikhani, N.; Schneiderman, J.; Lundström, S.; Gillberg, C. Autism classified by magnetic resonance imaging: A pilot study of a potential diagnostic tool. Int. J. Methods Psychiatr. Res. 2020, 29, 1–18. [Google Scholar] [CrossRef]

- Lundberg, S.M.; Lee, S.-I. A Unified Approach to Interpreting Model Predictions. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017. [Google Scholar] [CrossRef]

- Chen, H.; Liu, X.; Luo, X.; Fu, J.; Zhou, K.; Wang, N.; Li, Y.; Geng, D. An automated hybrid approach via deep learning and radiomics focused on the midbrain and substantia nigra to detect early-stage Parkinson’s disease. Front. Aging Neurosci. 2024, 16, 1397896. [Google Scholar] [CrossRef]

- Kim, S.; Lim, J.H.; Kim, C.-H.; Roh, J.; You, S.; Choi, J.-S.; Lim, J.H.; Kim, L.; Chang, J.W.; Park, D.; et al. Deep learning–radiomics integrated noninvasive detection of epidermal growth factor receptor mutations in non-small cell lung cancer patients. Sci. Rep. 2024, 14, 1–8. [Google Scholar] [CrossRef] [PubMed]

- Yahata, N.; Morimoto, J.; Hashimoto, R.; Lisi, G.; Shibata, K.; Kawakubo, Y.; Kuwabara, H.; Kuroda, M.; Yamada, T.; Megumi, F.; et al. A small number of abnormal brain connections predicts adult autism spectrum disorder. Nat. Commun. 2016, 7, 11254. [Google Scholar] [CrossRef] [PubMed]

- Jahani, A.; Jahani, I.; Khadem, A.; Braden, B.B.; Delrobaei, M.; MacIntosh, B.J. Twinned neuroimaging analysis contributes to improving the classification of young people with autism spectrum disorder. Sci. Rep. 2024, 14, 1–10. [Google Scholar] [CrossRef]

- Abraham, A.; Milham, M.P.; Di Martino, A.; Craddock, R.C.; Samaras, D.; Thirion, B.; Varoquaux, G. Deriving reproducible biomarkers from multi-site resting-state data: An Autism-based example. Neuroimage 2017, 147, 736–745. [Google Scholar] [CrossRef] [PubMed]

- Zhao, F.; Zhang, H.; Rekik, I.; An, Z.; Shen, D. Diagnosis of Autism Spectrum Disorders Using Multi-Level High-Order Functional Networks Derived From Resting-State Functional MRI. Front. Hum. Neurosci. 2018, 12, 184. [Google Scholar] [CrossRef]

- Xiao, Z.; Wang, C.; Jia, N.; Wu, J. SAE-based classification of school-aged children with autism spectrum disorders using functional magnetic resonance imaging. Multimed. Tools Appl. 2018, 77, 22809–22820. [Google Scholar] [CrossRef]

- Wang, Z.; Peng, D.; Shang, Y.; Gao, J. Autistic Spectrum Disorder Detection and Structural Biomarker Identification Using Self-Attention Model and Individual-Level Morphological Covariance Brain Networks. Front. Neurosci. 2021, 15, 756868. [Google Scholar] [CrossRef] [PubMed]

- Li, H.; Parikh, N.A.; He, L. A novel transfer learning approach to enhance deep neural network classification of brain functional connectomes. Front. Neurosci. 2018, 12, 491. [Google Scholar] [CrossRef] [PubMed]

- Li, X.; Dvornek, N.C.; Zhou, Y.; Zhuang, J.; Ventola, P.; Duncan, J.S. Efficient Interpretation of Deep Learning Models Using Graph Structure and Cooperative Game Theory: Application to ASD Biomarker Discovery. Inf. Process. Med. Imaging 2018, 11492, 718–730. [Google Scholar] [CrossRef]

- Wang, C.; Xiao, Z.; Wu, J. Functional connectivity-based classification of autism and control using SVM-RFECV on rs-fMRI data. Phys. Medica 2019, 65, 99–105. [Google Scholar] [CrossRef]

- Yang, X.; Islam, M.S.; Khaled, A.M.A. Functional connectivity magnetic resonance imaging classification of autism spectrum disorder using the multisite ABIDE dataset. In Proceedings of the 2019 IEEE EMBS International Conference on Biomedical and Health Informatics, BHI 2019—Proceedings, Chicago, IL, USA, 19–22 May 2019. [Google Scholar] [CrossRef]

- Sherkatghanad, Z.; Akhondzadeh, M.; Salari, S.; Zomorodi-Moghadam, M.; Abdar, M.; Acharya, U.R.; Khosrowabadi, R.; Salari, V. Automated Detection of Autism Spectrum Disorder Using a Convolutional Neural Network. Front. Neurosci. 2020, 13, 1325. [Google Scholar] [CrossRef]

- Sewani, H.; Kashef, R. An Autoencoder-Based Deep Learning Classifier for Efficient Diagnosis of Autism. Children 2020, 7, 182. [Google Scholar] [CrossRef]

- Leming, M.; Górriz, J.M.; Suckling, J. Ensemble Deep Learning on Large, Mixed-Site fMRI Datasets in Autism and Other Tasks. Int. J. Neural Syst. 2020, 30, 2050012. [Google Scholar] [CrossRef] [PubMed]

- Rakić, M.; Cabezas, M.; Kushibar, K.; Oliver, A.; Lladó, X. Improving the detection of autism spectrum disorder by combining structural and functional MRI information. Neuroimage Clin. 2020, 25, 102181.

- Ahammed, M.S.; Niu, S.; Ahmed, M.R.; Dong, J.; Gao, X.; Chen, Y. DarkASDNet: Classification of ASD on Functional MRI Using Deep Neural Network. Front. Neuroinform. 2021, 15, 635657.

- Gao, J.; Chen, M.; Li, Y.; Gao, Y.; Li, Y.; Cai, S.; Wang, J. Multisite Autism Spectrum Disorder Classification Using Convolutional Neural Network Classifier and Individual Morphological Brain Networks. Front. Neurosci. 2021, 14, 629630.

- Almuqhim, F.; Saeed, F. ASD-SAENet: A Sparse Autoencoder, and Deep-Neural Network Model for Detecting Autism Spectrum Disorder (ASD) Using fMRI Data. Front. Comput. Neurosci. 2021, 15, 654315.

- Leming, M.J.; Baron-Cohen, S.; Suckling, J. Single-participant structural similarity matrices lead to greater accuracy in classification of participants than function in autism in MRI. Mol. Autism 2021, 12, 34.

- Jung, W.; Jeon, E.; Kang, E.; Suk, H.I. EAG-RS: A Novel Explainability-guided ROI-Selection Framework for ASD Diagnosis via Inter-regional Relation Learning. IEEE Trans. Med. Imaging 2023, 43, 1400–1411.

- Vidya, S.; Gupta, K.; Aly, A.; Wills, A.; Ifeachor, E.; Shankar, R. Explainable AI for Autism Diagnosis: Identifying Critical Brain Regions Using fMRI Data. 2025. Available online: https://arxiv.org/pdf/2409.15374 (accessed on 20 June 2025).

- Khan, K.; Katarya, R. MCBERT: A multi-modal framework for the diagnosis of autism spectrum disorder. Biol. Psychol. 2025, 194, 108976.

- Ashraf, A.; Zhao, Q.; Bangyal, W.H.; Raza, M.; Iqbal, M. Female autism categorization using CNN based NeuroNet57 and ant colony optimization. Comput. Biol. Med. 2025, 189, 109926.

- Manikantan, K.; Jaganathan, S. A Model for Diagnosing Autism Patients Using Spatial and Statistical Measures Using rs-fMRI and sMRI by Adopting Graphical Neural Networks. Diagnostics 2023, 13, 1143.

- Song, J.; Chen, Y.; Yao, Y.; Chen, Z.; Guo, R.; Yang, L.; Sui, X.; Wang, Q.; Li, X.; Cao, A.; et al. Combining Radiomics and Machine Learning Approaches for Objective ASD Diagnosis: Verifying White Matter Associations with ASD. 2024. Available online: https://arxiv.org/abs/2405.16248v1 (accessed on 31 March 2025).

- Ali, M.T.; ElNakieb, Y.; Elnakib, A.; Shalaby, A.; Mahmoud, A.; Ghazal, M.; Yousaf, J.; Abu Khalifeh, H.; Casanova, M.; Barnes, G.; et al. The Role of Structure MRI in Diagnosing Autism. Diagnostics 2022, 12, 165.

- Dong, Y.; Batalle, D.; Deprez, M. A Framework for Comparison and Interpretation of Machine Learning Classifiers to Predict Autism on the ABIDE Dataset. Hum. Brain Mapp. 2025, 46, e70190.

- Raj, A.; Ratnaik, R.; Sengar, S.S.; Fredo, A.R.J. Characterizing ASD Subtypes Using Morphological Features from sMRI with Unsupervised Learning. Stud. Health Technol. Inf. 2025, 327, 1403–1407.

- He, C.; Cortes, J.; Ding, Y.; Shan, X.; Zou, M.; Chen, H.; Chen, H.; Wang, X.; Duan, X. Combining functional, structural, and morphological networks for multimodal classification of developing autistic brains. Brain Imaging Behav. 2025, 1–13.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).