1. Introduction

The accelerating development of renewable generation has brought new challenges to the secure and economical operation of power systems [1,2], because its power output depends on fluctuating meteorological conditions such as wind speed and solar radiation [2,3,4].

In order to capture the uncertainties of renewable generation, it is crucial to establish the probabilistic model of actual power output beyond deterministic forecast values [5]. As a powerful probability density estimator, the Gaussian Mixture Model (GMM) is characterized by its outstanding flexibility to fit arbitrary multidimensional correlated random variables precisely [6]. Thus, GMM has been successfully applied to modeling spatial-temporal probabilistic characteristics of uncertain renewable generation [7,8].

Due to its favorable properties for chance-constrained programming, the GMM-based probabilistic model of renewable generation is also widely employed in the uncertainty-aware operation of power systems [9,10]. It serves as a robust tool for decision-making under uncertainty across a range of applications. For example, in unit commitment, the GMM helps optimize the day-ahead scheduling of generating units by probabilistically characterizing wind and solar power output [11]. Similarly, it is instrumental in economic dispatch [12,13,14,15], where it enables a more precise trade-off between operational costs and risks. Specifically, some studies use it to capture forecast errors [12] or embed it within a chance-constrained framework to enhance security [13], while others apply GMM to multi-area problems to efficiently handle uncertainty across interconnected grids [14,15]. Beyond this, the GMM proves crucial for the aggregation of distributed energy resources [16,17], as it provides a compact and accurate probabilistic representation of the collective output from numerous small generators, which is essential for effective grid management. The model also provides a powerful tool for the allocation of flexible ramping capacity [18,19] by quantifying the required ramping capability based on the probabilistic fluctuations of high-penetration renewable generation. By accurately modeling these needs, this approach helps optimize flexible resources like fast-ramping generators or energy storage systems to maintain grid balance and stability.

As a parametric probabilistic model, estimating parameters of GMM based on empirical data is essential to characterize spatial-temporal uncertainties of renewable generation precisely [20,21]. The well-known Expectation Maximization (EM) algorithm is the most acknowledged approach to estimate parameters of GMM from historical samples [22,23]. In the context of probabilistic modeling of renewable generation, the typical EM algorithm requires an offline training set composed of historical forecast and corresponding actual generation to tune parameters of GMM, and then the tuned GMM with static parameters is deployed online to predict uncertainties of renewable generation in the future [24].

In real-world power systems, numerous renewable energy sources continuously generate a large volume of data from forecast and measurement [25]. Probabilistic characteristics of renewable generation represented by these emerging samples are obviously time-varying due to meteorological fluctuations [26,27,28]. Static GMM trained on offline data cannot capture the non-stationary uncertainties of renewable generation in practice. Meanwhile, because the EM algorithm needs to traverse the entire dataset per iteration, re-estimating the parameters of GMM is a time-consuming and computationally intensive task considering the size of historical samples [29,30]. Thus, it is a critical issue to adjust parameters of GMM efficiently in response to continuously emerging data if we aim to track the time-varying uncertainties of renewable generation [31]. The ability to rapidly integrate new data streams into existing probabilistic models opens avenues for more responsive and adaptive decision-making in managing renewable energy resources.

In the field of machine learning and data mining, incremental or recursive estimation of Gaussian Mixture Model (GMM) parameters has garnered remarkable attention [32,33,34,35]. This approach is particularly advantageous as it addresses the significant computational burden associated with updating a probabilistic model dynamically [36,37]. Instead of relying on the entire dataset, the incremental update strategy modifies GMM parameters recursively using only information from new data samples. This recursive process allows for the efficient, on-the-fly adjustment of the model’s parameters as new data stream in, which is crucial for applications that require continuous model adaptation. This principle has been successfully extended to various domains, including the probabilistic modeling of renewable energy. For instance, the authors in [38] proposed a distributed variant of the incremental GMM update algorithm and applied it to the probabilistic modeling of wind power forecast errors. This demonstrates the practical utility of incremental GMM in handling large-scale dynamic data typical of modern power systems.

In this paper, we propose a comprehensive framework for updating the parameters of the GMM using continuously arriving samples. We focus on exploring the application of this framework as a recursively updated probabilistic model for renewable generation. This recursive update method addresses two main issues, which are often overlooked in existing research on incremental GMM.

Firstly, we introduce the calibration of GMM parameters to enhance the long-term performance of the probabilistic model after a large number of incremental updates. While previously proposed recursive update algorithms are computationally efficient, they do not guarantee the optimality of parameters. The inaccuracies accumulated after recursive updates may compromise the precision of the probabilistic model in characterizing the uncertainties of renewable generation. To mitigate this, we combine an efficient recursive update step with an auxiliary calibration step, periodically invoked to compensate for suboptimality introduced by the former and to prevent degradation of precision over time.

Secondly, our recursively updated probabilistic model allows for a bidirectional update of the training dataset by incorporating new observations of renewable generation while concurrently discarding outdated samples. This stands in contrast to previous research where the total size of the training dataset monotonically increases due to the continuous integration of new samples. As the archive of historical renewable generation grows, the decreasing ratio of upcoming new samples against existing samples weakens the influence of incremental updates. In contrast, our bidirectional update strategy mitigates the inflation of the training dataset by gradually replacing the oldest samples with new ones in an incremental manner. This ensures that the most recent samples are always emphasized, enabling the model to capture the latest trend of uncertainties more effectively.

The remainder of this paper is organized as follows: The probabilistic model of renewable generation with GMM and the conventional parameter estimation algorithm are introduced in Section 2 and Section 3 as a prelude. The incremental update framework for GMM using streaming samples of renewable generation is proposed in Section 4. Section 5 presents the results of the case study. Conclusions are drawn in Section 6.

2. Probabilistic Model of Renewable Generation with Gaussian Mixture Model

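Suppose we have already obtained deterministic forecast curves for a set of renewable energy sources (RESs) over a number of lookahead points. These forecasts can be stacked into a single vector (1), where a reshaping operator converts the matrix of forecasts into a vector whose dimension is the number of RESs multiplied by the number of lookahead points. The corresponding actual power generated by the RESs, revealed after the forecast, is denoted as a vector (2) with the same size as the forecast vector. The forecast error is defined as the difference between the actual generation and the forecast.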

If the forecast vector and the actual generation vector are concatenated into a new vector of twice the dimension, so that each observation pairs the forecast with the actual generation, the joint probability distribution of this concatenated vector can be described by the Gaussian Mixture Model (GMM). The parameters of the GMM denote the weight, expectation, and covariance matrix of each Gaussian component, respectively.

The mean vector and covariance matrix of each component can be partitioned into blocks that correspond to the forecast part and the actual generation part of the concatenated vector.

Once the joint probability model of the concatenated vector is established, we are more interested in the conditional distribution of the actual generation with respect to the deterministic forecast value, which is known in advance. As pointed out by [2], this conditional distribution is still a GMM with the same dimension as the actual generation vector, and its parameters can be inferred analytically from the joint probability model.

The conditional distribution of the forecast error is described as a GMM as well.
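To illustrate how a conditional GMM follows from the joint model, the sketch below conditions a bivariate GMM (one forecast value and one actual value) on an observed forecast. The function names, the tuple layout of the components, and the scalar simplification are assumptions made for this example only; the model in this paper is multidimensional, where the divisions below become linear solves with the forecast block of each covariance matrix.

```python
import math


def normal_pdf(x, mean, var):
    """Density of a univariate Gaussian with the given mean and variance."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def condition_on_forecast(components, forecast):
    """Condition a bivariate GMM on the observed forecast value.

    components: list of (weight, mean_f, mean_a, var_f, cov_fa, var_a),
                a hypothetical layout chosen for this sketch.
    Returns a list of (weight, mean, variance) describing the 1-D GMM
    of the actual generation given the forecast.
    """
    unnormalized = []
    conditional = []
    for w, mean_f, mean_a, var_f, cov_fa, var_a in components:
        # New weight is proportional to the old weight times the marginal
        # density of the forecast under this component.
        unnormalized.append(w * normal_pdf(forecast, mean_f, var_f))
        # Standard Gaussian conditioning formulas.
        cond_mean = mean_a + cov_fa / var_f * (forecast - mean_f)
        cond_var = var_a - cov_fa ** 2 / var_f
        conditional.append((cond_mean, cond_var))
    total = sum(unnormalized)
    return [(u / total, m, v) for u, (m, v) in zip(unnormalized, conditional)]
```

The number of components is unchanged by conditioning; only the weights, means, and variances are recomputed, which is why the conditional model remains a GMM.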

3. Parameter Estimation Algorithm for Probabilistic Model of Renewable Generation

As mentioned in Section 2, the key to an accurate model of renewable generation is the joint probability distribution (5) of the concatenated forecast and actual generation vector. The parameters of the GMM (including the weight, expectation, and covariance matrix of each Gaussian component) in (5) can be efficiently estimated by the Expectation Maximization (EM) algorithm. We outline the EM algorithm here as the prerequisite of its incremental variant.

Step 1: Historical samples, each composed of forecast and actual generation data, are collected to form the training set.

Step 2: With a given number of components in the GMM, the parameters of the GMM are initialized to bootstrap the EM algorithm. A commonly used approach is to execute the k-means algorithm on the training set with as many clusters as there are components; the parameters of the kth component are then calculated from the training samples in the kth cluster, using the number of training samples belonging to that cluster.

Step 3 (Expectation step, E-step): The responsibility of each Gaussian component for each training sample is calculated based on the current parameters of the GMM.

Step 4 (Maximization step, M-step): The parameters of GMM are adjusted to maximize the total likelihood of training samples.

Step 5: The log likelihood of training samples is calculated and compared with the result from the previous iteration. If their difference is smaller than the prescribed tolerance, the EM algorithm has converged; otherwise, steps three and four are repeated to further improve the parameters of the GMM.

Following these steps from one to five, we can effectively estimate the parameters of GMM with the goal of maximizing log likelihood of the whole training set under GMM.
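The steps above can be sketched for a one-dimensional GMM as follows. This is a minimal illustration under simplifying assumptions, not the implementation used in this paper: the data are scalar, the initial parameters are passed in directly instead of being obtained from k-means, and the function names `normal_pdf` and `em_1d` are hypothetical.

```python
import math


def normal_pdf(x, mean, var):
    """Density of a univariate Gaussian with the given mean and variance."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def em_1d(data, weights, means, variances, tol=1e-8, max_iter=200):
    """Fit a 1-D GMM by EM, starting from the supplied initial parameters."""
    weights, means, variances = list(weights), list(means), list(variances)
    n, k_total = len(data), len(weights)
    prev_ll = -math.inf
    ll = prev_ll
    for _ in range(max_iter):
        # E-step: responsibility of each component for each sample.
        resp = []
        ll = 0.0
        for x in data:
            dens = [w * normal_pdf(x, m, v)
                    for w, m, v in zip(weights, means, variances)]
            total = sum(dens)
            ll += math.log(total)
            resp.append([d / total for d in dens])
        # Convergence check on the log likelihood of the current parameters.
        if abs(ll - prev_ll) < tol:
            break
        prev_ll = ll
        # M-step: responsibility-weighted weight, mean, and variance.
        for k in range(k_total):
            nk = sum(r[k] for r in resp)
            weights[k] = nk / n
            means[k] = sum(r[k] * x for r, x in zip(resp, data)) / nk
            variances[k] = sum(r[k] * (x - means[k]) ** 2
                               for r, x in zip(resp, data)) / nk
    return weights, means, variances, ll
```

Every iteration loops over all samples in both the E-step and the M-step, which is the cost that the recursive procedures in Section 4 are designed to avoid.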

4. Recursive Parameter Update for Streaming Samples of Renewable Generation

After estimating the parameters of the joint probability distribution (5), it can be employed to infer the conditional distribution of actual renewable generation based on the latest forecast values. During the operation of power systems, the forecasts of renewable generation and their corresponding actual values are continuously updated and accumulated in a streaming fashion. It is important to consolidate the recent observations of renewable generation with the pre-trained probabilistic model to improve its accuracy. Although we could abandon the existing model completely and estimate the parameters of the GMM from scratch on the extended training set including new samples, this naïve approach is not scalable and is highly inefficient for online application.

Thus, we use the following three procedures to recursively update the parameters of the joint probability distribution by keeping track of the newly collected data samples with moderate computational overhead. First, learning new samples ensures that the probabilistic model incorporates the most recent forecast and measurement data of renewable generation, thereby capturing the latest time-varying uncertainty patterns. Second, removing old samples prevents outdated information from dominating the training set and ensures that the model emphasizes current conditions instead of being diluted by obsolete data. Third, calibration of parameters is introduced to correct suboptimality accumulated after multiple incremental updates.

4.1. Procedure One (Learning New Samples)

When new samples are collected, the E-step and M-step in the original EM algorithm are adapted to update the parameters without traversing existing training samples.

In the E-step, we calculate responsibilities of new samples with existing parameters of GMM:

In the M-step, the parameters should be updated using the extended training set, which contains both the existing samples and the new samples.

By observing the difference between (25)–(27) and (20)–(22), the parameter update formulas above can be rewritten in the following equivalent recursive forms, (28)–(30), to avoid duplicate computation on the old training samples. The update formula of covariance matrix (30) depends on the equality (31).
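The idea of rewriting the M-step recursively can be sketched for a single component of a one-dimensional GMM. The sketch assumes that the responsibilities of the existing samples are kept fixed and that only the current weight, mean, variance, and sample count are stored; the function names and the scalar setting are assumptions for illustration and do not reproduce the paper's equations term by term.

```python
def batch_m_step(xs, resp):
    """Reference M-step for one component over all samples (1-D case)."""
    s = sum(resp)
    mean = sum(g * x for g, x in zip(resp, xs)) / s
    var = sum(g * (x - mean) ** 2 for g, x in zip(resp, xs)) / s
    return s / len(xs), mean, var


def learn_new_samples(n_total, weight, mean, var, new_xs, new_resp):
    """Update one component with new samples without revisiting old ones.

    n_total is the number of samples before the update; new_resp holds the
    responsibilities of the new samples computed with the existing parameters.
    """
    s_old = n_total * weight                  # total responsibility so far
    s_new = s_old + sum(new_resp)
    n_new = n_total + len(new_xs)
    new_mean = (s_old * mean
                + sum(g * x for g, x in zip(new_resp, new_xs))) / s_new
    # Weighted second moment: sum(g * x^2) = s * (var + mean^2).
    second_moment = (s_old * (var + mean ** 2)
                     + sum(g * x * x for g, x in zip(new_resp, new_xs)))
    new_var = second_moment / s_new - new_mean ** 2
    return n_new, s_new / n_new, new_mean, new_var
```

With the old responsibilities held fixed, the recursive result coincides with a batch M-step over the extended set, while the cost depends only on the number of new samples.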

Because the PDF of the GMM contains the inverse of each covariance matrix, the Cholesky decomposition of each covariance matrix (32), whose Cholesky factor is a lower triangular matrix, is calculated and stored to avoid computing the inverse explicitly. Products with the inverse covariance matrix can then be obtained by solving two triangular linear systems, one with the Cholesky factor and one with its transpose.

Calculating the determinant of the covariance matrix is also straightforward with Cholesky decomposition.
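For reference, the standard identities behind this use of the Cholesky factor are written out below. The symbols $\Sigma$ (covariance matrix), $L$ (lower triangular factor), $x$, $\mu$, $y$, and $d$ (dimension) are notation assumed for this note and may differ from the paper's own equations.

```latex
\Sigma = L L^{\top}, \qquad
\log\det\Sigma = 2\sum_{i=1}^{d} \log L_{ii}, \qquad
(x-\mu)^{\top}\Sigma^{-1}(x-\mu) = \lVert y \rVert_2^{2}
\quad \text{where } L\,y = x-\mu .
```

The vector $y$ is obtained by forward substitution, so evaluating a Gaussian density needs neither an explicit inverse nor a separate determinant computation.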

The computational complexity of calculating the Cholesky decomposition of a square matrix from scratch is cubic in its dimension. However, the Cholesky decomposition can be recursively updated at only quadratic cost for the following basic operations:

1. Scaling operation: If a matrix is multiplied by a positive constant coefficient, its Cholesky factor is multiplied by the square root of that coefficient.

2. Rank-1 update operation: If a matrix is updated by a rank-1 matrix (36) formed from a vector, its Cholesky decomposition can be updated with quadratic complexity. The detailed algorithm can be found in Section 6.5.4 of [39] and is omitted here. There are several implementations in well-known numerical software, such as cholupdate in MATLAB (R2024a) and lowrankupdate in Julia (1.10.2).
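The two basic operations can be sketched in plain Python as follows. This is a textbook form of the rank-1 update for a lower triangular factor, written with nested lists for readability; it is not the routine from [39] or from the libraries named above, and the function names are hypothetical.

```python
import math


def chol_scale(L, c):
    """Factor of c * A, given the factor L of A (c must be positive)."""
    root = math.sqrt(c)
    return [[root * value for value in row] for row in L]


def chol_rank1_update(L, v):
    """Return the lower triangular factor of A + v v^T, where A = L L^T.

    The cost is quadratic in the dimension, compared with the cubic cost
    of factorizing the updated matrix from scratch.
    """
    n = len(v)
    L = [row[:] for row in L]   # work on copies, leave the inputs untouched
    v = list(v)
    for k in range(n):
        r = math.hypot(L[k][k], v[k])
        c = r / L[k][k]
        s = v[k] / L[k][k]
        L[k][k] = r
        for i in range(k + 1, n):
            L[i][k] = (L[i][k] + s * v[i]) / c
            v[i] = c * v[i] - s * L[i][k]
    return L


def reconstruct(L):
    """Multiply L by its transpose (used only to check the results)."""
    n = len(L)
    return [[sum(L[i][k] * L[j][k] for k in range(n)) for j in range(n)]
            for i in range(n)]
```

For example, starting from the factor of the matrix with rows (4, 2) and (2, 3), calling `chol_rank1_update` with the vector (1, 2) yields a factor whose product with its transpose is the matrix with rows (5, 4) and (4, 7).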

The recursive update formula of covariance (30) is composed of one scaling operation and a sequence of rank-1 update operations. Thus, the Cholesky decomposition of the covariance matrix can also be updated recursively to reuse existing Cholesky factors.

4.2. Procedure Two (Removing Old Samples)

In parallel to learning new samples, we also want to remove some old samples from the training set to restrict its size and accommodate more recently updated samples. Here, we assume that the oldest samples in the training set (counted from No. 1 onward) are selected to be removed. The adapted versions of the E-step and M-step for removing old samples are presented as follows:

In the E-step, responsibilities of old samples are retrieved from historical computational traces directly.

In the M-step, the parameters should be updated using the reduced training set, which no longer contains the removed samples.

Like learning new samples, the parameter update formulas can be rewritten in equivalent recursive forms to avoid computation on unaffected training samples.
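A matching sketch for sample removal, again for one component of a one-dimensional GMM, is given below. It assumes that the responsibilities of the removed samples were stored when they were last computed, as described for the E-step above; the function name and the scalar setting are assumptions for illustration.

```python
def remove_old_samples(n_total, weight, mean, var, old_xs, old_resp):
    """Downdate one component after discarding samples (1-D case).

    n_total is the number of samples before removal; old_resp holds the
    stored responsibilities of the samples being removed.
    """
    s_old = n_total * weight                  # total responsibility so far
    s_new = s_old - sum(old_resp)
    n_new = n_total - len(old_xs)
    new_mean = (s_old * mean
                - sum(g * x for g, x in zip(old_resp, old_xs))) / s_new
    # Subtract the removed samples from the weighted second moment.
    second_moment = (s_old * (var + mean ** 2)
                     - sum(g * x * x for g, x in zip(old_resp, old_xs)))
    new_var = second_moment / s_new - new_mean ** 2
    return n_new, s_new / n_new, new_mean, new_var
```

The subtraction can lose numerical precision when a component keeps very little total responsibility after removal, which is one practical reason to recalibrate the parameters from time to time.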

4.3. Procedure Three (Calibration of GMM)

Learning new samples and removing old samples can each be regarded as a single adapted E-step and M-step that updates the parameters of the GMM for renewable generation, without iterating until the parameter estimation converges to an optimum. Consequently, suboptimality might accumulate after executing these incremental update procedures multiple times and undermine the performance and accuracy of the GMM as a probabilistic model of renewable generation.

Thus, the full-scale E-step and M-step should be executed on the whole training set (including both updated samples and initial samples not removed) for several iterations to calibrate the parameters of the GMM. The algorithm has been described in Section 3.

4.4. General Framework for Recursive Probabilistic Model

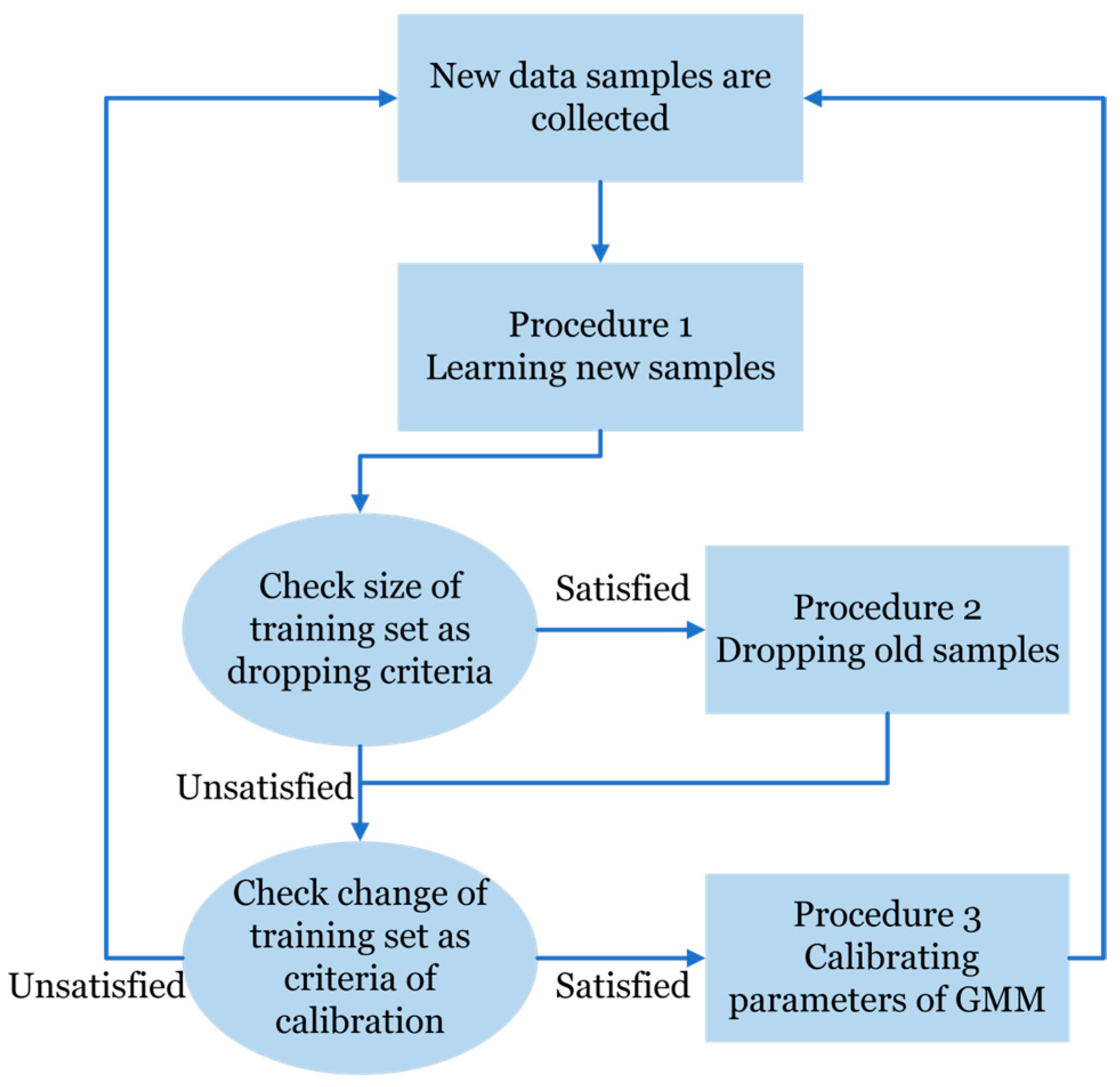

As illustrated in the flow chart in Figure 1, we propose the following framework to maintain a recursive probabilistic model of renewable generation based on continuously updated samples by composing the three kinds of procedures discussed above.

When new observations of renewable generation, including forecast and actual generation, are collected, procedure one is invoked to extend the training set and update the parameters of the probabilistic model.

The size of the training set is used as the criterion to determine whether some old samples should be removed to keep its compactness. If the size is beyond the determined threshold, some old samples are disposed from the training set and procedure two is invoked to update the parameters of the probabilistic model accordingly.

When the probabilistic model of forecast errors experiences multiple rounds of recursive updates, including learning new samples and removing old samples, its parameters should be calibrated by procedure three periodically. The change in the training set is regarded as the triggering criterion of calibration. For example, we can invoke the calibration procedure if 5% of samples in the training set have been replaced by new samples since the last calibration.
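The control flow of this framework can be sketched as a small scheduler that only decides which procedure to invoke. The class name, the parameter names, and the event log are hypothetical; the actual parameter updates are left out, and the 5% trigger is interpreted here as the number of newly learned samples relative to the current training set size.

```python
from collections import deque


class RecursiveModelScheduler:
    """Decides when to learn, remove, and calibrate (bookkeeping only)."""

    def __init__(self, initial_samples, max_size, target_size,
                 calibration_fraction=0.05):
        self.window = deque(initial_samples)    # training set, oldest first
        self.max_size = max_size                # threshold that triggers removal
        self.target_size = target_size          # size restored after removal
        self.calibration_fraction = calibration_fraction
        self.new_since_calibration = 0
        self.log = []                           # (procedure, sample count)

    def ingest(self, batch):
        batch = list(batch)
        # Procedure one: learn the new samples.
        self.window.extend(batch)
        self.new_since_calibration += len(batch)
        self.log.append(("learn", len(batch)))
        # Procedure two: remove the oldest samples if the set is too large.
        if len(self.window) > self.max_size:
            n_remove = len(self.window) - self.target_size
            for _ in range(n_remove):
                self.window.popleft()
            self.log.append(("remove", n_remove))
        # Procedure three: calibrate once enough samples have been replaced.
        if self.new_since_calibration >= self.calibration_fraction * len(self.window):
            self.log.append(("calibrate", len(self.window)))
            self.new_since_calibration = 0
```

In a full implementation, each log entry would be replaced by a call to the corresponding update routine, with calibration being the only step that traverses the whole training set.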

Remark (Analysis of computational complexity): For procedure one (learning new samples) and procedure two (removing old samples), the computational complexity of the parameter update formulas (28)–(30) and (40)–(42) depends on the dimension of the data samples and on the number of altered samples. The performance of the recursive update procedures is related only to the number of altered data samples and is not affected by the size of the whole training set. Thus, they can be invoked frequently to deal with streaming samples without much computational effort.

Comparatively, a full-scale update of parameters (20)–(22) requires a number of operations that grows with the size of the whole training set. Because the altered samples represent only a small fraction of the overall training set, calibrating the model parameters is a more computationally expensive operation and should therefore be performed at a lower frequency.

5. Numerical Tests

The techno-economic WIND toolkit [40] from NREL serves as the data source for renewable generation in this section. The whole dataset contains aligned forecast and actual generation data for wind power for seven years at 120,000 sites in the US. The forecast data are available at a 1 h resolution for 1 h, 4 h, 6 h, and 24 h forecast horizons, and the actual generation, estimated from meteorological data, is available at a 5 min resolution. The 6 h ahead forecast and actual wind power generation of 40 sites (No. 205–244) are chosen as examples to establish the complete sample set of concatenated forecast and actual generation vectors with 1 h resolution for 7 years, where the actual generation is downsampled to align with the forecast data. All numerical tests are implemented in Julia and executed on a laptop with an Intel i7-1360P CPU and 32 GB RAM.

The numerical tests are separated into two parts with different emphasis on precision and computational efficiency of the proposed recursively updated probabilistic model.

5.1. Comparing Precision to Characterize Uncertainties of Renewable Generation

We designed the following steps to emulate the continuous process of collecting new samples, updating the probabilistic model, and predicting the probability distribution of actual generation on the fly.

Step 1: The first 8760 samples (one entire year) are selected as the initial training set, and the EM algorithm is employed to obtain the parameters of the GMM.

Step 2: The next batch of observations is used to evaluate the performance of the probabilistic model. For each observation, given its forecast part, we can obtain the conditional distribution of actual generation based on the joint GMM according to (10). The log likelihood of the corresponding actual generation under this conditional distribution is calculated as the performance index, because a higher likelihood means that the GMM provides a more precise characterization of the randomness of wind power when predicting actual generation from the forecast value.

Step 3: The observations in step two are absorbed into the training set. If the total number of training samples exceeds the predetermined upper bound, the oldest samples are discarded to reduce the size of the training set to an appropriate level.

Step 4: The parameters of the GMM are updated according to the change in training samples in step three, and then we go back to step two and evaluate the performance of the probabilistic model on new observations.

Steps two to four above are executed for a fixed number of iterations, and all the parameters in our numerical tests are set as follows:

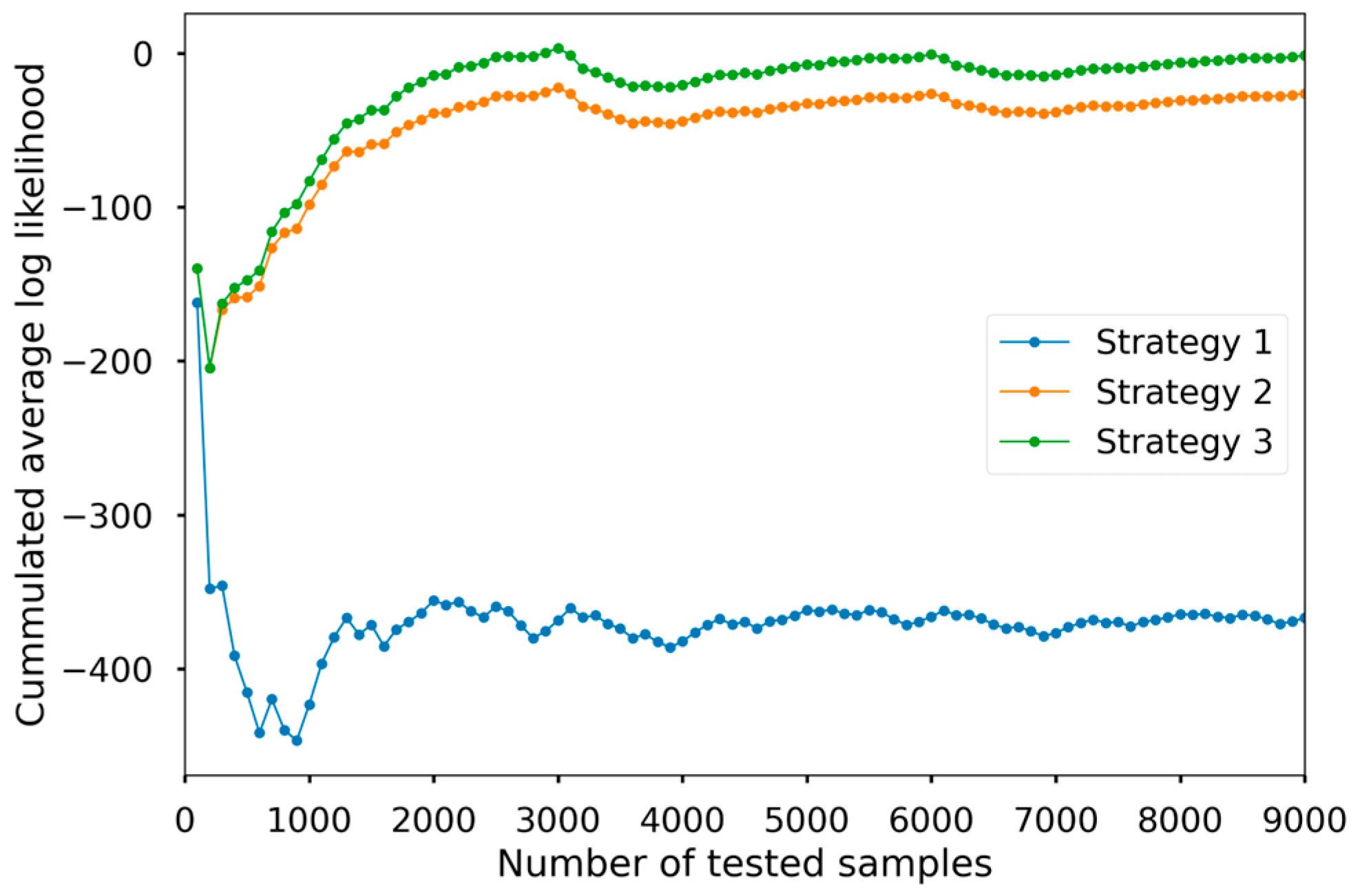

The following three strategies are used to update the parameters of the GMM in step four, and their influence on the performance of the probabilistic model is compared:

Strategy 1 (Initial parameter): This strategy does not update the parameters of GMM and always uses the initial parameters of GMM obtained in step one.

Strategy 2 (Recursive update without calibration): This strategy updates the parameters of GMM with the proposed procedure 1 to learn new samples and procedure 2 to remove old samples but never invokes the calibration procedure.

Strategy 3 (Recursive update): Compared with the previous strategy, this strategy invokes the proposed procedure three automatically to calibrate the parameters of GMM if step two and three have been executed 50 times since the previous calibration.

We compare the accuracy of the probabilistic model via the log likelihood of actual generation under the GMM calculated in step two. Each strategy collects a series of log likelihood results. We calculate the cumulated average log likelihood for comparison. The results of the three strategies are shown in Figure 2.
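The cumulated average used as the comparison metric can be computed as sketched below. The function name is hypothetical and the input is assumed to be the sequence of log likelihood values collected by one strategy, in the order in which they were evaluated.

```python
from itertools import accumulate


def cumulative_average(values):
    """Running mean: entry i is the average of values[0] through values[i]."""
    return [total / (i + 1) for i, total in enumerate(accumulate(values))]
```

Because each point averages over all evaluations so far, the curve smooths out short-term fluctuations and reflects the long-term accuracy of each strategy.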

As shown in Figure 2, the proposed strategy three exhibits the highest cumulated average log likelihood among all probabilistic models. This indicates that the combination of recursive updates and calibration steps can effectively update the probabilistic model of renewable generation to capture its time-varying characteristics. The performance of strategy two is approximately equal to that of strategy three in the initial stages due to the effectiveness of the recursive update, but the gap between strategies two and three grows and then stabilizes as more new observations of renewable generation are collected. The performance degradation of strategy two can be explained by its lack of a calibration step, which is essential to compensate for suboptimality accumulated in recursive update steps. Serving as a control group, strategy one demonstrates substantially lower performance than the other strategies and degrades severely as more samples of renewable generation are observed, which highlights the necessity of updating the probabilistic model based on new data samples.

Based on comparisons on the precision of predictions, the proposed recursive probabilistic model has the following advantages compared to the other two approaches.

Compared with the static probabilistic model with fixed parameters, the recursive probabilistic model can adapt to new observations of renewable generation and update internal parameters of GMM dynamically. Thus, the predicted probability distribution of actual generation is more accurate and does not degrade due to outdated historical samples.

Compared with the recursive probabilistic model without calibration in previous studies, our model demonstrates higher long-term accuracy in the prediction of renewable generation. The improvement is mainly attributed to periodic calibration procedures to overcome the suboptimality of parameters accumulated during recursive updates.

5.2. Comparing Computational Time to Acquire Parameters of Probabilistic Model of Renewable Generation

In this section, we conduct two experiments to demonstrate the computational efficiency of the recursive probabilistic model in accommodating new observations of renewable generation, compared with a GMM tuned by the conventional full-scale EM algorithm.

In general, we assume that a set of historical samples of renewable generation is available and that the probabilistic model in the form of a GMM has been tuned on it via the EM algorithm. The task is to update the parameters of the probabilistic model given a batch of new observations. The conventional full-scale EM algorithm reconstructs the parameters of the GMM on the whole training set, including both the historical samples and the new observations, by executing the E-step and M-step in Section 3 iteratively. Meanwhile, the proposed recursive probabilistic model updates the parameters of the GMM by traversing the new observations once without iteration.

The first experiment fixes the number of new observations at 10 and enlarges the number of existing samples from 8760 (12 months of samples) to 20,440 (28 months of samples) in intervals of 4 months. The performance of the two approaches is shown in Table 1. The recursive probabilistic model demonstrates minor fluctuations in computational time, consistently remaining below 0.13 s. Notably, this efficiency persists regardless of the increasing number of existing samples.

In contrast to the recursive probabilistic model, the full-scale EM algorithm exhibits a rapid escalation in total computational time as the sample size increases. This increase is influenced by two main factors: the number of iterations the EM algorithm undergoes, and the duration of each iteration. The number of iterations is particularly sensitive to the initial parameters, which are often derived from clustering methods like k-means and the criteria set for convergence. Meanwhile, the time spent on each iteration is approximately proportional to the total size of the training samples. This is because each iteration of the EM algorithm requires processing all the samples, making the process more time-consuming as the sample size grows.

Empirical evidence from Table 1 supports these observations. It shows that the average time spent per iteration increases about fourfold, from 4.0 s to 15.8 s, as the sample size expands from 8760 to 20,440. When the sample size reaches 20,440, the total computational time for the full EM algorithm soars to 649 s. This duration is nearly 12,000 times longer than that of the recursive probabilistic model, underscoring the significant difference in scalability and efficiency between the two methodologies.

The second experiment was conducted with the number of existing samples fixed (at 11,680, as implied by the total sample counts reported below), while varying the number of new samples between 10 and 90. This setup was designed to elucidate the relationship between the number of new samples and the computational efficiency of the two methods. The result of the experiment is presented in Table 2.

The time consumed by the recursive update process is positively related to the quantity of processed new samples and grows at an approximately linear rate. This empirical finding is in harmony with our prior theoretical analysis regarding computational complexity, underscoring the predictability of the recursive update’s performance. In contrast, the iteration time for the full EM algorithm displayed a tendency to oscillate around the 10 s mark. This pattern can be attributed to the algorithm’s computational complexity being more acutely affected by minor variations in the total number of samples (ranging from 11,690 to 11,770), rather than the number of new samples.

The recursive update procedure adeptly integrates up to 90 new observations in a mere 0.34 s, demonstrating remarkable computational efficiency. This performance is especially noteworthy when juxtaposed with the full-scale EM algorithm, where the recursive update method requires less than 0.1% of the time needed by its counterpart (417 s).

The experiments conducted in this section demonstrate a significant reduction in computational time when employing the proposed recursive update algorithm, as opposed to the traditional full-scale EM algorithm. A key observation is that the degree of acceleration is influenced by the ratio of existing samples to the newly added samples, which leads to a remarkable 1000- to 10,000-fold decrease in computational time as evidenced in our experiments.

In scenarios where the number of renewable energy sources expands, the computational demands of the full EM algorithm for updating a new batch of observations could become impractically burdensome, potentially extending to several hours. In contrast, the recursive probabilistic model demonstrated the capability to accomplish similar tasks within a matter of seconds. The efficiency and agility of the recursive update algorithm highlight its potential for online probabilistic modeling, particularly in processing real-time streaming data. This aspect is especially pertinent in practical applications involving renewable energy generation, where timely and efficient data processing is paramount.

5.3. Discussion

To highlight the relative merits of different GMM updating strategies, as detailed in Table 3, we compare the proposed recursive GMM with sample elimination and calibration against two representative approaches from recent studies, as well as the conventional EM-based static GMM. The comparison focuses on the updating mechanism, treatment of historical data, calibration strategy, computational efficiency, and accuracy performance.

The conventional EM algorithm achieves high accuracy in static settings but lacks adaptability to non-stationary environments, and its computational burden increases significantly with data size. The recursive GMM with a forgetting factor [34] effectively emphasizes recent data and enables efficient online updating. However, it relies heavily on the proper selection of the forgetting parameter and does not address the long-term accuracy degradation problem. Similarly, the online GMM with a forgetting factor [38] provides rapid adaptation for renewable energy forecasting, but the absence of a calibration mechanism may lead to accumulated bias over time.

In contrast, the proposed method integrates recursive incremental updating, sample elimination, and periodic calibration, thereby maintaining computational efficiency while ensuring long-term stability. By discarding outdated samples, the model captures the most recent stochastic behavior, and the calibration step prevents cumulative errors in recursive updates. Numerical experiments confirm that this approach achieves a favorable balance between real-time adaptability and long-term accuracy, making it particularly suitable for renewable energy scenarios with significant variability.

6. Conclusions

We propose a recursively updated probabilistic model based on the GMM for renewable generation, aimed at continuously tracking time-varying uncertainties using emerging forecasted and measured data. Our approach leverages an efficient incremental learning algorithm, allowing the parameters of the probabilistic model to be autonomously adjusted by learning from new observations and discarding old ones simultaneously, with slight computational burden. Furthermore, a periodic calibration step is introduced to maintain the long-term performance of the probabilistic model after a large number of recursive updates.

In our numerical experiments conducted on empirical data of wind power, our recursive model demonstrates higher precision in prediction compared to existing recursive update strategies, which improves the average log likelihood of predictions by approximately 5–10% over long-term updates. It also shows a significant reduction in computational time when compared to conventional full-scale EM algorithms. For instance, updating parameters with 20,000 training samples and 10 new observations required only 0.13 s, whereas the conventional EM algorithm took 649 s, nearly 5000 times slower.

In practice, the significance of this study lies in its ability to cope with the inherently time-varying characteristics of renewable generation. Unlike static offline models that quickly become outdated, the proposed recursive method provides a computationally efficient and accurate tool to dynamically update probabilistic models in real time, thereby offering strong potential for application in power system operation.

The proposed method has one limitation. Each step of the incremental update is computationally efficient but cannot guarantee parameter optimality; while the periodic full calibration ensures accuracy through multiple iterations, it is computationally expensive. How to coordinate these two procedures to achieve a better trade-off between efficiency and accuracy remains an open question for future research.