Abstract

The future of anxiety management lies in bridging traditional evidence-based treatments with intelligent and adaptive digital platforms. Embedding multi-agent systems capable of real-time mood detection and self-management support represents a transformative step towards intelligent care, enabling users to independently regulate acute episodes, prevent relapse, and promote sustained personal well-being. These digital solutions illustrate how technology can improve accessibility, personalization, and adherence, while establishing the foundation for integrating multi-agent architectures into mental health systems. Such architectures can continuously detect and interpret users’ emotional states through multimodal data, coordinating specialized agents for monitoring, personalization, and intervention. Crucially, they extend beyond passive data collection to provide active, autonomous support during moments of heightened anxiety, guiding individuals through non-pharmacological strategies such as breathing retraining, grounding techniques, or mindfulness practices without requiring immediate professional involvement. By operating in real time, multi-agent systems function as intelligent digital companions capable of anticipating needs, adapting to context, and ensuring that effective coping mechanisms are accessible at critical moments. This paper presents a multi-agent architecture for the digital management of anxiety episodes, designed not only to enhance everyday well-being but also to deliver immediate, personalized assistance during unexpected crises, offering a scalable pathway towards intelligent, patient-centered mental health care.

1. Introduction

Anxiety disorders, including generalized anxiety disorder (GAD) and panic disorder (PD), are among the most prevalent mental health conditions worldwide and are strongly associated with disability and impaired daily functioning. Although cognitive behavioral therapy (CBT) and pharmacological interventions such as selective serotonin reuptake inhibitors (SSRIs) and serotonin–norepinephrine reuptake inhibitors (SNRIs) remain the clinical gold standards, their real-world impact is limited by issues of accessibility, adherence, and personalization. Recent advances in digital mental health—such as internet-delivered CBT, virtual reality exposure, and mobile applications for mindfulness, sleep regulation, and panic management—have expanded therapeutic reach. However, these digital solutions are typically static systems, delivering pre-programmed interventions without the ability to dynamically adapt to users’ changing emotional states.

From a computational perspective, addressing this limitation requires intelligent architectures capable of real-time sensing, reasoning, and intervention. Multi-agent systems (MAS) provide a robust paradigm for this challenge. In MAS, autonomous agents operate collaboratively, each with specialized responsibilities such as monitoring emotional states, personalizing interventions, or triggering context-sensitive strategies. Applied to anxiety management, such systems can process multimodal inputs—physiological signals, behavioral markers, or self-reports—to detect rising anxiety levels and deliver adaptive, non-pharmacological strategies, including breathing retraining, grounding techniques, or mindfulness practices. By coordinating these agents within a unified architecture, digital platforms can evolve from static repositories into intelligent, adaptive companions capable of supporting users during moments of acute distress.

This paper focuses exclusively on the computational dimension of this problem. Specifically, we present a multi-agent architecture for the digital management of anxiety episodes, designed to empower users to self-regulate during peak anxiety without requiring immediate professional involvement. Our contribution lies in the design of the system’s architecture, highlighting how monitoring agents, personalization agents, and intervention agents can collaborate in real time to provide autonomous, context-aware support. The emphasis of this work is therefore purely informatics: rather than evaluating clinical outcomes, we describe how multi-agent principles can be operationalized into a scalable, adaptive digital health system.

2. Related Work

Anxiety disorders, particularly generalized anxiety disorder (GAD) and panic disorder (PD), are among the most prevalent mental health conditions and cause substantial impairment in daily functioning. Current therapeutic strategies are based on both psychological and pharmacological approaches, often structured within stepped-care models that adjust according to symptom severity and patient needs [1,2].

Cognitive behavioral therapy (CBT) represents the most extensively validated psychological intervention. It incorporates psychoeducation, cognitive restructuring, breathing retraining, relaxation, and behavioral experiments. In PD, exposure-based strategies are particularly central: interoceptive exposure targets feared bodily sensations such as palpitations or dizziness, while in vivo exposure addresses avoidance of real-life situations. These methods reduce catastrophic misinterpretations and disrupt the cycle of anticipatory fear [3,4]. Evidence from meta-analyses has shown CBT to be as effective as pharmacotherapy, with lower relapse rates following treatment discontinuation [5].

Efforts to expand accessibility have led to the development of alternative delivery formats. Group CBT has demonstrated similar outcomes to individual therapy, while guided self-help and internet-delivered CBT (iCBT) have consistently shown non-inferior efficacy compared to traditional face-to-face interventions [6,7]. Importantly, guided iCBT outperforms unguided formats, underlining the importance of therapist involvement [8]. Recent innovations, including virtual reality exposure therapy and personalized CBT platforms integrating interoceptive exposure, further strengthen the therapeutic potential of psychological interventions [9].

Pharmacological treatments remain important, particularly for moderate to severe cases. Selective serotonin reuptake inhibitors (SSRIs) such as sertraline and escitalopram, and serotonin–norepinephrine reuptake inhibitors (SNRIs) such as venlafaxine and duloxetine, are considered first-line choices, with continuation for at least 6–12 months post-remission recommended to prevent relapse [2]. Second-line treatments include pregabalin, which has demonstrated particular efficacy in GAD, and buspirone, which offers a favorable safety profile albeit with a slower onset of action. Tricyclic antidepressants (TCAs) and monoamine oxidase inhibitors (MAOIs) may be considered in treatment-resistant cases, though their use is limited by adverse effects [1]. Benzodiazepines (e.g., lorazepam, clonazepam) may provide short-term relief of acute symptoms; however, given their risk of dependence, tolerance, and cognitive impairment, they play only a marginal role in contemporary management and are generally reserved for crisis intervention under careful clinical supervision [10].

Current guidelines recommend a stepped-care approach: beginning with low-intensity interventions such as guided self-help, progressing to high-intensity CBT or pharmacotherapy, and employing combined approaches in cases of partial response [2,6]. While CBT and SSRIs/SNRIs remain the gold standard, there has been growing recognition of the transformative role of digital health solutions.

Digital applications are emerging as scalable and innovative complements to traditional therapies. Some platforms, such as StayFine [11] and Talkspace [12], provide broad-based wellness and psychotherapy services. StayFine combines monitoring, psychoeducation, cognitive restructuring, relapse prevention, and user-selected modules (e.g., sleep, behavioral activation, exposure therapy), all guided by experts and supported by long-term follow-up. Talkspace, by contrast, emphasizes flexible access to professional therapy via text, voice, or video communication, supported by tools such as symptom tracking and daily wellness challenges.

Other platforms apply CBT principles to specific symptom domains. Sleepio offers a six-week CBT-based program for insomnia, enhanced by a neural model that predicts sleep quality more effectively than traditional approaches through the analysis of behavioral data [13]. Similarly, Rejoyn [14] integrates CBT-based video lessons with cognitive-emotional training (CET) to improve emotional processing, providing structured digital support for adults with depression under pharmacological treatment.

Mindfulness-based platforms also play an increasingly important role. Headspace combines guided meditation, mindful movement, and sleep-focused practices, and observational studies have confirmed its effectiveness in reducing stress and improving well-being when used consistently [15]. Petit Bambou, widely adopted in Europe and recently introduced in Mexico [16], offers over 300 sessions designed by experts in psychology and mindfulness. It also includes programs for children and adolescents [17], supporting creativity, concentration, and empathy, while enabling personalization through offline use, adjustable session duration, and progress tracking.

Specialized tools targeting anxiety crises further enrich the digital landscape. Rootd provides immediate relief through features such as a “panic button”, guided breathing exercises (Breathr), and visualization strategies (Visualizr). It also includes educational lessons, mood tracking, and self-awareness features. Evidence gathered during the COVID-19 pandemic demonstrated that 4–6 weeks of Rootd use reduced anxiety, stress, and the severity of panic attacks [18].

Taken together, digital interventions represent a major evolution in the treatment of anxiety disorders. They not only extend the reach of evidence-based approaches such as CBT and mindfulness but also provide users with flexible, accessible, and personalized strategies outside traditional clinical settings.

Looking ahead, a critical direction for innovation lies in the integration of multi-agent architectures within digital mental health platforms. Unlike conventional digital tools, these computational frameworks are capable of real-time mood detection, autonomous decision-making, and adaptive intervention delivery. Multi-agent systems allow the orchestration of specialized agents—such as monitoring agents for emotional state detection, personalization agents for tailoring interventions, and intervention agents for delivering strategies in real time. This architecture enables a continuous loop of sensing, reasoning, and acting, offering dynamic, context-aware responses to acute anxiety episodes [19,20].

Importantly, these systems can support individuals during difficult moments by providing autonomous, non-pharmacological assistance. For example, upon detecting physiological or behavioral markers of rising anxiety, agents could recommend grounding techniques, breathing retraining, or mindfulness practices, while escalating to professional involvement when necessary. By empowering users to manage acute episodes independently, multi-agent systems shift digital interventions from static tools into intelligent companions that enhance adherence, prevent relapse, and promote sustained well-being [21].

In conclusion, while CBT and SSRIs/SNRIs continue to serve as the foundation of anxiety disorder treatment, digital mental health interventions are reshaping the therapeutic landscape. By combining applications for wellness, CBT delivery, mindfulness, and crisis support with advanced multi-agent computational systems, care can become more proactive, personalized, and sustainable. The integration of such architectures represents a decisive step towards intelligent, patient-centered mental health care.

3. Design of Multi-Agent Architecture for Anxiety Management

3.1. Design of Software Prototypes for a Multi-Agent Architecture in Anxiety Management

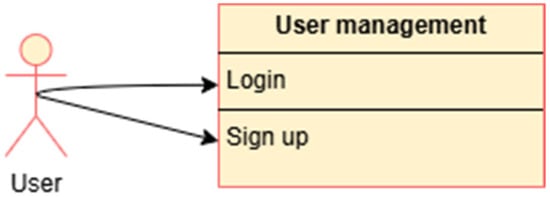

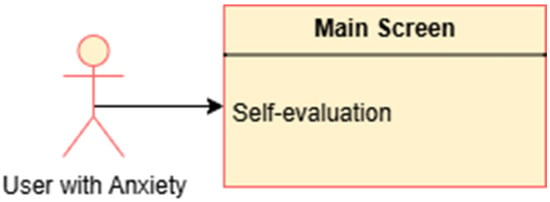

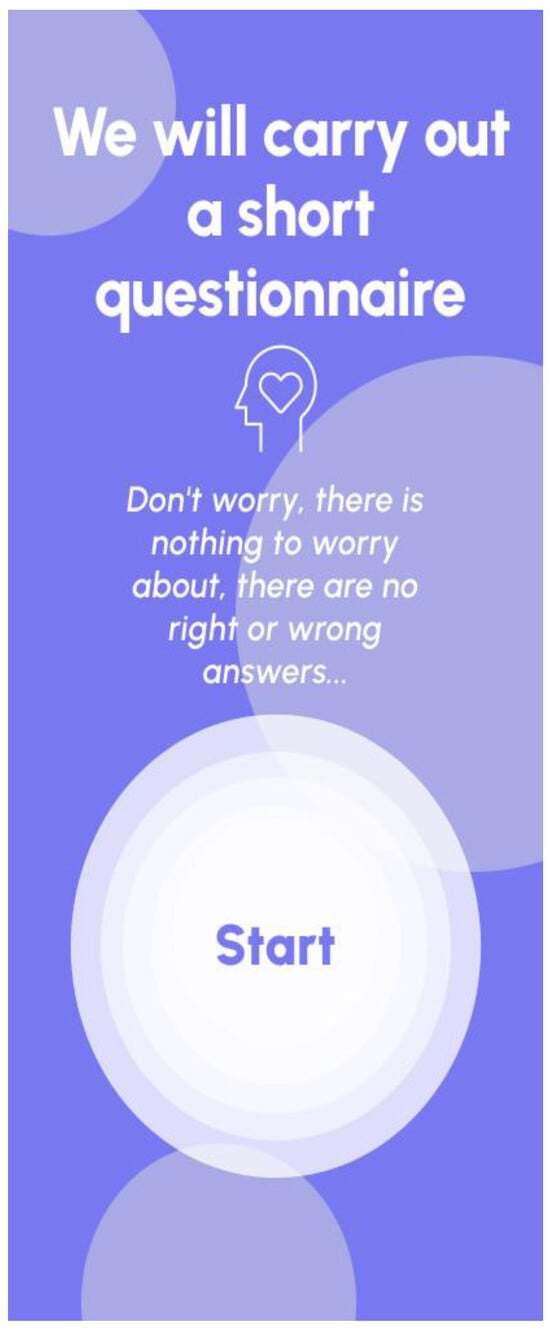

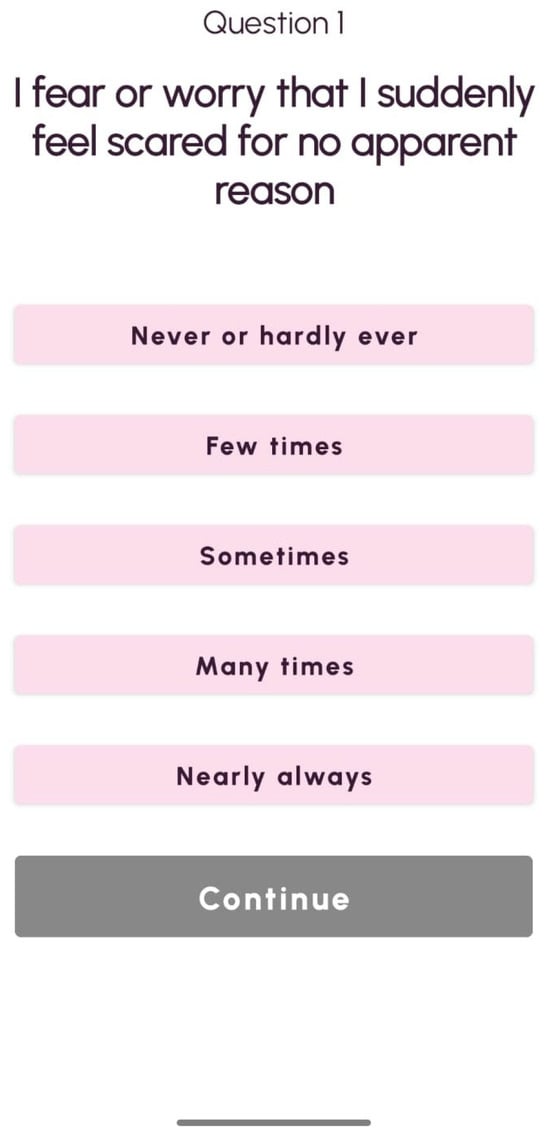

The design of software prototypes constitutes a critical step in the development of computational systems, particularly in health-related applications where usability, accessibility, and responsiveness to user needs are paramount. Prototyping enables the translation of conceptual architectures into tangible interfaces, providing a structured way to evaluate interaction flows, identify potential usability issues, and refine functional requirements before advancing to more complex development stages. In the context of digital mental health, where user engagement and trust are decisive factors for system adoption, low-fidelity prototypes are especially valuable. They allow rapid iteration, cost-effective testing, and early validation of design principles aligned with the envisioned multi-agent system. The corresponding user interactions are further illustrated in the use case diagrams of Appendix A.

This section presents the design of low-fidelity prototypes for a personal wellness application aimed at supporting individuals experiencing anxiety and panic episodes. The prototypes were developed using Figma, a vector-based design and prototyping tool widely employed for early-stage interface design.

The initial screens include registration and login modules, both requiring only a username and password. This design choice deliberately avoids storing sensitive personal data, thereby reinforcing user privacy and minimizing data security risks. Following registration, users complete an initial assessment comprising a brief questionnaire and heart rate measurement. Importantly, subsequent logins prompt the user to repeat this assessment if it has not already been completed on the same day, ensuring regular monitoring.

Once the assessment is completed, the prototype directs users to three core options: Daily Assessment, Help, and Learning. The Help option is tailored for acute stress situations. Here, the system collects heart rate measurements to determine whether physiological arousal is elevated. If elevated, a relaxation exercise is initiated, followed by a second measurement to evaluate effectiveness. In cases where no improvement is observed, the prototype includes the option to notify a family member for additional support.

The Learning section is designed as a preventive and educational resource. It provides access to breathing exercises as well as personalized recommendations (e.g., books, music, or podcasts) that can support users in coping with anxiety. While the initial design scope was ambitious, the decision was made to focus on a smaller, more effective prototype emphasizing the detection of generalized anxiety and panic episodes, complemented by breathing exercises tailored to individual needs.

By concentrating on these fundamental components, the prototype has been designed to serve as a practical foundation for subsequent iterations of the system. It provides early insights into user interaction with the application’s key functionalities, ensuring that the final multi-agent architecture can be aligned not only with computational robustness but also with user-centered design principles.

For additional details regarding the prototype structure, see Appendix B.

3.2. System Architecture

Our system is organized into two fundamental components: the Frontend and the Backend. The Backend comprises the server, the Multi-Agent System, the learning microservice, and the NoSQL database. The Frontend is implemented as an Android application that manages user interaction through a graphical interface and communicates with the Backend via an HTTP server.

The Backend includes an HTTP server that functions as an intermediary between the Android application and the Multi-Agent System. This design choice was motivated by the lack of suitable libraries and the inherent complexity of deploying the JADE platform directly on Android. The server centralizes requests, manages authentication, and coordinates responses among the various Backend services.

A NoSQL database, specifically MongoDB, is employed to store user data. MongoDB was selected due to its flexibility in handling dynamic data structures, eliminating the need for a rigid Entity–Relationship model or predefined static schema. In parallel, the Multi-Agent System provides distributed logic through autonomous agents that interact with one another to address complex tasks. Complementing this, the learning microservice—developed in Python 3.13.2 to take advantage of the maturity of its machine learning libraries—implements the structure and logic required for reinforcement learning. This microservice communicates with the agents to achieve the central goal of the system: delivering adaptive behavior tailored to each user through continuous learning.

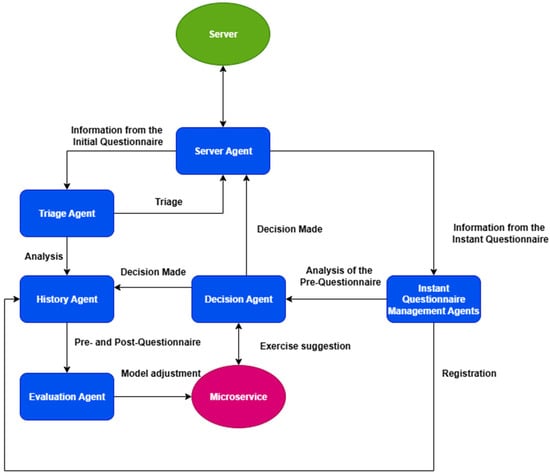

In summary, this division of responsibilities leverages the strengths of each technology, resulting in a scalable and flexible system capable of adapting to future modifications and extensions. The following sections provide a detailed description of each system component. An outline of the overall architecture is presented in Figure 1:

Figure 1. System Architecture.

3.3. Android Application Prototype: Design and Functional Overview

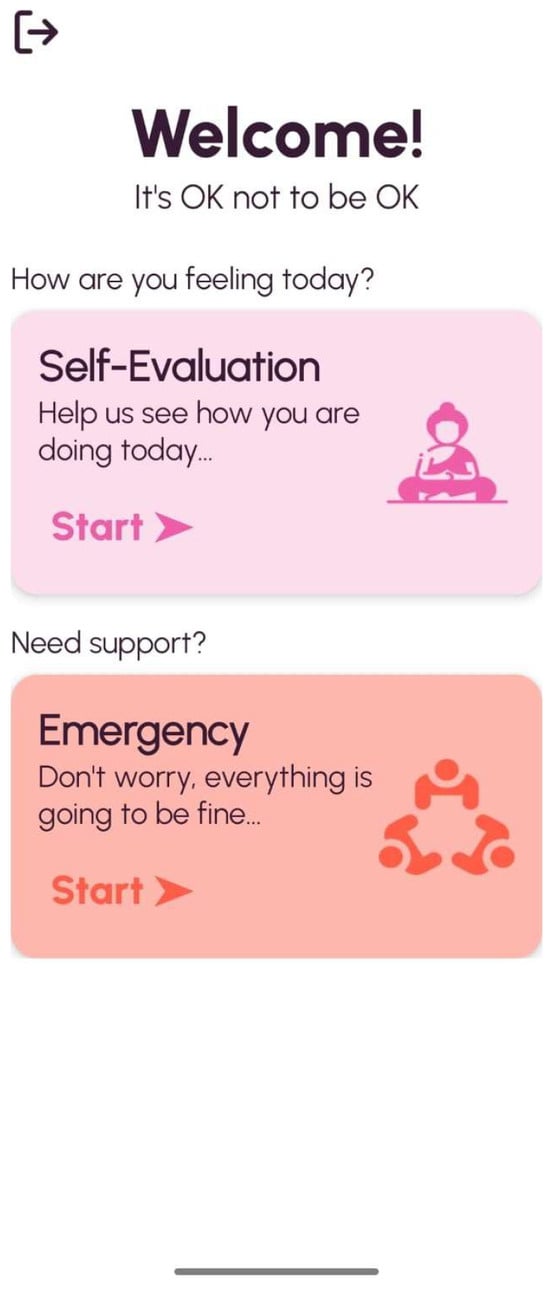

An Android application was developed based on the previously described low-fidelity prototype, with a strong emphasis on usability and accessibility. The interface was designed to be intuitive, incorporating a clean layout, recognizable icons, logical navigation, and minimal cognitive load to avoid overwhelming users. The overall design prioritizes the user’s core needs, particularly providing calm and structured guidance during episodes of panic and anxiety, while adapting to the specific requirements of each individual. The following subsections describe the functionality of the main application screens.

The application begins with a home screen offering login and registration options. In both cases, a username and password are required. During login, the database validates credentials to initiate a session; during registration, a new entry is created and the triage process begins. The triage consists of an initial questionnaire, based on the DSM-V [22], which defines the user’s main screen—tailored either to anxiety or to both anxiety and panic. If a user logs in without having completed the triage, the assessment is automatically reinitiated to ensure an accurate configuration of their main interface.

Following the triage, users are directed to their personalized main screen. For individuals identified with generalized anxiety disorder, a single option is displayed: Self-Assessment. This function measures the user’s current anxiety level and suggests appropriate strategies for relief. For those with panic disorder, an additional option, Emergency, is provided to guide users through acute panic episodes or imminent panic attacks, complementing the Self-Assessment functionality.

Selecting either option initiates a standardized evaluation. The State-Trait Anxiety Inventory (STAI) [23] is used to assess momentary anxiety levels, while a DSM-V [22] symptom-based questionnaire is employed to detect panic episodes. Upon completion, the system prompts a heart rate measurement to capture physiological correlates of the reported symptoms.

Heart rate detection leverages the phone’s rear camera. When a finger is placed on the lens, light absorption fluctuates in synchrony with blood flow, altering image intensity. These variations are processed to extract a photoplethysmographic signal. The Fast Fourier Transform (FFT) algorithm is then applied to determine the dominant frequency, which corresponds to the user’s heart rate [24].
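As an illustration only, the frequency-analysis step can be sketched in Python as follows. For the sake of a dependency-free example, the sketch evaluates a direct discrete Fourier transform at each candidate rate within a plausible heart-rate band instead of calling an FFT routine; the underlying idea (pick the frequency with the largest spectral power) is the same. The function name, the 40 to 200 bpm search band, and the synthetic trace are assumptions made for this example and are not details of the implemented application.

```python
import math


def estimate_heart_rate_bpm(intensity, fps, min_bpm=40, max_bpm=200):
    """Return the dominant rate (beats per minute) in a camera intensity trace."""
    n = len(intensity)
    mean = sum(intensity) / n
    centered = [v - mean for v in intensity]  # remove the constant brightness level

    best_bpm, best_power = None, -1.0
    for bpm in range(min_bpm, max_bpm + 1):
        freq_hz = bpm / 60.0
        # Single-frequency discrete Fourier transform evaluated at freq_hz.
        re = sum(v * math.cos(2 * math.pi * freq_hz * i / fps)
                 for i, v in enumerate(centered))
        im = sum(v * math.sin(2 * math.pi * freq_hz * i / fps)
                 for i, v in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_bpm, best_power = bpm, power
    return best_bpm


# Synthetic 10-second trace at 30 frames per second with a 1.2 Hz pulse.
fps = 30
trace = [128 + 5 * math.sin(2 * math.pi * 1.2 * i / fps) for i in range(10 * fps)]
estimated_bpm = estimate_heart_rate_bpm(trace, fps)
print(estimated_bpm)
```

Restricting the search to a physiological band is a design choice of this sketch: it keeps slow lighting drift and high-frequency sensor noise from being mistaken for the pulse. A real camera signal would typically also need filtering before this step.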

Based on the evaluation results, one of two breathing-based interventions is launched, adapted to the user’s condition. Both exercises are derived from Anxiety and Overarousal [25].

Abbreviated Training Procedure: The user is guided to assume a comfortable position and follow instructions for a structured breathing routine. This involves controlled inhalation, breath-holding, and exhalation cycles. Parameters such as duration and repetition count are dynamically adjusted to the individual.

Relaxing Breathing Procedure: Similar in structure, this exercise emphasizes awareness of bodily sensations during breathing. Users are guided through cycles of inhalation, holding, exhalation, and pauses, with adjustments tailored to user response and pacing. After completion, the system redirects the user to the evaluation screen to assess improvement. The exercise may be repeated or adapted until the user’s symptoms subside.

This design ensures that users receive personalized, adaptive interventions during episodes of anxiety or panic while maintaining a simple and supportive interaction flow. Detailed interface layouts are provided in Appendix B.

3.4. Backend and Multi-Agent System Architecture

The system includes an HTTP server implemented with Spring Boot, which acts as an intermediary between clients and the Multi-Agent System. Spring Boot was chosen due to its advantages in simplifying deployment and configuration. Tomcat is embedded within the JAR, allowing the server to run directly via java -jar, without the need to deploy WAR files in an external container. Furthermore, dependencies such as spring-boot-starter-security provide automated configuration, significantly reducing development complexity.

The server incorporates an authentication and session management filter using JSON Web Tokens (JWT) [26]. Specifically, the JWTAuthorizationFilter intercepts each request, extracts the Authorization header containing the JWT, validates the signature and expiration date, and, if valid, loads an authentication object into the Spring Security context.

The communication design emphasizes decoupling and asynchrony. The Android client sends an HTTP request, which the server converts into a domain object (e.g., TriageRequest, PanicRequest). Each object includes a callback that enables asynchronous responses once the corresponding agent completes its processing. In this way, HTTP threads are not blocked, ensuring scalability and efficient resource use.

The main endpoints are as follows:

- POST /auth/signup: Receives a JSON object with username and password and registers the user in the database. If the username already exists, it returns an error; otherwise, it delegates internally to the login process to return a new JWT.

- POST /auth/login: Validates credentials using Spring’s AuthenticationManager. If successful, the server generates a signed token (JWT) with an expiration date, representing the authenticated session.

- Other routes, such as /triage, /analysis, and /exercise: Protected routes, validated by the JWT filter. The decoded payload and username are processed, and the request is forwarded to the Multi-Agent System.

Communication between the server and the Multi-Agent System is managed asynchronously. When a client request is received, the controller constructs an object that includes a callback to be executed once the agent responds. The request is first captured by the Server Agent, which assigns a unique conversation identifier. This identifier is then included in the message sent to the appropriate agent. Once the response is available, the callback is triggered, completing the HTTP transaction.

This design ensures that HTTP threads are not blocked, allowing agents to run in parallel or even on different nodes. Consequently, the business logic resides within specialized agents, while the server functions as a secure and efficient gateway.
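The callback mechanism described above can be sketched as a small registry keyed by conversation identifier. The following Python fragment is illustrative only: the class name, the method names, and the message fields are hypothetical, and the actual system relies on JADE agents rather than on this simplified in-memory structure.

```python
import uuid


class ServerAgentSketch:
    """Hypothetical stand-in for the Server Agent's request bookkeeping."""

    def __init__(self):
        self._pending = {}  # conversation identifier -> callback
        self.outbox = []    # messages that would be sent to other agents

    def submit(self, target_agent, request, callback):
        """Register a callback and queue the request for the target agent."""
        conversation_id = str(uuid.uuid4())
        self._pending[conversation_id] = callback
        self.outbox.append({
            "to": target_agent,
            "conversation_id": conversation_id,
            "content": request,
        })
        return conversation_id

    def on_reply(self, conversation_id, result):
        """Run the stored callback once; return False for unknown identifiers."""
        callback = self._pending.pop(conversation_id, None)
        if callback is None:
            return False
        callback(result)
        return True


server_agent = ServerAgentSketch()
responses = []
cid = server_agent.submit("triage", {"username": "alice"}, responses.append)
server_agent.on_reply(cid, {"screen": "anxiety"})
print(responses)
```

Because the callback is removed from the registry when it fires, a duplicate or late reply with the same identifier is ignored instead of completing the HTTP transaction twice.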

User data are stored in MongoDB, a NoSQL database chosen for its flexibility in storing data without a fixed relational schema. The database has three collections:

- “users”: Stores every user of the system, with a username, a unique identifier, and a hash of the password for user security.

- “history”: Stores all interaction and questionnaire data for each user, acting as a session log of each user’s events. It holds four types of data: “AnxietyEvaluation”, which records the instant anxiety questionnaire; “PanicEvaluation”, which records the instant panic questionnaire; “Decision”, which records the decision made for that user; and “TriageAnalysis”, which records whether the user suffers from anxiety or from both anxiety and panic and therefore also defines the main screen. Every entry is stored with the unique identifier of the corresponding user, so that records can be filtered by data type and by user.

- “model”: Stores the learning model for each user.

In short, this database offers adaptability, performance, security, and personalization, and it records every interaction so that each user’s activity in the system can be traced in full.
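To make the structure of the history collection more concrete, the following Python sketch builds entries as plain dictionaries and filters them by user and data type. The field names (userId, type, timestamp, data) and both helper functions are assumptions introduced for illustration; the text does not specify the stored document layout.

```python
from datetime import datetime, timezone

# The four data types named in the text for the history collection.
HISTORY_TYPES = {"AnxietyEvaluation", "PanicEvaluation", "Decision", "TriageAnalysis"}


def history_entry(user_id, entry_type, data):
    """Build one history document (hypothetical field names)."""
    if entry_type not in HISTORY_TYPES:
        raise ValueError(f"unknown history type: {entry_type}")
    return {
        "userId": user_id,
        "type": entry_type,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "data": data,
    }


def filter_history(entries, user_id, entry_type):
    """Select the entries of one data type that belong to one user."""
    return [e for e in entries if e["userId"] == user_id and e["type"] == entry_type]


entries = [
    history_entry("u1", "TriageAnalysis", {"screen": "anxiety"}),
    history_entry("u1", "AnxietyEvaluation", {"score": 41, "heartRate": 88}),
    history_entry("u2", "AnxietyEvaluation", {"score": 30, "heartRate": 70}),
]
print(filter_history(entries, "u1", "AnxietyEvaluation"))
```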

Multi-Agent Systems (MAS) are computational frameworks in which independent entities, or agents, collaborate to solve complex tasks in a distributed, adaptive manner. The architecture, depicted in Figure 2, was designed to optimize interaction and information analysis by coordinating specialized agents with well-defined roles:

Figure 2. Multi-Agent System Architecture.

- Server Agent: Manages requests received from the HTTP server and delegates them to the appropriate agents.

- Triage Agent: Performs user classification based on initial assessments.

- History Agent: Manages persistent storage and retrieval of user data.

- Instant Questionnaire Management Agents: Conduct immediate user evaluations through questionnaires.

- Decision Agent: Determines the most appropriate strategy for each user, based on historical and current data.

- Evaluation Agent: Updates and refines the learning model to adapt to individual users.

The interaction among these agents (according to Figure 2) ensures a structured and efficient flow of information—from data collection to decision-making—enabling real-time adaptation to user needs. This distributed architecture enhances scalability, resilience, and personalization, while separating the business logic from the server infrastructure.

3.5. Types of Agents and Their Responsibilities

3.5.1. Server Agent

The Server Agent acts as the primary intermediary between the HTTP server and the rest of the agents in the system. Its core responsibility is to encapsulate incoming client requests into objects that include internal callbacks, enabling asynchronous responses once processing is complete. Upon receiving a request from the server, the Server Agent forwards it to the appropriate specialized agent, such as the Triage Agent, or the agents responsible for anxiety and panic analysis. Similarly, it collects responses from other agents, for instance, the Triage Agent, which determines the user’s interface configuration, or the Decision Agent, which specifies the exercise to be performed along with its corresponding parameters.

By delegating communication tasks to the Server Agent, the remaining agents can focus on their domain-specific responsibilities, operating in parallel or even on distributed nodes. This separation enhances system modularity, scalability, and efficiency, while decoupling server-side communication from the internal logic of the Multi-Agent System.

3.5.2. Triage Agent

The Triage Agent serves as the entry point for user-specific processing within the Multi-Agent System. It receives from the Server Agent the results of the initial questionnaire completed by the user, designed to identify symptoms of anxiety, panic, or both. The agent evaluates two numerical scores associated with each category and interprets them according to the clinical thresholds defined in the DSM-V [27]. Based on this classification, the Triage Agent determines the most appropriate user interface, either a screen specific to anxiety or one that combines anxiety and panic (Table 1 and Table 2).

Table 1. Initial anxiety assessment results.

Table 2. Initial panic assessment results.

Once this decision is reached, the Triage Agent records the outcome in the “TriageAnalysis” data structure within the History Agent, associating it with the user’s unique identifier. In parallel, the decision is transmitted back to the Server Agent, which then communicates with the Android application to render the appropriate screen for the user.

3.5.3. History Agent

The History Agent functions as the intermediary between the history collection in the database and the Multi-Agent System, managing both insertions and queries.

With respect to insertions, several agents interact with the History Agent to ensure consistent data storage. The Triage Agent transmits the TriageAnalysis data type, representing the outcome of the initial classification (the anxiety screen or the combined anxiety and panic screen). The Instant Questionnaire Management Agents contribute the PanicEvaluation and AnxietyEvaluation data types, corresponding to real-time assessments of the user’s panic or anxiety levels. Finally, the Decision Agent provides the Decision data type, which contains the selected exercise along with its parameters, tailored to the specific user.

Regarding queries, the Evaluation Agent retrieves pre- and post-assessment records from the history collection through the History Agent. These records are subsequently used to update and refine the models managed by the learning microservice.

In summary, the History Agent ensures consistency, persistence, and reliability of user data, making it a cornerstone for decision-making processes within the Multi-Agent System.

3.5.4. Instant Questionnaire Management Agents

The Instant Panic Questionnaire Management Agent is responsible for evaluating whether a user is experiencing a panic attack. It collects two key inputs: (i) the score from the panic questionnaire completed within the application, and (ii) the user’s heart rate measurement. These are encapsulated within a PanicEvaluation object.

The questionnaire is based on the diagnostic criteria defined in the DSM-V [22], which specifies that the presence of four or more symptoms is indicative of a panic attack. The agent transmits both the questionnaire score and the heart rate data to the History Agent, which stores and normalizes the information, and to the Decision Agent, which uses it to determine the most appropriate intervention strategy.

The normalization procedure is defined as follows:

- score: raw questionnaire score obtained from the user.

- normalizedPanicScore: normalized representation of the score used to standardize decision-making across users.

This design ensures that the Decision Agent operates on consistent, normalized data, facilitating reliable and individualized recommendations.

3.5.5. Instant Anxiety Questionnaire Management Agent

The Instant Anxiety Questionnaire Management Agent is responsible for evaluating whether a user may be experiencing an anxiety attack. To perform this task, it collects two types of input data from the server: (i) the user’s score from the anxiety questionnaire and (ii) the user’s heart rate measurement.

The questionnaire is structured into two distinct sub-scores:

- Positive items (scoreP): Questions that do not reflect anxiety symptoms.

- Negative items (scoreN): Questions specifically associated with anxiety-related symptoms.

Following the methodology of the State–Trait Anxiety Inventory (STAI) [23], the agent computes an overall score using the formula score = 30 − scoreN + scoreP, where scoreN represents the negative sub-score linked to anxiety symptoms, and scoreP represents the positive sub-score.

This calculated score, combined with the user’s heart rate, is encapsulated into the AnxietyEvaluation data object. The information is then transmitted to the History Agent for storage and normalization, and simultaneously to the Decision Agent, which uses it to select appropriate intervention strategies.

The normalization (below), where score is the questionnaire score and normalizedAnxietyScore is the normalized and standardized score, is used to ensure comparability and consistency across users:

Through this procedure, the Instant Anxiety Questionnaire Management Agent enables the system to integrate subjective (self-reported questionnaire data) and objective (heart rate) indicators, ensuring robust and adaptive assessment of acute anxiety states.

3.5.6. Decision Agent

The Decision Agent is responsible for determining which exercise a user should perform and with which parameters, with the aim of alleviating anxiety or panic symptoms. It receives PanicEvaluation or AnxietyEvaluation objects from the Instant Questionnaire Management Agents. Based on the questionnaire data and the user’s heart rate, the agent evaluates whether an intervention is required.

If the panic questionnaire score is below 4, or the anxiety questionnaire score is ≤35, combined with a heart rate lower than 80 beats per minute, the Decision Agent concludes that no exercise is necessary and informs the Server Agent accordingly. Otherwise, the agent issues a request to the learning microservice using the suggest endpoint. The microservice then returns the most suitable exercise along with personalized parameters. This decision is sent back to both the Server Agent (to be displayed in the application) and the History Agent, encapsulated in a Decision object. In parallel, the updated model is inserted into the model collection of the database.
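The threshold rule above can be sketched as follows. This is an illustrative reading rather than the system's actual code: it assumes that the questionnaire condition and the heart rate condition must both hold for the agent to skip the exercise, and the `Evaluation` type, its field names, and `needs_intervention` are hypothetical.

```python
from dataclasses import dataclass
from typing import Literal

# Thresholds taken from the text.
PANIC_SYMPTOM_THRESHOLD = 4    # panic score below 4: low
ANXIETY_SCORE_THRESHOLD = 35   # anxiety score <= 35: low
HEART_RATE_THRESHOLD_BPM = 80  # heart rate below 80 bpm: calm


@dataclass(frozen=True)
class Evaluation:
    """Hypothetical stand-in for PanicEvaluation / AnxietyEvaluation."""
    kind: Literal["panic", "anxiety"]
    score: int
    heart_rate: int


def needs_intervention(ev: Evaluation) -> bool:
    """Return False only when the score is low AND the heart rate is calm."""
    if ev.kind == "panic":
        low_score = ev.score < PANIC_SYMPTOM_THRESHOLD
    else:
        low_score = ev.score <= ANXIETY_SCORE_THRESHOLD
    calm_heart = ev.heart_rate < HEART_RATE_THRESHOLD_BPM
    return not (low_score and calm_heart)
```

Under this reading, a low questionnaire score with an elevated heart rate still triggers a call to the suggest endpoint.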

3.5.7. Evaluation Agent

The Evaluation Agent is responsible for refining the learning model associated with each user. Whenever the History Agent registers a new entry in the history collection, it verifies whether the element is of type AnxietyEvaluation or PanicEvaluation and whether a corresponding Decision object has already been stored. If both conditions are satisfied, the History Agent provides the Evaluation Agent with two elements: the pre-evaluation (prior to the intervention) and the post-evaluation (the most recent entry).

Using these objects, the Evaluation Agent submits a request to the learning microservice via the feedback endpoint. This request includes both questionnaire scores and heart rate values, which are then used to adjust the learning model. Once updated, the microservice returns the new model to the Evaluation Agent, which subsequently stores it in the model collection of the database.

3.5.8. Learning Microservice

The learning microservice is the computational component where Reinforcement Learning (RL) is implemented, enabling continuous personalization of the system for each user. Python was chosen for its mature machine learning ecosystem and extensive library support. The microservice provides two main functions:

- suggest: Returns the exercise recommended for a specific user, including tailored parameters. This endpoint is called by the Decision Agent.

- feedback: Updates the model based on post-intervention outcomes, using a reward function. This endpoint is called by the Evaluation Agent.

The decision-making model combines two algorithms: Contextual Bandits (LinUCB) for exercise selection and Bayesian Optimization for parameter adjustment.

3.5.9. Contextual Bandits—LinUCB

Contextual Bandits algorithms operate by observing a context at each step, selecting an action, and receiving a reward [28,29]. In this system, the context consists of the user’s heart rate and questionnaire score, while the actions are the two available interventions: the Abbreviated Training Procedure and the Relaxing Breathing Procedure.

Once an action is executed, the reward is calculated as:

Reward = (newP − oldP) + (oldBpm − newBpm)

where newP and oldP represent the current and previous questionnaire scores, and newBpm and oldBpm represent the current and previous heart rate values.

This reward guides the algorithm in associating contexts with optimal actions, balancing exploration and exploitation. The system uses LinUCB, which assumes that expected rewards are a linear combination of the context, thereby selecting actions that maximize expected outcomes. The implementation was carried out using the contextualbandits library [28].
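To make the mechanism concrete, the following is a minimal from-scratch sketch of the reward and of a disjoint LinUCB learner in pure Python. It does not reproduce the contextualbandits library API used in the implementation. The context layout (a bias term plus scaled heart rate and scaled questionnaire score), the exploration weight `alpha`, and all class and function names are assumptions made for illustration.

```python
import math
from typing import List, Sequence

ACTIONS = ["Abbreviated Training Procedure", "Relaxing Breathing Procedure"]


def reward(new_p: float, old_p: float, new_bpm: float, old_bpm: float) -> float:
    """Reward as given in the text: (newP - oldP) + (oldBpm - newBpm)."""
    return (new_p - old_p) + (old_bpm - new_bpm)


class LinUCBArm:
    """One linear model per action (disjoint LinUCB)."""

    def __init__(self, dim: int) -> None:
        # A starts as the identity matrix, so its inverse is the identity too.
        self.a_inv = [[1.0 if i == j else 0.0 for j in range(dim)] for i in range(dim)]
        self.b = [0.0] * dim

    def _a_inv_times(self, v: Sequence[float]) -> List[float]:
        return [sum(row[j] * v[j] for j in range(len(v))) for row in self.a_inv]

    def ucb(self, x: Sequence[float], alpha: float) -> float:
        """Estimated reward plus an exploration bonus for context x."""
        theta = self._a_inv_times(self.b)  # ridge-regression weights
        a_inv_x = self._a_inv_times(x)
        mean = sum(t * xi for t, xi in zip(theta, x))
        width = math.sqrt(sum(xi * v for xi, v in zip(x, a_inv_x)))
        return mean + alpha * width

    def update(self, x: Sequence[float], r: float) -> None:
        """Apply A += x x^T to the inverse (Sherman-Morrison) and b += r x."""
        a_inv_x = self._a_inv_times(x)
        denom = 1.0 + sum(xi * v for xi, v in zip(x, a_inv_x))
        n = len(x)
        for i in range(n):
            for j in range(n):
                self.a_inv[i][j] -= a_inv_x[i] * a_inv_x[j] / denom
        self.b = [bi + r * xi for bi, xi in zip(self.b, x)]


class LinUCB:
    def __init__(self, n_actions: int, dim: int, alpha: float = 1.0) -> None:
        self.arms = [LinUCBArm(dim) for _ in range(n_actions)]
        self.alpha = alpha

    def select(self, x: Sequence[float]) -> int:
        """Pick the action with the highest upper confidence bound."""
        scores = [arm.ucb(x, self.alpha) for arm in self.arms]
        return max(range(len(scores)), key=scores.__getitem__)

    def update(self, action: int, x: Sequence[float], r: float) -> None:
        self.arms[action].update(x, r)
```

In this sketch, a call such as `LinUCB(n_actions=2, dim=3).select([1.0, 0.9, 0.4])` returns an index into `ACTIONS`. The bonus term shrinks as an action accumulates observations for similar contexts, which is how the exploration and exploitation balance arises.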

3.5.10. Bayesian Optimization

Bayesian Optimization is a method for optimizing functions that are costly to evaluate [30]. In this case, the function corresponds to the parameter configuration of the selected exercise. The model is represented as a Gaussian process. After each intervention, the observed improvement is translated into a reward, which updates the Gaussian process. Based on this feedback, the optimizer decides whether to explore new parameter combinations or exploit those previously shown to be effective.

This approach enables the system to efficiently identify parameter settings (e.g., duration, breathing cycles, intensity) that maximize user benefit. The implementation was performed using the Optuna library [31].

3.5.11. Rationale for the Hybrid Approach

The combination of LinUCB and Bayesian Optimization was selected to address two complementary challenges: (i) exercise selection based on immediate contextual signals (heart rate and questionnaire scores), and (ii) fine-tuning of exercise parameters based on short-term outcomes. Together, these algorithms provide a robust reinforcement learning framework that supports personalization, adaptability, and efficiency. Notably, they require only a small number of trials to converge toward optimal configurations and are capable of dynamically adjusting to changes in user states, thereby ensuring reliable adaptation in real time.

4. Multi-Agent System Validation and Results

In this section, we present the validation of the Multi-Agent System (MAS) integrated with the Reinforcement Learning (RL) algorithm. The evaluation focuses on a comprehensive set of performance indicators: execution time, success rate, responsiveness, adaptability, cooperation level, communication efficiency, performance degradation, robustness, redundancy, and recovery time. These parameters collectively assess not only the efficiency of the system under normal conditions but also its behavior in scenarios of complexity, concurrency, and potential failure.

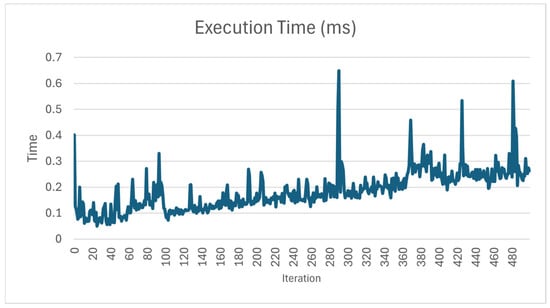

4.1. Execution Time

Execution time was measured as the interval between a user request and the corresponding system response. Three representative user cases were tested: a user with anxiety symptoms; a user with panic symptoms, and a user without symptoms. Each case was executed over 500 iterations to ensure stability and representativeness of the results.

In the first case, the system detects that the user is showing symptoms of anxiety and invokes the Multi-Agent System to assess their anxiety level and recommend the most appropriate exercise. Figure 3 shows the execution time for this case. The first iterations are slower because the cache is still being loaded; after that, response times stabilize rapidly and remain consistently low, with minimal fluctuations and no sustained peaks.

Figure 3.

Execution time for a user with anxiety.

The second case concerns a user with panic symptoms (see Figure 4). The system detects indicators of panic, invokes the Multi-Agent System, and repeats the same process as in the previous case. The average time is similar to that of the anxiety case: the cache is loaded during the first iterations, after which the execution time decreases and remains stable, with small occasional peaks.

Figure 4.

Execution time for a user with panic.

Performance trends were analogous to the anxiety scenario. After cache initialization, response times converged to stable values, with only minor peaks that did not compromise overall responsiveness.

Finally, Figure 5 shows the execution time for a user without symptoms. In this case, the system detects that the user does not show symptoms of anxiety or panic, and therefore no exercises are recommended.

Figure 5.

Execution time for a user without symptoms.

Because there is no need to load specialized agents or access the database, the system only checks the user’s status. This scenario therefore yielded the fastest execution times: cache effects were negligible, and peaks were virtually absent.

The results confirm that execution time is primarily influenced by cache initialization and the complexity of agent logic. While anxiety and panic cases introduce slightly higher variability due to additional processing, all three scenarios demonstrate stable and efficient performance once the cache is loaded.

4.2. Success Rate

This second parameter evaluates the learning capacity of the Reinforcement Learning algorithm in conjunction with the Multi-Agent System, specifically how often it correctly selects the exercise or procedure required to improve the user’s symptoms. As with the previous parameter, each test was repeated 500 times to stabilize the results and obtain a meaningful average. For each scenario, the average number of executions was also recorded, that is, the number of iterations required until the algorithm proposed the correct solution and the user’s state stabilized. Together, the average number of executions and the success rate (the ratio of successful executions to total executions) provide an overall indication of the system’s effectiveness across different scenarios.

At each step, the system evaluates the user’s condition using questionnaire scores and heart rate values, and then proposes an exercise with specific parameters. A successful execution is defined as one in which the system’s recommendation coincides with the intervention appropriate for the user. If this condition is not met, the algorithm continues iterating until the correct exercise is identified. After 500 epochs, the average number of attempts and successful selections are calculated, yielding the average success rate.
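The two figures described above can be computed as in the sketch below. It assumes one reading of the protocol: each epoch ends with exactly one successful selection, preceded by zero or more unsuccessful attempts. The function names and the input format (a list with the number of attempts per epoch) are hypothetical.

```python
from typing import Sequence


def average_attempts(attempts_per_epoch: Sequence[int]) -> float:
    """Mean number of executions needed to reach the correct exercise."""
    return sum(attempts_per_epoch) / len(attempts_per_epoch)


def success_rate(attempts_per_epoch: Sequence[int]) -> float:
    """Successful executions divided by total executions.

    Assumes every epoch contains exactly one success, so the number of
    successes equals the number of epochs.
    """
    if any(a < 1 for a in attempts_per_epoch):
        raise ValueError("each epoch needs at least one attempt")
    return len(attempts_per_epoch) / sum(attempts_per_epoch)
```

For instance, four epochs that needed 1, 1, 2, and 1 attempts give an average of 1.25 attempts and a success rate of 4/5 = 0.8.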

As shown in Table A1 (Appendix C), the success rate for users who benefit from the Abbreviated Training Procedure is close to 100%, indicating that the system detects anxiety patterns effectively and recommends the appropriate exercise almost immediately.

A similar outcome is observed in Table A2 (Appendix C), where the success rate for users with anxiety who benefit from the Relaxing Breathing Procedure is also very close to 100%. This high performance can be attributed to the relative simplicity of identifying the correct exercise without the added complexity of parameter optimization.

In contrast, when the system must not only select the exercise but also determine specific parameters, performance shows a slight reduction. For example, the success rate in Table A3 (Appendix C), corresponding to the Abbreviated Training Procedure with parameter adjustments, is somewhat lower but still remains at a very high level. Similarly, Table A4 (Appendix C) demonstrates that the Relaxing Breathing Procedure with parameter specification is also highly effective, although its performance is influenced by the inherent randomness of the algorithm.

In summary, across all scenarios the system exhibits a high learning capacity, achieving reliable and accurate results from the early stages of user interaction.

4.3. Agent Reactivity Analysis

The purpose of this parameter is to measure the time each agent in the Multi-Agent System (MAS) requires to process an incoming request and issue a corresponding response to other agents. This measurement provides insights into the reaction speed of each agent, the efficiency of the information flow, and the identification of potential bottlenecks—agents that require excessive time to respond and could compromise overall system performance.

The methodology was as follows: upon receiving a request, the system records the timestamp; upon sending the response, a second timestamp is recorded. The difference between these two values represents the response time for that event. Averaging across all events yields the mean response time for each agent. This process was conducted across four scenarios, representing the most complex system configurations.
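The two-timestamp measurement described above can be sketched as a small wrapper around an agent's request handler. The `ResponseTimer` class, its method names, and the injectable clock are assumptions made for illustration and are not taken from the system's code.

```python
import time
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List


class ResponseTimer:
    """Records per-agent response times in milliseconds."""

    def __init__(self, clock: Callable[[], float] = time.perf_counter) -> None:
        self._clock = clock
        self._samples_ms: DefaultDict[str, List[float]] = defaultdict(list)

    def handle(self, agent: str, handler: Callable[[Any], Any], request: Any) -> Any:
        received = self._clock()   # timestamp when the request arrives
        response = handler(request)
        sent = self._clock()       # timestamp when the response is sent
        self._samples_ms[agent].append((sent - received) * 1000.0)
        return response

    def mean_ms(self, agent: str) -> float:
        """Average response time over all recorded events for one agent."""
        samples = self._samples_ms.get(agent)
        if not samples:
            raise ValueError(f"no samples recorded for agent {agent!r}")
        return sum(samples) / len(samples)
```

A monotonic clock such as `time.perf_counter` is used here because sub-millisecond intervals, like those reported for the lightweight agents, are sensitive to wall-clock adjustments.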

4.3.1. Case 1: User with Anxiety Improved Through the Abbreviated Training Procedure (Table A5, Table A6, Table A7 and Table A8, Appendix C)

According to the data presented in Appendix C, the response times highlight both the efficiency of lightweight agents and the comparatively higher load of decision-making components. The Instant Anxiety Questionnaire Agent responded in less than 0.30 ms on average, both to the Decision Agent and to the History Agent. The Decision Agent exhibited response times of approximately 325 ms when interacting with the Server Agent and the History Agent, and the Evaluation Agent required the longest processing time, averaging 343.9 ms when interacting with the database. The Server Agent maintained near-instantaneous responses of 0.24 ms. Taken together, these results confirm that the architecture ensures real-time responsiveness, with negligible latency for lightweight agents and acceptable delays for computationally intensive components. While the Evaluation Agent represents the main bottleneck due to its reliance on database operations, overall performance remains well within the limits required for interactive digital health applications, thereby validating the feasibility of the proposed Multi-Agent System in supporting anxiety management tasks.

4.3.2. Case 2: User with Anxiety Improved Through the Relaxation Procedure (Table A9, Table A10, Table A11 and Table A12, Appendix C)

According to the data presented in Appendix C, the second evaluation scenario confirms the consistency of agent performance across different conditions. The Instant Anxiety Questionnaire Agent again demonstrated sub-millisecond responsiveness, 0.31 ms to the Decision Agent and 0.27 ms to the History Agent, ensuring that initial assessments are processed with negligible delay. The Decision Agent required approximately 312 ms, while the Evaluation Agent averaged 323.8 ms when interacting with the database, reflecting the higher computational cost of decision-making and learning tasks. The Server Agent continued to maintain very low latency at 0.24 ms. Taken together, these results reinforce the stability of the Multi-Agent System, highlighting its ability to deliver rapid responses for lightweight agents and acceptable latencies for more complex operations, thereby ensuring reliable real-time support for anxiety management applications.

4.3.3. Case 3: User with Anxiety Improved Through the Abbreviated Training Procedure with Specific Parameters (Table A13, Table A14, Table A15 and Table A16, Appendix C)

According to the data presented in Appendix C, the third evaluation scenario again demonstrates the reliability of the system under increased task complexity. The Instant Anxiety Questionnaire Agent remained extremely fast, averaging 0.31 ms to the Decision Agent and 0.26 ms to the History Agent. The Decision Agent exhibited stable response times in the range of 320–321 ms, while the Evaluation Agent required approximately 340 ms when interacting with the database, reflecting its heavier computational load. The Server Agent maintained near-instantaneous responsiveness at 0.25 ms. Overall, these results confirm that even as complexity increases through the inclusion of parameterized tasks, the Multi-Agent System sustains efficient operation, with negligible delays for lightweight agents and acceptable latencies for decision-making and evaluation processes, thereby reinforcing its suitability for real-time anxiety management applications.

4.3.4. Case 4: User with Anxiety Improved Through the Relaxation Procedure with Specific Parameters (Table A17, Table A18, Table A19 and Table A20, Appendix C)

According to the data presented in Appendix C, the fourth evaluation scenario further confirms the consistency and efficiency of the system. The Instant Anxiety Questionnaire Agent maintained stable sub-millisecond performance, responding within 0.32 ms. The Decision Agent showed the lowest response times observed across all scenarios, averaging 308 ms, while the Evaluation Agent recorded average times of 324.6 ms when interacting with the database. The Server Agent continued to perform nearly instantaneously, at 0.26 ms. Taken together, these results highlight the robustness of the Multi-Agent System, demonstrating that even under parameterized conditions, agent performance remains predictable and efficient. The reduced response time of the Decision Agent in this scenario further reinforces the system’s capacity to sustain real-time operation, making it well suited for deployment in anxiety management applications where timely responses are critical.

4.3.5. Comparative Analysis, Interpretation and Future Work

Across all four scenarios, two consistent patterns emerge:

- Near-Instantaneous Agents: Both the Instant Anxiety Questionnaire Agent and the Server Agent demonstrate reaction times of roughly 0.3 ms or less (0.24–0.32 ms across the four scenarios), effectively imperceptible within the system’s operation. This ensures that basic communication and initial assessments introduce negligible latency.

- Bottleneck Agents: The Decision Agent and the Evaluation Agent consistently exhibit significantly higher response times, in the ranges 308–325 ms and 323–344 ms, respectively. While the Decision Agent’s delay is largely attributable to database interactions, the Evaluation Agent introduces the greatest latency, making it the primary bottleneck of the MAS.

From a practical standpoint, these results can be considered highly positive. With every agent responding in well under half a second, the system achieves the responsiveness required for real-time digital interventions in anxiety management. The fact that results remain stable across increasingly complex scenarios further supports the robustness of the MAS.

However, the Evaluation Agent’s dependency on database operations clearly positions it as the main bottleneck in the current architecture. While acceptable in the context of this initial prototype, this limitation highlights the need for optimization strategies in future iterations. Potential improvements include:

- Enhancing database access efficiency (e.g., caching or asynchronous writes).

- Implementing parallelization strategies to distribute evaluation tasks.

- Incorporating distributed or replicated database architectures to improve scalability under high concurrency.

In summary, while the current prototype demonstrates strong performance and validates the feasibility of the proposed architecture, future development should focus on improving scalability and fault tolerance. The system is structurally prepared to support such enhancements, making this a promising direction for upcoming work.

4.4. Level of Cooperation

This parameter evaluates the degree of collaboration among the agents during the decision-making process to alleviate the user’s anxiety symptoms. A high level of cooperation indicates that the agents exchange information effectively and work together toward a shared solution. To measure this, scenarios involving the largest number of agents were analyzed.

In all cases, five agents participate during the decision-making stages. However, once the user improves, the intervention of all five agents is no longer required; at that point, only four agents remain active. Thus, the maximum level of cooperation is five agents, and the minimum is four.

Case 1—Abbreviated Training Procedure (Table A21): The system achieved an average cooperation level of 4.799, reflecting nearly full engagement of all five agents throughout the process.

Case 2—Relaxation Procedure (Table A22): Cooperation remained high, with an average of 4.8, indicating consistent collaboration among the agents.

Case 3—Abbreviated Training with parameters (Table A23): The average cooperation level was slightly reduced to 4.758. This decrease is attributable to the added complexity of parameter adjustment, which reduced the frequency of full participation by all five agents.

Case 4—Relaxation Procedure with parameters (Table A24): A similar trend was observed, with an average of 4.755, again due to the complexity introduced by parameterization.

In all scenarios, the average level of cooperation exceeded 4.75 agents, confirming that the system operates with high collaboration across agents. The slight reductions in parameterized cases reflect the increased specialization of agent activity, but do not compromise overall functionality. This demonstrates that the system is designed for collective problem-solving, with all agents actively contributing until the final stages of the intervention.
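The reported averages can be read as a share of steps with full participation. The sketch below assumes that every step involves either exactly four or exactly five agents, as the stated minimum and maximum suggest; the function names are hypothetical.

```python
from statistics import mean
from typing import Sequence


def cooperation_level(agents_per_step: Sequence[int]) -> float:
    """Average number of agents taking part per step."""
    return mean(agents_per_step)


def full_participation_share(average_level: float,
                             min_agents: int = 4,
                             max_agents: int = 5) -> float:
    """Fraction of steps in which all agents took part.

    Valid only if each step involves either min_agents or max_agents.
    """
    return (average_level - min_agents) / (max_agents - min_agents)
```

Under that assumption, an average of 4.799 corresponds to all five agents taking part in about 79.9% of the steps, and an average of 4.755 to about 75.5%.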

4.5. Communication Efficiency

This parameter assesses whether every message sent by an agent receives a corresponding response. Ensuring that requests are always matched with replies guarantees that there are no “orphan” or blocked messages, thereby validating the integrity of the communication network.

The metric was calculated by incrementing a counter for each message sent and each response received, using unique identifiers to pair requests and replies. At the end of testing, the total number of requests and responses was compared. Ideally, both values should match.
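A minimal sketch of this counting scheme is shown below. The `MessageLedger` class and its method names are assumptions made for illustration, as is the use of random UUIDs as the unique identifiers.

```python
import uuid
from typing import Set


class MessageLedger:
    """Pairs requests with responses by unique identifier."""

    def __init__(self) -> None:
        self.requests_sent = 0
        self.responses_received = 0
        self._pending: Set[str] = set()

    def record_request(self) -> str:
        """Count an outgoing request and return its identifier."""
        message_id = uuid.uuid4().hex
        self.requests_sent += 1
        self._pending.add(message_id)
        return message_id

    def record_response(self, message_id: str) -> None:
        """Count a response, which must match a pending request."""
        if message_id not in self._pending:
            raise KeyError(f"no pending request with id {message_id}")
        self._pending.remove(message_id)
        self.responses_received += 1

    def orphans(self) -> Set[str]:
        """Identifiers of requests that never received a response."""
        return set(self._pending)

    def is_balanced(self) -> bool:
        return self.requests_sent == self.responses_received and not self._pending
```

Pairing by identifier, rather than comparing totals alone, also catches the case where one request is answered twice while another is never answered.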

Case 1—Efficiency of communication between agents for a user with anxiety who improves their symptoms with the relaxation procedure (Table A25): 14,054 requests and 14,054 responses were recorded, confirming complete efficiency.

Case 2—Communication efficiency between agents for a user with anxiety who improves their symptoms with the abbreviated training procedure (Table A26): Communication remained perfectly balanced, with 14,090 requests and 14,090 responses.

Case 3—Efficiency of communication between agents for a user with anxiety who improves their symptoms with the abbreviated training procedure and specific parameters (Table A27): Efficiency was again complete, with 11,408 requests and 11,408 responses.

Case 4—Efficiency of communication between agents for a user with anxiety who improves their symptoms with the relaxation procedure and specific parameters (Table A28): The system maintained full efficiency with 11,282 requests and 11,282 responses.

The results confirm that the communication framework is fully reliable: every message sent by an agent received a corresponding reply in all tested scenarios. This ensures that no information is lost and that there are no deadlocks in agent interaction. Such communication integrity is essential for maintaining the scalability and robustness of the MAS, particularly as the system evolves to support higher levels of concurrency.

4.6. Adaptability

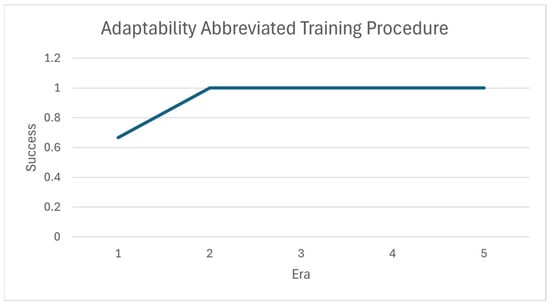

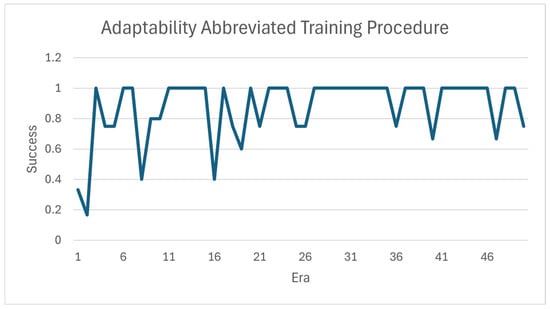

The objective of this parameter is to evaluate the adaptability of the Multi-Agent System (MAS) integrated with the Reinforcement Learning (RL) algorithm. Specifically, it measures the time required for the system to switch from one exercise to another during training. To this end, the system is initially trained on one exercise for a number of epochs and then transitioned to a second exercise, continuing training until it regains optimal performance. Two cases were considered: exercises without specific parameters and exercises with specific parameters. In both cases, success rates were recorded per episode to capture the dynamics of adaptation, illustrated across four diagrams.

4.6.1. Procedures Without Specific Parameters

In the first case, the system was trained for 5 epochs using the Abbreviated Training Procedure. After this period, the exercise was switched to the Relaxation Procedure, with training continuing for 60 epochs to assess the adaptation process.

Given the small action space, the system rapidly achieved maximum performance within only a few iterations (Figure 6).

Figure 6.

First training session: Abbreviated training procedure.

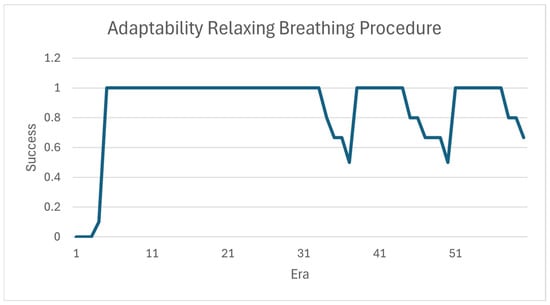

Upon switching exercises, the system exhibited a reset effect due to the environmental change. Nevertheless, it retained part of the knowledge acquired in the initial stage. Over successive epochs, the system gradually adapted, demonstrating effective knowledge transfer (Figure 7).

Figure 7.

Second training session: Relaxation procedure.

4.6.2. Procedures with Specific Parameters

In the second case, the system was trained for 50 epochs with the Abbreviated Training Procedure including specific parameters. Following this, the exercise was switched to the Relaxation Procedure with parameters, trained for an additional 60 epochs to evaluate adaptability under more complex conditions.

The introduction of parameters expanded the search space, increasing complexity. Despite this, the system was still able to achieve satisfactory performance (Figure 8).

Figure 8.

First training: Abbreviated training procedure with parameters.

At the start of the new training phase, the curve began at an intermediate level, reflecting partial retention of prior learning. However, parameter values required reassignment, extending the adaptation process (Figure 9).

Figure 9.

Second training session: Relaxation procedure with parameters.

Overall, the system demonstrated robust adaptability in both cases. Without parameters, adaptation was faster, as the smaller search space facilitated knowledge transfer between exercises. With parameters, adaptation was slower but still effective, confirming the system’s ability to manage more complex tasks. Importantly, results suggest that if the system is trained for too many epochs on a single exercise, it may overfit, reducing its ability to quickly relearn after switching tasks. This highlights a trade-off between rapid convergence and flexible adaptability, which is central to designing reinforcement-based multi-agent architectures.

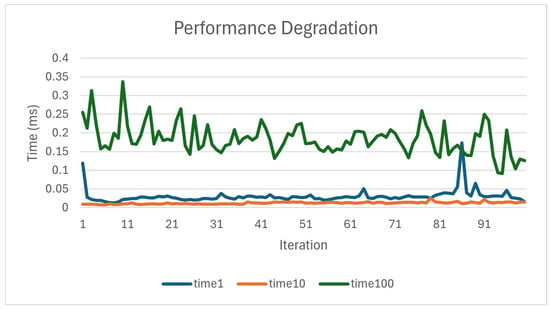

4.7. Performance Degradation

Performance degradation refers to the decline in the system’s ability to process requests as the workload increases, that is, as the number of concurrent users grows, leading to longer response times per request. Although the Multi-Agent System (MAS) handles individual iterations efficiently, this parameter highlights the saturation point of the database, particularly when numerous write operations are executed simultaneously.

For this evaluation, we tested cases involving users without symptoms, meaning that the system load was almost exclusively limited to database writes. As a result, the database’s capacity directly determined performance.

To conduct the measurement, three levels of concurrency were tested: 1 process, 10 processes, and 100 processes, each represented by a different color in Figure 10. With a single process, response time was virtually instantaneous. However, as concurrency increased, latency rose incrementally, reaching response times of approximately 600 ms under 100 concurrent processes.

Figure 10.

Performance degradation with users who have no symptoms.

In conclusion, the analysis of performance degradation reveals that while the Multi-Agent System itself maintains stable operation under increasing workloads, overall responsiveness becomes constrained by the database’s capacity to process concurrent write operations. The results show that response times remain acceptable at low and moderate loads but rise to approximately 600 ms under high concurrency, highlighting the database as the primary limiting factor. This finding confirms that the MAS architecture is fundamentally efficient, yet its scalability in real-world scenarios will depend on optimizing database performance through strategies such as distributed storage, asynchronous writes, or caching mechanisms. Thus, performance degradation in the current prototype should be understood not as a systemic flaw, but as a natural boundary that guides future improvements toward greater scalability and robustness.

5. Conclusions

As highlighted in the introduction, there is an urgent need to design intelligent digital systems capable of supporting individuals in managing anxiety episodes in real time, complementing traditional psychological and pharmacological approaches. The limitations of conventional treatments—including accessibility barriers, delays in care, and the lack of continuous personalized monitoring—underscore the importance of computational architectures that can provide scalable, adaptive, and user-centered solutions. Against this backdrop, this study has presented a Multi-Agent System (MAS) integrated with Reinforcement Learning (RL), specifically designed to help users autonomously manage peaks of anxiety through context-sensitive, non-pharmacological interventions, supporting daily emotional state detection and user self-assessment, as shown in Appendix D.

The validation of the system demonstrates several notable strengths. First, in terms of responsiveness, the majority of agents (such as the Instant Anxiety Questionnaire Agent and the Server Agent) achieved near-instantaneous reaction times (approximately 0.24–0.32 ms), ensuring seamless communication and real-time interaction. Even the Decision and Evaluation Agents, which introduce the highest computational load, consistently responded within 308–344 ms, well below the threshold of perceptible delay for interactive health applications. This confirms the suitability of the MAS for real-time use cases.

Second, the system achieved a very high success rate across all scenarios, with values consistently approaching 100%. Even in parameterized conditions, where complexity increased, the performance reduction was marginal, demonstrating the robustness of the integrated RL algorithm. These results validate the capacity of the system to accurately identify the most appropriate exercise and personalize its parameters, ensuring tailored support for each user profile.

Third, the system proved its adaptability when transitioning between exercises. In both non-parameterized and parameterized cases, it retained partial knowledge from prior tasks and successfully adjusted to new conditions. This feature is critical for real-world deployment, where user states can change rapidly and unpredictably. The ability to relearn and reconfigure strategies in a short time frame is a key advantage of the MAS-RL approach over static digital interventions.

Fourth, the system fosters strong cooperation and communication among agents. On average, more than 4.75 agents collaborated throughout the process, ensuring distributed problem-solving and preventing bottlenecks at the level of individual agents. Additionally, communication efficiency was flawless: every request generated a corresponding response, with no orphaned or lost messages detected. This confirms the reliability and stability of the messaging infrastructure, which is essential for scalability.

Finally, while some performance degradation was observed under high concurrency, this effect was directly linked to database saturation rather than weaknesses in the MAS itself. Even under 100 simultaneous processes, response times remained at approximately 600 ms, which is acceptable for interactive applications. This suggests that with relatively straightforward improvements in database management (such as distributed storage, caching, or asynchronous operations), the architecture could achieve much higher levels of scalability without fundamental redesign.

In summary, the proposed architecture successfully integrates agent-based coordination with reinforcement learning to deliver an intelligent, adaptive, and user-centered system for managing anxiety episodes. The strengths demonstrated—responsiveness, accuracy, adaptability, cooperation, and communication reliability—position this work as a strong foundation for future development. Next steps will focus on improving scalability, refining learning mechanisms, and integrating the system into real-world digital health ecosystems. In doing so, this line of research contributes to the broader vision of intelligent, proactive, and non-pharmacological digital interventions that can complement traditional treatments and expand access to personalized mental health care.

Author Contributions

Conceptualization was led by P.H.-M. Methodology and Software implementation were conducted by M.G.-O. Resources, Formal analysis, Data curation, and Validation were carried out by P.H.-M. Writing—Original Draft Preparation was undertaken by M.G.-O. and P.H.-M., while Writing—Review & Editing and administrative interoperability information and relevant use cases were managed by P.H.-M. All authors approved the submitted version of the manuscript, including revisions made by journal staff. Each author agrees to be personally accountable for their contributions and ensures that any questions related to the accuracy or integrity of any part of the work are appropriately investigated, resolved, and documented. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable. This study does not involve human participants or animals in any form. All data employed were simulated and/or estimated for the exclusive purpose of testing the computational performance of the proposed architecture. At no point were real human data collected, processed, or stored, and consequently, no personal information or identifiable records were handled throughout the project. The work focuses solely on the informatics dimension of the research, specifically the design, implementation, and validation of a Multi-Agent System integrated with Reinforcement Learning techniques. As such, the contribution of this study lies entirely in the development and evaluation of the computational framework, without the involvement of human subjects or clinical data.

Data Availability Statement

The datasets generated during and/or analyzed during the current study are not publicly available but are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare that they have no conflicts of interest.

Appendix A. Use Case Diagrams

This appendix presents selected use case diagrams of the application, illustrating interactions between users and the system.

Figure A1.

Diagram showing user management.

Figure A2.

Diagram showing options for a user with anxiety.

Figure A3.

Diagram showing options for a user experiencing panic or anxiety and panic.

Figure A4.

Flowchart for exercise selection.

Appendix B. Prototype Screenshots

This appendix presents a selection of screenshots from the prototype developed for the final application. These screenshots complement the architectural description by illustrating how core functionalities—such as user registration, initial assessments, and the handling of unanswered questions—are translated into an accessible and intuitive interface. By including these visual materials, the appendix provides a clearer understanding of how the conceptual architecture is embodied in the practical design of the application.

Figure A5.

Sign up screen.

Figure A6.

Unanswered questions screen.

Appendix C. Evaluation Tables

This appendix presents the most relevant tables obtained during the evaluation of the application. These tables summarize key performance metrics and experimental results, providing a structured view of the system’s behavior under different scenarios. By consolidating the quantitative evidence, the appendix complements the discussion in the main text and allows readers to examine the empirical basis of the analysis in greater detail. The inclusion of these tables facilitates transparency, reproducibility, and a clearer understanding of how the proposed architecture performs in practice.

Table A1.

Success rate for a user with anxiety who improves their symptoms with the abbreviated training procedure.

| Average Number of Executions | Average Number of Successful Executions | Average Success Rate |
|---|---|---|
| 4.554 | 4.164 | 99.74% |

Table A2.

Success rate for a user with anxiety who improves their symptoms with the relaxing breathing procedure.

| Average Number of Executions | Average Number of Successful Executions | Average Success Rate |
|---|---|---|
| 4.534 | 4.242 | 96.43% |

Table A3.

Success rate for a user with anxiety who improves their symptoms with the abbreviated training procedure and specific parameters.

| Average Number of Executions | Average Number of Successful Executions | Average Success Rate |
|---|---|---|
| 3.136 | 2.77 | 91.2% |

Table A4.

Success rate for a user with anxiety who improves their symptoms with the relaxing breathing procedure and specific parameters.

| Average Number of Executions | Average Number of Successful Executions | Average Success Rate |
|---|---|---|
| 3.094 | 3.09 | 99.9% |

Table A5.

Average response time of the Instant Anxiety Questionnaire Agent in responding to different agents. Calculation made with 21,298 requests.

| Average Time to Decision Agent (ms) | Average Time to History Agent (ms) |
|---|---|
| 0.2972 | 0.2551 |

Table A6.

Average response time of the Decision Agent in responding to the various agents. Calculation made with 21,302 requests.

| Average Time to Server Agent (ms) | Average Time to History Agent (ms) |
|---|---|
| 324.866 | 324.955 |

Table A7.

Average response time of the Evaluation Agent in responding to the database. Calculation made with 15,787 requests.

| Average Time to Database (ms) |
|---|
| 343.858 |

Table A8.

Average response time from the Server Agent to the other agents. Calculation made with 21,302 requests.

| Average Response Time (ms) |
|---|
| 0.2365 |

Table A9.

Average response time of the Instant Anxiety Questionnaire Agent in responding to different agents. Calculation made with 12,400 requests.

| Average Time to Decision Agent (ms) | Average Time to History Agent (ms) |
|---|---|
| 0.3112 | 0.2689 |

Table A10.

Average response time of the Decision Agent in responding to the different agents. Calculation made with 13,396 requests.

| Average Time to Server Agent (ms) | Average Time to History Agent (ms) |
|---|---|
| 311.835 | 311.94 |

Table A11.

Average response time of the Evaluation Agent in responding to the database. Calculation made with 9496 requests.

| Average Time to Database (ms) |
|---|
| 323.759 |

Table A12.

Average response time of the Server Agent to the other agents. Calculation made with 13,400 requests.

| Average Response Time (ms) |
|---|
| 0.2404 |

Table A13.

Average response time of the Instant Anxiety Questionnaire Agent in responding to different agents. Calculation made with 31,064 requests.

| Average Time to Decision Agent (ms) | Average Time to History Agent (ms) |
|---|---|
| 0.3126 | 0.2635 |

Table A14.

Average response time of the Decision Agent in responding to the different agents. Calculation made with 32,560 requests.

| Average Time to Server Agent (ms) | Average Time to History Agent (ms) |
|---|---|
| 320.501 | 320.599 |

Table A15.

Average response time of the Evaluation Agent in responding to the database. Calculation made with 25,044 requests.

| Average Time to Database (ms) |
|---|
| 340.379 |

Table A16.

Average response time from the Server Agent to the other agents. Calculation performed with 32,565 requests.

| Average Response Time (ms) |
|---|
| 0.2460 |

Table A17.

Average response time of the Instant Anxiety Questionnaire Agent in responding to different agents. Calculation made with 35,330 requests.

| Average Time to Decision Agent (ms) | Average Time to History Agent (ms) |
|---|---|
| 0.3195 | 0.2695 |

Table A18.

Average response time of the Decision Agent in responding to the various agents. Calculation made with 36,827 requests.

| Average Time to Server Agent (ms) | Average Time to History Agent (ms) |
|---|---|
| 308.037 | 308.139 |

Table A19.

Average response time of the Evaluation Agent in responding to the database. Calculation made with 28,639 requests.

| Average Time to Database (ms) |
|---|
| 324.557 |

Table A20.

Average response time from the Server Agent to the other agents. Calculation performed with 36,831 requests.

| Average Response Time (ms) |
|---|
| 0.2551 |

Table A21.

Average level of cooperation between agents for a user with anxiety whose symptoms improve with the abbreviated training procedure.

| Average Level of Cooperation Between Agents |
|---|
| 4.799 |

Table A22.

Average level of cooperation between agents for a user with anxiety whose symptoms improve with the relaxation procedure.

| Average Level of Cooperation Between Agents |
|---|
| 4.8 |

Table A23.

Average level of cooperation between agents for a user with anxiety whose symptoms improve with the abbreviated training procedure with specific parameters.

| Average Level of Cooperation Between Agents |
|---|
| 4.758 |

Table A24.

Average level of cooperation between agents for a user with anxiety whose symptoms improve with the relaxing breathing procedure with specific parameters.

| Average Level of Cooperation Between Agents |
|---|
| 4.755 |

Table A25.

Efficiency of communication between agents for a user with anxiety who improves their symptoms with the relaxing breathing procedure.

| Requests | Responses |
|---|---|
| 14,054 | 14,054 |

Table A26.