1. Introduction

As one of the most representative components of Chinese cultural heritage, ancient Chinese classics encapsulate a wealth of historical, cultural, and philosophical thought, holding significant academic, cultural, and artistic value. With the advancement of digital humanities, a range of text analysis tools have been developed to support humanities scholars in studying primary sources, including algorithms for automatic punctuation, word segmentation, named entity recognition, and text classification. However, existing analytical methods predominantly focus on semantic understanding and knowledge extraction within single texts, while paying less attention to another important research scenario—the analysis of parallel texts across different documents. Parallel texts refer to semantically equivalent, similar, or related textual segments, such as passages describing the same historical event or discussing the same topic. This phenomenon, widely observed in Chinese classics, is referred to as “text reuse”.

The study of text reuse has a long tradition in Chinese scholarship, tracing back to the exegetical work of ancient commentators and remaining a focus of research in modern academia. This methodology plays a crucial role in textual collation, dating of ancient works, and tracing the evolution of intellectual traditions. In the era of digital humanities, natural language processing (NLP) techniques have enabled scholars to efficiently identify large-scale instances of textual reuse across vast corpora, facilitating macro-level quantitative cultural analysis.

Early methods for text reuse detection (TRD) primarily relied on literal matching strategies, such as string comparison or feature extraction [1, 2, 3], to identify explicit similarities between texts. However, these approaches can only detect surface-level character similarities and fail to capture deeper semantic relationships. With the development of deep learning, significant progress has been made in text reuse detection. Researchers have begun employing models such as LSTM and BERT/RoBERTa as foundational architectures for TRD. For instance, Cheng [4] fine-tuned BERT-CCPoem on classical Chinese poetry to vectorize poems and analyze reused text. Similarly, Duan et al. [5] employed SikuRoBERTa [6], a base model pre-trained on the Siku Quanshu corpus, and applied a contrastive learning strategy during fine-tuning. This strategy optimizes the model by pulling semantically similar texts closer and pushing unrelated texts farther apart in the vector space, thereby yielding representations with enhanced semantic discriminability and achieving superior performance in ancient Chinese text matching.

Despite these advancements, current methods still face limitations. Literal matching approaches, while computationally efficient, suffer from poor generalization and cannot effectively identify semantically similar but lexically divergent passages. Deep learning-based methods, though capable of detecting high-level semantic relationships, struggle with “hard negative samples”—texts that are lexically similar but semantically distinct. This challenge arises due to the concise and flexible nature of classical Chinese, where models trained on modern Chinese or English datasets often underperform without domain-specific adaptation. Thus, optimizing model training strategies according to the linguistic characteristics of ancient texts is essential for improving the accuracy and robustness of TRD. Current research should focus on enhancing semantic understanding while mitigating false positives caused by superficial similarities.

A natural approach would be to leverage the powerful capabilities of large language models (LLMs) for automatic detection of text reuse. However, the practical application of LLMs for large-scale text analysis faces significant challenges due to their slow inference speed and the computational overhead required when processing two passages simultaneously to determine relationships. These limitations hinder their feasibility for processing extensive corpora. In contrast, sentence representation models possess efficiency advantages since they can pre-convert sentences into vectors for storage and subsequently calculate vector similarity scores to determine semantic similarity between sentences. However, due to the lack of interaction between sentences, representation models have limitations in performance.

To address these challenges, we propose AncientTRD, a novel representation method for detecting text reuse in ancient Chinese literature based on knowledge distillation from LLMs. Knowledge distillation [7] is a model compression technique in which a smaller “student” model learns to reproduce the capabilities of a larger “teacher” model. By transferring the teacher’s knowledge into the student model, it is possible to preserve much of the performance advantage of large models while substantially reducing inference cost. Our key insight is to first distill the semantic discrimination capability of LLMs into a smaller model, which can then be efficiently deployed for text reuse detection while maintaining a balance between computational efficiency and performance. The proposed framework incorporates two complementary training objectives. First, a contrastive learning objective enables the model to acquire a coarse-grained semantic discrimination ability. The positive and negative samples required for this training phase are automatically generated by the LLM. Second, a ranking distillation objective transfers the LLM’s fine-grained semantic ranking knowledge to the smaller pre-trained language model (PLM), endowing it with a nuanced semantic differentiation capability. This ranking distillation process similarly relies on the LLM to synthesize samples with varying degrees of semantic similarity.

To facilitate model evaluation, we have constructed a specialized benchmark dataset. Experimental results demonstrate the effectiveness of our proposed model, showing significant improvements in both accuracy and efficiency compared to existing approaches. This work provides a practical solution for large-scale analysis of text reuse in ancient Chinese literature while overcoming the computational constraints of direct LLM application.

2. Related Work

2.1. Text Reuse Detection

Early efforts in automatic text reuse detection in ancient Chinese texts primarily employed character-based matching techniques, often relying on manually crafted rules that leveraged the structural regularities of classical Chinese. Representative approaches include Sturgeon’s matching algorithm [8], which incorporated features such as the number of matched characters, distinctions between content and function words, and the relative frequency of matched terms. Deng’s method [3] introduced entity-based features to identify instances of text reuse, while Wang’s hybrid framework [9] combined regular expression-based matching with Latent Semantic Indexing (LSI) topic modeling to improve detection accuracy.

With the development of deep learning, traditional text reuse detection methods based on manual feature engineering have been progressively replaced by deep learning techniques. Huang et al. [10] employed a Bi-LSTM-CRF model to automatically identify cited texts in three classics from The Thirteen Classics with Commentaries and Subcommentaries using a sequence labeling approach. Cheng [4] utilized the BERT-CCPoem model to generate vector representations of poetic lines and measured intertextual similarity between verse pairs using cosine similarity. Recent advances in contrastive learning have significantly improved the representation capabilities of pre-trained language models for ancient texts, with the construction of positive and negative samples emerging as a key research focus. For positive sample generation, Duan et al. [5] created semantically consistent variants by randomly deleting clauses and n-gram fragments from original sentences. Building upon the ESimCSE framework, Ye et al. [11] proposed a random replication strategy for positive sample construction, followed by rigorous evaluation of model performance on a more challenging idiom origin-tracing task. In addition, Li et al. [12] integrated traditional rule-based matching with the SimCSE [13] model, effectively increasing the model’s focus on critical segments in ancient texts. Notably, Zhang et al. [14] explored the application of large language models (LLMs) in data augmentation, leveraging ChatGPT-4 to generate large-scale, high-quality positive and negative sample pairs for fine-tuning the RoBERTa model. Experimental results demonstrated that this LLM-based data augmentation approach significantly enhances the representation ability of pre-trained models in specialized domains such as classical medical literature.

Although deep learning models have achieved remarkable progress in automatic text reuse detection for ancient texts, the concise phrasing and flexible grammar characteristic of classical writings still pose significant challenges to existing methods of semantic differentiation. These limitations severely constrain the applicability of text reuse detection technologies in broader cultural analysis. In this paper, we propose an LLM-based knowledge distillation approach that effectively transfers the profound semantic comprehension capabilities of large language models to lightweight specialized models. Our method significantly enhances semantic sensitivity to ancient textual features while maintaining computational efficiency.

2.2. Sentence Representation

Sentence representation is a fundamental task in natural language processing (NLP), widely applied in areas such as information retrieval, text classification, and semantic textual similarity. By transforming texts into vector representations that can be precomputed and stored, representation models enable efficient similarity measurements, thereby facilitating large-scale text reuse detection. Early work primarily relied on word embedding methods, which transformed individual words into vectors using models such as Word2Vec [15] and GloVe [16]. These word vectors were then aggregated (via concatenation, weighted summation, or other heuristics) to form sentence-level representations. Although computationally simple, such methods often failed to capture the holistic semantics of the text. Subsequent research gradually shifted from isolated word-level embeddings to sequence modeling, with an emphasis on capturing contextual dependencies. Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) became the foundational architectures for encoding sequential textual data during this phase. With the emergence of pre-trained language models (PLMs), text representation techniques entered a new era. However, Reimers et al. [17] demonstrated that sentence embeddings derived directly from BERT exhibit anisotropy, prompting the development of a new training paradigm combining PLMs with contrastive learning. This paradigm has since become dominant in sentence representation learning.

The central objective of contrastive learning is to structure the embedding space such that positive samples are drawn closer together while negative samples are pushed apart. Much of the research in this area focuses on constructing appropriate positive and negative pairs through data augmentation. For example, SimCSE treats two independently dropout-augmented views of the same sentence as a positive pair; ConSERT [18] generates positives via word shuffling, cropping, dropout, and adversarial examples; DCLR [19] synthesizes negatives based on Gaussian perturbations; and EASE [20] constructs positives by pairing sentences with relevant entities. Building on this foundation, recent studies have explored knowledge distillation strategies to transfer the semantic discrimination capabilities of large models to smaller ones, thereby further improving representation quality. These include methods that distill ranking-based knowledge [21], as well as approaches that leverage large language models (LLMs) to generate high-quality positive and negative pairs for guiding the training of smaller student models [22, 23, 24].

3. Preliminary

We provide some conceptual explanations and definitions in learning to rank.

3.1. Bradley–Terry Model

Preference modeling has been used for aligning language models with human preferences. The Bradley–Terry (BT) model [25] is widely adopted for modeling pairwise preferences, i.e., preferences between two choices. Consider a pair of answer passages, $y_1$ and $y_2$, given anchor sentence $x$. If $y_1$ is preferred over $y_2$ by a human annotator, the preference relation is denoted as $y_1 \succ y_2$. In preference modeling, it is typically assumed that there exists some (implicit) reward function $r(x, y)$ for anchor sentence $x$ and answer $y$. Given anchor sentence $x$ and two answers, $y_1$ and $y_2$, the BT model is defined by the following distribution:

$$P(y_1 \succ y_2 \mid x) = \frac{\exp\big(r(x, y_1)\big)}{\exp\big(r(x, y_1)\big) + \exp\big(r(x, y_2)\big)} \tag{1}$$
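As a small illustration of the BT distribution, the sketch below computes the preference probability from two reward values. The function name `bt_preference_prob` and the example rewards are illustrative assumptions; the only fact used is that the BT ratio equals a logistic function of the reward difference.

```python
import math


def bt_preference_prob(reward_1: float, reward_2: float) -> float:
    """P(y1 preferred over y2 | x) under Bradley-Terry, given r(x, y1) and r(x, y2)."""
    # exp(r1) / (exp(r1) + exp(r2)) is algebraically a sigmoid of (r1 - r2),
    # so the individual rewards never need to be exponentiated directly.
    return 1.0 / (1.0 + math.exp(reward_2 - reward_1))


# Equal rewards give no preference; a higher reward for y1 raises its probability.
p_equal = bt_preference_prob(1.0, 1.0)
p_better = bt_preference_prob(2.0, 1.0)
```

Only the difference between the two rewards matters, so adding the same constant to both leaves the preference probability unchanged.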

3.2. Plackett–Luce Model

The BT model is specifically designed for pairwise preference comparisons, whereas the Plackett–Luce (PL) model [26, 27] offers a more generalized framework capable of handling ranked preferences among multiple alternatives.

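Complete Ranking. Given an anchor sentence $x$ and a candidate set $\{y_1, \dots, y_N\}$, the PL model defines the distribution of a complete permutation $\pi$, where $\pi(k)$ is the index of the candidate ranked at position $k$, as

$$P(\pi \mid x) = \prod_{k=1}^{N} \frac{\exp\big(r(x, y_{\pi(k)})\big)}{\sum_{j=k}^{N} \exp\big(r(x, y_{\pi(j)})\big)} \tag{2}$$

Partial Top-K Ranking. For observed top-K ranking $\pi_{1:K} = (\pi(1), \dots, \pi(K))$ where $K \le N$, the distribution is

$$P(\pi_{1:K} \mid x) = \prod_{k=1}^{K} \frac{\exp\big(r(x, y_{\pi(k)})\big)}{\sum_{j=k}^{N} \exp\big(r(x, y_{\pi(j)})\big)} \tag{3}$$

This formulation specifically focuses on the strict ordering of top-K items while treating all remaining candidates as an unranked equivalence class.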
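The top-K formulation can be made concrete with a short sketch that evaluates the log-probability of an observed ranking from a list of rewards. The function name `pl_topk_log_prob` and its argument layout are assumptions made for illustration; the computation follows the standard PL factorisation, in which each ranked item is normalised over all candidates not yet placed.

```python
import math


def pl_topk_log_prob(rewards, ranking):
    """Log-probability of an observed top-K ranking under the Plackett-Luce model.

    rewards: list of r(x, y_j) for all N candidates.
    ranking: indices of the top-K candidates, best first (K <= N).
    """
    remaining = set(range(len(rewards)))
    log_prob = 0.0
    for idx in ranking:
        # Normalise over every candidate not yet placed, whether it appears
        # later in the ranking or belongs to the unranked remainder.
        log_norm = math.log(sum(math.exp(rewards[j]) for j in remaining))
        log_prob += rewards[idx] - log_norm
        remaining.remove(idx)
    return log_prob


# With two candidates and K = 1 this reduces to the Bradley-Terry probability.
log_p = pl_topk_log_prob([2.0, 1.0], [0])
```

Passing all N indices yields the complete-ranking probability, and passing N - 1 indices gives the same value because the final factor is always 1.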

4. Methodology

In this paper, we introduce a text reuse detection model for ancient Chinese texts named AncientTRD, which relies on LLM-generated training samples to transfer knowledge from LLMs to smaller models, thereby achieving a balance between efficiency and performance.

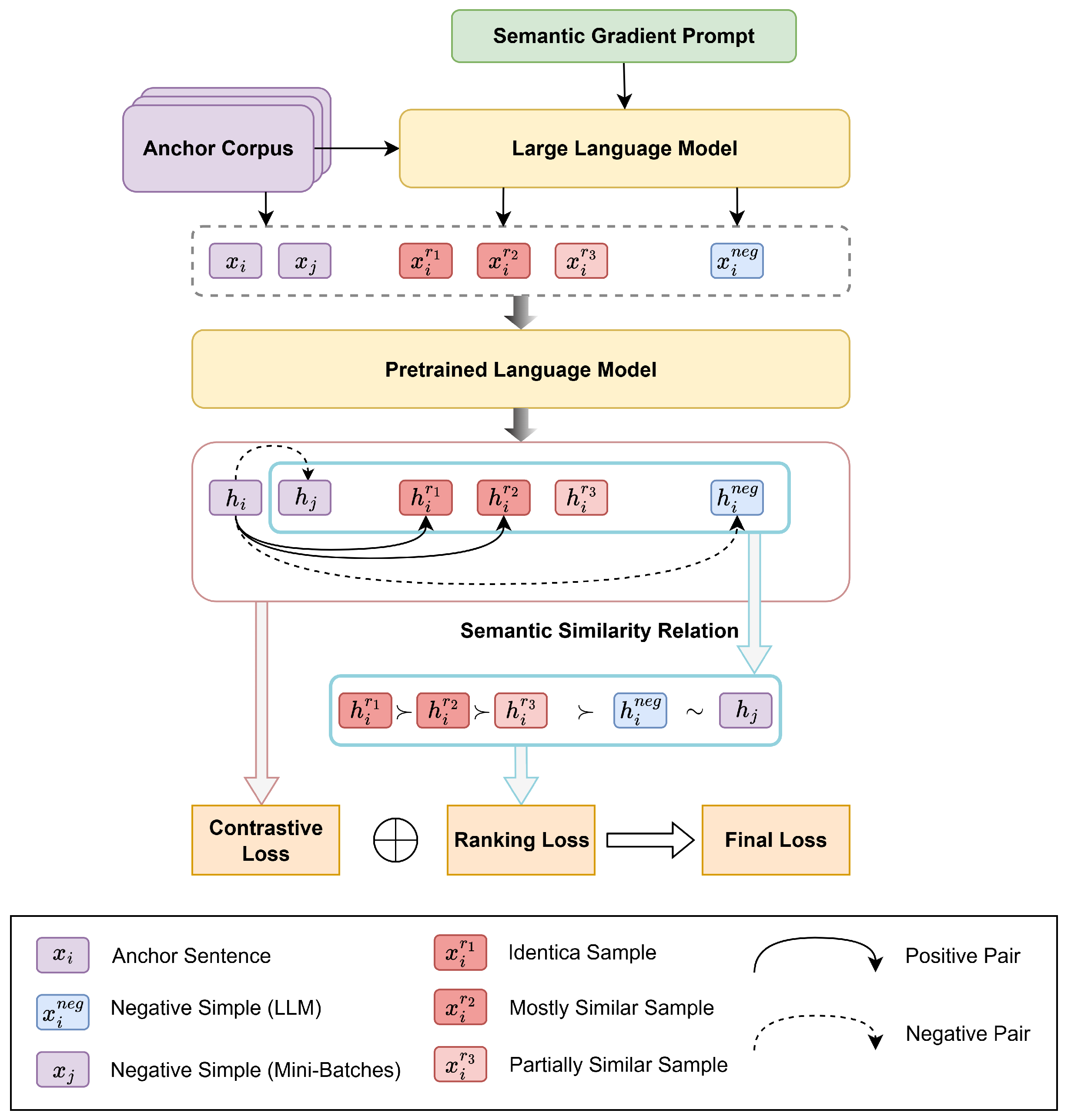

Figure 1 illustrates the architecture of our approach, comprising two modules: (1) a contrastive learning objective that enables the smaller model to distinguish between positive and negative samples and (2) a ranking distillation objective that distills the ranking knowledge from the LLM to the smaller model.

4.1. Contrastive Learning

Contrastive learning aims to learn effective representations by pulling similar semantics closer and pushing away dissimilar ones. We base our approach on the formulation of SimCSE, which is one of the most common and effective contrastive learning frameworks to learn sentence embeddings. Given a mini-batch of N sentences $\{x_i\}_{i=1}^{N}$, each sentence $x_i$ is encoded twice using independent dropout masks by a pre-trained language model (PLM, e.g., BERT), producing positive pairs $(h_i, h_i^{+})$. The contrastive loss (InfoNCE) is formulated as

$$\mathcal{L}_i = -\log \frac{e^{\mathrm{sim}(h_i, h_i^{+})/\tau}}{\sum_{j=1}^{N} e^{\mathrm{sim}(h_i, h_j^{+})/\tau}} \tag{4}$$

where $\tau$ is a temperature parameter and $\mathrm{sim}(\cdot, \cdot)$ is the cosine similarity.
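The InfoNCE objective can be sketched in a few lines of plain Python. This is a minimal illustration rather than the training code used in the paper: embeddings are given as lists of floats, the helper names `cosine` and `info_nce` are assumptions, and the default temperature of 0.05 is the value reported in the implementation details.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(anchors, positives, tau=0.05):
    """Mean InfoNCE loss over a batch.

    positives[i] is the positive for anchors[i]; every other positives[j]
    acts as an in-batch negative for anchors[i].
    """
    losses = []
    for i, h in enumerate(anchors):
        logits = [cosine(h, p) / tau for p in positives]
        # log-sum-exp with the maximum subtracted for numerical stability
        m = max(logits)
        log_denom = m + math.log(sum(math.exp(l - m) for l in logits))
        losses.append(log_denom - logits[i])
    return sum(losses) / len(losses)


batch = [[1.0, 0.0], [0.0, 1.0]]
loss_aligned = info_nce(batch, batch)        # positives match their anchors
loss_swapped = info_nce(batch, batch[::-1])  # positives assigned to the wrong anchors
```

The loss is close to zero when each anchor is most similar to its own positive and grows when an in-batch negative is closer than the positive.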

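In the unsupervised SimCSE framework, the same input sentence $x_i$ is encoded twice under independent dropout masks, resulting in a positive pair $(h_i, h_i^{+})$ that captures semantic consistency. The remaining instances within the same mini-batch are treated as negative examples, as formulated in Equation (4). The supervised SimCSE enhances this setup by incorporating hard negative samples $x_i^{-}$, thereby constructing triplet datasets $(x_i, x_i^{+}, x_i^{-})$, upon which a supervised contrastive loss is defined to explicitly optimize the model for discriminative sentence representations:

$$\mathcal{L}_i = -\log \frac{e^{\mathrm{sim}(h_i, h_i^{+})/\tau}}{\sum_{j=1}^{N} \left( e^{\mathrm{sim}(h_i, h_j^{+})/\tau} + e^{\mathrm{sim}(h_i, h_j^{-})/\tau} \right)} \tag{5}$$

where $h_j^{-}$ is the embedding of the hard negative sample $x_j^{-}$.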

In the supervised SimCSE, the triplets $(x_i, x_i^{+}, x_i^{-})$ are typically derived from annotated NLI datasets, where $x_i$ denotes the premise and $x_i^{+}$ and $x_i^{-}$ represent the corresponding entailment and contradiction hypotheses, respectively. Due to the enhanced quality of both positive and hard negative samples, the supervised training paradigm of SimCSE demonstrates significantly better performance than its unsupervised counterpart. However, such annotated resources are often unavailable for practical application, and manual construction of $(x_i, x_i^{+}, x_i^{-})$ triplets is labor-intensive and costly. Consequently, unsupervised approaches remain the most practical and scalable solution. In this study, while we adopt the supervised loss formulation as defined in Equation (5), we propose to synthesize both positive and negative samples using an LLM, aiming to distill the extensive knowledge embedded in LLMs into a smaller PLM.

Given a mini-batch of M anchor sentences $\{x_i\}_{i=1}^{M}$, we employ the prompt template shown in Table 1 to instruct the LLM in generating both positive and negative samples for each anchor sentence $x_i$. To ensure the optimal selection of an LLM for sample generation, we conducted a preliminary evaluation in which annotators tested multiple LLMs (including GPT-4, Qwen-3, and DeepSeek-V3) on a small-scale corpus. Based on qualitative assessment (e.g., semantic coherence), DeepSeek demonstrated superior performance for our specific task. Consequently, we adopted it as the primary model for generating high-quality positive and negative samples in our study. Specifically, during the generation of positive samples, the LLM constructs two types of positive samples: (1) $x_i^{(1)}$ (identical sample), which maintains complete consistency with the anchor sentence $x_i$ in both the core semantic content and detailed expression, and (2) $x_i^{(2)}$ (mostly similar sample), which preserves the core semantic content of $x_i$ while introducing minor variations in non-essential details that do not affect semantic interpretation. For negative sample generation, we focus on constructing a hard negative sample $x_i^{-}$, an instance that exhibits surface-level linguistic similarity to $x_i$ while possessing fundamentally different semantics. Previous research has demonstrated that hard negative samples are more effective in enhancing model performance. Finally, we obtain mini-batches $\{(x_i, x_i^{(1)}, x_i^{(2)}, x_i^{-})\}_{i=1}^{M}$ and define the loss:

$$\mathcal{L}_{\mathrm{cl}} = -\sum_{p \in \{1, 2\}} \log \frac{e^{\mathrm{sim}(h_i, h_i^{(p)})/\tau}}{\sum_{j=1}^{M} \left( e^{\mathrm{sim}(h_i, h_j^{(p)})/\tau} + e^{\mathrm{sim}(h_i, h_j^{-})/\tau} \right)}$$

where $h_i$, $h_i^{(p)}$, and $h_i^{-}$ are the embeddings of $x_i$, $x_i^{(p)}$, and $x_i^{-}$, $\mathrm{sim}(\cdot, \cdot)$ is the cosine similarity, and $\tau$ is the temperature.

4.2. Ranking Distillation

Contrastive learning methods typically adopt a simplistic binary classification approach that merely distinguishes between positive and negative sample pairs, thus inadequately accounting for the continuous spectrum of semantic similarity. To achieve the objective of learning fine-grained semantic representations, we propose a ranking-aware knowledge distillation framework that effectively transfers the ranking knowledge from large language models (LLMs) to smaller models through a specifically designed ranking loss function. The formulation of our ranking loss function is theoretically grounded in established ranking models, particularly drawing upon the Plackett–Luce (PL) model and related methodological frameworks.

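We propose a ranking distillation strategy based on Equation (3). Given an anchor sentence $x_i$, we prompt the LLM to generate $x_i^{(3)}$, which is partially similar to $x_i$ but contains a divergence of key information. To enhance the model’s ability to discriminate semantic relevance, we establish the following preference relation based on the samples generated for the contrastive learning:

$$x_i^{(1)} \succ x_i^{(2)} \succ x_i^{(3)} \succ \mathcal{N}$$

where $x_i^{(1)}$ and $x_i^{(2)}$ are the identical and mostly similar positive samples, and $\mathcal{N}$ denotes the set of all negative samples. Meanwhile, a rule-based approach is employed during the data generation process to assess the quality of the generated text. If the output is incomplete or its length deviates by more than 50% from the anchor sentence, the model is prompted to regenerate the text. The well-defined ranking effectively captures the monotonic decline in semantic relevance, thereby providing clear and informative supervisory signals for model training. The ranking objective can be defined as

$$\mathcal{L}_{\mathrm{rank}} = -\sum_{k=1}^{3} \log \frac{e^{\mathrm{sim}(h_i, h_i^{(k)})/\tau}}{\sum_{l=k}^{3} e^{\mathrm{sim}(h_i, h_i^{(l)})/\tau} + \sum_{x^{-} \in \mathcal{N}} e^{\mathrm{sim}(h_i, h^{-})/\tau}}$$

where $h_i^{(k)}$ and $h^{-}$ are the embeddings of $x_i^{(k)}$ and $x^{-}$, $\mathrm{sim}(\cdot, \cdot)$ is the cosine similarity, and $\tau$ is the temperature.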
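The rule-based quality check described above can be sketched as follows. The text specifies only the 50% length rule and that incomplete outputs are rejected; how incompleteness is detected is not stated, so treating an empty output as incomplete is an assumption here, as are the function name `needs_regeneration` and measuring length in characters.

```python
def needs_regeneration(anchor: str, generated: str, max_deviation: float = 0.5) -> bool:
    """Return True if an LLM-generated sample should be discarded and regenerated."""
    if not anchor:
        raise ValueError("anchor sentence must be non-empty")
    text = generated.strip()
    # Assumption: an empty output stands in for the 'incomplete' case.
    if not text:
        return True
    # Relative length deviation from the anchor, measured in characters.
    deviation = abs(len(text) - len(anchor)) / len(anchor)
    return deviation > max_deviation


anchor = "學而時習之"
keep = not needs_regeneration(anchor, "學而時習")  # 4 vs 5 characters: 20% deviation
retry = needs_regeneration(anchor, "學")           # 1 vs 5 characters: 80% deviation
```

In a generation loop, the prompt would be reissued while this check returns True, ideally with a cap on the number of retries.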

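The final loss function of AncientTRD is the combination of the above two loss functions:

$$\mathcal{L} = \mathcal{L}_{\mathrm{cl}} + \mathcal{L}_{\mathrm{rank}}$$

where $\mathcal{L}_{\mathrm{cl}}$ is the contrastive loss of Section 4.1 and $\mathcal{L}_{\mathrm{rank}}$ is the ranking distillation loss of Section 4.2.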

5. Dataset Construction

In this section, we present the construction process of a benchmark dataset for evaluating text reuse detection models. The dataset construction consists of three main steps: data collection, data preprocessing, and annotation.

We construct a corpus of Chinese classical philosophical texts spanning from the Shang-Zhou dynasties to the Qing dynasty. This corpus includes 244 classic philosophical works and is intended to support the analysis of interrelations among various philosophical schools, as well as the historical development of specific philosophical concepts.

The preprocessing stage aims to extract high-quality candidate sentence pairs from the raw corpus for subsequent annotation. We first fine-tune SikuRoBERTa on our corpus following the SimCSE training paradigm to obtain an initial sentence representation model. Before constructing the pre-annotated dataset, we evaluate the effectiveness of this training paradigm: we retrieve 1000 candidate text reuse instances with each of the two models, the original SikuRoBERTa and the SimCSE-fine-tuned SikuRoBERTa, and expert annotators manually verify these samples. The SimCSE-fine-tuned model achieves a 12% higher precision in identifying valid text reuse cases, which demonstrates that the SimCSE training approach improves the quality of the retrieved candidates in our task.

The fine-tuned model is then used to generate sentence embeddings, and Faiss (a similarity search library developed by Facebook) is employed to retrieve candidate sentence pairs. During retrieval, we set the parameter K (the number of most similar results returned per anchor sentence) to 150 and further filter the results using a similarity threshold, ultimately constructing a candidate pool containing millions of sentence pairs.

To refine this pool, we implement three optimization strategies: (a) deduplication of repeated sentence pairs; (b) rule-based filtering to remove low-quality or semantically meaningless pairs (e.g., those containing only temporal or seasonal references); and (c) stratified sampling. In this sampling process, sentence pairs are divided into two categories: those containing at least one sentence from core philosophical texts (e.g., Analects of Confucius, Tao Te Ching, Mencius, Book of Rites, Book of Changes) are assigned to the first category, while the remaining pairs are assigned to the second. Given that core texts are frequently cited and interpreted in subsequent literature, they exhibit higher academic representativeness and are therefore treated as a separate stratum. The main advantage of stratified sampling lies in its ability to partition a heterogeneous population into internally homogeneous subgroups, thereby significantly enhancing the structural representativeness of samples [28]. We sample 10,000 instances from each category, resulting in a pre-annotated dataset of 20,000 entries to ensure comprehensive coverage and representativeness.
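The retrieval and deduplication steps can be illustrated with a brute-force stand-in for the Faiss search. This sketch does not call Faiss: it scores every pair with cosine similarity in plain Python, which only suits small inputs. The function name `retrieve_candidate_pairs` and the threshold value 0.8 are hypothetical (the text does not report the threshold), while `k=150` is the reported setting.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def retrieve_candidate_pairs(embeddings, k=150, threshold=0.8):
    """Return {(i, j): similarity} for top-k neighbours above the threshold.

    Each pair is stored once with i < j, so (i, j) and (j, i) are deduplicated.
    """
    pairs = {}
    for i, u in enumerate(embeddings):
        scored = [(cosine(u, v), j) for j, v in enumerate(embeddings) if j != i]
        scored.sort(reverse=True)  # most similar neighbours first
        for score, j in scored[:k]:
            if score >= threshold:
                pairs[(min(i, j), max(i, j))] = score
    return pairs


# Toy embeddings: sentences 0 and 1 are near-duplicates, sentence 2 is unrelated.
toy = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
candidates = retrieve_candidate_pairs(toy, k=1, threshold=0.8)
```

At corpus scale the inner loop would be replaced by an approximate or exact nearest-neighbour index, which is the role Faiss plays in the pipeline described above.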

To guarantee annotation quality, we adopt a three-stage annotation process. Each stage strictly adheres to the annotation guideline outlined in

Table 2. First, in the pre-annotation phase, we prompt DeepSeek to perform preliminary classification of text pairs, automatically determining their semantic similarity categories. Next, in the manual annotation phase, five annotators with expertise in classical literature, each having undergone systematic training on annotation guidelines, independently validate the pre-annotated results. Finally, a professional review panel resolves discrepancies between model pre-annotations and human annotations. For cases where consensus cannot be reached, domain experts make the final adjudication to ensure the accuracy of the annotated results. Given that differences in textual interpretation may lead to inconsistencies, we assess inter-annotator agreement using Fleiss’ Kappa [

29], which yields a score of 0.83. According to the criteria established by Richard et al. [

30], a Kappa value between 0.81 and 1.00 denotes almost perfect agreement, indicating a high level of consistency among annotators in our study. As shown in

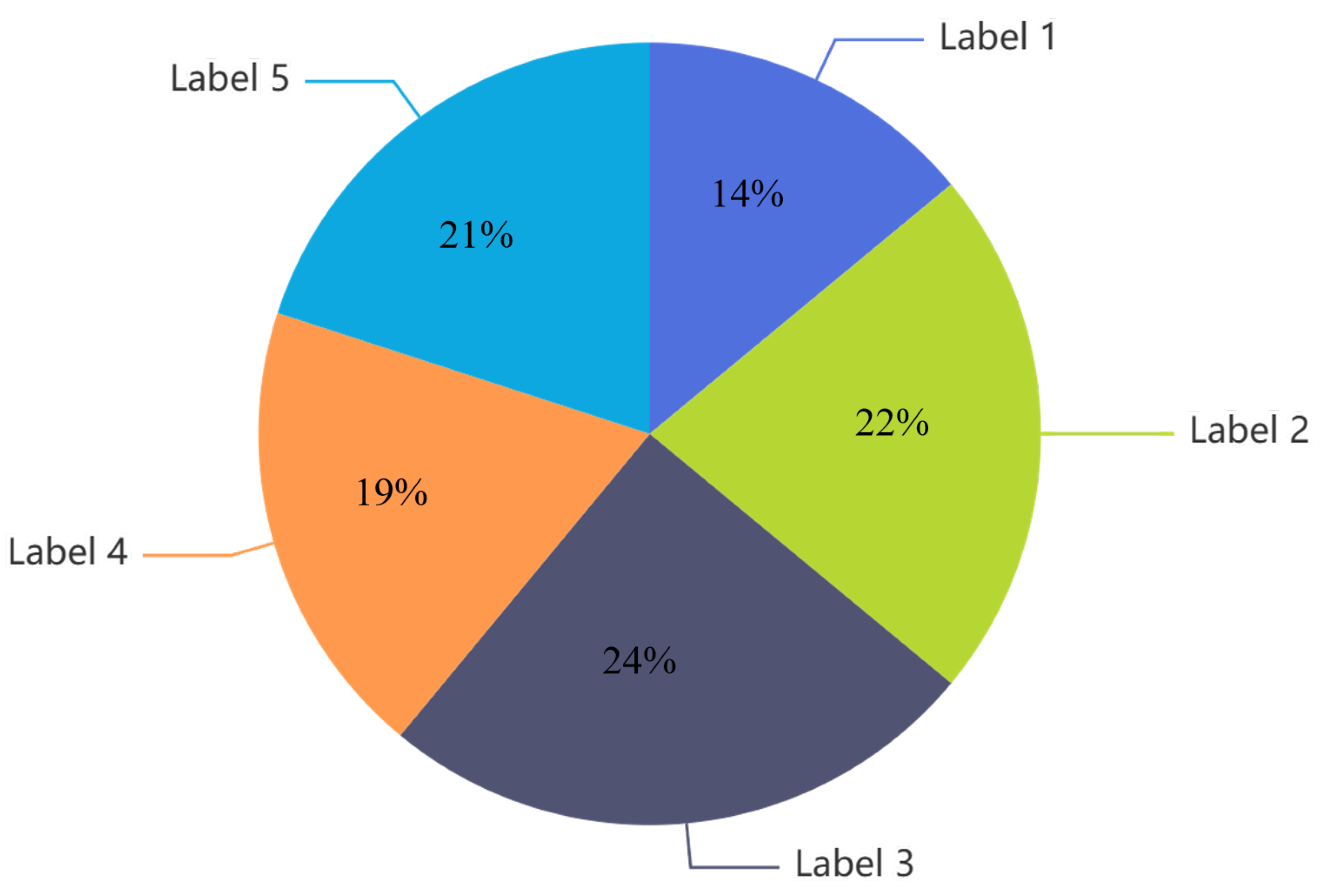

Figure 2, the sentence length distribution within the constructed dataset demonstrates a clear concentration of textual units in the 5–20 character range, which aligns with the characteristically concise nature of classical Chinese literature. A gradual decline is observed for longer sentences (>20 characters), with minimal representation beyond 50 characters, consistent with the stylistic conventions of historical philosophical works. And the class distribution of the dataset is illustrated in

Figure 3, showing an overall balanced distribution across categories. Notably, Label 2 accounts for the largest proportion, which may be attributed to the fact that citations in philosophical texts often involve not merely verbatim repetition but rather semantic transformation and creative reinterpretation.
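For readers unfamiliar with the agreement statistic reported above, the following sketch computes Fleiss' Kappa from a table of per-item category counts. The function name and the toy count tables are illustrative assumptions; the formula is the standard one, which requires every item to be rated by the same number of annotators.

```python
def fleiss_kappa(counts):
    """Fleiss' Kappa for counts[i][j] = number of annotators who put item i in category j."""
    n_items = len(counts)
    n_raters = sum(counts[0])
    n_cats = len(counts[0])
    # Observed agreement: fraction of agreeing annotator pairs, averaged over items.
    per_item = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    p_observed = sum(per_item) / n_items
    # Chance agreement from the overall share of each category.
    shares = [sum(row[j] for row in counts) / (n_items * n_raters) for j in range(n_cats)]
    p_expected = sum(s * s for s in shares)
    return (p_observed - p_expected) / (1 - p_expected)


# Five annotators, two categories: full agreement on both items.
kappa_perfect = fleiss_kappa([[5, 0], [0, 5]])
# A 3-2 split on both items is slightly worse than chance.
kappa_split = fleiss_kappa([[3, 2], [2, 3]])
```

Values near 1 indicate that annotators agree far more often than the category frequencies alone would predict.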

6. Experimental Settings

6.1. Baselines

We compare our approach with state-of-the-art sentence representation methods:

SikuRoBERTa: A RoBERTa model continually pre-trained on the Siku Quanshu corpus.

SimCSE: A simple yet effective contrastive learning method that utilizes dropout masks to construct positive samples.

SSCL [31]: Constructing hard negative samples for contrastive learning by leveraging intermediate layer representations from PLMs.

RankCSE: Learning semantically discriminative sentence representations by capturing ranking information among sentences.

SynCSE: Generating positive and negative samples for contrastive learning by leveraging LLM-based synthetic data augmentation.

Our proposed method follows the training paradigm of the supervised SimCSE. By prompting the LLM to generate positive and negative samples and incorporating a ranking distillation strategy, it leads to a significant improvement in the performance of the model.

6.2. Implementation Details

We use 80% of our self-constructed dataset as the anchor sentence set. Based on these anchor sentences, we employ DeepSeek to generate the final training set for contrastive learning fine-tuning and ranking learning, while the remaining 20% of the self-constructed dataset serves as the test set. To develop models suitable for ancient text reuse detection and ensure fair comparisons, we adopt SikuRoBERTa/SikuBERT as the backbone architectures for all baseline models, given their widespread application in ancient Chinese text processing.

In our experiments, we set the temperature parameter to 0.05 and employ the Adam optimizer for model training. The input batch size is configured to 64, with a maximum sequence length of 32 tokens. To derive sentence-level embeddings, we consider two pooling strategies separately: average pooling over the token representations, and direct extraction of the [CLS] token vector. Model training is conducted for a single epoch using one NVIDIA V100 GPU with 32 GB of memory.
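The two pooling strategies can be illustrated on a toy sequence of token vectors. This is a sketch under assumptions: token vectors are plain lists, the [CLS] token sits at position 0, and padding positions are excluded from the average through an attention mask. None of these details are stated in the text.

```python
def cls_pooling(token_vectors):
    """Use the vector of the first ([CLS]) token as the sentence embedding."""
    return list(token_vectors[0])


def mean_pooling(token_vectors, attention_mask):
    """Average token vectors, skipping positions whose mask value is 0 (padding)."""
    kept = [vec for vec, m in zip(token_vectors, attention_mask) if m == 1]
    dim = len(kept[0])
    return [sum(vec[d] for vec in kept) / len(kept) for d in range(dim)]


# Three positions, the last one being padding.
tokens = [[1.0, 2.0], [3.0, 4.0], [9.0, 9.0]]
mask = [1, 1, 0]
sentence_cls = cls_pooling(tokens)
sentence_mean = mean_pooling(tokens, mask)
```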

6.3. Evaluation Metrics

We adopt the Spearman correlation [32] to evaluate model performance. This metric reflects the model’s performance by measuring the correlation between the ranking of the model’s predicted results and the ground-truth ranking annotated in the dataset. The key advantage of this metric lies in its direct evaluation from a ranking perspective, eliminating the need to set specific thresholds and thereby avoiding the subjectivity and uncertainty associated with threshold selection. Specifically, we convert the continuous similarity scores output by the model into ranked sequences and compute their correlation with the pre-annotated ground-truth ranking sequences. The calculation formula is as follows:

$$\rho = 1 - \frac{6 \sum_{i=1}^{n} d_i^{2}}{n (n^{2} - 1)}$$

where $\rho$ denotes the Spearman correlation, $d_i$ is the difference between the two ranks of the $i$-th sample, and $n$ is the sample size.
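The rank-difference formula can be sketched directly. This simple form is exact only when neither sequence contains ties; with tied values (as would occur with categorical labels) a tie-aware implementation using averaged ranks would be needed, which this sketch deliberately omits. The function name is an assumption.

```python
def spearman_no_ties(pred_scores, gold_scores):
    """Spearman correlation via 1 - 6 * sum(d^2) / (n * (n^2 - 1)); assumes no ties."""
    n = len(pred_scores)

    def ranks(values):
        # Rank 1 goes to the smallest value.
        order = sorted(range(n), key=lambda i: values[i])
        result = [0] * n
        for rank, i in enumerate(order, start=1):
            result[i] = rank
        return result

    rank_pred, rank_gold = ranks(pred_scores), ranks(gold_scores)
    d_squared = sum((a - b) ** 2 for a, b in zip(rank_pred, rank_gold))
    return 1 - 6 * d_squared / (n * (n * n - 1))


# Swapping the order of two of three items lowers the correlation from 1.0.
rho = spearman_no_ties([0.1, 0.9, 0.5], [1, 2, 3])
```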

During the evaluation phase, we first use the trained model to obtain the embeddings of sentence pairs. Then, we employ three different similarity computation functions to get similarity scores for all sentence pairs, including cosine similarity, Manhattan distance, and Euclidean distance. These scores are then compared with the annotated score sequences to calculate the Spearman correlation. Since cosine similarity primarily measures the direction of vectors rather than their length, it is more suitable for text semantic similarity tasks. In contrast, Manhattan distance and Euclidean distance are more sensitive to vector length, making these metrics useful as supplementary evaluation criteria. Based on this, after computing the three correlations mentioned above, we weight them in a ratio of 2:1:1 and sum them up to obtain a comprehensive evaluation metric, thereby thoroughly assessing the method’s performance. This metric is denoted as $\rho_{\mathrm{avg}}$, and its formulation is as follows:

$$\rho_{\mathrm{avg}} = \frac{2 \rho_{\cos} + \rho_{\mathrm{man}} + \rho_{\mathrm{euc}}}{4}$$

where $\rho_{\cos}$, $\rho_{\mathrm{man}}$, and $\rho_{\mathrm{euc}}$ are the Spearman correlations obtained with cosine similarity, Manhattan distance, and Euclidean distance, respectively.
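The three scoring functions and the 2:1:1 weighting can be sketched as below. Two points are assumptions rather than statements from the text: distances are negated so that a larger score always means "more similar", and the weighted sum is divided by 4 so the combined value stays on the same scale as a single correlation.

```python
import math


def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def manhattan_similarity(u, v):
    # Negated L1 distance: closer vectors receive a higher score.
    return -sum(abs(a - b) for a, b in zip(u, v))


def euclidean_similarity(u, v):
    # Negated L2 distance: closer vectors receive a higher score.
    return -math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def combined_score(rho_cos, rho_man, rho_euc):
    """Weight the three Spearman correlations 2:1:1 (normalisation by 4 is assumed)."""
    return (2 * rho_cos + rho_man + rho_euc) / 4


# Hypothetical correlations, used only to show the weighting.
score = combined_score(0.8, 0.6, 0.6)
```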

7. Experimental Results and Analysis

7.1. Main Results

Table 3 presents a comprehensive performance comparison between the proposed AncientTRD framework and baseline models for text reuse detection, evaluated using three distinct metrics and the comprehensive metric defined in Section 6.3. The experimental results reveal several noteworthy observations:

The AncientTRD model demonstrates superior performance across all evaluation metrics. Quantitative analysis shows that AncientTRD achieves remarkable improvements of 14.92% and 14.36% over the original SikuBERT and SikuRoBERTa architectures, respectively. Furthermore, when compared with the current state-of-the-art data distillation method, SynCSE, AncientTRD still maintains a clear advantage, with performance enhancements of 3.34% and 3.25%. Notably, models based on the SikuBERT$_{\text{base}}$ architecture generally outperform those using the SikuRoBERTa$_{\text{base}}$ architecture.

The experimental results show that all models fine-tuned with contrastive learning strategies, i.e., every model other than the SikuBERT/SikuRoBERTa base models themselves, exhibit substantial improvements over their base models. This strongly validates the effectiveness of contrastive learning fine-tuning in the task of ancient text reuse detection.

Among all compared models, only SynCSE and AncientTRD leverage LLM-synthesized data for fine-tuning. These two models significantly outperform the others, indicating that LLM-based data distillation can bring substantial performance enhancements.

We employ a bootstrap resampling method to evaluate the stability of the models. Specifically, we repeatedly sample with replacement from the original test set, drawing 4000 samples each time to form a bootstrap subset. We then assess the model performance on each subset and record the corresponding metrics. This procedure is independently repeated 500 times to construct the distribution of performance metrics. Based on these 500 resampling results, we calculate the mean, standard deviation, and 95% confidence intervals of the performance metrics and visualize the outcomes using boxplots. As shown in Figure 4, the results demonstrate that our proposed model yields small standard deviations and compact confidence intervals, providing strong evidence of its superior stability. A permutation test (10,000 permutations) confirms that the proposed model achieves a significantly higher score on the test set compared with other baseline models ($p < 0.005$), thereby validating the effectiveness of the method.
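The bootstrap procedure described above can be sketched with the standard library alone. The defaults of 4000 samples and 500 repetitions follow the text; the percentile method for the 95% interval is an assumption, since the text does not say how the interval was computed, and `metric_fn` is a placeholder for whatever evaluation metric is applied to each subset.

```python
import random
import statistics


def bootstrap_metric(examples, metric_fn, sample_size=4000, n_resamples=500, seed=0):
    """Return (mean, standard deviation, (ci_low, ci_high)) of a metric over bootstrap subsets."""
    rng = random.Random(seed)
    values = []
    for _ in range(n_resamples):
        subset = rng.choices(examples, k=sample_size)  # sampling with replacement
        values.append(metric_fn(subset))
    values.sort()
    # Percentile interval (assumed): 2.5th and 97.5th percentiles of the resampled metric.
    ci_low = values[int(0.025 * n_resamples)]
    ci_high = values[min(n_resamples - 1, int(0.975 * n_resamples))]
    return statistics.mean(values), statistics.stdev(values), (ci_low, ci_high)


# Toy usage: the "metric" is the mean of per-example scores.
scores = [i / 100 for i in range(100)]
mean, std, (low, high) = bootstrap_metric(
    scores, lambda s: sum(s) / len(s), sample_size=50, n_resamples=200
)
```

A narrow interval and a small standard deviation indicate that the metric changes little when the composition of the test set changes.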

7.2. Ablation Study

The proposed AncientTRD comprises two modules: Synthesizing Positive and Negative Samples (SPN) and Ranking Distillation (RD). To investigate how each module influences performance, we conduct ablation experiments based on the SikuBERT$_{\text{base}}$ and SikuRoBERTa$_{\text{base}}$ architectures. As shown in Table 4, the results demonstrate that both the SPN and RD modules make significant contributions to the model’s performance. Our experiments reveal that removing the SPN module alone leads to a notable decline in model performance (with decreases of 7.17%/8.02%), whereas the performance drop is relatively smaller when the RD module is removed individually. This indicates that the strategy of generating positive and negative samples using an LLM plays a pivotal role in enhancing model performance.

Furthermore, when both modules are removed simultaneously (equivalent to adopting the unsupervised training strategy of SimCSE), the model experiences an even more substantial performance decline (9.58%/9.79%). Although this decrease is significantly greater than the performance drop observed when removing either module alone, it is smaller than the theoretical sum of the individual decreases (10.32%/10.86%). This phenomenon indicates that the two modules exhibit both functional complementarity and partial redundancy: (1) The SPN module enables the model to distinguish between positive and negative samples by constructing high-quality contrastive samples, while the RD module further optimizes fine-grained semantic discrimination capabilities through ranking loss, thereby collaboratively enhancing model performance; (2) Since the SPN module inherently embodies a preference for ranking positive samples, there is partial functional overlap between the two modules.
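As a quick check on the figures above, and assuming the "theoretical sum" is simply the SPN-only drop plus the RD-only drop, the implied RD-only decreases and the size of the overlap between the two modules can be worked out from the reported numbers (first and second values correspond to the two backbones in the order reported):

$$
\begin{aligned}
\Delta_{\mathrm{RD}} &= 10.32\% - 7.17\% = 3.15\%, &\qquad \Delta_{\mathrm{RD}} &= 10.86\% - 8.02\% = 2.84\% \\
\Delta_{\mathrm{overlap}} &= 10.32\% - 9.58\% = 0.74\%, &\qquad \Delta_{\mathrm{overlap}} &= 10.86\% - 9.79\% = 1.07\%
\end{aligned}
$$

Under this reading, roughly one percentage point of the combined gain is shared between SPN and RD, which is consistent with the partial redundancy described above.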

7.3. Analysis

7.3.1. Synthetic Data

During the generation process, the LLM is prompted to produce samples with varying degrees of semantic similarity for each anchor sentence. To evaluate the quality of the synthesized data, 10% of the samples are randomly selected and assessed by three annotators. The evaluation is conducted across three dimensions: fluency (grammatical correctness and naturalness of expression, scored on a scale of 1–5), relevance (semantic relatedness with the anchor sentence, 1–5), and diversity (novelty of the generated content, 1–5). The final quality score for each sample is calculated as the average across these dimensions. The overall mean score reaches 4.13, with a Kappa value of 0.76, indicating a high level of inter-annotator agreement and suggesting that the generated samples are of high quality.
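The scoring and agreement computation can be sketched as follows. The source does not say which kappa variant was used or how the 1–5 scores were treated, so this sketch assumes Fleiss' kappa over the five score categories with three raters per item. The function names and the toy ratings are hypothetical.

```python
from collections import Counter


def sample_quality(fluency, relevance, diversity):
    """Average the three dimension scores (each on a 1-5 scale)."""
    return (fluency + relevance + diversity) / 3


def fleiss_kappa(ratings, categories):
    """Fleiss' kappa for a fixed number of raters per item.

    ratings: list of items, each a list with one label per rater.
    categories: all labels a rater may assign.
    """
    n_items = len(ratings)
    n_raters = len(ratings[0])
    counts = [Counter(item) for item in ratings]
    # Observed agreement for each item, then averaged over items.
    per_item = [
        (sum(c[k] ** 2 for k in categories) - n_raters)
        / (n_raters * (n_raters - 1))
        for c in counts
    ]
    p_observed = sum(per_item) / n_items
    # Chance agreement from the overall share of each category.
    shares = [sum(c[k] for c in counts) / (n_items * n_raters) for k in categories]
    p_chance = sum(s ** 2 for s in shares)
    return (p_observed - p_chance) / (1 - p_chance)


# Toy ratings from three annotators on four samples (illustration only).
toy_ratings = [[5, 5, 5], [4, 4, 4], [5, 5, 4], [3, 3, 3]]
toy_kappa = fleiss_kappa(toy_ratings, categories=[1, 2, 3, 4, 5])
```

Note that kappa is undefined when every rating falls into a single category, because the chance agreement then equals 1.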

7.3.2. Ranking Distillation

Applicability of the PL model for ranking distillation loss. In this study, we adopt the Plackett–Luce (PL) model as the ranking loss, which closely aligns with the objectives of text reuse detection. From a humanities perspective, the most critical cases are those in which the core semantics remain highly consistent despite minor variations in expression, typically corresponding to direct quotations. We expect the model to map such sentence pairs to nearby positions in the vector space, producing very high similarity scores. By contrast, interpretative writings by later authors often extend original meanings with new perspectives, resulting in partially similar instances of lower priority than direct quotations. The model should therefore map these representations to moderately greater distances, yielding slightly lower similarity scores. Semantically unrelated samples, regardless of surface similarity, must be placed far apart to ensure low similarity scores. Mathematically, the Partial Top-k Ranking function of the PL model captures this hierarchical preference: high-priority pairs are pulled closer by placing them in the numerator, lower-priority pairs are pushed farther away by placing them in the denominator, and negative samples are treated with equal priority. Leveraging this property, we construct a PL-based loss function to guide the model in learning representations consistent with the semantic priority logic of humanities research.
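To make the numerator and denominator roles concrete, the following is a minimal sketch of the textbook negative log-likelihood of a partial top-k ranking under the Plackett–Luce model. It is not the paper's exact loss: the function name, the temperature value, and the example similarities are assumptions made for illustration.

```python
import math


def pl_topk_loss(ranked_sims, negative_sims, temperature=0.05):
    """Negative log-likelihood of a partial top-k ranking (Plackett-Luce).

    ranked_sims: similarities to the anchor, ordered from highest to
        lowest priority (e.g. direct quotation first, paraphrase second).
    negative_sims: similarities of unrelated samples, which all share
        the same lowest priority and are never placed in a numerator.
    """
    logits = [s / temperature for s in list(ranked_sims) + list(negative_sims)]
    loss = 0.0
    for i in range(len(ranked_sims)):
        # Item i competes against itself and every lower-priority item.
        remaining = logits[i:]
        peak = max(remaining)  # subtract the max for numerical stability
        log_denominator = peak + math.log(sum(math.exp(x - peak) for x in remaining))
        loss -= logits[i] - log_denominator
    return loss


# Hypothetical cosine similarities to one anchor sentence.
well_ordered = pl_topk_loss([0.9, 0.6], [0.1, 0.0])
mis_ordered = pl_topk_loss([0.6, 0.9], [0.1, 0.0])
```

In this toy case the loss is small when the higher-priority sample already has the higher similarity, and large when the two are swapped, which is the ordering pressure described above.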

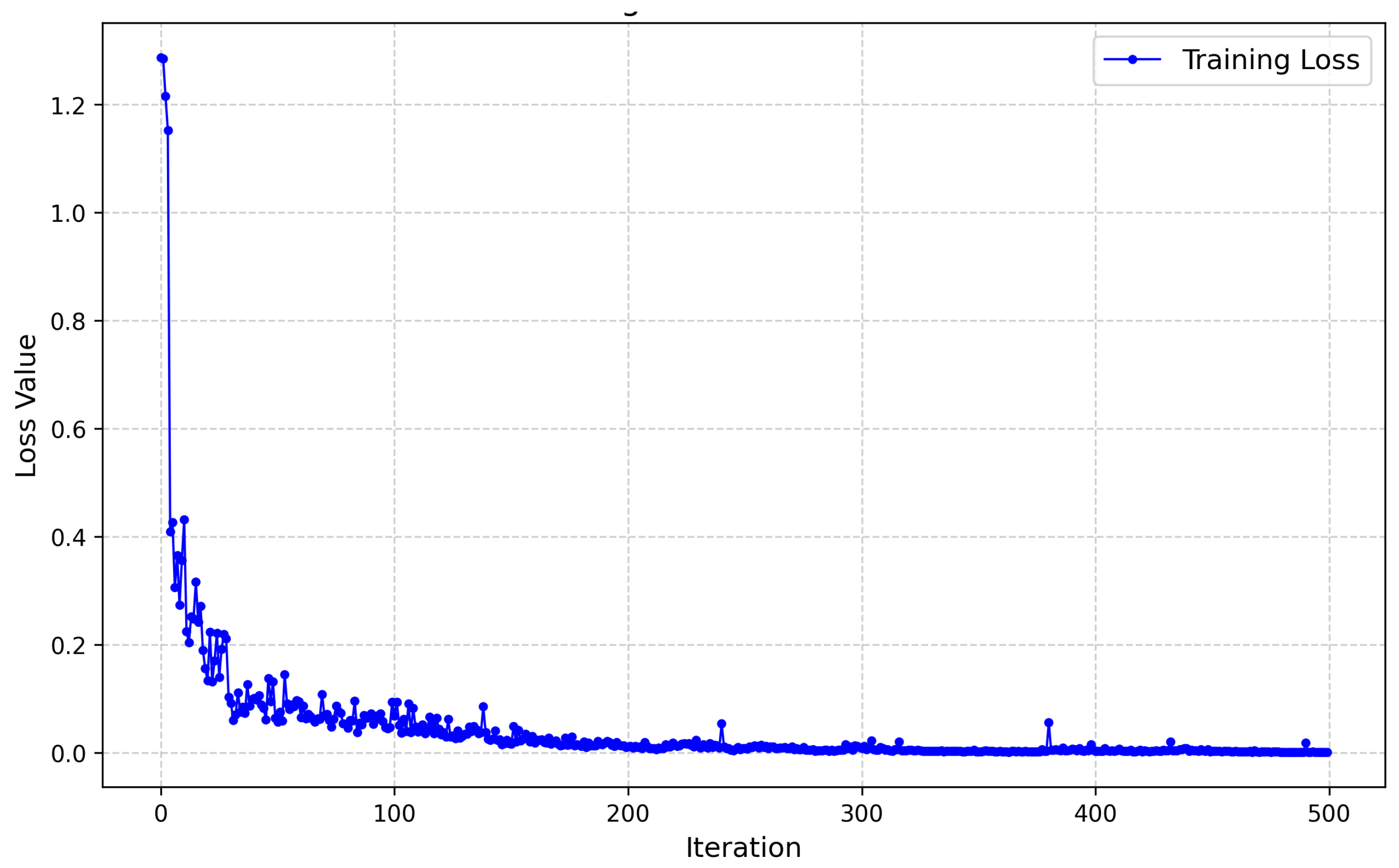

Computational complexity and convergence of ranking distillation. From a mathematical perspective, the ranking distillation loss is structurally analogous to the contrastive learning loss, as both optimize by pulling positive pairs closer in the numerator and pushing negative pairs apart in the denominator, resulting in comparable training complexity. However, our method introduces additional time overhead because it relies on the LLM to generate training samples at multiple semantic levels. Quantitative analysis indicates that training time increases by about 13% compared with SynCSE and about 30% compared with the unsupervised SimCSE. Importantly, no extra computational cost is incurred during inference, and representation efficiency remains unaffected. As shown in Figure 5, the loss value decreases rapidly during the early stage of training and soon converges to a stable state at a relatively low level.

8. Application of Text Reuse Detection

8.1. Allusion Tracing

Cross-temporal text reuse in ancient texts is a hallmark of traditional scholarly transmission, particularly in the form of literary allusion. The proposed AncientTRD algorithm enables semantic-level identification of how later texts inherit and adapt classical sources. As demonstrated in Figure 6, the method effectively detects text reuse at the semantic level, even when the source text has been substantially rephrased, outperforming traditional string-matching approaches. In contrast to contemporary academic compositions, classical texts seldom incorporate explicit citations. Consequently, the proposed algorithm successfully uncovers implicit intertextual references that conventional methods fail to detect. Texts with reuse relationships form an inheritance chain that can assist scholars in tracing the evolution of ideas embedded in the texts.
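Semantic-level detection of this kind is commonly implemented as nearest-neighbour search over sentence embeddings. The sketch below illustrates that general idea only: the vectors are toy values standing in for encoder outputs, and the function names and the 0.8 threshold are hypothetical rather than taken from the paper.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def find_reuse(query_vec, candidates, threshold=0.8):
    """Return (passage_id, similarity) pairs at or above the threshold.

    candidates: list of (passage_id, embedding) tuples.
    Results are sorted from most to least similar.
    """
    scored = [(pid, cosine(query_vec, vec)) for pid, vec in candidates]
    hits = [item for item in scored if item[1] >= threshold]
    return sorted(hits, key=lambda item: item[1], reverse=True)


# Toy two-dimensional embeddings (illustration only).
toy_candidates = [
    ("later_text_1", [0.9, 0.1]),
    ("later_text_2", [0.0, 1.0]),
    ("later_text_3", [1.0, 0.0]),
]
toy_hits = find_reuse([1.0, 0.0], toy_candidates)
```

Because the comparison operates on embeddings rather than characters, a rephrased passage can still be retrieved as long as the encoder places it near its source.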

8.2. Analysis of Literature Influence: A Case Study of the Analects

Building upon the work presented in this study, we have developed an online platform (https://ca.pkudh.net, accessed on 5 June 2025) tailored for humanities research. The platform incorporates the full texts of 244 classical Chinese philosophical works, which are available for users to browse directly, as shown in Figure 7. Based on these primary sources, our proposed algorithm processes the original texts to construct a core corpus of over three million pairs of parallel texts with reuse relationships. In addition, the platform supports querying of original passages and multi-level semantic unit analysis. These analytical functions enable humanities scholars to systematically trace the evolution of intellectual and cultural ideas, identify intertextual connections, and uncover potential research trends and phenomena. In this section, we present a case study to demonstrate the platform’s applicability and value in advancing humanities research.

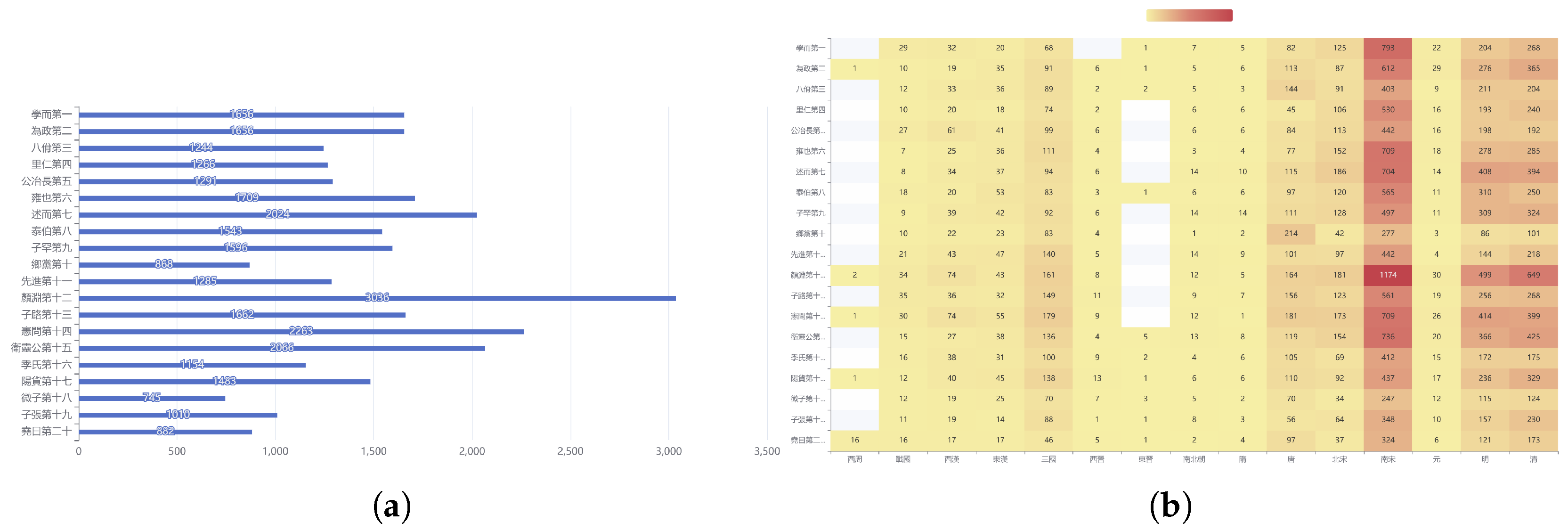

The Analects (Lunyu), as the foundational text of Confucianism, has exerted a profound and enduring influence on the development of Chinese traditional culture through its systematic teachings on moral philosophy, social governance, and personal cultivation. Applying our AncientTRD algorithm, we identified text reuse instances for each passage in The Analects across a diachronic corpus, generating a quantitative analysis of the citation intensity of each chapter over time (Figure 8). Moreover, during reading, users can also examine the reuse of individual sentences in real time, thereby obtaining a more fine-grained understanding of the text’s reception and influence. This method uncovered patterns of ideological transmission that are difficult to capture through traditional manual counting. Take the chapter Yan Yuan as an example: it exhibited the highest frequency of text reuse among all chapters, with citation intensity reaching its historical peak during the Southern Song dynasty (1127–1279). This phenomenon correlates strongly with Zhu Xi’s promotion of Yan Hui’s philosophical ideals. Evidently, text reuse analysis enables efficient organization and comparison of textual information, thereby facilitating a more systematic and comprehensive examination of the transmission of ancient Chinese philosophical thought.
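The aggregation behind such a chapter-by-period view can be sketched as a simple count. The source does not define citation intensity precisely, so this sketch assumes it is the raw number of detected reuse instances per (chapter, period) pair. The function names and the records are made up for illustration and do not reflect the platform's data.

```python
from collections import Counter


def citation_intensity(reuse_records):
    """Count detected reuse instances per (source_chapter, period) pair.

    reuse_records: iterable of (source_chapter, period_of_reusing_text).
    """
    return Counter(reuse_records)


def peak_period(intensity, chapter):
    """Return the period in which the given chapter is reused most often."""
    by_period = {
        period: count
        for (chap, period), count in intensity.items()
        if chap == chapter
    }
    return max(by_period, key=by_period.get)


# Made-up records, for illustration only.
toy_records = [
    ("Yan Yuan", "Southern Song"),
    ("Yan Yuan", "Southern Song"),
    ("Yan Yuan", "Tang"),
    ("Xue Er", "Tang"),
]
toy_intensity = citation_intensity(toy_records)
```

A normalized variant, for example dividing by the size of each period's corpus, may be more appropriate when periods differ greatly in the amount of surviving text.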

9. Future Work

Although the proposed method achieves significant improvements compared to the baseline models, the use of an LLM for synthesizing training data still incurs additional computational costs. In future work, we plan to employ a smaller-scale (8B parameters) domain-specific LLM for classical text to reduce computational overhead. Additionally, we will design differentiated prompt templates for positive and negative sample generation tailored to different types of classical texts. For dataset construction, we will further expand the dataset scale while ensuring annotation quality through multi-round manual verification and expert review mechanisms.

Currently, research on text reuse detection and text semantic similarity has predominantly concentrated on enhancing models’ semantic representation capabilities through contrastive learning strategies, while innovation in the underlying model architectures remains relatively limited. The method proposed in this study represents an innovation at the training-strategy level, improving model performance through loss function optimization. In future work, we plan to design dedicated model architectures tailored to the characteristics of ancient Chinese texts, aiming to more effectively address the unique challenges of detecting text reuse in classical literature. To further enhance representational capacity, we will explore incorporating domain-specific features—such as glyph structures, prosodic patterns, and culturally significant keywords (e.g., allusions)—into the pre-training framework. Moreover, we aim to move beyond semantic-level relevance to capture higher-order conceptual and thematic relationships across texts. These extensions are expected to broaden the applicability of the model and provide deeper analytical insights for digital humanities research.

10. Conclusions

In this paper, we present a novel knowledge distillation-based approach for text reuse detection in ancient Chinese literature, which substantially improves the semantic understanding of classical texts while preserving computational efficiency. Furthermore, we develop a high-quality annotated dataset to provide a reliable benchmark for evaluating relevant algorithms. Two case studies are conducted to illustrate the applicability of the proposed method in cultural analysis, thereby offering a new technical avenue for the digitization and intelligent processing of cultural heritage.

Author Contributions

Conceptualization, J.W. and B.F.; methodology, B.F.; software, B.F.; validation, B.F.; formal analysis, J.W. and B.F.; resources, J.W.; data curation, B.F.; writing—original draft preparation, B.F.; writing—review and editing, J.W. and B.F.; supervision, J.W.; project administration, B.F.; funding acquisition, J.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research is supported by the NSFC project “The Construction of the Knowledge Graph for the History of Chinese Confucianism”, grant number 72010107003.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Chang, E.; Hou, H.; Cao, L. Research on Automatic Version Comparison and Analysis of Ancient Book and Its Realization. J. Chin. Inf. Process. 2007, 2, 83–88.

- Ma, C.; Chen, X.; Qu, W. Automatic Analysis of Comments in Commentary Literature. Comput. Sci. 2012, 39, 220–223.

- Deng, Z.; Yang, H.; Wang, J. A Comparative Study of Shiji and Hanshu from the Perspective of Digital Humanities. In Proceedings of the 21st Chinese National Conference on Computational Linguistics, Nanchang, China, 14–16 October 2022.

- Cheng, N. The Intertextual Space Between Wenxuan and the Tang Poetry from the Perspective of Digital Humanities. J. Tsinghua Univ. (Philos. Soc. Sci.) 2023, 6, 75–88+222.

- Duan, S.; Wang, J.; Yang, H.; Su, Q. Disentangling the cultural evolution of ancient China: A digital humanities perspective. Humanit. Soc. Sci. Commun. 2023, 10, 310.

- Wang, D.; Liu, C.; Zhu, Z.; Liu, J.; Hu, H.; Shen, S.; Li, B. Construction and Application of Pre-trained Models of Siku Quanshu in Orientation to Digital Humanities. Libr. Trib. 2022, 42, 31–43.

- Hinton, G.; Vinyals, O.; Dean, J. Distilling the knowledge in a neural network. arXiv 2015, arXiv:1503.02531.

- Sturgeon, D. Digital approaches to text reuse in the early Chinese corpus. J. Chin. Lit. Cult. 2018, 5, 186–213.

- Wang, S. The Study of Sentence Alignment between Shijing with Its Comments and Annotation. Master’s Thesis, Nanjing Agricultural University, Nanjing, China, 2018.

- Liang, Y.; Wang, D.; Huang, S. Research on Automatic Mining of Variants Expressing the Same Event in the Ancient Books. Libr. Inf. Serv. 2021, 9, 97–104.

- Ye, W.; Hu, D.; Wang, D.; Zhou, H.; Liu, L. Research on Unsupervised Automatic Intertextual Discovery Based on Large Models of Ancient Books. Libr. Inf. Serv. 2024, 68, 41–51.

- Li, W.; Shao, Y.; Bi, M. Data Construction and Matching Method for the Task of Ancient Classics Reference Detection. J. Chin. Inf. Process. 2024, 11, 171–180.

- Gao, T.; Yao, X.; Chen, D. SimCSE: Simple contrastive learning of sentence embeddings. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Punta Cana, Dominican Republic, 7–11 November 2021.

- Zhang, J.; Liu, J.; Deng, J.; Liu, Y.; Huang, Q. Research on Similarity Calculation of Traditional Chinese Medicine Classical Prose: A SimCSE Approach to Fusing Domain Knowledge with Generative. J. Mod. Inf. 2025, 45, 49–59.

- Mikolov, T.; Sutskever, I.; Chen, K.; Corrado, G.S.; Dean, J. Distributed representations of words and phrases and their compositionality. In Proceedings of the 26th International Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 5–8 December 2013.

- Pennington, J.; Socher, R.; Manning, C.D. GloVe: Global vectors for word representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing, Doha, Qatar, 25–29 October 2014.

- Reimers, N.; Gurevych, I. Sentence-BERT: Sentence embeddings using Siamese BERT-networks. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing, Hong Kong, China, 3–7 November 2019.

- Yan, Y.; Li, R.; Wang, S.; Zhang, F.; Wu, W.; Xu, W. ConSERT: A contrastive framework for self-supervised sentence representation transfer. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing, Virtual Event, 1–6 August 2021.

- Zhou, K.; Zhang, B.; Zhao, W.X.; Wen, J.R. Debiased contrastive learning of unsupervised sentence representations. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics, Dublin, Ireland, 22–27 May 2022.

- Nishikawa, S.; Ri, R.; Yamada, I.; Tsuruoka, Y.; Echizen, I. EASE: Entity-aware contrastive learning of sentence embedding. In Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Seattle, WA, USA, 10–15 July 2022.

- Liu, J.; Liu, J.; Wang, Q.; Wang, J.; Wu, W.; Xian, Y.; Zhao, D.; Chen, K.; Yan, R. RankCSE: Unsupervised Sentence Representations Learning via Learning to Rank. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics, Toronto, ON, Canada, 9–14 July 2023.

- Zhang, J.; Lan, Z.; He, J. Contrastive Learning of Sentence Embeddings from Scratch. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Singapore, 6–10 December 2023.

- Wang, L.; Yang, N.; Huang, X.; Yang, L.; Majumder, R.; Wei, F. Improving text embeddings with large language models. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics, Bangkok, Thailand, 11–16 August 2024.

- Thirukovalluru, R.; Wang, X.; Chen, J.; Li, S.; Lei, J.; Jin, R.; Dhingra, B. SumCSE: Summary as a transformation for contrastive learning. In Findings of the Association for Computational Linguistics: NAACL 2024; Association for Computational Linguistics: Mexico City, Mexico, 2024.

- Bradley, R.A.; Terry, M.E. Rank Analysis of Incomplete Block Designs: I. The Method of Paired Comparisons. Biometrika 1952, 39, 324–345.

- Plackett, R.L. The Analysis of Permutations. J. R. Stat. Soc. Ser. C 1975, 24, 193–202.

- Luce, R.D. Individual Choice Behavior: A Theoretical Analysis. J. Am. Stat. Assoc. 2005, 67, 1–15.

- Wang, S. Automated Fault Diagnosis Detection of Air Handling Units Using Real Operational Labelled Data Using Transformer-based Methods at 24-hour operation Hospital. Build. Environ. 2025, 282, 113257.

- Fleiss, J.L. Measuring nominal scale agreement among many raters. Psychol. Bull. 1971, 76, 378–382.

- Landis, J.R.; Koch, G.G. The Measurement of Observer Agreement for Categorical Data. Biometrics 1977, 33, 159–174.

- Chen, N.; Shou, L.; Gong, M.; Pei, J.; Cao, B.; Chang, J.; Jiang, D.; Li, J. Alleviating over-smoothing for unsupervised sentence representation. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics, Toronto, ON, Canada, 9–14 July 2023.

- Reimers, N.; Beyer, P.; Gurevych, I. Task-oriented intrinsic evaluation of semantic textual similarity. In Proceedings of the 26th International Conference on Computational Linguistics: Technical Papers, Osaka, Japan, 11–16 December 2016.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).