Abstract

This paper explores the integration of Artificial Intelligence and semantic technologies to support the creation of intelligent Heritage Digital Twins, digital constructs capable of representing, interpreting, and reasoning over cultural data. This study focuses on transforming the often fragmented and unstructured documentation produced in cultural heritage into coherent Knowledge Graphs aligned with internationally recognised standards and ontologies. Two complementary AI-assisted workflows are proposed: one for extracting and formalising structured knowledge from heritage science reports and another for enhancing AI models through the integration of curated ontological knowledge. The experiments demonstrate how this synergy facilitates both the retrieval and the reuse of complex information while ensuring interpretability and semantic consistency. Beyond technical efficacy, this paper also addresses the ethical implications of AI use in cultural heritage, with particular attention to transparency, bias mitigation, and meaningful representation of diverse narratives. The results highlight the importance of a reflexive and ethically grounded deployment of AI, where knowledge extraction and machine learning are guided by structured ontologies and human oversight, to ensure conceptual rigour and respect for cultural complexity.

1. Introduction

It is said that Helen of Troy, the most beautiful woman in the world according to Greek mythology, never set foot on the shores of Asia Minor. In a dramatic and unsettling version of the myth, told by Euripides and echoed in later traditions, it was not the real Helen whom Paris carried off to Troy. Instead, a simulacrum, an eidolon, a perfect likeness devoid of substance, was sent by the gods to take her place (this version of the myth is attested in Apollodorus, Epitome III. 5; Euripides, Electra, 128 and Helen 31 ff.; Servius on Virgil’s Aeneid, I. 655 and II. 595; Stesichorus, quoted by Tzetzes, On Lycophron 113). While the true Helen waited in silence in Egypt, perhaps unaware of the war unfolding in her name, it was her image that ignited desire, deceived kings, and plunged the world into its most storied conflict [1]. The eidolon was not a lie but a form with agency: it acted, provoked, and transformed the course of history. Though lacking life of its own, it possessed real effectiveness.

In this figure of the operative double, of an image that does not merely represent but intervenes, there is a striking anticipation of the modern notion of digital twin. Such an entity is designed not just to mirror the original but to accompany or replace it in action, to operate in its stead, often with greater reach and precision. A digital twin, to fulfil this role, must do more than resemble. Like Helen’s phantom, it must be endowed with structured intelligence and a “grammar” that sustains its coherence. The story of Helen’s eidolon speaks to an ancient intuition: that a likeness, when crafted with precision and animated with purpose, can operate in the world with consequences equal to, or even surpassing, those of its original. This mythic logic finds a contemporary counterpart in the digital age, where cultural entities are increasingly represented by what might be called simulacra, i.e., constructed doubles whose very name shares etymological roots with the verb to simulate. These digital twins are not inert copies but dynamic, structured representations designed to engage with the world through processes of analysis, interaction, and prediction.

In the realm of cultural heritage, the digital twin has evolved far beyond the notion of a static visualisation or isolated model. It now constitutes a complex informational organism, integrating diverse layers of documentation: historical texts, scientific analyses, sensory data, and curatorial knowledge. To preserve coherence across this multiplicity of sources, ontologies provide the foundational structure to build the memory of the digital twin by serving as its semantic architecture and allowing knowledge to be expressed formally, queried effectively, and shared across systems and institutions.

Yet, structure alone cannot suffice. Just as Helen’s eidolon required a divine breath to become operative, digital twins must be endowed with intelligence in order to act meaningfully. Artificial Intelligence, when guided by ontological frameworks, enables digital twins to transcend passive representation. Through the semantic interpretation of textual and visual sources, the detection of patterns in environmental data, and the simulation of complex behaviours, AI seems ideal to become the interpretive engine of the twin. In this framework, natural language processing, entity recognition, semantic inference, and predictive modelling are no longer isolated functionalities but become part of a coherent system of knowledge and memory production.

A distinctive innovative aspect of this study is the systematic combination of Artificial Intelligence and ontologies in the construction of digital twins. Previous approaches, especially in cultural heritage, have often relied on AI without semantic guarantees, or on ontological models without operational integration. The framework presented here links the two dimensions inseparably: ontologies act as the semantic backbone that ensures transparency, coherence, and interoperability, while AI functions as the cognitive engine that extracts, enriches, and interprets knowledge from the complex body of documentation that sustains the digital twin, including analytical reports, curatorial records, and other heritage data. It is precisely in the synergy between ontologies and AI that the true potential of digital twins can unfold. Their mutual interaction constitutes the epistemological core of a new generation of digital twins in which ontologies ensure that AI models are not only efficient but also transparent, interpretable, and anchored in shared conceptual frameworks. In return, AI can enrich semantic information by identifying latent connections, disambiguating meanings, and populating knowledge graphs with contextually valid information. The result is a self-expanding semantic system, capable of both reasoning and remembering, a system that learns, explains, and operates in alignment with the epistemic and ethical values of cultural heritage research.

This paper is structured as follows: Section 2 outlines the historical evolution of digital twins, particularly those enhanced by AI, from their origins in engineering and aerospace to their gradual redefinition within the cultural heritage domain. Section 3 introduces the Heritage Digital Twin and its associated ontology, designed to implement cognitively enriched, conceptually expressive constructs. Section 4 explores the use of new Artificial Intelligence technologies to extract knowledge from cultural heritage documentation and encode it according to the semantics of ontological models widely used in this domain, in order to build the knowledge graphs of Heritage Digital Twins. Section 5 investigates how knowledge graphs can be used to enrich the semantic toolbox of AI systems, transforming them into agents capable of reasoning over large and intricate bodies of data and answering complex scientific questions formulated in natural language. Section 6 presents a case study in which the entire pipeline is tested on a set of scientific reports from the ARTEMIS project documentation. The information identified and distilled from these reports populates the knowledge graphs that serve as the engine of the Heritage Digital Twins; the same graphs are then used to enrich and reinforce AI components, enhancing their ability to support advanced functionalities such as natural language interfaces, intelligent querying, and adaptive interaction with users. Section 7 discusses the potential of these technologies together with the risks inherent in their application, particularly when not subject to rigorous human oversight. Section 8 offers concluding remarks and future perspectives.

2. Digital Twins: From Conceptual Models to Operational Systems

The relevance of digital twins has steadily expanded across disciplines, reflecting their growing role as sophisticated digital counterparts of real-world entities and systems [2,3]. While often loosely applied to any virtual replica, the term also designates complex, structured information systems capable of interfacing with the physical world. Initially developed to enhance industrial performance, optimise processes, and anticipate failures, digital twins have since been adopted in a variety of domains for their ability to improve planning decisions [4], especially by integrating multi-source data [5].

The origins of digital twins are often traced to NASA’s simulation technologies in the 1960s, which allowed engineers to replicate space conditions virtually, an epistemological necessity in the face of inaccessibility [6]. Although the term “digital twin” formally emerged only in 2010 [7], the conceptual lineage is deeper and more complex, as is often the case with terms that retrospectively colonise prior practices. The applicability of digital twins has expanded dramatically since then. In manufacturing, for instance, digital twins underpin Industry 4.0 and its successor paradigms, enabling predictive maintenance, lifecycle optimisation, and human–machine collaboration [8]. In the construction sector, digital twins facilitate accurate simulation of design scenarios before physical implementation, reducing costs and enabling early detection of critical flaws. This evolution is most visible in large-scale projects. Urban applications, perhaps the most ambitious in scale and implication, leverage digital twins to model smart cities, integrating traffic, land use, energy consumption, and citizen feedback into coherent, semantically grounded infrastructures. A digital twin of the city of Zurich is being developed to support sustainable urban planning [4], and Singapore’s new city-scale twin integrates data from sensors, satellites, and drones for growth management and environmental monitoring [9]. Moreover, the European Commission’s Destination Earth initiative envisions a planetary-scale digital twin to simulate climate dynamics, incorporating not only physical systems but also social and behavioural data [10]. This human-in-the-loop paradigm reflects a growing recognition that digital twins are not neutral mirrors but value-laden constructs. They encode assumptions about relevance, causality, and intervention and thus require governance frameworks that ensure transparency, ethical integrity, and stakeholder accountability [11].

When transposed to the cultural heritage domain, however, digital twins demand a more nuanced methodological framework. Unlike industrial or urban applications, where twins are created before or alongside their physical counterparts, heritage assets typically predate their digital representations by centuries and embody multiple temporal, material, and cultural layers. Consequently, their digital twins must account for both tangible and intangible dimensions, incorporating expressions of human creativity, identity, collective traditions, performances, and community practices that carry historical, artistic, social, and symbolic significance [12,13,14]. Within this perspective, digital twin technology has increasingly been tested across diverse areas of cultural heritage, producing significant though still heterogeneous results. In archaeology, digital twins have been used to document and virtually reconstruct sites and artefacts, providing new opportunities for exploring contexts that are otherwise fragmented or inaccessible [15]. The museum sector has adopted similar strategies for collections management and preventive conservation [16], with major institutions such as the Victoria & Albert Museum and the Louvre experimenting with large-scale digitisation to safeguard fragile artefacts and to expand access to audiences worldwide [17,18]. Monuments and architectural heritage have also been at the forefront of experimentation: the digital twin of Notre-Dame Cathedral, for instance, now functions as both a record of the building before the devastating fire of 2019 and a central resource for its ongoing restoration [19]. Comparable approaches have been applied to historic buildings, where parametric twins integrate sensor data to monitor indoor climate conditions and support conservation planning [20], as demonstrated by the cases of Löfstad Castle in Sweden [21] and Sant’Andrea’s church in Pistoia, Italy [14].

At the scale of urban and landscape heritage, projects such as the Great Wall Resource Management Information System [22] have generated detailed digital archives of UNESCO World Heritage sites across continents, demonstrating how digital twin technologies can be applied to cultural landscapes of vast territorial extension. Finally, in the field of restoration, digital twins enriched with AI-powered inpainting and 3D reconstruction have enabled the simulation of conservation treatments and the digital recovery of degraded artefacts, offering conservators a dynamic decision-support tool before interventions on the original object.

These examples demonstrate that, in most cases, applications of digital twin technology in cultural heritage remain predominantly tied to 3D visualisations, echoing late twentieth-century efforts to virtually reconstruct monuments and artefacts. As the model is evolving, the digital twin is gradually ceasing to be merely a static reflection, becoming a conceptual framework open to reinterpretation of operational and behavioural dimensions across disciplinary borders.

2.1. Digital Twins and Artificial Intelligence

Artificial Intelligence has increasingly emerged as a transformative force in the cultural heritage domain, offering powerful methods for analysing, interpreting, and even recreating the past. Its capacity to process vast datasets, recognise patterns, and generate new content has enriched the arsenal of digital tools available to scholars, conservators, and institutions in unprecedented ways. Applications span an impressively wide spectrum. Generative systems have been used to complete unfinished compositions by legendary composers, providing novel insights into artistic style and creative processes [23]. In parallel, advances in natural language processing have enabled the reconstruction and interpretation of ancient texts written in extinct or poorly understood languages, contributing to philology, epigraphy, and the study of lost literatures [24]. In archaeology and palaeoenvironmental studies, AI-driven reconstructions have recreated the aspect of long-lost environments and past landscapes, allowing researchers to simulate ecological conditions, visualise historical settings, and contextualise human activity [25,26].

Conservation and restoration represent another key area where AI has proven invaluable. In art history, recent advances have explored the innovative application of AI in the reconstruction and preservation of historical paintings, sculptures, ceramics, and other artefacts, offering new solutions to the challenges of traditional restoration [27]. The same technologies have been applied to questions of provenance, helping to trace the circulation of cultural objects across time and space through automated analysis of stylistic or material features [28]. Systems capable of detecting deterioration patterns in artworks and monuments provide guidance on preventive conservation strategies [29,30], while generative and inpainting algorithms have been successfully employed to support the virtual restoration of damaged paintings, sculptures, ceramics, and manuscripts [31]. These developments not only facilitate better-informed interventions but also open the possibility of testing conservation hypotheses in digital environments before their implementation on the original artefacts.

The incorporation of Artificial Intelligence into digital twin technologies is ushering in a significant conceptual and operational shift. No longer confined to functioning as elaborate digital counterparts of physical entities, digital twins are now evolving into systems endowed with interpretive, adaptive, and semantically structured intelligence, capable not just of replicating but also of understanding and responding to the complexities of their real-world counterparts. This transformation marks a notable epistemological threshold, recasting the digital twin as an active agent of interpretation rather than a passive conduit of representation. It is AI that propels this transition, furnishing digital twins with mechanisms of learning, inference, and autonomous decision-making [8]. As such, digital twins are no longer mere digital projections but are emerging as computational systems capable of analysis, simulation, and strategic recommendation [32]. Evidence of this transformation is manifesting across a broad range of domains. In manufacturing, for example, AI-enhanced digital twins support predictive maintenance, improve workflow design, and enable real-time responsiveness by interpreting sensory inputs and operational histories. Factories thus begin to resemble adaptive environments, governed not solely by pre-programmed rules but by evolving patterns of interaction and feedback [33]. In urban systems, the convergence of AI and digital twins is transforming how cities are modelled, understood, and governed. These intelligent frameworks are being applied to simulate environmental and infrastructural conditions—ranging from carbon emissions and heat distribution to traffic and pedestrian behaviour. As a result, municipalities are empowered to enact more nuanced and anticipatory interventions [34,35].

Importantly, the symbiosis between AI and digital twins is extending its reach beyond industrial or scientific contexts, entering realms such as cultural heritage with promising, often experimental, applications. One particularly innovative avenue involves the reconstruction of lost or undocumented heritage through AI-based image generation. Here, AI is employed to synthesise visualisations derived from oral testimonies and collective memory, complementing tangible-based HBIM methods [36].

In heritage-related scenarios more broadly, AI integration into digital twins is already proving beneficial for documentation, conservation, and public access. AI-driven twins are being employed to improve crisis management by integrating multi-sensor data and supporting real-time decision-making, with clear implications for the protection of heritage assets in emergency situations [37]. In cultural tourism contexts, the combination of digital twins and AI enables predictive monitoring, preventive conservation, and immersive storytelling, enhancing both preservation and visitor engagement [38]. AI-enabled digital twins are also increasingly able to reshape the relationship with artworks and heritage environments by allowing replication, remote access, and large-scale data integration [17]. More advanced approaches are exploring semantically grounded twins enhanced with memory-enabled AI agents, capable of reasoning over time and providing transparent, value-aligned support for long-term preservation planning [39]. Automation is expediting data collection, facilitating large-scale digitisation, and enhancing accessibility via automated transcription and translation tools. At the same time, AI is processing real-time sensor data to detect threats and recommend countermeasures, increasing the system’s responsiveness to environmental risks or structural degradation. In this way, conservation scenarios can be virtually simulated, allowing interventions to be evaluated without exposing heritage objects to physical risk [14].

Despite these advances, the implementation of AI within digital twin ecosystems remains uneven and often constrained. Many applications persist in isolated pilot stages, lacking the infrastructural robustness or institutional momentum to scale effectively [40]. The interdependence of software, hardware, and human expertise highlights the need for deployment strategies that go beyond technical engineering to embrace socio-technical coordination [41]. Also, on a conceptual level, the integration of AI into the epistemic fabric of digital twins remains underdeveloped. While machine learning models excel in identifying correlations and predicting outcomes, they often fall short in contributing to the construction of coherent knowledge systems [42]. Nevertheless, progress continues steadily, and the expectation is that AI will increasingly endow digital twins with the capacity to fulfil their epistemic and operational potential. Yet this trajectory raises critical concerns about transparency and information quality. The internal logic of many AI systems remains opaque, making it difficult to trace how conclusions are reached—especially problematic in domains requiring interpretive subtlety, such as cultural heritage, urban planning, or medicine. Here, data in isolation is insufficient; meaningful engagement demands systems that can not only reason but also explain. An overreliance on autonomous inference without appropriate mechanisms for interpretability risks introducing errors, distortions, or even ethical blind spots. This situation prompts a foundational question: how far can epistemic authority be entrusted to systems incapable of articulating their own reasoning? Especially now that decisions once reserved for human deliberation start to be automated by systems that remain inaccessible to scrutiny, ensuring intelligibility and accountability is thus not a secondary concern but a prerequisite for ethical and sustainable implementation [11].

2.2. Ontologies: Illuminating Artificial Intelligence

In response to these fundamental questions, emerging approaches are foregrounding the importance of structured semantic architectures as a prerequisite for meaningful AI deployment. Rather than relying solely on data quantity or statistical modelling, these strategies emphasise the need for carefully curated knowledge frameworks, defining concepts, relationships, and ontological commitments, as foundational to advanced reasoning and explanation [43]. Ontologies, in this regard, are serving a dual role: they are enabling more nuanced and context-sensitive AI operations and also acting as safeguards against the risks of misinterpretation or hallucination [44]. Additionally, ontologies are employed in the development of explainable machine learning pipelines, addressing crucial aspects like feature categorisation and metadata description, which are fundamental for the transparency of the machine learning process [45]. By embedding formal logic and expert-curated structure within digital twin systems, ontologies help ensure that AI outputs remain anchored in coherent and verifiable knowledge domains. This allows intelligent systems not merely to function but to explain, interrogate, and evolve. In doing so, they contribute to the transformation of digital twins into epistemic infrastructures: semantic, reflexive, and dynamically coupled with the evolving landscape of human understanding [46]. This role of ontologies as a foundation for trustworthy AI is a crucial aspect, since they are able to provide more structured and explicit knowledge that enables AI systems to better articulate their reasoning. This extends beyond mere performance, addressing ethical concerns such as bias mitigation and transparency enhancement.

2.3. Towards the Cognitive Heritage Digital Twins

The Heritage Digital Twin currently being designed and developed [12,13,14,47] is shaped as a point of confluence: a space where these diverse technologies do not merely coexist but actively intertwine. It is precisely at this intersection that ontologies, Artificial Intelligence, and semantic infrastructures converge to generate a new kind of cultural intelligence. As evidenced in Section 2.1, typical applications of digital twins in heritage contexts largely focus on high-resolution 3D models, often derived from laser scanning or photogrammetry. These visual representations, while technically sophisticated, are semantically shallow and remain inert unless complemented by rich contextual metadata and interpretive frameworks, offering a simulacrum in the visual sense but lacking interpretive depth.

Rather than reducing cultural heritage to its visual or geometric likeness, the paradigm of Heritage Digital Twin embraces the full constellation of digital documentation that relates to real-world cultural entities, whether movable, like artworks and artefacts; immovable, like monuments or architectural sites; or even intangible, such as rituals, traditions, and other expressions of cultural memory. This paradigm shifts the focus away from purely 3D-centred representations, proposing instead a holistic digital ecosystem that brings together visual depictions, textual accounts, scientific analyses, conservation records, and historical interpretations. It is a vision grounded in the belief that meaning arises not from isolated forms but from the interplay of all the fragments through which heritage is remembered, transmitted, studied, and understood.

Cultural entities, in fact, are governed not solely by physics but essentially by memory. They cannot be fully described through geometry or physics alone, since they carry meanings, contexts, and symbolisms that resist reduction to quantitative attributes. As a result, the replication of a heritage entity cannot rest on form alone: it requires a cognitive and semantic infrastructure and the ability to articulate not only what an object is but also what it means, what it represents, and what it has undergone across time. As such, the digital twin in this domain demands not only a new technical infrastructure but a rethinking of its very ontological assumptions.

At the heart of any meaningful digital representation of cultural heritage lies a conceptual operation as much as a technical one: the deliberate choice to model reality through shared ontological commitments. Cultural entities are not inert objects; they are constructed through layers of description, interpretation, and transmission. If their digital counterparts are to achieve more than a superficial resemblance, they must be capable of reflecting this diachronic and interpretive richness. Ontologies provide the necessary framework for this task: a rigorous grammar through which cultural knowledge may be structured, preserved, and subjected to critical inquiry. By expressing information as entities and relationships, ontologies enable a deeper representation of the cultural object’s life history, its transformations, and the meanings it acquires across time. In this framework, Artificial Intelligence can also reveal its full potential as a catalytic instrument capable of navigating, extracting, and reassembling meaning from the vast, fragmented archives of human knowledge. At the same time, it can ingest and digest this knowledge to enhance its own capabilities and serve as a privileged tool for exploring and interrogating the information embedded in the digital twin.

The culmination of this trajectory is thus the development of a new construct that unites the expressive power of ontologies with the interpretive capabilities of Artificial Intelligence in order to represent, reason about, and interact with cultural entities. Far from being a mere digital copy of an object’s shape, the Heritage Digital Twin becomes an evolving semantic organism, an animated simulacrum (like Helen’s eidolon) which does not merely bear the external resemblance of the cultural object but reproduces it in its entirety. In its most advanced form, the Heritage Digital Twin can become an agent (i.e., a cognitive entity) capable of dialogue, inference, and response within a meaningful digital continuum.

In this perspective, the present study proposes some innovative methodological aspects, building upon and extending existing practices. A distinctive contribution lies in the systematic combination of artificial intelligence and ontologies for the construction of Heritage Digital Twins (HDTs). While prior work in cultural heritage has often approached these technologies in isolation—relying either on AI without robust semantic guarantees or on ontological models lacking operational integration, as illustrated above—the proposed framework aims at unifying these two dimensions. Specifically, widely validated ontologies in cultural heritage documentation serve as the semantic backbone, ensuring the transparency, coherence, and interoperability of the data model. Concurrently, AI operates as the cognitive engine, dynamically extracting, enriching, and interpreting knowledge from the heterogeneous body of information that sustains the digital twin. This approach not only leverages the power of AI to process vast amounts of data—from analytical reports and curatorial records to conservation data—but also embeds this process within a structured, semantically rigorous framework. This ensures that the insights generated by the AI are verifiable and contextually relevant, a key advancement over previous approaches.

On this basis, this research pursues two main objectives: (i) to demonstrate how the proposed methodology facilitates the construction of Heritage Digital Twins by systematically examining and integrating heterogeneous documentation into a coherent and semantically grounded knowledge base and (ii) to show how this combined paradigm enhances the reactivity and cognitive potential of Heritage Digital Twins, enabling them to respond more effectively to analytical, conservation, and interpretative needs. The feasibility of these objectives is illustrated through two complementary case studies that show the practical applicability of the proposed methodology. On the one hand, ontologies and AI engage with the epistemic “archaeology” of cultural information, retrieving, structuring, and semantically articulating the dispersed and often latent knowledge embedded in visual and textual heritage records. On the other, they explore how this extracted knowledge, once formalised within a coherent ontological framework, can be reintroduced into AI systems to enhance their capacity for informed reasoning, contextual sensitivity, and traceable inference. A full account of the complete creation of a Cognitive Heritage Digital Twin would require a much larger study, given the scale and complexity of the task, which encompasses vast amounts of documentation, data integration, and long-term monitoring. Far from exhausting the spectrum of possibilities opened by these technologies, this study exemplifies one of their most immediately impactful trajectories: the retrieval, organisation, and strategic deployment of knowledge embedded in cultural heritage documentation. The experiments conducted suggest that the procedures used could be of value to anyone intending to address the same issues, especially in the cultural heritage sector, where this type of experimentation is particularly needed.

3. Digital Twins and the Modelling of Knowledge

This paper builds upon the theoretical foundations introduced in our previous work on the Reactive Heritage Digital Twin and its associated ontology, offering a more operational perspective. It presents the design and implementation of AI-assisted semantic pipelines for extracting, structuring, and reasoning over cultural information. By combining ontologies with intelligent processing components, it explores how to construct digital twins that are not only informative but also sentient in their logic and responsive in their behaviour. More specifically, this paper examines two complementary uses of Artificial Intelligence within this framework, situated at opposite ends of the semantic pipeline. On the one hand, AI is deployed to extract knowledge from unstructured textual sources, in particular heritage science reports, and to align it with ontological structures, thereby enriching the digital twin’s semantic graph with scientific information. On the other hand, the populated graph itself becomes a resource for training AI systems, enabling them to perform tasks such as querying semantic information through natural language or assisting in the extraction of new content. This virtuous cycle of knowledge generation and reinforcement creates a recursive architecture in which semantic data and intelligent agents co-evolve, gradually enhancing the digital twin’s cognitive capacity. The goal is to show how this technological convergence can support the development of truly intelligent Heritage Digital Twins: systems that do not merely reflect culture but actively participate in its documentation, understanding, and preservation.
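The two ends of the semantic pipeline described above can be illustrated with a deliberately minimal Python sketch. It is not the pipeline implemented in this study: a regular expression stands in for the AI extraction component, and the sentence template, the predicate names (used_technique, analysed, detected), and the function names are hypothetical, introduced only to show how text could become triples and how the resulting graph could in turn supply context for a natural language question.

```python
import re

# Assumption: report sentences follow one fixed template. A real system
# would use a language model here; the regex is only a stand-in.
PATTERN = re.compile(
    r"(?P<technique>\w+) analysis of the (?P<object>[\w ]+?) "
    r"detected (?P<finding>[\w ]+)\."
)


def extract_triples(report_text):
    """Extraction end: turn matching sentences into (s, p, o) triples."""
    triples = set()
    for i, match in enumerate(PATTERN.finditer(report_text), start=1):
        analysis = f"analysis_{i}"  # hypothetical identifier scheme
        triples.add((analysis, "used_technique", match["technique"]))
        triples.add((analysis, "analysed", match["object"]))
        triples.add((analysis, "detected", match["finding"]))
    return triples


def graph_context(graph, term):
    """Reuse end: collect every statement about the analyses that mention
    `term`, as plain text that could be handed to an AI model as context."""
    subjects = {s for s, _, o in graph if o == term}
    return sorted(f"{s} {p} {o}" for s, p, o in graph if s in subjects)


report = (
    "XRF analysis of the wooden panel detected lead white. "
    "Raman analysis of the blue pigment detected azurite."
)
graph = extract_triples(report)
for line in graph_context(graph, "azurite"):
    print(line)
```

In this toy form the graph is a plain set of tuples; the point is only that the same structure serves both as the output of extraction and as the input to retrieval.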

The conceptual architecture upon which the entire system is constructed is the Reactive Heritage Digital Twin Ontology (RHDTO), an extension built on the solid foundations of the CIDOC CRM [48] and designed to organise, interconnect, and semantically sustain the heterogeneous data that forms the informational substrate of cultural digital twins. Among the various ontological frameworks developed for the heritage domain, the CIDOC Conceptual Reference Model (CRM) is an internationally recognised standard (ISO 21127:2023 [49]). More than a model, CIDOC CRM constitutes an ecosystem that includes domain-specific extensions, such as CRMsci for scientific observation [50], CRMdig for digital provenance [51], and CRMhs for heritage science [52], which offer a nuanced vocabulary capable of accommodating the multifaceted nature of cultural data. The adoption of CIDOC CRM makes it possible to describe not just the form of an object but also the historical events, actors, techniques, and materials that constitute its biography. It is this ontological fluency, this capacity to speak the shared language of heritage knowledge, that renders the CIDOC CRM ecosystem a cornerstone of semantic continuity across the heritage information landscape.

In previous works, the structural components of the RHDTO have been articulated, delineating its main dimensions: the documentary one, which captures the breadth of scientific, historical, and multimedia documentation [12,13]; the reactive one, which models the operational mechanisms for real-world interaction through sensors, deciders, and actuators [14]; and the intelligent one, in which Artificial Intelligence is integrated to interpret, enrich, and activate knowledge [53]. To operationalise these dimensions, the RHDTO introduces a set of classes and properties designed to model the multifaceted nature of heritage entities and their digital counterparts with semantic precision. At the core lies the class HC1 Heritage Entity, a general abstraction encompassing both tangible (HC3 Tangible Aspect) and intangible (HC4 Intangible Aspect) dimensions of cultural heritage. The digital twin itself is represented by HC2 Heritage Digital Twin, conceived as a structured network of informational components, including digital reproductions, documentation, and the activities underpinning their creation and management. Notably, Ref. [53] illustrates how AI can serve as the cognitive engine of the digital twin, supporting analytical reasoning and decision-making within key components such as the Decider and the Actuator, thereby enhancing the system’s ability to respond to real-world conditions in a meaningful and context-aware manner. Each of these facets is modelled by means of dedicated classes, such as HC10 Decider and HC15 AI Component, and contributes to the evolving ecology of digital twins. Building on this, the subsequent sections describe several ways in which AI can be practically used for this purpose, offering an overview of the most recent methodologies and technologies available.

4. From Fragments to Knowledge

4.1. Natural Language Processing of Textual Documentation

NLP focuses on enabling machines to understand, interpret, and generate human language. As simple as this may sound from an agnostic point of view, handling human language is one of the most difficult tasks a machine can undertake. Unlike programming languages, which are based on mathematical rules and are explicitly designed to be machine-understandable, natural language is not as easily interpreted or represented in numerical form.

Over the past decade, the field of NLP has undergone a major transformation with the advent of deep learning and, more recently, Large Language Models (LLMs). These models are based on the Transformer architecture, which introduced the so-called attention mechanism:

“Given a set of vector values and a vector query, attention is a technique to compute a weighted sum of the values, dependent on the query.” [54]

In particular, LLMs use a specialised version called self-attention.

In a conventional lookup table, each query maps to exactly one key–value pair. In attention, by contrast, each query matches every key to a varying degree, and the returned result is a sum of the values weighted by the query–key match. Self-attention is the case in which queries, keys, and values are all derived from the same input sequence. This mechanism allows the model to assign importance to different tokens (which are often single words) within a sequence, enabling it to focus on the most relevant parts of the input when generating output.
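The weighted-sum behaviour described above can be made concrete with a minimal sketch of scaled dot-product self-attention. It is an illustration only: the toy vectors are invented, and the learned projection matrices that real Transformer layers apply to obtain queries, keys, and values are deliberately omitted.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention: every query is compared with every key,
    and the output is the sum of the values weighted by those matches."""
    d = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        weights.append(w)
        outputs.append(
            [sum(wj * v[i] for wj, v in zip(w, values)) for i in range(len(values[0]))]
        )
    return outputs, weights

# Toy sequence of three tokens with 2-dimensional vectors (illustrative values).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# Self-attention: queries, keys, and values all come from the same sequence.
# Simplification: no learned projection matrices are applied here.
outputs, weights = self_attention(tokens, tokens, tokens)
```

Each row of `weights` sums to one, so every output vector is a convex combination of the value vectors, with more weight given to the tokens whose keys best match the query.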

In addition to this, LLMs are trained on vast, multilingual corpora [55] and have demonstrated impressive capabilities across tasks [56] such as machine translation, question answering, summarisation, and semantic search. Their ability to perform complex tasks such as transfer learning, a machine learning technique in which the knowledge learned from a task is re-used to boost performance on a related task, few-shot generalisation [57], and contextual language modelling [58] has opened new frontiers in computational language understanding, particularly in complex or previously underexplored text domains [59].

One such domain is cultural heritage [60], where textual artefacts may include ancient manuscripts, epigraphic inscriptions, archival inventories, scanned PDFs of scientific analyses, and handwritten letters [61]. These materials offer rich but highly challenging content for computational analysis, often exhibiting historical language variants, inconsistent orthography, multilingual passages, and physical degradation, all of which complicate standard NLP workflows.

Recent efforts by computer scientists have focused on adapting LLMs and developing domain-specific NLP pipelines to address these challenges. Techniques such as fine-tuning on specialised corpora, integrating Optical Character Recognition (OCR) and Handwritten Text Recognition (HTR) outputs [62], and combining textual data with metadata or image features [63] are increasingly used to support tasks like:

- named entity recognition

- semantic enrichment

- machine-assisted transcription

- cross-document linking

As a result, state-of-the-art NLP is becoming an essential component in the digital preservation, accessibility, and scholarly analysis of cultural heritage texts.

4.2. LLMs for Identification and Semantic Extraction of Relevant Entities and Relationships

A critical challenge in the digital processing of cultural heritage texts lies in the identification and extraction of entities and relationships, a foundational step for building structured knowledge, supporting semantic search, and enabling automated reasoning.

Traditionally, this task has been addressed through Named Entity Recognition (NER) pipelines, which label predefined categories such as “person”, “location”, “organisation”, or “date” in unstructured text. Over the last decade, state-of-the-art NER systems have been greatly enhanced by transformer-based models like BERT and RoBERTa, showing high accuracy on well-structured, contemporary texts. However, these systems often struggle when applied to historical documents [64], multilingual corpora, or domain-specific ontologies, where named entities are ambiguous, infrequent, incompletely annotated, or entangled in complex relational structures (although some models have been successfully trained for specific domains, such as ArchaeoBERT [65]). In the context of cultural heritage, standard NER often proves insufficient. Texts such as archival inventories, epigraphs, marginalia, or curatorial notes frequently reference entities in indirect, partial, or obsolete forms [66]. Furthermore, the relevant concepts often extend beyond standard categories to include historical events, material properties, artistic styles, ritual roles, or geopolitical shifts, many of which are not well covered by off-the-shelf NER models [67].

These limitations pose significant barriers to aligning extracted information with structured ontologies or linking it to a Knowledge Graph.

Recent research has increasingly highlighted the intersection of LLMs and KGs, pointing to a paradigm shift in knowledge representation. On one side, LLMs are used to augment KGs by supporting knowledge extraction, construction, and refinement. On the other, KGs are used to augment LLMs in tasks such as training, prompt learning, or knowledge grounding [68,69]. These works underline a move towards hybrid models and pipelines that integrate both explicit ontological knowledge and the parametric knowledge encoded within LLMs.

KG construction itself is a complex process that requires the integration of information both from structured and unstructured data. Traditional pipelines treat these sources in isolation, struggling with the heterogeneity and noise typical of cultural heritage data. LLMs, by contrast, are trained across diverse sources and exhibit strong performance on tasks such as knowledge extraction [70], entity resolution [71], and relation alignment.

An LLM-based approach is employed in this experiment, enabling flexible, context-aware entity and relation extraction in the cultural heritage domain, eliminating reliance on pre-trained NER schemas.

Instead of classifying entities into fixed types, the LLM is prompted to identify relevant semantic units and their relationships in natural language, guided by domain-specific ontology definitions. This enables extraction and alignment of non-standard or composite entities and their mapping to structured entities in a customised Cultural Heritage Knowledge Graph.

This prompt-based methodology further supports the extraction of complex relationships, including part-whole, temporal, provenance, and scientific analysis relations, which are essential for modelling cultural heritage information in ontological formats.

By bypassing traditional NER in favour of in-context reasoning with LLMs, multiple tasks, such as entity disambiguation, coreference resolution, attribute assignment, and relation typing, are integrated into a single unified pipeline.

While LLM fine-tuning methods can leverage textual information from individual entities, formal semantics are often underutilised. Beyond template-based fine-tuning, additional LLM techniques, such as prompt learning and instruction tuning, present promising avenues to enrich semantic understanding [72,73]. Moreover, cultural heritage applications often rely on cross-domain knowledge, which highlights the importance of ontology alignment, that is, the identification of cross-ontology mappings among concepts, instances, and properties linked by equivalence, integration, or membership relationships.

Traditional alignment systems, such as LogMap [74], rely heavily on lexical matching and symbolic reasoning, which is often insufficient. Exploiting textual meta-information through LLMs offers a promising strategy for ontology alignment: even though pretrained models like BERT have been fine-tuned for this task [75], state-of-the-art LLMs are yet to be explored in this context. Our experiments show that this LLM-driven strategy significantly improves recall and semantic accuracy in entity-linking tasks when compared to traditional NER-based pipelines, particularly when dealing with fragmented historical records or low-resource languages. Moreover, the use of an open-source, locally deployable model ensures data privacy, customisability, and scalability, meeting the needs of cultural institutions and digital humanities projects that require transparent, interpretable, and adaptable AI tools.

Transitioning extracted information into an ontological encoding makes it unambiguous, facilitating integration into the Cultural Heritage Knowledge Graph and subsequent incorporation into Heritage Digital Twins, thus supporting richer and more semantically coherent digital representations of cultural assets.

4.3. Building Semantic Knowledge Graphs from Extracted Information

The process of encoding NLP-extracted data to ontological structures is never a mere act of correspondence; it is a conceptual translation that requires both technical rigour and hermeneutic sensitivity. Information drawn from textual descriptions, archival records, scientific datasets, or visual analysis carries within it layers of context, intention, and uncertainty. To encode such data into an ontological framework is to interpret it through a lens that seeks formal clarity without erasing epistemic nuance.

One of the major strengths of the RHDTO model lies in its ability to function as a connective tissue between datasets that originate from heterogeneous contexts, a conceptual harmonisation that permits the assembly of coherent knowledge graphs without sacrificing domain specificity. RHDTO provides the necessary apparatus for such knowledge transformation and transcoding, turning textual evidence and visual data into computable knowledge while preserving their contextual richness. This is particularly relevant in domains like heritage science, where the use of these conceptual tools allows for a fine-grained articulation of experimental procedures and datasets, as will be illustrated in Section 6. This semantic articulation reshapes the cognitive architecture through which heritage information is organised, shared, and understood, transforming the ontological framework of the knowledge graph into a philosophical intermediary between language and logic, between human interpretation and machine understanding [76]. In this way, the digital twin is endowed with a cognitive dimension. It no longer merely stores or displays data but interprets and internalises it within an ontological order that mirrors, albeit partially and provisionally, the complex web of human understanding.

Artificial Intelligence, particularly in its recent semantic incarnations, also plays an increasingly central role in this mapping process. AI systems can retrieve entities, classify relationships, and extract candidate assertions from unstructured sources and convert them into ontology-grounded semantic triples. This is one of the cases in which the synergy between AI and ontologies reveals its full potential, the former accelerating the identification of candidate knowledge, the latter ensuring that such knowledge is meaningfully situated within a coherent and reusable conceptual space. Yet the true work of semantic alignment remains interpretive, demanding expert judgement in choosing the appropriate classes, properties, and event structures through which to articulate extracted content within the framework of the knowledge graph.

5. From Knowledge to Intelligence

5.1. Using Knowledge Graphs to Build Knowledge-Enriched AI Agents

Once knowledge crystallises in the form of semantic graphs, it can be used to nurture and enhance AIs. The complexity of cultural heritage data demands the use of intelligent systems that can reason over both explicit content and the nuanced context behind it. Knowledge graphs, for this purpose, have emerged as a powerful tool to build knowledge-enriched Artificial Intelligence systems. Information is structured to preserve its rich semantics and remains machine-interpretable, which enables AI systems to achieve context-aware understanding and inference instead of limiting themselves to surface-level pattern recognition [77,78].

Cultural heritage tells a story, and as stories are composed of interrelated entities, they must be represented in such fashion. A knowledge base with its nodes and edges perfectly captures this web of elements, grounded in formal ontologies [78,79], and CIDOC CRM and its extensions play a crucial role in offering a shared conceptual schema that aligns historical data with contemporary semantic technologies [78,80,81].

In recent years, there has been a growing emphasis on reinforcing the reasoning capabilities of LLMs by integrating them with external knowledge structures, particularly knowledge graphs. While LLMs such as GPT, BERT, and Mistral [82,83,84] have demonstrated remarkable abilities in generating fluent and plausible language, they remain prone to hallucinations, generating incorrect or misleading content especially in high-stakes domains like healthcare or science [85].

These failures often stem from limitations in their training objective, typically maximising the log-likelihood of the next token without robust grounding in factual correctness.
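For reference, the standard next-token training objective can be written as follows. The notation is ours and does not come from the cited works: $\theta$ denotes the model parameters, $x_t$ the token at position $t$, and $T$ the sequence length.

$$\mathcal{L}(\theta) = \sum_{t=1}^{T} \log p_\theta\left(x_t \mid x_1, \dots, x_{t-1}\right)$$

Nothing in this objective rewards factual correctness directly: a fluent but false continuation can still receive a high likelihood if it resembles the patterns seen during training.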

To address this, researchers are increasingly integrating LLMs with knowledge graphs, which encode structured, semantically rich, and traceable factual knowledge [86].

Unlike opaque neural representations, knowledge graphs enable models to reason over explicit entities and relations, align responses to verifiable facts, and offer interpretability via provenance metadata [87,88].

This integration allows models not only to retrieve precise domain-specific information but also to construct explainable inference chains, thus improving transparency and trust. Moreover, the inclusion of knowledge graphs supports granular and contextually relevant knowledge retrieval, outperforming naive augmentation with random or noisy data [89]. By aligning entities and relationships from LLM outputs with ontologies and knowledge bases, systems can avoid shallow text pattern recognition and instead achieve conceptual generalisation and symbolic reasoning.

This approach also opens the door to continuous learning pipelines, where AI outputs are validated against, or enriched by, structured data and subsequently reintroduced into the model in the form of feedback loops. These loops allow AI systems to iteratively refine their knowledge representations, adjust for emerging information, and correct previously observed errors. Combined with recent advances in multimodal and temporal input integration—e.g., linking structured data with images or spatial metadata [68]—the use of knowledge graphs is becoming central to the development of knowledge-enriched, context-aware AI systems that are both accurate and adaptable. As structured data continues to permeate all domains of life, designing robust mechanisms to represent and integrate this data into LLMs remains a crucial and active research frontier.

5.2. Embedding Cultural Heritage Knowledge Graphs

To date, much of the literature has concentrated on the construction of knowledge graphs, focusing on entity recognition, ontology alignment, and data integration with ontologies such as CIDOC CRM [90,91]. More recently, however, researchers have begun to investigate the potential of graph representation learning as a means of exploiting these graphs for retrieval, enrichment, and semantic discovery.

A notable example is the CIDOC2VEC approach [92], which develops embeddings from CIDOC-CRM-compliant graphs. The method employs relative sentence walks through the KG to generate sequences of entities, which are then embedded using standard distributional techniques from natural language processing. These embeddings enable similarity-based retrieval and recommendation of cultural heritage objects across collections. CIDOC2VEC represents a significant advancement because it demonstrates how embeddings can facilitate semantic interoperability across institutions, allowing users to discover related artefacts even in the absence of explicitly asserted links.

Nevertheless, a critical limitation of CIDOC2VEC is its entity-centered, relation-agnostic design. By treating walks as linear sequences of entities, the approach largely ignores the semantic role of relations defined in CIDOC CRM, such as the distinction between P108 has produced (linking production events to artefacts) and P50 has current keeper (linking artefacts to institutions). While this simplification improves scalability, it results in embeddings that capture contextual co-occurrence but lack sensitivity to the multi-relational structure that is central to CRM’s expressiveness. Consequently, retrieval is biased toward entity similarity rather than relation-aware reasoning, limiting its ability to support complex queries (e.g., retrieving artefacts created in the same workshop but preserved in different sites).

In contrast, recent advances in graph neural networks (GNNs), and particularly composition-based graph convolutional models such as CompGCN [93], explicitly model both entities and relations during the embedding process. By applying relation-specific transformations during message passing, CompGCN embeddings encode not only which entities are related but also how they are related, producing richer and more semantically faithful representations. In mainstream KG domains (e.g., Freebase, DBpedia), this relation-aware modelling has been shown to significantly improve link prediction and semantic querying [93,94].
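To make the idea of relation-aware message passing more tangible, the following is a deliberately simplified sketch of a single aggregation step with two composition operators of the kind used by CompGCN (subtraction and element-wise multiplication). It is not the reference implementation: the learned weight matrices and non-linearity are omitted, and the graph, identifiers, and 2-dimensional vectors are hypothetical.

```python
def compose(entity, relation, op="sub"):
    """Combine a neighbour embedding with a relation embedding.
    'sub' and 'mult' are two composition operators of the kind used by CompGCN."""
    if op == "sub":
        return [e - r for e, r in zip(entity, relation)]
    if op == "mult":
        return [e * r for e, r in zip(entity, relation)]
    raise ValueError(f"unknown composition operator: {op}")

def aggregate(node, triples, ent_emb, rel_emb, op="sub"):
    """One simplified message-passing step for `node`: sum the composed
    (neighbour, relation) messages over its incoming edges.
    Simplification: learned weight matrices and the non-linearity are omitted."""
    out = [0.0] * len(ent_emb[node])
    for head, rel, tail in triples:
        if tail == node:
            msg = compose(ent_emb[head], rel_emb[rel], op)
            out = [o + m for o, m in zip(out, msg)]
    return out

# Hypothetical toy graph; identifiers and vectors are illustrative only.
ent_emb = {"production_1": [1.0, 0.0], "museum_A": [0.0, 1.0], "vase_7": [0.5, 0.5]}
rel_emb = {"P108_has_produced": [0.2, 0.1], "P50i_is_current_keeper_of": [0.0, 0.6]}
triples = [
    ("production_1", "P108_has_produced", "vase_7"),
    ("museum_A", "P50i_is_current_keeper_of", "vase_7"),
]
vase_repr = aggregate("vase_7", triples, ent_emb, rel_emb)
```

Because each message depends on the relation embedding, two artefacts connected to the same neighbours through different properties receive different representations, which is precisely what an entity-only walk cannot capture.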

Yet, from the review carried out in this research work, relation-aware graph convolutional models have not been applied in the querying phase of cultural heritage KGs, particularly those structured with CIDOC CRM. Existing approaches remain entity-focused, prioritizing similarity-based retrieval (as in CIDOC2VEC) or classification tasks (e.g., GCNBoost [95]) rather than supporting relation-aware querying and ranking. This presents a clear research gap.

This study addresses this gap by exploring the use of CompGCN embeddings to enhance querying in the RHDTO-grounded knowledge graph. Rather than focusing exclusively on graph construction or classification, we propose applying embeddings after construction, in the querying phase, enabling relation-sensitive similarity scoring across entities and events. This contributes a novel paradigm for semantic search and exploratory browsing, bridging the rigour of ontology-driven reasoning with the flexibility of embedding-based retrieval.

5.3. Access to Heritage Data in Natural Language

As the scale, scope, and complexity of data increase, so does the need to simplify the ways in which it is queried. Gone is the time when only a select few could access certain information thanks to their subject expertise and research skills; information is now within everyone’s reach, a half-thought and a click away. Archaeologists, scientists, and other specialists are not the only ones who may wish to browse the knowledge base, so in the context of Heritage Digital Twins, traditional querying methods risk proving obsolete when compared with approaches that use natural language as a medium [96].

If a user expresses their inquiry in their own language, the translation layer, natural language understanding (NLU), bridges the gap between human intention and structured semantic content [97]. The query gains a certain level of interpretability, relaxing constraints and allowing for fuzzy reasoning during retrieval across both structural and semantic levels [98]. This not only offers an advantage in terms of query guarantee (retrieving at least one result) but also gives users enough feedback to adjust and shape their queries so as to reach the answers they seek within the intricate knowledge graph.

At the core of this capability is a combination of tasks, including semantic parsing, entity linking, intent classification, and relationship inference, that decompose and interpret natural language queries [99]. Thanks to recent advances in prompt-based large language models, using separately trained components for each of these tasks is no longer a requirement, as LLMs offer an integrated zero- or few-shot approach to parsing natural language [100]. In the context of cultural heritage, where user queries are often imprecise, explanatory, and laden with historical, geographical, or culturally specific terms, properly instructed LLMs can produce a variety of structured outputs [77]. The LLM prompt includes the general context of the query to focus the interpretation, indications of what information to extract and how, and any additional enrichment the LLM should apply to its output. The output can therefore take the form of data triples, the building blocks of a graph structure [98].

Intuitively, these triples would be converted into SPARQL fragments to filter the knowledge graph data by the entities mentioned, and the results would be layered according to the specific query relationships. The SPARQL query, however, can only be constructed after ontology labels and properties have been correctly assigned to the processed query elements, a task that requires either the involvement of an expert for labelling and validation or an additional layer of entity and relationship classification based on an ontology-trained AI model [101]. The use of multiple hierarchical ontologies of increasing complexity makes this task considerably harder, and while the latter option is more viable, the rigidity of SPARQL querying remains a risk: in the case of misclassification, the query will yield no results even though matching data might exist [102].
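The conversion step discussed above, and its rigidity, can be illustrated with a minimal sketch that turns already-labelled triple patterns into a SPARQL query string. The helper `to_sparql` and the example patterns are hypothetical and only serve as illustration; the choice of `crm:P45_consists_of` stands in for whatever property an expert or a classifier would assign.

```python
CRM = "http://www.cidoc-crm.org/cidoc-crm/"

def to_sparql(patterns, select="?item"):
    """Build a SELECT query from (subject, predicate, object) patterns.
    Terms starting with '?' are variables, 'crm:' or 'rdfs:' terms are
    prefixed names, and anything else is emitted as a plain string literal."""
    def term(t):
        if t.startswith("?") or t.startswith(("crm:", "rdfs:")):
            return t
        return '"' + t.replace('"', '\\"') + '"'

    body = " .\n  ".join(" ".join(term(t) for t in p) for p in patterns)
    return (
        f"PREFIX crm: <{CRM}>\n"
        "PREFIX rdfs: <http://www.w3.org/2000/01/rdf-schema#>\n"
        f"SELECT DISTINCT {select} WHERE {{\n  {body} .\n}}"
    )

# Hypothetical labelled patterns for "objects made of bronze".
patterns = [
    ("?item", "crm:P45_consists_of", "?material"),
    ("?material", "rdfs:label", "bronze"),
]
query = to_sparql(patterns)
```

The resulting query matches only if the graph uses exactly this property and exactly this literal: a label stored as `"Bronze"@en`, or a material linked through a different property, would return nothing, which is the failure mode described above.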

To address this challenge, a hybrid query execution is adopted that bypasses strict SPARQL-based querying and instead leverages graph neural networks and knowledge embeddings, allowing the system to execute natural language queries in a semantically flexible and structurally tolerant way, specifically via Composition-based Graph Convolutional Networks (CompGCNs).

Following the NLU stage, a query graph is constructed in which nodes represent candidate entities, types, or conceptual elements (e.g., “cathedral”, “bronze”, “Charles II”), and edges represent inferred relations or semantic dependencies (e.g., “located_in”, “issued_by”, “made_of”). The constructed query graph represents the user’s intent directly, without prematurely enforcing alignment to any specific ontology schema, while capturing its semantic and relational structure.
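A query graph of this kind can be held in a very small data structure. The sketch below is one possible representation, not the one used in the experiments; the class name `QueryGraph` and the example query are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class QueryGraph:
    """Schema-free graph of the user's intent: nodes are candidate entities
    or concepts, edges are inferred relations between them."""
    nodes: set = field(default_factory=set)
    edges: list = field(default_factory=list)  # (source, relation, target)

    def add_triple(self, source, relation, target):
        self.nodes.update((source, target))
        self.edges.append((source, relation, target))

    def neighbours(self, node):
        """Return (relation, target) pairs for edges leaving `node`."""
        return [(r, t) for s, r, t in self.edges if s == node]

# Hypothetical query: "bronze statues located in the cathedral".
g = QueryGraph()
g.add_triple("statue", "made_of", "bronze")
g.add_triple("statue", "located_in", "cathedral")
```

Note that the relation names are kept as free text at this stage; no ontology class or property has been assigned yet.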

For Heritage Digital Twins, similarity extends beyond simple attribute matching and enters the realm of semantic, structural, and contextual equivalence. It is rare, if at all possible, for artefacts, historical and archaeological remains, or intangible heritage to be identical. Instead, they show overlap in historical context or provenance, form, function, or symbolism [103], all types of data that are hosted and structured in the knowledge graph according to the RHDTO.

For tangible heritage, this work investigates similarity computation at several levels, for example to identify:

- pieces that belong to the same object,

- different objects that were excavated at the same site but from different locations,

- different objects that were crafted in the same workshop,

- different objects made of material originating from the same place, as inferred from the data of scientific analysis reports,

- different objects made by the same artist.

This demonstrates the advantage of similarity computation in querying, since in existing works most of these results would not be retrieved by a basic querying system alone. Some of these objectives would normally require visual input [104], such as images or 3D models, but with suitable AI models it may be possible in most cases to identify such similarities from the metadata stored within the knowledge graph alone [105].

6. Case Study: Enriching Digital Twins with Heritage Science Data

As a testbed for exploring the integration and synergy between AI techniques and ontological modelling, a set of reports produced by heritage science analysis activities was selected from the documentation of the ARTEMIS initiative [106].

6.1. Application of AI-Driven Semantic Pipelines

A modular AI-based pipeline was designed to extract structured semantic knowledge from unstructured scientific texts belonging to the cultural heritage domain, with a focus on modern preservation and restoration techniques such as, but not limited to, X-Ray Fluorescence (XRF) and 3D modelling techniques.

The system is particularly tailored to support the enrichment and population of an ontology-driven knowledge graph designed to be an efficient base for the construction of Heritage Digital Twins, but its architecture is flexible enough to support other knowledge-driven applications.

The defined methodology combines several state-of-the-art open-source technologies, including Natural Language Processing, Large Language Models, semantic similarity search, and ontology-based reasoning.

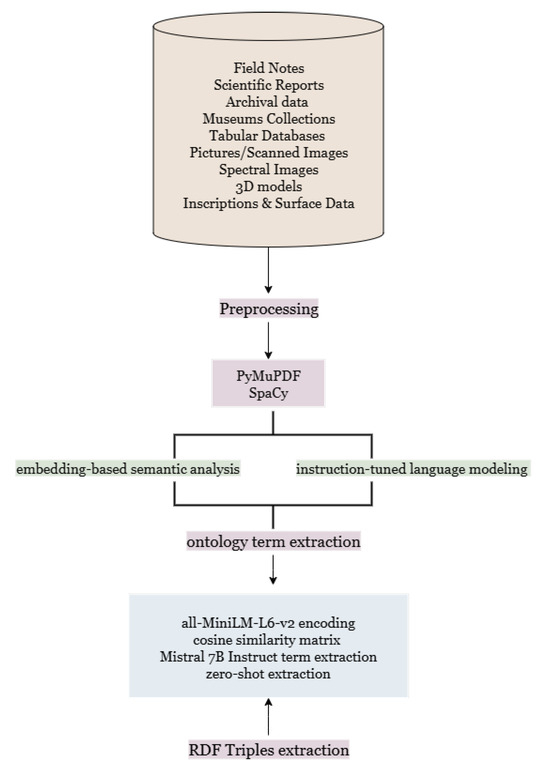

Textual data was primarily sourced from the ARTEMIS initiative documentation, consisting of complex PDF files with multi-column layouts, embedded images, tables, and special characters. The preprocessing and chunking pipeline consisted of the following steps:

- PDF parsing and image extraction: text and images were extracted with PyMuPDF [107] (PyMuPDF 1.25.5 on Python 3.12.7), a Python library able to handle even complex textual layouts. The images extracted from the PDFs were kept separately for further experiments.

- Text preprocessing: the spaCy [108] library was used to perform tokenisation, sentence segmentation, and syntactic parsing to reduce noise and generally improve the machine handling of textual data.

- Sentence-aware chunking: to enable efficient processing by Transformer-based LLMs, the texts were then segmented into smaller, semantically coherent units called “chunks”. Sentences were aggregated sequentially until the token limit (300 tokens in this experiment) was reached, so as to avoid splitting syntactically or semantically dependent clauses. This size was chosen to balance the model’s context window constraints with the need for meaningful textual context. This segmentation allows the model to focus on localised contexts, ensuring that the extracted knowledge is specific and grounded in the source material.

- Text normalisation: all text was lowercased, punctuation was removed, whitespace was standardised, and consistent character encoding was ensured for compatibility with embedding models.
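The sentence-aware chunking step listed above can be sketched as follows. This is a minimal illustration under two stated assumptions: sentences are split with a naive regular expression standing in for spaCy’s segmentation, and tokens are approximated by whitespace-separated words rather than by the tokeniser of the actual model.

```python
import re

def split_sentences(text):
    """Naive sentence splitter (a stand-in for spaCy's sentence segmentation)."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

def chunk_sentences(sentences, max_tokens=300):
    """Aggregate whole sentences into chunks of at most `max_tokens` tokens.
    Assumption: tokens are approximated by whitespace-separated words."""
    chunks, current, count = [], [], 0
    for sentence in sentences:
        n = len(sentence.split())
        # Close the current chunk if adding this sentence would exceed the limit.
        if current and count + n > max_tokens:
            chunks.append(" ".join(current))
            current, count = [], 0
        current.append(sentence)
        count += n
    if current:
        chunks.append(" ".join(current))
    return chunks

# Illustrative text; the small limit is only chosen to force a split.
text = (
    "XRF was applied to the bronze surface. "
    "The spectra show copper and tin. "
    "A 3D model was also acquired."
)
chunks = chunk_sentences(split_sentences(text), max_tokens=12)
```

Sentences are never cut in the middle, so a single sentence longer than the limit ends up as its own oversized chunk; a production pipeline would need a fallback for that case.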

Once the text chunks were generated and normalised, the pipeline employed a combination of embedding-based semantic analysis and instruction-tuned language modelling to perform ontology term extraction and knowledge graph population. Candidate ontology terms extracted from each chunk were first encoded into dense vector representations using the SentenceTransformer model all-MiniLM-L6-v2 [109]. A pairwise cosine similarity matrix was then computed across all term embeddings to identify semantically similar terms. Terms which exceeded a chosen similarity threshold of 0.85 were clustered together, effectively collapsing synonyms and variant spellings into unified conceptual entities. The pairwise cosine similarity matrix is given by:

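$$S_{ij} = \frac{\mathbf{e}_i \cdot \mathbf{e}_j}{\lVert \mathbf{e}_i \rVert \, \lVert \mathbf{e}_j \rVert}$$

where $\mathbf{e}_i$ and $\mathbf{e}_j$ are the embedding vectors of the $i$-th and $j$-th candidate terms.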

This semantic deduplication approach allowed for a more robust normalisation than conventional string-matching methods, ensuring terminological consistency across the corpus.
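The deduplication step can be sketched with plain Python. The 0.85 threshold comes from the text; everything else is an assumption made for illustration: the clustering rule (any pair above the threshold is merged, i.e., single-link grouping via union-find) is one possible reading of the procedure, and the 3-dimensional vectors are invented stand-ins for real sentence embeddings.

```python
import math

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def cluster_terms(terms, vectors, threshold=0.85):
    """Group terms whose pairwise cosine similarity exceeds `threshold`.
    Assumption: single-link grouping, implemented with union-find."""
    parent = list(range(len(terms)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path compression
            i = parent[i]
        return i

    for i in range(len(terms)):
        for j in range(i + 1, len(terms)):
            if cosine(vectors[i], vectors[j]) > threshold:
                parent[find(j)] = find(i)

    clusters = {}
    for i, term in enumerate(terms):
        clusters.setdefault(find(i), []).append(term)
    return list(clusters.values())

# Toy vectors standing in for sentence embeddings (illustrative values only).
terms = ["x-ray fluorescence", "xrf", "3d model"]
vectors = [[0.9, 0.1, 0.0], [0.85, 0.2, 0.05], [0.0, 0.1, 0.95]]
clusters = cluster_terms(terms, vectors)
```

With single-link grouping, two dissimilar terms can end up in the same cluster through a chain of intermediate matches, which is one reason a subsequent expert review remains useful.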

Term extraction was then performed using Mistral 7B Instruct [110], an instruction-tuned LLM selected for its strong performance and open-source transparency, which supports the reproducibility of the experiments.

The prompting strategy was explicitly designed to extract only the entities and concepts relevant to the cultural heritage domain.

Each chunk of text was provided to the model with instructions to identify modern scientific analysis techniques, materials, instruments, and experimental procedures while excluding any narrative or explanatory content.

Prompts were crafted to elicit concise, list-form outputs, containing only the terms of interest. By carefully structuring these prompts, the model could perform zero-shot extraction of domain-specific ontology terms without relying on supervised training data, ensuring that each output was both focused and reproducible.
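A prompt of this kind, together with the parsing of a list-form reply, might look like the sketch below. The wording of the template is hypothetical and is not the prompt used in the experiments; the model call itself is left out, and the sample reply is invented to exercise the parser.

```python
import re

# Hypothetical template; the actual prompt wording is not given in the text.
PROMPT_TEMPLATE = (
    "You are assisting with cultural heritage documentation.\n"
    "From the text below, list only the scientific analysis techniques, "
    "materials, instruments, and experimental procedures it mentions.\n"
    "Return one term per line, with no explanations.\n\n"
    "Text:\n{chunk}\n\nTerms:"
)

def build_prompt(chunk):
    """Fill the template with one text chunk."""
    return PROMPT_TEMPLATE.format(chunk=chunk.strip())

def parse_terms(raw_output):
    """Turn a list-form model reply into a clean, de-duplicated term list."""
    terms, seen = [], set()
    for line in raw_output.splitlines():
        # Strip bullet markers ("-", "*", "•") or numbering ("1.", "2)").
        term = re.sub(r"^\s*(?:[-*•]|\d+[.)])\s+", "", line).strip()
        key = term.lower()
        if term and key not in seen:
            seen.add(key)
            terms.append(term)
    return terms

prompt = build_prompt("XRF was applied to the bronze surface.")
# Invented reply, used only to show the parsing behaviour.
sample_reply = "- X-ray fluorescence\n- XRF spectrometer\n1. 3D modelling\n- x-ray fluorescence\n"
parsed = parse_terms(sample_reply)
```

Stripping list markers with an anchored pattern, rather than removing all leading digits, keeps terms such as "3D modelling" intact.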

To ensure the reliability of the extracted terms, a human-in-the-loop validation step was implemented, allowing domain experts to manually review and select relevant terms. This hybrid approach balanced automated efficiency with expert oversight, mitigating the risk of hallucinated or irrelevant outputs while maintaining the accuracy of the ontology term set.

A key innovation of this pipeline lies in the retrieval of semantic context for each validated term. Processes such as Term Extraction or Named Entity Recognition provide a list of relevant concepts, but the meaning of those concepts is often best understood in relation to the text from which they originate. This is especially true in our case, where the data must be mapped according to the rules of domain ontologies.

To capture this context, both the refined ontology terms and the original text chunks were embedded using the same SentenceTransformers model, and a FAISS similarity index was constructed over the chunk embeddings.

FAISS [111] is an open-source library for efficient similarity search and clustering of dense vectors, optimised for high-dimensional data such as embeddings from neural networks. Given a query vector, it returns the k nearest neighbours, typically with their indices and distances or similarity scores.
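To make the retrieval step concrete, the following sketch performs the same kind of k-nearest-neighbour lookup with an exhaustive scan in plain Python. It is a stand-in for the library, not its API: a FAISS index would replace the scan, and the toy vectors and function names are assumptions made for illustration.

```python
import math

def normalise(v):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm else list(v)

def top_k_chunks(query, chunk_vectors, k=2):
    """Return (index, score) pairs for the k chunks most similar to the query.

    With unit-length vectors the inner product equals cosine similarity,
    so this scan mirrors an exact inner-product search.
    """
    q = normalise(query)
    scored = [
        (idx, sum(a * b for a, b in zip(q, normalise(vec))))
        for idx, vec in enumerate(chunk_vectors)
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Toy embeddings of three text chunks and one validated term (assumption).
chunk_vectors = [[0.9, 0.1, 0.0], [0.1, 0.9, 0.1], [0.8, 0.3, 0.1]]
term_vector = [1.0, 0.2, 0.0]
neighbours = top_k_chunks(term_vector, chunk_vectors, k=2)
```

The returned indices identify the chunks that would be passed on as context for the term, which is how traceability to the source text is preserved.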

With the relevant contextual chunks retrieved, Mistral 7B Instruct was employed a second time to generate RDF triples.

The model was prompted to extract concise semantic triples aligned both structurally and semantically with existing cultural heritage ontologies. This resulted in a set of triples that formally represents relationships between terms while preserving traceability to the source text.

The final outputs of this AI-assisted pipeline include:

- a validated list of ontology-relevant terms, stored in JSON format,

- a collection of RDF triples, structured and domain ontology-aligned,

- a mapping between each term and its original context, ensuring interpretability and traceability.

Figure 1 illustrates the pipeline described above:

Figure 1.

Process diagram pt.1: automatic ontology term and RDF triples extraction from cultural heritage reports.

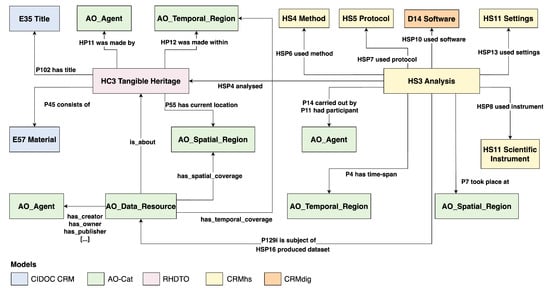

The underlying conceptual model chosen for this effort is CRMhs [52], an ontological component of the CIDOC CRM ecosystem developed to represent the documentation and operational structure of scientific analyses carried out on cultural heritage objects. As an extension of the CIDOC CRM, CRMhs integrates seamlessly with RHDTO and gives it the ability to formalise the domain of heritage science by introducing specific classes and properties that describe scientific activities (such as HS3 Analysis), instruments (e.g., HS11 Scientific Instrument), datasets, sampling procedures, and their relationships to both the physical object under investigation and the broader research context. It supports the representation of tangible and intangible entities involved in the scientific process, such as samples, areas of interest, methods employed, and results obtained. The model also accounts for the configuration of complex devices, their software components, and even the parameters used during analysis. Moreover, since the ARTEMIS ontological architecture is intentionally modular, reflecting the diversity and complexity of the domains it seeks to represent, the project also employs the AO-Cat ontology [112] developed within the ARIADNE initiative [113] to describe datasets and their specific characteristics, including provenance, access conditions, and content typologies. Our encoding experiments, which also used AO-Cat to encode these entities, showed that these models interoperate well, allowing the construction of semantic graphs that remain semantically coherent despite being internally heterogeneous.

A general overview of the ontological module designed by ARTEMIS to model scientific data is shown in Figure 2.

Figure 2.

Classes and properties used by ARTEMIS to model heritage science information.

By anchoring AI outputs to a domain-specific ontology, the system ensures that extracted data is both semantically coherent and interoperable. This ontological framework thus provides the structure for an AI-assisted information extraction workflow aimed at transforming heritage science reports into semantically enriched data. Using the categories defined by this semantic ecosystem as guidance, natural language processing tools are applied to identify and annotate references to analytical techniques, measurements, instruments, and related entities. The extracted knowledge is then converted into structured triples, for instance, in Turtle format, encoded using the grammar provided by the ontological model employed and ingested in the knowledge graph.
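The conversion from extracted triples to Turtle can be sketched as follows. The JSON layout (keys `subject`, `predicate`, `object`, `is_literal`) is a hypothetical intermediate format chosen for illustration, not the structure used by the authors, and prefix declarations are omitted for brevity.

```python
import json

def literal(text):
    """Quote a string as a Turtle literal, escaping backslashes and quotes."""
    return '"' + text.replace("\\", "\\\\").replace('"', '\\"') + '"'

def triples_to_turtle(triples):
    """Group triples by subject and emit one Turtle block per subject."""
    by_subject = {}
    for t in triples:
        obj = literal(t["object"]) if t.get("is_literal") else t["object"]
        by_subject.setdefault(t["subject"], []).append((t["predicate"], obj))
    blocks = []
    for subject, pairs in by_subject.items():
        body = " ;\n    ".join(f"{p} {o}" for p, o in pairs)
        blocks.append(f"{subject} {body} .")
    return "\n\n".join(blocks)

# Hypothetical JSON output of the triple-extraction step.
extracted = json.loads("""[
  {"subject": "artemis:Analysis_MA_XRF_LeoX", "predicate": "a",
   "object": "crmhs:HS3_Analysis"},
  {"subject": "artemis:Analysis_MA_XRF_LeoX", "predicate": "rdfs:label",
   "object": "Macro X-ray fluorescence analysis", "is_literal": true},
  {"subject": "artemis:Analysis_MA_XRF_LeoX",
   "predicate": "crmhs:HSP6_used_method", "object": "artemis:XRF_Method"}
]""")
turtle = triples_to_turtle(extracted)
```

A production pipeline would more likely rely on an RDF library for serialisation and validation; the manual string handling here only shows the shape of the transformation.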

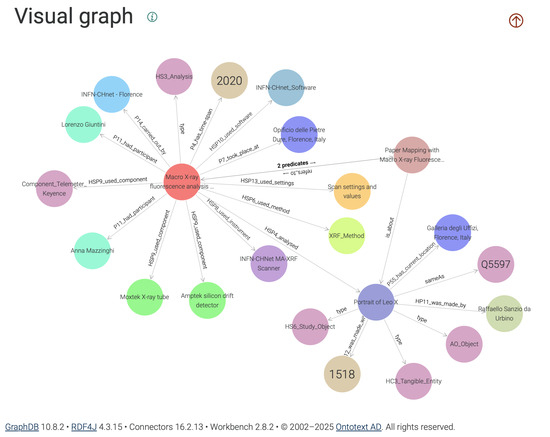

The following examples show some results of the pipeline, tested on [114], which concerns the use of Macro X-ray Fluorescence Scanning on Raffaello’s Portrait of Leo X. Starting from the original PDF, the JSON file containing the triples was successfully and automatically extracted, encoded in Turtle format, and ingested into the ARTEMIS knowledge base to visualise the graph. A fragment of the Turtle encoding (below) shows the ontological representation of Raffaello’s painting as a cultural object by means of the HC3 class of the RHDT Ontology.

    artemis:LeoX_Portrait_Study_Object a rhdto:HC3_Tangible_Entity ;
        rdfs:label "Portrait of Leo X" ;
        owl:sameAs <http://www.wikidata.org/entity/Q5597> ;
        crm:P102_has_title "Portrait of Leo X with Cardinals" ;
        rhdto:HP11_was_made_by artemis:Artist_Raffaello ;
        crm:P45_consists_of "Oil on wood" ;
        rhdto:HP12_was_made_within artemis:LeoX_Portrait_Period ;
        crm:P55_has_current_location artemis:LeoX_Portrait_Place .

The following Turtle fragment, instead, shows the encoding of the analysis event conducted by scholars of the Italian Institute of Nuclear Physics (INFN) on the same cultural object, the way in which CRMhs properties are used to specify the instrumentation and methodology employed, and the location and dates on which the analyses were performed.

    artemis:Analysis_MA_XRF_LeoX a crmhs:HS3_Analysis ;
        rdfs:label "Macro X-ray fluorescence analysis" ;
        crm:P2_has_type "MA-XRF" ;
        crmhs:HSP1_has_activity_title "MA-XRF analysis on Leo X Portrait" ;
        crm:P3_has_note "Macro X-ray fluorescence analysis" ;
        crmhs:HSP4_analysed artemis:LeoX_Portrait_Study_Object ;
        crmhs:HSP6_used_method artemis:XRF_Method ;
        crmhs:HSP8_used_instrument artemis:Device_INFN_CHNET_Scanner ;
        crmhs:HSP9_used_component artemis:Component_XRay_Tube_Moxtek ,
            artemis:Component_SDD_Amptek ,
            artemis:Component_Telemeter_Keyence ;
        crmhs:HSP10_used_software artemis:INFN-CHnet_Software ;
        crmhs:HSP13_used_settings artemis:Settings_001 ;
        crmhs:HSP16_produced_dataset artemis:XRF_LeoX_Resulting_Paper ;
        crm:P14_carried_out_by artemis:Group_INFN ;
        crm:P11_had_participant artemis:Person_Lorenzo_Giuntini ;
        crm:P4_has_time-span artemis:Analysis_Time_Frame ;
        crm:P7_took_place_at artemis:Analysis_Place .

A complete encoding of these entities, in Turtle format, can be found in the Supplementary File accompanying this paper.

These outputs can be ingested into Heritage Digital Twin Knowledge Graphs, enabling advanced features such as semantic querying, dynamic visualisation, and automated knowledge integration from heterogeneous scientific sources, as well as the subsequent use of the graph as input to AI models for advanced data analysis and interaction.

A visual representation of a section of the Digital Twin Knowledge Graph enriched with semantic context through our AI-assisted process is shown in Figure 3 below.

Figure 3.

Graph visualisation of a scientific analysis of a cultural heritage asset directly obtained from the PDF through our AI-assisted pipeline.

6.2. Populating and Querying the ARTEMIS Knowledge Graph

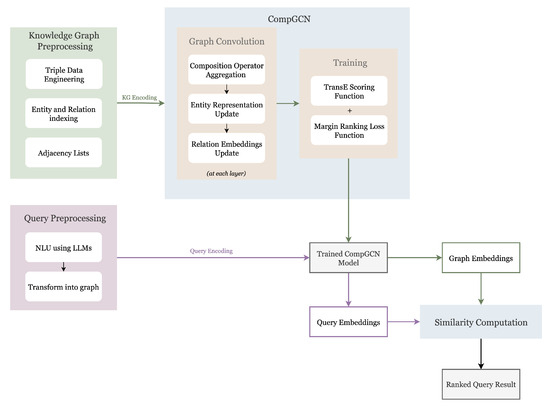

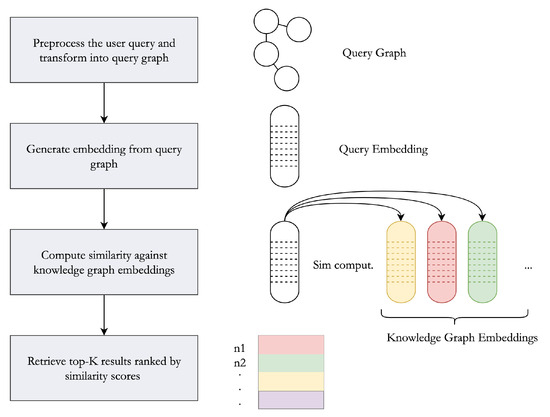

The following subsections describe the second phase of the experiment pertaining to the querying of the knowledge graph, for which the steps are illustrated in the process diagram in Figure 4.

Figure 4.

Process diagram pt.2: querying the KG in natural language using CompGCN embeddings.

Once the HDT knowledge graph has been built and the cultural heritage data is semantically structured, the next step is to implement the querying system that provides access to it. This process involves a few steps, beginning with an additional preprocessing stage. This time, we are not preprocessing textual documents but preparing the stored data for artificial intelligence model training, by indexing each entity and relation with a unique ID and engineering the graph data as described next. The ontology-based graph structure resulting from the preceding work maintains the information in a parametrised way, linking all attributes through property edges, including the node types (e.g., by means of the P2 has type property of CIDOC CRM). This is good practice for data archival, consistency, and reusability; however, it poses particular challenges for an AI model, because the nodes themselves do not encapsulate enough meaningful information to be distinguished from one another. To address this, the knowledge graph is restructured so that each node in the graph corresponds to an instance of an RHDTO class. The type of each entity is therefore embedded in its node as a static feature, along with any human-readable label, title, or description it is linked to; simple embeddings of all of these were created using SentenceBERT. An example of the resulting node in JSON format is the following:

    {
      "id": "obj_0001",
      "label": "Mona Lisa",
      "type": "E22_Man-Made_Object",
      "features": {
        "label_embedding": [0.23, 0.78, ...],
        "type_embedding": [0.12, -0.09, ...]
      }
    }

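The restructuring described above can be sketched as a pass over the triples that folds type and label statements into per-node records and keeps only entity-to-entity statements as edges. The sets of predicates treated as type or label carriers, the identifiers, and the function name are assumptions for illustration, and the computation of the embeddings is left out.

```python
# Predicates whose objects become node attributes rather than edges (assumption).
TYPE_PREDICATES = {"rdf:type", "crm:P2_has_type"}
LABEL_PREDICATES = {"rdfs:label", "crm:P102_has_title"}

def build_nodes(triples):
    """Fold type and label statements into per-node records.

    Returns (nodes, edges): nodes maps an entity ID to its static attributes,
    edges keeps only the triples that connect two entities.
    """
    nodes, edges = {}, []
    for s, p, o in triples:
        node = nodes.setdefault(s, {"id": s, "label": None, "type": None})
        if p in TYPE_PREDICATES:
            node["type"] = o
        elif p in LABEL_PREDICATES:
            node["label"] = o
        else:
            # The object is itself an entity: make sure it has a node record.
            nodes.setdefault(o, {"id": o, "label": None, "type": None})
            edges.append((s, p, o))
    return nodes, edges

triples = [
    ("obj_0001", "rdf:type", "E22_Man-Made_Object"),
    ("obj_0001", "rdfs:label", "Mona Lisa"),
    ("obj_0001", "crm:P55_has_current_location", "place_0001"),
]
nodes, edges = build_nodes(triples)
```

After this pass, the label and type strings stored on each node are the inputs from which the `label_embedding` and `type_embedding` features would be computed.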
The next step of the process is to feed the new knowledge graph to an AI model and begin training. However, grounding the knowledge graph in the rich RHDTO makes its nuanced relational structure too complex for standard neural networks to capture. The selected model, CompGCN, explicitly models nodes and relations jointly. For cultural heritage data, where each connection has a semantic meaning that depends on the nature of the relationship, it is critical to be able to distinguish, for example, whether a person created an object, participated in an event, or curated an exhibition [93]. CompGCN treats each of these relations as its own learnable element and learns their differences during training. It also accounts for the directionality of relationships: in the knowledge graph, many relationships are inherently directional and semantically loaded, as they read asymmetrically (e.g., “was influenced by” and “influenced”). The network learns this by identifying the roles of the relations and the flow of information between nodes [93].

Implementation-wise, the graph is transformed into triple format or adjacency lists, and each relation is modelled explicitly with its inverse counterpart. CompGCN then uses a message-passing mechanism that jointly embeds nodes and relations to spread information throughout the graph during training. The model uses composition operators on the embedding vectors to integrate relation semantics into the neighbourhood aggregation, which allows the network to model the logic and semantics of the paths traversed in addition to their proximity. Node embeddings are updated at each graph convolution layer according to the unique semantic composition of the connecting relation and the characteristics of their neighbours [93].
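The indexing and inverse-relation step lends itself to a short sketch. It assumes integer IDs assigned in order of first appearance and an `_inv` suffix for inverse relations; both conventions, like the function name, are illustrative rather than taken from the paper.

```python
def index_graph(triples):
    """Assign integer IDs to entities and relations and add inverse edges."""
    entity_ids, relation_ids = {}, {}
    indexed = []
    for s, r, o in triples:
        s_id = entity_ids.setdefault(s, len(entity_ids))
        o_id = entity_ids.setdefault(o, len(entity_ids))
        r_id = relation_ids.setdefault(r, len(relation_ids))
        inv_id = relation_ids.setdefault(r + "_inv", len(relation_ids))
        indexed.append((s_id, r_id, o_id))    # original direction
        indexed.append((o_id, inv_id, s_id))  # explicit inverse edge
    return entity_ids, relation_ids, indexed

triples = [
    ("vase", "was_produced_by", "potter"),
    ("vase", "participated_in", "firing_event"),
]
entity_ids, relation_ids, indexed = index_graph(triples)
```

Modelling the inverse as a separate relation lets information flow in both directions while still allowing the model to learn that the two readings differ.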

6.3. Message Passing in CompGCN for Cultural Heritage Knowledge Graphs

In CompGCN, the message passing mechanism extends traditional GCNs by explicitly incorporating relation semantics [93,94]. For each triple $(u, r, v)$, the neighbour embedding $h_u$ is composed with the relation embedding $h_r$ using a composition operator $\phi$, e.g.,

$$\phi(h_u, h_r) = h_u \star h_r$$

where $\star$ can be addition, multiplication, or circular correlation. Each entity representation is then updated by aggregating over its adjacency list:

$$h_v^{(l+1)} = \sigma\left( \sum_{(u, r) \in \mathcal{N}(v)} W_r^{(l)} \, \phi\left(h_u^{(l)}, h_r^{(l)}\right) \right)$$

where $W_r^{(l)}$ are relation-specific weight matrices and $\sigma$ is a non-linear activation. Unlike earlier relational GCNs, CompGCN also updates relation embeddings:

$$h_r^{(l+1)} = W_{\mathrm{rel}}^{(l)} \, h_r^{(l)}$$

This joint update allows both entities and relations to evolve during training, which is particularly relevant in cultural heritage graphs where relations (was produced by, participated in, located at) carry rich semantics. For example, the embedding of an entity such as a Vase is updated not only from its neighbouring Potter (was produced by) but also from its participation in a Firing Event [115]. After three layers, entity and relation embeddings are evaluated with a TransE scoring function [116]:

$$f(u, r, v) = -\left\lVert h_u + h_r - h_v \right\rVert$$

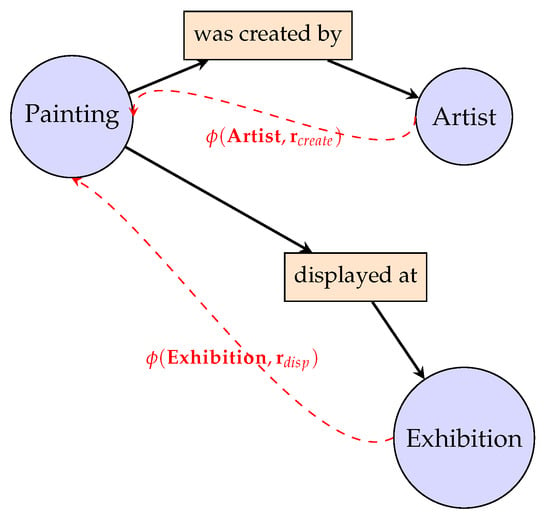

and optimised using a margin ranking loss to ensure valid triples score higher than corrupted ones. This allows embeddings to capture both contextual and relational meaning, enabling similarity-based querying of heritage data. The following schema in Figure 5 illustrates this aggregation.
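A minimal sketch of the scoring and loss may help here. It assumes the L2 norm in the TransE score and a margin of 1.0, neither of which is stated in the text, and the two-dimensional embeddings are invented for illustration.

```python
import math

def transe_score(h_u, h_r, h_v):
    """Negative L2 distance between (h_u + h_r) and h_v; higher is more plausible."""
    return -math.sqrt(sum((a + b - c) ** 2 for a, b, c in zip(h_u, h_r, h_v)))

def margin_ranking_loss(pos_score, neg_score, margin=1.0):
    """Zero once the valid triple outscores the corrupted one by the margin."""
    return max(0.0, margin - pos_score + neg_score)

# Invented embeddings: (vase, was_produced_by, potter) is the valid triple,
# and replacing the tail with "museum" gives a corrupted triple.
vase, produced_by, potter = [0.2, 0.1], [0.3, 0.3], [0.5, 0.4]
museum = [-0.9, 0.8]

pos = transe_score(vase, produced_by, potter)
neg = transe_score(vase, produced_by, museum)
loss = margin_ranking_loss(pos, neg)
```

Here the valid triple already outscores the corrupted one by more than the margin, so the loss is zero and this pair would contribute no gradient during training.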

Figure 5.

Schematic of CompGCN message passing in cultural heritage knowledge graphs. Example: Painting aggregates messages from Artist and Exhibition. Dashed red arrows indicate composed messages being aggregated into the entity embeddings.
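The aggregation shown in the figure can be sketched as one simplified layer in plain Python. The sketch follows the description in this subsection but makes several assumptions: addition or multiplication as the composition operator, `tanh` as the activation, identity weight matrices in the example, no self-loop term, and hypothetical relation names (`created`, `displayed`).

```python
import math

def compose(h_u, h_r, op="add"):
    """Composition of a neighbour embedding with a relation embedding."""
    if op == "add":
        return [a + b for a, b in zip(h_u, h_r)]
    if op == "mult":
        return [a * b for a, b in zip(h_u, h_r)]
    raise ValueError(f"unknown composition operator: {op}")

def matvec(W, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def compgcn_layer(entity_emb, relation_emb, incoming, W, W_rel, op="add"):
    """One simplified update of entity and relation embeddings.

    incoming maps a target entity v to the (u, r) pairs that reach it.
    """
    new_entities = {}
    for v, h_v in entity_emb.items():
        total = [0.0] * len(h_v)
        for u, r in incoming.get(v, []):
            message = matvec(W[r], compose(entity_emb[u], relation_emb[r], op))
            total = [t + m for t, m in zip(total, message)]
        new_entities[v] = [math.tanh(t) for t in total]
    new_relations = {r: matvec(W_rel, h_r) for r, h_r in relation_emb.items()}
    return new_entities, new_relations

identity = [[1.0, 0.0], [0.0, 1.0]]
entity_emb = {"painting": [0.0, 0.0], "artist": [0.5, 0.1], "exhibition": [0.2, 0.4]}
relation_emb = {"created": [0.1, 0.1], "displayed": [0.0, -0.2]}
incoming = {"painting": [("artist", "created"), ("exhibition", "displayed")]}
W = {r: identity for r in relation_emb}

new_entities, new_relations = compgcn_layer(
    entity_emb, relation_emb, incoming, W, identity
)
```

In this toy graph the Painting embedding becomes the activated sum of the two composed messages, while entities without incoming edges collapse to zero because self-loops are left out of the sketch.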

6.4. Similarity Computation Between the Query and KG Embeddings

Our system uses vector embeddings to compute similarity between cultural entities, taking into consideration temporal alignment, relational proximity, and typological affinities. These computations combine two measures over the embedded graph structures: cosine similarity, which is the cosine of the angle between two vector representations and is commonly used to compare dense, high-dimensional vectors, and contrastive distance learning, which improves the clustering of semantically similar subgraphs and disperses unrelated ones [117,118].
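The two measures can be illustrated with a small sketch. It assumes the standard pairwise contrastive loss (squared distance for similar pairs, squared hinge on the margin for dissimilar pairs); the exact formulation used in [117,118] and the way the system combines the two measures are not specified here, so this is only one plausible reading. The embeddings and entity names are invented.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def contrastive_loss(u, v, same, margin=1.0):
    """Pull similar pairs together; push dissimilar pairs at least `margin` apart."""
    d = math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))
    return d ** 2 if same else max(0.0, margin - d) ** 2

def rank_entities(query, entities):
    """Order entity names by decreasing cosine similarity to the query."""
    return sorted(
        entities,
        key=lambda name: cosine_similarity(query, entities[name]),
        reverse=True,
    )

# Invented embeddings for a query and two graph entities.
query = [0.9, 0.1]
entities = {"Exhibition": [0.1, 0.9], "Painting": [0.8, 0.2]}
ranking = rank_entities(query, entities)
```

At query time only the cosine ranking is needed; the contrastive term would act during training, shaping the embedding space so that such rankings become meaningful.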