Abstract

Retrieval-Augmented Generation (RAG) systems are increasingly adopted in government services, yet different administrations have varying customization needs and lack standardized methods to evaluate performance. In particular, general-purpose evaluation approaches fail to show how well a system meets domain-specific expectations. This paper presents CuBE (Customizable Bounds Evaluation), a tailored evaluation framework for RAG systems in public administration. CuBE integrates large language model (LLM) scoring, customizable evaluation dimensions, and a bounded scoring paradigm with baseline and upper-bound reference sets, enhancing fairness, consistency, and interpretability. We further introduce Lightweight Targeted Assessment (LTA) to support efficient customization. CuBE is validated on GSIA (Guizhou Provincial Government Service Center Intelligent Assistant) by using four state-of-the-art language models. The results show that CuBE produces robust, stable, and model-agnostic evaluations while reducing reliance on manual annotation and facilitating system optimization and rapid iteration. Moreover, CuBE informs parameter settings, enabling developers to design RAG systems that better meet customizer needs. This study establishes a replicable paradigm for trustworthy and efficient evaluation of RAG systems in complex government service scenarios.

1. Introduction

With the explosive popularity of the DeepSeek series of large models [1] on the Chinese internet in early 2025, AI-enabled government [2] has quickly become a focal point in the construction of “digital government” [3] at all levels of government in China. In response to this trend, the Guizhou Provincial Government Service Center proposed deeply integrating large model technology into its existing government service website. Our project team took on this integration task and developed GSIA (Guizhou Provincial Government Service Center Intelligent Assistant), which provides users with a new AI-powered government service experience.

Government data are not only complex but also subject to strict regulations. Government services involve a large number of cross-departmental and cross-level policy documents and processes, placing significant pressure on human customer service representatives. Previous studies have shown that AI-driven intelligent platforms and task automation mechanisms can significantly optimize government service processes, improve cross-departmental collaboration efficiency, reduce waiting times, and effectively enhance user satisfaction and service fairness [4]. Recently, scholars have conducted research on the challenges of knowledge accuracy and timeliness in large models within government service scenarios, proposing the development of specialized models tailored to the government sector to improve effectiveness [5]. Furthermore, another study [6] suggests that a modular RAG architecture can enable the automated processing of a vast number of government guidelines, significantly enhancing government online response efficiency and public satisfaction.

Compared with the commercial sector, government-oriented question answering imposes much stricter standards for information accuracy and legal basis, as any misleading information may lead to legal and social risks, as well as public dissatisfaction. In fact, the introduction of generative artificial intelligence into the field of public administration has brought about new risks and governance challenges that differ from those of traditional AI. Cantens (2025) points out that the limited interpretability of generative AI constrains its applicability in public administration [7]. He further emphasizes that generative AI transforms the traditional mode of “intellectual production” within government, imposes new requirements on civil servants’ critical thinking and human–AI collaboration abilities, and raises strategic considerations regarding the neutrality of AI systems and the selection of training corpora.

Reports from Datategy and StateTech further indicate that the traceable information sources and generated outputs of Retrieval-Augmented Generation (RAG) contribute to greater transparency and credibility in government services [8]. Therefore, this project leverages RAG and large language model technologies to develop GSIA, with the goal of improving the online response speed of the Guizhou Provincial Government Service Center and enhancing citizens’ experience and satisfaction with government services.

However, during system development we faced a key challenge: the Government Service Center required customized evaluation metrics, and the development team needed to determine rapidly and objectively whether the system met standards and was suitable for deployment. Since the project is still in the development phase, real user data are not available, and traditional manual simulation, in which questions are posed one by one, is both time-consuming and inadequate for covering the full range of scenarios. Moreover, existing automated evaluation methods based on large language models still suffer from significant shortcomings in result stability, interpretability, and alignment with human evaluation standards, making it difficult to satisfy the stringent requirements for transparency and customizability in government service evaluation [9]. In view of this, we propose and define Lightweight Targeted Assessment (LTA), a new evaluation paradigm designed for the rapid scoring of RAG systems. The core feature of LTA is that it outputs “intuitive scores,” enabling decision makers to quickly obtain deployment guidance without needing to examine the underlying technical details.

Building on the concept of LTA, we further designed and implemented the CuBE (Customizable Bounds Evaluation) method, characterized by the following features:

- Locally deployable: CuBE can be fully implemented using local large language models without reliance on external APIs.

- Large-scale automated evaluation: Scoring is performed automatically by the language model, significantly improving evaluation efficiency.

- Customizable scoring dimensions: Government departments can flexibly configure the weighting of dimensions such as “accuracy,” “completeness,” “compliance,” and “language style” according to specific requirements.

- Weighted normalization algorithm: Final scores are mapped to a fixed interval, producing intuitive and comparable system ratings.

- Weighted scoring: CuBE’s weighted scoring approach keeps score discrepancies between different models as small as possible.

The main contributions of this paper are as follows:

- Proposing the LTA evaluation paradigm, which focuses on outputting the “final score” to meet the intuitive needs of non-technical decision makers.

- Designing the CuBE scoring method, which enables large-scale, localized, automated, and customizable evaluation of RAG-based question-answering systems.

- Conducting systematic testing of the “GSIA Consultation Assistant” using real data from the Guizhou Provincial Government Service Center to validate the practicality and reliability of CuBE in government scenarios.

- Providing a replicable and practical paradigm for RAG system evaluation in the public sector, thus promoting the trustworthy application of intelligent government services.

The remainder of this paper is organized as follows: Section 2 reviews related work on the evaluation of RAG systems. Section 3 introduces the system architecture, knowledge base construction, evaluation challenges, and the LTA paradigm for the GSIA Consultation Assistant. Section 4 details the design and implementation of the CuBE method and its experimental validation. Finally, Section 5 discusses the advantages, limitations, and future prospects of this approach.

2. Related Work

2.1. From Modular Metrics to LLM-Based Holistic Evaluation

Traditional evaluation methods for Retrieval-Augmented Generation (RAG) systems primarily adopt a modular approach, separately assessing the retrieval and generation modules using distinct metrics. Retrieval performance is typically measured by classic information retrieval metrics such as Recall@k and MRR to evaluate the relevance of the retrieved results [10]. The generation component mainly relies on reference-based metrics—including BLEU [11], ROUGE [12], and BERTScore [13]—to assess surface-level similarity to reference answers. However, such modular, reference-based evaluation approaches struggle to capture the end-to-end factual accuracy and knowledge integration capabilities of RAG systems, making them inadequate for the assessment requirements of complex real-world tasks.

In recent years, with the advancement of large language model (LLM) capabilities, automated end-to-end evaluation methods based on LLMs have rapidly developed, becoming an important means to address the limitations of traditional metrics. GPTScore [14] fully leverages the emergent abilities of large models, utilizing natural language instructions to conduct flexible, multi-dimensional quality assessments. Ragas [15] further targets RAG systems, providing multi-dimensional, reference-free automatic evaluation of retrieval relevance, context utilization, and generation accuracy, which significantly improves efficiency and generalizability. LLM-Eval [16] proposes multi-dimensional quality assessment through a single model invocation, effectively reducing the costs associated with human annotation and multiple rounds of prompting. Works such as EvaluLLM [17] and Liusie et al. [18] introduce pairwise comparison and customizable criteria, enhancing the consistency between automated evaluation and human intuition, and further explore and mitigate biases such as positional effects. ChatEval [19] improves evaluation consistency and interpretability through a collaborative multi-LLM mechanism, advancing automated evaluation towards a human team-like decision-making paradigm. The “Language-Model-as-an-Examiner” paradigm by Bai et al. [20], as well as authoritative benchmarks such as MT-Bench and Chatbot Arena proposed by Zheng et al. [21], have driven the standardization and systematization of LLM Judge evaluation methods. Gu et al. [22] provide a comprehensive review of the types, challenges, and future directions of LLM Judge methods.

Moreover, while current LLM-driven automated evaluation frameworks have improved efficiency and coverage, they have also revealed new systemic challenges, including social and technical biases [23,24], confidence calibration issues [25], and insufficient coverage and discrimination of evaluation datasets in diverse scenarios [26]. The related literature has proposed multi-level bias detection and mitigation methods and has emphasized that enhancing interpretability and ensuring ethical compliance will become unavoidable research priorities for future automated evaluation systems.

2.2. Diagnosis-Oriented Evaluation Frameworks in Practice

To address the evaluation needs of RAG and large model systems in practical deployment, various diagnosis-oriented evaluation frameworks have emerged in recent years. Fine-grained diagnostic tools, such as RAGChecker [27], are capable of identifying errors across multiple stages, including retrieval failures, generation biases, and factual misalignments. These tools are well suited to the development and tuning phases, but they often rely heavily on manual annotation and involve complex, multi-dimensional processes.

Overall, with the advancement of LLMs and their automated evaluation tools, the efficiency, coverage, and adaptability of RAG and general generative system evaluation have been significantly enhanced. However, achieving fair, robust, and interpretable evaluation remains a key direction for ongoing optimization and research.

3. GSIA Consultation Assistant

The GSIA (Guizhou Provincial Government Service Center Intelligent Assistant) system is built upon the government service network platform of the Government Service Center. It is seamlessly integrated into the existing web infrastructure and is accessible through a dedicated entry point on the platform. The system aims to provide users with a wide range of government services through an intelligent and conversational interaction experience, achieving a deep integration of government services and advanced artificial intelligence technologies.

To realize this goal, we have designed an intelligent government service module comprising five core functions: “Ask, Guide, Process, Search, and Evaluate.” Among them, the “Ask,” “Guide,” and “Process” modules are all powered by large language model (LLM) technologies, serving as the intelligent core of the system. Notably, the “Ask” module, namely, the GSIA Consultation Assistant, is the primary focus of this research study.

The GSIA Consultation Assistant, built upon the government service network, is dedicated to providing users with an intelligent and conversational policy consultation experience. Through natural language dialogue, the system helps users understand relevant policies, clarify administrative procedures, and identify key points, thereby improving service guidance and accessibility. Previous studies have shown that online intelligent support systems can enhance public empowerment, as well as trust in and satisfaction with government agencies, by improving decision transparency [28]. To achieve this, GSIA leverages its self-developed “Magic Tree Factory” AI service platform, combining the natural language processing capabilities of large language models with the extensive knowledge base of the government service network. This enables efficient analysis of and intelligent response to policy content. Upon receiving a user inquiry, the system automatically retrieves highly relevant guideline information and generates accurate and clear responses, improving both the efficiency with which users obtain government services and their satisfaction.

The primary purpose of this system design is to validate the practical effectiveness of the intelligent consultation assistant in government service scenarios. Through subsequent evaluation using the CuBE method, we further assess its effectiveness in enhancing user experience and problem-solving capabilities, with the goal of delivering tangible benefits to users.

3.1. System Architecture and Component Selection

The core architecture of the GSIA Consultation Assistant adopts a Retrieval-Augmented Generation (RAG) approach, integrating locally deployed Chinese embedding models and large language models to create a controllable, secure, and highly responsive intelligent government question-answering system. In recent years, the RAG framework has been recognized as an effective solution for overcoming knowledge limitations in large models and for enhancing the authority and accuracy of the generated results. By seamlessly combining external knowledge base retrieval with generative models, RAG has demonstrated outstanding performance in practical applications [29]. This system is custom-developed on the Magic Tree Factory platform, with the overall architecture being optimized and extended from the open-source RAGFlow framework to meet the requirements of government scenarios—such as data security, on-premise deployment, and Chinese language understanding—providing a stable and reliable intelligent QA experience for government users.

The system architecture mainly consists of the following modules:

- Corpus Management and Preprocessing Module: It supports the ingestion of multi-format documents (PDF, Word, HTML, etc.) and performs automatic text extraction and cleaning. The system employs a character-based chunking strategy, with a default chunk size of 500 characters and an overlap of 50 characters. However, in the actual research process, we adopted the single-document chunking method, that is, treating an entire policy document as an independent chunk. The main reason for this design choice is that government documents often exhibit high similarity, both in titles and in the expressions of the main text, where highly overlapping content may appear. If the documents were divided into smaller segments, the retrieval system might rely only on partial fragments when answering questions, which could lead to a biased understanding of the policy content. In contrast, treating the entire policy document as the minimum retrieval unit ensures that information retrieval and QA generation are always based on the complete policy context, thus avoiding semantic errors caused by fragmented references and improving the consistency and accuracy of retrieval and responses.

- Vectorization and Encoding Module: It utilizes the bge-large-zh v1.5 Chinese embedding model to vectorize the chunked texts. This model exhibits strong Chinese semantic modeling capabilities, is well-suited to government text scenarios, and effectively captures the semantic similarity between policy terminology and query formulations.

- Vector Database and Retrieval Module: In this study, we used Elasticsearch (v8.11.3) as the vector database and retrieval engine. Text fragments were first vectorized using the bge-large-zh v1.5 Chinese embedding model and then stored in Elasticsearch. With the vector retrieval capability of Elasticsearch, the system can perform semantic similarity search, combined with keyword-based retrieval to improve both accuracy and recall. Meanwhile, leveraging its plugin mechanism, we supported multi-strategy hybrid retrieval, which not only ensures high recall and relevance of the Top-K retrieval results but also returns the text fragments that best match the user queries.

- Large Language Model Generation Module: The Qwen2.5-32B model is deployed locally for inference tasks on domestic 910B GPUs, ensuring efficient responses while maintaining privacy and security. This model demonstrates robust capabilities in Chinese comprehension and generation, enabling it to produce complete and semantically coherent answers based on user queries and retrieved content.

- Frontend Interaction Module: The user interface is designed to closely resemble mainstream conversational products such as ChatGPT, enhancing usability and perceived intelligence while lowering the operational threshold for users.

The system achieves a seamless workflow from the automatic vectorization of the policy text knowledge base to the semantic retrieval and answer generation for user queries. Based on this architecture, the GSIA Consultation Assistant effectively supports users in the personalized understanding and rapid search of government service guidelines, providing not only structured knowledge support but also a more natural, conversational interaction experience.
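The hybrid retrieval described for the Vector Database and Retrieval Module can be illustrated with a minimal sketch of an Elasticsearch 8.x search body that combines approximate kNN search over dense vectors with a keyword match. This is not the query actually issued by GSIA or RAGFlow: the field names `embedding` and `content`, the function name, and the `num_candidates` heuristic are assumptions made for illustration only.

```python
from typing import Any


def build_hybrid_query(question: str, query_vector: list[float], top_k: int = 8) -> dict[str, Any]:
    """Build a search body that combines kNN and keyword retrieval.

    The field names "embedding" and "content" are hypothetical and must be
    adapted to the actual index mapping.
    """
    return {
        "size": top_k,
        # Approximate nearest-neighbour search over the stored embeddings.
        "knn": {
            "field": "embedding",
            "query_vector": query_vector,
            "k": top_k,
            "num_candidates": 10 * top_k,  # assumed heuristic, not from the paper
        },
        # Keyword match on the raw text of the service guide.
        "query": {"match": {"content": question}},
    }
```

The returned dictionary would be passed as the body of a search request; the query vector is assumed to come from the same embedding model that was used to index the documents.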

3.2. GSIA Consultation Assistant Dataset

The knowledge dataset used by the GSIA consultation module is primarily sourced from the service guides released by government service centers at all administrative levels across Guizhou Province. Originally intended for public users, these data provide comprehensive references before service handling, including policy basis, requirements, process materials, and more. The dataset is highly structured, semantically clear, and broadly comprehensive. To meet the knowledge base construction requirements of the RAG system, we performed preprocessing and structural reorganization on these data, forming the main knowledge source for the system’s question-answering capability.

The original data are stored in tabular form, with each record corresponding to a specific government service item. The field structure is unified, and the main content fields include the following:

Administrative Region, Publication Address, Item Name, Handling Agency, Department Name, Consultation Method, Requirements, Legal Basis, Statutory Processing Time, Committed Processing Time, Service Object, Processing Type, Online Handling Availability, Processing Time, Processing Location, Fee Standard, Processing Procedure, Application Materials.

Among these, fields such as Requirements, Processing Procedure, and Application Materials are the most frequently referenced by users in their inquiries. These fields are typically described in natural language paragraphs, making them suitable for large language model processing and semantic retrieval. Compared with traditional question–answer pairs, this dataset more closely resembles a structured document knowledge base and is thus more suitable for constructing the semantic foundation of a RAG system.

To date, the system has integrated approximately two million records of service items, covering major cities and service departments across the province. The dataset is large in scale and granular in information, ensuring that the system has sufficient knowledge support when addressing diverse user queries. Through vectorized segmentation strategies and local vector database management, this dataset can efficiently support users in understanding policies, consulting on service matters, and receiving procedural guidance in natural language contexts.

3.3. Knowledge Base Construction Scheme

The knowledge base used in this system is sourced from service guides released by government service centers at all administrative levels throughout the province. The original data are stored in tabular form, with each record containing a set of fields. Since the Magic Tree Factory knowledge base does not support uploading or parsing JSON files, we adopted the following approach: data are stored in TXT format, with each service item written as key–value pairs into a text block and saved as an independent txt file. In our practical tests, this scheme performed as well as or better than Markdown and the original tabular format in terms of retrieval and generation quality, and it is also convenient for data processing.

When constructing the knowledge base, we divided different regions into separate files, with government service centers in different areas managing their respective affairs as distinct knowledge bases. These knowledge bases are loaded onto the consultation assistants for each region. Once the government service platform detects or the user selects their region, the corresponding consultation assistant with the relevant knowledge base is activated. This reduces the burden on the RAG system and improves response quality.

This study uses province-level service items in Guizhou Province as an example. This scope is exclusive to the Guizhou Provincial Government Service Center and represents the provincial level. Other scopes include municipal- and county-level divisions, mainly determined by the administrative level of the service center. For province-level service guides in Guizhou, there are a total of 6111 entries. We processed these data as follows: each item was stored as an individual txt file, resulting in 6111 files, with each file corresponding to a single service item.
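The conversion of tabular records into one key–value text file per service item can be sketched as follows. The exact separator and file naming used in the project are not stated, so the `key: value` line format and the `item_00000.txt` naming scheme below are assumptions, as are the function names.

```python
from pathlib import Path


def record_to_text(record: dict[str, str]) -> str:
    """Render one service item as 'key: value' lines (the separator is an assumption)."""
    return "\n".join(f"{key}: {value}" for key, value in record.items())


def write_knowledge_base(records: list[dict[str, str]], out_dir: Path) -> list[Path]:
    """Write each record to its own .txt file and return the created paths."""
    out_dir.mkdir(parents=True, exist_ok=True)
    paths = []
    for index, record in enumerate(records):
        # Hypothetical naming scheme: a zero-padded running index.
        path = out_dir / f"item_{index:05d}.txt"
        path.write_text(record_to_text(record), encoding="utf-8")
        paths.append(path)
    return paths
```

Applied to the 6111 province-level records, a routine of this kind would yield 6111 files, one per service item.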

When selecting the chunking strategy, we decided after evaluation to treat each file as a single chunk (setting the parsing mode to one chunk per file). The reason is that service guide files contain large amounts of repetitive content. With conventional chunking, i.e., splitting the text in the knowledge base by fixed ranges or at arbitrary positions, chunks lose the file header or footer that identifies the service item, so it becomes difficult for the LLM to recognize which service item each chunk pertains to, leading to potential confusion of local information.

For example, in real cases such as “Approval for Pumped Storage Power Station Projects”, “Approval for Pumped Storage Power Station Projects (New Construction)”, and “Approval for Pumped Storage Power Station Projects (Modification)”, the file names are very similar, and there is considerable overlap in content among the corresponding service guides. If these files were split into smaller chunks, the retrieval model might struggle to distinguish the specific item being handled. Preserving each record as an integral unit maintains the semantic boundaries between items as much as possible and prevents the generation module from producing ambiguous results due to similar names. This design not only meets the system’s need for precise entity distinction but also ensures retrieval efficiency in large-scale (million-level) knowledge base scenarios, providing a solid foundation for efficient and accurate responses in RAG-based government service QA applications.
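The difference between the two chunking strategies can be made concrete with a short sketch. The default values of 500 characters and 50 characters of overlap are taken from the system description; the function names are illustrative and do not correspond to the platform's own API.

```python
def chunk_by_characters(text: str, size: int = 500, overlap: int = 50) -> list[str]:
    """Split text into character windows of `size` that overlap by `overlap` characters."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    step = size - overlap
    chunks = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + size])
        if start + size >= len(text):
            break  # the current window already reaches the end of the text
        start += step
    return chunks


def chunk_whole_document(text: str) -> list[str]:
    """One-chunk-per-file strategy: the entire service guide is one retrieval unit."""
    return [text] if text else []
```

With character-based chunking, only the first window of a guide contains the item name at the top of the file, whereas the whole-document strategy always returns the item name together with its requirements, procedure, and materials.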

3.4. Evaluation Challenges and Research Motivation

After completing the initial development of the GSIA Consultation Assistant, we conducted manual tests to verify its response effectiveness on several typical service items. The results indicated that the system performed satisfactorily within a small sample. However, the assistant’s knowledge base comprises approximately two million records, covering a wide range of complex content. Solely relying on manual testing makes it difficult to comprehensively assess overall system performance, especially in terms of stability and accuracy across large-scale data coverage scenarios.

Furthermore, after practical trials, the Government Service Center raised several critical requirements and concerns regarding the system, including the following:

- Is the information on required materials provided by the system authentic and reliable, and does it remain consistent with the actual service guides?

- Can the system accurately understand user queries and provide clear, actionable responses?

- Is the system capable of recognizing and avoiding semantic confusion, thereby preventing erroneous answers caused by similar service items?

In the absence of systematic evaluation methods, it is difficult to provide convincing answers based solely on subjective observation or case-by-case analysis. Therefore, it is urgent to design a quantitative, repeatable, and comprehensive testing approach to thoroughly evaluate the GSIA Consultation Assistant’s performance across the entire knowledge base. In response to this, we propose and implement an automated scoring mechanism for RAG systems that can rapidly provide comprehensive feedback on overall system performance, as well as customized metrics, including but not limited to accuracy and consistency.

This mechanism not only serves the quality verification needs of the current project but also offers a general methodological reference for the performance evaluation of future question-answering systems.

4. CuBE Evaluation Procedure

In this paper, we propose CuBE as a scoring methodology for LTA. CuBE features a flexible scoring mechanism. In this section, we first present the problem statement. Subsequently, we provide a detailed explanation of our approach, including the construction of the question set, the QA set, and the scoring set, as well as the scoring scheme.

4.1. Setting of Evaluation Criteria

Since the motivation for this method originates from the challenges encountered during the development of the GSIA Consultation Assistant, we directly apply this approach to the evaluation task of the GSIA Consultation Assistant. According to practical requirements, project leadership requested an assessment of the assistant in four dimensions, i.e., answer relevance, answer specificity, answer completeness, and answer quality, as well as a recommendation regarding usability. Therefore, these four aspects were selected as the customizable evaluation dimensions in this study. Specifically, they are defined as follows:

- Answer relevance: Is the response from the GSIA Consultation Assistant relevant to the actual materials?

- Answer specificity: Does the assistant provide a targeted response to the query?

- Answer completeness: Does the assistant provide all necessary information from the materials?

- Answer quality: Is the response from the assistant clear and easy to understand?

Our task is to provide supporting evidence to demonstrate that the GSIA Consultation Assistant meets usability standards in these four aspects and is ready for deployment.

4.2. Question–Answer Set Collection

The question–answer set is essential to evaluating RAG systems. To assess the GSIA Consultation Assistant, we first needed to obtain its QA set. However, there are several limitations in collecting such a set:

- The GSIA Consultation Assistant has not yet been deployed, making it impossible to acquire real user conversation data.

- Team members are not experts in government affairs and are uncertain about how to formulate appropriate questions.

Recent studies have shown that large language models (LLMs) can generate diverse and high-quality texts, with their outputs increasingly approaching those produced by humans. Inspired by this, we decided to utilize the LLM engine of the GSIA Consultation Assistant, Qwen2.5-32B, to generate the QA set. Specifically, we selected all 6111 province-level service items from the service assistant, encompassing a wide range of government affairs in Guizhou Province, to serve as our source material. The QA set construction proceeded in two steps.

Question set collection: The LLM was prompted to simulate the role of a service user and to generate one question for each service item, thereby forming the question set. Each entry consists of a question field, which stores the user question generated by the LLM based on the actual service guide, and a document field, which stores the complete content of the referenced service guide in the same key–value form as in the knowledge base.

QA set collection: Each question from the question set was then input into the GSIA Consultation Assistant to obtain both its answer and the list of retrieved documents. To capture these outputs, an answer field was added to store the assistant’s response, and a document list field was added to record the supporting document references. By merging these outputs with the original question set, we constructed a QA set consisting of four fields: question, document as the standard reference answer, answer, and document list as the evidence for the answer.
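The two-step construction can be sketched as below. The four field names follow the description above; the `QARecord` class and the two callables `generate_question` and `ask_assistant`, which stand in for the LLM call and the call to the GSIA Consultation Assistant, are hypothetical placeholders rather than actual interfaces of the system.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class QARecord:
    question: str   # user question generated by the LLM
    document: str   # full service guide used as the standard reference answer
    answer: str = ""  # response returned by the assistant
    document_list: list[str] = field(default_factory=list)  # retrieved evidence


def build_question_set(
    guides: list[str], generate_question: Callable[[str], str]
) -> list[QARecord]:
    """Step 1: generate one question per service guide."""
    return [QARecord(question=generate_question(g), document=g) for g in guides]


def build_qa_set(
    question_set: list[QARecord],
    ask_assistant: Callable[[str], tuple[str, list[str]]],
) -> list[QARecord]:
    """Step 2: query the assistant and merge its outputs with the question set."""
    qa_set = []
    for item in question_set:
        answer, retrieved = ask_assistant(item.question)
        qa_set.append(QARecord(item.question, item.document, answer, list(retrieved)))
    return qa_set
```

Passing the model and the assistant in as callables keeps the sketch independent of any particular deployment and allows both steps to be tested with stubs.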

4.3. CuBE Scoring Set

The construction of the CuBE scoring set is key to enabling rapid and targeted evaluation. Previous studies have shown that GPT-series models can directly provide evaluations for a given question and express them using a ten-point scale. Inspired by this, we adopted a similar approach in the design of CuBE. The specific process is as follows.

First, we randomly select an entry from the QA set and use its content to design prompts for the large language model to assign scores. For example, for the answer relevance dimension, after multiple rounds of testing, the following prompt was found to yield satisfactory results:

You are a rigorous evaluation assistant responsible for assessing whether the response content of a RAG system is closely related to the retrieved source material. Please output an integer score from 0 to 10 to indicate the degree of relevance, based on the following five criteria, each worth two points:

- Does the answer accurately quote or paraphrase information from the source?

- Are the core points of the answer consistent with the source?

- Is the reasoning in the answer supported by the source?

- Is the key information from the source covered in the answer?

- Are there any distortions, misunderstandings, or unsupported extensions in the answer with respect to the source?

The higher the score, the more closely the response aligns with the source material. Only output the final score; do not include any additional content.

Using this prompt, we obtain a score directly from the large language model. We then add a relevance field to the QA set to store this score. The same process is applied to obtain scores for the other three evaluation dimensions.
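Because the prompt asks the model to output only an integer from 0 to 10, the raw reply still has to be validated before it is stored. The following is a minimal sketch of that step; the `judge` callable, the layout of the message sent to it, and the use of the document field as the source material are assumptions, not the implementation used in the experiments.

```python
import re
from typing import Callable, Optional


def parse_score(raw_output: str, low: int = 0, high: int = 10) -> Optional[int]:
    """Return the first integer in the reply, or None if it is missing or out of range."""
    match = re.search(r"-?\d+", raw_output)
    if match is None:
        return None
    score = int(match.group())
    return score if low <= score <= high else None


def score_dimension(
    qa_record: dict, dimension: str, prompt: str, judge: Callable[[str], str]
) -> dict:
    """Ask the judge model for one dimension and store the score under that field."""
    # Assumed message layout: scoring prompt, then source material, then the answer.
    message = (
        f"{prompt}\n\nSource material:\n{qa_record['document']}"
        f"\n\nAnswer:\n{qa_record['answer']}"
    )
    scored = dict(qa_record)  # leave the input record unchanged
    scored[dimension] = parse_score(judge(message))
    return scored
```

A return value of `None` marks a reply that could not be interpreted as a valid score, so such entries can be re-queried or excluded instead of silently distorting the averages.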

For each dimension, we first designed an initial prompt template and then refined it manually over the course of the experiments based on the generated results. In the end, four prompts were finalized for scoring. The complete prompt content, including the original Chinese text and its English translation, is provided as Supplementary Materials for reference and reproducibility.

Additionally, as indicated by existing research, it is important to consider the influence of retrieval on RAG performance. Therefore, based on the previously defined document and document list fields, we generate a new field called rank, whose value represents the position of the referenced document in the document list: if present, it is recorded as its corresponding rank; if the document is not retrieved, the value is set to 0.
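The rank field defined above can be derived with a few lines of code. This sketch assumes that the reference document and the entries of the document list can be compared by exact equality (for example, by a shared identifier); the function name `compute_rank` is hypothetical.

```python
def compute_rank(document: str, document_list: list[str]) -> int:
    """Return the 1-based position of `document` in `document_list`, or 0 if absent."""
    for position, retrieved in enumerate(document_list, start=1):
        if retrieved == document:
            return position
    return 0
```

If the same document appears more than once in the list, the first (best) position is reported.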

Through these steps, we construct the CuBE scoring set as the material for subsequent evaluation.

4.4. CuBE

Computing the final CuBE score requires not only the QA set of the target RAG system but also two additional QA sets that serve as scoring references. We refer to these as the zero-score QA set and the full-score QA set.

Zero-score QA set: As the name suggests, this QA set represents the worst-case responses of a RAG system. Under the same prompt, the large language model is required to answer all questions from the question set without being provided with any relevant source materials, relying solely on its base capabilities. This QA set effectively serves as a baseline for our scoring methodology. To construct it, we use our prompts and the question set to batch-generate responses from the model, resulting in the zero-score QA set.

Full-score QA set: The full-score QA set represents the upper bound of RAG system performance. As established during the creation of the CuBE QA set, each question is generated based on a specific service guide. Therefore, for the full-score QA set, we replace the eight chunk fields originally used in retrieval with the single, correct service guide that serves as the gold-standard answer. This simulates a scenario where perfect information retrieval has occurred. The model then answers each question using this prompt and the correct guide, yielding the full-score QA set.

Scoring: Both the zero-score and full-score QA sets are scored using the same CuBE evaluation process as the GSIA QA set, resulting in corresponding scoring sets. These two reference sets are essential components of CuBE and are key to implementing LTA.
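The three QA sets differ only in the source material handed to the generator, which the following sketch makes explicit. The function names, the mode labels, and the `{question}`/`{context}` template placeholders are hypothetical; the actual prompt templates are those given in the Supplementary Materials.

```python
def build_context(mode: str, retrieved_chunks: list[str], gold_guide: str) -> list[str]:
    """Select the source material passed to the generator for each QA set.

    "zero"   -> no source material (lower bound)
    "full"   -> only the correct service guide (upper bound)
    "target" -> the chunks actually retrieved by the RAG system
    """
    if mode == "zero":
        return []
    if mode == "full":
        return [gold_guide]
    if mode == "target":
        return list(retrieved_chunks)
    raise ValueError(f"unknown mode: {mode!r}")


def render_prompt(template: str, question: str, context: list[str]) -> str:
    """Fill the same template in all three cases; only the context differs."""
    return template.format(question=question, context="\n\n".join(context))
```

Keeping the template fixed and varying only the context is what allows the zero-score and full-score sets to act as bounds for the same prompt.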

Scoring scheme: After completing these three steps, we obtained three CuBE scoring sets. The zero-score and full-score sets are introduced because large language models differ inherently in their generative behaviors: some models tend to be more stringent in scoring, while others are relatively lenient. If such differences are ignored, it becomes difficult to establish a unified evaluation benchmark across multiple models. To address this, we designed upper- and lower-bound scoring, taking the best and worst answers as boundaries, so that the responses of the target system can be positioned within the “cognitive spectrum” of each model. The two reference scoring sets thus provide a relatively objective and comparable scale for subsequent evaluations.

Since prompt design is highly dependent on experience and iterative tuning, it is difficult to claim that any prompt will consistently elicit perfect or zero-score responses. In this study, we therefore use identical prompts for all three sets, treating responses generated from perfectly correct retrieval as the upper bound (full score) and responses generated without any supporting information as the lower bound (zero score). This approach allows us to locate the target RAG system within the defined spectrum and to calculate its CuBE score accordingly. The above procedure constitutes the complete CuBE evaluation framework. In Section 5, we demonstrate the application of CuBE for LTA evaluation using the GSIA Consultation Assistant as a case study.

5. Results and Discussion

In this section, we report and analyze the LTA evaluation of the GSIA Consultation Assistant using CuBE. We employed four models to generate scoring sets, with particular focus on the following issues: (1) analysis of the CuBE scoring sets from different models for the GSIA Consultation Assistant; (2) the relationship between model scores for each field and retrieval results; (3) comparative analysis of the scoring results for the zero-score set, full-score set, and GSIA Consultation Assistant across different models; (4) calculation of the final CuBE score for the GSIA Consultation Assistant.

5.1. Model Selection

According to existing studies, different large language models (LLMs) exhibit distinct generative behaviors, and we are concerned that model scoring may contain “subjectivity” specific to each model. In fact, addressing this concern is one of the motivations behind the development of the CuBE scoring methodology, where the Bounds design serves as a measure to counteract model subjectivity. To observe how CuBE adjusts for model-specific biases, we employed four different models to conduct separate scoring in this study. Our choices are as follows.

Qwen2.5-32B: This is a small-parameter model from the Qwen2.5 series and serves as the generative model deployed in the GSIA Consultation Assistant. It is deployed locally on domestic 910B hardware. Using it as the evaluation model is essentially a form of self-assessment, which makes its evaluation results particularly interesting to observe.

DeepSeek-V3: The DeepSeek series has driven the AI boom in China to new heights and is known for its large parameter count, reaching up to 685B. It is also deployed locally on 910B domestic hardware. However, due to its high operational cost and computational resource requirements, it has not been adopted as the primary generative model in the GSIA Consultation Assistant.

GPT-4o: As one of the most popular models worldwide, GPT-4o is a closed-source model developed by OpenAI and is widely regarded in the research community as one of the best models for evaluation tasks. Its top-tier performance makes its scoring particularly valuable for reference.

GPT-4.1 nano: The GPT-4.1 series has been claimed to surpass GPT-4o in all respects. Our choice of the nano variant is based on its unique evaluation behavior observed during testing: it is especially stringent with low scores, frequently assigning scores of 3 or 4 to items that GPT-4.1 itself might rate as 7 or 8. This distinctive scoring style makes it a valuable addition to our evaluation “jury”.

5.2. CuBE Scoring Sets for the GSIA Consultation Assistant

The motivation for developing CuBE arose from a series of issues identified when we first obtained the scoring set for the GSIA Consultation Assistant. Initially, we attempted to use the mean value as the final evaluation metric. However, as shown in Table 1, the standard deviation for some metrics exceeds 2, which is large on a 0–10 scale and indicates substantial ambiguity. In addition, the mean and standard deviation differ considerably across models. This is expected, because we intentionally selected models with noticeable gaps for comparison: GPT-4o, as a globally leading large model, performs better in terms of completeness and comprehensiveness; GPT-4.1 nano represents small-parameter models that emphasize lightweight design and efficiency; Qwen2.5-32B, as a domestic model, demonstrates strong relevance and adaptability in Chinese and multilingual tasks; and DeepSeek-V3, another high-performance Chinese-oriented model, has an even larger parameter scale. The differences among these models in size, corpora, and training objectives lead to significant variations in evaluation scores, which supports our view that different LLMs inherently exhibit diverse generative behaviors and that raw scores are sensitive to the characteristics of the scoring model.

Table 1.

Mean ± standard deviation of CuBE scoring sets for the GSIA Consultation Assistant.

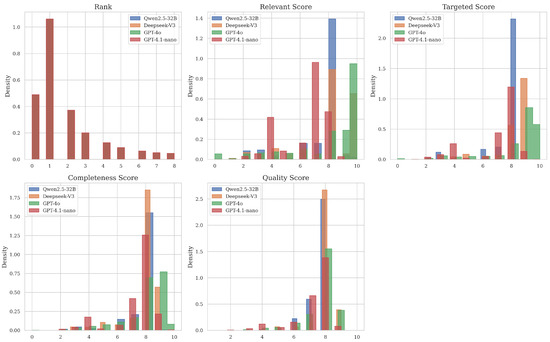

As illustrated in Figure 1, different LLMs indeed have different scoring preferences. For example, the blue bars representing Qwen are characterized by a high frequency of scores of 8 in every field, which is distinctive of its scoring style. In contrast, the red bars for GPT-4.1 nano show a noticeable predominance in the lower score range, particularly scores of 4, for relevance and specificity. The green bars for GPT-4o display a tendency toward high scores (9–10). These patterns demonstrate that each of the four models has unique scoring biases, making it difficult to directly identify a consistent trend. Therefore, further analysis of the scoring sets is necessary.

Figure 1.

Histograms of scores by different models.

5.3. Analysis of Field Scores and Retrieval Scores

As systematically evaluated by Chen et al. (2024), the accuracy and reliability of RAG system outputs significantly decrease when the quality of retrieved information drops—such as when noise increases or retrieval fails [30]. Therefore, exploring the correlation between the rank and each evaluation field has become a primary task. We decided to use the Pearson correlation coefficient to investigate the relationships between rank and the other fields. This is a necessary and customizable step in evaluating RAG systems by using the CuBE method. It is also possible to analyze correlations with custom values instead of rank if desired. This step mainly serves as a foundation for determining the weight coefficients in the subsequent analysis.

To facilitate the calculation of correlation coefficients, we first transformed the rank values. In the original field, a value of 0 indicates that the document was not retrieved, and values of 1–8 indicate the retrieved document’s ranking position. Directly using these values is inconvenient for analysis because a larger number means a worse position. For nonzero cases, we therefore applied the transformation 10 − rank, which yields scores from 2 to 9, so that higher scores indicate higher rankings. Additionally, if only the correct document was retrieved, we manually added an extra point to achieve a full score of 10. However, this scenario did not occur in our QA set, so there are no full scores.
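The transformation can be written as a small function. In this sketch, keeping 0 for non-retrieved cases and restricting the bonus point to rank 1 are assumptions read from the description above; the function name and the `only_correct_retrieved` flag are hypothetical.

```python
def rank_to_score(rank: int, only_correct_retrieved: bool = False) -> int:
    """Map a raw rank (0 = not retrieved, 1-8 = position) to a higher-is-better score."""
    if rank == 0:
        return 0  # assumption: non-retrieved cases stay at 0
    if not 1 <= rank <= 8:
        raise ValueError(f"rank out of range: {rank}")
    score = 10 - rank
    # Bonus point when the correct document is the only one retrieved.
    if rank == 1 and only_correct_retrieved:
        score += 1
    return score
```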

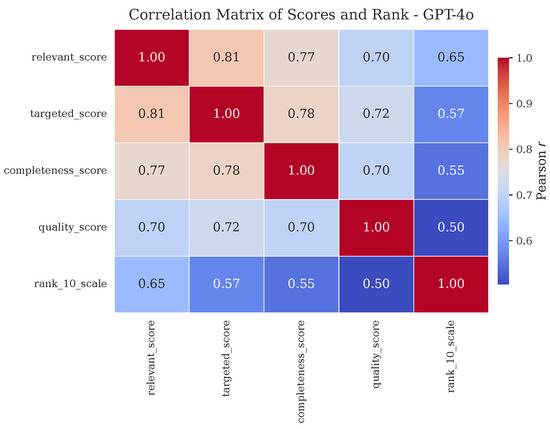

After transforming the rank field, we examined the correlation between rank and the other evaluation fields. As shown in Figure 2, under GPT-4o scoring, the correlation with rank decreases in the order of relevance, specificity, completeness, and answer quality, and is moderate overall. Why is it only moderate and not strong? We consider two possibilities: (1) The language model assigns relatively uniform scores regardless of retrieval results, possibly indicating that the model’s scoring does not align with our expectations. (2) Once the correct document appears in the document list, the GSIA Consultation Assistant’s performance stabilizes and becomes independent of the rank, so the moderate correlation arises only because we did not distinguish between retrieved and non-retrieved cases. Therefore, we must re-examine this correlation by analyzing retrieved and non-retrieved instances separately. If the first scenario holds, the heatmap for retrieved cases should closely resemble the current one; if the second holds, there should be significant differences.
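The comparison between all cases and retrieved-only cases can be sketched in plain Python. The field name `rank_score` (the transformed rank) and the helper `rank_correlations` are hypothetical; the Pearson coefficient itself follows its standard definition.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long numeric sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / sqrt(var_x * var_y)


def rank_correlations(rows, field_name):
    """Return (r over all rows, r over retrieved rows only) for one evaluation field."""
    r_all = pearson([r["rank_score"] for r in rows], [r[field_name] for r in rows])
    hits = [r for r in rows if r["rank_score"] > 0]
    r_hits = pearson([r["rank_score"] for r in hits], [r[field_name] for r in hits])
    return r_all, r_hits
```

A large gap between the two coefficients points to the second scenario, in which the correlation is driven mainly by whether retrieval succeeded at all.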

Figure 2.

Correlation matrix of scores and rank—GPT-4o.

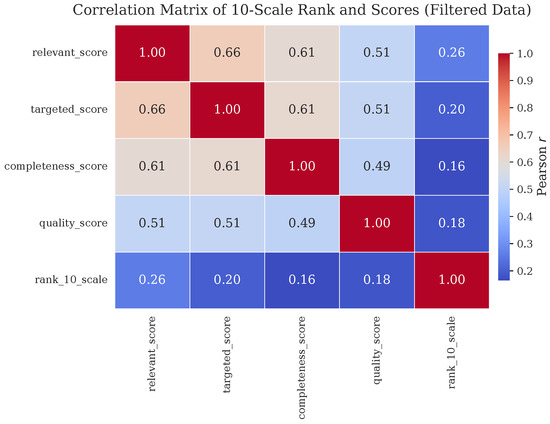

Upon further analysis of the retrieved cases, the correlation between each evaluation field and rank drops significantly into the weak correlation range, as shown in Figure 3. This validates the second scenario: when the correct document appears in the document list (i.e., successful retrieval), with TOP-N set to 8, the impact of the rank on each evaluation field is minimal. This phenomenon indicates that once the correct reference material appears in the candidate set, large models are generally capable of identifying and utilizing it to generate answers. Therefore, the marginal impact of retrieval ranking on the quality of the final answer is limited, whereas whether the correct material can be retrieved is more critical. Based on this observation, we hypothesize that expanding the TOP-N threshold to some extent may help improve retrieval recall without significantly compromising the quality of the generated answers. This hypothesis merits further empirical validation in future work.

Figure 3.

Correlation matrix of 10-scale rank and scores (filtered data).

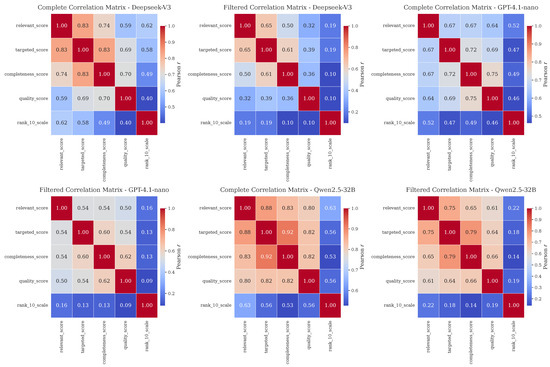

This relationship is not unique to GPT-4o. The other three models exhibit similar trends, as illustrated in Figure 4. Regardless of model size, all consistently show that the correlation between answer scores and rank drops sharply after successful document retrieval. This further confirms our previous observations and demonstrates that although the scoring styles of the four LLMs differ, they can all distinguish the effect of successful document retrieval on QA performance.

Figure 4.

Heatmaps for DeepSeek-V3, GPT-4.1 nano, and Qwen2.5-32B.

Furthermore, we provide a table of means and standard deviations for cases with successful document retrieval. For example, in the DeepSeek-V3 evaluation set, the standard deviation for the relevance indicator drops noticeably, indicating more uniform scoring for retrieved documents. This implies that the mean score may be a suitable metric for evaluating certain fields.

5.4. Comparison of Upper- and Lower-Bound Scoring Sets

At this point, we are very close to obtaining the final CuBE score. Recall the two additional scoring sets we created: the zero-score set and the full-score set. These sets serve as references for the scoring set of the GSIA Consultation Assistant, thereby helping us determine its final performance. As discussed in Section 5.3, the mean is a suitable summary of the overall performance for a particular field in a given QA set. To achieve greater robustness, we use the trimmed mean as the representative measure for each field and calculate it across all three evaluation sets, as shown in Table 2. The scores for each field in the GSIA Consultation Assistant fall between those of the zero-score and full-score sets, with no anomalies such as scores outside the bounds. In theory, we could now simply report these scores as the RAG system’s performance in each aspect. However, as our method follows the LTA paradigm, a series of raw scores does not sufficiently reflect overall effectiveness. Instead, we also take into account the correlation between each field and the rank, which serves as the weight when the normalized scores are aggregated. The scoring sets generated by different models are associated with different weights, as shown in Table 3.
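A trimmed mean discards a fixed share of the lowest and highest values before averaging. The sketch below assumes a symmetric cut of 10% per tail purely for illustration; the proportion used in the experiments is not stated here, and the function name is hypothetical.

```python
def trimmed_mean(values, proportion: float = 0.1) -> float:
    """Mean of `values` after dropping `proportion` of the items from each tail."""
    if not 0 <= proportion < 0.5:
        raise ValueError("proportion must be in [0, 0.5)")
    ordered = sorted(values)
    if not ordered:
        raise ValueError("values must not be empty")
    cut = int(len(ordered) * proportion)  # number of items removed per tail
    kept = ordered[cut:len(ordered) - cut]
    return sum(kept) / len(kept)
```

With ten scores and a 10% cut, one score is removed at each end, so a single outlier of 0 or 10 no longer shifts the field average.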

Table 2.

Trimmed mean scores and weighted total scores for each model on three datasets.

Table 3.

Overview of field weights for each model.

Based on Table 2, we can calculate the normalized scores for each metric; the results are presented in Table 4. Before weight aggregation, the models’ scores for completeness and answer quality differ considerably. If only normalization were used without weighting, these differences could influence the final evaluation. The weighting step in CuBE balances out these deviations: after weight aggregation, the resulting scores exhibit standard deviations within an acceptable range. This weighted aggregate score represents the collective assessment by the LLMs of the GSIA Consultation Assistant’s performance on successfully retrieved cases, with an average score of 78.95. Given a retrieval success rate of 80.41%, the final CuBE score is 63.48.
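One plausible reading of the normalization and weighting steps is sketched below. It assumes a linear rescaling between the zero-score and full-score trimmed means, expressed on a 0–100 scale, and a weighted average whose weights are divided by their sum; both the exact form and the function names are assumptions, and the numbers in the usage note are illustrative rather than taken from Tables 2 to 4.

```python
def normalize(target: float, zero: float, full: float) -> float:
    """Place `target` on a 0-100 scale bounded by the zero-score and full-score values."""
    if full == zero:
        raise ValueError("upper and lower bounds must differ")
    return 100.0 * (target - zero) / (full - zero)


def weighted_score(normalized: dict, weights: dict) -> float:
    """Weighted average of normalized field scores; weights need not sum to 1."""
    total = sum(weights[name] for name in normalized)
    if total == 0:
        raise ValueError("weights must not sum to zero")
    return sum(normalized[name] * weights[name] for name in normalized) / total
```

For example, a field with trimmed means of 3.0 (zero-score set), 7.0 (target system), and 9.0 (full-score set) normalizes to about 66.7, regardless of whether the scoring model is generally strict or lenient.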

Table 4.

Comprehensive CuBE score table (out of 100).

5.5. CuBE Score Calculation

With all preparatory steps completed, the final calculation of the CuBE score becomes straightforward. We employ the following formula:

Based on these scores, we can present the LTA results as follows: The GSIA Consultation Assistant achieved a CuBE score of 63.48 across the metrics of relevance, specificity, completeness, and answer quality, indicating a satisfactory level of performance. The retrieval rate reached 80.41%, and the response effectiveness for successfully retrieved cases was 78.95, which can be regarded as a good outcome. Optimization suggestions include adjusting retrieval parameters by increasing the TOP-N value and refining prompt design, with the aim of further enhancing completeness and answer quality.
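The reported figures are consistent with a simple product of the retrieval success rate and the weighted aggregate score on successfully retrieved cases. As a worked check, assuming that form and using the symbols $R$ (retrieval success rate) and $\bar{S}$ (weighted aggregate score), which are introduced here only for illustration:

$$
\text{CuBE} = R \times \bar{S} = 0.8041 \times 78.95 \approx 63.48
$$

Under this reading, questions for which the correct document was not retrieved contribute nothing to the final score, which is why improving retrieval recall raises the CuBE score directly.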

Through this process, we successfully performed LTA for the GSIA Consultation Assistant using the CuBE methodology.

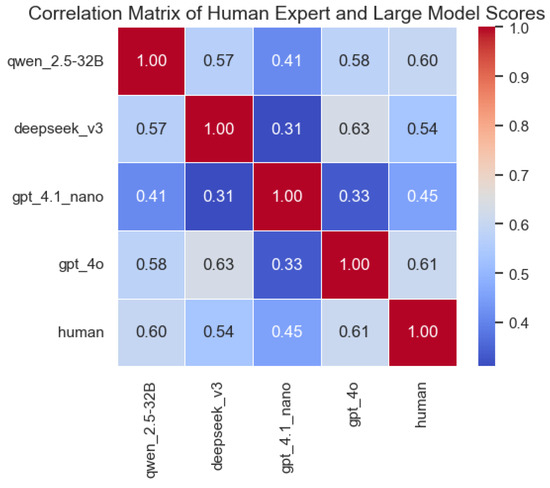

5.6. Analysis of Expert Scoring and Large Model Scoring

As shown in Figure 5, we extracted a small sample of data from the QA set for expert manual scoring verification and conducted a consistency analysis with each model.

Figure 5.

Correlation matrix between model scores and expert evaluation scores (filtered data).

Table 5 presents a comparison between the scores derived from the human scoring set and those obtained by CuBE.

Table 5.

Comparison of CuBE and human ratings.

The above results demonstrate that the evaluation level of LLMs is close to that of humans and that LLMs can partially substitute for human scoring. This view is also supported in the literature, indicating that our use of LLMs as evaluators is reasonable. To further verify the reliability of the automatic scoring method, we referred to recent related studies. For example, LLM-as-a-judge was compared with expert human scoring on the SciEx benchmark [31], and the Pearson correlation coefficient at the exam level reached 0.948. This finding suggests that with properly designed prompts and controls, LLM automatic scoring can achieve a high degree of consistency with human expert scoring.

5.7. Comparison Between CuBE and Traditional Methods

We selected one scoring case and used the completeness dimension to compare BleuScore, RougeScore, the large models, and human scoring. Because completeness concerns how well the answer corresponds to the source material, it is particularly suitable for the BleuScore and RougeScore methods.

From Table 6, we can observe that both BLEU and ROUGE gave low scores, while the scores given by humans are close to those of the large models. We further investigated the details of human scoring, as shown in Table 7, where scores can be provided based on different dimensions. This contrasts with BLEU and ROUGE, which merely compute a single value and lack logical insight.
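The tendency of overlap-based metrics to penalize paraphrased answers can be illustrated with a simplified unigram-overlap F1. This is a stand-in written for illustration, not the BLEU or ROUGE implementation used in the comparison; the whitespace tokenization and the example sentences are assumptions.

```python
from collections import Counter


def unigram_f1(candidate: str, reference: str) -> float:
    """F1 of unigram overlap between two whitespace-tokenized, lowercased strings."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    if not cand or not ref:
        return 0.0
    # Multiset intersection counts each shared token at most as often as it occurs in both.
    overlap = sum((Counter(cand) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(cand)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


reference = "applicants must submit an identity card and a completed application form"
candidate = "you need to bring your id and fill in the form"
score = unigram_f1(candidate, reference)  # low despite similar meaning
```

The two sentences convey nearly the same requirement but share only two tokens, so the overlap score is low, whereas a human or LLM judge scoring by dimension would rate the answer far higher.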

Table 6.

Rating results of humans and AI models with BLEU and ROUGE scores.

Table 7.

Details of human ratings.

5.8. Potential of This Method

The origin of this method lies in the urgent need for a simple and rapid evaluation approach for the RAG system GSIA Consultation Assistant prior to its deployment. Although, in this study, the evaluation is limited to single-turn dialogue, the core idea can be extended to more complex scenarios such as multi-turn conversations. Furthermore, the method allows for customizable evaluation metrics, enabling developers to assign final scores based on various criteria of interest, offering great flexibility.

Our study also demonstrates that CuBE-based scores yield consistent results across diverse models, whether they are full-parameter models, small-parameter models, domestic models, or from OpenAI. This suggests that even in closed intranet environments, locally deployed large language models can be used for collaborative evaluation. Even in cases with only a single model, repeated evaluations or averaging can be employed. In addition, evaluation scores can, in principle, provide references for knowledge base construction, prompt design, and retrieval parameter settings, thus showing potential as a debugging tool in the development process. Future research may further incorporate specific cases to validate their practical effectiveness in system optimization and iteration.

6. Conclusions

This paper addresses the challenge of automated evaluation for Retrieval-Augmented Generation (RAG) systems and proposes CuBE, a novel evaluation methodology designed to overcome the limitations of existing approaches in terms of efficiency, fairness, and adaptability. Current mainstream evaluation systems—whether based on modular traditional metrics or LLM-driven automated scoring—face challenges in terms of system consistency, subjectivity, and generalization across diverse application scenarios. To address these issues, CuBE incorporates a bounded evaluation mechanism and multi-model scoring set design, effectively enhancing the objectivity, consistency, and universality of evaluation results.

Our experiments demonstrate that when applied to the “GSIA” RAG system, CuBE achieves remarkable consistency and adaptability in multi-model evaluation, providing robust tool support for performance assessment in complex knowledge integration tasks. Compared with previous methods, CuBE not only reduces manual intervention and evaluation costs but also significantly improves the fairness and stability of evaluation outcomes.

More importantly, the CuBE framework establishes a new evaluation paradigm for RAG systems and the broader field of natural language processing, pointing the way for future performance quantification and optimization of multi-modal and multi-model systems. This study not only enriches the theoretical foundation of automated evaluation but also lays the groundwork for trustworthy assessment and continuous iteration of intelligent systems.

7. Limitations

7.1. Ethical Considerations in the Use of LLMs

In this study, LLMs were primarily used in CuBE to score the quality of generated results. We fully recognize the importance of ethical considerations, especially in terms of transparency, bias propagation, and accountability. To mitigate the potential bias risks arising from using LLMs as evaluators, we adopted the following measures: (1) employing different types of models for cross-validation in experiments to ensure that the results are not the product of a single model’s bias; (2) using neutral and objective wording in the design of scoring dimensions to avoid cultural or ideological tendencies; (3) standardizing input prompts and materials to ensure neutrality and consistency. In addition, we emphasize transparency by openly disclosing prompt design, scoring dimensions, and experimental details in the paper to ensure reproducibility, and we emphasize accountability by clarifying that the role of LLMs in this study is that of an “auxiliary evaluation tool” rather than the final decision maker, thereby avoiding unclear responsibilities in sensitive application scenarios. Although these measures cannot completely eliminate risks, they can reduce their impact to some extent and provide greater fairness and credibility in the evaluation process.

7.2. Limitations of LLM-Based Question–Answer Generation

In this study, we acknowledge that questions and answers generated by language models may introduce bias or errors, with risks such as factual inaccuracies, distribution shifts, and potential biases. Therefore, relying solely on raw model generation is insufficient to guarantee the reliability of evaluation results. However, in our research context, the manually annotated data are limited in scale and costly to obtain, while the task itself requires a large-scale and diverse set of QA pairs to support evaluation. If we had relied only on human-annotated data, it would have been difficult to cover a sufficiently large sample space and ensure representativeness. Given these practical constraints, we adopted language model generation as a necessary supplementary approach. To enhance the rationality and reliability of the generated QAs, we incorporated knowledge-augmented generation by leveraging real government materials, thereby reducing hallucinated answers and improving factual accuracy. This approach is consistent with existing research: currently, both academia and industry widely adopt LLM-generated QAs as a low-cost, high-coverage supplementary method for evaluation and training. For example, benchmark datasets such as WANLI, GPT3Mix, and Code Alpaca are partially or fully generated by LLMs and have demonstrated performance comparable to human annotations in downstream tasks [32]. A common consensus is that as long as filtering, mixing, and human spot-checking mechanisms are applied, LLM-based QA generation is not only feasible but can also achieve a quality level comparable to human data in specific application scenarios.

7.3. Weaknesses and Limitations of CuBE

Weaknesses: The scoring results of the CuBE system are highly dependent on the underlying large language model. If the base model underperforms, the generated reference answers or evaluation outcomes may be biased, reducing the reliability of the scores. Therefore, to enhance robustness, practical applications should rely as much as possible on high-quality, well-performing large models.

Limitations: CuBE is more suitable for providing researchers or teams with rapid feedback during the system development stage, supporting iteration and model adjustments. At the same time, it can also serve as a tool to provide intuitive references for non-experts, helping them understand model performance. However, it must be emphasized that these scores are for reference only, representing an approximate estimate of the general level, rather than a rigorous, industry-standard evaluation metric.

8. Future Work

Our research shows that when the performance of a RAG system approaches that of the underlying LLM, the differences in scores assigned by CuBE become less pronounced, and the evaluation becomes less sensitive. This reveals a limitation in the current prompt design and scoring scale, which lack sufficient sensitivity to subtle differences. In future work, we will focus on the standardization and automation of prompt template design. For example, we plan to fine-tune a set of prompt generators so that scoring prompts can dynamically adapt to different quality levels and task scenarios, thereby enhancing the ability to distinguish fine-grained performance differences between models. We also intend to explore loss functions incorporating contrastive learning to better differentiate scores among closely performing models.

It is worth noting that previous studies have pointed out that long-term improvements in AI capabilities rely more on systematic governance, standardized processes, and sustained organizational capacity building [33]. Therefore, the development of the GSIA system will not stop here; we will continue to optimize both it and CuBE. We plan to develop a Python SDK for CuBE (targeting Python 3.12.3), enabling “one-click evaluation” and supporting user-defined evaluation dimensions, data import, and visualization of results. Additionally, we aim to design plugin interfaces to facilitate integration with existing RAG systems.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/app151910447/s1.

Author Contributions

Methodology, X.X.; Writing—review and editing, X.X., X.Y., X.Z. and J.N.; Supervision, X.Y., X.Z. and J.N.; Validation, X.Y., X.Z. and J.N.; Data curation, B.Y.; Writing—original draft preparation, B.Y.; Conceptualization, Z.L. and X.D.; Project administration, Z.L. and X.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Guizhou Science and Technology Department Platform Program (Grant No. [ZSYS]2025 004). Additional support, including equipment and personnel, was provided by Guizhou-Cloud Big Data Group Co., Ltd. The APC was funded by Guizhou Key Laboratory of Advanced Computing, Guizhou Normal University.

Data Availability Statement

The data presented in this study are not publicly available due to confidentiality agreements with the Guizhou Provincial Government Service Center, which provided the dataset for product development. Data may be available from the corresponding author upon reasonable request and with permission of the Guizhou Provincial Government Service Center.

Acknowledgments

The authors would like to express their gratitude to the staff of Guizhou-Cloud Big Data Group Co., Ltd., and the Guizhou Provincial Government Service Center for their valuable support during this project. During the preparation of this study, the authors used Qwen2.5-32B, DeepSeek-V3, GPT-4o, and GPT-4.1-nano for the purposes of generating question–answer sets and producing evaluation scores. The authors have reviewed and edited the output and take full responsibility for the content of this publication.

Conflicts of Interest

Authors Zhentao Liu and Xinmin Dai were employed by Guizhou-Cloud Big Data Group Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| CuBE | Customizable Bounds Evaluation |
| LTA | Lightweight Targeted Assessment |
| RAG | Retrieval-Augmented Generation |
| GSIA | Guizhou Provincial Government Service Center Intelligent Assistant |

References

- DeepSeek-AI; Guo, D.; Yang, D.; Zhang, H.; Song, J.; Zhang, R.; Xu, R.; Zhu, Q.; Ma, S.; Wang, P.; et al. DeepSeek-R1: Incentivizing reasoning capability in LLMs via reinforcement learning. arXiv 2025, arXiv:2501.12948. [Google Scholar] [CrossRef]

- Van Noordt, C.; Medaglia, R.; Tangi, L. Policy initiatives for artificial intelligence-enabled government: An analysis of national strategies in europe. Public Policy Adm. 2025, 40, 215–253. [Google Scholar] [CrossRef]

- Zhang, L.; Zhang, X. Impact of digital government construction on the intelligent transformation of enterprises: Evidence from China. Technol. Forecast. Soc. Change 2025, 210, 123787.

- Zheng, Y.; Yu, H.; Cui, L.; Miao, C.; Leung, C.; Yang, Q. SmartHS: An AI platform for improving government service provision. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

- Han, J.; Lu, J.; Xu, Y.; You, J.; Wu, B. Intelligent practices of large language models in digital government services. IEEE Access 2024, 12, 8633–8640.

- Papageorgiou, G.; Sarlis, V.; Maragoudakis, M.; Tjortjis, C. Enhancing E-government services through state-of-the-art, modular, and reproducible architecture over large language models. Appl. Sci. 2024, 14, 8259.

- Cantens, T. How will the state think with ChatGPT? The challenges of generative artificial intelligence for public administrations. AI Soc. 2025, 40, 133–144.

- How RAG Systems Improve Public Sector Management. Available online: https://www.datategy.net/2025/04/07/how-rag-systems-improve-public-sector-management/ (accessed on 22 September 2025).

- Gao, M.; Hu, X.; Yin, X.; Ruan, J.; Pu, X.; Wan, X. LLM-based NLG Evaluation: Current Status and Challenges. Comput. Linguist. 2025, 51, 661–687.

- Karpukhin, V.; Oğuz, B.; Min, S.; Lewis, P.; Wu, L.; Edunov, S.; Chen, D.; Yih, W.T. Dense passage retrieval for open-domain question answering. arXiv 2020, arXiv:2004.04906.

- Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. Bleu: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 6–12 July 2002; pp. 311–318.

- Lin, C.Y. ROUGE: A package for automatic evaluation of summaries. In Proceedings of the Text Summarization Branches Out, Barcelona, Spain, 25–26 July 2004; pp. 74–81.

- Zhang, T.; Kishore, V.; Wu, F.; Weinberger, K.Q.; Artzi, Y. BERTScore: Evaluating text generation with BERT. arXiv 2020, arXiv:1904.09675.

- Fu, J.; Ng, S.K.; Jiang, Z.; Liu, P. GPTScore: Evaluate as You Desire. arXiv 2023, arXiv:2302.04166.

- Es, S.; James, J.; Espinosa-Anke, L.; Schockaert, S. Ragas: Automated Evaluation of Retrieval Augmented Generation. arXiv 2025, arXiv:2309.15217.

- Lin, Y.T.; Chen, Y.N. LLM-Eval: Unified Multi-Dimensional Automatic Evaluation for Open-Domain Conversations with Large Language Models. arXiv 2023, arXiv:2305.13711.

- Desmond, M.; Ashktorab, Z.; Pan, Q.; Dugan, C.; Johnson, J.M. EvaluLLM: LLM assisted evaluation of generative outputs. In Proceedings of the Companion Proceedings of the 29th International Conference on Intelligent User Interfaces, Greenville, SC, USA, 18–21 March 2024; pp. 30–32.

- Liusie, A.; Manakul, P.; Gales, M.J.F. LLM Comparative Assessment: Zero-shot NLG Evaluation through Pairwise Comparisons using Large Language Models. arXiv 2024, arXiv:2307.07889.

- Chan, C.M.; Chen, W.; Su, Y.; Yu, J.; Xue, W.; Zhang, S.; Fu, J.; Liu, Z. ChatEval: Towards better LLM-based evaluators through multi-agent debate. arXiv 2023, arXiv:2308.07201.

- Bai, Y.; Ying, J.; Cao, Y.; Lv, X.; He, Y.; Wang, X.; Yu, J.; Zeng, K.; Xiao, Y.; Lyu, H.; et al. Benchmarking foundation models with language-model-as-an-examiner. Adv. Neural Inf. Process. Syst. 2023, 36, 78142–78167.

- Zheng, L.; Chiang, W.L.; Sheng, Y.; Zhuang, S.; Wu, Z.; Zhuang, Y.; Lin, Z.; Li, Z.; Li, D.; Xing, E.; et al. Judging LLM-as-a-judge with MT-bench and chatbot arena. Adv. Neural Inf. Process. Syst. 2023, 36, 46595–46623.

- Gu, J.; Jiang, X.; Shi, Z.; Tan, H.; Zhai, X.; Xu, C.; Li, W.; Shen, Y.; Ma, S.; Liu, H.; et al. A Survey on LLM-as-a-Judge. arXiv 2025, arXiv:2411.15594.

- Chen, G.H.; Chen, S.; Liu, Z.; Jiang, F.; Wang, B. Humans or LLMs as the judge? A study on judgement biases. arXiv 2024, arXiv:2402.10669.

- Guo, Y.; Guo, M.; Su, J.; Yang, Z.; Zhu, M.; Li, H.; Qiu, M.; Liu, S.S. Bias in large language models: Origin, evaluation, and mitigation. arXiv 2024, arXiv:2411.10915.

- Tian, K.; Mitchell, E.; Zhou, A.; Sharma, A.; Rafailov, R.; Yao, H.; Finn, C.; Manning, C.D. Just ask for calibration: Strategies for eliciting calibrated confidence scores from language models fine-tuned with human feedback. arXiv 2023, arXiv:2305.14975.

- Raju, R.; Jain, S.; Li, B.; Li, J.; Thakker, U. Constructing domain-specific evaluation sets for LLM-as-a-judge. arXiv 2024, arXiv:2408.08808.

- Ru, D.; Qiu, L.; Hu, X.; Zhang, T.; Shi, P.; Chang, S.; Jiayang, C.; Wang, C.; Sun, S.; Li, H.; et al. RAGChecker: A Fine-grained Framework for Diagnosing Retrieval-Augmented Generation. Adv. Neural Inf. Process. Syst. 2024, 37, 21999–22027.

- Online government advisory service innovation through intelligent support systems. Inf. Manag. 2011, 48, 27–36.

- A survey on RAG with LLMs. Procedia Comput. Sci. 2024, 246, 3781–3790.

- Chen, J.; Lin, H.; Han, X.; Sun, L. Benchmarking Large Language Models in Retrieval-Augmented Generation. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 17754–17762.

- Dinh, T.A.; Mullov, C.; Bärmann, L.; Li, Z.; Liu, D.; Reiß, S.; Lee, J.; Lerzer, N.; Ternava, F.; Gao, J.; et al. SciEx: Benchmarking Large Language Models on Scientific Exams with Human Expert Grading and Automatic Grading. arXiv 2024, arXiv:2406.10421.

- Nadas, M.; Diosan, L.; Tomescu, A. Synthetic Data Generation Using Large Language Models: Advances in Text and Code. IEEE Access 2025, 13, 134615–134633.

- Luna-Reyes, L.F.; Harrison, T.M. An enterprise view for artificial intelligence capability and governance: A system dynamics approach. In Digital Government: Research and Practice; Association for Computing Machinery: New York, NY, USA, 2024; Volume 5, pp. 1–23.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).