Single-Image High Dynamic Range Reconstruction via Improved HDRUNet with Attention and Multi-Component Loss

Abstract

1. Introduction

2. Materials and Methods

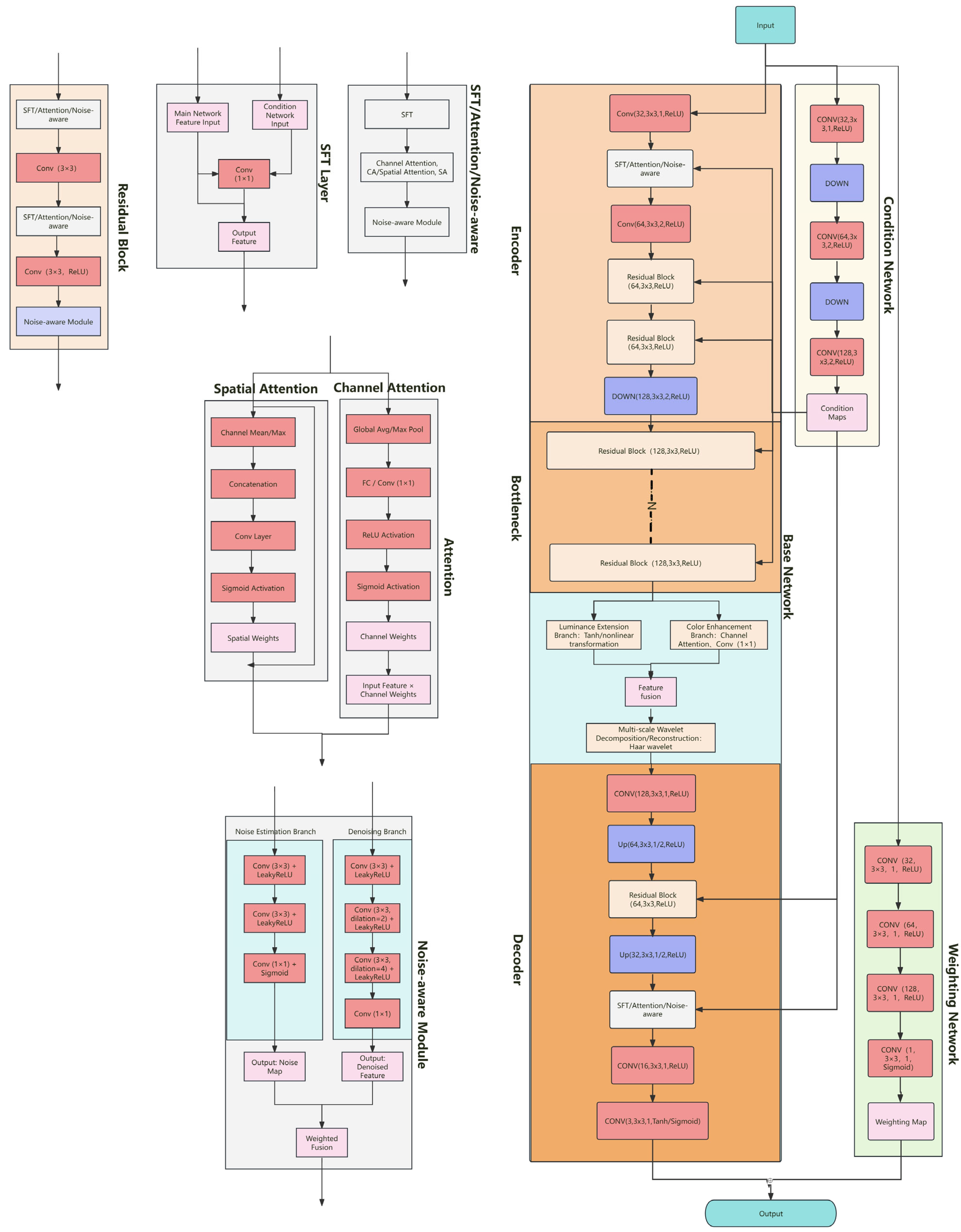

2.1. Model Architecture

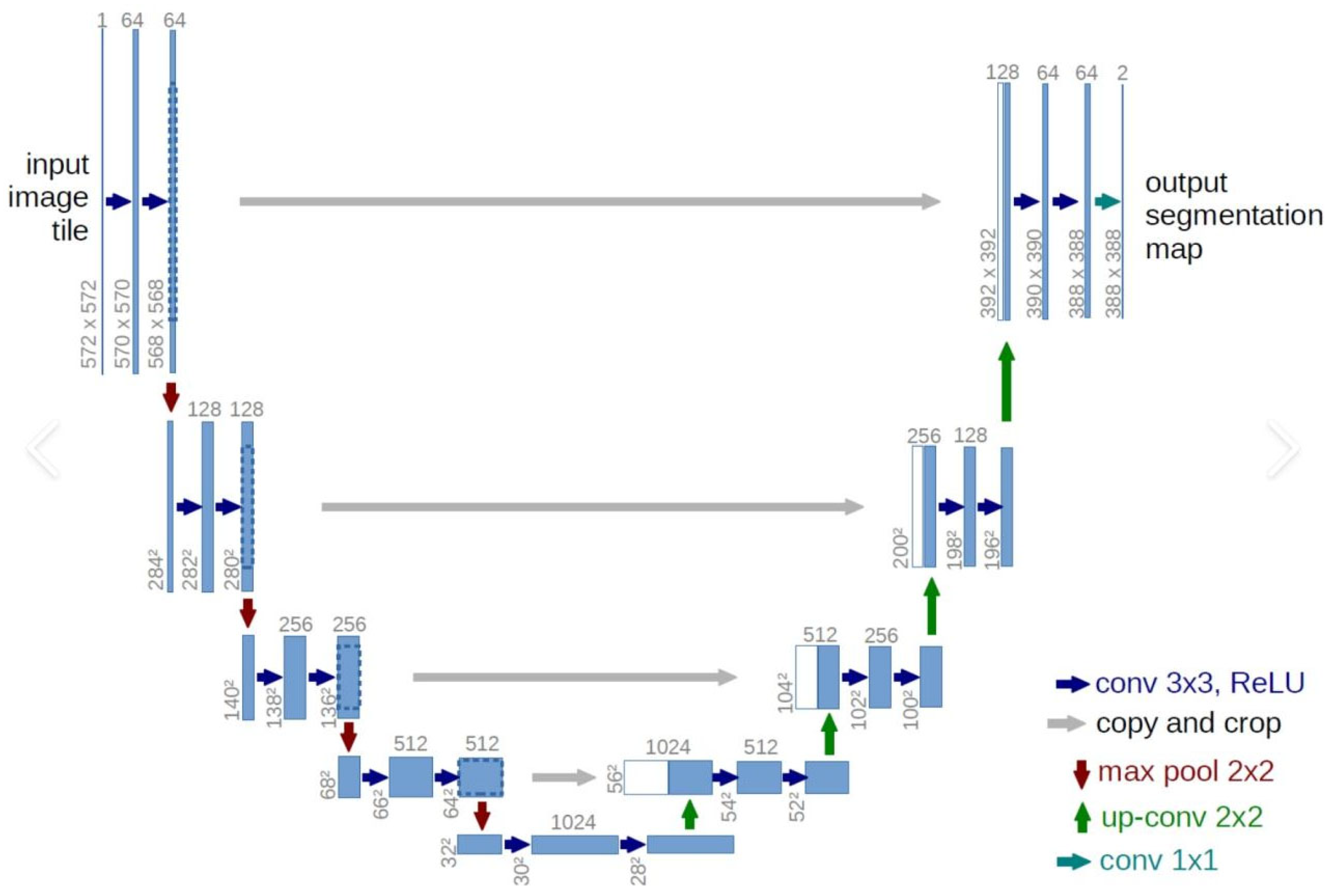

2.1.1. Overall Network Structure

- (1) Base Network

- (2) Condition Network

- (3) Weighting Network

2.1.2. Basic Network Structure

2.1.3. Model Improvements

- (1)

- Introduction and Enhancement of Multi-dimensional Attention Mechanisms

- (2)

- Brightness Expansion and Color-Enhancement Branches

- (3)

- Adaptive Multi-component Loss Function Design

- (4)

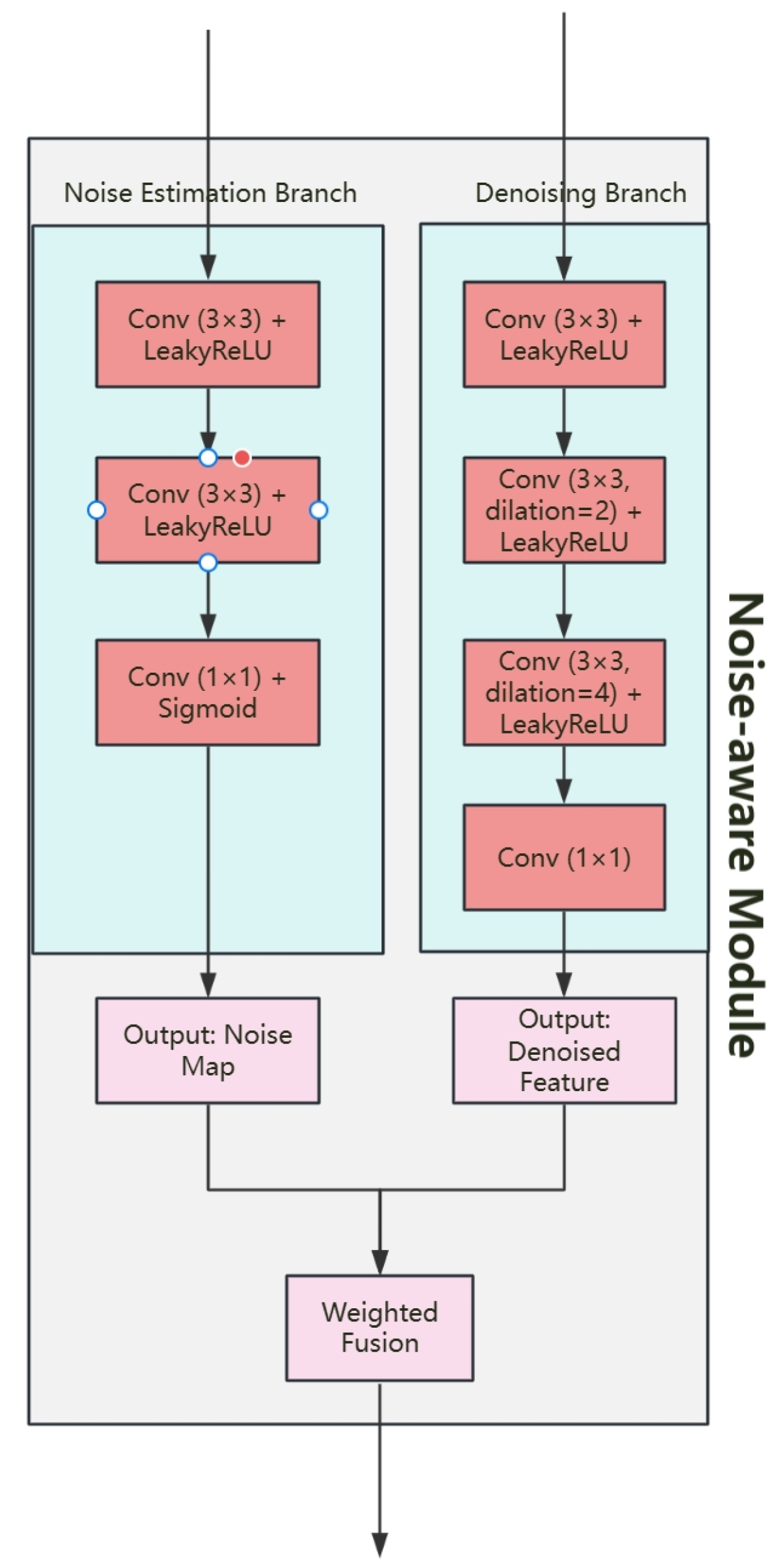

- Adaptive Noise Perception and Denoising Module

- (5)

- Multi-scale (Haar) Wavelet Decomposition and Reconstruction Mechanism
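Item (5) names a multi-scale Haar wavelet decomposition and reconstruction mechanism, and Section 2.4 specifies a 2-level decomposition. The following is a minimal NumPy sketch of that idea, not the network module itself. It assumes orthonormal scaling and a single-channel array whose height and width are divisible by 4; the function names are hypothetical, and in the network the transform would act on feature maps rather than on a raw array.

```python
import numpy as np

def haar_dwt2(x):
    """One level of the orthonormal 2-D Haar transform (even height and width)."""
    a, b = x[0::2, 0::2], x[0::2, 1::2]   # top-left, top-right of each 2x2 block
    c, d = x[1::2, 0::2], x[1::2, 1::2]   # bottom-left, bottom-right
    ll = (a + b + c + d) / 2.0            # low-pass approximation
    lh = (a - b + c - d) / 2.0            # difference across columns
    hl = (a + b - c - d) / 2.0            # difference across rows
    hh = (a - b - c + d) / 2.0            # diagonal difference
    return ll, (lh, hl, hh)

def haar_idwt2(ll, bands):
    """Exact inverse of haar_dwt2."""
    lh, hl, hh = bands
    h, w = ll.shape
    x = np.empty((2 * h, 2 * w), dtype=np.float64)
    x[0::2, 0::2] = (ll + lh + hl + hh) / 2.0
    x[0::2, 1::2] = (ll - lh + hl - hh) / 2.0
    x[1::2, 0::2] = (ll + lh - hl - hh) / 2.0
    x[1::2, 1::2] = (ll - lh - hl + hh) / 2.0
    return x

def haar_decompose(x, levels=2):
    """Repeatedly split the low-pass band; returns (coarsest LL, detail bands per level)."""
    ll = np.asarray(x, dtype=np.float64)
    details = []
    for _ in range(levels):
        ll, bands = haar_dwt2(ll)
        details.append(bands)
    return ll, details

def haar_reconstruct(ll, details):
    """Invert haar_decompose, starting from the coarsest level."""
    for bands in reversed(details):
        ll = haar_idwt2(ll, bands)
    return ll
```

With two levels, an H × W input yields an (H/4) × (W/4) approximation band plus three detail bands at each level, and reconstruction recovers the input up to floating-point error.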

2.2. Tone-Mapping Methods

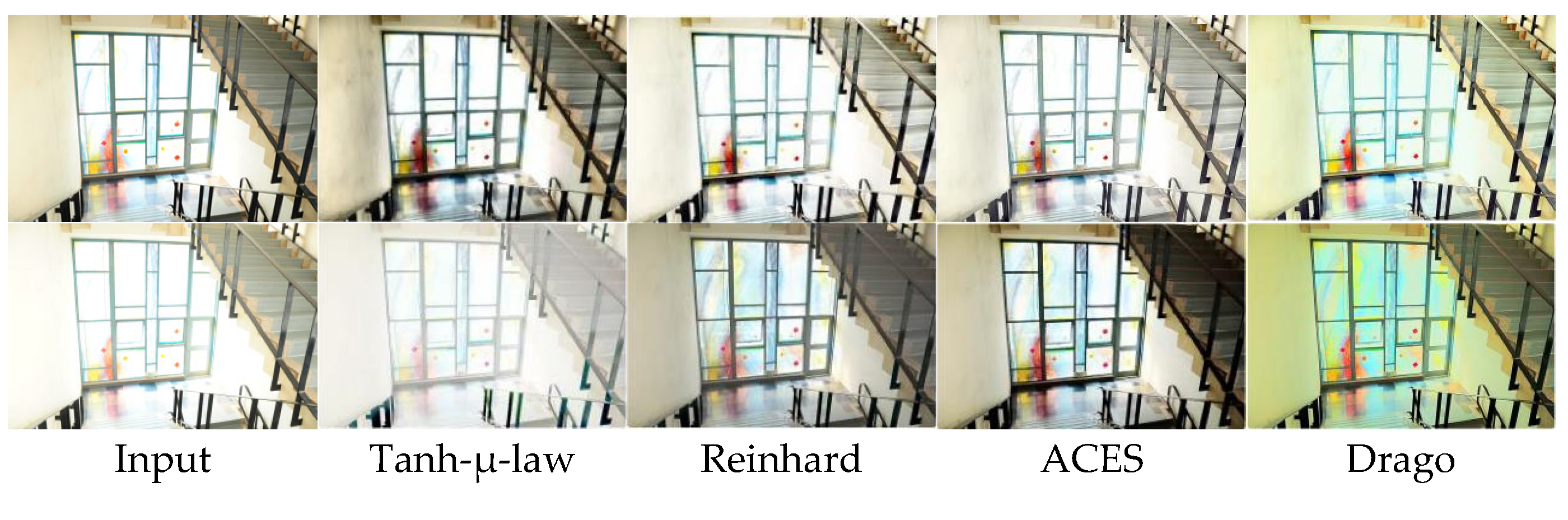

- (1) Tanh-μ-law Tone Mapping. The Tanh-μ-law method normalizes the HDR image and then compresses the highlights using a hyperbolic tangent function. The μ parameter controls the intensity of highlight compression, and the percentile determines the normalization range. The formula is as follows:

- (2) Reinhard Tone Mapping. The Reinhard method is a classic global tone-mapping algorithm that nonlinearly compresses image luminance to map high dynamic range to low dynamic range. The core formula is as follows:

- (3) ACES Tone Mapping. ACES (Academy Color Encoding System) is a standard widely used in the film industry, producing natural colors and rich gradation. The mapping formula is as follows:

- (4) Drago Tone Mapping. The Drago method is suitable for high-contrast scenes, using logarithmic compression of luminance and introducing a bias parameter to control highlight compression. The core formula is as follows:
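As a rough illustration of the four operators described above, the sketch below implements a commonly published form of each in NumPy. These forms are assumptions rather than the exact expressions used in this work: the tanh-bounded μ-law with percentile normalization follows the style often used with NTIRE 2021 data, the Reinhard operator is the basic global form L / (1 + L), the ACES curve is Narkowicz's widely used rational fit rather than the full ACES pipeline, and the Drago operator is the adaptive logarithmic mapping with default bias 0.85. All function names and default parameter values are hypothetical.

```python
import numpy as np

def tanh_mu_law(hdr, mu=5000.0, percentile=99.0):
    """Normalize by a percentile, bound with tanh, then apply mu-law compression."""
    hdr = np.maximum(np.asarray(hdr, dtype=np.float64), 0.0)
    norm = max(float(np.percentile(hdr, percentile)), 1e-8)
    bounded = np.tanh(hdr / norm)                  # values now lie in [0, 1)
    return np.log1p(mu * bounded) / np.log1p(mu)

def reinhard(hdr):
    """Basic global Reinhard operator: L / (1 + L)."""
    hdr = np.maximum(np.asarray(hdr, dtype=np.float64), 0.0)
    return hdr / (1.0 + hdr)

def aces_fit(hdr):
    """Narkowicz's rational approximation of the ACES filmic curve (assumed form)."""
    x = np.maximum(np.asarray(hdr, dtype=np.float64), 0.0)
    a, b, c, d, e = 2.51, 0.03, 2.43, 0.59, 0.14
    return np.clip(x * (a * x + b) / (x * (c * x + d) + e), 0.0, 1.0)

def drago(lum, bias=0.85, ld_max=100.0):
    """Drago-style adaptive logarithmic mapping applied to a luminance array."""
    lum = np.maximum(np.asarray(lum, dtype=np.float64), 0.0)
    lw_max = max(float(lum.max()), 1e-8)
    exponent = np.log(bias) / np.log(0.5)          # bias controls highlight compression
    scale = (ld_max * 0.01) / np.log10(lw_max + 1.0)
    return scale * np.log(lum + 1.0) / np.log(2.0 + 8.0 * (lum / lw_max) ** exponent)
```

Each function maps non-negative linear HDR values to a display range of roughly [0, 1]. A gamma or sRGB encoding step would normally follow before display and is omitted here.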

2.3. Datasets

2.4. Implementation Details

- (1) The model is implemented in PyTorch 1.9.0 and trained with the Adam optimizer at an initial learning rate of 2 × 10⁻⁴. The batch size is 16, and the learning rate is decayed at scheduled epochs. Random cropping, flipping, and rotation are applied as data augmentation to improve generalization.

- (2) Loss Weight Selection: The weights λ1, λ2, λ3, and λ4 are determined through ablation studies and a grid search. The optimal values are λ1 = 1.0 (reconstruction loss), λ2 = 0.1 (perceptual loss), λ3 = 0.5 (structural similarity loss), and λ4 = 0.3 (color consistency loss). These weights are selected based on validation performance on the NTIRE 2021 dataset so that each loss component contributes in a balanced way. The search covers λ1 ∈ [0.5, 1.0], λ2 ∈ [0.05, 0.2], λ3 ∈ [0.3, 0.8], and λ4 ∈ [0.1, 0.5], with step sizes of 0.1, 0.05, 0.1, and 0.1, respectively. Adaptive weight adjustment: During training, the weights are adjusted adaptively according to the convergence of each loss term. If a loss term converges too slowly or too quickly, its weight is automatically adjusted by ±10% every 10 cycles to keep training balanced.

- (3) We employ the Haar wavelet transform with 2-level decomposition. It is inserted into the network after the bottleneck layer and before the decoder output to refine high-level features.

- (4) Noise model: Noise is modeled as a combination of signal-dependent Poisson noise and signal-independent Gaussian noise. Noise learning strategy: The noise estimation network consists of five convolutional layers with ReLU activations, followed by a global average pooling layer that estimates the noise parameters. A learned noise suppression function then uses the estimated noise map to modulate the feature maps. Insertion strategy: Noise-aware modules are integrated into each residual block of the encoder path, enabling stepwise noise suppression at multiple scales. Noise is estimated at the input layer and passed to the rest of the network through skip connections, so that processing remains noise-aware throughout.
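The loss-weight search and the ±10% adjustment rule in item (2) can be sketched in plain Python as follows. The grid ranges and step sizes come from the text; everything else is an assumption made for illustration, including the helper names, the use of a relative loss decrease as the convergence measure, the `slow` and `fast` thresholds, and the direction of the adjustment (raising the weight of a slowly converging term and lowering that of a quickly converging one).

```python
from itertools import product

def frange(start, stop, step):
    """Inclusive float range, rounded to suppress accumulation error."""
    n = int(round((stop - start) / step))
    return [round(start + i * step, 10) for i in range(n + 1)]

# Search ranges and step sizes as stated in item (2).
GRID = {
    "lambda1": frange(0.5, 1.0, 0.1),    # reconstruction loss
    "lambda2": frange(0.05, 0.2, 0.05),  # perceptual loss
    "lambda3": frange(0.3, 0.8, 0.1),    # structural similarity loss
    "lambda4": frange(0.1, 0.5, 0.1),    # color consistency loss
}

def grid_candidates(grid=GRID):
    """Enumerate every weight combination in the grid."""
    names = list(grid)
    return [dict(zip(names, combo)) for combo in product(*grid.values())]

def adjust_weight(weight, relative_drop, slow=0.01, fast=0.20, rate=0.10):
    """Nudge one loss weight by +/-10% at the end of a 10-cycle window.

    relative_drop is the fractional decrease of that loss term over the
    window. The slow/fast thresholds and the adjustment direction are
    hypothetical; the text only states that the weight changes by 10%.
    """
    if relative_drop < slow:       # converging too slowly: emphasize the term
        return weight * (1.0 + rate)
    if relative_drop > fast:       # converging too quickly: de-emphasize it
        return weight * (1.0 - rate)
    return weight
```

Under these ranges the grid holds 6 × 4 × 6 × 5 = 720 combinations, and the reported optimum (1.0, 0.1, 0.5, 0.3) is one of them.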

3. Results

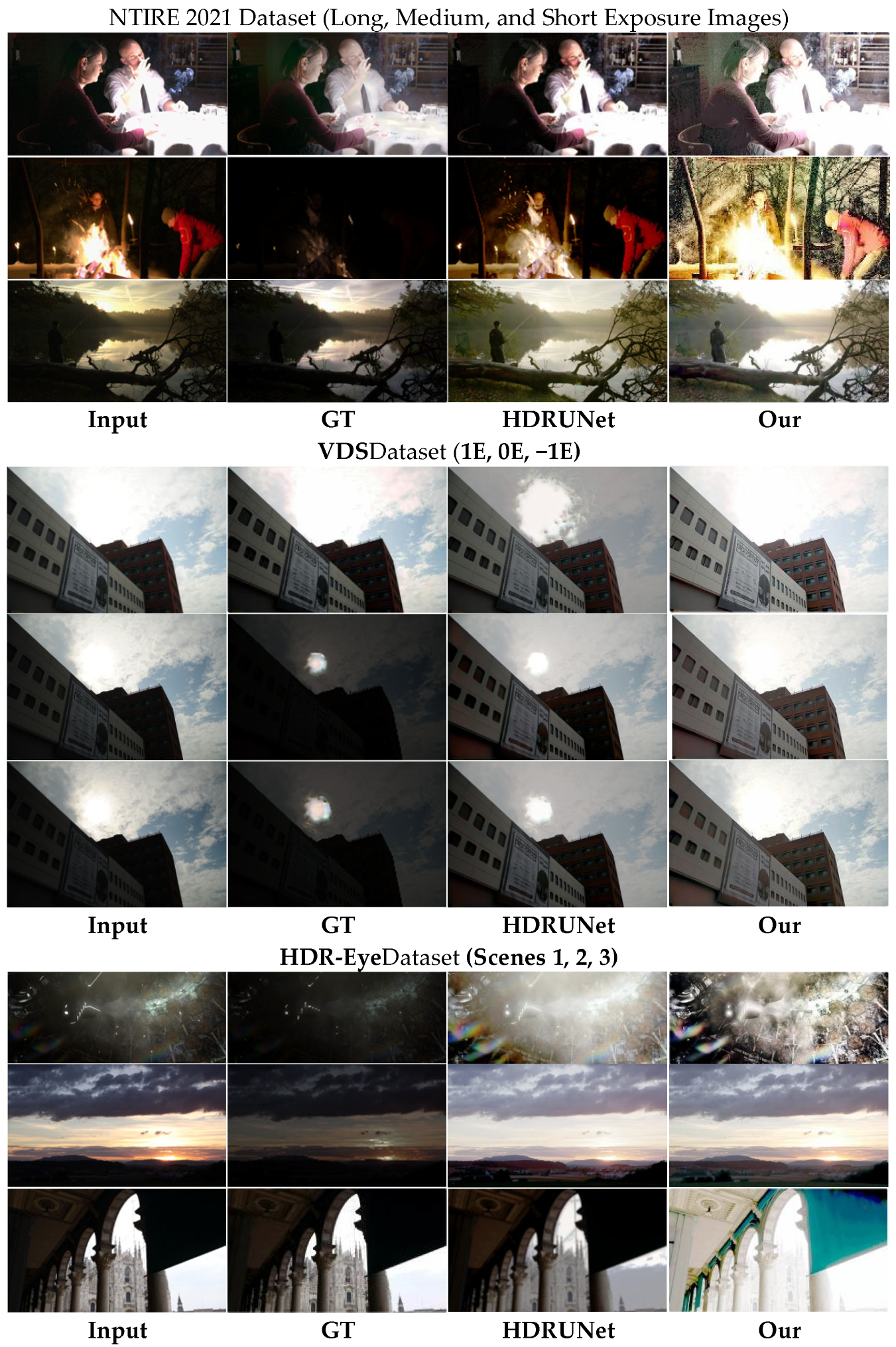

3.1. Experimental Results

3.2. Selection of Tone-Mapping Methods

3.3. Ablation Study

3.4. Comparison with Mainstream Algorithms

3.5. Summary

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. ITU-R BT.601; Studio Encoding Parameters of Digital Television for Standard 4:3 and Wide-Screen 16:9 Aspect Ratios. International Telecommunication Union: Geneva, Switzerland, 2011.

2. Gonzalez, R.C.; Woods, R.E. Digital Image Processing, 4th ed.; Books, Publishing House of Electronics Industry: Beijing, China, 2020.

3. Eilertsen, G.; Kronander, J.; Denes, G.; Mantiuk, R.K.; Unger, J. HDR image reconstruction from a single exposure using deep CNNs. ACM Trans. Graph. 2017, 36, 1–15.

4. Lee, S.; An, G.H.; Kang, S.-J. Deep Chain HDRI: Reconstructing a High Dynamic Range Image from a Single Low Dynamic Range Image. IEEE Access 2018, 6, 49913–49924.

5. Liu, Y.-L.; Lai, W.-S.; Chen, Y.-S.; Kao, Y.-L.; Yang, M.-H.; Chuang, Y.-Y.; Huang, J.-B. Single-image HDR reconstruction by learning to reverse the camera pipeline. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1651–1660.

6. Landis, H. Production-ready global illumination. Siggraph Course Notes 2002, 5, 93–95.

7. Zou, Y.; Yan, C.; Fu, Y. RawHDR: High dynamic range image reconstruction from a single raw image. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023.

8. Conde, M.; Timofte, R.; Berdan, R.; Besbinar, B.; Iso, D.; Ji, P.; Dun, X.; Fan, Z.; Wu, C.; Wang, Z.; et al. Raw image reconstruction from RGB on smartphones. NTIRE 2025 challenge report. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 10–17 June 2025.

9. Lin, H.-Y.; Lin, Y.-R.; Lin, W.-C.; Chang, C.-C. Reconstructing High Dynamic Range Image from a Single Low Dynamic Range Image Using Histogram Learning. Appl. Sci. 2024, 14, 9847.

10. Chen, X.; Liu, Y.; Zhang, Z.; Qiao, Y.; Dong, C. HDRUNet: Single Image HDR Reconstruction with Denoising and Dequantization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Nashville, TN, USA, 20–25 June 2021; pp. 354–363.

11. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

12. Tan, T.; Gong, Z.; Yang, Z. A High Dynamic Range Image Reconstruction Network Utilizing Feature Pre-alignment. Comput. Eng. 2025, 1–8.

13. Ding, S. Research on HDR Image Reconstruction Method Based on Deep Learning. Master’s Thesis, Yunnan Normal University, Kunming, China, 2024.

14. Debevec, P.; Malik, J. Recovering High Dynamic Range Radiance Maps from Photographs. In Proceedings of SIGGRAPH '97: the 24th Annual Conference on Computer Graphics and Interactive Techniques, Los Angeles, CA, USA, 3–8 August 1997; pp. 369–378.

15. Nemoto, H.; Korshunov, P.; Hanhart, P.; Ebrahimi, T. Visual attention in LDR and HDR images. In Proceedings of the 9th International Workshop on Video Processing and Quality Metrics for Consumer Electronics (VPQM), Chandler, AZ, USA, 4–6 February 2015.

16. Bai, B.; Liu, W. High Dynamic Range Imaging Technology. J. Xi’an Univ. Posts Telecommun. 2020, 25, 63–67+73.

17. Wu, Z. Research on Generation Algorithms for High Dynamic Range Images; Guilin University of Electronic Technology: Guilin, China, 2021.

18. Li, H. Research on High Dynamic Range Image Acquisition Methods Based on Deep Learning. Master’s Thesis, University of Electronic Science and Technology of China, Chengdu, China, 2021.

19. Deng, Y. Design and Implementation of a High Dynamic Range Image Reconstruction System Based on Multi-Scale Context Awareness; Nanjing University: Nanjing, China, 2020.

20. Huo, Y.; Yang, F.; Brost, V. Dodging and burning inspired inverse tone mapping algorithm. J. Comput. Inf. Syst. 2013, 9, 3461–3468.

21. Ye, N. Research on HDR Imaging Methods Based on Deep Learning. Master’s Thesis, University of Electronic Science and Technology of China, Chengdu, China, 2020.

22. Chen, A. Research on HDR Imaging Technology Based on Deep Learning. Master’s Thesis, University of Electronic Science and Technology of China, Chengdu, China, 2022.

23. Zhang, D.; Huo, Y. Generation of High Dynamic Range Images for Dynamic Scenes. J. Comput.-Aided Des. Graph. 2018, 30, 1625–1636.

24. Zeng, H.; Sun, H.; Du, L.; Wang, S. High Dynamic Range Image Synthesis for Spatial Target Observation. Prog. Laser Optoelectron. 2019, 56, 96–103.

25. Liu, J. Research on High Dynamic Range Image Reconstruction Technology Based on Deep Learning. Master’s Thesis, Xi’an University of Electronic Science and Technology, Xi’an, China, 2023.

26. Wang, H. Research on High Dynamic Range Image Reconstruction and Display Methods Based on Deep Learning. Doctoral Thesis, Tianjin University, Tianjin, China, 2022.

27. Ding, W. Research on Color High Dynamic Range Image Quality Evaluation Methods. Master’s Thesis, Tianjin University, Tianjin, China, 2018.

28. Chen, X. Application of Noise Suppression Technology in the Generation of Single Exposure HDR Images. Audio Eng. Technol. 2024, 48, 42–44.

29. Anwar, S.; Barnes, N. Real Image Denoising With Feature Attention. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 3155–3164.

30. Wang, X.; Liu, S.; Tian, J. High Dynamic Range Image Reconstruction Based on a Dual Attention Network. Adv. Lasers Optoelectron. 2024, 61, 402–409.

31. Liu, T.; Miao, D.; Bai, Y.; Zhu, Z. Research on Tone Mapping Algorithms for High Dynamic Range Images. Telev. Technol. 2021, 45, 39–45.

32. Huangfu, Z. Research on Feature-Driven High Dynamic Range Image Tone Mapping Algorithms. Master’s Thesis, Henan University of Science and Technology, Luoyang, China, 2020.

33. Miao, D. Research on High Dynamic Range Image Tone Mapping Algorithms. Master’s Thesis, Zhengzhou University, Zhengzhou, China, 2020.

34. Yu, L. Research on High Dynamic Range Image Processing Technology Based on Visual Mechanisms. University of Electronic Science and Technology of China: Chengdu, China, 2020.

35. Cheng, H. Research on Tone Mapping Algorithms for High Dynamic Range Images; University of Chinese Academy of Sciences (Institute of Optoelectronic Technology, Chinese Academy of Sciences): Beijing, China, 2019.

36. Jia, A. Research on Tone Mapping Algorithms for High Dynamic Range Images. Master’s Thesis, Southwest University of Finance and Economics, Chengdu, China, 2019.

37. Sharif, S.M.A.; Naqvi, R.A.; Biswas, M.; Kim, S. A Two-stage Deep Network for High Dynamic Range Image Reconstruction. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Nashville, TN, USA, 19–25 June 2021; pp. 550–559.

38. Nemoto, H.; Korshunov, P.; Hanhart, P.; Ebrahimi, T. Visual Attention in LDR and HDR Images. 2015. Available online: https://infoscience.epfl.ch/server/api/core/bitstreams/e9191d72-e6cd-4fc2-965e-0f91092212de/content (accessed on 18 September 2025).

39. Fan, K.; Liang, J.; Li, F.; Qiu, P. CNN Based No-Reference HDR Image Quality Assessment. Chin. J. Electron. 2021, 30, 282–288.

40. Tian, H.; Hao, T.; Zhang, H. A brightness measurement method based on high dynamic range images. Prog. Laser Optoelectron. 2019, 56, 188–193.

41. Kim, S.Y.; Oh, J.; Kim, M. Deep SR-ITM: Joint learning of super-resolution and inverse tone-mapping for 4K UHD HDR applications. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 3116–3125.

42. Chen, X.; Zhang, Z.; Ren, J.S.; Tian, L.; Qiao, Y.; Dong, C. A New Journey from SDRTV to HDRTV. In Proceedings of the IEEE International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021.

43. Kim, S.Y.; Oh, J.; Kim, M. JSI-GAN: GAN-Based Joint Super-Resolution and Inverse Tone-Mapping with Pixel-Wise Task-Specific Filters for UHD HDR Video. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11287–11295.

44. Zhang, Z.; Chen, X.; Wang, Y.; Cai, H. HDR Image Reconstruction Algorithm Based on Masked Transformer. Laser Optoelectron. Prog. 2025, 62, 409–420.

45. Bei, Y.; Wang, Q.; Cheng, Z.; Pan, X.; Yang, M.; Ding, D. A HDR Image Generation Method Based on Conditional Generative Adversarial Network. J. Beijing Univ. Aeronaut. Astronaut. 2022, 48, 45–52.

46. Xu, M.; Xie, W.; Yao, B. Research on HDR Reconstruction Method Based on CycleGAN. Intell. Comput. Appl. 2023, 13, 180–185.

| Method | CPR | ΔE | SSIM | Processing Time (ms) |
|---|---|---|---|---|
| Tanh-μ-law | 0.85 | 2.8 | 0.91 | 12.3 |
| Reinhard | 0.89 | 2.1 | 0.93 | 8.7 |
| ACES | 0.87 | 2.3 | 0.94 | 15.2 |
| Drago | 0.83 | 2.9 | 0.90 | 11.8 |

| Module Configuration | PSNR (dB) | SSIM | CIEDE2000 | DR Gain (dB) |
|---|---|---|---|---|
| Full Model (Ours) | 45.71 | 0.937 | 3.41 | 45.6 |
| w/o Multi-Scale Feature Fusion | 43.32 | 0.915 | 3.68 | 44.9 |
| w/o Attention Mechanism | 43.08 | 0.911 | 3.74 | 44.7 |
| w/o Luminance and Color-Enhancement | 41.85 | 0.908 | 3.81 | 44.3 |

| Method | PSNR (dB) m | PSNR (dB) σ | SSIM m | SSIM σ | HDR-VDP3 m | HDR-VDP3 σ |
|---|---|---|---|---|---|---|
| Deep Chain HDRI [37] | 30.86 | 2.77 | 0.9435 | 0.0369 | - | - |
| HDRUNet [10] | 41.61 | 3.36 | - | - | - | - |
| SingleHDR [5] | 32.32 | 3.27 | - | - | - | - |
| ResNet (L1) [3] | 39.82 | 2.21 | 0.9213 | 0.0497 | 8.192 | 0.441 |
| Deep SR-ITM [41] | 43.29 | 2.81 | 0.9396 | 0.0469 | 8.311 | 0.568 |
| HDRTV [42] | 37.21 | 3.04 | 0.9199 | 0.0295 | 8.569 | 0.498 |
| JSI-GAN [43] | 37.08 | 3.36 | 0.9489 | 0.0361 | 8.339 | 0.559 |
| Transformer-HDR [44] | 31.95 | 4.28 | 0.9317 | 0.0593 | - | - |
| Generative Adversarial Network [45] | 44.37 | 4.07 | 0.9692 | 0.0392 | - | - |
| CycleGAN-HDR [46] | 42.89 | 4.31 | 0.9450 | 0.0564 | - | - |
| Ours | 45.71 | 4.34 | 0.9579 | 0.0571 | 8.716 | 0.567 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gao, L.; Tong, X.; Zhang, L. Single-Image High Dynamic Range Reconstruction via Improved HDRUNet with Attention and Multi-Component Loss. Appl. Sci. 2025, 15, 10431. https://doi.org/10.3390/app151910431
