Open-Vocabulary Crack Object Detection Through Attribute-Guided Similarity Probing

Abstract

1. Introduction


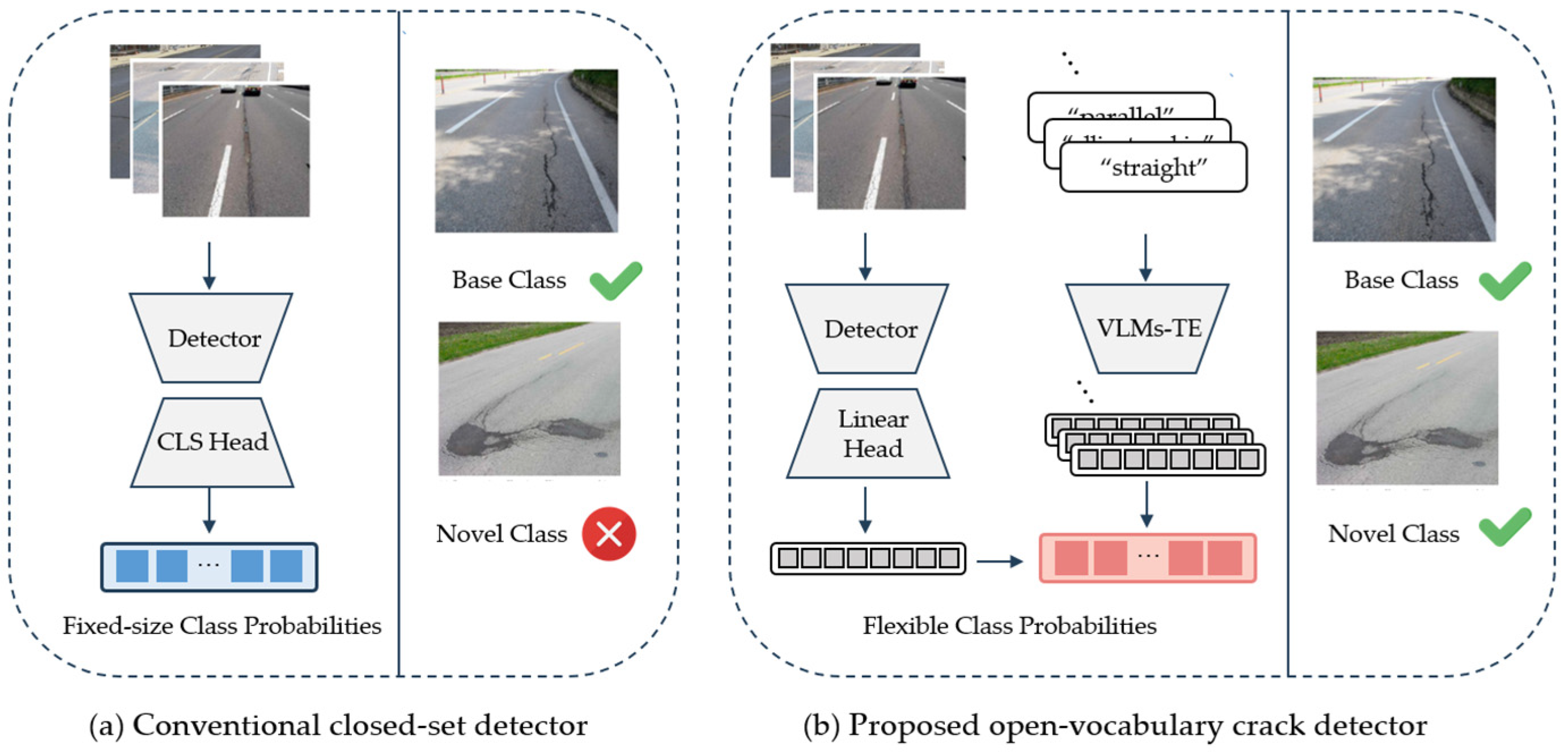

- This study addresses the need for a universal system that can adapt to diverse defect types and to changing real-world conditions, and it proposes a flexible, scalable detection framework for road maintenance. By applying open-vocabulary detection to road defect detection, it overcomes the limitations of models trained on a fixed set of classes.


- We introduce an extended annotation for the PPDD dataset in which road crack types are defined as combinations of visual attributes, allowing for representation learning that can generalize to novel classes.


- We empirically demonstrate that a model trained with attribute-based alignment detects unseen categories (novel classes) better than conventional models trained with class labels only.
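The attribute-based alignment described in these contributions comes down to scoring a detected region against text embeddings. The sketch below is a minimal illustration under stated assumptions, not the AOVCD implementation: it assumes region and text embeddings have already been produced by a CLIP-style encoder (replaced here by hand-made toy vectors), and it scores each class as the mean cosine similarity over its attribute phrases, an aggregation rule chosen only for illustration. The names `composed_class_scores` and `class_to_attrs`, the class names, and the temperature value are all hypothetical.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale vectors along the last axis to unit length."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def attribute_similarities(region_emb: np.ndarray, attr_embs: np.ndarray) -> np.ndarray:
    """Cosine similarity between one region embedding (D,) and each attribute embedding (A, D)."""
    return l2_normalize(attr_embs) @ l2_normalize(region_emb)


def composed_class_scores(region_emb, attr_embs, class_to_attrs):
    """Hypothetical rule: a class score is the mean similarity of its attribute phrases."""
    sims = attribute_similarities(region_emb, attr_embs)
    return {name: float(sims[idx].mean()) for name, idx in class_to_attrs.items()}


def softmax(scores: np.ndarray, temperature: float = 0.01) -> np.ndarray:
    """Temperature-scaled softmax; the temperature value is an assumption."""
    z = scores / temperature
    z = z - z.max()  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()


# Toy setup: three hypothetical attribute embeddings along orthogonal axes.
attr_embs = np.eye(3)
class_to_attrs = {
    "class_a": [0],     # composed of attribute 0 only
    "class_b": [1, 2],  # composed of attributes 1 and 2
    "class_c": [2],     # composed of attribute 2 only
}
region_emb = np.array([0.1, 1.0, 0.0])  # a region that mostly matches attribute 1

scores = composed_class_scores(region_emb, attr_embs, class_to_attrs)
names = list(scores)
probs = softmax(np.array([scores[n] for n in names]))
prediction = names[int(np.argmax(probs))]
print(prediction, [round(float(p), 3) for p in probs])
```

Because each class score is assembled from attribute similarities, a category without training boxes can still be scored through the attribute phrases it shares with seen categories, which is the intuition behind defining crack types as attribute combinations.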

2. Related Work

2.1. Object Detection for Pavement Defects

2.2. Open-Vocabulary Detection

3. Methods

3.1. Preliminaries

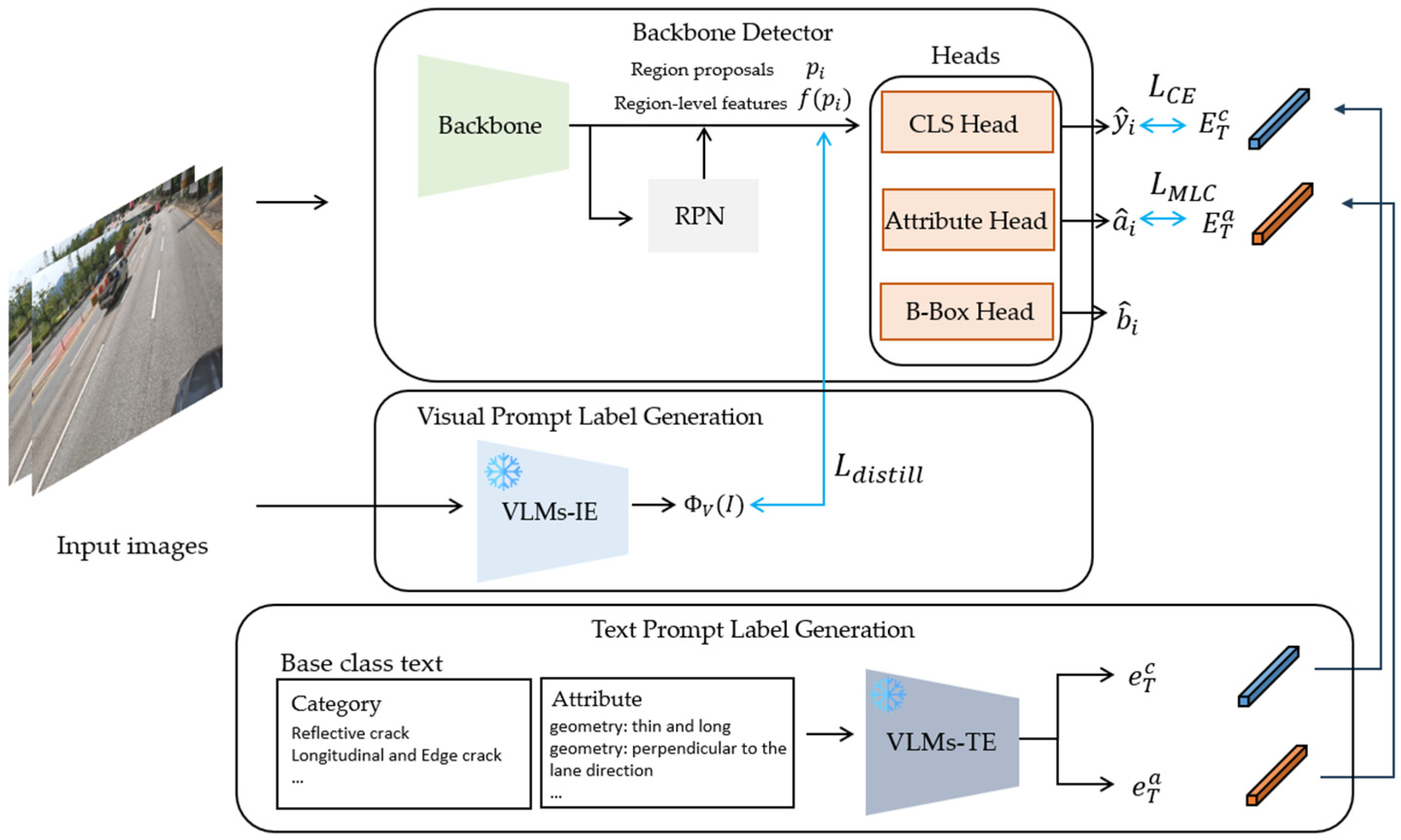

3.2. AOVCD Baseline Methods

3.3. Crack Category Alignment Loss

3.4. Crack Attribute Alignment Loss

3.5. Total Training Objective

4. Experiments

4.1. Dataset and Preprocessing

4.2. Experimental Setup

4.2.1. Experimental Details

4.2.2. Code Availability

5. Results

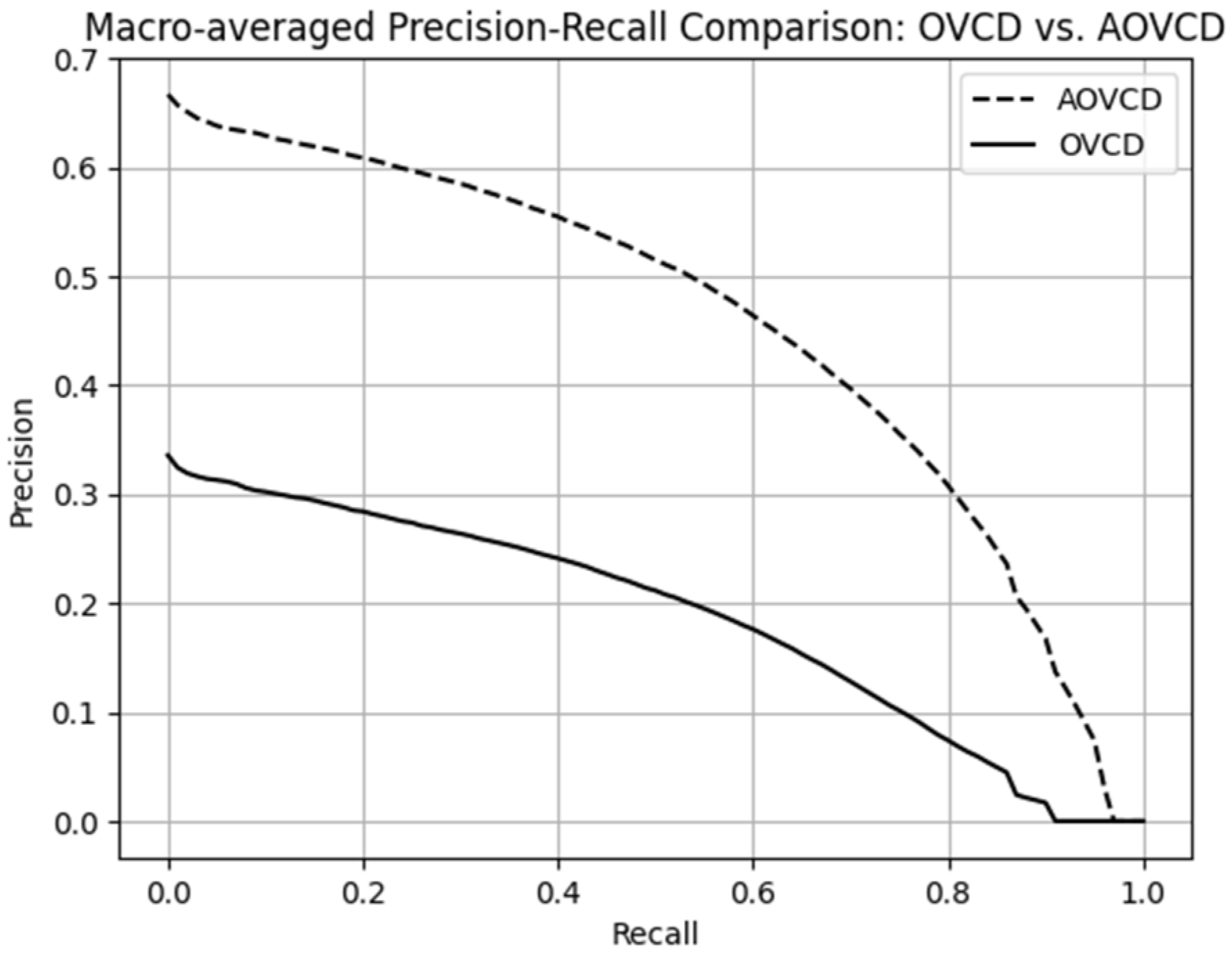

5.1. Comparison of Baseline Models with Attribute Alignment

5.2. Performance Comparison by Crack Type

5.3. Ablation Study of Attribute Detection

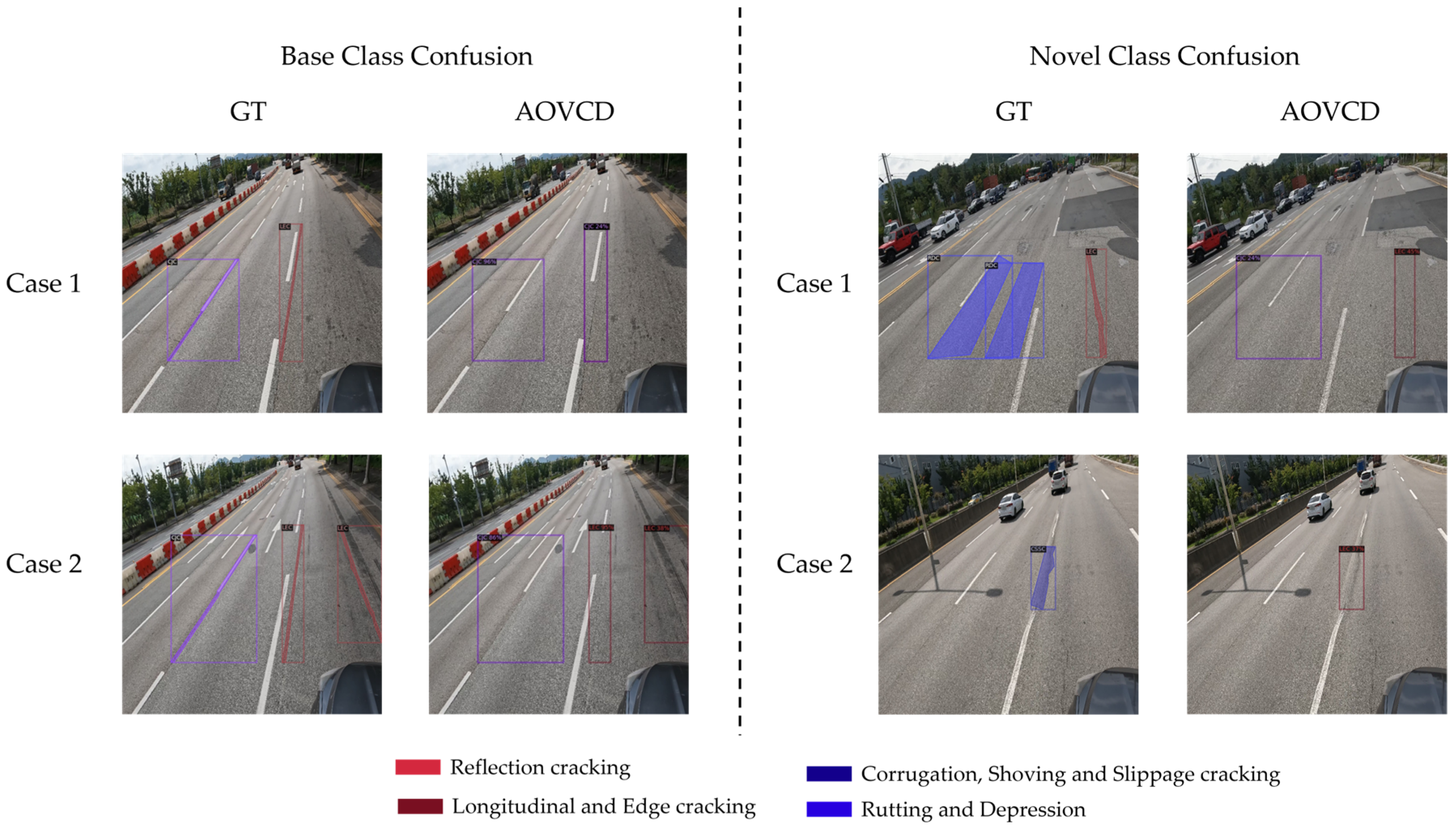

5.4. Qualitative Evaluation by Attribute Alignment

6. Discussion

6.1. Road Crack Detection and Segmentation Dataset

6.2. Limitations and Future Work

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | Reflective Crack | Longitudinal Edge Crack | Corrugation Shoving Slippage Crack | Rutting Depression Crack | Construction Joint Crack | Alligator Crack |
|---|---|---|---|---|---|---|
| Visual pattern | | | | | | |
| Number of crack instances | 124,780 | 183,094 | 20,210 | 68,434 | 109,625 | 88,571 |

| Crack | Attribute of Crack | Category (Class) |
|---|---|---|
| Reflective crack | “geometry: thin and long”, “geometry: perpendicular to the lane direction” | RC |
| Longitudinal crack, Edge crack | “geometry: thin and long”, “geometry: parallel to the lane direction”, “spatial: pavement edge” | LEC |
| Corrugation, Shoving, Slippage crack | “geometry: perpendicular to the lane direction”, “texture: wave-like”, “geometry: crescent-shape”, “geometry: curved” | CSSC |
| Rutting, Depression | “geometry: depressed”, “geometry: being lower”, “texture: widely fragmented and finely broken”, “spatial: aligned with wheel paths” | RDC |
| Construction joint crack | “geometry: straight”, “geometry: thin and long”, “geometry: parallel to the lane direction”, “spatial: aligned with lane markings” | CJC |
| Alligator crack | “geometry: polygonal”, “texture: finely cracked”, “texture: alligator skin”, “texture: finely fragmented” | AC |
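The attribute table can also be read as data. The sketch below transcribes it into a Python dictionary and checks, by exact string matching, which attribute phrases of the two novel categories (CSSC and RDC, following the base/novel split in the per-class results) already occur among the base categories. The helper names are hypothetical, and exact matching is a deliberate simplification: a text encoder may still place phrases such as “finely broken” and “finely fragmented” close together in embedding space.

```python
# Attribute phrases per crack category, transcribed from the attribute table.
CRACK_ATTRIBUTES = {
    "RC": ["geometry: thin and long", "geometry: perpendicular to the lane direction"],
    "LEC": ["geometry: thin and long", "geometry: parallel to the lane direction",
            "spatial: pavement edge"],
    "CSSC": ["geometry: perpendicular to the lane direction", "texture: wave-like",
             "geometry: crescent-shape", "geometry: curved"],
    "RDC": ["geometry: depressed", "geometry: being lower",
            "texture: widely fragmented and finely broken", "spatial: aligned with wheel paths"],
    "CJC": ["geometry: straight", "geometry: thin and long",
            "geometry: parallel to the lane direction", "spatial: aligned with lane markings"],
    "AC": ["geometry: polygonal", "texture: finely cracked", "texture: alligator skin",
           "texture: finely fragmented"],
}

BASE_CLASSES = ["RC", "LEC", "CJC", "AC"]
NOVEL_CLASSES = ["CSSC", "RDC"]


def attribute_group(phrase: str) -> str:
    """Return the group prefix of a phrase: 'geometry', 'spatial', or 'texture'."""
    return phrase.split(":", 1)[0].strip()


def seen_attributes(base_classes):
    """Union of all attribute phrases used by the given base classes."""
    return {a for c in base_classes for a in CRACK_ATTRIBUTES[c]}


def shared_with_base(novel_class, base_classes):
    """Attribute phrases of a novel class that appear verbatim in base-class annotations."""
    seen = seen_attributes(base_classes)
    return [a for a in CRACK_ATTRIBUTES[novel_class] if a in seen]


if __name__ == "__main__":
    for cls in NOVEL_CLASSES:
        print(cls, shared_with_base(cls, BASE_CLASSES))
```

Run as a script, it reports one verbatim shared phrase for CSSC (“geometry: perpendicular to the lane direction”, also used for RC) and none for RDC, so any transfer to RDC would have to rely on semantic similarity between phrases rather than exact reuse.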

| Model | Base Class | Novel Class | Total |
|---|---|---|---|
| Baseline (CLIP zero-shot) | 0.05/0.14 | 0.74/1.21 | 0.12/0.51 |
| CLIP-ViT-B32 (category) | 38.28/68.02 | 2.20/3.24 | 17.69/35.63 |
| CLIP-ViT-B32 (category + attribute) | 40.52/70.98 | 6.27/13.83 | 22.32/42.40 |

| Model | RC (Base) | LEC (Base) | CJC (Base) | AC (Base) | CSSC (Novel) | RDC (Novel) |
|---|---|---|---|---|---|---|
| Baseline (CLIP zero-shot) | 0.11 | 0.12 | 0.16 | 0.0 | 0.16 | 2.17 |
| OVCD | 40.87 | 47.20 | 67.78 | 76.30 | 1.98 | 4.03 |
| AOVCD | 46.33 | 52.06 | 70.80 | 78.58 | 15.56 | 10.83 |

| Model | Total BACC | Total AP | Geometry BACC | Geometry AP | Spatial BACC | Spatial AP | Texture BACC | Texture AP |
|---|---|---|---|---|---|---|---|---|
| Baseline (CLIP zero-shot) | 0.11 | 0.09 | 0.19 | 0.03 | 0.04 | 0.15 | 0.19 | 0.03 |
| OVCD | 1.12 | 1.08 | 0.21 | 0.05 | 0.13 | 0.24 | 2.23 | 2.20 |
| AOVCD | 41.04 | 25.73 | 38.74 | 25.59 | 45.01 | 35.54 | 43.26 | 20.12 |

| Geometry | Spatial | Texture | Base | Novel | Total |
|---|---|---|---|---|---|
| O | X | X | 68.94 | 10.07 | 40.56 |
| O | O | X | 69.11 | 9.89 | 40.59 |
| O | O | O | 70.98 | 13.83 | 42.40 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yoon, H.; Kim, S. Open-Vocabulary Crack Object Detection Through Attribute-Guided Similarity Probing. Appl. Sci. 2025, 15, 10350. https://doi.org/10.3390/app151910350

Yoon H, Kim S. Open-Vocabulary Crack Object Detection Through Attribute-Guided Similarity Probing. Applied Sciences. 2025; 15(19):10350. https://doi.org/10.3390/app151910350

Chicago/Turabian Style: Yoon, Hyemin, and Sangjin Kim. 2025. "Open-Vocabulary Crack Object Detection Through Attribute-Guided Similarity Probing" Applied Sciences 15, no. 19: 10350. https://doi.org/10.3390/app151910350

APA Style: Yoon, H., & Kim, S. (2025). Open-Vocabulary Crack Object Detection Through Attribute-Guided Similarity Probing. Applied Sciences, 15(19), 10350. https://doi.org/10.3390/app151910350