Abstract

With the rapid development of energy transition and power system informatization, the efficient integration and feature migration of multimodal power data have become critical challenges for intelligent power systems. Existing methods often overlook fine-grained semantic relationships in cross-modal alignment, leading to low information utilization. This paper proposes a multimodal power sample feature migration method based on dual cross-modal information decoupling. By introducing a fine-grained image–text alignment strategy and a dual-stream attention mechanism, deep integration and efficient migration of multimodal features are achieved. Experiments demonstrate that the proposed method outperforms baseline models (e.g., LLaVA, Qwen) in power scenario description (CSD), event localization (CELC), and knowledge question answering (CKQ), with significant improvements of up to 12.8% in key metrics such as image captioning (IC) and grounded captioning (GC). The method provides a robust solution for multimodal feature migration in power inspection and real-time monitoring, showing high practical value in industrial applications.

1. Introduction

The Internet of Things (IoT) refers to an expansive network of heterogeneous devices—ranging from sensors and actuators to embedded processors—that are interconnected through communication networks to collect, exchange, and act upon data. These systems typically follow a multi-tier architecture composed of perception/sensing layers, network gateways or edge nodes, and cloud or application platforms, enabling real-time monitoring, analytics, and intelligent control across diverse domains.

Driven by the energy revolution and the vigorous advancement of power system informatization, power systems and renewable energy play a critical role in the energy transition [1]. The development of the Energy IoT and communication technologies has enhanced the richness and reliability of power system data, while rapid information exchange provides a solid data foundation for big data applications in power systems. Power systems are embedded with various types of sensors generating massive heterogeneous data, represented in different forms such as numbers, images, text, and time series. Efficiently integrating these diverse, multi-structural, and multi-source data forms and extracting valuable information for application in various scenarios presents a significant challenge for the future development of smart power systems [2].

Multimodal power data possesses a high degree of correlation. Through comprehensive analysis, it can enable accurate, unified, and holistic perception of power systems across various links and scenarios, holding significant importance for fields such as power inspection and real-time monitoring [3,4,5]. Currently, the informatization level of China’s power systems is deepening, and comprehensive data coverage and collection have largely been achieved. Considering the convenience of data acquisition and processing complexity, mainstream existing solutions tend to leverage the fusion of textual and image information for the integrated utilization of power system data. Although the scale of data continues to expand, the density of valuable data remains relatively low, with considerable redundant information present. Furthermore, the widespread use of multiple categories of sensors leads to increasingly complex network structures in power grid systems, significantly elevating the difficulty of calibrating heterogeneous data [6,7,8].

Current deep learning-based methods for multimodal data processing can integrate different multimodal information into a unified semantic space, which can be specifically categorized into implicit alignment and explicit alignment [9,10]. Implicit alignment utilizes encoders to represent each modality’s information and employs pixel-level attention to fuse language representations directly with visual features. However, this approach overlooks the intrinsic information within the expressions and the fine-grained relationships between images and textual descriptions, resulting in suboptimal comprehensive utilization of information and difficulty in widespread application to downstream tasks [11,12]. Explicit alignment aims to use a unified codebook or prototypes to represent different modalities, serving as a bridge to facilitate cross-modal alignment. Currently, there is a lack of an efficient paired multimodal data alignment method for learning more discriminative multimodal representations.

To enhance the capability for extracting features from both image and textual data within power systems while achieving information alignment between paired modalities, this paper first proposes a fine-grained text–image alignment strategy. This strategy jointly utilizes input images and their corresponding textual features to achieve more discriminative cross-modal representations. Secondly, an image–text attention mechanism is designed to effectively model the correlations between data from different modalities and reduce redundant information. This paper applies the proposed multimodal data feature alignment framework to downstream tasks in power systems. Through the comprehensive utilization of paired text–image information, efficient operation of power system tasks is achieved.

The specific contributions of this paper are as follows:

- We design a fine-grained image–text alignment strategy that aligns hierarchical visual features from various stages of the encoder with corresponding linguistic features. This effectively captures discriminative multimodal representations.

- We propose a multimodal data feature alignment framework tailored for power systems. By implementing a dual-stream text–image attention mechanism, it achieves richer cross-modal interactions and successfully realizes multimodal power sample feature migration.

- We conduct evaluation experiments on multimodal tasks within the power industry. The results demonstrate that our proposed framework can effectively extract shared semantic information from paired multimodal data and project it into a fine-grained, common quantized latent space. This provides a practical solution for multimodal feature migration tasks in the power sector.

2. Related Work

2.1. Multimodal Sample Feature Migration Method

The multimodal sample feature migration method represents a significant research direction in the field of multimodal learning. It aims to enhance models’ understanding and processing capabilities for multimodal data by transferring features or knowledge from one modality to others via transfer learning. In recent years, substantial progress has been achieved in this domain, primarily focused on feature alignment, fusion strategies, and optimization of cross-modal interactions.

Early research predominantly centered on simple fusion strategies, such as early fusion and late fusion. However, these approaches often failed to adequately capture complex inter-modal relationships. To overcome this limitation, researchers have proposed various advanced methods. For instance, MTFR (modal transfer and fusion refinement) introduces dedicated modules for modal transfer and fusion refinement [12,13], performing feature fusion in both early and late stages of the model to enhance generalization capabilities. In addition, the proposal of Cross-Modal Generalization (CMG) tasks has advanced the learning of fine-grained unified representations for multimodal sequences.

Regarding feature alignment, recent studies have explored enhancing multimodal fusion effectiveness by optimizing inter-modal alignment mechanisms. For example, the AlignMamba method [14] significantly improves multimodal fusion performance through localized and global cross-modal alignment strategies. Additionally, certain studies [15] explicitly reduce modal discrepancies by introducing optimal transport plans to transform and align features across different temporal phases.

Despite notable advancements in multimodal sample feature migration methods, several challenges persist, such as significant representational disparities across different modal data and issues with noise and missing values in multimodal datasets. Future research will likely concentrate on developing more efficient feature alignment and fusion algorithms, as well as exploring the utilization of pre-trained models to further enhance feature migration performance.

2.2. Multimodal Unified Representation

In previous research, multimodal unified representation has been categorized into implicit and explicit approaches. Implicit multimodal unified representation aims to arrange different modalities within a shared latent space or attempts to learn a modality-agnostic encoder for information extraction across modalities [16,17]. These methods explore unified representations for various modality combinations, such as speech–text [18], video–audio [19], and vision–text [17]. To achieve these objectives, diverse methodologies have been proposed. Pedersoli et al. [19] and Sarkar et al. [20] introduced cross-modal knowledge distillation to transfer knowledge across modalities. CLIP-based approaches [21] employ contrastive loss to learn image–text consistency from large-scale paired datasets, demonstrating remarkable zero-shot capabilities in various downstream tasks.

Recently, several studies have investigated explicit multimodal unified representation using universal codebooks [22] and prototypes [23]. Duan et al. [24] applied optimal transport to map feature vectors extracted from different modalities into prototypes. Zhao et al. [22] utilized self-cross reconstruction to enhance mutual information between modalities. However, such coarse-grained alignment methods [22] are only suitable for simple tasks like retrieval and fail at fine-grained understanding in downstream tasks. More recently, the work in [25] achieved unified alignment of text and speech within a discrete token space. Liu et al. [26] employed a similar scheme to align short videos with speech/text. Nevertheless, a pivotal assumption enabling their success is the one-to-one alignment of selected modalities (e.g., text-to-speech), whereas in more general scenarios, it is challenging to guarantee complete semantic consistency between two paired modalities (e.g., unconstrained video and audio).

2.3. Cross-Modal Mutual Information Assessment

Cross-modal mutual information (MI) assessment aims to quantify the dependency between two random variables. Recent work has primarily focused on integrating MI assessment with deep neural networks [27], encompassing both MI maximization and minimization.

MI maximization seeks to learn representations that capture meaningful and useful information about input data, thereby enhancing performance across various downstream tasks [28,29]. To maximize mutual information within sequences, Contrastive Predictive Coding (CPC) [30] employs autoregressive models combined with contrastive estimation to capture long-term dependencies while preserving local features within sequences.

MI minimization attempts to reduce correlations between two random variables while retaining relevant information. This approach has been successfully applied to disentangled representation learning [31].
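As a small worked illustration of the quantity discussed in this subsection, the sketch below computes mutual information for a discrete joint distribution using the standard definition $I(X;Y)=\sum_{x,y} p(x,y)\log\frac{p(x,y)}{p(x)\,p(y)}$. The function name and the two toy probability tables are illustrative assumptions; the cited methods estimate MI with neural networks rather than from exact tables.

```python
import math


def mutual_information(joint):
    """Return I(X;Y) in nats for a joint probability table joint[x][y]."""
    px = [sum(row) for row in joint]            # marginal over y
    py = [sum(col) for col in zip(*joint)]      # marginal over x
    mi = 0.0
    for x, row in enumerate(joint):
        for y, p in enumerate(row):
            if p > 0.0:                         # 0 * log(0) is treated as 0
                mi += p * math.log(p / (px[x] * py[y]))
    return mi


# Two toy binary variables: fully independent vs. perfectly coupled.
independent = mutual_information([[0.25, 0.25], [0.25, 0.25]])
dependent = mutual_information([[0.5, 0.0], [0.0, 0.5]])
```

Independent variables give zero MI, while perfectly coupled binary variables give $\log 2$ nats. MI maximization and minimization objectives push learned representations toward one end of this range or the other.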

3. Power System-Oriented Multimodal Data Feature Alignment Framework

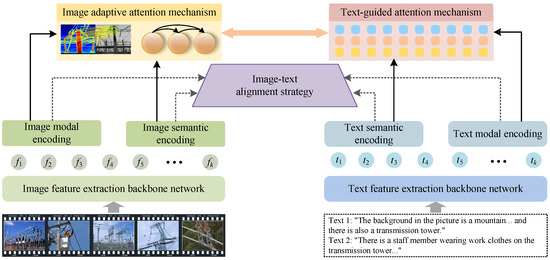

This paper addresses multimodal feature extraction and alignment from two perspectives: First, we introduce a dual cross-modal information decoupling module designed to extract fine-grained semantic information and disentangle it from modality-specific characteristics within each modality. Second, we align the extracted semantic features and modality-specific features through a fine-grained image–text alignment strategy to capture more discriminative representations. Concurrently, the framework employs an image-adaptive attention mechanism and a text-guided attention mechanism to enhance critical information extraction, strengthen structural-level features, and improve semantic alignment in cross-modal analysis using weakly-paired data. The design process of our network is illustrated in Figure 1.

Figure 1.

A multimodal data feature alignment framework for power systems. It consists of image and text feature extraction modules, semantic and modal encoders, and two attention mechanisms (image-adaptive and text-guided) integrated into a fine-grained alignment strategy.

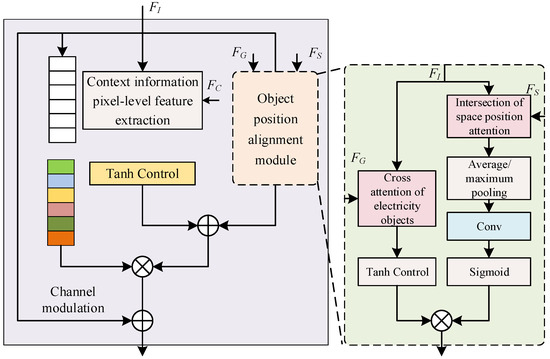

Unlike conventional image–text alignment methods employed in prior approaches, we introduce a novel multimodal fusion strategy from a fine-grained alignment perspective to capture more discriminative representations. Specifically, given the visual features $F_I \in \mathbb{R}^{C \times H \times W}$ and linguistic features $F_C \in \mathbb{R}^{D \times N_C}$, $F_G \in \mathbb{R}^{D \times N_G}$, $F_S \in \mathbb{R}^{D \times N_S}$, our image–text alignment strategy facilitates the deep interaction of features between these visual and linguistic representations. Here, $C$, $H$, and $W$ denote the channel number, height, and width of the visual feature map, respectively. $D$ represents the dimension of word embeddings. $N_C$, $N_G$, and $N_S$ indicate the sequence lengths of contextual expressions, ground-truth targets, and spatial positional expressions, respectively. The detailed structural implementation is illustrated in Figure 2.

Figure 2.

Fine-grained image–text alignment strategy. The module integrates object position alignment, context alignment, and channel modulation, using cross-attention and gating mechanisms to enhance semantic consistency between visual and textual features.

The core components of the fine-grained image–text alignment strategy consist of an object position alignment module, context alignment, and channel modulation. We propose the object position alignment module to implement deep interaction between power objects/spatial positions and visual representations. This module achieves precise alignment of object-related and spatial features, enabling the model to capture more accurate relationships between objects and their locations within images, thereby enhancing referring segmentation performance. The detailed structure of this module is illustrated in Figure 2.

As illustrated in Figure 2, the fine-grained alignment module is composed of two synergistic sub-branches. The left sub-block processes semantic alignment, where the “Tanh Control” module refers to a non-linear gating operation designed to enhance feature expressiveness. It includes a linear transformation followed by a Tanh activation, which modulates the contextual features before channel recalibration. To clarify, this is not a control unit in the hardware sense but a semantic gating mechanism that adjusts pixel-level features. The right-hand side represents spatial alignment, incorporating both average and max pooling, followed by a 1×1 convolution and Sigmoid nonlinearity to compute spatial attention weights, which are then used to refine image features guided by spatial text cues. Specifically, the dual-branch architecture is constructed with a power object branch and a spatial position branch. The primary element of the power object branch is a power object cross-attention mechanism that integrates visual features, $F_I$, and textual features, $F_G$. Here, we utilize $F_I$ as the Query and $F_G$ as the Key and Value to implement feature fusion. This implementation can be formally defined as

$$F_{IG} = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{C}}\right) V, \qquad Q = F_I W_q,\quad K = F_G W_k,\quad V = F_G W_v .$$

Formally, for each row vector, $z$, in $Q K^{\top}/\sqrt{C}$, the softmax is computed as

$$\mathrm{softmax}(z)_i = \frac{\exp(z_i)}{\sum_{j} \exp(z_j)},$$

where $W_q$, $W_k$, and $W_v$ represent linear projections of the corresponding Query, Key, and Value, respectively. $C$ denotes the dimensionality of the Query vector.
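The cross-attention described above, with visual features as the Query and textual features as the Key and Value, can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions rather than the authors' implementation: features are stored as lists of rows (one row per pixel or per word), a single attention head is used, and the toy sizes and identity projection matrices are hypothetical.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one row of attention scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(visual, text, w_q, w_k, w_v):
    """visual: (HW, C) pixel rows; text: (N, D) word rows.

    Returns the (HW, N) attention weights and the (HW, C) fused features.
    """
    q = matmul(visual, w_q)                     # queries come from the image
    k = matmul(text, w_k)                       # keys come from the text
    v = matmul(text, w_v)                       # values come from the text
    scale = math.sqrt(len(q[0]))                # sqrt of the query dimension
    k_t = [list(col) for col in zip(*k)]        # transpose of k
    scores = [[s / scale for s in row] for row in matmul(q, k_t)]
    weights = [softmax(row) for row in scores]
    return weights, matmul(weights, v)


# Toy sizes (hypothetical): 3 pixels with C = 2, 2 words with D = 2.
identity = [[1.0, 0.0], [0.0, 1.0]]
visual = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
text = [[2.0, 0.0], [0.0, 2.0]]
weights, fused = cross_attention(visual, text, identity, identity, identity)
```

Each pixel row attends most strongly to the word whose projected embedding it matches best, and the third pixel, which matches both words equally, receives an even mixture of the two value vectors.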

Additionally, the image–language features are further modulated by a Tanh gating mechanism to provide enhanced local details, thereby generating the output for the ground-truth object branch. The computation is formally defined as

$$F_G' = \mathrm{Tanh\_Gate}(F_{IG}) \odot F_{IG}, \qquad \mathrm{Tanh\_Gate}(x) = \mathrm{Tanh}\big(W_2\,\mathrm{ReLU}(W_1 x + b_1) + b_2\big),$$

where $F_{IG}$ denotes the cross-attention output of this branch, $\odot$ is element-wise multiplication, and $W_1$, $W_2$ are learnable linear projection matrices. $b_1$ and $b_2$ are bias terms. ReLU introduces non-linearity for feature activation, and Tanh serves as a final gating activation to suppress noise and retain information. Tanh_Gate(.) denotes a sequential operation comprising linear projection, ReLU activation, linear projection, and Tanh activation. The cross-attention output in this stage serves as a fine-grained modulation signal between visual and textual features. Using Tanh allows the gate to produce both positive and negative scaling values (in the range $(-1, 1)$), which enables the model to not only enhance but also suppress irrelevant local channels in the fused representation. In contrast, a Sigmoid gate (with outputs in $(0, 1)$) can only perform soft activation and lacks the capacity to inhibit noisy or redundant signals, which is often necessary in complex inspection scenes with background clutter. We also considered residual connections and linear projections as alternatives. However, residuals tend to preserve all information (including noise), and linear projections lack dynamic channel-level selectivity. In contrast, Tanh_Gate(.) provides data-dependent, bi-directional feature modulation, which empirically leads to more discriminative attention maps and stronger grounding results in early-stage prototypes.
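The difference between a Tanh gate and a Sigmoid gate can be seen on a single feature vector. The sketch below follows the stated sequence (linear projection, ReLU, linear projection, final activation); the weights, the input vector, and the choice to multiply the gate with the input element-wise are hypothetical and chosen only so the contrast is visible.

```python
import math


def linear(x, weight, bias):
    """Compute weight @ x + bias for one feature vector x."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weight, bias)]


def gate_logits(x, w1, b1, w2, b2):
    """Linear -> ReLU -> Linear, shared by both gate variants."""
    hidden = [max(0.0, h) for h in linear(x, w1, b1)]
    return linear(hidden, w2, b2)


def tanh_gate(x, w1, b1, w2, b2):
    """Gate values in (-1, 1): channels can be amplified or sign-flipped."""
    return [math.tanh(g) for g in gate_logits(x, w1, b1, w2, b2)]


def sigmoid_gate(x, w1, b1, w2, b2):
    """Gate values in (0, 1): channels can only be attenuated."""
    return [1.0 / (1.0 + math.exp(-g)) for g in gate_logits(x, w1, b1, w2, b2)]


# Hypothetical parameters: the second output channel receives a negative logit.
w1, b1 = [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]
w2, b2 = [[1.0, 0.0], [0.0, -1.0]], [0.0, 0.0]
x = [2.0, 3.0]

t_gate = tanh_gate(x, w1, b1, w2, b2)
s_gate = sigmoid_gate(x, w1, b1, w2, b2)
# Assumed usage: the gate rescales the fused features element-wise.
t_out = [g * v for g, v in zip(t_gate, x)]
s_out = [g * v for g, v in zip(s_gate, x)]
```

With these values the Tanh gate assigns the second channel a negative scale, so its contribution is inverted, whereas the Sigmoid gate can only shrink it toward zero.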

The spatial position branch aims to capture spatial priors guided by positional descriptions, $F_S$, in relation to the original visual features, $F_I$. Specifically, $F_I$ and $F_S$ are included in a cross-attention module where $F_I$ serves as the Query while $F_S$ is the Key and Value:

$$F_{IS} = \mathrm{softmax}\!\left(\frac{(F_I W_q^{S})(F_S W_k^{S})^{\top}}{\sqrt{C}}\right) F_S W_v^{S},$$

where $F_I$ is the visual features as the Query input. $F_S$ is the spatial positional expression features as the Key and Value. $W_q^{S}$, $W_k^{S}$, and $W_v^{S}$ are learnable projection matrices for the Query, Key, and Value, respectively.

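Subsequently, the cross-attention output of the spatial position branch, $F_{IS}$, is processed through a series of layers to compute spatial attention, implementing average pooling, max pooling, 1 × 1 convolution, and Sigmoid nonlinearity. This is mathematically described as

$$F_{SP} = \sigma\Big(\mathrm{Conv}_{1\times 1}\big([\mathrm{AvgPool}(F_{IS});\ \mathrm{MaxPool}(F_{IS})]\big)\Big),$$

where $\sigma$ is the Sigmoid function, $[\cdot\,;\cdot]$ denotes concatenation, and $F_{SP}$ denotes the output of this spatial position branch, representing multimodal spatial priors integrating visual and textual features. $F_{SP}$ is further integrated with the ground-truth object features, $F_G'$ (the output of the power object branch), to produce the final output:

$$F_{GS} = F_G' \otimes F_{SP}.$$

Here, ⊗ signifies matrix multiplication. The resulting features represent refined allocation functionality that simultaneously incorporates both power equipment semantics and their corresponding spatial attentions.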
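The spatial attention step (average pooling, max pooling, 1 × 1 convolution, Sigmoid) can be sketched as below. Two points are assumptions not stated in the text: pooling is taken across the channel dimension at each pixel, as in CBAM-style spatial attention, and the 1 × 1 convolution over the two pooled maps is written as a per-pixel weighted sum with hypothetical weights `w_avg` and `w_max`.

```python
import math


def spatial_attention(features, w_avg, w_max, bias=0.0):
    """features: C x H x W nested lists. Returns an H x W map with values in (0, 1)."""
    channels = len(features)
    height, width = len(features[0]), len(features[0][0])
    attention = []
    for i in range(height):
        row = []
        for j in range(width):
            values = [features[c][i][j] for c in range(channels)]
            avg_pooled = sum(values) / channels   # average over channels
            max_pooled = max(values)              # maximum over channels
            # A 1x1 convolution on the two pooled maps is a per-pixel weighted sum.
            logit = w_avg * avg_pooled + w_max * max_pooled + bias
            row.append(1.0 / (1.0 + math.exp(-logit)))
        attention.append(row)
    return attention


# Toy feature map (hypothetical): C = 2, H = 2, W = 2.
features = [
    [[0.0, 4.0], [0.0, 0.0]],
    [[0.0, 2.0], [0.0, -2.0]],
]
attention = spatial_attention(features, w_avg=1.0, w_max=1.0)
```

Pixels with strong responses in any channel receive weights close to 1, while pixels with weak or negative responses are pushed below 0.5, which is how the map highlights the regions referred to by the positional text.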

In addition to object position alignment, we introduce a context alignment mechanism to capture global relationships between image and linguistic features. Compared to sentence fragments for power objects and spatial locations, the original referring expressions are treated as contextual descriptions containing richer contextual information. Given linguistic context features, $F_C$, and visual features, $F_I$, pixel-wise attention is employed to combine these two representations:

$$F_{IC} = \mathrm{PixelAttention}(F_I, F_C).$$

Here, the pixel attention module implements per-pixel attention that aligns visual representations with linguistic features from the original descriptions. Similarly to ground-truth object cross-attention and spatial position cross-attention, we utilize $F_I$ as the Query and $F_C$ as the Key/Value for the pixel attention. Furthermore, the image–language features are modulated by a Tanh gate:

$$F_C' = \mathrm{Tanh\_Gate}(F_{IC}) \odot F_{IC}.$$

After obtaining the object position output, $F_{GS}$, and the context output, $F_C'$, the multimodal features, $F_M$, are derived by combining them.

To facilitate cross-channel information exchange, we propose a channel modulation operation to refine the extracted multimodal features, enhancing the representational discriminability. Specifically, channel dependencies are captured via

$$s = \sigma\big(W_e\,\delta(W_r F_M)\big),$$

where the weight matrices $W_r$ and $W_e$ perform channel reduction and expansion, respectively, $\delta$ denotes the ReLU function, and $\sigma$ represents the Sigmoid activation.

The channel weights $s$ then recalibrate multimodal features using the input $F_M$:

$$\hat{F}_M = s \odot F_M.$$

Through fine-grained image–text alignment, $\hat{F}_M$ effectively integrates visual features with hierarchical textual representations covering context, power objects, and spatial positions. Compared to existing methods, our network extracts more discriminative features, enabling more accurate pixel-level segmentation results.
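The channel modulation step (channel reduction, ReLU, channel expansion, Sigmoid, then recalibration) can be sketched as follows. The text does not state how the per-channel descriptor is formed, so this sketch assumes global average pooling as in squeeze-and-excitation blocks; the function names, the toy feature map, and the weight values are likewise hypothetical.

```python
import math


def global_average_pool(features):
    """Reduce a C x H x W map to one mean value per channel (assumed descriptor)."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in features]


def channel_weights(descriptor, w_reduce, w_expand):
    """Sigmoid(W_expand . ReLU(W_reduce . z)) for a length-C descriptor z."""
    reduced = [max(0.0, sum(w * z for w, z in zip(row, descriptor))) for row in w_reduce]
    expanded = [sum(w * r for w, r in zip(row, reduced)) for row in w_expand]
    return [1.0 / (1.0 + math.exp(-e)) for e in expanded]


def recalibrate(features, weights):
    """Scale every channel of a C x H x W map by its channel weight."""
    return [[[weights[c] * v for v in row] for row in ch] for c, ch in enumerate(features)]


# Toy setup (hypothetical): C = 2, reduced to 1 hidden channel, then expanded back.
features = [[[1.0, 3.0]], [[2.0, 2.0]]]     # C = 2, H = 1, W = 2
w_reduce = [[1.0, 1.0]]                     # 1 x 2 reduction
w_expand = [[1.0], [-1.0]]                  # 2 x 1 expansion

descriptor = global_average_pool(features)
weights = channel_weights(descriptor, w_reduce, w_expand)
refined = recalibrate(features, weights)
```

In this toy case the first channel is kept almost unchanged while the second is nearly suppressed, illustrating how the learned weights emphasize informative channels of the fused multimodal features.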

4. Experiments and Analysis

4.1. Datasets

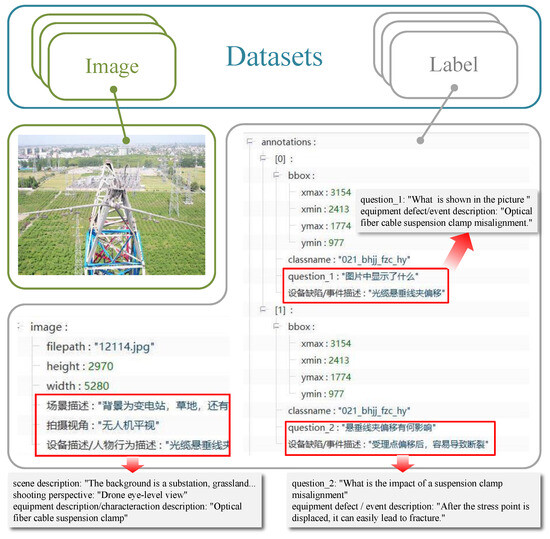

The dataset employed in this study was collected from transmission–substation–safety monitoring scenarios within a regional power grid. It comprises rich multimodal information, covering diverse annotation formats including scene descriptions, professional knowledge Q&A, and instance detection. The dataset was formatted in JSON to facilitate subsequent data processing and analysis.

The primary components of the dataset include the following three aspects:

- Scene Descriptions: Detailed textual characterizations of operational environments.

- Professional Knowledge Q&A: Expert-curated question–answer pairs on grid operations.

- Instance Detection: Annotated visual targets with bounding boxes and defect labels.
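To make the three annotation types concrete, the sketch below parses one JSON record and counts what it contains. The record is entirely hypothetical: the field names (`scene_description`, `qa`, `instances`, `bbox`) and all values are placeholders chosen for illustration and are not taken from the dataset's actual schema.

```python
import json

# Hypothetical record: every field name and value here is illustrative only.
record_text = """
{
  "image": "example.jpg",
  "scene_description": "A worker stands on a ladder next to a transformer.",
  "qa": [
    {"question": "Is the ladder attended?", "answer": "No."}
  ],
  "instances": [
    {"category": "unattended_ladder", "bbox": [120, 80, 260, 400]}
  ]
}
"""


def summarize(record):
    """Report which annotation types are present in one record."""
    return {
        "has_description": bool(record.get("scene_description")),
        "num_qa": len(record.get("qa", [])),
        "num_instances": len(record.get("instances", [])),
    }


record = json.loads(record_text)
summary = summarize(record)
```

A per-record summary of this kind is a simple way to check that each image carries all three annotation types before it is used for training or evaluation.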

Figure 3 shows a sample transmission scenario together with its multimodal annotations.

Figure 3.

A sample from the multimodal transmission–substation–safety monitoring dataset. The annotations include scene descriptions, professional knowledge Q&A, and instance detection with bounding boxes.

Specifically, the instance detection data within the dataset comprises the following primary categories:

- Safety Monitoring—Operational Violations: Includes violations related to fall protection for aerial work, safety warnings at job sites, and personal protective equipment compliance. Examples encompass failure to wear safety harnesses during high-altitude operations, unattended ladder work, and uncovered or unguarded openings at work sites.

- Substation Equipment: Covers inspection data for various substation devices such as GIS/SF6 pressure gauges, lightning arresters, transformers, and reactors.

- Transmission Equipment: Includes detection data for transmission components including protective fittings, conductors/ground wires, and bird-deterrence facilities.

In subsequent experiments, this multimodal dataset was utilized to validate the effectiveness and performance of our proposed feature migration method based on dual cross-modal information decoupling. Through decoupling and migrating multimodal information within the dataset, we aimed to enhance model performance in power sample feature extraction and classification tasks.

4.2. Experimental Setup

We evaluated the proposed method’s effectiveness through three downstream tasks:

- Cross-modal Scenario Description (CSD): Each image contained corresponding scene descriptions. We assessed model capability by evaluating scene description accuracy.

- Cross-modal Event Localization/Classification (CELC): We evaluated the method’s capability in power defect detection and domain knowledge by localizing and classifying fine-grained events.

- Cross-modal Knowledge Quiz (CKQ): We assessed whether models effectively migrate features between image and text modalities for power knowledge question answering.

4.3. Experimental Evaluation

We employed multiple metrics to evaluate multimodal feature understanding and migration capabilities across the three tasks:

- Image Captioning (IC): We used GPT-4 as an objective benchmark to evaluate caption adequacy, linguistic fluency, and accuracy against reference answers. Scores were in the range 0–10 with comprehensive evaluation explanations.

- Grounded Captioning (GC): GPT-4 evaluated category recall, coordinate deviation, fluency, and the accuracy of responses against references. Scores were in the range 0–10 with detailed explanations.

- Referential Expression Comprehension (REC): GPT-4 assessed false negatives/positives, coordinate deviation, linguistic fluency, and informativeness. Scores (0–10, higher being better) included comprehensive explanations.

- Referential Expression Generation (REG): GPT-4 evaluated (i) correct category identification in specified regions; (ii) correspondence between generated and target categories; and (iii) linguistic fluency and informativeness. Scores were in the range 0–10 (higher being better) with full evaluation explanations.

Our model is built upon ViT-Tiny as the visual encoder (∼5.7 M parameters, ∼1.3 GFLOPs for 224 × 224 inputs). With the addition of our dual-stream decoupling, attention-based alignment modules, and lightweight fusion heads, the total parameter count remains under ∼25 M, with FLOPs below 5 GFLOPs per sample. In contrast, LLaVA (∼7 B parameters) and Qwen-VL (>14 B) are orders of magnitude larger, as they use large-scale vision-language backbones. Our architecture is designed for modularity and efficiency. Despite having multiple attention components, they operate on compact intermediate features, not raw high-res inputs. The observed performance gain arises from structural enhancements (semantic decoupling and fine-grained alignment) rather than brute-force scaling. The design is thus suitable for downstream adaptation, and we envision real-time potential through model distillation or partial encoder freezing.

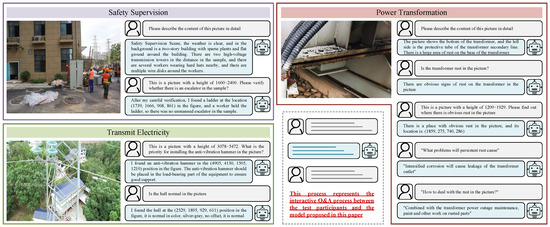

Figure 4 demonstrates the multimodal Q&A interaction results of our method in power safety monitoring and equipment analysis. The left image displays the model’s analytical output for a substation scene, where the model successfully detected and annotated critical equipment and potential anomalies within the image. Simultaneously, it generated a textual scene description summarizing equipment conditions and abnormalities.

Figure 4.

Qualitative experimental results in safety supervision and equipment monitoring. The model detected objects, identified anomalies, and answered professional questions by integrating image evidence with textual knowledge.

The right section of Figure 4 illustrates the multimodal question-answering capability of our method. When users pose professional questions about the scene (e.g., inquiring about fault causes or mitigation measures), the model accurately responds by integrating image information with its embedded knowledge base. These responses include causal explanations and recommended action plans. Visualization results confirm that the model’s attention maps correspond precisely to actual fault locations, indicating that cross-modal decoupling effectively focuses the model on semantically relevant regions, thereby enhancing interpretability and reliability. During human–machine interactions, responses demonstrate robust adaptation to multimodal data by simultaneously referencing visual evidence and domain expertise. The model handles all interaction modes seamlessly, including pure vision tasks (defect localization), text-based Q&A (operational guidelines), and cross-modal queries (“Explain the overheating near bushing A in image X”). This adaptability enables the model to function as an intelligent assistant for field engineers, delivering real-time monitoring, diagnostics, and decision support in complex operational environments.

We further conducted quantitative experiments on the proposed framework, as detailed in Table 1. These experiments evaluated the performance of our dual cross-modal information decoupling approach for multimodal power sample feature migration using the transmission–substation–safety monitoring dataset. The evaluation employed the aforementioned four key metrics to assess model performance across diverse tasks. Compared to baseline methods (e.g., LLaVA, Qwen), our feature decoupling and migration strategy significantly enhanced the model’s generalization capability in multimodal tasks, particularly in scene understanding, equipment fault detection, and knowledge-based Q&A. For example, in substation corrosion detection tasks, our method not only accurately localized corroded regions but also provided professional diagnostic insights by integrating textual knowledge, showing potential to enhance the interpretability and decision support capabilities of intelligent monitoring systems. The results indicate that our approach improves the robustness of cross-modal feature migration and has high practical value in power industry scenarios.

Table 1.

Quantitative experiments: CSD, CELC, and CKQ task evaluation on power datasets.

The experimental results reveal that compared to traditional cross-modal alignment methods, our method achieved an IC score of 5.3, indicating its ability to generate semantically accurate descriptions aligned with power scenarios. Regarding the GC metric, our approach outperformed LLaVA by 12.8%, demonstrating that the decoupling strategy effectively enhances multimodal feature consistency and consequently improves visual–textual joint task performance. For cross-modal retrieval tasks, our method attained an REC score of 6.4, highlighting its proficiency in precise cross-modal matching. On the REG metric, our approach showed a 10.8% improvement over Qwen, confirming its superior capability in fault detection and equipment region localization. This enables more precise identification of critical areas such as corrosion and cable aging while providing actionable explanations.

To further validate the contributions of individual components in our methodology, ablation studies examining different alignment strategies and attention mechanisms were designed, with specific results presented in Table 2 and Table 3.

Table 2.

Impact of different alignment strategies on multimodal task performance.

Table 3.

Impact of different attention mechanisms on multimodal task performance.

Table 2 reports model performance across the CSD, CELC, and CKQ tasks under different alignment strategies. The baseline without alignment modules showed poor performance across all metrics, particularly in the GC and REC scores, reflecting deficient cross-modal correlation modeling capabilities. Incorporating coarse-grained alignment improved GC and REC by 0.6 points each, indicating partial enhancement of multimodal fusion effectiveness. However, coarse-grained methods still suffered from inadequate detail representation. Our proposed fine-grained image–text alignment strategy boosted GC and REC to 6.0 and 6.1 even without decoupling, confirming that fine-grained feature alignment more accurately captured multimodal semantic relationships. The complete method combining dual cross-modal information decoupling with fine-grained alignment achieved optimal results, elevating IC, GC, REC, and REG to 5.3, 6.5, 6.4, and 4.1, respectively, validating the overall framework design.

Furthermore, this paper analyzes the effectiveness of attention mechanisms, with results presented in Table 3. Compared to the baseline without attention mechanisms, individually incorporating either image-adaptive attention or text-guided attention improved multimodal task performance to varying degrees. This demonstrates their positive roles in enhancing interactions between visual and linguistic information. Notably, text-guided attention yielded particularly significant gains in the REG metric, highlighting the critical importance of textual information in region localization and description tasks.

It is noteworthy that when the dual-stream attention mechanism was jointly applied, the model achieved optimal performance across all metrics: IC = 5.3; GC = 6.5; REC = 6.4; and REG = 4.1. These results validate the synergistic enhancement effect of the dual-stream attention mechanism in multimodal information fusion and feature migration, further advancing the model’s capability to comprehend and express multimodal data within complex power scenarios.

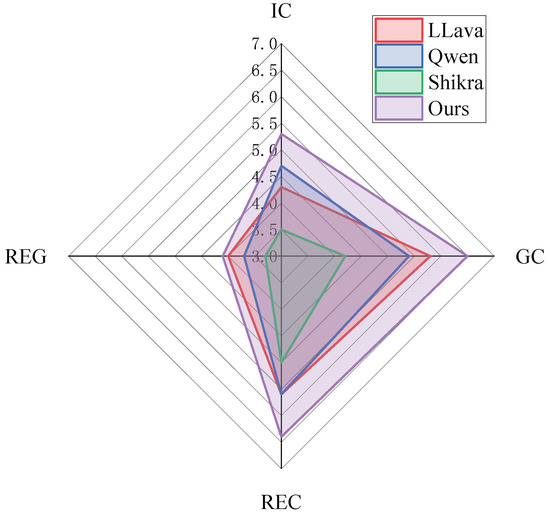

To more clearly demonstrate the advantages of our method, we visualize the performance of various approaches across the four key metrics in Figure 5. The radar chart visualization reveals that our method (purple area) outperformed all baselines—including LLaVA, Qwen, and Shikra—across the IC, GC, REC, and REG metrics, demonstrating the superiority of our proposed dual cross-modal information decoupling approach in multimodal tasks.

Figure 5. Radar chart comparison of the IC, GC, REC, and REG metrics. The proposed method outperformed baseline models (LLaVA, Qwen, Shikra), demonstrating superior multimodal feature alignment and task performance.

Specifically:

- IC Metric: Our method achieved significantly higher scores than alternatives, indicating superior precision in generating image descriptions highly aligned with power scenarios and more effective extraction/expression of critical information.

- GC Metric: Substantial improvements over LLaVA/Qwen/Shikra confirmed that our decoupled cross-modal alignment mechanism enabled more effective capture of semantic consistency across modalities, enhancing model comprehension.

- REC Metric: Dominant performance signified enhanced precision in semantic matching for cross-modal retrieval tasks, improving information reliability.

- REG Metric: The largest performance gap demonstrated higher accuracy in region alignment tasks (e.g., fault region localization), directly boosting target detection and failure analysis capabilities in power monitoring scenarios.

5. Discussion

In this work, we propose a dual cross-modal information decoupling framework for fine-grained multimodal feature alignment in power system scenarios. Unlike many existing methods that perform fusion at a single latent level or rely on implicit attention (e.g., CLIP, ALBEF, BLIP), our model explicitly separates modality-specific and semantic representations through a dual-branch architecture. This structural decoupling allows for hierarchical abstraction of semantic content while preserving low-level visual and linguistic cues, which is critical for tasks involving both global descriptions and localized reasoning. In contrast to codebook-based methods, which often rely on static prototype spaces, our framework enables dynamic alignment between modalities across multiple semantic layers, offering improved flexibility and robustness in complex industrial scenes.
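The separation into semantic and modality-specific representations can be illustrated with a toy sketch. It assumes each branch is a linear projection and that alignment is measured by cosine similarity between the semantic parts only. The projection matrices, feature values, and the `decouple` helper are hypothetical, and the framework's actual branches and losses are not reproduced here.

```python
import math


def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, vec)) for row in matrix]


def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def decouple(feature, semantic_proj, specific_proj):
    """Return the (semantic, modality-specific) parts from two linear branches."""
    return matvec(semantic_proj, feature), matvec(specific_proj, feature)


# Hypothetical fixed projections; in a trained model these would be learned,
# and each modality would typically have its own pair of branches.
SEMANTIC_PROJ = [[1.0, 0.0, 0.0, 0.0],
                 [0.0, 1.0, 0.0, 0.0]]
SPECIFIC_PROJ = [[0.0, 0.0, 1.0, 0.0],
                 [0.0, 0.0, 0.0, 1.0]]

# Made-up features for one image-text pair describing the same scene.
image_feature = [0.9, 0.1, 0.7, -0.2]
text_feature = [0.8, 0.2, -0.5, 0.6]

img_sem, img_spec = decouple(image_feature, SEMANTIC_PROJ, SPECIFIC_PROJ)
txt_sem, txt_spec = decouple(text_feature, SEMANTIC_PROJ, SPECIFIC_PROJ)

# Alignment is computed on the semantic parts only; the modality-specific
# parts are left free to differ between image and text.
semantic_alignment = cosine(img_sem, txt_sem)
alignment_loss = 1.0 - semantic_alignment
specific_similarity = cosine(img_spec, txt_spec)
print(f"semantic alignment: {semantic_alignment:.3f}")
print(f"modality-specific similarity: {specific_similarity:.3f}")
```

The point of the design is that an alignment objective applied to the semantic branch does not force low-level visual or linguistic cues to match, which is why the modality-specific parts in this example can remain dissimilar while the semantic parts align closely.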

From a practical point of view, our design is effective in power inspection tasks that require precise grounding and semantic matching, even under weak supervision or noisy visual conditions. The modular structure and low parameter count (under 25 M) also highlight the model’s potential for deployment in edge-based or real-time applications.
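As a rough check on the edge-deployment claim, the parameter bound can be converted into an upper bound on weight storage. The sketch below assumes common numeric precisions and counts weights only; activations, buffers, and runtime overhead are excluded, and the helper name `weight_footprint_mb` is illustrative.

```python
def weight_footprint_mb(num_params, bytes_per_param):
    """Storage needed for the weights alone, in megabytes (1 MB = 10**6 bytes)."""
    return num_params * bytes_per_param / 1e6


PARAM_BUDGET = 25_000_000  # upper bound from the text ("under 25 M")
PRECISIONS = {"float32": 4, "float16": 2, "int8": 1}  # bytes per parameter

footprints = {name: weight_footprint_mb(PARAM_BUDGET, size)
              for name, size in PRECISIONS.items()}

for name, megabytes in footprints.items():
    print(f"{name}: at most {megabytes:.0f} MB of weights")
```

Under these assumptions the weights occupy at most 100 MB in 32-bit floating point, 50 MB in 16-bit, and 25 MB with 8-bit quantization.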

However, several limitations remain. Our current training data are limited to one regional power grid system, and generalization across domains has not yet been formally evaluated. Furthermore, the absence of large-scale pretraining may limit performance on more open-ended tasks compared to foundation models. Future work will focus on extending the dataset to cover geographically diverse systems, integrating structured domain knowledge (e.g., power equipment ontologies), and developing model compression strategies for real-world deployment.

6. Conclusions

This paper proposes a dual cross-modal information decoupling approach to address challenges in multimodal feature migration for power systems. By designing a fine-grained image–text alignment strategy and dual-stream attention mechanism, we effectively resolve cross-modal semantic inconsistencies and redundant data issues. Experiments demonstrate that our method outperformed existing models in power scenario description, equipment fault localization, and knowledge-based question answering tasks, achieving particularly notable performance on metrics such as image captioning (IC = 5.3) and grounded captioning (GC = 6.5). Visualization results further validate the model’s high interpretability and reliability in safety monitoring and substation equipment inspection. Future research may explore real-time migration optimization for multimodal data in dynamic power operation scenarios and extend applications to more complex industrial contexts, ultimately advancing the evolution of intelligent power systems.

Author Contributions

Conceptualization, Z.C., H.Y. and J.D.; Methodology, Z.C., H.Y., J.D. and Y.C.; Software, Z.C., H.Y., J.D. and Y.C.; Validation, Z.C., H.Y., Y.C. and S.Z.; Formal analysis, Z.C., H.Y. and S.Z.; Investigation, Y.Z. and S.Z.; Data curation, Z.C., J.D., Y.Z., Y.Y. and S.Z.; Writing – original draft, Z.C., H.Y., Y.Z. and Y.C.; Writing – review & editing, Y.Z., Y.C. and Y.Y.; Visualization, Y.Z., Y.C. and Y.Y.; Supervision, J.D. and Y.Y.; Project administration, H.Y., J.D., Y.Z., Y.Y. and S.Z.; Funding acquisition, Z.C., H.Y. and J.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Science and Technology Project of the State Grid Corporation Headquarters (Grant No. 5700-202490330A-2-1-ZX).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Acknowledgments

The authors would like to thank the editorial board and reviewers for their help in improving this paper.

Conflicts of Interest

Authors Zhenyu Chen, Huaguang Yan and Jianguang Du were employed by the company Big Data Center of State Grid Corporation of China. Author Shuai Zhao was employed by the company State Grid Zhejiang Electric Power Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest. The authors declare that this study received funding from State Grid Corporation Headquarters. The funder was not involved in the study design, collection, analysis, interpretation of data, the writing of this article or the decision to submit it for publication.

References

- Shan, L.; Song, H.; Tang, Q.; Guo, H.; Hou, S.; Kang, C. Analysis and implications of power balancing mechanisms under decentralized decision-making: A case study of German balancing settlement units. Power Syst. Technol. 2025, 1–12.

- Dan, Z.; Wang, L. Research on the application of smart grid technology in power system optimization. China Strateg. Emerg. Ind. 2025, 26–28.

- Pu, T.; Zhao, Q.; Wang, X. Research framework, application status and prospects of electric power artificial intelligence technology. Power Syst. Technol. 2025, 1–21. Available online: http://kns.cnki.net/kcms/detail/11.2410.tm.20241024.1500.002.html (accessed on 2 March 2025).

- Ji, X.; Chen, Y.; Wang, J.; Zhou, G.; Sing Lai, C.; Dong, Z. Time-Frequency Hybrid Neuromorphic Computing Architecture Development for Battery State-of-Health Estimation. IEEE Internet Things J. 2024, 11, 39941–39957.

- Dong, Z.; Gu, S.; Zhou, S.; Yang, M.; Sing Lai, C.; Gao, M.; Ji, X. Periodic Segmentation Transformer-Based Internal Short Circuit Detection Method for Battery Packs. IEEE Trans. Transp. Electrif. 2025, 11, 3655–3666.

- Li, G.; Wang, N.; Gao, R.; Wang, B.; Yin, Z.; Zhu, M. Research on multi-source heterogeneous data fusion technology for intelligent efficiency improvement in new power systems. Electr. Meas. Instrum. 2024, 61, 116–121.

- Dong, Z.; Yang, M.; Wang, J.; Wang, H.; Sing Lai, C.; Ji, X. PFFN: A Parallel Feature Fusion Network for Remaining Useful Life Early Prediction of Lithium-Ion Battery. IEEE Trans. Transp. Electrif. 2025, 11, 2696–2706.

- Wang, J.; Chen, Y.; Ji, X.; Dong, Z.; Gao, M.; Sing Lai, C. Metaverse Meets Intelligent Transportation System: An Efficient and Instructional Visual Perception Framework. IEEE Trans. Intell. Transp. Syst. 2024, 25, 14986–15001.

- Zhou, Y.; Chen, C.; Dong, P.; Xue, W.; Zhai, Y. Research on multimodal fusion technology for power grid engineering data recognition. Inf. Technol. 2025, 61–65.

- Wang, J.; Chen, Y.; Ji, X.; Dong, Z.; Gao, M.; He, Z. SpikeTOD: A Biologically Interpretable Spike-Driven Object Detection in Challenging Traffic Scenarios. IEEE Trans. Intell. Transp. Syst. 2024, 25, 21297–21314.

- Ji, X.; Dong, Z.; Lai, C.; Zhou, G.; Qi, D. A physics-oriented memristor model with the coexistence of NDR effect and RS memory behavior for bio-inspired computing. Mater. Today Adv. 2022, 16, 100293.

- Ji, X.; Lai, C.S.; Zhou, G.; Dong, Z.; Qi, D.; Lai, L.L. A Flexible Memristor Model With Electronic Resistive Switching Memory Behavior and Its Application in Spiking Neural Network. IEEE Trans. NanoBiosci. 2023, 22, 52–62.

- Sun, S.; Wei, S.; Meng, J.; Lin, H.; Xiao, W.; Liu, S. Fine-grained text-guided cross-modal style transfer. J. Chin. Inf. Process. 2024, 38, 170–180.

- Wang, S. Research on Unsupervised Deep Learning Methods for Robust Multimodal Emotion Recognition. Ph.D. Thesis, Xi’an University of Technology, Xi’an, China, 2024.

- Ji, X.; Dong, Z.; Han, Y.; Lai, C.S.; Zhou, G.; Qi, D. EMSN: An Energy-Efficient Memristive Sequencer Network for Human Emotion Classification in Mental Health Monitoring. IEEE Trans. Consum. Electron. 2023, 69, 1005–1016.

- Chen, Y.C.; Li, L.; Yu, L.; El Kholy, A.; Ahmed, F.; Gan, Z.; Cheng, Y.; Liu, J. Uniter: Universal image-text representation learning. In Proceedings of the Computer Vision-ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XXX. Springer: Cham, Switzerland, 2020; pp. 104–120.

- Wang, T.; Jiang, W.; Lu, Z.; Zheng, F.; Cheng, R.; Yin, C.; Luo, P. Vlmixer: Unpaired vision-language pre-training via cross-modal cutmix. In Proceedings of the International Conference on Machine Learning, PMLR, Baltimore, MD, USA, 17–23 July 2022; pp. 22680–22690.

- Wang, C.; Wu, Y.; Qian, Y.; Kumatani, K.; Liu, S.; Wei, F.; Zeng, M.; Huang, X. Unispeech: Unified speech representation learning with labeled and unlabeled data. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual Event, 18–24 July 2021; pp. 10937–10947.

- Pedersoli, F.; Wiebe, D.; Banitalebi, A.; Zhang, Y.; Yi, K.M. Estimating visual information from audio through manifold learning. arXiv 2022, arXiv:2208.02337.

- Sarkar, P.; Etemad, A. Xkd: Cross-modal knowledge distillation with domain alignment for video representation learning. arXiv 2022, arXiv:2211.13929.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual Event, 18–24 July 2021; pp. 8748–8763.

- Zhao, Y.; Zhang, C.; Huang, H.; Li, H.; Zhao, Z. Towards effective multi-modal interchanges in zero-resource sounding object localization. Adv. Neural Inf. Process. Syst. 2022, 35, 38089–38102.

- Duan, J.; Chen, L.; Tran, S.; Yang, J.; Xu, Y.; Zeng, B.; Chilimbi, T. Multi-modal alignment using representation codebook. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 15651–15660.

- Lu, J.; Clark, C.; Zellers, R.; Mottaghi, R.; Kembhavi, A. Unified-io: A unified model for vision, language, and multi-modal tasks. arXiv 2022, arXiv:2206.08916.

- Ao, J.; Wang, R.; Zhou, L.; Wang, C.; Ren, S.; Wu, Y.; Liu, S.; Ko, T.; Li, Q.; Zhang, Y.; et al. Speecht5: Unified-modal encoder-decoder pre-training for spoken language processing. arXiv 2021, arXiv:2110.07205.

- Liu, A.H.; Jin, S.; Lai, C.I.J.; Rouditchenko, A.; Oliva, A.; Glass, J. Cross-modal discrete representation learning. arXiv 2021, arXiv:2106.05438.

- Belghazi, M.I.; Baratin, A.; Rajeshwar, S.; Ozair, S.; Bengio, Y.; Courville, A.; Hjelm, D. Mutual information neural estimation. In Proceedings of the International Conference on Machine Learning, PMLR, Stockholm, Sweden, 10–15 July 2018; pp. 531–540.

- Boudiaf, M.; Ziko, I.; Rony, J.; Dolz, J.; Piantanida, P.; Ben Ayed, I. Information maximization for few-shot learning. Adv. Neural Inf. Process. Syst. 2020, 33, 2445–2457.

- Dong, Z.; Ji, X.; Lai, C.S.; Qi, D. Design and Implementation of a Flexible Neuromorphic Computing System for Affective Communication via Memristive Circuits. IEEE Commun. Mag. 2023, 61, 74–80.

- van den Oord, A.; Li, Y.; Vinyals, O. Representation learning with contrastive predictive coding. arXiv 2018, arXiv:1807.03748.

- Zhu, W.; Zheng, H.; Liao, H.; Li, W.; Luo, J. Learning bias-invariant representation by cross-sample mutual information minimization. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 15002–15012.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).