1. Introduction

In recent years, network attacks have shown an increasing trend [1]. Among them, Advanced Persistent Threats (APTs) stand out as a complex, long-term, and targeted form of cyber attack. APTs are launched by highly skilled attackers, are often covert and directed at specific targets, and, once successful, can cause significant damage [2]. Defending against APTs therefore relies not only on traditional intrusion detection techniques but also on a deep understanding of the attack chain, attack techniques, and the attacker’s intentions [3]. Tracing the origin of an APT attack [4], identifying the attacking organization, revealing the attack methods, and summarizing the attackers’ tactics have thus become important topics in cybersecurity research.

Cyber Threat Intelligence (CTI) is a core means of addressing these challenges. CTI refers to the actionable knowledge derived from collecting, processing, and analyzing raw data related to cyber threats; it is used to uncover attack mechanisms [5], identify threat indicators [6], assess potential impacts, and provide decision support for defense [7]. Unlike traditional security mechanisms that rely on signature matching or rule-based detection, CTI helps security teams gain an advantage at every stage of the attack chain: before the attack, CTI provides intelligence on potential attackers to predict and prevent threats [8]; during the attack, it provides key indicators such as malicious domains, IP addresses, and file hashes to assist in detecting covert intrusions [9]; after the attack, it supports event tracing and pattern recognition, revealing the attacker’s behavioral traits and strengthening subsequent defenses [10]. CTI can thus fill the gaps of traditional intrusion detection, providing systematic, intelligence-driven support for APT detection and defense.

Despite its importance, threat intelligence is often disseminated in unstructured formats such as web pages [11], emails [12], and other sources, which makes it difficult to quickly extract relevant information from massive volumes of data. Named Entity Recognition (NER), the task of identifying and classifying specific entities (such as malware names, IP addresses, attack techniques, and related organizations) in unstructured text, has therefore become a crucial technique for automatically extracting key information related to cyberattacks [13], and NER for threat intelligence plays an important role in maintaining network security [14].

In this context, Natural Language Processing (NLP) has become an important tool for threat intelligence processing. As a core information extraction method, NER can automatically identify and extract key cyberattack-related entities from unstructured text, supporting the structuring and automation of threat intelligence processing. With NER, large volumes of dispersed security texts can be transformed into usable structured data, enabling knowledge-based descriptions of APT activities and correlation analysis across sources. However, because the cybersecurity field has many specialized terms, inconsistent naming conventions, and scarce labeled corpora, existing general-purpose NER methods still face performance bottlenecks in the threat intelligence domain [15]. Domain-specific NER for threat intelligence has therefore become one of the key directions for improving APT detection and tracing capabilities.

At present, NER research in the CTI field faces multiple challenges, which arise primarily at the dataset level and the model level.

First, at the dataset level, existing datasets fail to meet research needs. Most current datasets rely on semi-structured text (such as forums, blogs, and emails) and lack standardization. They do not provide large-scale, uniformly annotated corpora, nor do they ensure consistent data distributions or label definitions. Consequently, there is no unified evaluation benchmark, making it difficult to measure actual performance. Furthermore, high-quality, manually annotated cybersecurity datasets are extremely scarce, especially for domain-specific NER tasks. Existing datasets suffer from incomplete annotations, and most corpora contain limited entity types, often failing to fully cover the complex and diverse entities within the CTI domain. Such dataset deficiencies restrict model training effectiveness and generalization ability, thereby hindering the advancement of CTI-oriented NER research.

Second, at the model level, existing CTI-specific NER models mainly rely on dictionary-based, rule-based, machine learning, and traditional deep learning methods and have not kept pace with recent paradigm shifts in general NER research. Conversely, when general-purpose NER models are applied to CTI-NER tasks, threat intelligence is treated as ordinary text and the unique characteristics of cybersecurity reports are overlooked. As a result, these models fail to leverage domain-specific knowledge effectively and struggle to capture the technical details embedded in threat intelligence.

To address the above problems, we propose the following approaches, aiming to solve these two critical challenges.

First, to address dataset limitations, we improve both the entity definition standard and the structure and novelty of the samples. We begin by establishing a generalized standard for entity type definitions, drawing on the STIX 2.1 standard [16], an important framework in the threat intelligence field that provides a structured approach to representing and exchanging cybersecurity threat information. Because STIX 2.1 enables analysts, organizations, and automated systems to share information efficiently and in a standardized manner, we adopt it as the foundation of our entity classification scheme. To transform widely available but unlabeled unstructured and semi-structured text into structured training samples, we design an annotation system and a rigorous annotation methodology, and we recruit volunteers with professional backgrounds to perform manual labeling.

Second, to address model limitations, we incorporate recent advances in general-purpose NER research and use Large Language Models (LLMs) as the backbone of our approach. To capture the unique characteristics of cybersecurity reports within CTI, we inject expert knowledge into the model through prompt engineering. In order to provide precise guidance for knowledge injection, we employ three types of prompts (prefix prompts, demonstration prompts, and template prompts) to steer the model toward producing the desired outputs.

In this paper, we tackle two key challenges in CTI-oriented NER research following the above approaches. Our contributions are as follows:

1. Dataset improvement: We propose a new entity type definition standard based on the STIX 2.1 framework to enhance the structuring and annotation of CTI datasets. We further develop a systematic annotation methodology and recruit domain experts to annotate large-scale datasets, thereby improving data quality and coverage.

2. Model enhancement: We introduce a novel NER model, PROMPT-BART, which leverages large language models (LLMs) and incorporates domain-specific expert knowledge through prompt engineering. We design three types of prompts (prefix, demonstration, and template prompts) to guide the model in effectively capturing technical details in cybersecurity reports.

3. Evaluation and analysis: Using our newly created CTI NER dataset, we conduct a comprehensive evaluation of the proposed model, demonstrating significant improvements in accuracy and generalization compared to traditional NER methods.

The remainder of this paper is organized as follows: Section 2 reviews related work, Section 3 introduces the CTINER dataset, Section 4 presents the PROMPT-BART model based on prompt learning, Section 5 provides experimental evaluations, and Section 6 concludes the paper.

3. Construction of Cyber Threat Intelligence Datasets

Through an investigation and analysis of existing datasets, it has been observed that publicly available NER datasets in the domain of cyber threat intelligence generally suffer from several limitations. First, most datasets are outdated and fail to incorporate emerging entities that have appeared in recent years. Second, their scale is relatively small, which restricts their suitability for large-scale training and applications. Third, many datasets define entity labels without adhering to the STIX 2.1 specification, resulting in difficulties when applying them to downstream research tasks. Finally, data distribution within these datasets is imbalanced, with certain entity categories being significantly under-represented, which compromises completeness and introduces bias. These issues collectively limit the practical applicability and research value of existing datasets.

To address these problems, this study redefines entity categories in accordance with the STIX 2.1 specification and common paradigms of cyber threat intelligence. The focus is placed on entities that contribute to threat understanding and detection, while easily changed indicators such as file hashes and IP addresses are discarded. By applying a semi-automated annotation process to a large collection of APT (Advanced Persistent Threat) reports, we construct a domain-specific entity recognition dataset named CTINER. The dataset has been publicly released on GitHub and is available for download.

3.1. Dataset Extraction Methodology and Corresponding Modules

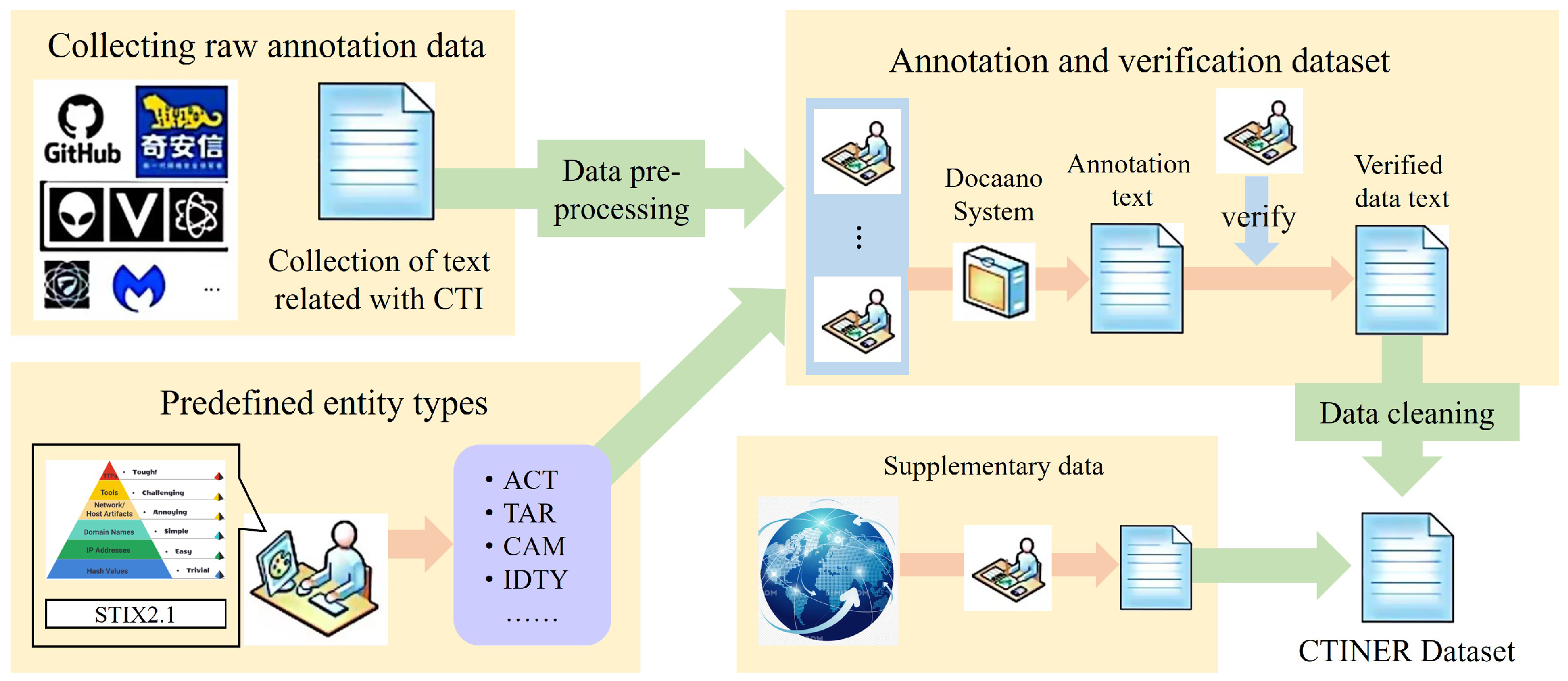

The construction process of the CTINER dataset is illustrated in Figure 1. Specifically, the dataset is built through seven modules: threat intelligence acquisition, data preprocessing, entity definition, annotation and validation, data format conversion, annotated data cleaning, and rare data supplementation. A detailed description of each module is provided below.

3.1.1. Threat Intelligence Acquisition Module

The dataset primarily consists of APT analysis reports, which describe in detail the attack methods employed against specific organizations and their consequences. Since constructing the dataset requires a large volume of raw intelligence corpora, we first collected documents from cybersecurity companies and open-source repositories such as GitHub. The APT reports published by security companies are summarized in Table 1. All collected reports were converted into TXT format. To ensure high relevance of the corpus to the cyber threat intelligence domain, the keyword “APT” was explicitly specified during the search and selection process. Finally, only the main body of each report was retained as the textual content for further processing.

3.1.2. Data Preprocessing Module

After obtaining the raw corpus, we performed standardization and cleaning procedures to transform the data into valuable training samples.

First, text formatting was conducted. Since some paragraphs in the raw text lacked punctuation at the end, which affected sentence segmentation, we examined the final character of each paragraph. If a punctuation mark was already present, the text was left unchanged; otherwise, an English period was appended.
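The end-of-paragraph check can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' script: it assumes that "a punctuation mark" means any character in Python's `string.punctuation` and that each paragraph occupies one line of the raw TXT file; the function names `ensure_terminal_punctuation` and `format_paragraphs` are hypothetical.

```python
import string

# Assumption: "a punctuation mark" = any character in string.punctuation.
PUNCTUATION = frozenset(string.punctuation)


def ensure_terminal_punctuation(paragraph: str) -> str:
    """Return the paragraph with an English period appended if it lacks final punctuation."""
    stripped = paragraph.rstrip()
    if not stripped or stripped[-1] in PUNCTUATION:
        return stripped  # empty, or already ends with punctuation: leave unchanged
    return stripped + "."


def format_paragraphs(raw_text: str) -> str:
    """Apply the check to every non-empty paragraph (assumed: one paragraph per line)."""
    fixed = (ensure_terminal_punctuation(p) for p in raw_text.splitlines())
    return "\n".join(p for p in fixed if p)


print(format_paragraphs("APT29 used spear-phishing emails\n\nThe campaign ended in 2021."))
```

Appending the period before sentence segmentation keeps a heading-like line without punctuation from being merged with the following paragraph into one "sentence".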

Next, sentence segmentation and tokenization were applied. Given that the reports are typically presented in long paragraphs while entity annotation is sentence-based, we employed nltk.sent_tokenize(all_text) to split the corpus into individual sentences, ensuring that each sentence occupies one line. Since entity annotation is token-level, we further applied nltk.word_tokenize(sentence) to separate words and punctuation with spaces.

Subsequently, data cleaning was performed. Using automated scripts, we corrected spacing inconsistencies and removed sentences that were either too short (fewer than 10 tokens) or excessively long (more than 50 tokens), as such sentences were found to contain little valuable information or entity content. In addition, we detected a small number of garbled sentences, most of which did not begin with uppercase English letters. Therefore, sentences whose initial character was not within the range “A–Z” were discarded.
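A minimal sketch of these cleaning rules follows, assuming the input is already one whitespace-tokenized sentence per line, as produced by the segmentation and tokenization step. The thresholds mirror the ones stated above (keep 10 to 50 tokens inclusive, initial character in A–Z); the function names are hypothetical.

```python
import string

MIN_TOKENS = 10  # sentences with fewer tokens are dropped
MAX_TOKENS = 50  # sentences with more tokens are dropped


def normalize_spacing(sentence: str) -> str:
    """Collapse runs of whitespace into single spaces and trim both ends."""
    return " ".join(sentence.split())


def keep_sentence(sentence: str) -> bool:
    """Apply the length filter and the 'starts with A-Z' garbling heuristic."""
    n_tokens = len(sentence.split())
    if n_tokens < MIN_TOKENS or n_tokens > MAX_TOKENS:
        return False
    return sentence[0] in string.ascii_uppercase


def clean_corpus(lines):
    """Normalize spacing, then keep only sentences that pass both filters."""
    normalized = (normalize_spacing(line) for line in lines)
    return [s for s in normalized if s and keep_sentence(s)]


corpus = [
    "APT28  delivered the X-Agent implant through a malicious Word document .",
    "See Figure 2 .",
    "0x3f the loader decrypts its payload with RC4 before writing it to disk .",
]
print(clean_corpus(corpus))
```

Only the first sentence survives: the second is too short, and the third passes the length check but fails the initial-character heuristic.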

After these preprocessing steps, the corpus was transformed into a clean and standardized form suitable for use as training samples, as illustrated in Figure 2.

3.1.3. Entity Pre-Definition Module

In the process of defining entities, the first step is to determine which types of entities should be extracted, namely, which attack indicators in CTI carry substantial analytical value. As shown in Figure 1, the pyramid model [49] illustrates the relative practical value of different attack indicators. We excluded the three lower-value and easily mutable categories in the pyramid (file hashes, IP addresses, and domain names) since adversaries frequently alter such indicators to evade detection.

Among the remaining layers of the pyramid, the following categories were retained: network or host characteristics, including URLs, sample files, and Operating Systems (OSs); attack tools, including benign tools and malware; and Tactics, Techniques, and Procedures (TTPs), including vulnerabilities, campaigns, and malicious emails.

Subsequently, we referred to the STIX 2.1 specification, which defines 18 types of domain objects in threat intelligence, such as attack patterns, campaigns, courses of action, intrusion sets, infrastructure, indicators, malware, malware analysis, threat actors, reports, tools, vulnerabilities, and others. Finally, considering practical attack scenarios, we additionally incorporated “attack target” as a crucial entity type that warrants attention.

Based on these considerations, we defined a total of 13 entity labels, as shown in Table 1. These labels not only align with the domain objects specified in widely adopted standards, thereby enabling the extraction of comprehensive attack descriptors, but also reflect realistic attack scenarios. This design supports the standardized exchange of threat intelligence and improves compatibility between the dataset and both current and future threat analysis systems.

3.1.4. Annotation and Verification Module

Since this dataset is specifically designed for the cyber threat intelligence domain, it contains unique entity types and domain-specific vocabulary. As a result, automated annotation tools are not sufficiently accurate in recognizing the relevant named entities. Therefore, this study adopts a manual annotation approach. Annotators were first provided with preliminary training: each annotator was required to read a certain number of threat intelligence reports and acquire the necessary domain knowledge before being allowed to perform annotations.

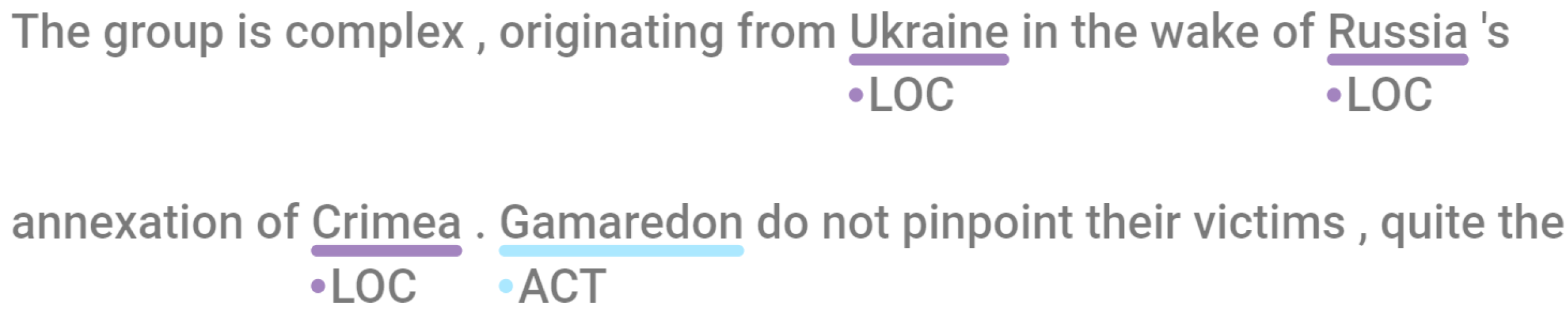

The data to be annotated consisted of the TXT corpus obtained from the data preprocessing module, while the entity categories were defined according to the 13 entity labels established in the entity definition module. The annotation process was carried out using the Doccano system, and an example of annotated data is shown in Figure 3.

3.1.5. Data Format Conversion Module

To facilitate processing and visualization, the module first converts the JSONL annotation files exported from Doccano into JSON format, and subsequently into TXT format, adopting the BIO labeling scheme for the named entity recognition task.
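The span-to-BIO step can be sketched as below. The sketch collapses the JSONL, JSON, TXT chain into a single function and rests on two assumptions: each exported line carries its character-offset spans as `[start, end, type]` triples under a `label` key (the export layout differs across Doccano versions, so check your own export), and span boundaries coincide with the whitespace token boundaries produced during preprocessing. Function names are hypothetical.

```python
import json


def spans_to_bio(text, spans):
    """Convert character-offset spans to (token, BIO label) pairs.

    Assumes tokens are separated by whitespace and every span starts and ends
    on a token boundary.
    """
    pairs = []
    offset = 0
    for token in text.split():
        start = text.index(token, offset)  # character position of this token
        end = start + len(token)
        offset = end
        label = "O"
        for span_start, span_end, entity_type in spans:
            if span_start <= start and end <= span_end:
                prefix = "B" if start == span_start else "I"
                label = f"{prefix}-{entity_type}"
                break
        pairs.append((token, label))
    return pairs


def convert_jsonl_line(line):
    """Parse one exported JSONL record and return its BIO-tagged tokens."""
    record = json.loads(line)
    # Assumption: spans are stored as [start, end, type] under "label".
    return spans_to_bio(record["text"], record.get("label", []))


example = json.dumps({
    "id": 1,
    "text": "The group deployed Cobalt Strike in Ukraine in 2017 .",
    "label": [[19, 32, "TOOL"], [36, 43, "LOC"], [47, 51, "TIME"]],
})
for token, label in convert_jsonl_line(example):
    print(token, label)
```

The printed lines already follow the token-space-label layout of the final dataset; the first token of each span receives `B-` and the remaining tokens `I-`.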

The final CTINER dataset consists of two columns: each line contains a token and its corresponding label, separated by a space, and different sentences are separated by a blank line. Each label combines a position tag with an entity category, as defined below:

$$\text{Label} = \{\mathrm{B}, \mathrm{I}, \mathrm{O}\}\text{-}\{\mathrm{ACT}, \mathrm{TAR}, \mathrm{CAM}, \mathrm{IDTY}, \mathrm{VUL}, \mathrm{TOOL}, \mathrm{MAL}, \mathrm{LOC}, \mathrm{TIME}, \mathrm{FILE}, \mathrm{URL}, \mathrm{OS}, \mathrm{EML}, \mathrm{O}\}$$

Here, {B, I} represent the boundary tags for tokens belonging to entities, while {ACT, TAR, CAM, IDTY, VUL, TOOL, MAL, LOC, TIME, FILE, URL, OS, EML, O} correspond to the set of predefined entity categories. In this study, the label “O-O” is simplified to “O,” indicating non-entity tokens.
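The label inventory and file layout can be sketched as follows. With 13 entity categories, the B- and I- combinations plus the simplified "O" give 27 distinct labels. The helper names are hypothetical, and the sketch assumes only that tokens contain no spaces, which holds after whitespace tokenization.

```python
ENTITY_TYPES = ["ACT", "TAR", "CAM", "IDTY", "VUL", "TOOL", "MAL",
                "LOC", "TIME", "FILE", "URL", "OS", "EML"]

# "O-O" is simplified to "O"; every entity type gets a B- and an I- tag.
LABELS = ["O"] + [f"{tag}-{etype}" for etype in ENTITY_TYPES for tag in ("B", "I")]


def write_two_column(sentences):
    """Serialize sentences of (token, label) pairs: one 'token label' per line,
    with a blank line between sentences."""
    blocks = []
    for sentence in sentences:
        for _, label in sentence:
            if label not in LABELS:
                raise ValueError(f"unknown label: {label}")
        blocks.append("\n".join(f"{token} {label}" for token, label in sentence))
    return "\n\n".join(blocks) + "\n"


def read_two_column(text):
    """Parse the two-column format back into sentences of (token, label) pairs."""
    sentences, current = [], []
    for line in text.splitlines():
        if not line.strip():
            if current:  # a blank line closes the current sentence
                sentences.append(current)
                current = []
            continue
        token, label = line.rsplit(" ", 1)
        current.append((token, label))
    if current:
        sentences.append(current)
    return sentences


data = [[("WannaCry", "B-MAL"), ("spread", "O"), ("in", "O"), ("2017", "B-TIME"), (".", "O")],
        [("Cobalt", "B-TOOL"), ("Strike", "I-TOOL"), ("was", "O"), ("used", "O"), (".", "O")]]
print(len(LABELS))
print(write_two_column(data))
```

Validating labels at write time catches any tag outside the 27-label set (for example, a leftover type from an earlier labeling scheme) before it reaches the training files.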

3.1.6. Annotation Data Cleaning Module

Cyber threat intelligence exhibits unique characteristics: its sentences are generally long, but the density of entities is relatively low. As a result, a large number of sentences contain no entities. In this study, such sentences were removed in order to increase the overall proportion of entities and to address the problem of imbalanced label distribution. Finally, the dataset was divided into training, testing, and validation sets in a 7:2:1 ratio.
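The two steps in this module, removing entity-free sentences and splitting 7:2:1, can be sketched as below. Shuffling with a fixed seed is an assumption made for reproducibility; the text does not state how sentences were assigned to the splits. Integer arithmetic keeps the split sizes independent of floating-point rounding, and the function names are hypothetical.

```python
import random


def has_entity(sentence):
    """True if at least one token carries a label other than 'O'."""
    return any(label != "O" for _, label in sentence)


def filter_and_split(sentences, ratio=(7, 2, 1), seed=0):
    """Drop entity-free sentences, shuffle, and split into train/test/validation."""
    kept = [s for s in sentences if has_entity(s)]
    random.Random(seed).shuffle(kept)  # assumption: random assignment with a fixed seed
    total = sum(ratio)
    n_train = len(kept) * ratio[0] // total
    n_test = len(kept) * ratio[1] // total
    train = kept[:n_train]
    test = kept[n_train:n_train + n_test]
    valid = kept[n_train + n_test:]  # remainder goes to validation
    return train, test, valid


with_entities = [[(f"tool{i}", "B-TOOL"), ("used", "O")] for i in range(100)]
without_entities = [[("no", "O"), ("entities", "O")] for _ in range(20)]
train, test, valid = filter_and_split(with_entities + without_entities)
print(len(train), len(test), len(valid))
```

The 20 entity-free sentences are discarded before splitting, so the 100 remaining sentences divide into 70, 20, and 10.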

3.1.7. Rare Data Supplementation Module

Certain entity types are underrepresented in APT reports because of the nature of these reports. To address this, we collected additional open-source data from the internet to supplement the entity types that a statistical analysis of the dataset identified as underrepresented.
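The statistical analysis that identifies underrepresented types can be sketched by counting entities per type from the B- tags and flagging types whose share of all entities falls below a threshold. The 2% threshold and the function names below are hypothetical; the text does not say how "underrepresented" was decided.

```python
from collections import Counter


def entity_counts(sentences):
    """Count entities per type; each entity begins with exactly one B- tag."""
    counts = Counter()
    for sentence in sentences:
        for _, label in sentence:
            if label.startswith("B-"):
                counts[label[2:]] += 1
    return counts


def underrepresented(counts, entity_types, min_share=0.02):
    """Entity types whose share of all entities is below min_share (hypothetical)."""
    total = sum(counts.values())
    if total == 0:
        return sorted(entity_types)
    return sorted(t for t in entity_types if counts[t] / total < min_share)


corpus = ([[("Cobalt", "B-TOOL"), ("Strike", "I-TOOL")]] * 50
          + [[("Emotet", "B-MAL")]] * 49
          + [[("invoice@example.com", "B-EML")]])
counts = entity_counts(corpus)
print(underrepresented(counts, ["TOOL", "MAL", "EML", "OS"]))
```

Counting B- tags rather than all entity tokens measures entities, not tokens, so multi-token names such as "Cobalt Strike" are counted once; a type that never occurs (here `OS`) is flagged as well.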

3.2. Dataset Overview and Entity Distribution

The dataset is split into training, testing, and validation sets with a ratio of 7:2:1, as shown in Table 2. The dataset comprises 16,573 sentences, 459,308 words, 42,549 entities, and 60,167 entity tokens. On average, each sentence contains approximately 28 words but only about 2.6 entities, indicating that sentences in cyber threat intelligence texts are relatively long while entities are sparse. This characteristic presents challenges in constructing a knowledge-rich named entity recognition dataset for the cyber threat intelligence domain.
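The averages quoted for the dataset follow directly from the reported totals. The short computation below reproduces them, together with the average entity length and the share of tokens that belong to entities implied by the same figures.

```python
# Totals reported for the full CTINER dataset.
SENTENCES = 16_573
WORDS = 459_308
ENTITIES = 42_549
ENTITY_TOKENS = 60_167

words_per_sentence = WORDS / SENTENCES         # average sentence length
entities_per_sentence = ENTITIES / SENTENCES   # entity density per sentence
tokens_per_entity = ENTITY_TOKENS / ENTITIES   # average entity length in tokens
entity_token_share = ENTITY_TOKENS / WORDS     # fraction of tokens inside entities

print(f"{words_per_sentence:.1f} words per sentence")
print(f"{entities_per_sentence:.1f} entities per sentence")
print(f"{tokens_per_entity:.2f} tokens per entity")
print(f"{entity_token_share:.1%} of tokens belong to entities")
```

Roughly 13% of tokens fall inside an entity, which quantifies the "long sentences, sparse entities" observation.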

The five most frequent entity types in the CTINER dataset are IDTY (6778), TOOL (6544), TAR (6358), TIME (5171), and ACT (4607). Together they account for roughly 69% of all entities and play a critical role in network threat assessment and in enhancing cybersecurity.

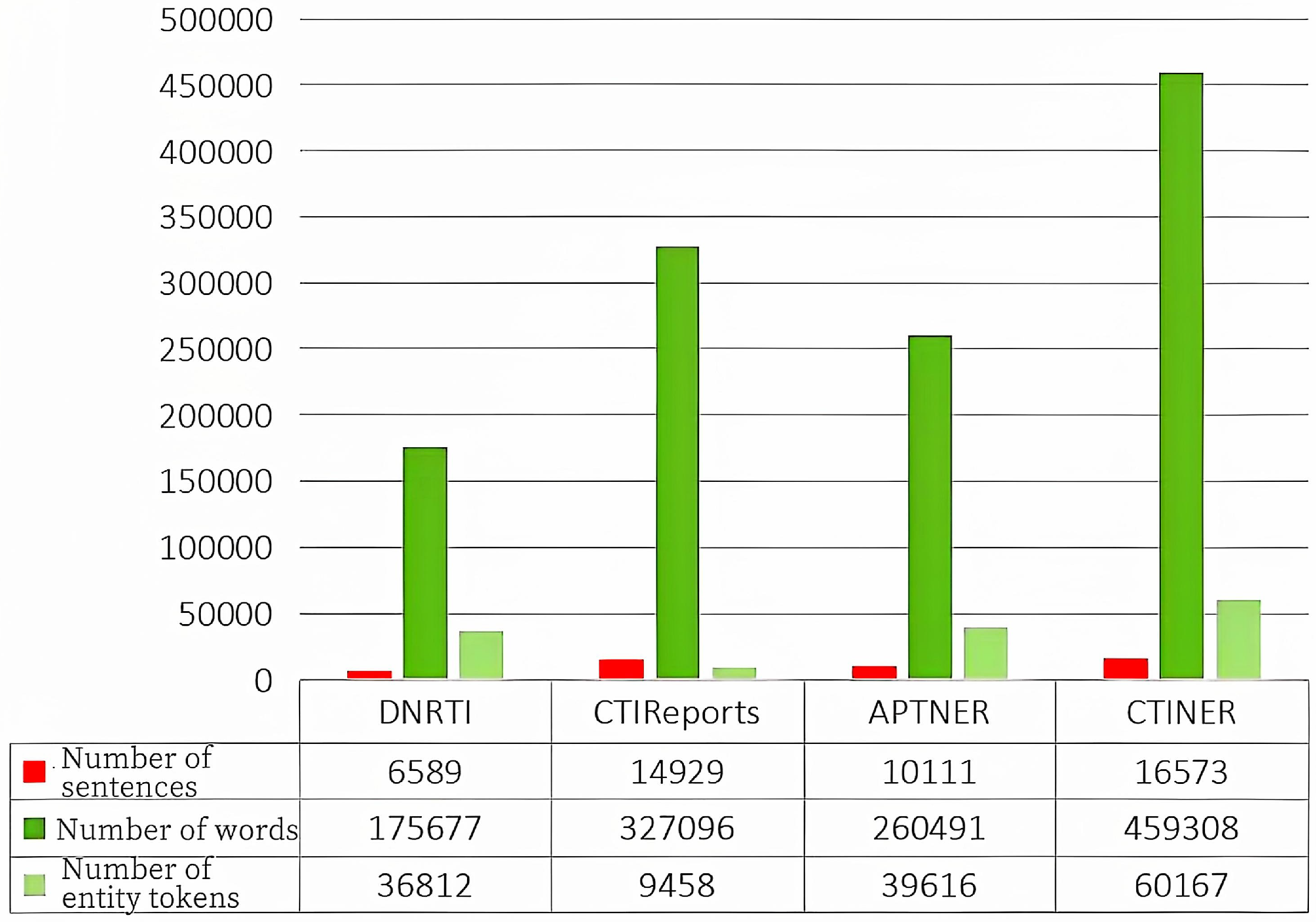

3.3. Comparison with Other Datasets: DNRTI, CTIReports, and APTNER

To assess the advances and design rationality of the CTINER dataset, we compare it with other open-source datasets in the cyber threat intelligence domain in terms of the rationality of the predefined labels, entity density, and overall dataset characteristics. The number of labels and the specific categories of each dataset are shown in Table 3.

3.3.1. Label Rationality

The APTNER dataset [48] defines 21 entity types, which increases annotation complexity and can compromise annotation quality. Certain entity types, such as IP addresses and MD5 values, have limited utility because they are time-sensitive: attackers frequently change these indicators. CTINER therefore eliminates these time-sensitive entities and merges types with overlapping meanings (e.g., security teams and identity authentication into IDTY, domain names and URLs into URL, and vulnerability names and identifiers into VUL).
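The label merging described for APTNER amounts to a relabeling pass over BIO sequences. The sketch below assumes APTNER-style tag names (`SECTEAM`, `IDTY`, `DOM`, `URL`, `VULNAME`, `VULID`, `IP`, `MD5`); these names are illustrative and should be checked against the APTNER release. Position prefixes are kept as-is, so a source that uses a scheme other than BIO would need converting first, and types not listed are passed through unchanged.

```python
# Hypothetical APTNER-style tag names; verify against the actual dataset.
MERGE = {
    "SECTEAM": "IDTY", "IDTY": "IDTY",   # security teams + identity -> IDTY
    "DOM": "URL", "URL": "URL",          # domain names + URLs -> URL
    "VULNAME": "VUL", "VULID": "VUL",    # vulnerability names + identifiers -> VUL
}
DROPPED = {"IP", "MD5"}                  # time-sensitive indicators become "O"


def remap_label(label):
    """Map one BIO label to the merged label set, keeping its position prefix."""
    if label == "O":
        return "O"
    prefix, entity_type = label.split("-", 1)
    if entity_type in DROPPED:
        return "O"
    return f"{prefix}-{MERGE.get(entity_type, entity_type)}"


def remap_sentence(sentence):
    """Apply remap_label to every (token, label) pair of a sentence."""
    return [(token, remap_label(label)) for token, label in sentence]


sample = [("FireEye", "B-SECTEAM"), ("linked", "O"), ("CVE-2017-0199", "B-VULID"),
          ("to", "O"), ("example.com", "B-DOM"), ("and", "O"), ("192.0.2.1", "B-IP")]
print(remap_sentence(sample))
```

Because only the type part of each tag changes, entity boundaries are preserved for merged types, while dropped types simply become non-entity tokens.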

Compared with the DNRTI dataset [46], CTINER renames the attack objective type to “attack target” based on the threat information expression model. DNRTI also suffers from overlapping or ambiguously defined entity types, such as spear phishing, and lacks critical entity types such as malware.

The CTIReports dataset [47] primarily focuses on IP addresses, malware, and URLs, which are insufficient for downstream tasks such as knowledge graph construction or machine-readable intelligence generation.

In contrast, the CTINER dataset draws on the STIX 2.1 specification, the pyramid model, and the threat information expression model to define 13 distinct entity types. Because these types do not overlap, they better support downstream research in threat intelligence.

3.3.2. Dataset Scale Comparison

An analysis of dataset characteristics (sentence count, word count, and entity token count) reveals that the CTINER dataset contains substantially more sentences, words, and entity tokens than the other three datasets. Figure 4 summarizes the CTINER dataset alongside these cyber threat intelligence datasets, together with the ratio in which each is split into training, testing, and validation sets.

6. Conclusions

In this study, we improve Named Entity Recognition (NER) for Cyber Threat Intelligence (CTI) in two key areas: dataset improvement and model enhancement. To improve the structure and annotation of CTI datasets, we propose a new entity type definition standard based on the STIX 2.1 framework. We also develop a systematic annotation methodology and collaborate with domain experts to annotate a large-scale dataset, CTINER, enhancing both data quality and coverage. We further introduce a novel NER model, PROMPT-BART, which integrates Large Language Models (LLMs) with domain-specific knowledge through prompt engineering, using three distinct types of prompts (prefix, demonstration, and template prompts) to guide the model in effectively extracting technical details from cybersecurity reports.

Based on the experimental results, the PROMPT-BART model demonstrates significant improvements over existing NER models. PROMPT-BART achieves an F1 score improvement ranging from 4.26% to 8.3% over the baseline deep learning models, showcasing the superiority of more advanced architectures in the NER field. It also outperforms the prompt-based Template-NER model by 1.31% in F1 score, highlighting its advantage in capturing technical details from cybersecurity reports compared to generic methods. Furthermore, the ablation study confirms that each prompt component plays a crucial role in maximizing the model’s performance.

In future research, we will further investigate NER in the CTI domain based on more advanced architectures, such as incorporating a multi-agent framework for more accurate identification of error-prone entity types. This will help improve the performance of NER models and contribute more effectively to maintaining cybersecurity.