A Multimodal Deep Learning Framework for Accurate Wildfire Segmentation Using RGB and Thermal Imagery

Abstract

1. Introduction

- We introduce a novel edge-guided multi-level semantic segmentation network (BFCNet) tailored for wildfire detection.

- To the best of our knowledge, this is the first work to incorporate edge supervision into wildfire segmentation, significantly improving segmentation performance.

- We design three specialized modules (BEM, FAM, and CLM) to fuse features at different semantic levels, addressing the distinct characteristics of low-, mid-, and high-level representations.
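
Edge supervision requires a boundary label for every training mask. The text here does not say how BFCNet derives those labels, so the following is only a minimal sketch under an assumed rule: a flame pixel counts as an edge pixel when at least one of its 4-connected neighbours inside the image is background. The function name `mask_to_edge` and the toy mask are hypothetical.

```python
def mask_to_edge(mask):
    """Return a binary edge map for a binary mask given as a list of rows.

    Assumed rule (not taken from the paper): a foreground pixel is an edge
    pixel if any 4-connected neighbour inside the image is background.
    """
    h, w = len(mask), len(mask[0])
    edge = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if not mask[y][x]:
                continue  # background pixels are never edge pixels
            for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ny, nx = y + dy, x + dx
                if 0 <= ny < h and 0 <= nx < w and not mask[ny][nx]:
                    edge[y][x] = 1
                    break
    return edge


# Toy 5x5 mask with a 3x3 flame region in the middle.
mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
edge = mask_to_edge(mask)
for row in edge:
    print(row)
```

On this toy mask the eight outer pixels of the flame region are marked as edge and the centre pixel is not. A real pipeline might instead use a morphological gradient or a thicker boundary band; that choice is not specified here.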

2. Methods

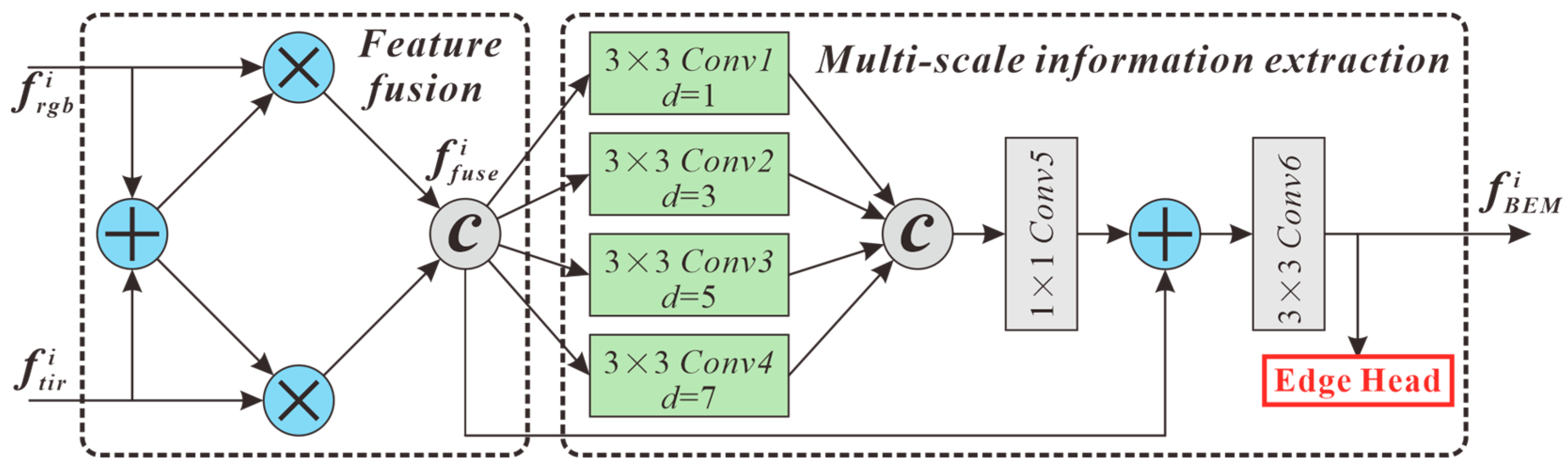

2.1. Boundary Enhancement Module (BEM)

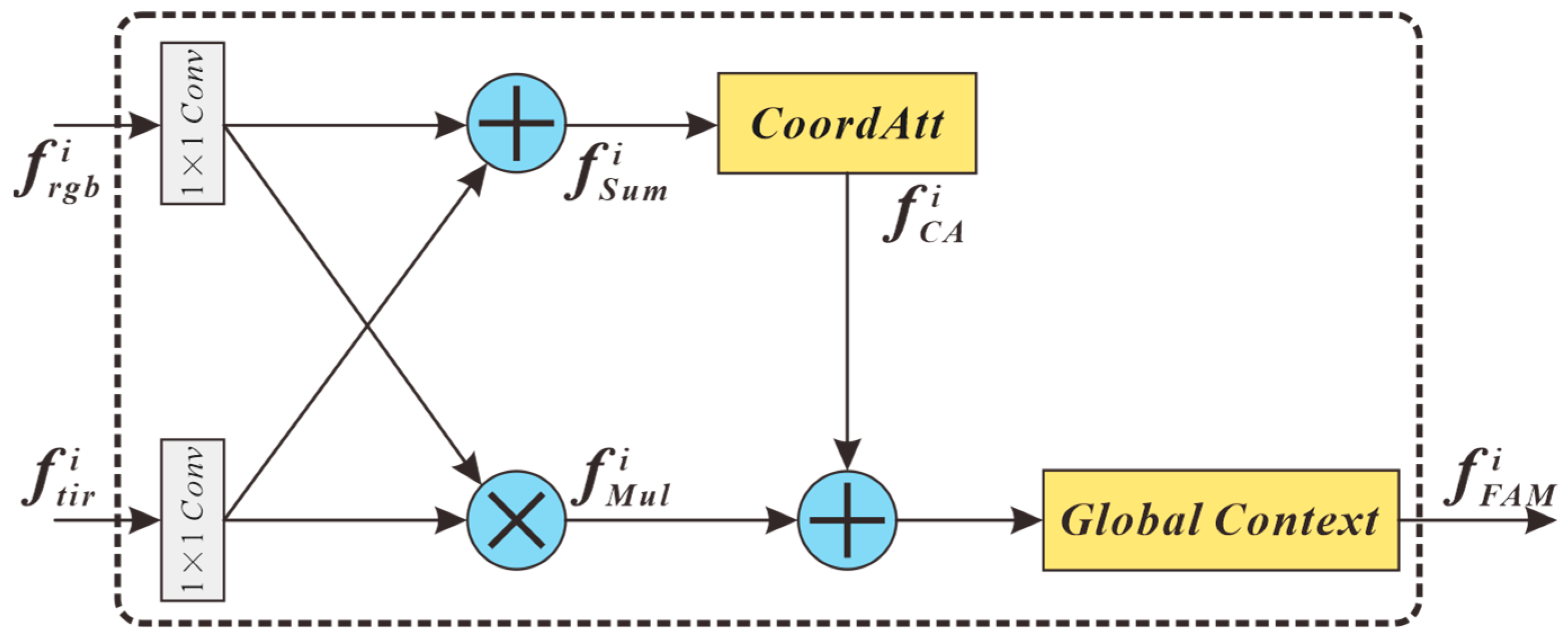

2.2. Fusion Activation Module (FAM)

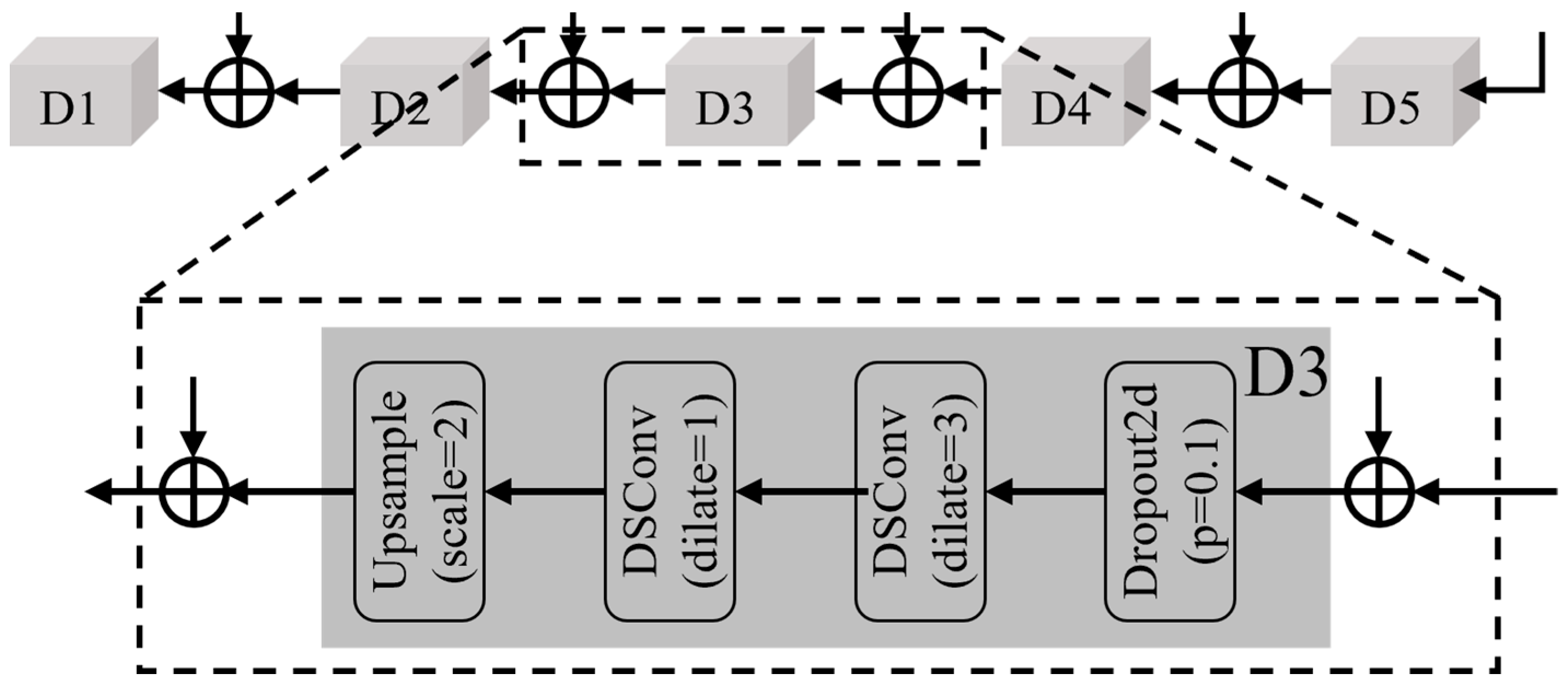

2.3. Cross-Localization Module (CLM)

- (1) Cross-layer correlation

- (2) Salient Feature Map Generation

2.4. Structure of Decoder

2.5. Loss Function

3. Experiments and Analysis

3.1. Experimental Protocol

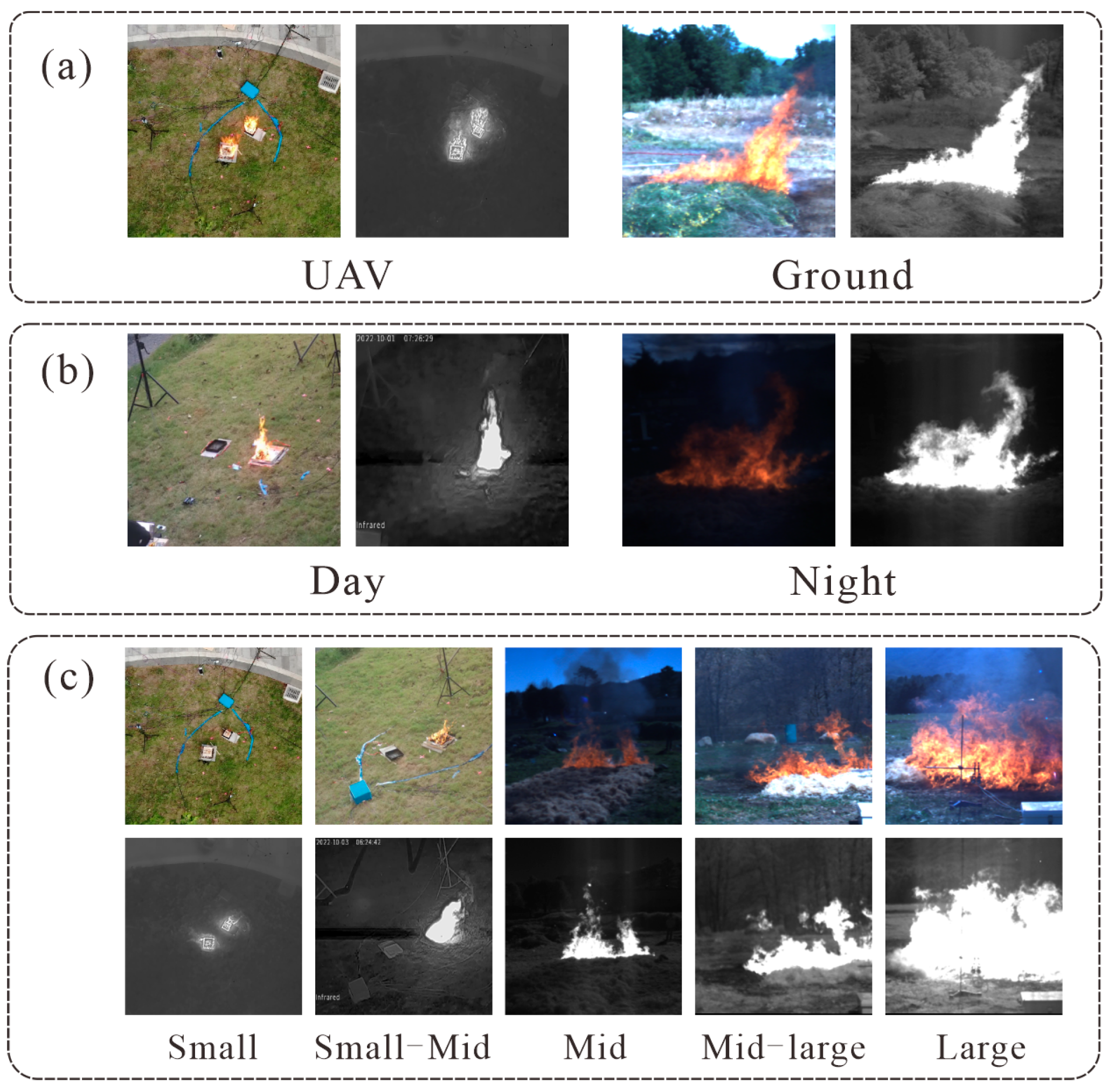

3.1.1. Dataset

3.1.2. Evaluation Metrics
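
The result tables report IoU and F1 for the flame class. As a rough illustration, assuming both are computed from pixel-level true positives, false positives, and false negatives of a binary mask, they can be sketched as follows. The helper name `iou_f1` and the toy masks are hypothetical, and the convention of returning 100% when both masks are empty is an assumption.

```python
def iou_f1(pred, target):
    """Return (IoU, F1) in percent for two binary masks given as lists of rows."""
    tp = fp = fn = 0
    for p_row, t_row in zip(pred, target):
        for p, t in zip(p_row, t_row):
            if p and t:
                tp += 1          # flame predicted and present
            elif p and not t:
                fp += 1          # flame predicted but absent
            elif t and not p:
                fn += 1          # flame present but missed
    union = tp + fp + fn
    # Assumed convention: two empty masks count as a perfect match.
    iou = tp / union if union else 1.0
    f1 = 2 * tp / (2 * tp + fp + fn) if union else 1.0
    return 100.0 * iou, 100.0 * f1


pred = [[1, 1, 0],
        [0, 1, 0]]
target = [[1, 0, 0],
          [0, 1, 1]]
iou, f1 = iou_f1(pred, target)
print(round(iou, 2), round(f1, 2))  # 50.0 66.67
```

When both metrics come from the same confusion counts, F1 = 2·IoU / (1 + IoU) with IoU as a fraction. The reported pairs appear consistent with this identity, for example an IoU of 88.25 corresponds to an F1 of about 93.76.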

3.1.3. Experimental Details

3.2. Experimental Results and Comparison

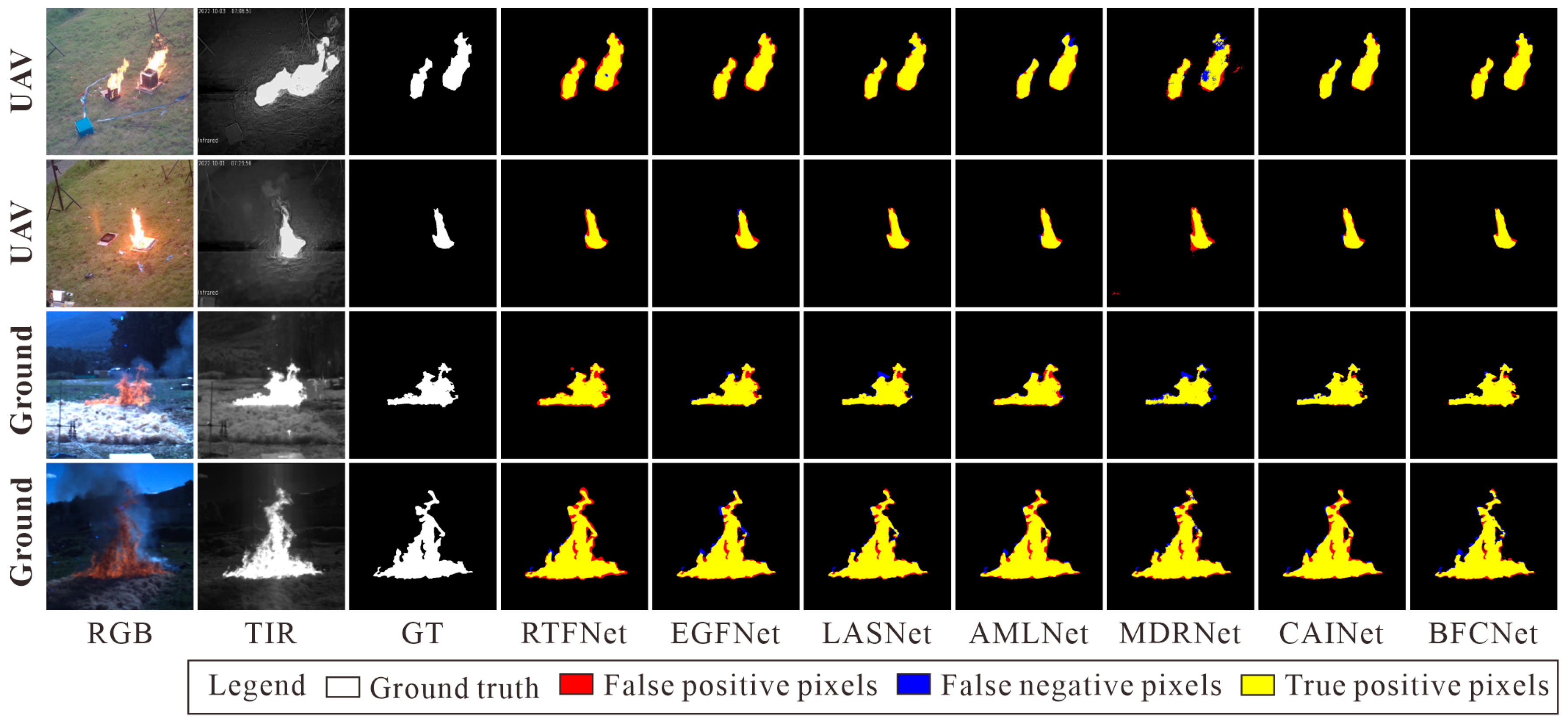

3.2.1. Experiments with Image Sources

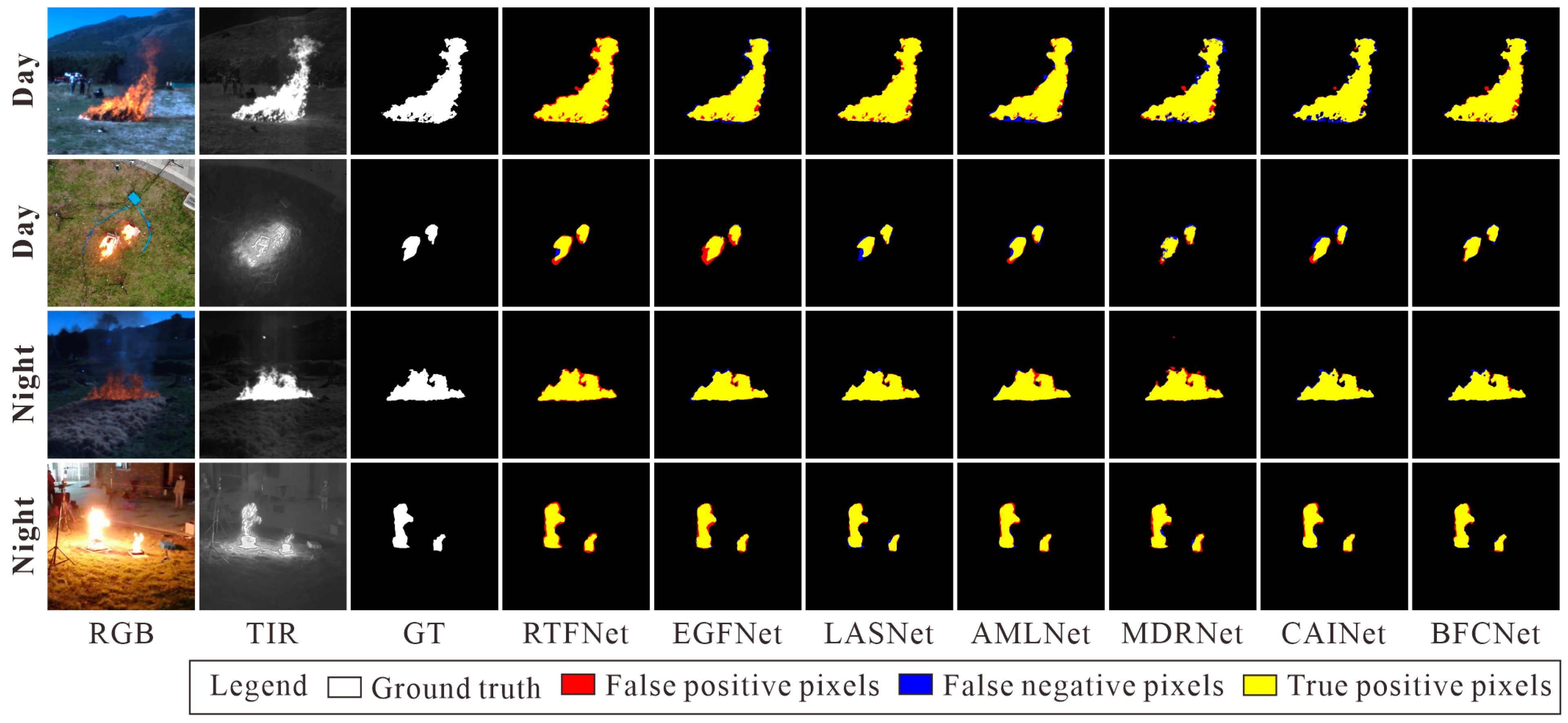

3.2.2. Experiments with Lighting Conditions

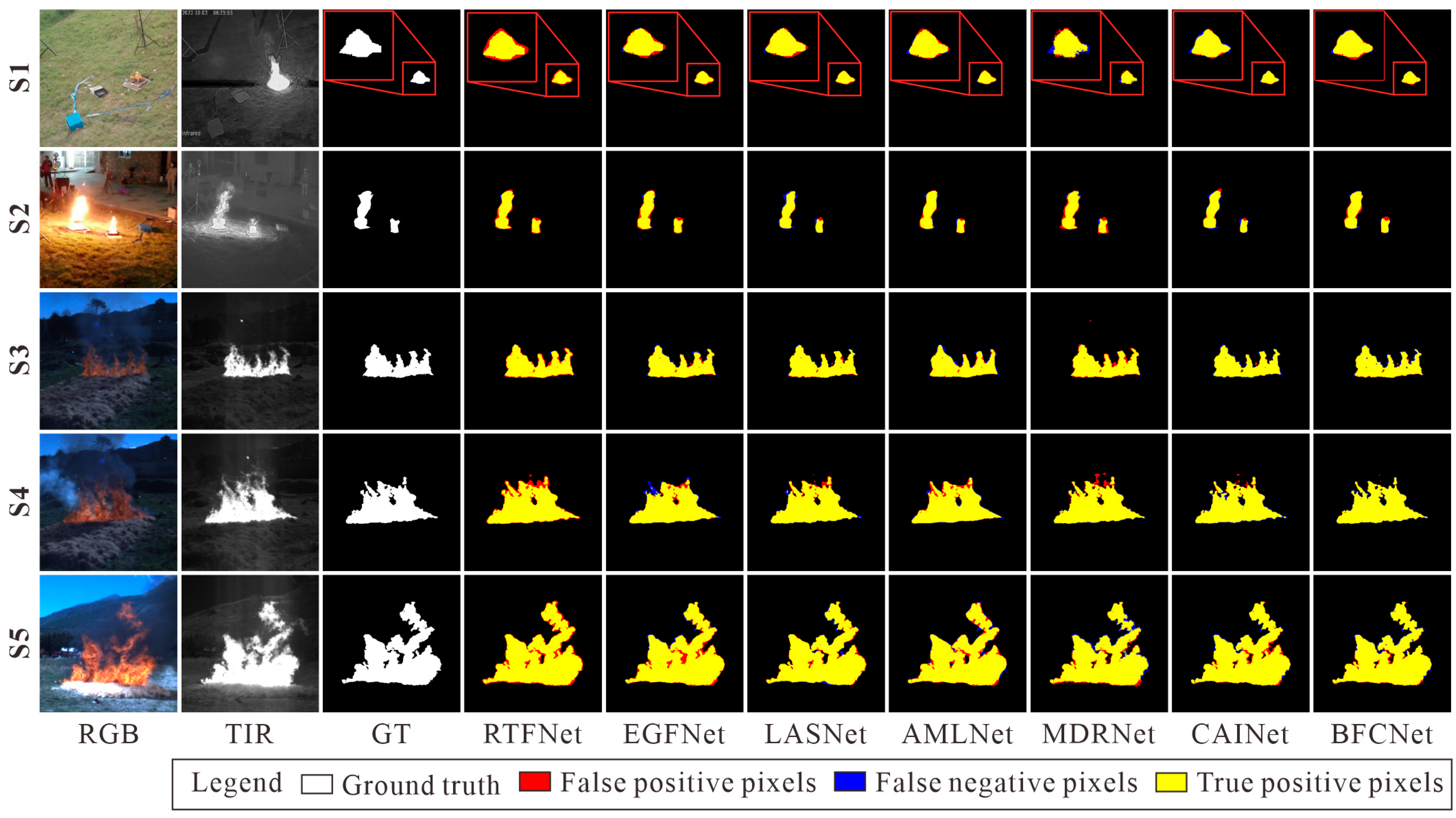

3.2.3. Experiments with Flame Type

3.3. Ablation Study

3.3.1. Ablation of the Modules

3.3.2. Ablation of the Components in Modules

3.4. Inference Time and Model Size

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Akhloufi, M.A.; Couturier, A.; Castro, N.A. Unmanned aerial vehicles for wildland fires: Sensing, perception, cooperation and assistance. Drones 2021, 5, 15.

2. Rajoli, H.; Khoshdel, S.; Afghah, F.; Ma, X. FlameFinder: Illuminating Obscured Fire Through Smoke with Attentive Deep Metric Learning. IEEE Trans. Geosci. Remote Sens. 2024, 62, 3440880.

3. Sun, Y.; Jiang, L.; Pan, J.; Sheng, S.; Hao, L. A satellite imagery smoke detection framework based on the Mahalanobis distance for early fire identification and positioning. Int. J. Appl. Earth Obs. Geoinf. 2023, 118, 103257.

4. Wang, M.; Yu, D.; He, W.; Yue, P.; Liang, Z. Domain-incremental learning for fire detection in space-air-ground integrated observation network. Int. J. Appl. Earth Obs. Geoinf. 2023, 118, 103279.

5. Shamsoshoara, A.; Afghah, F.; Razi, A.; Zheng, L.; Fulé, P.Z.; Blasch, E. Aerial imagery pile burn detection using deep learning: The FLAME dataset. Comput. Netw. 2021, 193, 108001.

6. Dimitropoulos, K.; Barmpoutis, P.; Grammalidis, N. Spatio-temporal flame modeling and dynamic texture analysis for automatic video-based fire detection. IEEE Trans. Circuits Syst. Video Technol. 2014, 25, 339–351.

7. Dimitropoulos, K.; Barmpoutis, P.; Grammalidis, N. Higher order linear dynamical systems for smoke detection in video surveillance applications. IEEE Trans. Circuits Syst. Video Technol. 2016, 27, 1143–1154.

8. Dimitropoulos, K.; Barmpoutis, P.; Kitsikidis, A.; Grammalidis, N. Classification of multidimensional time-evolving data using histograms of Grassmannian points. IEEE Trans. Circuits Syst. Video Technol. 2016, 28, 892–905.

9. Chen, J.; He, Y.; Wang, J. Multi-feature fusion based fast video flame detection. Build. Environ. 2010, 45, 1113–1122.

10. Mueller, M.; Karasev, P.; Kolesov, I.; Tannenbaum, A. Optical flow estimation for flame detection in videos. IEEE Trans. Image Process. 2013, 22, 2786–2797.

11. Zhang, Z.; Shen, T.; Zou, J. An improved probabilistic approach for fire detection in videos. Fire Technol. 2014, 50, 745–752.

12. Marbach, G.; Loepfe, M.; Brupbacher, T. An image processing technique for fire detection in video images. Fire Saf. J. 2006, 41, 285–289.

13. Töreyin, B.U.; Dedeoğlu, Y.; Güdükbay, U.; Çetin, A.E. Computer vision based method for real-time fire and flame detection. Pattern Recognit. Lett. 2006, 27, 49–58.

14. Celik, T.; Demirel, H. Fire detection in video sequences using a generic color model. Fire Saf. J. 2009, 44, 147–158.

15. Emmy Prema, C.; Vinsley, S.S.; Suresh, S. Efficient flame detection based on static and dynamic texture analysis in forest fire detection. Fire Technol. 2018, 54, 255–288.

16. Toulouse, T.; Rossi, L.; Campana, A.; Celik, T.; Akhloufi, M.A. Computer vision for wildfire research: An evolving image dataset for processing and analysis. Fire Saf. J. 2017, 92, 188–194.

17. Schmidhuber, J. Deep learning in neural networks: An overview. Neural Netw. 2015, 61, 85–117.

18. Bouguettaya, A.; Zarzour, H.; Taberkit, A.M.; Kechida, A. A review on early wildfire detection from unmanned aerial vehicles using deep learning-based computer vision algorithms. Signal Process. 2022, 190, 108309.

19. Barmpoutis, P.; Papaioannou, P.; Dimitropoulos, K.; Grammalidis, N. A review on early forest fire detection systems using optical remote sensing. Sensors 2020, 20, 6442.

20. Martínez-de Dios, J.R.; Merino, L.; Ollero, A. Fire detection using autonomous aerial vehicles with infrared and visual cameras. IFAC Proc. Vol. 2005, 38, 660–665.

21. Rossi, L.; Toulouse, T.; Akhloufi, M.; Pieri, A.; Tison, Y. Estimation of spreading fire geometrical characteristics using near infrared stereovision. In Proceedings of the Three-Dimensional Image Processing (3DIP) and Applications, Burlingame, CA, USA, 12 March 2013; Volume 8650, pp. 65–72.

22. Lu, Y.; Wu, Y.; Liu, B.; Zhang, T.; Li, B.; Chu, Q.; Yu, N. Cross-modality person reidentification with shared-specific feature transfer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 13379–13389.

23. Zhao, Y.; Ma, J.; Li, X.; Zhang, J. Saliency detection and deep learning-based wildfire identification in UAV imagery. Sensors 2018, 18, 712.

24. Barmpoutis, P.; Stathaki, T.; Dimitropoulos, K.; Grammalidis, N. Early fire detection based on aerial 360-degree sensors, deep convolution neural networks and exploitation of fire dynamic textures. Remote Sens. 2020, 12, 3177.

25. Tsalera, E.; Papadakis, A.; Voyiatzis, I.; Samarakou, M. CNN-based, contextualized, real-time fire detection in computational resource-constrained environments. Energy Rep. 2023, 9, 247–257.

26. Shirvani, Z.; Abdi, O.; Goodman, R.C. High-resolution semantic segmentation of woodland fires using residual attention UNet and time series of Sentinel-2. Remote Sens. 2023, 15, 1342.

27. de Almeida Pereira, G.H.; Fusioka, A.M.; Nassu, B.T.; Minetto, R. Active fire detection in Landsat-8 imagery: A large-scale dataset and a deep-learning study. ISPRS J. Photogramm. Remote Sens. 2021, 178, 171–186.

28. Hu, X.; Jiang, F.; Qin, X.; Huang, S.; Yang, X.; Meng, F. An optimized smoke segmentation method for forest and grassland fire based on the UNet framework. Fire 2024, 7, 68.

29. Fahim-Ul-Islam, M.; Tabassum, N.; Chakrabarty, A.; Aziz, S.M.; Shirmohammadi, M.; Khonsari, N.; Kwon, H.-H.; Piran, J. Wildfire Detection Powered by Involutional Neural Network and Multi-Task Learning with Dark Channel Prior Technique. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 19095–19114.

30. Zhou, T.; Fu, H.; Chen, G.; Zhou, Y.; Fan, D.-P.; Shao, L. Specificity-preserving RGB-D saliency detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 4681–4691.

31. Rui, X.; Li, Z.; Zhang, X.; Li, Z.; Song, W. A RGB-Thermal based adaptive modality learning network for day–night wildfire identification. Int. J. Appl. Earth Obs. Geoinf. 2023, 125, 103554.

32. Safder, Q.; Zhou, F.; Zheng, Z.; Xia, J.; Ma, Y.; Wu, B.; Zhu, M.; He, Y.; Jiang, L. BA_EnCaps: Dense capsule architecture for thermal scrutiny. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–11.

33. Li, H.; Chu, H.K.; Sun, Y. Improving RGB-Thermal Semantic Scene Understanding with Synthetic Data Augmentation for Autonomous Driving. IEEE Robot. Autom. Lett. 2025, 10, 4452–4459.

34. Wang, Y.; Chu, H.K.; Sun, Y. PEAFusion: Parameter-efficient Adaptation for RGB-Thermal fusion-based semantic segmentation. Inf. Fusion 2025, 120, 103030.

35. Li, X.; Chen, S.; Tian, C.; Zhou, H.; Zhang, Z. M2FNet: Mask-Guided Multi-Level Fusion for RGB-T Pedestrian Detection. IEEE Trans. Multimed. 2024, 26, 8678–8690.

36. Zhao, H.; Zhang, L. Dual-stream siamese network for RGB-T dual-modal fusion object tracking on UAV. J. Supercomput. 2025, 81, 1–22.

37. Sun, Y.; Zuo, W.; Liu, M. RTFNet: RGB-thermal fusion network for semantic segmentation of urban scenes. IEEE Robot. Autom. Lett. 2019, 4, 2576–2583.

38. Deng, F.; Feng, H.; Liang, M.; Wang, H.; Yang, Y.; Gao, Y.; Chen, J.; Hu, J.; Guo, X.; Lam, T.L. FEANet: Feature-enhanced attention network for RGB-thermal real-time semantic segmentation. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 4467–4473.

39. Zhou, W.; Liu, J.; Lei, J.; Yu, L.; Hwang, J.-N. GMNet: Graded-feature multilabel-learning network for RGB-thermal urban scene semantic segmentation. IEEE Trans. Image Process. 2021, 30, 7790–7802.

40. Dong, S.; Zhou, W.; Xu, C.; Yan, W. EGFNet: Edge-aware guidance fusion network for RGB–thermal urban scene parsing. IEEE Trans. Intell. Transp. Syst. 2023, 25, 657–669.

41. Guo, S.; Hu, B.; Huang, R. Real-time flame segmentation based on RGB-thermal fusion. In Proceedings of the 2021 IEEE International Conference on Robotics and Biomimetics (ROBIO), Sanya, China, 27–31 December 2021; pp. 1435–1440.

42. Chen, X.; Hopkins, B.; Wang, H.; O'Neill, L.; Afghah, F.; Razi, A.; Fulé, P.; Coen, J.; Rowell, E.; Watts, A. Wildland fire detection and monitoring using a drone-collected RGB/IR image dataset. IEEE Access 2022, 10, 121301–121317.

43. Qiao, L.; Li, S.; Zhang, Y.; Yan, J. Early wildfire detection and distance estimation using aerial visible-infrared images. IEEE Trans. Ind. Electron. 2024, 71, 16695–16705.

44. Lu, J.; Yang, J.; Batra, D.; Parikh, D. Hierarchical question-image co-attention for visual question answering. Adv. Neural Inf. Process. Syst. 2016, 29, 289–297.

45. Berman, M.; Triki, A.R.; Blaschko, M.B. The Lovász-softmax loss: A tractable surrogate for the optimization of the intersection-over-union measure in neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 4413–4421.

46. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

47. Li, G.; Wang, Y.; Liu, Z.; Zhang, X.; Zeng, D. RGB-T semantic segmentation with location, activation, and sharpening. IEEE Trans. Circuits Syst. Video Technol. 2022, 33, 1223–1235.

48. Zhang, Q.; Zhao, S.; Luo, Y.; Zhang, D.; Huang, N.; Han, J. ABMDRNet: Adaptive-weighted bi-directional modality difference reduction network for RGB-T semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 2633–2642.

49. Lv, Y.; Liu, Z.; Li, G. Context-aware interaction network for RGB-T semantic segmentation. IEEE Trans. Multimed. 2024, 26, 6348–6360.

| Image Source | UAV | Ground |
|---|---|---|
| Count (%) | 976 (48.75%) | 1026 (51.25%) |

| Lighting Conditions | Day | Night |
|---|---|---|
| Count (%) | 1073 (53.6%) | 929 (46.4%) |

| Flame type (percentage) | Small (S1) (0–1%) | Small-mid (S2) (1–5%) | Mid (S3) (5–10%) | Mid-large (S4) (10–15%) | Large (S5) (15–100%) |
|---|---|---|---|---|---|
| Count (%) | 951 (47.5%) | 522 (26.1%) | 264 (13.2%) | 139 (6.9%) | 126 (6.3%) |
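
The flame-type split above can be illustrated with a small bucketing routine. This is only a sketch under two assumptions that the table does not state: the percentage is the share of image pixels labelled as flame, and each bin includes its lower bound but not its upper bound. The function name `flame_size_class` is hypothetical.

```python
def flame_size_class(mask):
    """Assign S1..S5 to a binary mask (list of rows) by flame-pixel share.

    Thresholds (1%, 5%, 10%, 15%) come from the table; treating them as
    exclusive upper bounds is an assumption.
    """
    total = sum(len(row) for row in mask)
    flame = sum(1 for row in mask for value in row if value)
    share = 100.0 * flame / total
    for name, upper in (("S1", 1), ("S2", 5), ("S3", 10), ("S4", 15)):
        if share < upper:
            return name
    return "S5"


# 10x10 toy mask with 7 flame pixels, i.e. a 7% flame share.
mask = [[0] * 10 for _ in range(10)]
for x in range(7):
    mask[0][x] = 1
print(flame_size_class(mask))  # S3
```

With these bins, nearly three quarters of the 2002 images (S1 and S2 together) contain flames covering less than 5% of the frame.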

| Method | Fusion Strategy | Feature Utilization Level | Supervision Mechanism | Limitations |
|---|---|---|---|---|
| RTFNet [37] | Element-wise addition in the encoding stage | Single fusion layer | None | Simple fusion, ignores modality differences |
| EGFNet [40] | Edge-guided fusion + multi-task supervision | Multi-scale fusion | Edge + semantic supervision | Edge extraction relies on traditional operators, lacking cross-modality interaction |
| LASNet [47] | Independent processing of high/mid/low-level features | Hierarchical fusion | Multi-level supervision | Complex modules, limited generalization, edges not emphasized |
| AMLNet [31] | Triple-decoder structure + modality-specific/shared features | Parallel modality-specific and shared features | Three supervision branches | Large number of parameters, complex training, slow inference |
| MDRNet [48] | Bidirectional modality difference reduction + channel-weighted fusion | Multi-scale context modeling | None | Heavy modality conversion modules, high computational cost |
| CAINet [49] | Context-aware interaction + auxiliary supervision | Multi-level interaction space | Multi-task supervision | Complex model structure, high inference cost |
| BFCNet (Ours) | Edge-guided + multi-level fusion | Low/mid/high levels processed separately | Edge supervision + semantic supervision | Clear structure, sufficient fusion, strong edge awareness |

| Methods | UAV IoU (%) | UAV F1 (%) | Ground IoU (%) | Ground F1 (%) |
|---|---|---|---|---|
| UNet-RGB | 63.88 | 77.96 | 80.38 | 89.13 |
| UNet-T | 61.91 | 76.48 | 76.11 | 86.44 |
| RTFNet | 69.94 | 82.31 | 78.43 | 87.91 |
| EGFNet | 58.40 | 73.74 | 73.68 | 84.84 |
| LASNet | 74.61 | 85.46 | 83.26 | 90.87 |
| AMLNet | 76.39 | 86.61 | 81.61 | 89.88 |
| MDRNet | 67.34 | 80.48 | 79.31 | 88.46 |
| CAINet | 76.86 | 86.91 | 84.94 | 91.85 |
| BFCNet | 78.24 | 87.79 | 86.10 | 92.53 |

| Methods | Day IoU (%) | Day F1 (%) | Night IoU (%) | Night F1 (%) |
|---|---|---|---|---|
| RTFNet | 78.44 | 87.92 | 82.48 | 90.40 |
| EGFNet | 74.02 | 85.07 | 79.97 | 88.87 |
| LASNet | 83.46 | 90.99 | 86.96 | 93.03 |
| AMLNet | 81.58 | 89.86 | 86.35 | 92.68 |
| MDRNet | 79.45 | 88.55 | 84.44 | 91.56 |
| CAINet | 85.05 | 91.92 | 89.88 | 94.67 |
| BFCNet | 86.19 | 92.58 | 89.61 | 94.52 |

| Methods | S1 IoU (%) | S1 F1 (%) | S2 IoU (%) | S2 F1 (%) | S3 IoU (%) | S3 F1 (%) | S4 IoU (%) | S4 F1 (%) | S5 IoU (%) | S5 F1 (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| UNet-RGB | 57.04 | 72.64 | 71.65 | 83.48 | 84.77 | 91.76 | 85.97 | 92.45 | 91.34 | 95.48 |
| UNet-T | 65.96 | 79.49 | 71.83 | 83.61 | 78.56 | 87.99 | 86.10 | 92.53 | 91.07 | 95.33 |
| RTFNet | 65.19 | 78.93 | 74.29 | 85.25 | 79.19 | 88.39 | 83.32 | 90.90 | 87.97 | 93.60 |
| EGFNet | 51.55 | 68.03 | 67.42 | 80.54 | 77.64 | 87.41 | 82.67 | 90.51 | 88.44 | 93.87 |
| LASNet | 70.49 | 82.69 | 78.26 | 87.80 | 85.69 | 92.29 | 86.18 | 92.58 | 92.26 | 95.98 |
| AMLNet | 73.40 | 84.66 | 78.13 | 87.72 | 83.03 | 90.73 | 85.23 | 92.03 | 90.62 | 95.08 |
| MDRNet | 63.84 | 77.93 | 73.58 | 84.78 | 82.23 | 90.25 | 84.62 | 91.67 | 90.53 | 95.03 |
| CAINet | 74.39 | 85.31 | 80.70 | 89.32 | 87.86 | 93.54 | 89.12 | 94.25 | 93.81 | 96.81 |
| BFCNet | 75.14 | 85.81 | 81.94 | 90.08 | 87.50 | 93.33 | 89.07 | 94.22 | 94.44 | 97.14 |

| No. | Configuration | IoU (%) | F1 (%) |
|---|---|---|---|
| 1 | Baseline | 83.57 | 91.05 |
| 2 | Baseline + one of BEM/FAM/CLM | 85.61 | 92.23 |
| 3 | Baseline + one of BEM/FAM/CLM | 86.37 | 92.69 |
| 4 | Baseline + one of BEM/FAM/CLM | 85.88 | 92.40 |
| 5 | Baseline + two of BEM/FAM/CLM | 86.72 | 92.89 |
| 6 | Baseline + two of BEM/FAM/CLM | 86.28 | 92.64 |
| 7 | Baseline + two of BEM/FAM/CLM | 86.97 | 93.03 |
| 8 | Baseline + BEM + FAM + CLM | 88.25 | 93.76 |
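
To make the overall effect of the three modules concrete, the short calculation below compares configuration 1 (baseline only) with configuration 8 (all modules), using only the numbers from the ablation table. The variable names are illustrative.

```python
# IoU and F1 (in %) copied from rows 1 and 8 of the ablation table.
baseline = {"IoU": 83.57, "F1": 91.05}
full_model = {"IoU": 88.25, "F1": 93.76}

# Absolute gain in percentage points for each metric.
gains = {k: round(full_model[k] - baseline[k], 2) for k in baseline}

# Relative IoU improvement with respect to the baseline, in percent.
relative_iou_gain = round(100 * gains["IoU"] / baseline["IoU"], 2)

print(gains)  # {'IoU': 4.68, 'F1': 2.71}
print(relative_iou_gain)
```

The full model therefore gains 4.68 IoU points and 2.71 F1 points over the baseline, and every intermediate configuration in the table falls between these two endpoints.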

| Aspects | Models | IoU (%) | F1 (%) |
|---|---|---|---|
| Full model | BFCNet (Ours) | 88.25 | 93.76 |
| BEM | w/o sum | 86.79 | 92.93 |
| BEM | w/o mul | 87.88 | 93.55 |
| BEM | w/o MSFE | 87.85 | 93.53 |
| FAM | w/o mul | 86.27 | 92.63 |
| FAM | w/o CA | 87.55 | 93.36 |
| FAM | w/o GC | 86.79 | 92.93 |
| CLM | w/o Linear | 85.44 | 92.15 |
| CLM | w/o Att | 86.55 | 92.79 |
| CLM | w/o Residual | 87.07 | 93.09 |

| Models | FLOPs (G) | Params (M) |
|---|---|---|
| RTFNet | 286.82 | 254.5 |
| EGFNet | 171.57 | 62.77 |
| LASNet | 198.74 | 93.57 |
| AMLNet | 240.94 | 123.19 |
| MDRNet | 483.45 | 210.87 |
| CAINet | 123.62 | 12.16 |
| BFCNet | 121.79 | 41.52 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yue, T.; Huang, H.; Wang, Q.; Song, B.; Chen, Y. A Multimodal Deep Learning Framework for Accurate Wildfire Segmentation Using RGB and Thermal Imagery. Appl. Sci. 2025, 15, 10268. https://doi.org/10.3390/app151810268
