Abstract

Adversarial attacks against deep learning models achieve high performance in white-box settings but often exhibit low transferability in black-box scenarios, especially against defended models. In this work, we propose Multi-Path Random Restarts (MPRR), which initializes multiple restart points with random noise to optimize gradient updates and improve transferability. Building upon MPRR, we propose the Channel Shuffled Attack Method (CSAM), a new gradient-based attack that generates highly transferable adversarial examples via channel permutation of input images. Extensive experiments on the ImageNet dataset show that MPRR substantially improves the success rates of existing attacks (e.g., boosting FGSM, MI-FGSM, DIM, and TIM by 22.4–38.6%), and CSAM achieves average success rates 13.8–24.0% higher than state-of-the-art methods.

1. Introduction

Deep neural networks (DNNs) have achieved remarkable performance in a wide range of tasks. However, Szegedy et al. [1] demonstrated that DNNs are vulnerable to adversarial examples, generated by applying small, carefully crafted perturbations that induce incorrect model predictions. Consequently, there has been growing interest in developing methods to generate more potent and transferable adversarial examples.

Depending on the attacker’s knowledge of the target model, adversarial attacks are commonly categorized into white-box or black-box attacks. In the white-box scenario, adversarial examples are generated with full access to the model and its gradients; representative methods include FGSM [2], I-FGSM [3], and PGD [4], which typically achieve high success rates. In contrast, black-box attacks operate without direct access to model internals: query-based black-box methods can achieve strong performance but require repeated queries to the target model [5,6,7], while transfer-based approaches generate adversarial examples on a surrogate model and then apply them to the target [8,9,10,11,12].

Numerous approaches have been proposed to enhance the transferability of adversarial examples, including improved gradient estimation techniques (e.g., MI-FGSM [8] and NI-FGSM [13]) and various input transformations such as random resizing and padding [10], translation [11], and scaling [13]. Random restarts further broaden the exploration of the input space by perturbing the initial input with small amounts of random noise instead of using the original image directly; these perturbations are typically drawn from simple distributions (e.g., uniform or Gaussian) in the pixel domain. Despite the substantial gains achieved by these methods, a notable gap persists between the effectiveness of white-box and black-box attacks.

In this work, we propose an adversarial attack enhancer named Multi-Path Random Restarts (MPRR), a novel random-restart mechanism designed to improve adversarial transferability. Unlike conventional single-path random restarts, MPRR simultaneously initializes multiple restart points at each restart and aggregates gradients computed along these parallel paths to better guide the update direction. Empirical results show that the attack success rates increase with the number of restart paths. We further apply MPRR to develop a new gradient-based attack that generates highly transferable adversarial examples. Our main contributions are as follows.

- We propose Multi-Path Random Restarts (MPRR), a novel random-restart mechanism that simultaneously initializes multiple perturbed starting points at each restart and aggregates gradients across these parallel paths to better guide gradient updates. MPRR can be easily combined with existing gradient-based attacks to improve adversarial transferability.

- We empirically demonstrate that MPRR is effective when the perturbations are sampled from various distributions (Gaussian, uniform, Bernoulli and Poisson); in all cases MPRR yields substantial improvements in attack performance.

- We introduce the Channel Shuffled Attack Method (CSAM), a gradient-based attack that employs channel permutations as a form of data augmentation and leverages MPRR to produce highly transferable adversarial examples. CSAM can be integrated with existing input-transformation techniques to further enhance transferability.

- Extensive experiments on the ImageNet dataset show that integrating MPRR with standard attacks increases average black-box success rates by approximately 22.4–38.6%, and that CSAM outperforms state-of-the-art methods by about 13.8–24.0% on average.

2. Related Work

In this section, we focus on random restarts, adversarial transferability, and adversarial defenses.

2.1. Random Restarts

Random restarts start the attack from a point randomly sampled in the vicinity of the input rather than from the input itself. Many attacks benefit from random restarts. For example, PGD [4] adds random restarts to BIM (i.e., I-FGSM), which can substantially improve attack performance. DAA [14] builds on PGD and proposes a powerful variant based on random restarts. FAB [15] generates minimal perturbations with a single restart. ODS [16] performs random restarts in the output space to further improve both white-box and black-box attacks. Because random restarts allow a wider exploration of the input or output space, they are particularly effective against models with gradient-masking defenses [17].

2.2. Adversarial Transferability

Adversarial transferability refers to the ability of adversarial examples generated on one model to attack other models. Many methods have been proposed to improve the transferability of adversarial attacks. MI-FGSM [8] introduces momentum into the iterative attack process and shows that attacking an ensemble of models generates more transferable adversarial examples. NI-FGSM [13] uses the Nesterov Accelerated Gradient (NAG) to improve adversarial transferability. DIM [10] further improves transferability by randomly resizing and padding the input examples. TIM [11] exploits translation invariance to achieve better transferability. SIM [13] averages gradients over scaled copies of the input and introduces the notion of model augmentation to improve adversarial transferability. Inspired by mixup [18], AAM [9] mixes examples from other categories into the original input by linear interpolation and then computes the average gradient to generate highly transferable adversarial examples. VT [19] uses the variance of gradients in the neighborhood of the input to tune the current update direction and thus improve the transferability of adversarial attacks.

2.3. Adversarial Defenses

The existence of adversarial examples raises security concerns for the deployment of neural networks, especially in security-sensitive areas such as autonomous driving and face recognition, which makes the study of defenses particularly important. Adversarial training [2,20], one of the most effective defenses, adds adversarial examples to the training set and retrains the model to improve robustness. Ensemble adversarial training [21] extends the training set with adversarial examples generated on multiple models to train a more robust classifier. Feature squeezing methods such as bit-depth reduction (Bit-Red) [22] and spatial smoothing reduce the effect of adversarial perturbations on the model. The high-level representation guided denoiser (HGD) [23] is designed to remove adversarial perturbations using a denoiser guided by high-level representations. Random resizing and padding (R&P) [24] of the input also mitigates the effect of adversarial examples. ComDefend [25] is an end-to-end network that compresses and reconstructs adversarial examples to eliminate adversarial perturbations. Randomized smoothing (RS) [26] trains a certifiably robust classifier. The neural representation purifier (NRP) [27] is trained to purify adversarial examples.

3. Methodology

We first introduce the preliminaries in Section 3.1, then describe MPRR in detail in Section 3.2 and our proposed CSAM in Section 3.3.

3.1. Preliminaries

Let $f$ be a classifier with parameters $\theta$, and let $x$ be an example with ground-truth label $y$. Let $L(x, y; \theta)$ denote the loss function of $f$ (e.g., the cross-entropy loss). Adversarial attacks aim to find an adversarial example $x^{adv}$ that satisfies $f(x^{adv}; \theta) \neq y$ subject to $\|x - x^{adv}\|_p \le \epsilon$, where $\|x - x^{adv}\|_p$ denotes the $p$-norm distance between $x$ and $x^{adv}$. In this work, we focus on $p = \infty$.

Gradient-based attacks are white-box attacks that access the model's gradients to generate adversarial examples. When the generated adversarial examples also fool a black-box model, these attacks act as transfer-based black-box attacks. Our proposed CSAM is a transfer-based black-box attack. We briefly describe the approaches most relevant to our work below.

Fast Gradient Sign Method (FGSM) [2] maximizes the loss by perturbing the input in the direction of the sign of the gradient, generating an adversarial example with a single-step update:

$$x^{adv} = x + \alpha \cdot \mathrm{sign}\left(\nabla_x L(x, y; \theta)\right),$$

where α is the step size, sign(·) is the sign function, and $\nabla_x L(x, y; \theta)$ is the gradient of the loss with respect to the input.
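The FGSM update above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' code: `grad_fn(x, y)` is a hypothetical callable assumed to return $\nabla_x L(x, y; \theta)$ with the same shape as x (in practice it would come from automatic differentiation of the surrogate model).

```python
import numpy as np


def fgsm(x, y, grad_fn, alpha):
    """Single-step FGSM: x_adv = x + alpha * sign(grad_x L(x, y)).

    grad_fn is a hypothetical callable returning the loss gradient with
    respect to the input x (same shape as x).
    """
    return x + alpha * np.sign(grad_fn(x, y))
```

Pixel-range clipping (e.g., to [0, 1]) is omitted so that the sketch mirrors the formula exactly.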

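Iterative Fast Gradient Sign Method (I-FGSM) [3] is an iterative variant of FGSM that applies several updates with a smaller step size:

$$x_{t+1}^{adv} = \mathrm{Clip}_x^{\epsilon}\left\{x_t^{adv} + \alpha \cdot \mathrm{sign}\left(\nabla_{x_t^{adv}} L(x_t^{adv}, y; \theta)\right)\right\}, \quad x_0^{adv} = x,$$

where $\mathrm{Clip}_x^{\epsilon}(\cdot)$ restricts the generated adversarial example to the ϵ-ball of the original example.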
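A minimal sketch of I-FGSM, again assuming a hypothetical `grad_fn` gradient oracle. The step size α = ϵ/T is a common choice assumed here rather than a setting taken from this paper, and `clip_eps` implements $\mathrm{Clip}_x^{\epsilon}$ as an element-wise projection onto the L∞ ball.

```python
import numpy as np


def clip_eps(x_adv, x, eps):
    """Clip_x^eps: project x_adv onto the L_inf ball of radius eps around x."""
    return np.clip(x_adv, x - eps, x + eps)


def i_fgsm(x, y, grad_fn, eps, T):
    """I-FGSM with T steps of size alpha = eps / T (assumed step size)."""
    alpha = eps / T
    x_adv = x.copy()  # x_0^adv = x
    for _ in range(T):
        step = alpha * np.sign(grad_fn(x_adv, y))
        x_adv = clip_eps(x_adv + step, x, eps)
    return x_adv
```

Practical image pipelines usually also clip to the valid pixel range after each step; that extra projection is left out here.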

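Momentum Iterative Fast Gradient Sign Method (MI-FGSM) [8] enhances transferability by introducing momentum into I-FGSM:

$$g_{t+1} = \mu \cdot g_t + \frac{\nabla_{x_t^{adv}} L(x_t^{adv}, y; \theta)}{\left\|\nabla_{x_t^{adv}} L(x_t^{adv}, y; \theta)\right\|_1},$$

$$x_{t+1}^{adv} = \mathrm{Clip}_x^{\epsilon}\left\{x_t^{adv} + \alpha \cdot \mathrm{sign}(g_{t+1})\right\},$$

where $g_0 = 0$ and μ is the decay factor.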
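The momentum accumulation can be sketched as follows, assuming a hypothetical `grad_fn` oracle and α = ϵ/T. The small constant added to the L1 norm is an implementation convenience to avoid division by zero, not part of the formula.

```python
import numpy as np


def mi_fgsm(x, y, grad_fn, eps, T, mu=1.0):
    """MI-FGSM: accumulate L1-normalised gradients with decay factor mu."""
    alpha = eps / T                  # assumed step size
    g = np.zeros_like(x)             # g_0 = 0
    x_adv = x.copy()
    for _ in range(T):
        grad = grad_fn(x_adv, y)
        # Momentum update; 1e-12 only guards against a zero gradient.
        g = mu * g + grad / (np.sum(np.abs(grad)) + 1e-12)
        x_adv = np.clip(x_adv + alpha * np.sign(g), x - eps, x + eps)
    return x_adv
```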

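Scale-Invariant Method (SIM) [13] exploits the scale-invariant property of models and averages the gradients over a set of copies of the input image scaled by factors $1/2^i$ to enhance adversarial transferability:

$$\bar{g}_{t+1} = \frac{1}{n} \sum_{i=0}^{n-1} \nabla_{x_t^{adv}} L\left(S_i(x_t^{adv}), y; \theta\right),$$

where $S_i(x) = x / 2^i$ and n is the number of scale copies of the input image.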
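A sketch of the SIM gradient average, assuming a hypothetical `grad_fn(z, y)` that returns the gradient with respect to its own input z. Because the formula differentiates with respect to $x_t^{adv}$, the chain rule contributes a factor $1/2^i$ for each scale copy; automatic differentiation with respect to $x_t^{adv}$ would include this factor implicitly, so the sketch applies it by hand.

```python
import numpy as np


def sim_gradient(x, y, grad_fn, n=5):
    """Average gradient over n scale copies S_i(x) = x / 2**i, i = 0..n-1.

    grad_fn(z, y) returns dL/dz; by the chain rule, the gradient of
    L(x / 2**i) with respect to x is grad_fn(x / 2**i, y) / 2**i.
    """
    total = np.zeros_like(x, dtype=float)
    for i in range(n):
        scale = 2.0 ** i
        total += grad_fn(x / scale, y) / scale
    return total / n
```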

3.2. The Multi-Path Random Restarts

Generating adversarial examples can be viewed as analogous to the standard optimization process of training a neural network [13]; from this perspective, the low transferability of adversarial attacks stems from 'overfitting' to the surrogate model. Random restarts search the input space more extensively from different starting points, which reduces this 'overfitting' and thus improves transferability. However, conventional random restarts optimize along a single path and therefore remain prone to falling into local optima. We therefore propose Multi-Path Random Restarts (MPRR), which initializes multiple restart points simultaneously and uses them jointly to optimize adversarial examples with improved transferability.

Definition 1

(Single-Path Random Restarts). Given a classifier f with parameters θ and loss function $L(x, y; \theta)$, for an arbitrary input example x, a restart path denotes the process from x to the resulting model gradient. It can be defined as:

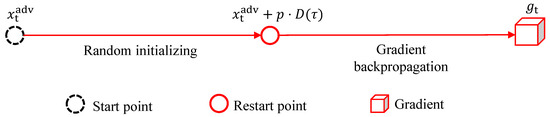

where ∂ denotes the partial derivative, the random noise is sampled from distribution D (e.g., a Gaussian or uniform distribution) with parameter τ, and p is the proportion of random noise. Figure 1 visualizes single-path random restarts.

Figure 1.

Single-path random restarts.
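Since Definition 1 is only available as an image, the following sketch rests on explicit assumptions: D is taken to be Gaussian with standard deviation τ, and the restart point is assumed to be $x + p \cdot \xi$ with $\xi \sim D(\tau)$. Both choices, and the hypothetical `grad_fn` oracle, are illustrative rather than the paper's exact formulation.

```python
import numpy as np


def single_path_gradient(x, y, grad_fn, p, tau, rng):
    """One restart path: sample noise, move to a restart point, take the gradient.

    Assumptions (not from the paper): D is Gaussian with standard deviation
    tau, and the restart point is x + p * xi.
    """
    xi = rng.normal(loc=0.0, scale=tau, size=x.shape)  # xi ~ D(tau)
    return grad_fn(x + p * xi, y)
```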

Definition 2

(Multi-Path Random Restarts). At the t-th iteration, random noise is both added to and subtracted from the input example to obtain restart points. Exploring the input space through multiple paths, i.e., multiple restart points, helps avoid local optima:

The multi-path random restart can be defined as follows:

Each restart point constitutes a separate path, and m is the number of groups of restart paths; because each noise sample is both added and subtracted, m groups yield 2m restart paths. At each restart, the aggregated gradient is computed from the gradients of all restart paths to improve adversarial transferability. For brevity, we use this gradient notation to denote MPRR in the following. MPRR can be combined with any gradient-based attack, such as FGSM [2], MI-FGSM [8], TIM [11], DIM [10] and SIM [13]. For example, combining MPRR with FGSM yields FGSM(MPRR), whose gradient update can be expressed as follows.

Here, the gradient obtained by MPRR can be expressed as:

The adversarial example is then generated as:
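Definition 2 and FGSM(MPRR) can be illustrated with the sketch below. It assumes Gaussian noise (as in MPRR-G), restart points $x \pm p \cdot \xi$, and a uniform average over the 2m path gradients; the paper's exact aggregation rule and weights are not recoverable from this text, so `mprr_gradient` and `fgsm_mprr` are hypothetical helpers built on a hypothetical `grad_fn` oracle.

```python
import numpy as np


def mprr_gradient(x, y, grad_fn, m, p, tau, rng):
    """Multi-path gradient: m groups, each adding and subtracting the same noise.

    The 2m path gradients are averaged uniformly; this aggregation rule is
    an assumption and the paper's weighting may differ.
    """
    total = np.zeros_like(x, dtype=float)
    for _ in range(m):
        xi = rng.normal(0.0, tau, size=x.shape)  # Gaussian noise (MPRR-G)
        total += grad_fn(x + p * xi, y)          # path with noise added
        total += grad_fn(x - p * xi, y)          # path with noise subtracted
    return total / (2 * m)


def fgsm_mprr(x, y, grad_fn, alpha, m, p, tau, rng):
    """FGSM(MPRR): one sign step along the aggregated multi-path gradient."""
    return x + alpha * np.sign(mprr_gradient(x, y, grad_fn, m, p, tau, rng))
```

One consequence of pairing $+\xi$ with $-\xi$: if the gradient is locally linear in the input, the noise terms cancel exactly, so the multi-path average departs from the clean gradient only through the curvature of the loss around x.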

3.3. The Channel Shuffled Attack Method

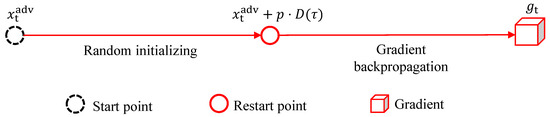

DIM [10] and SIM [13] use input transformations to mitigate 'overfitting' to the surrogate model and improve adversarial transferability. In addition, attacking an ensemble of models is also an effective way to improve transferability. Inspired by these methods, we employ MPRR to build a channel-shuffled attack method, called CSAM. CSAM first shuffles the channels of the input image into all six possible permutations, e.g., converting RGB to RBG by swapping the green and blue channels. Each permuted image then undergoes MPRR-based noise injection, and gradients are computed for the noise-augmented variants. The aggregated gradients update the adversarial examples, leveraging both channel diversity and multi-path exploration. The overall pipeline is shown in Figure 2.

Figure 2.

The overview of proposed CSAM.

The channels of the original RGB input image are shuffled to obtain six input images, one for each possible channel arrangement. We denote the set of these six input images by $\mathcal{X}$:

$$\mathcal{X} = \{x_{RGB},\ x_{RBG},\ x_{GRB},\ x_{GBR},\ x_{BRG},\ x_{BGR}\},$$

where each element corresponds to a unique permutation of the RGB channels and $x_{RGB} = x$. For instance, $x_{RBG}$ swaps the green and blue channels of the original image x, enhancing input diversity.
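The six channel arrangements can be generated with `itertools.permutations`, as in the sketch below. It assumes a channel-last (H × W × 3) NumPy array; for channel-first tensors the permutation would index the channel axis instead.

```python
from itertools import permutations

import numpy as np


def channel_permutations(x):
    """Return all 3! = 6 channel orderings of an H x W x 3 image (identity first).

    itertools.permutations yields (0,1,2)=RGB, (0,2,1)=RBG, (1,0,2)=GRB,
    (1,2,0)=GBR, (2,0,1)=BRG, (2,1,0)=BGR in that order.
    """
    return [x[..., list(perm)] for perm in permutations(range(3))]
```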

During each iteration, we replace the input image x with this set of six channel-permuted images, iteratively updating a set of adversarial examples instead of a single one. The gradient at the t-th iteration can be expressed as:

For each channel-permuted adversarial image at the t-th iteration, we use MPRR to optimize the gradient, which can be expressed as:

where m is the number of groups of restart paths, n is the number of scale copies of each restart point, and the noise-portion coefficients control the proportion of random noise sampled from distribution D. Accordingly, the gradient update rule of CSAM is as follows:

We describe CSAM in detail in Algorithm 1.

The computational complexity of CSAM arises from its multi-path design. Its time complexity is dominated by gradient computations: in each of the T iterations, CSAM processes m restart groups, n scale copies and 6 channel permutations, leading to O(T × m × n × 6) gradient computations.
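To make the cost concrete, the hypothetical helper below counts surrogate-gradient evaluations. It assumes T = 10 and n = 5 (values commonly used in [8] and [13], not values stated in this text) together with m = 6 from Section 4.4, and it assumes each restart group contributes two paths (noise added and subtracted).

```python
def csam_gradient_calls(T, m, n, num_perms=6, paths_per_group=2):
    """Number of surrogate-gradient evaluations for one CSAM run.

    paths_per_group=2 assumes each restart group adds and subtracts the same
    noise; set it to 1 to match the O(T x m x n x 6) count in the text.
    """
    return T * m * n * num_perms * paths_per_group
```

Under these assumptions, one CSAM run needs 10 × 6 × 5 × 6 × 2 = 3600 gradient evaluations (1800 if each group is counted once, matching the O(T × m × n × 6) expression), compared with 10 × 5 = 50 for SIM.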

Algorithm 1 CSAM

Require: A classifier f with loss function L; a benign example x with ground-truth label y; the maximum perturbation ϵ; the number of iterations T; and the decay factor μ.
Ensure: An adversarial example $x^{adv}$.
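Because the body of Algorithm 1 is not reproduced here, the following is only a hypothetical end-to-end sketch of how the pieces of Section 3.3 could fit together. Assumptions not taken from the paper: all six permuted copies share a single underlying adversarial image (so each gradient is mapped back to the original channel order via the inverse permutation), noise is added before the SIM-style scaling, all path gradients are averaged uniformly, and the outer loop follows MI-FGSM with α = ϵ/T. Sampling p ~ U(0.1, 0.5) follows Section 4.4; `grad_fn` is a hypothetical gradient oracle.

```python
from itertools import permutations

import numpy as np


def csam_gradient(x_adv, y, grad_fn, m, n, p, tau, rng):
    """Hypothetical CSAM gradient: average over 6 channel permutations,
    m antithetic Gaussian noise groups (2 paths each) and n scale copies.
    """
    total = np.zeros_like(x_adv, dtype=float)
    count = 0
    for perm in permutations(range(3)):
        perm = list(perm)
        inv = np.argsort(perm)            # inverse permutation of the channels
        xp = x_adv[..., perm]             # channel-permuted copy of x_adv
        for _ in range(m):
            xi = rng.normal(0.0, tau, size=xp.shape)
            for sign in (1.0, -1.0):      # add and subtract the same noise
                for i in range(n):
                    scale = 2.0 ** i      # SIM-style scale copy S_i
                    g = grad_fn((xp + sign * p * xi) / scale, y) / scale
                    total += g[..., inv]  # chain rule: back to original channel order
                    count += 1
    return total / count


def csam(x, y, grad_fn, eps, T, mu=1.0, m=6, n=5, tau=1.0, rng=None):
    """MI-FGSM-style loop driven by csam_gradient; p ~ U(0.1, 0.5) each iteration."""
    rng = np.random.default_rng() if rng is None else rng
    alpha = eps / T
    g = np.zeros_like(x, dtype=float)
    x_adv = x.astype(float)
    for _ in range(T):
        p = rng.uniform(0.1, 0.5)
        grad = csam_gradient(x_adv, y, grad_fn, m, n, p, tau, rng)
        g = mu * g + grad / (np.sum(np.abs(grad)) + 1e-12)
        x_adv = np.clip(x_adv + alpha * np.sign(g), x - eps, x + eps)
    return x_adv
```

Mapping each gradient back through the inverse permutation matters under this reading: without it, a gradient computed on, e.g., the RBG copy would update the green channel of $x^{adv}$ with information about its blue channel.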

4. Experiments

To validate the effectiveness of the proposed MPRR and CSAM, we conduct extensive experiments on the standard ImageNet dataset [28]. We first specify the experimental setups. Then we compare our method with other competitive methods in various experimental settings. Finally, we investigate the hyper-parameters of CSAM.

4.1. Experimental Setups

Dataset. We select 1000 benign images belonging to 1000 categories from the ILSVRC 2012 validation set [28]. Nearly all of these images are correctly classified by all the evaluated models.

Models. We validate the effectiveness of MPRR and CSAM on four popular normally trained models, namely Inception-v3 (Inc-v3) [29], Inception-v4 (Inc-v4), Inception-Resnet-v2 (IncRes-v2) [30] and Resnet-v2-101 (Res-101) [31], as well as three ensemble adversarially trained models, i.e., Inc-v3ens3, Inc-v3ens4 and IncRes-v2ens [21]. We also attack seven advanced defense models that are robust against black-box attacks on ImageNet, i.e., HGD [23], R&P [24], NIPS-r3, NRP [27], RS [26], Bit-Red [22] and ComDefend [25].

Evaluation criteria. We use the attack success rate to compare the performance of different methods. The success rate, a standard metric for adversarial attacks, is the number of misclassified adversarial examples divided by the total number of images.
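The success rate defined above amounts to the following computation in the untargeted setting, where any prediction that differs from the ground-truth label counts as a success; `attack_success_rate` is a hypothetical helper name.

```python
import numpy as np


def attack_success_rate(predictions, labels):
    """Percentage of adversarial examples that the target model misclassifies."""
    predictions = np.asarray(predictions)
    labels = np.asarray(labels)
    return 100.0 * float(np.mean(predictions != labels))
```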

Comparison methods. To evaluate the effectiveness of MPRR, we combine it with several adversarial attack methods, including FGSM [2], MI-FGSM [8], TIM [11] and DIM [10]. To benchmark the performance of CSAM, we compare it against three well-known transfer-based adversarial attacks: SIM [13], AAM [9] and VT [19]. Since SIM, CSAM and AAM involve scaling the input image, we apply the same input scaling to VT for a fair comparison and denote this variant as VTS.

Implementation details. We follow the attack setting in [8] for the maximum perturbation ϵ, the number of iterations T and the step size α. For MI-FGSM, the decay factor . For TIM, we adopt the Gaussian kernel with kernel size . For DIM, the transformation probability . For AAM, the number of samples of different categories and images for mixing with . For VT, the number of images for variance tuning and the bound for variance tuning . For the proposed CSAM, we set the number of scale copies of each restart point , the number of groups of restart paths m = 6, the weight of the gradient , , and randomly sample the portion of random noise p from U(0.1, 0.5).

4.2. Evaluation of MPRR

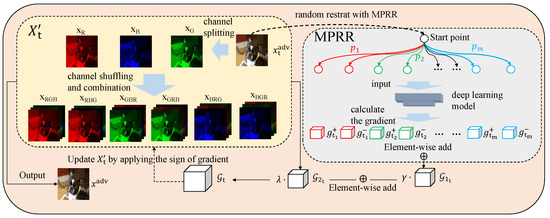

We first combine MPRR with four well-known attacks, i.e., FGSM, MI-FGSM, DIM and TIM, denoted as FGSM (MPRR), MI-FGSM (MPRR), DIM (MPRR) and TIM (MPRR), respectively. We generate adversarial examples on Inc-v3, test them on seven neural networks, and report average attack success rates to verify the effectiveness of MPRR. The random noise in MPRR is sampled from Gaussian, uniform, Bernoulli or Poisson distributions, denoted MPRR-G, MPRR-U, MPRR-B and MPRR-P, respectively. For the uniform distribution, we fix . Figure 3 shows the effect of combining these attacks with MPRR.
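The four noise families can be drawn with NumPy's `Generator` methods as sketched below. How τ parameterizes each distribution (standard deviation, half-width, success probability, rate) is an illustrative assumption, since the concrete values used in the experiments are not given in this text.

```python
import numpy as np


def sample_noise(kind, shape, rng, tau):
    """Draw restart noise for MPRR-G/U/B/P; tau's meaning depends on the distribution.

    The parameterisations below are illustrative assumptions, not the paper's settings.
    """
    if kind == "G":                      # Gaussian N(0, tau^2)
        return rng.normal(0.0, tau, size=shape)
    if kind == "U":                      # uniform on [-tau, tau)
        return rng.uniform(-tau, tau, size=shape)
    if kind == "B":                      # Bernoulli with success probability tau
        return rng.binomial(1, tau, size=shape).astype(float)
    if kind == "P":                      # Poisson with rate tau
        return rng.poisson(tau, size=shape).astype(float)
    raise ValueError(f"unknown noise kind: {kind!r}")
```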

Figure 3.

Average success rates (%) on seven models with adversarial examples crafted on Inc-v3. The values at m = 0 correspond to the success rates of the attacks without MPRR.

Combining existing attacks with MPRR substantially improves adversarial transferability. As the number of restart-path groups m increases, the average attack success rate keeps increasing, but with diminishing gains. Due to hardware limitations, we set m to at most 10, i.e., at most 20 restart paths. As shown in Figure 3, Gaussian noise and the other noise distributions all substantially improve adversarial transferability. For example, MPRR-G improves the average success rates of these attacks against the seven models by about 22.4∼38.6%.

4.3. Evaluation of CSAM

We conduct three experiments: (1) attacking baseline models, (2) combining CSAM with transformation-based attacks, and (3) attacking advanced defense models.

4.3.1. Attacking Baseline Models

We first craft adversarial examples with SIM [13], AAM [9], VTS [19] and our proposed CSAM on each of four normally trained networks and test them on seven neural networks. Table 1 shows the success rates of these attacks.

Table 1.

Success rates (%) of AAM, VTS and CSAM on seven baseline models. The adversarial examples are crafted on Inc-v3, Inc-v4, IncRes-v2, and Res-101, respectively. The best attack success rates are highlighted in bold. * represents white-box attacks.

We observe that CSAM achieves higher attack success rates on both the normally trained and the adversarially trained black-box models while maintaining similar white-box attack performance. In particular, on the adversarially trained models, CSAM outperforms the other three attacks by a much larger margin. For example, when adversarial examples are crafted on Inc-v3, CSAM achieves an attack success rate 44.4% higher than SIM, 39.4% higher than AAM and 20.6% higher than VTS on Inc-v3ens3; 43.6% higher than SIM, 39.9% higher than AAM and 22.3% higher than VTS on Inc-v3ens4; and 43.5% higher than SIM, 41.2% higher than AAM and 20.9% higher than VTS on IncRes-v2ens. Overall, the average attack success rate of CSAM on the seven models is 29.7% higher than that of SIM, 24.0% higher than AAM and 13.8% higher than VTS.

4.3.2. Combined with Transformation-Based Attacks

CSAM can also be easily integrated with transformation-based attacks. We combine SIM, AAM, VTS and CSAM with the input transformation-based attacks DIM and TIM, denoted TD-SIM, TD-AAM, TD-VTS and TD-CSAM, respectively. The experimental results are shown in Table 2.

Table 2.

Success rates (%) of TD-SIM, TD-AAM, TD-VTS and TD-CSAM on seven baseline models. The adversarial examples are crafted on Inc-v3, Inc-v4, IncRes-v2, and Res-101, respectively. The best attack success rates are highlighted in bold. * represents white-box attacks.

As shown in Table 2, TD-CSAM achieves better transferability than TD-SIM, TD-AAM and TD-VTS. When adversarial examples are crafted on Inc-v3, Inc-v4 and IncRes-v2, TD-CSAM achieves average attack success rates of 90.3%, 90% and 91.5% on the seven baseline models, respectively, substantially higher than those of the other three attacks.

4.3.3. Attacking Advanced Defense Models

To further validate the effectiveness of the proposed approach, we craft adversarial examples on Inc-v3 to attack seven advanced defense models: HGD, R&P, NIPS-r3, NRP, RS, Bit-Red and ComDefend. For HGD, R&P, NIPS-r3, RS and ComDefend, we compute the success rates on the official models provided in the corresponding papers. For the remaining defenses (NRP and Bit-Red), we compute the success rates on Inc-v3ens3. The results are shown in Table 3.

Table 3.

Success rates (%) on seven advanced models by TD-AAM, TD-VTS and TD-CSAM respectively. The adversarial examples are crafted on Inc-v3. The best attack success rates are highlighted in bold.

As shown in Table 3, the proposed TD-CSAM outperforms both TD-AAM and TD-VTS on all defense models; SIM and TD-SIM are excluded from this comparison because of their relatively weaker performance. Specifically, TD-CSAM achieves an average attack success rate 22.4% higher than TD-AAM and 14.6% higher than TD-VTS, demonstrating its effectiveness.

4.4. Ablation Studies of Hyper-Parameters

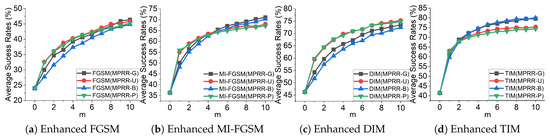

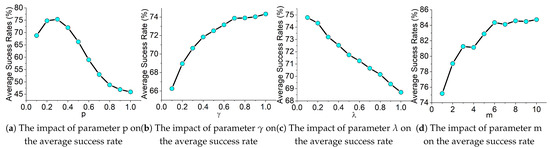

For the number of scale copies n and the input portion, we follow the setup in [13]. We adopt Gaussian noise in CSAM and perform a series of ablation experiments to investigate four hyper-parameters of CSAM, including p, λ and the group number m; all adversarial examples are crafted on the Inc-v3 model. The results are shown in Figure 4.

Figure 4.

Average success rates (%) on seven models with adversarial examples crafted by CSAM on Inc-v3 for various hyper-parameters.

For the coefficient p of random noise, we first fix , , and , then test the effect of different values of p on the performance of CSAM, as shown in Figure 4a. The average attack success rate is highest when and decreases continuously with increasing p.

For the coefficient of , we fix , , and . As shown in Figure 4b, the average success rate increases as the coefficient grows from 0.6 to 1, although the growth slows down.

For the coefficient λ, we fix , , and . As shown in Figure 4c, the average success rate decreases as λ increases.

For the group number m of restart paths, we fix , , . As shown in Figure 4d, the average success rate keeps increasing with m, but the increase slows down once m exceeds 6.

In summary, for the hyper-parameters of CSAM, is set to 1, is set to 0.1, and m is set to 6. The value of p reflects how far the restart point is from the original input; to search the input space more extensively, we randomly sample p from U(0.1, 0.5).

5. Conclusions

In this work, we propose multi-path random restarts (MPRR) to enhance the transferability of existing adversarial attacks. Specifically, we define the input-to-gradient process as a path and initialize multiple paths with random noise to improve the gradient update. In addition, we employ MPRR to design a new gradient-based adversarial attack, called CSAM, which generates highly transferable adversarial examples. Extensive experiments on seven baseline models demonstrate that MPRR substantially improves the transferability of existing competitive attacks and that CSAM outperforms state-of-the-art attacks by 13.8∼24% on average. Moreover, MPRR and CSAM are broadly compatible with other gradient-based attacks, and integrating CSAM with input transformations (e.g., DIM and TIM) further enhances transferability. Empirical results on seven advanced defense models show that this integrated method outperforms state-of-the-art attacks by 14.6∼22.4%.

Limitations and Future Work

The proposed CSAM demonstrates compelling performance in boosting adversarial transferability, but it has a key limitation: its high computational complexity restricts real-time applications. To address this limitation and broaden its scope, future work will prioritize model distillation to reduce computational demands, extend CSAM to natural language processing, investigate ensemble defenses for enhanced robustness, and leverage federated learning techniques to overcome dataset constraints and improve generalizability.

Author Contributions

Conceptualization, J.C. and W.Z.; methodology, H.H.; validation, J.C. and W.Z.; formal analysis, W.Z.; data curation, J.C.; writing—original draft preparation, J.C.; writing—review and editing, J.C.; visualization, W.Z.; supervision, H.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Key Laboratory of Higher Education of Sichuan Province for Enterprise informatization and Internet of Thing grant number 2023WYJ03, the National Natural Science Foundation of China (NSFC) under Grant No. 42574208 and the Chinasoft Education Industry University Collaborative Education Program under Grant No. 240805181282846 and the APC was funded by The Key Laboratory of Higher Education of Sichuan Province for Enterprise informatization and Internet of Thing.

Data Availability Statement

The original data presented in the study are openly available in GitHub at https://github.com/jxdaily/ (accessed on 20 August 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Szegedy, C.; Zaremba, W.; Sutskever, I.; Bruna, J.; Erhan, D.; Goodfellow, I.; Fergus, R. Intriguing properties of neural networks. arXiv 2013, arXiv:1312.6199.

- Goodfellow, I.J.; Shlens, J.; Szegedy, C. Explaining and harnessing adversarial examples. arXiv 2014, arXiv:1412.6572.

- Kurakin, A.; Goodfellow, I.J.; Bengio, S. Adversarial examples in the physical world. In Artificial Intelligence Safety and Security; Chapman and Hall/CRC: Boca Raton, FL, USA, 2018; pp. 99–112.

- Mądry, A.; Makelov, A.; Schmidt, L.; Tsipras, D.; Vladu, A. Towards deep learning models resistant to adversarial attacks. arXiv 2017, arXiv:1706.06083.

- Chen, P.Y.; Zhang, H.; Sharma, Y.; Yi, J.; Hsieh, C.J. Zoo: Zeroth order optimization based black-box attacks to deep neural networks without training substitute models. In Proceedings of the International Workshop on Artificial Intelligence and Security (AISec), Dallas, TX, USA, 3 November 2017; pp. 15–26.

- Cheng, S.; Dong, Y.; Pang, T.; Su, H.; Zhu, J. Improving black-box adversarial attacks with a transfer-based prior. In Proceedings of the NIPS’19: 33rd International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; p. 15947.

- Li, H.; Xu, X.; Zhang, X.; Yang, S.; Li, B. Qeba: Query-efficient boundary-based blackbox attack. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 1221–1230.

- Dong, Y.; Liao, F.; Pang, T.; Su, H.; Zhu, J.; Hu, X.; Li, J. Boosting adversarial attacks with momentum. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 9185–9193.

- Wang, X.; He, X.; Wang, J.; He, K. Admix: Enhancing the transferability of adversarial attacks. In Proceedings of the International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 16158–16167.

- Xie, C.; Zhang, Z.; Zhou, Y.; Bai, S.; Wang, J.; Ren, Z.; Yuille, A.L. Improving transferability of adversarial examples with input diversity. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 2730–2739.

- Dong, Y.; Pang, T.; Su, H.; Zhu, J. Evading defenses to transferable adversarial examples by translation-invariant attacks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 4312–4321.

- He, Z.; Duan, Y.; Zhang, W.; Zou, J.; He, Z.; Wang, Y.; Pan, Z. Boosting adversarial attacks with transformed gradient. Comput. Secur. 2022, 118, 102720.

- Lin, J.; Song, C.; He, K.; Wang, L.; Hopcroft, J.E. Nesterov Accelerated Gradient and Scale Invariance for Adversarial Attacks. In Proceedings of the International Conference on Learning Representations (ICLR), New Orleans, LA, USA, 6–9 May 2019.

- Zheng, T.; Chen, C.; Ren, K. Distributionally adversarial attack. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 2253–2260.

- Croce, F.; Hein, M. Minimally distorted adversarial examples with a fast adaptive boundary attack. In Proceedings of the International Conference on Machine Learning (ICML), Online, 13–18 July 2020; pp. 2196–2205.

- Tashiro, Y.; Song, Y.; Ermon, S. Diversity can be transferred: Output diversification for white-and black-box attacks. NeurIPS 2020, 33, 4536–4548.

- Mosbach, M.; Andriushchenko, M.; Trost, T.; Hein, M.; Klakow, D. Logit pairing methods can fool gradient-based attacks. arXiv 2018, arXiv:1810.12042.

- Zhang, H.; Cisse, M.; Dauphin, Y.N.; Lopez-Paz, D. Mixup: Beyond empirical risk minimization. arXiv 2017, arXiv:1710.09412.

- Wang, X.; He, K. Enhancing the transferability of adversarial attacks through variance tuning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Virtually, 19–25 June 2021; pp. 1924–1933.

- Kurakin, A.; Goodfellow, I.; Bengio, S. Adversarial machine learning at scale. arXiv 2016, arXiv:1611.01236.

- Tramèr, F.; Boneh, D.; Kurakin, A.; Goodfellow, I.; Papernot, N.; McDaniel, P. Ensemble adversarial training: Attacks and defenses. In Proceedings of the International Conference on Learning Representations (ICLR), Vancouver, BC, Canada, 30 April–3 May 2018.

- Xu, W.; Evans, D.; Qi, Y. Feature squeezing: Detecting adversarial examples in deep neural networks. arXiv 2017, arXiv:1704.01155.

- Liao, F.; Liang, M.; Dong, Y.; Pang, T.; Hu, X.; Zhu, J. Defense against adversarial attacks using high-level representation guided denoiser. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 1778–1787.

- Xie, C.; Wang, J.; Zhang, Z.; Ren, Z.; Yuille, A. Mitigating Adversarial Effects Through Randomization. In Proceedings of the International Conference on Learning Representations (ICLR), Vancouver, BC, Canada, 30 April–3 May 2018.

- Jia, X.; Wei, X.; Cao, X.; Foroosh, H. Comdefend: An efficient image compression model to defend adversarial examples. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 6084–6092.

- Cohen, J.; Rosenfeld, E.; Kolter, Z. Certified adversarial robustness via randomized smoothing. In Proceedings of the International Conference on Machine Learning (ICML), Long Beach, CA, USA, 9–15 June 2019; pp. 1310–1320.

- Naseer, M.; Khan, S.; Hayat, M.; Khan, F.S.; Porikli, F. A self-supervised approach for adversarial robustness. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 262–271.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. Imagenet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).