Fault Identification of Seismic Data Based on SEU-Net Approach

Abstract

1. Introduction

2. Materials and Methods

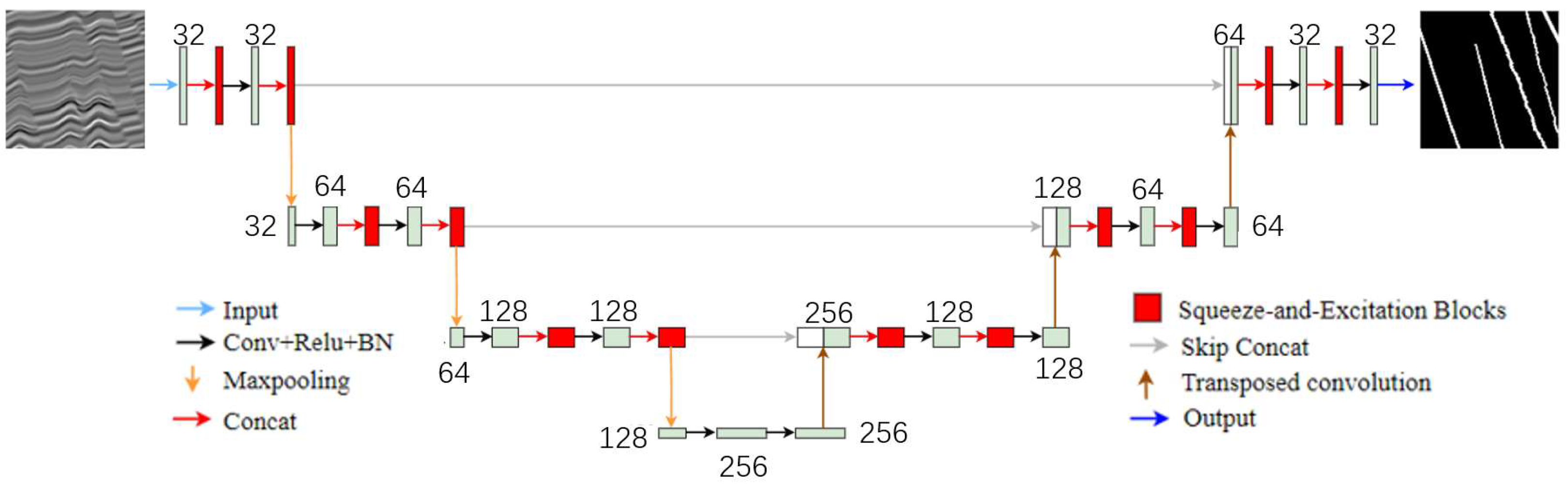

2.1. Network Structure

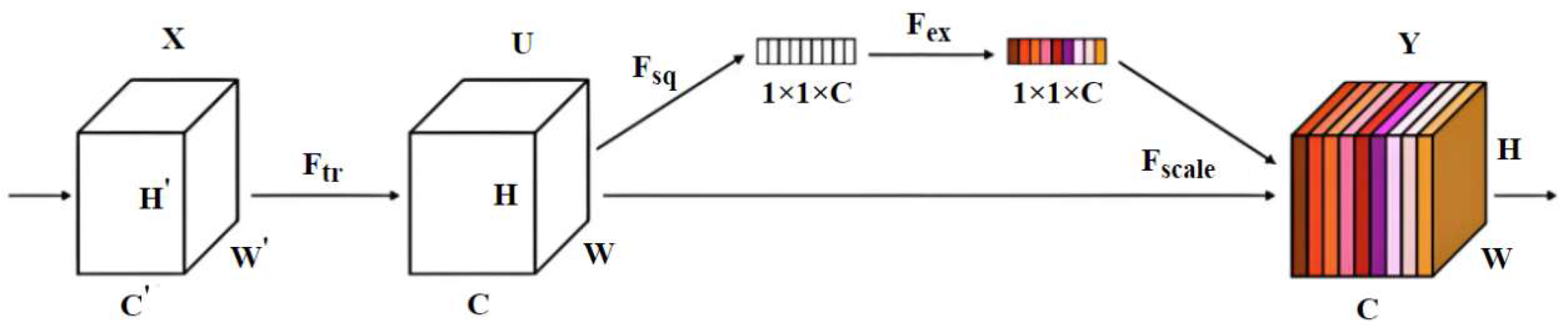

2.2. The SE Block
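Assuming SEU-Net uses the standard squeeze-and-excitation formulation of Hu et al. (CVPR 2018), the block works in three steps. Global average pooling squeezes each channel to one descriptor. Two bias-free fully connected layers, with a ReLU between them and a reduction ratio r, turn those descriptors into per-channel sigmoid gates. Each channel is then rescaled by its gate. The pure-Python helpers below (`squeeze`, `matvec`, `se_block`) and the toy weights are hypothetical illustrations. The reduction ratio and the exact placement of the block inside SEU-Net are not given here.

```python
import math
from typing import List

Matrix = List[List[float]]   # one H x W channel, or a (rows, cols) weight matrix
FeatureMap = List[Matrix]    # C channels of H x W values


def squeeze(features: FeatureMap) -> List[float]:
    """Squeeze: global average pooling gives one descriptor z_c per channel."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in features]


def matvec(weights: Matrix, vec: List[float]) -> List[float]:
    """Bias-free fully connected layer; `weights` has shape (out, in)."""
    return [sum(w * v for w, v in zip(row, vec)) for row in weights]


def se_block(features: FeatureMap, w1: Matrix, w2: Matrix) -> FeatureMap:
    """Squeeze-and-excitation: rescale each channel by a gate in (0, 1).

    w1 has shape (C // r, C) and w2 has shape (C, C // r), where r is the
    reduction ratio. Biases are omitted for brevity.
    """
    z = squeeze(features)
    hidden = [max(0.0, h) for h in matvec(w1, z)]                      # ReLU
    gates = [1.0 / (1.0 + math.exp(-s)) for s in matvec(w2, hidden)]  # sigmoid
    # Excitation: multiply every value of channel c by gates[c].
    return [[[g * x for x in row] for row in ch] for g, ch in zip(gates, features)]
```

For C channels, the two layers add 2C²/r weights per block, which is the overhead the "Structural Optimization" direction in the Conclusions would aim to reduce.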

3. Results and Discussion

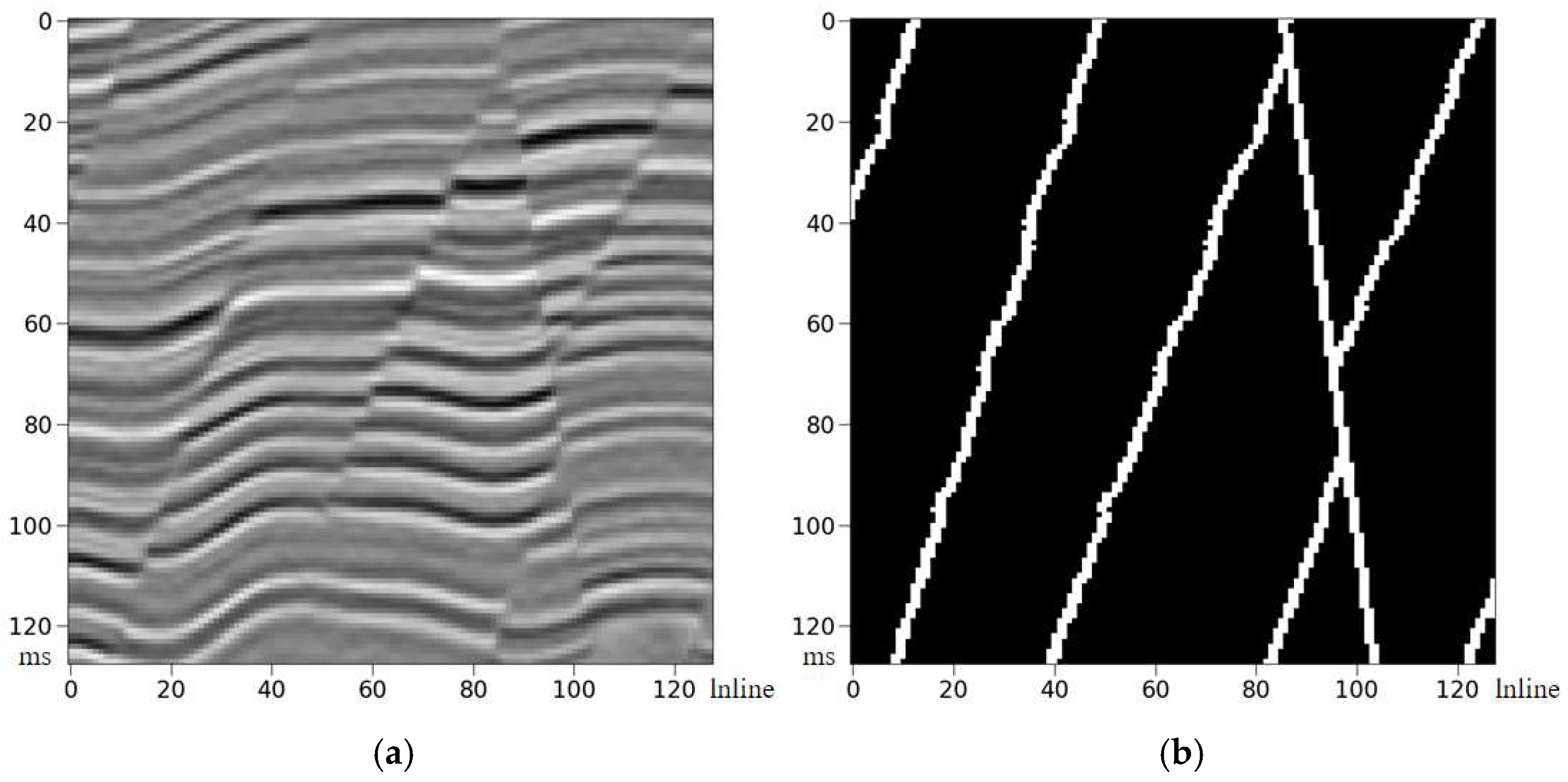

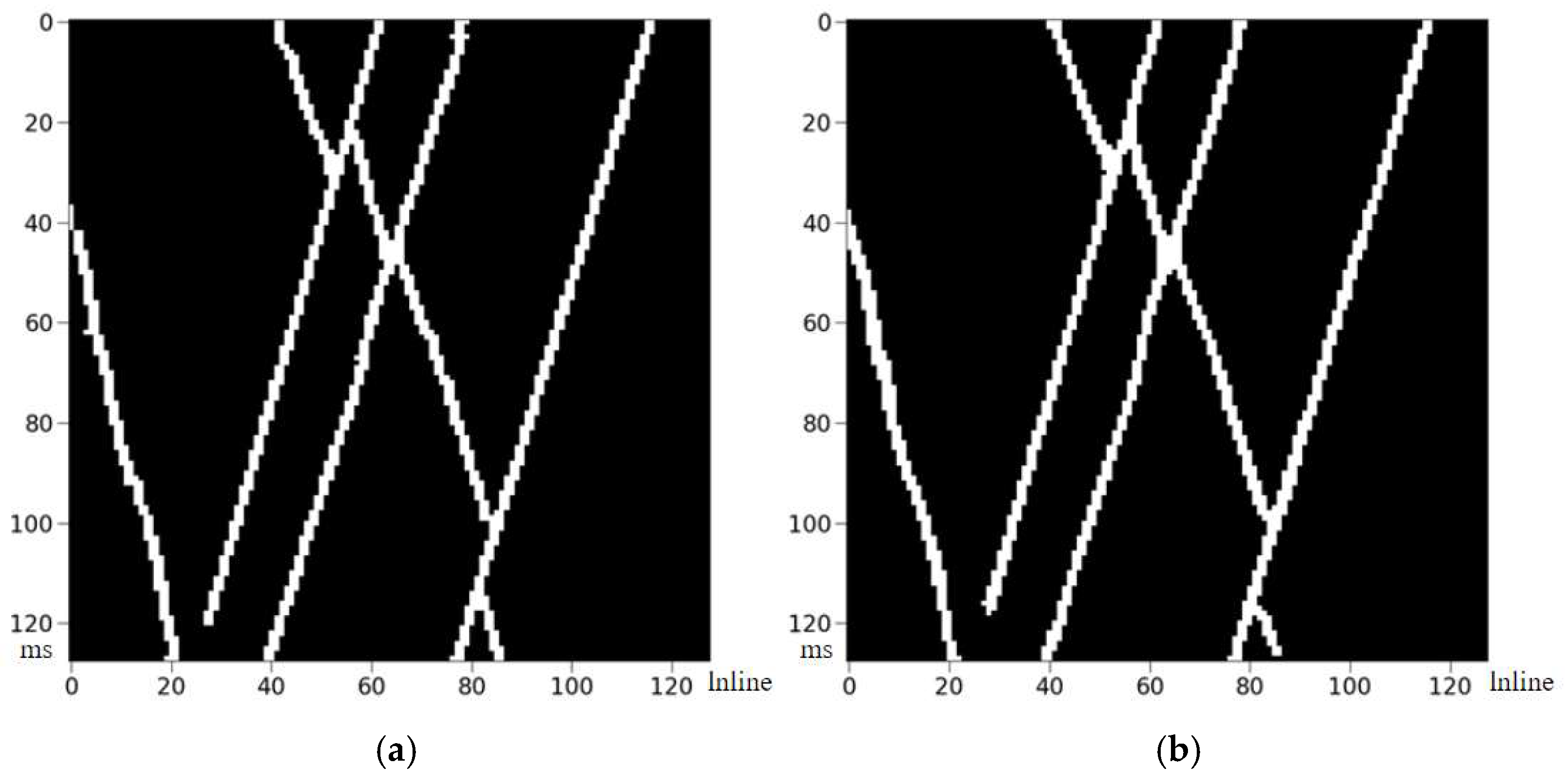

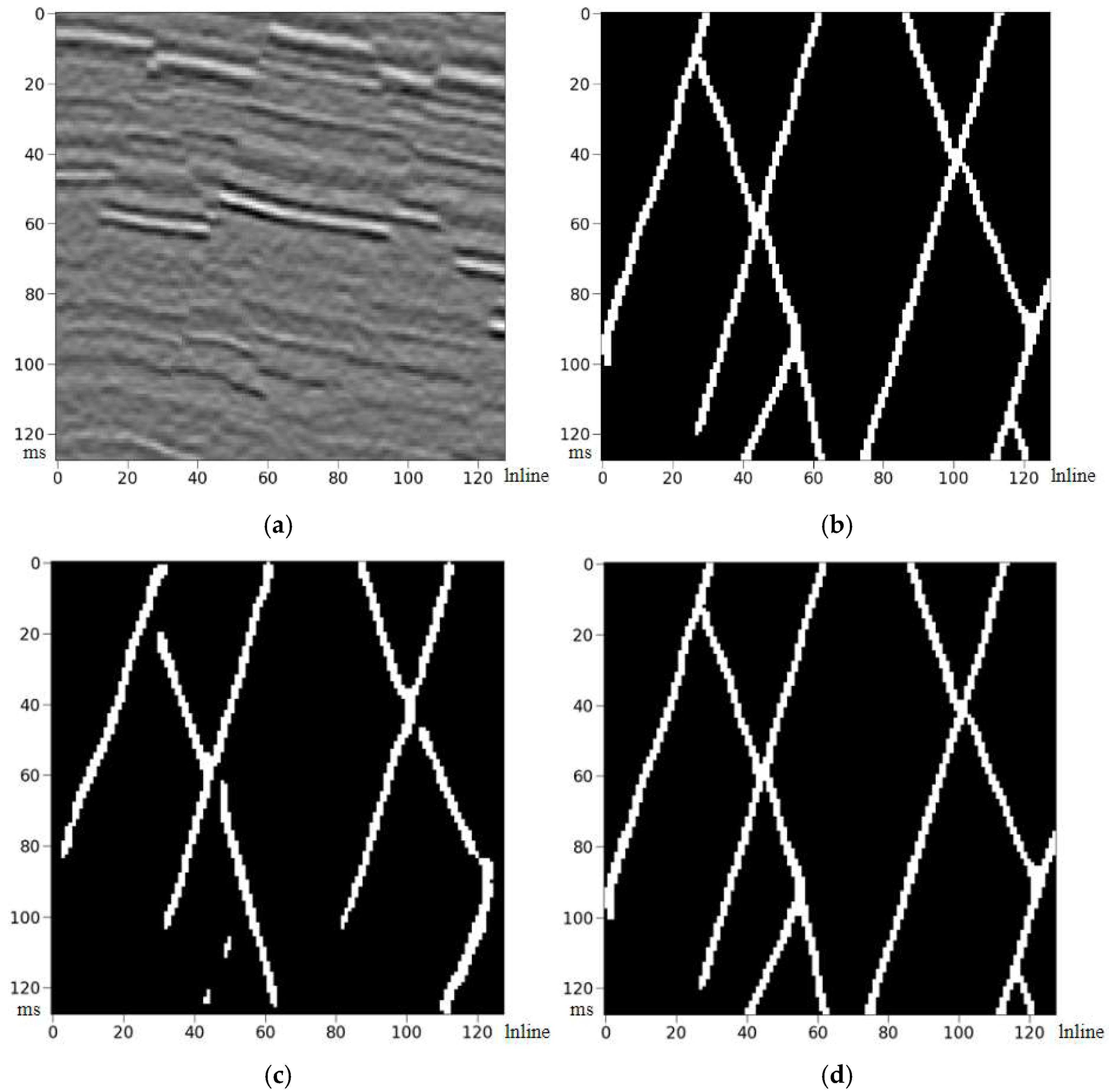

3.1. Dataset Generation

3.2. Loss Function and Evaluation Metrics
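U-Net and SEU-Net are compared by accuracy rate, recall, F1-score, and IoU. Their formulas are not reproduced here, so the sketch below assumes the usual pixel-wise confusion-matrix definitions, with fault pixels as the positive class. "Accuracy rate" could mean either pixel accuracy or precision, so the sketch computes both. The mask format and the function names `confusion` and `fault_metrics` are hypothetical.

```python
from typing import Dict, List

Mask = List[List[int]]  # 1 = fault pixel, 0 = background


def confusion(pred: Mask, label: Mask) -> Dict[str, int]:
    """Count true/false positives/negatives over all pixels."""
    counts = {"tp": 0, "fp": 0, "fn": 0, "tn": 0}
    for prow, lrow in zip(pred, label):
        for p, t in zip(prow, lrow):
            if p and t:
                counts["tp"] += 1
            elif p:            # predicted fault, labelled background
                counts["fp"] += 1
            elif t:            # missed fault pixel
                counts["fn"] += 1
            else:
                counts["tn"] += 1
    return counts


def fault_metrics(pred: Mask, label: Mask) -> Dict[str, float]:
    """Pixel accuracy, precision, recall, F1, and IoU of the fault class."""
    c = confusion(pred, label)
    tp, fp, fn, tn = c["tp"], c["fp"], c["fn"], c["tn"]
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "iou": tp / (tp + fp + fn) if tp + fp + fn else 0.0,
    }
```

Here IoU is computed for the fault class only. A mean IoU averaged over fault and background would give different numbers, so the two should not be compared directly.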

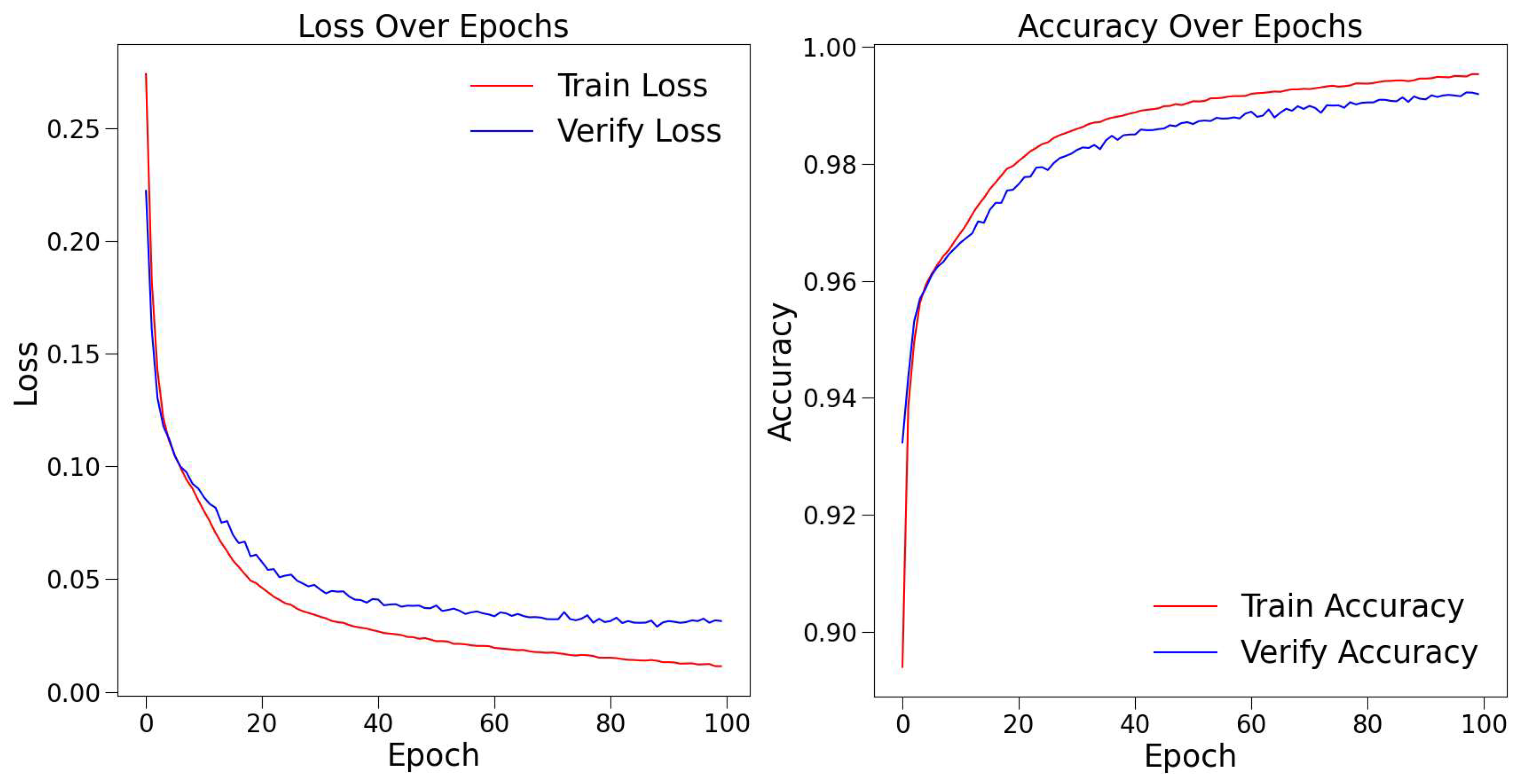

3.3. Model Training

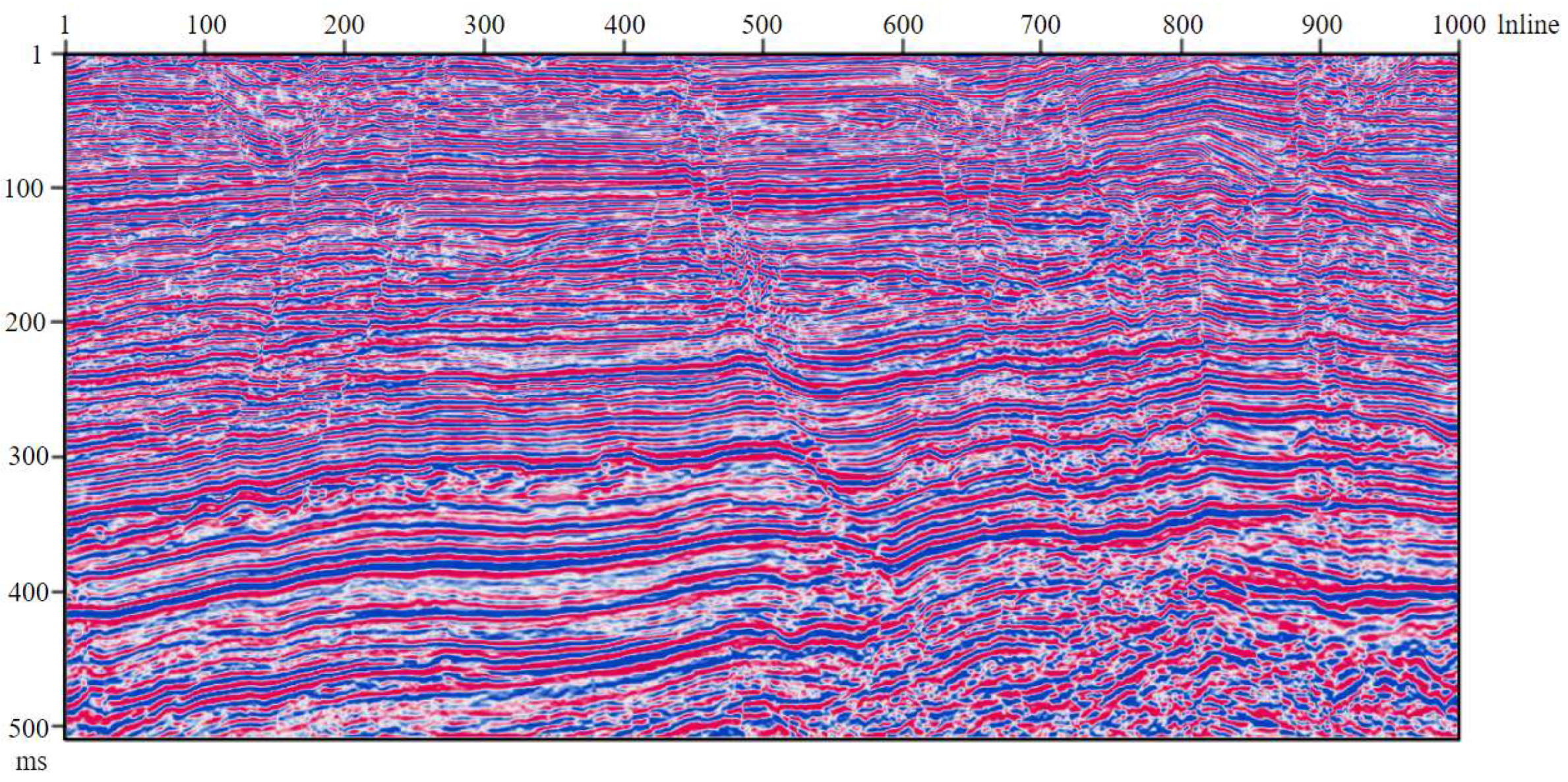

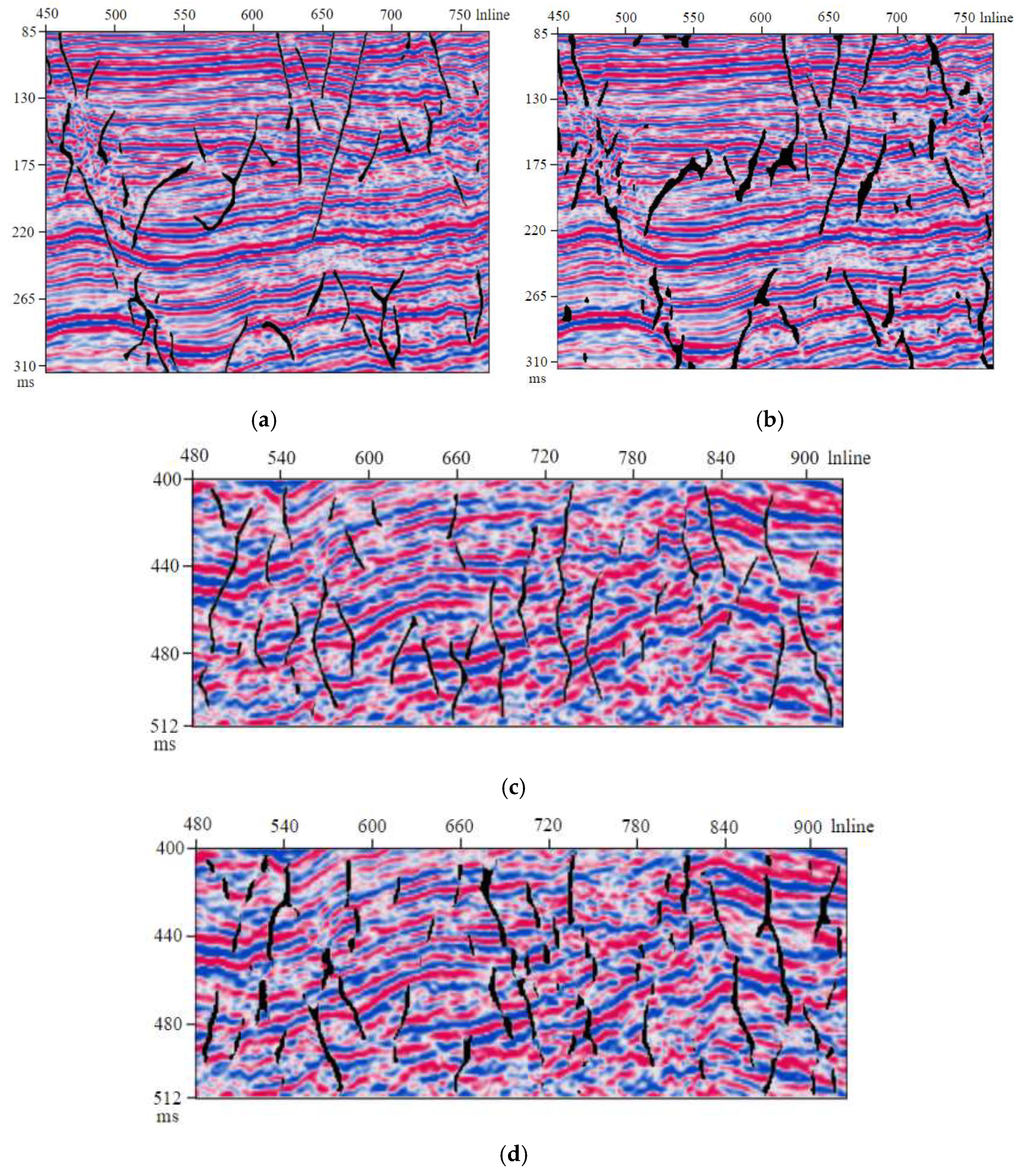

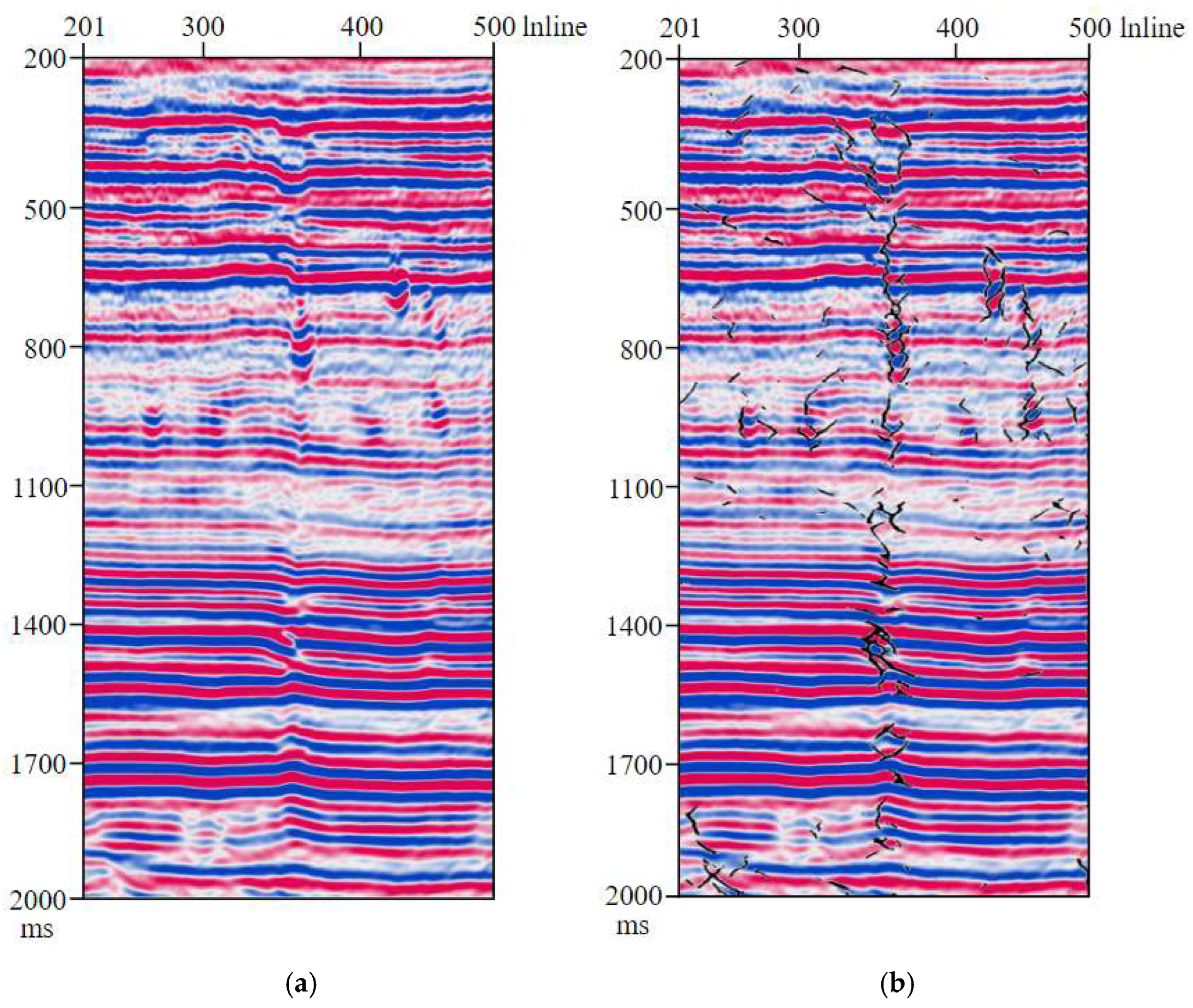

3.4. Original Data

4. Conclusions

- Structural Optimization: Exploring lightweight network designs, such as using more efficient channel attention mechanisms or reducing redundant feature channels, to lower computational complexity.

- Hardware Acceleration: Utilizing more powerful hardware resources (e.g., multi-GPU clusters or TPUs) and distributed training frameworks to accelerate the training process for large-scale data.

- Efficient Inference: Researching methods like model pruning and quantization to reduce the model’s memory footprint and computational cost during deployment, facilitating practical application.
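The last direction names model pruning and quantization. The framework-free sketch below shows what these techniques do to a weight tensor. It is a generic illustration, not the authors' plan. Magnitude pruning zeroes the smallest-magnitude weights. Symmetric per-tensor int8 quantization stores each weight as an 8-bit integer times one shared scale, which cuts storage to roughly a quarter of float32. The function names, the sparsity level, and the per-tensor scheme are assumptions. A real deployment would normally use the training framework's own pruning and quantization tooling.

```python
from typing import List, Tuple


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)  # number of weights to remove
    if k == 0:
        return list(weights)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric int8 quantization of a non-empty list: w ~= scale * q."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float weights."""
    return [scale * v for v in q]
```

Rounding to the nearest code keeps the per-weight reconstruction error within half a quantization step (`scale / 2`).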

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Network | Accuracy Rate | Recall | F1-Score | IoU |
|---|---|---|---|---|
| U-Net | 0.9623 | 0.9611 | 0.9614 | 0.9602 |
| SEU-Net | 0.9856 | 0.9821 | 0.9853 | 0.9747 |

| Current Limitation | Impact on High-Noise Data Processing | Proposed Improvement |
|---|---|---|
| Sensitivity of SE Blocks to Feature Quality | Noisy features are squeezed and excited alongside clean features, potentially amplifying noise in certain channels. | Integrate a Spatial-Channel Concurrent Attention mechanism. |
| Limited Multi-scale Context Capture | The U-Net backbone may lose subtle fault features amid strong noise, as small faults can be easily obscured. | Incorporate an Atrous Spatial Pyramid Pooling (ASPP) module in the bottleneck. |
| Fixed Feature Extraction | Standard convolutional kernels are static after training and may not adapt optimally to varying local noise conditions. | Replace standard convolutions with Dynamic Convolutions or Attention-augmented Convolutions. |
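The ASPP improvement proposed above rests on atrous (dilated) convolution. This operation spaces kernel taps `rate` pixels apart, so a k × k kernel spans k + (k − 1)(rate − 1) pixels per side without extra weights. ASPP runs several such branches at different rates in parallel, letting small and large faults be seen at matching scales. The pure-Python sketch below is illustrative only. The function names, the rates, one kernel per branch, and zero padding are assumptions. It also omits the 1 × 1 and image-pooling branches and the learned 1 × 1 fusion that a full ASPP module (as in DeepLab) includes.

```python
from typing import List

Matrix = List[List[float]]


def atrous_conv2d(image: Matrix, kernel: Matrix, rate: int) -> Matrix:
    """Same-size 2D atrous cross-correlation with zero padding.

    Assumes a square kernel of odd size k; taps are `rate` pixels apart.
    """
    h, w = len(image), len(image[0])
    k = len(kernel)
    half = (k // 2) * rate  # distance from the centre tap to the outer taps
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for a in range(k):
                for b in range(k):
                    y = i - half + a * rate
                    x = j - half + b * rate
                    if 0 <= y < h and 0 <= x < w:  # zero padding outside
                        acc += kernel[a][b] * image[y][x]
            out[i][j] = acc
    return out


def aspp(image: Matrix, kernels: List[Matrix], rates: List[int]) -> List[Matrix]:
    """Parallel atrous branches, one kernel per rate, stacked as channels."""
    return [atrous_conv2d(image, kern, r) for kern, r in zip(kernels, rates)]
```

Applied to a single bright pixel, a 3 × 3 kernel at rate 1 responds over a 3 × 3 patch, while the same kernel at rate 2 responds at nine points spread over a 5 × 5 patch. This shows the wider context gained at no parameter cost.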

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ren, W.; Chen, X.; Zhu, X.; Bao, D.; He, X.; Zhao, Y.; Zhao, M. Fault Identification of Seismic Data Based on SEU-Net Approach. Appl. Sci. 2025, 15, 10152. https://doi.org/10.3390/app151810152
