1. Introduction

Malware constitutes one of the most significant threats facing the modern information technology ecosystem. Various types of malicious software—such as viruses, trojans, worms, rootkits, and ransomware—have the potential to enable unauthorized system access, cause data theft, disrupt services, and result in severe financial losses. Malware detection and classification have thus become critical research areas within cybersecurity. Malicious URLs are fundamental components of many cyberattacks, including phishing, malware dissemination, and financial fraud, posing serious threats to individuals and organizations. The inadequacy of traditional signature-based and blacklist approaches to detect emerging attack types has increased interest in machine learning (ML) and deep learning (DL)-based methods.

In recent years, numerous models have been proposed in the literature for malware and malicious URL detection. The deepBF model by Patgiri et al. integrates a Bloom Filter with deep learning to detect malicious URLs rapidly and resource-efficiently, using a two-dimensional Bloom Filter and an evolutionary CNN that maintains system flexibility by updating the filter for new URLs [1]. In 2023, Hilal et al. introduced the AFSADL-MURLC model, combining GloVe-based word embeddings, a GRU classifier, and Artificial Fish Swarm Optimization to achieve high detection accuracy, particularly for URLs spread via social engineering [2]. Alsowail (2025) presented an effective approach by modeling spatial relationships among URL features using a self-attention-based CapsNet architecture, achieving an accuracy as high as 99.2% [3]. Zaimi et al. utilized DistilBERT for text-based feature extraction and a hybrid CNN-LSTM model to analyze local and temporal URL patterns, reporting an accuracy of 98.19% [4]. Similarly, Alsaedi et al. designed a multimodal CNN-based model by combining textual and visual features, achieving a 4.3% improvement in performance and a 1.5% reduction in the false positive rate [5].

Machine-learning-based solutions are also widely represented in the literature. Raja et al. performed effective detection using a model based solely on URL structural features with a count vectorizer and Random Forest [6]. Tashtoush et al. employed certain statistical features and a CNN, achieving 94.09% accuracy with low complexity [7]. Gupta et al. (2024) tuned CNN hyperparameters with the Brown Bear optimization algorithm, attaining 93% accuracy and 92% precision [8].

Transformer and language-model-based approaches have also attracted attention. For example, the PMANet model adapts a pretrained language model to the URL domain via post-training and leverages layer-wise attention, achieving an AUC of 99.41% [9]. Do et al. addressed the shortcomings of CNNs and RNNs by utilizing a Temporal Convolutional Network (TCN) and Multi-Head Self-Attention, achieving 98.78% accuracy [10].

There has also been progress in parallel processing and real-time detection. In 2023, Nagy et al. used different parallel processing strategies to train ML and DL models, improving speed and accuracy [11]. Lavanya and Shanthi increased host security using URL-API intensity-based feature selection and spectral deep learning, achieving about 96% accuracy [12]. In a 2025 study, a method using only 14 basic features yielded 94.46% accuracy for low-cost phishing detection [13]. Similarly, recent studies have leveraged the most popular ML and DL algorithms (including quantum machine learning) in URL classification [14]. A contemporary study emphasized the failure of traditional methods in detecting malicious Uniform Resource Locators, reporting approximately 98% accuracy using ML and DL on 5000 real-world URLs [15]. The TransURL model by Liu et al. provides an innovative transformer-based solution that integrates multiscale feature learning and regional attention mechanisms, outperforming many existing methods in certain scenarios but facing challenges with computational cost and real-time system integration [16]. A CNN-based model, surpassing blacklisting and heuristic approaches, achieved a high F1-score (98.99%) in experiments on more than 651,000 labeled URLs by capturing local character-level patterns [17]. Su et al. (2023) demonstrated that a BERT-based method could leverage self-attention to learn semantic relationships in URLs and achieve 98–99% accuracy on various datasets, including tests against IoT and DoH attacks [18]. Similarly, Elsadig et al. combined BERT-based feature extraction with a CNN classifier for phishing URL detection, achieving 96.66% accuracy and highlighting the role of NLP features in this domain [19]. Islam et al. used ensemble models such as Random Forest and XGBoost on URL content and metadata, reaching an accuracy of 97% [20]. Hani et al. (2024) compared various ML techniques for malicious URL detection, obtaining successful results but noting the lack of optimization techniques as a limitation [21]. Gupta et al. compared the performance of LSTM, Bi-LSTM, RNN, and CNN models for detecting malicious web addresses [22].

In summary, the literature clearly demonstrates the critical importance of malicious URL detection for cybersecurity. Deep learning and machine learning techniques have shown superior performance compared to traditional systems, providing more adaptable solutions for new threats encountered in real-world scenarios. Future research is expected to focus on real-time processing, minimizing resource consumption, and enhancing resistance to adversarial attacks.

The main contributions of this study can be summarized as follows:

Comprehensive comparison of different models: For the first time, machine learning, deep learning, and hybrid optimization-based methods are systematically compared for multiclass and binary classification on the Malicious Phish dataset.

Optimization-based feature selection: GA, PSO, and HHO algorithms are jointly used for feature selection and hyperparameter tuning, resulting in significant improvements in model performance.

Advanced deep learning utilization: The superiority of next-generation language-model-based deep learning approaches, such as ELECTRA, for malicious URL detection is directly demonstrated in comparison to conventional methods.

Analysis of hybrid and optimized models: CNN+LSTM and optimization-supported hybrid models reduce error rates and enhance performance compared to traditional approaches.

Feature contribution analysis: The impact of selected features on model decisions is thoroughly examined, revealing which attributes are most decisive.

2. Materials and Methods

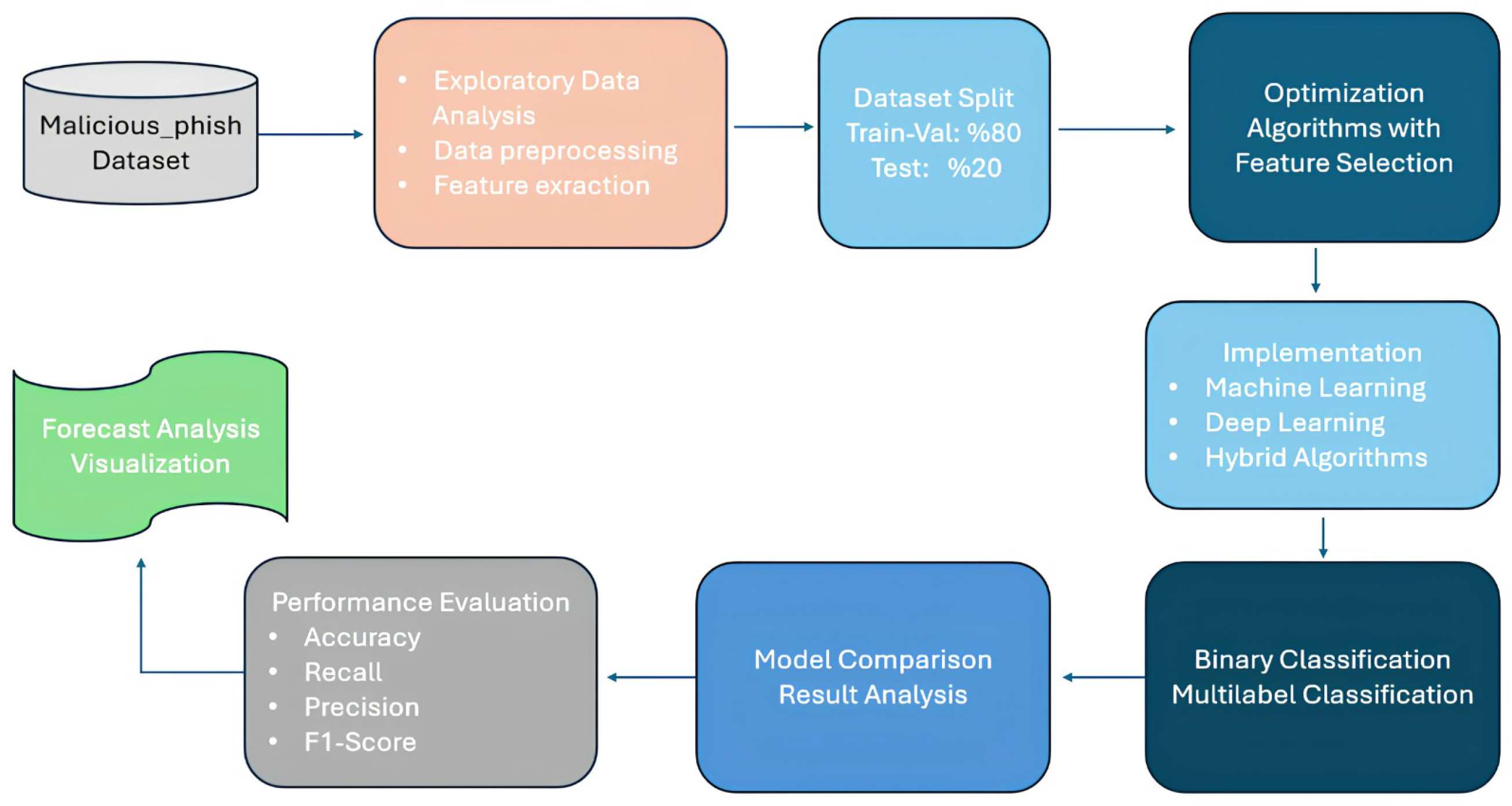

Figure 1 presents the overall workflow of the proposed study. Initially, exploratory data analysis, preprocessing, and feature extraction were performed on the Malicious Phish dataset. Subsequently, various optimization algorithms were applied for feature selection. Using the selected features, machine learning, deep learning, and hybrid methods were employed to perform binary and multiclass classification tasks. The results obtained from the models were compared and analyzed using performance metrics such as accuracy, recall, precision, and F1-score. In the final stage, the prediction results were further analyzed and visualized to comprehensively demonstrate the effectiveness of the models.
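The performance metrics named above can be derived from a confusion matrix. The following is a minimal sketch, assuming a matrix whose rows are true classes and whose columns are predicted classes; the function name and the example counts are illustrative and are not taken from the study.

```python
def per_class_metrics(confusion, labels):
    """Compute accuracy plus per-class precision, recall and F1.

    confusion[i][j] is the number of samples whose true class is
    labels[i] and whose predicted class is labels[j].
    """
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[k][k] for k in range(len(labels)))
    report = {}
    for k, label in enumerate(labels):
        tp = confusion[k][k]
        fn = sum(confusion[k]) - tp                  # true class k, predicted otherwise
        fp = sum(row[k] for row in confusion) - tp   # predicted k, true class differs
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        report[label] = {"precision": precision, "recall": recall, "f1": f1}
    accuracy = correct / total if total else 0.0
    return accuracy, report


# Illustrative counts only (not results from the paper).
accuracy, report = per_class_metrics([[90, 10], [20, 80]], ["benign", "malicious"])
```

Averaging the per-class values gives macro-averaged scores, which weight minority classes such as malware equally with the benign class.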

In this study, model performances were compared using different classification algorithms, and the proposed hybrid structure was evaluated. The methods utilized are detailed below.

Light Gradient Boosting Machine (LightGBM), developed by Microsoft, is a gradient boosting framework optimized for large datasets and high-dimensional feature spaces. Its histogram-based splitting approach ensures low memory usage and significantly reduces training time. LightGBM aims to improve accuracy through a leaf-wise growth strategy while also offering depth control to mitigate overfitting [23]. It further leverages Gradient-based One-Side Sampling (GOSS) and Exclusive Feature Bundling (EFB) to reduce computational costs. The objective function is similar to that of XGBoost:

$$\mathcal{L} = \sum_{i=1}^{n} l\left(y_i, \hat{y}_i\right) + \sum_{k=1}^{K} \Omega\left(f_k\right),$$

but LightGBM grows trees using a leaf-wise strategy rather than a level-wise approach. At each step, the leaf with the maximum loss reduction is expanded, which can significantly improve model accuracy. The gain of a split is computed as

$$\text{Gain} = \frac{1}{2}\left[\frac{G_L^2}{H_L + \lambda} + \frac{G_R^2}{H_R + \lambda} - \frac{\left(G_L + G_R\right)^2}{H_L + H_R + \lambda}\right] - \gamma,$$

where $G$ and $H$ denote the sums of the first- and second-order gradients over the samples in the left ($L$) and right ($R$) child nodes, respectively, and $\lambda$ and $\gamma$ are regularization parameters. This formulation ensures both computational efficiency and high accuracy.

Another method, Extreme Gradient Boosting (XGBoost), is a highly optimized implementation of gradient boosting that delivers strong performance in both classification and regression problems. By incorporating a regularized objective function (L1 and L2 norms), XGBoost controls overfitting and improves generalization, while parallel computation and pre-sorted features reduce processing time. The objective function is defined as

$$\mathcal{L} = \sum_{i=1}^{n} l\left(y_i, \hat{y}_i\right) + \sum_{k=1}^{K} \Omega\left(f_k\right), \qquad \Omega\left(f_k\right) = \gamma T + \frac{1}{2}\lambda \lVert w \rVert^2,$$

where $l$ is the loss function, and $\Omega(f_k)$ is the regularization term penalizing the complexity of tree $f_k$ with $T$ leaves and weights $w$. The additive model update at iteration $t$ is

$$\hat{y}_i^{(t)} = \hat{y}_i^{(t-1)} + f_t\left(x_i\right), \qquad f_t \in \mathcal{F},$$

where $\mathcal{F}$ represents the functional space of regression trees. This formulation allows XGBoost to achieve high efficiency and performance on large-scale datasets.
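To make the split criterion concrete, the sketch below evaluates the gain of one candidate split. It assumes the standard second-order form used by XGBoost and LightGBM, in which each node is scored as G²/(H + λ) and γ is a fixed penalty per split; the function names and the numbers in the example are illustrative only.

```python
def leaf_score(grad_sum, hess_sum, lam):
    """Structure score G^2 / (H + lambda) of a single node."""
    return grad_sum * grad_sum / (hess_sum + lam)


def split_gain(g_left, h_left, g_right, h_right, lam=1.0, gamma=0.0):
    """Loss reduction obtained by splitting a parent node into two children.

    g_* and h_* are the sums of first- and second-order gradients of the
    samples falling into each child; lam and gamma are the regularization
    parameters of the objective.
    """
    children = leaf_score(g_left, h_left, lam) + leaf_score(g_right, h_right, lam)
    parent = leaf_score(g_left + g_right, h_left + h_right, lam)
    return 0.5 * (children - parent) - gamma


# Two children whose gradients point in opposite directions: a useful split.
gain = split_gain(g_left=-4.0, h_left=2.0, g_right=4.0, h_right=2.0, lam=2.0, gamma=1.0)
```

A leaf-wise learner would evaluate this quantity for every candidate split of every current leaf and expand the one with the largest positive gain.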

The gradient boosting method involves the sequential training of weak learners (decision trees), minimizing errors at each step. In this ensemble-based approach, models are trained in sequence, and each new model attempts to correct the residuals of its predecessor [24]. The predictive model is thus constructed in a forward stage-wise manner: at each iteration, the algorithm fits a new learner to the negative gradient of the loss function with respect to the current model. Formally, the procedure begins with an initial model:

$$F_0(x) = \arg\min_{\gamma} \sum_{i=1}^{n} L\left(y_i, \gamma\right),$$

where $L$ denotes the chosen loss function. At iteration $m$, pseudo-residuals are calculated as

$$r_{im} = -\left[\frac{\partial L\left(y_i, F(x_i)\right)}{\partial F(x_i)}\right]_{F = F_{m-1}},$$

and a weak learner $h_m(x)$ is trained to approximate these residuals. The optimal step size $\gamma_m$ is obtained by

$$\gamma_m = \arg\min_{\gamma} \sum_{i=1}^{n} L\left(y_i, F_{m-1}(x_i) + \gamma\, h_m(x_i)\right).$$

Finally, the model is updated as

$$F_m(x) = F_{m-1}(x) + \nu\, \gamma_m\, h_m(x),$$

where $\nu$ is the learning rate. This iterative optimization ensures gradual improvement of the predictive accuracy while controlling overfitting.
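The stage-wise procedure can be illustrated with a small, self-contained sketch. It assumes squared loss (so the pseudo-residuals are simply y − F(x) and the initial model is the mean of the targets) and uses one-dimensional decision stumps as weak learners; these choices and all names are illustrative and do not reflect the configuration used in the study.

```python
def fit_stump(xs, residuals):
    """Least-squares regression stump on 1-D inputs (one threshold, two leaf values)."""
    best = None
    for threshold in sorted(set(xs))[:-1]:          # candidate split points
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        l_mean, r_mean = sum(left) / len(left), sum(right) / len(right)
        sse = (sum((r - l_mean) ** 2 for r in left)
               + sum((r - r_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, l_mean, r_mean)
    if best is None:                                # all inputs identical
        mean = sum(residuals) / len(residuals)
        return lambda x: mean
    _, threshold, l_mean, r_mean = best
    return lambda x: l_mean if x <= threshold else r_mean


def gradient_boost(xs, ys, n_rounds=50, learning_rate=0.1):
    """Fit an additive model F_m = F_{m-1} + nu * gamma_m * h_m under squared loss."""
    f0 = sum(ys) / len(ys)                          # F_0 minimizes squared loss
    preds = [f0] * len(xs)
    learners = []
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, preds)]   # negative gradient
        h = fit_stump(xs, residuals)
        hx = [h(x) for x in xs]
        denom = sum(v * v for v in hx)
        # Closed-form line search for squared loss.
        gamma = sum(r * v for r, v in zip(residuals, hx)) / denom if denom else 0.0
        learners.append((gamma, h))
        preds = [p + learning_rate * gamma * v for p, v in zip(preds, hx)]

    def predict(x):
        return f0 + sum(learning_rate * g * h(x) for g, h in learners)

    return predict


predict = gradient_boost([1, 2, 3, 4, 5, 6], [1, 1, 1, 5, 5, 5])
```

With a learning rate below 1, each round removes only part of the remaining residual, which is the shrinkage effect that helps control overfitting.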

Random Forest is a powerful ensemble learning technique widely used for classification and regression problems, comprising a collection of decision trees. Each tree is trained on a randomly sampled subset of the training data, and a random subset of features is used at each node split. This reduces correlation among trees, decreases overall model error, and limits overfitting. In classification problems, predictions from individual trees are combined by majority vote, while in regression, the average of tree outputs is taken. Random Forest performs effectively on high-dimensional data, is robust to missing data and imbalanced class distributions, and provides feature importance scores for variable selection. However, increasing the number of trees can raise computational cost and memory requirements, and interpretability is lower compared to a single decision tree [25,26,27].

Long Short-Term Memory (LSTM) networks are a variant of Recurrent Neural Networks (RNNs) extensively used for sequential data and time-series analysis. Developed to address the problem of learning long-term dependencies in standard RNNs, LSTM cells utilize forget, input, and output gates to dynamically control which information is retained or forgotten. This architecture enables the network to capture both short- and long-term dependencies effectively [28,29,30].
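For reference, the gating mechanism is commonly written as follows. This is the standard LSTM formulation rather than a configuration reported in this study, and the notation is an assumption: $x_t$ is the input at step $t$, $h_t$ the hidden state, $c_t$ the cell state, $W$ and $b$ the learned weights and biases, $\sigma$ the logistic sigmoid, and $\odot$ the element-wise product.

$$
\begin{aligned}
f_t &= \sigma\left(W_f\,[h_{t-1}, x_t] + b_f\right) && \text{(forget gate)}\\
i_t &= \sigma\left(W_i\,[h_{t-1}, x_t] + b_i\right) && \text{(input gate)}\\
\tilde{c}_t &= \tanh\left(W_c\,[h_{t-1}, x_t] + b_c\right) && \text{(candidate state)}\\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t && \text{(cell state update)}\\
o_t &= \sigma\left(W_o\,[h_{t-1}, x_t] + b_o\right) && \text{(output gate)}\\
h_t &= o_t \odot \tanh\left(c_t\right) && \text{(hidden state)}
\end{aligned}
$$

The additive cell-state update is what allows gradients to flow across many time steps, which is why the architecture handles long-term dependencies better than a plain RNN.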

A Small Convolutional Neural Network (CNN) is a deep learning model designed for automated feature extraction and classification on high-dimensional data such as images or sequential data but with reduced parameters and computational cost. Through convolution and pooling operations between layers, local patterns, edges, and structural details are efficiently captured from input data, while fully connected layers perform classification based on the extracted features. The compact design of small CNNs with fewer layers and filters compared to conventional deep CNN architectures offers low computational overhead and faster processing, making them suitable for embedded systems and resource-constrained environments.

One of the effective hybrid methods proposed is the CNN+LSTM hybrid model. This architecture aims to improve classification performance by learning both spatial and temporal patterns simultaneously. The small CNN component automatically extracts local spatial features from input data, while the LSTM layer processes feature vectors obtained from the CNN in sequential order to learn long-term dependencies. This combined structure delivers superior performance, especially in the analysis of time series or dynamic pattern image sequences. The low computational cost of the small CNN enables faster operation, while the gating mechanisms of the LSTM contribute to efficient integration of past and present information.

ELECTRA (Efficiently Learning an Encoder that Classifies Token Replacements Accurately) is a deep learning model used in natural language processing that learns by detecting whether tokens in the text have been replaced. During pretraining, some tokens of the input are substituted with plausible alternatives, and the model predicts for every token whether it is original or replaced. Because the training signal comes from all input tokens rather than only a masked subset, ELECTRA can be trained faster and more efficiently than traditional masked language models, yielding high accuracy in tasks such as text classification. The ability to achieve effective results with lower computational requirements is a major advantage [31].

To optimize model hyperparameters and enhance classification performance, we employed three widely used nature-inspired optimization algorithms: Genetic Algorithm (GA), Particle Swarm Optimization (PSO), and Harris Hawk Optimizer (HHO). This selection not only allowed us to utilize algorithms that are popular and benchmarked in the literature but also enabled us to test the capability of a more recent optimizer (HHO) within the context of feature selection for malicious URL detection. Prior research has demonstrated the success of GA and PSO in cybersecurity-related tasks, while HHO has recently gained attention for its ability to avoid premature convergence. Thus, including these three optimizers provides a meaningful balance between established reliability and novelty, ensuring that the feature selection results are both comparable and innovative [32,33,34,35,36].

The GA is a stochastic population-based optimization technique inspired by the principle of natural selection. A set of candidate solutions, referred to as chromosomes, evolves over generations using genetic operations such as selection, crossover, and mutation. The quality of each solution $x$ is measured by a fitness function $f(x)$.

Mathematically, given a population $P(t) = \{x_1, x_2, \ldots, x_N\}$, the GA updates solutions as

$$P(t+1) = \text{Mutation}\left(\text{Crossover}\left(\text{Selection}\left(P(t)\right)\right)\right),$$

where $t$ denotes the current generation. Selection is based on fitness probability:

$$p_i = \frac{f(x_i)}{\sum_{j=1}^{N} f(x_j)}.$$

This ensures that high-quality solutions are more likely to contribute to the next generation [37,38].
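The GA loop for binary feature selection can be sketched as follows. This is a minimal illustration, assuming fitness-proportional (roulette-wheel) selection, single-point crossover, and bit-flip mutation; the toy fitness function, which counts agreements with a hypothetical "useful feature" mask, stands in for the validation score of a trained classifier and is not the fitness used in the study.

```python
import random


def roulette_select(population, fitnesses, rng):
    """Pick one chromosome with probability f(x_i) / sum_j f(x_j)."""
    total = sum(fitnesses)
    if total <= 0:                        # degenerate case: fall back to uniform choice
        return rng.choice(population)
    pick = rng.uniform(0, total)
    running = 0.0
    for chromosome, fit in zip(population, fitnesses):
        running += fit
        if fit > 0 and pick <= running:
            return chromosome
    return population[-1]


def crossover(parent_a, parent_b, rng):
    """Single-point crossover (requires at least two genes)."""
    point = rng.randint(1, len(parent_a) - 1)
    return parent_a[:point] + parent_b[point:]


def mutate(chromosome, rate, rng):
    """Flip each bit independently with the given probability."""
    return [1 - bit if rng.random() < rate else bit for bit in chromosome]


def run_ga(fitness, n_bits, pop_size=20, generations=30, mutation_rate=0.05, seed=0):
    """Evolve binary masks (1 = feature kept) and return the best one seen."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    best = max(population, key=fitness)
    for _ in range(generations):
        fitnesses = [fitness(c) for c in population]
        population = [
            mutate(crossover(roulette_select(population, fitnesses, rng),
                             roulette_select(population, fitnesses, rng), rng),
                   mutation_rate, rng)
            for _ in range(pop_size)
        ]
        candidate = max(population, key=fitness)
        if fitness(candidate) > fitness(best):
            best = candidate
    return best


# Hypothetical target mask; a real fitness would train and validate a model.
TARGET = [1, 0, 1, 1, 0, 0, 1, 0]
toy_fitness = lambda mask: sum(int(a == b) for a, b in zip(mask, TARGET))
best_mask = run_ga(toy_fitness, n_bits=len(TARGET))
```

Roulette-wheel selection requires non-negative fitness values, so a score such as validation accuracy fits directly, whereas a loss would first need to be transformed.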

The PSO algorithm models the social behavior of bird flocks or fish schools. Each solution, called a particle, has a position vector $x_i$ and a velocity vector $v_i$. Particles move in the search space by combining their own best-known position $pbest_i$ and the global best position $gbest$.

The update rules are

$$v_i^{t+1} = w\,v_i^{t} + c_1 r_1 \left(pbest_i - x_i^{t}\right) + c_2 r_2 \left(gbest - x_i^{t}\right),$$

$$x_i^{t+1} = x_i^{t} + v_i^{t+1},$$

where $w$ is the inertia weight, $c_1$ and $c_2$ are learning factors, and $r_1$ and $r_2$ are random numbers in $[0, 1]$. This mechanism provides both exploration and exploitation in the search space [39].
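A compact sketch of these update rules is given below. It assumes a minimization problem over a box-bounded continuous space, with positions clipped to the bounds; the parameter values (w = 0.7, c1 = c2 = 1.5) and the sphere objective are common illustrative defaults, not the settings used in the study.

```python
import random


def pso(objective, dim, n_particles=20, iterations=100,
        w=0.7, c1=1.5, c2=1.5, bounds=(-5.0, 5.0), seed=0):
    """Minimize `objective` with a basic global-best particle swarm."""
    rng = random.Random(seed)
    lo, hi = bounds
    xs = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vs = [[0.0] * dim for _ in range(n_particles)]
    pbest = [x[:] for x in xs]
    pbest_val = [objective(x) for x in xs]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]

    for _ in range(iterations):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Inertia + cognitive pull (own best) + social pull (swarm best).
                vs[i][d] = (w * vs[i][d]
                            + c1 * r1 * (pbest[i][d] - xs[i][d])
                            + c2 * r2 * (gbest[d] - xs[i][d]))
                xs[i][d] = min(hi, max(lo, xs[i][d] + vs[i][d]))
            value = objective(xs[i])
            if value < pbest_val[i]:
                pbest[i], pbest_val[i] = xs[i][:], value
                if value < gbest_val:
                    gbest, gbest_val = xs[i][:], value
    return gbest, gbest_val


sphere = lambda x: sum(v * v for v in x)
best_position, best_value = pso(sphere, dim=2)
```

For binary feature selection, a common adaptation (not shown here) passes each velocity component through a sigmoid and samples the corresponding bit from the resulting probability.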

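The HHO algorithm is inspired by the cooperative hunting strategy of Harris hawks. It dynamically balances exploration and exploitation. The position of each hawk is updated based on the prey position $X_{prey}(t)$ as

$$X(t+1) = \Delta X(t) - E\,\left\lvert J\,X_{prey}(t) - X(t) \right\rvert, \qquad \Delta X(t) = X_{prey}(t) - X(t), \qquad E = 2E_0\left(1 - \frac{t}{T}\right),$$

where $E$ represents the escaping energy of the prey (with initial energy $E_0$), $J$ is the jump strength, $t$ is the current iteration, and $T$ is the maximum number of iterations. The adaptive switching between phases makes HHO highly effective in complex, high-dimensional spaces [40].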
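The role of the escaping energy can be sketched in a few lines. This assumes the commonly used HHO schedule, in which the initial energy E_0 is redrawn uniformly from [-1, 1] at each step and decays linearly with the iteration count, and in which |E| ≥ 1 triggers exploration while |E| < 1 triggers exploitation; the function names are illustrative.

```python
import random


def escaping_energy(iteration, max_iterations, rng):
    """E = 2 * E_0 * (1 - t / T), with E_0 drawn uniformly from [-1, 1]."""
    e0 = rng.uniform(-1.0, 1.0)
    return 2.0 * e0 * (1.0 - iteration / max_iterations)


def phase(energy):
    """Select the search phase from the magnitude of the escaping energy."""
    return "exploration" if abs(energy) >= 1.0 else "exploitation"


rng = random.Random(0)
# Count how often each phase is chosen early and late in a 100-iteration run.
early = [phase(escaping_energy(5, 100, rng)) for _ in range(1000)]
late = [phase(escaping_energy(80, 100, rng)) for _ in range(1000)]
```

Because the magnitude of E is bounded by 2(1 − t/T), exploration becomes impossible once more than half of the iteration budget has been used, so the second half of a run is spent entirely on exploitation.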

The main reason for choosing GA, PSO, and HHO in this study is that their diverse search strategies and problem-solving approaches provide both variety and balance in feature selection and hyperparameter optimization. GA offers a robust exploration strategy by mimicking biological evolution and promoting genetic diversity among solutions. PSO, based on social behavior, ensures rapid convergence and low computational cost as each particle learns from its own experience and that of the best neighbors. The HHO algorithm, with its predator–prey-based dynamic exploration and exploitation, excels particularly in complex, high-dimensional spaces. Using all three algorithms together enables more effective feature selection and better generalization of models for various data structures. Algorithms 1, 2 and 3 show the pseudocode for the GA, PSO, and HHO approaches, respectively.

Algorithm 1. Pseudocode of GA.

1. Initialize the population randomly
2. Evaluate the fitness of each individual
3. While the predefined number of generations has not been reached:
   1. Select the best individuals
   2. Apply crossover to generate offspring
   3. Apply mutation to offspring
   4. Evaluate the fitness of the new individuals
   5. Form a new population from the best individuals
4. Return the best individual as the solution

Algorithm 2. Pseudocode of PSO.

1. Initialize particles’ positions and velocities randomly
2. Evaluate the fitness of each particle
3. Set each particle’s personal best (pBest) and the global best (gBest)
4. For a predefined number of iterations:
   1. For each particle:
      - Update velocity based on pBest and gBest
      - Update position using the new velocity
      - Evaluate the new fitness
      - If improved, update pBest
   2. Update gBest if needed
5. Return gBest as the optimal solution

Algorithm 3. Pseudocode of HHO.

1. Initialize the hawk population randomly
2. Evaluate the fitness of each hawk
3. Set the best solution as X_best
4. For a predefined number of iterations:
   1. For each hawk:
      - Calculate escape energy E
      - If |E| ≥ 1: apply exploration strategy
      - If |E| < 1: apply exploitation strategy
      - Update position accordingly
      - Evaluate new fitness
      - Update X_best if improved
5. Return X_best as the best solution

3. Experimental Results and Discussion

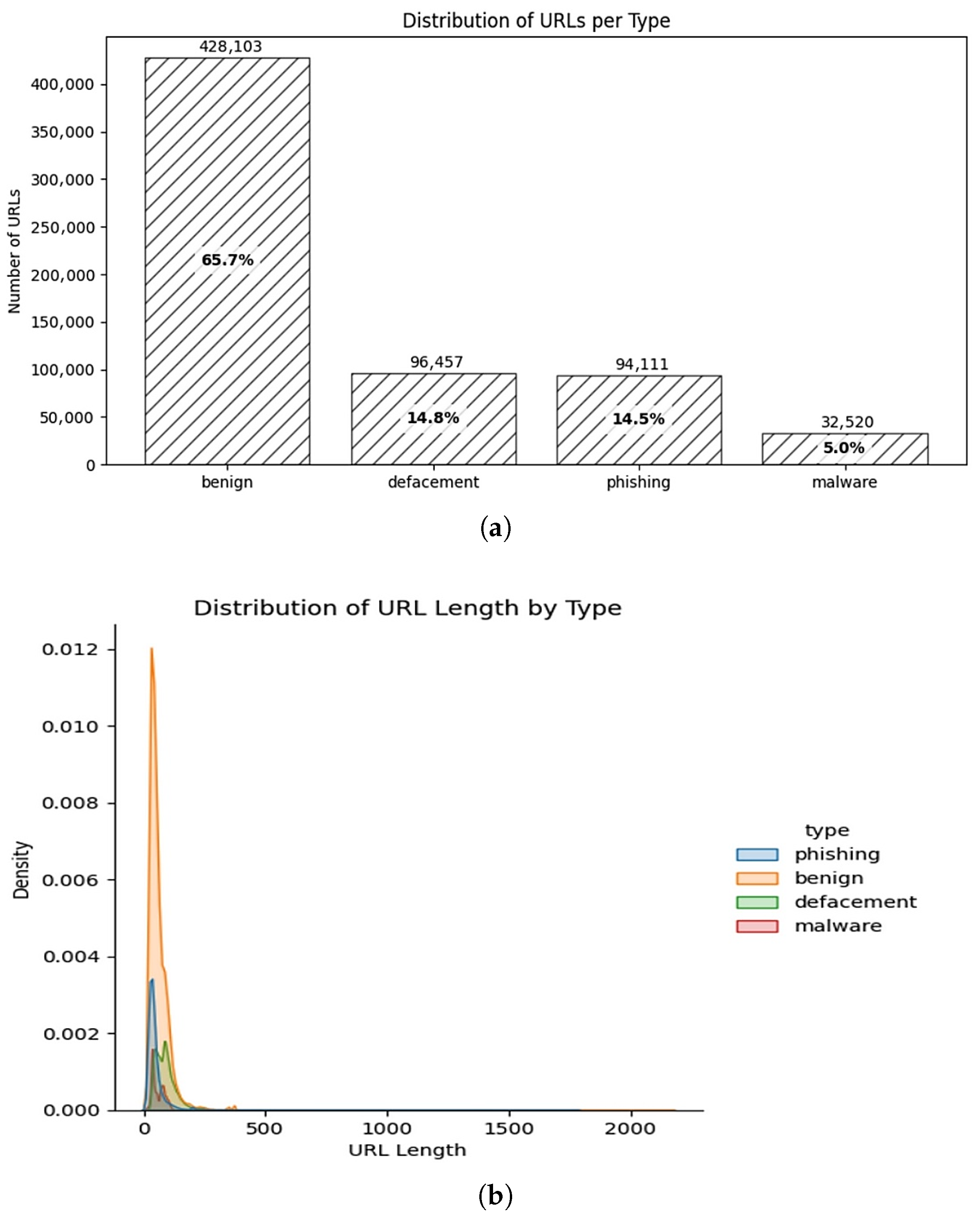

The Malicious Phish dataset is a widely used and comprehensive resource for cybersecurity and malware detection research, based on the analysis of various URLs. This dataset comprises approximately 650,000 URLs, categorized into four main classes: “benign”, “defacement”, “phishing”, and “malware”. Each URL in the dataset is annotated with detailed textual and numerical features. In particular, characteristics such as domain length, IP address usage, suspicious keywords, and the presence of special characters within the URL play a crucial role in enabling machine learning and deep learning algorithms to identify malicious links. The Malicious Phish dataset is frequently used in academic and industrial research to model current threats and enable early detection of next-generation cyberattacks. Visualizations related to the dataset distribution are provided in Figure 2.

The dataset was partitioned into training–validation (80%) and testing (20%) subsets using a stratified random split with a fixed random seed to preserve class distributions across subsets. Prior to splitting, duplicate entries were removed to ensure data integrity. Furthermore, domain-level isolation was applied, ensuring that URLs originating from the same domain were not distributed across different subsets, thereby preventing potential data leakage. This protocol provides a fair and robust evaluation setting for the proposed models.
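The deduplication and domain-level isolation steps can be sketched as follows. This is a simplified illustration: it groups URLs by hostname (a stricter variant would group by registered domain), assigns whole groups to one side of the split, and does not implement the stratification by class described above; all function names are hypothetical.

```python
import random
import re
from collections import defaultdict
from urllib.parse import urlsplit

_SCHEME = re.compile(r"^[a-zA-Z][a-zA-Z0-9+.-]*://")


def domain_of(url):
    """Return the lower-cased hostname, adding a scheme if the URL has none."""
    if not _SCHEME.match(url):
        url = "http://" + url
    return (urlsplit(url).hostname or "").lower()


def group_split(urls, test_fraction=0.2, seed=42):
    """Split URLs so that no hostname appears in both subsets."""
    unique = list(dict.fromkeys(urls))            # drop exact duplicates, keep order
    groups = defaultdict(list)
    for url in unique:
        groups[domain_of(url)].append(url)
    domains = sorted(groups)
    random.Random(seed).shuffle(domains)          # fixed seed for reproducibility
    target = test_fraction * len(unique)
    train, test = [], []
    for domain in domains:
        # Fill the test set first, one whole domain at a time.
        (test if len(test) < target else train).extend(groups[domain])
    return train, test


sample = ["http://a.com/1", "http://a.com/2", "http://a.com/1",
          "https://b.org/x", "c.net/login", "http://d.io/", "d.io/other"]
train_urls, test_urls = group_split(sample, test_fraction=0.3)
```

Because whole domains are moved together, the realized test fraction only approximates the requested one, and large domains can make the deviation noticeable.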

The available features in the dataset are as follows: ‘use_of_ip’, ‘abnormal_url’, ‘count.’, ‘count-www’, ‘count@’, ‘count_dir’, ‘count_embed_domain’, ‘short_url’, ‘count-https’, ‘count-http’, ‘count%’, ‘count-’, ‘count=’, ‘url_length’, ‘hostname_length’, ‘sus_url’, ‘fd_length’, ‘tld_length’, ‘count-digits’, and ‘count-letters’. The features selected by the GA, PSO, and HHO optimization algorithms are presented in Table 1.
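Several of these lexical features can be computed directly from the URL string. The sketch below covers a subset of them; the feature names come from the list above, but the exact definitions (for example, counting substrings over the whole URL, or detecting only dotted IPv4 hosts) are assumptions, since the source does not specify them.

```python
import re
from urllib.parse import urlsplit


def lexical_features(url):
    """Compute a subset of the count- and length-based URL features."""
    parsed = urlsplit(url if "://" in url else "http://" + url)
    host = parsed.hostname or ""
    return {
        # 1 if the host is a dotted IPv4 address, else 0 (assumed definition).
        "use_of_ip": int(bool(re.fullmatch(r"(\d{1,3}\.){3}\d{1,3}", host))),
        "count.": url.count("."),
        "count-www": url.count("www"),
        "count@": url.count("@"),
        "count_dir": parsed.path.count("/"),
        "count-https": url.count("https"),
        "count-http": url.count("http"),     # also counts the "http" inside "https"
        "count%": url.count("%"),
        "count-": url.count("-"),
        "count=": url.count("="),
        "url_length": len(url),
        "hostname_length": len(host),
        "count-digits": sum(ch.isdigit() for ch in url),
        "count-letters": sum(ch.isalpha() for ch in url),
    }


features = lexical_features("http://www.example.com/a/b?id=1")
```

Features such as ‘short_url’ and ‘sus_url’ would additionally need lookup lists of shortening services and suspicious keywords, which are not given in the source.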

In cases where GA, PSO, and HHO selected overlapping but not identical feature subsets (as shown in Table 1), we adopted an intersection–union strategy. Specifically, we constructed a consensus feature set by (i) prioritizing features selected by at least two optimizers and (ii) including additional unique features only if they contributed significantly to validation performance. This approach ensured that the final feature set was both compact and robust, minimizing redundancy while retaining discriminative power. Moreover, feature selection results demonstrated that lexical and entropy-based features (e.g., URL length, the number of special characters, and randomness) were consistently prioritized across GA, PSO, and HHO, highlighting their strong discriminative power for detecting malicious obfuscation. Additionally, contextual attributes such as domain age and WHOIS records were often selected by GA and HHO, reflecting their importance in capturing the temporal and reliability aspects of domains. HHO further identified less common but critical content-based indicators, such as iframe or script intensity, which align with known attack behaviors. These findings indicate that optimization algorithms not only improve model performance but also uncover domain-relevant features that are strongly linked to malicious URL characteristics.
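Step (i) of the consensus strategy amounts to a simple vote count. The following sketch keeps every feature chosen by at least two optimizers; the example subsets are hypothetical placeholders rather than the contents of Table 1, and step (ii), the validation-based inclusion of unique features, is not implemented.

```python
from collections import Counter


def consensus_features(selections, min_votes=2):
    """Return features chosen by at least `min_votes` optimizers, sorted by name."""
    votes = Counter(feature
                    for chosen in selections.values()
                    for feature in set(chosen))       # one vote per optimizer
    return sorted(feature for feature, n in votes.items() if n >= min_votes)


# Hypothetical selections for illustration only.
example_selections = {
    "GA": ["count_embed_domain", "count-https", "count-letters", "short_url", "count@"],
    "PSO": ["count_embed_domain", "count-https", "count-letters"],
    "HHO": ["count_embed_domain", "count-https", "count-letters", "count@", "count%"],
}
consensus = consensus_features(example_selections)
```

Features with a single vote (here ‘short_url’ and ‘count%’) would then be candidates for step (ii), where each is added only if it improves validation performance.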

In this study, the features selected by three different algorithms are compared. As seen, the GA, PSO, and HHO algorithms selected some features in common (e.g., ‘count_embed_domain’, ‘count-https’, and ‘count-letters’) while differing on others. For instance, the ‘short_url’ feature was selected only by GA, whereas ‘count@’ was among the features chosen by both GA and HHO. Notably, the HHO algorithm identified a greater number of features compared to the others, whereas PSO focused on a more limited subset. These differences indicate that each algorithm’s feature selection strategies and evaluation criteria exhibit diversity depending on the dataset’s structure. Therefore, employing multiple algorithms together offers variety and flexibility in determining the most effective features for the dataset.

In this study, we employed the ELECTRA-Base variant (12 layers, 768 hidden units, and 12 attention heads), which balances computational efficiency and representational capacity, and used the WordPiece tokenizer with case-insensitive settings and a maximum sequence length of 128 tokens to ensure that URL structures such as ‘http’ and ‘www’, domain endings, and subdomain fragments were preserved. The model was fine-tuned end to end, with all layers updated instead of freezing pretrained parameters, and the training was conducted using the AdamW optimizer with a learning rate of , a batch size of 32, a weight decay of 0.01, and a dropout rate of 0.1 for 10 epochs. Pre-processing involved stripping URL protocols (e.g., ‘http://’), removing dynamic query strings to reduce noise, normalizing percent-encoded characters (e.g., ‘%20’) into their ASCII equivalents, and deduplicating identical samples to avoid data leakage, while post-processing ensured consistent token representation across the dataset. This configuration was adopted to guarantee reproducibility and transparency while enabling robust fine-tuning of ELECTRA for the classification task.
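The pre-processing steps listed above can be sketched with the Python standard library. The order of operations and the lower-casing step are assumptions (the latter mirrors the case-insensitive tokenizer setting), and the function names are illustrative.

```python
import re
from urllib.parse import unquote

_SCHEME = re.compile(r"^[a-zA-Z][a-zA-Z0-9+.-]*://")


def normalize_url(url):
    """Strip the protocol, drop the query string, decode percent-escapes, lower-case."""
    url = _SCHEME.sub("", url.strip())     # "http://", "https://", ...
    url = url.split("?", 1)[0]             # remove the query string before decoding,
                                           # so an encoded "%3F" is not treated as "?"
    url = unquote(url)                     # "%20" -> " "
    return url.lower()


def deduplicate(urls):
    """Normalize every URL and keep the first occurrence of each result."""
    return list(dict.fromkeys(normalize_url(u) for u in urls))


cleaned = deduplicate(["http://a.com/x?q=1", "a.com/x", "https://A.com/x?q=2"])
```

Deduplicating after normalization is stricter than deduplicating raw strings, because URLs that differ only in protocol or query string collapse to a single sample.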

The search spaces, population sizes, and iteration budgets of the metaheuristic algorithms used are explicitly defined. For feature selection and hyperparameter optimization, the Genetic Algorithm (GA), Particle Swarm Optimization (PSO), and Harris Hawk Optimization (HHO) were applied. For GA, the population size was set to 50 with a maximum of 100 iterations, and chromosomes were represented in a binary format covering 30 features. In PSO, the population size was set to 40 with 80 iterations, and particles were updated under velocity clamping with learning coefficients (). For the HHO algorithm, the population size was configured as 30 with 70 iterations, where the exploration and exploitation phases were dynamically balanced. The search spaces, along with model hyperparameters, were defined as follows: learning rate (0.0001–0.01), batch size (16–128), dropout rate (0.1–0.5), and maximum depth (3–12) for tree-based models. These parameter ranges are consistent with widely adopted optimization limits in the literature and were determined considering the size of the Malicious URL dataset and the available computational resources. Thus, the performance of the algorithms in both feature selection and hyperparameter optimization was evaluated on a comparable basis.
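The stated budgets and ranges can be collected in a small configuration object, which also makes the number of fitness evaluations per optimizer explicit. The sketch assumes one fitness evaluation per individual per iteration, which is a simplification (some HHO variants evaluate more than once per hawk); the class and variable names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SearchBudget:
    population: int
    iterations: int

    @property
    def evaluations(self):
        """Approximate number of fitness evaluations (population x iterations)."""
        return self.population * self.iterations


# Population sizes and iteration counts as stated in the text.
BUDGETS = {
    "GA": SearchBudget(population=50, iterations=100),
    "PSO": SearchBudget(population=40, iterations=80),
    "HHO": SearchBudget(population=30, iterations=70),
}

# Hyperparameter ranges as stated in the text (inclusive bounds).
SEARCH_SPACE = {
    "learning_rate": (0.0001, 0.01),
    "batch_size": (16, 128),
    "dropout": (0.1, 0.5),
    "max_depth": (3, 12),      # tree-based models only
}

evaluation_counts = {name: budget.evaluations for name, budget in BUDGETS.items()}
```

Under this assumption, GA receives roughly 5000 evaluations, PSO 3200, and HHO 2100, so differences between optimizers should be read with these unequal budgets in mind.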

When the results presented in Table 2 are analyzed, it becomes clear that both machine learning and deep learning approaches deliver highly competitive performances for multiclass malicious URL classification. Among machine learning algorithms, RF emerged as the strongest performer with an accuracy of 97% and an F1-score of 0.95, highlighting its robustness in handling feature variability within the dataset. Similarly, LGBM and XGB achieved accuracy rates of 96%, accompanied by balanced precision and recall values (0.95–0.96), demonstrating their strong capability to generalize across different malicious URL categories. In contrast, GB produced slightly lower results (94% accuracy and 0.92 F1-score), suggesting that while effective, it may be more sensitive to parameter tuning compared to the other ensemble-based methods.

For deep learning models, both LSTM and CNN individually demonstrated competitive performance, each achieving around 95% accuracy, although CNN showed slightly lower recall (0.91). The hybrid CNN+LSTM model without optimization provided a modest improvement (95.6% accuracy), indicating that combining convolutional layers for feature extraction with sequential layers for temporal dependencies can enhance classification performance. However, optimization-based enhancements led to varied outcomes. For instance, while HHO+CNN+LSTM achieved the highest performance among optimization-supported models (95.7% accuracy and F1-score 0.92), the PSO+CNN+LSTM combination performed poorly (81% accuracy and F1-score 0.70). This suggests that the effectiveness of meta-heuristic optimization is highly dependent on the alignment between the algorithm’s search dynamics and the underlying data distribution. The underperformance of PSO is likely due to its tendency to converge prematurely to local optima, leaving the hyperparameter space insufficiently explored, especially in high-dimensional feature spaces like that of the malicious URL dataset.

Finally, the ELECTRA model clearly outperformed all other methods, achieving 99% accuracy, precision, recall, and F1-score. This remarkable performance highlights the superiority of transformer-based pretrained language models in malicious URL detection. Unlike traditional ML and DL models, ELECTRA benefits from contextual understanding and deep semantic representation, which allows it to detect subtle differences in URL patterns more effectively. These results confirm that while classical machine learning and CNN/LSTM-based architectures remain highly competitive, transformer-based models offer a distinct advantage, especially in large-scale and complex text-based cybersecurity tasks.

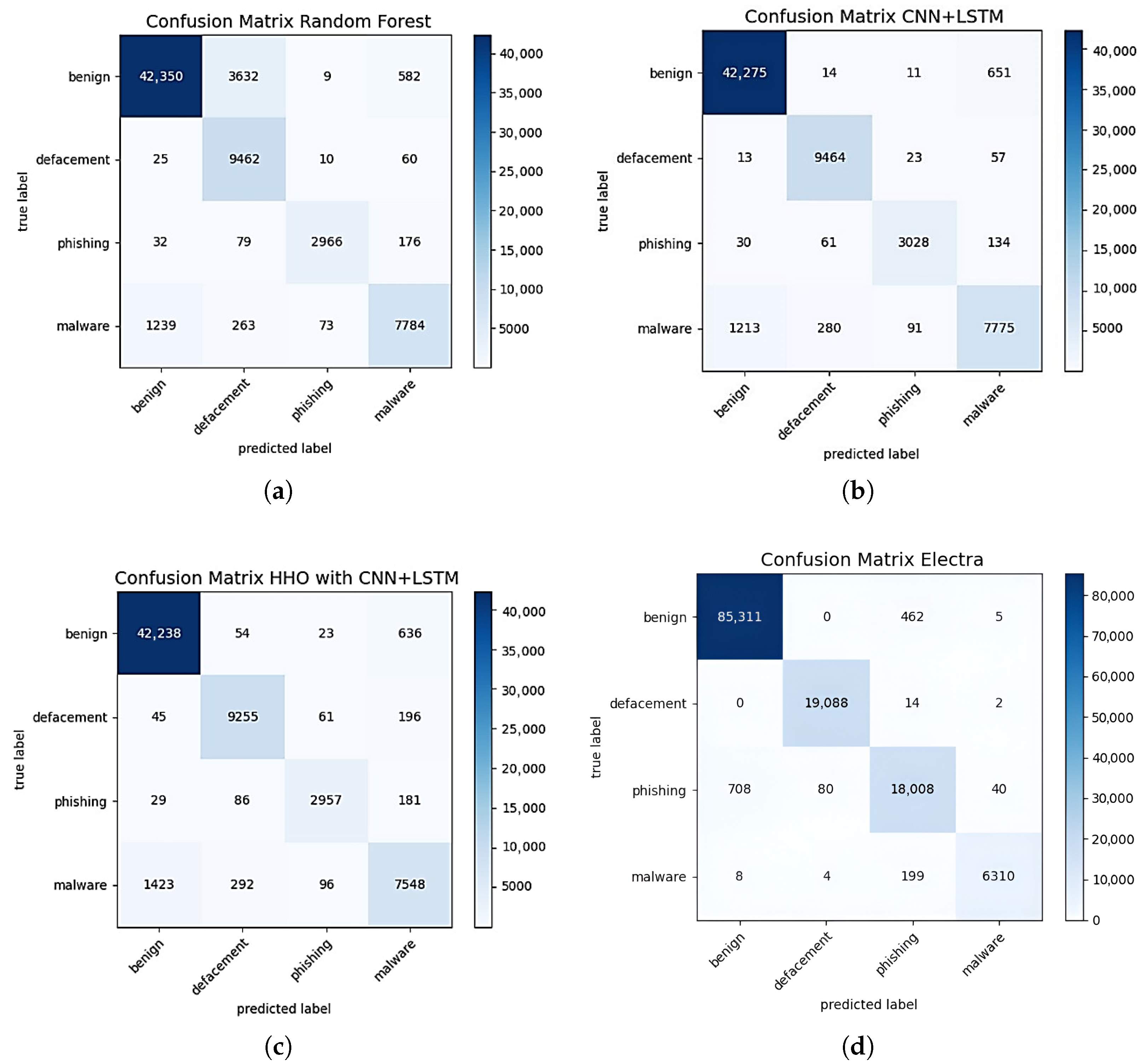

The confusion matrices presented in Figure 3 provide a detailed illustration of the successes and errors of the models across the four main classes: benign, defacement, phishing, and malware. The Random Forest (RF) model correctly classified 42,350 samples in the benign class while misclassifying 3632 benign samples as defacement; additionally, within the malware class, it achieved 7784 correct classifications but erroneously identified 1239 malware samples as benign. With the CNN+LSTM model, misclassifications in the benign and defacement classes decreased significantly, and high accuracy was achieved for phishing and malware examples. Notably, the CNN+LSTM model produced only 651 misclassifications in the benign class against 42,275 correct classifications. The CNN+LSTM model optimized with HHO achieved higher correct classification rates in the phishing and defacement classes, making 9255 and 2957 accurate predictions, respectively. Furthermore, mislabeling in the benign and malware classes also decreased.

The ELECTRA-based model demonstrated a clearly superior performance compared to the other models. In this model, only 5 out of 85,311 benign samples were misclassified into another class. Almost all samples in the defacement and phishing categories were also correctly classified, while in the malware class, only 19 out of 6310 samples were mislabeled. In particular, the ELECTRA model exhibited consistently high true classification rates and extremely low misclassification rates across all four classes. Overall, while classical machine learning and basic deep learning models showed relatively higher misclassification rates in dominant classes such as benign and defacement, language-model-based approaches like ELECTRA minimized errors for both minority and majority classes, yielding a much more balanced and higher overall performance. This explains why advanced models are preferred, especially in cybersecurity problems requiring multiclass classification and handling imbalanced data distributions.

In

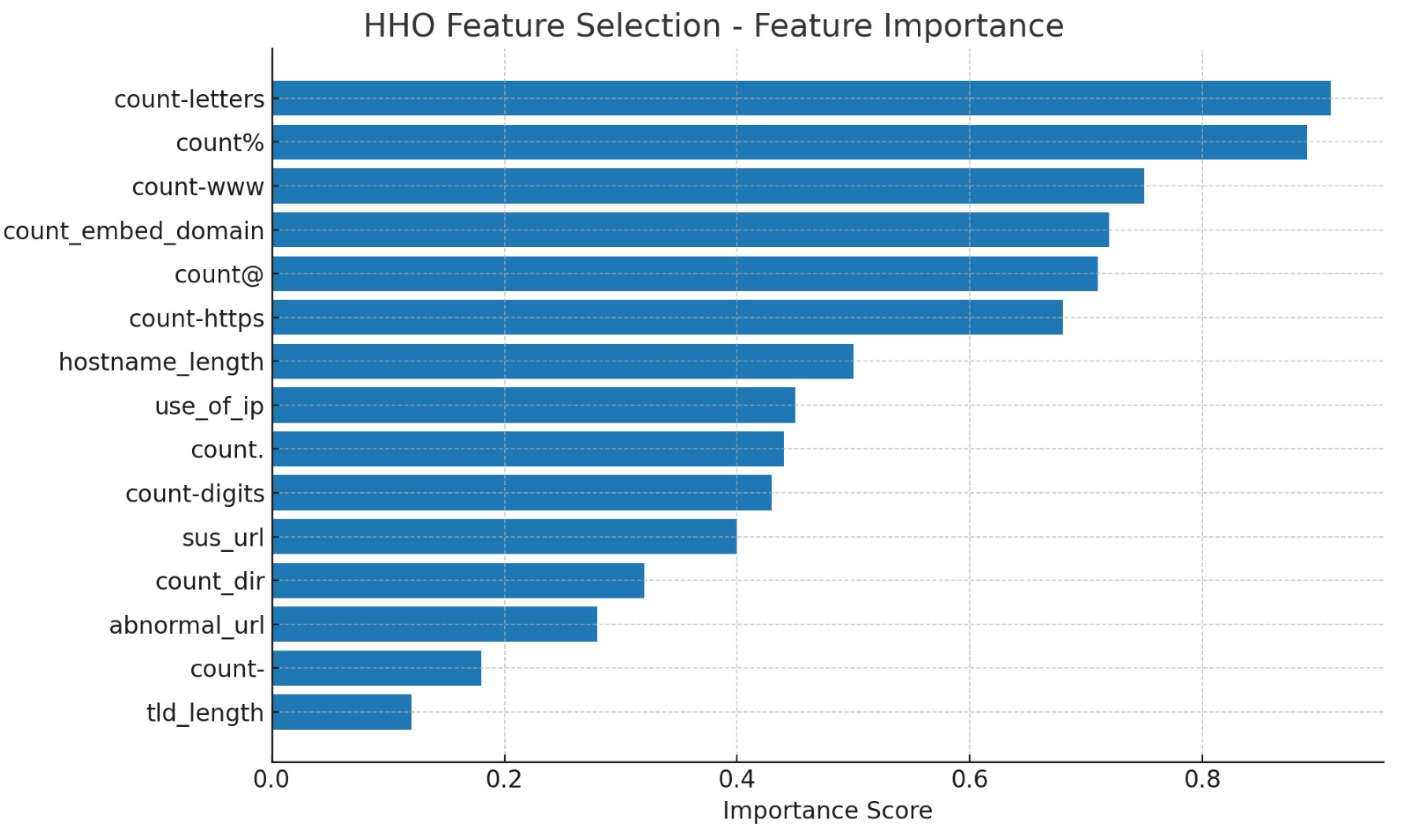

Figure 4, the distribution of feature importance values for the attributes selected by the HHO algorithm is represented alongside the corresponding feature names. Upon examination, it is evident that ‘count-letters’ (Feature 19), ‘count%’ (Feature 10), and ‘count-www’ (Feature 3) contribute most significantly to the model’s success in detecting malicious URLs. In other words, the number of letters within a URL, the use of the percent (%) character, and the frequency of ‘www’ are highly decisive in distinguishing malicious links. Additionally, features such as ‘count_embed_domain’ (Feature 6), ‘count@’ (Feature 4), and ‘count-https’ (Feature 8) also possess high importance and play a critical role in the model’s decision-making process. Conversely, attributes like ‘tld_length’ (Feature 17), ‘count-’ (Feature 11), and ‘fd_length’ (Feature 16) hold relatively lower importance for the model. These findings indicate that URL characteristics—such as the count of letters, special characters, and keywords—are prominent determinants in identifying malicious content and that the HHO algorithm effectively highlights such informative features. As a result, features with unnecessary or low informational value are eliminated, enhancing the effectiveness and accuracy of the model.

Table 3 provides a comprehensive comparison of performance metrics for binary classification of malicious and benign URLs using machine learning, deep learning, and optimization-supported hybrid models. Among the traditional machine learning approaches, RF achieved the highest overall performance, with an accuracy of 0.95, precision of 0.96, recall of 0.95, and F1-score of 0.95. This result highlights RF’s robustness in handling the feature set of the Malicious URL dataset, likely due to its ensemble nature and ability to reduce variance. XGBoost also performed strongly, with an accuracy of 0.94 and an F1-score of 0.94, which is consistent with its reputation for efficient gradient boosting and strong generalization capability. LGBM followed closely with an accuracy of 0.93, demonstrating that boosting-based models are competitive in detecting malicious URLs.

Deep learning models also performed competitively. The LSTM model reached an accuracy of 0.95 and the CNN achieved 0.94, indicating that both recurrent and convolutional structures can learn relevant URL patterns. However, the hybrid CNN+LSTM model, contrary to expectations, performed considerably worse (accuracy = 0.84, F1 = 0.80). This suggests that combining convolutional and recurrent layers without careful optimization may introduce complexity without yielding additional representational benefits. A likely explanation is that feature redundancy or overfitting negatively impacted its generalization ability.

When metaheuristic optimization algorithms were applied to the hybrid deep learning model, the outcome depended on the algorithm. Both PSO+CNN+LSTM and HHO+CNN+LSTM improved moderately on the basic CNN+LSTM and reached similar performance levels (accuracy ≈ 0.90, F1 ≈ 0.89), demonstrating the potential of optimization methods in enhancing feature selection and hyperparameter tuning. GA+CNN+LSTM, however, performed the worst of the three, with an accuracy of only 0.81 and an F1-score of 0.76, which is below the unoptimized CNN+LSTM. This result indicates that the effectiveness of metaheuristic optimization is highly algorithm-dependent; while PSO and HHO could better balance exploration and exploitation during the search process, GA may have failed to converge effectively in the given parameter space.

Finally, the ELECTRA-based transformer model substantially outperformed all other approaches, achieving 0.99 across all metrics (accuracy, precision, recall, and F1-score). This result underscores the strength of pretrained language models in capturing semantic and structural patterns within URLs. Unlike classical ML and deep learning models that rely heavily on handcrafted feature representations or limited training, ELECTRA leverages large-scale pretraining and contextual embeddings, enabling it to generalize better and achieve near-perfect classification. In summary, while classical ML and optimized deep learning models provided solid baselines, ELECTRA delivered the strongest and most consistent results. On this dataset, these results indicate that transformer-based architectures are more effective in malicious URL detection than both ensemble machine learning and conventional neural networks.

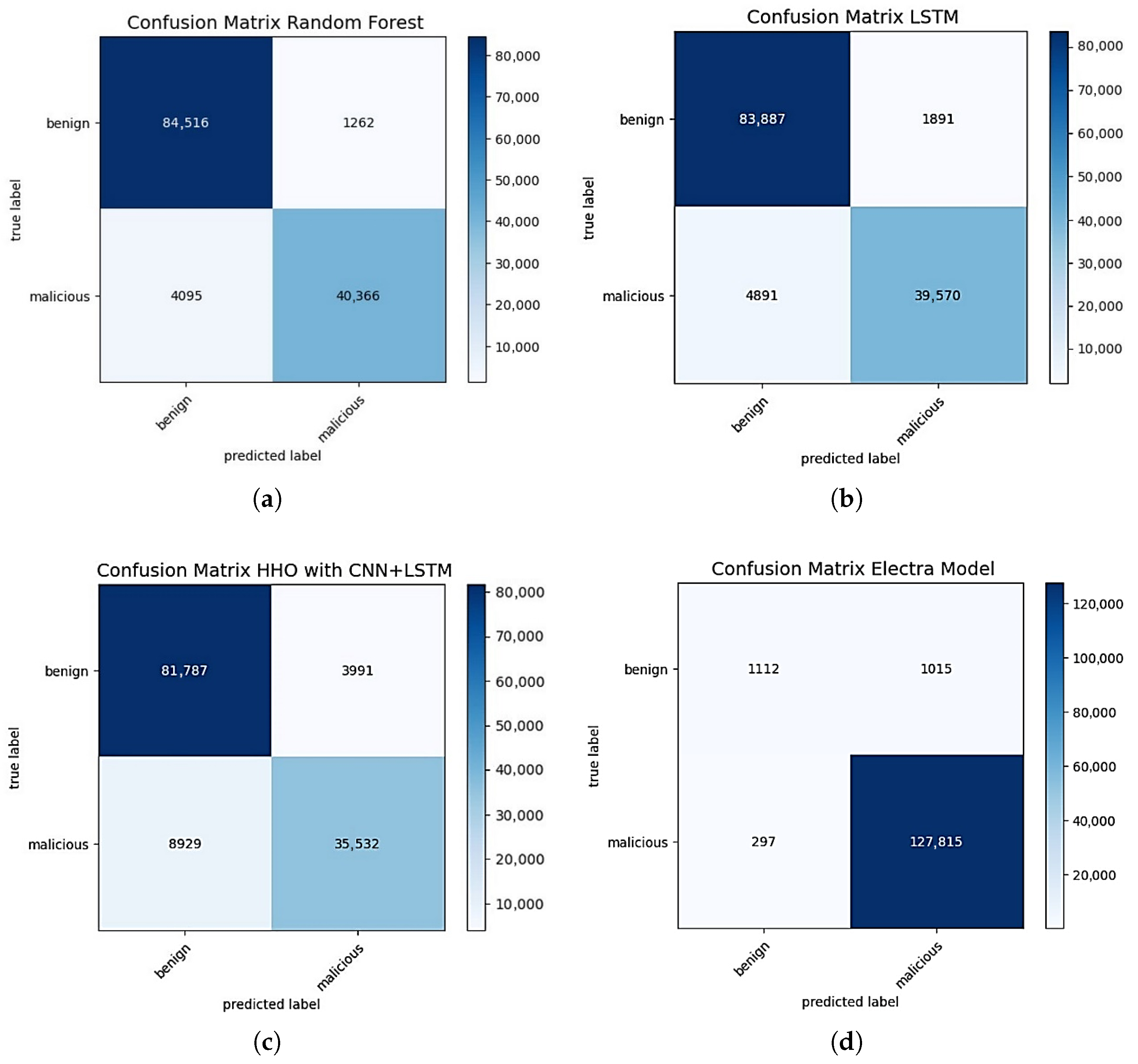

In Figure 5, the binary classification results of the four best-performing algorithms are visualized using confusion matrices. The RF and LSTM models achieved high true classification rates in the benign class, but a portion of malicious class examples were incorrectly labeled as benign. The CNN+LSTM model optimized with HHO, on the other hand, correctly classified 81,787 benign samples but misclassified 3991 benign instances as malicious, and 8929 malicious samples were misclassified as benign; this indicates that the misclassification rate for this model is somewhat higher than for the others. The ELECTRA-based DML, however, produced much more balanced and highly accurate results. This figure clearly demonstrates that the ELECTRA model keeps errors to a minimum in both classes, exhibiting superior discriminatory performance in the binary classification task compared to all other methods. In conclusion, while classical ML and basic DL approaches yield moderate results regarding misclassifications, advanced language-model-based methods stand out with high overall accuracy and low error rates, especially on large and imbalanced datasets.
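To make the link between confusion-matrix counts and the reported rates explicit, the following is a minimal sketch of the standard definitions, treating malicious as the positive class. The function name `binary_metrics` is an assumption, not part of the original study. Only the benign-row counts for HHO+CNN+LSTM are quoted in the text, so the usage lines compute only the share of benign URLs flagged as malicious; the count of correctly classified malicious samples is not stated and is deliberately left out.

```python
def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Standard rates from a 2x2 confusion matrix (malicious = positive)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "precision": precision,
        "recall": recall,
        "f1": f1,
        # Share of benign samples wrongly flagged as malicious.
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
    }


# Benign-row counts quoted in the text for HHO+CNN+LSTM.
benign_correct = 81_787
benign_as_malicious = 3_991
fpr = benign_as_malicious / (benign_correct + benign_as_malicious)
print(f"benign misclassified as malicious: {fpr:.4f}")  # prints 0.0465
```

In a security setting the false-negative side (malicious labeled as benign) usually matters more, which is why recall on the malicious class is worth reporting alongside overall accuracy.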

Table 4 reports the performance of the different algorithms for both binary and multiclass classification. In the binary setting, the Random Forest and LSTM models achieved comparable performance (F1 = 0.95 and 0.94, respectively). Their approximate confidence intervals, [0.93–0.97] for Random Forest and [0.92–0.96] for LSTM, overlap, which suggests that the two models perform similarly. In contrast, the ELECTRA model achieved a substantially higher F1-score of 0.99, with a confidence interval of [0.98–1.00] that lies above both. This highlights the superior and consistent effectiveness of language-model-based approaches in binary classification tasks. In the multiclass scenario, LightGBM and XGBoost both yielded F1-scores of 0.94, with estimated confidence intervals of approximately [0.92–0.96], suggesting no meaningful performance difference between the two models. Once again, the ELECTRA model stood out with an F1-score of 0.99, clearly surpassing the traditional machine learning algorithms. The findings indicate that while classical machine learning and conventional deep learning methods achieve satisfactory results, transformer-based models such as ELECTRA provide more reliable and robust performance in both binary and multiclass classification tasks. This suggests that transformer-based approaches are likely to play a more prominent role in the future of malicious URL detection and similar cybersecurity applications.
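The text does not state how the approximate confidence intervals were obtained. One common option is a percentile bootstrap over the test predictions; the sketch below illustrates that option under this assumption and is not the study's procedure. The helper names (`f1_score`, `bootstrap_f1_interval`, `intervals_overlap`) are hypothetical. Note that overlapping intervals are only a rough indication of similarity, not a formal significance test.

```python
import random


def f1_score(y_true, y_pred, positive=1):
    """F1 for the positive class, computed from paired labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def bootstrap_f1_interval(y_true, y_pred, n_resamples=1000, alpha=0.05, seed=0):
    """Percentile bootstrap interval for F1 (assumed method, see lead-in)."""
    rng = random.Random(seed)
    n = len(y_true)
    scores = []
    for _ in range(n_resamples):
        # Resample test examples with replacement, keeping pairs aligned.
        idx = [rng.randrange(n) for _ in range(n)]
        scores.append(f1_score([y_true[i] for i in idx],
                               [y_pred[i] for i in idx]))
    scores.sort()
    lower = scores[int((alpha / 2) * n_resamples)]
    upper = scores[min(n_resamples - 1, int((1 - alpha / 2) * n_resamples))]
    return lower, upper


def intervals_overlap(a, b):
    """True if closed intervals a = (lo, hi) and b = (lo, hi) intersect."""
    return a[0] <= b[1] and b[0] <= a[1]


# Intervals quoted in the text for the binary task.
print(intervals_overlap((0.93, 0.97), (0.92, 0.96)))  # RF vs. LSTM
print(intervals_overlap((0.98, 1.00), (0.93, 0.97)))  # ELECTRA vs. RF
```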

Table 5 illustrates sample predictions and misclassification cases for different models (ELECTRA, HHO+CNN+LSTM, and Random Forest) under multiclass and binary classification scenarios. The ELECTRA model produced mostly accurate predictions and achieved high precision, especially in the phishing and benign classes. However, it did misclassify, for example, the URL transit-port.net/AI.CogSci.Robotics/robotics.html, which is benign, as phishing. The HHO+CNN+LSTM and Random Forest models also performed well overall but occasionally produced false positives in the benign class, such as labeling a benign URL as phishing or malware. Similarly, in the binary classification scenario, the ELECTRA model correctly identified most benign URLs, although in some cases it mislabeled malicious URLs as benign. Overall, this table demonstrates that the ELECTRA model consistently has the lowest error rate; however, no model is entirely flawless, and there remains potential for misclassification, particularly with the diverse URL structures encountered in real-world data. It is also notable that most misclassified examples were labeled as benign, which highlights the importance of minimizing false negatives in cybersecurity applications.

Table 6 provides a comprehensive comparison of the proposed approach against several state-of-the-art methods, considering both the multiclass (Scenario-1) and binary (Scenario-2) classification tasks. In the multiclass scenario (Scenario-1), the proposed ELECTRA-based model achieved highly competitive results, with accuracy, precision, recall, and F1-score all reported at 0.99. This performance underscores the robustness of the ELECTRA architecture, which effectively balances false positives and false negatives. When compared to other multiclass approaches in the literature, such as ref. [4] (Scenario-1) with 96.04% accuracy and 93.97% F1, ref. [18] (Scenario-1) with 98.78% accuracy, and ref. [20] (Scenario-1) with 97% accuracy, the proposed model demonstrates superior performance, positioning it as a state-of-the-art solution in multiclass classification. In contrast, the binary classification task (Scenario-2) employed a hybrid HHO+CNN+LSTM model, which obtained an accuracy of 90.4%, precision of 0.90, recall of 0.87, and F1-score of 0.89. Although these results confirm the adaptability of our optimization-driven pipeline, they fall short when compared with stronger binary baselines. For example, ref. [4] (Scenario-2) reported 98.19% accuracy and 97.26% F1, ref. [17] (Scenario-2) achieved 99.26% precision, 98.73% recall, and 98.99% F1, and ref. [41] (Scenario-2) outperformed all other methods with 99.82% accuracy, supported by its integration of n-gram features, CNN, BiLSTM, and Attention mechanisms. The high performance of these approaches is largely due to the use of more complex and computationally intensive architectures, which enable deeper feature representation but at the cost of increased training and inference complexity.

The findings highlight two important aspects. First, the proposed method delivers state-of-the-art performance in multiclass classification, surpassing most competing methods in the literature. Second, while the binary scenario results are competitive, they remain below those of heavily engineered or ensemble-based methods such as ref. [41]. This trade-off emphasizes the efficiency–performance balance of our approach: ELECTRA achieves near-ceiling performance with a relatively streamlined architecture in the multiclass setting, while the binary model offers a resource-efficient yet flexible framework. Future work will focus on enhancing the binary case by incorporating more powerful representation learning techniques, such as transformer fine-tuning or calibrated ensembling, to bridge the performance gap with highly complex architectures.