A Multi-Scale Feature Fusion Dual-Branch Mamba-CNN Network for Landslide Extraction

Abstract

Featured Application


1. Introduction

- MSCG-Net is a novel landslide detection network that combines omnidirectional multiscale Mamba modules with convolutional layers in a dual-branch architecture. This design fuses global and local features, which improves boundary preservation and overall segmentation quality.

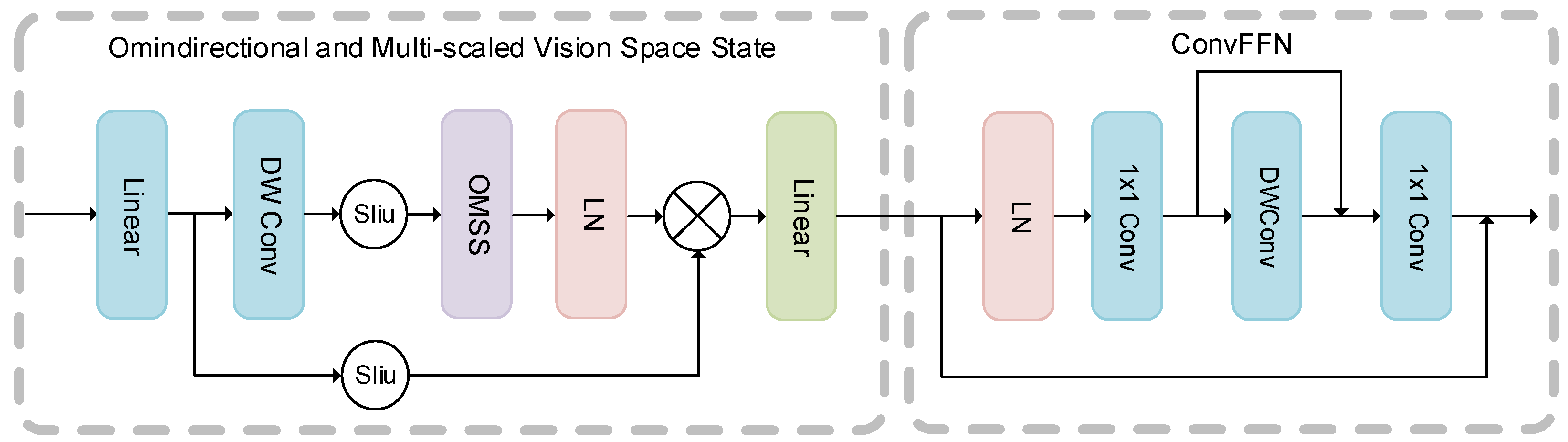

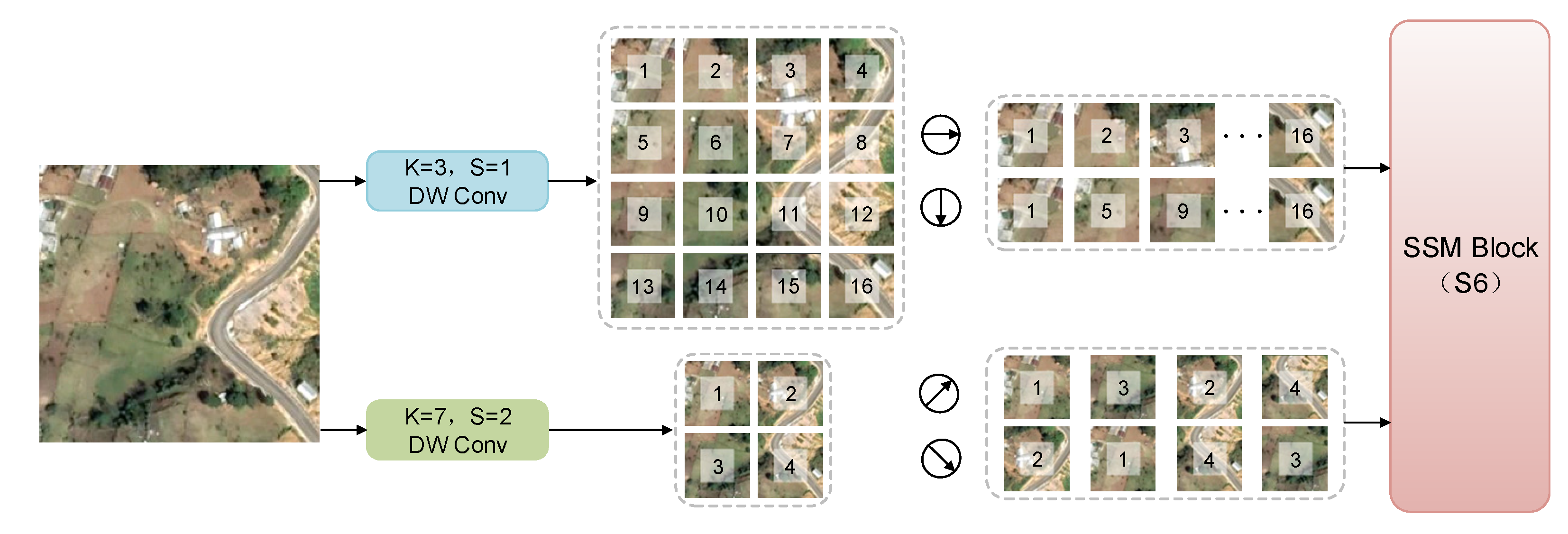

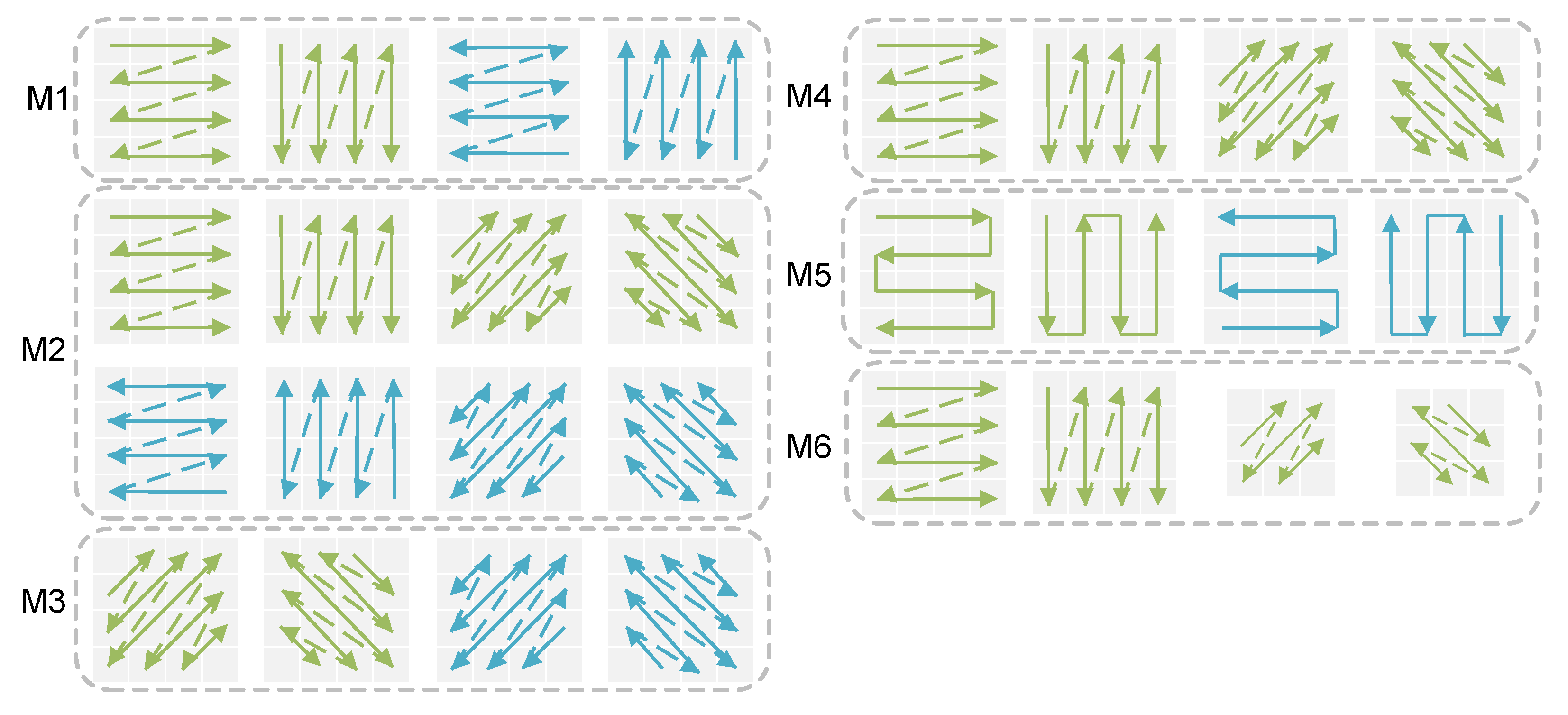

- The OMSS module is proposed. It employs a multidirectional and multiscale scanning strategy that enhances boundary perception in complex and heterogeneous landslide regions while improving computational efficiency.

- The Adaptive Feature Enhancement Module (AFEM) is introduced to effectively enhance the spatial details and global dependencies extracted from both branches.
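
The multidirectional, multiscale scanning idea behind OMSS can be illustrated by how a 2D feature map is unrolled into the 1D token sequences that a Mamba block consumes. The NumPy sketch below is a minimal illustration under our own assumptions, not the authors' implementation: the four directions (row, column, diagonal, anti-diagonal) plus their reverses, average pooling as the downscaling step, and the names `scan_orders`, `avg_pool`, and `omss_sequences` are all hypothetical. The state-space model itself is omitted.

```python
import numpy as np

def scan_orders(h, w):
    """Hypothetical scan orders: permutations of the flattened positions of an h x w grid."""
    idx = np.arange(h * w).reshape(h, w)
    offsets = range(-(h - 1), w)  # every diagonal offset k = col - row
    orders = {
        "row": idx.ravel(),                 # left-to-right, top-to-bottom
        "col": idx.T.ravel(),               # top-to-bottom, left-to-right
        "diag": np.concatenate([np.diagonal(idx, offset=k) for k in offsets]),
        "anti_diag": np.concatenate(
            [np.diagonal(np.fliplr(idx), offset=k) for k in offsets]
        ),
    }
    # Reverse each direction so every position also "sees" what comes after it.
    for name in list(orders):
        orders[name + "_rev"] = orders[name][::-1]
    return orders

def avg_pool(feat, s):
    """Non-overlapping s x s average pooling of an (H, W, C) map (edges cropped)."""
    h, w, c = feat.shape
    h2, w2 = h // s, w // s
    f = feat[: h2 * s, : w2 * s]
    return f.reshape(h2, s, w2, s, c).mean(axis=(1, 3))

def omss_sequences(feat, scales=(1, 2)):
    """Token sequences of shape (tokens, C) for every (scale, direction) pair."""
    seqs = {}
    for s in scales:
        f = feat if s == 1 else avg_pool(feat, s)
        h, w, c = f.shape
        flat = f.reshape(h * w, c)
        for name, order in scan_orders(h, w).items():
            seqs[(s, name)] = flat[order]
    return seqs
```

For a 2×3 grid, the diagonal order visits positions 3, 0, 4, 1, 5, 2, so pixels that are diagonal neighbours become adjacent in the sequence, which row and column scans alone do not provide. Scanning a pooled copy of the map shortens the sequence (a 4×4 map pooled by 2 yields 4 tokens), which is one way a multiscale scheme can reduce cost.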

2. Materials and Methods

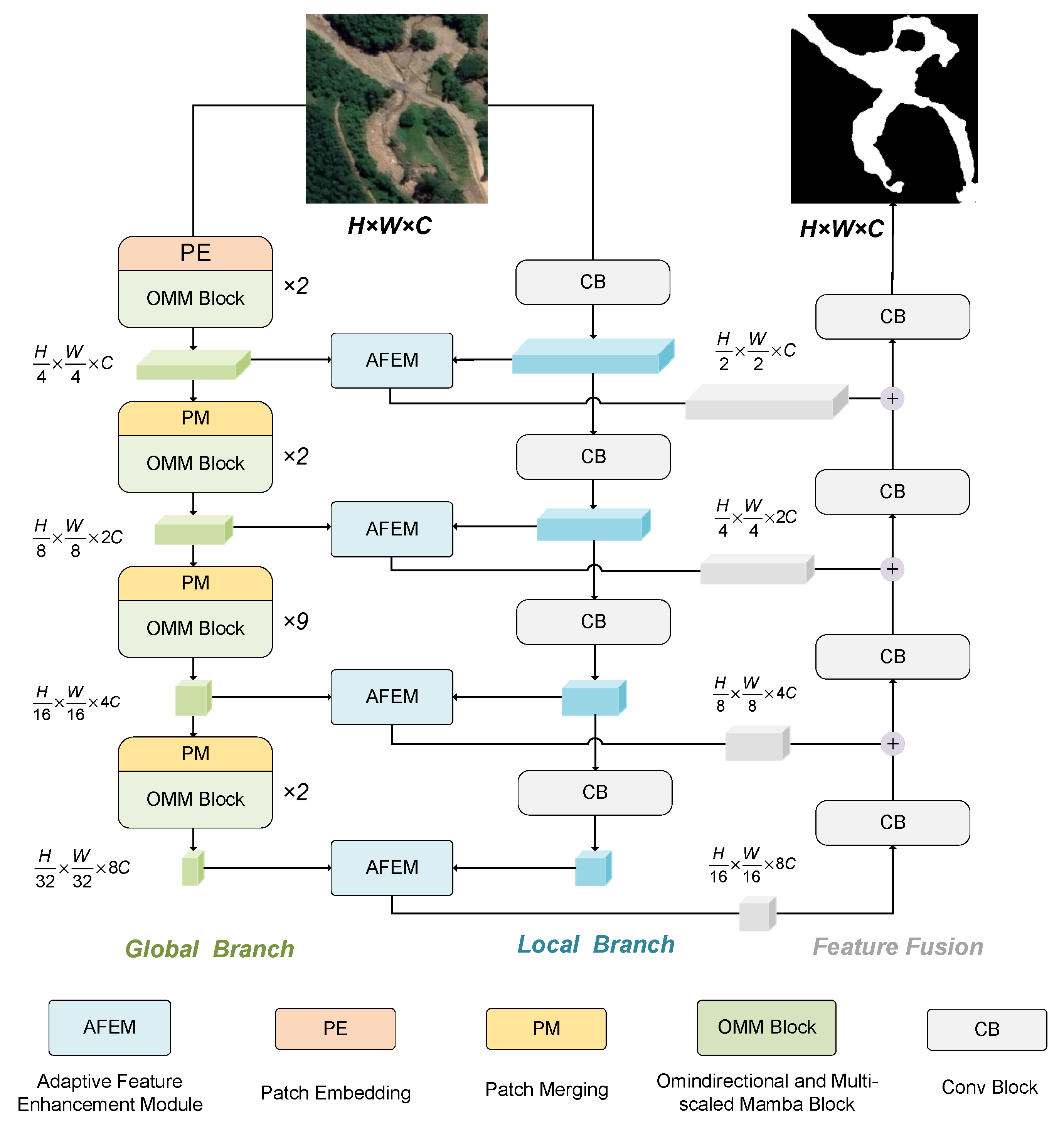

2.1. Overall Framework of the Model

- A dual-branch architecture is designed to extract both contextual dependencies and boundary details.

- The OMSS module introduces diagonal semantic information and integrates multiscale features to enhance the model’s perception of features across different spatial scales.

- The AFEM module filters noise and enhances features from both branches to accurately refine contextual representations and edge information.
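
The items above describe AFEM and the fusion of the two branches only at a high level. As one concrete reading of "filter noise and enhance features from both branches", the NumPy sketch below sharpens the CNN-branch features with a soft high-pass residual and then mixes the two branches through a per-channel sigmoid gate. Everything in it is hypothetical: the 3×3 box blur, the blending weight `alpha`, the gate weights `w_gate`, and the function names are our assumptions and are not taken from the paper's AFEM, SFA, or SAHF definitions.

```python
import numpy as np

def box_blur3(x):
    """3x3 mean filter with edge padding on an (H, W, C) array (hypothetical low-pass)."""
    p = np.pad(x, ((1, 1), (1, 1), (0, 0)), mode="edge")
    h, w = x.shape[:2]
    acc = np.zeros_like(x, dtype=float)
    for di in range(3):
        for dj in range(3):
            acc += p[di:di + h, dj:dj + w]
    return acc / 9.0

def soft_high_pass(x, alpha):
    """Add back a fraction alpha of the high-frequency residual x - blur(x)."""
    return x + alpha * (x - box_blur3(x))

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gated_fusion(local, context, w_gate, alpha=0.5):
    """Hypothetical fusion of two (H, W, C) branch outputs with a per-channel gate.

    local:   features from the convolutional (local detail) branch
    context: features from the Mamba (contextual) branch
    w_gate:  (C, C) gate weights, assumed learned elsewhere
    """
    local = soft_high_pass(local, alpha)            # emphasise edges in the CNN branch
    pooled = (local + context).mean(axis=(0, 1))    # (C,) global channel descriptor
    gate = sigmoid(pooled @ w_gate)                 # (C,) weights in (0, 1)
    return gate * local + (1.0 - gate) * context    # convex per-channel mix
```

Because the output is a convex per-channel combination, each channel can lean towards boundary detail or towards global context depending on the gate, and on a spatially constant input the high-pass term contributes nothing, so flat regions are left unchanged.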

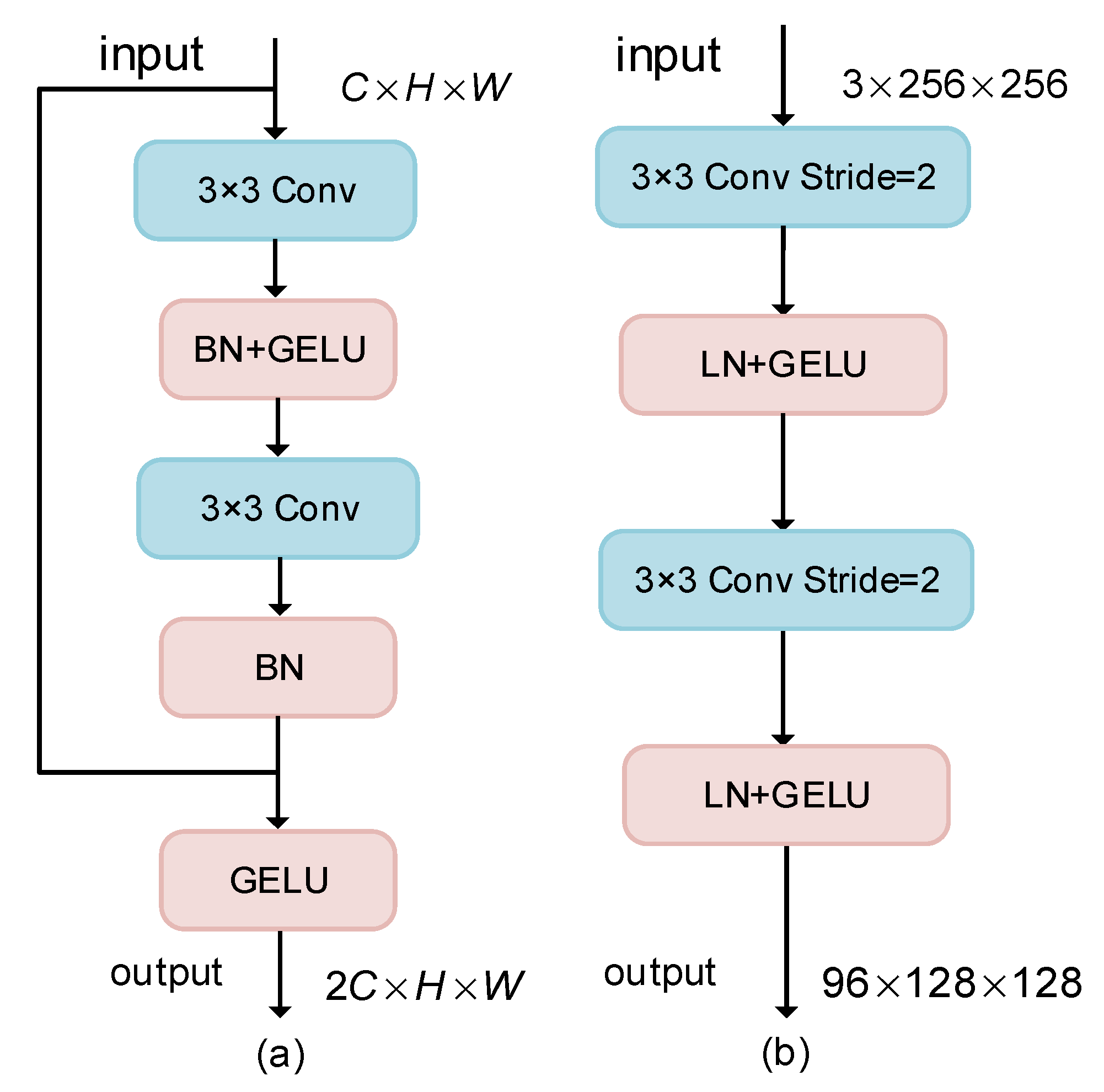

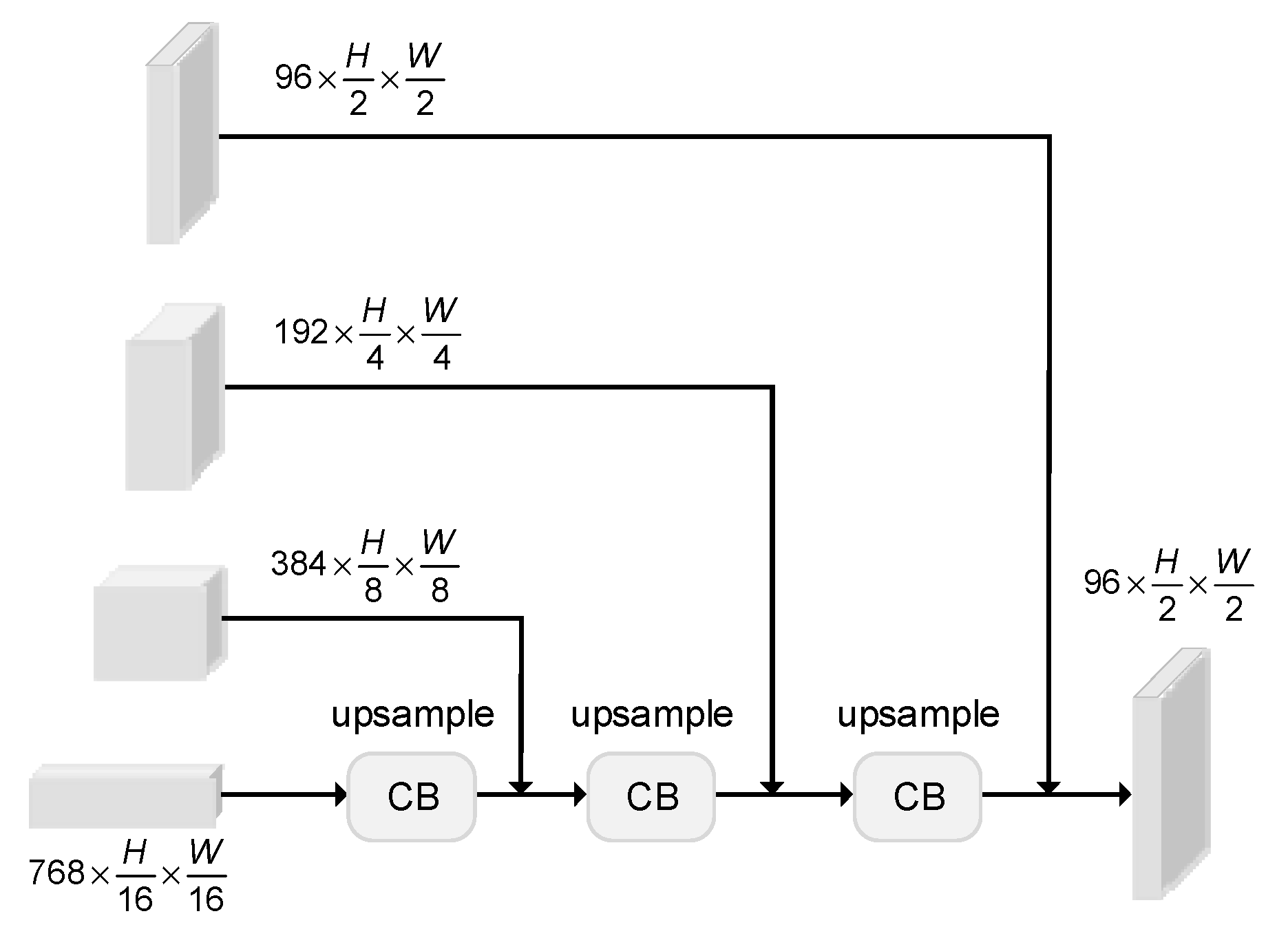

2.2. The Local Detail Extraction Branch

2.3. The Contextual Feature Extraction Branch

2.4. Adaptive Feature Enhancement Module

2.5. Feature Fusion Module

2.6. Performance Metrics

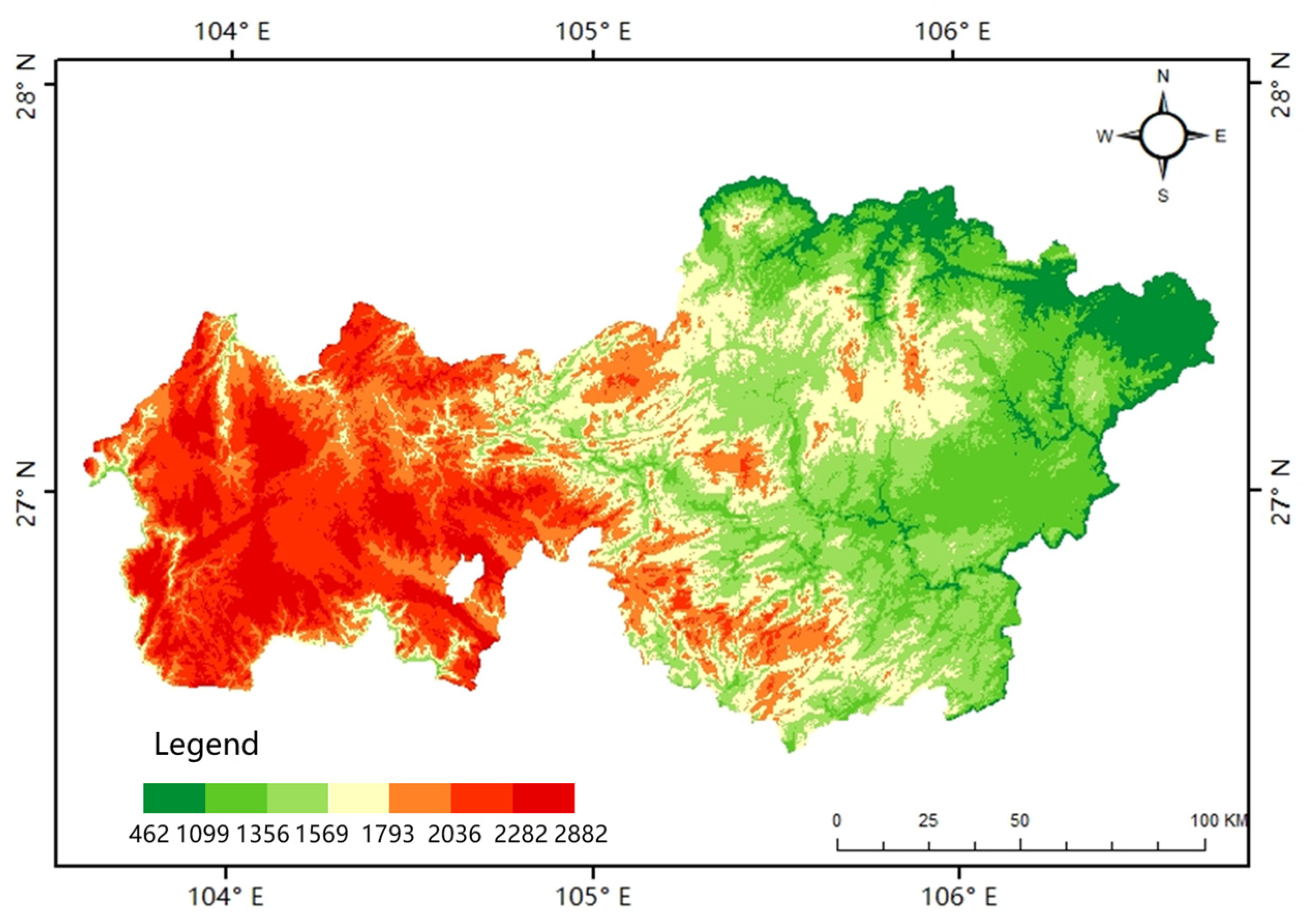

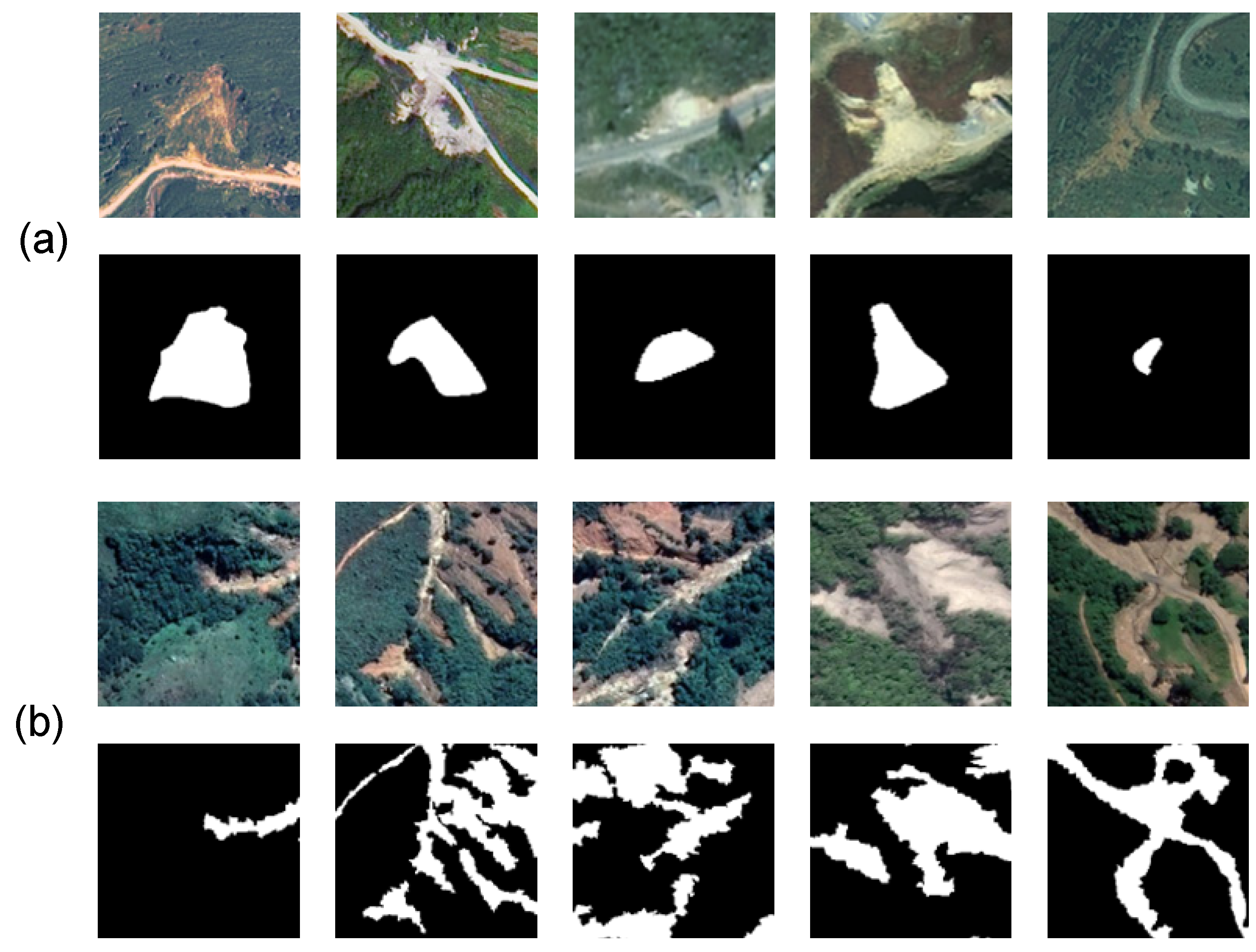
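
The result tables report overall accuracy (OA), precision (P), recall (R), F1 score, and intersection over union (IoU). Assuming the standard pixel-wise definitions for binary landslide masks (landslide as the positive class), these can be computed from confusion-matrix counts as in the minimal sketch below; the function name `binary_metrics` and the convention of returning 0 for an empty denominator are our own choices.

```python
import numpy as np

def binary_metrics(pred, gt):
    """Pixel-wise OA, P, R, F1 and IoU (in %) for binary masks."""
    pred = np.asarray(pred, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    tp = np.sum(pred & gt)      # landslide predicted as landslide
    fp = np.sum(pred & ~gt)     # background predicted as landslide
    fn = np.sum(~pred & gt)     # landslide missed
    tn = np.sum(~pred & ~gt)    # background predicted as background
    oa = (tp + tn) / (tp + tn + fp + fn)
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return {k: 100.0 * v for k, v in dict(OA=oa, P=p, R=r, F1=f1, IoU=iou).items()}
```

When F1 and IoU are computed from the same pooled counts they are linked by IoU = F1 / (2 − F1). For example, the Bijie row for the proposed model reports F1 = 87.67%, and 0.8767 / (2 − 0.8767) ≈ 0.7805, which matches the reported IoU of 78.04% to within rounding.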

2.7. Datasets and Study Areas

3. Results

3.1. Experiment Settings

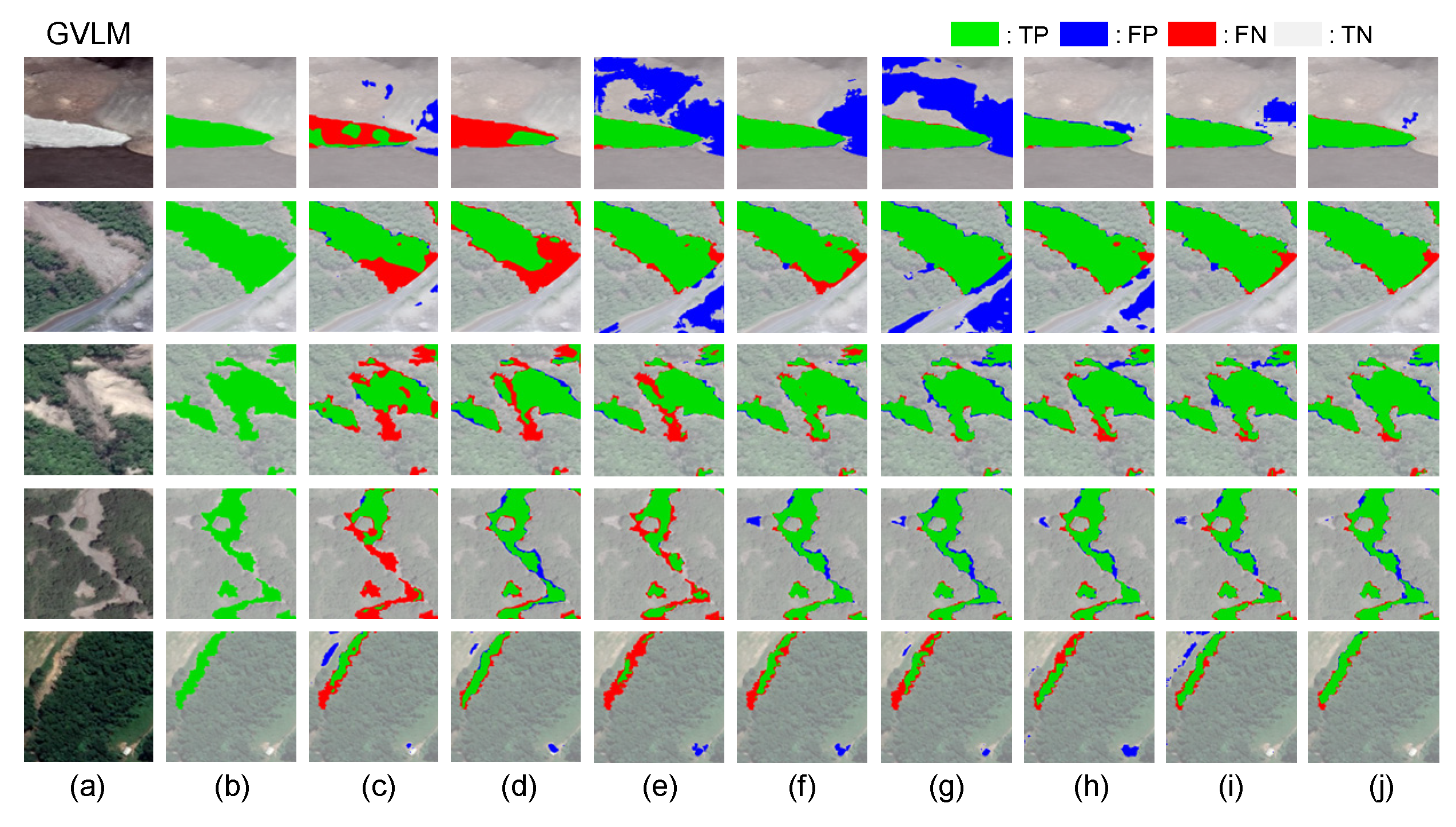

3.2. Comparative Experiments

3.3. Computational Efficiency

4. Discussion

4.1. Ablation Study

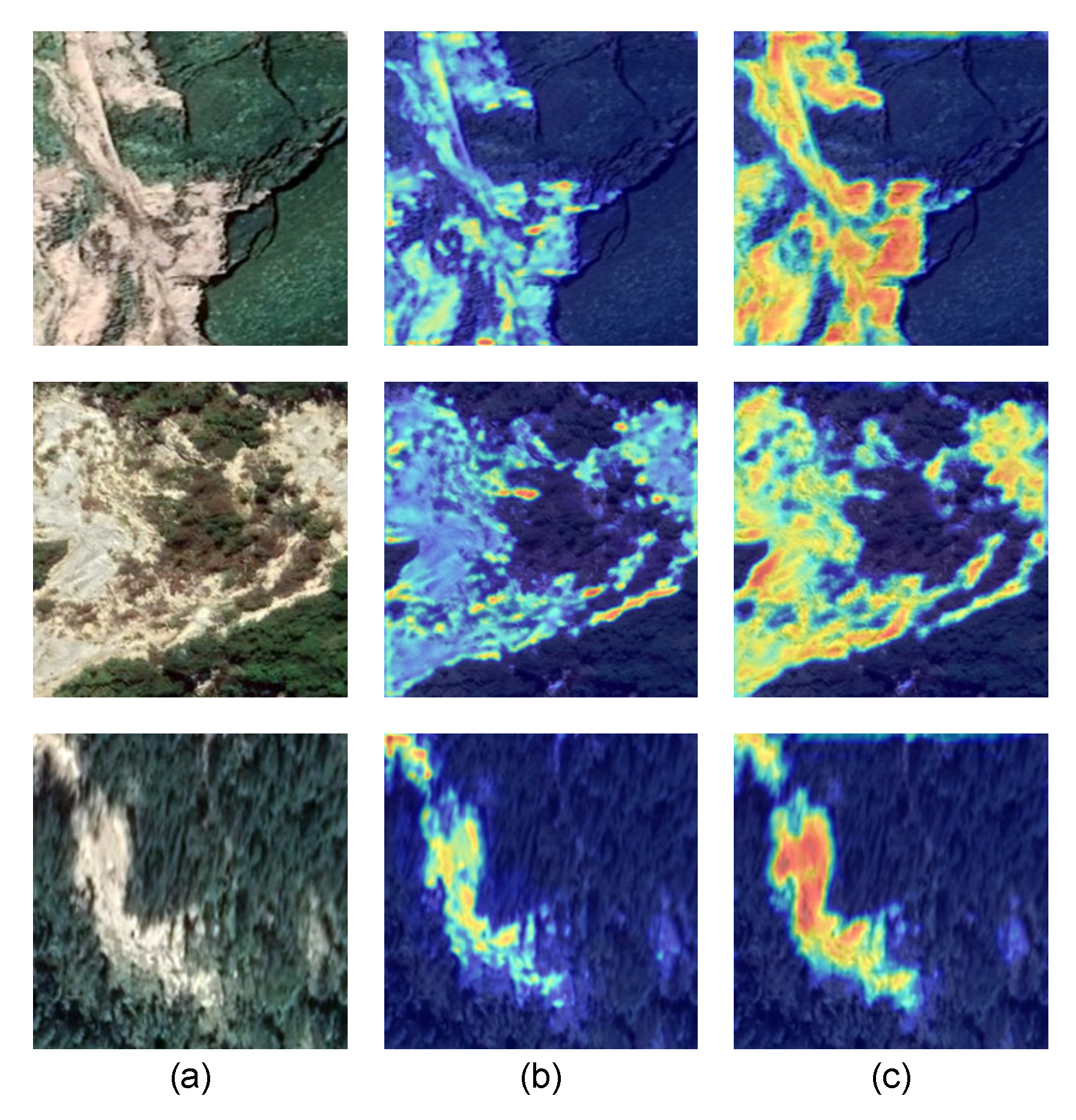

4.2. CAM Visualization

4.3. Limitations and Future Work

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| MSCG-Net | Multi-Scale Spatial Context-Guided Network |
| CNN | Convolutional Neural Network |
| AFEM | Adaptive Feature Enhancement Module |
| SFA | Structural Feature Attention |
| SAHF | Soft Adaptive High-Pass Filter |
| OMSS | Omnidirectional and Multi-Scaled Scan 2D |

References

- Klose, M.; Highland, L.; Damm, B.; Terhorst, B. Estimation of direct landslide costs in industrialized countries: Challenges, concepts, and case study. In Proceedings of the Landslide Science for a Safer Geoenvironment: Volume 2: Methods of Landslide Studies; Springer: Cham, Switzerland, 2014; pp. 661–667.

- Ozturk, U.; Bozzolan, E.; Holcombe, E.A.; Shukla, R.; Pianosi, F.; Wagener, T. How climate change and unplanned urban sprawl bring more landslides. Nature 2022, 608, 262–265.

- Stumpf, A.; Kerle, N. Object-oriented mapping of landslides using Random Forests. Remote Sens. Environ. 2011, 115, 2564–2577.

- Prakash, N.; Manconi, A.; Loew, S. A new strategy to map landslides with a generalized convolutional neural network. Sci. Rep. 2021, 11, 9722.

- Niu, C.; Gao, O.; Lu, W.; Liu, W.; Lai, T. Reg-SA–UNet++: A lightweight landslide detection network based on single-temporal images captured postlandslide. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 9746–9759.

- Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.-S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep learning in remote sensing: A comprehensive review and list of resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

- Chen, X.; Zhao, C.; Lu, Z.; Xi, J. Landslide Inventory Mapping Based on Independent Component Analysis and UNet3+: A Case of Jiuzhaigou, China. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 17, 2213–2223.

- Qi, W.; Wei, M.; Yang, W.; Xu, C.; Ma, C. Automatic Mapping of Landslides by the ResU-Net. Remote Sens. 2020, 12, 2487.

- Ghorbanzadeh, O.; Crivellari, A.; Ghamisi, P.; Shahabi, H.; Blaschke, T. A comprehensive transferability evaluation of U-Net and ResU-Net for landslide detection from Sentinel-2 data (case study areas from Taiwan, China, and Japan). Sci. Rep. 2021, 11, 14629.

- Peta, K.; Stemp, W.J.; Stocking, T.; Chen, R.; Love, G.; Gleason, M.A.; Houk, B.A.; Brown, C.A. Multiscale Geometric Characterization and Discrimination of Dermatoglyphs (Fingerprints) on Hardened Clay—A Novel Archaeological Application of the GelSight Max. Materials 2025, 18, 2939.

- Lei, T.; Zhang, Y.; Lv, Z.; Li, S.; Liu, S.; Nandi, A.K. Landslide inventory mapping from bitemporal images using deep convolutional neural networks. IEEE Geosci. Remote Sens. Lett. 2019, 16, 982–986.

- Yi, Y.; Zhang, W. A new deep-learning-based approach for earthquake-triggered landslide detection from single-temporal RapidEye satellite imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 6166–6176.

- Lu, W.; Hu, Y.; Shao, W.; Wang, H.; Zhang, Z.; Wang, M. A multiscale feature fusion enhanced CNN with the multiscale channel attention mechanism for efficient landslide detection (MS2LandsNet) using medium-resolution remote sensing data. Int. J. Digit. Earth 2024, 17, 2300731.

- Lv, P.; Ma, L.; Li, Q.; Du, F. ShapeFormer: A shape-enhanced vision transformer model for optical remote sensing image landslide detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 2681–2689.

- Zhao, Z.; Chen, T.; Dou, J.; Liu, G.; Plaza, A. Landslide susceptibility mapping considering landslide local-global features based on CNN and transformer. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 7475–7489.

- Gu, A.; Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. arXiv 2023, arXiv:2312.00752.

- Zhu, L.; Liao, B.; Zhang, Q.; Wang, X.; Liu, W.; Wang, X. Vision Mamba: Efficient Visual Representation Learning with Bidirectional State Space Model. arXiv 2024, arXiv:2401.09417.

- Liu, Y.; Tian, Y.; Zhao, Y.; Yu, H.; Xie, L.; Wang, Y.; Ye, Q.; Jiao, J.; Liu, Y. VMamba: Visual state space model. arXiv 2024, arXiv:2401.10166.

- Zhao, S.; Chen, H.; Zhang, X.; Xiao, P.; Bai, L.; Ouyang, W. RS-Mamba for large remote sensing image dense prediction. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–14.

- Zhu, Q.; Fang, Y.; Cai, Y.; Chen, C.; Fan, L. Rethinking scanning strategies with Vision Mamba in semantic segmentation of remote sensing imagery: An experimental study. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 18223–18234.

- Yang, C.; Chen, Z.; Espinosa, M.; Ericsson, L.; Wang, Z.; Liu, J.; Crowley, E.J. PlainMamba: Improving non-hierarchical Mamba in visual recognition. arXiv 2024, arXiv:2403.17695.

- Shi, Y.; Dong, M.; Xu, C. Multi-scale VMamba: Hierarchy in hierarchy visual state space model. arXiv 2024, arXiv:2405.14174.

- Zhao, C.; Chen, S.; Wu, F.; Li, H. Feasibility analysis of the Mamba-based landslide mapping from remote sensing images. In Proceedings of the 5th International Conference on Artificial Intelligence and Computer Engineering, Wuhu, China, 8–10 November 2024; pp. 501–505.

- Ma, X.; Zhang, X.; Pun, M.-O. RS3Mamba: Visual state space model for remote sensing image semantic segmentation. IEEE Geosci. Remote Sens. Lett. 2024, 21, 1–5.

- Wu, R.; Liu, Y.; Liang, P.; Chang, Q. UltraLight VM-UNet: Parallel Vision Mamba significantly reduces parameters for skin lesion segmentation. arXiv 2024, arXiv:2403.20035.

- Yu, W.; Wang, X. MambaOut: Do we really need Mamba for vision? In Proceedings of the Computer Vision and Pattern Recognition Conference (CVPR), Seattle, WA, USA, 17–21 June 2024; pp. 4484–4496.

- Xu, Y.; Ouyang, C.; Xu, Q.; Wang, D.; Zhao, B.; Luo, Y. CAS landslide dataset: A large-scale and multisensor dataset for deep learning-based landslide detection. Sci. Data 2024, 11, 12.

- Ruan, J.; Li, J.; Xiang, S. VM-UNet: Vision Mamba UNet for medical image segmentation. arXiv 2024, arXiv:2402.02491.

- Liu, M.; Dan, J.; Lu, Z.; Yu, Y.; Li, Y.; Li, X. CM-UNet: Hybrid CNN-Mamba UNet for remote sensing image semantic segmentation. arXiv 2024, arXiv:2405.10530.

- Cao, Y.; Liu, C.; Wu, Z.; Zhang, L.; Yang, L. Remote Sensing Image Segmentation Using Vision Mamba and Multi-Scale Multi-Frequency Feature Fusion. Remote Sens. 2025, 17, 1390.

- Liu, J.; Yang, H.; Zhou, H.-Y.; Xi, Y.; Yu, L.; Li, C.; Liang, Y.; Shi, G.; Yu, Y.; Zhang, S. Swin-UMamba: Mamba-based UNet with ImageNet-based pretraining. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Marrakesh, Morocco, 6–10 October 2024; pp. 615–625.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 9992–10022.

- Yang, J.; Cai, W.; Chen, G.; Yan, J. A State Space Model-Driven Multiscale Attention Method for Geological Hazard Segmentation. IEEE Trans. Instrum. Meas. 2025, 74, 1–12.

- Fan, Y.; Ma, P.; Hu, Q.; Liu, G.; Guo, Z.; Tang, Y.; Wu, F.; Zhang, H. SCGC-Net: Spatial Context Guide Calibration Network for multi-source RSI Landslides detection. IEEE Trans. Geosci. Remote Sens. 2025, 63, 1–17.

- Huang, T.; Pei, X.; You, S.; Wang, F.; Qian, C.; Xu, C. LocalMamba: Visual state space model with windowed selective scan. In Proceedings of the Computer Vision—ECCV 2024 Workshops, Milan, Italy, 29 September–4 October 2024; pp. 12–22.

- Huang, Z.; Wei, Y.; Wang, X.; Liu, W.; Huang, T.S.; Shi, H. AlignSeg: Feature-aligned segmentation networks. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 550–557.

- Cheng, B.; Girshick, R.; Dollár, P.; Berg, A.C.; Kirillov, A. Boundary IoU: Improving object-centric image segmentation evaluation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 15334–15342.

- Ji, S.; Yu, D.; Shen, C.; Li, W.; Xu, Q. Landslide detection from an open satellite imagery and digital elevation model dataset using attention boosted convolutional neural networks. Landslides 2020, 17, 1337–1352.

- Zhang, X.; Yu, W.; Pun, M.-O.; Shi, W. Cross-domain landslide mapping from large-scale remote sensing images using prototype-guided domain-aware progressive representation learning. ISPRS J. Photogramm. Remote Sens. 2023, 197, 1–17.

- Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

- Xue, H.; Liu, C.; Wan, F.; Jiao, J.; Ji, X.; Ye, Q. DANet: Divergent activation for weakly supervised object localization. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6589–6598.

- Lian, S.; Luo, Z.; Zhong, Z.; Lin, X.; Su, S.; Li, S. Attention guided U-Net for accurate iris segmentation. J. Vis. Commun. Image Represent. 2018, 56, 296–304.

- Li, C.; Tan, Y.; Chen, W.; Luo, X.; Gao, Y.; Jia, X.; Wang, Z. Attention UNet++: A nested attention-aware U-Net for liver CT image segmentation. In Proceedings of the 2020 IEEE International Conference on Image Processing (ICIP), Abu Dhabi, United Arab Emirates, 25–28 October 2020; pp. 345–349.

- Chen, J.; Lu, Y.; Yu, Q.; Luo, X.; Adeli, E.; Wang, Y.; Lu, L.; Yuille, A.L.; Zhou, Y. TransUNet: Transformers make strong encoders for medical image segmentation. arXiv 2021, arXiv:2102.04306.

- Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and efficient design for semantic segmentation with transformers. arXiv 2021, arXiv:2105.15203.

| Method (Bijie) | OA (%) | P (%) | R (%) | F1 (%) | IoU (%) | BIoU (%) |
|---|---|---|---|---|---|---|
| DeepLabV3Plus | 96.85 | 85.41 | 84.12 | 84.76 | 73.55 | 62.81 |
| DANet | 97.09 | 87.29 | 84.25 | 85.74 | 75.05 | 65.93 |
| AttUnet | 96.59 | 83.62 | 83.56 | 83.59 | 71.81 | 61.22 |
| TransUnet | 94.52 | 73.40 | 74.25 | 73.82 | 58.51 | 48.00 |
| Nested Attention U-Net | 98.87 | 83.67 | 86.87 | 85.24 | 74.28 | 63.86 |
| SegFormer | 96.81 | 84.63 | 84.67 | 84.65 | 73.38 | 62.62 |
| VMUnet | 97.33 | 88.82 | 85.07 | 84.91 | 76.84 | 66.81 |
| Ours | 97.44 | 87.74 | 87.60 | 87.67 | 78.04 | 69.09 |

| Method (GVLM) | OA (%) | P (%) | R (%) | F1 (%) | IoU (%) | BIoU (%) |
|---|---|---|---|---|---|---|
| DeepLabV3Plus | 94.14 | 90.66 | 79.28 | 84.59 | 73.29 | 48.49 |
| DANet | 94.22 | 86.14 | 85.22 | 85.68 | 74.95 | 53.74 |
| AttUnet | 94.88 | 90.83 | 83.13 | 86.81 | 76.69 | 49.07 |
| TransUnet | 94.13 | 82.44 | 90.29 | 86.19 | 75.73 | 53.07 |
| Nested Attention U-Net | 95.50 | 88.98 | 88.80 | 88.89 | 80.00 | 59.00 |
| SegFormer | 94.75 | 86.23 | 88.19 | 87.20 | 77.31 | 51.03 |
| VMUnet | 95.36 | 88.65 | 88.46 | 88.56 | 79.46 | 57.85 |
| Ours | 95.66 | 87.31 | 91.98 | 89.58 | 81.13 | 62.10 |
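
The BIoU column refers to Boundary IoU (Cheng et al., listed in the references), which computes IoU only within a thin band along the inside of each mask's contour and is therefore more sensitive to boundary errors than region IoU. The NumPy sketch below is a minimal illustration, not the evaluation code used in the paper: the fixed band width `d`, the 3×3 erosion, treating pixels outside the image as background, and returning 100 when both bands are empty are our assumptions (the original formulation sets the band width as a fraction of the image diagonal), so its values are not expected to reproduce the tables.

```python
import numpy as np

def erode3(mask):
    """One step of 3x3 binary erosion; pixels outside the image count as background."""
    p = np.pad(mask, 1, mode="constant", constant_values=False)
    h, w = mask.shape
    out = np.ones_like(mask, dtype=bool)
    for di in range(3):
        for dj in range(3):
            out &= p[di:di + h, dj:dj + w]
    return out

def inner_boundary(mask, d):
    """Pixels of the mask that lie within d erosion steps of its contour."""
    mask = np.asarray(mask, dtype=bool)
    eroded = mask
    for _ in range(d):
        eroded = erode3(eroded)
    return mask & ~eroded

def boundary_iou(pred, gt, d=2):
    """Boundary IoU in % between two binary masks (assumed band width d)."""
    pb = inner_boundary(pred, d)
    gb = inner_boundary(gt, d)
    union = np.sum(pb | gb)
    return 100.0 * np.sum(pb & gb) / union if union else 100.0
```

As a usage example, two 6×6 squares offset by one pixel have a region IoU of 30/42 ≈ 71.4%, but with `d=1` their Boundary IoU is only 10/30 ≈ 33.3%, which is why BIoU separates models more sharply than IoU in the tables above.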

| Model | FLOPs (G) | Params (M) |
|---|---|---|
| DeepLabV3Plus | 20.854 | 54.708 |
| DANet | 144.637 | 65.182 |
| AttUnet | 66.632 | 34.879 |
| TransUnet | 32.633 | 66.815 |
| Nested Attention U-Net | 34.903 | 9.163 |
| SegFormer | 3.273 | 7.713 |
| VMUnet | 7.531 | 60.273 |
| Ours | 47.531 | 39.442 |
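
One reason Mamba-style blocks suit large remote sensing scenes is that the selective state-space scan of Gu and Dao (listed in the references) processes a length-L token sequence with a recurrence whose cost grows linearly in L, rather than quadratically as in full self-attention. The NumPy sketch below shows that recurrence for a single sequence. It is a simplified, sequential illustration under our own assumptions (the parameter names, shapes, softplus step size, and the omission of the convolution and gating paths of a full Mamba block), not MSCG-Net's implementation; practical implementations use a fused, hardware-aware parallel scan.

```python
import numpy as np

def softplus(z):
    """Numerically stable log(1 + exp(z))."""
    return np.log1p(np.exp(-np.abs(z))) + np.maximum(z, 0.0)

def selective_scan(x, A, W_delta, W_B, W_C, D_skip):
    """Sequential selective scan over x of shape (L, Dm).

    A: (Dm, N) with negative entries; W_delta: (Dm, Dm); W_B, W_C: (Dm, N);
    D_skip: (Dm,). Work is O(L * Dm * N), i.e. linear in sequence length L.
    """
    L, Dm = x.shape
    N = A.shape[1]
    h = np.zeros((Dm, N))                      # hidden state, one row per channel
    ys = np.empty((L, Dm))
    for t in range(L):
        xt = x[t]
        delta = softplus(xt @ W_delta)         # (Dm,) input-dependent step size
        B = xt @ W_B                           # (N,) input-dependent input projection
        C = xt @ W_C                           # (N,) input-dependent output projection
        A_bar = np.exp(delta[:, None] * A)     # (Dm, N) discretised decay
        h = A_bar * h + (delta[:, None] * B[None, :]) * xt[:, None]
        ys[t] = h @ C + D_skip * xt            # readout plus skip connection
    return ys
```

Because each step depends only on the current token and the previous state, the output at position t is unaffected by later tokens; this causality is why vision variants scan the same map in several directions, as OMSS does.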

| Exp ID | FPS | FLOPs (G) | F1 (%) | IoU (%) | BIoU (%) |
|---|---|---|---|---|---|
| A1 | 29.84 | 47.478 | 88.64 | 79.60 | 59.37 |
| A2 | 17.23 | 47.478 | 89.26 | 80.60 | 59.96 |
| A3 | 17.90 | 47.478 | 88.90 | 79.77 | 60.02 |
| A4 | 21.63 | 47.478 | 89.09 | 80.49 | 60.06 |
| A5 | 23.48 | 35.210 | 89.11 | 80.35 | 59.16 |
| A6 | 22.34 | 47.531 | 89.58 | 81.13 | 62.10 |

| Exp ID | CNN | SFA | SAHF | Param (M) | FLOPs (G) | F1 (%) | IoU (%) | BIoU (%) |

|---|---|---|---|---|---|---|---|---|

| B1 | 19.62 | 43.31 | 86.6 | 79.42 | 58.05 | |||

| B2 | √ | 39.44 | 47.53 | 88.8 | 79.92 | 58.77 | ||

| B3 | √ | √ | 39.19 | 35.26 | 89.3 | 80.67 | 59.99 | |

| B4 | √ | √ | 44.42 | 72.02 | 89.1 | 80.28 | 60.73 | |

| B5 | √ | √ | √ | 39.44 | 47.53 | 89.4 | 81.13 | 62.10 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yang, Z.; Zhang, H.; Zheng, N. A Multi-Scale Feature Fusion Dual-Branch Mamba-CNN Network for Landslide Extraction. Appl. Sci. 2025, 15, 10063. https://doi.org/10.3390/app151810063
