1. Introduction

With the continuous evolution of Internet technologies and the growing acceptance of lifelong learning, online education has gradually become a crucial channel for knowledge acquisition. In particular, MOOCs (Massive Open Online Courses), characterized by openness and high accessibility, have attracted widespread attention from learners worldwide. According to UNESCO’s 2023 Global Education Monitoring Report, the number of MOOC learners reached approximately 220 million in 2021. Leading platforms such as Coursera, edX, and XuetangX now cover a wide range of disciplines, including computer science, medicine, and art design, and their course catalogs continue to grow. While the abundance of educational resources greatly expands learners’ horizons and opportunities, it also exacerbates the problem of information overload. Many learners struggle to select courses that match their interests and needs, which hinders learning efficiency and reduces platform engagement. Although current recommendation systems have alleviated this issue to some extent, user satisfaction with the recommendations remains suboptimal [1,2]. In particular, in terms of personalized recommendation and semantic understanding, existing approaches still face significant challenges, highlighting the need for more accurate and intelligent recommendation techniques.

CF (collaborative filtering) is a classical and widely applied approach in recommendation systems. User-based CF generates recommendation lists by modeling similarities between users [3], while item-based CF captures co-occurrence relationships between items [4]. Both methods have demonstrated solid performance across various recommendation tasks. However, in the MOOC domain, the vast number of courses and the sparsity of user interactions result in a user–item interaction matrix whose sparsity often exceeds 95%, which limits the effectiveness of CF methods [5]. Such extreme sparsity significantly degrades the performance of classical collaborative filtering and matrix factorization methods, as they rely heavily on sufficient co-occurrence information to infer user preferences [6]. To mitigate the sparsity problem, matrix factorization techniques have been extensively adopted [7]. For example, ADLRS incorporates additional information sources within a matrix factorization framework and leverages language models to represent contextual information, thereby embedding key features effectively [8]. Nevertheless, traditional CF and matrix factorization methods remain fundamentally limited in capturing complex user preference dynamics [9] and fail to exploit the rich semantic information embedded in course descriptions and titles [10]. Consequently, their ability to model fine-grained relationships between courses and handle cold-start scenarios in large-scale MOOC platforms remains inadequate.

In recent years, the integration of deep learning techniques has greatly enhanced the modeling capabilities of recommendation systems. RNN (recurrent neural network)-based sequential recommendation models, such as GRU4Rec [11] and NARM [12], effectively capture temporal dependencies in user behavior sequences, thereby improving dynamic modeling of user interests. CNN (convolutional neural network)-based approaches, such as Caser [13], leverage convolutional operations to extract local patterns from short-term user interests, making them well-suited for short-horizon behavioral modeling. With the rise of the Transformer architecture, the self-attention mechanism has attracted significant attention for its ability to capture long-range dependencies. The SASRec (Self-Attentive Sequential Recommendation) model [14] employs a multi-head self-attention mechanism to capture relationships between different positions in behavior sequences, achieving state-of-the-art performance in various sequential recommendation tasks and becoming one of the mainstream models in this domain. However, these approaches primarily focus on behavioral sequences while neglecting the rich semantic information contained in course descriptions and titles, which limits their ability to model fine-grained course relationships and handle cold-start scenarios.

Despite the success of these methods in behavioral modeling, the semantic information of course content in MOOC recommendations has not been fully exploited. MOOCs typically include detailed titles and descriptions that reflect their knowledge structure and learning objectives, serving as valuable signals for characterizing course attributes. However, most recommendation models treat courses merely as discrete IDs, overlooking the value of textual semantics in understanding user interests. Early works that incorporated textual information into recommendation models primarily relied on static representations such as TF-IDF and Word2Vec, which suffer from insufficient contextual awareness and are thus limited in accurately capturing semantic distinctions between courses.

With the advancement of pre-trained language models, BERT (Bidirectional Encoder Representations from Transformers) has achieved state-of-the-art performance in numerous natural language processing tasks through its bidirectional Transformer architecture [15], demonstrating outstanding semantic understanding capabilities. Nevertheless, BERT entails high computational costs and exhibits limitations in representing infrequent words, making it less suitable for large-scale deployment in resource-constrained settings. In contrast, FastText employs subword modeling and hierarchical softmax [16], delivering strong efficiency and performance in low-resource scenarios and short-text modeling tasks. Its F1 scores on multiple text classification tasks surpass those of Word2Vec, indicating strong practical value. Given their complementary strengths, combining FastText and BERT can balance modeling depth and efficiency.

In designing the semantic modeling component, we considered several alternative architectures, including RoBERTa, DistilBERT, and ERNIE, as well as the possibility of using a single fine-tuned deep model [17,18,19]. However, given the unique characteristics of MOOC datasets, such as the large number of courses, short course titles, and frequent cold-start situations, these alternatives exhibit certain limitations. While RoBERTa achieves slightly better semantic accuracy than BERT on some NLP benchmarks, its significantly higher computational cost makes it less suitable for large-scale course recommendation [17]. DistilBERT offers faster inference but tends to lose semantic precision in understanding Chinese text, especially for domain-specific educational content [17]. Similarly, ERNIE requires large-scale domain-specific corpora to achieve competitive performance, which introduces additional training complexity [19].

To balance modeling effectiveness and computational efficiency, we adopt a dual-channel semantic encoding strategy combining FastText and BERT. FastText captures low-dimensional, dense word-level embeddings, providing stable representations for rare or unseen terms and benefiting cold-start courses with limited contextual information. BERT, on the other hand, offers deep contextualized semantic representations that enhance the understanding of course descriptions and prerequisite relationships. Integrating these complementary features enables our model to achieve better trade-offs between precision and efficiency, making it well-suited for large-scale MOOC recommendation scenarios.

To address the above challenges, this paper proposes a personalized MOOC recommendation method that integrates user behavioral sequences with course textual semantic features. First, FastText is employed to efficiently capture low-dimensional word-level semantics from course titles and descriptions, while a fine-tuned BERT model is used to extract deeper contextual semantic representations. The semantic features from both models are concatenated to form a multi-level semantic representation of course content. Next, the fused semantic features are mapped into the same vector space as the course ID embeddings via a linear projection layer and integrated with the original course ID embeddings using an additive fusion strategy, thereby enhancing the model’s semantic perception of course content. Finally, these fused features, together with users’ historical click sequences, are fed into an improved SASRec model, where multi-head self-attention layers model the evolution of user interests, producing more accurate, dynamic, and context-aware recommendations.

The main contributions of this paper are as follows: (1) Fusion strategy innovation: We propose a dual-semantic modeling mechanism that leverages FastText and fine-tuned BERT to extract course textual semantics at both shallow and deep levels. This design enhances semantic understanding while maintaining computational efficiency, addressing the challenges of short course descriptions and cold-start scenarios. (2) Modeling improvement: We design an enhanced feature fusion mechanism that integrates semantic embeddings into the SASRec framework, enabling joint modeling of user behavioral sequences and course textual semantics. This results in a behavior–semantics multimodal collaborative recommendation framework that captures both preference evolution and content relationships more effectively. (3) MOOC-specific validation: Extensive experiments on the large-scale MOOCCubeX dataset demonstrate that our method achieves significant improvements in key metrics such as NDCG and HR (hit ratio), showing strong recommendation accuracy, robustness, and generalization capability for real-world MOOC recommendation scenarios.

2. Materials and Methods

2.1. Dataset Description

The dataset used in this study is derived from the publicly available MOOCCubeX large-scale MOOC recommendation research dataset [20]. Constructed by Tsinghua University and other research institutions, MOOCCubeX is collected from major MOOC platforms in China and contains user click behavior logs, course metadata, and related teaching resource data. It is one of the most widely used benchmark datasets in the field of MOOC recommendation systems. To ensure controllable model training and enhance the specificity of experiments, we constructed a refined subset of MOOCCubeX and further augmented it by crawling additional course description texts, thereby strengthening the semantic modeling capability of course content.

During data preprocessing, we removed users with no interaction records and retained all remaining valid samples containing user behavior sequences, course IDs, and course names. Redundant or missing course information was corrected where necessary. The final dataset comprises 1,265,156 users and 632 courses. User behavior data are chronologically ordered to form individual click behavior sequences, reflecting the temporal evolution of user interests. Each course is associated with a unique course ID and a course name, covering distinct thematic and disciplinary areas such as computer science, literature, history, and psychology.

To better characterize the dataset and clarify the difficulty of the recommendation task, we provide additional descriptive statistics of user–course interactions. The dataset contains a total of 3,072,920 interactions, with an average of 2.43 clicks per user and a median of 1.0, indicating that most users interact with only a few courses. For courses, the number of clicks is highly imbalanced, with an average of 4862.22 clicks per course but a median of 1692.5, suggesting a long-tail distribution where a small fraction of popular courses attract disproportionately more interactions. Overall, the user–course interaction matrix has a sparsity of approximately 99.6%, making this a challenging large-scale recommendation scenario.
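The summary statistics above follow directly from the three reported counts. The sketch below reproduces that arithmetic; it assumes each interaction occupies a distinct user–course cell (if repeated clicks on the same course are counted, the computed sparsity is only a lower bound). The function names are illustrative.

```python
from statistics import median


def interaction_stats(n_users, n_courses, n_interactions):
    """Average clicks and matrix sparsity from aggregate counts.

    Assumes every interaction is a distinct (user, course) cell; with
    repeated clicks the returned sparsity is a lower bound.
    """
    cells = n_users * n_courses
    return {
        "clicks_per_user": n_interactions / n_users,
        "clicks_per_course": n_interactions / n_courses,
        "sparsity": 1.0 - n_interactions / cells,
    }


def per_entity_medians(click_pairs):
    """Median clicks per user and per course from raw (user, course) pairs."""
    per_user, per_course = {}, {}
    for user, course in click_pairs:
        per_user[user] = per_user.get(user, 0) + 1
        per_course[course] = per_course.get(course, 0) + 1
    return median(per_user.values()), median(per_course.values())


stats = interaction_stats(1_265_156, 632, 3_072_920)
print(round(stats["clicks_per_user"], 2))    # 2.43
print(round(stats["clicks_per_course"], 2))  # 4862.22
print(round(stats["sparsity"] * 100, 2))     # 99.62
```

With the counts reported in this section, this gives 2.43 clicks per user, 4862.22 clicks per course, and a sparsity of roughly 99.6%, matching the figures in the text. The medians require the raw click log, so `per_entity_medians` operates on `(user, course)` pairs instead.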

After integration, the dataset used in this study contains user IDs with their time-ordered course click records, as well as the corresponding course IDs, course names, domain labels, and crawled course descriptions. Domain labels are directly provided in the MOOCCubeX dataset and represent the academic fields associated with each course. These labels are treated as categorical tokens and concatenated with the course title and introduction as input to BERT. Moreover, the dataset covers real platform interaction behavior over a two-year period from January 2019 to December 2020, ensuring strong temporal continuity and behavioral diversity. By preserving user interaction characteristics while supplementing semantic information on course content, this dataset provides a reliable foundation for developing a personalized MOOC recommendation model that integrates behavioral modeling and content understanding.

To provide a more comprehensive understanding of the dataset characteristics, we further analyze the distribution of user behavior sequence lengths and the popularity of courses. Among the 1,265,156 users, the number of courses clicked by each user exhibits a highly skewed long-tail distribution. Specifically, 63.93% of users interacted with only one course, while 14.84% interacted with exactly two courses. Users with fewer than five clicks account for 88.88% of the total population, whereas those with more than 20 clicks represent less than 0.1% of all users. This indicates that the majority of users have very short behavior sequences, which highlights the sparsity of user preference data and increases the difficulty of accurately modeling user interests.

A similar long-tail effect is observed in course popularity. The most popular course (Introduction to Psychology) received 125,789 clicks, accounting for 4.09% of total interactions. The top 10 most popular courses together attracted 21.9% of all clicks, while more than 60% of courses had fewer than 1000 clicks. This severe imbalance poses significant challenges for personalized recommendation, as models may easily overfit to popular courses and struggle to recommend less popular ones effectively.
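The user-side and course-side long-tail figures above are simple shares over per-user sequence lengths and per-course click counts. The following minimal sketch assumes these counts have already been aggregated. The function names are illustrative, and the toy inputs stand in for the real MOOCCubeX counts.

```python
def user_length_shares(seq_lengths):
    """Fraction of users in each sequence-length bucket used in the text."""
    n = len(seq_lengths)
    return {
        "exactly_1": sum(1 for k in seq_lengths if k == 1) / n,
        "exactly_2": sum(1 for k in seq_lengths if k == 2) / n,
        "fewer_than_5": sum(1 for k in seq_lengths if k < 5) / n,
        "more_than_20": sum(1 for k in seq_lengths if k > 20) / n,
    }


def top_k_share(course_clicks, k):
    """Share of all clicks captured by the k most-clicked courses."""
    counts = sorted(course_clicks.values(), reverse=True)
    return sum(counts[:k]) / sum(counts)


def share_below(course_clicks, threshold):
    """Fraction of courses with fewer than `threshold` clicks."""
    counts = list(course_clicks.values())
    return sum(1 for c in counts if c < threshold) / len(counts)


# Toy example: six users and three courses.
lengths = [1, 1, 1, 2, 3, 25]
clicks = {"psych": 50, "history": 30, "poetry": 20}
print(user_length_shares(lengths)["exactly_1"])  # 0.5
print(top_k_share(clicks, 1))                     # 0.5
```

When applied to the real per-course counts, `top_k_share(clicks, 1)` should match the 4.09% reported for the most popular course (125,789 of 3,072,920 clicks). Likewise, `top_k_share(clicks, 10)` and `share_below(clicks, 1000)` correspond to the top-10 and fewer-than-1000-clicks figures.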

2.2. Data Preprocessing

To ensure the consistency and standardization of input data during model training, the raw dataset underwent a systematic cleaning and preprocessing pipeline, including user behavior sequence normalization, course text information completion, positive and negative sample generation, and conversion into structured formats.

For the user behavior sequence data, the course IDs and user IDs in the refined MOOCCubeX subset were re-encoded into continuous integers starting from zero. This facilitated the initialization and indexing operations of the embedding layers in subsequent model training. Considering that incomplete behavior records and insufficient behavior counts may affect model stability, users with missing, abnormal, or zero-length behavior sequences were removed. After cleaning, all user click behaviors were organized as time-ordered sequences of course IDs and stored in JSON format. For seamless integration with the model interface, the JSON files were subsequently converted into a structured TXT format, serving as the input for the sequential modeling module.
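The following minimal sketch covers the re-encoding and serialization steps. It assumes raw click records arrive as `(user_id, course_id, timestamp)` tuples. The text does not specify the exact JSON and TXT layouts, so this sketch writes one JSON object that maps users to sequences, plus one `user_idx course_idx` pair per TXT line in chronological order, a common layout for SASRec-style data loaders. All names are illustrative.

```python
import json


def encode_and_serialize(click_log):
    """Re-encode IDs to contiguous integers from 0 and serialize sequences.

    click_log: iterable of (raw_user_id, raw_course_id, timestamp).
    Records with a missing field are dropped, so users without any
    valid click never receive an index (zero-length sequences vanish).
    """
    by_user = {}
    for user, course, ts in click_log:
        if user is None or course is None or ts is None:
            continue  # abnormal record
        by_user.setdefault(user, []).append((ts, course))

    user_index, course_index, sequences = {}, {}, {}
    for user in sorted(by_user):  # deterministic user numbering
        uid = user_index.setdefault(user, len(user_index))
        # Stable sort by timestamp gives the time-ordered click sequence.
        events = sorted(by_user[user], key=lambda e: e[0])
        sequences[uid] = [
            course_index.setdefault(course, len(course_index))
            for _, course in events
        ]

    json_text = json.dumps({str(u): seq for u, seq in sequences.items()})
    txt_text = "\n".join(f"{u} {c}" for u, seq in sequences.items() for c in seq)
    return user_index, course_index, json_text, txt_text
```

This encoding starts at 0, as the text describes. An implementation that reserves index 0 for sequence padding, as many SASRec implementations do, would need to shift every course index by one.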

During the automated collection of course descriptions from multiple MOOC platforms, we encountered several cases of duplicate, missing, or conflicting textual information. To ensure the accuracy and consistency of the corpus, a systematic deduplication and disambiguation process was conducted. First, exact duplicates were identified based on character-level similarity after normalization and were removed to avoid redundancy. In cases where multiple versions of a course description were found across different platforms, we prioritized the official description provided by the platform on which the course was originally hosted, ensuring the semantic accuracy of the textual content. If a course lacked a valid description, supplementary information was retrieved from other open educational resources whenever available, thereby minimizing missing data and preserving the completeness of the corpus.
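The deduplication and source-priority rules above can be sketched as follows. The sketch assumes the crawled candidates are grouped per course as `(platform, description)` pairs and that the original hosting platform of each course is known. The normalization used here (Unicode NFKC, collapsed whitespace, lowercasing) is an assumed definition of "after normalization", and the function names are hypothetical.

```python
import unicodedata


def normalize(text):
    """Normalization used for exact-duplicate detection (assumed definition)."""
    text = unicodedata.normalize("NFKC", text)
    return " ".join(text.split()).lower()


def resolve_descriptions(candidates, host_platform):
    """Pick one description per course.

    candidates: course_id -> list of (platform, description) as crawled.
    host_platform: course_id -> platform that originally hosts the course.
    Courses without a usable description are omitted so that a later
    backfill step can look them up in other open resources.
    """
    resolved = {}
    for course_id, versions in candidates.items():
        seen, unique = set(), []
        for platform, desc in versions:
            key = normalize(desc)
            if key and key not in seen:  # drop empty texts and exact duplicates
                seen.add(key)
                unique.append((platform, desc.strip()))
        if not unique:
            continue
        host = host_platform.get(course_id)
        official = [d for p, d in unique if p == host]
        # Prefer the hosting platform's text; otherwise keep the first version.
        resolved[course_id] = official[0] if official else unique[0][1]
    return resolved
```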

To manage the large-scale retrieval and normalization process efficiently, we developed automated scanning scripts in Python 3.9.18. These scripts queried major MOOC platforms such as XuetangX and iCourse based on course titles, retrieved corresponding course descriptions via secure HTTP requests, and performed standardized text cleaning operations. Specifically, HTML tags, special symbols, and redundant whitespace were removed, punctuation styles were unified, and character encoding was normalized. Finally, a one-to-one mapping between course IDs, titles, and descriptions was constructed, ensuring that each course was associated with a unique, unambiguous textual representation. This automated pipeline significantly improved data quality, reduced semantic ambiguity, and provided a robust textual foundation for extracting course-level semantic features in the proposed recommendation model.
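The following minimal sketch implements the text-cleaning step using only the Python standard library. The text does not state which punctuation style the pipeline unified to. This sketch assumes NFKC normalization, which maps full-width ASCII punctuation such as the full-width comma '，' to ',' and non-breaking spaces to ordinary spaces, while leaving CJK ideographs unchanged. The regular expressions are illustrative and are not the authors' scripts.

```python
import html
import re
import unicodedata

TAG_RE = re.compile(r"<[^>]+>")                           # HTML/XML tags
INVISIBLE_RE = re.compile("[\u200b\u200c\u200d\ufeff]")  # zero-width characters and BOM


def clean_description(raw):
    """Strip HTML, decode entities, normalize width/encoding, collapse whitespace."""
    text = TAG_RE.sub(" ", raw)                 # remove tags before decoding entities
    text = html.unescape(text)                  # &amp; -> &, &nbsp; -> U+00A0
    text = unicodedata.normalize("NFKC", text)  # full-width -> half-width, U+00A0 -> space
    text = INVISIBLE_RE.sub("", text)           # drop invisible characters
    return " ".join(text.split())               # collapse runs of whitespace
```

Tags are removed before entities are decoded, so an escaped `&lt;` that belongs to the course text is kept as a literal '<' and not treated as markup.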

For the course text data, the original dataset only provided course IDs and titles without detailed semantic content. To enrich the model’s understanding of course information, an automated web crawler was implemented based on course titles to retrieve corresponding course descriptions from multiple open educational platforms, including XuetangX and iCourse. The crawled text was standardized by removing HTML tags, unifying punctuation styles, and normalizing formatting. This process resulted in a one-to-one mapping table between course IDs, titles, and descriptions, aligned with the re-encoded course indices, thereby forming a comprehensive semantic feature repository for courses.

For training sample generation, we adopted a positive–negative sampling strategy based on users’ historical behaviors. Specifically, for each user, courses already clicked were labeled as positive samples, while negative samples were randomly drawn from the pool of courses not clicked by the user. Each user–course interaction was then transformed into a triplet sample containing the user ID, a positive course (title and description), and a negative course (title and description). This step was implemented using a Python program, which first constructed a mapping from course IDs to course information (title and description) and then iterated through user click logs to produce the triplet training data. The final triplets were stored in a structured CSV format, with each sample containing both positive and negative course titles and descriptions for subsequent input into the FastText and BERT semantic modeling modules.
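The following minimal sketch shows triplet construction with uniform random negatives. It assumes `user_clicks` maps each user to the list of course IDs they clicked and `course_info` maps course IDs to `(title, description)`. It draws one negative per positive, matching the one-negative-per-triplet layout described above. The CSV column names are hypothetical.

```python
import csv
import io
import random


def build_triplets(user_clicks, course_info, seed=0):
    """One (user, positive course, negative course) row per clicked course.

    Negatives are drawn uniformly at random from courses the user never clicked.
    """
    rng = random.Random(seed)
    all_courses = sorted(course_info)
    rows = []
    for user, clicked in user_clicks.items():
        clicked_set = set(clicked)
        pool = [c for c in all_courses if c not in clicked_set]
        if not pool:
            continue  # user clicked every course; no negative available
        for pos in clicked:
            neg = rng.choice(pool)
            pos_title, pos_desc = course_info[pos]
            neg_title, neg_desc = course_info[neg]
            rows.append((user, pos_title, pos_desc, neg_title, neg_desc))
    return rows


def triplets_to_csv(rows):
    """Serialize triplets with a header row (column names are hypothetical)."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["user_id", "pos_title", "pos_desc", "neg_title", "neg_desc"])
    writer.writerows(rows)
    return buf.getvalue()
```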

While alternative negative sampling strategies, such as popularity-based sampling and hard-negative mining, could potentially provide more informative training signals, we chose uniform random sampling for its simplicity and computational efficiency. We acknowledge that this choice may have introduced bias, as it did not prioritize more challenging negative samples, and we leave exploration of alternative sampling methods for future work.

Through this preprocessing workflow, we successfully constructed a unified, semantically enriched training dataset that preserves user behavior sequences while incorporating course-level textual semantic features, providing a solid foundation for building the proposed behavior–semantics fusion personalized recommendation model.

2.3. Design of the Recommendation Model Framework for Integrating User Behavior Sequences and Text Semantics

To alleviate the problems of user behavior sparsity and insufficient content understanding in MOOC platforms, this paper designs a personalized recommendation model that integrates user behavior sequences and course text semantic features based on the SASRec model. On the basis of the original sequential modeling mechanism, this method introduces a dual-channel semantic encoding method to model course text so that the recommendation results not only rely on the user’s click history but also have the ability to deeply understand the course content, thereby improving the accuracy and interpretability of recommendations.

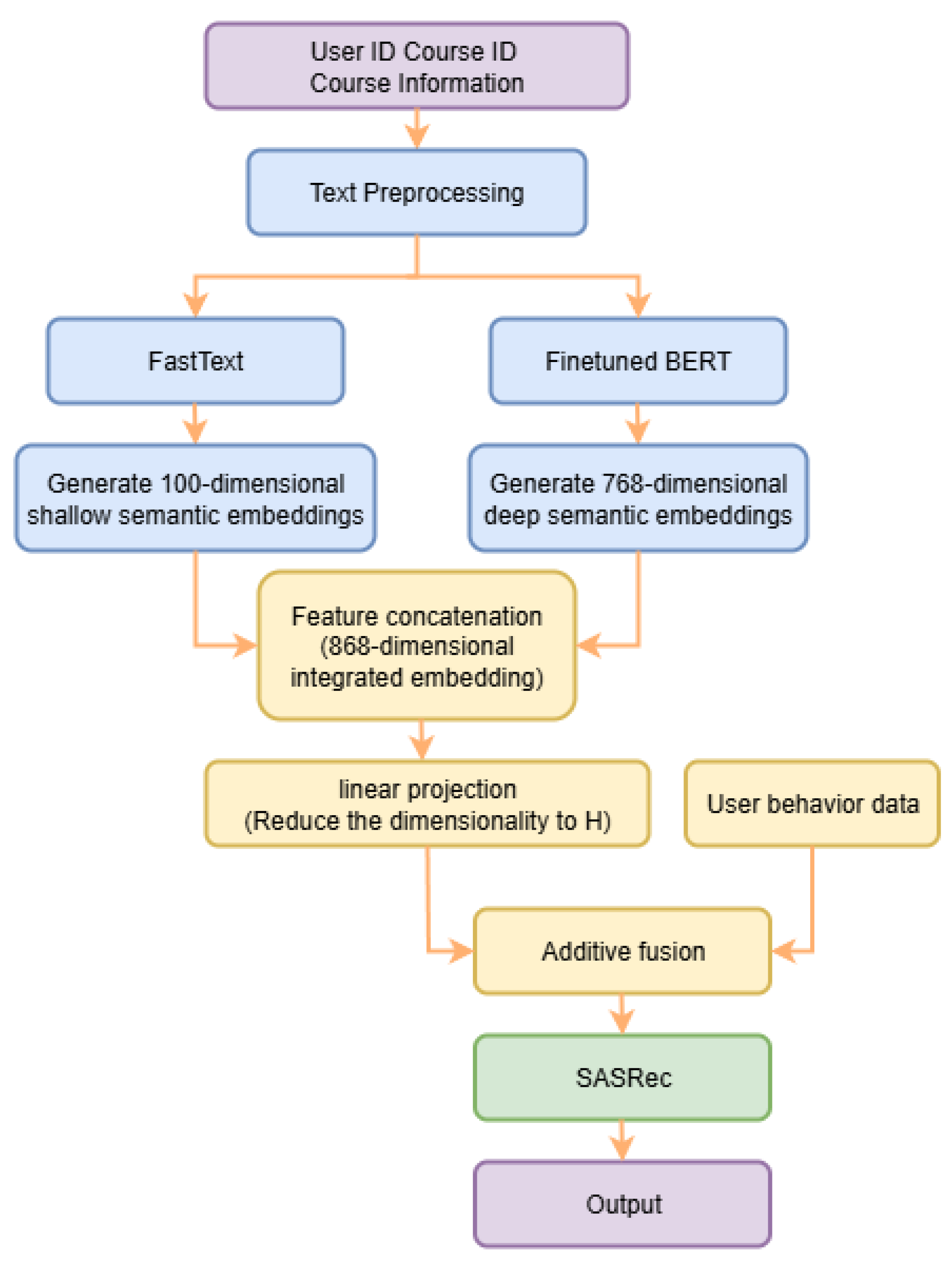

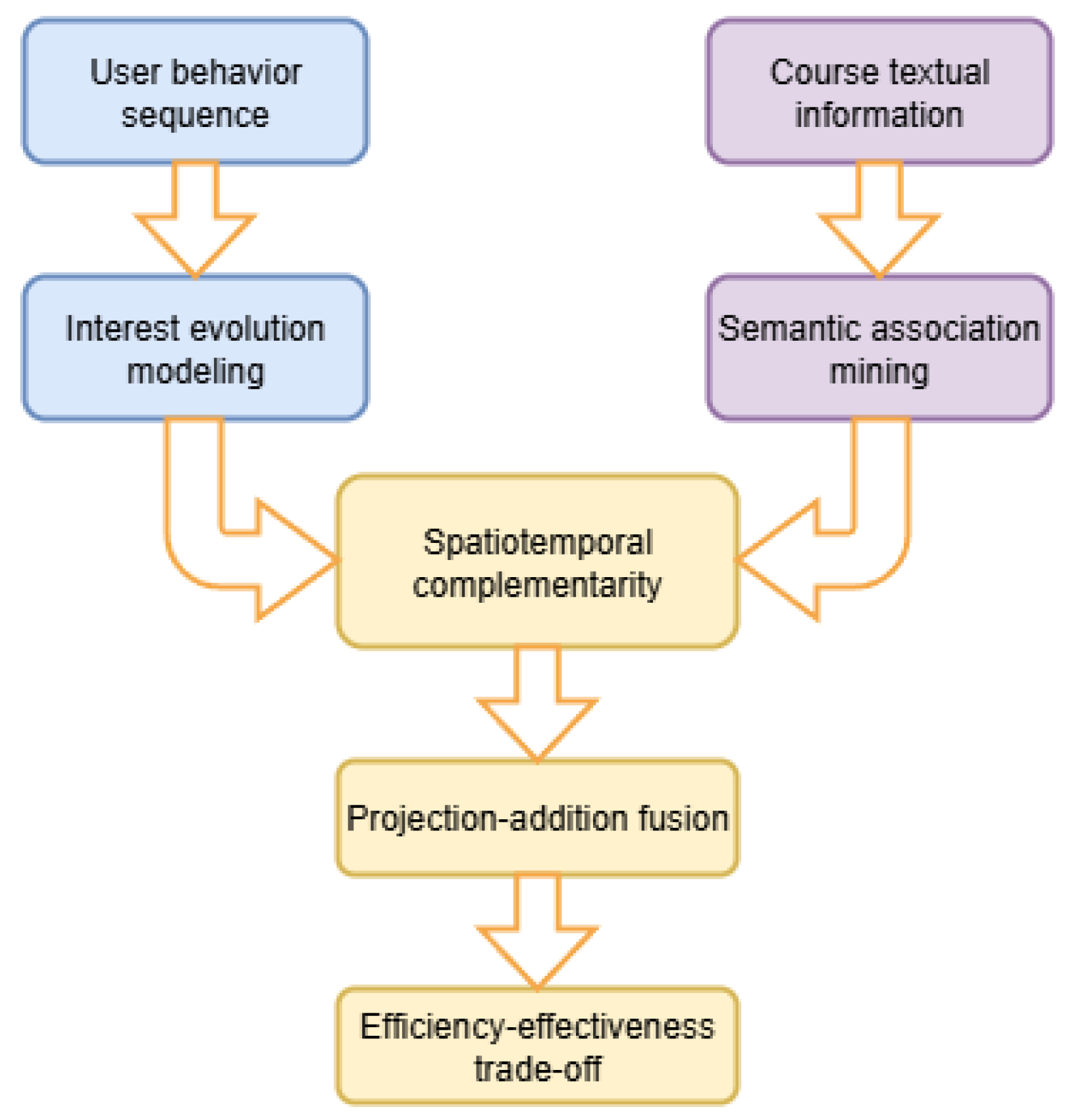

The overall structure of the model includes three main modules: the course text semantic modeling module, the feature fusion module, and the sequence modeling and prediction module, as shown in Figure 1.

In terms of course semantic modeling, this paper uses FastText and a fine-tuned BERT model to extract semantic representations of course description texts. FastText, based on a subword modeling mechanism and hierarchical softmax, can efficiently capture shallow semantic features in low-resource scenarios, whereas BERT relies on the Transformer structure to model context and has a stronger capacity for deep semantic understanding. To adapt BERT to the recommendation task, this paper fine-tunes it in a supervised manner on course click behavior samples with a pairwise ranking loss, so that its output better reflects users’ interest preferences.

In the feature fusion part, this paper concatenates the course semantic vectors extracted by FastText and BERT to form a course representation vector containing multi-level semantic information, $\mathbf{s}_c$. To keep its dimensionality consistent with the course ID embedding $\mathbf{e}_c^{\mathrm{id}} \in \mathbb{R}^{H}$, this paper introduces a linear projection layer that maps $\mathbf{s}_c$ to the same dimension, $H$, as the ID embedding, obtaining $\tilde{\mathbf{s}}_c \in \mathbb{R}^{H}$. Finally, the projected semantic representation $\tilde{\mathbf{s}}_c$ and the ID embedding $\mathbf{e}_c^{\mathrm{id}}$ are fused through element-wise addition to form the final course representation:

$$\mathbf{e}_c = \mathbf{e}_c^{\mathrm{id}} + \tilde{\mathbf{s}}_c \tag{1}$$

The representation in Equation (1) is used as the embedding input of each item in the user behavior sequence and is fed into the sequence modeling module.

In the sequence modeling part, the model adopts the original SASRec structure, using multi-layer, multi-head self-attention to model the user behavior sequence and capture the dynamic evolution of user interests over time. Positional encodings are added to the course representations to retain the order information of the behavior sequence. SASRec finally outputs the user’s interest representation at the current moment and matches it against the representations of candidate courses to produce recommendation predictions.

During training, the model received the user behavior sequence, course IDs, and text semantic information of positive and negative samples and was optimized using the binary cross-entropy loss function with negative sampling, jointly modeling user behavior and course semantic features. The dataset was split chronologically, with the most recent 20% of each user’s click sequence reserved for validation and testing and the remaining 80% used for training. Early stopping was applied based on the validation NDCG@K and HR@K to prevent overfitting, and hyperparameters were chosen to balance convergence speed and model performance.
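The following minimal sketch covers the chronological split and the HR@K/NDCG@K metrics used for early stopping. The text does not say how the held-out 20% is divided between validation and testing, or how very short sequences are handled, although most users have only a single click. This sketch therefore assumes that at least one click stays in training and that the earlier half of the held-out clicks goes to validation. Both metrics are computed for a single target item, the usual leave-one-out form. The early stopper monitors one score (for example, NDCG@K), and its `patience` value is a hypothetical setting.

```python
import math


def chrono_split(seq, train_frac=0.8):
    """Split one user's time-ordered clicks into (train, valid, test).

    Assumptions: at least one click stays in training, and the earlier
    half of the held-out clicks is used for validation.
    """
    cut = max(1, math.floor(len(seq) * train_frac))
    held = seq[cut:]
    half = math.ceil(len(held) / 2)
    return seq[:cut], held[:half], held[half:]


def hr_ndcg_at_k(ranked, target, k):
    """HR@K and NDCG@K for a single held-out target item."""
    top = ranked[:k]
    if target not in top:
        return 0.0, 0.0
    rank = top.index(target)  # 0-based position in the ranked list
    return 1.0, 1.0 / math.log2(rank + 2)


class EarlyStopper:
    """Stop after `patience` evaluations without a new best validation score."""

    def __init__(self, patience=3):
        self.best = -math.inf
        self.patience = patience
        self.bad_rounds = 0

    def step(self, score):
        if score > self.best:
            self.best, self.bad_rounds = score, 0
            return False
        self.bad_rounds += 1
        return self.bad_rounds >= self.patience
```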

In summary, the recommendation framework proposed in this paper integrates the course semantic information extracted by FastText and BERT into the traditional sequential modeling and combines them into the user behavior modeling process through projection and additive fusion, realizing deep collaboration between user behavior and course content understanding, thereby significantly improving personalized recommendation performance in MOOC platforms.

2.4. Detailed Core Modules

To comprehensively enhance the performance of the MOOC recommendation system, the proposed model incorporates semantic enhancement mechanisms and structural optimizations into several key modules. Specifically, these include the course text semantic modeling module, the feature fusion module, the sequence modeling module, and the loss function and training strategy. The technical details of each component are described below.

2.4.1. Course Text Semantic Modeling Module

To effectively capture the semantic features of MOOC course content, this paper proposes a dual-channel semantic encoding mechanism combining the FastText and BERT models. This module takes the course title, domain labels, and introduction as the basic textual inputs, learns embeddings from the perspectives of local subword structures and contextual dynamic semantics, and constructs the final semantic representation of the course through vector concatenation.

For shallow semantic modeling, the FastText model is used to train course text representations. Let the textual representation of course $c$ be $T_c$. FastText embeds each word and its subword n-grams in the text and averages them to construct a low-dimensional semantic vector:

$$\mathbf{v}_c^{\mathrm{FT}} = \frac{1}{|G_c|} \sum_{g \in G_c} \mathbf{z}_g$$

where $G_c$ denotes the set of all subwords in the course text $T_c$, and $\mathbf{z}_g$ is the embedding vector of the subword $g$. The FastText embeddings trained in this work have a dimensionality of 100, providing good training efficiency and vocabulary coverage, making them suitable for large-scale course modeling scenarios.
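To make the averaging concrete, the sketch below builds the subword set for whitespace-tokenized text and averages one vector per subword. Chinese text would first need word segmentation. Following FastText, each word is wrapped in `<` and `>` and split into character n-grams of length 3 to 6, and n-grams are hashed into a fixed number of buckets. Several details are simplified. The hash is plain 32-bit FNV-1a, the bucket count is a hypothetical small value, and the word token is hashed like any other subword, whereas FastText keeps a separate word vocabulary. The vectors are also random rather than trained, so the sketch shows only the shape of the computation.

```python
import random

DIM = 100         # matches the 100-dimensional FastText vectors in the text
BUCKETS = 10_000  # hypothetical; real FastText models use far more buckets
_cache = {}


def fnv1a_32(s):
    """32-bit FNV-1a hash of the UTF-8 bytes of s."""
    h = 0x811C9DC5
    for byte in s.encode("utf-8"):
        h ^= byte
        h = (h * 0x01000193) & 0xFFFFFFFF
    return h


def subwords(word, minn=3, maxn=6):
    """The bracketed word itself plus its character n-grams."""
    token = f"<{word}>"
    grams = {token}
    for n in range(minn, maxn + 1):
        for i in range(len(token) - n + 1):
            grams.add(token[i:i + n])
    return grams


def gram_vector(gram):
    """Deterministic random vector for the hash bucket of `gram` (untrained)."""
    bucket = fnv1a_32(gram) % BUCKETS
    if bucket not in _cache:
        rng = random.Random(bucket)
        _cache[bucket] = [rng.uniform(-0.1, 0.1) for _ in range(DIM)]
    return _cache[bucket]


def text_vector(text):
    """Average of the vectors of all distinct subwords in the text."""
    grams = set()
    for word in text.split():
        grams |= subwords(word)
    if not grams:
        return [0.0] * DIM
    total = [0.0] * DIM
    for g in sorted(grams):  # fixed order keeps the float sum reproducible
        for k, x in enumerate(gram_vector(g)):
            total[k] += x
    return [x / len(grams) for x in total]
```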

For deep semantic modeling, this paper introduces a BERT model (BERT-base-Chinese) and fine-tunes it using user click behaviors to learn deep contextual semantic representations of courses. We checked the supplementary course descriptions and confirmed that over 98% of the content is in Chinese. Therefore, we adopt BERT-base-Chinese for text encoding, which ensures efficient tokenization and semantic understanding. Non-Chinese content is either filtered out or normalized during preprocessing. The course title, domain labels, and introduction are concatenated into the text $T_c$ and encoded using the pre-trained BERT model, with the $[\mathrm{CLS}]$ token vector taken as the course representation:

$$\mathbf{v}_c^{\mathrm{BERT}} = \mathrm{BERT}(T_c)_{[\mathrm{CLS}]} \in \mathbb{R}^{768}$$

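In addition, to strengthen semantic discriminability, triplet training samples $(u, c^{+}, c^{-})$ are constructed from users’ historical click behaviors, where $c^{+}$ is a course clicked by user $u$ (positive sample) and $c^{-}$ is a course not clicked (negative sample). By maximizing the margin between the cosine similarities of positive and negative samples, the BERT model is guided to learn discriminative semantic features. The training objective is defined as

$$\mathcal{L}_{\mathrm{tri}} = \max\Big(0,\; m - \cos\big(\mathbf{h}_u, f_\theta(T_{c^{+}})\big) + \cos\big(\mathbf{h}_u, f_\theta(T_{c^{-}})\big)\Big)$$

where $\mathbf{h}_u$ denotes the anchor representation of user $u$ in the triplet, $m$ is the margin hyperparameter controlling the minimum similarity difference between positive and negative samples, and $f_\theta(\cdot)$ denotes the fine-tuned BERT output representation.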
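The margin objective can be checked numerically with a small sketch. It assumes the user-side anchor of each triplet is already available as a vector, since the text does not detail how the anchor is formed, and it uses a hypothetical margin of 0.2. In actual fine-tuning, these vectors would be BERT outputs and the loss would be backpropagated through the encoder.

```python
import math


def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def triplet_cosine_loss(anchor, positive, negative, margin=0.2):
    """Hinge loss: zero once cos(anchor, pos) exceeds cos(anchor, neg) by `margin`."""
    return max(0.0, margin - cosine(anchor, positive) + cosine(anchor, negative))


def batch_loss(triplets, margin=0.2):
    """Mean hinge loss over (anchor, positive, negative) triplets."""
    return sum(triplet_cosine_loss(a, p, n, margin) for a, p, n in triplets) / len(triplets)
```

A well-separated triplet contributes nothing to the loss. A reversed triplet contributes the full margin plus the similarity gap, so gradients concentrate on courses whose text is not yet distinguished from clicked ones.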

Next, the 100-dimensional vector output from FastText and the 768-dimensional vector output from BERT are concatenated to form the course text representation vector that integrates shallow and deep semantics:

$$\mathbf{s}_c = \big[\mathbf{v}_c^{\mathrm{FT}};\, \mathbf{v}_c^{\mathrm{BERT}}\big] \in \mathbb{R}^{868}$$

To ensure dimensional consistency with the course ID embedding, a linear projection layer is introduced in the feature fusion module to project $\mathbf{s}_c$ to the same dimensionality $H$ as the ID embedding, obtaining $\tilde{\mathbf{s}}_c \in \mathbb{R}^{H}$ for subsequent additive fusion. This semantic embedding captures multi-granularity semantic features of course content while providing rich contextual information for behavior modeling.

In designing the semantic modeling module, we evaluated several potential alternatives, including advanced architectures such as RoBERTa, DistilBERT, and ERNIE, as well as the possibility of using a single fine-tuned deep model. However, the characteristics of MOOC datasets—large-scale course collections, short and sparse textual descriptions, and frequent cold-start scenarios—impose specific constraints on model selection.

RoBERTa generally achieves slightly better semantic accuracy than BERT on standard NLP benchmarks, but its substantially higher computational and memory requirements make it less practical for large-scale course recommendation. DistilBERT, while more lightweight and faster, tends to lose semantic precision for Chinese educational content, especially when dealing with technical terminology. Similarly, ERNIE requires large-scale domain-specific corpora to reach competitive performance, which introduces significant training overhead.

To balance semantic representation quality and computational efficiency, we adopt a dual-channel encoding strategy that integrates FastText and BERT. FastText efficiently captures low-dimensional, dense word-level embeddings, providing stable representations for rare terms and cold-start courses with limited context. BERT complements this by learning deep, context-aware semantic features that enhance understanding of course descriptions and prerequisite relationships. This combination leverages the complementary strengths of shallow and deep semantic models, achieving a more effective trade-off between performance and efficiency while remaining well-suited for large-scale MOOC recommendation tasks.

2.4.2. Feature Fusion Module

To achieve unified modeling of course semantic information and discrete ID information, this paper introduces a feature fusion module based on course text semantic modeling, integrating course content features and structured information into a unified representation vector as the input for subsequent sequence modeling.

In this study, we adopt an additive fusion strategy to combine the course semantic features and ID embeddings. Compared with concatenation followed by a multi-layer perceptron or attention-based mechanisms, the additive fusion strategy has been shown in prior studies to achieve competitive performance while maintaining computational efficiency and model simplicity [21,22]. Concatenation-based methods generally increase the parameter count and the risk of overfitting, while attention-based fusion often requires substantial computational overhead. Considering the large-scale nature of the MOOC dataset, we strike a balance between accuracy and efficiency by choosing additive fusion.

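First, the course $c$ is mapped through an embedding layer into a fixed-dimensional vector, $\mathbf{e}_c^{\mathrm{id}} \in \mathbb{R}^{H}$, representing the discrete structural features of the course. At the same time, after dual-channel modeling with FastText and fine-tuned BERT, the course text yields a concatenated semantic vector, $\mathbf{s}_c \in \mathbb{R}^{868}$.

To ensure consistency with the ID embedding dimension, the semantic vector is mapped via a linear projection layer, $\mathbf{W}_p \in \mathbb{R}^{H \times 868}$:

$$\tilde{\mathbf{s}}_c = \mathbf{W}_p \mathbf{s}_c$$

The final fused representation of the course is defined as

$$\mathbf{e}_c = \mathbf{e}_c^{\mathrm{id}} + \tilde{\mathbf{s}}_c$$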

This additive fusion method is simple and efficient, avoiding the introduction of additional nonlinear transformations, preserving the original structural information of the semantic vector, and enhancing its interpretability and generalization capability in the recommendation task.
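The following minimal plain-Python sketch implements the pipeline of concatenation, linear projection, and element-wise addition. It assumes a projection with a zero-initialized bias term, since the text does not say whether the linear layer has one, and it uses randomly initialized weights in place of learned ones. `AdditiveFusion` and its arguments are illustrative names, and `hidden` stands for the ID-embedding dimension H.

```python
import math
import random


class AdditiveFusion:
    """e_c = e_id + W_p [v_ft ; v_bert] + b_p (bias term assumed, zero-initialized)."""

    def __init__(self, ft_dim=100, bert_dim=768, hidden=64, seed=0):
        rng = random.Random(seed)
        in_dim = ft_dim + bert_dim
        bound = 1.0 / math.sqrt(in_dim)  # uniform init scaled by fan-in
        self.W = [[rng.uniform(-bound, bound) for _ in range(in_dim)] for _ in range(hidden)]
        self.b = [0.0] * hidden

    def project(self, s):
        """Linear map from the concatenated vector (868-d by default) to H dims."""
        return [sum(w * x for w, x in zip(row, s)) + bias for row, bias in zip(self.W, self.b)]

    def __call__(self, id_emb, ft_vec, bert_vec):
        s = list(ft_vec) + list(bert_vec)  # concatenation [v_ft ; v_bert]
        if len(s) != len(self.W[0]) or len(id_emb) != len(self.W):
            raise ValueError("dimension mismatch")
        return [e + p for e, p in zip(id_emb, self.project(s))]  # element-wise addition
```

Compared with concatenating the ID embedding and the semantic vector before an MLP, this design adds no H x H block of weights. The only extra parameters are those of the 868 x H projection.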

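For the user behavior sequence $S_u = (c_1, c_2, \ldots, c_n)$, the model sequentially takes the fused representation $\mathbf{e}_{c_t}$ of each course to construct the input sequence $\mathbf{E} = [\mathbf{e}_{c_1}, \mathbf{e}_{c_2}, \ldots, \mathbf{e}_{c_n}]$. To model the temporal evolution of user interests, a positional encoding matrix, $\mathbf{P} = [\mathbf{p}_1, \mathbf{p}_2, \ldots, \mathbf{p}_n]$, is added to the input sequence, yielding the final sequence input:

$$\mathbf{X}^{(0)} = \mathbf{E} + \mathbf{P}$$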

This feature sequence is then fed into the subsequent multi-layer self-attention mechanism for sequence modeling, in order to capture contextual relationships between courses and dynamic changes in user preferences.
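The following minimal sketch shows one way to assemble the sequence input. It assumes learned positional embeddings, as in SASRec, a fixed maximum length with left zero-padding, and truncation to the most recent clicks. `build_sequence_input` and its arguments are hypothetical names.

```python
def build_sequence_input(course_seq, fused, positions, max_len):
    """Return a max_len x H matrix: fused course vectors plus positional vectors.

    course_seq: time-ordered course indices; fused: index -> H-dim vector;
    positions: max_len learned position vectors. Clicks older than the last
    max_len are dropped, and short sequences are left-padded with zero rows.
    """
    if max_len < 1:
        raise ValueError("max_len must be positive")
    recent = course_seq[-max_len:]
    dim = len(positions[0])
    pad = max_len - len(recent)
    rows = [[0.0] * dim for _ in range(pad)]  # padding slots stay zero
    for offset, course in enumerate(recent):
        pos_vec = positions[pad + offset]
        rows.append([e + p for e, p in zip(fused[course], pos_vec)])
    return rows
```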

2.4.3. Sequence Modeling Module

To model user behavior patterns on the MOOC platform, this paper constructs a sequence modeling module based on SASRec, leveraging multi-layer self-attention mechanisms to capture the dynamic evolution of user interests and matching them with candidate courses to achieve personalized recommendations.

This module takes as input the fused representation sequence output by the feature fusion module, where each position’s representation is the sum of the course ID embedding and the projected text semantic vector, plus the positional encoding.

The input sequence is processed sequentially through multi-layer self-attention modules and feed-forward neural networks. In each layer, the input is first mapped onto query (Q), key (K), and value (V) vectors, and the attention distribution is computed to capture the correlations between different courses in the sequence. Then, residual connections and feed-forward networks are applied for nonlinear transformation, and Layer Normalization is introduced to stabilize the training process. The entire process can be expressed as

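$$\mathbf{S}^{(i)} = \mathrm{LayerNorm}\big(\mathbf{H}^{(i-1)} + \mathrm{MultiHead}(\mathbf{H}^{(i-1)})\big), \qquad \mathbf{H}^{(i)} = \mathrm{LayerNorm}\big(\mathbf{S}^{(i)} + \mathrm{FeedForward}(\mathbf{S}^{(i)})\big),$$

where $\mathbf{H}^{(i-1)}$ denotes the input of the $i$-th layer (the first layer receives the position-encoded input sequence), MultiHead represents the multi-head attention mechanism, and FeedForward represents the feed-forward network. Finally, the output of the stacked $L$ layers, $\mathbf{H}^{(L)}$, represents the user’s interest representation at the current time.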
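To make the layer structure concrete, here is a single-head, bias-free sketch of one self-attention layer with residual connections, Layer Normalization, and a ReLU feed-forward network. It assumes the causal masking of the original SASRec (each position attends only to itself and earlier positions), which the text does not spell out, and it omits padding masks, dropout, and learned LayerNorm scale and shift; all weight matrices are hypothetical. The text uses multiple heads; one head keeps the sketch short.

```python
import math


def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def layer_norm(x, eps=1e-6):
    """Normalize a vector to zero mean and unit variance (no learned scale/shift)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def causal_self_attention(H, Wq, Wk, Wv):
    """Single-head scaled dot-product attention where position t sees positions 0..t."""
    Q = [matvec(Wq, h) for h in H]
    K = [matvec(Wk, h) for h in H]
    V = [matvec(Wv, h) for h in H]
    d = len(K[0])
    out = []
    for t in range(len(H)):
        scores = [sum(q * k for q, k in zip(Q[t], K[s])) / math.sqrt(d) for s in range(t + 1)]
        top = max(scores)
        weights = [math.exp(a - top) for a in scores]  # softmax, shifted for stability
        total = sum(weights)
        out.append([sum(w * V[s][j] for s, w in enumerate(weights)) / total
                    for j in range(len(V[0]))])
    return out


def attention_layer(H, Wq, Wk, Wv, W1, W2):
    """One layer: S = LN(H + Attn(H)), then output = LN(S + W2 ReLU(W1 S)) per position."""
    A = causal_self_attention(H, Wq, Wk, Wv)
    S = [layer_norm([h + a for h, a in zip(h_row, a_row)]) for h_row, a_row in zip(H, A)]
    output = []
    for s in S:
        hidden = [max(0.0, v) for v in matvec(W1, s)]
        output.append(layer_norm([a + b for a, b in zip(s, matvec(W2, hidden))]))
    return output


# Toy 3-dim inputs with identity weights (hypothetical; real weights are learned).
I3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
H0 = [[1.0, 0.0, 0.5], [0.0, 1.0, 0.2], [0.3, 0.3, 1.0]]
H1 = attention_layer(H0, I3, I3, I3, I3, I3)
user_repr = H1[-1]  # latest-interest representation from the last position
```

Stacking layers means feeding `H1` into another `attention_layer` call; with causal masking, changing a later course never alters the outputs at earlier positions.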

In the recommendation phase, the model scores and ranks candidate courses by the similarity between the output at the last position of the sequence (representing the latest interest) and the fused representation of each candidate course. Let the user representation at the current time $t$ be $\mathbf{u}_t$ and the fused representation of a candidate course $j$ be $\mathbf{h}_j$. The recommendation score is computed by the inner product:

$$r_{u,j} = \mathbf{u}_t^{\top} \mathbf{h}_j.$$
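Scoring and ranking by inner product can be sketched as follows; the user vector and candidate embeddings are made-up toy values, and the function names are illustrative only.

```python
def score(u, h):
    """Inner-product recommendation score between user and course vectors."""
    return sum(a * b for a, b in zip(u, h))


def top_k(u, candidates, k):
    """Return the ids of the k highest-scoring candidate courses."""
    return sorted(candidates, key=lambda cid: score(u, candidates[cid]), reverse=True)[:k]


user_vec = [0.5, 1.0]  # hypothetical user representation
candidates = {"c1": [1.0, 0.0], "c2": [0.0, 1.0], "c3": [1.0, 1.0]}
print(top_k(user_vec, candidates, k=2))  # ['c3', 'c2']
```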

To train the model, this paper adopts a binary cross-entropy loss over (user sequence, positive course, negative course) triplets, raising the similarity between the user’s interest representation and positive samples while lowering it for negative samples.

In summary, the sequence modeling module not only effectively captures the temporal dynamics of user interests but also deeply integrates the semantic content of courses, providing a more expressive representation foundation for the recommendation task.

2.4.4. Loss Function and Training Strategy

In this study, the training objective of the model is to separate the predicted scores of courses the user is interested in from those of courses the user is not interested in, by optimizing a loss function based on BCE (binary cross-entropy): scores of clicked courses are pushed up and scores of unclicked courses are pushed down. Specifically, for each user click behavior sequence, the model simultaneously predicts a positive sample (a course the user has clicked) and one or more negative samples (courses the user has not clicked) to learn the user’s true preferences.
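One way to build training examples with sampled negatives is sketched below. Uniform sampling without replacement, the `num_neg` setting, and the helper name are assumptions for illustration; the text only states that one or more unclicked courses are sampled for each positive.

```python
import random


def build_training_triples(sequence, all_courses, num_neg=1, seed=0):
    """Pair each prefix of a click sequence with its next course and sampled negatives.

    Negatives are drawn uniformly, without replacement, from courses the user
    never clicked.
    """
    rng = random.Random(seed)
    clicked = set(sequence)
    pool = [c for c in all_courses if c not in clicked]
    triples = []
    for t in range(1, len(sequence)):
        negatives = rng.sample(pool, num_neg)
        triples.append((sequence[:t], sequence[t], negatives))
    return triples


clicks = [3, 1, 4]  # hypothetical chronological click history
triples = build_training_triples(clicks, all_courses=list(range(10)), num_neg=2)
# Each entry: (history prefix, positive next course, [negative courses])
```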

In our model, the user representation $\mathbf{u}_t$ is dynamically constructed from the user’s historical interaction sequence. Specifically, for a user $u$, we first obtain the final representation $\mathbf{h}_{v_t}$ of each course $v_t$ in the interaction sequence $S_u = (v_1, \ldots, v_n)$, where $\mathbf{h}_{v_t}$ is derived from the semantic fusion of the course ID embedding and the textual semantic features (as described in Section 2.4.2). These course embeddings are then fed into the SASRec module, where multiple self-attention layers are used to capture contextual dependencies within the sequence.

For the prediction at time step $t$, the user representation $\mathbf{u}_t$ is defined as the contextualized hidden state at the most recent position:

$$\mathbf{u}_t = \mathbf{H}^{(L)}_t,$$

where $\mathbf{H}^{(L)}_t$ denotes the output of the top ($L$-th) self-attention layer in SASRec at step $t$.

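Given the user representation $\mathbf{u}_t$ and the candidate course representation $\mathbf{h}_j$, the predicted click probability $\hat{y}_{u,j}$ is calculated using the following prediction function:

$$\hat{y}_{u,j} = \sigma\big(\mathbf{u}_t^{\top} \mathbf{h}_j\big),$$

where $\sigma(\cdot)$ is the sigmoid activation function.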

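Let the predicted score for a positive sample be $\hat{y}^{+}$ and that for a negative sample be $\hat{y}^{-}$, with corresponding labels $y^{+} = 1$ and $y^{-} = 0$. The loss function is defined as

$$\mathcal{L}_{\mathrm{BCE}} = -\frac{1}{N} \sum_{n=1}^{N} \Big[ y_n \log \hat{y}_n + (1 - y_n) \log\big(1 - \hat{y}_n\big) \Big],$$

where $N$ denotes the total number of training samples (positive and negative), $y_n \in \{0, 1\}$ is the label of the $n$-th sample, and $\hat{y}_n$ is the predicted click probability output by the model, mapped to the range $(0, 1)$ by the sigmoid activation function.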
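The loss can be computed stably from raw inner-product scores using the identities $-\log\sigma(s) = \log(1 + e^{-s})$ and $-\log(1 - \sigma(s)) = \log(1 + e^{s})$. This plain-Python sketch averages over all positive and negative samples; it illustrates the formula and is not the authors' code. In PyTorch, `torch.nn.functional.binary_cross_entropy_with_logits` with its default mean reduction computes the same quantity.

```python
import math


def sigmoid(x):
    """Numerically stable logistic function (predicted click probability)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def bce_loss(pos_scores, neg_scores):
    """Mean BCE over all samples; positives have label 1, negatives label 0.

    Uses -log(sigmoid(s)) = softplus(-s) and -log(1 - sigmoid(s)) = softplus(s).
    """
    terms = [softplus(-s) for s in pos_scores] + [softplus(s) for s in neg_scores]
    return sum(terms) / len(terms)


# Hypothetical inner-product scores for two positives and two negatives.
loss = bce_loss(pos_scores=[2.0, 0.5], neg_scores=[-1.0, 0.3])
```

Working on scores rather than on probabilities avoids taking `log(0)` when a sigmoid saturates.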

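Because negative samples (unclicked courses) vastly outnumber positive samples in practice, which could bias training, our negative sampling strategy randomly selects several negative samples for each positive sample to keep the ratio of positive to negative samples controlled during training. In addition, to further enhance the model’s generalization ability and training stability, the AdamW optimizer is adopted for parameter updates, with a weight-decay regularization term that constrains the magnitude of the weights. The regularized optimization objective is

$$\mathcal{L} = \mathcal{L}_{\mathrm{BCE}} + \lambda \lVert \Theta \rVert_2^2,$$

where $\Theta$ represents the trainable parameters of the model and $\lambda$ is the regularization strength coefficient. AdamW applies this penalty as decoupled weight decay, shrinking the weights directly at each update rather than through the gradient of the loss, so the expression above describes the intended objective rather than the literal gradient computation.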
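AdamW's decoupled weight decay is easiest to see in a single update. The sketch follows the AdamW update rule as documented for `torch.optim.AdamW`: the weights are first shrunk by the decay term, then the bias-corrected Adam step is taken using the gradient of the data loss alone. It uses the learning rate 0.001 and weight decay 0.01 reported in Section 2.6 together with PyTorch's default betas and epsilon; operating on lists of scalars is a simplification for illustration.

```python
import math


def adamw_step(params, grads, m, v, t, lr=1e-3, weight_decay=0.01,
               beta1=0.9, beta2=0.999, eps=1e-8):
    """One AdamW update (decoupled weight decay) on lists of scalar parameters.

    `grads` are gradients of the data loss only; no L2 term is added to them.
    Returns the updated parameters and moment estimates; t is the 1-based step.
    """
    new_params, new_m, new_v = [], [], []
    for p, g, m_i, v_i in zip(params, grads, m, v):
        p = p - lr * weight_decay * p              # decoupled decay shrinks the weight directly
        m_i = beta1 * m_i + (1 - beta1) * g         # first-moment estimate
        v_i = beta2 * v_i + (1 - beta2) * g * g     # second-moment estimate
        m_hat = m_i / (1 - beta1 ** t)              # bias corrections
        v_hat = v_i / (1 - beta2 ** t)
        p = p - lr * m_hat / (math.sqrt(v_hat) + eps)
        new_params.append(p)
        new_m.append(m_i)
        new_v.append(v_i)
    return new_params, new_m, new_v


# With a zero gradient, only the decay acts: 1.0 -> 1.0 - lr * weight_decay * 1.0.
decayed, _, _ = adamw_step([1.0], [0.0], [0.0], [0.0], t=1)
# With a unit gradient, the bias-corrected Adam step shrinks it by roughly lr more.
stepped, _, _ = adamw_step([1.0], [1.0], [0.0], [0.0], t=1)
```

With a plain L2 penalty, the decay would pass through Adam's per-parameter rescaling; here it does not, which is the practical difference between the two.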

During training, we adopt a two-stage optimization paradigm to ensure stable convergence and effective semantic–behavioral feature integration.

In Stage 1, the BERT encoder is fine-tuned to learn discriminative semantic representations of course descriptions. We use the AdamW optimizer with an initial learning rate of , a linear warm-up over the first 10% of training steps, and a cosine decay scheduler to gradually reduce the learning rate thereafter. The mini-batch size is set to 32, and the SASRec backbone as well as the course ID embeddings are frozen in this stage to prevent interference with semantic feature learning.

In Stage 2, the entire recommendation model is trained end-to-end. We unfreeze the SASRec backbone and course ID embeddings, while maintaining a smaller learning rate of for BERT to avoid catastrophic forgetting. The SASRec backbone and fusion layers are trained with a higher learning rate of using the AdamW optimizer and a step decay scheduler, which halves the learning rate every 10 epochs. The mini-batch size is increased to 256 to accelerate convergence.
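The two learning-rate schedules can be written as plain functions of the training step or epoch. The base learning rates are not given numerically in this section, so `BASE_LR` is a placeholder, and letting the cosine phase decay all the way to zero is an assumption. In PyTorch, the Stage 2 schedule corresponds to `torch.optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.5)`.

```python
import math


def warmup_cosine_lr(step, total_steps, base_lr, warmup_frac=0.1):
    """Stage 1: linear warm-up over the first 10% of steps, then cosine decay toward 0."""
    warmup_steps = max(1, int(warmup_frac * total_steps))
    if step < warmup_steps:
        return base_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return 0.5 * base_lr * (1.0 + math.cos(math.pi * progress))


def step_decay_lr(epoch, base_lr, step_size=10, gamma=0.5):
    """Stage 2: halve the learning rate every 10 epochs."""
    return base_lr * gamma ** (epoch // step_size)


BASE_LR = 1.0  # placeholder value for illustration, not the paper's setting
stage1 = [warmup_cosine_lr(s, total_steps=1000, base_lr=BASE_LR) for s in (0, 99, 100, 550, 999)]
stage2 = [step_decay_lr(e, base_lr=BASE_LR) for e in (0, 9, 10, 25)]
```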

Throughout both stages, we monitor the validation metrics NDCG@10 and HR@10 and apply early stopping with a patience of 5 epochs to prevent overfitting, keeping the parameters from the best-performing validation epoch.
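A minimal early-stopping helper with a patience of 5 epochs is sketched below. Monitoring a single metric (validation NDCG@10) is an assumption; the text does not say how NDCG@10 and HR@10 are combined into one stopping criterion, and the validation values are invented.

```python
class EarlyStopping:
    """Signal a stop once the monitored metric fails to improve for `patience` epochs."""

    def __init__(self, patience=5):
        self.patience = patience
        self.best = float("-inf")
        self.best_epoch = None
        self.bad_epochs = 0

    def step(self, epoch, metric):
        """Record one epoch's validation metric; return True if training should stop."""
        if metric > self.best:
            self.best = metric
            self.best_epoch = epoch  # checkpoint the parameters here in a real run
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Hypothetical validation NDCG@10 values per epoch.
val_ndcg = [0.40, 0.45, 0.44, 0.44, 0.43, 0.45, 0.42, 0.50]
stopper = EarlyStopping(patience=5)
stopped_at = None
for epoch, ndcg in enumerate(val_ndcg):
    if stopper.step(epoch, ndcg):
        stopped_at = epoch
        break
```

Here training stops at epoch 6, five epochs after the best value at epoch 1, and the later value 0.50 is never seen.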

2.5. Baseline Models

To comprehensively evaluate the effectiveness of the proposed model in integrating semantic information and user behavior sequence modeling, multiple representative recommendation methods are selected as baseline models for comparison, covering three categories: traditional recommendation methods, sequence modeling methods, and semantic-enhanced models.

Among traditional recommendation methods, Item-KNN is a memory-based collaborative filtering algorithm that makes recommendations based on the similarity between items in historical click sequences [23]. It offers high interpretability and is well-suited for capturing users’ short-term interest preferences.
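For reference, a classic item-based KNN scorer is sketched below: course-course cosine similarity over the sets of users who clicked each course, with candidates scored by their summed similarity to the user's history. This is a generic formulation assumed for illustration rather than the exact variant of [23], and it applies no neighborhood truncation. Note that it ignores the order of clicks.

```python
import math
from collections import defaultdict


def item_similarities(user_histories):
    """Cosine similarity between courses, based on the sets of users who clicked them."""
    users_of = defaultdict(set)
    for user, courses in user_histories.items():
        for c in courses:
            users_of[c].add(user)
    sims = {}
    for i in users_of:
        sims[i] = {}
        for j in users_of:
            overlap = len(users_of[i] & users_of[j])
            if i != j and overlap:
                sims[i][j] = overlap / math.sqrt(len(users_of[i]) * len(users_of[j]))
    return sims


def recommend(history, sims, k):
    """Score unseen courses by their summed similarity to the courses in the history."""
    scores = defaultdict(float)
    for c in history:
        for other, s in sims.get(c, {}).items():
            if other not in history:
                scores[other] += s
    # Sort by descending score, breaking ties by course id for determinism.
    return sorted(scores, key=lambda c: (-scores[c], c))[:k]


# Invented toy click histories.
histories = {"u1": ["A", "B"], "u2": ["A", "B", "C"], "u3": ["B", "C"], "u4": ["C", "D"]}
sims = item_similarities(histories)
```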

In the category of sequence modeling methods, three deep learning architectures are introduced. GRU4Rec captures temporal dependencies in user behavior sequences through GRUs (Gated Recurrent Units) [11] and is one of the earliest representative works applying sequence modeling to recommender systems. Caser (Convolutional Sequence Embedding Recommendation Model) adopts one-dimensional convolutional neural networks to model user behavior sequences, extracting local interest patterns while incorporating sequential information. SASRec (Self-Attentive Sequential Recommendation) is a Transformer-based sequential recommendation model [14] that leverages self-attention mechanisms to learn long-term dependencies in user interests and is currently one of the mainstream approaches in sequential recommendation. The proposed model extends SASRec with semantic enhancement and is therefore one of the main comparison objects.

To further verify whether incorporating textual semantic information improves model performance, two semantic-enhanced models are also constructed as baselines. SASRec+FastText augments item representations by concatenating FastText-pretrained embeddings of the course text with the ID embeddings [16], thereby enhancing their semantic expressiveness. SASRec+BERT employs the pre-trained BERT model to extract deep linguistic features from course descriptions [15], which are then fused with course ID embeddings. Both models retain the sequential modeling capacity of SASRec while integrating semantic information at different levels, representing two mainstream approaches in current content-enhanced recommendation research.

These baseline methods, spanning collaborative filtering, deep sequential modeling, and semantic enhancement, provide a comprehensive comparison framework to assess the advantages and improvements of the proposed model.

2.6. Evaluation Metrics and Experimental Setup

In this study, HR@K (Hit Ratio@K) and NDCG@K (Normalized Discounted Cumulative Gain@K) are adopted as the primary evaluation metrics to measure the accuracy and ranking ability of the recommendation models.

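HR@K reflects whether the user’s actual next course is included in the Top-K recommendations generated by the model. It is computed as

$$\mathrm{HR@K} = \frac{1}{|\mathcal{U}|} \sum_{u \in \mathcal{U}} \mathbb{I}\big(g_u \in R_u^{K}\big),$$

where $g_u$ denotes the ground-truth next course for user $u$, $R_u^{K}$ represents the Top-K recommendations predicted for user $u$, $\mathcal{U}$ is the set of test users, and $\mathbb{I}(\cdot)$ is the indicator function.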

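NDCG@K evaluates the quality of the ranking in the recommendation list. Since each user has a single ground-truth course, the ideal DCG equals 1 and the metric reduces to

$$\mathrm{NDCG@K} = \frac{1}{|\mathcal{U}|} \sum_{u \in \mathcal{U}} \frac{\mathbb{I}\big(g_u \in R_u^{K}\big)}{\log_2\big(\mathrm{rank}_u + 1\big)},$$

where $\mathrm{rank}_u$ is the position of the ground-truth course in the Top-K recommendation list. Compared with HR, NDCG provides a more fine-grained evaluation by weighting each hit according to the position of the target item in the ranking.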
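Both metrics can be computed per user and averaged, as in the sketch below. It assumes one ground-truth course per user and 1-based ranks; the rankings and targets are toy values.

```python
import math


def hit_at_k(ranked, target, k):
    """1.0 if the ground-truth course is among the top-k recommendations, else 0.0."""
    return 1.0 if target in ranked[:k] else 0.0


def ndcg_at_k(ranked, target, k):
    """1 / log2(rank + 1) for a hit at 1-based position rank <= k, else 0.0.

    With a single relevant course per user the ideal DCG is 1, so no extra
    normalization is needed.
    """
    top = ranked[:k]
    if target not in top:
        return 0.0
    rank = top.index(target) + 1
    return 1.0 / math.log2(rank + 1)


def evaluate(rankings, targets, k=10):
    """Average HR@k and NDCG@k over all users in `targets`."""
    n = len(targets)
    hr = sum(hit_at_k(rankings[u], targets[u], k) for u in targets) / n
    ndcg = sum(ndcg_at_k(rankings[u], targets[u], k) for u in targets) / n
    return hr, ndcg


# Toy example: u1's next course is ranked 2nd, u2's is missing from the top 3.
rankings = {"u1": ["c5", "c2", "c9"], "u2": ["c1", "c3", "c7"]}
targets = {"u1": "c2", "u2": "c8"}
hr, ndcg = evaluate(rankings, targets, k=3)  # hr = 0.5, ndcg = 0.5 / log2(3)
```

A hit at rank 1 contributes 1 to NDCG, while a hit at rank 2 contributes only about 0.63, which is the position sensitivity HR lacks.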

For the experimental setup, all models were trained and tested under the same data split and training strategy. Each user’s interaction history was first sorted chronologically, and we adopted a leave-one-out evaluation protocol to simulate a realistic recommendation scenario. Specifically, all interactions except the last two were used for training, the penultimate interaction was used for validation, and the most recent interaction was used for testing. During evaluation, we followed a sampled ranking strategy in which the model ranked a ground-truth positive item together with 100 randomly sampled negative items from the pool of unclicked courses, ensuring both computational efficiency and fair comparability with existing studies.
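The split and the sampled candidate list can be sketched as follows. The text does not state how users with fewer than three interactions are handled, so this sketch simply rejects them. Feeding the training items plus the validation item as input at test time follows the usual SASRec convention and is an assumption here. All names and data are illustrative.

```python
import random


def leave_one_out(sequence):
    """Chronological split: all but the last two for training, then validation, then test."""
    if len(sequence) < 3:
        raise ValueError("leave-one-out with a validation item needs at least 3 interactions")
    return sequence[:-2], sequence[-2], sequence[-1]


def sampled_candidates(target, clicked, all_courses, num_neg=100, seed=0):
    """The ground-truth course followed by num_neg uniformly sampled unclicked courses."""
    rng = random.Random(seed)
    pool = [c for c in all_courses if c not in clicked]
    return [target] + rng.sample(pool, num_neg)


history = [11, 4, 27, 8, 15]  # hypothetical chronological clicks of one user
train, valid_item, test_item = leave_one_out(history)
# At test time the model reads train + [valid_item] (assumed) and ranks 101 candidates.
test_candidates = sampled_candidates(test_item, set(history), all_courses=range(1000))
```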

During training, mini-batches of 256 samples were used. The optimizer was AdamW with an initial learning rate of 0.001, and a weight decay coefficient of 0.01 was applied to reduce overfitting. All hyperparameters followed standard practice and prior work.

All experiments were conducted on a local machine equipped with an NVIDIA GeForce RTX 4060 Ti GPU, using the PyTorch 1.12.0+cu113 deep learning framework for model implementation and training. For all reported results, we present the mean ± standard deviation over five independent runs to ensure statistical reliability.

3. Results and Discussion

3.1. Overall Performance Comparison

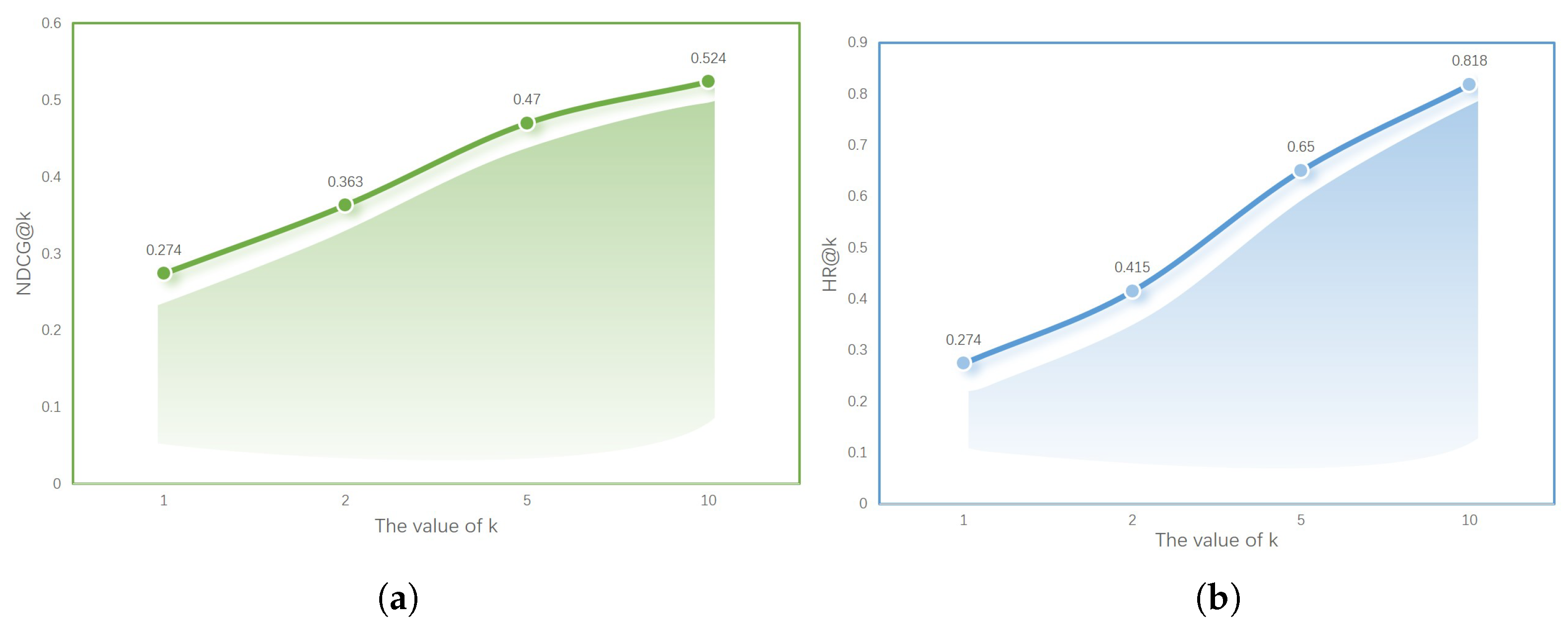

To comprehensively evaluate the performance of the proposed model, several representative recommendation methods were selected for comparison on the MOOCCubeX dataset, including traditional collaborative filtering (Item-KNN), sequential modeling methods (GRU4Rec, Caser, SASRec), and two semantic-enhanced models (SASRec+FastText, SASRec+BERT). “Ours” refers to the model proposed in this study, which integrates both BERT and FastText textual embeddings. The experimental results of our model are shown in Figure 2.

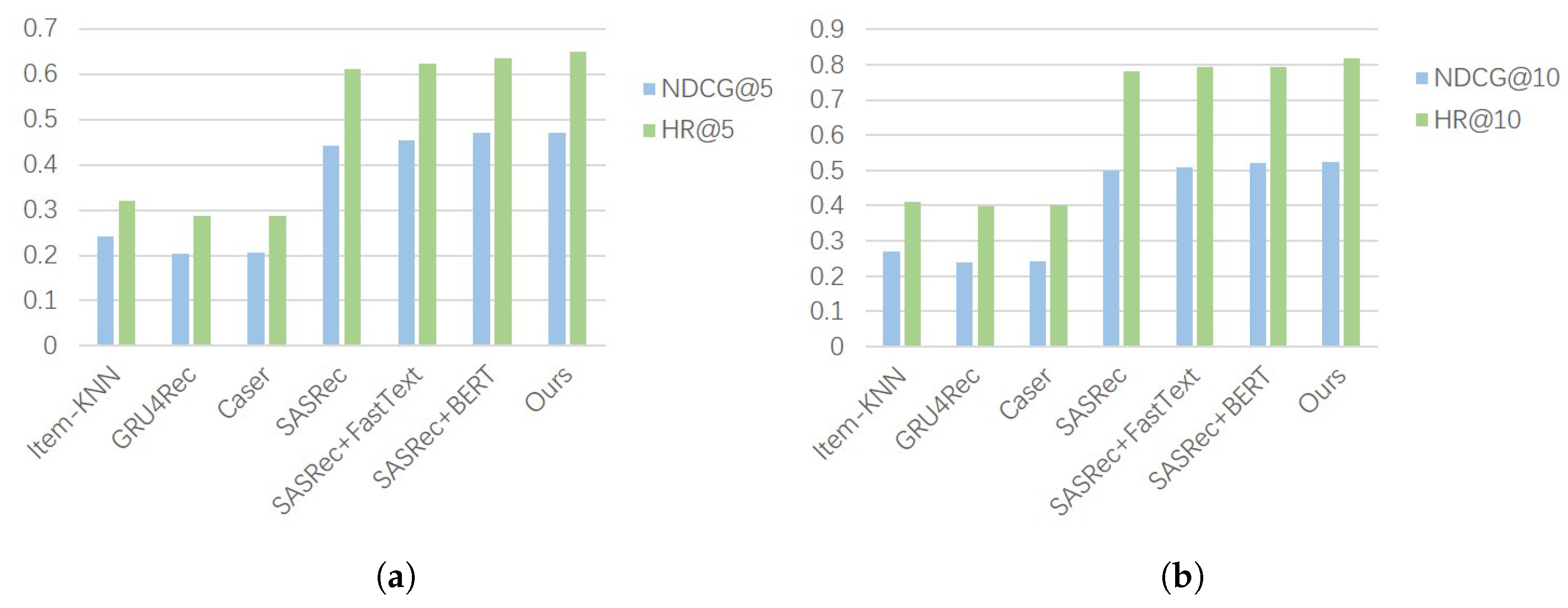

The experiments employed HR@K and NDCG@K to evaluate the accuracy of each model in Top-K recommendation tasks. The results are presented in Figure 3 and Table 1. The traditional collaborative filtering method (Item-KNN) performed relatively weakly, with an HR@10 of 0.412 and NDCG@10 of 0.270, because it does not model the temporal dynamics of user behavior.

Among sequential modeling methods, GRU4Rec captured sequential dependencies in user behavior through Gated Recurrent Units, showing a moderate improvement. Caser leveraged convolutional structures to extract local sequential patterns and performed slightly better than GRU4Rec. SASRec, based on the self-attention mechanism, captured global behavioral dependencies and clearly outperformed both, achieving an NDCG@10 of 0.499 and HR@10 of 0.782; it therefore serves as the primary baseline in this experiment.

On this basis, two semantic-enhanced models were constructed by incorporating course textual information: SASRec+FastText and SASRec+BERT. FastText extracts keyword features by averaging word vectors, while BERT provides stronger contextual semantic modeling capability. The results indicate that both models improved upon the original SASRec model, with SASRec+BERT reaching an HR@10 of 0.794 and NDCG@10 of 0.522.

The descriptive statistics indicate that although there are more than 1.26 million users, the interaction density is extremely low, resulting in a highly sparse user–course matrix. At the same time, course popularity follows a long-tail distribution in which a small set of popular courses dominates user interactions. This combination of extreme sparsity and strong popularity skew makes user preferences considerably harder to model accurately. In this setting, the observed gains suggest that incorporating course semantic features alongside behavioral sequences helps alleviate sparsity and improve personalization.

The above dataset analysis reveals two important characteristics. (1) Short user behavior sequences: over 63% of users interact with only one course, and nearly 89% of users click fewer than five courses. Such limited behavioral information makes it difficult for traditional sequence-based recommendation methods to capture comprehensive user preferences. (2) Highly imbalanced course popularity: a small number of courses dominate user interactions, with the top 10 courses contributing almost 22% of total clicks. Models that rely solely on interaction frequencies may overfit to popular courses while neglecting the majority of less popular content. These findings motivate the integration of multi-level textual semantics into the recommendation framework. By combining behavioral sequences with semantic features extracted from course descriptions and titles, our model aims to alleviate sparsity, improve the visibility of less popular courses, and enhance personalized recommendations.
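Statistics of this kind (sparsity, the share of users with one course or fewer than five courses, and the click share of the most popular courses) can be computed from per-user click lists as sketched below. The toy data are invented. Sparsity here counts distinct user-course pairs, while popularity counts every click; both conventions are assumptions, since the exact definitions used for MOOCCubeX are not given in this section.

```python
from collections import Counter


def interaction_stats(user_histories, num_courses, top_n=10):
    """Sparsity, short-history shares, and the click share of the top_n courses."""
    num_users = len(user_histories)
    distinct = [len(set(h)) for h in user_histories.values()]  # courses per user
    clicks = Counter(c for h in user_histories.values() for c in h)
    total_clicks = sum(clicks.values())
    return {
        "sparsity": 1.0 - sum(distinct) / (num_users * num_courses),
        "share_one_course": sum(n == 1 for n in distinct) / num_users,
        "share_fewer_than_5": sum(n < 5 for n in distinct) / num_users,
        "top_n_click_share": sum(n for _, n in clicks.most_common(top_n)) / total_clicks,
    }


# Invented toy data: 4 users, 5 courses; repeated clicks count toward popularity.
histories = {
    "u1": ["A"],
    "u2": ["A", "B", "A"],
    "u3": ["A"],
    "u4": ["C", "A", "B", "D", "E"],
}
stats = interaction_stats(histories, num_courses=5, top_n=1)
```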

A preliminary analysis of users and courses with fewer than five historical interactions showed that our model achieved higher NDCG@10 and HR@10 scores than all baselines, suggesting that incorporating semantic features helps mitigate the cold-start problem.

Finally, the proposed model integrating dual textual semantic representations from BERT and FastText (Ours) achieved the best results across all metrics, with NDCG@10 and HR@10 reaching 0.524 and 0.818, respectively. Compared with the original SASRec (0.499 and 0.782), these are absolute gains of 0.025 and 0.036, corresponding to relative improvements of approximately 5.0% and 4.6%. These results indicate that incorporating multi-granularity textual semantic features into behavioral modeling can effectively enhance recommendation performance, especially in MOOC scenarios where rich course textual information is available: the textual semantics supply additional contextual knowledge that helps the model capture user preferences and outperform the RNN-, CNN-, and Transformer-based baselines.
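The absolute and relative gains over SASRec follow directly from the scores reported above; the short computation below reproduces them.

```python
def absolute_and_relative_gain(new, base):
    """Return the absolute gain new - base and the relative gain (new - base) / base."""
    gain = new - base
    return gain, gain / base


sasrec = {"NDCG@10": 0.499, "HR@10": 0.782}  # SASRec, Section 3.1
ours = {"NDCG@10": 0.524, "HR@10": 0.818}    # proposed model, Section 3.1

for metric in ("NDCG@10", "HR@10"):
    gain, rel = absolute_and_relative_gain(ours[metric], sasrec[metric])
    print(f"{metric}: +{gain:.3f} absolute, +{rel:.1%} relative")
# NDCG@10: +0.025 absolute, +5.0% relative
# HR@10: +0.036 absolute, +4.6% relative
```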

3.2. Ablation Study

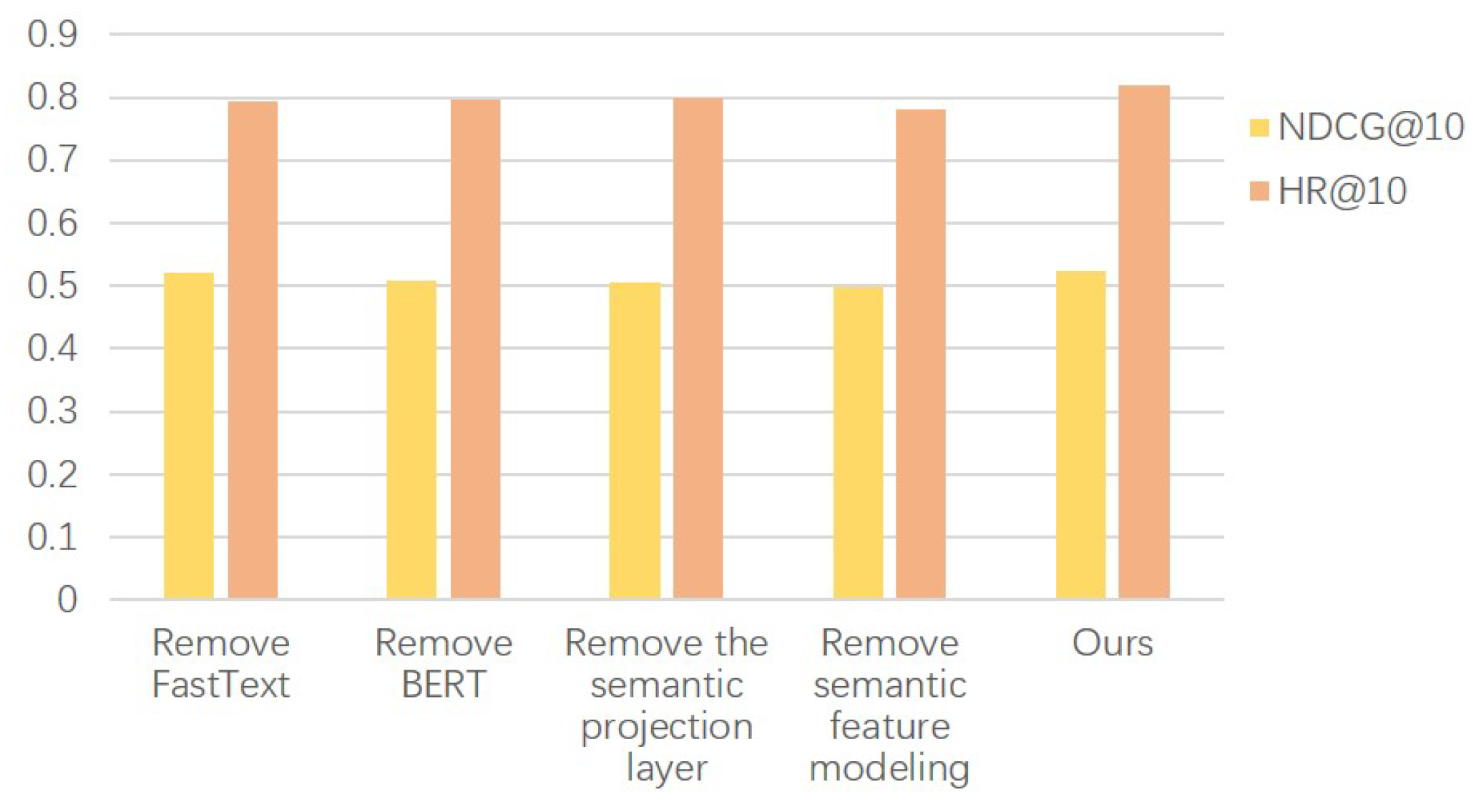

To precisely quantify the contribution of each component to the overall performance, a systematic ablation study was designed. Specifically, under consistent training data, hyperparameter settings, and training epochs, key modules of the model were individually removed or replaced, and the resulting performance changes were evaluated on a validation set (20% randomly selected from the original user set). The experiments employed HR@10 and NDCG@10 as evaluation metrics, as shown in Figure 4 and Table 2. The full model achieved the best results on both metrics, validating the effectiveness and synergistic benefit of the proposed module designs.

When the FastText semantic features were removed and only BERT text representations were retained, HR@10 decreased by approximately 0.024. This indicates that the shallow word-level information provided by FastText plays a significant complementary role: while BERT emphasizes global contextual modeling, FastText focuses on local word-level semantics, which helps with short texts and cold-start courses that offer limited context. The dense and stable nature of FastText embeddings may also improve generalization early in training, yielding a more detailed user interest representation. The performance of the variant without FastText matched the SASRec+BERT baseline (HR@10 = 0.794; NDCG@10 = 0.522), which is expected because the architecture becomes identical to SASRec+BERT when FastText embeddings are removed; this agreement between Table 1 and Table 2 serves as a consistency check on our implementation.

When BERT embeddings were removed and only FastText representations were retained, HR@10 decreased by about 0.023 and NDCG@10 by about 0.016. The HR@10 drop is comparable to that from removing FastText, but the NDCG@10 drop is much larger (about 0.016 versus about 0.002), indicating that BERT contributes mainly to ranking relevant courses higher. This suggests that BERT plays a core role in understanding course texts. Its multi-layer Transformer structure effectively captures complex semantic relationships in course titles and descriptions, constructing context-aware dynamic representations rather than relying solely on static word meanings. Therefore, the absence of BERT weakens the model’s deep understanding of course content, adversely affecting the alignment between user interests and course semantics.

Regarding semantic fusion, removing the semantic projection layer caused HR@10 to drop by approximately 0.020. This indicates that the projection layer is crucial for unifying the feature space and compressing representations when fusing multi-source semantic features. Since BERT and FastText outputs differ in feature dimensions and semantic distributions, feeding them to the model as a direct concatenation can result in dimensional redundancy, information redundancy, or feature conflict. The projection layer uses a linear mapping to align and compress these features, improving the compatibility and stability of the semantic representations.

Although more complex fusion strategies, such as concatenation with a non-linear projection layer and attention-based mechanisms, have been explored in related studies [24,25], they typically involve a significantly larger number of parameters and longer training times. Given our objective of designing an efficient and scalable recommendation model, we prioritize approaches that balance accuracy and computational efficiency. The additive fusion strategy effectively integrates semantic and ID embeddings while keeping the model lightweight, making it more suitable for large-scale MOOC scenarios.

When all semantic features were removed, leaving only user behavior sequences, the performance decline was the largest: HR@10 decreased by over 0.035, and NDCG@10 dropped by more than 0.020. This shows that although user behavior sequences can capture interest evolution, they often fail to provide sufficient context for new courses, interest shifts, and sparse historical behaviors. Textual semantic features, as a direct representation of course content, help the model capture users’ latent interests and current intentions more accurately, playing a key supplementary role in personalized recommendations; without them, the model’s ability to understand and model user needs is diminished.

Beyond the ablation study on FastText and BERT, we also conducted preliminary experiments with alternative semantic models, including RoBERTa, DistilBERT, and ERNIE. RoBERTa achieved slightly higher NDCG and HR scores than BERT, but its computational and memory overhead increased by more than 50%, making it unsuitable for large-scale MOOC recommendation tasks where efficiency is critical. DistilBERT offered faster inference but resulted in noticeable performance degradation on Chinese educational content, particularly for domain-specific terminology. ERNIE showed potential advantages in domain-specific modeling but required large-scale pretraining on education-oriented corpora, which significantly increased deployment complexity.

Therefore, combining FastText and BERT provides a better trade-off between efficiency and performance. FastText contributes low-dimensional, dense word-level embeddings that are especially helpful for cold-start courses and rare terms, while BERT captures rich, context-aware semantic representations. This dual-channel encoding strategy not only achieved the best overall performance in our experiments but also ensures scalability for real-world MOOC platforms.
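FastText's robustness to rare and unseen terms comes from subword information. Each word is represented through its character n-grams, by default 3 to 6 characters for fastText's unsupervised models, with `<` and `>` marking word boundaries. As a result, words that were rare or absent in training still share n-grams, and hence vector components, with known words. The sketch below illustrates only that mechanism. The n-gram vectors are deterministic pseudo-random stand-ins for trained embeddings, averaging them is a simplification of how fastText composes word vectors, and nothing here reflects the specific FastText model used in this study. For Chinese course text the same idea applies at the character level.

```python
import random
import zlib

DIM = 8  # hypothetical embedding size


def char_ngrams(word, minn=3, maxn=6):
    """Character n-grams of '<word>' with boundary markers, plus the full marked word."""
    token = f"<{word}>"
    grams = {token}
    for n in range(minn, maxn + 1):
        for i in range(len(token) - n + 1):
            grams.add(token[i:i + n])
    return grams


def ngram_vector(gram):
    """Toy stand-in for a trained n-gram embedding: a deterministic pseudo-random vector."""
    rng = random.Random(zlib.crc32(gram.encode("utf-8")))
    return [rng.uniform(-1.0, 1.0) for _ in range(DIM)]


def word_vector(word):
    """Average the vectors of the word's n-grams, so unseen words still get a vector."""
    grams = sorted(char_ngrams(word))  # sorted for a deterministic summation order
    vectors = [ngram_vector(g) for g in grams]
    return [sum(col) / len(vectors) for col in zip(*vectors)]


shared = char_ngrams("recommender") & char_ngrams("recommendation")
vec = word_vector("recommender")  # defined even if the word never appeared in training
```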

In summary, the ablation study clearly illustrates the independent contributions and synergistic effects of each module. FastText and BERT offer complementary advantages in semantic modeling: the former provides stable word-level support, while the latter emphasizes context-aware semantic understanding. The semantic projection mechanism plays a bridging role in dimension compression and information alignment, and the joint modeling of semantics and behavior significantly enhances the model’s ability to perceive dynamic user interests. The proposed fusion of textual semantics and behavioral sequences achieves the best performance among the compared methods in MOOC recommendation tasks and provides both practical and theoretical guidance for building scalable and generalizable educational recommendation systems.

3.3. Analysis of Experimental Results

The proposed recommendation framework performs strongly in the MOOC scenario, owing to the synergy between user behavior sequence modeling and multi-level semantic fusion. This approach establishes a multi-dimensional complementary relationship between user interests and course content, reflected mainly in the following two aspects:

First, behavior trajectories and semantic content complement each other over time, as shown in Figure 5. User behavior sequences reflect the dynamic evolution of learning interests, for example, progressing from “Introduction to Programming” to “Java” and then to “Distributed Systems”, illustrating the hierarchical development of cognitive structures. However, behavioral information is limited in capturing long-term interest changes, especially when users experience large time gaps or breaks in their learning cycle, which reduces the ability of behavior trajectories to represent user intent. In contrast, course textual semantic information provides stable content associations, helping establish semantic links even when behavior trajectories are interrupted. For instance, if the “Distributed Systems” course description mentions “implemented based on Java”, the model can identify the semantic connection between this course and the user’s historical preferences.

Such mechanisms demonstrate complementary advantages when handling recommendation tasks across different time spans. Behavior sequence models are more suitable for continuous learning scenarios, while semantic modeling is better at capturing users’ long-term migrating interests, enhancing the model’s robustness and generalization in non-continuous learning situations. For example, for users who have studied “Literary Theory” and “Classical Chinese”, the model can leverage keywords like “Tang and Song intellectual history” in the Zizhi Tongjian course description to build semantic bridges, recommending content that better matches user interests rather than relying solely on co-occurring popular courses.

Second, the introduction of semantic information is not a simple concatenation but forms a hierarchical semantic understanding structure through FastText and BERT. FastText excels at capturing word-level semantic structures, covering a large vocabulary of educational terms, effectively reducing sparsity in word vector representations, and avoiding term misinterpretation, such as misidentifying “gradient descent” as “slope reduction”. BERT, on the other hand, provides stronger contextual modeling capabilities, extracting deep semantics from sentence structures and discourse logic, demonstrating higher expressive power when handling nested expressions, causal relationships, and prerequisite dependencies. For example, when a course description mentions “requires Java foundation”, BERT can accurately recognize this prerequisite, guiding the model to activate relevant historical behaviors and improve recommendation personalization.

This semantic fusion structure is particularly important for cross-disciplinary user transitions. When a user shifts from technical courses to economics courses, the model can establish cross-domain content connections through keywords in course descriptions (e.g., “Python data analysis”) and the user’s historical records, effectively modeling migrating interests.

In summary, the proposed model exhibits clear structural design and significant empirical advantages in fusing behavior sequences with semantic features. It not only improves recommendation accuracy but also provides a robust foundation for intelligent personalized matching of educational resources.

4. Conclusions

This paper proposes a personalized MOOC course recommendation method that integrates user behavior sequences with course text semantic features. Building upon the traditional SASRec model, the method incorporates course text semantic information jointly extracted via FastText and BERT and employs a linear projection combined with course ID embedding through element-wise addition. This strategy effectively enhances the model’s understanding of course content and the accuracy of user interest modeling.

Experiments conducted on the public MOOCCubeX dataset demonstrated that the proposed method consistently outperforms multiple mainstream baseline models on key metrics such as NDCG@10 and HR@10, validating the effectiveness of both the fusion strategy and the model architecture. Further ablation studies showed that the text semantic modeling module and feature fusion mechanism make substantial contributions to recommendation performance, highlighting the importance of incorporating course text information to understand user interest evolution and match course content accurately.

In addition, the comparative analysis of the optimizer and the loss function shows that the proposed method achieves strong training stability and generalization ability while maintaining recommendation accuracy, which meets the application needs of real educational scenarios. In particular, this study optimizes semantic representation for Chinese education scenarios, which provides a practical reference for cross-language education recommendations.

Despite these promising results, there remain areas for improvement. For instance, the model’s adaptability to cold-start users can be further enhanced, and potential multimodal information in course content (e.g., video, images) has not yet been modeled. Future research will focus on the following directions: (1) introducing graph neural networks to explore structural relationships between courses; (2) extending multimodal content modeling to integrate video, image, and other data sources; (3) investigating model interpretability and fairness to meet real-world educational platform deployment needs, thereby improving the practical utility and trustworthiness of recommendation systems.

In summary, the proposed fusion strategy and model method demonstrate technical innovation and practical applicability, providing strong support for advancing MOOC platforms toward intelligent and personalized services.