Abstract

Low-power microcontrollers, wireless sensors, and embedded gateways form the backbone of many Internet of Things (IoT) deployments. However, their limited memory, constrained energy budgets, and lack of standardized firmware make them attractive targets for diverse attacks, including bootloader backdoors, hardcoded keys, unpatched CVE exploits, and code-reuse attacks, while traditional single-layer defenses are insufficient as they often assume abundant resources. This paper presents a Systematic Literature Review (SLR) conducted according to the PRISMA 2020 guidelines, covering 196 peer-reviewed studies on cross-layer security for resource-constrained IoT and Industrial IoT environments, and introduces a four-axis taxonomy—system level, algorithmic paradigm, data granularity, and hardware budget—to structure and compare prior work. At the firmware level, we analyze static analysis, symbolic execution, and machine learning-based binary similarity detection that operate without requiring source code or a full runtime; at the network and behavioral levels, we review lightweight and graph-based intrusion detection systems (IDS), including single-packet authorization, unsupervised anomaly detection, RF spectrum monitoring, and sensor–actuator anomaly analysis bridging cyber-physical security; and at the policy level, we survey identity management, micro-segmentation, and zero-trust enforcement mechanisms supported by blockchain-based authentication and programmable policy enforcement points (PEPs). Our review identifies current strengths, limitations, and open challenges—including scalable firmware reverse engineering, efficient cross-ISA symbolic learning, and practical spectrum anomaly detection under constrained computing environments—and by integrating diverse security layers within a unified taxonomy, this SLR highlights both the state-of-the-art and promising research directions for advancing IoT security.

1. Introduction

Securing IoT/IIoT at global scale requires a cross-layer lens that reconciles heterogeneous devices, tight memory and energy budgets, and adversaries who exploit interactions among firmware, networks, behavior, and trust controls. Accordingly, this review synthesizes mechanisms across layers and data granularities, evaluates accuracy/latency/deployability under resource constraints, and sets a research agenda oriented toward evidence-attached detection and zero-trust-aligned operation.

1.1. Motivation and Scope

The Internet of Things (IoT) is rapidly evolving into a global-scale ecosystem expected to encompass tens of billions of heterogeneous devices deployed across enterprise, industrial, and consumer domains. These devices range from simple sensors and actuators to complex embedded controllers and edge computing nodes, all of which must collaborate in real time across organizational and national boundaries [1,2,3]. The scale of this deployment enables data-driven optimization of production lines, logistics, healthcare services, and smart city infrastructures. However, it also significantly broadens the attack surface—extending from bootloader firmware and wireless protocols to cloud integration and even physical process control.

Recent security investigations have highlighted multiple attack vectors that undermine the integrity of IoT deployments: firmware backdoors intentionally inserted during the manufacturing supply chain, encrypted command-and-control channels designed to bypass perimeter defenses, and manipulation of sensor readings to evade traditional network monitoring tools [4]. These challenges are aggravated by the hardware limitations of microcontrollers and low-power system-on-chip (SoC) platforms typically found in IoT devices. Constrained in memory, energy, and bandwidth, such devices are often unable to support heavyweight cryptographic or intrusion detection solutions, leaving them particularly vulnerable.

Traditional single-layer defenses are insufficient in this context, as attackers can compromise different system layers simultaneously. Cloud-based security services, although powerful, introduce latency, bandwidth overhead, and additional risks related to data sovereignty and privacy. This creates a strong demand for a cross-layer security perspective, where vulnerabilities are addressed holistically across firmware, network, and behavioral layers. For instance, firmware-level analysis can expose insecure reused libraries or misconfigured bootloaders, while lightweight, deep learning, and graph-based intrusion detection can flag anomalies in constrained wireless traffic. Complementary research has also begun exploring behavior-based process-level anomaly detection models, continuous risk assessment using adaptive policy engines, and the adoption of zero trust architecture (ZTA) in distributed IoT environments [5,6].

Based on these insights, this study seeks to consolidate and advance state-of-the-art approaches by combining firmware-level vulnerability analysis with lightweight network-level anomaly detection, while ensuring computational efficiency suitable for resource-constrained environments.

1.2. Contributions and Article Organization

The contributions of this paper are threefold:

- Cross-layer taxonomy: We propose a systematic taxonomy that organizes IoT security mechanisms by system level, algorithmic paradigm, data granularity, and hardware budget. This classification not only clarifies the design trade-offs of existing solutions but also offers a practical framework for positioning emerging research.

- Focused benchmarking: We integrate state-of-the-art firmware security techniques (e.g., static analysis, symbolic execution, machine learning–based binary similarity) with representative network intrusion detection models. Their performance is empirically evaluated on real IoT hardware with respect to accuracy, latency, and deployability.

- Research agenda and exploration goals: Instead of posing open-ended questions, we outline concrete directions for exploration:

  - Exploring compatibility challenges of binary analysis across diverse instruction set architectures (ISAs).

  - Investigating strategies to minimize the footprint of intrusion detectors while maintaining high accuracy in anomaly detection.

  - Advancing hybrid symbolic–learning procedures that combine the rigor of symbolic execution with the adaptability of machine learning.

  - Extending cross-layer approaches to incorporate behavioral anomaly detection and zero-latency verification aligned with ZTA principles.

The rest of this paper is structured as follows. Section 2 provides an overview of the IoT context and threat landscape, covering typical IoT architectures, resource constraints, multi-layer attack surfaces, and security requirements for edge-bound deployments. Section 3 describes the survey methodology, including the search strategy, inclusion/exclusion criteria, and the review workflow based on the PRISMA 2020 guidelines. Section 4 introduces a taxonomy for cross-layer IoT security, organized by security layer, data granularity, deployment model, and algorithmic paradigm. Section 5 focuses on firmware-level security, detailing static, symbolic, and machine learning techniques for binary similarity analysis. Section 6 examines integrated security at the network, behavioral, and policy levels, focusing on lightweight intrusion detection, deep/graph-based models, sensor-actuator/RF spectrum analysis, and zero-trust implementation. Section 7 concludes the paper, summarizes the findings, and outlines future research directions for IoT security.

2. Background and Threat Landscape

To understand the challenges in securing IoT systems, it is first necessary to examine the underlying architecture, the typical resource limitations of devices, and the evolving multi-layer threats that adversaries exploit.

2.1. Typical IoT Architecture and Resource Constraints

Most academic and commercial roadmaps still state that the IoT consists of three layers: the perception layer (or sensor layer), the network layer, and the application/cloud layer [7]. The Industrial Internet of Things (IIoT) often includes an edge control layer, but the basic data path (physical signal → digital data packet → cloud analytics) remains the same [8].

At the perception layer, a single node may have no more than 256 kB of RAM, a CPU clocked below 1 GHz, and a milliwatt-level power budget, yet it must still perform encryption, secure boot, and real-time control operations [9]. Moreover, these billions of diverse devices span far more hardware platforms, operating systems, and wireless protocols than any single security solution can readily support [10].

2.2. Multi-Layer Attack Surface

In this section, we use the term multi-layer attack surface to refer to the combined vulnerabilities across firmware, network, and behavioral layers. A detailed taxonomy is provided in later sections, but here we briefly introduce the concept for consistency. The purpose of this section is to show how attacks span across different technical levels, making cross-layer defenses indispensable.

Table 1 summarizes representative threats, their consequences, and representative research at each layer of the IoT stack. IoT systems face threats at multiple levels, including firmware vulnerabilities, weak network authentication, behavioral deception, and inadequate trust management. Together, these attack surfaces illustrate the need for a cross-layer security approach.

Table 1.

Representative Multi-Layer Attack Surface in IoT Systems.

- Firmware level. The ZigBee Light-Link worm showed that a single unpatched binary could set off a chain reaction that affected all lights in an installation [11].

- Network level. More than 70% of tested BLE devices still default to “Just Works” pairing, which makes it easier for attackers within local radio range to forge connections and launch man-in-the-middle attacks [12]. In the backbone, weaknesses in deployments of common messaging protocols such as MQTT or AMQP allow attackers to steal session tokens and send forged commands through the cloud [13].

- Behavioral level. Real-world spoofing can evade conventional (network-centric) intrusion detection systems (IDS) because it manipulates sensor inputs rather than packet semantics; for example, laboratory GPS spoofing has been shown to alter the course of a GPS-guided drone [10,14].

- Trust level. Zero Trust Architecture (ZTA) eliminates mutual trust between users, devices, and workloads, but most current pilots only consider user access to the cloud without checking the provenance of firmware or real-time sensor data [15].

2.3. Security Requirements for Edge-Bound Deployments

Strong IoT security requires more than just cloud-based cryptographic primitives or firewalls. Instead, each layer must have defense-in-depth built-in, especially for devices that operate offline or are occasionally connected.

- Lightweight, with both integrity and privacy. IoT devices typically do not have enough RAM or code space to use standard protocols such as TLS or DTLS. Therefore, security suites must use cryptographic algorithms with smaller memory footprints and simpler handshakes, while still being able to protect against active attackers on the wireless channel [7].

- Multi-layered, fine-grained access control. Security measures should be implemented not only at the cloud API level, but also in the firmware call graph, wireless MAC layer, and lightweight message brokers. If permissions are not enforced at each layer, there is a risk of lateral movement and escalation of the trust chain [8].

- Continuous auditing and least privilege. Following zero trust principles, modern designs must re-evaluate the identity, location, and context behind each abnormal state change and grant as few access permissions as possible [15,16]. However, these checks are currently performed only at the cloud edge, not in embedded runtime environments.

- Local actionability and explainability are critical. Engineers need to understand the meaning of alerts without being able to view cloud logs or developer consoles. Compact on-device summaries (ideally using reduced or quantized language models) are still an emerging research topic [9], but can provide explainable diagnostic results without sacrificing latency or power consumption.

To meet these requirements, security measures must work together at multiple levels. Firmware-level risk assessment can support network-level traffic filtering, anomaly models can enable adaptive privilege reassignment, and policy enforcement points must consider all lower-level inputs. The next section explores how current work addresses (or fails to address) these requirements in both firmware and network contexts.

3. Survey Methodology

To ensure rigor and reproducibility, the survey follows a predefined protocol: broad database searches using a transparent query, explicit inclusion/exclusion criteria, and PRISMA-2020 screening and eligibility. The aim is to bound the corpus to resource-aware, cross-layer IoT security and make subsequent analyses traceable.

3.1. Search Strategy and Databases Queried

Systematic literature search queries were executed on eight digital libraries that jointly cover computer-security, embedded-systems and industrial-informatics research:

- IEEE Xplore and ACM Digital Library—core venues for networked-system security and embedded software;

- ScienceDirect and SpringerLink—major publishers of IoT-specific journals;

- Web of Science and Scopus—multidisciplinary indexing and citation tracing;

- Google Scholar—discovery of gray literature and early-view conference papers;

- arXiv (cs.CR, cs.NI, eess.SP)—access to pre-publication manuscripts.

The search string combined four concept groups with Boolean AND operators:

(“IoT” OR “IIoT” OR “cyber-physical”) AND (“cross-layer” OR firmware OR network OR behavio* OR “zero trust”) AND (security OR “intrusion detection” OR vulnerability OR “access control”) AND (“resource-constrained” OR edge OR embedded OR “low power”).

The retrieved results coincided with the rise in BLE (Bluetooth Low Energy)/ZigBee security work and the formalization of zero-trust architecture. Reference lists of selected papers were snowballed to capture additional studies.
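To make the Boolean structure of the search string concrete, the following minimal sketch applies it to a title or abstract. It assumes case-insensitive whole-word matching and treats `*` as a prefix wildcard; the digital libraries listed above each implement their own matching rules, so this is an illustration of the AND-of-ORs logic rather than a reproduction of any database's behavior. The function names are hypothetical.

```python
import re

# Concept groups transcribed from the search string; '*' marks a prefix wildcard.
CONCEPT_GROUPS = [
    ["IoT", "IIoT", "cyber-physical"],
    ["cross-layer", "firmware", "network", "behavio*", "zero trust"],
    ["security", "intrusion detection", "vulnerability", "access control"],
    ["resource-constrained", "edge", "embedded", "low power"],
]


def term_pattern(term: str) -> re.Pattern:
    """Compile one search term into a case-insensitive whole-word regex."""
    if term.endswith("*"):
        body = re.escape(term[:-1]) + r"\w*"
    else:
        body = re.escape(term)
    return re.compile(r"(?<!\w)" + body + r"(?!\w)", re.IGNORECASE)


def matches_query(text: str) -> bool:
    """True when every concept group (AND) has at least one matching term (OR)."""
    return all(
        any(term_pattern(term).search(text) for term in group)
        for group in CONCEPT_GROUPS
    )
```

A record passes only if all four concept groups are represented, which is why purely cloud-centric or non-IoT titles fall out at this stage.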

3.2. Inclusion and Exclusion Criteria

To ensure methodological rigor, we established explicit inclusion and exclusion criteria before screening the literature. These criteria are summarized in Table 2, which outlines the types of studies that were retained or discarded during the review process.

Table 2.

Inclusion and Exclusion Criteria for the Systematic Review.

3.3. Review Workflow

The systematic review followed the PRISMA 2020 guidelines [17] and was divided into three main stages:

- Article identification. A comprehensive keyword-based search of major academic databases and preprint servers retrieved a total of 1084 articles. After automatically removing 142 duplicates by matching DOI, title, and arXiv ID, 942 unique articles remained for further review.

- Screening. Titles and abstracts were evaluated according to the inclusion and exclusion criteria defined in Section 3.2. In this step, a total of 579 articles were excluded, including editorials, position papers, short abstracts, purely cloud-centric studies, and articles not related to IoT or resource-constrained environments. A total of 363 articles were reviewed in full text.

- Eligibility review. Afterwards, we reviewed the full text to ensure methodological soundness and direct relevance to firmware, lightweight network defense, behavioral modeling, zero trust implementation, or device-side explainability. Papers that did not conduct empirical evaluation, papers that were superseded by new versions, or papers that only focused on traditional IT systems were excluded.

After eliminating 160 papers, a total of 196 studies were finally available for inclusion. Screening and eligibility assessments were conducted in parallel by two reviewers. In nine instances of disagreement, consensus was reached through discussion, and in one borderline case a third reviewer mediated. This consensus procedure is consistent with PRISMA-2020 guidelines and helps ensure transparency and reproducibility.
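The duplicate-removal step of the identification stage can be sketched as follows. This is a minimal illustration that assumes each retrieved record is a dictionary with optional `doi`, `arxiv_id`, and `title` fields; the field names, the title normalization rule, and the sample records are hypothetical and are not taken from the review's actual tooling.

```python
import re


def normalize_title(title: str) -> str:
    """Lower-case a title and collapse punctuation and whitespace for comparison."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def record_keys(record: dict) -> set:
    """Return every identifier under which a record could be a duplicate."""
    keys = set()
    if record.get("doi"):
        keys.add(("doi", record["doi"].lower()))
    if record.get("arxiv_id"):
        keys.add(("arxiv", record["arxiv_id"].lower()))
    if record.get("title"):
        keys.add(("title", normalize_title(record["title"])))
    return keys


def deduplicate(records: list) -> list:
    """Keep the first record seen for each DOI, arXiv ID, or normalized title."""
    seen, unique = set(), []
    for record in records:
        keys = record_keys(record)
        if keys & seen:
            continue  # shares an identifier with an earlier record
        seen |= keys
        unique.append(record)
    return unique


# Hypothetical records for illustration only.
sample = [
    {"doi": "10.1000/abc", "title": "Firmware Analysis for IoT"},
    {"doi": "10.1000/ABC", "title": "Firmware analysis for IoT."},
    {"arxiv_id": "2101.00001", "title": "Firmware Analysis for IoT"},
    {"doi": "10.1000/xyz", "title": "Lightweight IDS on MCUs"},
]
unique_records = deduplicate(sample)
```

Matching on any one of the three identifiers catches the common case in which a preprint and its published version carry different DOIs but the same title.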

4. Taxonomy of Cross-Layer IoT Security

Cross-layer security in the Internet of Things (IoT) requires a systematic overview of where defenses are deployed and how they combine across different layers.

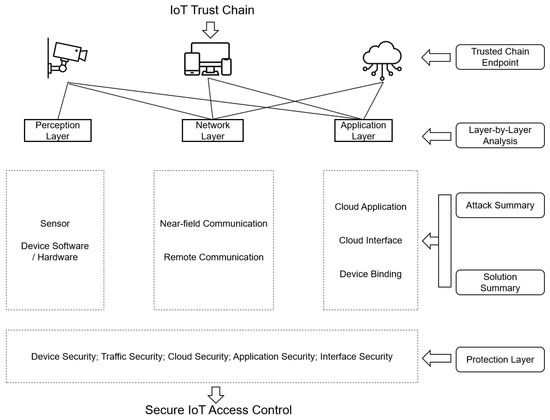

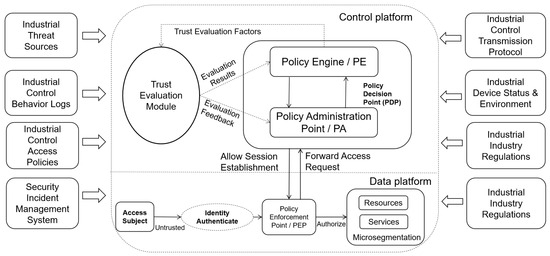

Figure 1 visually illustrates the IoT trust chain, from the perception layer through the network layer to the application layer, listing representative components at each layer (e.g., sensor and device hardware and software, near-field and long-range communications, cloud applications, interfaces, and device connectivity). The right-hand pane captures a layer-by-layer analysis, including attack and solution summaries, culminating in the trust chain endpoints. These insights inform the overall protection layers (device, traffic, cloud, application, and interface security), ultimately enabling secure IoT access control.

Figure 1.

Layered IoT Trust Chain and Component-wise Security Concerns. Arrows indicate trust propagation and the directional flow of information between layers (perception, network, and application) toward endpoint analysis and protection. Boxes denote representative elements of each layer (sensors, communication protocols, cloud services), while summary blocks on the right illustrate how attacks and defenses are analyzed. The protection layer enforces secure IoT access control across all components.

Based on this layered approach, we organize prior research along four orthogonal axes used in this section: security layer (firmware/device, network/transport, behavior/application, trust/policy), data granularity (binary, packet/stream, telemetry, RF spectrum), deployment model (on-device, hybrid edge cloud, cloud/OTA), and algorithmic paradigm (rule-based/machine learning/deep learning/optimization).

This categorization clarifies scope and limitations, highlights integration points between layers, and provides a common foundation for comparing heterogeneous approaches.

4.1. Security-Layer Perspective

The system architecture level at which these methods are applied is a key element in understanding a cross-layer security strategy for the Internet of Things (IoT). We use four levels of abstraction: firmware/device, network/transport, behavior/application, and trust/policy. Each layer presents its own threats and limitations, and security solutions vary in terms of data availability, computational power, and risk models. As shown in Figure 1, the IoT trust chain covers the perception layer, network layer, and application layer, each of which includes different components such as sensors, communication interfaces, and cloud services. The figure illustrates how security issues, from device software integrity to interface protection, are addressed layer by layer, laying the foundation for secure IoT access control and trusted system behavior.

- Firmware/Device Layer. This layer includes security features that directly affect hardware peripherals, microcontroller instructions, or device firmware. It covers both proactive analysis (e.g., vulnerability detection) and runtime protection mechanisms (e.g., attestation and execution control). Many embedded systems lack memory protection or ship statically linked binaries, making them vulnerable to attacks such as memory corruption, control flow hijacking, and code reuse. Recent research has applied symbolic execution [18], lightweight taint tracking [19], and semantic hashing [20] to firmware analysis. Researchers have also explored shadow stack enforcement [21], TrustZone isolation [22], and secure boot [23] to ensure runtime security. These approaches are particularly important in mission-critical applications such as drones, industrial actuators, and medical sensors, where firmware compromise can have severe practical consequences.

- Network/Transport Layer. The network layer encompasses communications based on Bluetooth Low Energy (BLE), ZigBee, LoRa, Wi-Fi, and MQTT-based cloud protocols. Threats at this layer include device fingerprinting, traffic injection, spoofing, replay, and protocol downgrade. Existing defenses include BLE fingerprinting [24], anomaly detection based on packet timing and channel hopping [25], and lightweight intrusion detection systems (IDS) using compressed CNNs or GNNs [26,27]. Numerous protocol-specific attacks have also been documented, such as ZigBee Touchlink hijacking [28], MQTT hijacking [29], and Bluetooth address randomization failures [30]. Many embedded IDS engines use bitfield encoding or Bloom filters [31,32] and operate on systems with less than 100 kB of RAM.

- Behavior/Application Layer. This layer monitors the consistency of higher-level application logic, actuator control, and sensor data. It covers both direct models of time-varying physical processes (e.g., the water level in a tank) and indirect models, such as policies for monitoring context-aware applications. Graph-based models such as GAT-GRU [33], temporal sensor anomaly detectors [34], and hybrid context/state machine verifiers [35,36] are widely used in industrial settings. To prevent privilege abuse in smart homes, tools such as ContexIoT [37] and Soteria [38] analyze how users and apps interact with devices. Modeling the overall behavior of a device is crucial for detecting hidden behaviors or policy violations that cannot be detected by firmware analysis or packet filters.

- Trust/Policy Layer. This layer adds logical structure and architectural guidance to ensure reliable system operation across different domains. It includes zero-trust identity enforcement, continuous authentication, remote attestation chains, and federated policy control. Systems such as WAVE [39], XToken [40], and MPA [41] aim to achieve fine-grained authorization and delegation in resource-constrained multi-tenant systems. Trust layer approaches also include device access protocols [42] for protection against forged configuration attacks (e.g., ghost device attacks) and distributed ledger systems for trust traceability [43]. These approaches are particularly important when IoT devices are owned by multiple parties and policies need to be coordinated without a shared physical infrastructure.
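The network/transport-layer discussion above notes that many embedded IDS engines rely on bitfield encoding or Bloom filters to fit within tight RAM budgets. The sketch below illustrates the data structure itself: a fixed 1 kB bitfield that answers set-membership queries (for example, "has this device address been seen on a blocklist?") with possible false positives but no false negatives. The sizes and the use of SHA-256 are illustrative assumptions; a real MCU implementation would likely use a cheaper hash and is not described in the cited works at this level of detail.

```python
import hashlib


class BloomFilter:
    """Fixed-size Bloom filter backed by a bytearray bitfield."""

    def __init__(self, num_bits: int = 8192, num_hashes: int = 4):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((num_bits + 7) // 8)  # 8192 bits occupy 1 kB

    def _positions(self, item: bytes):
        # Derive k bit positions from one digest via double hashing.
        digest = hashlib.sha256(item).digest()
        h1 = int.from_bytes(digest[:8], "big")
        h2 = int.from_bytes(digest[8:16], "big") | 1
        for i in range(self.num_hashes):
            yield (h1 + i * h2) % self.num_bits

    def add(self, item: bytes) -> None:
        """Set the k bits that correspond to the item."""
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def __contains__(self, item: bytes) -> bool:
        """True if all k bits are set (may be a false positive)."""
        return all(
            self.bits[pos // 8] & (1 << (pos % 8))
            for pos in self._positions(item)
        )


blocklist = BloomFilter()
blocklist.add(b"aa:bb:cc:dd:ee:ff")  # hypothetical device address
```

Memory use is constant regardless of how many items are inserted; the cost is a false-positive rate that grows as the bitfield fills.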

4.2. Data Granularity

Cross-layer security solutions for the Internet of Things (IoT) process input data at different levels of detail. This affects how well they detect threats, their operational costs, and the difficulty of integration. There are four levels of data granularity: binary level, packet/stream level, time series telemetry level, and radio frequency spectrum (RF) level. Each level has its own advantages and disadvantages that affect the design and use of IoT security measures.

Binary-level analysis looks for static vulnerabilities, backdoors, and cryptographic errors in firmware images or executable code (usually in raw or disassembled format). The tool used in [44] uses a secure stream processing framework to inspect the firmware without executing it. This ensures that vulnerabilities can be found even when the device is not connected to the Internet. Ref. [45] also discusses trusted execution environments (TEEs), which can assist binary analysis by providing a secure code inspection setup that is independent of the rest of the system. These methods are ideal for detecting zero-day vulnerabilities, but lack runtime behavioral context, making it difficult to detect dynamic threats. For example, static analysis in a trusted execution environment (TEE) [45] can find firmware errors but not problems that occur during program execution.
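As a minimal illustration of inspecting a firmware image without executing it, the sketch below extracts printable strings from raw bytes and flags two simple indicators of hardcoded secrets. It is not the tool from [44]; the indicator patterns and function names are hypothetical, and practical binary analysis goes far beyond string matching.

```python
import re

# Illustrative indicators only; a real rule set would be much broader.
INDICATORS = {
    "pem_private_key": re.compile(r"-----BEGIN (?:RSA |EC )?PRIVATE KEY-----"),
    "credential_assignment": re.compile(
        r"(?i)(?:passwd|password|secret)\s*=\s*\S+"
    ),
}


def extract_strings(image: bytes, min_len: int = 6) -> list:
    """Return printable-ASCII runs of at least min_len bytes."""
    pattern = re.compile(rb"[\x20-\x7e]{%d,}" % min_len)
    return [m.group().decode("ascii") for m in pattern.finditer(image)]


def scan_firmware(image: bytes) -> list:
    """Return (indicator_name, matching_string) pairs found in the image."""
    findings = []
    for text in extract_strings(image):
        for name, pattern in INDICATORS.items():
            if pattern.search(text):
                findings.append((name, text))
    return findings


# Hypothetical image fragment for illustration.
image = b"\x00\x01password=admin123\xff\x00-----BEGIN RSA PRIVATE KEY-----\x00"
findings = scan_firmware(image)
```

Because the scan never runs the code, it works offline, but for the same reason it cannot observe secrets that are derived or decrypted only at runtime.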

Packet and flow-level inspection is a common feature of network intrusion detection systems (NIDS), which analyze PCAP files or network traffic in real time. Approaches include statistical feature extraction [46], protocol-specific filtering for protocols such as MQTT [47], and compressed stream embedding using advanced models such as graph neural networks (GNNs) [47]. These approaches strike a good balance between coverage and speed, making them well suited for detecting network-based issues. However, since they primarily analyze packet metadata, they may miss aspects of semantic intent or high-level device logic. For example, ref. [46] discusses edge computing frameworks that allow real-time traffic analysis. However, these approaches struggle with context-sensitive attacks and require more information about the application layer.
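Statistical feature extraction at the flow level can be sketched as follows, assuming each flow is available as a non-empty list of `(timestamp_seconds, size_bytes)` tuples. The chosen features are common examples rather than the feature set of [46].

```python
from statistics import mean, pstdev


def flow_features(packets: list) -> dict:
    """Summarize one flow given (timestamp_seconds, size_bytes) tuples."""
    times = [t for t, _ in packets]
    sizes = [s for _, s in packets]
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    return {
        "packet_count": len(packets),
        "total_bytes": sum(sizes),
        "mean_size": mean(sizes),
        "std_size": pstdev(sizes),
        "mean_gap": mean(gaps) if gaps else 0.0,
        "max_gap": max(gaps) if gaps else 0.0,
    }


features = flow_features([(0.0, 100), (1.0, 200), (3.0, 300)])
```

All of these features are derived from packet metadata alone, which is what keeps the approach fast and also why it can miss application-level intent.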

Time series telemetry analysis uses data such as actuator state changes, energy consumption, or sensor values to understand device behavior and identify anomalies. Researchers increasingly leverage this level of detail for general modeling and predictive anomaly detection [48,49]. For example, ref. [48] discusses edge computing applications that analyze telemetry data to detect behavioral changes, which gives the analysis rich semantic context. However, this approach requires longer observation windows and complex correlation models, which can be challenging for resource-limited IoT devices. Systems such as the TEEs used to protect telemetry processing in [49] are often migrated to edge or cloud infrastructure because of their high computational requirements.
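A simple instance of telemetry-based anomaly detection is a rolling z-score over a fixed window of recent readings. The sketch below is an assumption-laden baseline (window length, warm-up count, and threshold are illustrative) and is much simpler than the correlation models discussed in [48,49]; it mainly shows why memory grows with the observation window.

```python
from collections import deque
from statistics import mean, pstdev


class RollingZScore:
    """Flag readings that deviate strongly from a window of recent values."""

    def __init__(self, window: int = 32, threshold: float = 3.0, warmup: int = 8):
        self.values = deque(maxlen=window)  # memory cost is one float per slot
        self.threshold = threshold
        self.warmup = warmup

    def update(self, value: float) -> bool:
        """Return True when value is anomalous relative to the window."""
        anomalous = False
        if len(self.values) >= self.warmup:
            mu = mean(self.values)
            sigma = pstdev(self.values)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                anomalous = True
        if not anomalous:
            self.values.append(value)  # keep outliers out of the baseline
        return anomalous


detector = RollingZScore(window=16)
# Hypothetical temperature readings around 20 degrees.
normal_flags = [detector.update(v) for v in [20.0, 20.1, 19.9, 20.2, 19.8] * 4]
spike_flag = detector.update(35.0)
```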

Radio frequency (RF) spectrum analysis is critical for detecting problems in wireless networks, especially in the presence of denial of service (DoS) attacks, malicious base stations, or protocol spoofing [50]. These systems analyze raw I/Q signal data or spectrum fingerprints to detect unwanted transmissions or interference. RF detection is protocol-agnostic and can therefore detect threats that are invisible to conventional protocol stacks such as ZigBee and LoRa. For example, ref. [50] describes a TEE-based framework for protecting wireless communications by analyzing RF signals. However, RF analysis is difficult to apply to low-power devices because it is computationally demanding and typically requires specialized hardware.

When choosing the level of detail in the data, trade-offs must be made between latency, interpretability, and hardware feasibility. New hybrid approaches combine simple flow-level inspection with deeper binary or RF analysis to enable immediate action when suspicious events are discovered [50]. For example, a system could start with packet-level monitoring to detect threats and then leverage RF spectrum analysis to more precisely detect wireless attacks. This can improve both efficiency and accuracy.
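The escalation pattern described above, in which inexpensive flow-level checks gate a costlier analysis, can be sketched as a two-stage pipeline. The thresholds, dictionary keys, and the `deep_analyzer` callback are hypothetical placeholders; in practice the second stage might be binary or RF spectrum analysis running on an edge node.

```python
def cheap_flow_check(flow: dict) -> bool:
    """Stage 1: constant-time heuristic on flow metadata (illustrative thresholds)."""
    return flow["packets_per_second"] > 200 or flow["mean_size"] < 40


def tiered_inspect(flow: dict, deep_analyzer) -> str:
    """Run the expensive analyzer only when the cheap stage raises suspicion."""
    if not cheap_flow_check(flow):
        return "pass"
    return "alert" if deep_analyzer(flow) else "cleared"
```

The design choice is that the expensive stage is invoked only for the small fraction of flows that look suspicious, so its cost does not scale with total traffic.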

4.3. Implementation Models and Algorithmic Paradigms

Building on the security-layer and data-granularity perspectives above, this section turns to how security solutions are implemented in practice. We discuss deployment models, which define the placement of defensive measures within the IoT stack, and algorithmic paradigms, which determine how threats are detected and mitigated.

To achieve practical integration of IoT security, it is important to consider not only where defensive measures are implemented but also how their algorithms operate. Deployment models determine the physical and architectural placement of defensive mechanisms within devices, edge nodes, and cloud infrastructure. Algorithmic paradigms define computational strategies for implementing intrusion detection, anomaly detection, and adaptive defenses within resource-constrained environments. Together, these two perspectives form the foundation for the practical implementation of cross-layer IoT security.

4.3.1. Deployment Models

IoT security solutions must adapt to the hardware and deployment environment of edge devices. There are three primary deployment models: on-device deployment, hybrid or collaborative deployment, and cloud-based over-the-air (OTA) delivery. Each type addresses specific operational and security requirements.

With on-device deployment, security logic is implemented directly on a microcontroller (MCU), system-on-chip (SoC), or edge gateway. This allows devices to be protected in real time and with very low latency. This model is best suited for IoT applications that require fast responses, although limited processing power and memory capacity restrict the features it can offer. Firmware integrity checking [51] and lightweight intrusion detection systems (IDS) [47] are two examples of technologies that are often deployed on devices. For example, ref. [51] describes the trend among IoT developers to use ARM TrustZone to secure devices, while ref. [47] describes a trusted execution environment for a lightweight IDS. Newer platforms can leverage hardware features such as ARM TrustZone [52] to isolate critical tasks. These systems provide strong protection but must be optimized for specific hardware to run within limited resources.

Hybrid implementations distribute security tasks between cloud infrastructure and edge devices. Simple or real-time analysis runs locally on the device, while computationally intensive activities (such as graph-based threat detection or advanced analytics) are moved to the cloud. This approach strikes a balance between edge responsiveness and scalable analysis. For example, ref. [46] discusses an edge computing framework that supports local packet filtering, while a cloud-based system [48] performs more complex threat analysis. For centralized correlation, the system can upload PCAP files or extracted features, or local anomaly alerts can trigger cloud-based processing [47]. Hybrid implementations achieve scalability but require secure network connectivity and strong cloud security.

Cloud-based OTA implementations deliver vulnerability scanning, security updates, and anomaly detection through regular cloud updates. This model enables rapid response to new threats, but can be risky if the update channel is not secure. For example, ref. [53] discusses an open TEE framework that supports secure OTA updates. Digital signatures and encrypted sessions are essential to ensure the security of the update chain [50]. OTA technology can also provide updated anomaly detection models for edge devices [49]. However, flaws in the update process may allow attackers to inject malicious code, so a strong authentication mechanism is required.
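The device-side check that an OTA image is authentic before installation can be sketched as below. Note the substitution: the text calls for digital signatures, which are asymmetric, whereas this sketch uses an HMAC-SHA256 tag with a pre-shared key as a symmetric stand-in so that it stays within the Python standard library. The key handling and function names are hypothetical, and a production update chain would use an asymmetric signature scheme so that devices hold no signing secret.

```python
import hashlib
import hmac


def sign_update(image: bytes, key: bytes) -> bytes:
    """Vendor side: compute an authentication tag over the firmware image."""
    return hmac.new(key, image, hashlib.sha256).digest()


def verify_update(image: bytes, tag: bytes, key: bytes) -> bool:
    """Device side: accept the image only if the tag matches."""
    expected = hmac.new(key, image, hashlib.sha256).digest()
    return hmac.compare_digest(expected, tag)  # constant-time comparison


# Hypothetical key and image for illustration only.
demo_key = b"k" * 32
demo_image = b"firmware-v2-payload"
demo_tag = sign_update(demo_image, demo_key)
```

An image modified in transit no longer matches its tag and is rejected before it is written to flash.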

From a hardware perspective, the choice of deployment model is inevitably constrained by the available computational resources on the IoT platform. Consumer devices are typically based on microcontrollers (MCUs) such as ESP32 or STM32, while industrial IoT implementations often use more powerful edge accelerators such as TPUs or NVIDIA Jetson boards. These heterogeneous hardware configurations mean that security architectures cannot be applied uniformly but must be adapted to the device class. In practice, this adaptation often requires pruning, quantization, or similar lightweight optimization of anomaly and intrusion detection models to ensure feasibility within tight memory and power budgets. TinyML frameworks and platforms such as EdgeImpulse [54] demonstrate how compressed inference runtimes can be efficiently executed on MCUs, enabling real-time anomaly detection directly at the edge. This hardware-centric perspective complements the previous discussion of deployment models by showing that practical feasibility depends not only on the location of the security logic (on-device, hybrid, or cloud-based), but also on how it fits within the computational constraints of the target device.
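To illustrate what quantization does to a model, the sketch below applies symmetric 8-bit quantization to a list of floating-point weights: each weight is stored as one signed byte plus a single shared scale, roughly a fourfold reduction relative to 32-bit floats. This is a generic textbook scheme with hypothetical function names; it is not the specific procedure used by any platform cited above.

```python
def quantize_int8(weights: list):
    """Map float weights to integers in [-127, 127] plus one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: list, scale: float) -> list:
    """Recover approximate float weights from the int8 representation."""
    return [q * scale for q in quantized]


weights = [0.5, -1.0, 0.25, 0.0]  # hypothetical layer weights
q_weights, q_scale = quantize_int8(weights)
restored = dequantize(q_weights, q_scale)
```

The reconstruction error of each weight is bounded by half the scale, which is the accuracy cost traded for the smaller memory footprint.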

When choosing a deployment strategy, latency, update frequency, hardware capabilities, and operational considerations must be taken into account. Recent research has explored techniques for dynamically switching between models, including sending complex analytics to the cloud only when local monitoring detects suspicious conditions [50]. This flexible approach maximizes resource utilization while maintaining high security standards.

4.3.2. Algorithm Paradigms in Cyberspace Security Applications

Artificial intelligence (AI) algorithms have markedly improved core cybersecurity functions such as intrusion detection, security posture assessment, and the enhancement of defense strategies. This section discusses three families of algorithms that have proven useful in advancing cybersecurity: machine learning (ML), deep learning (DL), and optimization algorithms.

- Machine Learning Paradigms

Machine learning algorithms are the most widely used in network security because they are easy to implement and computationally inexpensive. They span supervised, unsupervised, semi-supervised, and reinforcement learning. These algorithms are best suited to settings that require fast classification on hardware with limited computing power.

Supervised learning: K-nearest neighbor (K-NN), support vector machine (SVM), naive Bayes (NB), and decision tree (DT) are the most popular intrusion detection algorithms. For example, Al-Omari et al. [55] developed a DT-based intelligent intrusion detection model that achieved 98% accuracy on the UNSW-NB15 dataset by evaluating security variables using the Gini coefficient, which reduces computational time compared to typical machine learning methods. Sapavath et al. [56] used NB to detect cyberattacks in virtualized wireless networks in real time by modeling an adaptive agent system, achieving 99.8% accuracy. The authors of [59] combined SVM with deep learning for intrusion detection and achieved 98.50% accuracy on the CSE-CIC-IDS2018 dataset, showing that this approach is well suited for detecting complex patterns. These models are good at classifying known attack patterns but struggle with high-dimensional data or novel attacks [57].
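The Gini criterion used for decision-tree splits (usually called Gini impurity in the decision-tree literature) can be sketched in a few lines. The example is a generic illustration, not the implementation of ref. [55]; the helper names and the single-feature threshold search are assumptions made for brevity.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels (0.0 means perfectly pure)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_threshold(values, labels):
    """Return (threshold, weighted_gini) of the best split `value <= threshold`."""
    best = (None, gini(labels))
    for t in sorted(set(values))[:-1]:
        left = [l for v, l in zip(values, labels) if v <= t]
        right = [l for v, l in zip(values, labels) if v > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best[1]:
            best = (t, score)
    return best
```

A tree learner repeats this search over every feature and recurses on the two resulting partitions; because the criterion needs only counts and comparisons, both training and inference remain cheap.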

Unsupervised learning: To protect against distributed denial of service (DDoS) attacks, algorithms such as K-Means are used. Gu et al. [58] developed a semi-supervised K-Means algorithm based on hybrid feature selection (SKM-HFS), which achieved the lowest detection latency on datasets such as CICIDS2017. Unsupervised algorithms are well-suited for detecting unusual patterns in unlabeled data, but may not be as accurate in complex attack scenarios [60].
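As a rough illustration of clustering-based anomaly detection, the sketch below fits plain K-Means to unlabeled traffic feature vectors and scores new samples by their distance to the nearest centroid. It is not the SKM-HFS algorithm of ref. [58]: the deterministic initialization, the feature choice, and the function names are assumptions.

```python
import math


def kmeans(points, k, iters=20):
    """Plain K-Means over tuples of floats with a deterministic initialization."""
    ordered = sorted(points)
    # Spread the initial centroids across the sorted points (assumed heuristic).
    centroids = [ordered[(i * (len(ordered) - 1)) // max(k - 1, 1)]
                 for i in range(k)]
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in points:
            idx = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[idx].append(p)
        # Recompute each centroid; keep the old one if its cluster is empty.
        centroids = [
            tuple(sum(c) / len(members) for c in zip(*members))
            if members else centroids[i]
            for i, members in enumerate(clusters)
        ]
    return centroids


def anomaly_score(point, centroids):
    """Distance to the nearest centroid; larger values are more anomalous."""
    return min(math.dist(point, c) for c in centroids)
```

In use, the centroids would be fitted on traffic assumed to be benign, and a threshold on `anomaly_score` (chosen from a validation set) would decide which flows are flagged.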

Semi-supervised learning: Nishiyama et al. [61] developed the SILU algorithm, which uses large amounts of unlabeled data to improve supervised classifiers such as SVMs and Random Forests (RFs). This approach improves detection accuracy and reduces the number of false positives, making it suitable for real-time applications with limited labeled data [62].

Reinforcement Learning (RL): Reinforcement learning is increasingly being used to develop defense methods. For example, Liu et al. [63] used a deep recurrent Q-network (DRQN) to make defense decisions in a stochastic game system. This approach reaches the optimal policy faster than standard methods. Reinforcement learning is well-suited to dynamic situations because it can adapt to changing threats, but it requires a lot of computational power [64].

Representative IoT evidence: Doshi et al. used classic machine learning traffic features to detect consumer IoT DDoS attacks and demonstrated high accuracy of a lightweight model.

- Deep Learning Paradigms

Deep learning algorithms, especially those using neural networks, are well-suited to processing high-dimensional, complex data. This makes them ideal for detecting sophisticated attacks and assessing security posture.

Convolutional Neural Networks (CNNs): CNNs are well suited to detecting cyberattacks in many different domains. Khaw et al. [65] developed a one-dimensional CNN with an autoencoder (AE) for protecting transmission line relays. They achieved 100% accuracy on the OPAL-RT HYPERSIM dataset. Ho et al. [66] developed a CNN-based multi-class classification model that achieved 99.78% accuracy on the CICIDS2017 dataset by fine-tuning hyperparameters through repeated testing. Nazir et al. [67] developed a hybrid CNN-LSTM architecture for IoT threat detection that achieved 99.20% accuracy on the IoTID20 dataset by capturing both spatial and temporal variables. CNNs perform well on structured data but require extensive parameter tuning [68].

Recurrent neural networks (RNNs): Long short-term memory (LSTM) and gated recurrent unit (GRU) networks are RNN variants used to analyze sequential data. Hossain et al. [69] used LSTM to detect SSH and FTP brute force attacks, achieving 99.88% accuracy on CICIDS2017. Ma et al. [70] developed XBiLSTM-CRF for network security entity detection, achieving 90.54% accuracy on the open source NER dataset. Bukhari et al. [71] used a federated learning approach of sparse CNN and bidirectional LSTM (SCNN + Bi-LSTM) to improve defense strategies, achieving 97.80% accuracy on the WSN-DS dataset while maintaining training confidentiality. These models are well suited to capturing temporal relationships but require substantial computational power [72].

Autoencoders (AEs): AEs are used to address the data imbalance problem in attack detection. Kunang et al. [73,74] used an AE for feature discovery and achieved 86.96% accuracy on NSL-KDD. Qazi et al. [75] developed a stacked non-symmetric deep autoencoder (S-NDAE) and achieved 99.65% accuracy on the KDD CUP99 zero-day attack test. AEs are helpful for feature learning but may have difficulties with highly imbalanced datasets [76].

Generative adversarial networks (GANs): GANs augment datasets by generating synthetic samples. Ding et al. [77] developed a tabular auxiliary classifier GAN (TACGAN) and achieved 95.86% accuracy on CICIDS 2017. GANs make models more robust but must be carefully tuned to avoid mode collapse [78].

Representative IoT evidence: Meidan et al.’s N-BaIoT uses deep autoencoders to flag botnet traffic from compromised IoT devices (Mirai/BASHLITE), achieving near-instant detection.

- Optimization Algorithm Paradigms

In the field of cybersecurity, optimization algorithms such as classical methods and swarm intelligence (SI) methods are very important for tuning parameters and selecting features.

Classical optimization methods such as genetic algorithms (GA) and simulated annealing are often used for feature selection. Seth and Chandra [79] combined KNN with modified gray wolf optimization (MGWO) and achieved 99.87% accuracy on the Solaris dataset. Anonymous et al. [74] combined SVM, KNN, RF, DT, LSTM, and ANN with fuzzy clustering to find intruders. They improved feature selection by using fuzzy clustering and achieved 99.10% accuracy on CICIDS2017. These strategies are well suited for simple problems but are not suitable for more complex non-convex problems [80].

Swarm intelligence methods: SI methods such as particle swarm optimization (PSO) and gray wolf optimization (GWO) can improve model performance and feature selection. Kan et al. [81] developed an adaptive PSO-CNN (APSO-CNN) for IoT intrusion detection with an accuracy of 96%. Chohra et al. [82] used the Chameleon model with ensemble learning and achieved an F-value of 97.3% on the IoT-Zeek dataset. SI techniques are well suited for solving multidimensional problems, but their convergence may take a long time [83].

Game theory and stochastic models: Game theory helps improve defense techniques by simulating the interactions between attackers and defenders. Hyder and Govindarasu [84] used game theory to protect smart grids from cyber attacks and reduce the probability of attacks. Bhuiyan et al. [85] developed a risk-free Stackelberg game model that reduced computational time by 71%. These models can help develop strategies, but they must make correct assumptions about the attacker’s behavior [86].
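The leader-follower logic behind Stackelberg security games can be illustrated with a pure-strategy toy example: the defender commits to an action, the attacker observes it and best-responds, and the defender chooses the commitment that maximizes its own resulting payoff. This is a deliberately simplified sketch, not the model of ref. [85]; the payoff matrices are hypothetical, and practical formulations usually optimize over mixed strategies.

```python
def stackelberg_pure(defender_payoff, attacker_payoff):
    """Rows are defender actions, columns are attacker actions.

    Returns (defender_action, attacker_response, defender_utility).
    """
    best = None
    for d, attacker_row in enumerate(attacker_payoff):
        # The attacker observes the commitment d and best-responds to it.
        a = max(range(len(attacker_row)), key=lambda j: attacker_row[j])
        utility = defender_payoff[d][a]
        if best is None or utility > best[2]:
            best = (d, a, utility)
    return best


# Hypothetical two-asset example: the defender protects asset 0 or asset 1.
defender = [[0, -5], [-10, 0]]
attacker = [[0, 5], [10, 0]]
commitment = stackelberg_pure(defender, attacker)
```

In this example the defender protects the more valuable asset 0 and accepts the smaller loss on asset 1, which is the behavior the attacker-modeling assumption is meant to capture.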

Benchmark IoT evidence: Benmalek et al. hybridize an enhanced PSO with deep models for real-time IoT cyber-attack detection on a benchmark IoT dataset, illustrating the role of metaheuristics in hyperparameter tuning and feature selection under resource constraints.

Table 3 summarizes the reported performance of different algorithms in intrusion detection, security scenario assessment, and defense optimization, together with their datasets, accuracy, and main advantages.

Table 3.

Performance Comparison of AI Algorithms in Cyberspace Security.

As summarized in Table 3, three themes emerge: first, classical ML baselines provide very strong accuracy–latency tradeoffs on structured datasets, though they fail under conditions of concept drift and novel attack families; second, DL models discover spatial–temporal structure and frequently outperform ML on complex traffic and telemetry with higher compute and tuning effort—thus good for gateways and edge nodes rather than tiny MCUs; third, optimization and game-theoretic methods complement detection by feature selection, hyper-parameter tuning or defense allocation increasing robustness at the cost of increased design complexity. These lead us to propose hybrid pipelines motivated by lightweight on-device screening paired with deeper edge/cloud analytics.

Machine learning algorithms have gained attention in the attack detection community due to their simplicity and their ability to perform well in resource-constrained environments. SVM + DL [59] and other hybrid methods are more robust on complex datasets but require more computational power. Deep learning algorithms such as CNN and LSTM, and hybrid models such as CNN + LSTM [67] and SCNN + Bi-LSTM [71], perform better on complex and imbalanced datasets. They can be used in IoT and wireless sensor networks but require high computational power and sophisticated parameter tuning [66,69]. Optimization methods such as SI and fuzzy clustering [74] can improve feature selection and strategic decision making. Models such as APSO-CNN and Stackelberg games show potential in IoT and smart grid applications [81,85]. The choice of algorithm depends on the specific security task, the characteristics of the dataset, and the computational limits of the target platform. Future research should explore hybrid models that combine the strengths of ML, DL, and optimization techniques to address emerging threats and data imbalance. This trend of adapting classical ML/DL paradigms to resource-constrained IoT devices has also been recognized in recent surveys, which emphasize that IoT-specific constraints (limited memory, energy, and heterogeneity) necessitate tailored versions of otherwise general algorithms.

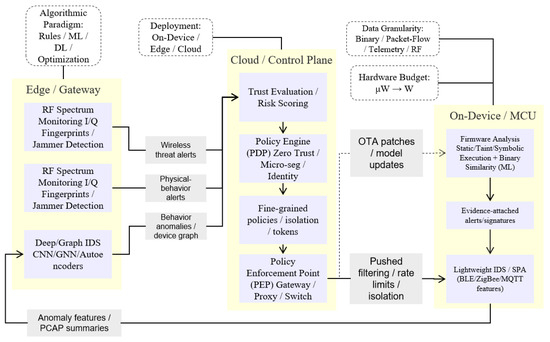

Figure 2 brings together the entire pipeline across On-Device/MCU → Edge/Gateway → Cloud/Control Plane to make the implementation view concrete. Mechanisms are grouped by tier according to where they most naturally execute: firmware analysis and lightweight IDS on constrained MCUs; deep/graph IDS, sensor–actuator consistency checks, and RF monitoring at the edge; and trust evaluation, policy decision, and enforcement in the control plane. Solid arrows denote data flow (evidence-attached alerts, anomaly features, behavioral/RF signals). The return path carries control and feedback from trust and policy back to enforcement (fine-grained tokens, rate limits, isolation) and on to devices as OTA patches or model updates. MCU-side and cloud-side blocks are stacked vertically to minimize horizontal span while keeping the left-to-right logic from signal collection through decision to action. The four design axes (data granularity, algorithmic paradigm, deployment, hardware budget) are called out with dotted boxes so that a reader can immediately relate methods to where they execute and why.

Figure 2.

Cross-layer IoT security pipeline with taxonomy overlay. Arrows denote data or control flow between modules (e.g., anomaly features flowing from edge devices to cloud analysis, or OTA updates pushed back to MCUs). Colored boxes highlight the taxonomy dimensions: yellow = deployment location (edge, cloud, MCU), blue = trust/policy components, and purple = analysis/defense functions. This visual coding emphasizes how algorithmic paradigms, deployment constraints, data granularities, and hardware budgets interact in a layered security design.

5. Firmware-Level Security

Academic and industrial circles have, in recent years, directed significant attention toward the security evaluation of embedded firmware. Techniques for vulnerability discovery in conventional computer systems typically prove inapplicable to firmware assessment owing to the considerable diversity among embedded devices, their specialized functionalities, and their constrained execution contexts. Static analysis and symbolic execution represent two principal methodologies for program examination that can identify security flaws by analyzing a program’s architecture and logic (without running the code). This renders them quite valuable for assessing the security of embedded firmware.

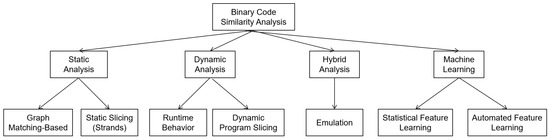

Figure 3 organizes the typical firmware similarity analysis techniques applied in vulnerability detection to give an overview of the technical landscape. The techniques are grouped by type of analysis (static, dynamic, hybrid, and machine learning) and compared in terms of accuracy, coverage, runtime applicability, and applicability to binary-only firmware.

Figure 3.

Classification of Firmware-Level Binary Similarity Analysis Techniques by Static, Dynamic, Hybrid and ML methods. Arrows indicate hierarchical relationships, where broader categories (e.g., static, dynamic, hybrid, and ML-based analysis) decompose into sub-techniques (e.g., graph matching, program slicing, emulation, or feature learning). The diagram is intended to show methodological groupings rather than temporal or directional process flows.

Static analysis examines the structure and logic of firmware binary code to find potential security vulnerabilities, such as buffer overflows or hard-coded passwords. It does not require a runtime environment, making it well suited for embedded devices. However, it can produce false positives due to the lack of context. Recent research has found new algorithms and techniques to make it more efficient and accurate.

Symbolic execution checks for vulnerabilities by exploring the feasible execution paths of a program with symbolic rather than concrete inputs. It is particularly suitable for finding complex logic errors. However, firmware analysis faces challenges due to path explosion and the difficulty of modeling interactions with peripheral devices. Recent research has improved performance through code slicing and domain knowledge.

Recent research has made firmware security analysis more automated and accurate. For example, a framework for detecting application privacy leaks uses static analysis to identify privacy issues in IoT applications. Hardware-based fuzz testing uses hybrid modeling to improve path exploration. Reverse engineering methods for smart home IoT firmware can discover network vulnerabilities, while tracing hardware information flows helps analyze the security of firmware-hardware interaction. Together, these advances make vulnerability discovery in embedded device firmware more accurate and more scalable.

5.1. Static and Symbolic Analysis Techniques

This section covers the ideas, applications, current research, and limitations of static and symbolic analysis methods. A comprehensive evaluation will be conducted in this section, focusing on their usefulness and associated challenges in analyzing the security of embedded firmware.

5.1.1. Static Analysis Techniques

Static analysis techniques examine the binary code or intermediate representation of a program to determine control flow, data flow, and semantic information. They can also discover potential security vulnerabilities such as buffer overflows, command injection, or hard-coded credential backdoors. Static analysis does not require the program to be running, so it is suitable for embedded devices with limited resources, especially when the firmware source code is not available or has limited documentation. The typical steps of firmware security analysis are as follows:

- Firmware unpacking: Extract the executable code or application from the firmware image using tools such as Binwalk [94] or Binary Analysis Next Generation (BANG) [95]. These tools identify file systems, compression schemes, and binary structures in order to unpack the firmware into analyzable binaries.

- Code conversion: Convert the binary code to assembly language or an intermediate representation such as VEX or LLVM-IR using reverse engineering tools such as IDA Pro [96] or Ghidra [97]. These tools support many instruction set architectures (ISAs), such as ARM, MIPS, and x86, and can recover function call relationships, string references, and control flow graphs (CFGs).

- Program analysis: Apply static analysis methods such as fuzzy hashing [98], taint analysis, or control flow analysis to identify program features and potential security vulnerabilities. For example, fuzzy hashing can find reused third-party libraries or code snippets that are known to be vulnerable, whereas taint analysis tracks the flow of data from user input to sensitive operations to identify command injection or buffer overflows.

- Vulnerability detection: Apply targeted techniques to search for backdoors, memory corruption, or other security issues by analyzing program behavior.
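The taint-analysis step can be illustrated on a toy three-address representation. The sketch below is a simplification under stated assumptions: it handles straight-line code only (no branches, aliasing, or inter-procedural flows), and the instruction format, the `SOURCES`/`SINKS` sets, and the function name are hypothetical rather than taken from any cited tool.

```python
SOURCES = {"recv", "getenv"}   # calls that return attacker-controlled data
SINKS = {"system", "strcpy"}   # calls that are dangerous with tainted arguments


def find_tainted_sinks(instructions):
    """instructions: list of (dest, op, args). Returns [(index, sink_name)]."""
    tainted = set()
    findings = []
    for i, (dest, op, args) in enumerate(instructions):
        arg_tainted = any(a in tainted for a in args)
        if op in SINKS and arg_tainted:
            findings.append((i, op))
        if op in SOURCES or (op not in SINKS and arg_tainted):
            # Taint flows from sources and through ordinary operations.
            if dest is not None:
                tainted.add(dest)
        elif dest is not None:
            # The destination is overwritten with an untainted value.
            tainted.discard(dest)
    return findings


program = [
    ("buf", "recv", ["sock"]),             # buf is attacker-controlled
    ("cmd", "concat", ["prefix", "buf"]),  # taint propagates into cmd
    (None, "system", ["cmd"]),             # tainted data reaches a sink
    ("msg", "const", []),
    (None, "system", ["msg"]),             # constant argument, not reported
]
findings = find_tainted_sinks(program)
```

Only the first `system` call is reported, because it is the only sink reached by data derived from `recv`.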

Prior research and surveys have consistently demonstrated that static analysis is highly effective in detecting vulnerabilities in embedded device firmware, particularly in uncovering backdoors and taint-based attacks [99,100,101,102,103,104,105,106].

Here are some examples of research:

- Costin et al. [99] developed a method for analyzing large numbers of firmware files using fuzzy hashing. The method finds backdoor vulnerabilities such as weak keys and hardcoded credentials by finding similarities between firmware files. By examining 693 firmware images, 38 known vulnerable files were identified. However, the file-level granularity makes the method less precise, and the additional effort makes it unsuitable for large-scale firmware.

- Stringer [100] developed a method for comparing static data in commercial off-the-shelf (COTS) firmware to detect hardcoded credentials and undocumented functions. The method finds potential backdoors by comparing static strings in binary files. The method is well suited for large-scale analysis, but has a high false positive rate.

- HumIDIFy [101] proposed a semi-supervised learning method for finding binary functions in firmware and comparing them to predicted functions to detect hidden functions or backdoors. Tests show that the analysis of COTS firmware has a low false positive rate, although the method requires a high-quality training dataset.

- Firmalice [102]: Uses static analysis to build a program dependency graph (PDG), computes authentication slices from entry points to privileged operations, and applies symbolic execution to check the path constraints along those slices in order to find backdoors that bypass authentication. The method is very accurate, but only works for certain types of backdoors and is difficult to apply to other types of vulnerabilities.

- DTaint [103]: Proposes a taint-analysis method for vulnerability detection that traces data from sensitive inputs (sources) to dangerous functions (sinks) by building intra-procedural and inter-procedural data flow graphs, and finds buffer overflows and command injections. DTaint’s bottom-up analysis detects vulnerabilities more accurately, but takes longer for complex firmware.

- SainT [104]: Tracks sensitive data in IoT applications (such as device status or user information) as it flows to external outputs and finds possible data leaks. SainT works well for Type I firmware, but does not work well for Type II and Type III firmware because these formats are too complex.

- Zheng et al. [105]: They combined protocol parsing with static taint analysis to find protocol fields and key functions in firmware, thereby accelerating the search for taint-based vulnerabilities. This strategy reduces the amount of analysis by selecting appropriate keywords, thereby improving efficiency.

- Karonte [106] proposed a multi-binary static analysis method to simulate the communication between firmware components (such as web servers and background services) to discover insecure data transmission or logic vulnerabilities. Karonte solves some of the problems of single-component analysis, but needs to better handle more complex firmware.

- PrivacyGuard [107]: A framework for detecting privacy leaks in applications. It uses static analysis to check trigger-condition-action rules in IoT applications to identify privacy risks such as location tracking and activity profiling. The system is well suited for evaluating large IoT application datasets and significantly improves the level of privacy protection [107].

- Smart Home IoT Firmware Analysis and Exploitation Framework [108]: The framework supports reverse analysis of smart home IoT firmware to identify network weaknesses (such as unencrypted communication and weak authentication) and missing protection mechanisms (such as NX and Stack Canary). This helps in analyzing the security of smart home devices [108].

- Hardware Information Flow Tracing [109]: A simple path-based hardware information flow tracing method is proposed. It uses static analysis to examine firmware-hardware interactions and detect potential information leaks and dangerous operations. The method works well on low-resource devices and is fairly accurate [109].

One of the advantages of static analysis is that it does not require a runtime environment. This makes it suitable for embedded devices with limited resources and can find many types of vulnerabilities. However, it also brings some problems to firmware analysis:

High false positive rate: Static analysis often produces false positive results because firmware code is complex and diverse and lacks sufficient context information. For example, fuzzy hashing may mistakenly mark code that has the same functionality as the vulnerable code as vulnerable [99].

Not very helpful for Type II and Type III firmware: Type I firmware is usually based on standard file systems such as Squashfs, which are easy to decompress and analyze. On the other hand, Type II and Type III firmware lack a standard format or clear loading standards, which makes it difficult for static analysis tools to find code segments and data structures [110].

Insufficient analysis of the interactions between different parts of the firmware: Firmware is usually composed of many parts that work together, such as a web interface and background functions. Current static analysis methods only consider one component at a time, ignoring the interactions and data flows between components that may lead to logical vulnerabilities [106].

Computational complexity: Static analyses are difficult to perform at scale because they require processing complex control flow or data flow graphs and are therefore inefficient [103].

5.1.2. Symbolic Execution Techniques

Symbolic execution treats program inputs as symbolic values and explores the different execution paths that these inputs can drive, recording the branch conditions along each path as path constraints. A constraint solver (such as Z3) then checks the satisfiability of those constraints to decide whether a vulnerable state is reachable. This allows precise reasoning about how a program runs, especially for detecting hard-to-find vulnerabilities such as memory corruption and authentication bypass backdoors. On the other hand, path explosion and environment dependencies make the analysis of embedded firmware more difficult.

When analyzing firmware, the typical steps of symbolic execution are as follows:

- Firmware preprocessing: As with static analysis, the executable code is extracted from the firmware and converted into an intermediate representation, such as LLVM-IR or VEX.

- Input symbolization: The program input (e.g., user input or peripheral input) is converted into symbolic variables.

- Path analysis: The paths of the program are explored using a symbolic execution engine (e.g., KLEE [111] or angr [112]), and the branch conditions along each path are collected as path constraints.

- Constraint solving: A constraint solver is used to check whether the input can trigger the vulnerability by checking the satisfiability of the path constraints.
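The steps above can be sketched with a toy path explorer. The example is illustrative only and makes strong simplifying assumptions: the program is a hand-written branch tree over a single symbolic input byte, and a brute-force search over the 256 possible values stands in for an SMT solver such as Z3. None of the names correspond to the APIs of KLEE or angr.

```python
def explore(node, constraints=()):
    """Yield (label, path_constraints) for every path through the branch tree."""
    if node[0] == "leaf":
        yield node[1], constraints
        return
    _, predicate, then_node, else_node = node
    yield from explore(then_node, constraints + ((predicate, True),))
    yield from explore(else_node, constraints + ((predicate, False),))


def solve(constraints, domain=range(256)):
    """Brute-force stand-in for a solver: a satisfying input byte or None."""
    for x in domain:
        if all(predicate(x) == wanted for predicate, wanted in constraints):
            return x
    return None


def find_inputs(program, target):
    """Return one concrete witness per feasible path that ends in `target`."""
    witnesses = []
    for label, constraints in explore(program):
        if label == target:
            witness = solve(constraints)
            if witness is not None:
                witnesses.append(witness)
    return witnesses


# Hypothetical firmware routine, written as ("branch", cond, then, else) nodes.
program = ("branch", lambda x: x > 100,
           ("branch", lambda x: x % 7 == 0,
            ("leaf", "overflow"), ("leaf", "ok")),
           ("branch", lambda x: x > 200,  # infeasible together with x <= 100
            ("leaf", "overflow"), ("leaf", "ok")))
witnesses = find_inputs(program, "overflow")
```

The second `overflow` leaf is syntactically present but unreachable, so no witness is produced for it; discarding such infeasible paths is what distinguishes this approach from purely syntactic matching. The number of paths doubles with every branch, which is the path explosion problem in miniature.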

When analyzing embedded firmware, symbolic execution is often used to investigate specific hardware types or vulnerabilities. Here are some research examples:

- Avatar [113]: A hybrid execution framework that combines symbolic execution with full-system emulation. It uses S2E [114] as the symbolic execution engine, dynamically intercepts emulation events (such as memory accesses or instruction execution), and selectively symbolizes important code segments to find vulnerabilities. This approach works for Type I, II, and III firmware, but does not support all peripherals.

- FIE [115]: A symbolic execution tool for MSP430 microcontrollers, built on KLEE, that models memory and interrupt operations to find security vulnerabilities. FIE only supports the MSP430, and its analysis remains limited by path explosion.

- FirmUSB [103]: A framework for analyzing USB controller firmware on the 8051/52 architecture that leverages domain knowledge and symbolic execution to build functional models and find BadUSB vulnerabilities. By limiting the scope of symbolic execution, FirmUSB is seven times more efficient than conventional symbolic execution.

- Inception [116]: Created a symbolic virtual machine based on KLEE to transfer firmware code to LLVM-IR to find memory leaks in ARM Cortex-M3 firmware. Inception can analyze assembly and library functions, but it does not work well for complex firmware.

- Gerbil [117]: Searches for privilege separation vulnerabilities by using backward slicing to find potentially vulnerable code regions and combining symbolic execution to search deep paths. Gerbil avoids path explosion by skipping complex library functions, but requires rich domain knowledge.

- FIoT [118]: Uses static analysis and symbolic execution to build firmware control flow graphs to represent the paths of critical function inputs. Dynamic fuzzing is also used to find memory corruption vulnerabilities [119]. FIoT limits the scope of analysis but has difficulties in managing peripheral information.

- HD-FUZZ [120]: Proposes a hardware-based firmware fuzzing framework that combines hybrid MMIO modeling with symbolic execution to improve path exploration efficiency. This significantly increases the number of vulnerabilities found in the firmware of small embedded devices [120].

The strengths of symbolic execution lie in its high accuracy and ability to find complex logic errors. However, it is not suitable for all situations for the following reasons:

- Path explosion: Firmware code with complex control flows and loops can generate exponentially many paths, placing higher demands on computer performance [115].

- Peripheral dependencies: Embedded firmware often works with peripherals such as MMIO or interrupts, which are difficult for symbolic execution engines to model correctly. This can cause the analysis to abort or lead to incorrect conclusions [113].

- Complex environment setup: Symbolic execution requires complex tools such as disassemblers, instrumentation tools, and constraint solvers, which makes it difficult to use on resource-constrained embedded devices [117].

- Limitations of constraint solving: Constraints imposed by cryptographic algorithms or complex hash functions in firmware are sometimes difficult to solve, reducing its practicality [103].

5.1.3. Analysis and Evaluation

Static analysis and symbolic execution each have their own advantages and disadvantages when analyzing embedded firmware security [121]. Table 4 summarizes their technical characteristics and some typical research results.

Table 4.

Comparison of Static and Symbolic Analysis Techniques.

Applicability: Static analysis is well suited for analyzing large amounts of firmware, especially Type I firmware, as it is independent of the runtime environment. However, it struggles with Type II and Type III firmware, which require more robust base address detection and file format analysis [110]. Symbolic execution works well on specific platforms, such as MSP430 or 8051, but is less effective when broad coverage across platforms is required.

Accuracy and false positives: Static analysis suffers from high false positive rates, especially when using coarse-grained methods such as fuzzy hashing [99]. Symbolic execution produces fewer false positives through detailed path analysis, but may miss vulnerabilities due to path explosion or peripheral emulation issues [115].

Efficiency: The computational cost of static analysis increases significantly with the size of the firmware, so feature extraction and matching methods must be optimized [103]. The efficiency of symbolic execution is limited by the number of paths and the difficulty of solving the resulting constraints, so slicing or domain knowledge is required to simplify the analysis [117].

Scalability: Static analysis has made great progress in analyzing interactions between multiple binaries (e.g., Karonte [106]), but analyzing the complexity between components remains difficult. Hardware emulation and environment configuration limit the scalability of symbolic execution, thus requiring lightweight execution frameworks.

5.1.4. Future Improvements

Future improvements to firmware security analysis should focus on supporting non-standard formats (Type II and Type III) through robust base address detection and file format analysis techniques, simplifying the processing of diverse binaries. Static analysis frameworks can be extended to capture interactions between firmware components in real time, thereby detecting cross-module logic vulnerabilities [125]. Furthermore, the efficiency of symbolic execution must be improved through methods such as code slicing, selective symbolization, and domain-specific constraints to minimize the challenges posed by path explosion and peripheral emulation [126]. Finally, combining static and symbolic methods with dynamic testing methods such as fuzz testing is a promising direction, as runtime feedback can improve the accuracy of static analysis and guide symbolic path exploration to high-impact execution traces.

5.2. Machine Learning-Assisted Binary Similarity Analysis

Cui et al. [124] pointed out that many firmware updates contain third-party libraries that have known security vulnerabilities that persist for years. Their study found that 80.4% of vendor-provided firmware had known vulnerabilities. This poses a significant problem for embedded systems that control safety-critical infrastructure. The Mirai botnet [127] demonstrated that exploitation of such vulnerabilities could cause widespread disruption of public systems, such as a distributed denial of service (DDoS) attack against DNS servers.

Finding vulnerabilities in embedded systems is difficult because they use many different hardware platforms, rely on custom implementations, and run in constrained environments. Dynamic testing tools are widely available for desktop software, but embedded firmware often lacks accessible source code, a standardized instruction set architecture (ISA), and consistent compilation parameters [122], which renders traditional tools largely ineffective. Researchers have increasingly used binary similarity analysis to find known vulnerabilities in firmware and have made significant progress, although the same three factors also make binary similarity techniques difficult to apply to embedded firmware [128,129,130,131].

This section focuses on how machine learning supports binary similarity analysis for finding firmware vulnerabilities. It reviews prominent methods proposed between 2015 and 2024 and published in leading security and software engineering venues (such as CCS, NDSS, RAID, ASIACCS, DIMVA, FSE, ASE, ICSE, TSE, ESORICS, IEEE Access, and arXiv), and analyzes them within a unified four-stage framework. The discussion covers the technical characteristics, datasets, CVE (Common Vulnerabilities and Exposures) search performance, and computational complexity of these methods, and closes with open research issues.

5.2.1. Necessity of ML-Assisted Binary Similarity Analysis

- Why binary similarity is needed at the firmware level.

Firmware binaries are stripped, optimized, and compiled for diverse ISAs, so a single vulnerable routine appears in many surface forms; purely syntactic signatures and naïve graph matching therefore miss semantically equivalent functions compiled under different settings [123]. Graph-based embeddings over attributed control-flow graphs (ACFGs) are designed to preserve structural/relational semantics across such transformations, making similarity search viable when syntax diverges [130]. Hand-crafted statistical descriptors over ACFGs were the first step toward this robustness but remain sensitive to inlining and compiler idiosyncrasies, which motivates learning richer representations [113].

- Closing the gap between breadth and evidence.

Static analyzers scale but often lack behavioral evidence and thus inflate analyst workload; in contrast, symbolic execution yields precise witnesses but struggles with path explosion and incomplete models of peripherals [132]. Using learned similarity as a front-end turns the global hunt into a nearest-neighbor retrieval problem that can surface high-yield candidate functions quickly, after which targeted slicing or feasibility checks can attach concrete, auditable artifacts to each alert. PDG-driven authentication slicing exemplifies how post-retrieval evidence can be produced for security-critical flows [102].

- What makes ML similarity robust in practice.

ACFG embeddings trained on diversified builds learn invariants that survive ISA and optimization variance, enabling cross-architecture matching at scale [130]. Token/sequence models learn instruction-level semantics and can remain useful even when control-flow is distorted, complementing graph approaches in obfuscated or flattened binaries [133]. Self-attention over token streams further improves the model’s ability to focus on security-relevant motifs such as bounds checks and sanitizer calls [134]. Where syntax diverges dramatically, cross-ISA alignment at the basic-block level can be learned by treating translation as a sequence-to-sequence task, which keeps semantically equivalent blocks close even across architectures [135]. When static evidence is insufficient, micro-executions provide short semantic trajectories that help disambiguate look-alikes during retrieval and ranking [136].

- Supply chain reuse and fleet-wide recall.

When a CVE is disclosed, the operational question is where else the same or near-same code landed across vendor forks and backports; ML similarity helps enumerate these variants and makes large-fleet recall feasible [137]. Aligning a matched binary to its source repository and patch lineage turns findings into actionable SBOM updates and targeted remediation plans [138].

- How this complements traditional dynamic testing.

Emulation-based firmware testing remains indispensable but is often tied to specific device classes and requires extensive environment modeling, which limits throughput on large vendor corpora; similarity-guided triage can prioritize where emulation time is best spent [131]. Semi-emulation frameworks reduce modeling overhead but still constrain the set of peripherals and firmware families that can be covered quickly; again, learned retrieval provides high-probability targets to feed these pipelines [139]. Firmware migration techniques extend dynamic testing by transplanting code into instrumented environments, yet the migration effort remains significant; similarity search can narrow the set of functions whose migration yields maximal payoff [140].

5.2.2. ML-Assisted Binary Similarity Workflow

- Stage 1—Acquisition and normalization.

Firmware images are unpacked to recover file systems and binaries; functions are disassembled and, where possible, lifted to an IR to harmonize instruction semantics across toolchains. A multi-view artifact is built per function: token sequences, basic-block boundaries, and program graphs such as CFGs and PDGs. Because inlining erodes function boundaries, a pre-processing pass that detects or reconstructs inlined regions improves downstream matching and reduces systematic false negatives on utility routines [141].
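The normalization step in Stage 1 can be illustrated with a minimal sketch. It assumes instructions have already been disassembled into text lines; the placeholder tokens `IMM` and `MEM`, the regular expressions, and the function names are illustrative assumptions, not the scheme of any surveyed tool.

```python
import re

# Keeps bracketed memory operands together; splits everything else on commas/whitespace.
_OPERAND = re.compile(r"\[[^\]]*\]|[^,\s]+")
# Decimal or hexadecimal literal (input is lower-cased before matching).
_IMMEDIATE = re.compile(r"^-?(?:0x[0-9a-f]+|\d+)$")


def normalize_instruction(text: str) -> list[str]:
    """Turn one disassembled line into tokens with build-specific operands abstracted."""
    parts = text.strip().lower().split(None, 1)
    if not parts:
        return []
    tokens = [parts[0]]                      # mnemonic is kept as-is
    rest = parts[1] if len(parts) > 1 else ""
    for operand in _OPERAND.findall(rest):
        operand = operand.lstrip("#$")       # drop ARM/MIPS-style sigils
        if operand.startswith("["):
            tokens.append("MEM")             # hypothetical placeholder for memory operands
        elif _IMMEDIATE.match(operand):
            tokens.append("IMM")             # hypothetical placeholder for immediates
        else:
            tokens.append(operand)
    return tokens


def normalize_function(instructions: list[str]) -> list[str]:
    """Flatten a function's instructions into one normalized token sequence."""
    return [tok for ins in instructions for tok in normalize_instruction(ins)]
```

Abstracting immediates and memory operands means two builds that differ only in stack offsets or constant encodings produce the same token sequence. A real pipeline would more likely normalize at the IR level after lifting.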

- Stage 2—Feature extraction.

Each function is given a multi-view representation that jointly draws on three kinds of signals. (i) Lightweight statistical descriptors over graphs (e.g., ACFG constant histograms, instruction counts, and degree profiles) serve as fast, interpretable pre-filters [113]. (ii) Dynamic/semantic signals from partial emulation yield short traces (library-call sequences, I/O pairs, value evolution) that stabilize matching against compiler/inliner variance and supply high-quality cues for later verification [142]. (iii) Learned representations generalize across ISAs and builds: GNN embeddings over ACFGs for structure-aware matching [130], distributional/token embeddings that retain instruction-level semantics under moderate CFG distortion [133], self-attention that emphasizes vulnerability-relevant motifs such as bounds checks and sanitizers [134], cross-ISA basic-block alignment via neural machine translation when opcode vocabularies diverge strongly [135], and micro-execution-derived trajectories that disambiguate near-neighbors during ranking [136]. The views are combined by early fusion (feature concatenation) or late fusion (calibrated ensembling), and the model is trained on diversified architectures and optimization levels with hard-negative mining so that it collapses benign compiler drift while preserving differences between patched and vulnerable variants. In inline- or obfuscation-heavy firmware, the mix is biased toward token/attention features and dynamic traces, optionally preceded by inline-recovery preprocessing, to maintain recall without sacrificing precision.
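Late fusion can be sketched as a weighted mean of per-view similarity scores. The sketch assumes each view already emits a calibrated score in [0, 1]; the view names and both weight profiles are hypothetical values chosen for illustration, not settings reported in the surveyed work.

```python
# Hypothetical weight profiles; real values would be tuned on held-out data.
DEFAULT_WEIGHTS = {"stat": 0.2, "graph": 0.4, "token": 0.3, "trace": 0.1}
# Profile biased toward token and dynamic-trace views for obfuscated or inline-heavy builds.
OBFUSCATED_WEIGHTS = {"stat": 0.1, "graph": 0.1, "token": 0.5, "trace": 0.3}


def late_fusion(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted mean of per-view scores, renormalized over the views actually present."""
    used = {view: w for view, w in weights.items() if view in scores and w > 0}
    total = sum(used.values())
    if total == 0:
        raise ValueError("no weighted view available for this function pair")
    return sum(scores[view] * w for view, w in used.items()) / total
```

Renormalizing over the available views lets the same function handle pairs for which no dynamic trace could be collected. For a pair whose CFG is distorted (low graph score, high token score), the obfuscation profile yields a higher fused score than the default profile.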

- Stage 3—Representation and retrieval.

Functions are embedded into a vector space or encoded as structured signatures; approximate nearest-neighbor indexes enable sub-linear retrieval on large corpora. During training, hard-negative mining—near-misses produced by different compilers or opt-levels for the same routine—forces the model to collapse benign compiler drift while preserving discriminative cues that separate patched from vulnerable variants [143]. Calibrating similarity scores stabilizes thresholds across firmware families so that ranking quality translates into predictable analyst effort [144].
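The retrieval and hard-negative steps can be sketched with exact cosine search. This is a brute-force stand-in: on a large corpus the linear scan would be replaced by an approximate nearest-neighbor index. The function names and the label dictionary are assumptions made for the example.

```python
import heapq
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: list[float], corpus: dict[str, list[float]], k: int) -> list[tuple[str, float]]:
    """Exact top-k by cosine similarity (linear scan over the corpus)."""
    scored = ((cosine(query, vec), name) for name, vec in corpus.items())
    return [(name, score) for score, name in heapq.nlargest(k, scored)]


def hardest_negative(anchor, corpus, labels, anchor_label):
    """Most similar embedding whose source routine differs from the anchor's."""
    negatives = {n: v for n, v in corpus.items() if labels[n] != anchor_label}
    hits = top_k(anchor, negatives, 1)
    return hits[0][0] if hits else None
```

During training, the item returned by `hardest_negative` is the one the model currently confuses most with the anchor, so it contributes the most informative gradient in a contrastive or triplet objective.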

- Stage 4—Triage, attribution, and verification.

Top-k candidates are clustered to remove near-duplicates and ranked under a budget-aware objective that minimizes time-to-first-vulnerability. PDG slicing from entry points to privileged operations can then attach concrete paths that indicate likely bypasses in authentication or authorization logic [102]. Targeted symbolic checks validate path feasibility on a small set of high-scoring functions, providing inputs or states that witness the issue without incurring whole-program path explosion. When provenance matters, source/patch alignment maps the binary hit to a repository and patch lineage for SBOM updates and coordinated remediation [138].
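One way to make the budget-aware ranking concrete is a greedy ordering by score per unit verification cost. The sketch below is a common heuristic, not the objective of a specific surveyed system; the `Candidate` fields, the cluster identifiers, and the cost estimates are assumed inputs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    score: float    # calibrated similarity, treated as a rough hit probability
    cost: float     # estimated verification effort (e.g., analyst minutes), must be > 0
    cluster: str    # near-duplicate cluster id from a prior clustering step


def triage(candidates: list[Candidate], budget: float) -> list[Candidate]:
    """Keep the best candidate per cluster, then schedule by score/cost within the budget."""
    best: dict[str, Candidate] = {}
    for cand in candidates:
        if cand.cost <= 0:
            raise ValueError(f"non-positive cost for {cand.name}")
        kept = best.get(cand.cluster)
        if kept is None or cand.score > kept.score:
            best[cand.cluster] = cand
    ordered = sorted(best.values(), key=lambda c: c.score / c.cost, reverse=True)
    plan, spent = [], 0.0
    for cand in ordered:
        if spent + cand.cost <= budget:   # skip items that no longer fit
            plan.append(cand)
            spent += cand.cost
    return plan
```

Checking cheap, likely candidates first tends to shorten the expected time until the first confirmed finding, which is why the ordering uses the ratio rather than the raw score.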

- Design choices that materially improve outcomes.

Training on mixed architectures and compiler/opt-level variants is necessary to achieve cross-variant robustness in graph embeddings; models trained narrowly tend to overfit to build idiosyncrasies and collapse out-of-domain [130]. Inline-heavy builds benefit from an inline-recovery step before representation learning; without it, token and graph models systematically miss small utility routines implicated in many bugs [141]. Discriminating patched from vulnerable near-duplicates requires exposing the learner to such pairs and backing retrieval with a verification gate that checks for the presence of the specific guard or sanitizer introduced by the fix, which reduces false positives during patch cycles [145]. Finally, leakage-safe evaluation must split by project/version rather than at the function level to avoid inflating scores through microscopic code duplication across train and test [143].
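Leakage-safe splitting can be sketched by assigning whole projects to one side of the split. The example hashes the project name so the assignment is deterministic; the tuple layout `(project, version, function_id)` and the function name are assumptions for illustration.

```python
import hashlib


def split_by_project(samples, test_fraction=0.2):
    """Assign every sample of a project to the same side of the train/test split.

    samples: iterable of (project, version, function_id) tuples.
    """
    train, test = [], []
    for sample in samples:
        project = sample[0]
        digest = hashlib.sha256(project.encode("utf-8")).digest()
        bucket = int.from_bytes(digest[:8], "big") / 2**64   # stable value in [0, 1)
        (test if bucket < test_fraction else train).append(sample)
    return train, test
```

Because the bucket depends only on the project name, all versions and functions of a project land together, and rerunning the split on a grown corpus never moves an existing project across the boundary. The realized test share only approximates `test_fraction` when the number of projects is small.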

5.2.3. Technical Challenges (Root Causes, Symptoms, and Single-Source Mitigations)

Applying machine learning to binary similarity analysis raises several recurring challenges, listed below with their root causes, symptoms, and mitigations:

- C1—Information loss after compilation. Root cause: symbols and high-level structure are erased; inlining collapses call boundaries. Symptom: unstable matches and high analyst burden. Mitigation: rely on relational views such as ACFG/PDG to preserve structural semantics during retrieval, then attach PDG-based slices to produce auditable evidence for security-critical flows [102].

- C2—ISA diversity. Root cause: the same semantics map to different opcode vocabularies and calling conventions. Symptom: high same-ISA accuracy that degrades across architectures. Mitigation: learn ACFG embeddings explicitly trained to be stable across architectures so that semantically equivalent routines cluster together during retrieval [130].

- C3—Compiler/opt-level variance. Root cause: O-level changes, inlining decisions, and link-time optimization reshape CFGs. Symptom: hand-crafted graph features drift; recall collapses under O3 vs. O0. Mitigation: augment structural features with short semantic traces from partial emulation, which smooth compiler noise while preserving the cues needed for disambiguation [142].

- C4—Function inlining. Root cause: small helpers vanish as standalone functions; boundaries blur. Symptom: systematic false negatives for utility routines commonly implicated in bugs. Mitigation: detect and recover inlined regions before modeling so that both token and graph learners operate on function units that better reflect source semantics [141].

- C5—Obfuscation and CFG distortion. Root cause: control-flow flattening and bogus edges decouple CFG shape from behavior. Symptom: CFG-centric descriptors lose discriminative power. Mitigation: emphasize token/sequence embeddings with attention, which learn instruction-level semantics less sensitive to CFG distortion and retain discriminative power under moderate obfuscation [134].

- C6—Multi-block/function vulnerabilities. Root cause: end-to-end flaws span helpers, drivers, or inter-process boundaries. Symptom: function-only retrieval flags fragments without a triggerable path. Mitigation: perform multi-binary flow reasoning during verification so that candidate matches are elevated only when an inter-component data path to a dangerous sink exists [106].

- C7—Patch similarity and false positives. Root cause: patched and vulnerable variants remain structurally close. Symptom: alerts on already-fixed code waste patch windows. Mitigation: include patched/unpatched pairs during training and add a verification gate that checks for the specific guard or bounds fix introduced by the patch before escalating a finding [145].

- C8—Accuracy versus verifiability. Root cause: high cosine similarity does not imply exploitability. Symptom: strong ranking with weak artifacts slows engineering sign-off. Mitigation: attach PDG slices or targeted symbolic inputs to each high-rank match so that every alert is accompanied by concrete paths or inputs that are auditable by developers [102].

- C9—Throughput and indexing at scale. Root cause: naïve all-pairs comparison is quadratic in corpus size. Symptom: time budgets exhausted before useful recall is reached. Mitigation: use approximate nearest-neighbor indexing and batched inference so that retrieval time scales sub-linearly with corpus size; design ranking to maximize early discovery under fixed budget [146].

- C10—Sustained scalability under fleet growth. Root cause: the number of images and variants grows faster than analyst/compute budgets. Symptom: thresholds drift upward, recall declines, and backlogs accumulate. Mitigation: adopt two- or three-stage cascades—lightweight pre-filters, semantic enrichment, then targeted verification—so recall per unit compute remains stable as the fleet expands [147].
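The staged cascade named in C10 can be sketched as three successive filters. The scoring and verification callables are placeholders supplied by the caller, and the cut-off sizes are hypothetical defaults rather than recommended values.

```python
def cascade(query, corpus, cheap_score, rich_score, verify,
            keep_cheap=100, keep_rich=10):
    """Three-stage retrieval: cheap pre-filter, richer re-ranking, then verification.

    cheap_score / rich_score: callables (query, item) -> float, higher is more similar.
    verify: callable (item) -> bool, e.g. a slicing or feasibility check.
    """
    # Stage 1: inexpensive descriptor comparison over the whole corpus.
    stage1 = sorted(corpus, key=lambda item: cheap_score(query, item), reverse=True)[:keep_cheap]
    # Stage 2: expensive model is evaluated only on the survivors of stage 1.
    stage2 = sorted(stage1, key=lambda item: rich_score(query, item), reverse=True)[:keep_rich]
    # Stage 3: only candidates that pass verification are reported.
    return [item for item in stage2 if verify(item)]
```

The expensive scorer runs `keep_cheap` times regardless of corpus size, so the cost of stages 2 and 3 stays fixed as the fleet grows, and only the cheap pre-filter scales with the corpus.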

5.2.4. ML-Assisted Techniques and Tools

Machine learning (ML) has become a central driver of modern binary similarity analysis, enabling firmware vulnerability detection to go beyond rule-based or handcrafted approaches. Broadly, these techniques can be grouped into statistical, dynamic, and learning-based categories, each offering different balances between accuracy, scalability, and interpretability [148].

- Statistical Feature-Based Methods

Statistical approaches rely on manually extracted features such as instruction counts, control-flow graph (CFG) attributes, or string constants. These methods are lightweight and interpretable, but their effectiveness depends heavily on feature engineering. A representative example is Genius [113], which constructs attributed CFGs (ACFGs) using constants, instruction counts, and descendant nodes to compare binary functions. Building on this idea, Gemini [130] integrates ACFGs with graph neural networks (GNNs, e.g., Structure2Vec [149]) to generate embeddings, significantly improving scalability and robustness against compiler diversity. This transition from handcrafted to embedding-based statistical features marks a key inflection point: it reduces sensitivity to syntactic changes while retaining the structural cues essential for detecting reused or vulnerable code [150].
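The block-level attributes mentioned above (constants, instruction counts, descendant nodes) can be computed with a short sketch. It is written in the spirit of ACFG construction and is not the implementation of Genius or Gemini; the dictionary-based CFG layout, the attribute names, and the constant-matching regular expression are assumptions.

```python
import re

# Numeric literal not glued to a preceding identifier character (so "r0" is not a constant).
_CONSTANT = re.compile(r"(?<!\w)(?:0x[0-9a-fA-F]+|\d+)\b")


def descendants(cfg: dict[str, list[str]], node: str) -> set[str]:
    """All blocks reachable from `node` by following CFG edges."""
    seen: set[str] = set()
    stack = list(cfg.get(node, ()))
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(cfg.get(current, ()))
    return seen


def acfg_attributes(cfg: dict[str, list[str]], blocks: dict[str, list[str]]) -> dict[str, dict[str, int]]:
    """Attach simple statistical attributes to every basic block."""
    return {
        node: {
            "n_instructions": len(instructions),
            "n_constants": sum(len(_CONSTANT.findall(ins)) for ins in instructions),
            "n_descendants": len(descendants(cfg, node) - {node}),
        }
        for node, instructions in blocks.items()
    }
```

Each block's attribute dictionary becomes the initial node feature vector that a handcrafted matcher compares directly, or that a graph neural network aggregates into a function-level embedding.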

- Dynamic Feature-Based Methods