Featured Application

NeuroFed-LightTCN is particularly useful in the healthcare industry, enabling privacy-preserving federated learning directly on edge devices like portable EEG headsets—allowing independent, real-time brainwave analysis (e.g., seizure detection) without sharing sensitive patient data or relying on cloud servers, while maintaining high accuracy (97%+) despite limited computational resources.

Abstract

This study investigates on-edge seizure detection and aims to resolve two major constraints that currently hold back the deployment of deep learning models in clinical settings. First, centralized training requires gathering and consolidating data across institutions, which poses a serious privacy risk. Second, the high computational overhead of inference places a heavy burden on resource-limited edge devices. Hence, we propose NeuroFed-LightTCN, a federated learning (FL) framework incorporating a lightweight temporal convolutional network (TCN), designed for resource-efficient and privacy-preserving seizure detection. The proposed framework integrates depthwise separable and grouped depthwise separable convolutions with structured pruning to enhance efficiency, scalability, and performance. Furthermore, asynchronous aggregation is employed to mitigate training overhead. Empirical tests demonstrate that the network can be pruned by up to 70%, with a 44.9% decrease in parameters (65.4 M down to 34.9 M) and an inference latency of 56 ms, while still maintaining 97.11% accuracy, which outperforms both the non-FL and FL TCN optimizations. Ablation shows that asynchronous aggregation reduces training time by 3.6 to 18%, and that pruning sustains performance even at extreme sparsity, with an F1-score of 97.17% at a 70% pruning rate. Overall, the proposed NeuroFed-LightTCN addresses the trade-off between computational efficiency and model performance, delivering a viable solution for federated learning on edge devices. By combining federated optimization with lightweight architectural design, scalable and privacy-aware machine learning becomes practical without compromising accuracy, extending its potential utility to real-world settings.

1. Introduction

Epilepsy is one of the most common neurological diseases. It affects around 50 million people worldwide, or 4–10 per 1000 people, and electroencephalogram (EEG) monitoring is considered the most important diagnostic process for determining whether a person is having a seizure [1]. Although deep-learning-based seizure classification has proven widely successful for automated seizure identification, prevailing methods exhibit three inherent limitations: (1) centralized acquisition of EEG data presents a severe patient privacy issue [2], (2) traditional deep neural networks are too computationally demanding for real-time operation on edge devices [3], and (3) synchronous federated learning (FL) frameworks are vulnerable to the heterogeneous clients typical of healthcare settings [4].

Federated learning (FL) has emerged as a promising solution for preserving data privacy: it enables decentralized model training on data held by hospitals or individual devices without exchanging raw EEG data [5]. Nonetheless, traditional FL procedures rely on synchronous aggregation protocols, which fall victim to the straggler effect, whereby slower clients (e.g., low-power wearables) substantially delay the global model update [6]. Recent developments in asynchronous FL address this problem by enabling clients to send updates independently, thereby increasing training efficiency across different network qualities and device capabilities [7].

In order to address the complex problem of privacy preservation, computational efficiency, and deployment altogether, we suggest a novel framework comprising a combination of:

- Lightweight Temporal Convolutional Networks (TCNs): The depthwise separable and grouped depthwise separable convolutions in our architecture reduce the parameter count while maintaining the temporal feature extraction capability that is crucial to EEG analysis [8]. Structured pruning further compacts the model by identifying the sparsity rate that yields a small yet well-performing network.

- Adaptive Asynchronous FL Aggregation: A staleness-aware update procedure that dynamically weights each client's contribution according to its latency and data quality, resulting in faster convergence than synchronous methods [9].

Although recent methodological developments show some positive results, existing lightweight federated learning techniques in healthcare environments remain limited. The key challenges include poor preservation of clinically relevant temporal patterns, limited applicability to rare seizure subtypes, and difficulty operating in heterogeneous device environments. These challenges motivate the present study and lead to the design of the NeuroFed-LightTCN framework. NeuroFed-LightTCN provides a statistically significant advancement in maintaining clinically meaningful temporal characteristics and increases generalizability to infrequent seizure subtypes. Additionally, the proposed framework is able to operate across heterogeneous device settings. In summary, this research makes the following contributions:

- The first integration of a pruned, depthwise separable and grouped depthwise separable temporal convolutional network (TCN) with asynchronous federated learning for electroencephalography (EEG)-based seizure detection, attaining a short inference latency of 56 ms.

- An efficient pruning framework that reduces the model size by up to 70% without significantly decreasing accuracy.

- An ablation study showing that the asynchronous aggregation protocol reduces the model convergence time by 3.6–18% while maintaining stability against device heterogeneity.

- An end-to-end benchmark on a publicly available EEG dataset, exhibiting state-of-the-art seizure detection accuracy (97.11%), and a significant reduction in the message-passing overhead and computational effort.

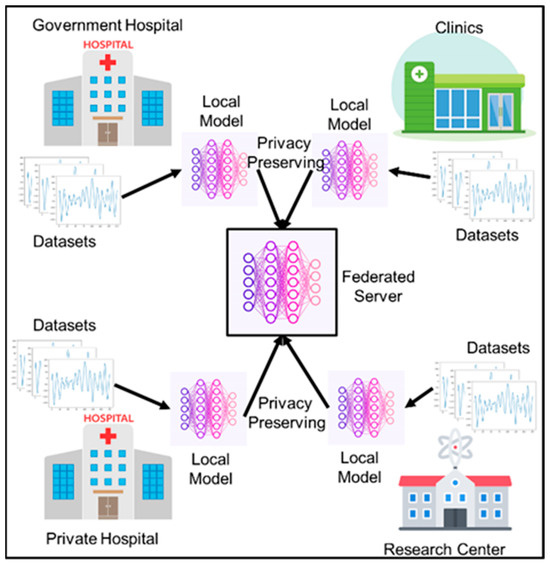

This paper closes that gap, making privacy-preserving distributed learning and efficient on-device deployment closer to reality, as well as enhancing the scalability of seizure monitoring systems in real-time. Figure 1 represents the concept of federated learning in the medical industry.

Figure 1.

The proposed federated learning system for seizure EEG classification in the medical field.

2. Related Works

Traditional machine learning approaches to seizure classification relied on hand-crafted features (e.g., spectral power, entropy) and shallow classifiers (e.g., Random Forest, Extreme Gradient Boosting (XGBoost) [10], Support Vector Machine (SVM), Gaussian Naive Bayes [11]). Pankaj et al. report that Random Forest achieves strong classification performance, with an F1 score of 0.943 and an accuracy of 0.977 [10]. Ranjani et al. consider different machine learning algorithms (SVM, Random Forest, Gaussian Naive Bayes, and K-Nearest Neighbors) to classify seizures using EEG signals from the University of California Irvine (UCI) dataset, achieving accuracy rates of 0.98, 0.96, and 0.93, respectively [11]. Although these techniques are interpretable, they are difficult to apply effectively across different patient populations. Deep learning changed this picture: Convolutional Neural Networks (CNNs) [12] and Long Short-Term Memory networks (LSTMs) [13] predict spatiotemporal EEG patterns with 98.40% and 98% accuracy, respectively. Temporal Convolutional Networks (TCNs) were subsequently proposed as a promising alternative, capturing long-range dependencies via dilated convolutions while allowing for parallel processing [8]. These architectures, however, typically require centralized collection of EEG data, which raises privacy concerns under medical data regulations [14].

Federated learning (FL) has become one of the most promising tools for improving EEG-based Brain-Computer Interface (BCI) systems, as it addresses the issues of data privacy, heterogeneity, and limited data. One example is the hierarchical personalized Federated Learning EEG decoding (FLEEG) framework, which enables collaboration across datasets with various formats and improves Motor Imagery (MI) classification performance by up to 8.4% through knowledge sharing among multiple datasets [15]. Similarly, FL has been used for MI-EEG signal classification with a convolutional neural network, reaching accuracy similar to that of centralized machine learning approaches while reducing data leakage risks [16]. Federated learning combined with Graph Neural Networks (GNNs) has been applied in clinical settings, specifically in stroke assessment, achieving a mean absolute error comparable to that of human experts in predicting stroke severity while preserving data privacy [17]. In addition, adaptive federated learning (AdaFL) has been proposed for EEG emotion recognition, achieving high classification accuracy on datasets such as the Shanghai Jiao Tong University Emotion EEG Dataset (SEED) and the Database for Emotion Analysis using Physiological Signals (DEAP) by adaptively combining local models based on their importance, thereby reducing the risk of information leakage in federated learning [18]. Together, these works highlight the potential of federated learning to transform EEG-based BCI systems by enabling decentralized model training, preserving data privacy, and enhancing model robustness across different applications. Most current federated learning frameworks use synchronous training, in which clients update their models in synchronized rounds and then upload them to the central server for aggregation. Although this approach eases coordination and leads to consistent model updates, it suffers from heterogeneous client resources and communication bottlenecks.

Lightweight architectures, particularly those utilizing grouped and depthwise separable convolutions, have garnered significant attention in federated learning (FL) as a way to address its computational and communication limitations. These techniques greatly simplify model computation by minimizing redundancy while providing competitive accuracy [19]. For instance, MobileNet and EfficientNet use depthwise separable convolutions for efficient feature extraction, which makes them suitable for FL clients with constrained resources [20,21]. Grouped convolutions also increase efficiency by subdividing filters, allowing for parallel execution and reducing the memory footprint [22]. These architectures are especially beneficial in cross-device federated learning, where devices differ in computational capability.

However, despite these advances, a significant research gap remains in how to inductively integrate lightweight architecture with federated learning and address the limitations of synchronous training, particularly in seizure detection. Most current FL frameworks assume that client capabilities are homogeneous or ignore the interaction between model efficiency and training dynamics. Additionally, a limited number of studies have investigated adaptive synchronization mechanisms designed to work with lightweight models, suggesting that these mechanisms may provide an additional boost to scalability. The best way to fill these gaps is to adopt a holistic approach that co-designs efficient neural architectures and robust federated learning protocols to maximize accuracy and resource efficiency in heterogeneous environments. Table 1 summarizes the related works mentioned in this section.

Table 1.

Summary of related works in seizure detection and FL applications.

3. Methodology

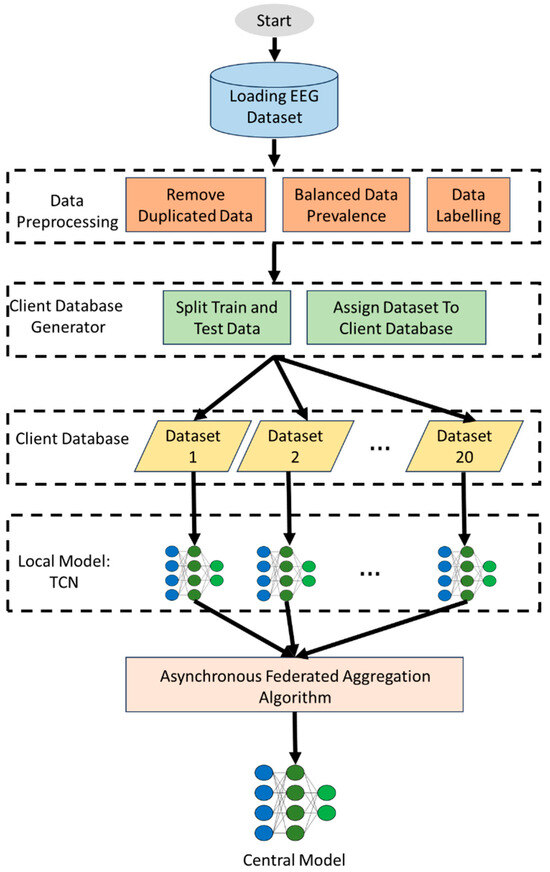

Although deep learning has shown impressive performance in automated seizure classification, current solutions have three major limitations: (1) the centralized EEG data collection workflow poses a critical threat to patient privacy, (2) standard architectures (i.e., CNNs and LSTMs) are too computationally expensive for real-time edge deployment, and (3) synchronous federated learning frameworks suffer from straggler effects in device-heterogeneous settings such as clinical environments. Although depthwise separable TCNs address efficiency and temporal modeling, they have not been explored in combination with asynchronous federated learning (FL) aggregation, which is crucial for handling real-world device heterogeneity. This paper fills that gap by introducing the first framework that unites depthwise separable TCNs and adaptive asynchronous FL, enabling low-latency, privacy-preserving seizure detection in real time. Figure 2 shows the step-by-step process of NeuroFed-LightTCN for seizure classification on EEG signals.

Figure 2.

The overall process of the proposed NeuroFed-LightTCN framework for seizure detection. EEG signals are first preprocessed and segmented into one-second windows, which are then fed into the lightweight TCN for temporal feature extraction. Depthwise and grouped depthwise separable convolutions reduce computation, while pruning compresses the model size. Clients train locally and send compressed updates to the server, where asynchronous federated aggregation integrates updates while mitigating straggler effects. The final central model is redistributed for continued learning.

3.1. Datasets

We used an open-source electroencephalography (EEG) database collected by the Rochester Institute of Technology and published in the University of California, Irvine (UCI) Machine Learning Repository (available at https://www.kaggle.com/datasets/harunshimanto/epileptic-seizure-recognition, accessed on 30 August 2025) [23]. The dataset comprises recordings from 500 subjects, each with a 23.6 s EEG recording at 178 Hz, resulting in 4097 data points per subject. Each sequence was segmented into 23 non-overlapping one-second windows (178 samples per window), resulting in 11,500 normalized samples (500 × 23) in the entire dataset.
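The segmentation arithmetic above can be checked with a minimal, framework-free sketch. The function name `segment_recording` and the zero-filled placeholder recording are illustrative assumptions; they are not taken from the authors' code.

```python
def segment_recording(signal, window=178):
    """Split one recording into non-overlapping windows, dropping any remainder."""
    n_windows = len(signal) // window
    return [signal[i * window:(i + 1) * window] for i in range(n_windows)]


# Placeholder for one recording of 4097 points (real EEG values would go here).
recording = [0.0] * 4097
windows = segment_recording(recording)

# 500 subjects, each contributing the same number of one-second windows.
total_windows = 500 * len(windows)
print(len(windows), len(windows[0]), total_windows)
```

Because 23 × 178 = 4094, the last three points of each 4097-point recording fall outside the final window in this sketch.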

This dataset includes five categories of clinical interest: (1) eyes open (non-seizure), (2) eyes closed (non-seizure), (3) activity of other healthy areas of the brain (non-seizure), (4) activity of cancer-involved areas of the brain (pathological non-seizure), and (5) ictal seizure. Such categorical stratification would allow for an adequate distinction between normal neurological processes, non-epileptiform abnormalities, and seizure event processes, offering an inclusive system to develop a classification model.

Seizure activity was a minority class: only 20 of every 100 available samples were seizure samples (prevalence = 0.2), whereas the remaining 80% consisted of the four types of non-seizure cases. To overcome this imbalance and reduce classification bias, we applied stratified subsampling. We randomly subsampled the non-seizure cases and retained all seizure cases, yielding a balanced dataset with equal numbers of non-seizure and seizure cases. This preprocessing step ensures that discriminative features are learned equally for both classes during training and evaluation. The dataset is then split into 65% for training, 15% for testing, and 20% for validation.
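As an illustration of the balancing and splitting steps described above, the following sketch undersamples the non-seizure class and then splits each class 65/15/20. It is an assumption-laden example rather than the authors' pipeline: the function name, the fixed seed, and the class counts (2300 seizure and 9200 non-seizure windows, derived from a 0.2 prevalence over 11,500 windows) are chosen here for illustration.

```python
import random


def balance_and_split(labels, seed=0, train=0.65, test=0.15):
    """Undersample non-seizure indices to match the seizure count, then split."""
    rng = random.Random(seed)
    seizure = [i for i, y in enumerate(labels) if y == 1]
    non_seizure = [i for i, y in enumerate(labels) if y == 0]
    # Keep every seizure window; draw an equally sized random subset of the rest.
    kept = rng.sample(non_seizure, len(seizure))

    splits = {"train": [], "test": [], "val": []}
    # Split each class separately so that every subset stays balanced.
    for group in (seizure, kept):
        group = group[:]
        rng.shuffle(group)
        n_train = int(round(train * len(group)))
        n_test = int(round(test * len(group)))
        splits["train"] += group[:n_train]
        splits["test"] += group[n_train:n_train + n_test]
        splits["val"] += group[n_train + n_test:]  # remaining ~20%
    return splits


# Hypothetical label vector: 1 = seizure, 0 = any of the four non-seizure classes.
labels = [1] * 2300 + [0] * 9200
splits = balance_and_split(labels)
print({name: len(idx) for name, idx in splits.items()})
```

Splitting per class, rather than over the pooled indices, is one simple way to keep the seizure to non-seizure ratio identical in the training, testing, and validation subsets.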

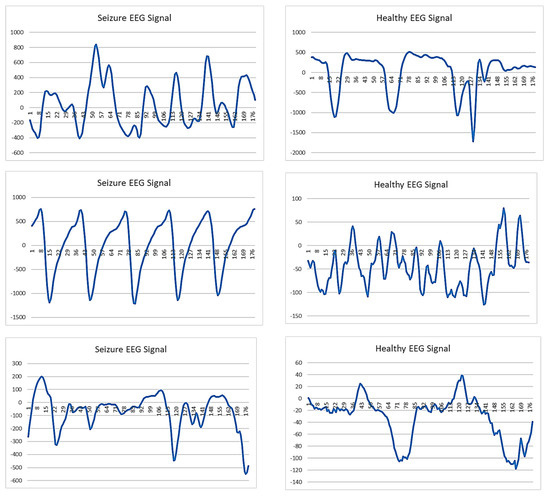

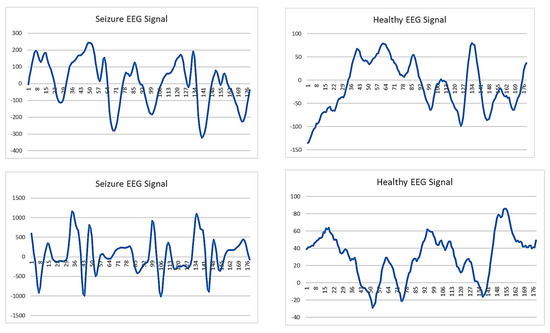

Figure 3 displays EEG signals from seizure and healthy recordings, with five seizure examples on the left and five healthy examples on the right. Visual inspection reveals no clear or consistent pattern that separates the two classes: both exhibit highly irregular signals with significant variability even within the same class. This lack of visually discernible features makes it extremely challenging to differentiate seizure EEG signals from healthy ones by observation alone. Consequently, manual analysis is unreliable, and advanced artificial intelligence (AI) techniques become essential for extracting subtle, complex features and classifying the EEG signals accurately.

Figure 3.

Seizure EEG signal (left) vs. healthy EEG signal (right).

3.2. Federated Learning Model: Lightweight FedAsync TCN

3.2.1. Temporal Convolutional Network

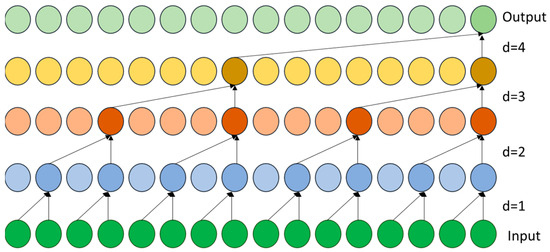

To classify the EEG signals, we developed a temporal convolutional network (TCN). TCNs are built from dilated causal convolutions, which adapt to patterns of brain activity as they evolve over time. The architecture consists of several stacked TemporalBlocks, each containing two 1D convolutional layers. The dilation rate increases exponentially across blocks, taking the values 1, 2, 4, and 8. This structure enables the identification of both short candidate epileptiform activity and long candidate ictal cycles, while Chomp1d layers preserve temporal causality by stripping away the extra padding. Residual connections with 1 × 1 convolutions improve gradient flow during training, and dropout layers with p = 0.2 provide resistance to overfitting on seizure EEG. The receptive field spans approximately 32 time steps in the final stage, ensuring that timing information relevant to medical diagnosis is retained. Figure 4 illustrates the dilated causal convolution approach of the TCN.

Figure 4.

Dilated causal convolution process in the TCN. Each convolution layer uses a dilation factor (d = 1, 2, 4, 8) to capture progressively longer temporal dependencies in EEG signals.

The EEG data pass through four feature extraction levels (16→32→64→128 channels), each specialized for a different time scale. Dilation makes it straightforward to capture cyclic patterns of seizure activity without parameter blow-up, because the input to higher layers inherently covers longer time scales. After every convolution, batch normalization and ReLU activation are applied to maintain a healthy gradient flow during training. The causal convolution, implemented through careful padding and trimming, limits the loss of time-sensitive information and ensures that sequences are processed in the correct temporal order. This property is beneficial in real-time seizure applications, where future data cannot influence the present prediction.
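The causality property can be illustrated with a small single-channel sketch in plain Python. It pads only on the left, which has the same effect as symmetric padding followed by trimming the right-hand side (the role the text assigns to Chomp1d). The function name, the all-ones kernel, and the impulse input are illustrative assumptions, not the authors' implementation.

```python
def causal_dilated_conv1d(x, kernel, dilation):
    """Single-channel causal convolution.

    y[t] = sum_j kernel[j] * x[t - (k - 1 - j) * dilation], with zeros before t = 0.
    """
    k = len(kernel)
    pad = (k - 1) * dilation
    padded = [0.0] * pad + list(x)  # left padding only: no future samples leak in
    return [
        sum(kernel[j] * padded[t + j * dilation] for j in range(k))
        for t in range(len(x))
    ]


# An impulse at t = 5 should never influence outputs at t < 5.
x = [0.0] * 32
x[5] = 1.0
y = x
for d in (1, 2, 4, 8):  # dilation schedule named in the text
    y = causal_dilated_conv1d(y, [1.0, 1.0, 1.0], d)

first_nonzero = next(t for t, v in enumerate(y) if v != 0.0)
print(first_nonzero, len(y))
```

The output keeps the input length at every level, and the earliest non-zero output coincides with the impulse position, which is the behavior required for real-time prediction.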

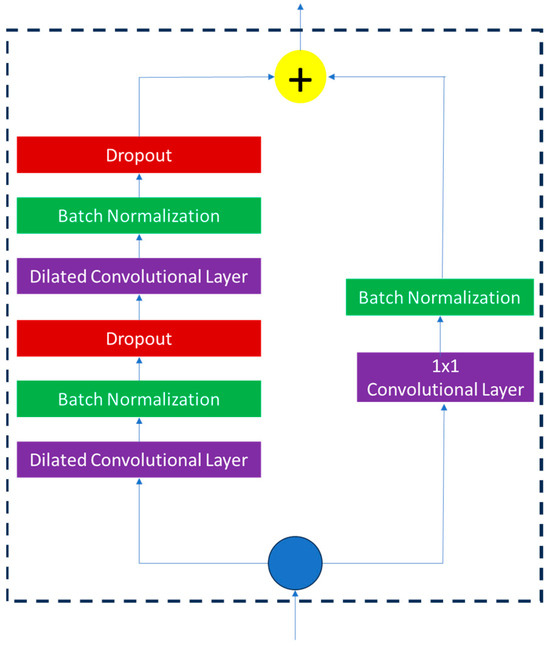

The network operates in two stages, beginning with temporal feature extraction and ending with classification in the last few layers. First, a 1 × 1 convolution reduces the feature maps from 128 channels to 64 channels while preserving the temporal length of the window. The features are then flattened and passed to a layer with a Softmax activation that classifies each window as seizure or non-seizure. Such a design retains more temporal information than global pooling designs; thus, the model remains sensitive to short epileptic activity. The combination of dilated convolutions, residual learning, and a judicious reduction in dimensionality yields an efficient and robust architecture that can outperform RNNs on long EEG sequences and is more computationally efficient than similarly structured CNNs for temporal classification. The implemented TCN represents a favorable trade-off among temporal resolution, model complexity, and classification performance for the seizure detection task. The residual connection in the TCN model is depicted in Figure 5.

Figure 5.

Residual connection in the TCN model.

3.2.2. Depthwise Separable Convolution

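The implemented depthwise separable convolution decomposes the standard one-dimensional (1D) convolution into two distinct operations: depthwise and pointwise convolutions. Mathematically, for an input tensor $X \in \mathbb{R}^{C_{in} \times L}$ and kernel $K \in \mathbb{R}^{C_{out} \times C_{in} \times k}$, a standard convolution computes $Y = K \circledast X$, where $\circledast$ denotes cross-correlation. The depthwise separable variant first applies a channel-wise convolution ($K^{dw} \in \mathbb{R}^{C_{in} \times 1 \times k}$) in Equation (1):

$$\hat{Y}_c = K^{dw}_c \circledast X_c, \quad c = 1, \dots, C_{in} \tag{1}$$

This is followed by a 1 × 1 pointwise convolution ($K^{pw} \in \mathbb{R}^{C_{out} \times C_{in} \times 1}$) in Equation (2):

$$Y = K^{pw} \circledast \hat{Y} \tag{2}$$

This factorization reduces parameters from $k \cdot C_{in} \cdot C_{out}$ to $k \cdot C_{in} + C_{in} \cdot C_{out}$, achieving 8 to 9 times compression for the kernel size and channel counts of our final TemporalBlock.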

The DepthwiseSeparableConv1d module replaces conventional convolutions in the TemporalBlocks, while the residual structure is kept. In every block, EEG signals pass through two depthwise separable convolutions, each followed by ReLU activation and dropout (p = 0.2). The dilation rate is doubled at every network level (1, 2, 4, 8) to expand the receptive field exponentially while maintaining efficient depthwise operation.

Finally, for the final TemporalBlock, which operates on the more demanding input of L = 178 timesteps and C_in = 128 channels (relative to a configuration with L = 128 and C_in = 128, the increase is only about 50%), the depthwise separable convolution needs merely 3 × 128 + 128 × 128 = 16,768 parameters, as opposed to 3 × 128 × 128 = 49,152 for a standard convolution, a 66% saving. This allows execution on edge devices while retaining temporal accuracy through: (1) causal dilation, which avoids introducing future information; (2) residual connections, which retain signal integrity; and (3) pointwise operations, which allow recombination of cross-channel features. The resulting features are flattened into a 22,784-dimensional vector (128 × 178) that simultaneously captures rhythmic patterns (via the depthwise layers) and interactions between channels (via the pointwise operations), supporting accurate classification of seizures.
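The parameter counts for the final TemporalBlock can be reproduced by evaluating the two formulas directly. This is a small arithmetic sketch; the function names are illustrative, and bias terms are ignored as an assumption, since the text counts only kernel weights.

```python
def standard_conv1d_params(c_in, c_out, k):
    """Weights of a standard 1D convolution: one length-k filter per (in, out) pair."""
    return k * c_in * c_out


def depthwise_separable_params(c_in, c_out, k):
    """Depthwise stage (k weights per input channel) plus 1 x 1 pointwise stage."""
    return k * c_in + c_in * c_out


# Values from the text: kernel size 3, 128 input channels, 128 output channels.
std = standard_conv1d_params(128, 128, 3)
dsc = depthwise_separable_params(128, 128, 3)
saving = 1 - dsc / std
print(std, dsc, f"{saving:.1%}")
```

For these values the standard layer has 49,152 weights and the depthwise separable layer has 16,768, a saving of about 66%.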

3.2.3. Grouped Depthwise Separable Convolution

Based on the basic Depthwise Separable Convolution (DSC), the Grouped Depthwise Separable Convolution (GDSC) introduces a grouping mechanism to the former, further reducing computational complexity with minimal impact on representational efficiency.

In DSC, each input channel is processed by a single filter in the depthwise convolution, and a pointwise convolution then combines the channel features. GDSC builds on this by splitting the input channels into groups, with each group passing through its own depthwise separable convolution.

Given an input tensor $X \in \mathbb{R}^{C_{in} \times L}$, GDSC partitions the input channels into $G$ groups, each processed independently. The operation consists of two stages: Grouped Depthwise Convolution and Grouped Pointwise Convolution. In Grouped Depthwise Convolution, the input channels are divided into $G$ groups, each containing $C_{in}/G$ channels. For the $g$-th group, the depthwise convolution applies a separate kernel per channel using Equation (3):

$$\hat{Y}_g = K^{dw}_g \circledast X_g \tag{3}$$

where $X_g \in \mathbb{R}^{(C_{in}/G) \times L}$ is the $g$-th group of input channels, $K^{dw}_g \in \mathbb{R}^{(C_{in}/G) \times 1 \times 3}$ is the depthwise kernel (with kernel size 3), and $\hat{Y}_g \in \mathbb{R}^{(C_{in}/G) \times L}$ is the group output (assuming stride = 1 and padding to retain the sequence length).

In Grouped Pointwise Convolution, each group's output is projected to $C_{out}/G$ channels via a pointwise convolution using Equation (4):

$$Y_g = K^{pw}_g \circledast \hat{Y}_g \tag{4}$$

where $K^{pw}_g \in \mathbb{R}^{(C_{out}/G) \times (C_{in}/G) \times 1}$ is the pointwise kernel (with kernel size 1). Then, the final output is obtained by concatenating all group outputs along the channel dimension using Equation (5):

$$Y = \mathrm{Concat}(Y_1, Y_2, \dots, Y_G) \tag{5}$$
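To see how grouping affects model size, the sketch below counts the weights of a grouped depthwise separable layer under the two-stage description above. The group counts tried here (1, 2, 4, 8) are hypothetical, since this section does not state the value used in the experiments; bias terms are again ignored as an assumption.

```python
def grouped_depthwise_separable_params(c_in, c_out, k, groups):
    """Weights of a grouped depthwise separable 1D convolution (biases ignored)."""
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by the number of groups")
    depthwise = k * c_in  # one length-k filter per input channel
    # Each group projects C_in/G channels to C_out/G channels with a 1 x 1 kernel.
    pointwise = groups * (c_in // groups) * (c_out // groups)
    return depthwise + pointwise


counts = {
    g: grouped_depthwise_separable_params(128, 128, 3, g)
    for g in (1, 2, 4, 8)  # hypothetical group counts
}
for g, n in counts.items():
    print(g, n)
```

With a single group the count reduces to the plain depthwise separable case; each doubling of the group count halves the pointwise term, which dominates the total.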

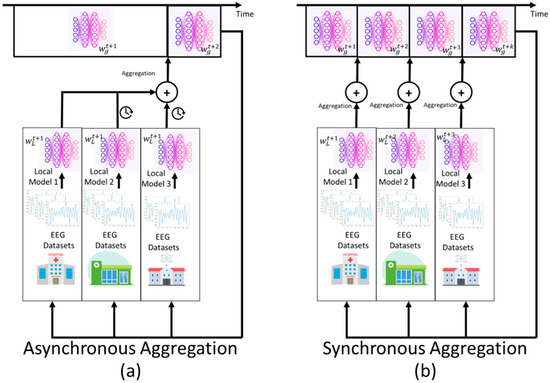

3.2.4. Asynchronous Federated Aggregation Method

The suggested asynchronous federated aggregation scheme plays a dynamic role in decentralized model training that does not require synchronized client updates. Essentially, the system employs a staleness-adaptive weighting strategy to dynamically adjust the weight of contributions made by different clients, depending on their arrival, so that current updates are prioritized, with the impact of older contributions fading over time. This is achieved with the help of a server-side priority queue, which allows the change to be applied immediately when it occurs, and a momentum-based fusion practice to help stabilize the global model across multiple lines of updates. In this aggregation algorithm, the similarity check is based on a moving average of the current updates, ensuring that only compatible parameter changes are added to the global model.

The main technical contribution on the client side is a local training strategy based on compressed model representations, made possible by depthwise separable convolutions, which substantially reduces communication costs. On the server side, update management includes exponential staleness decay and parallel processing of incoming updates. The approach offers better convergence characteristics than synchronous approaches, especially where client participation is highly variable. It handles non-IID data distributions through client-specific normalization and ensures model stability through L2 regularization and update validation.

The difference between asynchronous and synchronous aggregation lies in their coordination processes and their resilience to system heterogeneity. In synchronous aggregation, the server must wait until all selected clients respond with updates before proceeding with model aggregation. This causes bottlenecks when straggler devices, which have insufficient computational power or unfavorable network conditions, are present, and the whole training process is delayed. This strict synchronization often wastes resources, as faster clients sit idle while waiting for slower ones. Conversely, asynchronous methods overcome these coordination obstacles by allowing the server to process updates as they arrive, regardless of the progress of other clients. This strategy enhances resource utilization and facilitates fast convergence, particularly in environments with a high level of device heterogeneity. The staleness-adaptive weighting scheme is a more elaborate alternative to synchronous averaging: it adapts the influence of each update based on its freshness and maintains model stability through momentum-based fusion and fuzzy update validation. With this architectural difference, asynchronous aggregation enables flexible deployment in real-world situations, where device capabilities and network conditions vary significantly. Figure 6 contrasts asynchronous and synchronous aggregation in federated learning.

Figure 6.

(a) Asynchronous aggregation process; (b) synchronous aggregation process in federated learning.
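The staleness-adaptive update described in this subsection can be sketched as follows. This is a simplified illustration under stated assumptions, not the authors' server: the class name `AsyncServer`, the constants `base_lr`, `decay`, and `momentum`, and the exact fusion rule are hypothetical, and the priority queue and similarity check mentioned in the text are omitted.

```python
import math


def staleness_weight(staleness, base_lr=0.5, decay=0.3):
    """Exponential staleness decay: fresher updates get a larger mixing weight."""
    return base_lr * math.exp(-decay * staleness)


class AsyncServer:
    """Applies each client update on arrival instead of waiting for a full round."""

    def __init__(self, weights, momentum=0.9):
        self.weights = list(weights)
        self.velocity = [0.0] * len(weights)
        self.momentum = momentum
        self.version = 0  # incremented after every applied update

    def apply_update(self, client_weights, client_version):
        # Staleness = number of global updates since the client pulled the model.
        staleness = self.version - client_version
        alpha = staleness_weight(staleness)
        for i, (w, c) in enumerate(zip(self.weights, client_weights)):
            step = alpha * (c - w)
            # Momentum-style smoothing (an exponential moving average of steps).
            self.velocity[i] = (
                self.momentum * self.velocity[i] + (1 - self.momentum) * step
            )
            self.weights[i] = w + self.velocity[i]
        self.version += 1
        return alpha


server = AsyncServer([0.0, 0.0])
a_fresh = server.apply_update([1.0, 1.0], client_version=0)  # staleness 0
a_stale = server.apply_update([1.0, 1.0], client_version=0)  # staleness 1
print(a_fresh, a_stale, server.weights)
```

In this sketch the second update was computed against an older global model, so it receives a smaller mixing weight than the first, which is the prioritization of fresh updates that the text describes.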

3.2.5. Pruning

Local training of client models is followed by a pruning process that reduces model complexity without losing the essential learned features. The methodology applies unstructured magnitude-based pruning to the models' convolutional layers. In this TCN implementation, the pruned tensors are the weight tensors of the 1D convolutional (nn.Conv1d) layers.

Model evaluation is first conducted to quantify the baseline sparsity and the number of parameters. All convolutional (nn.Conv1d) modules are identified, and their weights are pruned iteratively. In these modules, the original weights (which the pruning utilities in PyTorch 2.0.1 keep under names ending with _orig) are used to calculate the pre-pruning sparsity, i.e., the ratio of zero-valued weights to the tensor size. The pruning is L1 unstructured pruning, which removes the desired percentage of the smallest-magnitude weights in each tensor. The sparsity is recomputed after pruning to measure the change, and the pruning mask is then made permanent by calling prune.remove, which removes the reparametrization and leaves a single weight tensor in which the pruned entries are zero.

The total numbers of parameters and pruned weights across all layers are counted to determine the global reduction in model size. After pruning, the model is placed in evaluation mode to be compatible with downstream federated aggregation. Notably, this method preserves the structure of the model: the architecture is unchanged, but the weights become sparse at a fine-grained level, reducing the computational workload and the communication overhead of federated updates. The sparsity statistics of each pruned layer are recorded to track the distribution of zeroed weights, ensuring that the process meets the desired compression requirements and that essential features are not unintentionally removed.

This approach fits naturally into federated training: the sparse tensors representing the pruned models can be sent efficiently to the server for aggregation. Because clients are pruned independently, the framework accommodates local data heterogeneity, while shared sparsity patterns or mask alignment during aggregation keep the global structure uniform.
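The core of L1 unstructured pruning, together with the sparsity measurement described above, can be illustrated without any framework. The sketch below operates on a flat list of weights; in PyTorch the corresponding calls would be `torch.nn.utils.prune.l1_unstructured` followed by `prune.remove`. The function names and the example weights are illustrative assumptions.

```python
def l1_unstructured_prune(weights, amount):
    """Zero the `amount` fraction of entries with the smallest absolute value."""
    n_prune = int(round(amount * len(weights)))
    # Indices ordered from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned_idx = set(order[:n_prune])
    return [0.0 if i in pruned_idx else w for i, w in enumerate(weights)]


def sparsity(weights):
    """Ratio of zero-valued weights to the tensor size."""
    return sum(1 for w in weights if w == 0.0) / len(weights)


# Hypothetical flattened convolution weights.
layer = [0.9, -0.05, 0.4, 0.01, -0.7, 0.2, -0.03, 0.6, 0.15, -0.1]
before = sparsity(layer)
pruned = l1_unstructured_prune(layer, amount=0.7)
after = sparsity(pruned)
print(before, after, pruned)
```

The list keeps its original length after pruning, mirroring the point made above that unstructured pruning leaves the architecture unchanged and only zeroes individual weights.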

3.2.6. Hyperparameter Settings for NeuroFed-LightTCN

The hyperparameter settings of NeuroFed-LightTCN in this experiment are summarized in Table 2.

Table 2.

Hyperparameter settings for NeuroFed-LightTCN.

4. Results and Discussion

4.1. Experimental Setup

The NeuroFed-LightTCN framework was trained in Python 3.11.9 using the PyTorch library. It ran on a workstation with a Windows operating system, an Intel Core i5-4670 central processing unit (CPU), an Nvidia GTX 950 graphics processing unit (GPU) with 2 GB of memory, 16 GB of DDR3 random access memory (RAM), and a 256 GB solid-state drive (SSD).

4.2. Training Performance

The proposed NeuroFed-LightTCN framework is assessed through an ablation study that compares model variants on three metrics: the total time needed to complete 15 training rounds, the training loss trend across rounds, and the total number of parameters. These comparisons highlight the efficiency, convergence behavior, and scalability of NeuroFed-LightTCN relative to its ablated counterparts. The outcomes illustrate the effect of each architectural component and inform the trade-offs among training performance, computational cost, and model complexity. The total time taken for 15 training rounds by each model is tabulated in Table 3.

Table 3.

Total time taken for 15 training rounds in seconds for each model.

The findings in Table 3 indicate a significant disparity in computation time across models, driven by the choice of synchronous or asynchronous aggregation. The Normal TCN has the longest training time (812.88 s) under synchronous aggregation, probably because its full convolutional structure introduces greater computational overhead. In contrast, the Depthwise Separable TCN is more efficient (707.02 s) because its design reduces the number of parameters. Unexpectedly, the proposed NeuroFed-LightTCN (Grouped Depthwise Separable TCN) takes slightly more time (789.93 s) than the Depthwise Separable TCN in synchronous mode, which could be due to the overhead of group-wise processing. However, all models train faster under asynchronous aggregation, with NeuroFed-LightTCN (683.08 s) close to the Depthwise Separable TCN (681.38 s), which implies that asynchronous updates offset some of the computational overhead of this architecture. This illustrates the trade-off among aggregation strategy, federated learning efficiency, and model complexity.

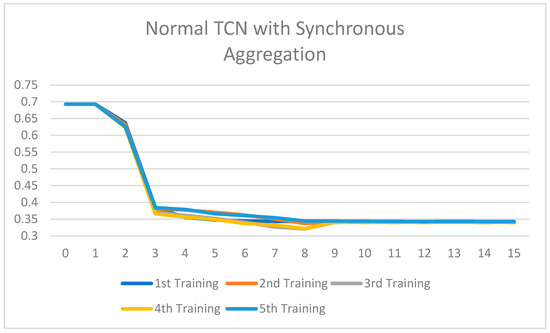

The comparison between synchronous and asynchronous aggregation is consistent: every model trains faster under asynchronous aggregation, with reductions in training time ranging from 3.6% to 18%. The Normal TCN benefits most (18% faster), since asynchronous aggregation relieves the computational bottlenecks of its dense operations, and the Depthwise Separable TCN benefits least (3.6%) because it is already quite efficient. With grouped convolution, the proposed NeuroFed-LightTCN achieves a 13.5% improvement, balancing architectural complexity against asynchronous scalability. These findings suggest that asynchronous aggregation is particularly beneficial for heavier models and offers diminishing returns for streamlined designs. The training loss of each model over 15 training rounds is presented in Figure 7, Figure 8, Figure 9, Figure 10, Figure 11 and Figure 12.
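The percentage reductions quoted above can be reproduced from the training times given in the text. The following is a minimal sketch; the dictionary layout and the function name `relative_reduction` are illustrative choices, and the asynchronous time of the Normal TCN is left out because it is not quoted in the prose.

```python
# Training times in seconds for 15 rounds, as quoted in the text for Table 3.
# The asynchronous time of the Normal TCN is not quoted in the prose, so it is omitted.
times = {
    "Depthwise Separable TCN": {"sync": 707.02, "async": 681.38},
    "NeuroFed-LightTCN": {"sync": 789.93, "async": 683.08},
}


def relative_reduction(sync_s: float, async_s: float) -> float:
    """Percentage of synchronous training time saved by asynchronous aggregation."""
    return 100.0 * (sync_s - async_s) / sync_s


reductions = {
    name: relative_reduction(t["sync"], t["async"]) for name, t in times.items()
}

for name, pct in reductions.items():
    print(f"{name}: {pct:.1f}% less training time")
```

The two computed values round to the 3.6% and 13.5% figures reported for these models.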

Figure 7.

Training loss of the Normal TCN with synchronous aggregation across 15 rounds of training.

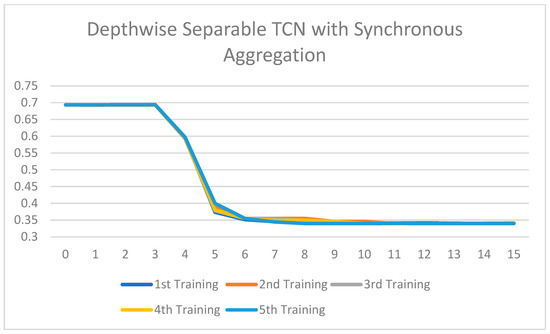

Figure 8.

Training loss of the Depthwise Separable TCN with synchronous aggregation across 15 rounds of training.

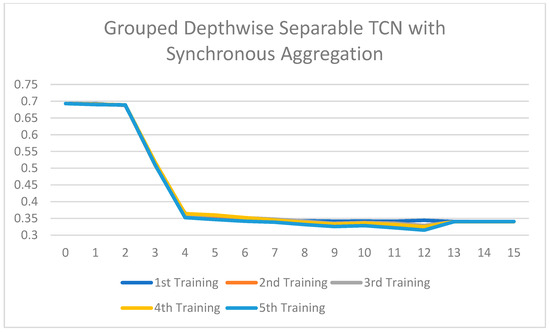

Figure 9.

Training loss of the Grouped Depthwise Separable TCN with synchronous aggregation across 15 rounds of training.

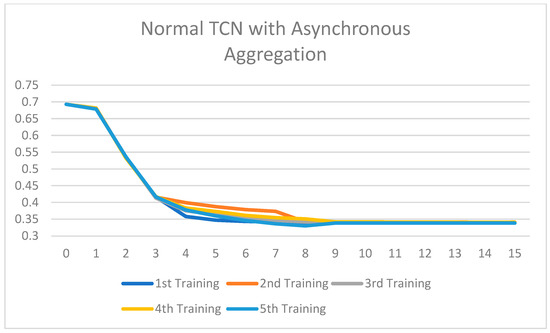

Figure 10.

Training loss of the Normal TCN with asynchronous aggregation across 15 rounds of training.

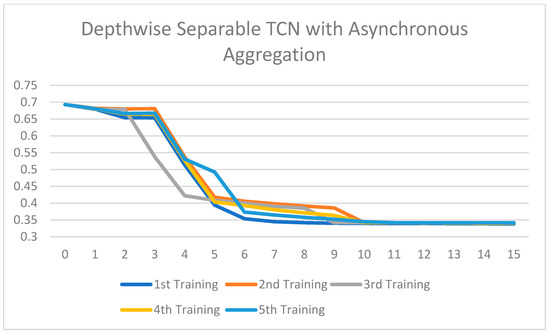

Figure 11.

Training loss of the Depthwise Separable TCN with asynchronous aggregation across 15 rounds of training.

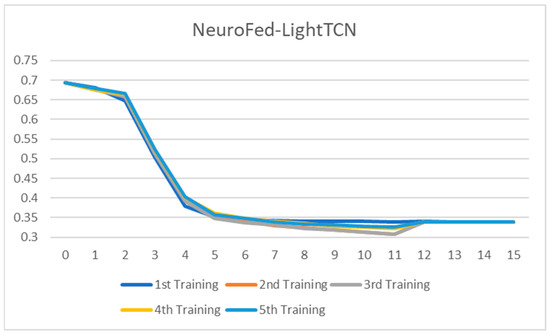

Figure 12.

Training loss of the NeuroFed-LightTCN across 15 rounds of training.

All the ablated models were trained for 15 rounds, and the training loss across rounds shows a consistent and stable trend. The loss curves indicate that the models converge smoothly without significant fluctuations, suggesting that the training process is stable across configurations. This consistency supports attributing the observed performance differences among the models to architectural or methodological variations rather than to instability in the training process. Figure 7, Figure 8, Figure 9, Figure 10, Figure 11 and Figure 12 show that models with asynchronous aggregation generally converge more rapidly than models with synchronous aggregation. In Figure 12, NeuroFed-LightTCN converges as early as round 5, matching the Grouped Depthwise Separable TCN with synchronous aggregation (Figure 9) as well as the Normal TCN and Depthwise Separable TCN with asynchronous aggregation (Figure 10 and Figure 11). This indicates that the lightweight architecture of NeuroFed-LightTCN, coupled with the benefits of asynchronous updates (reduced idle time and resilience to stragglers), allows it to converge in fewer training rounds. That several models reach convergence at round 5 further highlights the effectiveness of combining asynchronous aggregation with a computationally efficient architecture.
These results highlight an essential trade-off between synchronous and asynchronous aggregation: the former may provide more stable convergence, whereas the latter can substantially reduce training time without sacrificing the model’s final performance.
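To make the idea of asynchronous aggregation concrete, the sketch below mixes a single client update into the global model as soon as it arrives, with a weight that shrinks as the update becomes stale. The polynomial discount, the mixing rate `alpha`, the exponent `a`, and the function names are assumptions chosen for illustration in the spirit of staleness-aware schemes; this section does not state the exact rule used in NeuroFed-LightTCN.

```python
def staleness_weight(alpha: float, staleness: int, a: float = 0.5) -> float:
    """Polynomial staleness discount: older updates get a smaller mixing weight."""
    return alpha * (1.0 + staleness) ** (-a)


def async_update(global_w, client_w, global_round, client_round, alpha=0.6):
    """Mix one client's weights into the global model without waiting for other clients.

    `global_round - client_round` counts how many global updates happened since
    the client downloaded the model (hypothetical bookkeeping for this sketch).
    """
    staleness = global_round - client_round
    mix = staleness_weight(alpha, staleness)
    return [(1.0 - mix) * g + mix * c for g, c in zip(global_w, client_w)]


global_w = [0.0, 0.0, 0.0]
client_w = [1.0, 1.0, 1.0]

# An up-to-date client is mixed in with the full rate alpha.
fresh = async_update(global_w, client_w, global_round=5, client_round=5)
# A client that is three rounds behind contributes half as much.
stale = async_update(global_w, client_w, global_round=5, client_round=2)
```

Because the server never waits for the slowest client, idle time is reduced, while the discount limits the influence of outdated updates on the global model.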

Although a small number of training rounds would have been sufficient to reveal the overall performance trends, we extend the experiments to 15 rounds to allow each model to converge properly and to observe its full learning dynamics. A longer training period reduces the risk of comparing models before they have converged, making comparisons among the ablated variants more reliable. Accordingly, differences observed after 15 rounds can be attributed to architectural details or aggregation procedures rather than to incomplete convergence. Table 4 presents a summary of the number of parameters for each model.

Table 4.

Total number of parameters for each model.

The parameter counts of the tested models indicate significant efficiency improvements resulting from architectural optimizations. The Normal TCN, which uses full convolutional layers and has 118,748,538 parameters, serves as the baseline. The Depthwise Separable TCN reduces the parameter count by 9.3% (to 107,671,134 parameters) through factorized convolution. Most importantly, NeuroFed-LightTCN (Grouped Depthwise Separable TCN) achieves a reduction of 44.9% (to 65,475,162 parameters) compared to the baseline, demonstrating the combined efficiency of grouped and depthwise separable convolutions. This parameter compression is consistent with the shorter training times relative to the baseline (Table 3) and with the smaller model footprint (Table 4). It thus supports the design principle of the framework, which is to preserve structural capacity while reducing the demands on federated learning resources. The progression of architectural changes from the Normal TCN to NeuroFed-LightTCN demonstrates that scalability can be enhanced significantly without a perceptible loss in model performance.
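The source of these savings can be illustrated with the standard parameter-count formulas for 1D convolutions. The sketch below is a hedged illustration: the layer sizes (`c_in`, `c_out`, `k`, `groups`) are hypothetical, and applying the grouping to the pointwise convolution is an assumption about how the grouped depthwise separable block is built. Only the three model totals at the end are taken from the text.

```python
def conv1d_params(c_in: int, c_out: int, k: int, groups: int = 1, bias: bool = True) -> int:
    """Parameter count of a 1D convolution: (c_in / groups) * c_out * k weights plus biases."""
    assert c_in % groups == 0 and c_out % groups == 0
    return (c_in // groups) * c_out * k + (c_out if bias else 0)


def standard(c_in: int, c_out: int, k: int) -> int:
    return conv1d_params(c_in, c_out, k)


def depthwise_separable(c_in: int, c_out: int, k: int) -> int:
    depthwise = conv1d_params(c_in, c_in, k, groups=c_in)  # one kernel per input channel
    pointwise = conv1d_params(c_in, c_out, 1)              # 1x1 channel mixing
    return depthwise + pointwise


def grouped_depthwise_separable(c_in: int, c_out: int, k: int, groups: int) -> int:
    depthwise = conv1d_params(c_in, c_in, k, groups=c_in)
    pointwise = conv1d_params(c_in, c_out, 1, groups=groups)  # assumed grouped pointwise step
    return depthwise + pointwise


# Hypothetical layer sizes, chosen only to show the relative savings.
c_in, c_out, k, groups = 64, 128, 3, 4
layer_counts = (
    standard(c_in, c_out, k),
    depthwise_separable(c_in, c_out, k),
    grouped_depthwise_separable(c_in, c_out, k, groups),
)

# Whole-model totals quoted in the text.
totals = {"normal": 118_748_538, "depthwise": 107_671_134, "neurofed": 65_475_162}
reduction_pct = {
    name: 100.0 * (totals["normal"] - n) / totals["normal"] for name, n in totals.items()
}
```

The whole-model reductions are smaller than the per-layer savings in this toy layer, which would be expected if a large share of the parameters sits outside the convolutional blocks.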

4.3. Pruning Rate

To assess the effects of model compression on NeuroFed-LightTCN, its convolutional layers were pruned systematically across the full range of pruning rates (0% to 100%) in 10% intervals. This study aimed to quantify the trade-off between parameter reduction and model performance. At 0% pruning (baseline), the model retains every parameter (116,544 in the convolutional layer), whereas 100% pruning removes all convolutional weights and effectively disables the layer. The effects of progressive sparsification on efficiency (computational time and total parameters remaining) and predictive capacity (test accuracy) were evaluated at the intermediate rates (e.g., 10%, 30%, 50%). This systematic scheme also makes it possible to determine the optimal pruning threshold, at which the largest number of parameters can be discarded without compromising accuracy, which is essential for deploying lightweight models in federated learning on less powerful devices. Table 5 compiles the experimental outcomes.
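As an illustration of structured pruning at a given rate, the sketch below removes whole filters rather than individual weights. The L1-norm ranking criterion, the nested-list weight layout, and the function names are assumptions made for this example; the section does not specify which importance criterion was used.

```python
def l1_norm(filt):
    """Sum of absolute weights in one filter (a list of per-channel kernels)."""
    return sum(abs(w) for kernel in filt for w in kernel)


def prune_filters(filters, rate):
    """Zero the fraction `rate` of filters with the smallest L1 norm.

    `filters` is a list of filters, each a list of kernels, each a list of floats.
    Returns a pruned copy and the sorted indices of the zeroed filters.
    """
    n_prune = int(round(rate * len(filters)))
    order = sorted(range(len(filters)), key=lambda i: l1_norm(filters[i]))
    pruned_idx = set(order[:n_prune])
    pruned = [
        [[0.0] * len(kernel) for kernel in f] if i in pruned_idx
        else [list(kernel) for kernel in f]
        for i, f in enumerate(filters)
    ]
    return pruned, sorted(pruned_idx)


# Toy layer: 4 filters, 1 input channel, kernel size 3 (hypothetical weights).
toy_layer = [
    [[0.9, -0.8, 0.7]],
    [[0.01, 0.02, -0.01]],
    [[0.5, 0.5, -0.5]],
    [[0.1, -0.1, 0.05]],
]
pruned_layer, removed = prune_filters(toy_layer, rate=0.5)
```

In a deployed model the zeroed filters would typically be removed from the tensor altogether, which is what reduces the stored parameter count and the inference cost.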

Table 5.

Pruning rate, total parameters in convolutional layer, and test accuracy.

The findings demonstrate the robustness of NeuroFed-LightTCN to structured pruning, with minimal accuracy loss over a wide range of pruning rates. At 0% pruning, the model achieved a baseline accuracy of 97.04% with 116,544 parameters in its convolutional layers. Notably, when 70% of the weights are removed, the accuracy remains almost the same (97.11%) with only 34,963 parameters, that is, 70% fewer parameters with no performance drop. This implies that the critical feature representations in the convolutional layers are maintained even under aggressive sparsification. Accuracy varies only minimally (96.92–97.11%) as the pruning rate ranges from 10% to 70%, demonstrating that the architecture is robust to parameter removal. This stability suggests that the learned features are highly redundant and that structured pruning can discard unneeded weights without loss of predictive performance.

Beyond 70% pruning, however, the model’s performance drops sharply, revealing the limits of compression. At 80% pruning, the accuracy falls to 90.88% with 23,309 parameters, and at 90% pruning, it is 90.4% with 11,654 parameters. At a pruning rate of 100%, the accuracy falls to 51%, which is equivalent to random guessing in binary classification and demonstrates that the convolutional layers are necessary for making meaningful predictions. These findings reveal a clear trade-off between efficiency and accuracy: pruning of up to 70% maximizes efficiency without significantly compromising accuracy, whereas more extreme sparsification is detrimental to the model. This observation is crucial for federated learning deployments, where balancing available resources against model performance is a significant challenge. These data indicate that NeuroFed-LightTCN is well suited to running on an edge device, as its convolutional layers can be reduced to 30% of their original size (34,963 parameters) while achieving approximately the same accuracy.
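The remaining parameter counts quoted for each pruning rate follow directly from the 116,544 baseline. A minimal check, assuming the counts are rounded to the nearest integer (the function name `remaining_params` is illustrative):

```python
BASE_CONV_PARAMS = 116_544  # convolutional-layer parameters at 0% pruning, from the text


def remaining_params(rate_percent: int, base: int = BASE_CONV_PARAMS) -> int:
    """Parameters left after pruning `rate_percent` percent, rounded to the nearest integer."""
    return round(base * (100 - rate_percent) / 100)


remaining = {rate: remaining_params(rate) for rate in (0, 70, 80, 90, 100)}

for rate, count in remaining.items():
    print(f"{rate:>3}% pruned: {count} parameters remain")
```

The values for 70%, 80%, and 90% match the 34,963, 23,309, and 11,654 parameters reported above.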

4.4. Inference Latency

One of the main objectives of clinical seizure monitoring is to ensure timely intervention during ictal events. In this study, the time between the reception of EEG data and the raising of an alarm was measured, hereafter referred to as end-to-end inference time. A 1 s segment of EEG was passed through the research workstation environment, and 100 simulation runs reported an average latency of 4.19 milliseconds. Table 6 shows the corresponding mean latencies of the Normal TCN, Depthwise Separable TCN, and NeuroFed-LightTCN (70% pruned) models trained with asynchronous aggregation.
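A measurement of this kind can be sketched with a simple timing harness that averages wall-clock latency over repeated runs. Everything below is illustrative: `dummy_predict` is a hypothetical stand-in for the trained model, the warm-up count is an arbitrary choice, and the 178-sample window assumes the segment length of the UCI dataset; it is not the authors' benchmarking code.

```python
import statistics
import time


def measure_latency_ms(predict, window, runs: int = 100, warmup: int = 5):
    """Return the mean and standard deviation of wall-clock latency in milliseconds."""
    for _ in range(warmup):  # warm-up calls are excluded from the statistics
        predict(window)
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        predict(window)
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.mean(samples), statistics.stdev(samples)


def dummy_predict(window):
    """Hypothetical stand-in for model inference: raise an alarm if the mean is positive."""
    return sum(window) / len(window) > 0.0


# One 1 s EEG segment; 178 samples per segment is assumed here.
window = [0.0] * 178
mean_ms, std_ms = measure_latency_ms(dummy_predict, window)
print(f"mean latency: {mean_ms:.3f} ms (std {std_ms:.3f} ms over 100 runs)")
```

Reporting the spread alongside the mean helps distinguish a consistently fast model from one with occasional slow runs, which matters for alarm systems.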

Table 6.

Inference latency of each model trained with the asynchronous aggregation method.

The latency benchmarks show that architecture optimization yields substantial efficiency improvements for temporal convolutional networks (TCNs). The Normal TCN (baseline) averages 857 ms, and the Depthwise Separable TCN, which replaces the standard convolutions with depthwise separable operations, decreases the latency by ~73% to 230 ms. The proposed NeuroFed-LightTCN (70% pruned) achieves the shortest latency (56 ms, a ~15× speed-up over the baseline). This speed, due to effective pruning and depthwise convolution, makes it a competitive option in resource-constrained environments such as the GTX 950. These findings highlight the trade-off between computational efficiency and model complexity, with pruning and depthwise separability providing substantial speed-ups for real-time systems. The latency is low relative to the requirement of real-time seizure detection, which calls for a delay of less than one second. We can therefore conclude that the architecture meets the demands of time-sensitive health care environments.
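The relative figures above follow from the three mean latencies. A short check, using only the values quoted in the text (the dictionary and variable names are illustrative):

```python
# Mean inference latencies in milliseconds, as quoted in the text for Table 6.
latency_ms = {
    "Normal TCN": 857.0,
    "Depthwise Separable TCN": 230.0,
    "NeuroFed-LightTCN (70% pruned)": 56.0,
}
baseline = latency_ms["Normal TCN"]

summary = {
    name: {
        "reduction_pct": 100.0 * (baseline - ms) / baseline,
        "speedup": baseline / ms,
    }
    for name, ms in latency_ms.items()
}

# Real-time requirement stated in the text: a delay of less than one second.
meets_realtime = {name: ms < 1000.0 for name, ms in latency_ms.items()}

for name, s in summary.items():
    print(f"{name}: {s['reduction_pct']:.1f}% lower latency, {s['speedup']:.1f}x speed-up")
```

All three models satisfy the one-second bound on these figures; the pruned model leaves the largest margin.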

4.5. Classification Performance

As shown in Section 4.3, the 70% pruned version is selected as the Lightweight FedAsync TCN. The effectiveness of the proposed NeuroFed-LightTCN is measured through a comparative analysis against several state-of-the-art models from the recent literature, covering non-federated (traditional) models as well as our baseline implementation of a federated TCN. The comparison covers the main performance metrics, namely accuracy, recall, precision, specificity, and F1-score, which allow for a comprehensive evaluation of the classification capabilities of each model. These quantitative data are presented in Table 7, allowing the performance of each model to be compared across different architectural paradigms and training schemes. This comparative approach enables an objective assessment of the trade-offs between model complexity and predictive accuracy, while also demonstrating the advantages of our federated learning approach.
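For reference, the five metrics can all be derived from the entries of a binary confusion matrix. The sketch below uses the standard definitions; the counts passed in are hypothetical and are not taken from the paper's experiments.

```python
def classification_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Standard binary classification metrics from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    recall = tp / (tp + fn)          # sensitivity: seizures correctly detected
    precision = tp / (tp + fp)       # detections that are true seizures
    specificity = tn / (tn + fp)     # non-seizure segments correctly rejected
    f1 = 2 * precision * recall / (precision + recall)
    return {
        "accuracy": accuracy,
        "recall": recall,
        "precision": precision,
        "specificity": specificity,
        "f1": f1,
    }


# Hypothetical counts, chosen only to exercise the formulas.
metrics = classification_metrics(tp=90, fp=5, tn=95, fn=10)
for name, value in metrics.items():
    print(f"{name}: {100 * value:.2f}%")
```

Reporting specificity alongside recall matters in this setting because seizure segments are usually a minority class, so accuracy alone can hide a high false-alarm or miss rate.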

Table 7.

Performance comparison of the proposed model with other deep learning models.

Table 7 compares the federated temporal convolutional network (TCN) models with non-federated models on several metrics, with the federated models performing better. The Federated Learning TCN (FL-TCN) achieves an accuracy of 97.00%, a recall of 97.23%, a precision of 96.90%, a specificity of 96.77%, and an F1-score of 97.07%, which indicates good generalization and a suitable balance between sensitivity and specificity. By contrast, the non-federated models, CNN-Bi-LSTM [24] (77.60% recall, precision, and F1-score) and DNN [25] (80% accuracy and recall, but only 64% precision), perform much worse, possibly because they are limited to centralized training and cannot exploit the diversity of distributed data. The 1D GNN-BiLSTM-IBPTT model [27] reports a high specificity (98.8%) but no other metrics, so its overall performance is unclear. The outcomes highlight the benefits of federated learning systems, in which models are trained through a decentralized and privacy-preserving process.

NeuroFed-LightTCN (70% pruned) also outperforms the FL-TCN baseline by a small margin, achieving 97.11% accuracy, 97.23% recall, 97.11% precision, 96.99% specificity, and a 97.17% F1-score. This demonstrates that structured pruning reduces computing costs (as observed in the preceding analyses) and can also improve the model’s efficacy, possibly because sparsification counteracts overfitting. The identical recall (97.23% for both FL-TCN and the pruned variant) implies that pruning does not affect the model’s ability to identify positive cases, and the minor increase in precision and F1-score indicates fewer false positives. These findings position the pruned NeuroFed-LightTCN as a state-of-the-art approach for privacy-preserving federated learning, offering the efficiency of a lightweight design with slightly better or equivalent performance compared to its unpruned version and conventional non-federated models. The findings confirm the viability of deploying highly efficient and accurate models in resource-constrained federated settings.
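As a consistency check, the F1-scores can be recomputed from the reported precision and recall using the harmonic-mean definition. This is a small sketch on the values quoted above; the 0.02 percentage-point tolerance is an assumption meant to absorb rounding of the published figures.

```python
def f1_from(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units as the inputs)."""
    return 2.0 * precision * recall / (precision + recall)


# Precision, recall, and F1-score in percent, as quoted in the text for Table 7.
reported = {
    "FL-TCN": {"precision": 96.90, "recall": 97.23, "f1": 97.07},
    "NeuroFed-LightTCN (70% pruned)": {"precision": 97.11, "recall": 97.23, "f1": 97.17},
}

gaps = {
    name: abs(f1_from(r["precision"], r["recall"]) - r["f1"])
    for name, r in reported.items()
}

for name, gap in gaps.items():
    print(f"{name}: recomputed F1 differs from reported F1 by {gap:.3f} points")
```

Both recomputed values agree with the reported F1-scores to within rounding.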

4.6. Additional Simulation Verification

The resilience of NeuroFed-LightTCN was also assessed by examining its sensitivity to the pruning rate and to the uneven distributions associated with medical data. To this end, several clients were simulated, each holding a different EEG subset (representing different hospitals or device populations). Over pruning rates of 10% to 70%, the difference in accuracy between the homogeneous and heterogeneous client data setups did not exceed 0.5%, evidencing stable performance under imbalanced distributions of medical data.
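One common way to simulate heterogeneous clients is a label-sorted shard split, in which each client receives only a few contiguous shards of the label-sorted data and therefore sees a skewed class mix. The sketch below is an assumption about how such a setup could be built; the section does not describe the actual partitioning procedure, and the label vector, client count, and function name are hypothetical.

```python
import random


def shard_partition(labels, n_clients, shards_per_client=2, seed=0):
    """Sort indices by label, cut them into shards, and deal shards to clients at random.

    Samples left over when the data does not divide evenly into shards are dropped.
    """
    rng = random.Random(seed)
    order = sorted(range(len(labels)), key=lambda i: labels[i])
    n_shards = n_clients * shards_per_client
    shard_size = len(order) // n_shards
    shards = [order[s * shard_size:(s + 1) * shard_size] for s in range(n_shards)]
    rng.shuffle(shards)
    return [
        [i for shard in shards[c * shards_per_client:(c + 1) * shards_per_client] for i in shard]
        for c in range(n_clients)
    ]


# Hypothetical binary labels: 80 non-seizure (0) and 20 seizure (1) segments.
labels = [0] * 80 + [1] * 20
clients = shard_partition(labels, n_clients=5)

for c, idx in enumerate(clients):
    seizure_share = sum(labels[i] for i in idx) / len(idx)
    print(f"client {c}: {len(idx)} samples, {100 * seizure_share:.0f}% seizure")
```

A homogeneous baseline for comparison can be obtained by shuffling the indices before splitting, so that every client sees roughly the global class ratio.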

Asynchronous aggregation was tested with imposed latencies of 100 ms, 500 ms, and 1 s to determine the extent of network delay that NeuroFed-LightTCN could tolerate. The findings reveal an increase in the time to convergence; however, the final performance degrades by less than 0.3% compared to the reference case of instantaneous communication. These results indicate that NeuroFed-LightTCN is resilient to parameter compression and to the network limitations that typify modern distributed clinical practice.

4.7. Limitations in Medical Scenarios

NeuroFed-LightTCN shows promising performance across various pruning rates. Yet, a few caveats should not be overlooked when transitioning the framework into medical applications. Pruned networks may generalize less well to seizure subtypes and atypical electrographic patterns that are not present in the training dataset, which could limit diagnostic coverage. Moreover, although grouped and depthwise separable convolutions significantly improve computational efficiency, the accompanying reduction in cross-channel interaction may suppress subtle changes in temporal features that are clinically important for predicting early seizure onset. These issues highlight the need to validate the model on a broader range of seizure types and EEG morphologies and to consider hybrid lightweight architectures that maintain fine temporal granularity.

This study confirms the efficacy of NeuroFed-LightTCN on the popular, publicly available UCI seizure dataset. Nevertheless, clinical application in practice requires raw EEG recorded by hospital monitoring systems and wearable headsets, which differ in sampling frequency, channel configuration, and signal-to-noise ratio. This heterogeneity in acquisition environments and edge devices may affect model performance and aggregation dynamics in federated learning. Future work will extend the evaluation beyond single-center clinical data and a homogeneous computing environment to quantify these effects and to examine whether the model generalizes robustly across domains.

5. Conclusions

The extensive analysis of NeuroFed-LightTCN demonstrates the proposed model’s applicability as a lightweight and high-performance federated learning framework. The ablation studies have validated that NeuroFed-LightTCN is highly efficient: asynchronous aggregation reduces the model training time by 3.6–18% relative to synchronous aggregation while maintaining competitive convergence rates. The parameter count is reduced by 44.9% (118.7M to 65.4M) via architectural optimizations using grouped depthwise separable convolutions, without losing accuracy. The pruning experiments further demonstrated the robustness of the model: 70% sparsification reduces the number of convolutional-layer parameters by 70% (to 34,963) while yielding marginally higher accuracy (97.11%) than the unpruned federated TCN and exceeding the non-federated baselines (e.g., CNN-Bi-LSTM, DNN).

The combination of federated learning and these optimizations addresses the key issues of decentralized scenarios: privacy protection, resource consumption, and scalability. It is also worth noting that NeuroFed-LightTCN achieved state-of-the-art results (97.11% accuracy, 97.17% F1-score, 56 ms inference latency) despite its lighter and faster structure compared to more traditional models. This confirms that it is suitable for deployment on edge devices, where computational limitations and privacy are paramount. Future research could explore dynamic pruning thresholds or heterogeneity-aware client updates to provide even greater flexibility. Overall, NeuroFed-LightTCN offers an efficient, accurate, and privacy-sensitive federated learning architecture.

Author Contributions

Conceptualization, Z.Y.L. and Y.H.P.; methodology, Z.Y.L. and S.Y.O.; software, Z.Y.L. and W.H.K.; validation, Y.H.P. and W.H.K., S.Y.O. and Y.J.C.; formal analysis, Z.Y.L.; investigation, W.H.K. and Y.J.C.; resources, S.Y.O.; data curation, Y.H.P.; writing—original draft preparation, Z.Y.L.; writing—review and editing, Y.H.P.; visualization, S.Y.O.; supervision, Y.H.P.; project administration, Y.H.P.; funding acquisition, Y.H.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research is supported by MMU Postdoctoral Research Fellow Grant, MMUI/250007.

Informed Consent Statement

Not applicable.

Data Availability Statement

The EEG data supporting the findings of this study are publicly available as the UCI Epileptic Seizure Recognition dataset, originally collected by the University of Bonn [28] and shared via the UCI Machine Learning Repository. The dataset is fully de-identified and pre-processed for research use. It can also be accessed on Kaggle at https://www.kaggle.com/datasets/harunshimanto/epileptic-seizure-recognition, reference number [23]. Further inquiries may be directed to the corresponding author, Y. H. Pang, upon reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

1. World Health Organization (WHO). Epilepsy. 2024. Available online: https://www.who.int/news-room/fact-sheets/detail/epilepsy (accessed on 30 August 2025).

2. Moon, S.; Lee, W.H. Privacy-Preserving Federated Learning in Healthcare. In Proceedings of the 2023 International Conference on Electronics, Information, and Communication (ICEIC), Singapore, 5–8 February 2023; pp. 1–4.

3. Chen, M.; Shlezinger, N.; Poor, H.V.; Eldar, Y.C.; Cui, S. Communication-efficient federated learning. Proc. Natl. Acad. Sci. USA 2021, 118, e2024789118.

4. Chen, M.; Mao, B.; Ma, T. FedSA: A staleness-aware asynchronous Federated Learning algorithm with non-IID data. Futur. Gener. Comput. Syst. 2021, 120, 1–12.

5. Li, Q.; Wen, Z.; Wu, Z.; Hu, S.; Wang, N.; Li, Y.; Liu, X.; He, B. A Survey on federated learning systems: Vision, hype and reality for data privacy and protection. IEEE Trans. Knowl. Data Eng. 2021, 35, 3347–3366.

6. Huang, W.; Ye, M.; Shi, Z.; Wan, G.; Li, H.; Du, B.; Yang, Q. Federated learning for generalization, robustness, fairness: A survey and benchmark. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 9387–9406.

7. Xu, C.; Qu, Y.; Xiang, Y.; Gao, L. Asynchronous federated learning on heterogeneous devices: A survey. Comput. Sci. Rev. 2023, 50, 100595.

8. Lim, Z.Y.; Pang, Y.H.; Ooi, S.Y.; Khoh, W.H.; Hiew, F.S. MLTCN-EEG: Metric learning-based temporal convolutional network for seizure EEG classification. Neural Comput. Appl. 2024, 37, 2849–2875.

9. Wang, Z.; Zhang, Z.; Tian, Y.; Yang, Q.; Shan, H.; Wang, W.; Quek, T.Q.S. Asynchronous federated learning over wireless communication networks. IEEE Trans. Wirel. Commun. 2022, 21, 6961–6978.

10. Kunekar, P.; Kumawat, C.; Lande, V.; Lokhande, S.; Mandhana, R.; Kshirsagar, M. Comparison of Different Machine Learning Algorithms to Classify Epilepsy Seizure from EEG Signals. Eng. Proc. 2024, 59, 166.

11. Ranjani, M.; Ramya, G.; Divya, G.; Deepa, N.; Lalitha, B. Predicting Epileptic Seizures with Precision A Comprehensive Study of ML Algorithms. In Proceedings of the 2024 International Conference on Trends in Quantum Computing and Emerging Business Technologies, Pune, India, 22–23 March 2024; Volume 55, pp. 1–7.

12. Saleem, A.; Khan, M.A.; Yousaf, H.M. Advancing Epilepsy Disease Classification through Machine Learning and Deep Learning Models Utilizing EEG Data. In Proceedings of the 2023 17th International Conference on Open Source Systems and Technologies (ICOSST), Lahore, Pakistan, 20–21 December 2023.

13. Khan, R.A.; Mahin, S.H.; Taranum, F. Seizure Detection Using Dense Net and LSTM Architectures. In Multifaceted Approaches for Data Acquisition, Processing & Communication; CRC Press: Boca Raton, FL, USA, 2024; pp. 29–36.

14. Rajora, R.; Kumar, A.; Malhotra, S.; Sharma, A. Data security breaches and mitigating methods in the healthcare system: A review. In Proceedings of the 2022 International Conference on Computational Modelling, Simulation and Optimization (ICCMSO), Pathum Thani, Thailand, 23–25 December 2022; pp. 325–330.

15. Liu, R.; Chen, Y.; Li, A.; Ding, Y.; Yu, H.; Guan, C. Aggregating intrinsic information to enhance BCI performance through federated learning. Neural Netw. 2024, 172, 106100.

16. Ghader, M.; Farahani, B.; Rezvani, Z.; Shahsavari, M.; Fazlali, M. Exploiting Federated Learning for EEG-based Brain-Computer Interface System. In Proceedings of the 2023 IEEE International Conference on Omni-Layer Intelligent Systems (COINS), Berlin, Germany, 23–25 July 2023; pp. 1–6.

17. Protani, A.; Giusti, L.; Aillet, A.S.; Sacco, S.; Manganotti, P.; Marinelli, L.; Santos, D.R.; Brutti, P.; Caliandro, P.; Serio, L. Federated GNNs for EEG-Based Stroke Assessment. arXiv 2024, arXiv:2411.02286.

18. Chan, C.; Zheng, Q.; Xu, C.; Wang, Q.; Heng, P.A. Adaptive Federated Learning for EEG Emotion Recognition. In Proceedings of the 2024 International Joint Conference on Neural Networks (IJCNN), Yokohama, Japan, 30 June–5 July 2024; pp. 1–8.

19. Khan, Z.Y.; Niu, Z. CNN with depthwise separable convolutions and combined kernels for rating prediction. Expert Syst. Appl. 2021, 170, 114528.

20. Tu, C.-H.; Lee, J.-H.; Chan, Y.-M.; Chen, C.-S. Pruning depthwise separable convolutions for mobilenet compression. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–8.

21. Zhou, W.; Ji, J.; Jiang, Y.; Wang, J.; Qi, Q.; Yi, Y. EARDS: EfficientNet and attention-based residual depth-wise separable convolution for joint OD and OC segmentation. Front. Neurosci. 2023, 17, 1139181.

22. Schuler, J.P.S.; Romani, S.; Abdel-Nasser, M.; Rashwan, H.; Puig, D. Grouped pointwise convolutions reduce parameters in convolutional neural networks. In Mendel; Brno University of Technology: Brno, Czech Republic, 2022; Volume 28, pp. 23–31.

23. Wu, Q.; Fokoue, E. Epileptic Seizure Recognition Data Set, Rochester Institute of Technology. 2017. Available online: https://www.kaggle.com/datasets/harunshimanto/epileptic-seizure-recognition (accessed on 30 August 2025).

24. Yahong, M.; Zhentao, H.; Jianyun, S.; Hangyu, S.; Dong, W.; Shanshan, J. A multi-channel feature fusion CNN-BI-LSTM epilepsy EEG classification and prediction model based on attention mechanism. IEEE Access 2023, 11, 62855–62864.

25. Anubhav, G.; Soham, G.; Ayushi, R.; Sankhadeep, C. Epileptic Seizure Recognition Using Deep Neural Network. In Emerging Technology in Modelling and Graphics: Proceedings of IEM Graph 2018; Springer: Singapore, 2019; pp. 21–28.

26. Maryam, N.; Mohammad, R.K.; Seyed, V.S. A Robust Framework for Epileptic Seizure Diagnosis: Utilizing GRU-CNN Architectures in EEG Signal Analysis. In Proceedings of the 20th CSI International Symposium on Artificial Intelligence and Signal Processing (AISP), Babol, Iran, 21–22 February 2024; pp. 1–6.

27. Ahmad, I.; Wang, X.; Javeed, D.; Kumar, P.; Samuel, O.W.; Chen, S. A Hybrid Deep Learning Approach for Epileptic Seizure Detection in EEG signals. IEEE J. Biomed. Health Inform. 2023, 1–12.

28. Andrzejak, R.G.; Lehnertz, K.; Mormann, F.; Rieke, C.; David, P.; Elger, C.E. Indications of nonlinear deterministic and finite-dimensional structures in time series of brain electrical activity: Dependence on recording region and brain state. Phys. Rev. E 2001, 64, 061907.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).