Big Loop and Atomization: A Holistic Review on the Expansion Capabilities of Large Language Models

Abstract

1. Introduction

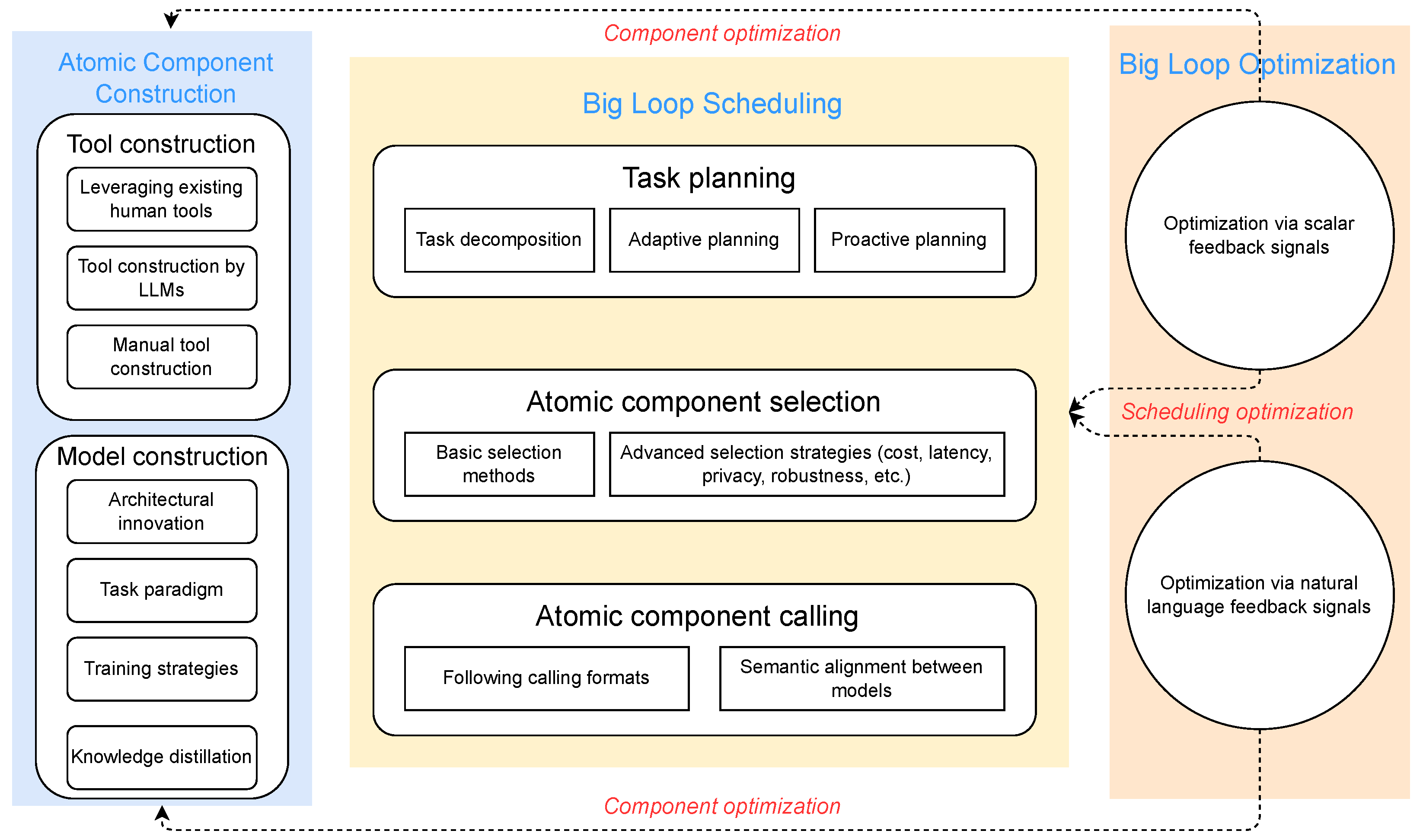

- First, we propose a systematic Construction–Scheduling–Optimization taxonomy for Big Loop and Atomization that deconstructs the overall system into its core methodological components. On this basis, we build a comprehensive theoretical framework and provide clearer definitions of both concepts. To our knowledge, this is also the first systematic review dedicated specifically to Big Loop and Atomization.

- Second, by applying the Big Loop and Atomization framework, we unify and integrate fragmented research efforts—especially by treating tools and models as atomic components—thereby fostering cross-pollination among different research paradigms.

- Finally, we examine the real-world challenges and future directions of Big Loop and Atomization, offering a forward-looking perspective to support the transition of these technologies from research to real-world deployment.

2. Definition of Big Loop and Atomization

2.1. Classification of Atomic Components

2.1.1. Tool Components

- Non-learnability: The logic of the tool is fixed and cannot be optimized through data-driven training;

- Strict formatting: Calls must adhere to predefined input–output formats; missing parameters may cause the call to fail;

- Deterministic execution: The same input yields the same output, with little or no randomness in execution.
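The three tool properties above can be made concrete with a small wrapper. This is an illustrative sketch only: `ToolSpec`, its `call` method, and the `celsius_to_fahrenheit` tool are hypothetical names, not part of any framework discussed in this review. The wrapper rejects calls whose arguments do not match a declared schema (strict formatting) and wraps a pure function whose logic is fixed (non-learnable, deterministic).

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict

@dataclass(frozen=True)
class ToolSpec:
    """Hypothetical tool descriptor: a name, a required-parameter schema, and fixed logic."""
    name: str
    params: Dict[str, type]      # parameter name -> expected Python type
    fn: Callable[..., Any]       # fixed (non-learnable) implementation

    def call(self, args: Dict[str, Any]) -> Any:
        # Strict formatting: reject missing, unexpected, or mistyped arguments.
        missing = [p for p in self.params if p not in args]
        if missing:
            raise ValueError(f"{self.name}: missing parameters {missing}")
        unexpected = sorted(set(args) - set(self.params))
        if unexpected:
            raise ValueError(f"{self.name}: unexpected parameters {unexpected}")
        for p, expected in self.params.items():
            if not isinstance(args[p], expected):
                raise TypeError(f"{self.name}: '{p}' must be {expected.__name__}")
        # Deterministic execution: a pure function maps equal inputs to equal outputs.
        return self.fn(**args)

celsius_to_fahrenheit = ToolSpec(
    name="celsius_to_fahrenheit",
    params={"celsius": float},
    fn=lambda celsius: celsius * 9 / 5 + 32,
)

print(celsius_to_fahrenheit.call({"celsius": 100.0}))  # 212.0
```

A model component, by contrast, would accept loosely phrased input; the wrapper above deliberately fails fast instead, which is the behavior the "strict formatting" property describes.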

2.1.2. Model Components

- Learnability: They can be optimized through data training to improve task performance;

- Flexible interaction: They impose looser constraints on input formats than tools, tolerating semantically equivalent variations in expressions;

2.1.3. Agent Components

2.2. Hierarchical Composability of Big Loop

- Task decomposition mechanism: Complex application scenarios can be decomposed into multiple subtasks, each corresponding to an independent sub-loop. For example, a medical diagnosis system can be divided into four sub-loops: “symptom collection–examination suggestion–diagnostic reasoning–treatment planning”;

- Component reusability principle: Basic atomic components can be reused across different loops; for instance, a retrieval tool can serve both the symptom collection and diagnostic reasoning stages;

- Standardized interaction protocols: By defining unified interaction interfaces (e.g., function call formats, data transmission protocols), smooth coordination across loops can be ensured.
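A minimal sketch of hierarchical composability follows, assuming a hypothetical protocol in which every component takes the shared state as a dictionary and returns a dictionary of new fields. Under that assumption, a whole sub-loop exposes the same interface as an atomic component, so loops nest, and a single retrieval tool is reused by two sub-loops. The medical example is reduced to two of its four sub-loops, and the knowledge base is a toy dictionary, not clinical content.

```python
from functools import partial
from typing import Callable, Dict, List

# Assumed protocol: every component maps the shared state to a dict of new fields.
State = Dict[str, str]
Component = Callable[[State], State]

def run_loop(components: List[Component], state: State) -> State:
    """Run a loop by applying each component in order and merging its output into the state."""
    for component in components:
        state = {**state, **component(state)}
    return state

def as_component(sub_loop: List[Component]) -> Component:
    """Wrap a sub-loop so it exposes the same interface as an atomic component."""
    return partial(run_loop, sub_loop)

def retrieval_tool(state: State) -> State:
    # Toy knowledge base standing in for a real retrieval backend.
    kb = {"fever": "possible infection", "cough": "possible respiratory issue"}
    hits = [kb[w] for w in state["query"].split() if w in kb]
    return {"evidence": "; ".join(hits) or "no match"}

def collect_symptoms(state: State) -> State:
    return {"query": state["complaint"].lower()}

def reason(state: State) -> State:
    return {"diagnosis": f"consider {state['evidence']}"}

symptom_collection = [collect_symptoms, retrieval_tool]
diagnostic_reasoning = [retrieval_tool, reason]  # same retrieval tool, reused

big_loop = [as_component(symptom_collection), as_component(diagnostic_reasoning)]
result = run_loop(big_loop, {"complaint": "Fever and cough"})
print(result["diagnosis"])  # consider possible infection; possible respiratory issue
```

The design choice worth noting is that the outer loop never distinguishes sub-loops from atomic components; that uniformity is what a standardized interaction protocol buys.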

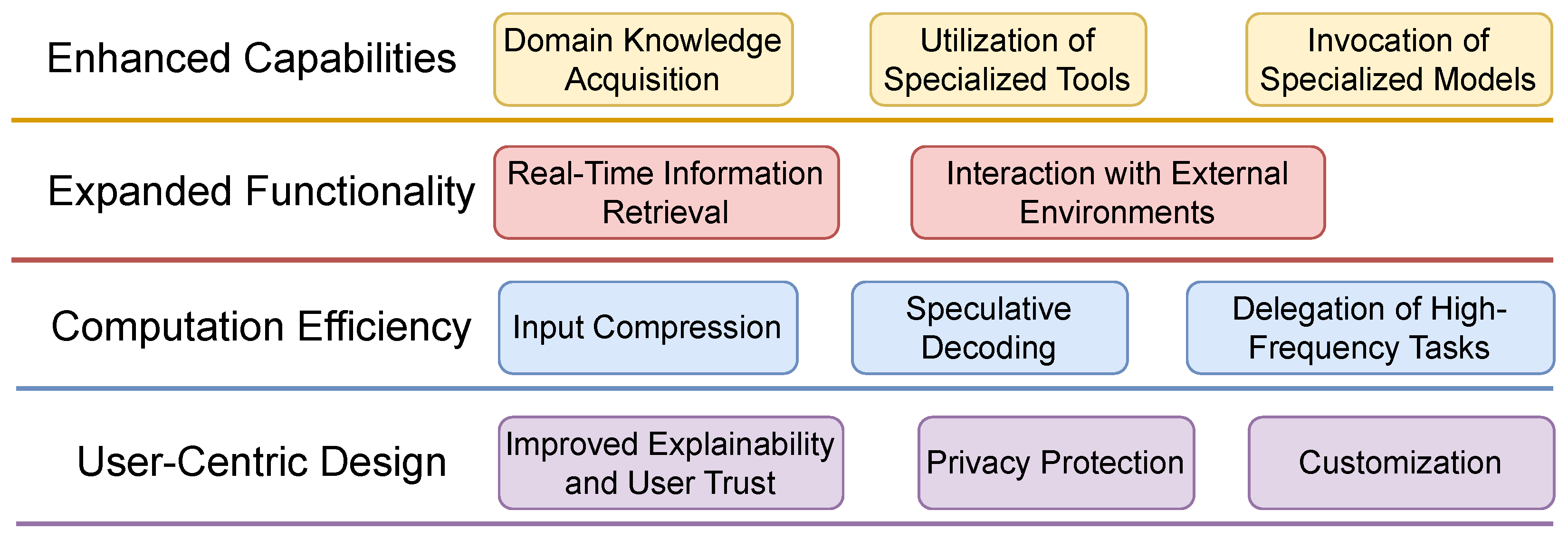

3. Advantages of Big Loop Systems

3.1. Enhanced Capability

- Acquisition of Domain Knowledge: Big Loop systems let LLMs dynamically access external tools to retrieve and integrate external knowledge. With database tools, LLMs can query structured databases for targeted information retrieval and complex queries, effectively expanding their knowledge base [31,32,33]. The retrieval process typically relies on specific atomic models, such as vector (embedding) models [34,35,36,37,38], query optimization modules [39,40,41], and re-ranking components [42,43].

- Use of Domain-Specific Tools: Integrating domain-specific tools extends the LLM’s domain capabilities [22,23,24,25]. For instance, LLMs can use online calculators or mathematical tools to perform complex calculations, solve equations, and conduct statistical analyses [23,44,45,46]; external programming resources (such as Python interpreters) allow LLMs to execute code and refine it based on feedback, improving code quality [47,48,49]. This approach compensates for gaps in professional knowledge and enhances practical utility in specialized scenarios.

- Calling of Specialized Models: In domains such as finance [50,51], law [52,53], healthcare [54,55], and education [56,57], dedicated models can be trained to meet specific needs. Although general-purpose models have become multimodal, they still struggle with certain niche modalities (e.g., hyperspectral images [58], point clouds [59], LiDAR [60], MRI [61]). By calling such specialized models, general models can significantly improve their domain-specific performance.
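The retrieval chain described above (query optimization, vector retrieval, re-ranking) can be sketched as three small functions. This is a toy illustration under stated assumptions: a bag-of-words counter stands in for a learned embedding model, stop-word removal stands in for an LLM-based query rewriter, and query-term coverage stands in for a neural re-ranker. All function names are hypothetical.

```python
import math
from collections import Counter
from typing import List, Tuple

STOPWORDS = {"what", "is", "the", "a", "of", "please", "tell", "me"}

def rewrite_query(query: str) -> str:
    """Stand-in for a query-optimization module: lowercase and drop filler words."""
    return " ".join(w for w in query.lower().split() if w not in STOPWORDS)

def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a real system would call a dense embedding model."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, docs: List[str], k: int = 2) -> List[Tuple[float, str]]:
    """First stage: rank all documents by vector similarity and keep the top k."""
    q = embed(query)
    return sorted(((cosine(q, embed(d)), d) for d in docs), reverse=True)[:k]

def rerank(query: str, candidates: List[Tuple[float, str]], top_n: int = 1) -> List[str]:
    """Second stage: stand-in for a re-ranking model that scores query-term coverage,
    breaking ties with the first-stage similarity."""
    terms = set(query.split())

    def coverage(doc: str) -> float:
        return len(terms & set(doc.lower().split())) / len(terms) if terms else 0.0

    rescored = sorted(((coverage(d), s, d) for s, d in candidates), reverse=True)
    return [d for _, _, d in rescored[:top_n]]

docs = [
    "Interest rate policy of the central bank",
    "The capital of France is Paris",
    "Exchange rate of euro to dollar",
]
query = rewrite_query("What is the exchange rate of euro")
print(rerank(query, retrieve(query, docs)))  # ['Exchange rate of euro to dollar']
```

Splitting retrieval into a cheap first stage over all documents and a more selective second stage over a few candidates mirrors why these are usually separate atomic models.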

3.2. Functionality Expansion

- Access to Real-Time Information: By integrating search engine tools, LLMs can access the latest information [20,21]; with weather tools, they can provide real-time weather updates, forecasts, and historical data [17,62]; interaction with map tools enables geographic data access and location-based queries [7]; in the financial sector, real-time exchange rate APIs and stock market tools allow for precise valuation of international asset portfolios [63].

- Interaction with External Environments: LLMs are essentially language processors and lack the capability to independently execute external operations such as booking meeting rooms or flights [9], scheduling [63], setting reminders [64], or filtering emails [65]. Big Loop systems can integrate project management and workflow tools to manage tasks, monitor progress, and optimize processes [65]; integrate online shopping assistants to streamline the shopping process [66]; and utilize spreadsheet tools for direct execution of data analysis and visualization [7].
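Most of the real-time and environment interactions above reduce to one pattern: the LLM emits a structured call, and the loop routes it to a registered function. The sketch below assumes a hypothetical JSON call format with `name` and `arguments` fields; the tools are offline stubs, and the exchange rates in `FX_RATES` are made-up illustrative numbers, not market data.

```python
import json
from typing import Any, Callable, Dict, Tuple

# Made-up illustrative rates; a real tool would query a live exchange-rate API.
FX_RATES: Dict[Tuple[str, str], float] = {("EUR", "USD"): 1.10, ("JPY", "USD"): 0.007}

def get_exchange_rate(base: str, quote: str) -> float:
    return FX_RATES[(base, quote)]

def value_portfolio(holdings: Dict[str, float], quote: str = "USD") -> float:
    """Convert every holding into the quote currency and sum, rounded to cents."""
    total = sum(amount if currency == quote else amount * get_exchange_rate(currency, quote)
                for currency, amount in holdings.items())
    return round(total, 2)

TOOLS: Dict[str, Callable[..., Any]] = {
    "get_exchange_rate": get_exchange_rate,
    "value_portfolio": value_portfolio,
}

def dispatch(model_output: str) -> Any:
    """Parse a JSON tool call emitted by the LLM and route it to the registered tool."""
    call = json.loads(model_output)
    tool = TOOLS.get(call["name"])
    if tool is None:
        raise KeyError(f"unknown tool: {call['name']}")
    return tool(**call["arguments"])

llm_call = ('{"name": "value_portfolio", '
            '"arguments": {"holdings": {"EUR": 1000, "JPY": 100000, "USD": 500}}}')
print(dispatch(llm_call))  # 2300.0
```

Keeping the registry explicit means the LLM can only reach functions the system has chosen to expose, which is also a natural place to add permission checks.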

3.3. Computational Efficiency

3.4. User-Centered Design

- Enhanced Interpretability and User Trust: Most existing LLMs operate as “black boxes” with opaque decision processes and little interpretability [78,79]. This raises user concerns about the reliability of responses, especially in high-stakes domains such as healthcare and finance, where interpretability is critical [7,51]. By orchestrating multiple components, Big Loop systems can expose the intermediate steps of the decision-making process and thereby increase transparency. When errors do occur, users can more readily trace them to the responsible component, which improves understanding and trust and facilitates more effective human–AI collaboration.

- Privacy Protection: LLM applications face major challenges in protecting user privacy [80]. Directly transmitting personal data to general models risks data leakage. Big Loop systems can locally deploy small models to handle user requests and only transmit privacy-stripped queries to server-side LLMs [81]; additionally, LLMs can utilize federated learning to transfer knowledge without transmitting raw data [82,83].

- Customization Capability: Since Big Loop systems consist of multiple atomic components, specific small models or tools can be optimized or replaced according to user needs, without relying on large-scale labeled datasets or extensive training, thereby enabling easier customization [84].
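The privacy-stripping flow described above can be sketched as a local redact-then-restore step. As an assumption for illustration, two regular expressions stand in for the local small model that detects private spans; a deployed system would need far broader detection. Only the sanitized query would be sent to the server-side LLM, while the placeholder-to-value mapping stays on the device. The names `strip_private` and `restore` are hypothetical.

```python
import re
from typing import Dict, Tuple

# Illustrative patterns standing in for a local small model that detects private spans.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d -]{7,}\d"),
}

def strip_private(query: str) -> Tuple[str, Dict[str, str]]:
    """Replace private spans with placeholders; the mapping never leaves the device."""
    mapping: Dict[str, str] = {}
    for label, pattern in PATTERNS.items():
        def to_placeholder(match: re.Match, label: str = label) -> str:
            key = f"[{label}_{len(mapping)}]"
            mapping[key] = match.group(0)
            return key
        query = pattern.sub(to_placeholder, query)
    return query, mapping

def restore(answer: str, mapping: Dict[str, str]) -> str:
    """Re-insert the private values locally into the server-side LLM's answer."""
    for key, value in mapping.items():
        answer = answer.replace(key, value)
    return answer

query = "Email alice@example.com or call +1 555 123 4567 about my results"
sanitized, mapping = strip_private(query)
print(sanitized)  # Email [EMAIL_0] or call [PHONE_1] about my results
```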

4. Construction of Atomized Components

4.1. Tool Construction

4.1.1. Using Human Tools Directly as Model Tools

4.1.2. Manually Constructed Tools

4.1.3. Automatic Construction of Tools by LLMs

4.1.4. Tool Documentation Optimization

4.2. Model Construction

4.2.1. Architectural Innovations

- Limitations and Improvements of Transformer: Despite dominating natural language processing, Transformers face efficiency bottlenecks in long-context handling, chiefly the quadratic cost of self-attention in sequence length, as well as high overall computational costs, prompting researchers to explore hybrid architectures and complementary model families. For example, recurrent neural networks (LSTM/GRU) remain widely used for temporal data (such as financial sequences and speech signals), where their gating mechanisms help capture long-term dependencies [103,104].

- Continuous Evolution and Cross-modal Fusion of CNNs: Through innovations such as residual connections (ResNet), depthwise separable convolutions (MobileNet), and attention modules (CBAM), convolutional neural networks retain a core role in image recognition. In cross-modal settings, vision-language models such as CLIP, some variants of which use CNN image encoders, align locally extracted visual features with global textual semantics to achieve more accurate cross-modal understanding [105,106].

- Challenges and Frontiers in Graph Neural Networks (GNNs): GNNs excel at relational tasks such as social network analysis and molecular structure prediction, but they suffer from over-smoothing as depth grows, limited generalization, and poor efficiency on large-scale graphs. Topological deep learning, through higher-order neighborhood aggregation and dynamic graph representations, is gradually overcoming the expressive limitations of traditional GNNs [107].

- Technical Iterations of Generative Models: GANs generate highly realistic images through adversarial training but suffer from mode collapse; VAEs provide structured latent spaces through variational inference but tend to produce blurry outputs; diffusion models (e.g., Stable Diffusion) combine high generation quality with stable training through iterative denoising and have become the mainstream approach for multimodal generation [103].
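The quadratic cost of Transformers mentioned above follows directly from standard scaled dot-product attention, where $n$ is the sequence length and $d_k$ the key dimension:

```latex
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{QK^{\top}}{\sqrt{d_k}}\right) V,
\qquad Q, K \in \mathbb{R}^{n \times d_k},\quad V \in \mathbb{R}^{n \times d_v},\quad QK^{\top} \in \mathbb{R}^{n \times n}
```

Because $QK^{\top}$ is an $n \times n$ matrix, computing it takes $O(n^2 d_k)$ time and storing it takes $O(n^2)$ memory, so doubling the context length quadruples the score matrix. A recurrent layer with hidden size $d$ instead performs one $O(d^2)$ update per token, for $O(n d^2)$ overall: linear in $n$, but computed sequentially.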

4.2.2. Task Paradigms

4.2.3. Training Strategies

- Supervised Fine-tuning (SFT): Uses domain-specific labeled data to adapt pretrained LLMs to target tasks.

- Reinforcement Learning from Human Feedback (RLHF): Incorporates human feedback signals into policy optimization for more aligned behavior.

- Self-Supervised Learning: Leverages massive unlabeled data via pretext tasks (e.g., masked modeling, contrastive learning) to enhance representations.

- Knowledge Distillation: Transfers knowledge from large teacher models to smaller student models, enabling lightweight yet performant components.

4.2.4. Knowledge Distillation

- Logits Distillation: Matching the output probabilities of teacher and student models.

- Feature Distillation: Aligning intermediate layer representations.

- Relation Distillation: Preserving inter-sample or inter-class relationships.

- Data-Free Distillation: Generating synthetic data for distillation without original training data.
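Logits distillation is commonly implemented as a KL divergence between temperature-softened teacher and student output distributions. The plain-Python sketch below illustrates that loss; the function names and the default temperature of 2.0 are assumptions, and in practice this term is usually mixed with a standard cross-entropy loss on the hard labels.

```python
import math
from typing import List

def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def logits_distillation_loss(student_logits: List[float], teacher_logits: List[float],
                             temperature: float = 2.0) -> float:
    """KL(teacher || student) between temperature-softened distributions,
    scaled by T^2 so gradient magnitudes stay comparable across temperatures."""
    p = softmax(teacher_logits, temperature)   # soft targets from the teacher
    q = softmax(student_logits, temperature)   # student predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl

teacher = [1.0, 2.0, 3.0]
print(logits_distillation_loss([1.0, 2.0, 3.0], teacher))  # identical logits -> 0.0
print(logits_distillation_loss([3.0, 2.0, 1.0], teacher))  # reversed ranking -> positive
```

A higher temperature flattens the teacher distribution so that the relative probabilities of non-target classes, which carry much of the transferable knowledge, contribute more to the loss.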

5. Big Loop Scheduling

5.1. Task Planning

5.1.1. Task Decomposition

5.1.2. Component-Based Planning

5.1.3. Adaptive Planning

5.1.4. Proactive Planning

5.2. Atomic Component Selection

5.2.1. Basic Selection Methods

5.2.2. Advanced Selection Strategies

5.3. Atomic Component Calling

5.3.1. Adhering to Calling Formats

5.3.2. Semantic Alignment Between Models

5.4. Future Directions

- Robustness and Reliability in Complex Multi-Agent Systems: Handling error accumulation, knowledge drift, and conflict alignment remains a key challenge in highly dynamic LLM multi-agent systems [115]. Future work should focus on quantifiable uncertainty management, formal verification, and adaptive runtime monitoring.

- Explainability and Transparency: As LLM-driven closed-loop systems grow increasingly complex, understanding their decision-making processes and ensuring transparency are crucial for building trust and facilitating debugging. This includes explaining internal reasoning steps of LLMs as well as how components interact.

- Ethical Considerations and Governance: The growing autonomy and impact of these systems demand sound ethical frameworks, bias mitigation strategies, and clear accountability mechanisms, especially in sensitive domains [112,114]. This also involves addressing potential issues such as hallucination propagation and implicit collusion [115].

- Scalability and Efficiency: Although modularization improves scalability, optimizing computational costs and latency across networks of models and tools remains an ongoing challenge, particularly for real-time and resource-constrained environments [116].

- Generalization to Novel and Open-Ended Tasks: Enhancing these systems’ ability to generalize to truly novel, underspecified, or open-ended tasks without extensive retraining or human intervention is a critical long-term goal. This includes improving LLMs’ inference of new action semantics and adaptation to unknown environments [133].

6. Big Loop Optimization

6.1. Atomic Component Construction Optimization

6.1.1. Model Optimization

- Supervised Fine-Tuning (SFT): Directly optimizes model parameters using labeled data. For example, SiriuS [145] collects high-performance reasoning trajectories to perform independent supervised fine-tuning on multi-role LLM nodes; multi-agent fine-tuning [146] generates training data via multi-agent debates to optimize both generative and critic models.

- Reinforcement Learning (RL): Guides model optimization through reward functions. MAPoRL [147] trains verifier LLMs to assign correctness rewards for multi-agent debates, combining impact-aware rewards to promote cooperation; GPTSwarm [148] uses the REINFORCE algorithm to optimize the connectivity probability distribution within an agent swarm.

- Natural Language Feedback Optimization: Utilizes LLM-generated textual guidance to update models. For example, TextGrad [149] employs an evaluator LLM to generate textual loss signals and a gradient estimator LLM to produce node optimization suggestions, simulating natural language backpropagation; LLM-AutoDiff [149] introduces temporal gradient accumulation of textual feedback for cyclic system structures to optimize multi-component LLM workflows.
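The REINFORCE-based connectivity optimization attributed to GPTSwarm above can be illustrated with a simplified sketch. As assumptions, each candidate edge between two agents is an independent Bernoulli variable with probability sigmoid(theta), the reward is a hypothetical `toy_reward` that favors one edge and penalizes another, and a moving-average baseline reduces variance. This is not GPTSwarm's code; it only shows the score-function gradient applied to edge probabilities.

```python
import math
import random
from typing import Callable, Dict, List, Set, Tuple

Edge = Tuple[str, str]

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def reinforce_edges(edges: List[Edge], reward_fn: Callable[[Set[Edge]], float],
                    steps: int = 2000, lr: float = 0.1, seed: int = 0) -> Dict[Edge, float]:
    """Learn an inclusion probability per candidate edge with REINFORCE.
    Each edge is an independent Bernoulli variable with p = sigmoid(theta)."""
    rng = random.Random(seed)
    theta = {e: 0.0 for e in edges}   # every edge starts at p = 0.5
    baseline = 0.0                     # moving-average baseline to reduce variance
    for _ in range(steps):
        sample = {e: rng.random() < sigmoid(theta[e]) for e in edges}
        reward = reward_fn({e for e, used in sample.items() if used})
        advantage = reward - baseline
        for e in edges:
            # Score function: d/dtheta log Bernoulli(x; sigmoid(theta)) = x - sigmoid(theta)
            theta[e] += lr * advantage * (float(sample[e]) - sigmoid(theta[e]))
        baseline = 0.9 * baseline + 0.1 * reward
    return {e: sigmoid(t) for e, t in theta.items()}

# Hypothetical task reward: the coder->reviewer edge helps, the reverse edge hurts.
USEFUL: Edge = ("coder", "reviewer")
HARMFUL: Edge = ("reviewer", "coder")

def toy_reward(graph: Set[Edge]) -> float:
    return float(USEFUL in graph) - float(HARMFUL in graph)

probs = reinforce_edges([USEFUL, HARMFUL], toy_reward)
```

In a real system the reward would come from running the sampled agent graph on a task and scoring the result, which is why a low-variance gradient estimator matters: every sample costs LLM calls.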

6.1.2. Tool Optimization

- Tool Creation and Integration: In Agent Symbolic Learning (ASL) [150], the ToolOptimizer component supports tool creation, while the PipelineOptimizer handles node additions and deletions; ADAS represents agentic systems as Python code and uses a meta-LLM to iteratively design workflows that contain tool calls (such as web search and code-interpreter integration).

- Tool Parameter Tuning: DSPy [151] applies rejection sampling (Bootstrap-) to generate high-quality in-context examples (demonstrations) for optimizing tool-call parameters; MIPRO jointly optimizes tool instructions and demonstration configurations using a Bayesian surrogate model.

- Collaborative Tool Optimization: GASO [152] addresses TextGrad’s neglect of interactions among sibling tools by proposing semantic gradient descent, which aggregates context-aware gradients to improve credit assignment across tools.
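The bootstrapping idea attributed to DSPy above amounts to rejection sampling over program traces: run the pipeline on training inputs, keep only the traces a metric accepts, and reuse them as demonstrations. The sketch below is a generic illustration, not the DSPy API; `bootstrap_demos`, `noisy_adder`, `exact_match`, and the arithmetic training set are hypothetical.

```python
import random
from typing import Callable, List, Tuple

Example = Tuple[str, str]  # (input, gold output)

def bootstrap_demos(program: Callable[[str, random.Random], str],
                    trainset: List[Example],
                    metric: Callable[[str, str], bool],
                    max_demos: int = 2, attempts: int = 3, seed: int = 0) -> List[Example]:
    """Rejection sampling: keep only (input, output) traces that pass the metric."""
    rng = random.Random(seed)
    demos: List[Example] = []
    for x, gold in trainset:
        if len(demos) >= max_demos:
            break
        # Resample up to `attempts` times; rejected traces are simply discarded.
        for _ in range(attempts):
            pred = program(x, rng)
            if metric(pred, gold):
                demos.append((x, pred))
                break
    return demos

def noisy_adder(x: str, rng: random.Random) -> str:
    """Hypothetical unreliable component: off by one about a third of the time."""
    a, b = map(int, x.split("+"))
    return str(a + b + rng.choice([0, 0, 1]))

def exact_match(pred: str, gold: str) -> bool:
    return pred == gold

trainset = [("2+3", "5"), ("10+7", "17"), ("4+4", "8")]
demos = bootstrap_demos(noisy_adder, trainset, exact_match)
```

Because only metric-approved traces survive, the resulting demonstrations are correct by construction with respect to the metric, which is the property that makes them safe to place in a prompt.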

6.2. Big Loop Scheduling Optimization

- Topology Optimization: Topology optimization can be divided into two categories. The first involves fixed-structure adjustment. For example, Trace updates all LLM prompts at once based on global natural language feedback, using minimal subgraphs to reduce LLM calls; DSPy [151] uses guided sampling to optimize tool calling order within fixed pipelines, enhancing overall task efficiency. The second category involves flexible structure design. AFlow [153] applies Monte Carlo Tree Search (MCTS) to explore optimal system topologies within predefined operator spaces, preserving historical design experience to support efficient iterative improvements; DebFlow [154] introduces a multi-agent debate framework with debaters and judge roles to dynamically generate optimized workflow structures via collaborative debate.

- Dynamic Workflow Generation: Dynamic workflow generation relies primarily on either numerical signals or natural language feedback. In numerical-signal-driven approaches, DyLAN [155] models multi-round debates as a temporal feed-forward network and incrementally refines the workflow structure by pruning ineffective agents and adaptively reconnecting the surviving nodes; ScoreFlow uses a Score-DPO strategy, sampling candidate workflows from a meta-LLM and collecting preference data from their task-performance differences to improve its decision policy. In natural-language-feedback-driven approaches, AutoFlow [156] prompts a meta-LLM to generate candidate workflows in CoRE syntax and uses the average score on task data as the reward for reinforcement fine-tuning; MAS-GPT constructs planner-query-MAS training sets and fine-tunes a meta-LLM to produce workflows adapted to different tasks.

- System Representation and Execution Optimization: Scheduling logic is implemented using graph structures or code representations. For example, GPTSwarm [148] models the system as a hierarchical graph of nodes, agents, and swarms, and optimizes cross-level connection weights; FlowReasoner combines Python code representations with multi-objective rewards to dynamically generate efficient workflows that include conditional logic and loops.
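The graph-based representation and the pruning of ineffective agents described above can be combined in a short sketch. It assumes a hypothetical workflow format in which each node maps to the ordered list of its predecessors, uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+) for execution order, and applies a simplified rule, loosely inspired by DyLAN, that drops nodes below an importance threshold and reconnects their successors. The node functions are stand-ins for LLM agent calls, and the importance scores are given rather than learned.

```python
from graphlib import TopologicalSorter
from typing import Any, Callable, Dict, List

Deps = Dict[str, List[str]]  # node -> ordered list of predecessor nodes

def run_workflow(nodes: Dict[str, Callable[[List[Any]], Any]], deps: Deps,
                 inputs: Dict[str, Any]) -> Dict[str, Any]:
    """Execute a DAG workflow; each node receives its predecessors' outputs in order."""
    results = dict(inputs)
    for name in TopologicalSorter(deps).static_order():
        if name in results:  # external inputs such as "task" are already available
            continue
        results[name] = nodes[name]([results[p] for p in deps.get(name, [])])
    return results

def prune(deps: Deps, importance: Dict[str, float], threshold: float) -> Deps:
    """Drop nodes whose importance is below the threshold and reconnect their
    successors to the dropped node's own predecessors."""
    kept = {n: list(ps) for n, ps in deps.items()}
    for node in [n for n in deps if importance.get(n, 1.0) < threshold]:
        parents = kept.pop(node)
        for n, ps in kept.items():
            if node in ps:
                rewired = [p for p in ps if p != node]
                rewired += [p for p in parents if p not in rewired]
                kept[n] = rewired
    return kept

nodes = {
    "parse": lambda xs: [int(t) for t in xs[0].split()],
    "draft": lambda xs: sum(xs[0]),
    "critic": lambda xs: xs[0],          # low-value pass-through agent
    "final": lambda xs: f"answer={xs[0]}",
}
deps = {"parse": ["task"], "draft": ["parse"], "critic": ["draft"], "final": ["critic"]}
pruned = prune(deps, importance={"critic": 0.1}, threshold=0.5)
print(pruned["final"])                                          # ['draft']
print(run_workflow(nodes, pruned, {"task": "2 3 4"})["final"])  # answer=9
```

Separating the topology (`deps`) from the node behavior (`nodes`) is what lets an optimizer edit the structure without touching any agent, which is the premise shared by the topology-optimization methods above.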

7. Challenges and Future Trends

7.1. Technical Challenges

7.2. Data Challenges

7.3. Optimization Strategy Challenges

7.4. Future Trends

8. Conclusions

- Modular Collaboration: Forming a complementary system of “professional capability–generalization capability–decision-making capability” through the deterministic execution of tools, the learnability of models, and the environmental adaptability of agents.

- Dynamic Optimization Mechanism: Achieving the joint iteration of component parameter tuning and topological structure optimization by combining natural language feedback and numerical signals.

- Hierarchical Task Decomposition: Reducing the implementation cost of complex tasks through sub-loop reuse and standardized interaction protocols.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901. [Google Scholar]

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language models are unsupervised multitask learners. OpenAI Blog 2019, 1, 9. [Google Scholar]

- Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.-A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; et al. LLaMA: Open and Efficient Foundation Language Models. arXiv 2023, arXiv:2302.13971. [Google Scholar] [CrossRef]

- Liu, A.; Feng, B.; Xue, B.; Wang, B.; Wu, B.; Lu, C.; Zhao, C.; Deng, C.; Zhang, C.; Ruan, C.; et al. Deepseek-v3 technical report. arXiv 2024, arXiv:2412.19437. [Google Scholar]

- Yang, A.; Li, A.; Yang, B.; Zhang, B.; Hui, B.; Zheng, B.; Yu, B.; Gao, C.; Huang, C.; Lv, C.; et al. Qwen3 technical report. arXiv 2025, arXiv:2505.09388. [Google Scholar] [CrossRef]

- Feng, J. Systematic artificial intelligence. J. Beijing Univ. Posts Telecommun. 2024, 47, 1. [Google Scholar]

- Qin, Y.; Hu, S.; Lin, Y.; Chen, W.; Ding, N.; Cui, G.; Zeng, Z.; Zhou, X.; Huang, Y.; Xiao, C.; et al. Tool learning with foundation models. ACM Comput. Surv. 2024, 57, 1–40. [Google Scholar] [CrossRef]

- Qu, C.; Dai, S.; Wei, X.; Cai, H.; Wang, S.; Yin, D.; Xu, J.; Wen, J.-R. Tool learning with large language models: A survey. Front. Comput. Sci. 2025, 19, 198343. [Google Scholar] [CrossRef]

- Wang, Z.; Cheng, Z.; Zhu, H.; Fried, D.; Neubig, G. What Are Tools Anyway? A Survey from the Language Model Perspective. In Proceedings of the First Conference on Language Modeling, Philadelphia, PA, USA, 7–9 October 2024. [Google Scholar]

- Wang, F.; Zhang, Z.; Zhang, X.; Wu, Z.; Mo, T.; Lu, Q.; Wang, W.; Li, R.; Xu, J.; Tang, X.; et al. A Comprehensive Survey of Small Language Models in the Era of Large Language Models: Techniques, Enhancements, Applications, Collaboration with LLMs, and Trustworthiness. arXiv 2024, arXiv:2411.03350. [Google Scholar] [CrossRef]

- Lu, J.; Pang, Z.; Xiao, M.; Zhu, Y.; Xia, R.; Zhang, J. Merge, ensemble, and cooperate! a survey on collaborative strategies in the era of large language models. arXiv 2024, arXiv:2407.06089. [Google Scholar] [CrossRef]

- Wang, L.; Ma, C.; Feng, X.; Zhang, Z.; Yang, H.; Zhang, J.; Chen, Z.; Tang, J.; Chen, X.; Lin, Y.; et al. A survey on large language model based autonomous agents. Front. Comput. Sci. 2024, 18, 186345. [Google Scholar] [CrossRef]

- Xi, Z.; Chen, W.; Guo, X.; He, W.; Ding, Y.; Hong, B.; Zhang, M.; Wang, J.; Jin, S.; Zhou, E.; et al. The rise and potential of large language model based agents: A survey. Sci. China Inf. Sci. 2025, 68, 121101. [Google Scholar] [CrossRef]

- Luo, J.; Zhang, W.; Yuan, Y.; Zhao, Y.; Yang, J.; Gu, Y.; Wu, B.; Chen, B.; Qiao, Z.; Long, Q.; et al. Large language model agent: A survey on methodology, applications and challenges. arXiv 2025, arXiv:2503.21460. [Google Scholar] [CrossRef]

- Guo, T.; Chen, X.; Wang, Y.; Chang, R.; Pei, S.; Chawla, N.V.; Wiest, O.; Zhang, X. Large language model based multi-agents: A survey of progress and challenges. In Proceedings of the Thirty-Third International Joint Conference on Artificial Intelligence, Jeju, Republic of Korea, 3–9 August 2024; pp. 8048–8057. [Google Scholar]

- Patil, S.G.; Zhang, T.; Wang, X.; Gonzalez, J.E. Gorilla: Large Language Model Connected with Massive APIs. In Proceedings of the Thirty-Eighth Annual Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 10–15 December 2024. [Google Scholar]

- Xu, Q.; Hong, F.; Li, B.; Hu, C.; Chen, Z.; Zhang, J. On the tool manipulation capability of open-source large language models. arXiv 2023, arXiv:2305.16504. [Google Scholar] [CrossRef]

- Anantha, R.; Bandyopadhyay, B.; Kashi, A.; Mahinder, S.; Hill, A.W.; Chappidi, S. ProTIP: Progressive Tool Retrieval Improves Planning. arXiv 2023, arXiv:2312.10332. [Google Scholar] [CrossRef]

- Li, M.; Zhao, Y.; Yu, B.; Song, F.; Li, H.; Yu, H.; Li, Z.; Huang, F.; Li, Y. API-Bank: A Comprehensive Benchmark for Tool-Augmented LLMs. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Singapore, 6–10 December 2023; pp. 3102–3116. [Google Scholar]

- Xiong, H.; Bian, J.; Li, Y.; Li, X.; Du, M.; Wang, S.; Yin, D.; Helal, S. When search engine services meet large language models: Visions and challenges. IEEE Trans. Serv. Comput. 2024, 17, 4558–4577. [Google Scholar] [CrossRef]

- Jin, B.; Zeng, H.; Yue, Z.; Yoon, J.; Arik, S.; Wang, D.; Zamani, H.; Han, J. Search-r1: Training llms to reason and leverage search engines with reinforcement learning. arXiv 2025, arXiv:2503.09516. [Google Scholar]

- He-Yueya, J.; Poesia, G.; Wang, R.; Goodman, N. Solving Math Word Problems by Combining Language Models with Symbolic Solvers. In Proceedings of the 3rd Workshop on Mathematical Reasoning and AI at NeurIPS’23, Los Angeles, CA, USA, 15 December 2023. [Google Scholar]

- Kadlčík, M.; Štefánik, M.; Sotolar, O.; Martinek, V. Calc-X and Calcformers: Empowering Arithmetical Chain-of-Thought through Interaction with Symbolic Systems. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Singapore, 6–10 December 2023; pp. 12101–12108. [Google Scholar]

- Jin, Q.; Yang, Y.; Chen, Q.; Lu, Z. Genegpt: Augmenting large language models with domain tools for improved access to biomedical information. Bioinformatics 2024, 40, btae075. [Google Scholar] [CrossRef]

- Kim, Y.; Park, C.; Jeong, H.; Chan, Y.S.; Xu, X.; McDuff, D.; Lee, H.; Ghassemi, M.; Breazeal, C.; Park, H.W. Mdagents: An adaptive collaboration of llms for medical decision-making. Adv. Neural Inf. Process. Syst. 2024, 37, 79410–79452. [Google Scholar]

- OpenAI. ChatGPT. 2025. Available online: https://openai.com/index/chatgpt/ (accessed on 1 August 2025).

- Huang, L.; Yu, W.; Ma, W.; Zhong, W.; Feng, Z.; Wang, H.; Chen, Q.; Peng, W.; Feng, X.; Qin, B.; et al. A survey on hallucination in large language models: Principles, taxonomy, challenges, and open questions. ACM Trans. Inf. Syst. 2025, 43, 1–55. [Google Scholar] [CrossRef]

- Liu, H.; Xue, W.; Chen, Y.; Chen, D.; Zhao, X.; Wang, K.; Hou, L.; Li, R.; Peng, W. A survey on hallucination in large vision-language models. arXiv 2024, arXiv:2402.00253. [Google Scholar] [CrossRef]

- Bai, Z.; Wang, P.; Xiao, T.; He, T.; Han, Z.; Zhang, Z.; Shou, M.Z. Hallucination of multimodal large language models: A survey. arXiv 2024, arXiv:2404.18930. [Google Scholar] [CrossRef]

- Bang, Y.; Ji, Z.; Schelten, A.; Hartshorn, A.; Fowler, T.; Zhang, C.; Cancedda, N.; Fung, P. Hallulens: Llm hallucination benchmark. arXiv 2025, arXiv:2504.17550. [Google Scholar] [CrossRef]

- Liang, L.; Bo, Z.; Gui, Z.; Zhu, Z.; Zhong, L.; Zhao, P.; Sun, M.; Zhang, Z.; Zhou, J.; Chen, W.; et al. Kag: Boosting llms in professional domains via knowledge augmented generation. In Proceedings of the Companion the ACM on Web Conference 2025, Sydney, NSW, Australia, 28 April–2 May 2025; pp. 334–343. [Google Scholar]

- Zhang, Q.; Chen, S.; Bei, Y.; Yuan, Z.; Zhou, H.; Hong, Z.; Dong, J.; Chen, H.; Chang, Y.; Huang, X. A Survey of Graph Retriev-al-Augmented Generation for Customized Large Language Models. arXiv 2025, arXiv:2501.13958. [Google Scholar]

- Cheng, M.; Luo, Y.; Ouyang, J.; Liu, Q.; Liu, H.; Li, L.; Yu, S.; Zhang, B.; Cao, J.; Ma, J.; et al. A survey on knowledge-oriented re-trieval-augmented generation. arXiv 2025, arXiv:2503.10677. [Google Scholar]

- Chen, J.; Xiao, S.; Zhang, P.; Luo, K.; Lian, D.; Liu, Z. Bge m3-embedding: Multi-lingual, multi-functionality, multi-granularity text embeddings through self-knowledge distillation. arXiv 2024, arXiv:2402.03216. [Google Scholar]

- AI21. Embeddings. Available online: https://docs.ai21.com/docs/embeddings-api (accessed on 1 August 2025).

- Amazon. Amazon Titan Text Embeddings Models. Available online: https://docs.aws.amazon.com/bedrock/latest/userguide/titanembedding-models.html (accessed on 1 August 2025).

- OpenAI. Embeddings. Available online: https://platform.openai.com/docs/guides/embeddings (accessed on 1 August 2025).

- Voyage AI. Embeddings. Available online: https://docs.voyageai.com/docs/embeddings (accessed on 1 August 2025).

- Dhuliawala, S.; Komeili, M.; Xu, J.; Raileanu, R.; Li, X.; Celikyilmaz, A.; Weston, J. Chain-of-Verification Reduces Hallucination in Large Language Models. In Proceedings of the Findings of the Association for Computational Linguistics ACL 2024, Bangkok, Thailand, 11–16 August 2024; pp. 3563–3578. [Google Scholar]

- Peng, W.; Li, G.; Jiang, Y.; Wang, Z.; Ou, D.; Zeng, X.; Xu, D.; Xu, T.; Chen, E. Large language model based long-tail query rewriting in taobao search. In Proceedings of the Companion the ACM on Web Conference 2024, Singapore, 13–17 May 2024; pp. 20–28. [Google Scholar]

- Gao, L.; Ma, X.; Lin, J.; Callan, J. Precise zero-shot dense retrieval without relevance labels. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics, Toronto, Canada, ON, 9–14 July 2023; Volume 1: Long Papers, pp. 1762–1777. [Google Scholar]

- Zhang, Y.; Li, M.; Long, D.; Zhang, X.; Lin, H.; Yang, B.; Xie, P.; Yang, A.; Liu, D.; Lin, J. Qwen3 Embedding: Advancing Text Em-bedding and Reranking Through Foundation Models. arXiv 2025, arXiv:2506.05176. [Google Scholar]

- Yang, E.; Yates, A.; Ricci, K.; Weller, O.; Chari, V.; Van Durme, B.; Lawrie, D. Rank-K: Test-Time Reasoning for Listwise Reranking. arXiv 2025, arXiv:2505.14432. [Google Scholar]

- Shao, Z.; Huang, F.; Huang, M. Chaining Simultaneous Thoughts for Numerical Reasoning. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2022, Abu Dhabi, United Arab Emirates, 7–11 December 2022; pp. 2533–2547. [Google Scholar]

- Gou, Z.; Shao, Z.; Gong, Y.; Yang, Y.; Huang, M.; Duan, N.; Chen, W. ToRA: A Tool-Integrated Reasoning Agent for Mathematical Problem Solving. In Proceedings of the Twelfth International Conference on Learning Representations, Vienna, Austria, 7–11 May 2024. [Google Scholar]

- Veerendranath, V.; Shah, V.; Ghate, K. Calc-CMU at SemEval-2024 Task 7: Pre-Calc-Learning to Use the Calculator Improves Nu-meracy in Language Models. In Proceedings of the 18th International Workshop on Semantic Evaluation (SemEval-2024), Vienna, Austria, 7–11 May 2024; pp. 1468–1475. [Google Scholar]

- Gao, L.; Madaan, A.; Zhou, S.; Alon, U.; Liu, P.; Yang, Y.; Callan, J.; Neubig, G. Pal: Program-aided language models. In Proceedings of the International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; pp. 10764–10799. [Google Scholar]

- Wang, X.; Wang, Z.; Liu, J.; Chen, Y.; Yuan, L.; Peng, H.; Ji, H. MINT: Evaluating LLMs in Multi-turn Interaction with Tools and Language Feedback. In Proceedings of the Twelfth International Conference on Learning Representations, Vienna, Austria, 7–11 May 2024. [Google Scholar]

- Hu, Q.; Long, Q.; Wang, W. BOOST: Bootstrapping Strategy-Driven Reasoning Programs for Program-Guided Fact-Checking. arXiv 2025, arXiv:2504.02467. [Google Scholar]

- Zhang, X.; Yang, Q. Xuanyuan 2.0: A large chinese financial chat model with hundreds of billions parameters. In Proceedings of the 32nd ACM International Conference on Information and Knowledge Management, Birmingham, UK, 21–25 October 2023; pp. 4435–4439. [Google Scholar]

- Liu, X.-Y.; Wang, G.; Yang, H.; Zha, D. FinGPT: Democratizing Internet-scale Data for Financial Large Language Models. In Proceedings of the NeurIPS 2023 Workshop on Instruction Tuning and Instruction Following, New Orleans, LA, USA, 15 December 2023. [Google Scholar]

- Yue, S.; Chen, W.; Wang, S.; Li, B.; Shen, C.; Liu, S.; Zhou, Y.; Xiao, Y.; Yun, S.; Huang, X.; et al. Disc-lawllm: Fine-tuning large language models for intelligent legal services. arXiv 2023, arXiv:2309.11325. [Google Scholar]

- Fei, Z.; Zhang, S.; Shen, X.; Zhu, D.; Wang, X.; Ge, J.; Ng, V. InternLM-Law: An Open-Sourced Chinese Legal Large Language Model. In Proceedings of the 31st International Conference on Computational Linguistics, Abu Dhabi, United Arab Emirates, 19–24 January 2025; pp. 9376–9392. [Google Scholar]

- Singhal, K.; Tu, T.; Gottweis, J.; Sayres, R.; Wulczyn, E.; Amin, M.; Hou, L.; Clark, K.; Pfohl, S.R.; Cole-Lewis, H.; et al. Toward expert-level medical question answering with large language models. Nat. Med. 2025, 31, 943–950. [Google Scholar] [CrossRef]

- Chen, J.; Wang, X.; Ji, K.; Gao, A.; Jiang, F.; Chen, S.; Zhang, H.; Dingjie, S.; Xie, W.; Kong, C.; et al. HuatuoGPT-II, One-stage Training for Medical Adaption of LLMs. In Proceedings of the First Conference on Language Modeling, Philadelphia, PA, USA, 7–9 October 2024. [Google Scholar]

- Dan, Y.; Lei, Z.; Gu, Y.; Li, Y.; Yin, J.; Lin, J.; Ye, L.; Tie, Z.; Zhou, Y.; Wang, Y.; et al. Educhat: A large-scale language model-based chatbot system for intelligent education. arXiv 2023, arXiv:2308.02773. [Google Scholar]

- Yu, J.; Zhu, J.; Wang, Y.; Liu, Y.; Chang, H.; Nie, J.; Kong, C.; Chong, R.; Liu, X.; An, J.; et al. Taoli Llama. Available online: https://github.com/blcuicall/taoli (accessed on 22 August 2025).

- Pang, L.; Cao, X.; Tang, D.; Xu, S.; Bai, X.; Zhou, F.; Meng, D. Hsigene: A foundation model for hyperspectral image generation. arXiv 2024, arXiv:2409.12470. [Google Scholar]

- Xue, L.; Yu, N.; Zhang, S.; Panagopoulou, A.; Li, J.; Martín-Martín, R.; Wu, J.; Xiong, C.; Xu, R.; Niebles, J.C.; et al. Ulip-2: Towards scalable multimodal pre-training for 3d understanding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 27091–27101. [Google Scholar]

- Yang, S.; Liu, J.; Zhang, R.; Pan, M.; Guo, Z.; Li, X.; Chen, Z.; Gao, P.; Li, H.; Guo, Y.; et al. Lidar-llm: Exploring the potential of large language models for 3d lidar understanding. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 27 February–2 March 2025; Volume 39, pp. 9247–9255. [Google Scholar]

- Tak, D.; Garomsa, B.A.; Chaunzwa, T.L.; Zapaishchykova, A.; Pardo, J.C.C.; Ye, Z.; Zielke, J.; Ravipati, Y.; Vajapeyam, S.; Mahootiha, M.; et al. A foundation model for generalized brain MRI analysis. medRxiv 2024. [Google Scholar] [CrossRef]

- Huang, Y.; Shi, J.; Li, Y.; Fan, C.; Wu, S.; Zhang, Q.; Liu, Y.; Zhou, P.; Wan, Y.; Gong, N.Z.; et al. MetaTool Benchmark for Large Language Models: Deciding Whether to Use Tools and Which to Use. In Proceedings of the Twelfth International Conference on Learning Representations, Vienna, Austria, 7–11 May 2024. [Google Scholar]

- Schick, T.; Dwivedi-Yu, J.; Dessì, R.; Raileanu, R.; Lomeli, M.; Hambro, E.; Zettlemoyer, L.; Cancedda, N.; Scialom, T. Toolformer: Language models can teach themselves to use tools. Adv. Neural Inf. Process. Syst. 2023, 36, 68539–68551. [Google Scholar]

- Zhuang, Y.; Yu, Y.; Wang, K.; Sun, H.; Zhang, C. Toolqa: A dataset for llm question answering with external tools. Adv. Neural Inf. Process. Syst. 2023, 36, 50117–50143. [Google Scholar]

- Qin, Y.; Liang, S.; Ye, Y.; Zhu, K.; Yan, L.; Lu, Y.; Lin, Y.; Cong, X.; Tang, X.; Qian, B.; et al. ToolLLM: Facilitating Large Language Models to Master 16000+ Real-world APIs. arXiv 2023, arXiv:2307.16789.

- Yao, S.; Chen, H.; Yang, J.; Narasimhan, K. WebShop: Towards scalable real-world web interaction with grounded language agents. Adv. Neural Inf. Process. Syst. 2022, 35, 20744–20757.

- Miao, X.; Oliaro, G.; Zhang, Z.; Cheng, X.; Wang, Z.; Wong, R.Y.Y.; Chen, Z.; Arfeen, D.; Abhyankar, R.; Jia, Z. SpecInfer: Accelerating generative LLM serving with speculative inference and token tree verification. arXiv 2023, arXiv:2305.09781.

- Ali, M.A.; Li, Z.; Yang, S.; Cheng, K.; Cao, Y.; Huang, T.; Hu, G.; Lyu, W.; Hu, L.; Yu, L.; et al. PROMPT-SAW: Leveraging relation-aware graphs for textual prompt compression. arXiv 2024, arXiv:2404.00489.

- Huang, X.; Zhang, L.L.; Cheng, K.T.; Yang, F.; Yang, M. Fewer is more: Boosting LLM reasoning with reinforced context pruning. arXiv 2023, arXiv:2312.08901.

- Liu, J.; Li, L.; Xiang, T.; Wang, B.; Qian, Y. TCRA-LLM: Token Compression Retrieval Augmented Large Language Model for Inference Cost Reduction. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2023, Singapore, 6–10 December 2023; pp. 9796–9810.

- Fei, W.; Niu, X.; Zhou, P.; Hou, L.; Bai, B.; Deng, L.; Han, W. Extending Context Window of Large Language Models via Semantic Compression. In Proceedings of the Findings of the Association for Computational Linguistics ACL 2024, Bangkok, Thailand, 11–16 August 2024; pp. 5169–5181.

- Li, J.; Lan, Y.; Wang, L.; Wang, H. PCToolkit: A Unified Plug-and-Play Prompt Compression Toolkit of Large Language Models. arXiv 2024, arXiv:2403.17411.

- Huang, K.; Guo, X.; Wang, M. SpecDec++: Boosting Speculative Decoding via Adaptive Candidate Lengths. In Proceedings of the Workshop on Efficient Systems for Foundation Models II @ ICML 2024, Vienna, Austria, 21–27 July 2024.

- Liu, J.; Wang, Q.; Wang, J.; Cai, X. Speculative Decoding via Early-exiting for Faster LLM Inference with Thompson Sampling Control Mechanism. In Proceedings of the Findings of the Association for Computational Linguistics ACL 2024, Bangkok, Thailand, 11–16 August 2024; pp. 3027–3043.

- Lu, Y.; Zhu, W.; Li, L.; Qiao, Y.; Yuan, F. LLaMAX: Scaling Linguistic Horizons of LLM by Enhancing Translation Capabilities Beyond 100 Languages. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2024, Miami, FL, USA, 12–16 November 2024; pp. 10748–10772.

- Yun, T.; Oh, J.; Min, H.; Lee, Y.; Bang, J.; Cai, J.; Song, H. ReFeed: Multi-dimensional Summarization Refinement with Reflective Reasoning on Feedback. arXiv 2025, arXiv:2503.21332.

- Shu, L.; Luo, L.; Hoskere, J.; Zhu, Y.; Liu, Y.; Tong, S.; Chen, J.; Meng, L. RewriteLM: An instruction-tuned large language model for text rewriting. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 18970–18980.

- Linardatos, P.; Papastefanopoulos, V.; Kotsiantis, S. Explainable AI: A review of machine learning interpretability methods. Entropy 2020, 23, 18.

- Zhao, H.; Chen, H.; Yang, F.; Liu, N.; Deng, H.; Cai, H.; Wang, S.; Yin, D.; Du, M. Explainability for large language models: A survey. ACM Trans. Intell. Syst. Technol. 2024, 15, 20.

- Chen, K.; Zhou, X.; Lin, Y.; Feng, S.; Shen, L.; Wu, P. A Survey on Privacy Risks and Protection in Large Language Models. arXiv 2025, arXiv:2505.01976.

- Chong, C.J.; Hou, C.; Yao, Z.; Talebi, S.M.S. Casper: Prompt Sanitization for Protecting User Privacy in Web-Based Large Language Models. arXiv 2024, arXiv:2408.07004.

- Vu, M.; Nguyen, T.; Jeter, T.; Thai, M.T. Analysis of Privacy Leakage in Federated Large Language Models. In Proceedings of the 27th International Conference on Artificial Intelligence and Statistics (AISTATS), Palacio de Congresos de València, Valencia, Spain, 2–4 May 2024.

- Ahmadi, K.; Kim, H.W.; Sharma, R. An Interactive Framework for Implementing Privacy-Preserving Federated Learning: Experiments on Large Language Models. arXiv 2025, arXiv:2502.08008.

- Asai, A.; Wu, Z.; Wang, Y.; Sil, A.; Hajishirzi, H. Self-RAG: Learning to retrieve, generate, and critique through self-reflection. In Proceedings of the Twelfth International Conference on Learning Representations, Vienna, Austria, 7–11 May 2024.

- Nakano, R.; Hilton, J.; Balaji, S.; Wu, J.; Ouyang, L.; Kim, C.; Hesse, C.; Jain, S.; Kosaraju, V.; Saunders, W.; et al. WebGPT: Browser-assisted question-answering with human feedback. arXiv 2021, arXiv:2112.09332.

- Zhang, K.; Zhang, H.; Li, G.; Li, J.; Li, Z.; Jin, Z. ToolCoder: Teach code generation models to use API search tools. arXiv 2023, arXiv:2305.04032.

- Gehring, J.; Zheng, K.; Copet, J.; Mella, V.; Carbonneaux, Q.; Cohen, T.; Synnaeve, G. RLEF: Grounding code LLMs in execution feedback with reinforcement learning. arXiv 2024, arXiv:2410.02089.

- Wang, X.; Chen, Y.; Yuan, L.; Zhang, Y.; Li, Y.; Peng, H.; Ji, H. Executable code actions elicit better LLM agents. In Proceedings of the Forty-First International Conference on Machine Learning, Vienna, Austria, 21–27 July 2024.

- Paranjape, B.; Lundberg, S.; Singh, S.; Hajishirzi, H.; Zettlemoyer, L.; Ribeiro, M.T. ART: Automatic multi-step reasoning and tool-use for large language models. arXiv 2023, arXiv:2303.09014.

- Descope. What Is the Model Context Protocol (MCP) and How It Works. Available online: https://www.descope.com/learn/post/mcp (accessed on 22 August 2025).

- Surapaneni, R.; Jha, M.; Vakoc, M.; Segal, T. Announcing the Agent2Agent Protocol (A2A). Google Developers Blog 2025. Available online: https://developers.googleblog.com/en/a2a-a-new-era-of-agent-interoperability/ (accessed on 22 August 2025).

- Cai, T.; Wang, X.; Ma, T.; Chen, X.; Zhou, D. Large Language Models as Tool Makers. In Proceedings of the Twelfth International Conference on Learning Representations, Vienna, Austria, 7–11 May 2024.

- Qian, C.; Han, C.; Fung, Y.; Qin, Y.; Liu, Z.; Ji, H. CREATOR: Tool Creation for Disentangling Abstract and Concrete Reasoning of Large Language Models. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2023, Singapore, 6–10 December 2023; pp. 6922–6939.

- Liu, X.; Yin, D.; Wu, Z.; Feng, Y. RefTool: Enhancing Model Reasoning with Reference-Guided Tool Creation. arXiv 2025, arXiv:2505.21413.

- Ma, Z.; Huang, Z.; Liu, J.; Wang, M.; Zhao, H.; Li, X. Automated creation of reusable and diverse toolsets for enhancing LLM reasoning. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025; Volume 39, pp. 24821–24830.

- Wang, Z.Z.; Neubig, G.; Fried, D. TROVE: Inducing verifiable and efficient toolboxes for solving programmatic tasks. In Proceedings of the 41st International Conference on Machine Learning, Vienna, Austria, 21–27 July 2024; pp. 51177–51191.

- Stengel-Eskin, E.; Prasad, A.; Bansal, M. ReGAL: Refactoring Programs to Discover Generalizable Abstractions. In Proceedings of the International Conference on Machine Learning, Vienna, Austria, 21–27 July 2024; pp. 46605–46624.

- Wang, Z.Z.; Gandhi, A.; Neubig, G.; Fried, D. Inducing programmatic skills for agentic tasks. arXiv 2025, arXiv:2504.06821.

- Zheng, B.; Fatemi, M.Y.; Jin, X.; Wang, Z.Z.; Gandhi, A.; Song, Y.; Gu, Y.; Srinivasa, J.; Liu, G.; Neubig, G.; et al. SkillWeaver: Web agents can self-improve by discovering and honing skills. arXiv 2025, arXiv:2504.07079.

- Hsieh, C.-Y.; Chen, S.-A.; Li, C.-L.; Fujii, Y.; Ratner, A.; Lee, C.-Y.; Krishna, R.; Pfister, T. Tool documentation enables zero-shot tool-usage with large language models. arXiv 2023, arXiv:2308.00675.

- Lumer, E.; Subbiah, V.K.; Burke, J.A.; Basavaraju, P.H.; Huber, A. Toolshed: Scale tool-equipped agents with advanced RAG-tool fusion and tool knowledge bases. arXiv 2024, arXiv:2410.14594.

- Qu, C.; Dai, S.; Wei, X.; Cai, H.; Wang, S.; Yin, D.; Xu, J.; Wen, J.-R. From Exploration to Mastery: Enabling LLMs to Master Tools via Self-Driven Interactions. In Proceedings of the Thirteenth International Conference on Learning Representations, Singapore, 24–28 April 2025.

- Bengesi, S.; El-Sayed, H.; Sarker, M.K.; Houkpati, Y.; Irungu, J.; Oladunni, T. Advancements in Generative AI: A Comprehensive Review of GANs, GPT, Autoencoders, Diffusion Model, and Transformers. IEEE Access 2024, 12, 69812–69837.

- Bulatov, A.; Kuratov, Y.; Kapushev, Y.; Burtsev, M. Beyond Attention: Breaking the Limits of Transformer Context Length with Recurrent Memory. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 17700–17708.

- Khan, S.H.; Almaktoof, A.; Abo-Al-Ez, K. A Comprehensive Survey on Architectural Advances in Deep CNNs: Challenges, Applications, and Emerging Research Directions. Sensors 2025, 25, 531.

- Haruna, Y.; Qin, S.; Chukkol, A.H.A.; Yusuf, A.A.; Bello, I.; Lawan, A. Exploring the synergies of hybrid convolutional neural network and Vision Transformer architectures for computer vision: A survey. Eng. Appl. Artif. Intell. 2025, 144, 110057.

- Zhou, Y.; Feng, L.; Ke, Y.; Jiang, X.; Yan, J.; Yang, X.; Zhang, W. Towards vision-language geo-foundation model: A survey. arXiv 2024, arXiv:2406.09385.

- Jayaram, R.; Dhulipala, L.; Hadian, M.; Lee, J.D.; Mirrokni, V. MUVERA: Multi-Vector Retrieval via Fixed Dimensional Encoding. Adv. Neural Inf. Process. Syst. 2024, 37, 101042–101073.

- Cao, Y.; Yao, L.; McAuley, J.; Sheng, Q.Z. Reinforcement Learning for Generative AI: A Survey. arXiv 2023, arXiv:2308.14328.

- Christian, B.; Kirk, H.R.; Thompson, J.A.F.; Summerfield, C.; Dumbalska, T. Reward Model Interpretability via Optimal and Pessimal Tokens. arXiv 2025, arXiv:2506.07326.

- Anonymous. Decomposed Reward Models: Learning Multi-Dimensional Human Preferences from Binary Comparisons. arXiv 2025, arXiv:2502.13131.

- Ren, S.; Ren, S.; Jian, P.; Ren, Z.; Leng, C.; Xie, C.; Zhang, J. Towards scientific intelligence: A survey of LLM-based scientific agents. arXiv 2025, arXiv:2503.24047.

- Su, Y.; Ai, Q.; Zhan, J.; Dong, Q.; Liu, Y. Dynamic and Parametric Retrieval-Augmented Generation. arXiv 2025, arXiv:2506.06704.

- Tran, K.-T.; Huang, W.C.; Wu, Y.; Chen, Y.; Miao, C.; Nguyen, H.; Zhou, Y.; Zhang, W.; Fang, L.; He, L. A Survey on Large Language Model based Human-Agent Systems. arXiv 2025, arXiv:2505.00753.

- Zhao, Z.; Dong, H.; Saha, A.; Xiong, C. Automatic Curriculum Expert Iteration for Reliable LLM Reasoning. In Proceedings of the International Conference on Learning Representations (ICLR), Singapore, 24–28 April 2025.

- Tran, K.-T.; Dao, D.; Nguyen, M.-D.; Pham, Q.-V.; O’Sullivan, B.; Nguyen, H.D. Multi-Agent Collaboration Mechanisms: A Survey of LLMs. arXiv 2025, arXiv:2501.06322.

- Yao, S.; Yu, D.; Zhao, J.; Shafran, I.; Griffiths, T.; Cao, Y.; Narasimhan, K. Tree of Thoughts: Deliberate Problem Solving with Large Language Models. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 10–16 December 2023; Volume 36, pp. 11809–11822.

- Xu, Y.; Guo, X.; Zeng, Z.; Miao, C. SoftCoT: Soft chain-of-thought for efficient reasoning with LLMs. arXiv 2025, arXiv:2502.12134.

- Hao, S.; Sukhbaatar, S.; Su, D.; Li, X.; Hu, Z.; Weston, J.; Tian, Y. Training large language models to reason in a continuous latent space. arXiv 2024, arXiv:2412.06769.

- Wu, X.; Shen, Y.; Shan, C.; Song, K.; Wang, S.; Zhang, B.; Feng, J.; Cheng, H.; Chen, W.; Xiong, Y.; et al. Can graph learning improve planning in LLM-based agents? Adv. Neural Inf. Process. Syst. 2024, 37, 5338–5383.

- Yao, S.; Zhao, J.; Yu, D.; Du, N.; Shafran, I.; Narasimhan, K.; Cao, Y. ReAct: Synergizing reasoning and acting in language models. In Proceedings of the International Conference on Learning Representations (ICLR), Kigali, Rwanda, 1–5 May 2023.

- Li, C.; Yang, R.; Li, T.; Bafarassat, M.; Sharifi, K.; Bergemann, D.; Yang, Z. STRIDE: A tool-assisted LLM agent framework for strategic and interactive decision-making. arXiv 2024, arXiv:2405.16376.

- Wu, J.; Zhu, J.; Liu, Y.; Xu, M.; Jin, Y. Agentic Reasoning: A Streamlined Framework for Enhancing LLM Reasoning with Agentic Tools. In Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics, Vienna, Austria, 27 July–1 August 2025; Volume 1: Long Papers, pp. 28489–28503.

- Zhuang, Y.; Chen, X.; Yu, T.; Mitra, S.; Bursztyn, V.; Rossi, R.A.; Sarkhel, S.; Zhang, C. ToolChain*: Efficient Action Space Navigation in Large Language Models with A* Search. In Proceedings of the Twelfth International Conference on Learning Representations, Vienna, Austria, 7–11 May 2024.

- Wang, Y.; Yu, J.; Yao, Z.; Zhang, J.; Xie, Y.; Tu, S.; Fu, Y.; Feng, Y.; Zhang, J.; Zhang, J.; et al. SoAy: A Solution-based LLM API-using Methodology for Academic Information Seeking. In Proceedings of the 31st ACM SIGKDD Conference on Knowledge Discovery and Data Mining V. 1, Toronto, ON, Canada, 3–7 August 2025; pp. 2660–2671.

- Shen, W.; Li, C.; Chen, H.; Yan, M.; Quan, X.; Chen, H.; Zhang, J.; Huang, F. Small LLMs Are Weak Tool Learners: A Multi-LLM Agent. In Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing, Miami, FL, USA, 12–16 November 2024; pp. 16658–16680.

- Qian, C.; Xiong, C.; Liu, Z.; Liu, Z. Toolink: Linking Toolkit Creation and Using through Chain-of-Solving on Open-Source Model. In Proceedings of the 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers), Mexico City, Mexico, 16–21 June 2024; pp. 831–854.

- Chen, S.; Wang, Y.; Wu, Y.-F.; Chen, Q.; Xu, Z.; Luo, W.; Zhang, K.; Zhang, L. Advancing tool-augmented large language models: Integrating insights from errors in inference trees. In Proceedings of the 38th Annual Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 10–15 December 2024; Volume 37, pp. 106555–106581.

- Zheng, Y.; Li, P.; Yan, M.; Zhang, J.; Huang, F.; Liu, Y. Budget-Constrained Tool Learning with Planning. In Proceedings of the Findings of the Association for Computational Linguistics ACL 2024, Bangkok, Thailand, 11–16 August 2024; pp. 9039–9052.

- Kumar, S.S.; Jain, D.; Agarwal, E.; Pandey, R. SwissNYF: Tool grounded LLM agents for black box setting. arXiv 2024, arXiv:2402.10051.

- Erbacher, P.; Falissar, L.; Guigue, V.; Soulier, L. Navigating uncertainty: Optimizing API dependency for hallucination reduction in closed-book question answering. arXiv 2024, arXiv:2401.01780.

- Qiao, S.; Gui, H.; Lv, C.; Jia, Q.; Chen, H.; Zhang, N. Making Language Models Better Tool Learners with Execution Feedback. In Proceedings of the 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Mexico City, Mexico, 16–21 June 2024; Volume 1: Long Papers, pp. 3550–3568.

- Zhu, W.; Singh, I.; Jia, R.; Thomason, J. Language Models can Infer Action Semantics for Classical Planners from Environment Feedback. In Proceedings of the 2025 Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics (NAACL), Albuquerque, NM, USA, 29 April–4 May 2025.

- Tantakoun, M.; Zhu, X.; Muise, C. LLMs as Planning Modelers: A Survey for Leveraging Large Language Models to Construct Automated Planning Models. arXiv 2025, arXiv:2503.18971.

- Wang, Y.; Wang, P. LLM A*: Human in the Loop Large Language Models Enabled A* Search for Robotics. arXiv 2023, arXiv:2312.01797.

- Andukuri, C.; Fränken, J.-P.; Gerstenberg, T.; Goodman, N.D. STaR-GATE: Teaching Language Models to Ask Clarifying Questions. In Proceedings of the Conference on Logic, Language, and Computation (COLL 2024), Stanford, CA, USA, 10–12 July 2024; pp. 1–12.

- Kranti, C.; Hakimov, S.; Schlangen, D. clem:todd: A Framework for the Systematic Benchmarking of LLM-Based Task-Oriented Dialogue System Realisations. arXiv 2025, arXiv:2505.05445.

- Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.; Rocktäschel, T. Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks. arXiv 2020, arXiv:2005.11401.

- Lu, Y.; Yuan, H.; Yuan, Z.; Lin, R.; Lin, J.; Tan, C.; Zhou, C.; Zhou, J. INSTAG: Automated Instruction Tagging for Enhanced Instruction Tuning. arXiv 2024, arXiv:2402.05123.

- Si, C.; Li, R.; Luo, Z.; Wang, Z.; Li, D.; Jing, L.; He, K.; Wu, P.; Michalopoulos, G. LMR-BENCH: Evaluating LLM Agent’s Ability on Reproducing Language Modeling Research. arXiv 2025, arXiv:2506.17335.

- Romijnders, R.; Laskaridis, S.; Shamsabadi, A.S.; Haddadi, H. NoEsis: Differentially Private Knowledge Transfer in Modular LLM Adaptation. arXiv 2025, arXiv:2504.18147.

- Cardiel, A.; Zablocki, E.; Ramzi, E.; Siméoni, O.; Cord, M. LLM-wrapper: Black-Box Semantic-Aware Adaptation of Vision-Language Models for Referring Expression Comprehension. In Proceedings of the International Conference on Learning Representations, Singapore, 24–28 April 2025.

- Liu, D.; Niehues, J. Middle-Layer Representation Alignment for Cross-Lingual Transfer in Fine-Tuned LLMs. In Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Vienna, Austria, 27 July–1 August 2025.

- Jiang, S.; Liang, J.; Wang, J.; Dong, X.; Chang, H.; Yu, W.; Du, J.; Liu, M.; Qin, B. From Specific-MLLMs to Omni-MLLMs: A Survey on MLLMs Aligned with Multi-modalities. arXiv 2024, arXiv:2412.11694.

- Zhao, W.; Yuksekgonul, M.; Wu, S.; Zou, J. Sirius: Self-improving Multi-Agent Systems via Bootstrapped Reasoning. arXiv 2025, arXiv:2502.04780.

- Subramaniam, V.; Du, Y.; Tenenbaum, J.B.; Torralba, A.; Li, S.; Mordatch, I. Multiagent Finetuning: Self Improvement with Diverse Reasoning Chains. In Proceedings of the Thirteenth International Conference on Learning Representations (ICLR 2025), Singapore, 24–28 April 2025.

- Park, C.; Han, S.; Guo, X.; Ozdaglar, A.; Zhang, K.; Kim, J.-K. MAPoRL: Multi-Agent Post-Co-Training for Collaborative Large Language Models with Reinforcement Learning. arXiv 2025, arXiv:2502.18439.

- Zhuge, M.; Wang, W.; Kirsch, L.; Faccio, F.; Khizbullin, D.; Schmidhuber, J. GPTSwarm: Language Agents as Optimizable Graphs. In Proceedings of the Forty-First International Conference on Machine Learning (ICML 2024), Vienna, Austria, 21–27 July 2024.

- Yuksekgonul, M.; Bianchi, F.; Boen, J.; Liu, S.; Huang, Z.; Guestrin, C.; Zou, J. TextGrad: Automatic “Differentiation” via Text. arXiv 2024, arXiv:2406.07496.

- Zhou, W.; Ou, Y.; Ding, S.; Li, L.; Wu, J.; Wang, T.; Chen, J.; Wang, S.; Xu, X.; Zhang, N.; et al. Symbolic Learning Enables Self-Evolving Agents. arXiv 2024, arXiv:2406.18532.

- Khattab, O.; Singhvi, A.; Maheshwari, P.; Zhang, Z.; Santhanam, K.; Haq, S.; Sharma, A.; Joshi, T.T.; Moazam, H.; Miller, H.; et al. DSPy: Compiling Declarative Language Model Calls into State-of-the-Art Pipelines. In Proceedings of the Twelfth International Conference on Learning Representations (ICLR 2024), Vienna, Austria, 7–11 May 2024.

- Wang, W.; Alyahya, H.A.; Ashley, D.R.; Serikov, O.; Khizbullin, D.; Faccio, F.; Schmidhuber, J. How to Correctly Do Semantic Backpropagation on Language-Based Agentic Systems. arXiv 2024, arXiv:2412.03624.

- Zhang, J.; Xiang, J.; Yu, Z.; Teng, F.; Chen, X.-H.; Chen, J.; Zhuge, M.; Cheng, X.; Hong, S.; Wang, J.; et al. AFlow: Automating Agentic Workflow Generation. In Proceedings of the Thirteenth International Conference on Learning Representations (ICLR 2025), Singapore, 24–28 April 2025.

- Su, J.; Xia, Y.; Shi, R.; Wang, J.; Huang, J.; Wang, Y.; Shi, T.; Yang, J.; He, L. DebFlow: Automating Agent Creation via Agent Debate. In Proceedings of the ICML 2025 Workshop on Collaborative and Federated Agentic Workflows, Vancouver, Canada, 14 July 2025.

- Liu, Z.; Zhang, Y.; Li, P.; Liu, Y.; Yang, D. A Dynamic LLM-Powered Agent Network for Task-Oriented Agent Collaboration. In Proceedings of the First Conference on Language Modeling, Philadelphia, PA, USA, 7–9 October 2024.

- Li, Z.; Xu, S.; Mei, K.; Hua, W.; Rama, B.; Raheja, O.; Wang, H.; Zhu, H.; Zhang, Y. AutoFlow: Automated Workflow Generation for Large Language Model Agents. arXiv 2024, arXiv:2407.12821.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hu, Z.; Huang, Y.; Feng, J.; Deng, C. Big Loop and Atomization: A Holistic Review on the Expansion Capabilities of Large Language Models. Appl. Sci. 2025, 15, 9466. https://doi.org/10.3390/app15179466


Chicago/Turabian Style: Hu, Zefa, Yi Huang, Junlan Feng, and Chao Deng. 2025. "Big Loop and Atomization: A Holistic Review on the Expansion Capabilities of Large Language Models" Applied Sciences 15, no. 17: 9466. https://doi.org/10.3390/app15179466
