5.1. Experimental Settings

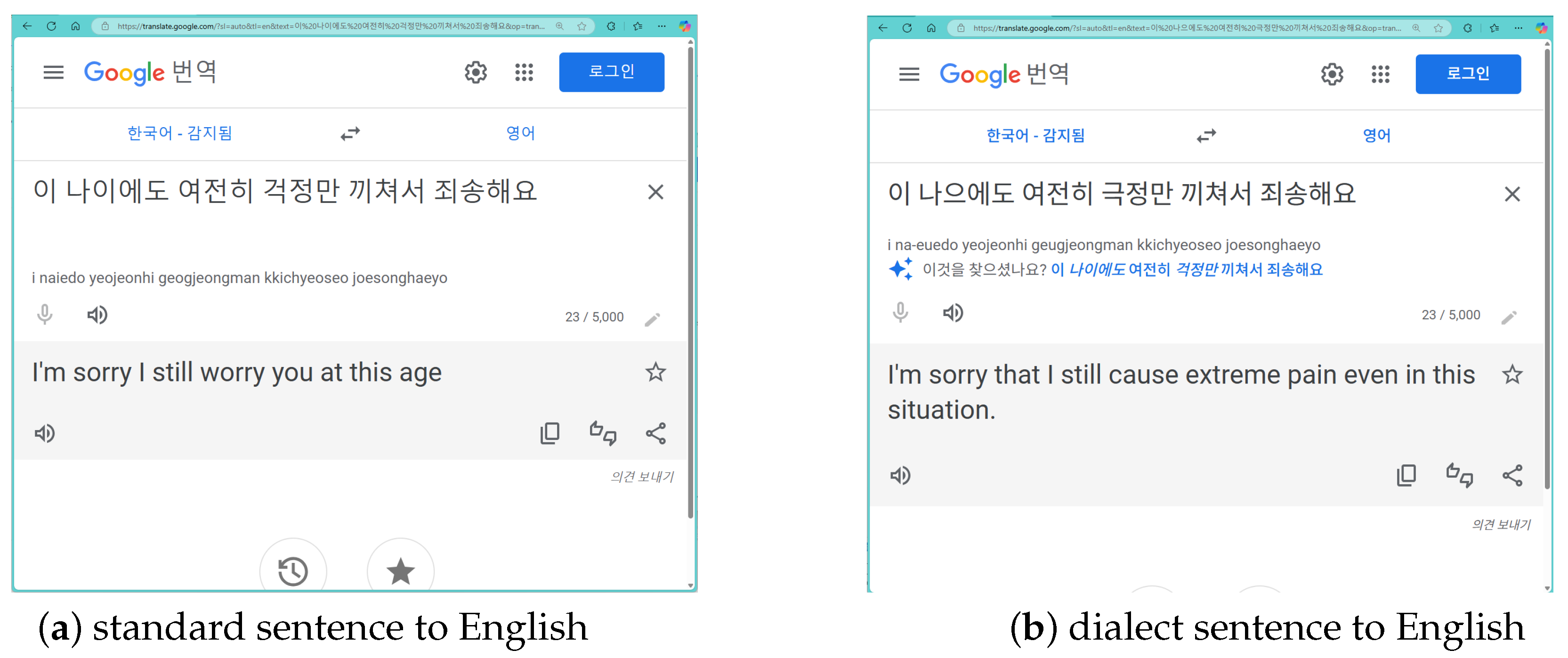

The Korean Dialect Speech Dataset released on the Korea AI Hub (https://aihub.or.kr (accessed on 28 July 2025)) is used to train and evaluate Korean dialect normalization. The dataset covers five South Korean dialects: Gyeongsang, Jeolla, Jeju, Gangwon, and Chungcheong. Two dialects, Pyeongan and Hamgyeong, are not included. The dataset was collected and published in South Korea, where data on these two dialects cannot be collected because they are mainly spoken in North Korea. Further details and linguistic features of these dialects can be found in the work of Choi [1]. The dataset consists of dialogue audio and the corresponding labels. The labels include information about the speakers, such as their age and place of residence. Every utterance is transcribed in two aligned forms: a dialect sentence and its standard equivalent. The pairs of dialect and standard sentences extracted from this dataset therefore serve as a parallel corpus for normalizing Korean dialects.
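A minimal sketch of how aligned label records could be turned into (dialect, standard) pairs is shown below. The label schema is not described here, so the keys `dialect` and `standard`, the JSON-list file layout, and the function names are hypothetical placeholders rather than the dataset's actual format.

```python
import json
from typing import Iterable, Iterator

# Hypothetical field names: the real AI Hub label schema may differ.
DIALECT_KEY = "dialect"
STANDARD_KEY = "standard"


def extract_pairs(label_records: Iterable[dict]) -> Iterator[tuple[str, str]]:
    """Yield (dialect, standard) sentence pairs from aligned label records."""
    for record in label_records:
        dialect = record.get(DIALECT_KEY)
        standard = record.get(STANDARD_KEY)
        # Skip records in which either aligned form is missing.
        if dialect and standard:
            yield dialect.strip(), standard.strip()


def load_label_file(path: str) -> list[dict]:
    """Read one JSON label file holding a list of utterance records (assumed layout)."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)
```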

Since the sentences in the dataset are transcriptions of spoken dialogue, they are pre-processed to remove noise such as stutters and laughter. Sentences that are too short, with fewer than four eojeols, are also removed; an eojeol is a spacing unit in Korean. The original dataset also contains a large proportion of pairs in which the standard and dialect sentences are identical. This is because the dataset comprises spoken dialogues: when speakers conversed in standard Korean, the standard and dialect forms were labeled identically. All such pairs are filtered out of the dataset. Statistics of the final pre-processed dataset are given in Table 3, where the numbers in parentheses indicate the numbers of original pairs. The ratio of dialect pairs in the training set varies considerably, from 12% to 35%. Nevertheless, enough pairs remain to train and test a model for normalizing Korean dialects.
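The filtering rules above could be expressed as in the following sketch. The noise markup matched by `NOISE_PATTERN` (bracketed annotations) is hypothetical, since the transcription conventions are not given here, and the four-eojeol minimum is assumed to apply to the dialect side. Eojeols are counted by splitting on whitespace, because an eojeol is a spacing unit.

```python
import re

# Hypothetical noise markup, e.g. "(laugh)" or "{cough}"; real conventions may differ.
NOISE_PATTERN = re.compile(r"\([^)]*\)|\{[^}]*\}")
MIN_EOJEOLS = 4  # sentences with fewer than four eojeols are dropped


def clean(sentence: str) -> str:
    """Remove noise annotations and collapse repeated whitespace."""
    return " ".join(NOISE_PATTERN.sub(" ", sentence).split())


def preprocess(pairs):
    """Keep cleaned (dialect, standard) pairs that are long enough and actually differ."""
    kept = []
    for dialect, standard in pairs:
        dialect, standard = clean(dialect), clean(standard)
        # An eojeol is a whitespace-delimited unit, so split() counts eojeols.
        if len(dialect.split()) < MIN_EOJEOLS:
            continue
        # Identical pairs come from speakers who used standard Korean; drop them.
        if dialect == standard:
            continue
        kept.append((dialect, standard))
    return kept
```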

Four tokenization schemes are evaluated for normalizing Korean dialects: syllable-level SentencePiece, byte-level BPE, morpheme-level, and alphabet-level tokenization. SentencePiece tokenization is implemented with the SentencePiece module from GitHub (v. 0.2.0), and BPE is implemented with the ByteLevelBPETokenizer class from the HuggingFace tokenizers module. Korean morphemes are analyzed with the MeCab-ko analyzer (https://github.com/hephaex/mecab-ko (accessed on 28 July 2025)), and syllables are decomposed into alphabet sequences with the jamo module from GitHub.
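To illustrate what alphabet-level tokenization produces, the sketch below decomposes precomposed Hangul syllables into conjoining jamo using the standard Unicode arithmetic (lead, vowel, and optional tail indices) and recomposes them. It is not the jamo module's own code, which offers comparable conversion; for Hangul syllables, `unicodedata.normalize("NFD", ...)` yields the same decomposition. Non-Hangul characters such as spaces, digits, and symbols pass through unchanged.

```python
# Unicode constants for precomposed Hangul syllables (U+AC00..U+D7A3).
SYLLABLE_BASE = 0xAC00
LEAD_BASE, VOWEL_BASE, TAIL_BASE = 0x1100, 0x1161, 0x11A7
NUM_LEADS, NUM_VOWELS, NUM_TAILS = 19, 21, 28
NUM_SYLLABLES = NUM_LEADS * NUM_VOWELS * NUM_TAILS  # 11,172


def to_jamo(text: str) -> list[str]:
    """Split each Hangul syllable into lead consonant, vowel, and optional tail."""
    tokens = []
    for ch in text:
        index = ord(ch) - SYLLABLE_BASE
        if 0 <= index < NUM_SYLLABLES:
            lead, rest = divmod(index, NUM_VOWELS * NUM_TAILS)
            vowel, tail = divmod(rest, NUM_TAILS)
            tokens.append(chr(LEAD_BASE + lead))
            tokens.append(chr(VOWEL_BASE + vowel))
            if tail:  # tail index 0 means the syllable has no final consonant
                tokens.append(chr(TAIL_BASE + tail))
        else:
            tokens.append(ch)  # spaces, digits, and symbols pass through unchanged
    return tokens


def from_jamo(tokens: list[str]) -> str:
    """Recompose a jamo sequence produced by to_jamo back into syllables."""
    out, i = [], 0
    while i < len(tokens):
        lead = ord(tokens[i]) - LEAD_BASE
        vowel = ord(tokens[i + 1]) - VOWEL_BASE if i + 1 < len(tokens) else -1
        if 0 <= lead < NUM_LEADS and 0 <= vowel < NUM_VOWELS:
            tail = 0
            if i + 2 < len(tokens) and 0 < ord(tokens[i + 2]) - TAIL_BASE < NUM_TAILS:
                tail = ord(tokens[i + 2]) - TAIL_BASE
            code = SYLLABLE_BASE + (lead * NUM_VOWELS + vowel) * NUM_TAILS + tail
            out.append(chr(code))
            i += 3 if tail else 2
        else:
            out.append(tokens[i])
            i += 1
    return "".join(out)
```

Recomposition matters because an alphabet-level normalizer emits jamo tokens that must be reassembled into syllables to form the output sentence.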

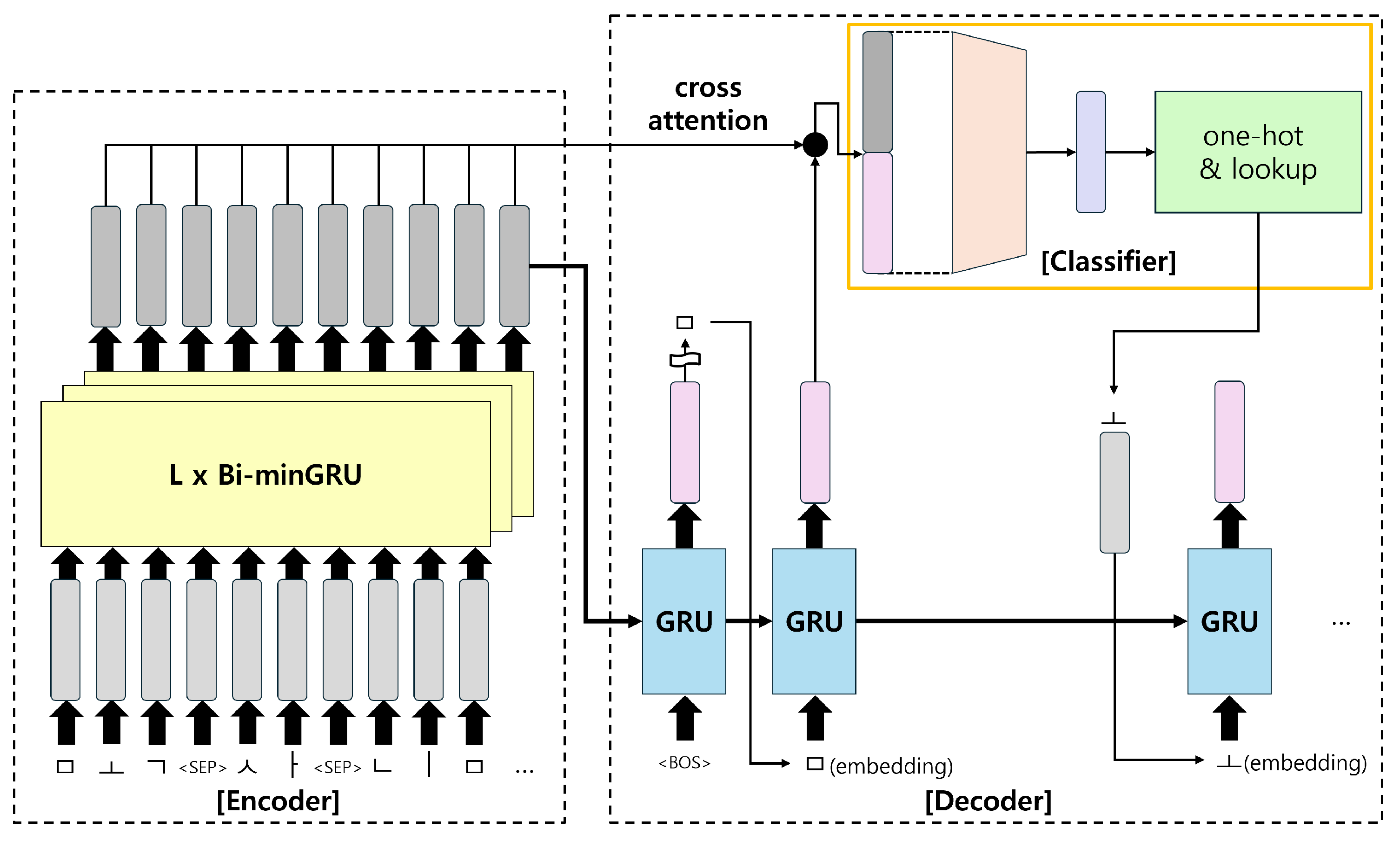

The normalization models are trained to minimize the cross-entropy loss with the Adam optimizer and the ReduceLROnPlateau scheduler. The batch size is set to 200 for all tokenization schemes and dialects except morpheme-level tokens; because the morpheme-level vocabulary is larger than the others, its batch size is set to 64. The learning rate is initialized at 5 × … and the weight decay at 1 × …; b in Equation (1), the dimension of the encoder's hidden state vectors, is set to 128. Since the encoder is bidirectional, the hidden state dimension of the decoder is 2b = 256. The number of layers, L, is set to three.
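The ReduceLROnPlateau scheduler lowers the learning rate when a monitored metric stops improving. The simplified, dependency-free sketch below shows that rule; in PyTorch it is provided as `torch.optim.lr_scheduler.ReduceLROnPlateau`. The factor, patience, and threshold used in the experiments are not stated, so the defaults here (which mirror PyTorch's defaults) and the choice of validation loss as the monitored quantity are assumptions; cooldown handling is omitted.

```python
class PlateauScheduler:
    """Simplified ReduceLROnPlateau (mode='min'): shrink the learning rate when the
    monitored loss has not improved for more than `patience` consecutive epochs."""

    def __init__(self, lr: float, factor: float = 0.1, patience: int = 10,
                 threshold: float = 1e-4, min_lr: float = 0.0):
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.threshold = threshold
        self.min_lr = min_lr
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss: float) -> float:
        # Relative threshold: an improvement must beat best * (1 - threshold).
        if val_loss < self.best * (1 - self.threshold):
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        # Reduce once the plateau has lasted longer than `patience` epochs.
        if self.bad_epochs > self.patience:
            self.lr = max(self.lr * self.factor, self.min_lr)
            self.bad_epochs = 0
        return self.lr
```

Calling `step(val_loss)` once per epoch returns the learning rate to use for the next epoch.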

The results are evaluated with chrF++, BLEU, and BERTScore. Normalization results are assessed with BLEU and chrF++. Korean dialects differ from standard Korean predominantly through phonemic variation, so the primary task of a normalization model is to undo these phonemic variants and restore the standard form. This is why n-gram-based metrics are well suited to evaluating dialect normalization models: chrF++ is designed for character-level evaluation, whereas BLEU operates at the word level. Pivot translation results, on the other hand, are evaluated with chrF++ and BERTScore. Since pivot translation is an ordinary translation task, BERTScore is adopted to measure the semantic similarity between the reference and translated sentences.
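The following sketch shows how chrF++ scores character-level overlap. It follows the original chrF formulation: character n-gram precision and recall (n = 1 to 6, spaces removed) are averaged together with word n-gram orders (1 and 2 for chrF++), then combined into an F-score with β = 2 on a 0 to 100 scale. Setting `word_order=0` gives plain chrF. This is a simplified sentence-level illustration. Reported scores should come from a reference implementation such as sacrebleu's `CHRF` (with `word_order=2` for chrF++), which differs in details such as how orders are averaged.

```python
from collections import Counter


def _ngrams(tokens, n):
    """Multiset of n-grams in a token sequence (empty if the sequence is shorter than n)."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def chrf(hypothesis: str, reference: str, char_order: int = 6,
         word_order: int = 2, beta: float = 2.0) -> float:
    """Sentence-level chrF++ (word_order=2) or chrF (word_order=0), on a 0-100 scale."""
    hyp_chars = list(hypothesis.replace(" ", ""))
    ref_chars = list(reference.replace(" ", ""))
    hyp_words, ref_words = hypothesis.split(), reference.split()
    orders = [(hyp_chars, ref_chars, n) for n in range(1, char_order + 1)]
    orders += [(hyp_words, ref_words, n) for n in range(1, word_order + 1)]
    precisions, recalls = [], []
    for hyp, ref, n in orders:
        hyp_ngrams, ref_ngrams = _ngrams(hyp, n), _ngrams(ref, n)
        if not hyp_ngrams or not ref_ngrams:
            continue  # skip orders longer than either sentence
        matches = sum((hyp_ngrams & ref_ngrams).values())  # clipped overlap
        precisions.append(matches / sum(hyp_ngrams.values()))
        recalls.append(matches / sum(ref_ngrams.values()))
    if not precisions:
        return 0.0
    p = sum(precisions) / len(precisions)
    r = sum(recalls) / len(recalls)
    if p + r == 0:
        return 0.0
    return 100 * (1 + beta**2) * p * r / (beta**2 * p + r)
```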

The translation models used to translate normalized dialect sentences into foreign languages are (i) Opus-MT [33], (ii) m2m_100_1.2B [34], and (iii) EXAONE-3.0-7.8B-Instruct [27].

Llama-3.1-8B-Instruct is used to generate reference translations for the pivot translation experiments. The Llama model is adopted because it is one of the most popular publicly available LLMs. Opus-MT and m2m_100_1.2B are neural machine translation models. Opus-MT is based on Marian NMT [35] and is trained on the OPUS datasets [36]. m2m_100_1.2B, on the other hand, is a many-to-many translation model that can translate between any pair of the one hundred languages it was trained on. Their trained checkpoints are loaded and run through easyNMT, a Python library (v. 2.0.2) for neural machine translation that provides a unified interface to various translation models. These two neural translation models were selected as baselines because they are effective and easy to use in practice through easyNMT. Exaone is a large language model (LLM) developed by LG AI Research. According to Sim et al. [37], it is specialized in understanding Korean culture, which suggests that it may also be better at processing Korean dialects. This is the main reason Exaone was adopted for this experiment. The LGAI-EXAONE/EXAONE-3.0-7.8B-Instruct checkpoint from HuggingFace is used in the experiments. Translations into English, Japanese, and Chinese are evaluated for m2m_100_1.2B and Exaone. For Opus-MT, however, only English translation is reported, because the only available Opus-MT checkpoint translates from Korean to English.

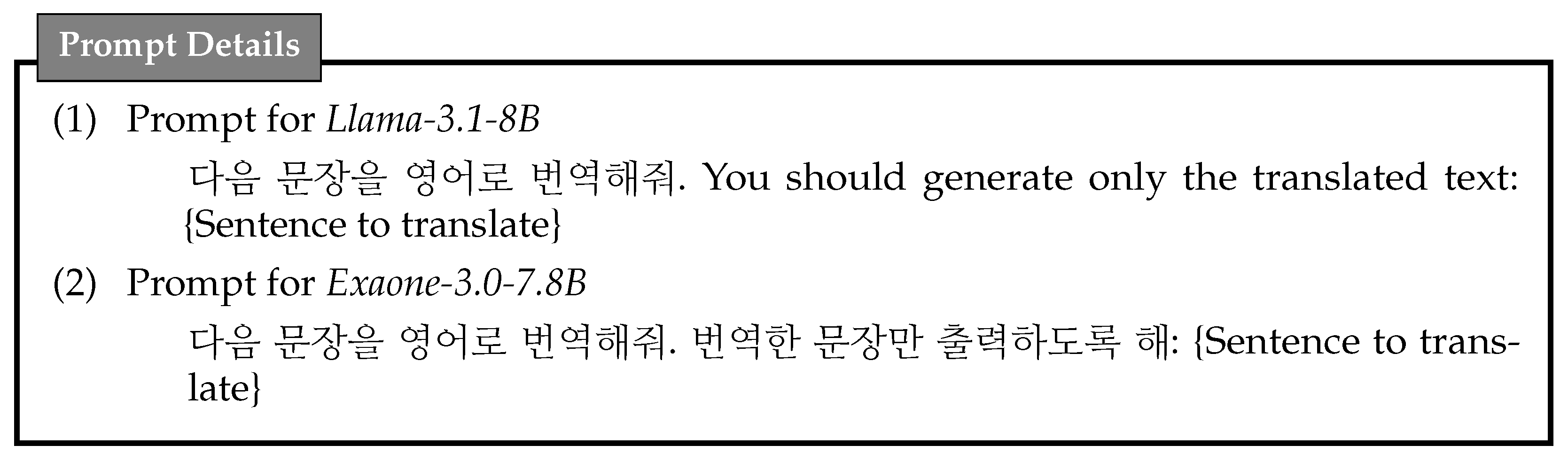

Two LLMs, Llama-3.1-8B-Instruct and Exaone-3.0-7.8B-Instruct, are used in the experiments. The prompts for each model are designed to elicit the desired translation output; examples are shown in Figure 4. The prompts are used in a zero-shot setting without additional fine-tuning. Both prompts include “다음 문장을 영어로 번역해줘 (Please translate the following sentence into English)”. For other target languages, ‘영어 (English)’ is replaced with ‘일본어 (Japanese)’ or ‘중국어 (Chinese)’. The prompts are written in Korean for both models to make the language models focus on the Korean translation task. The instruction constraining the output, however, differs between the two models: “You should generate only the translated text” for Llama-3.1-8B-Instruct and “번역한 문장만 출력하도록 해 (Please output only the translated sentence)” for Exaone-3.0-7.8B-Instruct. This is because Llama-3.1-8B-Instruct was primarily trained on English data, whereas Exaone-3.0-7.8B-Instruct was specialized for Korean.
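The prompt construction described above might be assembled as in the sketch below. The Korean task sentence and the two output instructions are taken from the text. The system/user message split, the punctuation joining them, and the names `build_messages` and `OUTPUT_INSTRUCTIONS` are hypothetical, since the exact prompts appear only in Figure 4. A list of role/content dictionaries is the format accepted by chat templates such as HuggingFace's `tokenizer.apply_chat_template`.

```python
# Korean names substituted for '영어 (English)' in the task sentence.
LANGUAGE_NAMES = {"English": "영어", "Japanese": "일본어", "Chinese": "중국어"}

# Output-format instruction per model, as quoted in the text.
OUTPUT_INSTRUCTIONS = {
    "llama": "You should generate only the translated text",
    "exaone": "번역한 문장만 출력하도록 해",
}


def build_messages(sentence: str, target: str, model: str) -> list[dict]:
    """Assemble a zero-shot chat prompt; the message layout is a hypothetical choice."""
    task = f"다음 문장을 {LANGUAGE_NAMES[target]}로 번역해줘"
    system = f"{task}. {OUTPUT_INSTRUCTIONS[model]}."
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": sentence},
    ]
```

All three Korean language names end in a vowel, so the particle 로 remains grammatical after substitution.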

Normalization experiments were repeated three times with randomly initialized weights, and the tables report the mean and standard deviation of each evaluation metric across these three runs. This is crucial for assessing how reliably each tokenization method performs. Pivot translation experiments, in contrast, are conducted once for each translation model, because the translation models are pre-trained and their weights are fixed. The normalization model used in the pivot translation experiments is the one that performed best in the normalization experiments.
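Aggregating the three runs could look like the sketch below. `train_and_evaluate` is a hypothetical callable that trains a model with a given seed and returns one metric value. Whether the reported standard deviation is the sample (n - 1) or the population version is not stated, so the sample version is an assumption.

```python
import statistics


def run_seeds(train_and_evaluate, seeds=(0, 1, 2)):
    """Train once per seed (hypothetical callable) and collect the metric values."""
    return [train_and_evaluate(seed) for seed in seeds]


def summarize_runs(scores: list[float]) -> str:
    """Format per-seed scores as 'mean ± std' using the sample standard deviation."""
    mean = statistics.mean(scores)
    std = statistics.stdev(scores) if len(scores) > 1 else 0.0
    return f"{mean:.2f} ± {std:.2f}"
```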

5.2. Evaluations on Normalization from Dialect to Standard

Table 4 and Table 5 compare the performance of the tokenization methods for normalizing Korean dialects. According to these tables, alphabet-level tokenization outperforms all other methods. Its average chrF++ score is over 90, implying that it restores standard sentences from dialect sentences almost perfectly. Its advantage over the other tokenization methods is statistically significant for every dialect except Jeju, and a similar tendency is observed when BLEU is used as the evaluation metric. This is because Korean dialects share their grammar and most words with standard Korean, as shown in Table 2, so the remaining differences are mostly small phonemic changes within syllables, which alphabet-level tokens can represent directly.
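The reasoning above can be made concrete by comparing edit distances at the two granularities. In the sketch below, a single-vowel change (고기 versus 괴기, chosen only for illustration and not taken from the dataset) alters half of the syllable tokens but only a quarter of the jamo tokens, so most alphabet-level tokens can simply be copied. Syllables are split into jamo with `unicodedata.normalize("NFD", ...)`.

```python
import unicodedata


def edit_distance(a, b) -> int:
    """Levenshtein distance between two token sequences."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        curr = [i]
        for j, y in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,              # deletion
                            curr[j - 1] + 1,          # insertion
                            prev[j - 1] + (x != y)))  # substitution
        prev = curr
    return prev[-1]


def token_views(text: str):
    """Return the syllable-level and alphabet-level (jamo) token sequences."""
    syllables = list(text)
    jamo = list(unicodedata.normalize("NFD", text))  # NFD splits Hangul syllables into jamo
    return syllables, jamo


def changed_fraction(source: str, target: str):
    """Fraction of target tokens that must be edited, at syllable and jamo level."""
    (s_syl, s_jamo), (t_syl, t_jamo) = token_views(source), token_views(target)
    return (edit_distance(s_syl, t_syl) / len(t_syl),
            edit_distance(s_jamo, t_jamo) / len(t_jamo))
```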

One point to note in these tables is that sub-word tokenization is ineffective for this task. Sub-word models are heavily influenced by the initial weights of the model: their standard deviations are higher than those of alphabet-level tokenization. SentencePiece and BPE are also more complex and restrictive than the other methods, since they model the language within a predefined vocabulary size and require a larger corpus to capture all the patterns needed for the task. The vocabulary size for sub-word tokenization is set to 30,000, which is smaller than that of morpheme-level tokenization, and this difference in vocabulary size is reflected in the performance gap between sub-word and morpheme-level tokenization.
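To illustrate why sub-word vocabularies depend on corpus statistics, the toy learner below implements the classic character-level BPE merge procedure: each step merges the most frequent adjacent symbol pair. It is not the byte-level BPE or SentencePiece training used in the experiments. It only shows that merges follow frequency, so rare dialect forms tend to stay fragmented unless the corpus is large.

```python
from collections import Counter


def _merge(symbols, pair):
    """Replace every adjacent occurrence of `pair` with the concatenated symbol."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def learn_bpe(words, num_merges):
    """Learn merge rules from a word list; each merge joins the most frequent pair."""
    vocab = Counter(tuple(w) for w in words)  # word as a symbol tuple -> frequency
    merges = []
    for _ in range(num_merges):
        pairs = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # ties go to the first pair seen
        merges.append(best)
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            new_vocab[_merge(symbols, best)] += freq
        vocab = new_vocab
    return merges


def apply_bpe(word, merges):
    """Segment a word by replaying the learned merges in order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = _merge(symbols, pair)
    return list(symbols)
```

For example, learning two merges from `["low", "low", "lower"]` yields `("l", "o")` and then `("lo", "w")`, and `apply_bpe("lower", merges)` segments the rarer word as `["low", "e", "r"]`.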

Another point to note is that performance on the Jeju dialect is consistently lower than on the other dialects, whichever tokenization is used. That is, even though the Jeju dialect has the largest number of training instances, its performance is the worst. This is likely due to the geographical characteristics of the Jeju area: Jeju is an isolated island located far south of Seoul, so the Jeju dialect differs substantially from standard Korean, primarily in its surface forms, which results in poor normalization performance. This may also explain why the sub-word tokenizers perform better on the Jeju dialect than on the other dialects; for Jeju, no statistically significant difference was observed between sub-word and alphabet-level tokenization.

The proposed normalization model with alphabet-level tokenization is much smaller than the models with sub-word tokenizers. It has 1.2M trainable parameters for a vocabulary of 156 tokens, covering the Korean alphabet together with numbers and symbols. In contrast, the model with syllable-level SentencePiece has 21M parameters. In other words, alphabet-level tokenization achieves higher performance with far fewer parameters.
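One plausible source of this gap is the layers whose size scales with the vocabulary. The sketch below counts the parameters of an input embedding table and a softmax output projection (weights and bias). The dimensions in the usage example are hypothetical, and the function ignores the encoder and decoder recurrences, so it does not reproduce the 1.2M and 21M totals reported above.

```python
def vocab_dependent_params(vocab_size: int, emb_dim: int, dec_dim: int) -> int:
    """Parameters of an input embedding table plus a softmax output projection
    (weights and bias); both grow linearly with the vocabulary size."""
    embedding = vocab_size * emb_dim
    output_projection = dec_dim * vocab_size + vocab_size
    return embedding + output_projection
```

With hypothetical dimensions `emb_dim=128` and `dec_dim=256`, `vocab_dependent_params(156, 128, 256)` gives 60,060 parameters, while `vocab_dependent_params(30_000, 128, 256)` gives 11,550,000.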

The proposed model adopts minGRU as its encoder because alphabet-level tokenization makes input sequences longer. However, a GRU can also be used as the encoder. According to Table 6, a bidirectional GRU normalizes slightly better than a bidirectional minGRU. However, this difference is not statistically significant with

. The true advantage of minGRU lies in its efficiency during training and inference: it runs faster than GRU.
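The efficiency argument rests on the fact that minGRU gates depend only on the current input, not on the previous hidden state. The NumPy sketch below (biases omitted, weight names hypothetical, not necessarily identical to Equation (1)) computes the recurrence h_t = (1 - z_t) ⊙ h_{t-1} + z_t ⊙ h̃_t both step by step and for all time steps at once with cumulative sums. The closed form divides by a running product of (1 - z_t), so it is numerically safe only for short sequences; practical implementations use a log-space parallel scan instead.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def mingru_sequential(x, W_z, W_h, h0):
    """minGRU recurrence evaluated one step at a time; gates depend only on x_t."""
    z = sigmoid(x @ W_z)   # (T, d) update gates
    h_tilde = x @ W_h      # (T, d) candidate states
    h, out = h0, []
    for t in range(x.shape[0]):
        h = (1 - z[t]) * h + z[t] * h_tilde[t]
        out.append(h)
    return np.stack(out)


def mingru_parallel(x, W_z, W_h, h0):
    """Same recurrence solved for all t at once with cumulative sums.

    h_t = a_t * h_{t-1} + b_t with a_t = 1 - z_t and b_t = z_t * h_tilde_t, so
    h_t = A_t * (h0 + sum_{k<=t} b_k / A_k), where A_t = prod_{k<=t} a_k.
    """
    z = sigmoid(x @ W_z)
    h_tilde = x @ W_h
    log_A = np.cumsum(np.log1p(-z), axis=0)  # log of the running product of (1 - z)
    b = z * h_tilde
    return np.exp(log_A) * (h0 + np.cumsum(b * np.exp(-log_A), axis=0))
```

Because the parallel form replaces the step-by-step loop with cumulative operations that a GPU can execute as a parallel scan, its advantage is expected to grow with sequence length.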

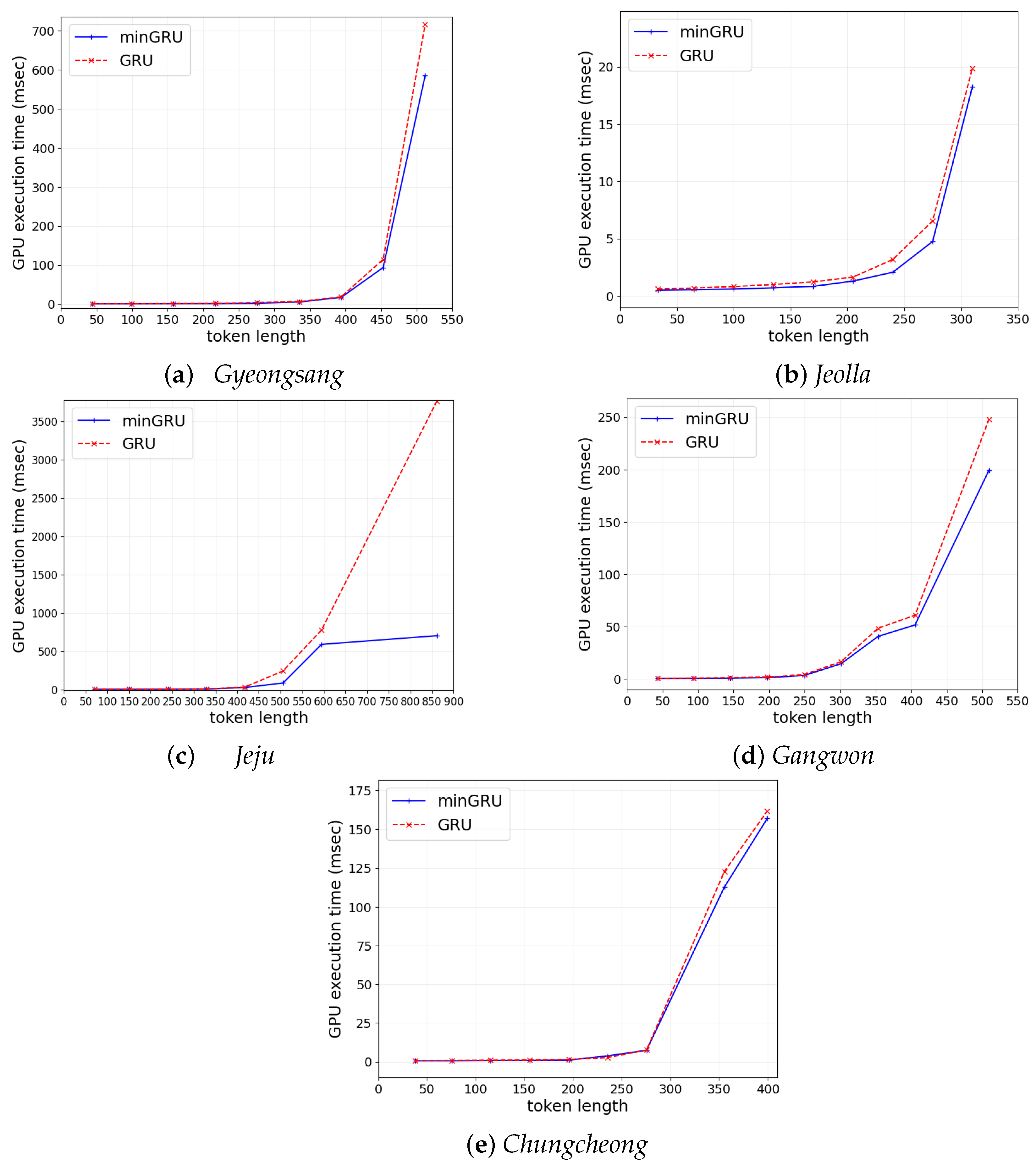

Figure 5 shows how much faster minGRU is than GRU during training. MinGRU takes less time per epoch than GRU for every dialect. Overall, the minGRU encoder saves about 15% of the epoch time, even though it consists of three minGRU layers while the GRU encoder has only one GRU layer. The three-layer minGRU and the one-layer GRU are compared because they achieve similar performance with character-level tokenization.

Figure 6 compares minGRU and GRU in terms of the GPU time used to normalize dialect sentences into standard Korean. In this figure, the X-axis is the number of tokens in a dialect sentence, and the Y-axis is the GPU time (msec) consumed to normalize that sentence. The figure shows that minGRU consistently consumes less GPU time than GRU. The difference is small for sentences shorter than 400 tokens, but the longer a dialect sentence is, the larger the time gain of minGRU becomes.
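Per-sentence timings grouped by length, as in Figure 6, could be collected with a harness like the one below. The function name, the bucketing, and the `sync` hook are assumptions. On a GPU, `sync` should be `torch.cuda.synchronize`, because CUDA kernels run asynchronously and wall-clock timings taken without synchronization mostly measure kernel launch time.

```python
import time
from collections import defaultdict
from statistics import mean


def time_by_length(model_fn, sentences, bucket_size=100, sync=lambda: None):
    """Average latency (ms) per input-length bucket.

    `sync` should block until queued device work finishes (for example,
    torch.cuda.synchronize on a GPU); the default no-op suits CPU-only code.
    """
    buckets = defaultdict(list)
    for tokens in sentences:
        sync()
        start = time.perf_counter()
        model_fn(tokens)
        sync()
        elapsed_ms = (time.perf_counter() - start) * 1000
        buckets[len(tokens) // bucket_size * bucket_size].append(elapsed_ms)
    return {length: mean(times) for length, times in sorted(buckets.items())}
```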

5.3. Evaluations on Pivot Translation

No parallel corpus between Korean dialects and foreign languages is available. Thus, one is constructed from the normalization dataset, which contains a standard Korean sentence for each dialect sentence. First, the standard sentences of the test set are translated into the foreign languages by an LLM, Llama-3.1-8B-Instruct, and these translations are assumed to be correct. Although this model has limited language modeling capability, it was chosen due to resource constraints. Then, the three translation models (m2m_100_1.2B, Opus-MT, and Exaone) are used to prepare pairs of a dialect sentence and its foreign translation, as well as pairs of a normalized sentence and its foreign translation.
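The evaluation protocol above can be sketched with plain callables. `make_reference` stands for the LLM that translates the standard sentence, `translate` for the translation system under test, `normalize` for the dialect normalizer, and `metric` for a sentence-level score; all names are hypothetical. An EasyNMT model could supply `translate`, for example through `EasyNMT("opus-mt").translate(sentences, source_lang="ko", target_lang="en")`. Averaging sentence-level scores is a simplification, since chrF++ is usually computed at the corpus level.

```python
from statistics import mean


def compare_direct_and_pivot(test_pairs, normalize, translate, make_reference, metric):
    """Score direct vs. pivot translation of dialect sentences.

    test_pairs: (dialect, standard) sentences from the normalization test set.
    make_reference: translates the standard sentence (an LLM in the text).
    translate: the MT system under test; normalize: the dialect normalizer.
    """
    direct_scores, pivot_scores = [], []
    for dialect, standard in test_pairs:
        reference = make_reference(standard)      # assumed-correct reference
        direct = translate(dialect)               # dialect -> foreign language
        pivot = translate(normalize(dialect))     # dialect -> standard -> foreign
        direct_scores.append(metric(direct, reference))
        pivot_scores.append(metric(pivot, reference))
    return mean(direct_scores), mean(pivot_scores)
```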

Table 7 shows the evaluation results of the proposed pivot-based translation model for English. Here, ‘Direct Translation’ means that dialect sentences are translated directly into English without using standard Korean as a pivot. The table reports chrF++ and BERTScore for the pivot-based dialect translations. The proposed model outperforms direct translation for all dialects, demonstrating the effectiveness of using standard Korean as a pivot language. Although the improvement is modest for both chrF++ and BERTScore, it is still significant. The chrF++ score reflects surface-form distance, which is not the only factor that determines translation quality, whereas BERTScore measures the semantic similarity between two sentences. However, according to Hanna and Bojar [38], BERTScore often assigns a high score even to incorrect translations. In the experiment of Hanna and Bojar [38], sentences containing several grammatical errors achieved a BERTScore of around 82, while grammatically correct sentences achieved a score of around 83. Thus, although BERTScore is lenient toward incorrect translations, it still penalizes defective ones. This suggests that an improvement in BERTScore of even less than 1.0 point reflects some improvement at the semantic level. In summary, the table shows that the proposed pivot-based translation is better in terms of both surface form and semantics.
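The leniency of BERTScore follows from its greedy soft matching. The sketch below computes a BERTScore-style F1 from two matrices of token embeddings: each candidate token is matched to its most similar reference token by cosine similarity (precision), and each reference token to its most similar candidate token (recall). It omits idf weighting and baseline rescaling, and the embeddings are assumed to come from a contextual encoder; the `bert_score` package's `score` function is the reference implementation.

```python
import numpy as np


def bertscore_f1(hyp_emb: np.ndarray, ref_emb: np.ndarray) -> float:
    """Greedy-matching F1 over token embeddings (no idf weighting, no rescaling).

    hyp_emb: (m, d) contextual embeddings of the candidate tokens.
    ref_emb: (n, d) contextual embeddings of the reference tokens.
    """
    hyp = hyp_emb / np.linalg.norm(hyp_emb, axis=1, keepdims=True)
    ref = ref_emb / np.linalg.norm(ref_emb, axis=1, keepdims=True)
    sim = hyp @ ref.T                   # (m, n) cosine similarities
    precision = sim.max(axis=1).mean()  # each candidate token -> best reference match
    recall = sim.max(axis=0).mean()     # each reference token -> best candidate match
    return float(2 * precision * recall / (precision + recall))
```

Because the matching ignores token positions, shuffling the rows of `hyp_emb` leaves the score unchanged. With a real model, contextual embeddings shift when word order changes, so the metric is only partly order-insensitive, but a fluent-looking error can still match many reference tokens closely.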

Table 8 and Table 9 show the performance of translating dialects into Chinese and Japanese, respectively. Since neither language uses spaces between words, chrF is used instead of chrF++. Unlike Table 7, these tables do not include Opus-MT, as no Opus-MT checkpoint is available for translating Korean into Chinese or Japanese. The pattern observed for English also holds for Chinese and Japanese: the proposed pivot-based translation consistently outperforms direct translation in these languages.

Exaone is a general-purpose LLM, whereas m2m_100_1.2B is a specialized neural machine translation model. Notably, both models perform better with the proposed pivot-based approach than with direct translation, and this holds for all three target languages. This demonstrates the robustness and effectiveness of the proposed pivot-based translation approach with character-level tokenization for dialect normalization.