1. Introduction

Respiratory diseases rank among the most prevalent medical conditions worldwide, affecting a significant portion of the global population [1]. Despite their widespread impact, many individuals struggle to recognize or interpret the symptoms associated with these conditions. Auscultation of lung sounds remains a fundamental diagnostic technique in clinical settings for identifying respiratory abnormalities [2]. These sounds, characteristically aperiodic and non-stationary, carry vital diagnostic information pertinent to the early detection of pulmonary diseases [3]. Lung sounds are typically classified into three main categories: wheezes, crackles, and rhonchi. Wheezes are continuous, high-pitched, abnormal sounds that occur when airflow is partially obstructed, often observed in patients suffering from pneumonia or interstitial pulmonary fibrosis [4,5]. Crackles are sudden, discontinuous sounds heard during both inspiration and expiration, and they are commonly associated with conditions such as asthma and Chronic Obstructive Pulmonary Disease (COPD). Rhonchi are low-pitched, coarse, snoring-like sounds produced by airway secretions and turbulent airflow. Accurate classification of these lung sounds requires detailed analysis of their acoustic properties. Normal respiratory sounds typically occupy a frequency range of 100 to 200 Hz and may be perceptible up to 800 Hz under sensitive detection conditions. In contrast, abnormal respiratory sounds span a broader frequency range, from approximately 200 to 2000 Hz, and are often characterized by lower pitch and more irregular patterns [6,7,8].

In recent years, deep learning-based artificial intelligence (AI) research has been actively pursued for the automated analysis of respiratory sounds [9,10]. These studies often involve converting raw audio signals into two-dimensional representations, such as spectrograms or Mel-Frequency Cepstral Coefficients (MFCC), which serve as inputs to Convolutional Neural Network (CNN)-based models for disease classification. CNN-based approaches have demonstrated high accuracy by effectively extracting discriminative features and recognizing patterns within the image-like representations of audio data [11]. However, these AI models typically require substantial computational resources, often necessitating the use of high-performance server infrastructure. As a result, auscultated lung sound data must be transmitted from the patient’s device to remote servers for inference, raising significant concerns regarding data privacy and security. To address these issues, recent efforts have turned toward on-device AI, which allows models to perform inference locally without relying on server-side processing [12]. By confining data processing to the device itself, on-device AI offers improved data confidentiality and mitigates the risk of security breaches associated with cloud-based systems. Nevertheless, most existing AI models are designed under the assumption of ample computational availability, as found in server environments [13]. This poses substantial challenges when attempting to deploy such models on resource-constrained devices, where executing traditional architectures remains impractical due to hardware limitations.

To address the aforementioned challenges, this study proposes a lung sound classification model specifically designed for on-device AI applications. The proposed scheme extracts essential features from lung sound recordings, including Mel spectrograms, chromagrams, and MFCC. These features serve as the input to a CNN that performs disease classification. The CNN architecture is carefully designed to capture both spectral characteristics and temporal dynamics inherent in respiratory sounds. The proposed model is designed as a lightweight CNN architecture optimized for on-device use. It features a repeated structure consisting of two Inception blocks followed by a single 2D convolutional layer, repeated three times. This design enables the extraction of rich multi-scale features while maintaining low computational complexity. The compact architecture ensures efficient inference and reduced model size, making it suitable for resource-constrained environments.

The remainder of this study is organized as follows: Section 2 describes the lung sound classification model and on-device AI; Section 3 presents the lightweight lung sound classification model for on-device AI; in Section 4, the experimental results of the proposed scheme are presented; and the conclusion and future work are provided in Section 5.

2. Related Work

2.1. Lung Sound Dataset

Several publicly available datasets have been introduced to support the training of AI models for pulmonary disease detection. Among them, the ICBHI 2017 dataset [14] was developed to facilitate research on the automatic classification of respiratory diseases and the analysis of respiratory sounds. The data were collected from clinical institutions located in Portugal and Greece. A detailed summary of the dataset’s composition is provided in Table 1.

The ICBHI 2017 dataset alone is limited in volume and, therefore, insufficient for training robust and generalizable AI models. To overcome this limitation, Acharya and Basu employed data augmentation techniques to effectively expand the dataset. In addition to ICBHI, the KAUH dataset, comprising respiratory sound recordings collected at King Abdullah University Hospital in Jordan, has also been utilized to support model development. The detailed composition of the KAUH dataset is summarized in Table 2.

The Peking University dataset was developed to address the imbalance in age distribution and disease categories observed in the ICBHI dataset. It comprises a total of 11,968 respiratory sound recordings collected from 40 individuals, including 20 hospitalized patients with respiratory diseases and 20 healthy participants. This dataset is approximately 1.5 times larger than the ICBHI dataset in terms of recording volume. The Coswara dataset consists of respiratory and cough sound recordings intended for the screening and diagnosis of conditions such as SARS-CoV-2. It includes audio data from 2635 participants, totaling approximately 65 h of recorded data.

In this study, the ICBHI 2017 and KAUH datasets were employed to train the proposed AI model. Given the limited volume of available training data, several data augmentation techniques were applied to enhance dataset diversity and improve model generalization. These techniques included time stretching, pitch shifting, background noise addition, time shifting, and volume scaling.

2.2. Lung Sound Classification

To achieve high performance in lung sound classification, a variety of deep learning (DL)-based approaches have been investigated [15,16]. Basu et al. [17] extracted MFCC from lung sound recordings to classify five distinct respiratory diseases. Bardou et al. [18] extracted MFCC and LBP features from lung sound recordings and achieved high classification accuracy using a CNN-based model. Petmezas et al. [19] proposed a CNN–LSTM hybrid model that extracts features from STFT spectrograms and captures temporal dependencies for lung sound classification. Li et al. [20] proposed a residual network with augmented attention convolution, leveraging variable Q-factor wavelet and triple STFT transforms to enhance lung sound feature representation and classification performance.

The models discussed above generally require deployment on high-performance servers to achieve optimal accuracy [21]. To address the computational demands of such models, recent research has focused on lightweight models. Shuvo et al. [22] proposed a lightweight CNN with four convolutional blocks that classifies six respiratory conditions using scalogram-based features. Wanasinghe et al. [23] introduced a lightweight CNN that classifies ten lung sound categories using stacked representations of Mel spectrograms, MFCC, and chromagrams for efficient and low-complexity feature extraction.

In this study, we propose a scheme that uses stacked image representations composed of MFCC, Mel spectrograms, and chroma features extracted from audio signals as the model input. To achieve model compactness, Inception blocks are employed, and their output features are integrated to enhance model stability. This design is expected to achieve high classification accuracy while maintaining a lightweight model size.

2.3. On-Device AI

AI-based digital healthcare technologies have recently garnered significant attention as a transformative domain in medical innovation, prompting extensive research efforts. Deep Neural Networks (DNN), which form the foundation of modern AI, are particularly effective at learning complex patterns from large-scale data. However, conventional DNN models typically require computationally intensive server environments to operate efficiently [13]. This requirement renders them unsuitable for deployment in resource-constrained settings, such as regions with limited communication infrastructure, thereby restricting access to advanced healthcare services. To address these limitations, on-device AI technologies have been explored within the medical domain. On-device AI refers to the execution of AI inference algorithms directly on embedded systems, such as those integrated into wearable devices, clinical instruments, or smart medical equipment. Unlike traditional cloud-based systems that depend on internet connectivity and remote servers, on-device AI offers several advantages, including real-time inference, improved data security, operational autonomy, and uninterrupted service continuity. Malche et al. [24] proposed a Tiny Machine Learning (TinyML) model tailored for wearable Internet of Things (IoT) devices. Designed for snore detection, the model demonstrated low-latency performance in real-world sleep environments while operating with minimal computational resources. Roy et al. [25] introduced an on-device Semi-Supervised Learning (SSL) system capable of detecting and predicting human activity patterns in real time. Their system utilizes data collected from Inertial Measurement Unit (IMU) sensors embedded in wearable devices to identify user activity events. Based on these events, a cluster-based learning strategy is employed to preserve data privacy. This clustering mechanism incorporates both population-based and distance-based strategies to enhance performance. As a result, the system achieved high accuracy and computational efficiency under constrained hardware conditions.

Since on-device AI performs real-time inference directly on the device, power efficiency becomes a critical design consideration. While Graphics Processing Units (GPU), commonly used in cloud server environments, function as general-purpose hardware accelerators, they are associated with substantial power consumption. To address this limitation, Neural Processing Units (NPU) have emerged as a promising low-power alternative [26]. NPUs are architecturally inspired by the human brain, employing a large number of interconnected nodes to process information efficiently. They are specifically optimized for artificial intelligence and machine learning workloads, offering significantly lower power consumption than GPUs and greater task-specific efficiency than conventional Central Processing Units (CPU). As the adoption of on-device AI continues to expand, there is growing interest in leveraging NPUs to support energy-efficient, high-performance inference on edge devices.

In this paper, we evaluate the performance of the proposed scheme in an NPU-based on-device environment to validate its effectiveness under realistic deployment conditions. This work offers practical value by demonstrating that a lightweight yet accurate model can operate efficiently on resource-constrained edge devices. Furthermore, it contributes to the field by providing a scalable architecture that balances computational efficiency and classification performance for respiratory sound analysis.

3. Proposed Scheme

This section presents the lung sound classification model. First, we introduce the preprocessing of lung sounds. Second, we present the lightweight classification model.

3.1. Lung Sound Preprocessing

To classify respiratory diseases using a CNN-based model, time–frequency domain features are extracted from audio signals, specifically Mel spectrograms, chroma, and MFCC. These features are then combined and represented as a composite image, which serves as the input to the CNN. To compute the Mel spectrogram, the STFT is first applied to the audio signal. The STFT captures the signal’s frequency content over time and is computed as shown in Equation (1).

$$X(m, k) = \sum_{n} x(n)\, w(n - m)\, e^{-j 2 \pi k n / N} \tag{1}$$

where $x$ denotes the input signal, $n$ denotes the index of the input signal, $w$ denotes the window function, $m$ denotes the frame index, $k$ denotes the frequency index, and $N$ is the FFT length. Based on the STFT values, the power spectrogram is computed as shown in Equation (2).

$$P(m, k) = \left| X(m, k) \right|^{2} \tag{2}$$

The power spectrogram is then passed through a Mel filter bank to compute the Mel spectrogram, as shown in Equation (3).

$$M(m, i) = \sum_{k} H_i(k)\, P(m, k) \tag{3}$$

where $i$ denotes the filter index, and $H_i(k)$ denotes the frequency weighting of the $i$-th filter. The Mel spectrogram represents the short-term power spectrum of a sound: the sampled air-pressure signal is transformed from the time domain to the frequency domain using the Fast Fourier Transform (FFT), the frequency axis is converted to the Mel scale, and the amplitude is rendered as the color dimension [27]. The chroma feature is computed by mapping the power spectrum to pitch classes, as expressed in Equation (5).

$$C(m, c) = \sum_{k \,:\, p(k) = c} P(m, k) \tag{5}$$

where $c$ denotes the pitch class and $p(k)$ is the pitch class assigned to frequency index $k$. MFCC represent the short-term power spectrum of a sound and are derived from the Mel frequency cepstrum. They are computed by applying a linear discrete cosine transform (DCT) to the logarithmic power spectrum on a nonlinear Mel-scaled frequency axis [2]. The log–Mel spectrogram undergoes DCT to decorrelate the coefficients and capture the most salient features. The resulting coefficients are referred to as MFCC and are calculated as shown in Equation (6).

$$\mathrm{MFCC}(m, d) = \sum_{i=1}^{I} \log\big(M(m, i)\big) \cos\left[\frac{\pi d}{I}\left(i - \frac{1}{2}\right)\right], \quad d \in D \tag{6}$$

where $d$ denotes the MFCC dimension, $D$ denotes the set of MFCC dimensions, and $I$ is the number of Mel filters. The audio feature representations corresponding to the Mel spectrogram, chroma, and MFCC are illustrated in Figure 1.

The extracted audio features are stacked and used as the input to the CNN model for disease classification. To enhance the frequency resolution of audio signals and improve the representational capacity for speech patterns, we employed a Mel scale with 128 frequency bins. Accordingly, the frequency dimension was set to 128. The temporal dimension, set to 216, was determined based on the length of the audio and calculated as shown in Equation (7).

Algorithm 1 shows the pseudocode for lung sound preprocessing. The audio signal is first resampled according to the sampling rate (sr) to ensure temporal consistency across recordings. The signal is then formatted to match a fixed input duration, using padding or truncation if necessary, to standardize the temporal dimension for model input. Next, three distinct audio features are extracted to capture complementary characteristics of the lung sounds. The Mel spectrogram is computed as described in Equation (3), providing a time–frequency representation with perceptually scaled frequency bins. The chroma feature, extracted via Equation (5), captures the pitch class distribution, which is relevant for tonal components present in pathological sounds. Additionally, MFCC are derived using Equation (6) to represent the spectral envelope of the sound, which is known to be effective in characterizing respiratory acoustics. Once these features are extracted, each is resized to a common image resolution of 128 × 216, allowing compatibility with CNN input formats. Feature normalization is applied to standardize value ranges. Finally, the three features (Mel spectrogram, chroma, and MFCC) are stacked along the channel dimension.

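$$T = \left\lfloor \frac{l \cdot \mathit{sr}}{\mathit{hop}} \right\rfloor + 1 \tag{7}$$

where $T$ denotes the temporal dimension, $l$ denotes the audio length, $\mathit{sr}$ denotes the sampling rate of the audio, and $\mathit{hop}$ denotes the hop length.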
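As a quick check of the temporal dimension of 216, the frame count can be worked out for a 5 s clip. This is only a sketch: the sampling rate of 22,050 Hz and the hop length of 512 samples are assumptions (neither value is stated in this section), and the formula assumes centered framing, which yields one frame more than the integer quotient of samples and hop length. The function name `num_frames` is hypothetical.

```python
def num_frames(duration_s: float, sr: int, hop_length: int) -> int:
    """Frame count under centered framing: floor(samples / hop) + 1."""
    n_samples = int(duration_s * sr)
    return n_samples // hop_length + 1


# Assumed values: sr = 22050 Hz and hop_length = 512 are not given in the text.
print(num_frames(5.0, sr=22050, hop_length=512))  # 216
```

Under these assumed settings, 110,250 samples divided by a hop of 512 gives 215 full hops, and the extra centered frame brings the total to 216.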

| Algorithm 1: Preprocessing of Lung Sound |

| | Data: Lung sound signal |

| | Result: Preprocessed audio feature |

| 1: | Resample the audio signal based on the sampling rate (sr) |

| 2: | Formatting the audio based on input audio length |

| 3: | Extract Mel spectrogram feature using Equation (3) |

| 4: | Extract chroma feature using Equation (5) |

| 5: | Extract MFCC feature using Equation (6) |

| 6: | Transform the audio feature to 2D image (128 × 216) |

| 7: | Normalize the feature value |

| 8: | Stack Mel spectrogram, chroma, and MFCC |
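The formatting, resizing, normalization, and stacking steps of Algorithm 1 (steps 2 and 6 to 8) could look roughly like the NumPy sketch below. It is not the authors' implementation: feature extraction (steps 3 to 5) is replaced by random placeholder arrays, the 12 chroma bins and 20 MFCC coefficients are assumed sizes, nearest-neighbour resizing and min-max scaling are assumed choices, and all function names are hypothetical.

```python
import numpy as np

TARGET_SHAPE = (128, 216)  # (frequency bins, time frames) stated in the text


def fix_length(signal: np.ndarray, n_samples: int) -> np.ndarray:
    """Zero-pad or truncate a 1-D signal to exactly n_samples (step 2)."""
    if len(signal) >= n_samples:
        return signal[:n_samples]
    return np.pad(signal, (0, n_samples - len(signal)))


def resize_nearest(feature: np.ndarray, shape=TARGET_SHAPE) -> np.ndarray:
    """Resize a 2-D feature map by nearest-neighbour sampling (step 6)."""
    rows = np.arange(shape[0]) * feature.shape[0] // shape[0]
    cols = np.arange(shape[1]) * feature.shape[1] // shape[1]
    return feature[np.ix_(rows, cols)]


def min_max(feature: np.ndarray) -> np.ndarray:
    """Scale values to [0, 1]; a constant map becomes all zeros (step 7)."""
    span = feature.max() - feature.min()
    if span == 0:
        return np.zeros(feature.shape, dtype=np.float32)
    return ((feature - feature.min()) / span).astype(np.float32)


def stack_features(mel, chroma, mfcc) -> np.ndarray:
    """Resize, normalize, and stack along the channel axis (steps 6 to 8)."""
    return np.stack([min_max(resize_nearest(f)) for f in (mel, chroma, mfcc)])


# Placeholder feature maps standing in for steps 3 to 5 (assumed bin counts).
rng = np.random.default_rng(0)
mel = rng.random((128, 216))
chroma = rng.random((12, 216))
mfcc = rng.random((20, 216))

image = stack_features(mel, chroma, mfcc)
print(image.shape)  # (3, 128, 216)
```

In a real pipeline the placeholder arrays would come from an audio feature library, and an interpolating resize might be preferred over nearest-neighbour sampling.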

3.2. Lightweight Classification Model

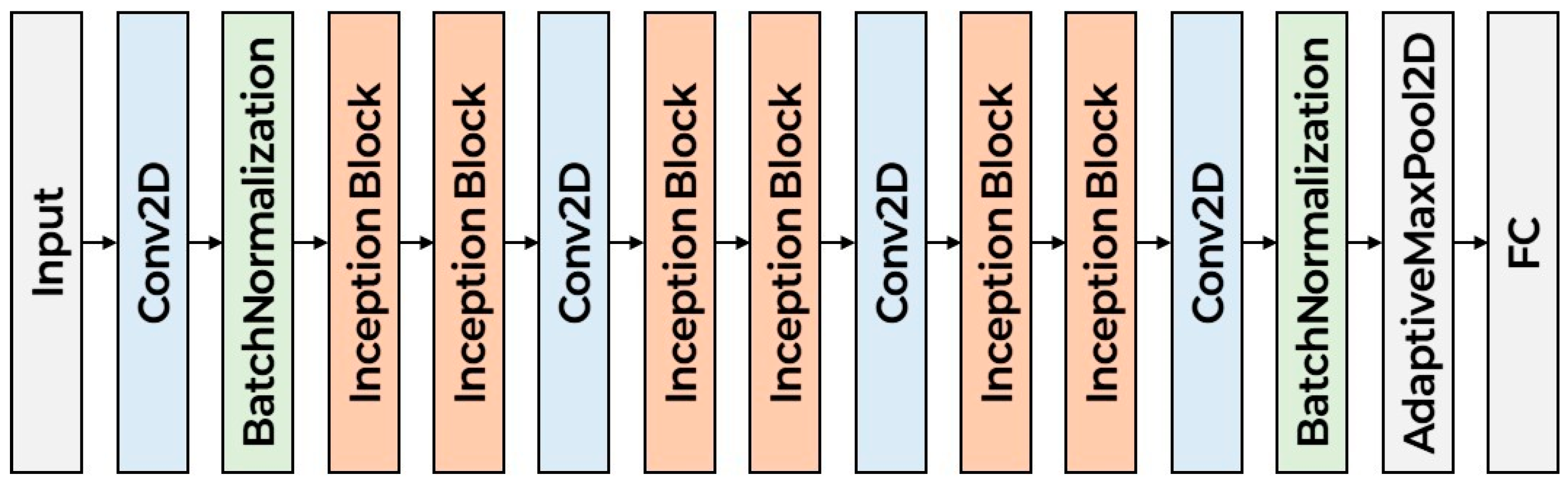

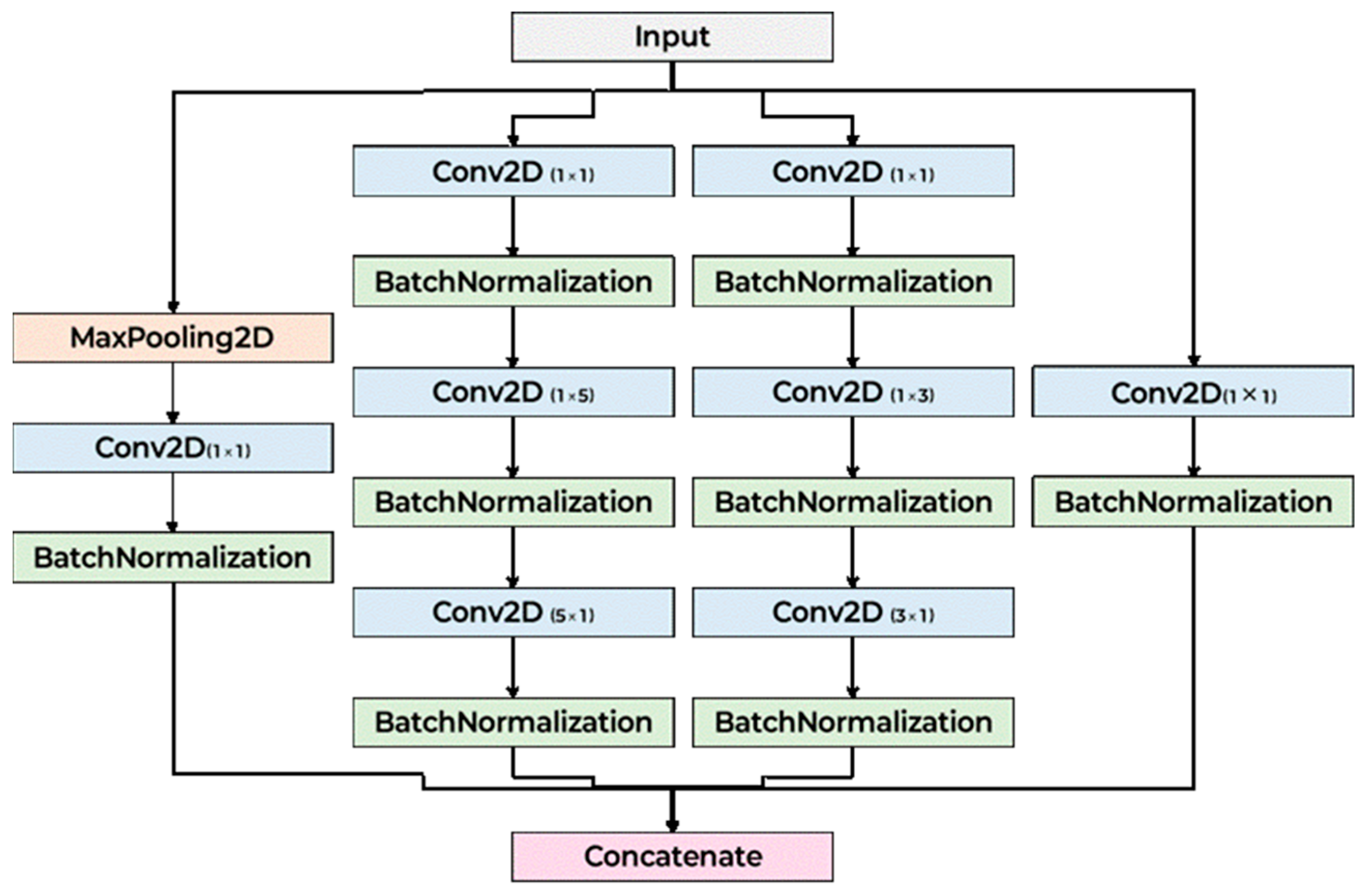

Figure 2 illustrates the architecture of the proposed CNN model. The model begins with an initial convolutional layer designed to stabilize and normalize the input data. It is subsequently organized into three primary stages, each comprising multiple Inception blocks. These Inception blocks incorporate parallel convolutional branches with varying kernel sizes, allowing the network to simultaneously extract both local and global features across different receptive fields. By stacking multiple Inception blocks in sequence, the model extracts rich multi-scale features while keeping the computational complexity low. Following the final Inception block, the spatial dimensions of the feature maps are reduced to a fixed size, which is then passed through a Fully Connected (FC) layer for classification. The detailed layer configuration of a single Inception block is presented in Figure 3.

In designing the proposed scheme, we considered time–frequency representations such as the Mel spectrogram, chroma, and MFCC, each of which captures complementary aspects of the acoustic signal. Asymmetric convolutional kernels were selected for their ability to effectively model distinct temporal and spectral patterns within these features, while maintaining computational efficiency. Although depthwise separable convolutions can isolate individual feature maps with reduced complexity, they are inherently limited in capturing cross-feature dependencies and integrating information across dimensions. By contrast, the Inception block’s multi-branch structure enables simultaneous extraction of features at multiple receptive fields and facilitates the integration of diverse representations, thereby enhancing the model’s capacity to capture the complex interdependencies present in lung sound data.

Careful consideration was given to the selection of kernel sizes to effectively capture the spatiotemporal patterns inherent in time–frequency representations such as Mel spectrograms, chromagrams, and MFCC. Given the rectangular shape of the input (e.g., 128 × 216), the architecture incorporates asymmetric convolutional kernels (specifically combinations of 1 × 3 and 3 × 1, as well as 1 × 5 and 5 × 1 convolutions) in place of standard 3 × 3 and 5 × 5 kernels. This design maintains a comparable receptive field while reducing computational complexity. These asymmetric kernels are particularly well-suited for processing spectrogram-like inputs, as they can independently model temporal variations (along the width) and frequency patterns (along the height). Additionally, 1 × 1 convolution layers are employed in transition blocks to adjust channel dimensions without altering spatial resolution, enabling flexible depth control while preserving localization information. Max pooling with a 3 × 3 kernel and stride 1 is also included in each Inception block branch to enhance local feature abstraction without reducing feature map size. Finally, the classifier uses a 3 × 3 convolution to integrate local features, followed by an adaptive max pooling layer to aggregate global features regardless of input size, ensuring compatibility with variable input resolutions and robust classification performance.
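To make the complexity argument for asymmetric kernels concrete, the short sketch below counts the learnable parameters of a single k × k convolution against a 1 × k convolution followed by a k × 1 convolution. The channel width of 64 is a hypothetical value chosen for illustration, not a figure from the proposed architecture, and the helper names are likewise assumptions.

```python
def conv_params(c_in: int, c_out: int, kh: int, kw: int, bias: bool = True) -> int:
    """Number of learnable parameters in a 2-D convolution layer."""
    return c_in * c_out * kh * kw + (c_out if bias else 0)


def square_vs_asymmetric(channels: int, k: int) -> tuple[int, int]:
    """Compare one k x k convolution with a 1 x k followed by a k x 1 pair."""
    square = conv_params(channels, channels, k, k)
    pair = conv_params(channels, channels, 1, k) + conv_params(channels, channels, k, 1)
    return square, pair


# Hypothetical channel width of 64; the paper's actual widths are not given here.
for k in (3, 5):
    square, pair = square_vs_asymmetric(channels=64, k=k)
    print(f"{k}x{k}: {square} parameters, 1x{k} + {k}x1: {pair} parameters")
```

With equal input and output widths, the factorized pair needs roughly 2k/k² of the weights of the square kernel, about two thirds for k = 3 and two fifths for k = 5, while covering the same k × k receptive field.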

4. Performance Evaluation

4.1. Experiment Setup

To evaluate the performance of the proposed scheme, we implemented the model using the PyTorch framework (ver. 2.7.1) [28]. Table 3 summarizes the parameter settings applied during audio data preprocessing. The experiments were conducted using the ICBHI 2017 and KAUH datasets, both of which contain lung sound recordings with variable durations ranging from 5 to 90 s. To standardize input lengths, all recordings were segmented into 5 s clips. For example, a 12 s audio file was split into two 5 s segments, with the remaining 2 s discarded. This segmentation strategy enabled an increase in the number of usable training samples. Despite this preprocessing step, the combined dataset yielded only 1,298 lung sound segments, which is insufficient for training a robust deep learning model.

To address this limitation, we applied various data augmentation techniques, including tempo modification, pitch shifting, background noise addition, time shifting, and volume scaling. The time stretch rate was set to 0.9, corresponding to a 10% decrease in playback speed, which reflects variability in patients’ respiratory rates. Pitch shifts of +1 and −1 semitone were applied to simulate pathological frequency changes observed in abnormal breath sounds. The standard deviation of additive noise was set to 0.005, which reflects moderate ambient noise levels commonly encountered in real clinical environments, as opposed to extreme industrial noise. A time shift of 0.5 s was included to account for variability in auscultation timing or onset delays. Volume scaling was applied in the range of 0.5× to 1.5× to simulate variability in recording intensity due to stethoscope pressure, device sensitivity, or patient condition. These augmentation parameters were chosen to reflect both the physiological variability of lung sounds and the diversity of recording conditions encountered in real-world clinical settings. After augmentation, the dataset comprised 19,894 samples.
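The segmentation rule and three of the augmentations described above can be sketched with NumPy alone. The sampling rate of 22,050 Hz is an assumption, the circular shift is only one possible reading of "time shift", and the function names are hypothetical. Time stretching and pitch shifting are left out because they normally rely on a dedicated audio library.

```python
import numpy as np

SR = 22050  # assumed sampling rate; not stated in this section


def segment(signal: np.ndarray, sr: int = SR, clip_s: float = 5.0) -> list:
    """Split into non-overlapping clips of clip_s seconds; drop the remainder."""
    n = int(clip_s * sr)
    return [signal[i * n:(i + 1) * n] for i in range(len(signal) // n)]


def add_noise(clip: np.ndarray, rng, std: float = 0.005) -> np.ndarray:
    """Add zero-mean Gaussian noise with the given standard deviation."""
    return clip + rng.normal(0.0, std, size=clip.shape)


def time_shift(clip: np.ndarray, sr: int = SR, shift_s: float = 0.5) -> np.ndarray:
    """Circularly shift the clip by shift_s seconds (assumed interpretation)."""
    return np.roll(clip, int(shift_s * sr))


def scale_volume(clip: np.ndarray, rng, low: float = 0.5, high: float = 1.5) -> np.ndarray:
    """Multiply the clip by a gain drawn uniformly from [low, high]."""
    return clip * rng.uniform(low, high)


rng = np.random.default_rng(0)
recording = 0.1 * rng.standard_normal(12 * SR)  # synthetic 12 s recording

clips = segment(recording)
augmented = []
for clip in clips:
    augmented.append(add_noise(clip, rng))
    augmented.append(time_shift(clip))
    augmented.append(scale_volume(clip, rng))

print(len(clips), len(augmented))  # 2 6
```

The 12 s synthetic recording yields two 5 s clips, matching the example in the text, and each clip then produces one variant per augmentation.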

Table 4 presents the parameter settings used for training the CNN model. For model evaluation, 80% of the dataset was allocated for training and the remaining 20% for validation. Classification performance was assessed using four standard metrics: accuracy, F1-score, precision, and recall, calculated according to Equations (8)–(11).

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{8}$$

$$\mathrm{F1\text{-}score} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{9}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{10}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{11}$$

where $TP$ denotes the outcome where the model correctly predicts the positive class, $TN$ denotes the outcome where the model correctly predicts the negative class, $FP$ denotes the cases where the model incorrectly predicts the positive class, and $FN$ denotes the outcome where the model incorrectly predicts the negative class. To mitigate potential performance bias due to data imbalance, each model was trained ten times, and the corresponding performance metrics were reported. All metrics were computed as macro-averaged scores.
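For a multi-class problem, the macro-averaged scores mentioned above are obtained by computing precision, recall, and F1-score per class in a one-vs-rest fashion and then averaging over classes. The sketch below illustrates this on a toy confusion matrix; the counts are hypothetical and are not results from the paper, and the function name `macro_metrics` is an assumption.

```python
def macro_metrics(confusion: list) -> dict:
    """Accuracy and macro-averaged precision, recall, and F1 from a confusion matrix.

    confusion[i][j] counts samples of true class i predicted as class j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    precisions, recalls, f1s = [], [], []
    for c in range(n):
        tp = confusion[c][c]
        fp = sum(confusion[r][c] for r in range(n)) - tp  # predicted c, true class differs
        fn = sum(confusion[c]) - tp                       # true c, predicted otherwise
        p = tp / (tp + fp) if tp + fp else 0.0
        r = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * p * r / (p + r) if p + r else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(f1)
    return {
        "accuracy": sum(confusion[c][c] for c in range(n)) / total,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


# Toy 3-class confusion matrix with hypothetical counts.
cm = [[8, 2, 0],
      [1, 4, 0],
      [1, 0, 4]]
scores = macro_metrics(cm)
print({name: round(value, 4) for name, value in scores.items()})
```

Because every class contributes equally to the macro average, rare classes weigh as much as frequent ones, which is why macro scores can move differently from overall accuracy on an imbalanced dataset.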

4.2. Proposed Scheme Validation

Table 5 presents the classification results according to the number of Inception blocks in the proposed scheme. The preceding number indicates the number of repetitions of the Inception block. In the case of 2T, the shallow network depth limits the model’s ability to fully capture time–frequency features of the audio, resulting in lower accuracy, F1-score, precision, and recall. Conversely, 4T, with a deeper network, is able to extract and recognize audio features more effectively, thereby achieving higher performance; however, it also exhibits signs of overfitting as the number of layers increases. The 3T configuration, having a greater depth than 2T, demonstrates the highest performance with minimal overfitting to the audio features.

Table 6 shows the classification performance according to the audio feature combination. The configuration of Mel spectrogram, chroma, and MFCC consistently yielded superior results compared to alternative permutations. This arrangement allows the model to process the global spectral energy distribution first (Mel spectrogram), refine the representation with harmonic and pitch-related information (chroma), and finally encode a compressed summary of the spectral envelope (MFCC). Such ordering aligns with a hierarchical progression from low-level acoustic structure to mid-level harmonic content and finally to high-level compressed descriptors. This hierarchical feature integration appears to facilitate more stable learning in the initial convolutional layers, enabling the network to more effectively capture both temporal and frequency-domain dependencies present in lung sound data. Extracting the three features takes an average of 42.22 ms.

Based on these experimental results, we selected the configuration with three repetitions of the Inception block, together with the audio feature combination of Mel spectrogram, chroma, and MFCC.

4.3. Classification Results in GPU

The experiments were conducted on a system equipped with an Intel Core i9-13990K CPU, 64 GB of RAM, and an NVIDIA GeForce RTX 4090 GPU.

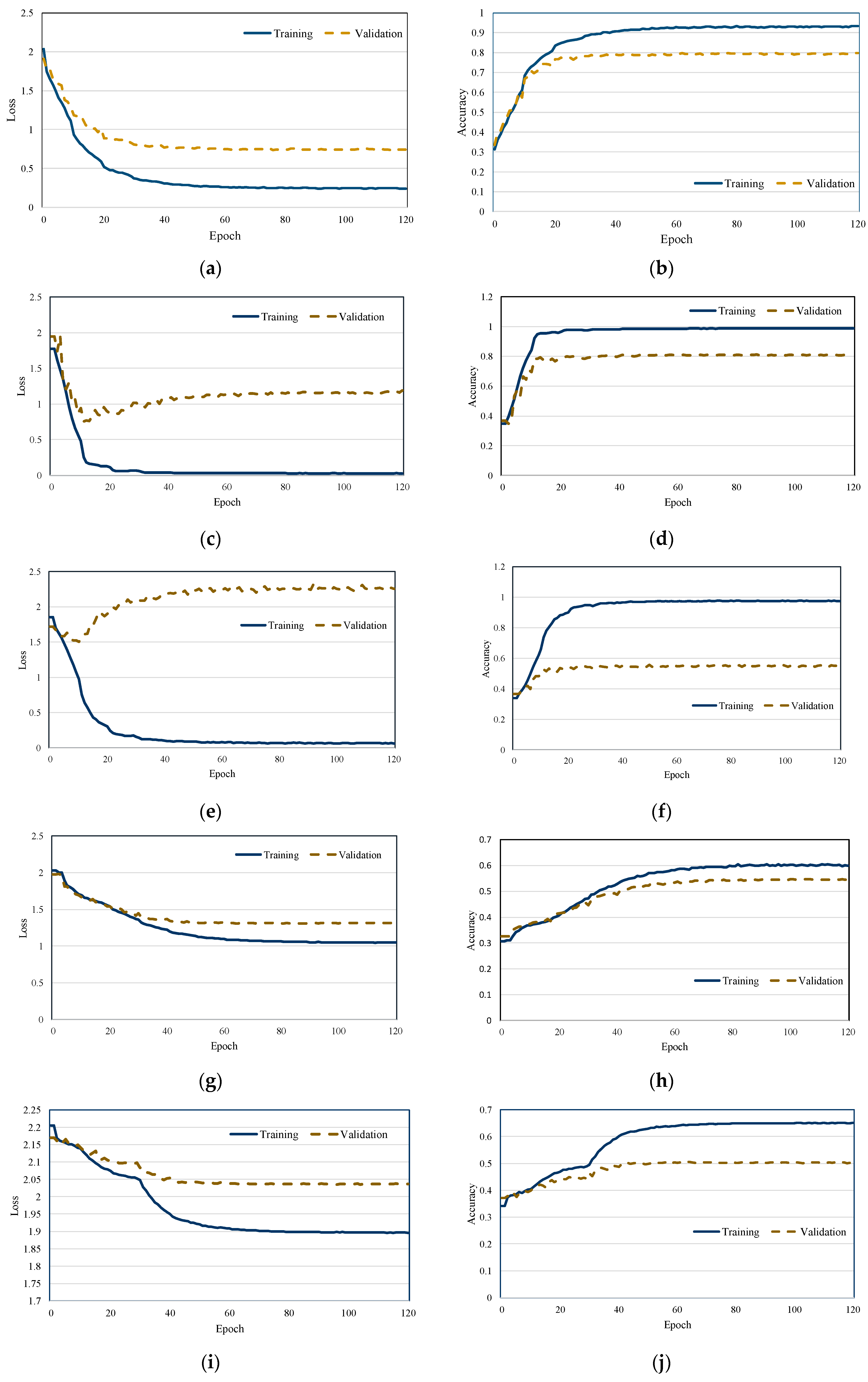

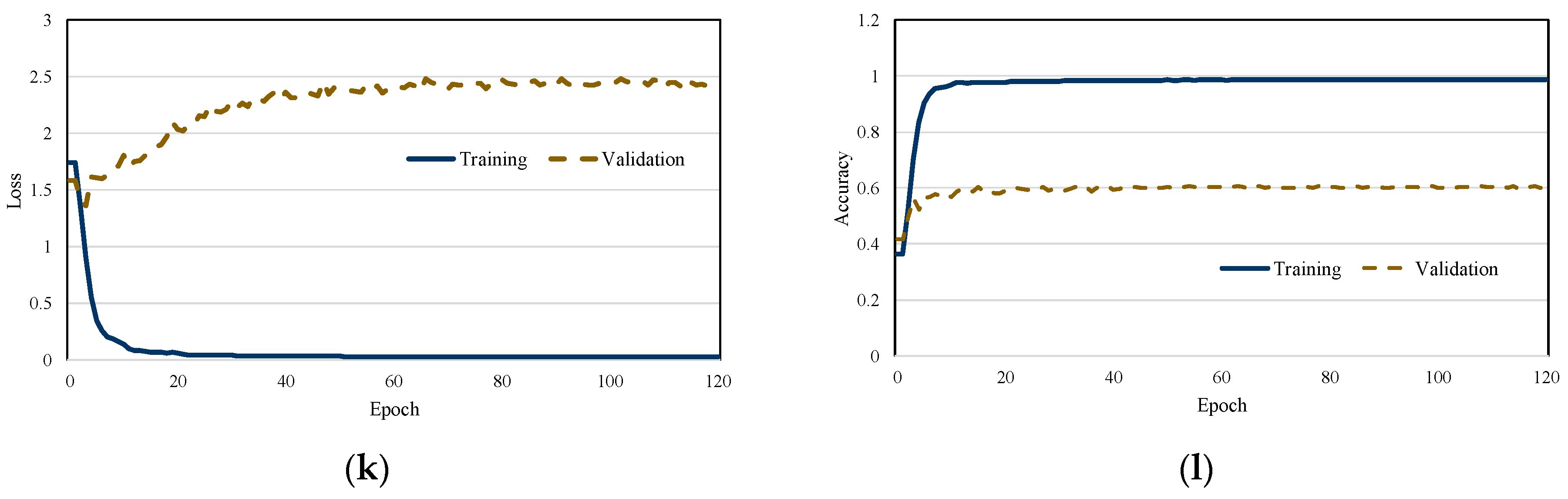

Figure 4 illustrates the accuracy and loss curves of each scheme. As the training loss gradually decreases, a corresponding increase in classification accuracy is observed, indicating effective model learning. Moreover, the proposed scheme demonstrates stable training dynamics, with no significant fluctuations in either the loss or accuracy curves. The results also reveal that the model converges rapidly, highlighting the efficiency and robustness of the training process. We observed that the validation loss of both MobileNetV2 and EfficientNet did not decrease. This is attributed to the depthwise convolution mechanism of MobileNetV2 and the regularization strategy of EfficientNet, which were not effective in extracting discriminative features from audio spectrograms.

Table 7 presents the classification results of the proposed scheme in comparison with existing lung sound classification models, while Table 8 summarizes the number of parameters and model sizes for each approach. ResNet50, which employs a deep architecture with residual blocks, achieves the highest classification performance due to its strong capability to extract and model time–frequency features. However, its deep structure results in the largest model size and the highest number of parameters among all compared schemes. MobileNetV2, conversely, is specifically designed for efficient deployment on mobile and embedded platforms. It utilizes an architecture based on inverted residuals and linear bottlenecks. Although it offers a compact model size and a significantly reduced parameter count, its limited channel capacity leads to lower classification performance than ResNet50, particularly in complex audio scenarios. InceptionV2 employs Inception blocks to reduce model complexity. However, despite having more parameters and a larger model size than MobileNetV2, it exhibits inefficient learning in deeper layers, which contributes to its relatively low classification accuracy. The Stacked model represents a lightweight architecture composed of six convolutional blocks. Owing to its simplicity, it contains the fewest parameters and the smallest model size among the evaluated baselines. Nonetheless, its shallow depth limits its ability to capture robust features, especially under noisy input conditions, resulting in subpar classification performance. EfficientNet shows higher performance than the other lightweight models because it scales the model jointly in depth, width, and resolution. However, this model tends to focus on a limited region of the image and is therefore not well suited to audio features.

In contrast, the proposed scheme effectively captures both temporal and spectral characteristics of lung sounds through the use of Inception blocks. Unlike InceptionV2, the proposed architecture incorporates additional convolutional layers following each pair of Inception blocks to stabilize the feature maps. This design enables the model to achieve classification performance comparable to ResNet50 while outperforming all other lightweight baselines. Furthermore, it achieves the smallest overall model size and maintains a moderate parameter count, greater than that of the Stacked model but considerably lower than ResNet50 and InceptionV2.

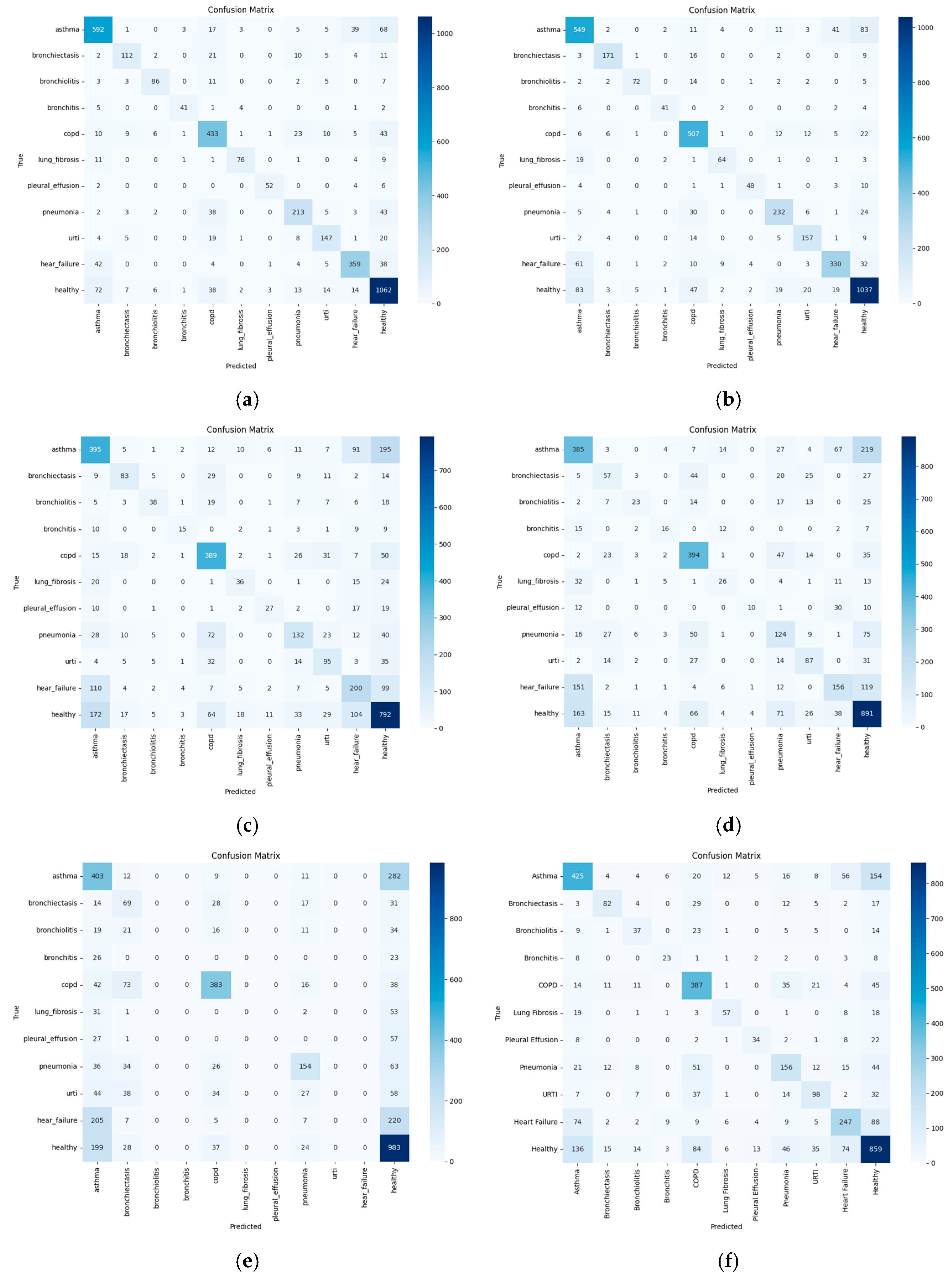

Figure 5 presents the confusion matrices for each classification scheme. ResNet50 demonstrates high accuracy for classes with a larger number of samples and well-defined features, such as healthy, COPD, pneumonia, and asthma. However, it exhibits misclassifications among respiratory conditions with overlapping symptoms, including URTI, bronchitis, and bronchiectasis. Similar confusion is also observed between heart failure and asthma. Conditions with fewer samples, such as bronchiolitis, pleural effusion, and lung fibrosis, tend to show lower classification accuracy due to data scarcity. MobileNetV2 shows reduced accuracy, particularly for asthma and heart failure. Notably, frequent confusion occurs among the classes of asthma, heart failure, and URTI. InceptionV2 also exhibits frequent misclassifications among heart failure, asthma, and healthy, as well as mutual confusion among URTI, pneumonia, and bronchitis. The Stacked scheme misclassifies between asthma and healthy, heart failure and asthma, and pneumonia and healthy. The proposed scheme, while achieving better overall performance, still exhibits some misclassification between specific class pairs, notably asthma and healthy, pneumonia and URTI, and heart failure and asthma.

EfficientNet achieves high classification accuracy for the healthy class, as well as COPD and asthma, which are relatively well-represented and acoustically distinguishable. In contrast, substantial confusion is observed among asthma, heart failure, and URTI, suggesting overlapping symptom-related acoustic features. Rare classes such as bronchiolitis, pleural effusion, and lung fibrosis exhibit consistently low true positive rates, likely due to insufficient training samples and limited variability. Notably, heart failure is often misclassified as healthy or asthma, indicating challenges in differentiating cardiovascular-related sounds from respiratory ones.

Table 9 shows the p-values between the proposed scheme and the existing schemes. The differences from the existing schemes were statistically significant, indicating that the observed improvement is highly unlikely to have occurred by chance.

Table 10 shows the performance of each scheme in the ONNX framework. All schemes performed comparably to the GPU-based evaluations, although a slight degradation was observed. Although both the baseline model and the ONNX model operate with FLOAT32 precision, subtle variations arise during the conversion process due to parameter scaling, making the ONNX model less sensitive to fine-grained representations.

4.4. Classification Results in NPU

Figure 6 illustrates the test module employed for the NPU-based evaluation. The system utilizes a Raspberry Pi 5 as the baseboard, paired with the Hailo-8 NPU. The Raspberry Pi is equipped with a 64-bit Arm Cortex-A76 CPU and 16 GB of RAM, while the Hailo-8 NPU delivers up to 26 Tera Operations Per Second (TOPS) of inference performance. The NPU connects via M.2 and operates from −40 °C to 80 °C on a Linux-based system. Due to limited computational resources, the device does not support inference using Float32. To enable model deployment in such environments, quantization must be performed in accordance with the requirements of the supported NPU module. The Hailo-8 NPU supports only 8-bit unsigned integers. Accordingly, all model parameters originally represented in Float32 were quantized by mapping their values to the range of 0–255. This mapping was performed by linearly rescaling each parameter based on its original minimum and maximum values, such that the minimum was mapped to 0 and the maximum to 255. The resulting quantized model sizes are as follows: proposed model, 2.64 MB; ResNet50, 37.6 MB; MobileNetV2, 3.8 MB; InceptionV2, 6.47 MB; and Stacked, 835 KB.
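The min-max mapping to 8-bit unsigned integers described above can be illustrated with a few lines of NumPy. This is a sketch of the arithmetic only, under the assumption that one minimum and one maximum are taken per tensor; it does not reproduce the vendor toolchain used for the actual deployment, and the function names are hypothetical.

```python
import numpy as np


def quantize_uint8(weights: np.ndarray):
    """Linearly map float values to 0..255 using the tensor's min and max."""
    w_min = float(weights.min())
    w_max = float(weights.max())
    scale = (w_max - w_min) / 255.0
    if scale == 0.0:  # constant tensor: any positive scale works
        scale = 1.0
    q = np.round((weights - w_min) / scale).astype(np.uint8)
    return q, scale, w_min


def dequantize(q: np.ndarray, scale: float, w_min: float) -> np.ndarray:
    """Approximate reconstruction of the original float values."""
    return q.astype(np.float32) * scale + w_min


# Hypothetical weight values for illustration.
weights = np.array([-0.5, -0.2, 0.1, 0.3, 0.5], dtype=np.float32)
q, scale, w_min = quantize_uint8(weights)
restored = dequantize(q, scale, w_min)
print(q.dtype, int(q.min()), int(q.max()))  # uint8 0 255
print(float(np.abs(restored - weights).max()) <= scale / 2 + 1e-6)  # True
```

The minimum maps to 0 and the maximum to 255, and the reconstruction error of any single value is bounded by half a quantization step, which is the precision loss the NPU results have to absorb.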

Table 11 presents the classification performance of each model evaluated on the NPU-based platform, while Figure 7 displays the confusion matrices for all classification schemes under NPU execution. EfficientNet shows reduced classification performance after quantization, as the model tends to focus on a limited region of the input image, leading to a decline in overall accuracy for audio feature recognition. Both InceptionV2 and Stacked exhibit a decline in performance following 8-bit quantization. Specifically, the accuracy of InceptionV2 fell by 6.74 percentage points on the NPU relative to its GPU-based result (54.48% in Table 7 versus 47.74% in Table 11), while Stacked shows a 2.29% decrease under the same conditions. Interestingly, despite the drop in accuracy, both models demonstrate improvements in F1-score, precision, and recall. This counterintuitive result can be attributed to the class imbalance present in the dataset. For example, classes such as healthy and asthma are overrepresented, whereas categories like pleural effusion and bronchiolitis contain relatively few samples.

In contrast, the proposed scheme, along with ResNet50 and MobileNetV2, exhibited overall performance improvements on the NPU compared to the GPU. Notably, the proposed scheme achieved classification performance comparable to ResNet50 while operating on the NPU, demonstrating its effectiveness and robustness in resource-constrained environments. As the number of target classes increases in a classification task, the number of parameters learned by the network also grows, potentially leading to overfitting toward specific classes. In GPU-based training environments, high-capacity floating-point models may become overly sensitive to dominant class signals, resulting in biased predictions and reduced generalization capability. However, when such models are quantized from FP32 to UINT8 and deployed on integer-based NPUs, the reduced numerical precision inherently acts as a form of regularization, mitigating the risk of overfitting and improving generalization.
Quantization compresses the dynamic range of weights and activations, effectively suppressing the influence of overly dominant parameters and reducing the model's sensitivity to minor fluctuations. This attenuates class-specific bias, enabling more balanced inference across all classes. Empirically, we observed that the inference accuracy after quantization on the NPU surpasses that of the original GPU-based inference in certain scenarios. In addition to this implicit regularization view, we attribute the observed gains to three deployment-side factors: first, batch normalization parameters are folded into adjacent convolutions during export, eliminating potential running-statistics mismatch at inference; second, per-channel weight scaling on the NPU equalizes channel contributions and reduces sensitivity to poorly conditioned filters; and third, deterministic integer accumulation with bounded activation ranges curtails extreme logits, yielding more stable and better-calibrated decisions. Furthermore, quantization implicitly reduces over-parameterization and acts as a noise stabilizer, which is particularly beneficial when the training data exhibit label imbalance or noise. Quantization improved the classification accuracy of the COPD and pneumonia classes. Since these classes exhibit large-scale patterns, they are less sensitive to quantization, which reduces the likelihood of misclassification. Furthermore, such classes often possess strong channel specificity, which allows the normalization effect introduced during quantization to further enhance classification accuracy. In contrast, classes such as bronchiolitis, which rely on subtle high-frequency features, may suffer from information loss in the layers after quantization, leading to reduced classification accuracy.
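The first deployment-side factor, folding batch normalization into the preceding layer, rests on a standard algebraic identity. The sketch below checks it on a toy fully connected layer, which stands in for a convolution because the folding is applied per output channel in the same way. All numeric values and function names are hypothetical, and this is not the exporter's actual implementation.

```python
import math


def linear(weights, bias, x):
    """y = W x + b, with one row of weights per output channel."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def batchnorm(y, gamma, beta, mean, var, eps=1e-5):
    """Inference-mode batch normalization using stored running statistics."""
    return [g * (yi - m) / math.sqrt(v + eps) + bt
            for yi, g, bt, m, v in zip(y, gamma, beta, mean, var)]


def fold_batchnorm(weights, bias, gamma, beta, mean, var, eps=1e-5):
    """Return (W', b') such that W' x + b' equals batchnorm(W x + b)."""
    folded_w, folded_b = [], []
    for row, b, g, bt, m, v in zip(weights, bias, gamma, beta, mean, var):
        s = g / math.sqrt(v + eps)           # per-channel scale factor
        folded_w.append([w * s for w in row])
        folded_b.append((b - m) * s + bt)
    return folded_w, folded_b


# Toy layer with two output channels and two inputs (illustrative values only).
W = [[0.5, -1.0], [2.0, 0.25]]
b = [0.1, -0.3]
gamma, beta = [1.5, 0.8], [0.0, 0.2]
mean, var = [0.4, -0.1], [0.9, 1.6]
x = [1.0, 2.0]

reference = batchnorm(linear(W, b, x), gamma, beta, mean, var)
W_folded, b_folded = fold_batchnorm(W, b, gamma, beta, mean, var)
fused = linear(W_folded, b_folded, x)
print(reference, fused)
```

After folding, the deployed graph contains no separate normalization step, so the running statistics are baked into the weights that are subsequently quantized.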

Table 12 presents the inference time and power consumption on the NPU. Among the baseline models, MobileNetV2 achieves the fastest average inference time (150.27 ms) but exhibits a high standard deviation (241 ms) and the highest power consumption (5.55 W), indicating potential instability and inefficiency under real-time constraints. The InceptionV2, EfficientNet, and Stacked models maintain relatively low inference times, but all show high variance in latency and elevated power demands. ResNet50 demonstrates stable performance with the lowest standard deviation among the baselines, though its average inference time remains the highest, limiting its real-time applicability. In contrast, the proposed model offers a more balanced and efficient trade-off. Although its average inference time is higher than that of the lightweight architectures, it achieves notably low variability and moderate power consumption. Although 5 s audio segments are required for disease classification based on lung sound data, the proposed model completes inference in only 245.9 ms, enabling real-time processing.
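To make the real-time claim concrete, the following sketch derives two quantities from the Table 12 averages: the real-time factor (inference time divided by the 5 s segment length) and a rough energy per inference. The energy figure assumes that the reported power is the average draw during inference, which the measurements do not state explicitly, so it should be read as an estimate.

```python
SEGMENT_S = 5.0  # length of one audio segment, in seconds

# (average inference time in ms, power consumption in W), copied from Table 12.
table12 = {
    "Proposed": (245.9, 4.6),
    "ResNet50": (269.77, 3.8),
    "MobileNetV2": (150.27, 5.55),
    "InceptionV2": (166.43, 4.95),
    "Stacked": (157.74, 5.1),
    "EfficientNet": (166.84, 4.45),
}


def summarize(latency_ms, power_w, segment_s=SEGMENT_S):
    """Return the real-time factor and an energy estimate for one inference."""
    latency_s = latency_ms / 1000.0
    return {
        "real_time_factor": latency_s / segment_s,  # below 1 means faster than real time
        "energy_j": power_w * latency_s,            # assumes constant power draw
    }


summary = {name: summarize(*values) for name, values in table12.items()}
for name, stats in summary.items():
    print(f"{name}: RTF={stats['real_time_factor']:.3f}, "
          f"energy={stats['energy_j']:.2f} J")
```

For the proposed model this gives a real-time factor of about 0.049, meaning inference takes roughly 5% of the duration of the segment it classifies.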

We analyzed the memory footprint of each model when deployed on a Raspberry Pi with NPU support. As shown in Table 13, the proposed model consumed approximately 60.3 MB of system memory, while ResNet50 exhibited the highest usage at 150.4 MB. In contrast, lightweight architectures such as MobileNetV2 and EfficientNet maintained significantly lower memory requirements at 15.1 MB and 20.2 MB, respectively. These results confirm the suitability of the proposed and lightweight models for real-time inference on memory-constrained edge devices. We also intended to analyze the resource usage of the NPU itself, but this was not possible because the NPU manufacturer does not support this feature.

Table 14 shows the p-values between the proposed scheme and the existing schemes on the NPU. Although the p-values of the conventional methods before quantization vary, the p-values of the models quantized to the UINT8 format are all below 0.05, indicating that the differences are statistically significant.

5. Conclusions

In this study, we proposed a lung sound classification model specifically designed for on-device AI. The proposed approach extracts three features from the audio data (Mel spectrograms, chromagrams, and MFCCs), transforms them into image representations, and stacks them to form the model input. The model architecture performs convolutional operations that capture both temporal and frequency-domain characteristics of lung sounds. To ensure computational efficiency, the architecture adopts a lightweight design consisting of a repeated structure: two Inception blocks followed by a single 2D convolutional layer, repeated three times. Experimental results demonstrate that the proposed scheme achieves classification performance comparable to that of ResNet50, while outperforming other lightweight models in terms of disease classification performance. Furthermore, it accomplishes this with a significantly smaller model size and reduced parameter count. Evaluation in an NPU-based environment further confirms that the proposed model achieves lower inference latency than more complex network architectures, underscoring its suitability for deployment in resource-constrained settings.

We measured an active power draw of 4.6 W for the NPU path. Under continuous operation, this implies very short endurance on typical wearable energy budgets: a 2 Wh pack (≈520 mAh at 3.85 V) lasts ≈0.43 h (≈26 min), and a 3 Wh pack (≈780–800 mAh) lasts ≈0.65 h (≈39 min). NPU modules with other specifications are also available. The NPU we employed delivers high performance at 26 TOPS; however, its relatively high power consumption poses challenges for long-term operation in wearable devices. Therefore, when deploying NPUs in such devices, the trade-off between performance and battery consumption must be carefully considered.
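The endurance figures above follow directly from dividing the pack energy by the measured power draw. A minimal sketch of that arithmetic is given below; it assumes continuous operation at a constant 4.6 W and ignores conversion losses and the consumption of the rest of the system. The helper names are illustrative only.

```python
POWER_W = 4.6      # measured active power draw of the NPU path
NOMINAL_V = 3.85   # nominal cell voltage used for the mAh conversion


def endurance_hours(capacity_wh, power_w=POWER_W):
    """Run time in hours at a constant power draw."""
    return capacity_wh / power_w


def capacity_mah(capacity_wh, nominal_v=NOMINAL_V):
    """Convert pack energy in Wh to charge in mAh at the nominal voltage."""
    return capacity_wh / nominal_v * 1000.0


results = {}
for wh in (2.0, 3.0):
    hours = endurance_hours(wh)
    results[wh] = {
        "hours": hours,
        "minutes": hours * 60.0,
        "mah": capacity_mah(wh),
    }
    print(f"{wh:.0f} Wh (~{results[wh]['mah']:.0f} mAh): "
          f"{hours:.2f} h (~{results[wh]['minutes']:.0f} min)")
```

Because endurance scales inversely with power, halving the average draw (for example, by duty-cycling inference rather than running it continuously) would double these run times under the same assumptions.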

As future work, we aim to reduce computational complexity in order to shorten inference time while maintaining or improving classification performance on resource-constrained devices. Since the ICBHI 2017 dataset and the KAUH dataset differ in disease types, recording conditions, and demographic characteristics, we will expand the evaluation to cover a more diverse real-world cohort. Public corpora such as Coswara and SPRSound are not directly suitable for this disease classification setting, as they are either COVID-19-centric or encompass a much broader acoustic scope. Therefore, validating the model in a wider context remains a key direction. We also plan to integrate an on-device AI module into an actual stethoscope and evaluate end-to-end accuracy, power consumption, and inference latency during real use. Complementary to these efforts, we will investigate hardware-efficient uncertainty estimation methods compatible with integer-only NPUs and benchmark on-device calibration and risk-aware decision thresholds for quantized models under strict latency and power constraints. Finally, if limited probabilistic inference becomes feasible in the toolchain, we will explore bounded-sample Monte Carlo methods that can still be executed on the target hardware.

Author Contributions

Conceptualization, J.P.; methodology, J.P.; software, J.P. and C.J.; validation, J.P., C.J., Y.C., H.-k.H. and Y.J.; formal analysis, J.P., C.J., Y.C. and H.-k.H.; investigation, J.P. and C.J.; writing—original draft preparation, J.P.; writing—review and editing, J.P.; funding acquisition, H.-k.H. and Y.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Korea Evaluation Institute of Industrial Technology (KEIT) grant funded by the Korean government (No. RS-2025-09872968, Development of small, low-power on-device AI core technologies for digital healthcare and commercialization of digital medical products for respiratory diseases). It was also supported by the Institute of Information and Communications Technology Planning and Evaluation (IITP) grant funded by the Korean government (No. RS-2024-00399373, Development of Core Technology for Multidimensional Attack Surface Management for High-Reliability Medical Device Risk Management).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Acknowledgments

The authors used OpenAI's GPT-5 for translation and grammar checking.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Soriano, J.B.; Kendrick, P.J.; Paulson, K.R.; Gupta, V.; Abrams, E.M.; Adedoyin, R.A.; Adhikari, T.B.; Advani, S.M.; Agrawal, A.; Ahmadian, E. Prevalence and Attributable Health Burden of Chronic Respiratory Diseases, 1990–2017: A Systematic Analysis for the Global Burden of Diseases Study 2017. Lancet Respir. Med. 2020, 8, 585–596.

2. Tariq, Z.; Shah, K.; Lee, Y. Feature-based Fusion using CNN for Lung and Heart Sound Classification. Sensors 2022, 22, 1521.

3. Dar, J.A.; Srivastava, K.K.; Lone, S.A. Spectral Features and Optimal Hierarchical Attention Networks for Pulmonary Abnormality Detection from the Respiratory Sound Signals. Biomed. Signal Process. Control 2022, 78, 103905.

4. Acharya, J.; Basu, A. Deep Neural Network for Respiratory Sound Classification in Wearable Devices Enabled by Patient Specific Model Tuning. IEEE Trans. Biomed. Circuits Syst. 2020, 14, 535–544.

5. Kim, Y.; Hyon, Y.; Jung, S.S.; Lee, S.; Yoo, G.; Chung, C.; Ha, T. Respiratory Sound Classification for Crackles, Wheezes, and Rhonchi in the Clinical Field using Deep Learning. Sci. Rep. 2021, 11, 17186.

6. Zhang, X.; Maddipatla, D.; Narakathu, B.B.; Bazuin, B.J.; Atashbar, M.Z. Development of a Novel Wireless Multi-Channel Stethograph System for Monitoring Cardiovascular and Cardiopulmonary Diseases. IEEE Access 2021, 9, 128951–128964.

7. Gupta, S.; Agrawal, M.; Deepak, D. Gammatonegram based Triple Classification of Lung Sounds using Deep Convolutional Neural Network with Transfer Learning. Biomed. Signal Process. Control 2021, 70, 102947.

8. Zulfiqar, R.; Majeed, F.; Irfan, R.; Rauf, H.T.; Benkhelifa, E.; Belkacem, A.N. Abnormal Respiratory Sounds Classification using Deep CNN through Artificial Noise Addition. Front. Med. 2021, 8, 714811.

9. Faruqui, N.; Yousuf, M.A.; Whaiduzzaman, M.; Azad, A.K.M.; Barros, A.; Moni, M.A. LungNet: A Hybrid Deep-CNN Model for Lung Cancer Diagnosis using CT and Wearable Sensor-based Medical IoT Data. Comput. Biol. Med. 2021, 139, 104961.

10. Zhao, Z.; Gong, Z.; Niu, M.; Ma, J.; Wang, H.; Zhang, Z.; Li, Y. Automatic Respiratory Sound Classification via Multi-branch Temporal Convolutional Network. In Proceedings of the ICASSP 2022–2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 22–27 May 2022; pp. 9102–9106.

11. Le, K.N.T.; Byun, G.; Raza, S.M.; Le, D.T.; Choo, H. Respiratory Anomaly and Disease Detection using Multi-Level Temporal Convolutional Networks. IEEE J. Biomed. Health Inform. 2025, 29, 4834–4846.

12. Wang, X.; Tang, Z.; Guo, J.; Meng, T.; Wang, C.; Wang, T.; Jia, W. Empowering Edge Intelligence: A Comprehensive Survey on On-Device AI Models. ACM Comput. Surv. 2025, 57, 1–39.

13. Mazumder, A.N.; Meng, J.; Rashid, H.-A.; Kallakuri, U.; Zhang, X.; Seo, J.-S. A Survey on the Optimization of Neural Network Accelerators for Micro-AI On-Device Inference. IEEE J. Emerg. Sel. Top. Circuits Syst. 2021, 11, 532–547.

14. ICBHI 2017 Challenge. Available online: https://bhichallenge.med.auth.gr/ICBHI_2017_Challenge (accessed on 21 August 2025).

15. Messner, E.; Fediuk, M.; Swatek, P.; Scheidl, S.; Smolle-Juttner, F.-M.; Olschewski, H.; Pernkopf, F. Multi-Channel Lung Sound Classification with Convolutional Recurrent Neural Networks. Comput. Biol. Med. 2020, 122, 103831.

16. Lella, K.K.; Pja, A. Automatic Diagnosis of COVID-19 Disease using Deep Convolutional Neural Network with Multi-Feature Channel from Respiratory Sound Data: Cough, Voice, and Breath. Alex. Eng. J. 2022, 61, 1319–1334.

17. Basu, V.; Rana, S. Respiratory Diseases Recognition through Respiratory Sound with the Help of Deep Neural Network. In Proceedings of the 2020 4th International Conference on Computational Intelligence and Networks (CINE), Kolkata, India, 27–29 February 2020; pp. 1–6.

18. Bardou, D.; Zhang, K.; Ahmad, S.M. Lung Sounds Classification using Convolution Neural Networks. Artif. Intell. Med. 2018, 88, 58–69.

19. Petmezas, G.; Cheimariotis, G.A.; Stefanopoulos, L.; Rocha, B.; Paiva, R.P.; Katsaggelos, A.K.; Maglaveras, N. Automated Lung Sound Classification using a Hybrid CNN-LSTM Network and Focal Loss Function. Sensors 2022, 22, 1232.

20. Shuvo, S.B.; Ali, S.N.; Swapnil, S.I.; Hasan, T.; Bhuiyan, M.I.H. A Lightweight CNN Model for Detecting Respiratory Diseases from Lung Auscultation Sounds using EMD-CWT-based Hybrid Scalogram. IEEE J. Biomed. Health Inform. 2021, 25, 2595–2603.

21. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

22. Li, J.; Yuan, J.; Wang, H.; Liu, S.; Guo, Q.; Ma, Y.; Li, Y.; Zhao, L.; Wang, G. LungAttn: Advanced Lung Sound Classification using Attention Mechanism with Dual TQWT and Triple STFT Spectrogram. Physiol. Meas. 2021, 42, 105006.

23. Wanasinghe, T.; Bandara, S.; Madusanka, S.; Meedeniya, D.; Bandara, M.; Diez, I.D.L.T. Lung Sound Classification with Multi-Feature Integration Utilizing Lightweight CNN Model. IEEE Access 2024, 12, 21262–21276.

24. Malche, T.; Tharewal, S.; Macheshwary, P. A TinyML Approach to Real-Time Snoring Detection in Resource-Constrained Wearable Devices. Eng. Proc. 2024, 82, 55.

25. Roy, A.; Dutta, H.; Bhuyan, A.K.; Biswas, S. On-Device Semi-Supervised Activity Detection: A New Privacy-Aware Personalized Health Monitoring Approach. Sensors 2024, 24, 4444.

26. Xun, L.; Hare, J.; Merrett, G.V. Dynamic DNNs and Runtime Management for Efficient Inference on Mobile/Embedded Devices. arXiv 2024, arXiv:2401.08965.

27. Srivastava, A.; Jain, S.; Miranda, R.; Patil, S.; Pandya, S.; Kotecha, K. Deep Learning based Respiratory Sound Analysis for Detection of Chronic Obstructive Pulmonary Disease. PeerJ Comput. Sci. 2021, 7, e369.

28. Imambi, S.; Prakash, B.K.; Kanagachidambaresan, G.R. PyTorch. In Programming with TensorFlow: Solution for Edge Computing Applications; Springer: Cham, Switzerland, 2021; pp. 87–104.

29. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

30. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.

31. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2818–2826.

32. Tan, M.; Le, Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

Figure 1. Audio feature images. (a) Mel spectrogram. (b) Chroma. (c) MFCC.

Figure 2. Proposed CNN architecture.

Figure 3. Detail of Inception block in proposed scheme.

Figure 4. Training and loss curves of each scheme. (a) Loss curve of proposed scheme. (b) Training curve of proposed scheme. (c) Loss curve of ResNet50. (d) Training curve of ResNet50. (e) Loss curve of MobileNetV2. (f) Training curve of MobileNetV2. (g) Loss curve of InceptionV2. (h) Training curve of InceptionV2. (i) Loss curve of Stacked. (j) Training curve of Stacked. (k) Loss curve of EfficientNet. (l) Training curve of EfficientNet.

Figure 5. Confusion matrix of each scheme. (a) Proposed scheme. (b) ResNet50. (c) MobileNetV2. (d) InceptionV2. (e) Stacked. (f) EfficientNet.

Figure 6. The module for NPU-based testbed.

Figure 7. Confusion matrix of each scheme in NPU. (a) Proposed scheme. (b) ResNet50. (c) MobileNetV2. (d) InceptionV2. (e) Stacked. (f) EfficientNet.

Table 1. Configuration of ICBHI 2017 dataset.

| | Configuration |
|---|---|
| Number of Data | 920 |
| Audio Length | 10–90 s |
| Recording Device | Electronic Stethoscope |
| Number of Classes | 8 |
| Type of Disease | Asthma, COPD, URTI, Bronchiectasis, Pneumonia, Bronchiolitis, LRTI, and Healthy |
| File Format | .wav |

Table 2. Configuration of KAUH dataset.

| | Configuration |
|---|---|
| Number of Data | 308 |
| Audio Length | 5–30 s |
| Recording Device | Electronic Stethoscope |
| Number of Classes | 7 |
| Type of Disease | Asthma, Heart Failure, Pneumonia, Bronchitis, Pleural Effusion, Lung Fibrosis, and COPD |
| File Format | .wav |

Table 3. Parameter settings for audio data processing.

| Parameters | Setting |
|---|---|
| Sampling Rate | 22,050 Hz |
| Audio Duration | 5 s |
| Maximum dB | 20 dB |
| Num of Mel Filter Bank | 128 |
| Num of MFCC | 40 |

Table 4. Parameter settings for learning.

| Parameters | Setting |
|---|---|
| Learning Rate | 0.0001 |
| Batch Size | 32 |
| Epoch | 120 |
| Activation Function | Softmax |

Table 5. Result of lung sound classification according to depth of layer.

| Model | Accuracy | F1-Score | Precision | Recall |
|---|---|---|---|---|
| 2T | 75.27% | 0.724 | 0.752 | 0.752 |
| 3T | 79.74% | 0.787 | 0.817 | 0.762 |
| 4T | 78.05% | 0.76 | 0.784 | 0.74 |

Table 6. Classification performance according to the audio feature combination.

| Feature Combination | Accuracy | F1-Score | Precision | Recall |
|---|---|---|---|---|
| Mel spectrogram, Chroma, MFCC | 79.74% | 0.787 | 0.817 | 0.762 |
| Chroma, MFCC, Mel spectrogram | 78.31% | 0.771 | 0.786 | 0.759 |
| MFCC, Mel spectrogram, Chroma | 77.63% | 0.758 | 0.776 | 0.735 |

Table 7. Result of lung sound classification.

| Model | Accuracy | F1-Score | Precision | Recall |
|---|---|---|---|---|
| Proposed | 79.68% | 0.789 | 0.809 | 0.759 |
| ResNet50 [29] | 80.59% | 0.798 | 0.801 | 0.798 |
| MobileNetV2 [30] | 55.43% | 0.495 | 0.541 | 0.412 |
| InceptionV2 [31] | 54.48% | 0.427 | 0.493 | 0.41 |
| Stacked [23] | 50.16% | 0.243 | 0.267 | 0.224 |
| EfficientNet [32] | 60.44% | 0.552 | 0.576 | 0.535 |

Table 8. Result of models’ parameters.

| Model | Parameter | Model Size |
|---|---|---|
| Proposed | 264 K | 1.13 MB |
| ResNet50 [29] | 23.53 M | 90 MB |
| MobileNetV2 [30] | 2.24 M | 8.75 MB |
| InceptionV2 [31] | 5.98 M | 22.8 MB |
| Stacked [23] | 0.36 K | 1.4 MB |
| EfficientNet [32] | 2.45 M | 9.55 MB |

Table 9. p-value between proposed scheme and existing schemes.

| ResNet50 | MobileNetV2 | InceptionV2 | Stacked | EfficientNet |
|---|---|---|---|---|
| | | | | |

Table 10. Performance of each scheme in ONNX framework.

| Model | Accuracy | F1-Score | Precision | Recall |
|---|---|---|---|---|
| Proposed | 79.44% | 0.781 | 0.799 | 0.765 |
| ResNet50 [29] | 80.42% | 0.781 | 0.788 | 0.78 |
| MobileNetV2 [30] | 55.24% | 0.484 | 0.522 | 0.397 |
| InceptionV2 [31] | 54.36% | 0.415 | 0.477 | 0.392 |
| Stacked [23] | 50.02% | 0.228 | 0.256 | 0.204 |
| EfficientNet [32] | 60.26% | 0.534 | 0.562 | 0.523 |

Table 11. Result of lung sound classification in NPU.

| Model | Accuracy | F1-Score | Precision | Recall |
|---|---|---|---|---|
| Proposed | 89.46% | 0.87 | 0.89 | 0.87 |
| ResNet50 [29] | 89.41% | 0.89 | 0.88 | 0.87 |
| MobileNetV2 [30] | 71.89% | 0.66 | 0.73 | 0.66 |
| InceptionV2 [31] | 47.74% | 0.42 | 0.41 | 0.36 |
| Stacked [23] | 55.95% | 0.28 | 0.24 | 0.32 |
| EfficientNet [32] | 40.63% | 0.273 | 0.45 | 0.265 |

Table 12. Result of inference time and power consumption in NPU.

| Metric | Proposed | ResNet50 | MobileNetV2 | InceptionV2 | Stacked | EfficientNet |
|---|---|---|---|---|---|---|
| Average | 245.9 ms | 269.77 ms | 150.27 ms | 166.43 ms | 157.74 ms | 166.84 ms |
| Standard Deviation | 197 ms | 186 ms | 241 ms | 260 ms | 271 ms | 264 ms |
| Power Consumption | 4.6 W | 3.8 W | 5.55 W | 4.95 W | 5.1 W | 4.45 W |

Table 13. Memory usage of each scheme in NPU.

| Memory | Proposed | ResNet50 | MobileNetV2 | InceptionV2 | Stacked | EfficientNet |
|---|---|---|---|---|---|---|
| RAM | 60.3 MB | 150.4 MB | 15.1 MB | 50.6 MB | 20.7 MB | 20.2 MB |

Table 14. p-value between proposed scheme and existing schemes in NPU.

| ResNet50 | MobileNetV2 | InceptionV2 | Stacked | EfficientNet |
|---|---|---|---|---|
| | | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).