A Review on Sound Source Localization in Robotics: Focusing on Deep Learning Methods

Abstract

1. Introduction

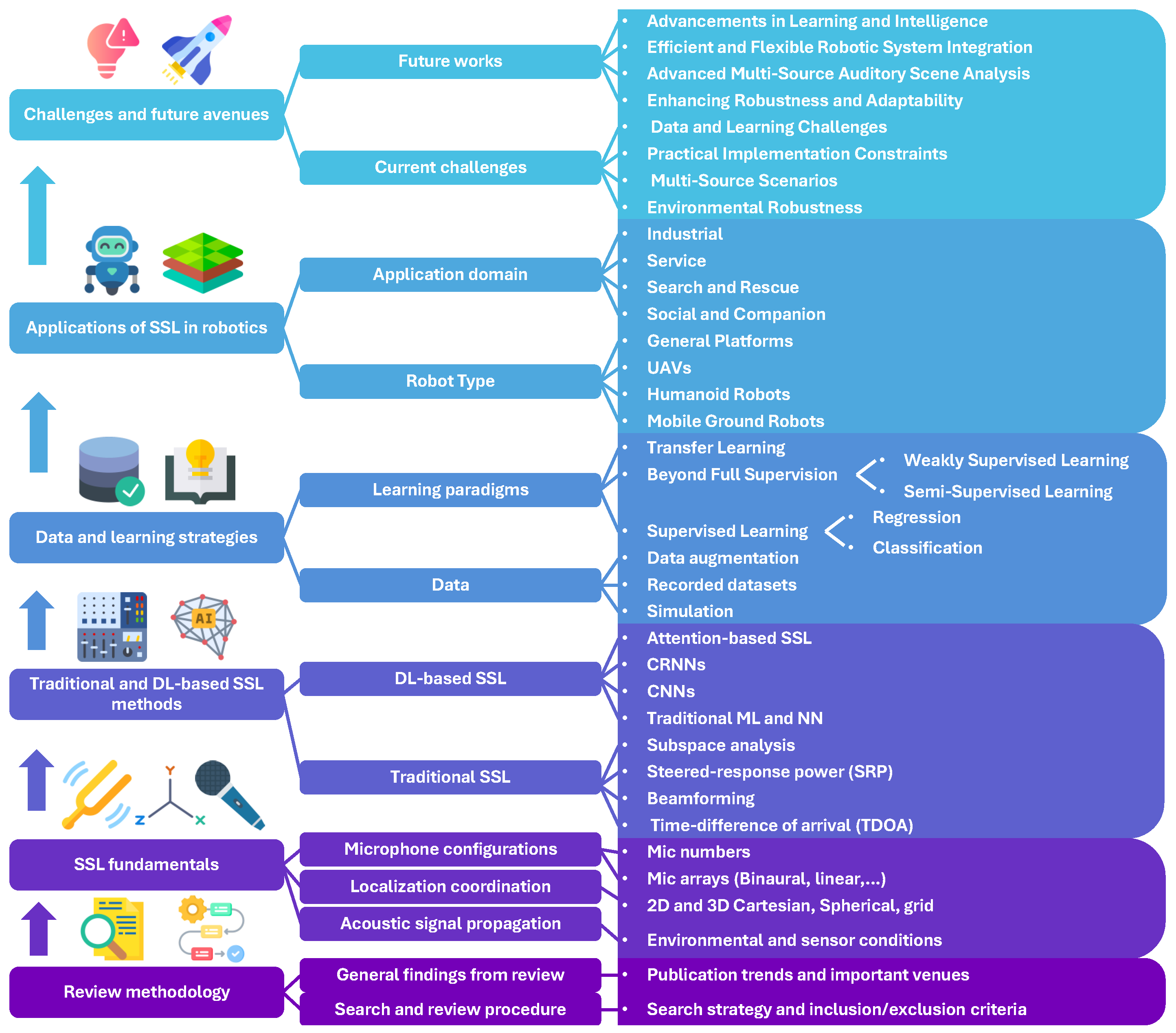

2. Review Methodology

2.1. Literature Search Strategy

2.2. Inclusion and Exclusion Criteria

- Peer-reviewed publications, encompassing journal articles, full conference papers, or workshop papers with archival proceedings.

- Published between 1 January 2013 and 1 May 2025, a window that captures the significant surge of deep learning applications in robotics.

- Relevant to a robotic context, meaning the work either (i) evaluated SSL on a physical or simulated robot, or (ii) explicitly targeted a specific robotic use-case (e.g., service, industrial, aerial, field, or social human–robot interaction). Some studies that have not directly implemented SSL on robotic platforms, such as simulations and conceptual frameworks, were also included if their findings were directly and explicitly transferable to the robotic context.

- Written in English.

2.3. Publication Trends and Venues

3. SSL Fundamentals

3.1. Acoustic Signal Propagation

- The synchronization error arises from imperfect clock synchronization between microphones or channels.

- The propagation speed error is caused by variations in the speed of sound due to changes in temperature, humidity, or wind.

- The reverberation error results from multipath propagation, which causes the direct sound to be masked or delayed by reflections.

- The signal-to-noise error stems from ambient noise and sensor imperfections.

- Free-field propagation: The assumption of a free-field environment (anechoic) is rarely valid in indoor robotics. As a robot navigates an office, factory, or home, reverberation artifacts are the most significant source of error. The direct path signal is often overshadowed by reflections, leading to inaccurate TDOA or phase-based estimates. To mitigate this, advanced methods should learn robust features that are invariant to reverberation.

- Constant propagation speed: The speed of sound is assumed to be a constant 343 m/s. However, temperature and wind gradients in a real environment can cause this assumption to fail. While less impactful than reverberation in most indoor settings, spatial variations in temperature or air movement can introduce a non-negligible propagation speed error. For precision applications or outdoor robots, the assumed speed of sound should be corrected dynamically using temperature and wind measurements.

- Point source model: The assumption that the sound originates from a single point is valid for small sources at a distance, but it fails for extended sound sources (e.g., a person speaking, a large machine). The phase and amplitude can vary across the source, which can confuse DOA algorithms that rely on simple time or phase differences.
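The effect of the constant-speed assumption can be illustrated with a small numerical sketch. It is not taken from the reviewed works: it assumes the standard dry-air approximation for the speed of sound, a far-field source, and a hypothetical two-microphone pair with 0.2 m spacing. The function names `speed_of_sound` and `far_field_doa_deg` are illustrative.

```python
import math


def speed_of_sound(temp_c: float) -> float:
    """Approximate speed of sound in dry air (m/s) at temp_c degrees Celsius."""
    return 331.3 * math.sqrt(1.0 + temp_c / 273.15)


def far_field_doa_deg(tdoa_s: float, mic_distance_m: float, c: float) -> float:
    """Angle from broadside (degrees) for a two-microphone pair, far-field model."""
    # Clamp to [-1, 1] so that noisy TDOAs never push asin out of its domain.
    s = max(-1.0, min(1.0, c * tdoa_s / mic_distance_m))
    return math.degrees(math.asin(s))


# Hypothetical scenario: source at 60 degrees, air temperature 35 degrees Celsius.
mic_distance = 0.2
true_angle = 60.0
c_true = speed_of_sound(35.0)
tdoa = mic_distance * math.sin(math.radians(true_angle)) / c_true

estimate_fixed = far_field_doa_deg(tdoa, mic_distance, 343.0)       # constant-speed assumption
estimate_corrected = far_field_doa_deg(tdoa, mic_distance, c_true)  # temperature-corrected

print(f"fixed c: {estimate_fixed:.2f} deg, corrected c: {estimate_corrected:.2f} deg")
```

Under these assumptions, using the fixed 343 m/s value biases the estimated angle, while the temperature-corrected value recovers it. Wind and humidity are not modeled here.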

3.2. Coordinate Systems and Terminology

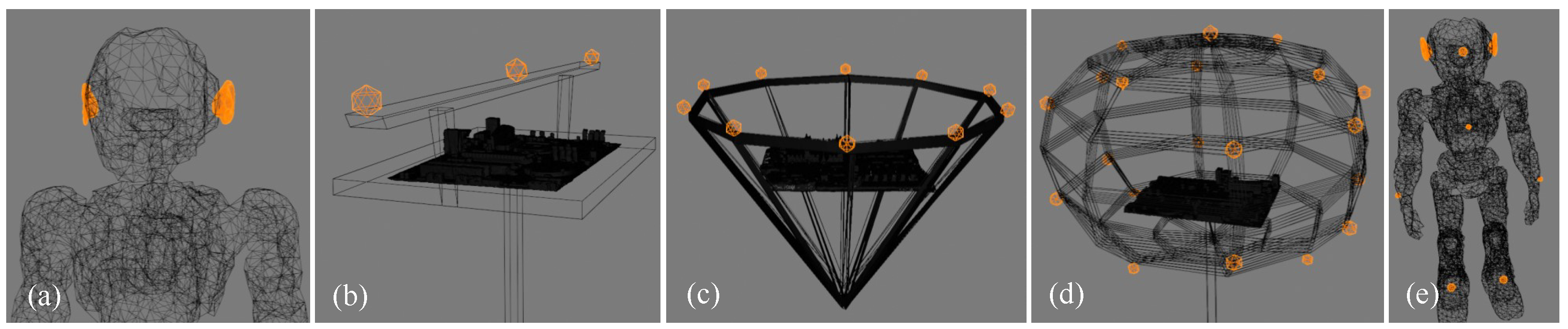

3.3. Microphone Numbers and Array Configurations

4. From Traditional SSL to DL Models

4.1. Traditional Sound Source Localization Methods

4.1.1. Time Difference of Arrival (TDOA)

4.1.2. Beamforming

4.1.3. Steered-Response Power (SRP)

4.1.4. Subspace Analysis

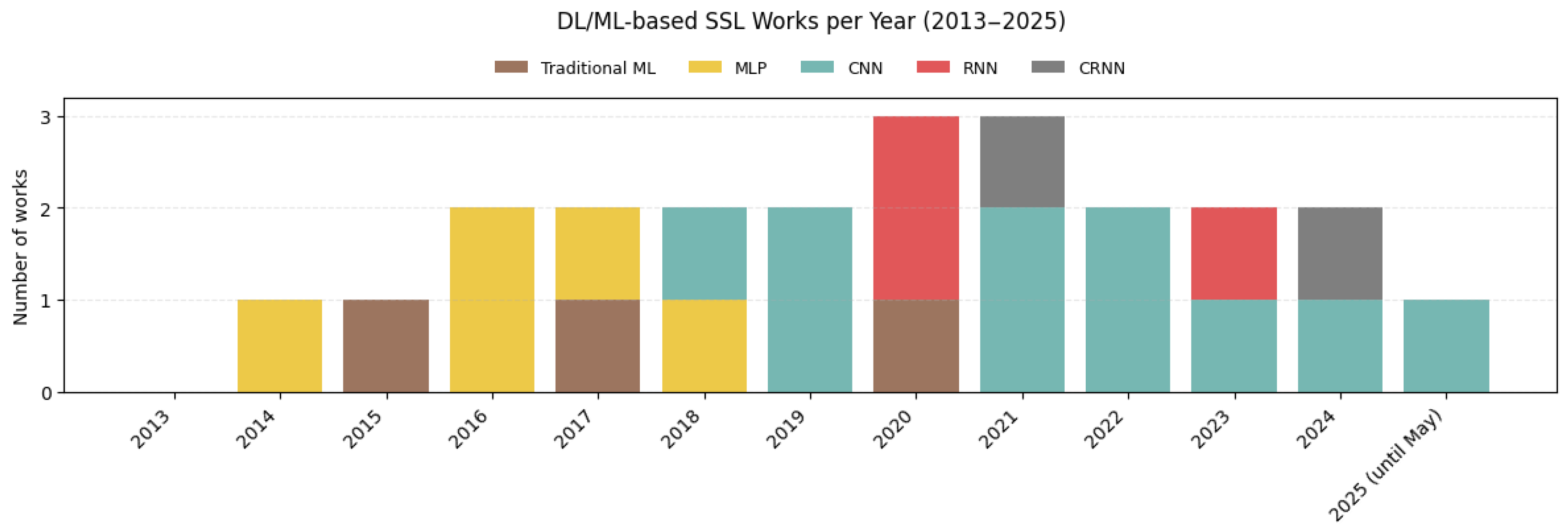

4.2. DL-Based SSL

4.2.1. Traditional Machine Learning and Neural Networks

4.2.2. Convolutional Neural Networks (CNNs)

4.2.3. Convolutional Recurrent Neural Networks (CRNNs)

4.2.4. Attention-Based SSL

5. Data and Learning Strategies

5.1. Data

5.2. Learning Paradigms

- Classification: Used when the sound source’s location is discretized into angular bins (e.g., five-degree sectors around the robot). Here, a softmax activation function is often used at the output, and the model minimizes the categorical cross-entropy loss. This is suitable for tasks where a robot needs to identify which general direction a sound is coming from [2].

- Regression: Used when the goal is to predict continuous values for the sound source’s azimuth, elevation, or 3D Cartesian coordinates. In this case, the mean squared error (MSE) is the most common choice for the cost function [222]. This enables robots to pinpoint locations with greater precision. While MSE is prevalent, other metrics such as the angular error or the L1-norm are sometimes employed to capture specific aspects of localization accuracy [223].

- Semi-supervised learning: This approach combines both labeled and unlabeled data for training [23]. The core idea is to perform part of the learning in a supervised manner (using available labels) and another part in an unsupervised manner (learning from unlabeled data) [224]. For robotics, this is highly valuable because robots can continuously collect vast amounts of unlabeled audio data during their operation. Semi-supervised learning methods can fine-tune a network pre-trained on labeled data (often simulated data), adapting it to real-world conditions without requiring exhaustive manual labeling. Techniques such as minimizing overall entropy (e.g., SSL work by Takeda et al. [225]) or employing generative models (e.g., SSL work by Bianco et al. [226]) that learn underlying data distributions have been proposed. Adversarial training (e.g., SSL work by Le Moing et al. [227]) is another example, where a discriminator network tries to distinguish real from simulated data, while the SSL network learns to “fool” it by producing realistic outputs from simulated inputs, thus adapting to real-world acoustic characteristics. This allows a robot to refine its SSL capabilities based on its own experiences in the operational environment, even if precise ground truth is unavailable.

- Weakly supervised learning: In this paradigm, the training data come with “weak” or imprecise labels rather than detailed ground truth, a situation that arises in many domains where labeling is costly or challenging [228]. For SSL, this might mean only knowing the number of sound sources present, or having a rough idea of their general location without the exact coordinates. Models are designed with specialized cost functions that can account for these less precise labels. For instance, He et al. [229] fine-tuned networks using weak labels representing the known number of sources, which helped regularize predictions. Other approaches, like those using triplet loss functions by Opochinsky et al. [230], involve training the network to correctly infer the relative positions of sound sources (e.g., that one source is closer than another), even if their absolute coordinates are not provided. For robotics, weakly supervised learning offers a pragmatic solution when collecting precise ground truth labels is impractical, allowing robots to learn from more easily obtainable, albeit less granular, supervisory signals. This means a robot could learn to localize effectively just by being told, for example, “there is a sound coming from that general direction” rather than requiring exact coordinates, making deployment and ongoing learning more feasible.
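The output formulations listed above can be made concrete with a minimal, framework-free sketch. It assumes five-degree azimuth bins (72 classes) and uses illustrative function names. The entropy function only shows the kind of quantity that entropy-minimization schemes reduce on unlabeled data; it is not the specific method of any cited work.

```python
import math


def azimuth_to_bin(azimuth_deg: float, bin_width_deg: float = 5.0) -> int:
    """Map an azimuth in degrees to a class index (classification target)."""
    n_bins = int(round(360.0 / bin_width_deg))
    return int((azimuth_deg % 360.0) // bin_width_deg) % n_bins


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, target_bin: int) -> float:
    """Categorical cross-entropy for a single one-hot target."""
    return -math.log(max(probs[target_bin], 1e-12))


def angular_error_deg(pred_deg: float, true_deg: float) -> float:
    """Absolute angular error with wrap-around, so 359 vs. 1 gives 2 degrees."""
    return abs((pred_deg - true_deg + 180.0) % 360.0 - 180.0)


def prediction_entropy(probs) -> float:
    """Entropy of the predicted distribution; low values mean confident outputs."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


# Classification view: a network output peaked on the correct bin gives a low loss.
logits = [0.0] * 72
logits[azimuth_to_bin(12.0)] = 5.0
probs = softmax(logits)
print("cross-entropy:", cross_entropy(probs, azimuth_to_bin(12.0)))
print("entropy:", prediction_entropy(probs))

# Regression view: a plain squared difference on raw angles ignores wrap-around.
print("angular error:", angular_error_deg(359.0, 1.0))
```

The wrap-around in `angular_error_deg` is one reason an angular metric is sometimes preferred over MSE on raw angles: a squared difference would treat 359° and 1° as far apart.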

6. Applications of SSL in Robotics

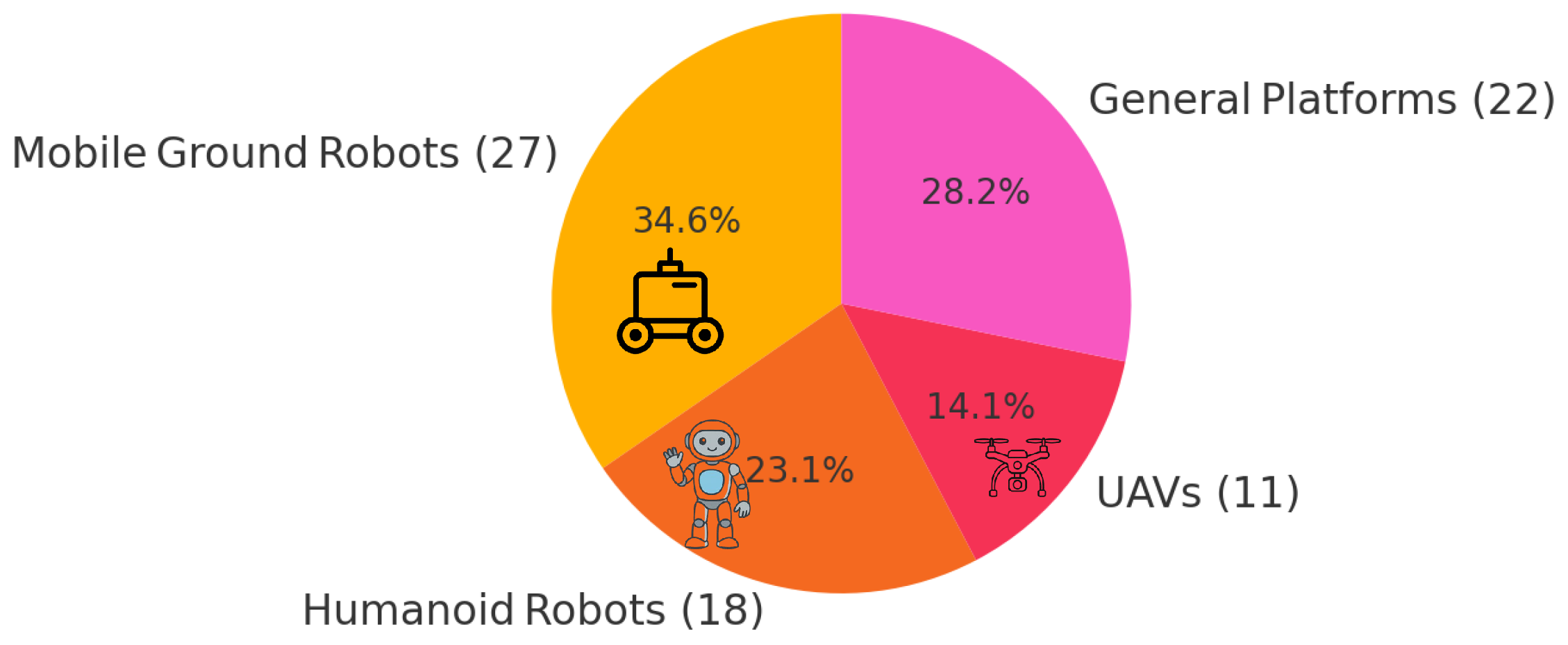

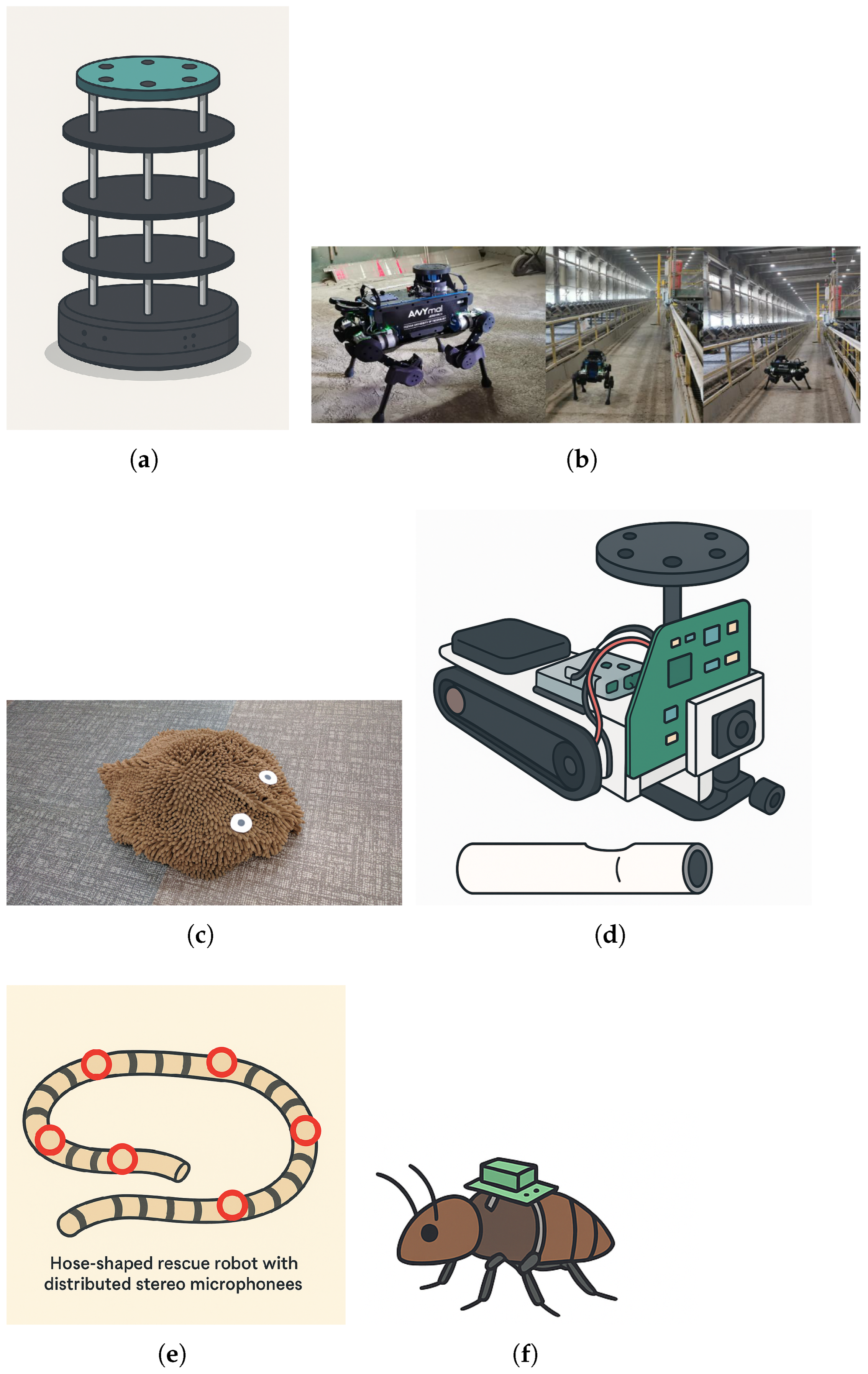

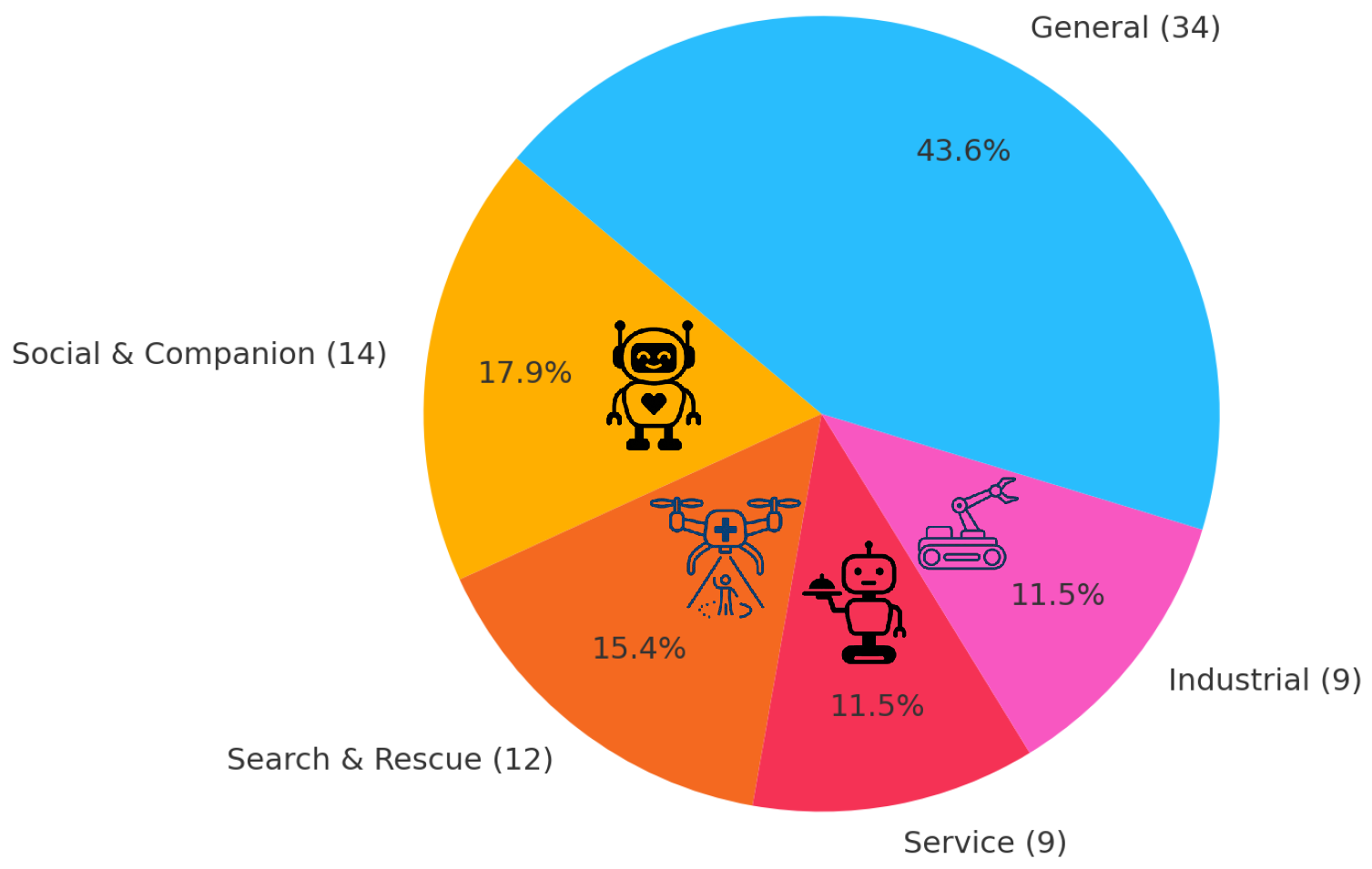

6.1. Categorization by Robot Type

- Acoustic navigation and mapping: Mobile robots can utilize SSL to identify and localize fixed sound sources (e.g., specific machinery hums in an industrial setting, ventilation systems, or public address announcements) as acoustic landmarks for simultaneous localization and mapping (SLAM) or precise navigation in GPS-denied or visually ambiguous areas.

- Hazard detection and avoidance: For service robots in public spaces or industrial robots on factory floors, SSL enables the early detection and localization of unexpected or dangerous sounds (e.g., a car horn, breaking glass, a falling object, abnormal machinery sounds). This facilitates proactive collision avoidance or emergency response.

- Sound-guided exploration and search: In search and rescue scenarios (e.g., navigating collapsed buildings or smoke-filled areas), mobile robots can rely on SSL to precisely locate sounds like human voices or whistles, effectively guiding their exploration towards potential survivors.

- Security and surveillance: Mobile robots can patrol large areas, employing SSL to detect and track intruders or suspicious acoustic events (e.g., footsteps, suspicious voices) in various service or security contexts.

- Natural human–robot interaction (HRI): Localizing the direction of human speech allows humanoids to naturally orient their head and gaze towards a speaker, facilitating engaging conversations and conveying attentiveness. This is crucial for social and companion robots aiming to build rapport with users.

- Multi-speaker tracking: In dynamic social settings, humanoids can employ advanced SSL techniques (e.g., using deep learning or subspace methods) to identify and track multiple simultaneous speakers, enabling complex multi-party conversations and selective listening.

- Enhanced situational awareness: Beyond direct interaction, SSL enables humanoids to detect and localize various environmental sounds, such as alarms, doorbells, or footsteps, contributing to their overall understanding of the surrounding service environment.

- Remote monitoring and surveillance: UAVs equipped with SSL can perform long-range acoustic monitoring for environmental applications (e.g., detecting illegal logging, tracking wildlife based on vocalizations) or security tasks (e.g., localizing gunshots or human activity over large, inaccessible terrains).

- Search and rescue in challenging terrains: In search and rescue operations over vast or difficult-to-traverse areas (e.g., dense forests, mountainous regions), UAVs can use SSL to pinpoint distress calls or specific human sounds, directing ground teams more efficiently.

- Hazard identification: Detecting and localizing critical sounds like explosions, gas leaks (through associated sounds), or structural failures from a safe distance in industrial or disaster zones.

- Traffic monitoring: In service or urban planning contexts, UAVs can localize vehicles based on their sounds, contributing to traffic flow analysis.

6.2. Categorization by Application Domain

7. Challenges and Future Avenues

7.1. Current Challenges

7.1.1. Environmental Robustness

- Extreme reverberation: In large indoor spaces with reflective surfaces, extreme reverberation severely degrades a robot’s ability to precisely map its acoustic surroundings. It remains a significant obstacle to SSL accuracy in multi-source and noisy environments because sound reflections confuse the robot’s directional cues. In highly reflective environments, multipath propagation distorts the inter-microphone cross-correlation, producing spurious TDOA peaks and front–back/elevation ambiguities for linear or near-planar arrays. Classical SRP-PHAT and MUSIC degrade as the direct-path coherence drops, while DL-based models trained on mild reverberation overfit to room responses and suffer under unseen room geometries. This degradation has been empirically confirmed; for example, Keyrouz [55] reported significant performance drops in extreme reverberation for both ML-based methods and, more severely, for SRP-PHAT-based SSL.

- Different noise types: Real-world scenarios are characterized by complex, unpredictable background noise, such as human chatter, machinery, or general environmental sounds, which can mask critical acoustic cues. Furthermore, a robot’s own self-generated noise (ego-noise) from motors, actuators, and movement significantly complicates its internal auditory focus. Although many studies have accounted for ego-noise in their experiments and datasets [37], handling multiple noise types and their cumulative effect in realistic applications is still a challenge. The microphones’ internal noise (caused by sensor imperfections [72]) adds further complexity and should also be addressed, since microphones do not always work perfectly. To the best of our knowledge, no robotic study has yet systematically and comprehensively examined the simultaneous presence of multiple noise types during experiments. Nevertheless, prior work has demonstrated that high-noise conditions (SNR below 0 dB) considerably impair localization accuracy in both traditional SSL methods (e.g., TDOA and subspace approaches [103]) and DL-based models (e.g., MLPs and, to a lesser degree, CNNs [161]). The cumulative effects of diverse and concurrent noise sources therefore remain largely unaddressed in the literature, representing an important gap for realistic robotic applications.

- Dynamic acoustic conditions and unstructured environments: As robots navigate, acoustic conditions continuously change due to varying listener positions relative to sources and reflections. Even under controlled experimental conditions, source–listener distance alone can significantly influence localization and tracking accuracy. This effect has been reported for both ML/DL-based methods (e.g., MLP [30], LSTM [168]) and traditional SSL approaches [51]. When combined with changes in acoustic conditions, such variations present even greater challenges. Despite their practical relevance, these combined effects have not yet been analyzed comprehensively in robotic experiments. Adapting to these dynamic changes without requiring re-calibration or extensive retraining presents a significant challenge for both traditional and deep learning methods. Moreover, extending SSL approaches to outdoor (especially for UAVs) or highly unstructured environments introduces additional complexities related to wind, varying atmospheric conditions, and unpredictable sound propagation.
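The sensitivity of cross-correlation-based TDOA estimation to reflections and noise can be explored with a short NumPy sketch of generalized cross-correlation with phase transform (GCC-PHAT). Everything in it is an illustrative assumption rather than a setup from the reviewed studies: the signal is white noise, the "room" is a single artificial reflection, and the helper names (`delay_samples`, `add_noise_at_snr`) are hypothetical.

```python
import numpy as np


def gcc_phat(sig, ref, fs, max_tau=None):
    """Estimate the delay of `sig` relative to `ref` (seconds) with GCC-PHAT."""
    n = sig.shape[0] + ref.shape[0]          # zero-pad to avoid circular wrap-around
    cross = np.fft.rfft(sig, n=n) * np.conj(np.fft.rfft(ref, n=n))
    cross /= np.abs(cross) + 1e-12           # PHAT weighting: keep phase only
    cc = np.fft.irfft(cross, n=n)
    max_shift = n // 2
    if max_tau is not None:
        max_shift = max(1, min(int(fs * max_tau), max_shift))
    # Reorder so that index 0 corresponds to lag -max_shift.
    cc = np.concatenate((cc[-max_shift:], cc[:max_shift + 1]))
    shift = int(np.argmax(np.abs(cc))) - max_shift
    return shift / fs, cc


def delay_samples(x, k):
    """Delay a signal by k > 0 samples, keeping its length."""
    return np.concatenate((np.zeros(k), x[:-k]))


def add_noise_at_snr(x, snr_db, rng):
    """Add white Gaussian noise scaled to a target SNR in dB."""
    noise = rng.standard_normal(x.shape[0])
    target_power = np.mean(x ** 2) / (10.0 ** (snr_db / 10.0))
    noise *= np.sqrt(target_power / np.mean(noise ** 2))
    return x + noise


fs = 16000
rng = np.random.default_rng(0)
source = rng.standard_normal(4096)

mic1 = source
mic2_clean = delay_samples(source, 12)                          # direct path only
mic2_reverb = mic2_clean + 0.8 * delay_samples(source, 40)      # plus one strong reflection
mic2_noisy = add_noise_at_snr(mic2_reverb, -5.0, rng)           # plus noise at -5 dB SNR

for name, mic2 in [("clean", mic2_clean), ("reverb", mic2_reverb), ("noisy", mic2_noisy)]:
    tau, _ = gcc_phat(mic2, mic1, fs, max_tau=0.005)
    print(f"{name}: estimated delay = {tau * fs:.0f} samples")
```

Inspecting the returned correlation `cc` in the reverberant case shows a secondary peak at the reflection lag, the kind of spurious TDOA candidate described above. A real room adds many such peaks, which this single-reflection toy does not capture.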

7.1.2. Multi-Source Scenarios

- Source separation vs. localization interdependence: A fundamental dilemma for a robot’s auditory processing pipeline is the interdependency between source separation and localization. The effective separation of individual sound streams often requires prior knowledge of source locations, while accurate localization may require separated or enhanced source signals, creating a “chicken-and-egg” problem for the robot’s acoustic intelligence. In this regard, some previous SSL studies implemented separate DL models for sound counting, classification and localization tasks [143], while some did all within one model [46].

- Overlapping sources: When multiple sound sources extensively overlap in time and frequency, it profoundly challenges a robot’s ability to differentiate and pinpoint distinct acoustic events. This is particularly difficult for speech sources with similar spectral characteristics or when multiple human speakers are closely spaced (a cocktail party scenario [54]), affecting a robot’s ability to focus on a specific speaker. Performance degradation in the presence of multiple simultaneous sound-emitting sources has been observed in practice. For instance, Yamada et al. [52] reported increased localization errors when two sources emitted sounds simultaneously using a traditional SSL method (MUSIC). Similarly, Jalayer et al. [72] documented reduced accuracy for a DL-based method (ConvLSTM) when multiple sound sources overlapped in time, underscoring the persistence of this challenge across methodological paradigms.

- Variable source numbers: Real-world scenarios involve a fluctuating number of active sound sources over time. Developing robotic auditory systems that can dynamically adapt to changing numbers of sources without explicit prior knowledge remains a complex task, requiring flexible and scalable processing architectures.

- Source tracking and identity maintenance: Maintaining consistent identity tracking of multiple moving sound sources over extended periods [56], especially through instances of silence, occlusion, or signal degradation, presents significant difficulties that current robotic audition methods have not fully resolved [23]. This capability is essential for a robot to maintain situational awareness and interact intelligently with dynamic entities.
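To make the identity-maintenance problem concrete, the following sketch shows one very simple baseline: greedy nearest-neighbor association of new azimuth detections to existing tracks, with an angular gate. It is an illustrative assumption, not a method from the cited works; the function names and the 15-degree gate are hypothetical. Tracks without a matching detection simply keep their last azimuth, which is a crude way of coasting through short silences.

```python
def wrap_deg(angle_deg: float) -> float:
    """Wrap an angle difference into [-180, 180) degrees."""
    return (angle_deg + 180.0) % 360.0 - 180.0


def associate(tracks, detections, gate_deg: float = 15.0):
    """Greedily assign detections (azimuths) to tracks {id: azimuth}.

    Returns the updated tracks and the detections that matched no track
    (candidates for new sources).
    """
    candidates = sorted(
        (abs(wrap_deg(det - az)), track_id, det_idx)
        for track_id, az in tracks.items()
        for det_idx, det in enumerate(detections)
    )
    updated = dict(tracks)
    used_tracks, used_dets = set(), set()
    for dist, track_id, det_idx in candidates:
        if dist > gate_deg:
            break  # all remaining pairs are even farther apart
        if track_id in used_tracks or det_idx in used_dets:
            continue
        updated[track_id] = detections[det_idx] % 360.0
        used_tracks.add(track_id)
        used_dets.add(det_idx)
    unmatched = [d for i, d in enumerate(detections) if i not in used_dets]
    return updated, unmatched


tracks = {"A": 350.0, "B": 90.0}
updated, unmatched = associate(tracks, [95.0, 2.0, 200.0])
print(updated, unmatched)
```

Such a baseline breaks down precisely in the cases named above (crossing sources, long silences, occlusion), which is why more elaborate tracking remains an open problem.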

7.1.3. Practical Implementation Constraints

- Computational efficiency and power consumption: Real-time processing requirements coupled with the limited computational resources and strict power budgets on many robotic platforms directly impact a robot’s operational endurance and real-time responsiveness. There is a growing interest in “energy-efficient wake-up technologies” [238] for SSL, as highlighted by Khan et al. [28], to enable long-duration missions for battery-powered robots by minimizing energy expenditure on continuous acoustic monitoring.

- Microphone array limitations and flexibility: Physical constraints on microphone placement, quality, and array geometry across diverse robotic platforms (e.g., humanoid heads, mobile robot chassis, UAVs) impose inherent sensory limitations on a robot’s acoustic perception and can significantly impact SSL performance. The type of input features and microphone array configurations heavily influence the effectiveness of deep learning approaches, often requiring model retraining for different robot setups. Moreover, supporting dynamic microphone array configurations (e.g., on articulated or reconfigurable robots) remains a challenge.

- Calibration and maintenance: Ensuring consistent acoustic performance over a robot’s operational lifespan demands robust self-calibration routines within its perceptual architecture to address issues such as microphone drift, physical damage, or changes in robot configuration that may affect the acoustic properties of the system. This is crucial for maintaining the integrity of the robot’s auditory data.

7.1.4. Data and Learning Challenges

- Limited Training Data and Annotation Complexity: Collecting diverse, high-quality labeled acoustic data for SSL is inherently time-consuming and expensive for robotic applications. Unlike vision datasets (where images can often be manually labeled), obtaining the true direction or position of a sound source at each time step requires specialized equipment (e.g., motion tracking systems as used in LOCATA [213]) or careful calibration with known source positions. This becomes even harder if either the sound source or the robot (or both) are moving. As a result, truly comprehensive spatial audio datasets for robotics are scarce. Furthermore, a significant impediment is that most studies do not share their collected data, hindering broader research progress and comparative analysis.

- Lack of comprehensive benchmarks in SSL: Despite some efforts (e.g., DCASE challenges in the last decade [204]), there is a notable lack of comprehensive, widely adopted benchmark datasets (like ImageNet in visual object recognition) in the field of SSL. This absence makes it difficult for researchers to uniformly test and compare the performance of different models, impeding standardized evaluation and progress towards common goals.

- Sim-to-real gap and generalization: While simulated environments, such as Pyroomacoustics [196] and ROOMSIM [197,198], can generate large amounts of training data, bridging the fidelity gap between simulated and real-world acoustics remains a critical barrier to a robot’s transition from simulated training to autonomous real-world operation. Consequently, models trained on specific environments or conditions often fail to generalize robustly to new, unseen scenarios, limiting their real-world applicability for robotic deployment.

- Interpretability: The “black box” nature of many deep learning models complicates debugging, understanding their decision-making processes, and ensuring their reliability. This lack of interpretability can be particularly problematic for safety-critical robotics applications (e.g., rescue robots [36] or condition monitoring robots [6]), where explainability and a human operator’s trust in the robot’s auditory decisions are paramount.

- Accurate 3D localization and distance estimation: While 2D direction of arrival (DoA) is commonly addressed, accurately estimating the distance to a sound source, especially in reverberant environments, remains a more complex task for a robot’s spatial awareness. As highlighted by Rascon et al. [1], reliable 3D localization (azimuth, elevation, and distance) with high resolution is essential for a robot’s precise navigation, manipulation, and interaction within a volumetric space, but it is not yet consistently achievable in real-time.
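The relation between a full 3D estimate (azimuth, elevation, distance) and a Cartesian source position can be sketched as follows. The convention is an assumption made for this example only: azimuth is measured counterclockwise from the x-axis in the horizontal plane, and elevation is measured upward from that plane. Individual studies may use different conventions.

```python
import math


def spherical_to_cartesian(azimuth_deg: float, elevation_deg: float, distance_m: float):
    """Convert (azimuth, elevation, distance) to (x, y, z) in meters."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = distance_m * math.cos(el) * math.cos(az)
    y = distance_m * math.cos(el) * math.sin(az)
    z = distance_m * math.sin(el)
    return x, y, z


def cartesian_to_spherical(x: float, y: float, z: float):
    """Convert (x, y, z) back to (azimuth in [0, 360), elevation, distance)."""
    distance = math.sqrt(x * x + y * y + z * z)
    azimuth = math.degrees(math.atan2(y, x)) % 360.0
    elevation = math.degrees(math.asin(z / distance)) if distance > 0.0 else 0.0
    return azimuth, elevation, distance


# A DoA-only system provides azimuth and elevation; without the distance,
# the source is only constrained to a ray from the array, not a point.
position = spherical_to_cartesian(30.0, 10.0, 2.5)
print(position, cartesian_to_spherical(*position))
```

The sketch highlights why distance matters: the same azimuth and elevation map to every point along a ray until the range is known.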

7.2. Future Opportunities and Avenues

7.2.1. Enhancing Robustness and Adaptability in Robot Audition

- Adaptive noise and reverberation suppression: Developing advanced signal processing and deep learning techniques that can adaptively suppress diverse non-stationary noise and mitigate extreme reverberation in real-time. This includes research into robust ego-noise cancellation specific to robotic platforms, ensuring that a robot can maintain auditory focus even during rapid movement or noisy operations.

- Outdoor and unstructured acoustic modeling: Expanding SSL research beyond the controlled indoor environments of the labs to truly unstructured outdoor settings. This involves developing new models for sound propagation in open air, by introducing novel outdoor acoustic simulators, accounting for environmental factors like wind, and leveraging multi-modal fusion with visual or inertial sensors to enhance robustness where audio cues might be ambiguous.

- Dynamic acoustic scene understanding: Moving beyond static snapshot localization to the continuous, real-time comprehension of evolving acoustic scenes. This includes rapid adaptation to changing sound source characteristics, varying background noise, and dynamic room acoustics as the robot moves, fostering a more fluid and context-aware auditory perception.

7.2.2. Advanced Multi-Source Auditory Scene Analysis

- Integrated sound event localization and detection (SELD) with source separation: Developing holistic systems that simultaneously detect, localize, and separate multiple overlapping sound events. This integrated approach, often framed as a multi-task learning problem, promises to break the current “chicken-and-egg” dilemma between separation and localization, allowing robots to extract distinct auditory streams for specific analysis or interaction.

- Robust multi-object acoustic tracking: Advancing algorithms for the reliable and persistent tracking of multiple moving sound sources, including handling occlusions, disappearances, and re-appearances. This is crucial for collaborative robots interacting with multiple humans or for inspection robots monitoring various machinery components in parallel.

- Dynamic source counting and characterization: Equipping robots with the ability to dynamically estimate the number of active sound sources in real-time, along with their types (e.g., speech, machinery, alarms). This capability enhances a robot’s contextual awareness, allowing it to prioritize relevant acoustic information within a complex environment.

7.2.3. Efficient and Flexible Robotic System Integration

- Lightweight and energy-efficient deep learning models: Designing novel, compact deep learning architectures and employing techniques like model quantization, pruning, and knowledge distillation tailored for execution on resource-constrained edge AI platforms. In addition, advancements in power-saving techniques (e.g., the wake-up strategy described in the survey by Khan et al. [28]) promise to keep the auditory system off until an acoustic event of interest occurs, allowing the robot to reserve precious energy for localization and task execution. This will enable robots to perform complex SSL tasks while maintaining long operational durations and reducing power consumption.

- Flexible and self-calibrating microphone arrays: Investigating SSL methods that are robust to variations in microphone array geometry, supporting dynamic reconfigurations inherent to mobile or articulated robots. Furthermore, developing autonomous, self-calibrating routines for microphone arrays will reduce deployment complexity and ensure consistent performance over a robot’s lifespan, compensating for sensor drift or minor physical changes. Techniques like non-synchronous measurement (NSM) technology, which allows a small moving microphone array to emulate a larger static one, offer promising avenues for achieving high-resolution localization with fewer microphones while managing the challenges of dynamic array configurations [6].

- Hardware-software co-design for robot audition: Fostering a holistic approach where microphone array design, sensor placement on the robot, and signal processing algorithms are jointly optimized. This co-design can lead to highly efficient and purpose-built auditory systems that maximize performance within a robot’s physical and computational constraints.
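As a rough illustration of what model quantization means in the first item above, the sketch below applies symmetric per-tensor 8-bit quantization to a list of weights and measures the round-trip error. It is a toy under stated assumptions (plain Python lists, one scale per tensor, hypothetical function names) and does not reflect any particular deployment toolchain.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0.0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map the integer codes back to approximate float weights."""
    return [q * scale for q in quantized]


weights = [0.42, -1.30, 0.07, 0.95, -0.58]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, scale, max_error)
```

Each weight is stored in one byte instead of four, and the reconstruction error is bounded by half the quantization step. Whether localization accuracy survives this loss of precision has to be checked per model.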

7.2.4. Advancements in Data-Driven Learning and Robot Intelligence

- Large-scale, diverse, and shared datasets: A critical opportunity is the creation and broad dissemination of large-scale, diverse, and meticulously annotated benchmark datasets specifically for robotic SSL. These datasets should include challenging real-world scenarios, diverse robot platforms, varying microphone array configurations (including dynamic ones), and precise ground truth for moving sources and robots. Initiatives to encourage data sharing across research institutions are vital to foster collaborative progress and provide standardized benchmarks for model comparison.

- Unsupervised, self-supervised, and reinforcement learning for SSL: Exploring learning paradigms that minimize the reliance on labor-intensive labeled data. This includes techniques that allow robots to learn spatial acoustic features from vast amounts of unlabeled audio data gathered during operation, as well as reinforcement learning approaches where a robot can optimize its SSL performance through interaction with its environment [23,27].

- Bridging the sim-to-real gap with domain adaptation: Developing robust domain adaptation and transfer learning techniques to effectively transfer knowledge from simulated acoustic environments to real-world robotic deployment. This will involve more sophisticated acoustic simulations that accurately model complex reverberation and noise, coupled with techniques that make deep learning models more resilient to the inevitable discrepancies between simulated and real data [234].

- Foundation models for semantic interpretation: A promising avenue involves integrating large language models (LLMs) into the robot’s auditory processing pipeline, particularly after successful sound source localization and speech recognition. Once an SSL system pinpoints the origin of human speech, and an automatic speech recognition (ASR) system transcribes it, LLMs can be employed to provide a deeper contextual understanding and semantic interpretation of verbal commands, queries, or intentions. This would enable robots to engage in more natural, nuanced, and multi-turn dialogues, disambiguate vague instructions based on conversational history or common sense, and ground abstract concepts in the robot’s physical environment, moving beyond mere utterance processing to true linguistic comprehension.

- Hybrid models and multi-modal fusion for holistic perception: Future research will increasingly focus on hybrid models that intelligently combine deep learning with traditional signal processing techniques, and crucially, on fusing SSL outputs with other sensory modalities. This includes the tightly coupled integration of acoustic data with visual information from cameras (e.g., for audio-visual speaker tracking, identifying sounding objects, and recognizing human facial expressions in HRI [3,54]) and with haptic feedback (e.g., for contact localization on robot limbs). Such multi-modal fusion allows robots to build a more comprehensive and robust perception of their environment, compensating for limitations in any single modality and enabling a richer semantic understanding of the auditory scene.

- Joining “hearing” and “touch” for safer human–robot teamwork: Beyond vision, force/torque and impedance signals captured at a robot’s joints offer additional information about physical interaction. Recent impedance-learning methods already let a robot adjust its stiffness and damping by feeling the force that a person applies [239]. A natural next step is to let the robot listen. If the robot knows where a voice command or warning sound is coming from, it can quickly relax its grip, steer away, or steady its tool in that direction. Achieving this will require (i) shared audio-and-force datasets collected while people guide a robot and talk to it, and (ii) simple learning rules that map those combined signals to clear impedance changes. Such “audio-haptic” control could make everyday tasks (like power-tool assistance, bedside help, or shared carrying) both easier for the user and safer for everyone nearby.

- Explainable AI for robot audition: Research into methods that allow deep learning-based SSL systems to provide transparent explanations for their localization decisions. This will enhance human operators’ trust in autonomous robots and facilitate debugging and the refinement of auditory perception systems in safety-critical applications.

8. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Rascon, C.; Meza, I. Localization of sound sources in robotics: A review. Robot. Auton. Syst. 2017, 96, 184–210.

- Jo, H.M.; Kim, T.W.; Kwak, K.C. Sound Source Localization Using Deep Learning for Human–Robot Interaction Under Intelligent Robot Environments. Electronics 2025, 14, 1043.

- Korayem, M.; Azargoshasb, S.; Korayem, A.; Tabibian, S. Design and implementation of the voice command recognition and the sound source localization system for human–robot interaction. Robotica 2021, 39, 1779–1790.

- Jalayer, R.; Jalayer, M.; Orsenigo, C.; Vercellis, C. A Conceptual Framework for Localization of Active Sound Sources in Manufacturing Environment Based on Artificial Intelligence. In Proceedings of the 33rd International Conference on Flexible Automation and Intelligent Manufacturing (FAIM 2023), Porto, Portugal, 18–22 June 2023; pp. 699–707.

- Lv, D.; Tang, W.; Feng, G.; Zhen, D.; Gu, F.; Ball, A.D. An Overview of Sound Source Localization based Condition Monitoring Robots. ISA Trans. 2024, 158, 537–555.

- Lv, D.; Feng, G.; Zhen, D.; Liang, X.; Sun, G.; Gu, F. Motor Bearing Fault Source Localization Based on Sound and Robot Movement Characteristics. In Proceedings of the 2024 International Conference on Sensing, Measurement & Data Analytics in the era of Artificial Intelligence (ICSMD), Huangshan, China, 31 October 2024; IEEE: New York, NY, USA, 2024; pp. 1–6.

- Marques, I.; Sousa, J.; Sá, B.; Costa, D.; Sousa, P.; Pereira, S.; Santos, A.; Lima, C.; Hammerschmidt, N.; Pinto, S.; et al. Microphone array for speaker localization and identification in shared autonomous vehicles. Electronics 2022, 11, 766.

- Yamada, T.; Itoyama, K.; Nishida, K.; Nakadai, K. Sound source tracking by drones with microphone arrays. In Proceedings of the 2020 IEEE/SICE International Symposium on System Integration (SII), Honolulu, HI, USA, 12–15 January 2020; IEEE: New York, NY, USA, 2020; pp. 796–801.

- Yamamoto, T.; Hoshiba, K.; Yen, B.; Nakadai, K. Implementation of a Robot Operation System-based network for sound source localization using multiple drones. In Proceedings of the 2024 Asia Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Macau, China, 3–6 December 2024; IEEE: New York, NY, USA, 2024; pp. 1–6.

- Latif, T.; Whitmire, E.; Novak, T.; Bozkurt, A. Sound localization sensors for search and rescue biobots. IEEE Sens. J. 2015, 16, 3444–3453.

- Zhang, B.; Masahide, K.; Lim, H. Sound source localization and interaction based human searching robot under disaster environment. In Proceedings of the 2019 SICE International Symposium on Control Systems (SICE ISCS), Kumamoto, Japan, 7–9 March 2019; IEEE: New York, NY, USA, 2019; pp. 16–20.

- Mae, N.; Mitsui, Y.; Makino, S.; Kitamura, D.; Ono, N.; Yamada, T.; Saruwatari, H. Sound source localization using binaural difference for hose-shaped rescue robot. In Proceedings of the 2017 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Kuala Lumpur, Malaysia, 12–15 December 2017; IEEE: New York, NY, USA, 2017; pp. 1621–1627.

- Park, J.H.; Sim, K.B. A design of mobile robot based on Network Camera and sound source localization for intelligent surveillance system. In Proceedings of the 2008 International Conference on Control, Automation and Systems, Seoul, Republic of Korea, 14–17 October 2008; IEEE: New York, NY, USA, 2008; pp. 674–678.

- Han, Z.; Li, T. Research on sound source localization and real-time facial expression recognition for security robot. In Proceedings of the Journal of Physics: Conference Series, Hangzhou, China, 25–26 July 2020; IOP Publishing: Bristol, UK, 2020; Volume 1621, p. 012045.

- Obeidat, H.; Shuaieb, W.; Obeidat, O.; Abd-Alhameed, R. A review of indoor localization techniques and wireless technologies. Wirel. Pers. Commun. 2021, 119, 289–327.

- Tarokh, M.; Merloti, P. Vision-based robotic person following under light variations and difficult walking maneuvers. J. Field Robot. 2010, 27, 387–398.

- Hall, D.; Talbot, B.; Bista, S.R.; Zhang, H.; Smith, R.; Dayoub, F.; Sünderhauf, N. The robotic vision scene understanding challenge. arXiv 2020, arXiv:2009.05246.

- Belkin, I.; Abramenko, A.; Yudin, D. Real-time lidar-based localization of mobile ground robot. Procedia Comput. Sci. 2021, 186, 440–448.

- Yu, Z. A WiFi Indoor Localization System Based on Robot Data Acquisition and Deep Learning Model. In Proceedings of the 2024 6th International Conference on Internet of Things, Automation and Artificial Intelligence (IoTAAI), Guangzhou, China, 26–28 July 2024; IEEE: New York, NY, USA, 2024; pp. 367–371.

- Wahab, N.H.A.; Sunar, N.; Ariffin, S.H.; Wong, K.Y.; Aun, Y. Indoor positioning system: A review. Int. J. Adv. Comput. Sci. Appl. 2022, 13, 477–490. [Google Scholar] [CrossRef]

- Alfurati, I.S.; Rashid, A.T. Performance comparison of three types of sensor matrices for indoor multi-robot localization. Int. J. Comput. Appl. 2018, 181, 22–29. [Google Scholar] [CrossRef]

- Flynn, A.M.; Brooks, R.A.; Wells, W.M., III; Barrett, D.S. Squirt: The Prototypical Mobile Robot for Autonomous Graduate Students; Massachusetts Institute of Technology: Cambridge, MA, USA, 1989. [Google Scholar]

- Grumiaux, P.A.; Kitić, S.; Girin, L.; Guérin, A. A survey of sound source localization with deep learning methods. J. Acoust. Soc. Am. 2022, 152, 107–151. [Google Scholar] [CrossRef]

- Liaquat, M.U.; Munawar, H.S.; Rahman, A.; Qadir, Z.; Kouzani, A.Z.; Mahmud, M.P. Localization of sound sources: A systematic review. Energies 2021, 14, 3910. [Google Scholar] [CrossRef]

- Desai, D.; Mehendale, N. A review on sound source localization systems. Arch. Comput. Methods Eng. 2022, 29, 4631–4642. [Google Scholar] [CrossRef]

- Zhang, B.J.; Fitter, N.T. Nonverbal sound in human–robot interaction: A systematic review. ACM Trans. Hum.-Robot. Interact. 2023, 12, 1–46. [Google Scholar] [CrossRef]

- Jekateryńczuk, G.; Piotrowski, Z. A survey of sound source localization and detection methods and their applications. Sensors 2023, 24, 68. [Google Scholar] [CrossRef]

- Khan, A.; Waqar, A.; Kim, B.; Park, D. A Review on Recent Advances in Sound Source Localization Techniques, Challenges, and Applications. Sens. Actuators Rep. 2025, 9, 100313. [Google Scholar] [CrossRef]

- He, W. Deep Learning Approaches for Auditory Perception in Robotics. Ph.D. Thesis, EPFL, Lausanne, Switzerland, 2021. [Google Scholar]

- Youssef, K.; Argentieri, S.; Zarader, J.L. A learning-based approach to robust binaural sound localization. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; IEEE: New York, NY, USA, 2013; pp. 2927–2932. [Google Scholar]

- Nakamura, K.; Gomez, R.; Nakadai, K. Real-time super-resolution three-dimensional sound source localization for robots. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; IEEE: New York, NY, USA, 2013; pp. 3949–3954. [Google Scholar]

- Ohata, T.; Nakamura, K.; Mizumoto, T.; Taiki, T.; Nakadai, K. Improvement in outdoor sound source detection using a quadrotor-embedded microphone array. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014; IEEE: New York, NY, USA, 2014; pp. 1902–1907. [Google Scholar]

- Grondin, F.; Michaud, F. Time difference of arrival estimation based on binary frequency mask for sound source localization on mobile robots. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; IEEE: New York, NY, USA, 2015; pp. 6149–6154. [Google Scholar]

- Nakamura, K.; Sinapayen, L.; Nakadai, K. Interactive sound source localization using robot audition for tablet devices. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; IEEE: New York, NY, USA, 2015; pp. 6137–6142. [Google Scholar]

- Li, X.; Girin, L.; Badeig, F.; Horaud, R. Reverberant sound localization with a robot head based on direct-path relative transfer function. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016; IEEE: New York, NY, USA, 2016; pp. 2819–2826. [Google Scholar]

- Nakadai, K.; Kumon, M.; Okuno, H.G.; Hoshiba, K.; Wakabayashi, M.; Washizaki, K.; Ishiki, T.; Gabriel, D.; Bando, Y.; Morito, T.; et al. Development of microphone-array-embedded UAV for search and rescue task. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; IEEE: New York, NY, USA, 2017; pp. 5985–5990. [Google Scholar]

- Strauss, M.; Mordel, P.; Miguet, V.; Deleforge, A. DREGON: Dataset and methods for UAV-embedded sound source localization. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; IEEE: New York, NY, USA, 2018; pp. 1–8. [Google Scholar]

- Wang, L.; Sanchez-Matilla, R.; Cavallaro, A. Audio-visual sensing from a quadcopter: Dataset and baselines for source localization and sound enhancement. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; IEEE: New York, NY, USA, 2019; pp. 5320–5325. [Google Scholar]

- Michaud, S.; Faucher, S.; Grondin, F.; Lauzon, J.S.; Labbé, M.; Létourneau, D.; Ferland, F.; Michaud, F. 3D localization of a sound source using mobile microphone arrays referenced by SLAM. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; IEEE: New York, NY, USA, 2020; pp. 10402–10407. [Google Scholar]

- Sewtz, M.; Bodenmüller, T.; Triebel, R. Robust MUSIC-based sound source localization in reverberant and echoic environments. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 25–29 October 2020; IEEE: New York, NY, USA, 2020; pp. 2474–2480. [Google Scholar]

- Tourbabin, V.; Barfuss, H.; Rafaely, B.; Kellermann, W. Enhanced robot audition by dynamic acoustic sensing in moving humanoids. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), South Brisbane, Australia, 19–24 April 2015; IEEE: New York, NY, USA, 2015; pp. 5625–5629. [Google Scholar]

- Takeda, R.; Komatani, K. Sound source localization based on deep neural networks with directional activate function exploiting phase information. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; IEEE: New York, NY, USA, 2016; pp. 405–409. [Google Scholar]

- Takeda, R.; Komatani, K. Unsupervised adaptation of deep neural networks for sound source localization using entropy minimization. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; IEEE: New York, NY, USA, 2017; pp. 2217–2221. [Google Scholar]

- Ferguson, E.L.; Williams, S.B.; Jin, C.T. Sound source localization in a multipath environment using convolutional neural networks. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; IEEE: New York, NY, USA, 2018; pp. 2386–2390. [Google Scholar]

- Grondin, F.; Michaud, F. Noise mask for TDOA sound source localization of speech on mobile robots in noisy environments. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; IEEE: New York, NY, USA, 2016; pp. 4530–4535. [Google Scholar]

- He, W.; Motlicek, P.; Odobez, J.M. Deep neural networks for multiple speaker detection and localization. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; IEEE: New York, NY, USA, 2018; pp. 74–79. [Google Scholar]

- An, I.; Son, M.; Manocha, D.; Yoon, S.E. Reflection-aware sound source localization. In Proceedings of the 2018 IEEE international conference on robotics and automation (ICRA), Brisbane, Australia, 21–25 May 2018; IEEE: New York, NY, USA, 2018; pp. 66–73. [Google Scholar]

- Song, L.; Wang, H.; Chen, P. Automatic patrol and inspection method for machinery diagnosis robot—Sound signal-based fuzzy search approach. IEEE Sens. J. 2020, 20, 8276–8286. [Google Scholar] [CrossRef]

- Clayton, M.; Wang, L.; McPherson, A.; Cavallaro, A. An embedded multichannel sound acquisition system for drone audition. IEEE Sens. J. 2023, 23, 13377–13386. [Google Scholar] [CrossRef]

- Grondin, F.; Michaud, F. Lightweight and optimized sound source localization and tracking methods for open and closed microphone array configurations. Robot. Auton. Syst. 2019, 113, 63–80. [Google Scholar] [CrossRef]

- Go, Y.J.; Choi, J.S. An acoustic source localization method using a drone-mounted phased microphone array. Drones 2021, 5, 75. [Google Scholar] [CrossRef]

- Yamada, T.; Itoyama, K.; Nishida, K.; Nakadai, K. Placement planning for sound source tracking in active drone audition. Drones 2023, 7, 405. [Google Scholar] [CrossRef]

- Skoczylas, A.; Stefaniak, P.; Anufriiev, S.; Jachnik, B. Belt conveyors rollers diagnostics based on acoustic signal collected using autonomous legged inspection robot. Appl. Sci. 2021, 11, 2299. [Google Scholar] [CrossRef]

- Shi, Z.; Zhang, L.; Wang, D. Audio–visual sound source localization and tracking based on mobile robot for the cocktail party problem. Appl. Sci. 2023, 13, 6056. [Google Scholar] [CrossRef]

- Keyrouz, F. Advanced binaural sound localization in 3-D for humanoid robots. IEEE Trans. Instrum. Meas. 2014, 63, 2098–2107. [Google Scholar] [CrossRef]

- Wang, Z.; Zou, W.; Su, H.; Guo, Y.; Li, D. Multiple sound source localization exploiting robot motion and approaching control. IEEE Trans. Instrum. Meas. 2023, 72, 7505316. [Google Scholar] [CrossRef]

- Tourbabin, V.; Rafaely, B. Theoretical framework for the optimization of microphone array configuration for humanoid robot audition. IEEE/ACM Trans. Audio Speech Lang. Process. 2014, 22, 1803–1814. [Google Scholar] [CrossRef]

- Manamperi, W.; Abhayapala, T.D.; Zhang, J.; Samarasinghe, P.N. Drone audition: Sound source localization using on-board microphones. IEEE/ACM Trans. Audio Speech Lang. Process. 2022, 30, 508–519. [Google Scholar] [CrossRef]

- Gonzalez-Billandon, J.; Belgiovine, G.; Tata, M.; Sciutti, A.; Sandini, G.; Rea, F. Self-supervised learning framework for speaker localisation with a humanoid robot. In Proceedings of the 2021 IEEE International Conference on Development and Learning (ICDL), Beijing, China, 23–26 August 2021; IEEE: New York, NY, USA, 2021; pp. 1–7. [Google Scholar]

- Gamboa-Montero, J.J.; Basiri, M.; Castillo, J.C.; Marques-Villarroya, S.; Salichs, M.A. Real-Time Acoustic Touch Localization in Human-Robot Interaction based on Steered Response Power. In Proceedings of the 2022 IEEE International Conference on Development and Learning (ICDL), London, UK, 12–15 September 2022; IEEE: New York, NY, USA, 2022; pp. 101–106. [Google Scholar]

- Yalta, N.; Nakadai, K.; Ogata, T. Sound source localization using deep learning models. J. Robot. Mechatronics 2017, 29, 37–48. [Google Scholar] [CrossRef]

- Chen, L.; Chen, G.; Huang, L.; Choy, Y.S.; Sun, W. Multiple sound source localization, separation, and reconstruction by microphone array: A dnn-based approach. Appl. Sci. 2022, 12, 3428. [Google Scholar] [CrossRef]

- Tian, C. Multiple CRNN for SELD. Parameters 2020, 488211, 490326. [Google Scholar]

- Bohlender, A.; Spriet, A.; Tirry, W.; Madhu, N. Exploiting temporal context in CNN based multisource DOA estimation. IEEE/ACM Trans. Audio Speech Lang. Process. 2021, 29, 1594–1608. [Google Scholar] [CrossRef]

- Li, X.; Liu, H.; Yang, X. Sound source localization for mobile robot based on time difference feature and space grid matching. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; IEEE: New York, NY, USA, 2011; pp. 2879–2886. [Google Scholar]

- El Zooghby, A.H.; Christodoulou, C.G.; Georgiopoulos, M. A neural network-based smart antenna for multiple source tracking. IEEE Trans. Antennas Propag. 2000, 48, 768–776. [Google Scholar] [CrossRef]

- Ishfaque, A.; Kim, B. Real-time sound source localization in robots using fly Ormia ochracea inspired MEMS directional microphone. IEEE Sens. Lett. 2022, 7, 6000204. [Google Scholar] [CrossRef]

- Athanasopoulos, G.; Dekens, T.; Brouckxon, H.; Verhelst, W. The effect of speech denoising algorithms on sound source localization for humanoid robots. In Proceedings of the 2012 11th International Conference on Information Science, Signal Processing and their Applications (ISSPA), Montreal, QC, Canada, 2–5 July 2012; IEEE: New York, NY, USA, 2012; pp. 327–332. [Google Scholar]

- Subramanian, A.S.; Weng, C.; Watanabe, S.; Yu, M.; Yu, D. Deep learning based multi-source localization with source splitting and its effectiveness in multi-talker speech recognition. Comput. Speech Lang. 2022, 75, 101360. [Google Scholar] [CrossRef]

- Goli, P.; van de Par, S. Deep learning-based speech specific source localization by using binaural and monaural microphone arrays in hearing aids. IEEE/ACM Trans. Audio Speech Lang. Process. 2023, 31, 1652–1666. [Google Scholar] [CrossRef]

- Wu, S.; Zheng, Y.; Ye, K.; Cao, H.; Zhang, X.; Sun, H. Sound source localization for unmanned aerial vehicles in low signal-to-noise ratio environments. Remote Sens. 2024, 16, 1847. [Google Scholar] [CrossRef]

- Jalayer, R.; Jalayer, M.; Mor, A.; Orsenigo, C.; Vercellis, C. ConvLSTM-based Sound Source Localization in a manufacturing workplace. Comput. Ind. Eng. 2024, 192, 110213. [Google Scholar] [CrossRef]

- Risoud, M.; Hanson, J.N.; Gauvrit, F.; Renard, C.; Lemesre, P.E.; Bonne, N.X.; Vincent, C. Sound source localization. Eur. Ann. Otorhinolaryngol. Head Neck Dis. 2018, 135, 259–264. [Google Scholar] [CrossRef]

- Hirvonen, T. Classification of spatial audio location and content using convolutional neural networks. In Proceedings of the Audio Engineering Society Convention 138, Warsaw, Poland, 7–10 May 2015; Audio Engineering Society: New York, NY, USA, 2015. [Google Scholar]

- Xiao, X.; Zhao, S.; Zhong, X.; Jones, D.L.; Chng, E.S.; Li, H. A learning-based approach to direction of arrival estimation in noisy and reverberant environments. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), South Brisbane, Australia, 19–24 April 2015; IEEE: New York, NY, USA, 2015; pp. 2814–2818. [Google Scholar]

- Geng, Y.; Jung, J.; Seol, D. Sound-source localization system based on neural network for mobile robots. In Proceedings of the 2008 IEEE International Joint Conference on Neural Networks (IEEE World Congress on Computational Intelligence), Hong Kong, China, 1–8 June 2008; IEEE: New York, NY, USA, 2008; pp. 3126–3130. [Google Scholar]

- Liu, G.; Yuan, S.; Wu, J.; Zhang, R. A sound source localization method based on microphone array for mobile robot. In Proceedings of the 2018 Chinese Automation Congress (CAC), Xi’an, China, 30 November–2 December 2018; IEEE: New York, NY, USA, 2018; pp. 1621–1625. [Google Scholar]

- Lee, S.Y.; Chang, J.; Lee, S. Deep learning-based method for multiple sound source localization with high resolution and accuracy. Mech. Syst. Signal Process. 2021, 161, 107959. [Google Scholar] [CrossRef]

- Acosta, O.; Hermida, L.; Herrera, M.; Montenegro, C.; Gaona, E.; Bejarano, M.; Gordillo, K.; Pavón, I.; Asensio, C. Remote Binaural System (RBS) for Noise Acoustic Monitoring. J. Sens. Actuator Netw. 2023, 12, 63. [Google Scholar] [CrossRef]

- Deleforge, A.; Forbes, F.; Horaud, R. Acoustic space learning for sound-source separation and localization on binaural manifolds. Int. J. Neural Syst. 2015, 25, 1440003. [Google Scholar] [CrossRef] [PubMed]

- Gala, D.; Lindsay, N.; Sun, L. Realtime active sound source localization for unmanned ground robots using a self-rotational bi-microphone array. J. Intell. Robot. Syst. 2019, 95, 935–954. [Google Scholar] [CrossRef]

- Baxendale, M.D.; Nibouche, M.; Secco, E.L.; Pipe, A.G.; Pearson, M.J. Feed-forward selection of cerebellar models for calibration of robot sound source localization. In Proceedings of the Conference on Biomimetic and Biohybrid Systems, Nara, Japan, 9–12 July 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 3–14. [Google Scholar]

- Gala, D.; Sun, L. Moving sound source localization and tracking for an autonomous robot equipped with a self-rotating bi-microphone array. J. Acoust. Soc. Am. 2023, 154, 1261–1273. [Google Scholar] [CrossRef]

- Mumolo, E.; Nolich, M.; Vercelli, G. Algorithms for acoustic localization based on microphone array in service robotics. Robot. Auton. Syst. 2003, 42, 69–88. [Google Scholar] [CrossRef]

- Nguyen, Q.V.; Colas, F.; Vincent, E.; Charpillet, F. Long-term robot motion planning for active sound source localization with Monte Carlo tree search. In Proceedings of the 2017 Hands-free Speech Communications and Microphone Arrays (HSCMA), San Francisco, CA, USA, 1–3 March 2017; IEEE: New York, NY, USA, 2017; pp. 61–65. [Google Scholar]

- Tamai, Y.; Kagami, S.; Amemiya, Y.; Sasaki, Y.; Mizoguchi, H.; Takano, T. Circular microphone array for robot’s audition. In Proceedings of the SENSORS, 2004 IEEE, Vienna, Austria, 24–27 October 2004; IEEE: New York, NY, USA, 2004; pp. 565–570. [Google Scholar]

- Choi, C.; Kong, D.; Kim, J.; Bang, S. Speech enhancement and recognition using circular microphone array for service robots. In Proceedings of the Proceedings 2003 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2003) (Cat. No. 03CH37453), Las Vegas, NV, USA, 27–31 October 2003; IEEE: New York, NY, USA, 2003; Volume 4, pp. 3516–3521. [Google Scholar]

- Sasaki, Y.; Kabasawa, M.; Thompson, S.; Kagami, S.; Oro, K. Spherical microphone array for spatial sound localization for a mobile robot. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7–12 October 2012; IEEE: New York, NY, USA, 2012; pp. 713–718. [Google Scholar]

- Jin, L.; Yan, J.; Du, X.; Xiao, X.; Fu, D. RNN for solving time-variant generalized Sylvester equation with applications to robots and acoustic source localization. IEEE Trans. Ind. Inform. 2020, 16, 6359–6369. [Google Scholar] [CrossRef]

- Bando, Y.; Mizumoto, T.; Itoyama, K.; Nakadai, K.; Okuno, H.G. Posture estimation of hose-shaped robot using microphone array localization. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; IEEE: New York, NY, USA, 2013; pp. 3446–3451. [Google Scholar]

- Kim, U.H. Improvement of Sound Source Localization for a Binaural Robot of Spherical Head with Pinnae. Ph.D. Thesis, Kyoto University, Kyoto, Japan, 2013. [Google Scholar]

- Kumon, M.; Noda, Y. Active soft pinnae for robots. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; IEEE: New York, NY, USA, 2011; pp. 112–117. [Google Scholar]

- Murray, J.C.; Erwin, H.R. A neural network classifier for notch filter classification of sound-source elevation in a mobile robot. In Proceedings of the The 2011 International Joint Conference on Neural Networks, San Jose, CA, USA, 16 January 2012; IEEE: New York, NY, USA, 2011; pp. 763–769. [Google Scholar]

- Zhang, Y.; Weng, J. Grounded auditory development by a developmental robot. In Proceedings of the IJCNN’01. International Joint Conference on Neural Networks. Proceedings (Cat. No. 01CH37222), Washington, DC, USA, 15–19 July 2001; IEEE: New York, NY, USA, 2001; Volume 2, pp. 1059–1064. [Google Scholar]

- Xu, B.; Sun, G.; Yu, R.; Yang, Z. High-accuracy TDOA-based localization without time synchronization. IEEE Trans. Parallel Distrib. Syst. 2012, 24, 1567–1576. [Google Scholar] [CrossRef]

- Knapp, C.; Carter, G. The generalized correlation method for estimation of time delay. IEEE Trans. Acoust. Speech Signal Process. 2003, 24, 320–327. [Google Scholar] [CrossRef]

- Park, B.C.; Ban, K.D.; Kwak, K.C.; Yoon, H.S. Performance analysis of GCC-PHAT-based sound source localization for intelligent robots. J. Korea Robot. Soc. 2007, 2, 270–274. [Google Scholar]

- Wang, J.; Qian, X.; Pan, Z.; Zhang, M.; Li, H. GCC-PHAT with speech-oriented attention for robotic sound source localization. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; IEEE: New York, NY, USA, 2021; pp. 5876–5883. [Google Scholar]

- Lombard, A.; Zheng, Y.; Buchner, H.; Kellermann, W. TDOA estimation for multiple sound sources in noisy and reverberant environments using broadband independent component analysis. IEEE Trans. Audio Speech Lang. Process. 2010, 19, 1490–1503. [Google Scholar] [CrossRef]

- Huang, B.; Xie, L.; Yang, Z. TDOA-based source localization with distance-dependent noises. IEEE Trans. Wirel. Commun. 2014, 14, 468–480. [Google Scholar] [CrossRef]

- Scheuing, J.; Yang, B. Disambiguation of TDOA estimation for multiple sources in reverberant environments. IEEE Trans. Audio Speech Lang. Process. 2008, 16, 1479–1489. [Google Scholar] [CrossRef]

- Kim, U.H.; Nakadai, K.; Okuno, H.G. Improved sound source localization and front-back disambiguation for humanoid robots with two ears. In Proceedings of the Recent Trends in Applied Artificial Intelligence: 26th International Conference on Industrial, Engineering and Other Applications of Applied Intelligent Systems, IEA/AIE 2013, Amsterdam, The Netherlands, 17–21 June 2013; Proceedings 26. Springer: Berlin/Heidelberg, Germany, 2013; pp. 282–291. [Google Scholar]

- Kim, U.H.; Nakadai, K.; Okuno, H.G. Improved sound source localization in horizontal plane for binaural robot audition. Appl. Intell. 2015, 42, 63–74. [Google Scholar] [CrossRef]

- Chen, G.; Xu, Y. A sound source localization device based on rectangular pyramid structure for mobile robot. J. Sens. 2019, 2019, 4639850. [Google Scholar] [CrossRef]

- Chen, H.; Liu, C.; Chen, Q. Efficient and robust approaches for three-dimensional sound source recognition and localization using humanoid robots sensor arrays. Int. J. Adv. Robot. Syst. 2020, 17, 1729881420941357. [Google Scholar] [CrossRef]

- Xu, Q.; Yang, P. Sound Source Localization Strategy Based on Mobile Robot. In Proceedings of the 2013 Chinese Intelligent Automation Conference: Intelligent Automation & Intelligent Technology and Systems, Yangzhou, China, 26–28 August 2013; Springer: Berlin/Heidelberg, Germany, 2013; pp. 469–478. [Google Scholar]

- Alameda-Pineda, X.; Horaud, R. A geometric approach to sound source localization from time-delay estimates. IEEE/ACM Trans. Audio Speech Lang. Process. 2014, 22, 1082–1095. [Google Scholar] [CrossRef]

- Valin, J.M.; Michaud, F.; Rouat, J. Robust localization and tracking of simultaneous moving sound sources using beamforming and particle filtering. Robot. Auton. Syst. 2007, 55, 216–228. [Google Scholar] [CrossRef]

- Luzanto, A.; Bohmer, N.; Mahu, R.; Alvarado, E.; Stern, R.M.; Becerra Yoma, N. Effective Acoustic Model-Based Beamforming Training for Static and Dynamic Hri Applications. Sensors 2024, 24, 6644. [Google Scholar] [CrossRef]

- Kagami, S.; Thompson, S.; Sasaki, Y.; Mizoguchi, H.; Enomoto, T. 2D sound source mapping from mobile robot using beamforming and particle filtering. In Proceedings of the 2009 IEEE International Conference on Acoustics, Speech and Signal Processing, Taipei, Taiwan, 19–24 April 2009; IEEE: New York, NY, USA, 2009; pp. 3689–3692. [Google Scholar]

- Capon, J. High-resolution frequency-wavenumber spectrum analysis. Proc. IEEE 2005, 57, 1408–1418. [Google Scholar] [CrossRef]

- Dmochowski, J.; Benesty, J.; Affes, S. Linearly constrained minimum variance source localization and spectral estimation. IEEE Trans. Audio Speech Lang. Process. 2008, 16, 1490–1502. [Google Scholar] [CrossRef]

- Yang, C.; Wang, Y.; Wang, Y.; Hu, D.; Guo, H. An improved functional beamforming algorithm for far-field multi-sound source localization based on Hilbert curve. Appl. Acoust. 2022, 192, 108729. [Google Scholar] [CrossRef]

- Liu, M.; Qu, S.; Zhao, X. Minimum Variance Distortionless Response—Hanbury Brown and Twiss Sound Source Localization. Appl. Sci. 2023, 13, 6013. [Google Scholar] [CrossRef]

- Zhang, C.; Wang, R.; Yu, L.; Xiao, Y.; Guo, Q.; Ji, H. Localization of cyclostationary acoustic sources via cyclostationary beamforming and its high spatial resolution implementation. Mech. Syst. Signal Process. 2023, 204, 110718. [Google Scholar] [CrossRef]

- Faraji, M.M.; Shouraki, S.B.; Iranmehr, E.; Linares-Barranco, B. Sound source localization in wide-range outdoor environment using distributed sensor network. IEEE Sens. J. 2019, 20, 2234–2246. [Google Scholar] [CrossRef]

- DiBiase, J.H.; Silverman, H.F.; Brandstein, M.S. Robust localization in reverberant rooms. In Microphone Arrays: Signal Processing Techniques and Applications; Springer: Berlin/Heidelberg, Germany, 2001; pp. 157–180. [Google Scholar]

- Yook, D.; Lee, T.; Cho, Y. Fast sound source localization using two-level search space clustering. IEEE Trans. Cybern. 2015, 46, 20–26. [Google Scholar] [CrossRef]

- Ishi, C.T.; Chatot, O.; Ishiguro, H.; Hagita, N. Evaluation of a MUSIC-based real-time sound localization of multiple sound sources in real noisy environments. In Proceedings of the 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems, St. Louis, MO, USA, 10–15 October 2009; IEEE: New York, NY, USA, 2009; pp. 2027–2032. [Google Scholar]

- Zhang, X.; Feng, D. An efficient MUSIC algorithm enhanced by iteratively estimating signal subspace and its applications in spatial colored noise. Remote Sens. 2022, 14, 4260. [Google Scholar] [CrossRef]

- Weng, L.; Song, X.; Liu, Z.; Liu, X.; Zhou, H.; Qiu, H.; Wang, M. DOA estimation of indoor sound sources based on spherical harmonic domain beam-space MUSIC. Symmetry 2023, 15, 187. [Google Scholar] [CrossRef]

- Suzuki, R.; Takahashi, T.; Okuno, H.G. Development of a robotic pet using sound source localization with the hark robot audition system. J. Robot. Mechatronics 2017, 29, 146–153. [Google Scholar] [CrossRef]

- Chen, L.; Sun, W.; Huang, L.; Yu, L. Broadband sound source localisation via non-synchronous measurements for service robots: A tensor completion approach. IEEE Robot. Autom. Lett. 2022, 7, 12193–12200. [Google Scholar] [CrossRef]

- Chen, L.; Huang, L.; Chen, G.; Sun, W. A large scale 3d sound source localisation approach achieved via small size microphone array for service robots. In Proceedings of the 2022 5th International Conference on Information Communication and Signal Processing (ICICSP), Shenzhen, China, 26–28 November 2022; IEEE: New York, NY, USA, 2022; pp. 589–594. [Google Scholar]

- Hoshiba, K.; Washizaki, K.; Wakabayashi, M.; Ishiki, T.; Kumon, M.; Bando, Y.; Gabriel, D.; Nakadai, K.; Okuno, H.G. Design of UAV-embedded microphone array system for sound source localization in outdoor environments. Sensors 2017, 17, 2535. [Google Scholar] [CrossRef]

- Azrad, S.; Salman, A.; Al-Haddad, S.A.R. Performance of DOA Estimation Algorithms for Acoustic Localization of Indoor Flying Drones Using Artificial Sound Source. J. Aeronaut. Astronaut. Aviat. 2024, 56, 469–476. [Google Scholar]

- Nakamura, K.; Nakadai, K.; Asano, F.; Hasegawa, Y.; Tsujino, H. Intelligent sound source localization for dynamic environments. In Proceedings of the 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems, St. Louis, MO, USA, 10–15 October 2009; IEEE: New York, NY, USA, 2009; pp. 664–669. [Google Scholar]

- Narang, G.; Nakamura, K.; Nakadai, K. Auditory-aware navigation for mobile robots based on reflection-robust sound source localization and visual slam. In Proceedings of the 2014 IEEE International Conference on Systems, Man, and Cybernetics (SMC), San Diego, CA, USA,, 5–8 October 2014; IEEE: New York, NY, USA, 2014; pp. 4021–4026. [Google Scholar]
