Abstract

The graphite mineral processing workshop involves complex procedures and generates a large amount of dust and smoke during operation. This particulate matter significantly degrades the quality of indoor surveillance video frames, thereby affecting subsequent tasks such as image segmentation and recognition. Existing image dehazing algorithms often suffer from insufficient feature extraction or excessive computational cost, which limits their real-time applicability and makes them unsuitable for deployment in graphite processing environments. To address this issue, this paper proposes a CNN-based dehazing algorithm tailored for dust and haze removal in graphite mineral processing workshops. Experimental results on a synthetic haze dataset constructed for graphite processing scenarios demonstrate that the proposed method achieves higher PSNR and SSIM compared to existing deep learning-based dehazing approaches, resulting in improved visual quality of dehazed images.

1. Introduction

Graphite ore is an important mineral form of carbon, characterized by excellent electrical conductivity, high-temperature resistance, and chemical stability. It is widely used across various industries, including new energy, electronics, and metallurgy. With the advancement of intelligent manufacturing, smart surveillance systems are playing an increasingly critical role. However, the production environment of graphite processing workshops is often accompanied by heavy dust, smoke, and humidity, which significantly reduces the visibility of monitoring equipment and poses challenges to safe production and automated surveillance. Therefore, the development of an effective image dehazing and dust removal algorithm for graphite mining environments holds significant practical importance and application value.

The appearance of dust haze in images is highly complex, exhibiting diverse colors, sizes, and shapes, and often blending with image textures and noise. Traditional methods, such as filtering and inpainting, struggle to accurately detect and remove these artifacts. Although deep learning techniques can improve performance, they require large amounts of annotated data containing dust, which is difficult to obtain. As a result, dedicated dust removal algorithms remain scarce in the image processing domain. In graphite ore workshops, the dust generated during mineral processing manifests as a dense haze-like phenomenon. Therefore, existing image dehazing algorithms can be adapted and further enhanced to better address dust haze removal in mineral processing environments.

The purpose of image dehazing algorithms is to restore a haze-free image from a hazy input. The atmospheric scattering model is commonly used to describe the degradation caused by haze, and it can be formulated as follows:

I(x) = J(x)t(x) + A(1 − t(x)),

where I(x) is the input image degraded by haze, J(x) is the latent haze-free scene, t(x) is the transmission map specifying the fraction of light that penetrates the haze at location x, and A is the global atmospheric light intensity.
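As a minimal illustration of the atmospheric scattering model, the following NumPy sketch applies the model to a clear image and then inverts it when t(x) and A are known. The function names, the clamp `t_min`, and the random test data are illustrative assumptions and are not part of the proposed method.

```python
import numpy as np

def apply_haze(J, t, A):
    """Forward model: I(x) = J(x) t(x) + A (1 - t(x))."""
    t = t[..., None]  # broadcast the transmission map over color channels
    return J * t + A * (1.0 - t)

def remove_haze(I, t, A, t_min=0.1):
    """Inverse model: J(x) = (I(x) - A (1 - t(x))) / t(x).

    t is clamped from below (t_min is an assumed value) so that the
    division stays well conditioned in very dense haze.
    """
    t = np.maximum(t, t_min)[..., None]
    return (I - A * (1.0 - t)) / t

rng = np.random.default_rng(0)
J = rng.uniform(0.0, 1.0, size=(4, 4, 3))   # clear image in [0, 1]
t = rng.uniform(0.3, 0.9, size=(4, 4))      # transmission map
A = 0.9                                     # global atmospheric light
I = apply_haze(J, t, A)
J_rec = remove_haze(I, t, A)
```

In practice t(x) and A are unknown and must be estimated, which is the step that prior-based and learning-based methods approach differently.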

Early prior-based methods [1–6] estimate the transmission map t(x) and the atmospheric light A from the atmospheric scattering model and then invert the model to recover the haze-free image. Prior-based algorithms achieve good accuracy in static and simple environments but often fail under dynamic and complex conditions. Subsequently, deep learning-based dehazing methods [7–31] have made significant progress. By integrating physical models with deep neural networks, researchers learn dehazing models by exploring feature relationships between paired hazy and clear images. Deep learning approaches can adaptively adjust parameters through training on large and diverse datasets, enabling robust dehazing performance in complex environments. However, these methods typically require greater computational resources and may suffer from distortions in restoring fine local details due to the depth and complexity of the networks.

The environment in graphite ore processing workshops is relatively fixed, and the equipment deployment is subject to strict constraints. However, the concentration of dust haze varies dynamically with different processing stages. To address these challenges, this paper proposes a lightweight deep learning algorithm “IGWDehaze-Net” that combines convolutional neural networks (CNNs) with multi-attention feature fusion to enable effective image dehazing in workshop environments. The main contributions of this work are summarized as follows:

- We propose a lightweight dehazing algorithm designed for graphite ore processing, combining instance normalization, dual-stream convolution, and multi-attention fusion to achieve efficient and effective haze removal.

- To better aggregate haze features, a lightweight Dual-Stream Convolution (DSC) module with parallel global and local branches is proposed, enhancing feature representation with low computational cost for efficient deployment.

- We introduce a Multi-Attention Feature Fusion (MAFF) module that integrates channel, spatial, and contextual attention to enhance feature representation with minimal overhead. It adaptively responds to haze distribution, effectively reducing residual haze and blur while maintaining strong performance in complex scenarios.

2. Related Work

2.1. Prior-Based Methods

Prior-based image dehazing methods form a foundational research direction in image restoration. These methods aim to recover clear images using physical models and statistical priors without requiring paired training data. Three representative techniques are as follows. The Dark Channel Prior (DCP) [1] assumes that in most local patches of a natural image, at least one color channel contains pixels with very low intensity; this assumption enables effective estimation of haze thickness and scene transmission, allowing haze removal while preserving structural details. The Color-Line Prior [2] leverages the observation that pixel colors within small image regions tend to form linear patterns in RGB space to guide transmission estimation. The Max Contrast Prior [3] enhances local contrast to perform dehazing without the need for training data. These approaches are interpretable, training-free, and efficient, making them suitable for scenarios where data collection is difficult.

However, their reliance on fixed assumptions limits their adaptability in complex scenes with challenging lighting or textures, often leading to artifacts or over-dehazing. While they provide strong theoretical foundations and continue to influence modern deep learning models, their lack of learning ability restricts generalization. Prior-based methods are most effective in controlled environments such as industrial workshops or tunnels, where conditions align with their assumptions. Despite their advantages, they require further refinement to handle more diverse and unpredictable real-world scenarios.

2.2. Deep Learning-Based Methods

Image dehazing plays a vital role in computer vision by removing visual degradation caused by atmospheric particles like fog and haze, which lead to blurring and reduced contrast. Traditional methods based on physical models such as the Atmospheric Scattering Model (ASM) often struggle in complex real-world scenarios. In contrast, deep learning-based approaches have shown remarkable improvements by learning effective dehazing features from large-scale datasets. These methods vary in architecture, including CNNs, U-Net structures, GANs, and Transformers. Notable examples include AOD-Net [7], which directly learns the parameters of the atmospheric scattering model, thereby eliminating the need to estimate the transmission map separately. By adopting an end-to-end training framework, this approach enhances the efficiency of the dehazing process. DM2F [8] predicts the transmission map using a deep neural network and then reconstructs the haze-free image using physical modeling. DehazeGAN [9] employs a purely GAN-based unsupervised framework, demonstrating strong performance under challenging hazy conditions. FFA-Net [10] incorporates feature attention mechanisms to enhance dehazing quality, while multi-scale learning and skip connections help better preserve image details.

Compared to traditional techniques, deep learning models automatically learn complex image representations and adapt to diverse haze conditions, achieving strong generalization, especially when trained on datasets like RESIDE. However, these models—particularly Transformer-based ones—demand substantial computational resources, making deployment on edge devices challenging. Additionally, issues like color distortion, detail loss, and unrealistic textures may arise during inference, with CNNs prone to over-sharpening and GANs to generating false details. As such, choosing or designing a dehazing algorithm that balances performance, generalization, and computational efficiency is essential for practical applications.

3. Proposed Method

3.1. Overall Architecture

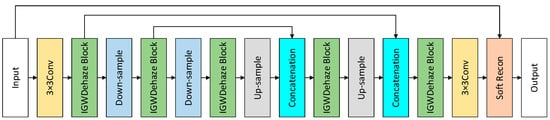

The overall architecture of the proposed algorithm is illustrated in Figure 1. The network adopts a five-stage U-Net structure, where the conventional convolutional layers are replaced by our custom-designed IGWDehaze Blocks. The network consists of five downsampling (encoder) stages and five upsampling (decoder) stages. Downsampling is performed using 3 × 3 convolutions with a stride of 2, while upsampling is achieved by combining point-wise convolutions with PixelShuffle operations. The final output passes through a soft reconstruction module to generate the haze-free image. Skip connections are employed throughout the network to fuse multi-scale features, effectively balancing global contextual information and local details via multi-scale feature integration. The five-stage design enables the network to adaptively process feature maps at different resolutions, which is critical for image dehazing tasks that require simultaneous capture of the global distribution of haze and the local details such as edges and textures.

Figure 1.

The overall architecture of IGWDehaze-Net.
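The upsampling step described above (a point-wise convolution followed by PixelShuffle) can be sketched in NumPy as follows. This is only an illustration of the operation: the channel counts, the upscale factor r = 2, and the random weights are assumptions, and the channel ordering follows the common sub-pixel convolution convention rather than any detail confirmed by the paper.

```python
import numpy as np

def pointwise_conv(x, weight):
    """1 x 1 convolution: x is (C_in, H, W), weight is (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", weight, x)

def pixel_shuffle(x, r):
    """Rearrange (C * r^2, H, W) into (C, H * r, W * r)."""
    c_r2, h, w = x.shape
    c = c_r2 // (r * r)
    x = x.reshape(c, r, r, h, w)      # split channels into (c, i, j)
    x = x.transpose(0, 3, 1, 4, 2)    # reorder to (c, h, i, w, j)
    return x.reshape(c, h * r, w * r)

rng = np.random.default_rng(0)
r = 2                                           # assumed upscale factor
x = rng.standard_normal((8, 4, 4))              # (C_in, H, W)
weight = rng.standard_normal((4 * r * r, 8))    # expands 8 -> 4 * r^2 channels
up = pixel_shuffle(pointwise_conv(x, weight), r)  # (4, 8, 8)
```

The point-wise convolution expands the channels by a factor of r², and PixelShuffle then trades those channels for spatial resolution without any interpolation.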

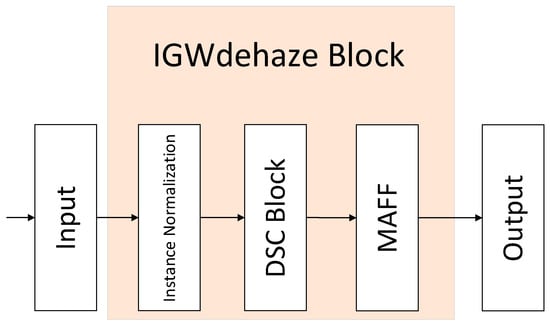

This paper proposes an efficient and lightweight dehazing network, IGWDehaze-Net, specifically designed for image dehazing in graphite mineral processing workshops to restore the original clarity of images. The structure of its basic unit, the IGWDehaze Block, is illustrated in Figure 2. It primarily consists of Instance Normalization (IN), a Dual-Scale Convolutional Block (DSC Block), and a Multi-Attention Feature Fusion Module (MAFF).

Figure 2.

The overall architecture of IGWDehaze Block.

To meet the requirements of image dehazing, the network incorporates prediction of the atmospheric light intensity A and the transmission map t(x) at the final output stage. These parameters guide the reconstruction of the dehazed image based on the atmospheric scattering model.

3.2. Instance Normalization (IN)

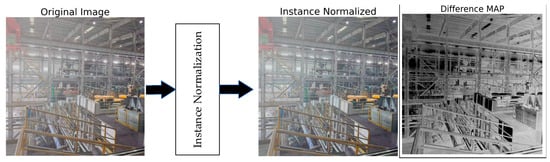

Instance Normalization (IN) is a feature normalization technique that plays a crucial role in image dehazing. The core objective of image dehazing is to restore regions of low contrast, low brightness, and color distortion caused by atmospheric scattering, thereby reconstructing images that closely approximate clear, haze-free scenes. Since haze affects different regions of an image with varying severity, models require a certain degree of local adaptability when processing distinct areas, and Instance Normalization addresses this need. IN normalizes the mean and variance independently for each channel of every individual sample, effectively eliminating brightness biases and contrast fluctuations on a per-image basis during feature extraction. This enhances the model's focus on local image structures; Figure 3 visualizes this effect. In contrast, traditional Batch Normalization computes statistics across multiple samples, which can introduce inter-sample statistical interference, especially when the batch size is small, leading to instability and adversely affecting detail recovery. In multi-scale or lightweight network architectures, Instance Normalization can effectively replace Batch Normalization, thereby saving computational resources and improving training efficiency. Therefore, IN not only enhances the stability of image restoration but also increases the network's robustness to complex input conditions, making it a common and practical normalization strategy in modern image dehazing networks.

Figure 3.

The changes induced by Instance Normalization (IN) are visualized using the processed images. The third image illustrates the difference between the original image and the result after IN processing, where flat regions appear nearly black while edges and textures are highlighted, emphasizing the effect of normalization on structural details.

The feature maps are first normalized using Instance Normalization (IN), which independently normalizes the features of each channel for every individual sample. This process effectively eliminates feature variations caused by non-structural factors such as illumination, style, and exposure. For an input feature map $X \in \mathbb{R}^{C \times H \times W}$, the mean and variance are computed independently for each channel $c$ over the spatial dimensions to measure the overall response intensity and distribution shift of that channel:

$$\mu_c = \frac{1}{HW}\sum_{h=1}^{H}\sum_{w=1}^{W} X_{c,h,w}, \qquad \sigma_c^2 = \frac{1}{HW}\sum_{h=1}^{H}\sum_{w=1}^{W}\left(X_{c,h,w} - \mu_c\right)^2.$$

Next, the feature map is normalized as follows:

$$\hat{X}_{c,h,w} = \frac{X_{c,h,w} - \mu_c}{\sqrt{\sigma_c^2 + \epsilon}},$$

where $\epsilon$ is a small constant added for numerical stability to prevent division by zero. Subsequently, a learnable affine transformation is applied channel-wise:

$$Y_{c,h,w} = \gamma_c \hat{X}_{c,h,w} + \beta_c,$$

where $\gamma_c$ and $\beta_c$ are the learnable scale and shift parameters for each channel. The final normalized feature map is denoted as $Y$.
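The normalization steps above can be written compactly in NumPy. The sketch below assumes a batch layout of (N, C, H, W) and a default `eps` of 1e-5; both are common conventions and are assumptions here, not values stated in the paper.

```python
import numpy as np

def instance_norm(x, gamma, beta, eps=1e-5):
    """Instance Normalization for x of shape (N, C, H, W).

    Statistics are computed per sample and per channel over the spatial
    dimensions only, so no information is shared across the batch.
    """
    mu = x.mean(axis=(2, 3), keepdims=True)
    var = x.var(axis=(2, 3), keepdims=True)
    x_hat = (x - mu) / np.sqrt(var + eps)
    # Channel-wise learnable affine transform (scale gamma, shift beta).
    return gamma.reshape(1, -1, 1, 1) * x_hat + beta.reshape(1, -1, 1, 1)

rng = np.random.default_rng(0)
x = 3.0 * rng.standard_normal((2, 4, 16, 16)) + 5.0  # biased, high-contrast input
y = instance_norm(x, gamma=np.ones(4), beta=np.zeros(4))
```

With gamma = 1 and beta = 0, every channel of every sample in `y` has approximately zero mean and unit variance, regardless of the brightness or contrast of the input.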

3.3. DSC Block

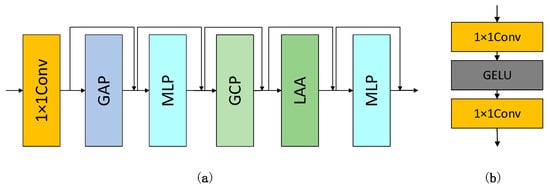

The core principle of the DSC (Dual-Scale Convolutional) Block lies in combining global feature extraction with local feature supplementation. In graphite processing workshop scenarios, images typically exhibit weak textures, low contrast, and dense, unevenly distributed haze. Traditional single-scale convolution has inherent limitations: large kernels can capture long-range dependencies but incur high computational cost and exhibit weak local representation, whereas small kernels are computationally efficient but have limited receptive fields, making them inadequate for modeling extensive haze regions. To address these challenges, the DSC Block adopts a dual-scale pathway architecture, illustrated in Figure 4. It leverages a Global Context Perception (GCP) path to capture contextual information through large-kernel depthwise convolutions that model spatial relationships, while simultaneously employing a Local Adaptive Aggregation (LAA) path with smaller kernels to dynamically aggregate fine-grained local features. This design yields a lightweight yet powerful context modeling unit. Rather than simply combining large- and small-kernel convolutions, the module first applies a large-kernel depthwise convolution to encode broad contextual cues, followed by dynamic convolutions with smaller kernels to focus attention within a localized receptive field. By incorporating large-receptive-field convolutions, residual connections, and local enhancement mechanisms, the DSC Block effectively extracts local structural features and strengthens edge and contour awareness. This is particularly suited to industrial images dominated by grayscale tones and simple structures. The smaller convolution kernels further refine the attention within task-relevant regions, enhancing detail recovery in complex haze conditions.

Figure 4.

(a) The structure of the DSC Block, where GAP denotes Global Average Pooling; (b) The architecture of the Multi-Layer Perceptron (MLP).

This module consists of a 1 × 1 convolution, a Global Average Pooling (GAP) layer, two Multi-Layer Perceptrons (MLPs), and two primary feature extraction branches, namely the Global Context Perception (GCP) path and the Local Adaptive Aggregation (LAA) path, which are integrated using a residual connection structure. The inclusion of GCP and LAA strengthens the model's capability of learning local structural representations, allowing it to better preserve edge features in weak-texture regions. Additionally, the DSC Block incorporates nonlinear transformations and feature fusion mechanisms to mitigate the spatial non-uniformity of the haze distribution. It is well suited to restoring features in target regions that are heavily occluded by dense haze, such as conveyor belts and equipment contours. This is particularly effective in graphite workshop scenarios, where images are typically dominated by grayscale tones and lack significant color variation. The DSC Block provides additional structural awareness, addressing the limitations of traditional convolutions in recognizing subtle structural details. By combining large-kernel depthwise convolutions for context modeling with small-kernel dynamic convolutions for fine-grained feature aggregation, the DSC Block reinforces the representational power of the model while preserving the computational efficiency necessary for real-world deployment.

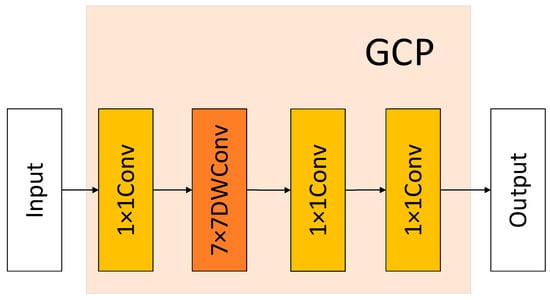

The Global Context Perception (GCP) module is composed of two 1 × 1 pointwise convolutions and one 7 × 7 depthwise convolution. The configuration of the module is presented in Figure 5. First, the channel dimension of the input feature map is reduced from C to C′ via a 1 × 1 convolution, and long-range context is then captured using a 7 × 7 depthwise convolution. Finally, another 1 × 1 convolution restores the channel dimension back to C. Given an input feature map $F \in \mathbb{R}^{C \times H \times W}$, the output of the GCP module can be expressed as follows:

$$F_{GCP} = \mathrm{Conv}_{1 \times 1}\left(\mathrm{DWConv}_{7 \times 7}\left(\mathrm{Conv}_{1 \times 1}(F)\right)\right),$$

where $F_{GCP}$ represents the global context-enhanced feature map.

Figure 5.

The structure of the GCP Block.

The GCP module effectively strengthens the feature representation by embedding global dependencies through large receptive field modeling, which is crucial in scenes with spatially dispersed haze.
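To give a rough sense of why this bottleneck design is lightweight, the following sketch counts the weights of the GCP layout (1 × 1 reduction, 7 × 7 depthwise convolution, 1 × 1 expansion) against a single standard 7 × 7 convolution with the same input and output width. The channel sizes C = 64 and C′ = 16 are hypothetical, since the paper does not state them here, and bias terms are ignored.

```python
def standard_conv_params(c_in, c_out, k):
    """Weights of a dense k x k convolution (bias ignored)."""
    return c_in * c_out * k * k

def gcp_params(c, c_reduced, k=7):
    """Weights of: 1x1 conv (C -> C'), k x k depthwise conv, 1x1 conv (C' -> C)."""
    reduce = c * c_reduced          # pointwise reduction
    depthwise = c_reduced * k * k   # one k x k filter per channel
    expand = c_reduced * c          # pointwise expansion
    return reduce + depthwise + expand

C, C_REDUCED = 64, 16               # hypothetical channel sizes
dense = standard_conv_params(C, C, 7)
gcp = gcp_params(C, C_REDUCED)
ratio = dense / gcp
```

Under these assumed sizes the dense 7 × 7 layer needs 200,704 weights while the GCP layout needs 2,832, roughly a 70-fold reduction for the same 7 × 7 receptive field.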

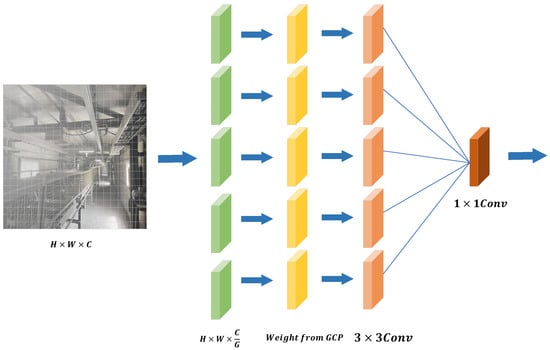

The Local Adaptive Aggregation (LAA) module is designed to perform dynamic fusion of local regions based on small convolutional kernels, enabling adaptive fine-grained detail modeling. This path compensates for the deficiency of the large-kernel perception path (GCP) in capturing local information, thus forming a dual-scale convolutional structure that integrates both global and local contexts. The structure of the LAA is depicted in Figure 6. The LAA module mainly consists of three components: Channel Grouping, Guided Weights from GCP, and Local Convolutional Aggregation. Given an input feature map $F \in \mathbb{R}^{C \times H \times W}$, the channel grouping mechanism divides the feature map into $G$ groups, each with $C/G$ channels, as follows:

$$F = \left[F_1, F_2, \ldots, F_G\right], \qquad F_g \in \mathbb{R}^{(C/G) \times H \times W}.$$

Figure 6.

The structure of the LAA Block.

The groups are processed separately, which enables the network to apply differentiated processing to different channel groups and thereby recover more fine-grained image details. First, the grouped features are modulated by the guidance weights derived from the GCP module. These global-aware weights serve as dynamic scaling factors to ensure consistency between global structure and local details, preventing uneven dehazing. Each group is then passed through a dynamic convolution to extract local features such as edges, textures, and color gradients. Finally, the outputs from all groups are concatenated and fused via a convolution to integrate the refined local features.

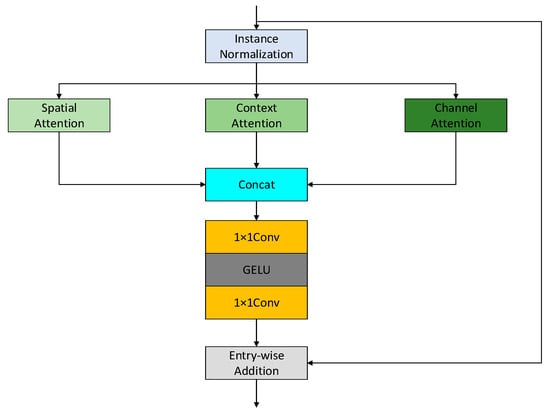

3.4. The Multi-Attention Feature Fusion (MAFF)

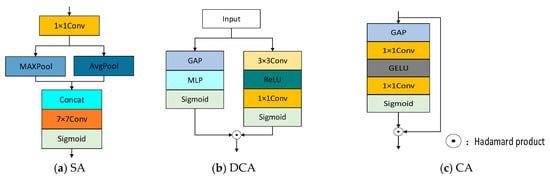

The Multi-Attention Feature Fusion Module (MAFF) employs three types of attention mechanisms in parallel: Channel Attention (CA), Spatial Attention (SA), and Dynamic Context-Aware Attention (DCA). This enables the network to simultaneously capture global feature dependencies and supplement local feature details at the same hierarchical level. The structure of the MAFF module is shown in Figure 7, and the three attention branches are shown in Figure 8. The parallel design enhances computational efficiency while improving the model's capability to learn and process complex images.

Multi-attention mechanisms are a key technique in deep learning for boosting model expressiveness by dynamically adjusting the focus on different parts of the input, mimicking human visual attention. Compared to traditional single-attention mechanisms, multi-attention can weight features from multiple dimensions, scales, or information channels, extracting richer and more discriminative representations. The core idea is to introduce multiple independent or cooperative attention submodules, each focusing on a distinct feature dimension, such as spatial, channel, or semantic context. Channel Attention operates along the channel dimension to emphasize critical details like color, texture, and structure, essentially determining “which feature maps are more important.” Spatial Attention focuses on the spatial dimension, highlighting key regions such as edges and haze areas by answering “where in the image is more important.” Context Attention leverages global semantic information across the entire image to guide attention, determining “how accurate the focus is.” Combining these three attentions allows the network to jointly address “where to look,” “what to look at,” and “how precise the focus is,” thereby enhancing overall feature representation and dehazing performance.

Figure 7.

MAFF Block architecture.

Figure 8.

The schematic diagrams of Spatial Attention (SA), Dynamic Contextual Attention (DCA), and Channel Attention (CA). GAP is the global average pooling.

Spatial Attention (SA) focuses on “where in the image is more important.” In image dehazing tasks, haze is often unevenly distributed spatially. Certain regions such as the sky, distant background, or object edges may have denser haze, while other areas might be clearer. Traditional convolution operations treat all spatial positions equally, which can cause critical structures to be overlooked. Therefore, introducing spatial attention improves the network's capability to capture spatial relationships within structural features and regions with dense haze. Given an input feature map $F \in \mathbb{R}^{C \times H \times W}$, spatial attention first applies max pooling and average pooling along the channel dimension separately, producing two single-channel feature maps:

$$F_{max} = \mathrm{MaxPool}_{c}(F), \qquad F_{avg} = \mathrm{AvgPool}_{c}(F), \qquad F_{max}, F_{avg} \in \mathbb{R}^{1 \times H \times W}.$$

These feature maps are combined by concatenation across the channel dimension:

$$F_{cat} = \mathrm{Concat}\left(F_{max}, F_{avg}\right) \in \mathbb{R}^{2 \times H \times W}.$$

Then, the concatenated feature is passed through a 7 × 7 convolutional layer followed by a sigmoid activation $\sigma$ to generate the spatial attention map:

$$M_{SA} = \sigma\left(\mathrm{Conv}_{7 \times 7}(F_{cat})\right).$$

This attention map highlights important spatial regions, directing the network's attention toward regions significantly impacted by haze for better image restoration.
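The spatial attention computation described above (channel-wise max and average pooling, concatenation, a 7 × 7 convolution, and a sigmoid) can be sketched in NumPy as follows. The zero padding, the absence of a bias term, and the random kernel are assumptions made for illustration; in the network the kernel would be learned.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def spatial_attention(F, kernel):
    """F: (C, H, W) feature map; kernel: (2, k, k) weights -> (1, H, W) map."""
    f_max = F.max(axis=0)                      # channel-wise max pooling
    f_avg = F.mean(axis=0)                     # channel-wise average pooling
    f_cat = np.stack([f_max, f_avg], axis=0)   # (2, H, W)
    k = kernel.shape[-1]
    p = k // 2
    padded = np.pad(f_cat, ((0, 0), (p, p), (p, p)))  # zero padding keeps H x W
    windows = sliding_window_view(padded, (k, k), axis=(1, 2))  # (2, H, W, k, k)
    logits = np.einsum("chwij,cij->hw", windows, kernel)
    return sigmoid(logits)[None]

rng = np.random.default_rng(0)
F = rng.standard_normal((16, 8, 8))
kernel = 0.1 * rng.standard_normal((2, 7, 7))
m_sa = spatial_attention(F, kernel)
F_weighted = F * m_sa   # the same spatial weights are applied to every channel
```

Because the map has a single channel, it rescales each spatial position uniformly across channels, which is what lets it emphasize haze-dense regions independently of the feature type.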

The Dynamic Context-Aware Attention (DCA) mechanism enhances the network's ability to perceive the global haze distribution by leveraging global statistical information and contextual semantics to guide local attention. It consists of two parallel branches: one modeling global context along the channel dimension, and the other capturing attention focused on local spatial regions. For the input feature map $F \in \mathbb{R}^{C \times H \times W}$, branch 1 performs Global Average Pooling (GAP) to compress spatial information and extract a context vector:

$$z = \mathrm{GAP}(F), \qquad z_c = \frac{1}{HW}\sum_{h=1}^{H}\sum_{w=1}^{W} F_{c,h,w}.$$

This step captures the statistical summary of the entire image, producing a compact representation of the global haze characteristics. Subsequently, the context vector $z$ is fed into a Multi-Layer Perceptron (MLP) followed by a sigmoid activation $\sigma$ to produce channel-wise attention weights:

$$w_{c} = \sigma\left(\mathrm{MLP}(z)\right).$$

In branch 2, local contextual features are extracted to produce a spatial attention map. Initially, the input feature map $F$ is convolved using a 3 × 3 kernel to capture structural details such as edges and textures, after which a ReLU activation is applied to introduce non-linearity. The feature map is then passed through a 1 × 1 convolution for channel reduction, and spatial attention weights are obtained via a sigmoid function:

$$w_{s} = \sigma\left(\mathrm{Conv}_{1 \times 1}\left(\mathrm{ReLU}\left(\mathrm{Conv}_{3 \times 3}(F)\right)\right)\right).$$

Finally, the outputs from both branches are combined using element-wise multiplication (Hadamard product, denoted $\odot$) to form the final dynamic context-aware attention map:

$$M_{DCA} = w_{c} \odot w_{s}.$$

This attention mechanism helps the network highlight salient regions (object edges and target areas) while suppressing irrelevant or redundant haze, thereby improving the model’s robustness and accuracy in dehazing complex industrial images.
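A minimal NumPy sketch of the two-branch DCA computation is given below. Several details are assumptions made only for illustration: the MLP is taken to be two linear layers with a ReLU and a reduction ratio of 4, the 1 × 1 convolution is assumed to reduce to a single channel, the two branch outputs are combined by broadcasting, and all weights are random rather than learned.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def conv2d_same(x, weight):
    """Zero-padded cross-correlation. x: (C_in, H, W), weight: (C_out, C_in, k, k)."""
    k = weight.shape[-1]
    p = k // 2
    padded = np.pad(x, ((0, 0), (p, p), (p, p)))
    windows = sliding_window_view(padded, (k, k), axis=(1, 2))  # (C_in, H, W, k, k)
    return np.einsum("chwij,ocij->ohw", windows, weight)

def dca(F, w1, w2, w3x3, w1x1):
    # Branch 1: global context -> channel-wise weights of shape (C,)
    z = F.mean(axis=(1, 2))                        # global average pooling
    w_c = sigmoid(w2 @ np.maximum(w1 @ z, 0.0))    # assumed two-layer MLP
    # Branch 2: local context -> spatial weights of shape (1, H, W)
    local = np.maximum(conv2d_same(F, w3x3), 0.0)  # 3x3 conv + ReLU
    w_s = sigmoid(conv2d_same(local, w1x1))        # 1x1 conv + sigmoid
    # Broadcasted element-wise product -> (C, H, W) attention map
    return w_c[:, None, None] * w_s

rng = np.random.default_rng(0)
C, H, W, R = 8, 6, 6, 4                            # R: assumed reduction ratio
F = rng.standard_normal((C, H, W))
m_dca = dca(
    F,
    w1=0.1 * rng.standard_normal((C // R, C)),
    w2=0.1 * rng.standard_normal((C, C // R)),
    w3x3=0.1 * rng.standard_normal((C, C, 3, 3)),
    w1x1=0.1 * rng.standard_normal((1, C, 1, 1)),
)
```

Each entry of the resulting map is the product of a channel weight and a spatial weight, so a position is emphasized only when both the global statistics and the local structure support it.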

Channel Attention (CA) plays a crucial role in image dehazing tasks by enhancing the network's ability to selectively focus on informative feature representations. During the feature extraction stage, the input image is encoded into a set of feature maps across multiple channels, where each channel responds to specific semantic attributes such as haze texture, color gradients, or structural edges. The fundamental objective of the CA mechanism is to assign adaptive weights to each channel, thereby highlighting informative features and suppressing irrelevant or redundant information. This dynamic weighting mechanism enables the network to concentrate on features that are most relevant to haze perception and image restoration, significantly improving its capacity to “see” and “see clearly”. For the input feature map $F \in \mathbb{R}^{C \times H \times W}$, Global Average Pooling (GAP) is first applied across the spatial dimensions of each channel to obtain a compact representation that captures the overall channel response. These channel descriptors are then passed through a lightweight two-layer network that models the nonlinear inter-channel dependencies. Specifically, the descriptors are first transformed using a point-wise convolution followed by a GELU activation and then mapped back to the original channel dimension using another point-wise convolution. Finally, a sigmoid activation function $\sigma$ is applied to normalize the output, producing an attention weight vector $M_{CA} \in \mathbb{R}^{C \times 1 \times 1}$, which can be expressed as follows:

$$M_{CA} = \sigma\left(\mathrm{Conv}_{1 \times 1}\left(\mathrm{GELU}\left(\mathrm{Conv}_{1 \times 1}\left(\mathrm{GAP}(F)\right)\right)\right)\right).$$

After computing the three types of attention weights, namely spatial attention $M_{SA}$, dynamic context-aware attention $M_{DCA}$, and channel attention $M_{CA}$, these attention maps are concatenated and passed through a Multi-Layer Perceptron (MLP) for nonlinear transformation and dimensionality reduction. The output of the MLP is projected to match the original feature dimension of the input $F$, enabling the extraction of deeper semantic representations. The final output of the module is obtained by adding the MLP-processed attention feature to the original input via a residual connection, which not only enhances feature expressiveness but also mitigates potential loss of information.

3.5. Loss Function

To supervise the discrepancy between the model output and the ground truth image—and more importantly—to guide the network in accurately restoring image details such as texture, edges, color, and structural content, this study adopts the L1 loss function for parameter optimization.

Compared with the L2 loss, the L1 loss is more stable and more sensitive to edge and luminance variations, making it particularly suitable for recovering overall brightness and haze-affected regions in dehazed images. By computing the absolute pixel-wise difference between the predicted image and the ground truth, it penalizes every error in proportion to its magnitude rather than its square. As a result, the L1 loss remains responsive to small errors while maintaining robustness to outliers, which helps preserve sharp structures such as edges. The loss function can be expressed as follows:

$$L_{1} = \frac{1}{N}\sum_{i=1}^{N}\left| I_{pred}(i) - I_{gt}(i) \right|,$$

where $I_{pred}(i)$ is the pixel value of the predicted dehazed image, $I_{gt}(i)$ is the corresponding ground truth pixel, and $N$ is the total number of pixels.
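The L1 loss is the mean absolute pixel-wise difference, which is a one-line computation. The sketch below uses a small hand-made example (the pixel values are arbitrary) and also computes the mean squared error for comparison.

```python
import numpy as np

def l1_loss(pred, gt):
    """Mean absolute error over all N pixels."""
    return np.mean(np.abs(pred - gt))

def l2_loss(pred, gt):
    """Mean squared error, shown only for comparison."""
    return np.mean((pred - gt) ** 2)

pred = np.array([[0.5, 0.2], [0.1, 1.0]])
gt = np.array([[0.0, 0.2], [0.3, 0.5]])

loss_l1 = l1_loss(pred, gt)   # (0.5 + 0.0 + 0.2 + 0.5) / 4
loss_l2 = l2_loss(pred, gt)   # (0.25 + 0.0 + 0.04 + 0.25) / 4
```

In this example the two errors of 0.5 contribute 93% of the squared-error total but only 83% of the absolute-error total, which illustrates why the L1 loss is less dominated by large deviations.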

4. Experiments

4.1. Implementation Details

Due to hardware limitations, all experiments in this work were carried out on a single NVIDIA RTX 4070 Ti GPU. The training image size was set to 256 × 256 pixels, with a batch size of 16. The initial learning rate was set to $1 \times 10^{-4}$, and the model was trained for 300 epochs. The Adam optimizer was employed together with a cosine annealing scheduler that gradually decreases the learning rate to $1 \times 10^{-6}$.
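For reference, the learning-rate schedule implied by these settings can be sketched with the standard closed-form cosine annealing rule. Updating the rate once per epoch and using no warm-up or restarts are assumptions; the paper states only the initial rate, the final rate, and the number of epochs.

```python
import math

LR_MAX = 1e-4    # initial learning rate
LR_MIN = 1e-6    # final learning rate
EPOCHS = 300     # total training epochs

def cosine_lr(epoch, lr_max=LR_MAX, lr_min=LR_MIN, total=EPOCHS):
    """Closed-form cosine annealing from lr_max (epoch 0) to lr_min (epoch total)."""
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * epoch / total))

schedule = [cosine_lr(e) for e in range(EPOCHS + 1)]
```

The rate starts at 1 × 10⁻⁴, passes through 5.05 × 10⁻⁵ at the midpoint (epoch 150), and reaches 1 × 10⁻⁶ at epoch 300, decreasing monotonically throughout.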

Since the network modules in this study are designed for application in a graphite beneficiation workshop, the training process requires paired dusty (hazy) and dust-free (clear) images from the workshop environment. However, collecting such paired images is challenging. To verify the superiority of the proposed IGW model, we first perform pretraining on the widely recognized RESIDE dataset, which is extensively used in image dehazing research. The RESIDE dataset comprises an indoor training set (ITS) with 13,990 image pairs, an outdoor training set (OTS) with 313,950 image pairs, and a Synthetic Objective Testing Set (SOTS) consisting of 500 indoor and 500 outdoor image pairs. This dataset is a generic benchmark that is easy to acquire and can simulate various complex hazy conditions. Its large scale effectively enhances the model’s generalization capability in diverse environments.

To enable the proposed network model to perform effectively in the graphite beneficiation workshop scenario, this study further collected color images captured by a camera in randomly selected graphite mine workshop environments. During acquisition, sufficient illumination was ensured, and uniform-color or blank images were avoided. All captured images were preprocessed to a uniform resolution. Subsequently, synthetic haze of varying densities was applied to these images using haze synthesis software. Corresponding annotations were generated for the paired clear and synthetic hazy images, resulting in a custom dehazing dataset tailored for the graphite workshop environment.

Quantitative evaluation of the proposed image dehazing algorithm is conducted using Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index Measure (SSIM) on the RESIDE image dehazing dataset. These metrics are widely used in the field of computer vision for assessing image quality. PSNR is defined as follows:

$$\mathrm{MSE} = \frac{1}{mn}\sum_{i=1}^{m}\sum_{j=1}^{n}\left[I(i,j) - K(i,j)\right]^2, \qquad \mathrm{PSNR} = 10 \log_{10}\left(\frac{MAX_I^2}{\mathrm{MSE}}\right).$$

The mean squared error (MSE) quantifies the difference between images, where $I(i,j)$ and $K(i,j)$ are the pixel values at position $(i,j)$ of the original and dehazed images, respectively, $m \times n$ is the image resolution, and $MAX_I$ denotes the maximum possible pixel value.
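PSNR follows directly from the mean squared error. The sketch below assumes 8-bit images, so the maximum pixel value defaults to 255; the function name and the toy images are illustrative.

```python
import math
import numpy as np

def psnr(reference, restored, max_val=255.0):
    """Peak Signal-to-Noise Ratio in dB; returns inf for identical images."""
    # Cast to float first so that uint8 subtraction cannot wrap around.
    diff = reference.astype(np.float64) - restored.astype(np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0.0:
        return math.inf
    return 10.0 * math.log10(max_val ** 2 / mse)

clear = np.full((4, 4), 100, dtype=np.uint8)
dehazed = np.full((4, 4), 105, dtype=np.uint8)   # uniform error of 5 gray levels
value = psnr(clear, dehazed)                     # MSE = 25
```

A uniform error of 5 gray levels gives an MSE of 25 and therefore a PSNR of 10·log₁₀(255² / 25), which is about 34.15 dB.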

PSNR effectively captures pixel-level differences between the original and restored images and offers fast computation. SSIM, on the other hand, is defined as follows:

$$\mathrm{SSIM}(x, y) = l(x, y) \cdot c(x, y) \cdot s(x, y),$$

where $l(x, y)$, $c(x, y)$, and $s(x, y)$ denote the luminance, contrast, and structural similarities between images $x$ and $y$, respectively.

SSIM aligns more closely with human visual perception and is particularly sensitive to structural distortions such as edge blurring and texture loss. Unlike PSNR, it considers both local and global structural information, providing a more comprehensive evaluation of visual quality.
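The three SSIM components can be illustrated with a simplified, single-window version that uses global image statistics. This is an assumption-laden sketch: standard SSIM implementations compute these terms in local sliding windows and average the results, and the stabilizing constants below (K1 = 0.01, K2 = 0.03, C3 = C2 / 2) follow the commonly used defaults rather than anything stated in the paper.

```python
import numpy as np

def ssim_global(x, y, max_val=255.0, k1=0.01, k2=0.03):
    """Single-window SSIM = luminance * contrast * structure."""
    x = x.astype(np.float64)
    y = y.astype(np.float64)
    c1 = (k1 * max_val) ** 2
    c2 = (k2 * max_val) ** 2
    c3 = c2 / 2.0
    mu_x, mu_y = x.mean(), y.mean()
    sd_x, sd_y = x.std(), y.std()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    luminance = (2 * mu_x * mu_y + c1) / (mu_x ** 2 + mu_y ** 2 + c1)
    contrast = (2 * sd_x * sd_y + c2) / (sd_x ** 2 + sd_y ** 2 + c2)
    structure = (cov_xy + c3) / (sd_x * sd_y + c3)
    return luminance * contrast * structure

rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(32, 32)).astype(np.uint8)
same = ssim_global(img, img)
inverted = ssim_global(img, 255 - img)
```

An image compared with itself scores 1, while its inverted copy scores far lower because the structure term becomes negative even though the contrast is unchanged.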

To assess the practical applicability of the proposed algorithm, this study evaluates model complexity and computational efficiency using the total number of parameters (Params) and floating-point operations (FLOPs), respectively. The Params metric reflects the total count of learnable weights and biases within a model. A larger number of parameters generally allows the model to capture more complex mappings, but it also increases the storage requirements, which is particularly relevant for deployment on mobile or embedded platforms. FLOPs, on the other hand, quantify the number of floating-point operations executed during inference. Higher FLOPs typically lead to slower inference, which may be detrimental in scenarios with strict real-time processing constraints.

4.2. The Method for Synthesizing Hazy Image Datasets

Since it is difficult to obtain perfectly corresponding haze-free and hazy images in graphite workshops, the image dataset in this study was generated by synthetically adding haze to the images. The synthetic hazy image dataset used in this experiment was generated using a Python (https://www.python.org/ (accessed on 1 August 2025)) + OpenCV-based haze simulation script, which implements the classical atmospheric scattering model:

$$I(x) = J(x)\,t(x) + A\left(1 - t(x)\right)$$

where $I(x)$ is the observed hazy image, $J(x)$ is the haze-free image, $t(x)$ is the transmission map, and $A$ stands for the overall atmospheric light intensity.

Given that the lighting conditions in the graphite mineral processing plant are artificially controlled, $A$ is set to a fixed, bright constant value (220 in this experiment) to better reflect the real-world application scenario.

The transmission map is modeled as follows:

$$t(x) = e^{-\beta d(x)}$$

where $\beta$ denotes the haze concentration, and $d(x)$ represents the scene depth, which is approximated using a linear depth map in the simulation script.

Because dust concentration varies across the processing stages in the graphite plant, it is difficult to determine an accurate value for $\beta$. Thus, the value of $\beta$ is empirically assigned based on the plant location. For ventilated conveyor belt areas, $\beta$ is set to around 0.8–1.2. In more enclosed grinding and screening zones, $\beta$ ranges from 1.5 to 2.5. In this study, to enhance the robustness of the dehazing model, three levels of haze intensity were simulated using $\beta$ values of 1.0 (light haze), 1.8 (moderate haze), and 2.5 (dense haze). Regarding $d(x)$, since surveillance cameras are typically mounted at the top of the workshop and the dehazing focus is primarily on conveyor belt areas, the simulated depth map is designed such that the center (conveyor region) is closer, while the surroundings and background are farther, simulating occlusions. This leads to haze being concentrated near the belt and in the lower-middle part of the image, with a gradual fade toward the upper areas.

Finally, to ensure efficiency in image generation and consistency for downstream tasks, all synthetic hazy images were resized to a resolution of 256 × 256 pixels, making them suitable for model training and evaluation.
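The synthesis procedure can be sketched in a few lines of NumPy. This is an illustrative reconstruction under stated assumptions, not the authors' script: the atmospheric light (220) and the three haze concentrations (1.0, 1.8, 2.5) come from the text, whereas the function names and the radial depth map (depth growing linearly with distance from the image center and normalized to [0, 1]) are hypothetical choices. Resizing to 256 × 256 is omitted, and the input is assumed to be an 8-bit color image.

```python
import numpy as np

ATMOSPHERIC_LIGHT = 220.0  # fixed bright constant, as stated in the text
HAZE_LEVELS = {"light": 1.0, "moderate": 1.8, "dense": 2.5}


def center_near_depth(height: int, width: int) -> np.ndarray:
    """Hypothetical depth map in [0, 1]: nearest at the center, farthest at corners."""
    ys, xs = np.mgrid[0:height, 0:width]
    cy, cx = (height - 1) / 2.0, (width - 1) / 2.0
    dist = np.hypot(ys - cy, xs - cx)
    max_dist = dist.max()
    return dist / max_dist if max_dist > 0 else dist


def add_haze(clear: np.ndarray, beta: float, depth: np.ndarray,
             atmospheric_light: float = ATMOSPHERIC_LIGHT) -> np.ndarray:
    """Apply the atmospheric scattering model to an H x W x 3 uint8 image."""
    transmission = np.exp(-beta * depth)[..., np.newaxis]  # t = exp(-beta * d)
    hazy = clear.astype(np.float64) * transmission + atmospheric_light * (1.0 - transmission)
    return np.clip(hazy, 0, 255).round().astype(np.uint8)


# One clear image yields three hazy counterparts, one per haze level.
clear = np.full((256, 256, 3), 60, dtype=np.uint8)  # flat grey stand-in image
depth = center_near_depth(256, 256)
hazy_images = {name: add_haze(clear, beta, depth) for name, beta in HAZE_LEVELS.items()}
for name, image in hazy_images.items():
    print(name, image.shape, int(image[0, 0, 0]))
```

With this depth map the corner pixels, which are farthest from the camera, move closest to the atmospheric light value as the haze concentration increases, while pixels near the center stay close to the clear image.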

Using the above method, each image from the graphite mineral processing plant can be used to synthesize three corresponding hazy images, resulting in three pairs of clear and hazy images. This enables the rapid construction of a dehazing dataset that effectively meets the requirements for training the proposed model. The final effect is presented in Figure 9.

Figure 9.

Haze synthesis results with increasing fog density from left to right, corresponding to low, medium, and high levels of atmospheric scattering.

4.3. Experimental Results

To evaluate the dehazing performance of the proposed IGWDehaze-Net algorithm, multiple representative methods are selected for comparison. The experiments are conducted on the RESIDE dataset, a popular dataset in image dehazing studies. The dataset consists of several components, including the ITS (Indoor Training Set), the OTS (Outdoor Training Set), and the SOTS (Standard Objective Testing Set). Specifically, ITS contains 13,990 image pairs, OTS contains 313,950 image pairs, and SOTS includes 500 indoor pairs and 500 outdoor pairs, denoted as SOTS-IN and SOTS-OUT, respectively.

As a general-purpose dataset, RESIDE is easy to access and capable of simulating various complex hazy conditions. Its large volume significantly enhances the model’s adaptability to diverse environments. In this study, 12,800 image pairs are randomly selected from ITS for training and 500 pairs from SOTS-IN for testing. Similarly, 12,800 pairs are selected from OTS for training and 500 pairs from SOTS-OUT for testing.

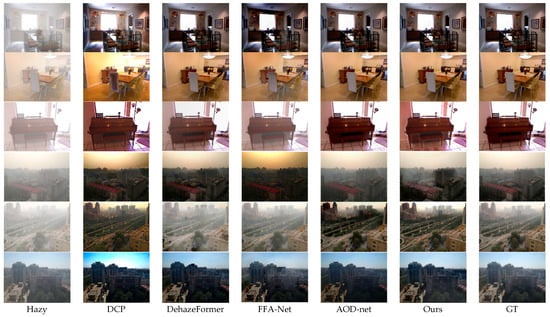

Visual inspection of Figure 10 shows that the prior-based DCP algorithm exhibits obvious overexposure in bright regions of the image. For the deep learning-based methods, the differences are subtle to the naked eye, and all achieve relatively good dehazing results.

Figure 10.

Qualitative comparisons on RESIDE dataset. Rows 1–3 correspond to SOTS-IN, and rows 4–6 correspond to SOTS-OUT.

The quantitative comparison in Table 1 further shows that IGWDehaze-Net offers clear advantages in both dehazing performance and model efficiency over existing mainstream methods. On the SOTS-IN test set, IGWDehaze-Net attains a PSNR of 37.72 dB and an SSIM of 0.994, approaching the current state-of-the-art level. Compared with traditional prior-based methods such as DCP and earlier deep networks such as AOD-Net and DehazeNet, IGWDehaze-Net delivers a substantial improvement in image quality, reflecting advances in feature representation and restoration ability due to its deep architecture.

Table 1.

Comparison of different methods on the RESIDE datasets.

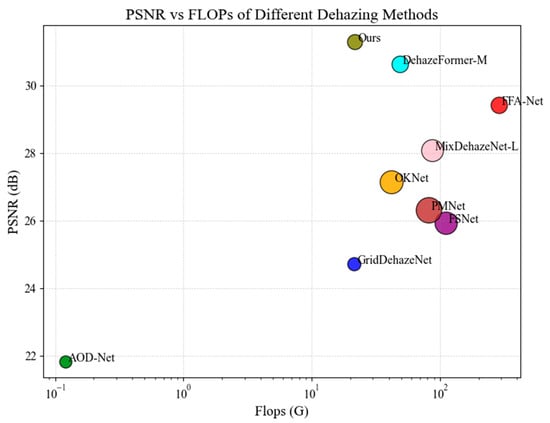

Against widely adopted representative deep learning methods including GridDehazeNet, FFA-Net, OKNet, and PMNet, IGWDehaze-Net also demonstrates clear advantages in both PSNR and SSIM metrics. Although PMNet and FSNet achieve relatively high scores in some metrics, their parameter counts (18.85 M and 13.28 M, respectively) are much larger than IGWDehaze-Net’s 2.81 M. Their computational costs (FLOPs of 81 G and 111 G) are also significantly higher, making them unsuitable for resource-constrained scenarios. In contrast, IGWDehaze-Net maintains high performance with only 21.74 G FLOPs, substantially lower than FFA-Net (287.8 G) and MixDehazeNet-L (86.70 G), thus significantly reducing computational overhead while ensuring effective dehazing. This results in higher efficiency and better deployment suitability.
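The efficiency gap quoted above can be expressed as simple ratios. The short script below uses only the parameter counts and FLOPs stated in the preceding paragraph; the dictionary layout and the helper `ratio_to_ours` are illustrative choices, and methods whose parameter counts are not quoted in the text are left out of the parameter comparison.

```python
OURS_PARAMS_M = 2.81   # IGWDehaze-Net parameters, in millions
OURS_FLOPS_G = 21.74   # IGWDehaze-Net FLOPs, in G

params_m = {"PMNet": 18.85, "FSNet": 13.28}
flops_g = {"PMNet": 81.0, "FSNet": 111.0, "FFA-Net": 287.8, "MixDehazeNet-L": 86.70}


def ratio_to_ours(values: dict, ours: float) -> dict:
    """Return how many times larger each value is than the proposed model's."""
    return {name: round(value / ours, 2) for name, value in values.items()}


print(ratio_to_ours(params_m, OURS_PARAMS_M))
print(ratio_to_ours(flops_g, OURS_FLOPS_G))
```

By these figures, PMNet and FSNet carry roughly 6.7 and 4.7 times as many parameters as IGWDehaze-Net, and FFA-Net requires about 13 times as many FLOPs.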

Even compared to the recent Transformer-based DehazeFormer-M, IGWDehaze-Net achieves comparable PSNR and SSIM but with fewer parameters and FLOPs, highlighting its unique advantages in structural design and feature fusion strategy. Therefore, IGWDehaze-Net strikes a balance between accuracy and lightweight design among current mainstream methods, making it especially suitable for industrial scenarios or applications with stringent real-time requirements.

Subsequently, this study conducted evaluations on a synthesized haze dataset specifically designed for graphite beneficiation workshops. The dataset comprises 200 paired images, which were split into training and validation sets at a ratio of 3:1. The same dehazing algorithms mentioned earlier were applied to this dataset for comparative experiments. The results are summarized as follows.
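A 3:1 split of 200 pairs yields 150 training pairs and 50 validation pairs. The sketch below shows one reproducible way to perform such a split; the function `split_pairs`, the integer pair identifiers, and the fixed seed are assumptions made for illustration, since the paper does not state how the split was drawn.

```python
import random


def split_pairs(pair_ids, train_parts: int = 3, val_parts: int = 1, seed: int = 0):
    """Shuffle pair identifiers and split them at a train:val ratio."""
    ids = list(pair_ids)
    random.Random(seed).shuffle(ids)  # local RNG keeps the split reproducible
    n_train = len(ids) * train_parts // (train_parts + val_parts)
    return ids[:n_train], ids[n_train:]


train_ids, val_ids = split_pairs(range(200))
print(len(train_ids), len(val_ids))  # 150 50
```

If several hazy versions are derived from the same clear image, it may be preferable to split by source image rather than by pair, so that the same scene does not appear in both subsets.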

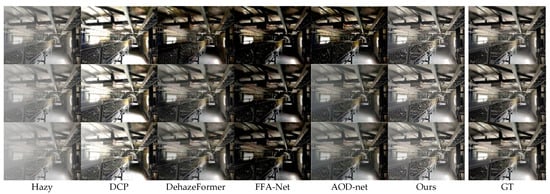

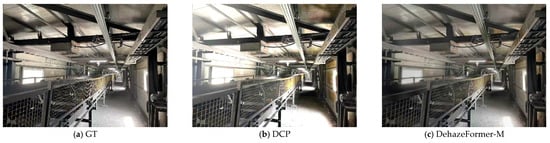

The plot in Figure 11, the visualization of the three haze concentrations in Figure 12, and the detail enlargement in Figure 13 together support the following observations.

The traditional DCP algorithm offers good interpretability and requires no training, but it tends to suffer from transmission estimation errors under non-uniform haze and strong illumination, which leads to over-dehazing in bright areas and blurred structural details. As a typical prior-based method, DCP uses the dark channel prior to estimate haze and achieves basic enhancement under light haze. In medium and dense haze scenarios, however, it does not model depth and brightness variations, so it often produces grayscale shifts, structure blurring, or even halo artifacts, indicating insufficient dehazing capability.

DehazeFormer-M, based on the Transformer architecture, demonstrates strong global modeling and contextual feature fusion abilities, achieving superior performance across all haze levels, especially in structural recovery and color restoration under heavy haze. However, its large number of parameters and computational overhead limit its deployment on edge devices and in real-time applications.

OKNet incorporates multi-scale and structure-aware modules, enabling it to model mid-level structures effectively. It performs well under light and medium haze, recovering clear contours and color details efficiently, and offers faster inference suitable for practical deployment. In dense haze scenes, however, its lack of long-range context modeling limits its ability to reconstruct heavily obscured structures, often resulting in residual haze or loss of fine details.

AOD-Net, one of the earlier lightweight deep learning-based dehazing models, employs an end-to-end mapping mechanism for feature reconstruction. It shows noticeable improvements in light haze scenarios and produces natural-looking outputs under some moderate haze conditions. However, due to its compact structure and limited feature representation capacity, it struggles in dense haze, often yielding blurred edges and overly smoothed structures. Its performance is stable, but the degree of haze removal it achieves remains limited.

Figure 11.

Comparison of IGWDehaze-Net (Ours) with other image dehazing methods on the synthetic haze dataset from the graphite beneficiation workshop. The size of each dot indicates the number of parameters of the method, and FLOPs are shown on a logarithmic scale.

Figure 12.

Qualitative comparisons on the synthetic haze dataset. From top to bottom, the haze concentration increases from low to medium to high.

Figure 13.

A qualitative comparison of multiple dehazing methods with the ground truth images (GT) was performed on the synthetic haze dataset of the graphite beneficiation workshop under equal haze concentration levels.

The proposed IGWDehaze-Net, built on a lightweight design, integrates Instance Normalization to enhance normalization robustness, uses the DSC module to capture global contextual structures through GCP and to fuse local details via LAA, and incorporates a multi-attention feature fusion (MAFF) mechanism to improve the model’s responsiveness to haze distribution and critical image regions. The model performs well across light, medium, and heavy haze concentrations: it produces naturally colored, well-contrasted images under light haze and preserves edge structures and restores texture under medium and heavy haze, while maintaining a low parameter count and inference cost. This balance between performance and efficiency makes it particularly suitable for practical deployment in complex industrial workshop environments.

As shown in Table 2, it can be observed that IGWDehaze-Net effectively addresses the deficiencies of traditional prior-based methods and certain deep networks in restoring structures and edges under high haze density, achieving more balanced and stable performance across multiple haze levels. Specifically, under light, medium, and heavy haze conditions, IGWDehaze-Net achieves PSNR values of 32.84 dB, 30.35 dB, and 28.38 dB and SSIM values of 0.933, 0.928, and 0.911, respectively. It comprehensively outperforms other comparative methods, delivering more natural subjective visual effects with complete details, smooth edges, and virtually no noticeable artifacts or haze residues.

Table 2.

Comparison of different methods on the Synthetic dataset.

4.4. Ablation Study

In this study, to validate the effectiveness of the key components in the proposed image dehazing module, a series of ablation experiments were designed. The primary objective of these experiments is to quantify the contribution of each module to the overall dehazing performance by progressively adding or removing different structural components, thereby verifying the rationality and necessity of the design. The experiments were conducted on a standard synthetic image dehazing dataset, with Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index (SSIM) used as evaluation metrics.

First, a baseline network without attention mechanisms or structural innovations, denoted as “B,” was constructed. This baseline model employs a standard five-stage U-Net backbone with conventional convolutional encoder-decoder architecture for feature extraction in image dehazing. Based on this baseline, Instance Normalization (IN) and the Multi-Attention Feature Fusion module (MAFF) were added separately to observe the performance changes after each component’s inclusion. Subsequently, the conventional convolutional encoder-decoder in “B” was replaced with the proposed DSC module. On top of the DSC module, IN and MAFF were also added individually for comparative experiments to evaluate the impact of these components on overall performance.

Table 3 shows that the baseline model with a conventional convolutional encoder-decoder structure (denoted as B) achieves a PSNR of only 17.23 dB and an SSIM of 0.732, indicating significant shortcomings in image restoration quality and structural preservation. Adding the Instance Normalization (IN) module to baseline B improves the PSNR to 18.74 dB and the SSIM to 0.757, demonstrating that IN helps normalize feature distributions effectively. Introducing the Multi-Attention Feature Fusion (MAFF) module into the baseline instead improves performance more markedly, with the PSNR rising to 22.37 dB and the SSIM reaching 0.819, indicating MAFF’s strong ability to enhance key feature representation. When both IN and MAFF are added, performance improves further, with the PSNR reaching 24.23 dB and the SSIM increasing to 0.885, reflecting their synergistic effect.

Replacing the baseline encoder-decoder with the DSC module already yields strong performance even without any attention or normalization, achieving a PSNR of 26.58 dB and an SSIM of 0.824, which demonstrates the DSC module’s stronger feature extraction capability. Adding the IN module on top of DSC leads to a moderate yet effective gain, with the PSNR rising to 27.49 dB and the SSIM to 0.841. Combining DSC with MAFF results in a larger jump, with the PSNR reaching 29.86 dB and the SSIM 0.903, showing that the attention mechanism can further focus on critical regions within high-quality features. Finally, integrating both IN and MAFF into DSC achieves the highest performance, with a PSNR of 30.71 dB and an SSIM of 0.918, indicating that normalization combined with multi-attention feature fusion on a highly expressive feature backbone yields the best image reconstruction among the tested variants.

These results demonstrate that the IN and MAFF modules are both general and complementary, especially when paired with a powerful feature extractor such as DSC, where they produce synergistic enhancements and provide strong support for constructing high-performance image dehazing networks.
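The ablation figures quoted above can be collected and compared programmatically. The snippet below transcribes the eight PSNR and SSIM pairs from the text and computes PSNR gains between variants; the variant labels and the helper `psnr_gain` are illustrative names rather than identifiers from the authors' code.

```python
# (PSNR in dB, SSIM) for each ablation variant, as quoted in the text.
ablation = {
    "B": (17.23, 0.732),
    "B+IN": (18.74, 0.757),
    "B+MAFF": (22.37, 0.819),
    "B+IN+MAFF": (24.23, 0.885),
    "DSC": (26.58, 0.824),
    "DSC+IN": (27.49, 0.841),
    "DSC+MAFF": (29.86, 0.903),
    "DSC+IN+MAFF": (30.71, 0.918),
}


def psnr_gain(variant: str, reference: str) -> float:
    """PSNR improvement (dB) of one variant over a reference variant."""
    return round(ablation[variant][0] - ablation[reference][0], 2)


print(psnr_gain("DSC", "B"))             # 9.35
print(psnr_gain("DSC+IN+MAFF", "DSC"))   # 4.13
print(psnr_gain("DSC+IN+MAFF", "B"))     # 13.48
```

Read this way, swapping the backbone for DSC accounts for the largest single PSNR gain (9.35 dB), and adding IN and MAFF on top contributes a further 4.13 dB.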

Table 3.

Ablation Study.

In summary, the ablation study clearly demonstrates the significant roles of each module within the IGWDehaze Block for image dehazing, with every component contributing positively to the overall performance improvement. Through systematic comparative experiments, this study validates both the structural effectiveness and practical feasibility of the proposed modules.

5. Conclusions

This paper proposes a lightweight IGWDehaze-Net image dehazing algorithm tailored for graphite beneficiation workshops, addressing the issue where complex workshop environments and process-induced dust haze interfere with monitoring and intelligent equipment. The proposed DSC module effectively extracts image features by combining global and local information, while the multi-attention feature fusion module allocates feature weights finely and rationally, further enhancing the model’s focus on diverse feature types and saving computational resources. Experimental results demonstrate that the proposed method achieves satisfactory dehazing performance with lower computational cost compared to mainstream dehazing algorithms on both public datasets and synthetic haze image datasets. Ablation studies further validate the contribution of each module to overall model performance. However, practical dehazing results in graphite workshops indicate that due to limited data volume, the model still struggles with incomplete haze removal under high haze density conditions. Future work will focus on further optimizing the model architecture, improving computational efficiency and algorithm robustness, and aiming to achieve robust performance across a broader range of real-world applications, such as other industrial and agricultural scenarios.

Author Contributions

Conceptualization, S.L. and Z.Q.; methodology, Z.Q.; software, S.L.; validation, S.L. and Z.Q.; resources, S.L.; data curation, S.L. and Z.Q.; writing—original draft preparation, S.L.; writing—review and editing, S.L.; visualization, S.L.; supervision, X.H. and Z.Q.; project administration, X.H.; funding acquisition, X.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key Research and Development Program of China, grant number 2020YFB1713700.

Institutional Review Board Statement

Not applicable. This study did not involve humans or animals.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data supporting the results of this study are available from the corresponding author upon request.

Acknowledgments

The authors would like to express their sincere gratitude to China Minmetals Corporation Graphite Industry Co., Ltd. for generously providing the site access and mineral raw materials that supported this research.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. In Proceedings of the 2009 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops (CVPR Workshops), Miami, FL, USA, 20–25 June 2009; pp. 1956–1963.

- Fattal, R. Dehazing using color-lines. ACM Trans. Graph. 2014, 34, 1–14.

- Tan, R.T. Visibility in bad weather from a single image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

- Long, X.; Dai, X. Research and Implementation of Image Dehazing Application with Controllable Haze Density Based on Dark Channel. In Proceedings of the 2022 3rd International Conference on Computer Science and Management Technology (ICCSMT), Shanghai, China, 18–20 November 2022; pp. 90–93.

- Huang, Y.Q.; Ding, W.R.; Li, H.G. A defogging method for UAV reconnaissance images based on image enhancement. J. Beijing Univ. Aeronaut. Astronaut. 2017, 43, 592–601.

- Song, L.; Feng, F.; Li, X. Improved dark channel dehazing algorithm for coal mine underground images based on adaptive atmospheric light compensation (Invited). Acta Photonica Sin. 2024, 53, 102–113.

- Li, B.; Peng, X.; Wang, Z.; Xu, J.; Feng, D. AOD-Net: All-in-One Dehazing Network. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 4780–4788.

- Deng, Z.; Zhu, L.; Hu, X.; Fu, C.-W.; Xu, X.; Zhang, Q.; Qin, J.; Heng, P.-A. Deep Multi-Model Fusion for Single-Image Dehazing. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 2453–2462.

- Malav, R.; Pandey, G.; Kim, A. DehazeGAN: Underwater Haze Image Restoration using Unpaired Image-to-image Translation. IFAC-PapersOnLine 2019, 52, 82–85.

- Qin, X.; Wang, Z.; Bai, Y.; Xie, X.; Jia, H. FFA-Net: Feature fusion attention network for single image dehazing. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11908–11915.

- Galdran, A.; Alvarez-Gila, A.; Bria, A.; Vazquez-Corral, J.; Bertalmío, M. On the duality between Retinex and image dehazing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 8212–8221.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022.

- Luo, P.; Xiao, G.; Gao, X.; Wu, S. LKD-Net: Large Kernel Convolution Network for Single Image Dehazing. In Proceedings of the 2023 IEEE International Conference on Multimedia and Expo (ICME), Brisbane, Australia, 10–14 July 2023; pp. 1601–1606.

- Cai, B.; Xu, X.; Jia, K.; Qing, C.; Tao, D. DehazeNet: An end-to-end system for single image haze removal. IEEE Trans. Image Process. 2016, 25, 5187–5198.

- Luo, J.; Bu, Q.; Zhang, L.; Feng, J. Global Feature Fusion Attention Network For Single Image Dehazing. In Proceedings of the 2021 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Shenzhen, China, 5–9 July 2021; pp. 1–6.

- Boxin, C.; Xu, X.; Jia, K. GridDehazeNet: Attention-Based Multi-Scale Network for Image Dehazing. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 10042–10051.

- Song, Y.; He, Z.; Qian, H.; Du, X. Vision Transformers for Single Image Dehazing. IEEE Trans. Image Process. 2023, 32, 1927–1941.

- Lu, L.P.; Chu, D.F.; Xiong, Q.; Xu, B.R. MixDehazeNet: Mix Structure Block For Image Dehazing Network. arXiv 2023, arXiv:2305.17654v1.

- Liu, Y.; Zhu, L.; Pei, S.; Fu, H.; Qin, J.; Zhang, Q.; Wan, L.; Feng, W. From Synthetic to Real: Image Dehazing Collaborating with Unlabeled Real Data. In Proceedings of the 29th ACM International Conference on Multimedia, Chengdu, China, 20–24 October 2021.

- Li, H.; Liang, J.; Li, W.; Wu, W. FSNet: Frequency Domain Guided Superpixel Segmentation Network for Complex Scenes. In Proceedings of the 31st ACM International Conference on Multimedia, Nice, France, 21 October 2019.

- Ye, T.; Zhang, Y.; Jiang, M.; Chen, L.; Liu, Y.; Chen, S.; Chen, E. Perceiving and modeling density for image dehazing. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 130–145.

- Wang, Y.; Yan, X.; Wang, F.L.; Xie, H.; Yang, W.; Zhang, X.-P.; Qin, J.; Wei, M. UCL-Dehaze: Toward Real-World Image Dehazing via Unsupervised Contrastive Learning. IEEE Trans. Image Process. 2024, 33, 1361–1374.

- Cui, Y.; Ren, W.; Knoll, A. Omni-Kernel Network for Image Restoration. In Proceedings of the Thirty-Eighth AAAI Conference on Artificial Intelligence (AAAI-24), Vancouver, BC, Canada, 20–27 February 2024; pp. 1426–1432.

- Gondal, M.W.; Schölkopf, B.; Hirsch, M. The unreasonable effectiveness of texture transfer for single image super-resolution. In Proceedings of the Computer Vision–ECCV 2018 Workshops, Munich, Germany, 8–14 September 2018; Springer: Berlin/Heidelberg, Germany, 2019; pp. 80–97.

- Chan, K.C.; Wang, X.; Xu, X.; Gu, J.; Loy, C.C. Glean: Generative latent bank for large-factor image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 14245–14254.

- Wang, X.; Li, Y.; Zhang, H.; Shan, Y. Towards real-world blind face restoration with generative facial prior. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 9168–9178.

- Agustsson, E.; Timofte, R. Ntire 2017 challenge on single image super-resolution: Dataset and study. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 126–135.

- Mittal, A.; Moorthy, A.K.; Bovik, A.C. No-reference image quality assessment in the spatial domain. IEEE Trans. Image Process. 2012, 21, 4695–4708.

- Ren, W.; Liu, S.; Zhang, H.; Pan, J.; Cao, X.; Yang, M.H. Single image dehazing via multi-scale convolutional neural networks. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 154–169.

- Dong, H.; Pan, J.; Xiang, L.; Hu, Z.; Zhang, X.; Wang, F.; Yang, M.H. Multi-scale boosted dehazing network with dense feature fusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2020, Seattle, WA, USA, 13–19 June 2020; pp. 2157–2167.

- Wang, A.; Chen, H.; Lin, Z.; Han, J.; Ding, G. LSNet: See Large, Focus Small. arXiv 2025, arXiv:2503.23135.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).