Multimodal Emotion Recognition for Seafarers: A Framework Integrating Improved D-S Theory and Calibration: A Case Study of a Real Navigation Experiment

Abstract

1. Introduction

2. Related Work

2.1. Emotion Recognition of Seafarers

2.2. Multi-Model Information Fusion

3. Methodology

- Acquire and preprocess the data, and determine the identification framework for seafarer emotion recognition.

- Train multiple machine learning models as preliminary evidence. From their preliminary recognition results, select the three models with the highest accuracy as candidate evidence for fusion.

- Calibrate the probabilities of the selected candidate evidence: sigmoid calibration for SVM and RF, and softmax temperature scaling for MLP.

- For each test instance, construct the basic probability assignment (BPA) from the calibrated probability output, and compute the weight coefficient of each piece of evidence using the evidence distance formula.

- Apply the weight coefficients to the preliminary prediction results of each test instance, and synthesize the final result.
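The calibration step in the workflow above can be illustrated with temperature scaling, the method applied to the MLP. The following is a minimal sketch rather than the authors' implementation: it assumes that held-out logits and labels are available, and it fits a single temperature `T` by grid search on the negative log-likelihood. The helper names (`softmax`, `nll`, `fit_temperature`), the search grid, and the toy data are all hypothetical. Sigmoid calibration for SVM and RF is not shown.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax of logits / T, stabilized by subtracting the maximum."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def nll(logit_rows, labels, temperature):
    """Mean negative log-likelihood of the true labels at temperature T."""
    loss = 0.0
    for logits, label in zip(logit_rows, labels):
        loss -= math.log(softmax(logits, temperature)[label])
    return loss / len(labels)


def fit_temperature(logit_rows, labels, grid=None):
    """Choose T from a grid by minimizing the held-out NLL (assumed search strategy)."""
    if grid is None:
        grid = [0.25 + 0.05 * k for k in range(96)]  # 0.25 ... 5.00
    return min(grid, key=lambda t: nll(logit_rows, labels, t))


# Hypothetical held-out logits from an overconfident two-class model.
val_logits = [[4.0, 0.0], [3.5, 0.0], [0.0, 4.2],
              [3.8, 0.0], [0.0, 3.9], [4.1, 0.0]]
val_labels = [0, 0, 1, 1, 1, 0]  # the fourth instance is confidently wrong

T = fit_temperature(val_logits, val_labels)
uncalibrated = softmax([4.0, 0.0])
calibrated = softmax([4.0, 0.0], T)
print(f"T = {T:.2f}, confidence {uncalibrated[0]:.3f} -> {calibrated[0]:.3f}")
```

Because dividing the logits by a positive `T` preserves their order, the predicted class is unchanged; only the confidence is softened (`T > 1`) or sharpened (`T < 1`).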

3.1. Construction of Machine Learning Models

3.2. The Training of the Models

3.3. Classifier Calibration

3.3.1. Calibration Method

3.3.2. Metric for Calibration

3.4. Improved D-S Weight Fusion Strategy

4. Experimental Setup and Data

4.1. Participants

4.2. Experimental Apparatus and Technique

4.2.1. EEG Equipment

4.2.2. Participant Self-Assessment

4.3. Experimental Design

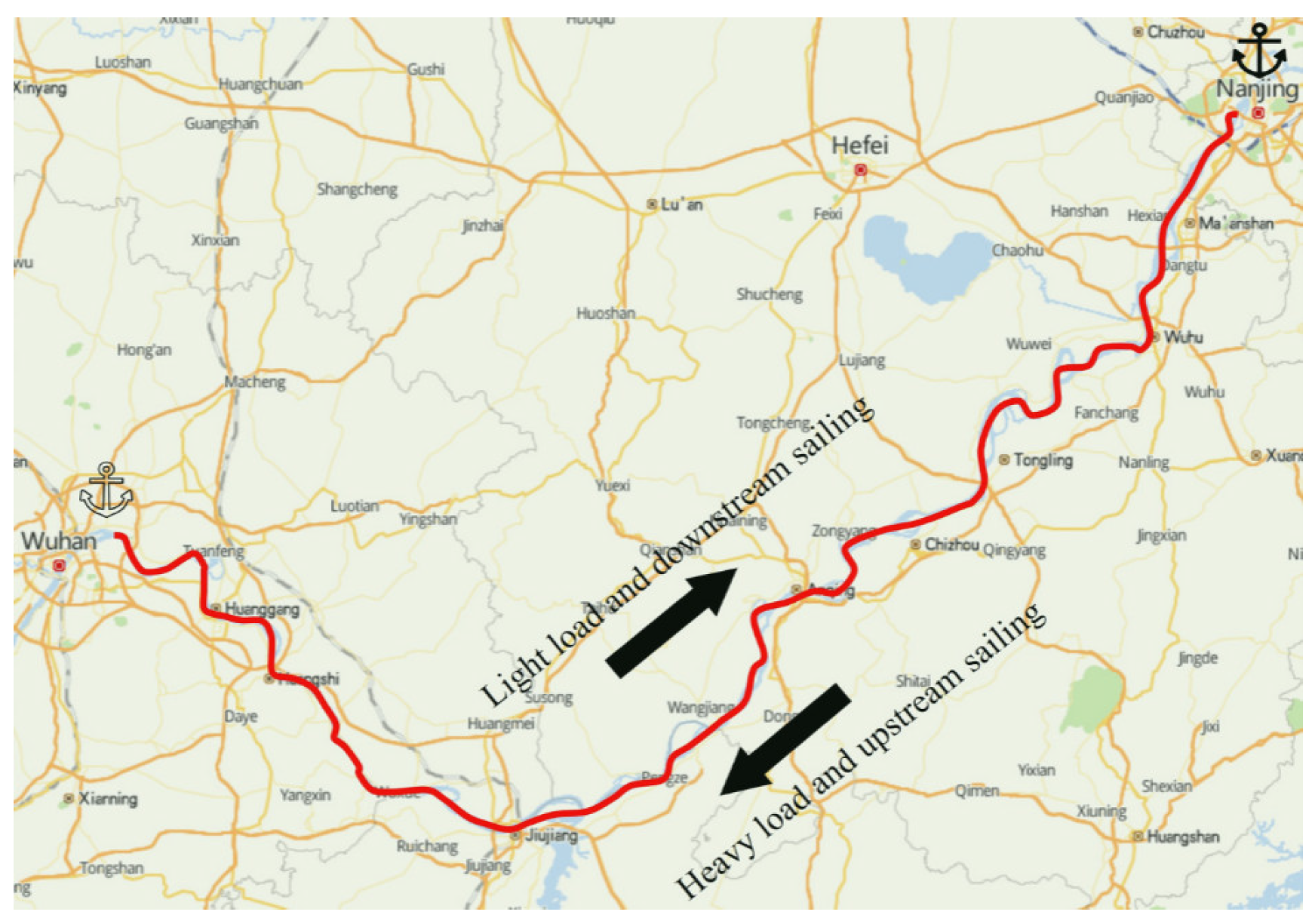

4.3.1. Test Ship and Route

4.3.2. Experimental Process

4.4. Data Collection and Processing

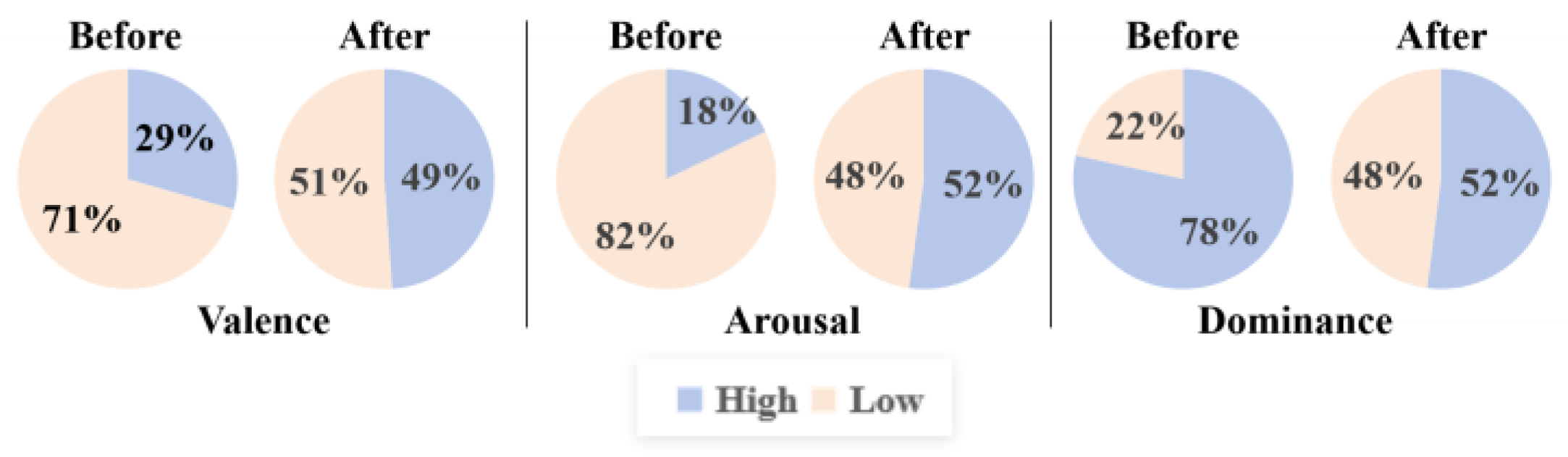

4.4.1. Processing of Subjective Questionnaires

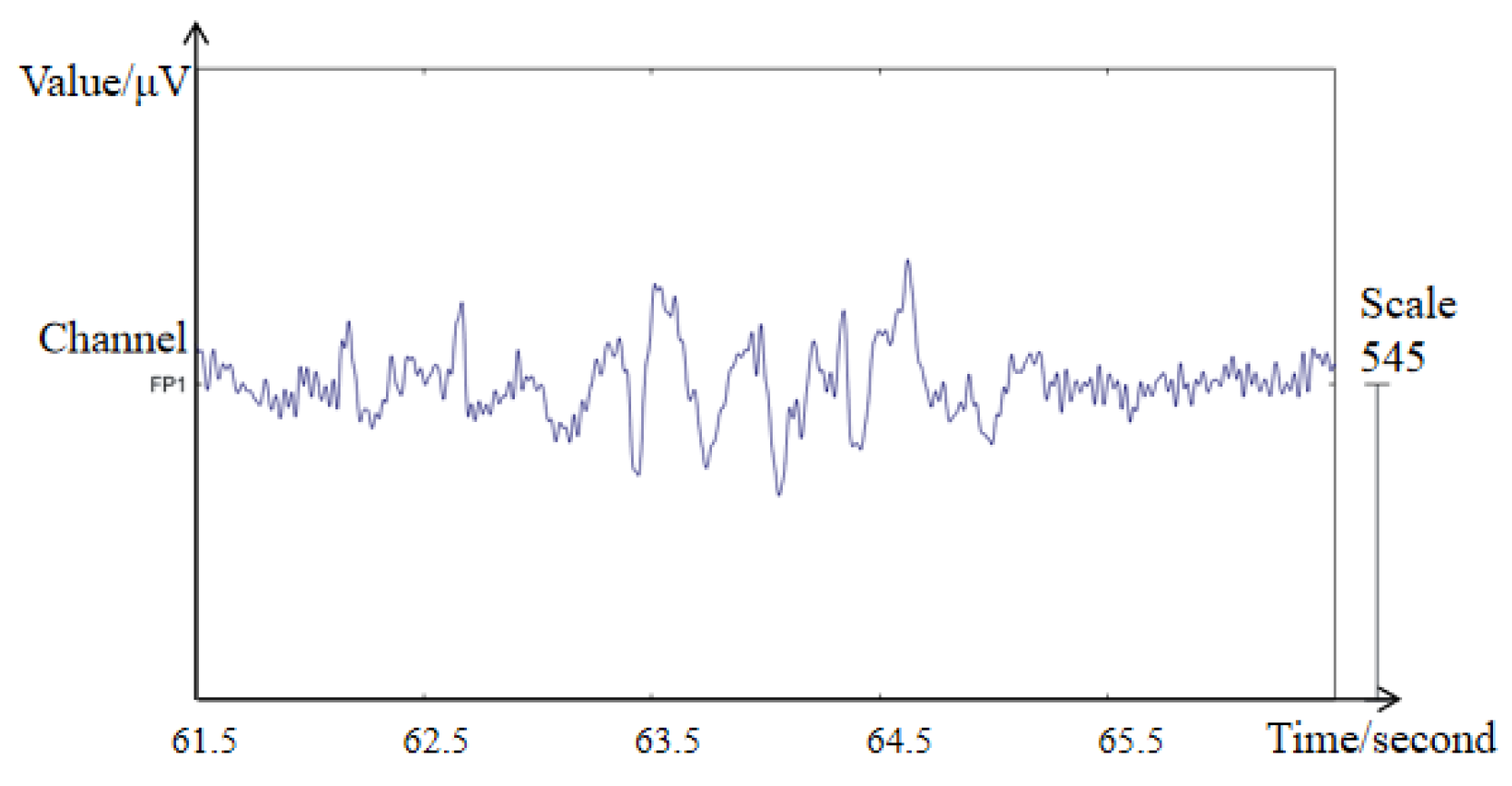

4.4.2. EEG Data Preprocessing

- Power Feature: The data were processed with the EEGLAB toolbox (version 2024) in MATLAB (version 2023b). The baseline was removed, and artifacts were removed at 1 s intervals. Non-EEG components were attenuated with the EEGLAB filters, using a 1 Hz high-pass filter and a 50 Hz low-pass filter. The power spectrum was estimated with the enhanced periodogram method, and the relative spectral power was computed for the theta (4–7 Hz), alpha (8–13 Hz), beta (13–30 Hz), and gamma (30–48 Hz) bands.

- Differential Entropy (DE) Feature: DE generalizes Shannon's information entropy to continuous random variables.
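The two feature types above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the MATLAB/EEGLAB pipeline used in the study: the spectrum is a plain periodogram computed with a naive DFT (not the enhanced periodogram method), band edges are treated as half-open intervals, relative power is normalized by the sum over the four bands, and DE is evaluated with the closed form for a Gaussian signal, 0.5 · ln(2πeσ²). The function names and the synthetic test signal are hypothetical.

```python
import cmath
import math

# Band edges in Hz, taken from the text; treated here as half-open [low, high).
BANDS = {"theta": (4, 7), "alpha": (8, 13), "beta": (13, 30), "gamma": (30, 48)}


def periodogram(x, fs):
    """One-sided periodogram via a naive DFT (O(n^2), for illustration only)."""
    n = len(x)
    mean = sum(x) / n
    centered = [v - mean for v in x]  # remove the DC offset
    freqs, power = [], []
    for k in range(n // 2 + 1):
        coef = sum(v * cmath.exp(-2j * math.pi * k * i / n)
                   for i, v in enumerate(centered))
        freqs.append(k * fs / n)
        power.append(abs(coef) ** 2 / (fs * n))
    return freqs, power


def relative_band_power(x, fs, bands=BANDS):
    """Power in each band divided by the total power over all listed bands."""
    freqs, power = periodogram(x, fs)
    absolute = {
        name: sum(p for f, p in zip(freqs, power) if low <= f < high)
        for name, (low, high) in bands.items()
    }
    total = sum(absolute.values())
    return {name: value / total for name, value in absolute.items()}


def differential_entropy(x):
    """DE of a signal assumed Gaussian: 0.5 * ln(2 * pi * e * variance)."""
    n = len(x)
    mean = sum(x) / n
    variance = sum((v - mean) ** 2 for v in x) / n
    return 0.5 * math.log(2 * math.pi * math.e * variance)


# Synthetic 2 s segment at 128 Hz: a strong 10 Hz (alpha) and a weak 20 Hz (beta) tone.
fs = 128
signal = [math.sin(2 * math.pi * 10 * i / fs) + 0.2 * math.sin(2 * math.pi * 20 * i / fs)
          for i in range(2 * fs)]

rel = relative_band_power(signal, fs)
de = differential_entropy(signal)
print({name: round(value, 3) for name, value in rel.items()}, round(de, 3))
```

In practice DE is usually computed per frequency band on a band-pass-filtered signal; the sketch applies it to the whole segment only to keep the example short.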

4.4.3. Data Balancing

5. Results and Discussion

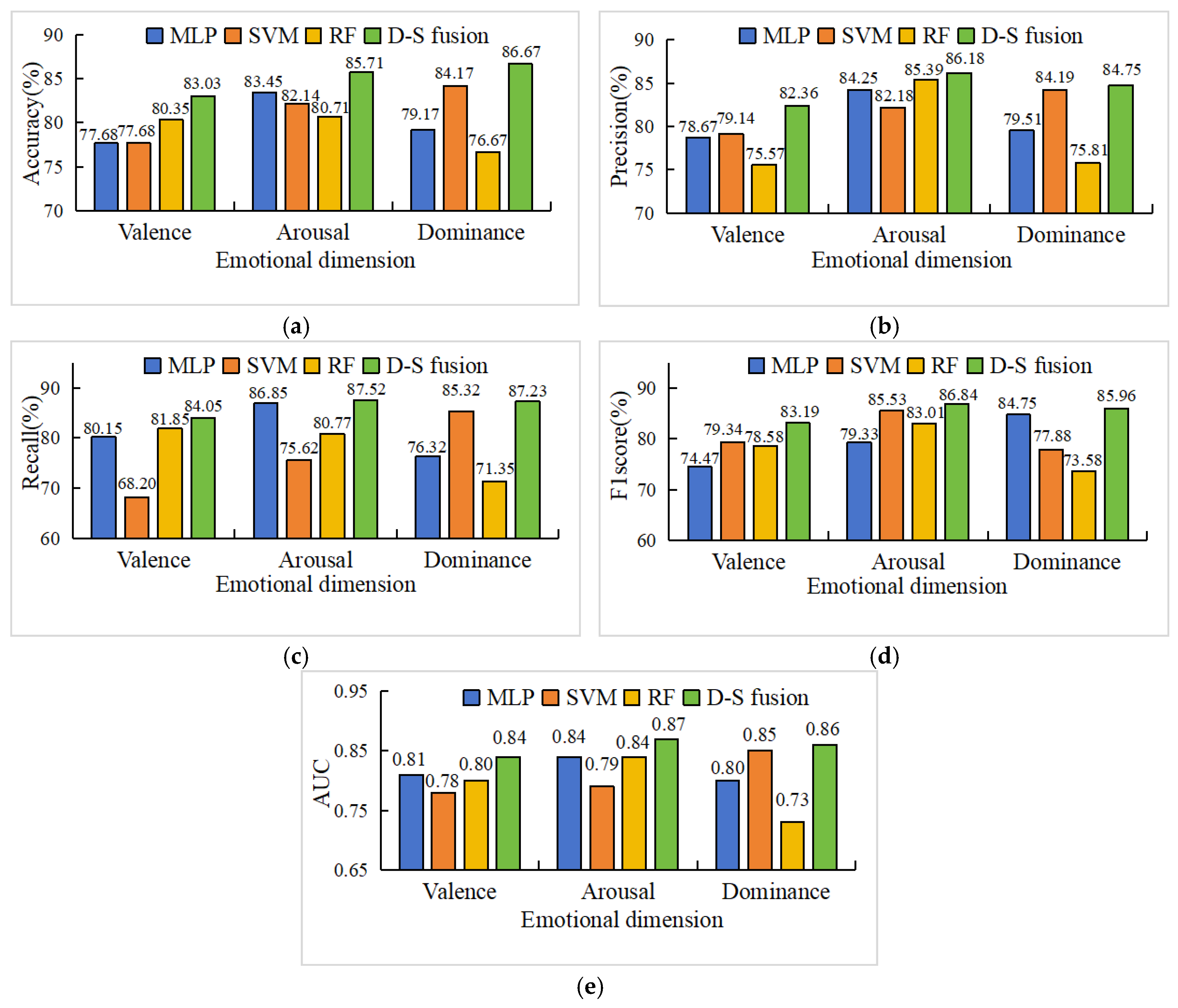

5.1. Results on Test Set

5.2. Evaluation of Calibration

5.3. Comparison Between Single and Fusion Models

5.4. Statistical Test

5.5. Comparison with the Existing Studies

6. Discussion

6.1. Claims and Summary

6.2. Implication

6.3. Limitations

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ER | emotion recognition |
| SER | seafarers’ emotion recognition |

Appendix A

| Symbol | Meaning |
|---|---|
| d(E1, E2) | Distance between the probability output vectors of two machine learning models |
| D | Distance matrix collecting the pairwise distances between the probability outputs of the machine learning models |
| ‖Ei‖² | Inner product of the probability output vector of a machine learning model with itself |
| s | Similarity between the probability output vectors of two machine learning models |
| S | Similarity matrix between the probability output vectors of the machine learning models |
| R(Ei) | Degree to which a single probability output (evidence) is supported by the others |
| wi | Weight of the i-th machine learning model |
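The quantities defined in this appendix can be tied together in a short sketch. It is one plausible reading of the weighting scheme, not the authors' code, and it rests on several assumptions: each BPA places mass only on single classes (so the evidence distance reduces to a scaled Euclidean distance), similarity is taken as s = 1 − d, the support R(Ei) is the sum of similarities to the other models, and the final synthesis applies Dempster's rule to the weighted-average evidence n − 1 times. All function names and the example probabilities are hypothetical.

```python
import math


def evidence_distance(e1, e2):
    """d(E1, E2) = sqrt(0.5 * (||E1||^2 + ||E2||^2 - 2 <E1, E2>))."""
    n1 = sum(a * a for a in e1)
    n2 = sum(b * b for b in e2)
    dot = sum(a * b for a, b in zip(e1, e2))
    return math.sqrt(max(0.0, 0.5 * (n1 + n2 - 2.0 * dot)))


def evidence_weights(evidence):
    """Weights w_i from distance matrix D, similarity matrix S, and support R."""
    n = len(evidence)
    D = [[evidence_distance(evidence[i], evidence[j]) for j in range(n)]
         for i in range(n)]
    S = [[1.0 - D[i][j] for j in range(n)] for i in range(n)]
    R = [sum(S[i][j] for j in range(n) if j != i) for i in range(n)]
    total = sum(R)
    if total == 0.0:  # every pair fully conflicting: fall back to equal weights
        return [1.0 / n] * n
    return [r / total for r in R]


def dempster_combine(m1, m2):
    """Dempster's rule for BPAs with mass on singleton classes only."""
    joint = [a * b for a, b in zip(m1, m2)]
    agreement = sum(joint)  # equals 1 minus the conflict coefficient
    if agreement == 0.0:
        raise ValueError("evidence is in total conflict")
    return [j / agreement for j in joint]


def fuse(evidence):
    """Weighted-average evidence combined with itself n - 1 times (assumed step)."""
    weights = evidence_weights(evidence)
    n_classes = len(evidence[0])
    average = [sum(w * e[c] for w, e in zip(weights, evidence))
               for c in range(n_classes)]
    fused = average
    for _ in range(len(evidence) - 1):
        fused = dempster_combine(fused, average)
    return weights, fused


# Hypothetical calibrated outputs for one test instance (two classes).
svm, rf, mlp = [0.8, 0.2], [0.7, 0.3], [0.3, 0.7]
weights, fused = fuse([svm, rf, mlp])
print([round(w, 3) for w in weights], [round(p, 3) for p in fused])
```

In this example the MLP output disagrees with the other two models, so it receives the smallest weight and the fused result favors the class supported by SVM and RF.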

| Calibration Method | Description | Advantage | Drawback | Application Scope |
|---|---|---|---|---|
| Empirical binning | The probability predictions are divided into interval bins. | Simple and intuitive; no complex modelling needed. | The number of bins and the bin boundaries are not easy to determine. | Small to medium datasets. |
| Isotonic calibration | Adjusts probabilities based on the monotonic relationship between predicted and actual probabilities. | Highly flexible; handles complex non-linear distortions. | Requires more data to prevent overfitting. Higher computational complexity. | Medium to large datasets. |
| Sigmoid calibration | Adjusts probabilities using logistic regression. | Few parameters. Efficient to compute. | Unable to handle non-monotonic problems. Sensitive to class imbalance. | Binary classification tasks, small datasets, or scenarios requiring lightweight calibration. |
| Beta calibration | Three-parameter model based on the beta distribution, allowing asymmetric tuning. | Applicable to skewed distributions. | Still limited by its parametric form. Requires medium-sized data. | Binary classification; cases with large differences in the distribution of classes. |
| Temperature scaling | Introduces a parameter T in the softmax and scales the logits to adjust the confidence level. | Few parameters. Computationally efficient. | Global adjustment only; limited effect on complex calibration problems. | Neural networks; scenarios that require fast calibration and preservation of the order of predictions. |

| Parameter | Value |
|---|---|
| Ship type | LPG tanker |
| Year of manufacture | 2021 |
| Length | 88 m |
| Depth | 5.6 m |
| Width | 16 m |
| Design draft | 4.2 m |
| Deadweight tonnage | 2693 t |
| Rated speed | 150 r/min |
| Main engine power | 2 × 600 kW |

| Types of Scenarios | Detailed Description |
|---|---|
| Normal navigation | No specific scenario occurs within 15 minutes. Information is exchanged and the ship operates normally. |
| Overtaking ships | The ship overtakes the ships ahead. Information is exchanged. |
| Normal turn | The helmsman steers the ship. Information is exchanged when encountering ships. Sailors keep watch. |
| Passing under bridges | The helmsman steers the ship. The bridge team maintains a proper look-out by sight and hearing, as well as by other available means, to ensure safe passage. Information is exchanged. |
| Change lanes under complex conditions | The captain intervenes. Seafarers act on the captain's orders. |
| Special maneuvering turns with high navigational difficulty | The captain intervenes. Seafarers act on the captain's orders. |

| Model | Valence Acc (%) | Valence Precision (%) | Valence Recall (%) | Valence F1 (%) | Valence AUC | Arousal Acc (%) | Arousal Precision (%) | Arousal Recall (%) | Arousal F1 (%) | Arousal AUC | Dominance Acc (%) | Dominance Precision (%) | Dominance Recall (%) | Dominance F1 (%) | Dominance AUC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ELM | 71.43 | 75.02 | 70.20 | 72.53 | 0.73 | 73.57 | 73.45 | 67.63 | 70.42 | 0.72 | 82.50 | 83.26 | 84.23 | 83.74 | 0.83 |
| RBF | 72.35 | 67.60 | 74.85 | 71.04 | 0.74 | 72.86 | 65.42 | 71.57 | 68.35 | 0.72 | 83.33 | 83.97 | 81.57 | 82.75 | 0.81 |
| MLP | 77.68 | 78.67 | 80.15 | 79.34 | 0.81 | 83.45 | 84.25 | 86.85 | 85.53 | 0.84 | 79.17 | 79.51 | 76.32 | 77.88 | 0.80 |
| XGB | 76.79 | 77.53 | 74.90 | 76.19 | 0.77 | 72.14 | 81.55 | 72.68 | 76.92 | 0.78 | 74.17 | 73.95 | 70.28 | 72.07 | 0.72 |
| RF | 80.35 | 75.57 | 81.85 | 78.58 | 0.80 | 80.71 | 85.39 | 80.77 | 83.01 | 0.84 | 76.67 | 75.81 | 71.35 | 73.58 | 0.73 |
| LGBM | 77.68 | 77.83 | 70.15 | 73.79 | 0.74 | 75.00 | 74.20 | 82.33 | 78.05 | 0.79 | 74.17 | 73.84 | 69.42 | 71.56 | 0.71 |
| KNN | 75.89 | 75.60 | 79.90 | 77.69 | 0.76 | 76.43 | 77.42 | 83.27 | 80.23 | 0.80 | 75.83 | 72.90 | 67.53 | 70.10 | 0.70 |
| SVM | 75.89 | 79.14 | 68.20 | 73.33 | 0.78 | 82.14 | 82.18 | 75.62 | 79.33 | 0.79 | 84.17 | 84.19 | 85.32 | 84.75 | 0.85 |

| Model | Valence (Before) | Valence (After) | Arousal (Before) | Arousal (After) | Dominance (Before) | Dominance (After) | Method |
|---|---|---|---|---|---|---|---|
| SVM | 0.112 | 0.081 | 0.168 | 0.057 | 0.147 | 0.108 | Sigmoid calibration |
| RF | 0.161 | 0.071 | 0.174 | 0.140 | 0.154 | 0.144 | Sigmoid calibration |
| MLP | 0.210 | 0.144 | 0.209 | 0.163 | 0.210 | 0.142 | Temperature scaling |

| Paired t-Test | t | p | Cohen’s d |
|---|---|---|---|
| D-S fusion vs. SVM | 6.535 | <0.001 | 2.067 |
| D-S fusion vs. MLP | 13.769 | <0.001 | 4.353 |
| D-S fusion vs. RF | 10.614 | <0.001 | 3.362 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, L.; Yang, J.; Cao, C.; Li, M.; Fei, P.; Liu, Q. Multimodal Emotion Recognition for Seafarers: A Framework Integrating Improved D-S Theory and Calibration: A Case Study of a Real Navigation Experiment. Appl. Sci. 2025, 15, 9253. https://doi.org/10.3390/app15179253
