Space–Bandwidth Product Extension for Holographic Displays Through Cascaded Wavefront Modulation

Abstract

1. Introduction

2. Principle and Method

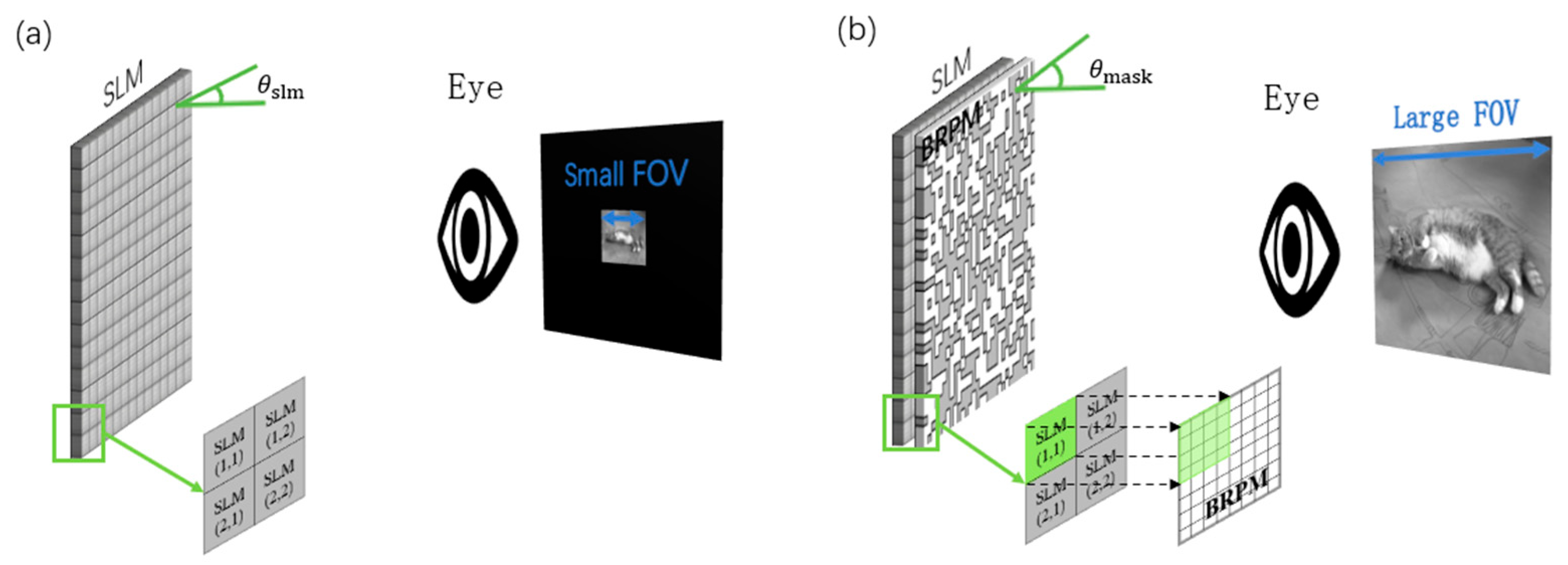

2.1. SBP Extension Principle Based on a Static Mask

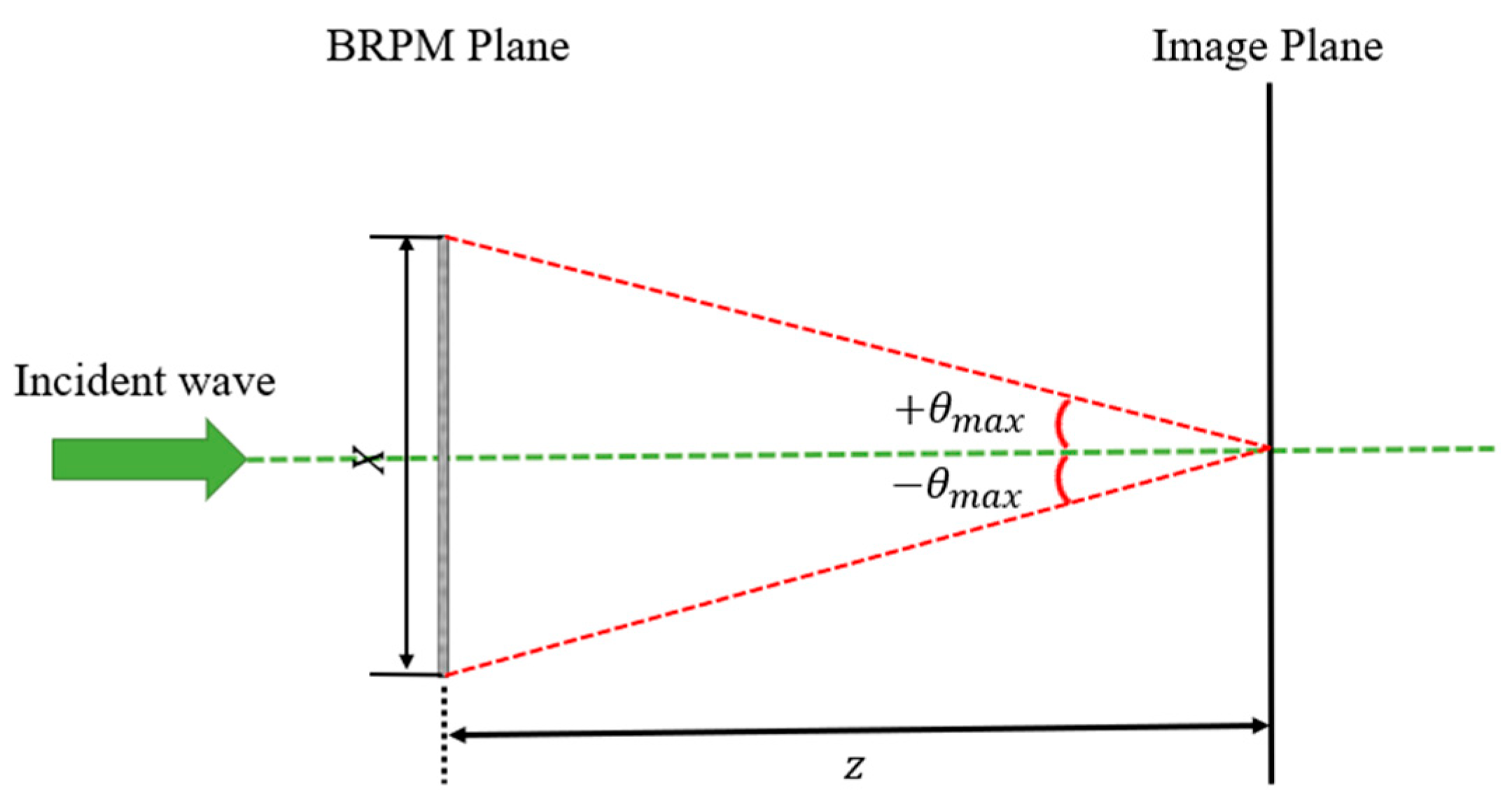

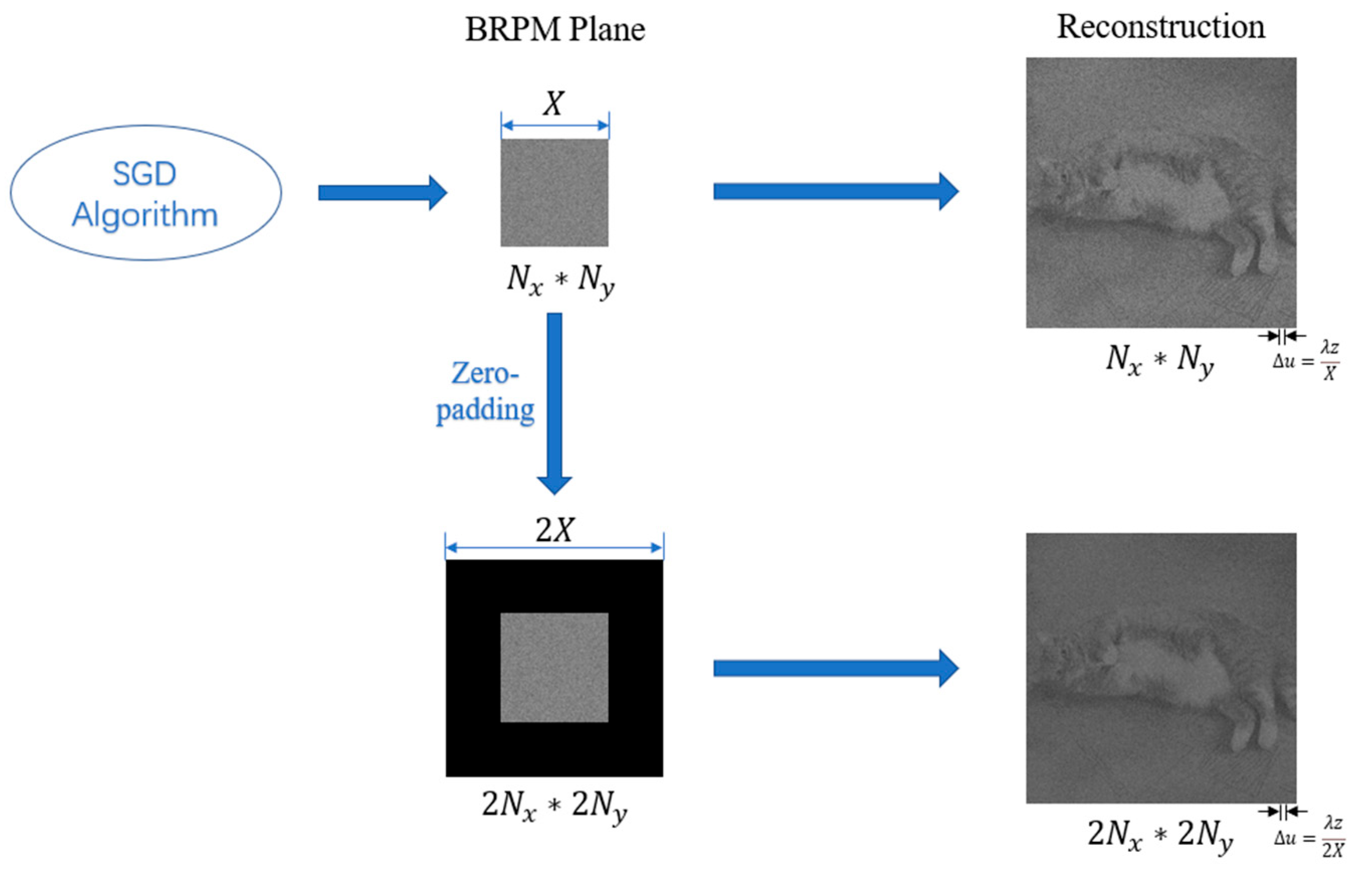

2.2. Analysis of Undersampling in High-Frequency Diffraction Calculation

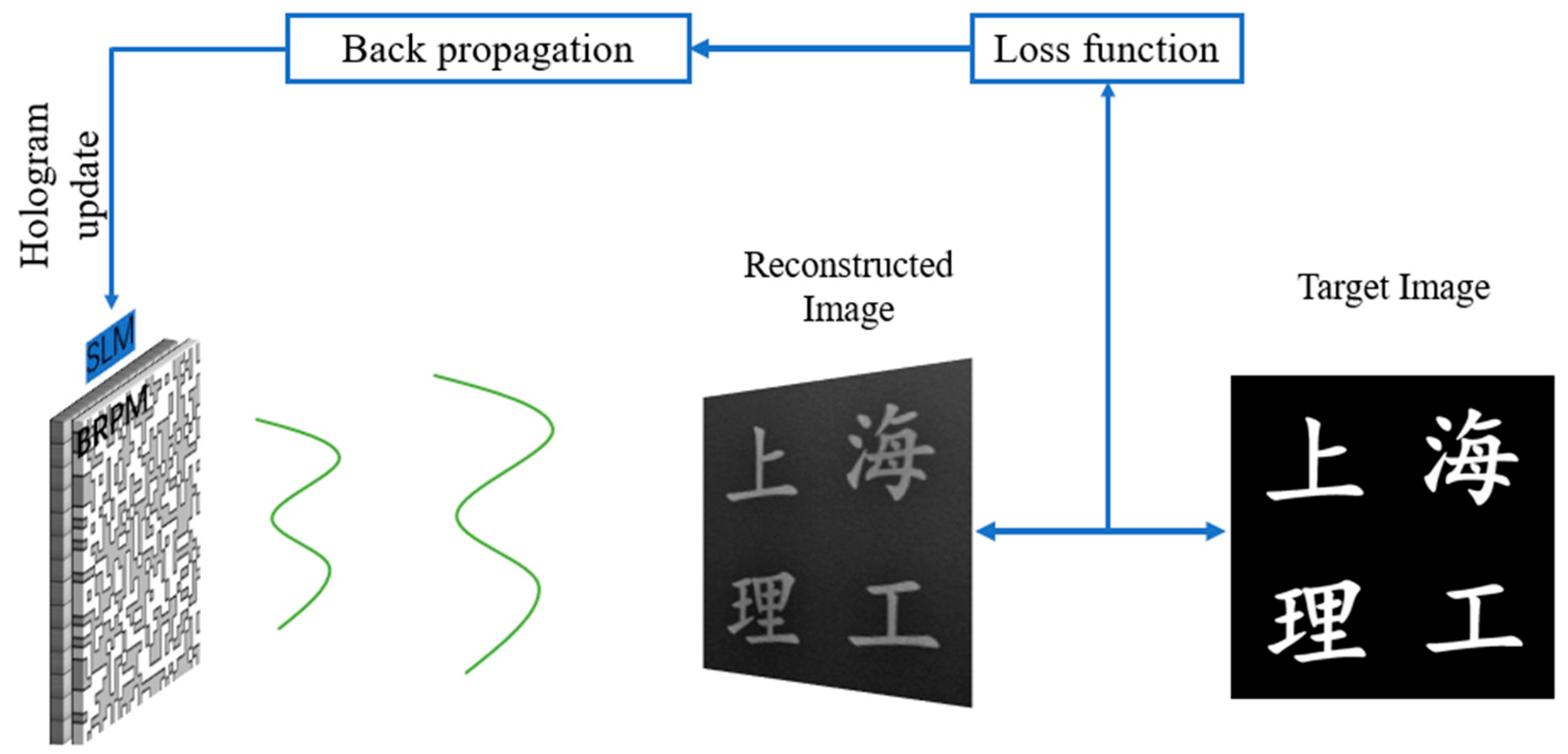

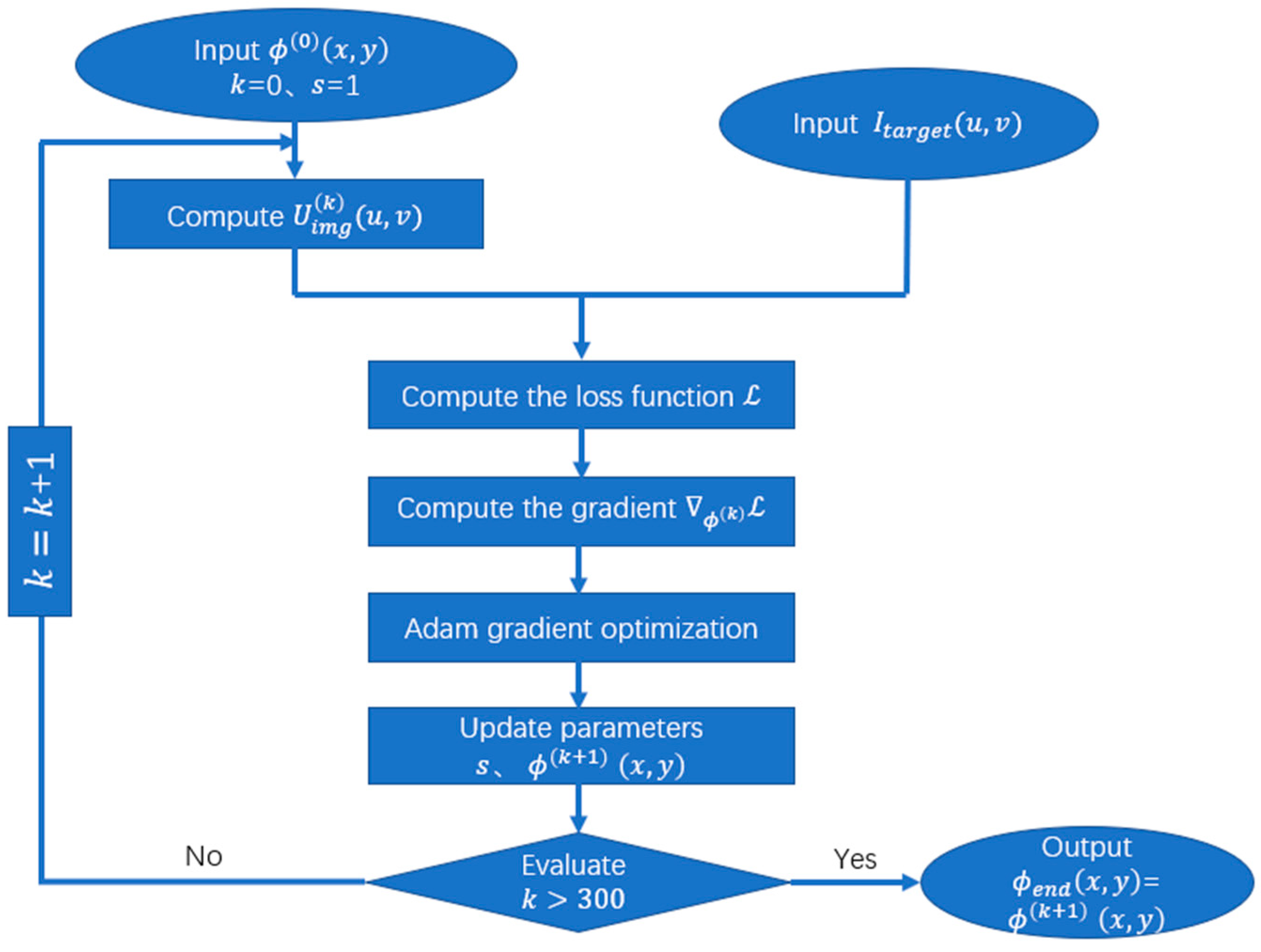

2.3. Hologram Optimization Based on Gradient Descent

3. Results and Analysis

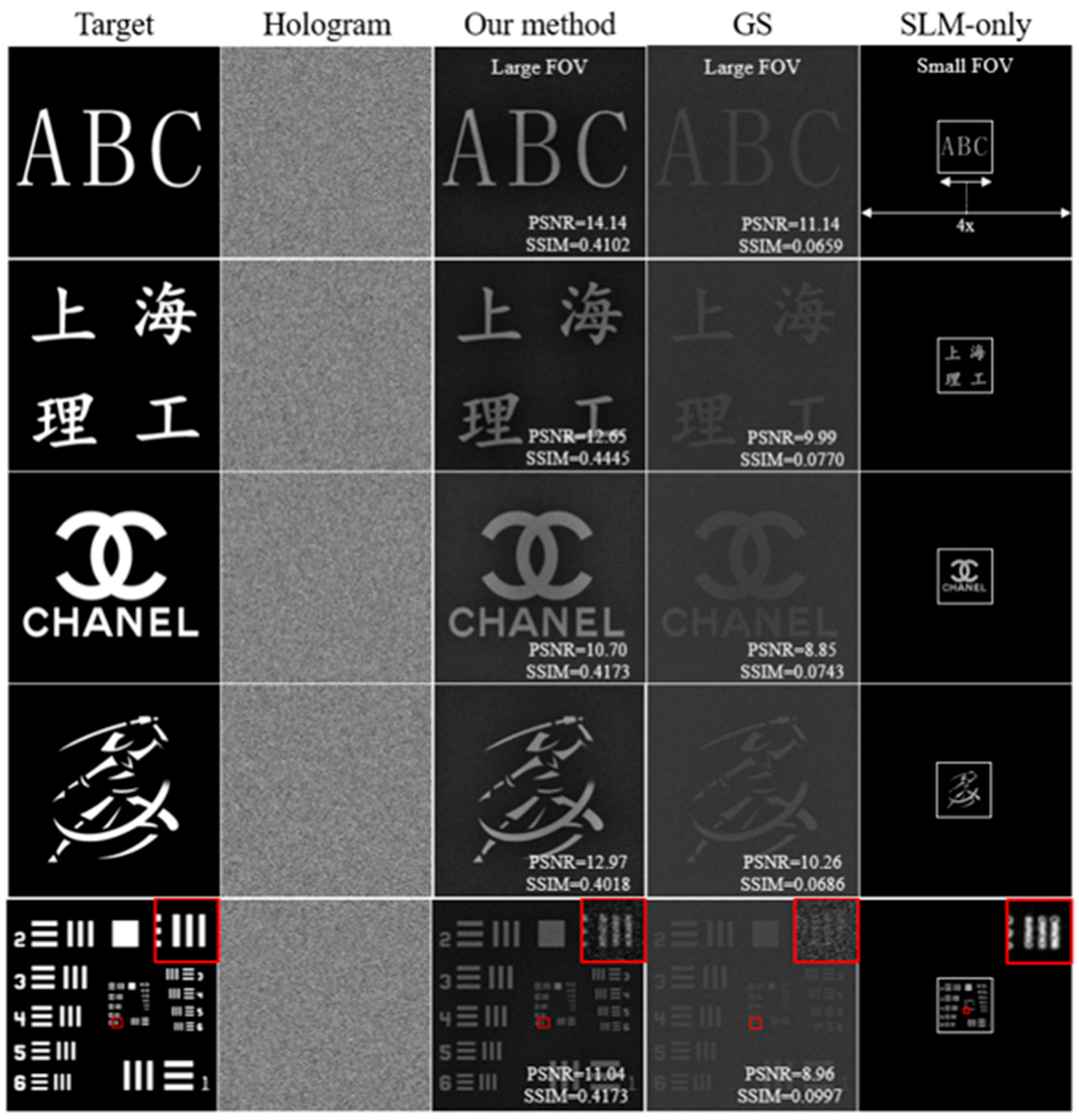

3.1. Evaluation Metrics and Performance Evaluation

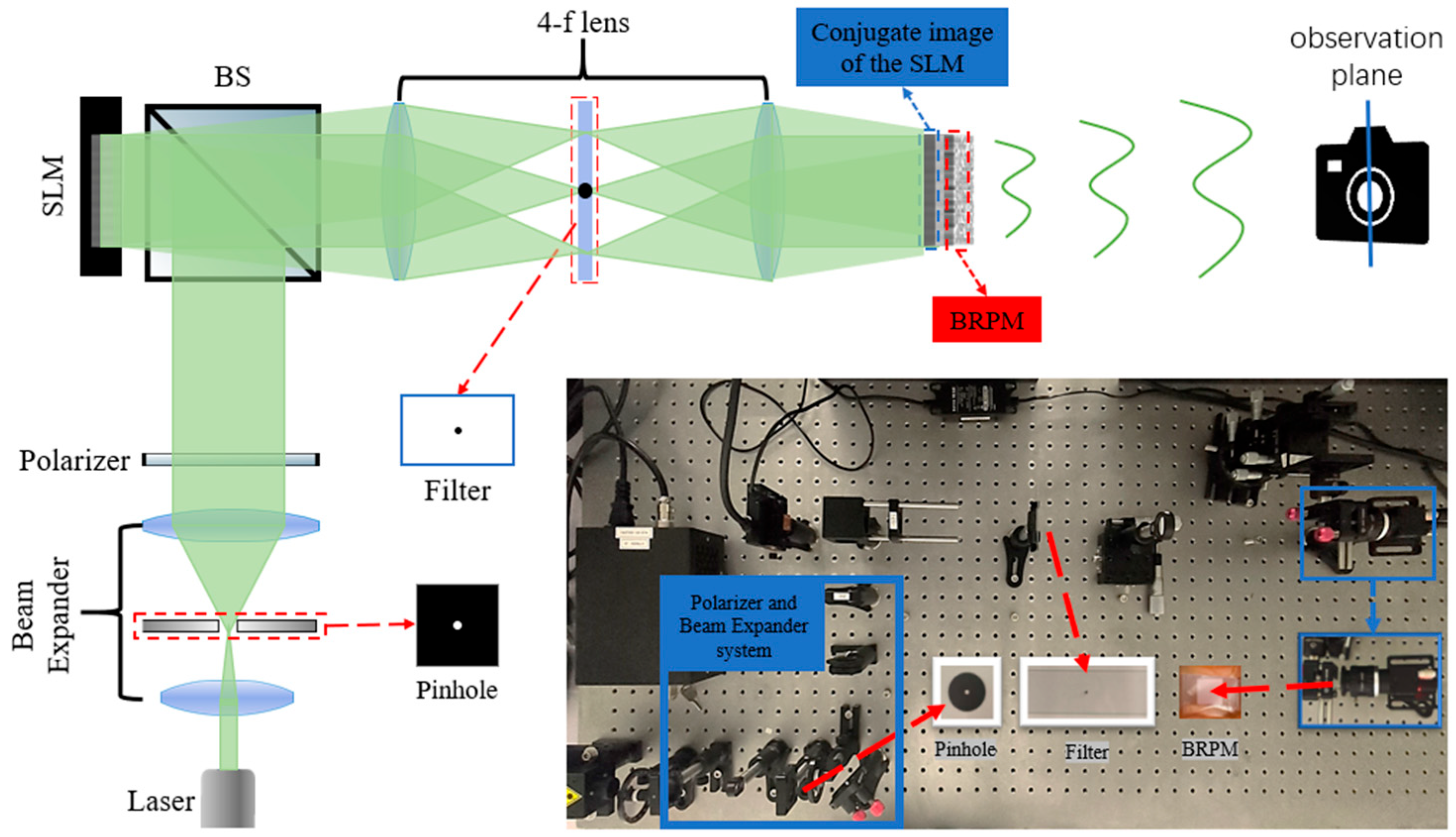

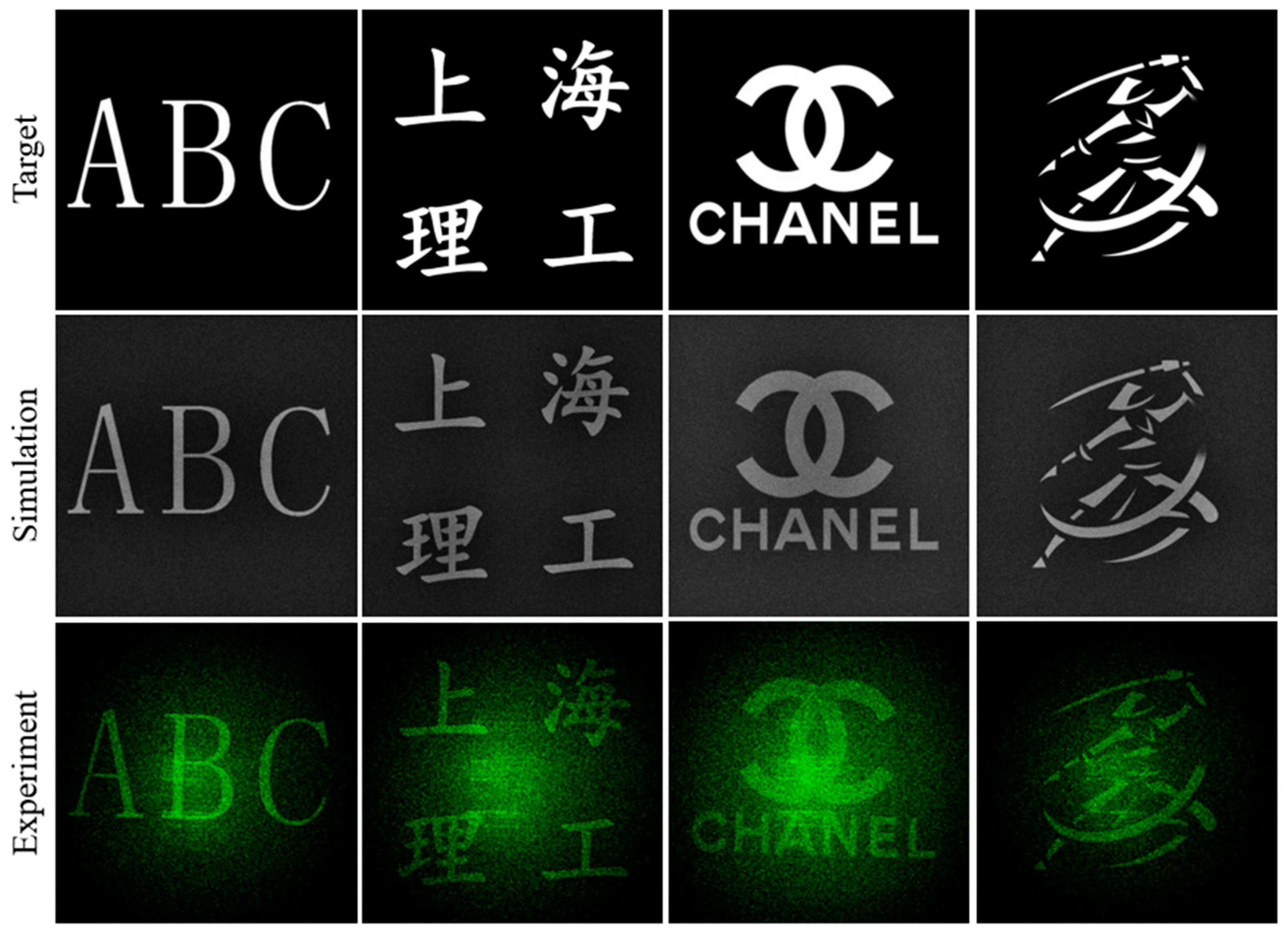

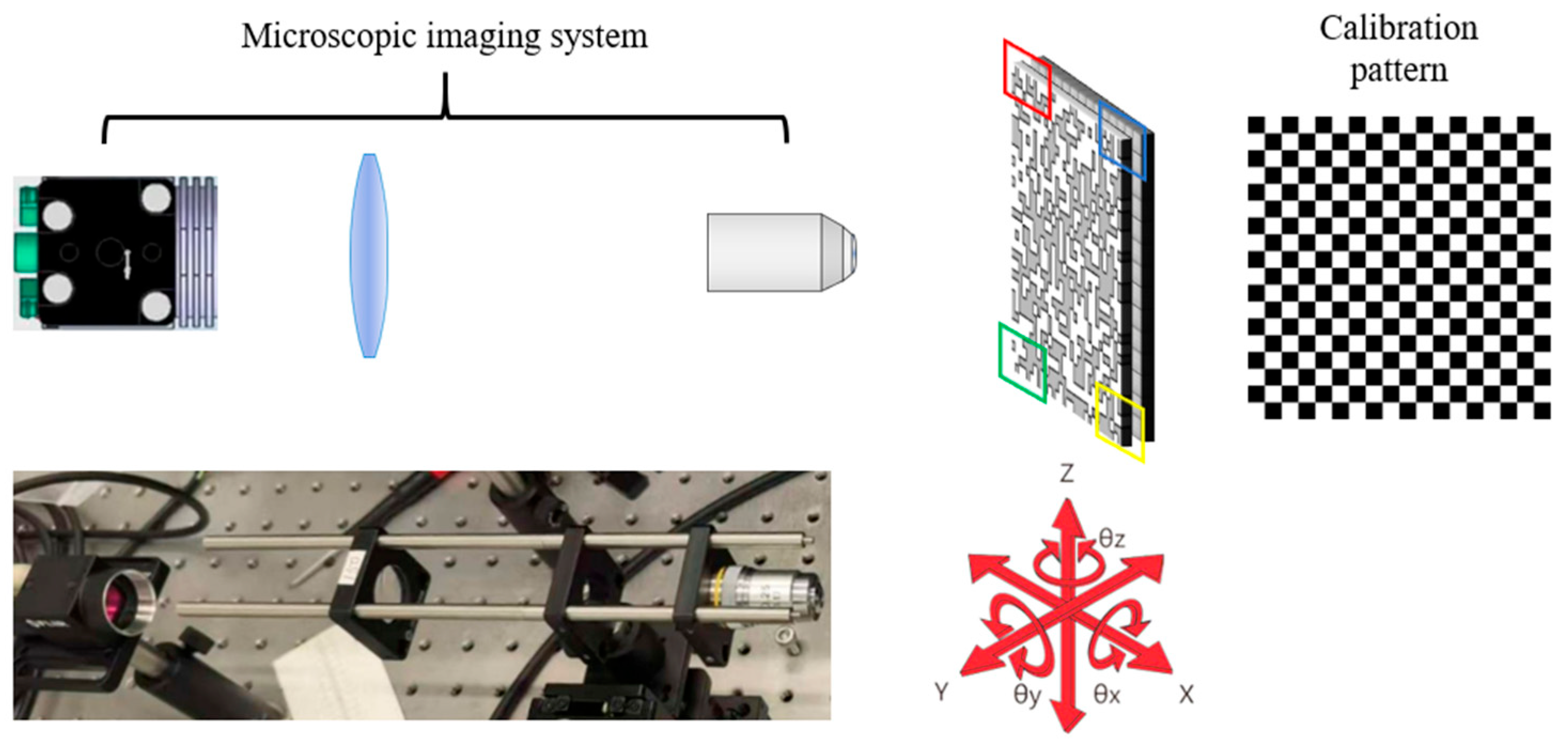

3.2. Experimental Setup and Results Analysis

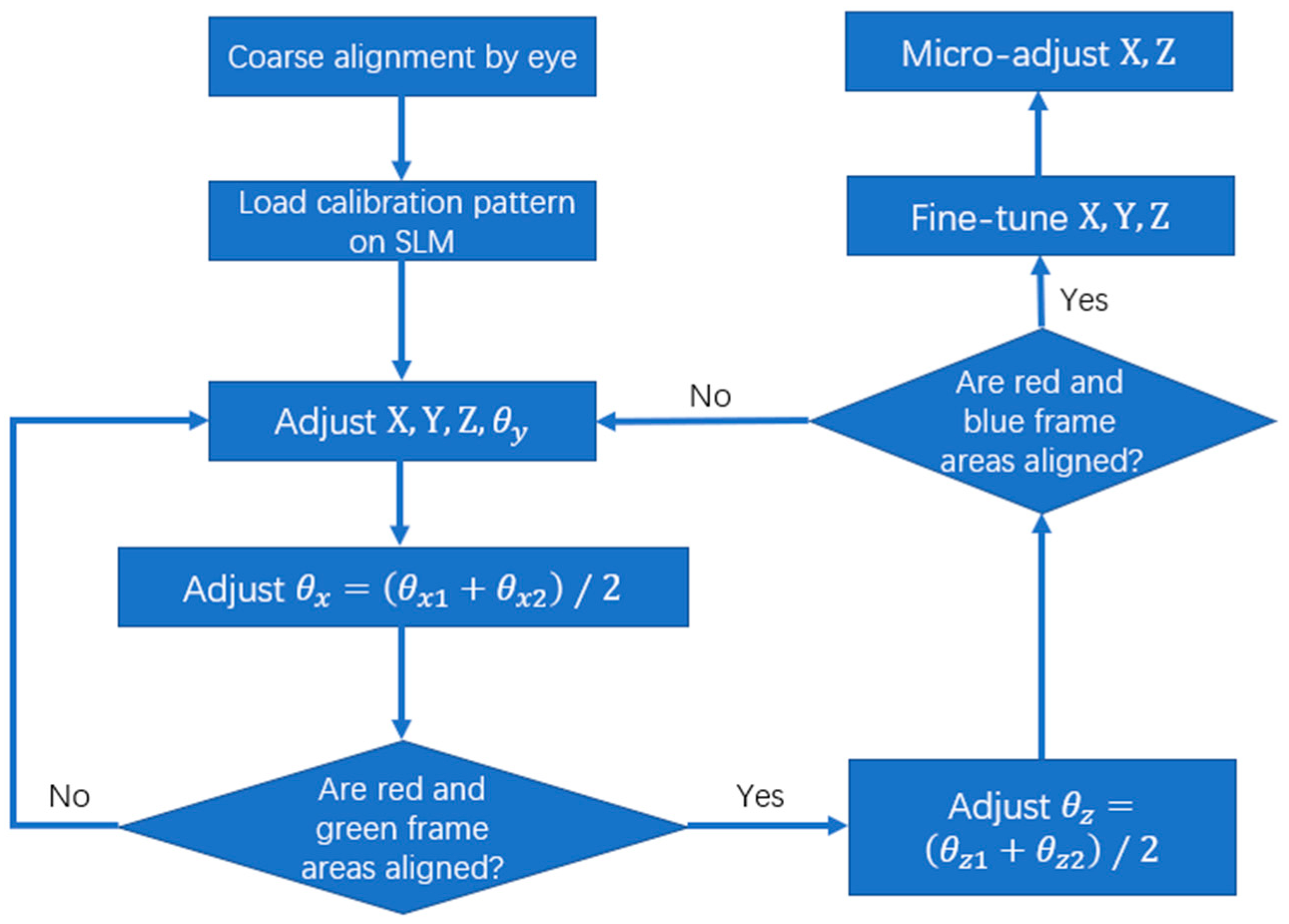

3.3. Alignment of the SLM Conjugate Image and the BRPM

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SBP | space–bandwidth product |
| FOV | field of view |
| SLM | spatial light modulator |
| BRPM | binary random phase mask |
| 3D | three-dimensional |
| VAC | vergence–accommodation conflict |
| VR | virtual reality |
| AR | augmented reality |
| LCoS | liquid crystal on silicon |
| GS | Gerchberg–Saxton |
| IFTA | iterative Fourier transform algorithm |
| PSNR | peak signal-to-noise ratio |
| SSIM | structural similarity index |
| CGHs | computer-generated holograms |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, S.; Li, W.; Dai, B.; Wang, Q.; Zhuang, S.; Zhang, D.; Chang, C. Space–Bandwidth Product Extension for Holographic Displays Through Cascaded Wavefront Modulation. Appl. Sci. 2025, 15, 9237. https://doi.org/10.3390/app15179237

Chicago/Turabian style: Zhang, Shenao, Wenjia Li, Bo Dai, Qi Wang, Songlin Zhuang, Dawei Zhang, and Chenliang Chang. 2025. "Space–Bandwidth Product Extension for Holographic Displays Through Cascaded Wavefront Modulation." Applied Sciences 15, no. 17: 9237. https://doi.org/10.3390/app15179237