Enhancing Wafer Notch Detection for Ion Implantation: Optimized YOLOv8 Approach with Global Attention Mechanism

Abstract

1. Introduction

2. Methods

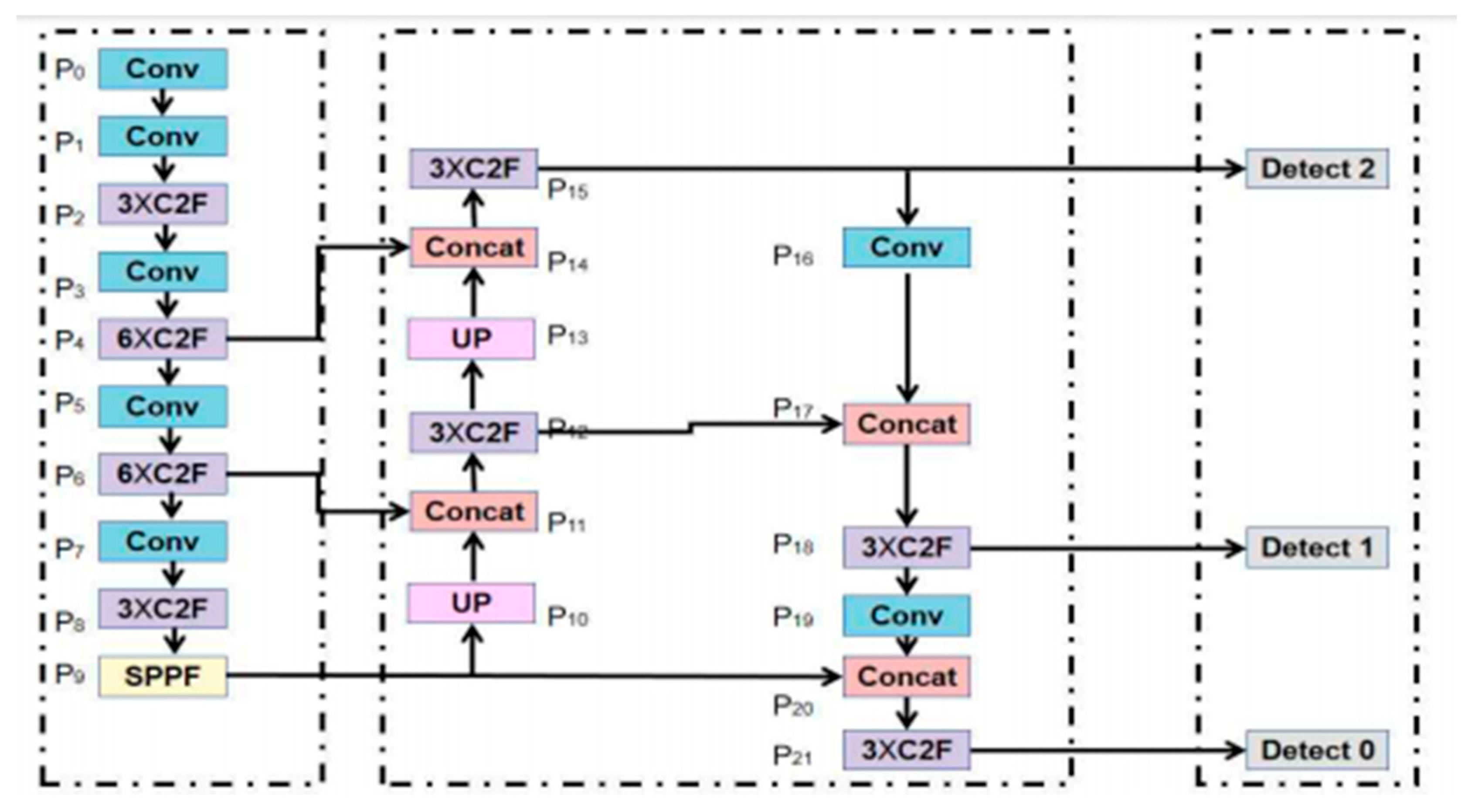

2.1. YOLOv8

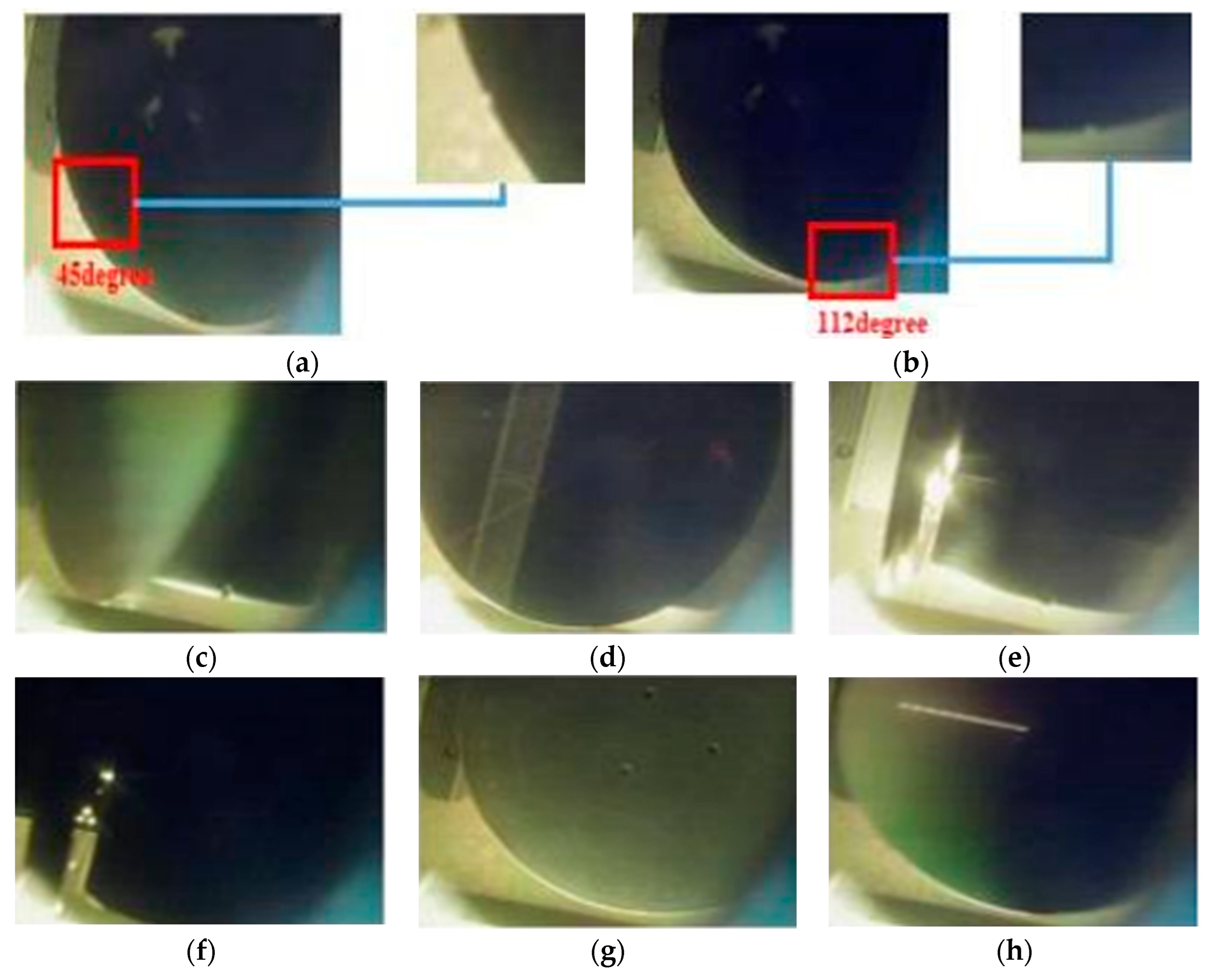

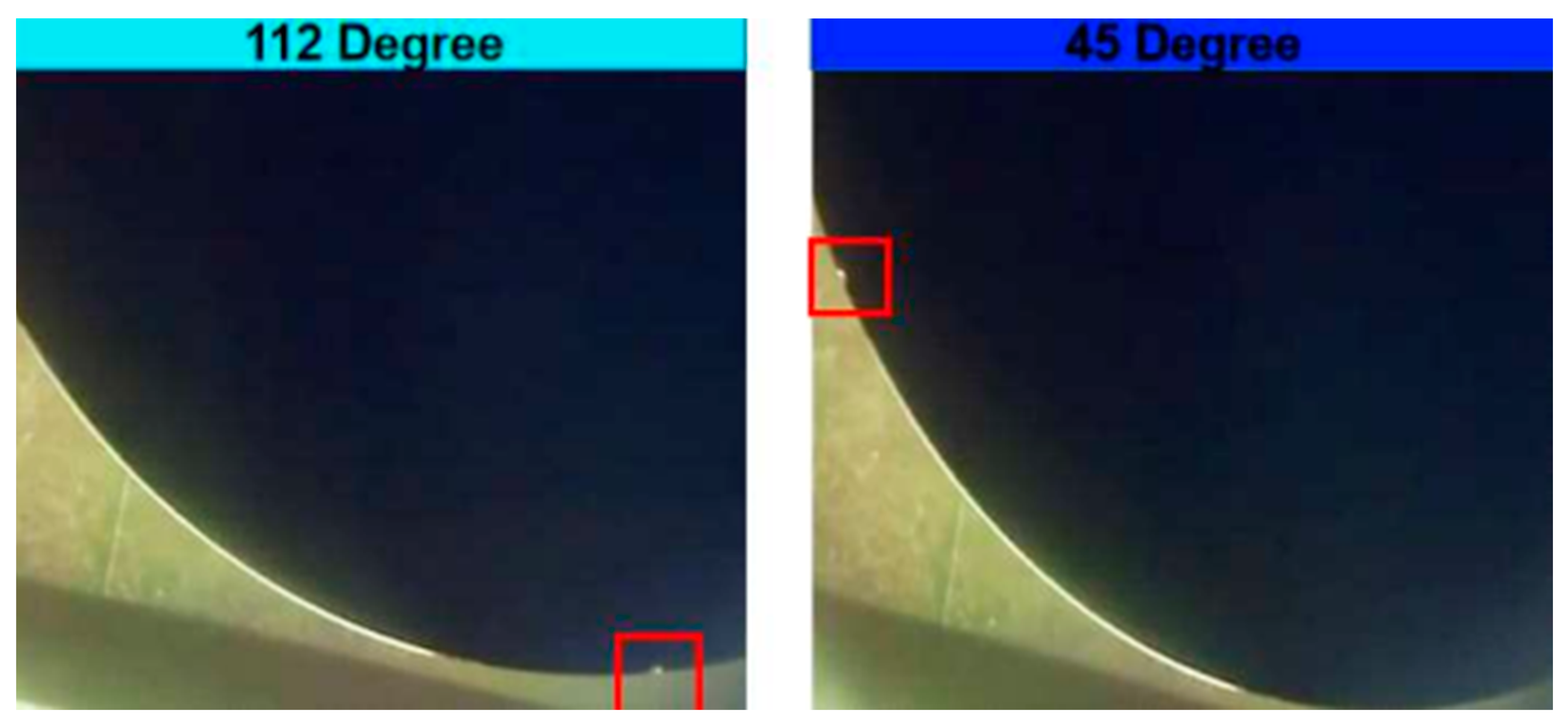

2.2. Dataset Preparation

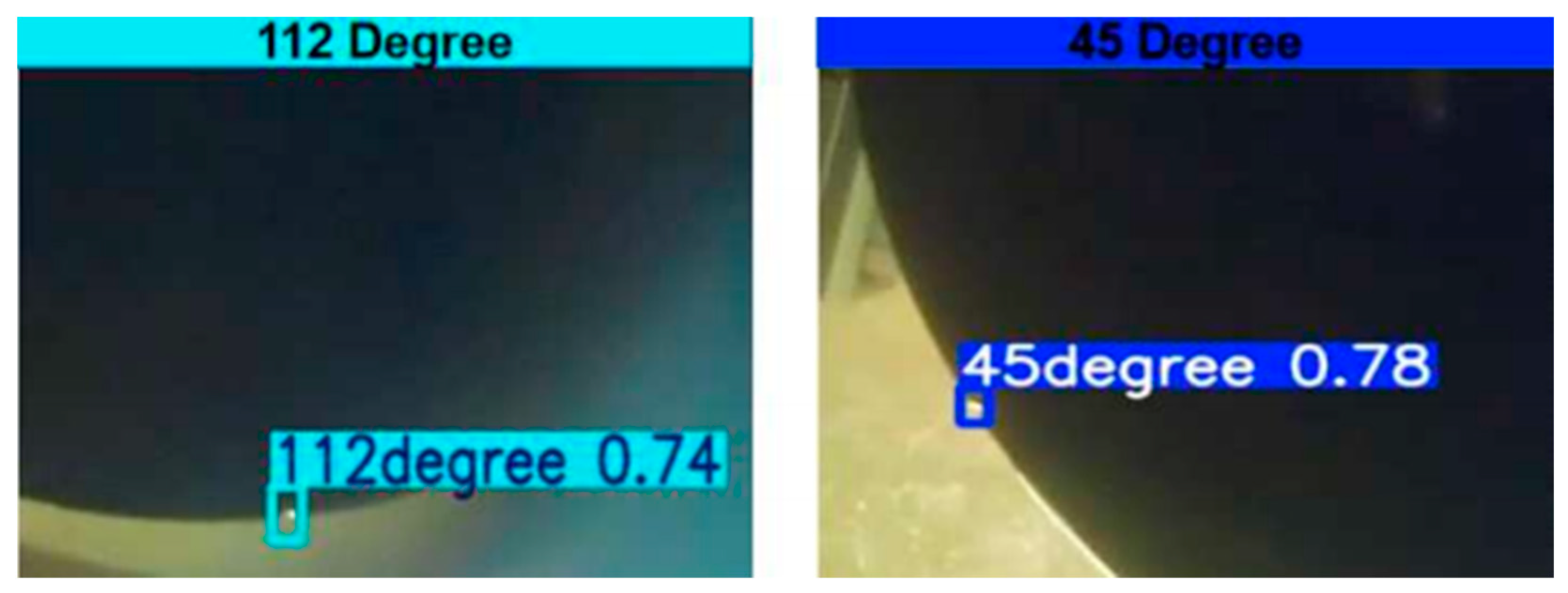

2.2.1. Misidentification (False Detection)

2.2.2. Class Imbalance

2.2.3. New Dataset Construction

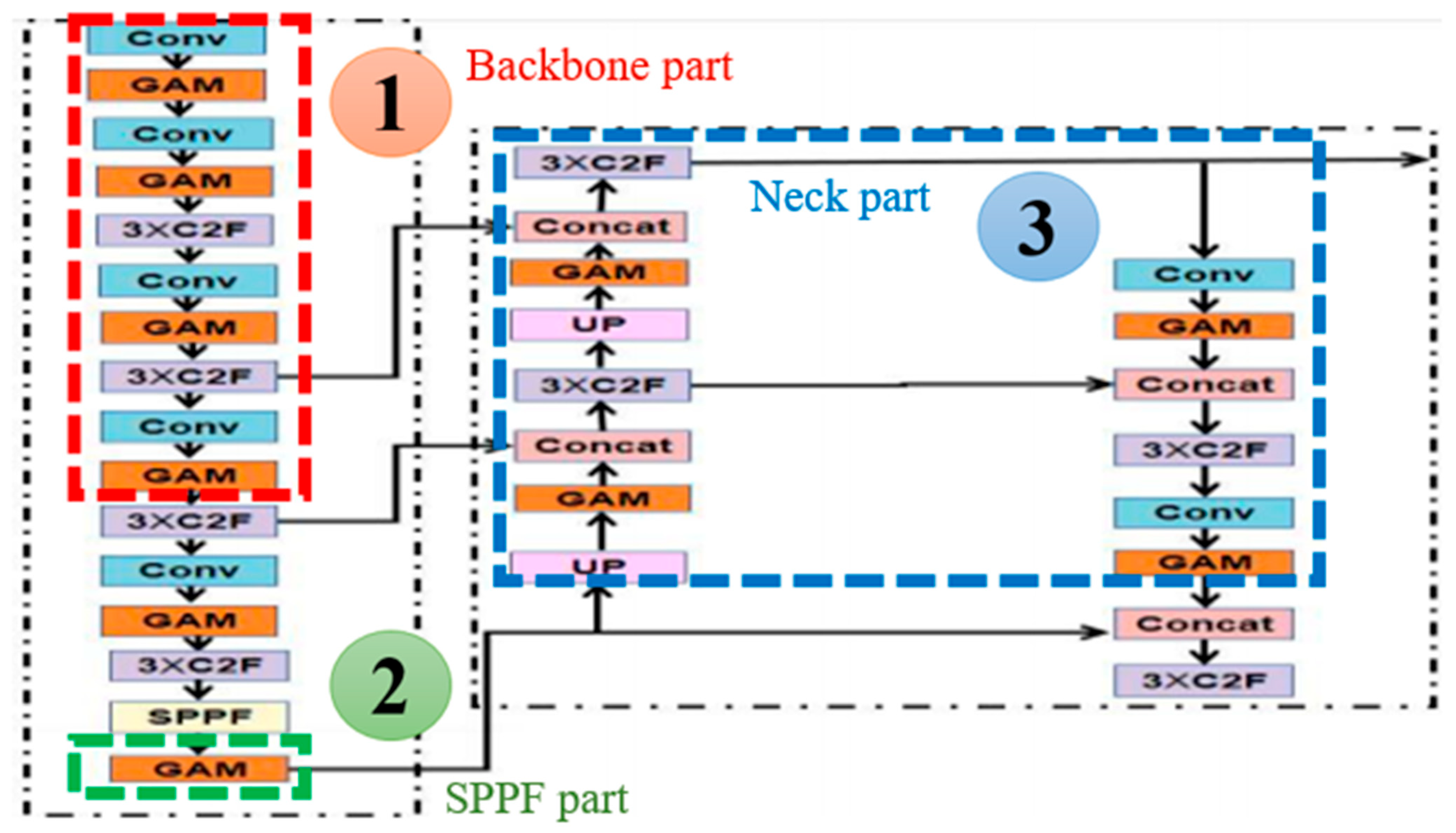

2.3. Improved YOLOv8 Model

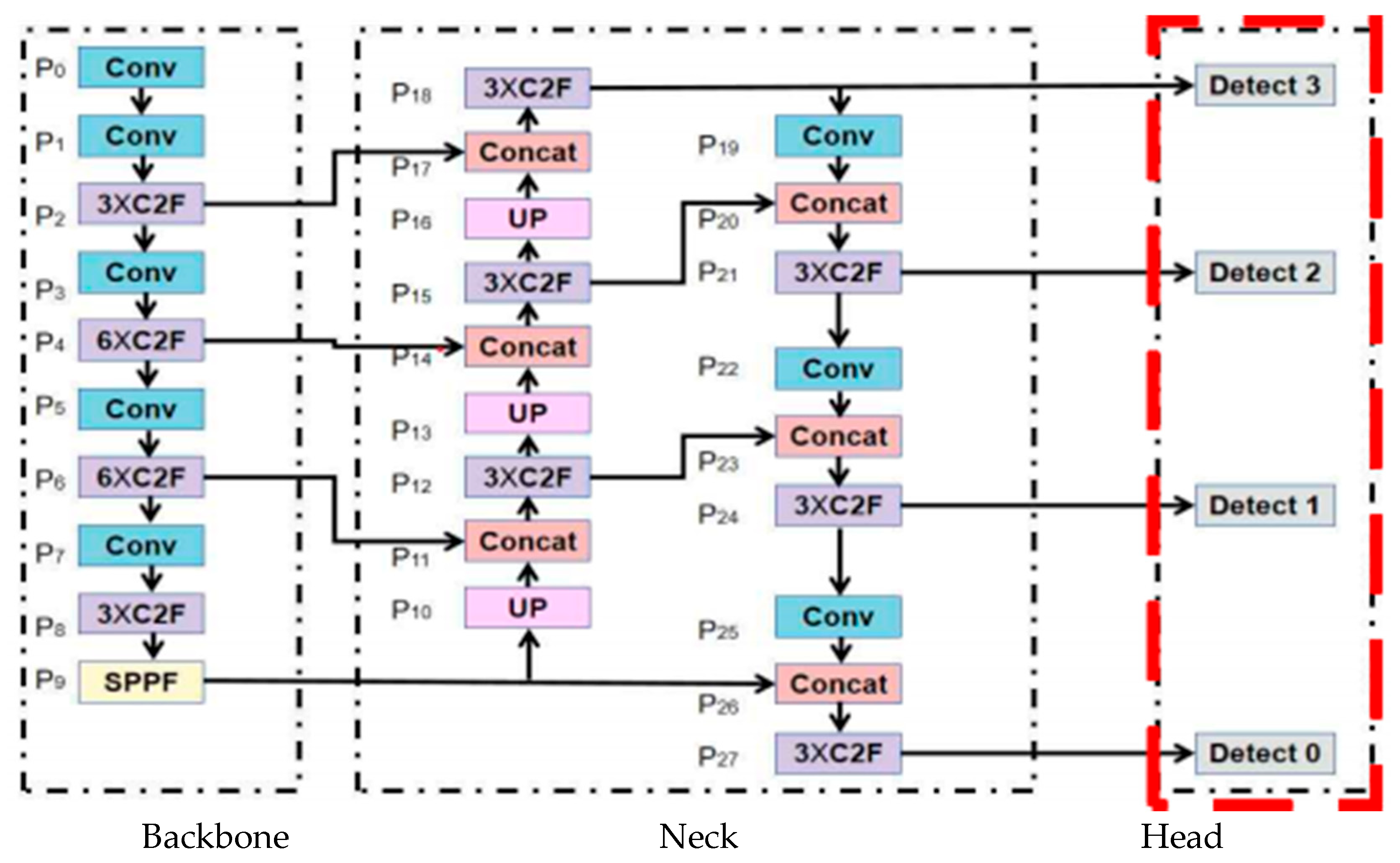

2.3.1. Detection Head

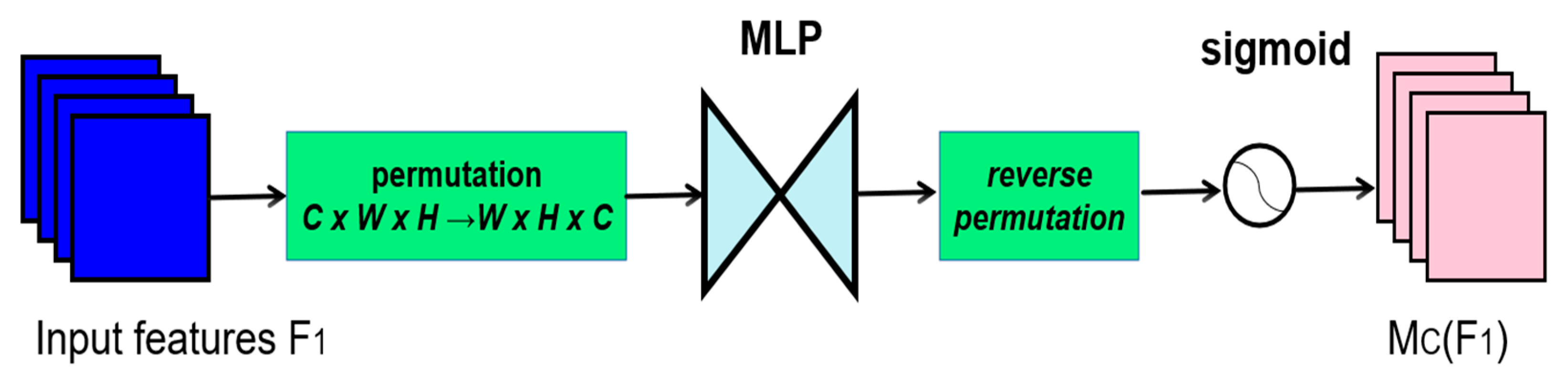
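The head variants compared later are described by the pyramid levels P1 to P5 they use. In YOLOv8, level Pk is a feature map downsampled by 2^k, and the stock detection head predicts from P3, P4 and P5 (strides 8, 16 and 32). The plain-Python sketch below is our illustration, not the authors' code. It counts the prediction cells per level for a 640-pixel input, which shows what adding a finer level such as P2 costs in extra predictions.

```python
def cells_per_level(img_size: int, levels):
    """Prediction cells per pyramid level Pk, where Pk has stride 2**k (square input)."""
    return {f"P{k}": (img_size // 2 ** k) ** 2 for k in levels}


default_head = cells_per_level(640, [3, 4, 5])   # stock YOLOv8 head: strides 8, 16, 32
with_p2 = cells_per_level(640, [2, 3, 4, 5])     # hypothetical head with an extra stride-4 level

print(default_head, sum(default_head.values()))
print(with_p2, sum(with_p2.values()))
```

For a 640 × 640 input, the stock head yields 8,400 cells. Adding P2 contributes another 25,600 cells (34,000 in total). That finer grid is what helps with small targets such as a notch, at a proportional cost in head computation.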

2.3.2. Global Attention Mechanism

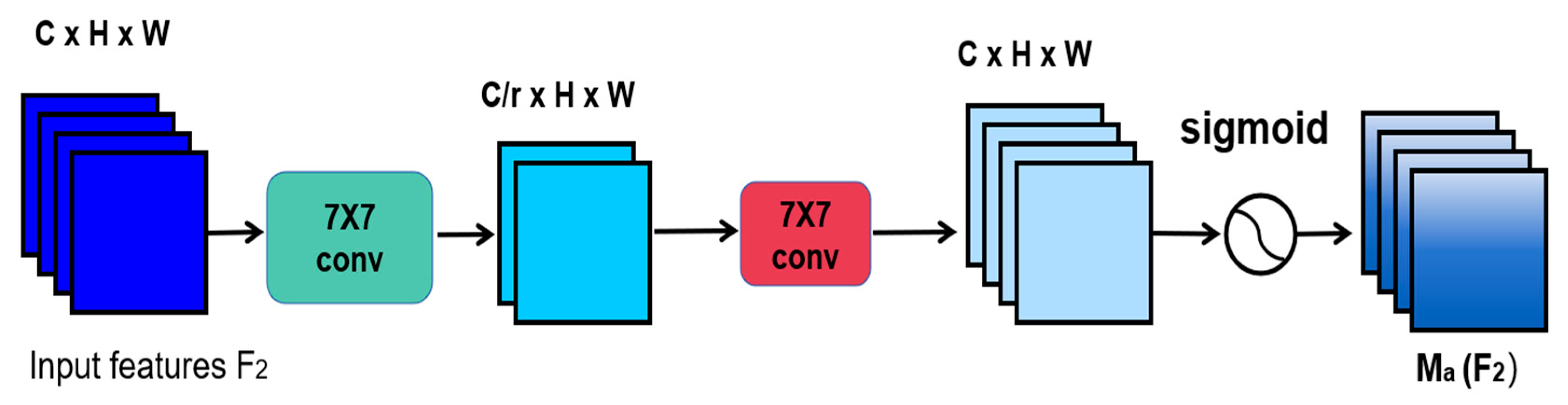

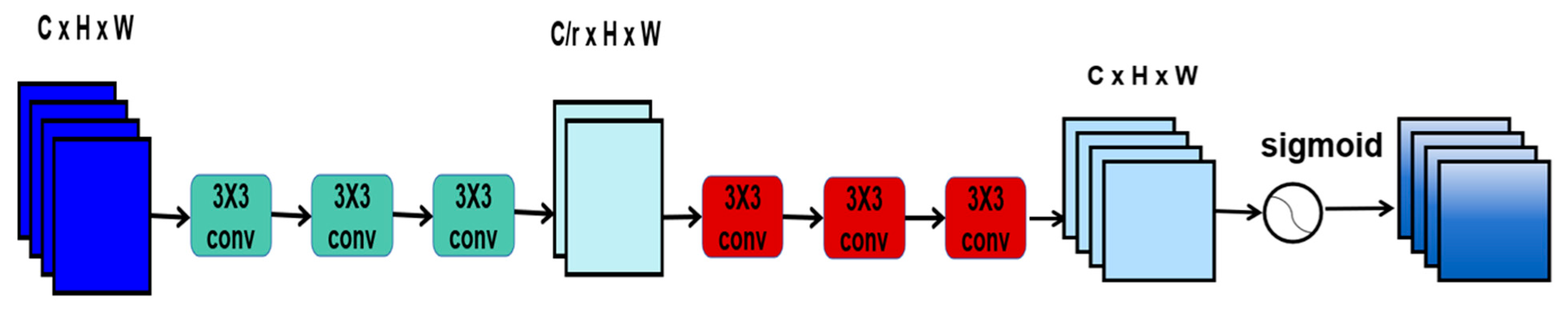

2.3.3. Global Attention Mechanism Improvements
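The following is a minimal PyTorch sketch of the Global Attention Mechanism as described by Liu et al. A channel branch applies a two-layer MLP (reduction rate r) across channels at every pixel. A spatial branch applies two 7 × 7 convolutions with batch normalization. Each branch ends in a sigmoid gate. The `stacked_3x3` switch is our assumption about the improvement discussed here, inferred from the kernel-configuration table that compares one 7 × 7 convolution with three stacked 3 × 3 convolutions. Several details are illustrative rather than the authors' code: the class name `GAM`, the default rate of 4, the absence of activations between the stacked layers, and running the intermediate 3 × 3 layers at the narrower channel width.

```python
import torch
import torch.nn as nn


def conv7x7_equivalent(c_in: int, c_out: int, stacked_3x3: bool) -> nn.Module:
    """One 7x7 conv, or (assumed variant) three stacked 3x3 convs with the same 7x7 field."""
    if not stacked_3x3:
        return nn.Conv2d(c_in, c_out, kernel_size=7, padding=3)
    mid = min(c_in, c_out)  # assumption: intermediate layers run at the narrower width
    return nn.Sequential(
        nn.Conv2d(c_in, mid, kernel_size=3, padding=1),
        nn.Conv2d(mid, mid, kernel_size=3, padding=1),
        nn.Conv2d(mid, c_out, kernel_size=3, padding=1),
    )


class GAM(nn.Module):
    """Channel attention (MLP over channels) followed by spatial attention (two convs)."""

    def __init__(self, channels: int, rate: int = 4, stacked_3x3: bool = False):
        super().__init__()
        hidden = channels // rate
        self.channel_attention = nn.Sequential(
            nn.Linear(channels, hidden),
            nn.ReLU(inplace=True),
            nn.Linear(hidden, channels),
        )
        self.spatial_attention = nn.Sequential(
            conv7x7_equivalent(channels, hidden, stacked_3x3),
            nn.BatchNorm2d(hidden),
            nn.ReLU(inplace=True),
            conv7x7_equivalent(hidden, channels, stacked_3x3),
            nn.BatchNorm2d(channels),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Channel attention: move C last so the MLP mixes channels at each (h, w) position.
        att_c = self.channel_attention(x.permute(0, 2, 3, 1)).permute(0, 3, 1, 2)
        x = x * torch.sigmoid(att_c)
        # Spatial attention: the convolutions produce a per-channel, per-pixel gate.
        att_s = self.spatial_attention(x)
        return x * torch.sigmoid(att_s)
```

With 64 channels and rate 4, the spatial branch of this sketch has 100,592 parameters with 7 × 7 kernels and 27,952 with the stacked variant. The exact saving depends on the assumed channel layout.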

3. Experimental Settings

3.1. Experimental Environment

Network Architecture Enhancements

3.2. Evaluation Metrics

3.2.1. Recall
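Recall measures the share of actual notches that the detector finds. The standard definition, assumed here, is:

```latex
\mathrm{Recall} = \frac{TP}{TP + FN}
```

Here TP is the number of correctly detected notches and FN the number of notches that were missed.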

3.2.2. Precision
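Precision measures the share of detections that are real notches. Under the standard definition, assumed here, a false detection on a notch-free (negative) image lowers precision but leaves recall unchanged:

```latex
\mathrm{Precision} = \frac{TP}{TP + FP}
```

Here FP is the number of detections that match no ground-truth notch.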

3.2.3. Mean Average Precision
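Average precision (AP) summarizes the precision-recall curve obtained by sweeping the confidence threshold. mAP50 averages AP over classes at an IoU threshold of 0.5. mAP50–95 also averages over the ten IoU thresholds 0.50, 0.55, ..., 0.95. The NumPy sketch below uses the all-point interpolation popularized by PASCAL VOC. To our knowledge, YOLOv8's own validator integrates a 101-point interpolated curve instead, so values can differ slightly. The function names are illustrative.

```python
import numpy as np


def pr_curve(scores, is_tp, num_gt):
    """Cumulative recall/precision after sorting detections by descending confidence."""
    order = np.argsort(-np.asarray(scores, dtype=float))
    hits = np.asarray(is_tp, dtype=float)[order]
    tp = np.cumsum(hits)
    fp = np.cumsum(1.0 - hits)
    return tp / num_gt, tp / (tp + fp)


def average_precision(recall, precision):
    """All-point interpolated AP: area under the monotone precision envelope."""
    r = np.concatenate(([0.0], recall, [1.0]))
    p = np.concatenate(([0.0], precision, [0.0]))
    p = np.maximum.accumulate(p[::-1])[::-1]  # best precision achievable at recall >= r
    step = np.flatnonzero(r[1:] != r[:-1])     # indices where recall increases
    return float(np.sum((r[step + 1] - r[step]) * p[step + 1]))


IOU_THRESHOLDS = np.linspace(0.50, 0.95, 10)  # 0.50, 0.55, ..., 0.95


def map_summary(ap_by_class_and_iou):
    """Return (mAP50, mAP50-95) from an AP array of shape (num_classes, 10)."""
    ap = np.asarray(ap_by_class_and_iou, dtype=float)
    return float(ap[:, 0].mean()), float(ap.mean())


rec, prec = pr_curve([0.9, 0.8, 0.7], [1, 0, 1], num_gt=2)
print(average_precision(rec, prec))  # ≈ 0.833
```

The gap between mAP50 and mAP50–95 reflects box tightness. A detector can find every notch at IoU 0.5 yet lose AP at the stricter thresholds.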

4. Results and Discussion

4.1. Class Imbalance and Misidentification Problems

4.2. Comparison with YOLOv8

4.3. Comparison of Results from New and Old Datasets

4.4. Different Attention Mechanisms

4.5. Adding the GAM at Different Positions

4.6. Improved Global Attention Mechanism

4.7. Comparison with Other Models

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Purwaningsih, L.; Konsulke, P.; Tonhaeuser, M.; Jantoljak, H. Defect Inspection Wafer Notch Orientation and Defect Detection Dependency. In Proceedings of the International Symposium for Testing and Failure Analysis, Phoenix, AZ, USA, 31 October–4 November 2021; ASM International: Almere, The Netherlands, 2021; Volume 84215, pp. 403–405.

- Chaudhry, A.; Kumar, M.J. Controlling Short-Channel Effects in Deep-Submicron SOI MOSFETs for Improved Reliability: A Review. IEEE Trans. Device Mater. Reliab. 2004, 4, 99–109.

- Qu, D.; Qiao, S.; Rong, W.; Song, Y.; Zhao, Y. Design and Experiment of the Wafer Pre-Alignment System. In Proceedings of the 2007 International Conference on Mechatronics and Automation, Kumamoto, Japan, 8–10 May 2007; pp. 1483–1488.

- Jiang, H.; Learned-Miller, E. Face Detection with the Faster R-CNN. In Proceedings of the 2017 12th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2017), Washington, DC, USA, 30 May–3 June 2017; pp. 650–657.

- Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving into High Quality Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6154–6162.

- Wang, H.; Sim, H.J.; Hwang, J.J.; Kwak, S.J.; Moon, S.J. YOLOv4-Based Semiconductor Wafer Notch Detection Using Deep Learning and Image Enhancement Algorithms. Int. J. Precis. Eng. Manuf. 2024, 25, 1909–1916.

- Li, Y.; Ren, F. Light-Weight RetinaNet for Object Detection. arXiv 2019, arXiv:1905.10011.

- Putra, M.H.; Yussof, Z.M.; Lim, K.C.; Salim, S.I. Convolutional Neural Network for Person and Car Detection Using YOLO Framework. J. Telecommun. Electron. Comput. Eng. 2018, 10, 67–71.

- Alruwaili, M.; Siddiqi, M.H.; Atta, M.N.; Arif, M. Deep Learning and Ubiquitous Systems for Disabled People Detection Using YOLO Models. Comput. Hum. Behav. 2024, 154, 108150.

- Gomes, H.; Redinha, N.; Lavado, N.; Mendes, M. Counting People and Bicycles in Real Time Using YOLO on Jetson Nano. Energies 2022, 15, 8816.

- Khobdeh, S.B.; Yamaghani, M.R.; Sareshkeh, S.K. Basketball Action Recognition Based on the Combination of YOLO and a Deep Fuzzy LSTM Network. J. Supercomput. 2024, 80, 3528–3553.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Ali, S.G.; Wang, X.; Li, P.; Li, H.; Yang, P.; Jung, Y.; Qin, J.; Kim, J.; Sheng, B. EGDNet: An Efficient Glomerular Detection Network for Multiple Anomalous Pathological Feature in Glomerulonephritis. Vis. Comput. 2024, 41, 2817–2834.

- Pan, W.; Yang, Z. A Lightweight Enhanced YOLOv8 Algorithm for Detecting Small Objects in UAV Aerial Photography. Vis. Comput. 2025, 41, 7123–7139.

- Shugui, Z.; Shuli, C.; Zhan, Z. Recognition Algorithm for Crop Leaf Diseases and Pests Based on Improved YOLOv8. J. Chin. Agric. Mech. 2024, 45, 255.

- Junos, M.H.; Mohd Khairuddin, A.S.; Thannirmalai, S.; Dahari, M. An Optimized YOLO-Based Object Detection Model for Crop Harvesting System. IET Image Process. 2021, 15, 2112–2125.

- Prinzi, F.; Insalaco, M.; Orlando, A.; Gaglio, S.; Vitabile, S. A YOLO-Based Model for Breast Cancer Detection in Mammograms. Cogn. Comput. 2024, 16, 107–120.

- Wang, G.; Chen, Y.; An, P.; Hong, H.; Hu, J.; Huang, T. UAV-YOLOv8: A Small-Object-Detection Model Based on Improved YOLOv8 for UAV Aerial Photography Scenarios. Sensors 2023, 23, 7190.

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Liu, Y.; Shao, Z.; Hoffmann, N. Global Attention Mechanism: Retain Information to Enhance Channel-Spatial Interactions. arXiv 2021, arXiv:2112.05561.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 11534–11542.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Wang, H.; Fan, Y.; Wang, Z.; Jiao, L.; Schiele, B. Parameter-Free Spatial Attention Network for Person Re-Identification. arXiv 2018, arXiv:1811.12150.

- Lou, H.; Duan, X.; Guo, J.; Liu, H.; Gu, J.; Bi, L.; Chen, H. DC-YOLOv8: Small-Size Object Detection Algorithm Based on Camera Sensor. Electronics 2023, 12, 2323.

- Zhang, Y.; Guo, Z.; Wu, J.; Tian, Y.; Tang, H.; Guo, X. Real-Time Vehicle Detection Based on Improved YOLOv5. Sustainability 2022, 14, 12274.

- Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The PASCAL Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

| Positive:Negative Ratio | Train Set (45°) | Train Set (112°) | Train Set (Negative) | Val Set (45°) | Val Set (112°) | Val Set (Negative) |
|---|---|---|---|---|---|---|
| 1:0 | 80 | 80 | 0 | 20 | 20 | 0 |
| 1:1 | 80 | 80 | 160 | 20 | 20 | 40 |
| 1:2 | 80 | 80 | 320 | 20 | 20 | 80 |
| 1:4 | 80 | 80 | 640 | 20 | 20 | 160 |
| 1:8 | 80 | 80 | 1280 | 20 | 20 | 320 |
| 1:16 | 80 | 80 | 2560 | 20 | 20 | 640 |
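In the dataset table above, every ratio row keeps the positive images fixed: 80 + 80 for training and 20 + 20 for validation, a 4:1 split. Only the notch-free (negative) images are scaled, so at a 1:k ratio the negatives number k times the positives. The short sketch below reproduces those counts and draws disjoint negatives from a pool. The pool of file names, the fixed seed, and the function names are hypothetical.

```python
import random

TRAIN_POS = {"45deg": 80, "112deg": 80}  # positive images per notch class (training)
VAL_POS = {"45deg": 20, "112deg": 20}    # positive images per notch class (validation)


def negative_counts(k: int) -> tuple[int, int]:
    """Negative images for a 1:k positive-to-negative ratio, returned as (train, val)."""
    return k * sum(TRAIN_POS.values()), k * sum(VAL_POS.values())


def sample_negatives(pool: list[str], k: int, seed: int = 0):
    """Draw disjoint train/val negatives from a hypothetical pool of notch-free images."""
    n_train, n_val = negative_counts(k)
    chosen = random.Random(seed).sample(pool, n_train + n_val)
    return chosen[:n_train], chosen[n_train:]


for k in (0, 1, 2, 4, 8, 16):
    print(f"1:{k}", negative_counts(k))
```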

| Setting | Value |
|---|---|
| Learning rate | 0.01 |
| Image size (pixels) | 640 |
| Batch size | 64 |
| Epochs | 2000 |
| Patience | 2000 |
| Central processing unit (CPU) | Intel Core i5-11400F |
| Graphics processing unit (GPU) | NVIDIA GeForce RTX 4070 |
| Deep learning framework | PyTorch |
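The settings above can be mapped onto training arguments, assuming training used the Ultralytics YOLOv8 trainer (the table itself lists only PyTorch). The following are our assumptions: the model config `yolov8n.yaml`, the dataset file `notch.yaml`, and reading "Learning rate" as the initial rate `lr0`. The argument names `epochs`, `patience`, `batch`, `imgsz` and `lr0` are documented Ultralytics training settings.

```python
# Table values expressed as Ultralytics training arguments (the mapping is an assumption).
TRAIN_ARGS = {
    "lr0": 0.01,       # "Learning rate", read as the initial learning rate
    "imgsz": 640,      # square input size in pixels
    "batch": 64,
    "epochs": 2000,
    "patience": 2000,  # equal to epochs, so early stopping effectively never triggers
}


def train(model_cfg: str = "yolov8n.yaml", data_yaml: str = "notch.yaml"):
    """Hypothetical entry point; both file names are placeholders."""
    from ultralytics import YOLO  # imported lazily so TRAIN_ARGS can be inspected without Ultralytics

    model = YOLO(model_cfg)
    return model.train(data=data_yaml, **TRAIN_ARGS)
```

Because patience equals the epoch budget, every run in this sketch trains for the full 2000 epochs unless it is stopped manually.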

| Kernel Configuration | Receptive Field | Parameters | FLOPs |
|---|---|---|---|
| 7 × 7 Conv | 7 × 7 | 49 | 49 |
| Three stacked 3 × 3 Conv | 7 × 7 (equivalent) | 27 | 27 |
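The numbers in the kernel-configuration table follow from two rules for stride-1 convolutions. Each k × k layer widens the receptive field by k − 1. Each layer contributes k² weights per input/output channel pair, ignoring bias. The table's FLOPs column equals its parameter column, which is consistent with counting multiply-accumulates per output position per channel pair. The helper name below is illustrative.

```python
def stacked_conv_stats(kernel: int, depth: int) -> tuple[int, int]:
    """Receptive field and weights per input/output channel pair (no bias)
    for `depth` stacked stride-1 convolutions of size kernel x kernel."""
    receptive_field = 1 + depth * (kernel - 1)  # each layer adds kernel - 1
    weights = depth * kernel * kernel           # also MACs per output pixel per channel pair
    return receptive_field, weights


single = stacked_conv_stats(7, 1)  # (7, 49)
stack = stacked_conv_stats(3, 3)   # (7, 27)
print(f"saving: {1 - stack[1] / single[1]:.1%}")  # saving: 44.9%
```

For layers with C input and C output channels, both counts scale by C², so the roughly 45% reduction carries over unchanged.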

| Positive:Negative Ratio | Precision | Recall | mAP50 | mAP50–95 |
|---|---|---|---|---|
| 1:0 | 0.605 | 1 | 0.986 | 0.597 |
| 1:1 | 0.964 | 0.998 | 0.985 | 0.581 |
| 1:2 | 0.967 | 1 | 0.985 | 0.595 |
| 1:4 | 0.966 | 0.998 | 0.987 | 0.587 |
| 1:8 | 0.965 | 0.999 | 0.976 | 0.564 |
| 1:16 | 0.964 | 0.998 | 0.985 | 0.544 |

| Names | A | B | C | D | E | F |
|---|---|---|---|---|---|---|
| P1 | P1 | P1 | P1 | P1 | | |
| P2 | P2 | P2 | P2 | P2 | | |
| P3 | P3 | P3 | P3 | P3 | | |
| P4 | P4 | P4 | P4 | P4 | | |
| P5 | P5 | P5 | P5 | P5 | | |

| Names | G | H | I | J | K |
|---|---|---|---|---|---|
| P1 | P1 | P1 | P1 | | |
| P2 | P2 | P2 | P2 | | |
| P3 | P3 | P3 | P3 | | |
| P4 | P4 | P4 | P4 | | |

| Model | Precision | Recall | mAP50 | mAP50–95 |
|---|---|---|---|---|
| A | 0.968 | 1 | 0.991 | 0.582 |
| B | 0.969 | 1 | 0.993 | 0.586 |
| C | 0.97 | 1 | 0.99 | 0.601 |
| D | 0.97 | 1 | 0.991 | 0.568 |
| E | 0.97 | 1 | 0.995 | 0.571 |
| F | 0.971 | 1 | 0.987 | 0.608 |
| G | 0.96 | 1 | 0.991 | 0.561 |
| H | 0.97 | 1 | 0.994 | 0.558 |
| I | 0.97 | 1 | 0.99 | 0.589 |
| J | 0 | 0 | 0 | 0 |
| K | 0.971 | 1 | 0.99 | 0.581 |

| Dataset | Recall | Precision | mAP50 | mAP50–95 |
|---|---|---|---|---|
| Old dataset | 1 | 0.971 | 0.985 | 0.608 |
| New dataset | 0.992 | 0.991 | 0.994 | 0.88 |

| Attention Mechanism | Precision | Recall | mAP50 | mAP50–95 |
|---|---|---|---|---|
| GAM | 0.996 | 1 | 0.995 | 0.937 |
| CBAM | 0.995 | 1 | 0.995 | 0.933 |
| SimAM | 0.994 | 1 | 0.995 | 0.882 |
| ECAM | 0.994 | 1 | 0.995 | 0.889 |
| SEAN | 0.994 | 1 | 0.995 | 0.911 |

| Position | Precision | Recall | mAP50 | mAP50–95 |
|---|---|---|---|---|
| Backbone | 0.997 | 1 | 0.995 | 0.937 |
| SPPF | 0.997 | 1 | 0.995 | 0.953 |
| Concat | 0.995 | 1 | 0.995 | 0.95 |
| All | 0.993 | 1 | 0.995 | 0.911 |

| Module | Precision | Recall | mAP50 | mAP50–95 |
|---|---|---|---|---|
| Old module | 0.994 | 1 | 0.988 | 0.953 |
| New module | 0.995 | 1 | 0.986 | 0.987 |

| Model | Precision (%) | Recall (%) | mAP50 (%) | mAP50–95 (%) |
|---|---|---|---|---|
| YOLOv5 | 96.8 | 100 | 99 | 56.5 |
| YOLOv7 | 97.1 | 100 | 98.2 | 57.8 |
| YOLOv8 | 98.8 | 100 | 98.5 | 59.8 |
| YOLOv10 | 99.6 | 100 | 99.8 | 57.2 |
| Improved YOLOv8 (ours) | 99.5 | 100 | 98.6 | 98.7 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, Y.; Sim, H.J.; Hwang, J.J.; Moon, S.J. Enhancing Wafer Notch Detection for Ion Implantation: Optimized YOLOv8 Approach with Global Attention Mechanism. Appl. Sci. 2025, 15, 9122. https://doi.org/10.3390/app15169122
