MDKAG: Retrieval-Augmented Educational QA Powered by a Multimodal Disciplinary Knowledge Graph

Abstract

1. Introduction

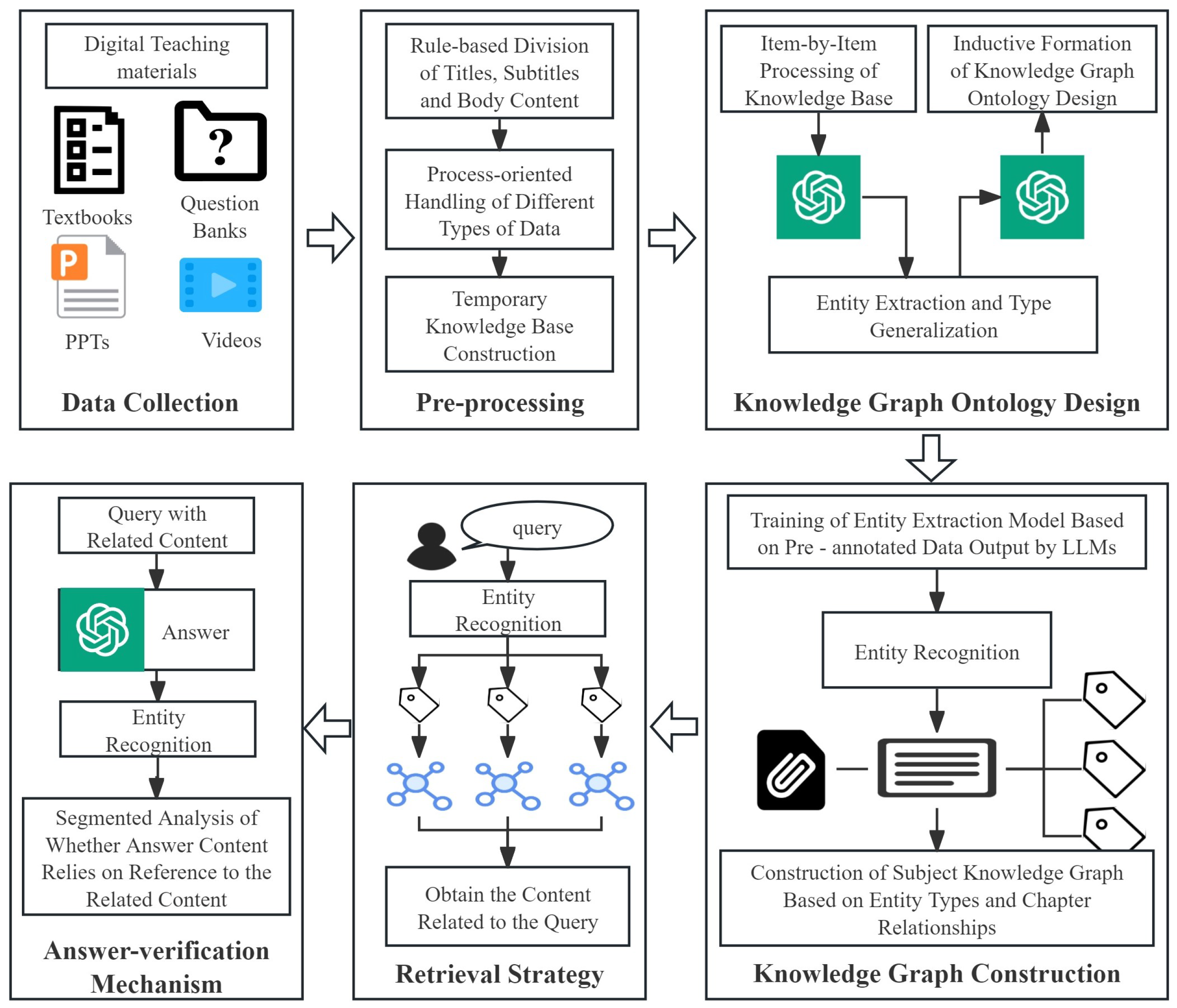

1. We present a general and updatable multimodal disciplinary knowledge-graph construction pipeline. Through multimodal data linkage preprocessing, LLM-driven bottom-up ontology design, and named-entity recognition, the framework automatically extracts concepts and builds the graph;

2. We design a relevance-first retrieval strategy that leverages graph topology and graph-search capabilities to accurately recall educational resources relevant to a query, thereby improving retrieval efficiency and quality;

3. We develop an answer-verification mechanism that combines semantic-overlap and entity-coverage checks to ensure the accuracy and reliability of generated answers, and we introduce a graph-update module for dynamic optimization and the expansion of the KG.

2. Related Work

- Data Processing and Indexing Stage: This stage processes input data sources, such as documents or knowledge bases, to enhance their representativeness. The process begins by segmenting documents into manageable chunks. Each chunk is then encoded using specialized encoding functions to obtain vector embeddings. Finally, the data is structured into easily retrievable formats, such as knowledge graphs, to facilitate efficient access during retrieval.

- Query Processing and Generation Stage: When a user submits a query q, the system first encodes it into a suitable vector representation. The system then retrieves the top-k most relevant data points or knowledge components based on similarity metrics. Finally, these retrieved elements are integrated with the original query to facilitate informed response generation from the language model.
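The two stages above can be illustrated with a minimal sketch. It is not the authors' implementation: the bag-of-words `embed` function stands in for a learned encoder, and the names `chunk`, `embed`, `cosine`, `retrieve`, and `build_prompt` are hypothetical helpers introduced only for illustration.

```python
import math
import re
from collections import Counter


def chunk(text, size=12):
    """Indexing stage, step 1: split a document into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text):
    """Toy encoder (assumption): term counts instead of a neural embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(u, v):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(u[t] * v[t] for t in u if t in v)
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def retrieve(query, chunks, k=2):
    """Query stage: encode the query and return the k most similar chunks."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


def build_prompt(query, retrieved):
    """Combine the retrieved chunks with the original query for the language model."""
    context = "\n".join(f"- {c}" for c in retrieved)
    return f"Context:\n{context}\n\nQuestion: {query}"


docs = [
    "Chroma subsampling reduces color resolution in digital television.",
    "Song dynasty embroidery used fine silk thread.",
    "Regular script is a style of Chinese calligraphy.",
]
top = retrieve("How does chroma subsampling work?", docs, k=1)
prompt = build_prompt("How does chroma subsampling work?", top)
```

Ranking here depends only on vector similarity, which is the behavior that Section 2.3 contrasts with relevance-driven graph retrieval.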

2.1. Evolution of Graph-Based RAG Techniques

2.2. RAG Applications in Education

2.3. Limitations of Existing Work

1. Graph construction: Current RAG frameworks build knowledge bases or graphs using a “human-defined schema plus LLM-assisted filling” paradigm; priorities differ across subjects, and reliance on LLM filling incurs hallucination risks;

2. Retrieval reasoning: By uniformly converting queries into vectors for indexing, the explicit semantics of the graph are blurred in the implicit vector space. Results favor similarity over relevance, failing to exploit the symbolic-reasoning advantages of graphs;

3. Absence of answer-verification mechanisms: Most work focuses on locating supporting passages before generation but seldom checks whether the answers maintain strong alignment with supporting materials after generation.

3. Methods

3.1. Strategy I: Multimodal Knowledge Fusion and Graph Construction

3.1.1. Data Collection

3.1.2. Data Preprocessing

3.1.3. Knowledge Graph Ontology Design

3.1.4. Knowledge Graph Construction

3.2. Strategy II: Relevance-Prioritized Retrieval Strategy

3.2.1. Query Entity Extraction and Classification

3.2.2. Graph-Based Retrieval with Divide-and-Conquer Strategy

1. Entity Type Analysis: Geographic (China) + Temporal (Song, Ming) + Artistic Practice (embroidery).

2. Combination Path Construction: Generate two precise retrieval paths:

   - Path 1: China + Song Dynasty + embroidery;

   - Path 2: China + Ming Dynasty + embroidery.

3. Interference Filtering: Avoid retrieving semantically similar but imprecise content.
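The three steps above can be sketched as follows, assuming that each retrieval path takes exactly one entity from every entity type and that a resource matches a path only when it is linked to all entities on that path. The function names and the toy resource index are hypothetical and are not taken from the MDKAG implementation.

```python
from itertools import product


def build_paths(entities_by_type):
    """Step 2: one path per combination of a single entity from each type."""
    types = list(entities_by_type)
    combos = product(*(entities_by_type[t] for t in types))
    return [dict(zip(types, combo)) for combo in combos]


def matches(path, resource_entities):
    """Step 3: keep a resource only if it covers every entity on the path."""
    return set(path.values()) <= set(resource_entities)


def retrieve(paths, resources):
    """Run each path independently and collect the matching resource ids."""
    return {
        tuple(path.values()): [rid for rid, ents in resources.items() if matches(path, ents)]
        for path in paths
    }


# Step 1: entities extracted from the query, grouped by type (toy data).
entities = {
    "geographic": ["China"],
    "temporal": ["Song Dynasty", "Ming Dynasty"],
    "artistic_practice": ["embroidery"],
}

# Hypothetical resource index: resource id -> entities it is linked to.
resources = {
    "r1": {"China", "Song Dynasty", "embroidery"},
    "r2": {"China", "Ming Dynasty", "embroidery"},
    "r3": {"China", "Tang Dynasty", "embroidery"},  # similar topic, wrong period
    "r4": {"China", "Song Dynasty", "porcelain"},   # right period, wrong practice
}

paths = build_paths(entities)
hits = retrieve(paths, resources)
```

With this toy index, `r3` and `r4` are excluded even though they share two of the three entities with a path, which is the kind of interference that exact path matching is meant to filter out.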

3.2.3. Hybrid Ranking Mechanism

3.3. Strategy III: Answer Verification and Update Strategy

3.3.1. Semantic-Entity Coverage Verification
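A check that combines semantic overlap with entity coverage, as named in the contributions, might look like the minimal sketch below. The details are assumptions made for illustration: token-level Jaccard overlap stands in for whatever semantic similarity measure is actually used, and the thresholds `overlap_min` and `coverage_min` are hypothetical values.

```python
import re


def tokens(text):
    """Lowercased alphanumeric tokens of a text, as a set."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def semantic_overlap(answer, evidence):
    """Jaccard overlap between answer and evidence tokens (stand-in measure)."""
    a, e = tokens(answer), tokens(evidence)
    union = a | e
    return len(a & e) / len(union) if union else 0.0


def entity_coverage(answer, entities):
    """Fraction of expected entities mentioned in the answer (case-insensitive)."""
    if not entities:
        return 1.0
    text = answer.lower()
    return sum(e.lower() in text for e in entities) / len(entities)


def verify(answer, evidence, entities, overlap_min=0.3, coverage_min=0.5):
    """Accept an answer only if both checks pass (thresholds are assumptions)."""
    return (
        semantic_overlap(answer, evidence) >= overlap_min
        and entity_coverage(answer, entities) >= coverage_min
    )


evidence = "Song Dynasty embroidery used fine silk thread"
expected = ["Song Dynasty", "embroidery"]
ok = verify("Song Dynasty embroidery used fine silk thread and needles", evidence, expected)
rejected = verify("Porcelain was exported widely", evidence, expected)
```

Requiring both checks means an answer that paraphrases the evidence but omits the queried entities, or one that names the entities without support from the evidence, is rejected.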

3.3.2. Dynamic Graph Update Mechanism

4. Experimental Setup

4.1. Datasets

4.2. Baselines

4.3. Metrics

4.3.1. Entity Extraction Evaluation

4.3.2. Semantic Similarity and Entity Coverage Assessment

4.3.3. End-to-End QA Evaluation

4.3.4. Answer Validation Criteria

4.4. Implementation Details

5. Results

5.1. Strategy I: Multimodal Knowledge Fusion and Graph Construction

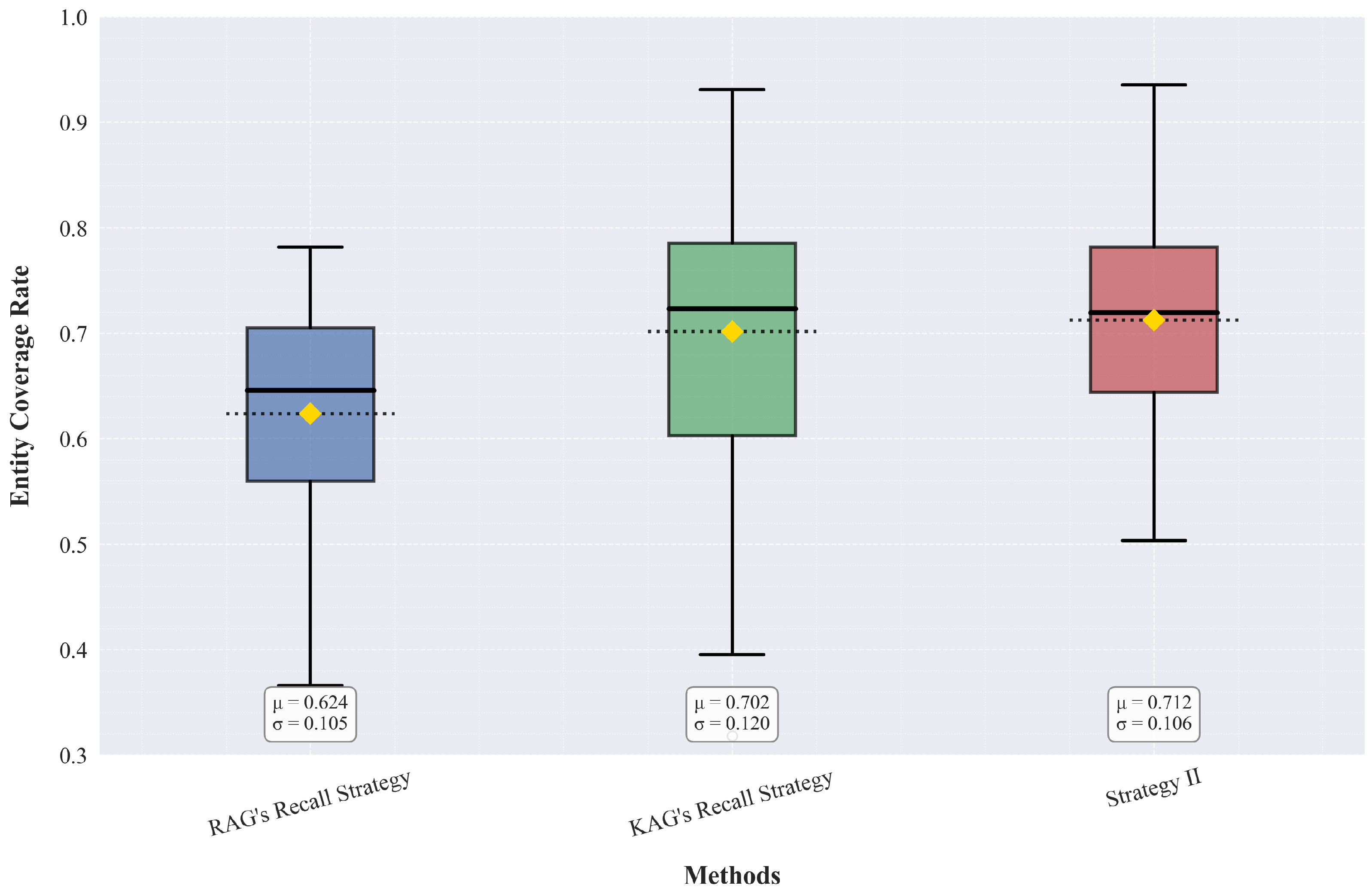

5.2. Strategy II: Relevance-Prioritized Retrieval Strategy

5.3. Strategy III: Answer Verification and Update Strategy

6. Discussion

6.1. Theoretical Breakthrough and Practical Value of Multimodal Knowledge Integration

6.2. Educational Significance of Retrieval Strategy Innovation and Answer Verification Mechanisms

6.3. Generalization Across Disciplines and Languages

6.4. Future Research Directions and Technological Development Prospects

- Personalized learning path generation, leveraging knowledge graph structural information to customize adaptive education for learners;

- Cross-lingual knowledge graph construction to promote international educational resource sharing;

- Real-time knowledge update mechanisms, developing efficient incremental learning algorithms;

- Cognitive load optimization, integrating educational psychology theories to optimize knowledge;

- Large-scale deployment studies, exploring performance optimization in practical applications.

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Subject | Text Segments | Images | Other Modalities |
|---|---|---|---|
| Principles of Digital Television | 1269 | 279 | 0 |
| Costume History | 900 | 564 | 0 |
| Calligraphy Appreciating | 1034 | 0 | 12 |

| Subject | Model | ACC | Recall | F1 |
|---|---|---|---|---|
| Principles of Digital Television | DeepSeek-R1 | 0.805 | 0.920 | 0.859 |
| | Kimi | 0.756 | 0.885 | 0.815 |
| | Doubao | 0.641 | 0.846 | 0.729 |
| | GPT-4o | 0.749 | 0.822 | 0.784 |
| | QwQ-32B | 0.781 | 0.911 | 0.840 |
| | GPT-3.5 | 0.671 | 0.791 | 0.726 |
| | GPT-3.5 + Strategy I | 0.880 | 0.896 | 0.888 |
| Costume History | DeepSeek-R1 | 0.867 | 0.730 | 0.793 |
| | Kimi | 0.781 | 0.766 | 0.773 |
| | Doubao | 0.640 | 0.820 | 0.719 |
| | GPT-4o | 0.751 | 0.791 | 0.770 |
| | QwQ-32B | 0.800 | 0.860 | 0.829 |
| | GPT-3.5 | 0.557 | 0.783 | 0.651 |
| | GPT-3.5 + Strategy I | 0.749 | 0.843 | 0.794 |
| Calligraphy Appreciating | DeepSeek-R1 | 0.842 | 0.910 | 0.875 |
| | Kimi | 0.771 | 0.860 | 0.813 |
| | Doubao | 0.610 | 0.831 | 0.704 |
| | GPT-4o | 0.721 | 0.843 | 0.777 |
| | QwQ-32B | 0.843 | 0.913 | 0.877 |
| | GPT-3.5 | 0.714 | 0.785 | 0.748 |
| | GPT-3.5 + Strategy I | 0.857 | 0.869 | 0.863 |
| Average | DeepSeek-R1 | 0.838 | 0.854 | 0.842 |
| | Kimi | 0.769 | 0.837 | 0.800 |
| | Doubao | 0.630 | 0.832 | 0.717 |
| | GPT-4o | 0.740 | 0.819 | 0.777 |
| | QwQ-32B | 0.808 | 0.895 | 0.849 |
| | GPT-3.5 | 0.648 | 0.787 | 0.708 |
| | GPT-3.5 + Strategy I | 0.829 | 0.869 | 0.848 |
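The F1 column in the per-subject rows of the table above can be reproduced as a harmonic mean, assuming the ACC column plays the role of precision. The source does not state this here, but the rows checked below are consistent with it. The helper `f1_score` is a hypothetical name introduced for illustration.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (ACC, Recall) pairs copied from the Principles of Digital Television rows.
deepseek_r1 = round(f1_score(0.805, 0.920), 3)
gpt35_strategy1 = round(f1_score(0.880, 0.896), 3)
```

The Average rows appear to average the per-subject values column by column rather than recompute F1 from the averaged ACC and Recall, so this formula is not expected to reproduce them exactly.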

| Subject | Method | Before Strategy III | After Strategy III |
|---|---|---|---|
| Principles of Digital Television | RAG | 0.627 | 0.733 (+16.9%) |
| | KAG | 0.653 | 0.760 (+16.4%) |
| | MDKAG | 0.667 | 0.787 (+18.0%) |
| Costume History | RAG | 0.680 | 0.693 (+1.9%) |
| | KAG | 0.720 | 0.720 (+0.0%) |
| | MDKAG | 0.746 | 0.760 (+1.9%) |
| Calligraphy Appreciating | RAG | 0.653 | 0.653 (+0.0%) |
| | KAG | 0.640 | 0.706 (+10.3%) |
| | MDKAG | 0.667 | 0.706 (+5.8%) |
| Average | RAG | 0.653 | 0.693 (+6.1%) |
| | KAG | 0.671 | 0.729 (+8.6%) |
| | MDKAG | 0.693 | 0.751 (+8.4%) |
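The percentages in parentheses in the table above are consistent with relative gains over the pre-verification score, not absolute point differences. A short worked example follows, where the symbols $s_{\text{before}}$ and $s_{\text{after}}$ are notation introduced here for illustration:

```latex
\Delta_{\text{rel}} = \frac{s_{\text{after}} - s_{\text{before}}}{s_{\text{before}}} \times 100\%

% RAG on Principles of Digital Television:
\Delta_{\text{rel}} = \frac{0.733 - 0.627}{0.627} \times 100\% \approx 16.9\%

% MDKAG, Average row:
\Delta_{\text{rel}} = \frac{0.751 - 0.693}{0.693} \times 100\% \approx 8.4\%
```

Read this way, the +18.0% for MDKAG on Principles of Digital Television corresponds to an absolute increase of 0.120 (from 0.667 to 0.787).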


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhao, X.; Wang, G.; Lu, Y. MDKAG: Retrieval-Augmented Educational QA Powered by a Multimodal Disciplinary Knowledge Graph. Appl. Sci. 2025, 15, 9095. https://doi.org/10.3390/app15169095
