Evaluating Psychological Competency via Chinese Q&A in Large Language Models

Abstract

1. Introduction

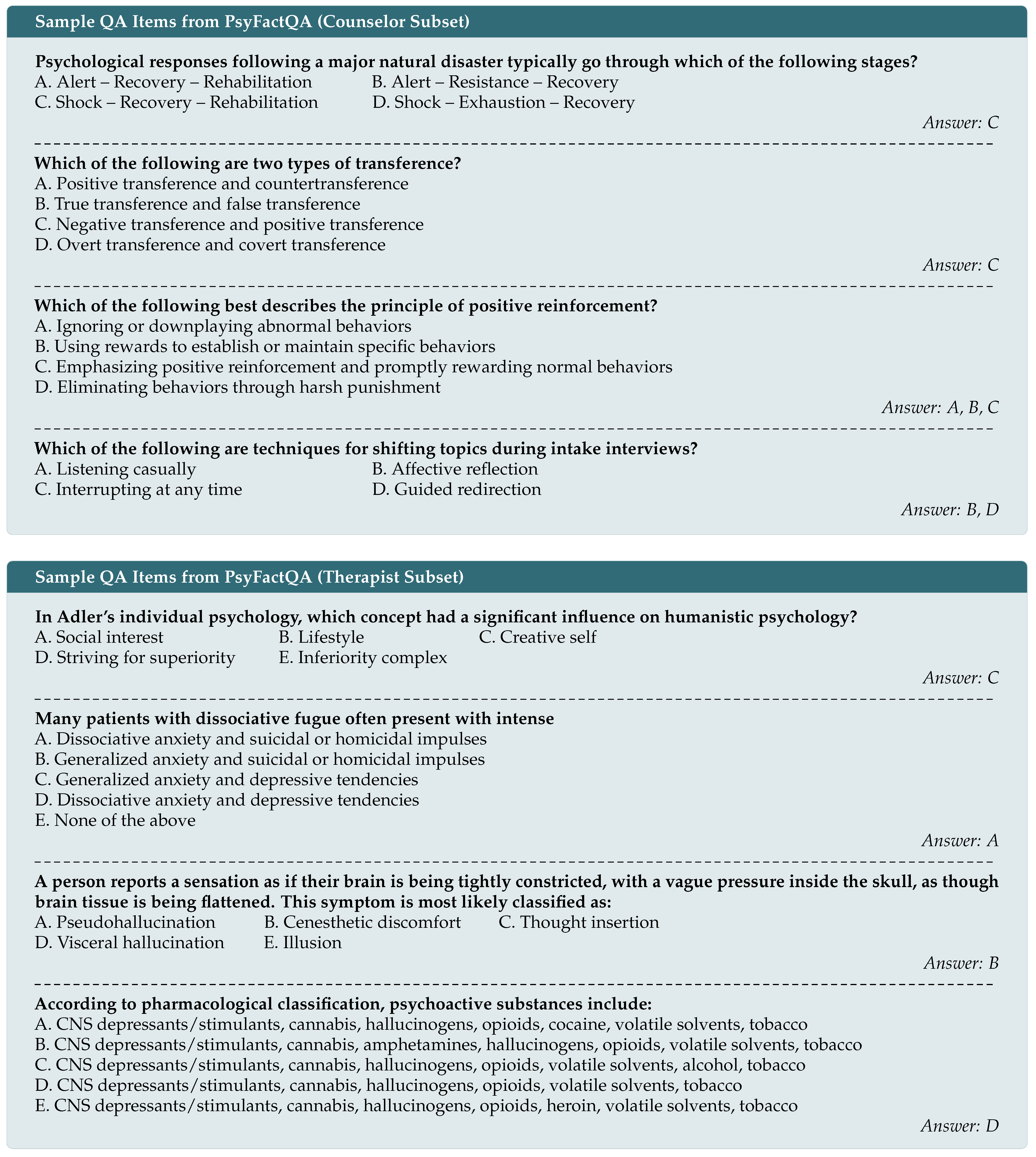

- We constructed a large-scale Chinese psychological QA dataset comprising over 6000 high-quality psychological QA items from professional practice exams for psychological counselors and psychotherapists. This dataset covers a broad spectrum of knowledge relevant to clinical psychological practice and mental disorder diagnosis.

- We evaluated diverse LLMs, including dense, MoE, and reasoning models, across a wide range of parameter scales (from ChatGLM-6B to the 671B-parameter DeepSeek-V3) in both closed-ended and open-ended settings. Experimental results suggest that larger and newer models generally achieve higher accuracy, although the gains are less consistent in the open-ended setting. MoE models outperform dense models with a comparable number of active parameters. Reasoning models provide limited accuracy gains but incur significantly higher inference latency.

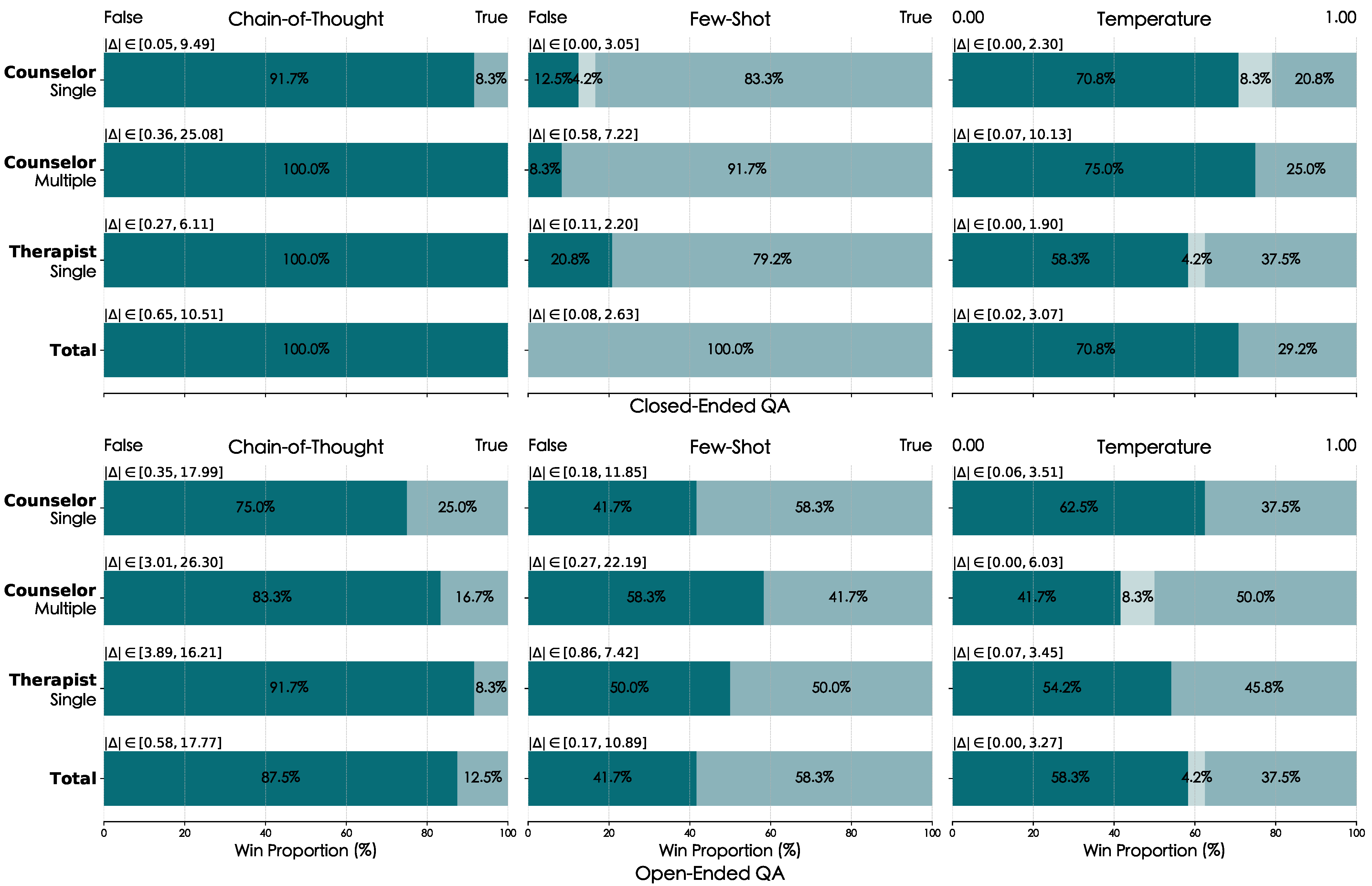

- We explored the impact of inference strategies on psychological QA using a win-rate comparison. Experimental results showed that few-shot learning consistently improved performance, while chain-of-thought (CoT) prompting offered limited benefits and increased latency. Lower temperatures improved accuracy in closed-ended QA but had negligible effects in open-ended settings.

- We conducted a fine-grained error analysis by ranking question-level pass rates. We identified three major error types: factual errors, reasoning errors, and decision errors. Our findings highlight that LLMs still struggle with some basic psychological factual knowledge, psychometric reasoning, and interpreting nuanced behavioral scenarios, reflecting limitations in psychological knowledge coverage and contextual understanding.
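The win-rate comparison is not defined in this part of the text. A minimal sketch, assuming that a strategy's win rate against a baseline is the share of evaluated models whose accuracy improves under that strategy, with ties counted as half a win (both the tie rule and the function name `win_rate` are illustrative assumptions, not the authors' definition):

```python
from typing import Mapping


def win_rate(treatment: Mapping[str, float], baseline: Mapping[str, float]) -> float:
    """Share of models whose accuracy under `treatment` beats `baseline`.

    Only models present in both mappings are compared; a tie counts as half
    a win (an illustrative assumption, not taken from the paper).
    """
    shared = sorted(set(treatment) & set(baseline))
    if not shared:
        raise ValueError("no models evaluated under both settings")
    score = 0.0
    for model in shared:
        if treatment[model] > baseline[model]:
            score += 1.0
        elif treatment[model] == baseline[model]:
            score += 0.5
    return score / len(shared)


# Hypothetical accuracies (%), not results from the paper.
zero_shot = {"model_a": 72.0, "model_b": 80.5, "model_c": 65.0}
few_shot = {"model_a": 74.5, "model_b": 80.5, "model_c": 67.2}
print(win_rate(few_shot, zero_shot))  # two wins and one tie out of three models
```

Under this definition, 0.5 means a strategy helps and hurts equally often, so values well above 0.5 would correspond to the "consistently improved" pattern reported for few-shot learning.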

2. Related Work

2.1. LLMs

2.2. Evaluations of LLMs

3. PsyFactQA: A Psychological QA Benchmark Dataset

4. Evaluation

4.1. Evaluated LLMs

- (1) Non-Reasoning Models:

- Qwen3† [4] (2025): The latest series of models from the Qwen family (in this paper, “latest” refers to versions released up to 1 May 2025), intended to advance performance, efficiency, and multilingual capabilities. Qwen3 was pre-trained on 36 trillion tokens drawn from a diverse corpus including plain text, text extracted from PDF documents, and synthetic data. We included two types of Qwen3 models: dense models (8B, 14B, and 32B) and MoE models (235B-A22B and 30B-A3B).

- DeepSeek-V3† [41] (2025): This MoE model has 671B total parameters, with 37B activated during inference. It was pre-trained on 14.8 trillion high-quality and diverse tokens, and optimized through supervised fine-tuning and reinforcement learning.

- Qwen2.5† [44] (2025): This series was trained on 18 trillion high-quality tokens, incorporating more common sense, expert knowledge, and reasoning information. More than 1 million samples were used during post-training to enhance instruction-following capabilities. We included four dense models: 7B, 14B, 32B, and 72B.

- GLM-4-0414† [42] (2025): The latest GLM dense model series, pre-trained on 15 trillion high-quality tokens and further optimized via supervised fine-tuning and human preference alignment. Two model sizes were included: 9B and 32B.

- GLM-4-9B-Chat† [59] (2024): This fourth-generation GLM model was pre-trained on 10 trillion multilingual tokens and fine-tuned with supervised learning and human feedback, showing strong performance in semantics, knowledge, and reasoning tasks.

- ChatGLM3-6B† [60] (2023): The third-generation model in the GLM family, this dialogue-optimized version performs competitively among models with fewer than 10 billion parameters, especially on knowledge and reasoning tasks.

- ChatGLM2-6B† [61] (2023): As the second-generation GLM model, it was pre-trained on 1.4 trillion bilingual tokens and fine-tuned with supervised learning and human preference alignment, outperforming similarly sized models at the time of release.

- ChatGLM-6B† [62] (2023): As the first-generation GLM model, it was pre-trained on 1 trillion tokens and fine-tuned with supervised learning and reinforcement learning.

- Qwen† [43] (2023): As the first-generation models in the Qwen family, they were pre-trained on 3 trillion tokens and fine-tuned with supervised learning and reinforcement learning from human feedback. They are reported to perform well in arithmetic and logical reasoning. The evaluation includes three dense models: 7B, 14B, and 72B.

- Baichuan2† [45] (2023): The latest series of models from the Baichuan family, pre-trained on 2.6 trillion high-quality tokens, with significantly improved performance across benchmarks. We included two dense models: 7B and 13B.

- ERNIE-4.5‡ [63] (2024): This is the latest closed-source LLM from the ERNIE family, jointly trained on text, images, and videos. It achieves significant improvements in cross-modal learning efficiency and multimodal understanding.

- GPT-4o‡ [2] (2024): One of the leading LLMs for dialogue, it achieves strong results across a wide range of benchmarks, including conversation, knowledge reasoning, and code generation, in both text and multimodal settings.

- ERNIE-4‡ [64] (2024): A widely adopted LLM, particularly for Chinese, it shows improved semantic understanding, text generation, and reasoning over its predecessor, making it suitable for complex tasks.

- ChatGPT‡ [1] (2022): Recognized as a milestone in conversational artificial intelligence, it delivers strong performance in language understanding and generation.

- (2) Reasoning Models:

- Qwen3-Think† [4] (2025): This series of models introduces an integrated thinking mode. We included five models spanning dense (8B, 14B, and 32B) and MoE (235B-A22B and 30B-A3B) architectures, with thinking mode enabled.

- QwQ-32B† [65] (2025): Based on Qwen2.5, this model is trained with multi-stage reinforcement learning: the first stage uses accuracy verification for math and code-execution feedback for coding, and a later stage adds a general reward model and rule-based verifiers to broaden its capabilities.

- GLM-Z1-0414† [42] (2025): Built on GLM-4-0414, this model series enhances deep reasoning through cold-start and extended reinforcement learning, improving performance on math and complex logic tasks. We included two sizes: 9B and 32B.

- ERNIE-X1‡ [68] (2025): The latest reasoning model from the ERNIE family, featuring enhanced comprehension, planning, and reflection. It excels in Chinese knowledge QA, literary generation, logical reasoning, and complex calculations.

- o3-mini‡ [69] (2025): A reasoning model focused on STEM domains, it improves efficiency while matching or surpassing its predecessor on multiple benchmarks.

- o4-mini‡ [47] (2025): The latest model in OpenAI's reasoning series, it builds on o3-mini with stronger tool use and problem-solving capabilities, outperforming its predecessor in expert evaluations and setting a new standard for reasoning.
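Qwen3 models in thinking mode and QwQ-32B place their reasoning trace inside `<think>...</think>` tags before the final answer, which plausibly accounts for much of their higher latency. Answer extraction therefore has to skip the trace. A minimal sketch under that tag convention; the function name is hypothetical, and the opening tag is treated as optional because some chat templates insert it into the prompt rather than the generated output:

```python
def split_reasoning(output: str) -> tuple[str, str]:
    """Split raw model output into (reasoning_trace, final_answer).

    Assumes the <think>...</think> convention: everything after the last
    closing tag is treated as the answer. Output without a closing tag is
    returned entirely as the answer.
    """
    closing = "</think>"
    if closing not in output:
        return "", output.strip()
    trace, answer = output.rsplit(closing, 1)
    # The opening tag may be absent if the chat template put it in the prompt.
    trace = trace.replace("<think>", "", 1)
    return trace.strip(), answer.strip()


raw = "<think>\nRatio IQ is mental age over chronological age, times 100.\n</think>\n\n答案是 C"
trace, answer = split_reasoning(raw)
print(answer)
```

Splitting on the last closing tag keeps the answer intact even if the trace itself mentions the tag.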

4.2. Evaluation Mode

- Direct Answering: LLMs are required to directly produce the answer option or content based on the given question, without step-by-step reasoning or any examples.

- Closed/Open-Ended: In the closed-ended setting, LLMs receive both the question stem and the answer options, mirroring standardized exam formats, and their responses are parsed with regular expressions. In the open-ended setting, only the question stem is provided, and Qwen3-32B judges whether each generated answer contains at least the correct answer.
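The paper does not list its regular expressions. A minimal sketch of closed-ended answer extraction and scoring, assuming options labeled A to E, answer cues such as “答案是” (“the answer is”) or “Answer:”, and exact-set matching for multiple-choice items (a response must select every correct option and no others); all patterns and names here are hypothetical:

```python
import re

# Hypothetical patterns. An option run is one or more letters A-E, optionally
# separated by commas or the Chinese enumeration comma "、".
_RUN = r"([A-E](?:\s*[,，、]?\s*[A-E])*)"
# A cue such as "答案是：" or "The answer is" before the run; the lookahead
# rejects words like "Anxiety" that merely start with an option letter.
CUE_PATTERN = re.compile(
    r"(?:正确答案|答案|[Aa]nswer)\s*(?:是|为|is)?\s*[:：]?\s*" + _RUN + r"(?![A-Za-z])"
)
# A response consisting of nothing but an option run (plus an optional period).
BARE_PATTERN = re.compile(_RUN + r"\s*[。.]?")


def extract_options(response: str) -> frozenset[str]:
    """Return the set of option letters a response commits to (empty if none)."""
    text = response.strip()
    match = CUE_PATTERN.search(text) or BARE_PATTERN.fullmatch(text)
    if match is None:
        return frozenset()
    return frozenset(re.findall(r"[A-E]", match.group(1)))


def is_correct(response: str, gold: str) -> bool:
    """Exact-set match: every key option selected and nothing else."""
    return extract_options(response) == frozenset(gold)


print(sorted(extract_options("答案是：A、C、D")))
```

Returning an empty set for unparseable responses makes them count as incorrect rather than raising an error, which keeps accuracy comparable across models that format their answers differently.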

4.3. Research Questions

- RQ1: How well do popular LLMs perform on the psychological QA dataset?

- RQ2: How do reasoning and non-reasoning LLMs differ on psychological QA?

- RQ3: How do different evaluation modes affect the accuracy of psychological QA?

- RQ4: What kinds of errors do LLMs make in psychological QA?

4.4. RQ1: Comparative Psychological QA Accuracy of Popular LLMs

4.5. RQ2: Reasoning vs. Non-Reasoning LLMs in Psychological QA Accuracy

4.6. RQ3: Psychological QA Performance Across Evaluation Modes

4.7. RQ4: Error Analysis of LLM Performance in Psychological QA
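The error analysis ranks question-level pass rates. A minimal sketch, assuming a question's pass rate is the fraction of evaluated models (or runs) that answered it correctly, so that the lowest-ranked questions become the candidates for manual error categorization; the input format and function names are hypothetical:

```python
from collections import defaultdict
from typing import Iterable


def question_pass_rates(results: Iterable[tuple[str, str, bool]]) -> dict[str, float]:
    """Map question_id -> fraction of (model, question) attempts answered correctly."""
    attempts: dict[str, int] = defaultdict(int)
    passes: dict[str, int] = defaultdict(int)
    for _model, question_id, correct in results:
        attempts[question_id] += 1
        passes[question_id] += int(correct)
    return {qid: passes[qid] / attempts[qid] for qid in attempts}


def hardest_questions(results: Iterable[tuple[str, str, bool]], k: int) -> list[tuple[str, float]]:
    """The k questions with the lowest pass rates (ties broken by question id)."""
    rates = question_pass_rates(results)
    return sorted(rates.items(), key=lambda item: (item[1], item[0]))[:k]


# Hypothetical per-question outcomes for two models.
results = [
    ("model_a", "q1", True), ("model_b", "q1", False),
    ("model_a", "q2", False), ("model_b", "q2", False),
    ("model_a", "q3", True), ("model_b", "q3", True),
]
print(hardest_questions(results, 2))  # [('q2', 0.0), ('q1', 0.5)]
```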

- Factual Error: This type includes questions related to established psychological knowledge, such as concepts from the history of psychology, psychotherapy, cognitive psychology, psychometrics, expert consensus, and diagnostic criteria for mental disorders. These questions have clear, fixed answers and usually require no reasoning. They assess the model’s ability to recall factual knowledge about psychological concepts, diagnostic standards, and medication recommendations. Examples: “Which event marked the birth of scientific psychology?”, “Which medications are used to treat bulimia nervosa?”.

- Reasoning Error: These questions involve basic psychometric computations and typically require recognizing relationships between variables or performing simple calculations. This category evaluates the model’s abilities in logic, causality, and basic numerical reasoning. Examples: “A child aged 8 has a mental age of 10. What is their ratio IQ?”, “A test item has a full score of 15 and an average score of 9.6. What is its difficulty index approximately?”

- Decision Error: This question type involves interpreting specific behavioral scenarios or case-based descriptions to identify the underlying psychological concept or state. Such questions provide detailed context or observable behaviors and evaluate the model’s ability to apply theoretical knowledge to diagnostic or interpretive judgments. Examples: “If a client constantly discusses others to prove they have no issues, this pattern may indicate:”, “When the counselor and client have inconsistent goals that are difficult to align, how should the goals be determined?”, “How should a counselor respond to a client’s conflicted silence?”
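The two Reasoning Error examples reduce to standard psychometric formulas: ratio IQ is mental age divided by chronological age, times 100, and for an item that is not scored 0/1 the difficulty index is the mean score divided by the full score (higher values mean easier items). A short sketch applying them; the function names are illustrative:

```python
def ratio_iq(mental_age: float, chronological_age: float) -> float:
    """Ratio IQ = mental age / chronological age x 100."""
    if chronological_age <= 0:
        raise ValueError("chronological age must be positive")
    return mental_age / chronological_age * 100


def difficulty_index(mean_score: float, full_score: float) -> float:
    """Difficulty index P for a non-dichotomous item = mean score / full score."""
    if full_score <= 0:
        raise ValueError("full score must be positive")
    return mean_score / full_score


print(ratio_iq(10, 8))                      # 125.0
print(round(difficulty_index(9.6, 15), 2))  # 0.64
```

With these formulas the two examples work out to an IQ of 125 and a difficulty index of 0.64.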

5. Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| LLMs | Large Language Models |
| QA | Question Answering |
| CoT | Chain-of-Thought |
| FS | Few-Shot |
| MoE | Mixture-of-Experts |

References

- OpenAI. Introducing ChatGPT. Available online: https://openai.com/blog/chatgpt (accessed on 27 July 2025).

- OpenAI. Hello GPT-4o. Available online: https://openai.com/index/hello-gpt-4o (accessed on 27 July 2025).

- Yang, A.; Yang, B.; Zhang, B.; Hui, B.; Zheng, B.; Yu, B.; Li, C.; Liu, D.; Huang, F.; Wei, H.; et al. Qwen2.5 Technical Report. arXiv 2025. [Google Scholar] [CrossRef]

- Yang, A.; Li, A.; Yang, B.; Zhang, B.; Hui, B.; Zheng, B.; Yu, B.; Gao, C.; Huang, C.; Lv, C.; et al. Qwen3 Technical Report. arXiv 2025. [Google Scholar] [CrossRef]

- Hou, W.; Ji, Z. Comparing Large Language Models and Human Programmers for Generating Programming Code. Adv. Sci. 2025, 12, 2412279. [Google Scholar] [CrossRef] [PubMed]

- Lyu, M.R.; Ray, B.; Roychoudhury, A.; Tan, S.H.; Thongtanunam, P. Automatic Programming: Large Language Models and Beyond. ACM Trans. Softw. Eng. Methodol. 2024, 34, 1–33. [Google Scholar] [CrossRef]

- Husein, R.A.; Aburajouh, H.; Catal, C. Large language models for code completion: A systematic literature review. Comput. Stand. Interfaces 2025, 92, 103917. [Google Scholar] [CrossRef]

- Singhal, K.; Tu, T.; Gottweis, J.; Sayres, R.; Wulczyn, E.; Amin, M.; Hou, L.; Clark, K.; Pfohl, S.R.; Cole-Lewis, H.; et al. Toward Expert-Level Medical Question Answering with Large Language Models. Nat. Med. 2025, 31, 943–950. [Google Scholar] [CrossRef]

- Louis, A.; van Dijck, G.; Spanakis, G. Interpretable Long-Form Legal Question Answering with Retrieval-Augmented Large Language Models. In Proceedings of the 38th AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 22266–22275. [Google Scholar]

- Li, X.; Zhou, Y.; Dou, Z. UniGen: A Unified Generative Framework for Retrieval and Question Answering with Large Language Models. In Proceedings of the 38th AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 8688–8696. [Google Scholar]

- Chen, Y.; Xing, X.; Lin, J.; Zheng, H.; Wang, Z.; Liu, Q.; Xu, X. SoulChat: Improving LLMs’ Empathy, Listening, and Comfort Abilities through Fine-Tuning with Multi-Turn Empathy Conversations. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2023, Singapore, 6–10 December 2023; pp. 1170–1183. [Google Scholar]

- Blanco-Cuaresma, S. Psychological Assessments with Large Language Models: A Privacy-Focused and Cost-Effective Approach. In Proceedings of the 9th Workshop on Computational Linguistics and Clinical Psychology, St. Julians, Malta, 21 March 2024; pp. 203–210. [Google Scholar]

- Zheng, D.; Lapata, M.; Pan, J.Z. How Reliable are LLMs as Knowledge Bases? Re-thinking Facutality and Consistency. arXiv 2024. [Google Scholar] [CrossRef]

- Demszky, D.; Yang, D.; Yeager, D.S.; Bryan, C.J.; Clapper, M.; Chandhok, S.; Eichstaedt, J.C.; Hecht, C.; Jamieson, J.; Johnson, M.; et al. Using Large Language Models in Psychology. Nat. Rev. Psychol. 2023, 2, 688–701. [Google Scholar] [CrossRef]

- Katz, D.M.; Bommarito, M.J.; Gao, S.; Arredondo, P. GPT-4 passes the bar exam. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 2024, 382, 20230254. [Google Scholar] [CrossRef]

- Ali, R.; Tang, O.Y.; Connolly, I.D.; Zadnik Sullivan, P.L.; Shin, J.H.; Fridley, J.S.; Asaad, W.F.; Cielo, D.; Oyelese, A.A.; Doberstein, C.E.; et al. Performance of ChatGPT and GPT-4 on Neurosurgery Written Board Examinations. Neurosurgery 2023, 93, 1353–1365. [Google Scholar] [CrossRef]

- Zong, H.; Wu, R.; Cha, J.; Wang, J.; Wu, E.; Li, J.; Zhou, Y.; Zhang, C.; Feng, W.; Shen, B. Large Language Models in Worldwide Medical Exams: Platform Development and Comprehensive Analysis. J. Med. Internet Res. 2024, 26, e66114. [Google Scholar] [CrossRef]

- Kasneci, E.; Sessler, K.; Küchemann, S.; Bannert, M.; Dementieva, D.; Fischer, F.; Gasser, U.; Groh, G.; Günnemann, S.; Hüllermeier, E.; et al. ChatGPT for Good? On Opportunities and Challenges of Large Language Models for Education. Learn. Individ. Differ. 2023, 103, 102274. [Google Scholar] [CrossRef]

- Xing, W.; Nixon, N.; Crossley, S.; Denny, P.; Lan, A.; Stamper, J.; Yu, Z. The Use of Large Language Models in Education. Int. J. Artif. Intell. Educ. 2025, 35, 439–443. [Google Scholar] [CrossRef]

- Huang, L.; Yu, W.; Ma, W.; Zhong, W.; Feng, Z.; Wang, H.; Chen, Q.; Peng, W.; Feng, X.; Qin, B.; et al. A Survey on Hallucination in Large Language Models: Principles, Taxonomy, Challenges, and Open Questions. ACM Trans. Inf. Syst. 2025, 43, 1–55. [Google Scholar] [CrossRef]

- Hao, G.; Wu, J.; Pan, Q.; Morello, R. Quantifying the Uncertainty of LLM Hallucination Spreading in Complex Adaptive Social Networks. Sci. Rep. 2024, 14, 16375. [Google Scholar] [CrossRef]

- Tang, W.; Cao, Y.; Deng, Y.; Ying, J.; Wang, B.; Yang, Y.; Zhao, Y.; Zhang, Q.; Huang, X.; Jiang, Y.; et al. EvoWiki: Evaluating LLMs on Evolving Knowledge. arXiv 2024. [Google Scholar] [CrossRef]

- Wang, W.; Shi, J.; Tu, Z.; Yuan, Y.; Huang, J.; Jiao, W.; Lyu, M.R. The Earth is Flat? Unveiling Factual Errors in Large Language Models. arXiv 2024. [Google Scholar] [CrossRef]

- Yoshida, L. The Impact of Example Selection in Few-Shot Prompting on Automated Essay Scoring Using GPT Models. In Artificial Intelligence in Education. Posters and Late Breaking Results, Workshops and Tutorials, Industry and Innovation Tracks, Practitioners, Doctoral Consortium and Blue Sky, Proceedings of the 25th International Conference, AIED 2024, Recife, Brazil, 8–12 July 2024; Springer: Cham, Switzerland, 2024; pp. 61–73. [Google Scholar]

- Steyvers, M.; Tejeda, H.; Kumar, A.; Belem, C.; Karny, S.; Hu, X.; Mayer, L.W.; Smyth, P. What Large Language Models Know and What People Think They Know. Nat. Mach. Intell. 2025, 7, 221–231. [Google Scholar] [CrossRef]

- Echterhoff, J.M.; Liu, Y.; Alessa, A.; McAuley, J.; He, Z. Cognitive Bias in Decision-Making with LLMs. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2024, Miami, FL, USA, 12–16 November 2024; pp. 12640–12653. [Google Scholar]

- Xie, H.; Chen, Y.; Xing, X.; Lin, J.; Xu, X. PsyDT: Using LLMs to Construct the Digital Twin of Psychological Counselor with Personalized Counseling Style for Psychological Counseling. arXiv 2024. [Google Scholar] [CrossRef]

- Lu, H.; Liu, T.; Cong, R.; Yang, J.; Gan, Q.; Fang, W.; Wu, X. QAIE: LLM-based Quantity Augmentation and Information Enhancement for few-shot Aspect-Based Sentiment Analysis. Inf. Process. Manag. 2025, 62, 103917. [Google Scholar] [CrossRef]

- Hellwig, N.C.; Fehle, J.; Wolff, C. Exploring large language models for the generation of synthetic training samples for aspect-based sentiment analysis in low resource settings. Expert Syst. Appl. 2025, 261, 125514. [Google Scholar] [CrossRef]

- Xu, X.; Yao, B.; Dong, Y.; Gabriel, S.; Yu, H.; Hendler, J.; Ghassemi, M.; Dey, A.K.; Wang, D. Mental-LLM: Leveraging Large Language Models for Mental Health Prediction via Online Text Data. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2024, 8, 1–32. [Google Scholar] [CrossRef]

- Zhang, J.; He, H.; Song, N.; Zhou, Z.; He, S.; Zhang, S.; Qiu, H.; Li, A.; Dai, Y.; Ma, L.; et al. ConceptPsy:A Benchmark Suite with Conceptual Comprehensiveness in Psychology. arXiv 2024. [Google Scholar] [CrossRef]

- Hu, J.; Dong, T.; Luo, G.; Ma, H.; Zou, P.; Sun, X.; Guo, D.; Yang, X.; Wang, M. PsycoLLM: Enhancing LLM for Psychological Understanding and Evaluation. IEEE Trans. Comput. Soc. Syst. 2025, 12, 539–551. [Google Scholar] [CrossRef]

- Zhao, J.; Zhu, J.; Tan, M.; Yang, M.; Li, R.; Di, Y.; Zhang, C.; Ye, G.; Li, C.; Hu, X.; et al. CPsyExam: A Chinese Benchmark for Evaluating Psychology using Examinations. In Proceedings of the 31st International Conference on Computational Linguistics, Abu Dhabi, United Arab Emirates, 19–24 January 2025; Rambow, O., Wanner, L., Apidianaki, M., Al-Khalifa, H., Eugenio, B.D., Schockaert, S., Eds.; pp. 11248–11260. [Google Scholar]

- Wang, C.; Luo, W.; Dong, S.; Xuan, X.; Li, Z.; Ma, L.; Gao, S. MLLM-Tool: A Multimodal Large Language Model for Tool Agent Learning. In Proceedings of the 2025 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Tucson, AZ, USA, 26 February–6 March 2025; pp. 6678–6687. [Google Scholar]

- Bai, S.; Chen, K.; Liu, X.; Wang, J.; Ge, W.; Song, S.; Dang, K.; Wang, P.; Wang, S.; Tang, J.; et al. Qwen2.5-VL Technical Report. arXiv 2025. [Google Scholar] [CrossRef]

- Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving Language Understanding by Generative Pre-Training. 2018. Available online: https://cdn.openai.com/research-covers/language-unsupervised/language_understanding_paper.pdf (accessed on 27 July 2025).

- Algherairy, A.; Ahmed, M. Prompting large language models for user simulation in task-oriented dialogue systems. Comput. Speech Lang. 2025, 89, 101697. [Google Scholar] [CrossRef]

- Seo, H.; Hwang, T.; Jung, J.; Kang, H.; Namgoong, H.; Lee, Y.; Jung, S. Large Language Models as Evaluators in Education: Verification of Feedback Consistency and Accuracy. Appl. Sci. 2025, 15, 671. [Google Scholar] [CrossRef]

- Duong, B.T.; Le, T.H. MedQAS: A Medical Question Answering System Based on Finetuning Large Language Models. In Future Data and Security Engineering, Big Data, Security and Privacy, Smart City and Industry 4.0 Applications, Proceedings of the 11th International Conference on Future Data and Security Engineering, FDSE 2024, Binh Duong, Vietnam, 27–29 November 2024; Dang, T.K., Küng, J., Chung, T.M., Eds.; Springer: Singapore, 2024; pp. 297–307. [Google Scholar]

- Polignano, M.; Musto, C.; Pellungrini, R.; Purificato, E.; Semeraro, G.; Setzu, M. XAI.it 2024: An Overview on the Future of AI in the era of Large Language Models. In Proceedings of the 5th Italian Workshop on Explainable Artificial Intelligence, Co-Located with the 23rd International Conference of the Italian Association for Artificial Intelligence, Bolzano, Italy, 25–28 November 2024; Volume 3839, pp. 1–10. [Google Scholar]

- DeepSeek-AI; Liu, A.; Feng, B.; Xue, B.; Wang, B.; Wu, B.; Lu, C.; Zhao, C.; Deng, C.; Zhang, C.; et al. DeepSeek-V3 Technical Report. arXiv 2025, arXiv:2412.19437. [Google Scholar] [CrossRef]

- THUDM. GLM-4-0414—A THUDM Collection. Available online: https://huggingface.co/collections/THUDM/glm-4-0414-67f3cbcb34dd9d252707cb2e (accessed on 27 July 2025).

- Qwen. Qwen—A Qwen Collection. Available online: https://huggingface.co/collections/Qwen/qwen-65c0e50c3f1ab89cb8704144 (accessed on 27 July 2025).

- Qwen. Qwen2.5—A Qwen Collection. Available online: https://huggingface.co/collections/Qwen/qwen25-66e81a666513e518adb90d9e (accessed on 27 July 2025).

- Yang, A.; Xiao, B.; Wang, B.; Zhang, B.; Bian, C.; Yin, C.; Lv, C.; Pan, D.; Wang, D.; Yan, D.; et al. Baichuan 2: Open Large-scale Language Models. arXiv 2025. [Google Scholar] [CrossRef]

- DeepSeek-AI; Guo, D.; Yang, D.; Zhang, H.; Song, J.; Zhang, R.; Xu, R.; Zhu, Q.; Ma, S.; Wang, P.; et al. DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning. arXiv 2025. [Google Scholar] [CrossRef]

- OpenAI. Introducing OpenAI o3 and o4-mini. Available online: https://openai.com/index/introducing-o3-and-o4-mini (accessed on 27 July 2025).

- Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Ichter, B.; Xia, F.; Chi, E.; Le, Q.V.; Zhou, D. Chain-of-Thought Prompting Elicits Reasoning in Large Language Models. In Proceedings of the 36th International Conference on Neural Information Processing Systems, New Orleans, LA, USA, 28 November–9 December 2022; Curran Associates, Inc.: Red Hook, NY, USA, 2022; Volume 35, pp. 24824–24837. [Google Scholar]

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. In Proceedings of the 34th International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 6–12 December 2020; Curran Associates, Inc.: Red Hook, NY, USA, 2020; Volume 33, pp. 1877–1901. [Google Scholar]

- Peeperkorn, M.; Kouwenhoven, T.; Brown, D.; Jordanous, A. Is Temperature the Creativity Parameter of Large Language Models? In Proceedings of the 15th International Conference on Computational Creativity, ICCC 2024, Jönköping, Sweden, 17–21 June 2024; Association for Computational Creativity: Jönköping, Sweden, 2024; pp. 226–235. [Google Scholar]

- Xiao, Z.; Deng, W.H.; Lam, M.S.; Eslami, M.; Kim, J.; Lee, M.; Liao, Q.V. Human-Centered Evaluation and Auditing of Language Models. In Proceedings of the Extended Abstracts of the CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 11–16 May 2024. CHI EA ’24. [Google Scholar]

- Zheng, S.; Zhang, Y.; Zhu, Y.; Xi, C.; Gao, P.; Xun, Z.; Chang, K. GPT-Fathom: Benchmarking Large Language Models to Decipher the Evolutionary Path towards GPT-4 and Beyond. In Proceedings of the Findings of the Association for Computational Linguistics: NAACL 2024, Mexico City, Mexico, 16–21 June 2024; Duh, K., Gomez, H., Bethard, S., Eds.; pp. 1363–1382. [Google Scholar]

- Chang, Y.; Wang, X.; Wang, J.; Wu, Y.; Yang, L.; Zhu, K.; Chen, H.; Yi, X.; Wang, C.; Wang, Y.; et al. A Survey on Evaluation of Large Language Models. ACM Trans. Intell. Syst. Technol. 2024, 15, 1–45. [Google Scholar] [CrossRef]

- Huang, Y.; Bai, Y.; Zhu, Z.; Zhang, J.; Zhang, J.; Su, T.; Liu, J.; Lv, C.; Zhang, Y.; Lei, J.; et al. C-Eval: A Multi-Level Multi-Discipline Chinese Evaluation Suite for Foundation Models. In Proceedings of the 37th International Conference on Neural Information Processing Systems, New Orleans, LA, USA, 10–16 December 2023; Curran Associates, Inc.: Red Hook, NY, USA, 2023; Volume 36, pp. 62991–63010. [Google Scholar]

- Hu, Y.; Goktas, Y.; Yellamati, D.D.; De Tassigny, C. The Use and Misuse of Pre-Trained Generative Large Language Models in Reliability Engineering. In Proceedings of the 2024 Annual Reliability and Maintainability Symposium (RAMS), Albuquerque, NM, USA, 22–25 January 2024; pp. 1–7. [Google Scholar]

- Fu, X.; Wu, G.; Chen, X.; Liu, Y.; Wang, Y.; Lin, C. Professional Qualification Certification for Psychological Counselors. In Proceedings of the 21st National Conference on Psychology, Beijing, China, 2 November 2018; p. 149. [Google Scholar]

- Wang, M.; Jiang, G.; Yan, Y.; Zhou, Z. Certification Methods for Professional Qualification of Psychological Counselors and Therapists in China. Chin. J. Ment. Health 2015, 29, 503–509. [Google Scholar]

- Shen, J.; Wu, Z. A Review of Competency Assessment Research for Psychotherapists in China. Med. Philos. 2021, 42, 50–53. [Google Scholar]

- THUDM. GLM-4-9B-Chat. Available online: https://huggingface.co/THUDM/glm-4-9b-chat (accessed on 27 July 2025).

- THUDM. ChatGLM3-6B. Available online: https://huggingface.co/THUDM/chatglm3-6b (accessed on 27 July 2025).

- THUDM. ChatGLM2-6B. Available online: https://huggingface.co/THUDM/chatglm2-6b (accessed on 27 July 2025).

- THUDM. ChatGLM-6B. Available online: https://huggingface.co/THUDM/chatglm-6b (accessed on 27 July 2025).

- Baidu. ERNIE-4.5. Available online: https://yiyan.baidu.com (accessed on 27 July 2025).

- Baidu. ERNIE-4. Available online: https://yiyan.baidu.com (accessed on 27 July 2025).

- Qwen Team. QwQ-32B: Embracing the Power of Reinforcement Learning. Available online: https://qwenlm.github.io/blog/qwq-32b/ (accessed on 27 July 2025).

- deepseek-ai. DeepSeek-R1. Available online: https://huggingface.co/deepseek-ai/DeepSeek-R1 (accessed on 27 July 2025).

- Shao, Z.; Wang, P.; Zhu, Q.; Xu, R.; Song, J.; Bi, X.; Zhang, H.; Zhang, M.; Li, Y.K.; Wu, Y.; et al. DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models. arXiv 2024, arXiv:2402.03300. [Google Scholar] [CrossRef]

- Baidu. ERNIE-X1. Available online: https://yiyan.baidu.com (accessed on 27 July 2025).

- OpenAI. OpenAI o3-mini. Available online: https://openai.com/index/openai-o3-mini (accessed on 27 July 2025).

- Fung, S.C.E.; Wong, M.F.; Tan, C.W. Chain-of-Thoughts Prompting with Language Models for Accurate Math Problem-Solving. In Proceedings of the 2023 IEEE MIT Undergraduate Research Technology Conference (URTC), Cambridge, MA, USA, 6–8 October 2023; pp. 1–5. [Google Scholar]

- Henkel, O.; Horne-Robinson, H.; Dyshel, M.; Thompson, G.; Abboud, R.; Ch, N.A.N.; Moreau-Pernet, B.; Vanacore, K. Learning to Love LLMs for Answer Interpretation: Chain-of-Thought Prompting and the AMMORE Dataset. J. Learn. Anal. 2025, 12, 50–64. [Google Scholar] [CrossRef]

- Yang, G.; Zhou, Y.; Chen, X.; Zhang, X.; Zhuo, T.Y.; Chen, T. Chain-of-Thought in Neural Code Generation: From and for Lightweight Language Models. IEEE Trans. Softw. Eng. 2024, 50, 2437–2457. [Google Scholar] [CrossRef]

- Tian, Z.; Chen, J.; Zhang, X. Fixing Large Language Models’ Specification Misunderstanding for Better Code Generation. In Proceedings of the 2025 IEEE/ACM 47th International Conference on Software Engineering (ICSE), Ottawa, ON, Canada, 27 April–3 May 2025; p. 645. [Google Scholar]

- Parikh, S.; Tiwari, M.; Tumbade, P.; Vohra, Q. Exploring Zero and Few-shot Techniques for Intent Classification. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 5: Industry Track), Toronto, ON, Canada, 9–14 July 2023; pp. 744–751. [Google Scholar]

- Li, Q.; Chen, Z.; Ji, C.; Jiang, S.; Li, J. LLM-based Multi-Level Knowledge Generation for Few-shot Knowledge Graph Completion. In Proceedings of the Thirty-Third International Joint Conference on Artificial Intelligence, IJCAI-24, Jeju, Republic of Korea, 3–9 August 2024; pp. 2135–2143, Main Track. [Google Scholar]

- Bi, J.; Zhu, W.; He, J.; Zhang, X.; Xian, C. Large Model Fine-tuning for Suicide Risk Detection Using Iterative Dual-LLM Few-Shot Learning with Adaptive Prompt Refinement for Dataset Expansion. In Proceedings of the 2024 IEEE International Conference on Big Data (BigData), Washington, DC, USA, 15–18 December 2024; pp. 8520–8526. [Google Scholar]

- Renze, M. The Effect of Sampling Temperature on Problem Solving in Large Language Models. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2024, Miami, FL, USA, 12–16 November 2024; pp. 7346–7356. [Google Scholar]

- Patel, D.; Timsina, P.; Raut, G.; Freeman, R.; Levin, M.A.; Nadkarni, G.N.; Glicksberg, B.S.; Klang, E. Exploring Temperature Effects on Large Language Models Across Various Clinical Tasks. medRxiv 2024. [Google Scholar] [CrossRef]

- Anderson, J. Cognitive Psychology and Its Implications; Post & Telecom Press: Beijing, China, 2012. [Google Scholar]

- Dai, X. Handbook of Common Psychological Assessment Scales; People’s Military Medical Press: Beijing, China, 2010. [Google Scholar]

- Jin, H.; Wu, W.; Zhang, M. A Preliminary Analysis of SCL-90 Scores in the Chinese Normal Population. Chin. J. Neuropsychiatr. Dis. 1986, 12, 260–263. [Google Scholar]

- Wang, X.; Wang, X.; Ma, H. Manual of Mental Health Rating Scales; Chinese Journal of Mental Health Press: Beijing, China, 1999. [Google Scholar]

- Shao, Z. Psychological Statistics; China Light Industry Press: Beijing, China, 2024. [Google Scholar]

- McCarty, R. The Alarm Phase and the General Adaptation Syndrome: Two Aspects of Selye’s Inconsistent Legacy. In Stress: Concepts, Cognition, Emotion, and Behavior; Academic Press: Cambridge, MA, USA, 2016; pp. 13–19. [Google Scholar]

- Loizzo, A.; Campana, G.; Loizzo, S.; Spampinato, S. Postnatal Stress Procedures Induce Long-Term Endocrine and Metabolic Alterations Involving Different Proopiomelanocortin-Derived Peptides. In Neuropeptides in Neuroprotection and Neuroregeneration; CRC Press: Boca Raton, FL, USA, 2012; pp. 107–126. [Google Scholar]

- Rudolf, G. Structure-oriented psychotherapy; [Strukturbezogene Psychotherapie]. Psychotherapeut 2012, 57, 357–372. [Google Scholar] [CrossRef]

- Corey, G. Theory and Practice of Counseling and Psychotherapy, 10th ed.; Cengage Learning: Boston, MA, USA, 2016. [Google Scholar]

- Amianto, F.; Ferrero, A.; Pierò, A.; Cairo, E.; Rocca, G.; Simonelli, B.; Fassina, S.; Abbate-Daga, G.; Fassino, S. Supervised Team Management, with or without Structured Psychotherapy, in Heavy Users of a Mental Health Service with Borderline Personality Disorder: A Two-Year Follow-up Preliminary Randomized Study. BMC Psychiatry 2011, 11, 181. [Google Scholar] [CrossRef]

| Question Type | Closed-Ended: Counselor | Closed-Ended: Therapist | Closed-Ended: Total | Open-Ended: Counselor | Open-Ended: Therapist | Open-Ended: Total |
|---|---|---|---|---|---|---|
| Single-Choice | 2001 | 2632 | 4633 | 1679 | 1388 | 3067 |
| Multiple-Choice | 1372 | 0 | 1372 | 365 | 0 | 365 |
| Total | 3373 | 2632 | 6005 | 2044 | 1388 | 3432 |
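The Total columns of the accuracy table appear to be item-weighted averages of the subset accuracies, with the counts above as weights (2001, 1372, and 2632 items in the first group of columns; 1679, 365, and 1388 in the second). This reading is inferred from the numbers rather than stated in the text. A minimal check against the Qwen3-32B row; the function and constant names are illustrative:

```python
from typing import Sequence


def weighted_total(accuracies: Sequence[float], counts: Sequence[int]) -> float:
    """Item-weighted mean accuracy (%) over question subsets."""
    if len(accuracies) != len(counts):
        raise ValueError("need one item count per accuracy")
    return sum(a * n for a, n in zip(accuracies, counts)) / sum(counts)


# Subsets in table order: counselor single-choice, counselor multiple-choice,
# therapist single-choice.
FIRST_GROUP_COUNTS = (2001, 1372, 2632)  # sums to 6005
SECOND_GROUP_COUNTS = (1679, 365, 1388)  # sums to 3432

# Qwen3-32B accuracies (%) copied from the results table.
first = weighted_total((88.96, 69.75, 83.28), FIRST_GROUP_COUNTS)
second = weighted_total((63.61, 57.26, 62.90), SECOND_GROUP_COUNTS)
print(f"{first:.2f} {second:.2f}")  # 82.08 62.65, matching the reported Totals
```

The same check could flag rows whose Total disagrees with their subset accuracies.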

| LLMs | Closed-Ended Counselor Single (%) | Closed-Ended Counselor Multiple (%) | Closed-Ended Therapist Single (%) | Closed-Ended Total (%) | Closed-Ended RL (s) | Open-Ended Counselor Single (%) | Open-Ended Counselor Multiple (%) | Open-Ended Therapist Single (%) | Open-Ended Total (%) | Open-Ended RL (s) |
|---|---|---|---|---|---|---|---|---|---|---|

| Qwen3-32B | 88.96 | 69.75 | 83.28 | 82.08 | 0.63 | 63.61 | 57.26 | 62.90 | 62.65 | 2.49 |

| Qwen3-14B | 84.36 | 64.21 | 79.71 | 77.72 | 0.25 | 58.43 | 51.78 | 60.01 | 58.36 | 0.80 |

| Qwen3-8B | 79.66 | 57.00 | 76.22 | 72.97 | 0.21 | 49.26 | 41.64 | 55.33 | 50.90 | 0.75 |

| Qwen3-235B-A22B | 91.20 | 67.93 | 86.97 | 84.03 | 0.75 | 63.19 | 53.97 | 67.51 | 63.96 | 0.33 |

| Qwen3-30B-A3B | 84.56 | 60.42 | 81.27 | 77.60 | 0.17 | 56.22 | 47.12 | 58.36 | 56.12 | 0.60 |

| DeepSeek-V3 | 88.41 | 72.38 | 85.14 | 83.31 | 1.29 | 67.18 | 57.81 | 65.13 | 65.36 | 5.23 |

| Qwen2.5-72B | 92.75 | 73.11 | 86.82 | 85.66 | 0.32 | 63.19 | 59.73 | 68.08 | 64.80 | 1.70 |

| Qwen2.5-32B | 94.40 | 80.39 | 68.96 | 80.05 | 0.14 | 64.38 | 55.89 | 64.70 | 63.61 | 2.11 |

| Qwen2.5-14B | 89.71 | 71.79 | 65.31 | 74.92 | 0.13 | 62.54 | 58.36 | 62.68 | 62.15 | 1.63 |

| Qwen2.5-7B | 87.06 | 70.34 | 62.54 | 72.49 | 0.08 | 55.45 | 51.23 | 55.69 | 55.10 | 0.64 |

| GLM-4-0414-32B | 83.71 | 62.61 | 81.35 | 77.85 | 0.15 | 56.76 | 43.29 | 58.57 | 56.06 | 0.73 |

| GLM-4-0414-9B | 77.01 | 51.09 | 73.52 | 69.56 | 0.13 | 48.00 | 39.73 | 53.17 | 49.21 | 1.41 |

| GLM-4-9B | 72.16 | 49.71 | 72.76 | 67.29 | 0.19 | 43.90 | 34.79 | 52.45 | 46.39 | 0.71 |

| ChatGLM3-6B | 44.28 | 28.50 | 42.02 | 39.68 | 0.09 | 13.28 | 11.23 | 24.14 | 17.45 | 0.86 |

| ChatGLM2-6B | 50.17 | 29.52 | 48.82 | 44.86 | 0.18 | 28.65 | 24.11 | 34.29 | 30.45 | 0.82 |

| ChatGLM-6B | 38.33 | 11.22 | 35.56 | 30.92 | 1.85 | 24.18 | 18.08 | 26.95 | 24.65 | 1.79 |

| Qwen-72B | 86.51 | 65.38 | 81.31 | 79.40 | 0.24 | 41.33 | 30.14 | 55.62 | 45.92 | 1.01 |

| Qwen-14B | 65.22 | 40.45 | 66.98 | 60.33 | 0.20 | 40.62 | 36.16 | 47.55 | 42.95 | 0.95 |

| Qwen-7B | 56.02 | 27.11 | 56.00 | 49.41 | 0.33 | 34.78 | 32.88 | 41.43 | 37.27 | 1.12 |

| Baichuan2-13B | 51.17 | 23.69 | 50.15 | 44.45 | 0.12 | 30.61 | 26.30 | 38.62 | 33.39 | 0.99 |

| Baichuan2-7B | 54.57 | 7.65 | 52.58 | 42.98 | 0.07 | 34.01 | 26.03 | 39.12 | 35.23 | 0.63 |

| ERNIE-4.5 | 95.85 | 82.73 | 88.98 | 89.84 | 0.61 | 77.49 | 73.70 | 74.78 | 75.99 | 2.05 |

| ERNIE-4 | 85.21 | 62.32 | 80.28 | 77.82 | 1.04 | 64.12 | 65.27 | 2.29 | ||

| GPT-4o | 76.66 | 53.06 | 80.24 | 72.84 | 0.75 | 53.60 | 40.82 | 61.38 | 55.39 | 2.10 |

| ChatGPT | 53.92 | 29.01 | 58.70 | 50.32 | 0.72 | 37.05 | 24.93 | 45.53 | 39.19 | 1.63 |

| Qwen3-32B-Think | 87.61 | 38.78 | 82.86 | 74.37 | 15.07 | 57.18 | 54.79 | 57.71 | 57.14 | 26.72 |

| Qwen3-14B-Think | 85.21 | 38.78 | 79.37 | 72.04 | 11.72 | 52.65 | 46.03 | 56.12 | 53.35 | 18.52 |

| Qwen3-8B-Think | 80.56 | 35.50 | 77.32 | 68.84 | 14.84 | 47.41 | 36.44 | 51.37 | 47.84 | 20.65 |

| Qwen3-235B-A22B-Think | 85.91 | 14.03 | 55.15 | 42.47 | 56.20 | 54.22 | 31.48 | |||

| Qwen3-30B-A3B-Think | 84.91 | 47.67 | 81.91 | 75.09 | 7.98 | 51.10 | 40.55 | 54.90 | 51.52 | 14.40 |

| QwQ-32B | 46.94 | 77.64 | 21.91 | 52.95 | 39.45 | 52.88 | 51.49 | 36.08 | ||

| DeepSeek-R1 | 91.35 | 76.46 | 81.91 | 83.81 | 20.92 | 70.34 | 64.11 | 71.47 | 70.13 | 35.69 |

| DeepSeek-R1-Distill- Qwen-32B | 83.96 | 42.06 | 81.00 | 73.09 | 8.74 | 45.15 | 31.78 | 47.69 | 44.76 | 17.30 |

| DeepSeek-R1-Distill-Qwen-7B | 51.37 | 18.08 | 46.50 | 41.63 | 11.24 | 15.55 | 12.05 | 19.74 | 16.87 | 13.64 |

| GLM-Z1-0414-32B | 84.41 | 43.95 | 80.78 | 73.57 | 16.27 | 48.12 | 35.89 | 51.30 | 48.11 | 29.84 |

| GLM-Z1-0414-9B | 69.82 | 26.60 | 69.41 | 59.77 | 18.14 | 28.83 | 20.55 | 37.32 | 31.38 | 32.29 |

| ERNIE-X1 | 78.51 | 63.41 | 74.66 | 73.37 | 44.20 | 64.92 | 58.36 | 65.71 | 64.54 | 38.61 |

| o4-mini | 78.71 | 55.25 | 79.67 | 73.77 | 7.73 | 57.95 | 46.03 | 67.29 | 60.46 | 2.49 |

| o3-mini | 74.11 | 52.48 | 77.01 | 70.44 | 5.14 | 57.36 | 47.95 | 64.77 | 59.35 | 14.81 |
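The Total (%) columns appear to be count-weighted averages of the three subset accuracies, with weights taken from the question counts in the dataset statistics table (2001/1372/2632 items in the first column group, read here as the closed-ended setting, and 1679/365/1388 in the second, read as the open-ended setting). The minimal Python sketch below recomputes the Qwen3-32B row under that assumption; the names `COUNTS` and `weighted_total` are illustrative and do not come from the paper.

```python
# Question counts per subset, from the dataset statistics table.
# Assumption: the first column group is the closed-ended setting (6005 items)
# and the second is the open-ended setting (3432 items).
COUNTS = {
    "closed": {"counselor_single": 2001, "counselor_multiple": 1372, "therapist_single": 2632},
    "open": {"counselor_single": 1679, "counselor_multiple": 365, "therapist_single": 1388},
}


def weighted_total(accuracies: dict[str, float], counts: dict[str, int]) -> float:
    """Count-weighted mean of per-subset accuracies (%), rounded to 2 decimals."""
    n_items = sum(counts.values())
    # Each subset accuracy contributes in proportion to its number of questions.
    weighted_sum = sum(accuracies[name] * counts[name] for name in counts)
    return round(weighted_sum / n_items, 2)


# Qwen3-32B subset accuracies (%) from the main results table.
qwen3_32b_closed = {"counselor_single": 88.96, "counselor_multiple": 69.75, "therapist_single": 83.28}
qwen3_32b_open = {"counselor_single": 63.61, "counselor_multiple": 57.26, "therapist_single": 62.90}

print(weighted_total(qwen3_32b_closed, COUNTS["closed"]))  # 82.08, as reported
print(weighted_total(qwen3_32b_open, COUNTS["open"]))      # 62.65, as reported
```

The same relation can serve as a quick consistency check on any complete row of the table.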

| Model pair (reasoning vs. base) | Closed-ended: Counselor Single | Closed-ended: Counselor Multiple | Closed-ended: Therapist Single | Closed-ended: Total | Closed-ended: RL | Open-ended: Counselor Single | Open-ended: Counselor Multiple | Open-ended: Therapist Single | Open-ended: Total | Open-ended: RL |
|---|---|---|---|---|---|---|---|---|---|---|
| Qwen3-32B-Think vs. Qwen3-32B | 0.98 | 0.56 | 0.99 | 0.91 | 23.92 | 0.90 | 0.96 | 0.92 | 0.91 | 10.73 |
| Qwen3-14B-Think vs. Qwen3-14B | 1.01 | 0.60 | 1.00 | 0.93 | 46.88 | 0.90 | 0.89 | 0.94 | 0.91 | 23.15 |
| Qwen3-8B-Think vs. Qwen3-8B | 1.01 | 0.62 | 1.01 | 0.94 | 70.67 | 0.96 | 0.88 | 0.93 | 0.94 | 27.53 |
| Qwen3-235B-A22B-Think vs. Qwen3-235B-A22B | 0.94 | 1.01 | 0.96 | 0.96 | 18.71 | 0.87 | 0.79 | 0.83 | 0.85 | 95.39 |
| Qwen3-30B-A3B-Think vs. Qwen3-30B-A3B | 1.00 | 0.79 | 1.01 | 0.97 | 46.94 | 0.91 | 0.86 | 0.94 | 0.92 | 24.00 |
| DeepSeek-R1 vs. DeepSeek-V3 | 1.03 | 1.06 | 0.96 | 1.01 | 16.22 | 1.05 | 1.11 | 1.10 | 1.07 | 6.82 |
| | 0.97 | 0.95 | 1.19 | 1.05 | 149.43 | 1.09 | 1.15 | 1.10 | 1.10 | 16.91 |
| DeepSeek-R1-Distill-Qwen-7B vs. Qwen2.5-7B | 0.59 | 0.26 | 0.74 | 0.57 | 140.50 | 0.28 | 0.24 | 0.35 | 0.31 | 21.31 |
| QwQ-32B vs. Qwen2.5-32B | 0.95 | 0.58 | 1.23 | 0.97 | 156.50 | 0.82 | 0.71 | 0.82 | 0.81 | 17.10 |
| GLM-Z1-0414-32B vs. GLM-4-0414-32B | 1.01 | 0.70 | 0.99 | 0.95 | 108.47 | 0.85 | 0.83 | 0.88 | 0.86 | 40.88 |
| GLM-Z1-0414-9B vs. GLM-4-0414-9B | 0.91 | 0.52 | 0.94 | 0.86 | 139.54 | 0.60 | 0.52 | 0.70 | 0.64 | 22.90 |
| ERNIE-X1 vs. ERNIE-4.5 | 0.82 | 0.77 | 0.84 | 0.82 | 72.46 | 0.84 | 0.79 | 0.88 | 0.85 | 18.83 |
| o4-mini vs. GPT-4o | 1.03 | 1.04 | 0.99 | 1.01 | 10.31 | 1.08 | 1.13 | 1.10 | 1.09 | 1.19 |
| o3-mini vs. GPT-4o | 0.97 | 0.99 | 0.96 | 0.97 | 6.85 | 1.07 | 1.17 | 1.06 | 1.07 | 7.05 |
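Each row of the ratio table appears to divide a reasoning model's scores by those of a non-reasoning counterpart in the main results table, column by column; for instance, Qwen3-32B-Think over Qwen3-32B reproduces the first row. A small sketch of that computation, with a hypothetical helper name `ratios`:

```python
def ratios(reasoning: list[float], base: list[float]) -> list[float]:
    """Column-wise reasoning/base ratio, rounded to 2 decimals."""
    if len(reasoning) != len(base):
        raise ValueError("both rows must cover the same columns")
    return [round(r / b, 2) for r, b in zip(reasoning, base)]


# Closed-ended columns from the main results table:
# Counselor Single, Counselor Multiple, Therapist Single, Total (%), RL (s).
qwen3_32b_think = [87.61, 38.78, 82.86, 74.37, 15.07]
qwen3_32b = [88.96, 69.75, 83.28, 82.08, 0.63]

print(ratios(qwen3_32b_think, qwen3_32b))  # [0.98, 0.56, 0.99, 0.91, 23.92]
```

Accuracy ratios below 1 mean the reasoning variant scored lower, while the RL ratio is a latency multiplier: in this example the thinking mode is roughly 24 times slower in the closed-ended setting without improving any accuracy column.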

| LLMs | CoT | FS | Temperature | Closed-ended: Counselor Single (%) | Closed-ended: Counselor Multiple (%) | Closed-ended: Therapist Single (%) | Closed-ended: Total (%) | Closed-ended: RL (s) | Open-ended: Counselor Single (%) | Open-ended: Counselor Multiple (%) | Open-ended: Therapist Single (%) | Open-ended: Total (%) | Open-ended: RL (s) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Qwen3-32B | ✗ | ✗ | 0.00 | 89.11 | 71.21 | 83.74 | 82.66 | 0.63 | 64.92 | 55.34 | 66.35 | 64.48 | 2.37 |
| Qwen3-32B | ✗ | ✗ | 1.00 | 88.96 | 69.75 | 83.28 | 82.08 | 0.63 | 63.61 | 57.26 | 62.90 | 62.65 | 2.49 |
| Qwen3-32B | ✓ | ✗ | 0.00 | 86.66 | 63.05 | 82.29 | 79.35 | 5.17 | 46.93 | 32.60 | 50.14 | 46.71 | 9.45 |
| Qwen3-32B | ✓ | ✗ | 1.00 | 85.16 | 60.86 | 80.40 | 77.52 | 5.33 | 48.48 | 30.96 | 50.07 | 47.26 | 10.05 |
| Qwen3-32B | ✗ | ✓ | 0.00 | 90.70 | 75.73 | | | 0.48 | 62.42 | 44.11 | 58.93 | 59.06 | 2.05 |
| Qwen3-32B | ✗ | ✓ | 1.00 | 90.90 | 75.22 | 84.42 | 84.48 | 0.49 | 61.11 | 44.38 | 60.66 | 59.15 | 2.08 |
| Qwen3-32B | ✓ | ✓ | 0.00 | 88.31 | 64.21 | 83.47 | 80.68 | 4.92 | 53.72 | 35.62 | 53.67 | 51.78 | 8.52 |
| Qwen3-32B | ✓ | ✓ | 1.00 | 87.86 | 64.65 | 82.37 | 80.15 | 4.99 | 52.17 | 37.81 | 53.89 | 51.34 | 9.14 |
| Qwen3-14B | ✗ | ✗ | 0.00 | 84.11 | 63.99 | 79.75 | 77.60 | 0.25 | 58.96 | 51.78 | 60.95 | 59.00 | 0.97 |
| Qwen3-14B | ✗ | ✗ | 1.00 | 84.36 | 64.21 | 79.71 | 77.72 | 0.25 | 58.43 | 51.78 | 60.01 | 58.36 | 0.80 |
| Qwen3-14B | ✓ | ✗ | 0.00 | 83.21 | 55.83 | 78.34 | 74.82 | 3.11 | 42.88 | 31.23 | 47.77 | 43.62 | 4.05 |
| Qwen3-14B | ✓ | ✗ | 1.00 | 82.51 | 55.47 | 78.61 | 74.62 | 3.15 | 43.72 | 35.07 | 47.91 | 44.49 | 4.04 |
| Qwen3-14B | ✗ | ✓ | 0.00 | 85.61 | 63.41 | 80.40 | 78.25 | 0.21 | 58.49 | 42.19 | 59.01 | 56.96 | 0.91 |
| Qwen3-14B | ✗ | ✓ | 1.00 | 85.26 | 62.97 | 80.05 | 77.89 | 0.21 | 58.25 | 45.75 | 58.36 | 56.96 | 0.89 |
| Qwen3-14B | ✓ | ✓ | 0.00 | 82.51 | 63.05 | 78.91 | 76.49 | 3.15 | 46.99 | 32.88 | 48.63 | 46.15 | 3.86 |
| Qwen3-14B | ✓ | ✓ | 1.00 | 84.81 | 58.67 | 78.76 | 76.19 | 3.11 | 46.75 | 34.79 | 49.93 | 46.77 | 3.89 |
| Qwen3-8B | ✗ | ✗ | 0.00 | 79.71 | 57.58 | 76.03 | 73.04 | 0.21 | 49.08 | 41.92 | 55.76 | 51.02 | 0.87 |
| Qwen3-8B | ✗ | ✗ | 1.00 | 79.66 | 57.00 | 76.22 | 72.97 | 0.21 | 49.26 | 41.64 | 55.33 | 50.90 | 0.75 |
| Qwen3-8B | ✓ | ✗ | 0.00 | 79.66 | 50.87 | 75.53 | 71.27 | 3.29 | 37.46 | 25.48 | 42.36 | 38.17 | 3.41 |
| Qwen3-8B | ✓ | ✗ | 1.00 | 79.51 | 45.34 | 75.23 | 69.83 | 3.19 | 39.31 | 25.75 | 42.72 | 39.25 | 3.47 |
| Qwen3-8B | ✗ | ✓ | 0.00 | 79.66 | 58.67 | 76.37 | 73.42 | 0.18 | 53.72 | 42.47 | 50.43 | 51.19 | 0.58 |
| Qwen3-8B | ✗ | ✓ | 1.00 | 79.66 | 59.40 | 76.41 | 73.61 | 0.18 | 53.78 | 41.92 | 50.58 | 51.22 | 0.70 |
| Qwen3-8B | ✓ | ✓ | 0.00 | 80.71 | 54.81 | 76.10 | 72.77 | 2.38 | 40.02 | 24.38 | 40.71 | 38.64 | 3.33 |
| Qwen3-8B | ✓ | ✓ | 1.00 | 80.16 | 52.55 | 75.46 | 71.79 | 2.47 | 40.98 | 28.77 | 41.14 | 39.74 | 3.37 |
| Qwen2.5-32B | ✗ | ✗ | 0.00 | 94.45 | 80.32 | 68.58 | 79.88 | 0.14 | 63.73 | 65.49 | 64.77 | | 1.90 |
| Qwen2.5-32B | ✗ | ✗ | 1.00 | 94.40 | 80.39 | 68.96 | 80.05 | 0.14 | 63.43 | 67.67 | 65.27 | | 2.11 |
| Qwen2.5-32B | ✓ | ✗ | 0.00 | 85.56 | 65.31 | 66.07 | 72.39 | 4.41 | 56.22 | 45.48 | 55.04 | 54.60 | 9.80 |
| Qwen2.5-32B | ✓ | ✗ | 1.00 | 84.91 | 59.11 | 64.17 | 69.93 | 5.02 | 54.62 | 47.40 | 56.41 | 54.57 | 10.13 |
| Qwen2.5-32B | ✗ | ✓ | 0.00 | 94.85 | | 70.67 | 81.73 | 0.28 | 59.20 | 58.63 | 62.39 | 60.43 | 2.41 |
| Qwen2.5-32B | ✗ | ✓ | 1.00 | | 84.33 | 71.16 | 82.03 | 0.31 | 59.86 | 56.44 | 61.82 | 60.29 | 2.74 |
| Qwen2.5-32B | ✓ | ✓ | 0.00 | 88.61 | 67.86 | 65.01 | 73.52 | 3.13 | 61.41 | 52.33 | 58.50 | 59.27 | 6.95 |
| Qwen2.5-32B | ✓ | ✓ | 1.00 | 86.66 | 61.95 | 65.05 | 71.54 | 3.78 | 60.21 | 49.86 | 57.78 | 58.13 | 8.48 |
| Qwen2.5-14B | ✗ | ✗ | 0.00 | 90.00 | 72.67 | 65.31 | 75.22 | 0.11 | 59.98 | 65.75 | 62.32 | 61.54 | 1.44 |
| Qwen2.5-14B | ✗ | ✗ | 1.00 | 89.71 | 71.79 | 65.31 | 74.92 | 0.13 | 59.80 | 64.66 | 61.10 | 60.84 | 1.63 |
| Qwen2.5-14B | ✓ | ✗ | 0.00 | 83.86 | 61.08 | 61.78 | 68.98 | 3.63 | 52.47 | 47.67 | 50.58 | 51.19 | 8.61 |
| Qwen2.5-14B | ✓ | ✗ | 1.00 | 81.76 | 50.95 | 61.66 | 65.91 | 4.32 | 53.01 | 51.78 | 52.02 | 52.48 | 9.18 |
| Qwen2.5-14B | ✗ | ✓ | 0.00 | 89.66 | 76.31 | 63.87 | 75.30 | 0.28 | 51.22 | 50.14 | 64.19 | 56.35 | 3.04 |
| Qwen2.5-14B | ✗ | ✓ | 1.00 | 89.76 | 76.38 | 63.91 | 75.37 | 0.32 | 53.13 | 46.58 | 64.84 | 57.17 | 3.51 |
| Qwen2.5-14B | ✓ | ✓ | 0.00 | 84.91 | 63.48 | 62.31 | 70.11 | 3.22 | 57.59 | 53.15 | 57.13 | 56.93 | 6.53 |
| Qwen2.5-14B | ✓ | ✓ | 1.00 | 84.41 | 57.14 | 61.17 | 67.99 | 3.87 | 56.58 | 51.51 | 57.35 | 56.35 | 8.17 |
| Qwen2.5-7B | ✗ | ✗ | 0.00 | 86.71 | 70.77 | 62.73 | 72.56 | 0.08 | 52.83 | 56.71 | 52.88 | 53.26 | 0.64 |
| Qwen2.5-7B | ✗ | ✗ | 1.00 | 87.06 | 70.34 | 62.54 | 72.49 | 0.08 | 50.21 | 54.25 | 50.86 | 50.90 | 0.64 |
| Qwen2.5-7B | ✓ | ✗ | 0.00 | 79.21 | 52.04 | 57.83 | 63.63 | 2.75 | 47.41 | 40.82 | 48.49 | 47.14 | 5.75 |
| Qwen2.5-7B | ✓ | ✗ | 1.00 | 79.21 | 45.26 | 57.60 | 61.98 | 2.82 | 44.55 | 42.19 | 45.75 | 44.78 | 6.11 |
| Qwen2.5-7B | ✗ | ✓ | 0.00 | 88.11 | 73.62 | 62.84 | 73.72 | 0.10 | 40.98 | 34.52 | 46.11 | 42.37 | 1.01 |
| Qwen2.5-7B | ✗ | ✓ | 1.00 | 87.91 | 73.25 | 63.22 | 73.74 | 0.10 | 39.31 | 34.52 | 44.09 | 40.73 | 0.76 |
| Qwen2.5-7B | ✓ | ✓ | 0.00 | 82.16 | 57.22 | 57.94 | 65.85 | 2.10 | 50.86 | 46.03 | 51.87 | 50.76 | 3.74 |
| Qwen2.5-7B | ✓ | ✓ | 1.00 | 80.71 | 51.02 | 57.33 | 63.68 | 2.33 | 47.35 | 40.00 | 49.64 | 47.49 | 4.56 |
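The paper summarizes these strategy results with a win-rate comparison, whose exact definition is not given in this section. As an illustration only, the sketch below uses one simple definition of our own: for every pair of configurations that differ in a single factor, it counts a win when the "treatment" setting (CoT on, few-shot on, or the 0.00/1.00 column, read here as sampling temperature, lowered from 1.0 to 0.0) has the higher closed-ended Total (%). It is restricted to Qwen3-14B and Qwen3-8B, whose eight rows are complete; `TOTALS`, `FACTORS`, and `win_rate` are hypothetical names.

```python
# Closed-ended Total accuracy (%) copied from the inference-strategy table.
# Key: (model, cot, few_shot, temperature).
TOTALS = {
    ("Qwen3-14B", False, False, 0.0): 77.60, ("Qwen3-14B", False, False, 1.0): 77.72,
    ("Qwen3-14B", True, False, 0.0): 74.82, ("Qwen3-14B", True, False, 1.0): 74.62,
    ("Qwen3-14B", False, True, 0.0): 78.25, ("Qwen3-14B", False, True, 1.0): 77.89,
    ("Qwen3-14B", True, True, 0.0): 76.49, ("Qwen3-14B", True, True, 1.0): 76.19,
    ("Qwen3-8B", False, False, 0.0): 73.04, ("Qwen3-8B", False, False, 1.0): 72.97,
    ("Qwen3-8B", True, False, 0.0): 71.27, ("Qwen3-8B", True, False, 1.0): 69.83,
    ("Qwen3-8B", False, True, 0.0): 73.42, ("Qwen3-8B", False, True, 1.0): 73.61,
    ("Qwen3-8B", True, True, 0.0): 72.77, ("Qwen3-8B", True, True, 1.0): 71.79,
}

# Factor -> (position in key, baseline value, treatment value).
FACTORS = {
    "cot": (1, False, True),
    "few_shot": (2, False, True),
    "temperature": (3, 1.0, 0.0),  # treatment = lowering T from 1.0 to 0.0
}


def win_rate(factor: str) -> float:
    """Fraction of matched pairs in which the treatment setting has the higher Total."""
    pos, baseline, treatment = FACTORS[factor]
    wins = pairs = 0
    for key, base_score in TOTALS.items():
        if key[pos] != baseline:
            continue
        # Same model and other settings; only the tested factor changes.
        treated_key = key[:pos] + (treatment,) + key[pos + 1:]
        if treated_key in TOTALS:
            pairs += 1
            wins += TOTALS[treated_key] > base_score
    return wins / pairs


for name in FACTORS:
    print(name, win_rate(name))
# cot 0.0
# few_shot 1.0
# temperature 0.75
```

On this subset, enabling CoT never raises the closed-ended Total, few-shot prompting always does, and lowering the temperature helps in six of eight pairs, which is in line with the trends the paper reports for closed-ended QA.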

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gao, F.; He, Y.; Chen, Q.; Liu, F. Evaluating Psychological Competency via Chinese Q&A in Large Language Models. Appl. Sci. 2025, 15, 9089. https://doi.org/10.3390/app15169089

Gao F, He Y, Chen Q, Liu F. Evaluating Psychological Competency via Chinese Q&A in Large Language Models. Applied Sciences. 2025; 15(16):9089. https://doi.org/10.3390/app15169089

Chicago/Turabian Style: Gao, Feng, Yishen He, Qin Chen, and Feng Liu. 2025. "Evaluating Psychological Competency via Chinese Q&A in Large Language Models" Applied Sciences 15, no. 16: 9089. https://doi.org/10.3390/app15169089

APA Style: Gao, F., He, Y., Chen, Q., & Liu, F. (2025). Evaluating Psychological Competency via Chinese Q&A in Large Language Models. Applied Sciences, 15(16), 9089. https://doi.org/10.3390/app15169089