Ten Natural Language Processing Tasks with Generative Artificial Intelligence

Abstract

1. Introduction

1.1. The State of the Art

1.2. Novelty of This Paper

- Information retrieval;

- Named entity recognition;

- Text classification;

- Machine translation;

- Text summarization;

- Question-and-answer generation;

- Fake news detection;

- Hate speech detection;

- Text generation;

- Sentiment analysis.

- ROUGE (Recall-Oriented Understudy for Gisting Evaluation).

- METEOR (Metric for Evaluation of Translation with Explicit ORdering).

- BLEU (Bilingual Evaluation Understudy).

- BERTScore.

- BARTScore (Bidirectional and Auto-Regressive Transformers).

- A comprehensive review and detailed analysis of recent scholarly publications on LLMs.

- An examination of the benefits and risks associated with the implementation of artificial intelligence.

- A description of text generation.

- A classification and description of ten NLP tasks that use LLMs.

- A detailed exposition of five widely accepted metrics for evaluating the quality of generated content.

- A discussion on the potential for the development of artificial intelligence that uses large language models.

2. Materials and Methods

2.1. Large Language Models

2.2. Natural Language Processing Tasks Using GenAI with Large Language Models

2.2.1. Information Retrieval

2.2.2. Named Entity Recognition

- Information extraction;

- Information retrieval;

- Text or document summarization;

- Social media monitoring;

- Named entity recognition;

- Question answering;

- Machine translation.

2.2.3. Text and Document Classification

- Text preparation, including size reduction;

- Feature extraction;

- Choice of classifier;

- Classification.
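
The four steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the pipeline of any cited work: the toy corpus, the tiny stop-word list, the TF-IDF weighting, and the nearest-centroid classifier are all hypothetical choices made for brevity.

```python
import math
import re
from collections import Counter, defaultdict

STOP_WORDS = {"the", "a", "is", "was", "and", "of", "to", "in"}  # assumed, deliberately tiny


def prepare(text):
    """Step 1: lowercase, tokenize, and drop stop words (a simple size reduction)."""
    return [t for t in re.findall(r"[a-z]+", text.lower()) if t not in STOP_WORDS]


def fit_idf(token_lists):
    """Step 2a: smoothed inverse document frequency learned from the training texts."""
    n_docs = len(token_lists)
    df = Counter(term for tokens in token_lists for term in set(tokens))
    return {term: math.log((1 + n_docs) / (1 + count)) + 1.0 for term, count in df.items()}


def tfidf(tokens, idf):
    """Step 2b: L2-normalized TF-IDF vector stored as a sparse dict."""
    counts = Counter(t for t in tokens if t in idf)
    vec = {t: c * idf[t] for t, c in counts.items()}
    norm = math.sqrt(sum(v * v for v in vec.values())) or 1.0
    return {t: v / norm for t, v in vec.items()}


def train_centroids(vectors, labels):
    """Step 3: the classifier chosen here is nearest centroid (mean vector per class)."""
    sums, sizes = defaultdict(Counter), Counter(labels)
    for vec, label in zip(vectors, labels):
        sums[label].update(vec)  # Counter.update adds the values term by term
    return {
        label: {t: v / sizes[label] for t, v in total.items()}
        for label, total in sums.items()
    }


def classify(text, idf, centroids):
    """Step 4: pick the class whose centroid has the largest dot product with the text."""
    vec = tfidf(prepare(text), idf)

    def score(label):
        return sum(w * centroids[label].get(t, 0.0) for t, w in vec.items())

    return max(centroids, key=score)


train_texts = [
    "The match ended with a late goal",
    "The team won the cup final",
    "The bank raised interest rates",
    "Shares fell as the market closed",
]
train_labels = ["sport", "sport", "finance", "finance"]

token_lists = [prepare(t) for t in train_texts]
idf = fit_idf(token_lists)
centroids = train_centroids([tfidf(t, idf) for t in token_lists], train_labels)
predicted = classify("Interest rates hit the market", idf, centroids)
```

With an LLM-based approach, steps 2 and 3 are typically replaced by learned embeddings or by prompting the model directly, but the preparation and final labeling steps keep the same shape.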

2.2.4. Machine Translation

2.2.5. Text Summarization

2.2.6. Question-and-Answer Generation

2.2.7. Fake News Detection

2.2.8. Hate Speech Detection

2.2.9. Text Generation

2.2.10. Sentiment Analysis

2.3. Metrics Used to Assess Text Quality

2.3.1. ROUGE Score

- ROUGE-L;

- ROUGE-W;

- ROUGE-N;

- ROUGE-S.
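
The variants above differ mainly in the unit of overlap they count. The sketch below illustrates two of them, ROUGE-N (n-gram recall) and ROUGE-L (an F-measure over the longest common subsequence). It assumes whitespace tokenization, a single reference, and equal weighting of precision and recall in ROUGE-L; ROUGE-W and ROUGE-S are omitted, and the function names are hypothetical.

```python
from collections import Counter


def ngrams(tokens, n):
    """Multiset of the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(reference, hypothesis, n=1):
    """ROUGE-N recall: clipped n-gram overlap divided by the reference n-gram count."""
    ref, hyp = ngrams(reference.split(), n), ngrams(hypothesis.split(), n)
    total = sum(ref.values())
    overlap = sum((ref & hyp).values())  # & keeps the minimum count per n-gram
    return overlap / total if total else 0.0


def lcs_length(a, b):
    """Length of the longest common subsequence, by row-wise dynamic programming."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(reference, hypothesis, beta=1.0):
    """ROUGE-L F-measure built from LCS-based recall and precision."""
    ref, hyp = reference.split(), hypothesis.split()
    lcs = lcs_length(ref, hyp)
    if lcs == 0:
        return 0.0
    recall, precision = lcs / len(ref), lcs / len(hyp)
    return (1 + beta ** 2) * precision * recall / (recall + beta ** 2 * precision)


reference = "the cat sat on the mat"
hypothesis = "the cat lay on the mat"
rouge_1 = rouge_n(reference, hypothesis, 1)  # 5 of 6 reference unigrams are matched
rouge_2 = rouge_n(reference, hypothesis, 2)  # 3 of 5 reference bigrams are matched
rouge_lcs = rouge_l(reference, hypothesis)   # the LCS has 5 tokens
```

A single substituted word costs one unigram but two bigrams, which is why ROUGE-2 drops faster than ROUGE-1 on this pair.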

2.3.2. METEOR

- Chunks is the number of consecutive sequences of matched words that appear in the same order in the generated translation (hypothesis) and the human translation (reference);

- MatchedWords is the total number of matched words, averaged over the references and the hypothesis;

- $\gamma$ and $\beta$ are tuned parameters (tuning procedure formula below; commonly $\gamma$ is set to 0.5 and $\beta$ to 3).
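
The fragmentation penalty built from these quantities can be illustrated as follows. This is a sketch under assumptions: it uses the common form weight × (Chunks / MatchedWords)^exponent with a weight of 0.5 and an exponent of 3 (often written as γ and β), exact-match alignment only, and a greedy left-to-right alignment. Full METEOR also matches stems and synonyms and searches for the alignment with the fewest chunks, so the greedy version can overcount chunks when words repeat. All function and parameter names are hypothetical.

```python
def align_exact(hypothesis, reference):
    """Greedy exact-match alignment: list of (hypothesis_index, reference_index) pairs."""
    used = set()
    pairs = []
    for i, word in enumerate(hypothesis):
        for j, ref_word in enumerate(reference):
            if j not in used and word == ref_word:
                used.add(j)
                pairs.append((i, j))
                break
    return pairs


def count_chunks(pairs):
    """A chunk is a run of matches adjacent in both the hypothesis and the reference."""
    chunks = 0
    previous = None
    for i, j in pairs:
        if previous is None or i != previous[0] + 1 or j != previous[1] + 1:
            chunks += 1
        previous = (i, j)
    return chunks


def fragmentation_penalty(hypothesis, reference, weight=0.5, exponent=3.0):
    """Penalty = weight * (Chunks / MatchedWords) ** exponent; 0 when nothing matches."""
    pairs = align_exact(hypothesis.split(), reference.split())
    if not pairs:
        return 0.0
    return weight * (count_chunks(pairs) / len(pairs)) ** exponent


reference = "a cat sat on the mat"
reordered = "on the mat a cat sat"  # same words, split into two blocks
chunks = count_chunks(align_exact(reordered.split(), reference.split()))
penalty_reordered = fragmentation_penalty(reordered, reference)
penalty_identical = fragmentation_penalty(reference, reference)
```

The reordered sentence matches every word but in two chunks instead of one, so its penalty is eight times that of the identical sentence.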

- $w_i$ is the weight of matcher $i$;

- $\delta$ defines the word weight value;

- and are tuned parameters (tuning procedure formula below, default value is set 0.9);

- $m_i(r_c)$ is the number of content words matched in the references;

- $m_i(r_f)$ is the count of function words matched in the references.

- $w_i$ is the weight of matcher $i$;

- $\delta$ defines the word weight value;

- and are tuned parameters (tuning procedure formula below);

- $m_i(h_c)$ is the number of content words matched in the hypothesis;

- $m_i(h_f)$ is the count of function words matched in the hypothesis.
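
The recall and precision described by the two lists above weight each matcher's content-word and function-word matches. The sketch below assumes the form used in METEOR Universal: a matcher-weighted sum of matches divided by the weighted word totals, followed by a parameterized harmonic mean. The totals of content and function words, the value 0.75 for the word weight, and the use of 0.9 as the F-mean parameter are assumptions for illustration, and all names are hypothetical.

```python
def weighted_ratio(matcher_weights, delta, matched_content, matched_function,
                   total_content, total_function):
    """Weighted precision (hypothesis totals) or recall (reference totals).

    matched_content[i] and matched_function[i] are the counts found by matcher i.
    """
    numerator = sum(
        w * (delta * mc + (1 - delta) * mf)
        for w, mc, mf in zip(matcher_weights, matched_content, matched_function)
    )
    denominator = delta * total_content + (1 - delta) * total_function
    return numerator / denominator if denominator else 0.0


def f_mean(precision, recall, alpha=0.9):
    """Parameterized harmonic mean; alpha close to 1 emphasizes recall."""
    denominator = alpha * precision + (1 - alpha) * recall
    return precision * recall / denominator if denominator else 0.0


# One exact-match matcher with weight 1.0; 3 content and 2 function words are matched.
weights, delta = [1.0], 0.75
precision = weighted_ratio(weights, delta, [3], [2], total_content=4, total_function=2)
recall = weighted_ratio(weights, delta, [3], [2], total_content=5, total_function=2)
score_without_penalty = f_mean(precision, recall)
```

The final METEOR score multiplies this F-mean by one minus the fragmentation penalty.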

2.3.3. BLEU

- N is the highest n-gram order, typically set to 4.

- $w_n$ is the weight for each n-gram precision.

- $p_n$ is the modified precision for n-grams, the basic BLEU metric. It counts how many contiguous word sequences of length n from the hypothesis also occur in the reference, and its clipping rule prevents repeated words from inflating the result.

- The brevity penalty (BP) is calculated from the length of the hypothesis translation corpus (ch) and the length of the reference corpus (rc). If ch > rc, then BP is equal to 1. If ch is less than or equal to rc, then BP has the value $e^{1 - rc/ch}$.
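
The pieces listed above combine as BLEU = BP · exp(Σ w_n · log p_n). The sketch below is a minimal sentence-level version, assuming whitespace tokenization, a single reference, uniform weights of 1/N, and no smoothing, so any n-gram order with zero precision gives a score of 0. In this sketch BP is 1 when the hypothesis is longer than the reference and exp(1 − rc/ch) otherwise. Function names are hypothetical.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    """Multiset of the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def modified_precision(hypothesis, reference, n):
    """Clipped n-gram precision: each hypothesis n-gram counts at most as often as in the reference."""
    hyp, ref = ngram_counts(hypothesis, n), ngram_counts(reference, n)
    total = sum(hyp.values())
    clipped = sum(min(count, ref[gram]) for gram, count in hyp.items())
    return clipped / total if total else 0.0


def brevity_penalty(ch, rc):
    """1 for hypotheses longer than the reference, exp(1 - rc/ch) otherwise."""
    if ch == 0:
        return 0.0
    return 1.0 if ch > rc else math.exp(1 - rc / ch)


def bleu(hypothesis, reference, max_n=4):
    """Sentence-level BLEU with uniform weights and no smoothing."""
    hyp, ref = hypothesis.split(), reference.split()
    precisions = [modified_precision(hyp, ref, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0:
        return 0.0
    log_mean = sum(math.log(p) for p in precisions) / max_n
    return brevity_penalty(len(hyp), len(ref)) * math.exp(log_mean)


# Clipping: "the" appears four times in the hypothesis but only twice in the reference.
clipped_p1 = modified_precision("the the the the".split(), "the cat is on the mat".split(), 1)
score = bleu("the cat sat on the mat", "the cat sat on the red mat")
```

Without clipping, the degenerate hypothesis "the the the the" would reach a unigram precision of 1; with clipping it reaches only 2/4.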

2.3.4. BERTScore

2.3.5. BARTScore

- Unsupervised matching is a method that enables the measurement of semantic equivalence between a reference and a hypothesis.

- Regression is a statistical technique that enables the prediction of human judgments based on a parameterized regression layer.

- Ranking assigns higher scores to hypotheses that are more valid and more relevant.

- $\theta$ denotes the parameters of the seq2seq model;

- $x$ is the source sequence of n tokens;

- $y$ is the target sequence of l tokens;

- $p(y \mid x, \theta)$ is the probability of y conditioned on x.
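
With the quantities defined above, BARTScore is commonly written as a weighted log-likelihood of one sequence given the other. The notation below is an assumption reconstructed from those definitions: $\theta$ for the model parameters, $x$ for the conditioning sequence, $y$ for the scored sequence of l tokens, and $\omega_t$ for an optional per-token weight that is usually uniform.

$$
\mathrm{BARTScore} = \sum_{t=1}^{l} \omega_t \, \log p\left(y_t \mid y_{<t},\, x,\, \theta\right)
$$

Because each term is a log-probability, the score is never positive, and values closer to zero indicate that the model finds $y$ more likely given $x$.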

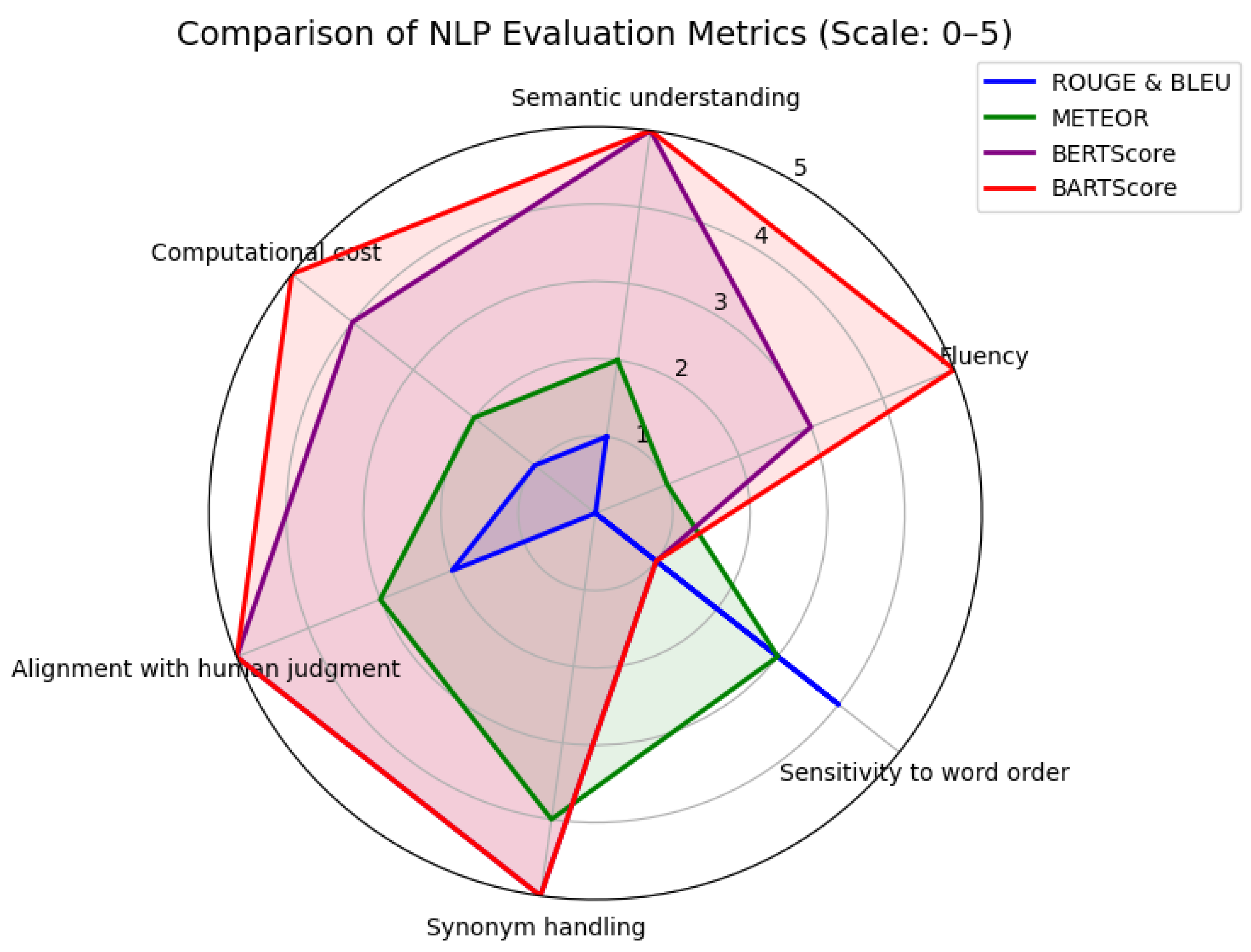

2.3.6. Comparison of the Described Metrics

- Semantic understanding;

- Fluency;

- Sensitivity to word order;

- Synonym handling;

- Alignment with human judgment;

- Computational cost.

2.3.7. Example Evaluation of Generated Text

3. Discussion

4. Challenges and Future Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


- Stroud, S. Hate by Numbers: Exploring Hate Crime Reporting Across Crime Type and Among Special Interest Groups Using the NCVS; University of Missouri-Kansas City: Kansas City, MO, USA, 2023. [Google Scholar]

- Yadav, D.; Sain, M.K. Comparative Analysis and Assesment on Different Hate Speech Detection Learning Techniques. J. Algebr. Stat. 2023, 14, 29–48. [Google Scholar]

- Wiedemann, G.; Yimam, S.M.; Biemann, C. UHH-LT at SemEval-2020 task 12: Fine-tuning of pre-trained transformer networks for offensive language detection. arXiv 2020, arXiv:2004.11493. [Google Scholar]

- Saleh, H.; Alhothali, A.; Moria, K. Detection of hate speech using bert and hate speech word embedding with deep model. Appl. Artif. Intell. 2023, 37, 2166719. [Google Scholar] [CrossRef]

- Miran, A.Z.; Yahia, H.S. Hate Speech Detection in Social Media (Twitter) Using Neural Network. J. Mob. Multimed. 2023, 19, 765–798. [Google Scholar] [CrossRef]

- Abraham, A.; Kolanchery, A.J.; Kanjookaran, A.A.; Jose, B.T.; Dhanya, P. Hate speech detection in Twitter using different models. In Proceedings of the ITM Web of Conferences; EDP Sciences: Ulis, France, 2023; Volume 56, p. 04007. [Google Scholar]

- Chen, Y.; Wang, R.; Jiang, H.; Shi, S.; Xu, R. Exploring the use of large language models for reference-free text quality evaluation: A preliminary empirical study. arXiv 2023, arXiv:2304.00723. [Google Scholar]

- Min, B.; Ross, H.; Sulem, E.; Veyseh, A.P.B.; Nguyen, T.H.; Sainz, O.; Agirre, E.; Heintz, I.; Roth, D. Recent advances in natural language processing via large pre-trained language models: A survey. ACM Comput. Surv. 2023, 56, 30. [Google Scholar] [CrossRef]

- Zhou, W.; Jiang, Y.E.; Wilcox, E.; Cotterell, R.; Sachan, M. Controlled text generation with natural language instructions. In Proceedings of the International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; PMLR: New York, NY, USA, 2023; pp. 42602–42613. [Google Scholar]

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144. [Google Scholar] [CrossRef]

- Kumar, M.; Sharma, H.K. A GAN-based model of deepfake detection in social media. Procedia Comput. Sci. 2023, 218, 2153–2162. [Google Scholar] [CrossRef]

- Mirza, M.; Osindero, S. Conditional Generative Adversarial Nets. arXiv 2014. [Google Scholar] [CrossRef]

- Patashnik, O.; Wu, Z.; Shechtman, E.; Cohen-Or, D.; Lischinski, D. Styleclip: Text-driven manipulation of stylegan imagery. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 2085–2094. [Google Scholar]

- Karras, T.; Laine, S.; Aittala, M.; Hellsten, J.; Lehtinen, J.; Aila, T. Analyzing and improving the image quality of stylegan. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 8110–8119. [Google Scholar]

- Stypułkowski, M.; Vougioukas, K.; He, S.; Zięba, M.; Petridis, S.; Pantic, M. Diffused heads: Diffusion models beat gans on talking-face generation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2024; pp. 5091–5100. [Google Scholar]

- Iqbal, T.; Qureshi, S. The survey: Text generation models in deep learning. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 2515–2528. [Google Scholar] [CrossRef]

- Wei, Y.; Zheng, Y.; Zhang, Y.; Liu, M.; Ji, Z.; Zhang, L.; Zuo, W. Personalized image generation with deep generative models: A decade survey. arXiv 2025, arXiv:2502.13081. [Google Scholar] [CrossRef]

- Zheng, S.; Wang, S.; Li, K.; Li, X.; Sun, F. When Feature Encoder Meets Diffusion Model for Sequential Recommendations. Inf. Sci. 2025, 702, 121903. [Google Scholar] [CrossRef]

- Yang, L.; Tian, Y.; Li, B.; Zhang, X.; Shen, K.; Tong, Y.; Wang, M. Mmada: Multimodal large diffusion language models. arXiv 2025, arXiv:2505.15809. [Google Scholar] [CrossRef]

- Mikolov, T.; Chen, K.; Corrado, G.; Dean, J. Efficient estimation of word representations in vector space. arXiv 2013, arXiv:1301.3781. [Google Scholar] [CrossRef]

- Grover, A.; Leskovec, J. node2vec: Scalable feature learning for networks. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 855–864. [Google Scholar]

- Du, J.; Jia, P.; Dai, Y.; Tao, C.; Zhao, Z.; Zhi, D. Gene2vec: Distributed representation of genes based on co-expression. BMC Genom. 2019, 20, 82. [Google Scholar] [CrossRef]

- Sherstinsky, A. Fundamentals of recurrent neural network (RNN) and long short-term memory (LSTM) network. Phys. D Nonlinear Phenom. 2020, 404, 132306. [Google Scholar] [CrossRef]

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780. [Google Scholar] [CrossRef]

- Berglund, M.; Raiko, T.; Honkala, M.; Kärkkäinen, L.; Vetek, A.; Karhunen, J.T. Bidirectional recurrent neural networks as generative models. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; Volume 28. [Google Scholar]

- Lai, S.; Xu, L.; Liu, K.; Zhao, J. Recurrent convolutional neural networks for text classification. In Proceedings of the AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015; Volume 29. [Google Scholar]

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems (NIPS 2014), Montreal, QC, Canada, 8–13 December 2014; Volume 27. [Google Scholar]

- Hussein, D.M.E.D.M. A survey on sentiment analysis challenges. J. King Saud Univ.-Eng. Sci. 2018, 30, 330–338. [Google Scholar] [CrossRef]

- Wankhade, M.; Rao, A.C.S.; Kulkarni, C. A survey on sentiment analysis methods, applications, and challenges. Artif. Intell. Rev. 2022, 55, 5731–5780. [Google Scholar] [CrossRef]

- Pang, B.; Lee, L. Opinion mining and sentiment analysis. In Foundations and Trends® in Information Retrieval; Now Publishers Inc.: Hanover, MA, USA, 2008; Volume 2, pp. 1–135. [Google Scholar]

- Schouten, K.; Frasincar, F. Survey on aspect-level sentiment analysis. IEEE Trans. Knowl. Data Eng. 2015, 28, 813–830. [Google Scholar] [CrossRef]

- Prasanna, M.; Shaila, S.; Vadivel, A. Polarity classification on twitter data for classifying sarcasm using clause pattern for sentiment analysis. Multimed. Tools Appl. 2023, 82, 32789–32825. [Google Scholar] [CrossRef]

- Hung, L.P.; Alias, S. Beyond sentiment analysis: A review of recent trends in text based sentiment analysis and emotion detection. J. Adv. Comput. Intell. Intell. Inform. 2023, 27, 84–95. [Google Scholar] [CrossRef]

- Do, H.H.; Prasad, P.W.; Maag, A.; Alsadoon, A. Deep learning for aspect-based sentiment analysis: A comparative review. Expert Syst. Appl. 2019, 118, 272–299. [Google Scholar] [CrossRef]

- Nazir, A.; Rao, Y.; Wu, L.; Sun, L. Issues and challenges of aspect-based sentiment analysis: A comprehensive survey. IEEE Trans. Affect. Comput. 2020, 13, 845–863. [Google Scholar] [CrossRef]

- Truşcǎ, M.M.; Frasincar, F. Survey on aspect detection for aspect-based sentiment analysis. Artif. Intell. Rev. 2023, 56, 3797–3846. [Google Scholar] [CrossRef]

- Agarwal, B.; Mittal, N.; Agarwal, B.; Mittal, N. Machine learning approach for sentiment analysis. In Prominent Feature Extraction for Sentiment Analysis; Springer International Publishing: Cham, Switzerland, 2016; pp. 21–45. [Google Scholar]

- Tanveer, M.; Rajani, T.; Rastogi, R.; Shao, Y.H.; Ganaie, M. Comprehensive review on twin support vector machines. Ann. Oper. Res. 2024, 339, 1223–1268. [Google Scholar] [CrossRef]

- Sangeetha, J.; Kumaran, U. A hybrid optimization algorithm using BiLSTM structure for sentiment analysis. Meas. Sens. 2023, 25, 100619. [Google Scholar] [CrossRef]

- Mutinda, J.; Mwangi, W.; Okeyo, G. Sentiment analysis of text reviews using lexicon-enhanced bert embedding (LeBERT) model with convolutional neural network. Appl. Sci. 2023, 13, 1445. [Google Scholar] [CrossRef]

- Zarandi, A.K.; Mirzaei, S. A survey of aspect-based sentiment analysis classification with a focus on graph neural network methods. Multimed. Tools Appl. 2024, 83, 56619–56695. [Google Scholar] [CrossRef]

- Petrovic, A.; Jovanovic, L.; Bacanin, N.; Antonijevic, M.; Savanovic, N.; Zivkovic, M.; Milovanovic, M.; Gajic, V. Exploring metaheuristic optimized machine learning for software defect detection on natural language and classical datasets. Mathematics 2024, 12, 2918. [Google Scholar] [CrossRef]

- Dang, N.C.; Moreno-García, M.N.; De la Prieta, F. Sentiment analysis based on deep learning: A comparative study. Electronics 2020, 9, 483. [Google Scholar] [CrossRef]

- Tan, K.L.; Lee, C.P.; Lim, K.M. Roberta-Gru: A hybrid deep learning model for enhanced sentiment analysis. Appl. Sci. 2023, 13, 3915. [Google Scholar] [CrossRef]

- Miazga, J.; Hachaj, T. Evaluation of most popular sentiment lexicons coverage on various datasets. In Proceedings of the 2019 2nd International Conference on Sensors, Signal and Image Processing, Prague, Czech Republic, 8–10 October 2019; pp. 86–90. [Google Scholar]

- Goutte, C.; Gaussier, E. A probabilistic interpretation of precision, recall and F-score, with implication for evaluation. In Proceedings of the European Conference on Information Retrieval, Compostela, Spain, 21–23 March 2005; Springer: Berlin/Heidelberg, Germany, 2005; pp. 345–359. [Google Scholar]

- Lin, C.Y. Rouge: A package for automatic evaluation of summaries. In Proceedings of the Text Summarization Branches Out, Barcelona, Spain, 25–26 July 2004; pp. 74–81. [Google Scholar]

- Abdel-Salam, S.; Rafea, A. Performance study on extractive text summarization using BERT models. Information 2022, 13, 67. [Google Scholar] [CrossRef]

- Weber, L.; Ramalingam, K.J.; Beyer, M.; Zimmermann, A. WRF: Weighted Rouge-F1 Metric for Entity Recognition. In Proceedings of the 4th Workshop on Evaluation and Comparison of NLP Systems, Bali, Indonesia, 1 November 2023; pp. 1–11. [Google Scholar]

- Denkowski, M.; Lavie, A. Meteor 1.3: Automatic metric for reliable optimization and evaluation of machine translation systems. In Proceedings of the Sixth Workshop on Statistical Machine Translation, Edinburgh, UK, 30–31 July 2011; pp. 85–91. [Google Scholar]

- Banerjee, S.; Lavie, A. METEOR: An automatic metric for MT evaluation with improved correlation with human judgments. In Proceedings of the ACL Workshop on Intrinsic and Extrinsic Evaluation Measures for Machine Translation and/or Summarization, Arbor, MI, USA, 29 June 2005; pp. 65–72. [Google Scholar]

- Lavie, A.; Denkowski, M.J. The METEOR metric for automatic evaluation of machine translation. Mach. Transl. 2009, 23, 105–115. [Google Scholar] [CrossRef]

- Denkowski, M.J.; Lavie, A. Extending the METEOR Machine Translation Evaluation Metric to the Phrase Level. In Proceedings of the North American Chapter of the Association for Computational Linguistics, Los Angeles, CA, USA, 1 June 2010. [Google Scholar]

- Elloumi, Z.; Blanchon, H.; Serasset, G.; Besacier, L. METEOR for multiple target languages using DBnary. In Proceedings of the MT Summit 2015, Miami, FL, USA, 30 October–3 November 2015. [Google Scholar]

- Guo, Y.; Hu, J. Meteor++ 2.0: Adopt syntactic level paraphrase knowledge into machine translation evaluation. In Proceedings of the Fourth Conference on Machine Translation (Volume 2: Shared Task Papers, Day 1), Florence, Italy, 1–2 August 2019; pp. 501–506. [Google Scholar]

- Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. Bleu: A Method for Automatic Evaluation of Machine Translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 7–12 July 2002; pp. 311–318. [Google Scholar] [CrossRef]

- Callison-Burch, C.; Osborne, M.; Koehn, P. Re-evaluating the role of BLEU in machine translation research. In Proceedings of the 11th Conference of the European Chapter of the Association for Computational Linguistics, Trento, Italy, 3–7 April 2006; pp. 249–256. [Google Scholar]

- Zhang, W.; Lei, X.; Liu, Z.; Wang, N.; Long, Z.; Yang, P.; Zhao, J.; Hua, M.; Ma, C.; Wang, K.; et al. Safety evaluation of deepseek models in chinese contexts. arXiv 2025, arXiv:2502.11137. [Google Scholar]

- McDermott, M.B.; Yap, B.; Szolovits, P.; Zitnik, M. Structure-inducing pre-training. Nat. Mach. Intell. 2023, 5, 612–621. [Google Scholar] [CrossRef]

- Turchin, A.; Masharsky, S.; Zitnik, M. Comparison of BERT implementations for natural language processing of narrative medical documents. Inform. Med. Unlocked 2023, 36, 101139. [Google Scholar] [CrossRef]

- Huang, A.H.; Wang, H.; Yang, Y. FinBERT: A large language model for extracting information from financial text. Contemp. Account. Res. 2023, 40, 806–841. [Google Scholar] [CrossRef]

- Sharaf, S.; Anoop, V. An analysis on large language models in healthcare: A case study of BioBERT. arXiv 2023, arXiv:2310.07282. [Google Scholar] [CrossRef]

- Kollapally, N.M.; Geller, J. Clinical BioBERT Hyperparameter Optimization using Genetic Algorithm. arXiv 2023, arXiv:2302.03822. [Google Scholar] [CrossRef]

- Ganapathy, N.; Chary, P.S.; Pithani, T.V.R.K.; Kavati, P. A Multimodal Approach For Endoscopic VCE Image Classification Using BiomedCLIP-PubMedBERT. arXiv 2024, arXiv:2410.19944. [Google Scholar] [CrossRef]

- Darji, H.; Mitrović, J.; Granitzer, M. German BERT model for legal named entity recognition. arXiv 2023, arXiv:2303.05388. [Google Scholar] [CrossRef]

- Zhang, T.; Kishore, V.; Wu, F.; Weinberger, K.Q.; Artzi, Y. Bertscore: Evaluating text generation with bert. arXiv 2019, arXiv:1904.09675. [Google Scholar]

- Yuan, W.; Neubig, G.; Liu, P. Bartscore: Evaluating generated text as text generation. Adv. Neural Inf. Process. Syst. 2021, 34, 27263–27277. [Google Scholar]

- Cui, L.; Wu, Y.; Liu, J.; Yang, S.; Zhang, Y. Template-based named entity recognition using BART. arXiv 2021, arXiv:2106.01760. [Google Scholar] [CrossRef]

- De, S.; Das, R.; Das, K. Deep Learning Based Bengali Image Caption Generation. In Proceedings of the International Conference on Information Systems and Management Science, Msida, Malta, 6–9 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 49–59. [Google Scholar]

- Shin, T.; Razeghi, Y.; Logan IV, R.L.; Wallace, E.; Singh, S. Autoprompt: Eliciting knowledge from language models with automatically generated prompts. arXiv 2020, arXiv:2010.15980. [Google Scholar] [CrossRef]

| Metric | Strength | Weakness | Limitations with LLMs |
|---|---|---|---|
| ROUGE | Simple and fast; good for surface similarity | Ignores meaning; penalizes paraphrasing | Fails to reflect semantic adequacy; underestimates fluent, semantically correct paraphrases |
| BLEU | Standard in MT; captures word order well | Rigid; sensitive to small variations; no synonym handling | Misses valid outputs with different wording; not semantically aware |
| METEOR | Handles synonyms; better correlation with human judgment than BLEU | Slower than ROUGE/BLEU; limited semantic depth | Limited to lexical/word-level comparisons; not deep enough for LLM creativity |
| BERTScore | Captures meaning; tolerates paraphrasing; aligns with human judgment | Computationally expensive; token-level only | Slow; needs large pre-trained models; does not fully assess fluency or coherence |
| BARTScore | Evaluates fluency and coherence; closely mimics human evaluation | Very resource intensive; may be biased by BART’s own training data | Requires decoding with large LM; expensive for real-time evaluation |

| Type | Text |
|---|---|
| Original (O) | At last one day the wolf came indeed. |
| Generated Sentence 1 | At long last, the wolf actually showed up one day. |
| Generated Sentence 2 | Ultimately, the wolf did arrive one day. |
| Generated Sentence 3 | Eventually, the wolf arrived one day as expected. |

| Metric (vs. Original) | Generated Sentence 1 | Generated Sentence 2 | Generated Sentence 3 |
|---|---|---|---|
| ROUGE-L | 0.70 | 0.68 | 0.66 |
| METEOR | 0.65 | 0.60 | 0.58 |
| BLEU | 0.45 | 0.42 | 0.40 |
| BERTScore | 0.92 | 0.91 | 0.91 |
| BARTScore | −1.5 to −2.5 | −1.5 to −2.5 | −1.5 to −2.5 |
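As a rough illustration of how a score such as ROUGE-L can be obtained for the sentences above, the following minimal sketch computes a balanced F1 from the longest common subsequence (LCS) of word tokens. The tokenizer, the equal weighting of precision and recall, and the function names `tokenize`, `lcs_length`, and `rouge_l_f1` are assumptions made for this example; they are not the implementation used to produce the table, so the printed values should not be expected to match it.

```python
import re


def tokenize(text):
    """Lowercase and keep alphanumeric word tokens (an assumed, simplistic tokenizer)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists (dynamic programming)."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            if x == y:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(reference, candidate):
    """Balanced F1 over LCS-based precision and recall (assumed equal weighting)."""
    ref, cand = tokenize(reference), tokenize(candidate)
    if not ref or not cand:
        return 0.0
    lcs = lcs_length(ref, cand)
    if lcs == 0:
        return 0.0
    precision = lcs / len(cand)  # share of candidate tokens found in the LCS
    recall = lcs / len(ref)      # share of reference tokens found in the LCS
    return 2 * precision * recall / (precision + recall)


original = "At last one day the wolf came indeed."
generated = [
    "At long last, the wolf actually showed up one day.",
    "Ultimately, the wolf did arrive one day.",
    "Eventually, the wolf arrived one day as expected.",
]

for i, sentence in enumerate(generated, start=1):
    print(f"Generated Sentence {i}: ROUGE-L F1 = {rouge_l_f1(original, sentence):.2f}")
```

Because the LCS respects word order, a paraphrase that moves "one day" after "the wolf" loses overlap even though the meaning is preserved, which is the surface-level weakness noted for ROUGE in the comparison table.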

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Golec, J.; Hachaj, T. Ten Natural Language Processing Tasks with Generative Artificial Intelligence. Appl. Sci. 2025, 15, 9057. https://doi.org/10.3390/app15169057