1. Introduction

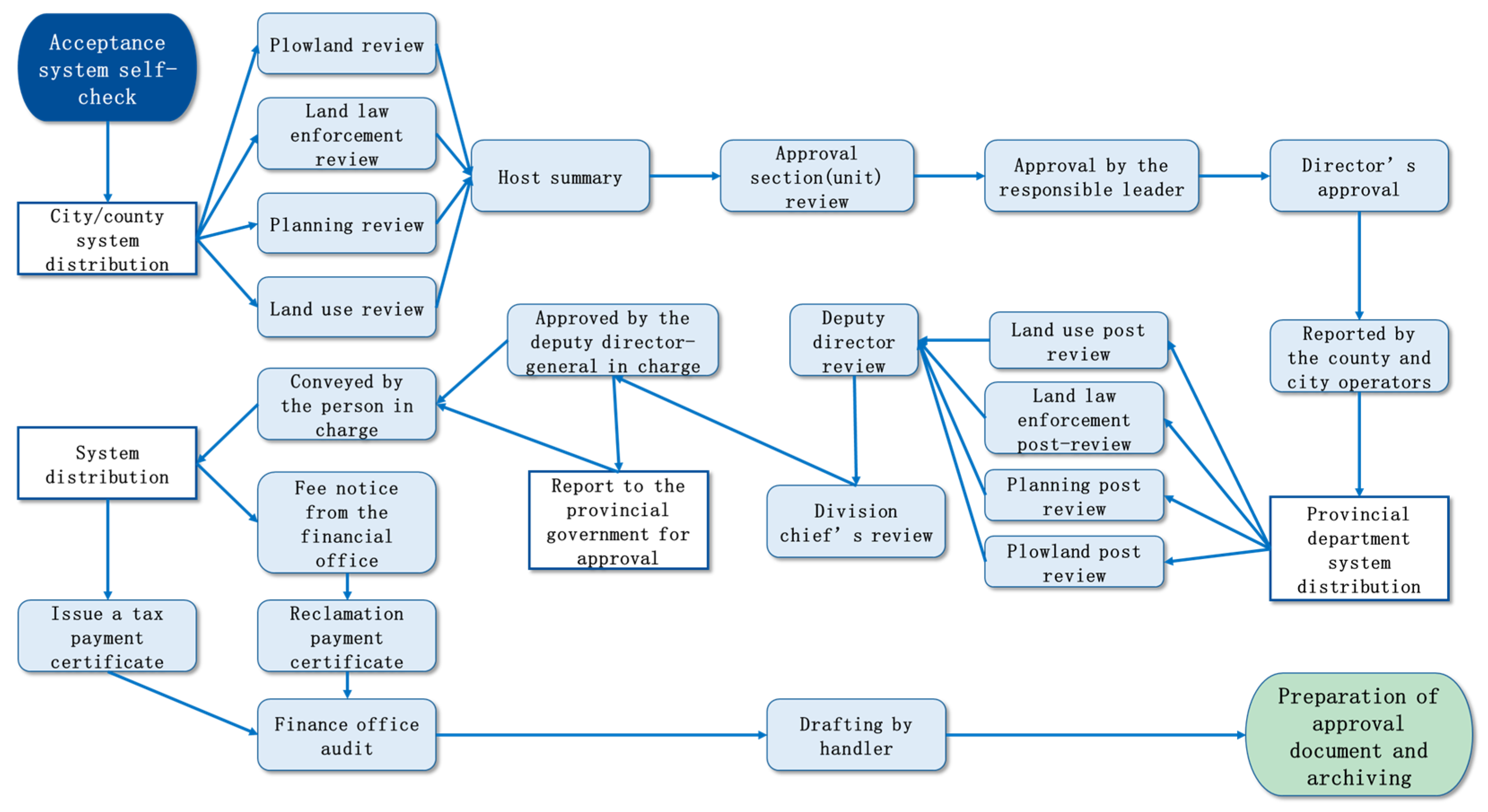

As an essential part of China’s land use regulation system, land use approval refers to the multi-agency process of converting agricultural or collectively owned land into state-owned construction land in accordance with legal procedures. It therefore involves a dual transformation of both land use type and land ownership. As illustrated in Figure 1, the approval workflow in practice passes through multiple administrative levels, from national and provincial to municipal and county authorities (vertical coordination), and requires joint review by several departments responsible for planning adjustment, land consolidation, arable land compensation, and cadastral management (horizontal coordination). The outcomes of land use approval directly support land supply, development, utilization, and law enforcement. Land use approval thus plays a vital role in territorial spatial governance, urban–rural development, and the efficient allocation of land resources [1]. However, traditional land use approval processes are often fragmented, bureaucratically complex, and heavily reliant on manual interpretation of policy. In line with China’s ongoing “streamline administration, delegate power, strengthen regulation, and improve services” reform, intelligent approaches are urgently needed to improve information retrieval efficiency, enhance policy understanding, and standardize the entire approval workflow [2].

In this context, Q&A technology has emerged as a key pathway to improving the efficiency and quality of information services. Through natural language interaction, Q&A systems can understand user intent and retrieve, extract, and generate relevant and accurate responses from knowledge bases or corpora [3,4,5]. As a significant form of knowledge service, Q&A systems have been widely applied in government services, healthcare, financial consulting, and other domains [6], where they help users quickly obtain professional and precise auxiliary information, thereby improving service efficiency and decision-making quality [7].

In recent years, LLMs such as InstructGPT [8], GPT-4 [9], LLaMA [10], and ChatGLM [11] have demonstrated exceptional capabilities in natural language understanding and generation. These models learn language patterns from vast text corpora and can handle increasingly complex natural language processing tasks [12], providing robust support for the development of Q&A systems [13]. Domain-specific LLMs have also emerged, such as FinGPT in finance [14] and ChatLaw in legal services [15], accelerating the application of Q&A in specialized fields. For instance, Frisoni [16] improved response accuracy in open-domain medical Q&A by optimizing context input strategies, while Wang Zhe [17] developed intelligent Q&A for flood emergency management to improve the efficiency and accuracy of response teams. Such LLM-based systems not only comprehend and answer user queries effectively but can also offer personalized suggestions and solutions based on user needs, significantly improving work quality and operational efficiency.

However, LLMs still suffer from hallucination when dealing with highly specialized knowledge, that is, they generate information that appears plausible but is actually incorrect or fabricated [18,19]. One root cause is unreliable or biased content in the training data, which introduces errors during generation [20]; another is the inherent limitations of the information processing mechanisms of LLMs at different levels, which may lead to deviations in understanding and generation [21]. The Law of Knowledge Overshadowing [22] further posits that LLMs tend to prioritize frequently occurring knowledge during generation, thereby overshadowing low-frequency yet critical facts. This offers an explanation for the irrelevant or logically flawed responses commonly observed in domain-specific applications.

To mitigate hallucinations, researchers have proposed knowledge-enhanced techniques such as RAG, which incorporates external knowledge sources during the Q&A process to improve the verifiability and contextual relevance of generated content [23,24]. For example, the SPOCK [25] system retrieves content from textbooks to provide more accurate answers; in biomedicine, RAG is used to introduce trustworthy background knowledge, improving the precision and traceability of explanations of specialized terminology [26]. He [27] proposed a RAG framework that dynamically identifies inter-document relevance to improve retrieval recall and generation quality, while Yang [28] developed IM-RAG, which integrates an inner monologue mechanism for multi-round knowledge retrieval and enhancement, addressing hallucination and adaptability issues in static knowledge environments. Despite these advances, applying RAG in complex government domains such as land use approval, which are characterized by intricate semantics, spatial constraints, and temporal requirements, remains challenging, and current approaches fall short of fully supporting intelligent approval decision-making.
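The retrieve-then-generate pattern shared by these RAG methods (and by the RAG-QA baseline evaluated in Section 3) can be illustrated with a short sketch. It assumes a small hypothetical corpus of policy-style passages and uses bag-of-words cosine similarity as a stand-in retriever; the methods cited above use their own retrievers, which this sketch does not reproduce. It stops at building the augmented prompt, because the call to an actual LLM depends on a client library not described here.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def tokenize(text: str) -> List[str]:
    """Lowercase word tokens; a real system would use a language-appropriate tokenizer."""
    return re.findall(r"\w+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, corpus: List[str], k: int = 2) -> List[Tuple[float, str]]:
    """Return the k passages most similar to the query, best first."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(doc))), doc) for doc in corpus]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


def build_prompt(query: str, passages: List[str]) -> str:
    """Augment the question with numbered passages so the answer can cite them."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return ("Answer using only the numbered passages below, citing them by number.\n"
            f"{context}\n\nQuestion: {query}\nAnswer:")


if __name__ == "__main__":
    # Hypothetical placeholder passages, not quoted from any regulation
    corpus = [
        "Arable land occupied by construction must be compensated with land of equal quantity and quality.",
        "Cadastral registration records the ownership of each parcel.",
        "Projects must avoid ecological protection redlines.",
    ]
    question = "How must occupied arable land be compensated?"
    top = retrieve(question, corpus, k=1)
    # The resulting prompt would then be sent to an LLM (not shown here)
    print(build_prompt(question, [doc for _, doc in top]))
```

Grounding the answer in numbered passages is what makes the output checkable against its sources; the retriever itself can be swapped for dense embeddings without changing this structure.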

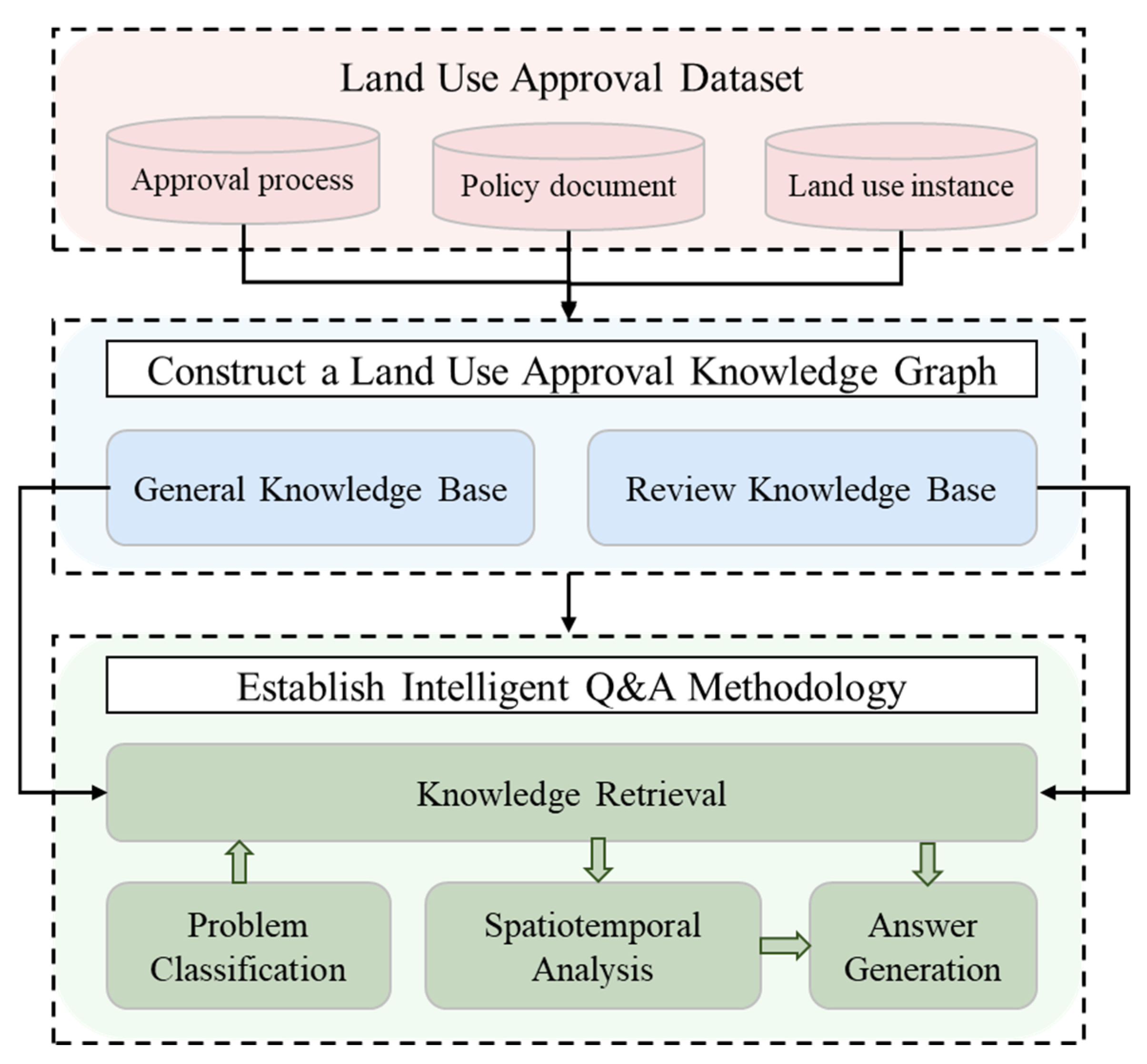

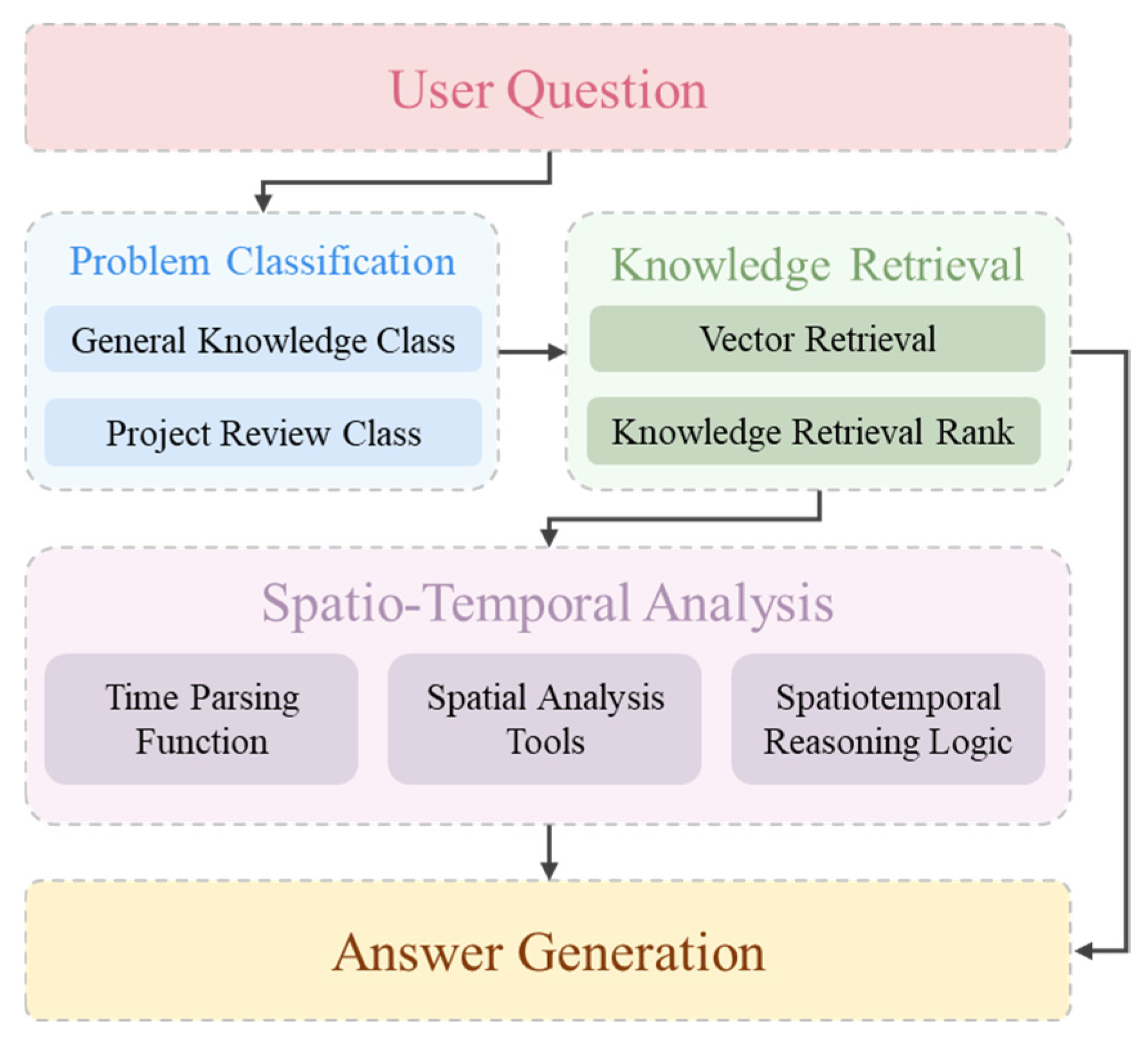

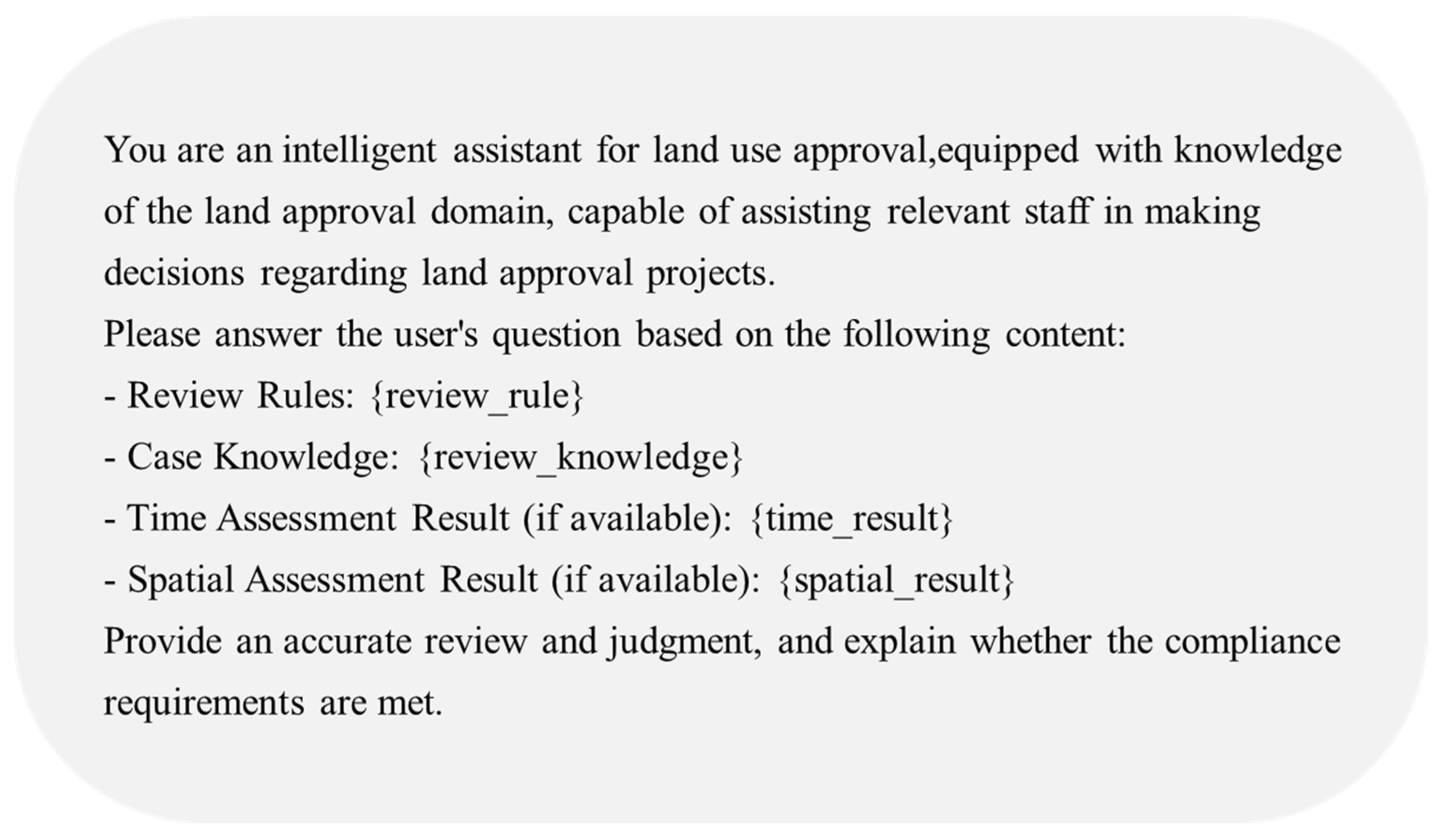

In light of these limitations, this study introduces an LLM-based agent framework supported by KGs to raise the level of intelligence in land use approval. Agents are entities that can perceive their environment and autonomously make and execute decisions [29], drawing on historical experience and knowledge to act in a goal-directed manner [30]. Integrating agents with an LLM gives the system both knowledge-driven semantic reasoning and dynamic task planning for complex scenarios, thereby improving the accuracy, interpretability, and practicality of Q&A results [31,32]. Specifically, this paper proposes an LUA Q&A method that combines KGs and agent technologies. It constructs an LUAKG covering key knowledge elements such as policies and regulations, approval procedures, and administrative departments, and introduces an LLM-based agent module for semantic understanding, task decomposition, and dynamic reasoning. With task-oriented autonomous action capabilities, the agent can invoke geospatial analysis tools to perform critical tasks such as ecological redline avoidance analysis and land legality assessment. The method thus enables multidimensional spatio-semantic evaluation and intelligent support for approval-related queries.
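How the geospatial tools are implemented is not specified here. As a minimal sketch of what an ecological redline avoidance check might compute, assume parcels and redline zones are simple (non-self-intersecting) polygons given as planar (x, y) vertex lists in a shared projected coordinate system; two polygons conflict if any of their edges cross or touch, or if one lies entirely inside the other. All function names and coordinates are hypothetical, and a production tool would more likely rely on a GIS geometry library.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]
Polygon = List[Point]  # simple polygon, vertices in order, last vertex not repeated


def _orient(a: Point, b: Point, c: Point) -> float:
    """Twice the signed area of triangle abc (>0 counter-clockwise, <0 clockwise, 0 collinear)."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])


def _on_segment(a: Point, b: Point, p: Point) -> bool:
    """For a point p collinear with ab, True if p lies within segment ab's bounding box."""
    return (min(a[0], b[0]) <= p[0] <= max(a[0], b[0])
            and min(a[1], b[1]) <= p[1] <= max(a[1], b[1]))


def segments_intersect(p1: Point, p2: Point, q1: Point, q2: Point) -> bool:
    """True if segment p1p2 and segment q1q2 cross or touch."""
    d1 = _orient(q1, q2, p1)
    d2 = _orient(q1, q2, p2)
    d3 = _orient(p1, p2, q1)
    d4 = _orient(p1, p2, q2)
    if d1 * d2 < 0 and d3 * d4 < 0:
        return True  # proper crossing
    # Collinear cases: an endpoint lying on the other segment
    return ((d1 == 0 and _on_segment(q1, q2, p1))
            or (d2 == 0 and _on_segment(q1, q2, p2))
            or (d3 == 0 and _on_segment(p1, p2, q1))
            or (d4 == 0 and _on_segment(p1, p2, q2)))


def point_in_polygon(pt: Point, poly: Polygon) -> bool:
    """Ray-casting test: count crossings of a horizontal ray cast to the right of pt."""
    x, y = pt
    inside = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def polygons_overlap(a: Polygon, b: Polygon) -> bool:
    """True if the polygons share any area or boundary point."""
    for i in range(len(a)):
        for j in range(len(b)):
            if segments_intersect(a[i], a[(i + 1) % len(a)], b[j], b[(j + 1) % len(b)]):
                return True
    # No edge contact: one polygon may still lie entirely inside the other
    return point_in_polygon(a[0], b) or point_in_polygon(b[0], a)


def redline_conflicts(parcel: Polygon, redlines: Dict[str, Polygon]) -> List[str]:
    """Names of the (hypothetical) redline zones that the parcel overlaps."""
    return [name for name, zone in redlines.items() if polygons_overlap(parcel, zone)]


if __name__ == "__main__":
    redlines = {
        "zone_A": [(0, 0), (10, 0), (10, 10), (0, 10)],
        "zone_B": [(20, 20), (30, 20), (30, 30), (20, 30)],
    }
    parcel = [(8, 8), (12, 8), (12, 12), (8, 12)]
    print(redline_conflicts(parcel, redlines))  # ['zone_A']
```

Touching boundaries are treated as conflicts here, a conservative choice for an avoidance check; a real tool might instead apply a buffer distance around each redline zone.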

The key contributions of this study are as follows:

- (1) To address the hallucination issues encountered by LLMs in interpreting policy semantics, a knowledge graph-based enhancement mechanism is proposed, enabling precise mapping between policy texts and approval semantics and significantly improving the traceability and credibility of Q&A outputs.
- (2) To address the lack of temporal and spatial elements in approval scenarios, agent technology is introduced to autonomously invoke analysis tools for time-sensitive assessments and spatial compliance evaluations, thereby improving the precision and intelligence of approval suggestion generation.
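The traceability in contribution (1) rests on keeping a link from each fact to the policy text it came from. The LUAKG schema is not given in this section, so the sketch below only illustrates the idea under assumed names: facts are stored as (subject, predicate, object) triples, each tagged with a hypothetical source clause, and an answer is assembled only from matching triples, with their sources attached.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Triple:
    subject: str
    predicate: str
    obj: str
    source: str  # provenance: the policy clause this fact was extracted from


class TinyKG:
    """Minimal in-memory triple store; all entity and source names are hypothetical."""

    def __init__(self) -> None:
        self.triples: List[Triple] = []

    def add(self, subject: str, predicate: str, obj: str, source: str) -> None:
        self.triples.append(Triple(subject, predicate, obj, source))

    def query(self, subject: Optional[str] = None, predicate: Optional[str] = None) -> List[Triple]:
        # None acts as a wildcard for that position
        return [t for t in self.triples
                if (subject is None or t.subject == subject)
                and (predicate is None or t.predicate == predicate)]


def answer_with_citations(kg: TinyKG, subject: str, predicate: str) -> str:
    """Build an answer only from matching triples, citing each one's source."""
    hits = kg.query(subject, predicate)
    if not hits:
        # Refuse rather than guess: no grounding fact means no generated claim
        return "No supporting policy clause found."
    return "; ".join(f"{t.obj} [{t.source}]" for t in hits)


if __name__ == "__main__":
    kg = TinyKG()
    kg.add("ApprovalStep:Submission", "requires", "Material:ParcelSurvey", "Policy_X, Art. 3")
    kg.add("ApprovalStep:Submission", "requires", "Material:CompensationPlan", "Policy_X, Art. 4")
    kg.add("ApprovalStep:Submission", "reviewedBy", "Dept:NaturalResources", "Policy_Y, Art. 7")
    print(answer_with_citations(kg, "ApprovalStep:Submission", "requires"))
    # Material:ParcelSurvey [Policy_X, Art. 3]; Material:CompensationPlan [Policy_X, Art. 4]
```

Returning a refusal when nothing matches is one simple way to keep generated claims tied to the graph rather than to the model's parametric memory.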

The remainder of this paper is organized as follows. Section 2 describes the research methods. Section 3 details the experimental results and analysis. Section 4 summarizes the paper and discusses future prospects.

3. Results and Discussion

This study conducted experiments using policy documents related to land use approval in Hunan Province, China, together with the land use approval business workflow and example data from three major projects. To evaluate performance, a dataset of 500 Q&A pairs was used to compare multiple methods on two tasks: general knowledge Q&A and project review judgment. The experimental setup is described in detail below.

3.1. Experiment Setup

The experimental data comprise policy documents related to land use approval in Hunan Province, business process data, example data from three major projects, and 500 Q&A pairs used to test the Q&A methods. The Q&A pairs cover two major categories, general knowledge Q&A and project review judgment, and are further divided into subcategories according to the nature of the tasks so that the dataset represents the different types of land use approval questions.

3.1.1. Q&A Dataset

The dataset used to evaluate Q&A performance consists of 500 Q&A pairs in two categories: the general knowledge class (GKC) and the project review class (PRC). Each category is further divided into subcategories according to task type, as follows:

- 1. General Knowledge Class (200 questions): questions about the policies, regulations, and procedures of land use submission and approval, grouped into five major categories (see Table 3).
- 2. Project Review Class (300 questions): questions about the compliance review of land use submission projects, covering three major categories: semantic class (SeC), spatial class (SpC), and temporal class (TC) (see Table 4).

The Q&A dataset was built through a semi-manual process. Domain experts first designed question templates covering both general land use knowledge and project-specific review rules. An LLM then used these templates to generate reference answers with clear semantics and standardized formats, and all generated Q&A pairs were manually reviewed and refined to ensure quality. The dataset is grounded in real-world scenarios and includes a variety of question types and complexity levels. Each question is paired with a reference answer (see Table 5), enabling the evaluation of Q&A methods across categories.
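A single record of this dataset can be pictured as a small typed structure. The field names are assumptions made for illustration, since the storage format is not described; only the category sizes (200 GKC, 300 PRC) and the PRC subcategory labels SeC, SpC, and TC come from the text.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, Optional

PRC_SUBCATEGORIES = {"SeC", "SpC", "TC"}  # from the text; GKC's five subcategories are listed in Table 3


@dataclass
class QAPair:
    question: str
    reference_answer: str
    category: str                      # "GKC" or "PRC"
    subcategory: Optional[str] = None  # required for PRC: "SeC", "SpC", or "TC"

    def __post_init__(self) -> None:
        # Reject records that do not fit the two-category scheme
        if self.category not in ("GKC", "PRC"):
            raise ValueError(f"unknown category: {self.category}")
        if self.category == "PRC" and self.subcategory not in PRC_SUBCATEGORIES:
            raise ValueError(f"PRC question needs a subcategory in {sorted(PRC_SUBCATEGORIES)}")


def category_counts(pairs: Iterable[QAPair]) -> Counter:
    """Number of Q&A pairs per top-level category."""
    return Counter(p.category for p in pairs)


if __name__ == "__main__":
    # Placeholder records only; the subcategory assignment below is arbitrary
    pairs = [QAPair(f"general question {i}", "reference answer", "GKC") for i in range(200)]
    pairs += [QAPair(f"review question {i}", "reference answer", "PRC", ["SeC", "SpC", "TC"][i % 3])
              for i in range(300)]
    print(dict(category_counts(pairs)))  # {'GKC': 200, 'PRC': 300}
```

Validating the category at construction time makes it harder for a mislabeled record to silently distort per-category results.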

3.1.2. Method Comparison

To evaluate the system comprehensively, this study compares three methods and analyzes their performance and relative strengths on the intelligent Q&A task for land use approval:

LLM-QA: this baseline uses the large language model Qwen-plus alone for question comprehension and answer generation. It directly interprets the user's question and generates an answer, and mainly serves to measure performance on general knowledge Q&A and simple tasks.

RAG-QA: this method follows a RAG strategy, retrieving relevant content from a knowledge base and passing it to Qwen-plus for answer generation. The retrieval module strengthens the model's knowledge acquisition and uses the external knowledge base to fill gaps in the LLM's knowledge, which improves the accuracy of the generated answers.

KG-Agent-QA: the method proposed in this study, which combines KGs with intelligent agent technology for the land use approval Q&A task. The KG supplies structured domain knowledge, and the agent automatically selects the appropriate knowledge base according to the question type and performs spatiotemporal analysis when needed. The method therefore adapts to different question types and, through multidimensional spatiotemporal–semantic analysis, improves project review and complex decision support.
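The routing step of KG-Agent-QA, in which the agent picks knowledge sources and analysis tools by question type, can be approximated by a rule-based sketch. In the described method this decision is made by an LLM-based agent; the keyword cues and the tool names below (`kg_lookup`, `spatial_compliance_tool`, `temporal_validity_tool`) are hypothetical placeholders that only show the shape of the decision.

```python
from typing import Dict, List

# Hypothetical keyword cues per analysis dimension; an LLM-based router would replace these rules
CUES: Dict[str, List[str]] = {
    "spatial": ["redline", "overlap", "boundary", "coordinates"],
    "temporal": ["deadline", "expire", "validity period", "approval date"],
}

# Hypothetical tool names, one per dimension
TOOLS: Dict[str, str] = {
    "spatial": "spatial_compliance_tool",
    "temporal": "temporal_validity_tool",
}


def route(question: str) -> List[str]:
    """Return the ordered list of components to invoke for a question."""
    q = question.lower()
    plan = ["kg_lookup"]  # every question is first grounded in the knowledge graph
    for dimension, words in CUES.items():
        if any(w in q for w in words):
            plan.append(TOOLS[dimension])
    return plan


if __name__ == "__main__":
    print(route("Does the parcel overlap the ecological redline?"))
    # ['kg_lookup', 'spatial_compliance_tool']
    print(route("Which department reviews arable land compensation?"))
    # ['kg_lookup']
```

Keyword rules are brittle (they miss paraphrases and can misfire on substrings), which is presumably why the described method delegates this choice to an LLM; the sketch only makes the structure of the resulting plan concrete.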

Comparing these three methods allows this study to evaluate how effectively each technique handles land use approval questions, and the results provide a theoretical and practical basis for further optimizing the Q&A system.

3.2. Evaluation of Method Performance

To evaluate the methods comprehensively, this study used two scoring approaches: manual scoring and LLM autonomous scoring. Manual scoring relies on four common metrics (accuracy, precision, recall, and F1) that compare the generated answers with the reference answers. LLM autonomous scoring rates each answer for accuracy, relevance, and fluency, and the scores are averaged over all answers to evaluate each method. The metrics are defined as follows:

- 1. Accuracy [39]: a basic metric of overall system performance, defined as the proportion of questions that the system answers correctly.

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{8}$$

In Equation (8), TP denotes the number of correct answers returned by the system, FP the number of incorrect answers returned by the system, TN the number of incorrect answers not returned by the system, and FN the number of correct answers not returned by the system.

- 2. Precision [40]: the proportion of answers returned by the system that are actually correct.

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

- 3. Recall [41]: the proportion of correct answers that are actually returned by the system.

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

- 4. F1 [42]: the harmonic mean of precision and recall, which summarizes both capabilities in a single score.

$$F_1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

Because it balances precision and recall, F1 is particularly suitable for tasks that require both accuracy and comprehensiveness.

- 5. LLM Autonomous Scores [43,44]: LLM autonomous scoring uses a large language model to assess the quality of generated answers automatically. Each generated answer is scored independently for accuracy, relevance, and fluency, giving a comprehensive measure of answer quality. Here, accuracy reflects how well the generated answer matches the reference answer, relevance measures whether the generated answer effectively addresses the question, and fluency assesses how natural the answer is in grammar and expression. Each method's scores are averaged over all questions:

$$\mathrm{accuracy}_{\mathrm{ave}} = \frac{1}{N}\sum_{i=1}^{N} \mathrm{accuracy}_i, \qquad \mathrm{relevance}_{\mathrm{ave}} = \frac{1}{N}\sum_{i=1}^{N} \mathrm{relevance}_i, \qquad \mathrm{fluency}_{\mathrm{ave}} = \frac{1}{N}\sum_{i=1}^{N} \mathrm{fluency}_i$$

In the above equations, N is the total number of questions, and accuracy_i, relevance_i, and fluency_i are the scores of the i-th question on accuracy, relevance, and fluency, respectively. LLM autonomous scoring thus offers an automated, systematic way to evaluate Q&A systems that is well suited to large datasets and measures system performance along the dimensions of accuracy, relevance, and fluency.
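The manual metrics and the averaged LLM scores can be computed as follows. This is a sketch assuming the standard confusion-count definitions, accuracy = (TP + TN)/(TP + TN + FP + FN), precision = TP/(TP + FP), recall = TP/(TP + FN), and F1 as their harmonic mean, and assuming each LLM score is one number per question averaged over the N questions. The score scale and the example counts are illustrative only, not results from this study.

```python
from statistics import mean
from typing import Dict, List


def confusion_metrics(tp: int, fp: int, tn: int, fn: int) -> Dict[str, float]:
    """Accuracy, precision, recall, and F1 from confusion counts (0.0 when undefined)."""
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # Harmonic mean of precision and recall
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def llm_score_averages(scores: List[Dict[str, float]]) -> Dict[str, float]:
    """Average per-question LLM scores, e.g. accuracy_ave = (1/N) * sum of accuracy_i."""
    dims = ("accuracy", "relevance", "fluency")
    return {f"{d}_ave": mean(s[d] for s in scores) for d in dims}


if __name__ == "__main__":
    # Illustrative counts only
    print(confusion_metrics(tp=90, fp=5, tn=3, fn=2))
    print(llm_score_averages([
        {"accuracy": 4, "relevance": 5, "fluency": 5},
        {"accuracy": 5, "relevance": 4, "fluency": 5},
    ]))
```

Returning 0.0 for undefined ratios (for example, precision when nothing is returned) is a convention chosen here; some evaluation libraries instead emit a warning in that case.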

3.3. Results and Discussions

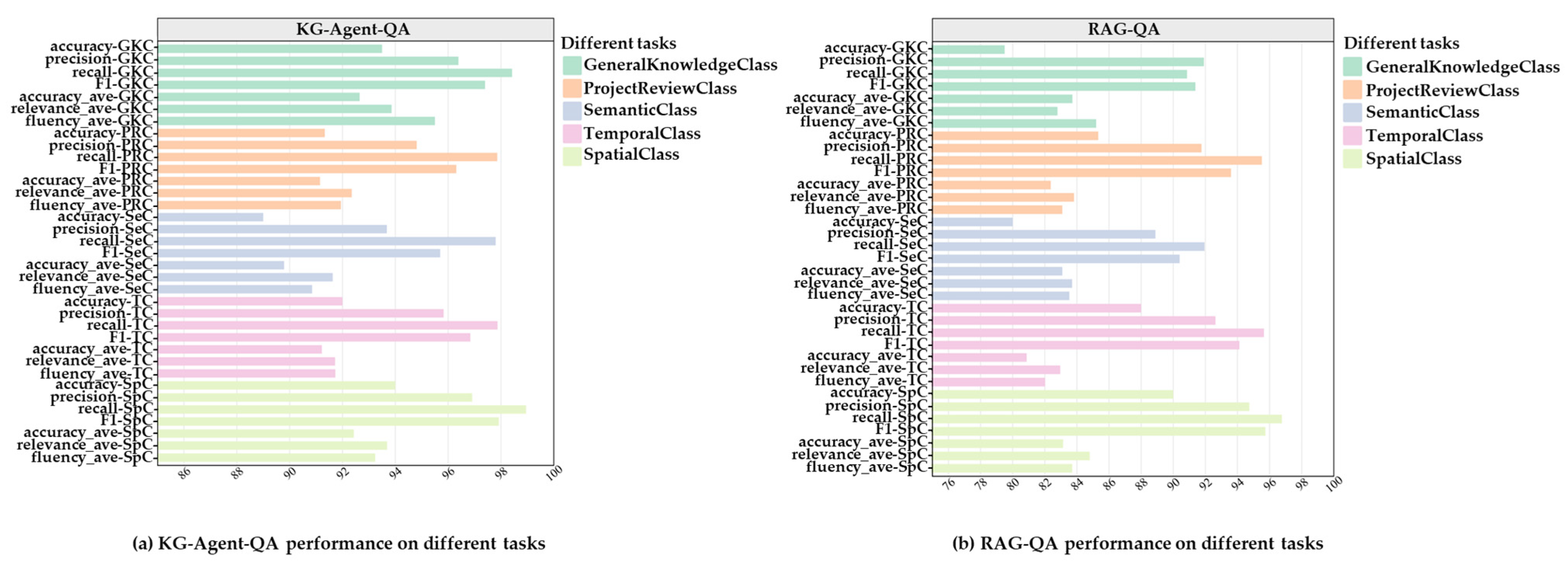

To compare the methods across Q&A tasks, this study conducted comparative experiments using the evaluation metrics described above. The results show that the proposed KG-Agent-QA method has clear advantages in both GKC and PRC tasks (see Table 6). In GKC tasks, KG-Agent-QA achieves an accuracy_ave of 93% and an F1 of 97%, outperforming both LLM-QA (77% accuracy_ave, 86% F1) and RAG-QA (84% accuracy_ave, 91% F1). This improvement can be attributed to the structured organization of domain knowledge in the knowledge graph. KG-Agent-QA also outperforms both baselines in PRC tasks.
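Because accuracy_ave and F1 are reported as percentages, the gaps between methods are clearest as percentage-point differences. The calculation below uses only the GKC figures quoted above; the gains over LLM-QA (16 and 11 points) match the improvements stated in the Conclusions.

```python
from typing import Dict

# GKC results quoted in the text (accuracy_ave and F1), in percent
gkc: Dict[str, Dict[str, int]] = {
    "LLM-QA": {"accuracy_ave": 77, "F1": 86},
    "RAG-QA": {"accuracy_ave": 84, "F1": 91},
    "KG-Agent-QA": {"accuracy_ave": 93, "F1": 97},
}


def gain_pp(results: Dict[str, Dict[str, int]], ours: str, baseline: str, metric: str) -> int:
    """Percentage-point gain of `ours` over `baseline` on one metric."""
    return results[ours][metric] - results[baseline][metric]


for baseline in ("LLM-QA", "RAG-QA"):
    for metric in ("accuracy_ave", "F1"):
        print(f"{metric} vs {baseline}: +{gain_pp(gkc, 'KG-Agent-QA', baseline, metric)} pp")
# accuracy_ave vs LLM-QA: +16 pp
# F1 vs LLM-QA: +11 pp
# accuracy_ave vs RAG-QA: +9 pp
# F1 vs RAG-QA: +6 pp
```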

These findings indicate that, by combining knowledge graphs with intelligent agent technology, KG-Agent-QA achieves more accurate question understanding and knowledge retrieval, and hence better performance across multiple metrics. RAG-QA improves answer accuracy and recall to some extent through retrieval-augmented generation, but it makes less use of deep knowledge and complex reasoning than KG-Agent-QA. LLM-QA relies solely on the pre-trained knowledge in the language model, without external knowledge or reasoning support, which leads to knowledge bias or incomplete answers in complex tasks and therefore less effective performance.

To examine performance across the subcategories of project review questions, we conduct a fine-grained analysis of three representative task types: semantic comprehension (SeC), temporal constraints (TC), and spatial compliance (SpC), as shown in Table 7. KG-Agent-QA shows clear advantages in each. In SpC, it achieves an accuracy_ave of 92% and an F1 of 98%, compared with 83% and 96% for RAG-QA, validating the effectiveness of the spatial analysis tool in project review. In TC, KG-Agent-QA achieves an accuracy_ave of 91%, 10 percentage points higher than RAG-QA, a gain attributable to the temporal logic constraint verification agent, which models time-related features such as approval deadlines. Notably, the method also reaches a recall of 96% in SeC, 6 percentage points higher than RAG-QA, indicating that combining the knowledge graph with the agent routing mechanism effectively reduces missed detections caused by semantic ambiguity.
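The temporal checks are characterized here only through features such as approval deadlines. As a hedged sketch, assume each approval document carries an issue date and a validity period in days; both the record fields and the 365-day period in the example are hypothetical, not drawn from any regulation. Validity on a given date then reduces to date arithmetic, with the expiry day itself counted as valid (also an assumption).

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class ApprovalRecord:
    name: str
    issued: date
    valid_days: int  # hypothetical validity period; real limits depend on the governing rule

    @property
    def expires(self) -> date:
        """Last day on which the document is treated as valid."""
        return self.issued + timedelta(days=self.valid_days)


def check_validity(record: ApprovalRecord, on: date) -> str:
    """Classify the record as not yet issued, expired, or valid on the given date."""
    if on < record.issued:
        return f"{record.name}: not yet issued on {on.isoformat()}"
    if on > record.expires:
        return f"{record.name}: expired on {record.expires.isoformat()}"
    return f"{record.name}: valid until {record.expires.isoformat()}"


if __name__ == "__main__":
    rec = ApprovalRecord("document_A", date(2023, 1, 10), 365)
    print(check_validity(rec, date(2024, 3, 1)))  # document_A: expired on 2024-01-10
    print(check_validity(rec, date(2023, 6, 1)))  # document_A: valid until 2024-01-10
```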

Overall, KG-Agent-QA achieves a systematic advantage across the multidimensional evaluation metrics through entity relationship reasoning in the knowledge graph, targeted activation of spatiotemporal analysis tools, and dynamic decision-making via agent routing, and it adapts especially well to advanced tasks that require cross-modal knowledge fusion.

The comparison of RAG-QA and KG-Agent-QA across Q&A tasks in Figure 6 further shows that KG-Agent-QA has a systematic advantage over RAG-QA in structured knowledge integration and multidimensional reasoning. In GKC, KG-Agent-QA's metrics mostly fall in the 92–98% range, well above RAG-QA's range of 78–92%. This advantage stems from the explicit representation of structured knowledge, such as approval procedures and material lists, in the knowledge graph. In PRC, KG-Agent-QA outperforms RAG-QA on semantic, temporal, and spatial tasks, further validating the collaborative knowledge graph and agent framework for complex domain-specific Q&A.

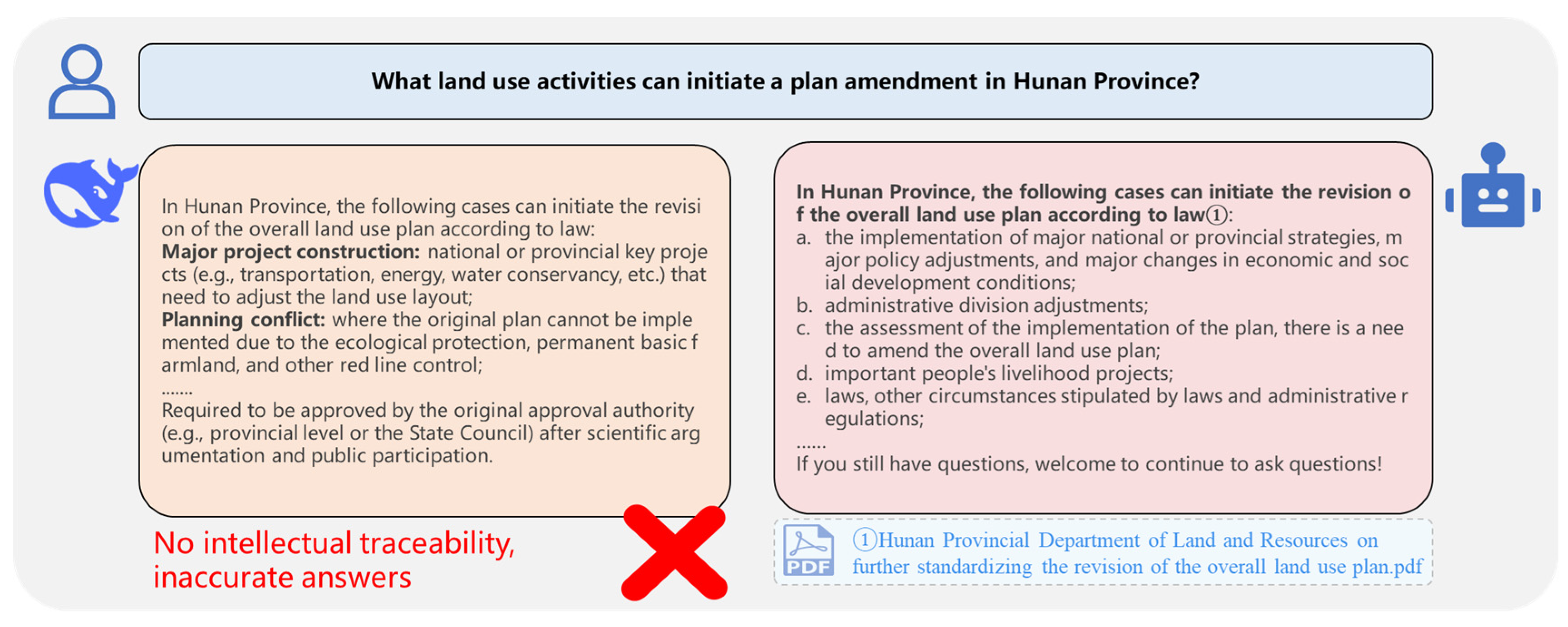

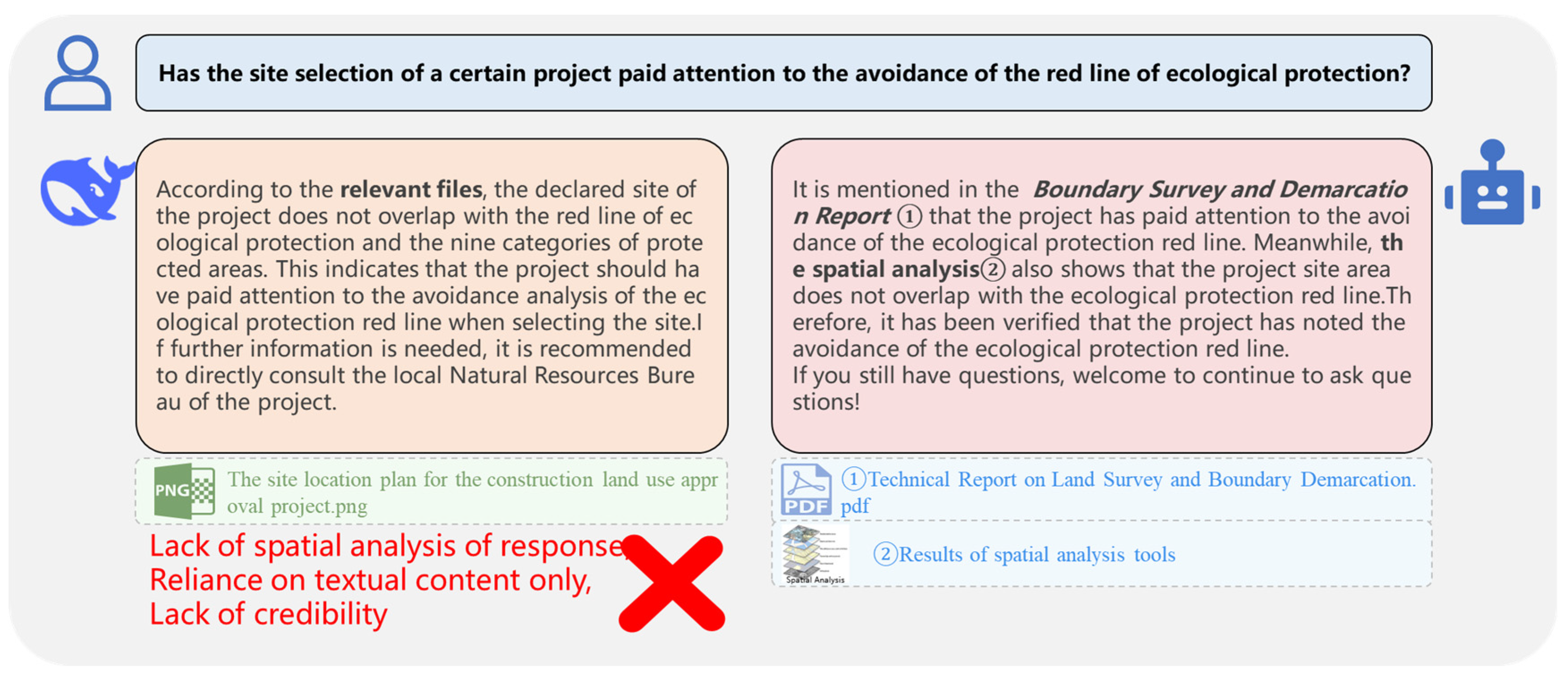

As shown in Figure 7, by drawing on the LUAKG, the KG-Agent-QA method substantially reduces hallucinations in LLM-based answers, and by linking answers to policy clauses, legal regulations, and other documents, it greatly improves their interpretability. In addition, the Q&A comparison of the two methods in Figure 8 shows that KG-Agent-QA, using spatiotemporal analysis tools, can effectively assess the spatial constraints and temporal validity of projects. By considering semantic, temporal, and spatial dimensions together, it produces more reliable answers, improving the precision and efficiency of the land approval process.
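Combining semantic, temporal, and spatial findings into one answer can be as simple as a conjunction that keeps every failing reason for the explanation. The sketch below assumes each check returns a pass flag and a short reason; this interface and the example messages are hypothetical and are not taken from the described system.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CheckResult:
    dimension: str  # e.g. "semantic", "temporal", or "spatial"
    passed: bool
    reason: str


def approval_suggestion(results: List[CheckResult]) -> str:
    """Conjunctive merge: suggest approval only if every dimension passes; list each failure."""
    if not results:
        raise ValueError("at least one check result is required")
    failures = [r for r in results if not r.passed]
    if not failures:
        return "Suggest approval: all checks passed."
    details = "; ".join(f"{r.dimension}: {r.reason}" for r in failures)
    return f"Suggest revision before approval ({details})."


if __name__ == "__main__":
    results = [
        CheckResult("semantic", True, "required materials present"),
        CheckResult("temporal", True, "approval documents still valid"),
        CheckResult("spatial", False, "parcel overlaps redline zone_A"),
    ]
    print(approval_suggestion(results))
    # Suggest revision before approval (spatial: parcel overlaps redline zone_A).
```

Keeping the failing reasons, rather than a single pass/fail flag, is what lets the final answer explain why a project was flagged.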

4. Conclusions

To improve decision support and work efficiency in land use approval, this study proposes a spatiotemporal–semantic coupling intelligent Q&A method for land use approval based on KGs and intelligent agent technology. By constructing the LUAKG and integrating intelligent agents, the proposed KG-Agent-QA method provides more precise and intelligent answers across various Q&A tasks, with particularly clear advantages in complex project review tasks. The main conclusions are as follows:

Superiority in Q&A Tasks: KG-Agent-QA outperforms LLM-QA and RAG-QA in both GKC and PRC tasks; in GKC tasks, its accuracy_ave and F1 exceed those of LLM-QA by 16 and 11 percentage points, respectively. This confirms the effectiveness of KGs in structuring domain knowledge and the dynamic decision-making capability of intelligent agents.

Enhanced Multidimensional Q&A Ability: KG-Agent-QA shows higher accuracy and recall in spatial and temporal Q&A tasks, and effectively reduces missed detections caused by semantic ambiguity in semantic Q&A tasks. This further validates the advantage of the KG and intelligent agent collaborative architecture in handling complex domain-specific tasks.

Advantages of Spatiotemporal–Semantic Coupling: By effectively utilizing spatiotemporal analysis tools, KG-Agent-QA performs comprehensive analysis across spatial, temporal, and semantic dimensions. The integration of multi-modal information systematically improves the precision and efficiency of decision support for land approval.

Effectiveness of the KG and Intelligent Agent Collaborative Architecture: The KG provides a structured foundation for domain knowledge, while intelligent agent technology enables dynamic decision support. This collaborative architecture allows for deeper reasoning and judgment in complex tasks, making it especially suitable for land use approval that requires the consideration of multiple conditions and cross-modal information.

This study proposes an intelligent Q&A framework that combines KGs with intelligent agent technology. Through effective spatiotemporal analysis and multidimensional reasoning, the method significantly enhances decision support for land use approval. It not only handles conventional policy and regulatory questions but also addresses complex project review tasks, demonstrating strong scalability and practical application value. However, the current method faces challenges such as the high cost of constructing and updating the knowledge graph, limited ability to process unstructured text, and insufficient generalization of the intelligent agents. Future research should explore automated mechanisms for knowledge graph updates, improve the system's understanding of unstructured text, develop multi-agent systems for cross-departmental collaboration, enhance spatial analysis capabilities, and establish a more comprehensive decision explanation mechanism. These improvements will help build more intelligent and efficient decision support systems for land use approval and drive the intelligent and digital transformation of territorial spatial planning.