Knit-FLUX: Simulation of Knitted Fabric Images Based on Low-Rank Adaptation of Diffusion Models

Abstract

1. Introduction

2. Theory

2.1. Diffusion Model

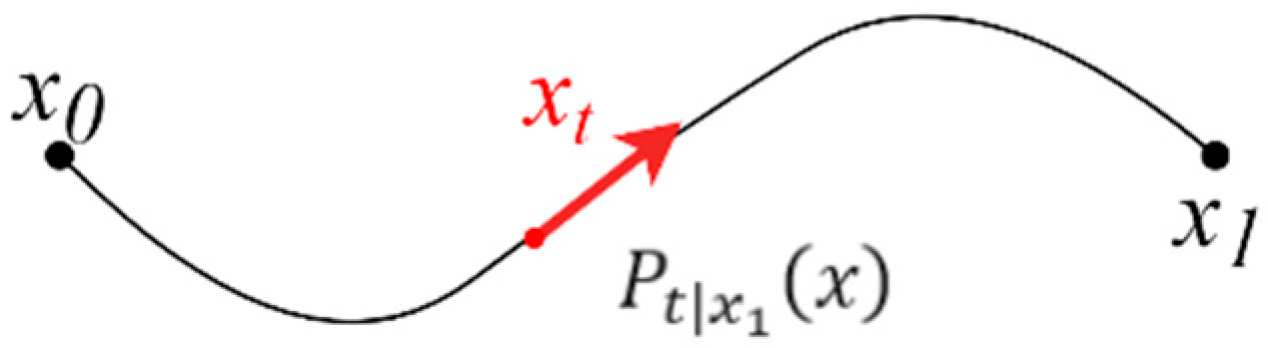

2.2. Conditional Flow Matching

3. Methods

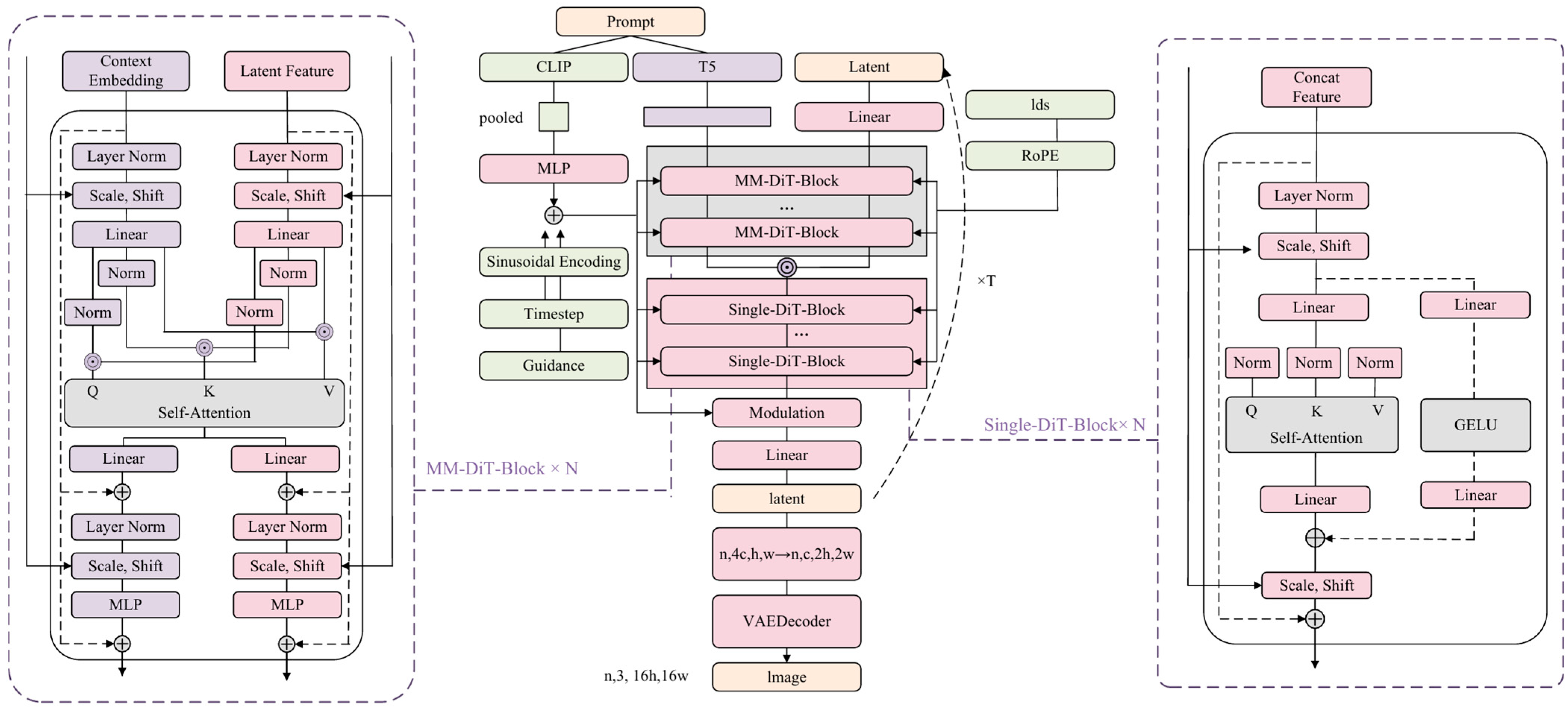

3.1. Text-to-Image Architecture

3.2. Training the Low-Rank Adaptation of the Model

4. Experiment and Result Analysis

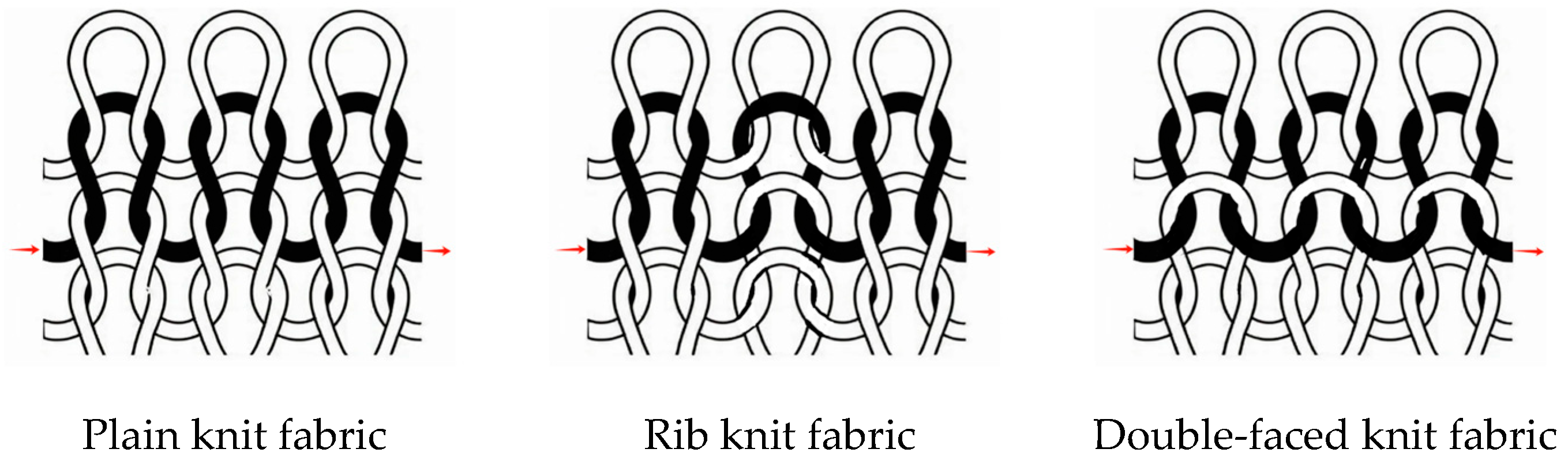

4.1. Dataset Preparation

4.2. Experimental Setup

4.3. Generation Parameters

4.4. Generation Results

4.5. Evaluation

4.5.1. Qualitative Assessment

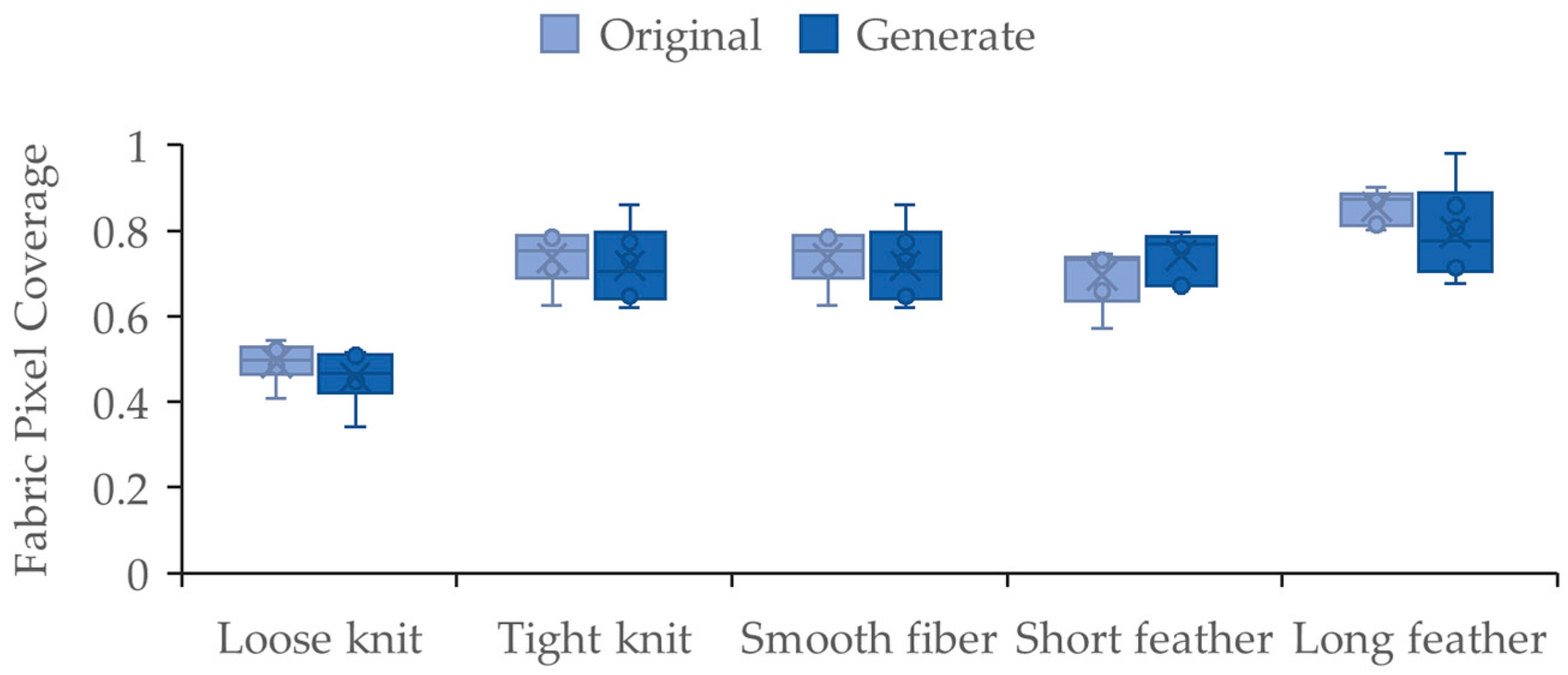

4.5.2. Quantitative Assessment

4.5.3. Generation Efficiency

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SSIM | Structural similarity index measure |
| VAE | Variational autoencoder |
| GAN | Generative Adversarial Network |
| SD3 | Stable Diffusion 3 |
| MM-DiT | Multimodal Diffusion Transformer |
| FLUX | FLUX.1 Dev |
| Single-DiT | Single Transformer Block |
| PEFT | Parameter-efficient fine-tuning |
| Knit-FLUX | Low-Rank Adaptation of the FLUX model |
| Knit-Diff | Low-Rank Adaptation of the SD1.5 model |
| CFM | Conditional Flow Matching |
| ODE | Ordinary Differential Equation |
| CLIP | Contrastive Language-Image Pre-training |
| T5 | Text-to-Text Transfer Transformer (encoder) |
| RoPE | Rotary Position Embedding |
| adaLN | Adaptive Layer Normalization |
| MLP | Multi-Layer Perceptron |
| GELU | Gaussian Error Linear Unit (activation function) |
| LoRA | Low-Rank Adaptation |
| AdamW | Adam with Weight Decay |

| Method | SSIM ↑ | HP ↑ |
|---|---|---|
| Knit-Diff | 0.3537 | 0.383 |
| Knit-FLUX | 0.6528 | 0.883 |
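The SSIM column uses the structural similarity index measure, which compares two images through their means (luminance), variances (contrast) and covariance (structure). The exact SSIM settings behind the values above (window size, color handling, choice of reference images) are not stated here, so the following NumPy sketch makes simplifying assumptions: grayscale 8-bit inputs (`data_range=255`), the commonly used constants K1 = 0.01 and K2 = 0.03, and a single global window over the whole image instead of the mean over sliding Gaussian windows used in the standard formulation. `global_ssim` is a hypothetical helper for illustration, not the authors' code.

```python
import numpy as np


def global_ssim(x: np.ndarray, y: np.ndarray, data_range: float = 255.0) -> float:
    """Single-window SSIM between two grayscale images of equal shape.

    Simplification (assumption): statistics are taken over the whole image
    rather than averaged over sliding Gaussian windows.
    """
    if x.shape != y.shape:
        raise ValueError("images must have the same shape")
    x = x.astype(np.float64)
    y = y.astype(np.float64)

    # Stabilizing constants with the usual K1 = 0.01 and K2 = 0.03.
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2

    # Luminance (means), contrast (variances) and structure (covariance).
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()

    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x**2 + mu_y**2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    real = rng.integers(0, 256, size=(64, 64))
    noisy = np.clip(real + rng.normal(0, 25, size=real.shape), 0, 255)
    print(global_ssim(real, real))   # close to 1.0 for identical images
    print(global_ssim(real, noisy))  # added noise lowers the score below 1.0
```

Because one window spans the whole image, this variant is coarser than windowed SSIM and should not be expected to reproduce the reported values; for the standard windowed form, scikit-image's `skimage.metrics.structural_similarity` is a common choice.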

| Metric | Knit-Diff (SD1.5) | SDXL | Knit-FLUX (FLUX) |
|---|---|---|---|
| Time (s) | 1.9 | 3.76 | 16.8 |
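Expressed as ratios, the generation times above show the relative cost of the FLUX-based model. This is plain arithmetic on the reported values, with $t$ denoting the measured time of each model, and it assumes (this section does not say) that all three timings were taken on the same hardware with comparable settings such as resolution and sampling steps:

```latex
\frac{t_{\text{Knit-FLUX}}}{t_{\text{Knit-Diff}}} = \frac{16.8\,\text{s}}{1.9\,\text{s}} \approx 8.84,
\qquad
\frac{t_{\text{Knit-FLUX}}}{t_{\text{SDXL}}} = \frac{16.8\,\text{s}}{3.76\,\text{s}} \approx 4.47,
\qquad
\frac{t_{\text{SDXL}}}{t_{\text{Knit-Diff}}} = \frac{3.76\,\text{s}}{1.9\,\text{s}} \approx 1.98
```

Under that assumption, Knit-FLUX takes close to nine times as long per generation as Knit-Diff, in exchange for the higher SSIM and HP scores it records.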

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, X.; Peng, J.; Lu, Z.; Wang, Y.; Liu, F. Knit-FLUX: Simulation of Knitted Fabric Images Based on Low-Rank Adaptation of Diffusion Models. Appl. Sci. 2025, 15, 8999. https://doi.org/10.3390/app15168999

Liu X, Peng J, Lu Z, Wang Y, Liu F. Knit-FLUX: Simulation of Knitted Fabric Images Based on Low-Rank Adaptation of Diffusion Models. Applied Sciences. 2025; 15(16):8999. https://doi.org/10.3390/app15168999

Chicago/Turabian Style: Liu, Xiaochen, Jiajia Peng, Zhiwen Lu, Yongxue Wang, and Feng Liu. 2025. "Knit-FLUX: Simulation of Knitted Fabric Images Based on Low-Rank Adaptation of Diffusion Models" Applied Sciences 15, no. 16: 8999. https://doi.org/10.3390/app15168999

APA Style: Liu, X., Peng, J., Lu, Z., Wang, Y., & Liu, F. (2025). Knit-FLUX: Simulation of Knitted Fabric Images Based on Low-Rank Adaptation of Diffusion Models. Applied Sciences, 15(16), 8999. https://doi.org/10.3390/app15168999