XSS Attack Detection Method Based on CNN-BiLSTM-Attention

Abstract

1. Introduction

- A regular-expression-based word segmentation algorithm is designed to identify the semantic boundaries of programming-language symbols, which better preserves the semantic and structural integrity of the attack text.

- A deep learning model is proposed that integrates a convolutional neural network (CNN), a bidirectional long short-term memory network (BiLSTM), and a multi-head attention mechanism. The model processes input in three cooperating stages: local feature perception, long-range dependency modeling, and key feature enhancement. This design addresses the semantic gap problem of traditional methods.

- Experimental results on two real-world datasets (XSSed-DMOZ and Merwani-XSS) show that the model outperforms mainstream baseline models in XSS attack detection and can effectively handle complex and varied XSS attacks.
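The regular-expression segmentation named in the first contribution can be illustrated with a small example. This is a minimal sketch, not the authors' rule set: the pattern list, the URL-decoding and lowercasing steps, and the names `TOKEN_PATTERN` and `tokenize` are assumptions introduced here for illustration.

```python
import re
from urllib.parse import unquote

# Hypothetical token patterns, tried left to right at each position.
# They are illustrative only and are not taken from the paper.
TOKEN_PATTERN = re.compile(
    r"""
    </?[a-z][a-z0-9]*                 # tag start, e.g. <script or </script
  | [a-z][a-z0-9_-]*=                 # attribute or event handler, e.g. onerror=
  | [a-z_$][\w$.]*\(                  # function call start, e.g. alert(
  | "[^"]*" | '[^']*'                 # quoted string literal
  | [a-z][a-z0-9+.-]*://[^\s"'<>]+    # URL with a scheme
  | \w+                               # bare identifier or number
  | [^\w\s]                           # any other single symbol
    """,
    re.VERBOSE,
)


def tokenize(payload: str) -> list[str]:
    """Split a raw request string into script-aware tokens."""
    # Assumed normalization: undo percent-encoding, then lowercase.
    text = unquote(payload).lower()
    # The pattern has no capturing groups, so findall returns whole matches.
    return TOKEN_PATTERN.findall(text)


if __name__ == "__main__":
    print(tokenize("<script>alert('xss')</script>"))
    # ['<script', '>', 'alert(', "'xss'", ')', '</script', '>']
```

Keeping `<script`, `onerror=`, and `alert(` as single tokens, rather than splitting on every symbol, is one way to preserve the structural cues that a whitespace or character-level split would lose.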

2. Related Work

2.1. Traditional XSS Detection Methods

2.2. XSS Detection Method Based on Deep Learning

3. Method

3.1. Overall Framework
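
The three stages named in the contributions (local feature perception, long-range dependency modeling, and key feature enhancement) can be written compactly in standard notation. The block below is a sketch under assumptions, not the paper's exact formulation: the kernel width $k$, the ReLU activation, the mean pooling before the classifier, and the sigmoid output are illustrative choices that this outline does not specify. Here $\mathbf{x}_t$ is the embedding of token $t$, $\mathbf{H}$ stacks the BiLSTM states $\mathbf{h}_t$ as rows, $\mathbf{a}_t$ is row $t$ of $\mathbf{A}$, $m$ is the number of heads, and $T$ is the sequence length.

```latex
\begin{aligned}
\mathbf{z}_t &= \mathrm{ReLU}\!\left(\mathbf{W}_c\,[\mathbf{x}_t;\dots;\mathbf{x}_{t+k-1}] + \mathbf{b}_c\right)
  && \text{(CNN: local features)} \\
\mathbf{h}_t &= \left[\overrightarrow{\mathrm{LSTM}}\!\left(\mathbf{z}_t, \overrightarrow{\mathbf{h}}_{t-1}\right);\
  \overleftarrow{\mathrm{LSTM}}\!\left(\mathbf{z}_t, \overleftarrow{\mathbf{h}}_{t+1}\right)\right]
  && \text{(BiLSTM: long-range context)} \\
\mathrm{head}_i &= \mathrm{softmax}\!\left(\frac{(\mathbf{H}\mathbf{W}_i^{Q})(\mathbf{H}\mathbf{W}_i^{K})^{\top}}{\sqrt{d_k}}\right)\mathbf{H}\mathbf{W}_i^{V}
  && \text{(scaled dot-product attention)} \\
\mathbf{A} &= \left[\mathrm{head}_1;\dots;\mathrm{head}_m\right]\mathbf{W}^{O}
  && \text{(multi-head: key feature enhancement)} \\
\hat{y} &= \sigma\!\left(\mathbf{w}^{\top}\,\frac{1}{T}\sum_{t=1}^{T}\mathbf{a}_t + b\right)
  && \text{(classifier)}
\end{aligned}
```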

3.2. Data Preprocessing

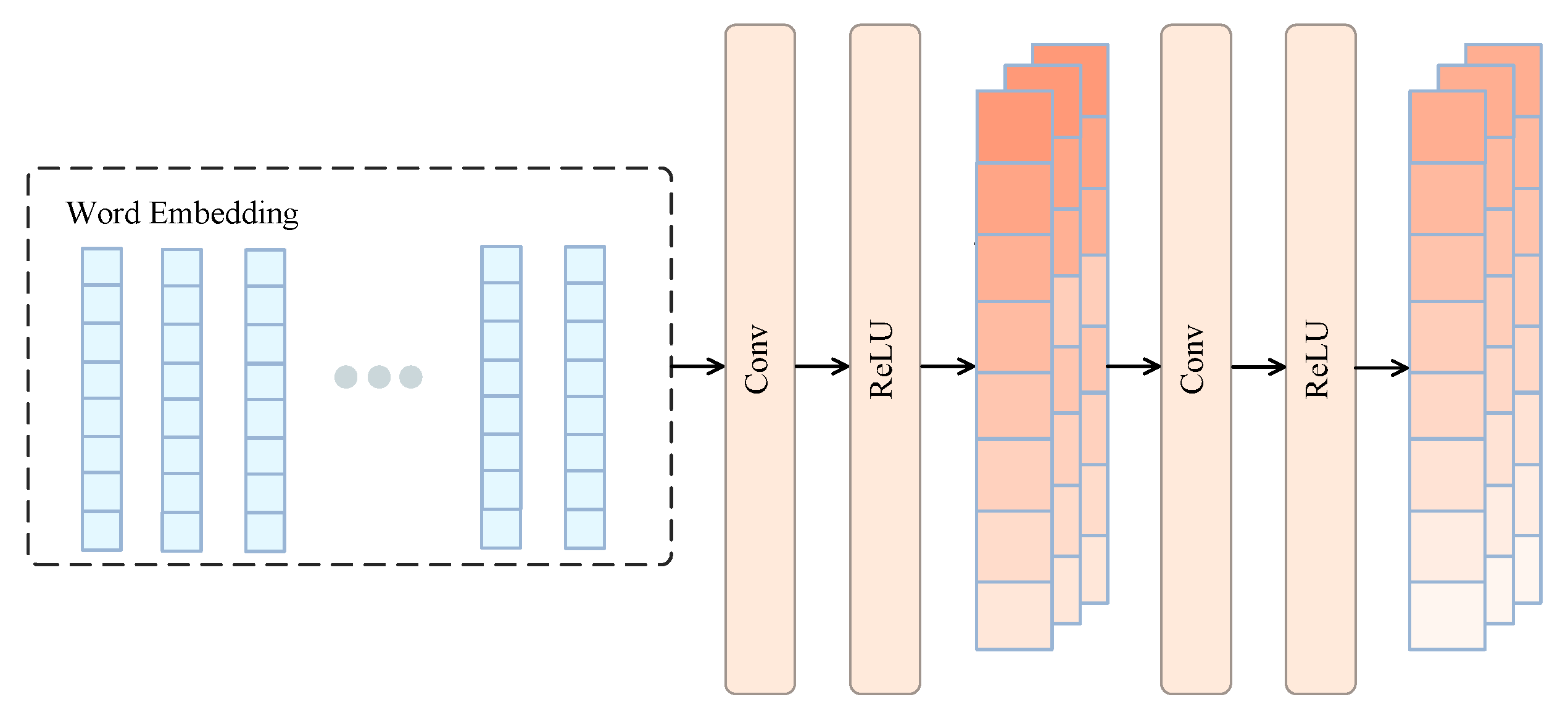

3.3. Convolutional Feature Extraction Module

3.4. Bidirectional LSTM Context Encoding

3.5. Multi-Head Attention Mechanism

3.6. Classifier

4. Experimental Analysis

4.1. Dataset

4.2. Evaluation Indicators

4.3. Benchmark Model

4.4. Experimental Environment

4.5. Experimental Results and Analysis

4.6. Ablation Studies

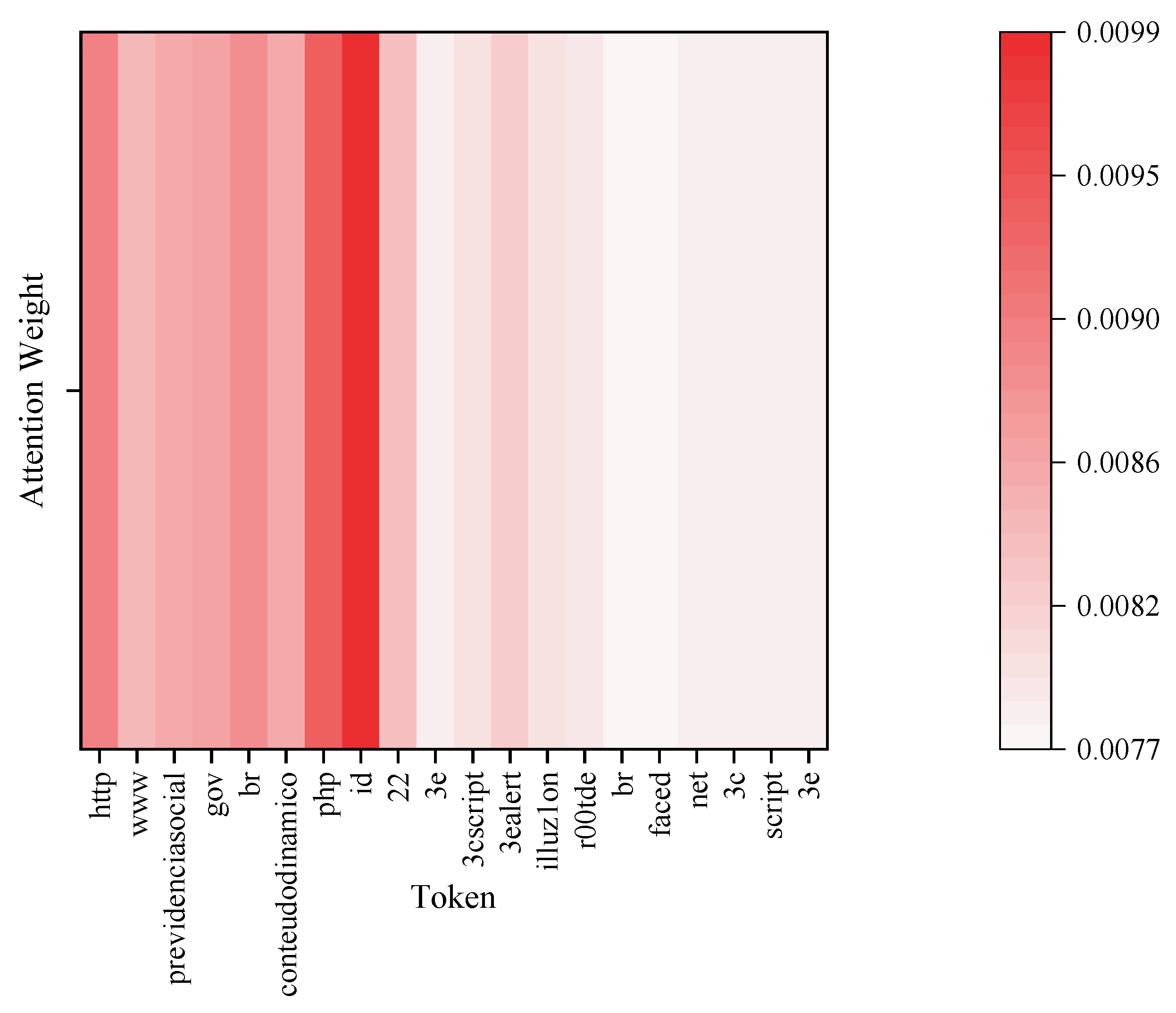

4.7. Model Interpretability Analysis Based on Attention Mechanism

4.8. Parameter Impact Analysis

4.8.1. Word Embedding Dimension

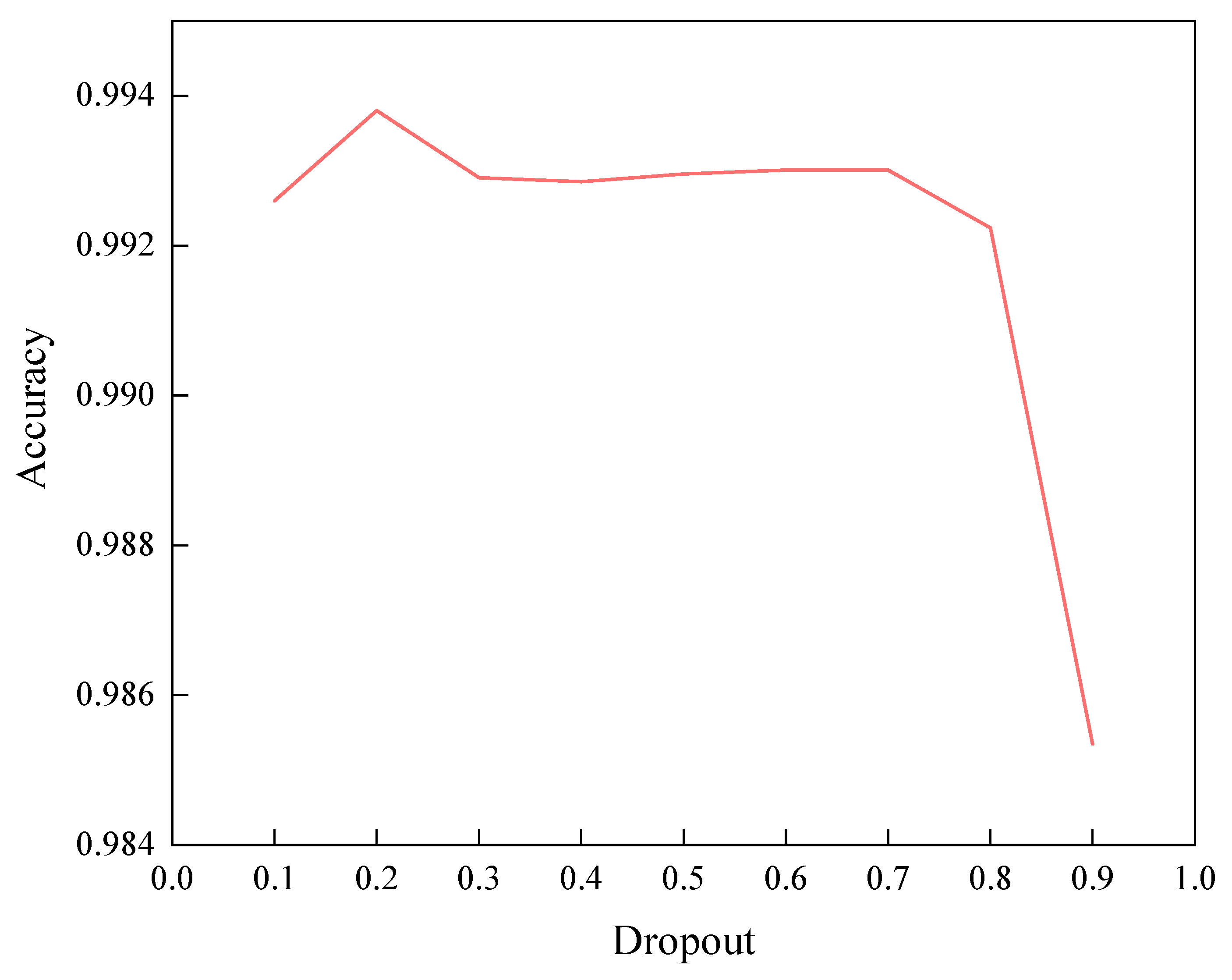

4.8.2. Dropout Ratio

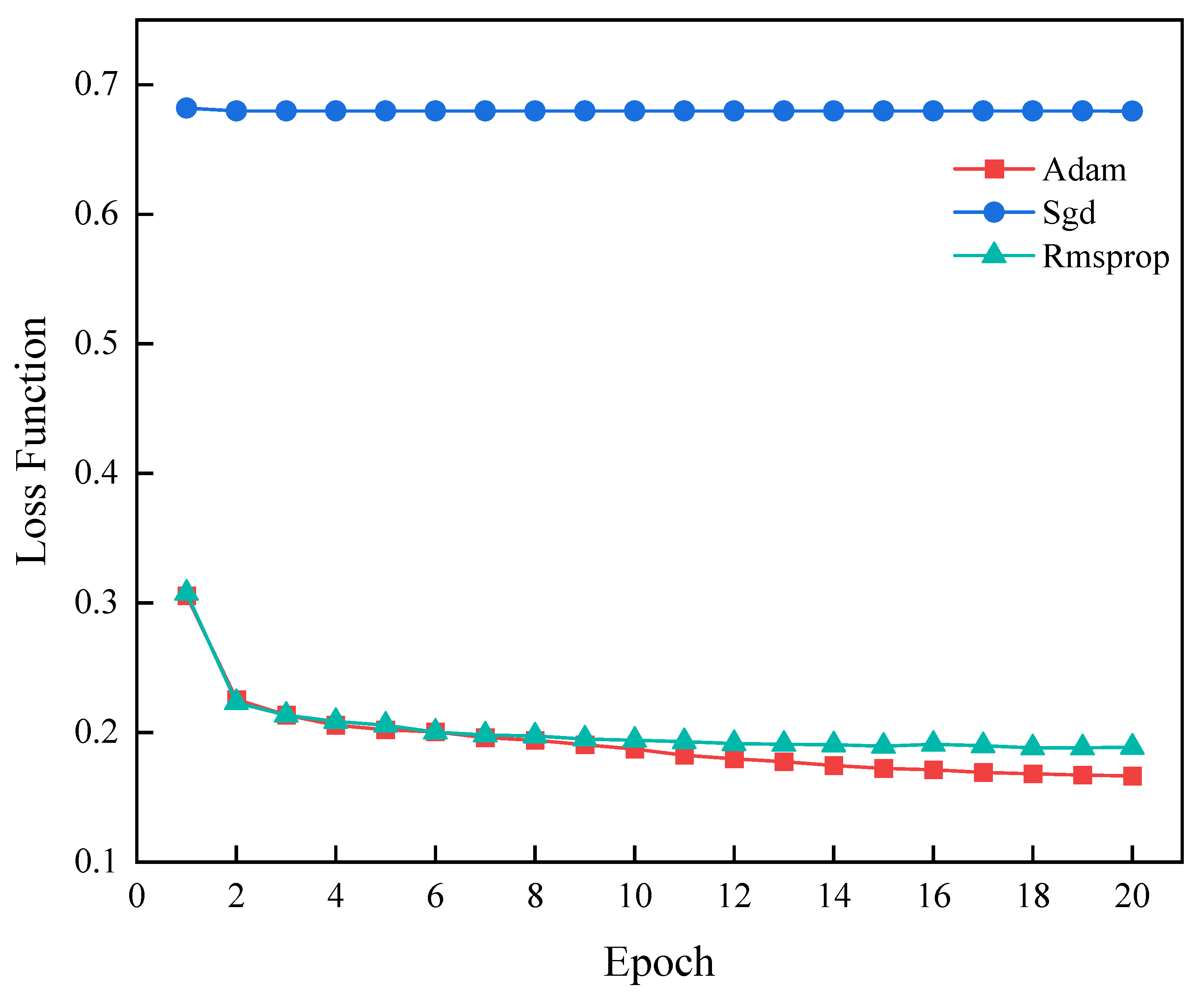

4.8.3. Optimizer

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Dataset | Malicious | Benign | Total |
|---|---|---|---|
| XSSed-DMOZ | 33,426 | 31,407 | 64,833 |
| Merwani-XSS | 14,989 | 27,675 | 42,664 |

| Dataset | Method | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|---|
| XSSed-DMOZ | SVM | 0.9760 | 0.9761 | 0.9767 | 0.9760 |
| | CNN | 0.9878 | 0.9878 | 0.9878 | 0.9878 |
| | LSTM | 0.9916 | 0.9896 | 0.9898 | 0.9897 |
| | BiLSTM | 0.9923 | 0.9912 | 0.9915 | 0.9913 |
| | CNN-LSTM | 0.9911 | 0.9899 | 0.9901 | 0.9900 |
| | Transformer | 0.9869 | 0.9738 | 0.9719 | 0.9725 |
| | GCN | 0.9852 | 0.9889 | 0.9822 | 0.9856 |
| | CNN-BiLSTM-Attention | 0.9938 | 0.9936 | 0.9936 | 0.9937 |
| Merwani-XSS | SVM | 0.9505 | 0.9427 | 0.9496 | 0.9460 |
| | CNN | 0.9589 | 0.9536 | 0.9539 | 0.9537 |
| | LSTM | 0.9532 | 0.9538 | 0.9427 | 0.9479 |
| | BiLSTM | 0.9498 | 0.9510 | 0.9386 | 0.9443 |
| | CNN-LSTM | 0.9603 | 0.9515 | 0.9505 | 0.9510 |
| | Transformer | 0.9543 | 0.9505 | 0.9487 | 0.9496 |
| | GCN | 0.9552 | 0.9517 | 0.9180 | 0.9345 |
| | CNN-BiLSTM-Attention | 0.9609 | 0.9602 | 0.9598 | 0.9599 |
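
The four metric columns in the comparison table follow the usual confusion-matrix definitions. The sketch below shows those standard formulas for the binary case, treating the malicious class as positive. The function name `binary_metrics` and the example counts are hypothetical, and this excerpt does not state whether the reported scores are per-class or averaged over classes, so the code should not be read as the authors' evaluation script.

```python
def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> dict[str, float]:
    """Accuracy, precision, recall, and F1 from binary confusion-matrix counts.

    tp/fp/fn/tn are counts with the malicious class taken as positive
    (an assumption made for this illustration).
    """
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


if __name__ == "__main__":
    # Hypothetical counts, not taken from the paper's experiments.
    for name, value in binary_metrics(tp=90, fp=10, fn=5, tn=95).items():
        print(f"{name}: {value:.4f}")
```

Returning 0.0 when a denominator is zero is a convention chosen here to keep the function total; other tools raise a warning or return NaN in that case.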

| Variant | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| BiLSTM-Attention | 0.9909 | 0.9896 | 0.9898 | 0.9897 |
| CNN-Attention | 0.9894 | 0.9872 | 0.9876 | 0.9873 |
| CNN-BiLSTM | 0.9903 | 0.9892 | 0.9894 | 0.9893 |
| CNN-LSTM-Attention | 0.9897 | 0.9894 | 0.9897 | 0.9895 |
| CNN-BiLSTM-Attention | 0.9938 | 0.9936 | 0.9936 | 0.9937 |

| Embedding Dimension | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| 32 | 0.9924 | 0.9923 | 0.9926 | 0.9924 |
| 64 | 0.9929 | 0.9927 | 0.9930 | 0.9928 |
| 128 | 0.9933 | 0.9931 | 0.9934 | 0.9933 |
| 256 | 0.9938 | 0.9936 | 0.9936 | 0.9937 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, Z.; Liu, F.; Gu, Z.; Liu, Y. XSS Attack Detection Method Based on CNN-BiLSTM-Attention. Appl. Sci. 2025, 15, 8924. https://doi.org/10.3390/app15168924