4.1. Introduction to the Dataset

The first dataset is StreamSpot, which is designed for system behavior analysis and anomaly detection. It covers several scenarios and gives researchers a platform for testing and validating security detection methods. The dataset consists of six scenarios: five reflect normal user behavior, and one simulates an attack in which a program is downloaded from a malicious URL and a Flash vulnerability is exploited to gain system administrator privileges. To ensure the diversity and reliability of the data, each scenario was run 100 times during data collection, and the SystemTap tool recorded detailed runtime system information, which is crucial for the subsequent system behavior analysis and model construction. The StreamSpot dataset is well suited to developing and testing anomaly detection algorithms, particularly those based on graph analytics, machine learning, and streaming data processing. Detailed information on the StreamSpot dataset is shown in Table 2:

The second dataset is the NSL-KDD dataset. It is a commonly used test dataset in network IDSs and an improved version of the KDD 99 dataset used in the KDD Cup 1999 competition. The dataset contains a large number of network connection records. Each record is labeled to indicate whether the connection represents normal network activity or a specific type of attack. The NSL-KDD dataset consists of four sub-datasets: KDDTest+ (the complete test set), KDDTest-21 (a subset of KDDTest+ that excludes the records with difficulty level 21), KDDTrain+ (the complete training set), and KDDTrain+20Percent (a 20% subset of KDDTrain+). Each record contains 43 features, which can be categorized as follows:

Basic features: They are extracted from TCP/IP connections, such as the duration, protocol type, and service type.

Traffic features: They are related to the same host or service, such as the number of connections, the error rate, etc.

Content features: They reflect the content of the packets, such as the number of login attempts and the number of file creations.

The breakdown of the sub-categories of each attack is given in Table 3:

4.2. Experimental Results on the StreamSpot Dataset

Compared to the NSL-KDD dataset, the StreamSpot dataset is a collection of experimental data specifically designed for anomaly detection in edge streams. Its data collection, annotation, and preprocessing phases take into account the characteristics of edge devices and the types of attacks they may suffer, so testing the IDS on StreamSpot more accurately reflects the system’s performance in an edge device environment. Moreover, the attack scenario in StreamSpot, in which a program is downloaded from a malicious URL and a Flash vulnerability is used to obtain system administrator privileges, is close to the attacks that real edge devices may face. This helps to evaluate the detection capability and adaptability of the IDS more realistically.

In the experimental setup, 400 of the 500 normal samples were used for training and 100 for testing, while all 100 malicious samples were allocated exclusively to testing. In addition, 1000 generated samples were included in the training set to enhance model generalization. The training configuration comprised 10 epochs, a learning rate of 0.0001, and a dropout rate of 0.4 to prevent overfitting.

To determine the optimal number of training epochs, we designed an experiment in which the epoch count served as the independent variable and three key metrics served as dependent variables: the AUC (area under the receiver operating characteristic curve) for class discrimination, the AP (Average Precision) for precision-recall balance, and the FPR (False Positive Rate) for specificity quantification. By systematically varying the number of epochs from 1 to 10 and analyzing the corresponding changes in these metrics, we identified the inflection point at Epoch 10, where the model achieved a Pareto-optimal trade-off among detection accuracy, reliability, and the false alarm rate. This provides empirical justification for the chosen training duration. The model was also evaluated using four other metrics (accuracy, precision, recall, and the F1-score) and compared with other mainstream models.
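The split described above can be sketched as follows. This is only an illustration under stated assumptions: the helper name `split_streamspot`, the tuple placeholders standing in for provenance graphs, and the random seed are hypothetical, and the way the 1000 generated samples are produced is not specified in this section.

```python
import random

# Hyperparameters as stated in the text.
CONFIG = {"epochs": 10, "learning_rate": 1e-4, "dropout": 0.4}


def split_streamspot(normal, malicious, generated, n_train_normal=400, seed=0):
    """Split samples as described: 400 normal + generated for training,
    remaining normal + all malicious for testing (hypothetical helper)."""
    rng = random.Random(seed)
    shuffled = list(normal)
    rng.shuffle(shuffled)
    train = shuffled[:n_train_normal] + list(generated)
    test = shuffled[n_train_normal:] + list(malicious)
    return train, test


# Placeholder samples; real entries would be provenance graphs.
normal = [("normal", i) for i in range(500)]
malicious = [("attack", i) for i in range(100)]
generated = [("generated", i) for i in range(1000)]

train, test = split_streamspot(normal, malicious, generated)
print(len(train), len(test))
```

Under these assumptions the training set holds 1400 samples and the test set 200, with no malicious sample seen during training.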

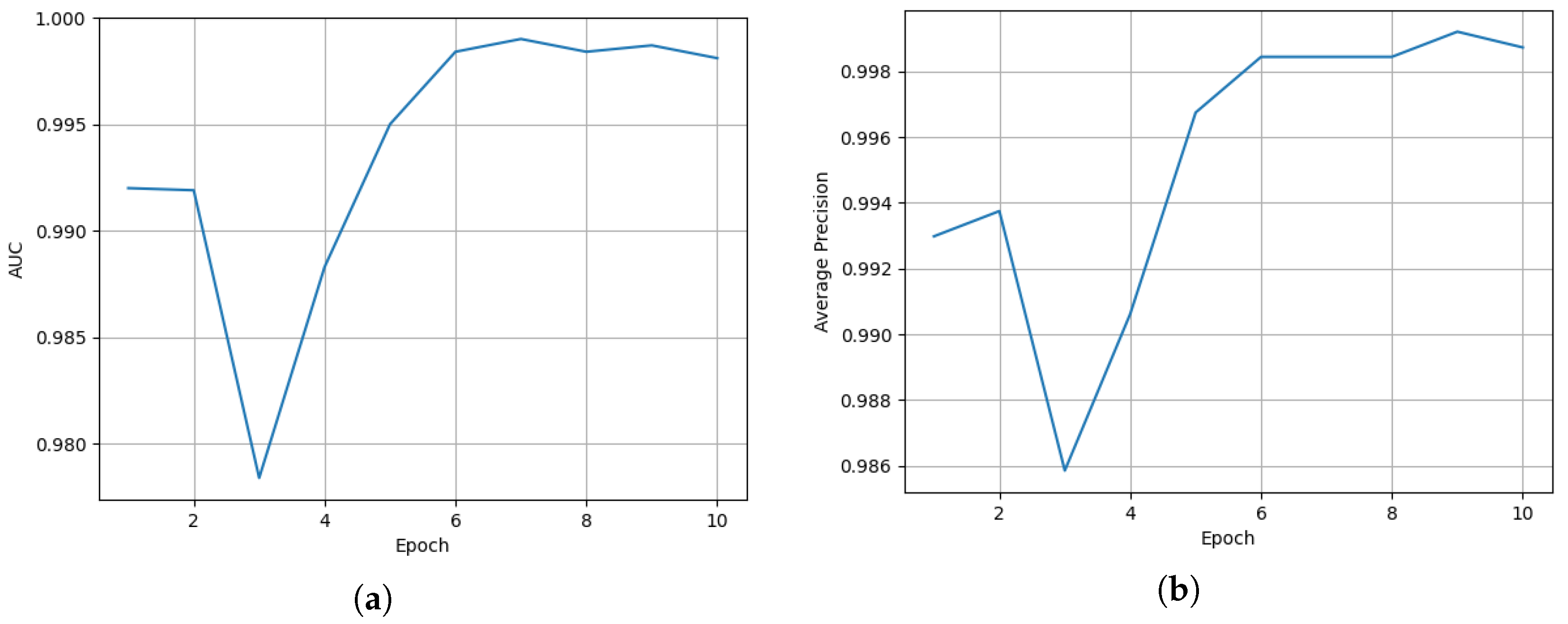

The AUC and AP curves are shown in Figure 5. Both the AUC and AP are important metrics for evaluating binary classification models, especially on unbalanced datasets; the AUC gives a comprehensive view of the model’s performance under different thresholds. The closer the AUC and AP values are to one, the better the model is usually considered to perform. As can be seen in Figure 5, the AUC value starts at 1.0000 at Epoch 1, undergoes a series of slight decreases, and finally stabilizes at 0.9986 at Epoch 10. This trend suggests the following.

At the beginning of training (Epoch 1), the average precision (AP) of the model is around 0.99. This indicates that the model already has some classification ability at the start, but there is still room for improvement. As training continues, the AUC value gradually decreases, which may indicate that the model is gradually generalizing to the test set: it learns a wider range of features that may not be fully applicable to the training set. This is a good sign because it indicates that the model is starting to generalize better. As the number of epochs increases, the AP begins to improve gradually, reaching a maximum of 0.998. This indicates that the model is learning more effective features and is better able to adapt to the training data. However, beyond a certain stage of training, the AP starts to decrease and eventually returns to around 0.986. This is attributed to overfitting, where the model’s performance is over-optimized on the training set while its performance on unseen data decreases. We therefore set the number of epochs to 10.
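To make the two curve metrics concrete, the following is a minimal sketch of how AUC and AP can be computed from anomaly scores. It is not the evaluation code used in the experiments; the function names and the toy labels and scores are hypothetical, and the AP variant shown ignores tied scores for simplicity.

```python
def roc_auc(labels, scores):
    """AUC as the probability that a random positive outranks a random negative."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(pos) * len(neg))


def average_precision(labels, scores):
    """AP: mean of the precision values at the rank of each positive sample."""
    ranked = sorted(zip(scores, labels), key=lambda t: t[0], reverse=True)
    hits = 0
    total = 0.0
    for rank, (_, y) in enumerate(ranked, start=1):
        if y == 1:
            hits += 1
            total += hits / rank
    return total / hits  # assumes at least one positive sample


# Toy example: 1 = malicious graph, 0 = benign graph (hypothetical scores).
labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
print(round(roc_auc(labels, scores), 4), round(average_precision(labels, scores), 4))
```

Both functions return 1.0 only when every malicious sample is scored above every benign one, which is why values close to one indicate good separation.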

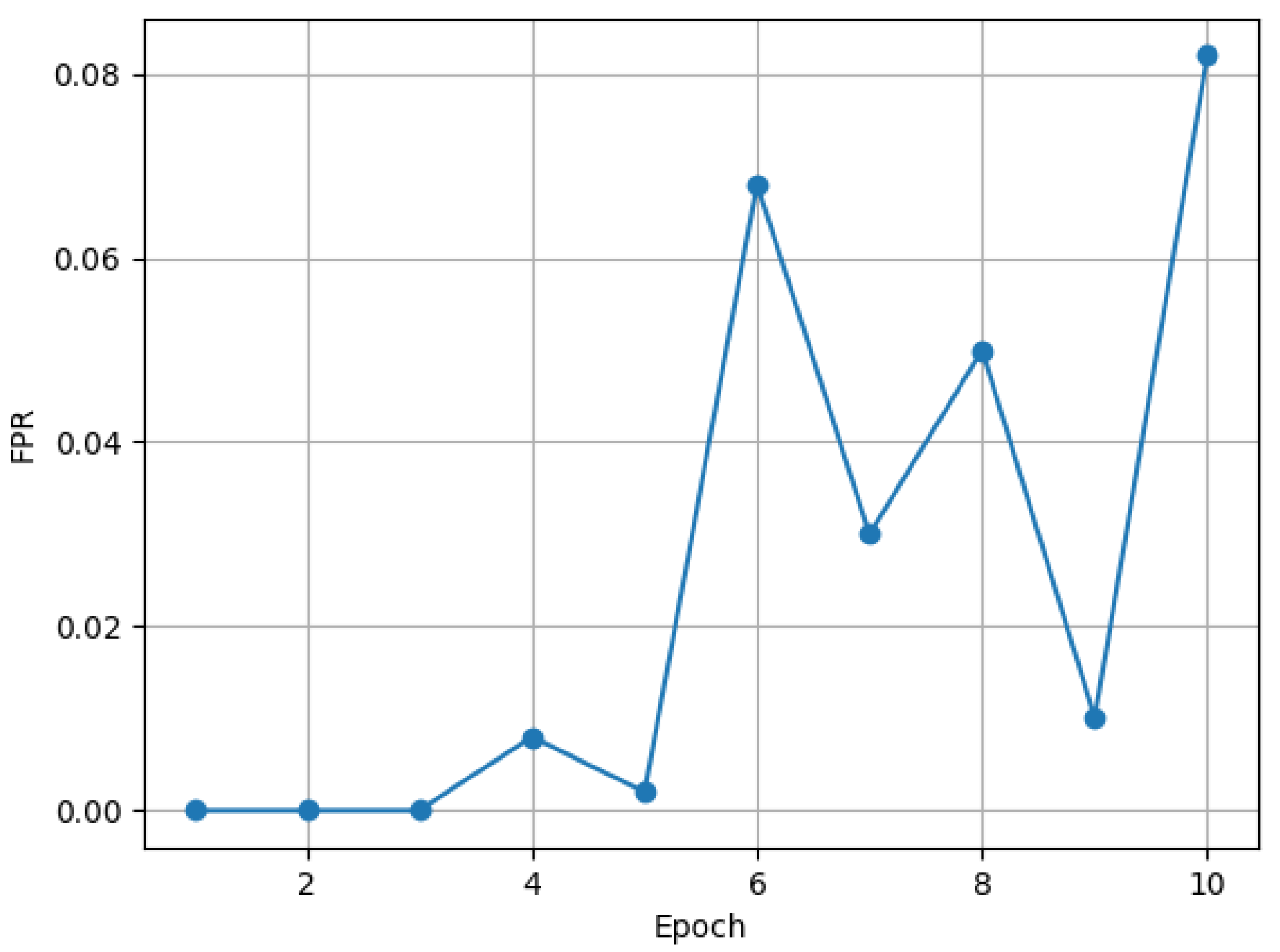

The False Positive Rate (FPR) of our intrusion detection model over 10 epochs is shown in Figure 6. At Epochs 1 to 3, the FPR is zero, which means that the model is very cautious in classifying benign provenance graphs in the early stages of training and produces almost no false positives. This is because the model has not yet fully learned the characteristics of APT attack activities and thus prefers to classify uncertain provenance graphs as benign. During Epochs 4 to 6, the FPR fluctuates, rising, then falling, then rising again. At this stage the model starts to learn the characteristics of APT attacks while still trying to find the best classification boundary between benign and malicious provenance graphs, which causes the fluctuations. Starting from Epoch 6, the FPR gradually stabilizes, and although there are still some fluctuations, the overall trend is decreasing. This indicates that the model gradually optimizes its classification performance and reduces false positives during continued training. At Epoch 10, the FPR is 0.0820. This value is still relatively low, although it has increased from the previous epoch. Considering that the classification of provenance graphs is a complex binary classification problem and that detecting APT attack activities is itself a challenge, this FPR value is acceptable. As the number of epochs increases, the False Positive Rate of the model continues to rise. Therefore, from the perspective of the False Positive Rate, it is reasonable to set the number of epochs to 10.
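As an illustration of how the FPR depends on the decision boundary, here is a small sketch that counts benign graphs flagged as malicious at a given score threshold. The function name, the threshold values, and the toy scores are assumptions made for the example, not values from the experiments.

```python
def false_positive_rate(labels, scores, threshold=0.5):
    """FPR = FP / (FP + TN), computed over the benign (label 0) samples only."""
    fp = sum(1 for y, s in zip(labels, scores) if y == 0 and s >= threshold)
    tn = sum(1 for y, s in zip(labels, scores) if y == 0 and s < threshold)
    return fp / (fp + tn)


# Toy example: four benign graphs (0) and two malicious graphs (1).
labels = [0, 0, 0, 0, 1, 1]
scores = [0.1, 0.2, 0.6, 0.3, 0.9, 0.4]

fpr_default = false_positive_rate(labels, scores)        # one benign graph flagged
fpr_strict = false_positive_rate(labels, scores, 0.7)    # no benign graph flagged
print(fpr_default, fpr_strict)
```

A very conservative boundary gives an FPR of zero, as in the early epochs, at the cost of missing malicious graphs whose scores fall below it.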

Moreover, we use four metrics to comprehensively evaluate the intrusion detection model. The four metrics used to evaluate the model’s performance on each sub-dataset are accuracy, precision, recall, and the F1-score. They are defined in terms of the following counts:

TP denotes the number of samples that the model correctly predicts as positive;

FP denotes the number of samples that the model incorrectly predicts as positive;

TN denotes the number of samples that the model correctly predicts as negative; and

FN denotes the number of samples that the model incorrectly predicts as negative.

The formulas are as follows:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\text{Precision} = \frac{TP}{TP + FP}$$

$$\text{Recall} = \frac{TP}{TP + FN}$$

$$\text{F1-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$
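As a minimal sketch, the four metrics can be computed from the TP, FP, TN, and FN counts using their standard definitions. The function name and the example counts below are hypothetical and are not taken from the experimental results.

```python
def classification_metrics(tp, fp, tn, fn):
    """Standard accuracy, precision, recall, and F1-score from confusion counts."""
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts for a 200-sample test set.
metrics = classification_metrics(tp=80, fp=10, tn=90, fn=20)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Precision penalizes false alarms and recall penalizes missed attacks, so the F1-score is only high when both are high.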

The results predicted by the intrusion detection model are shown in Table 4:

Next, on the StreamSpot dataset, our proposed detection model is compared with the following three mainstream APT attack detection methods:

StreamSpot: This model realizes anomaly detection and attack identification in three steps. First, it randomly samples the neighbors of the nodes and uses a network embedding method to embed the nodes into a low-dimensional space, obtaining feature vectors. Then, it calculates the similarity score between the feature vectors of the sampled neighbor sub-graphs and those of the normal nodes and uses this as the anomaly score of the nodes. Finally, it identifies attack behavior by calculating the density around each data point in the dataset.

UNICORN: The UNICORN model processes the provenance graph in its own way. It maps each node in the provenance graph to a label sequence of the first-order, second-order, and third-order adjacent nodes centered on that node and constructs a histogram. Subsequently, using the HistoSketch algorithm and the WL sub-graph kernel function [25], it calculates the similarity between each pair of sub-graphs and obtains the feature representation vector of the provenance graph. Finally, it identifies the system behavior with a clustering algorithm.

SeqNet: When processing the provenance graph, the SeqNet model adopts the same provenance graph embedding algorithm as UNICORN to convert the provenance graph sequence into a feature vector. A GRU deep-learning model then extracts the long-term features of the provenance graph to mine the deep-level information in the data. Finally, a clustering algorithm completes the attack detection task and identifies abnormal behaviors.
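The neighborhood-label histogram idea mentioned for UNICORN can be illustrated with a simplified Weisfeiler-Lehman-style sketch. This is not UNICORN's implementation: it treats the graph as undirected, uses plain string labels, and compares histograms with an exact normalized min-max similarity instead of sketching. All names and the toy graphs are hypothetical.

```python
from collections import Counter


def neighborhood_histogram(adjacency, labels, hops=3):
    """Count node labels after 0..hops rounds of WL-style relabeling.

    Every node must appear as a key in both `adjacency` and `labels`.
    """
    current = dict(labels)
    histogram = Counter(current.values())
    for _ in range(hops):
        updated = {}
        for node, neighbors in adjacency.items():
            neighbor_labels = sorted(current[n] for n in neighbors)
            # New label = own label plus the sorted labels of adjacent nodes.
            updated[node] = current[node] + "|" + ",".join(neighbor_labels)
        current = updated
        histogram.update(current.values())
    return histogram


def histogram_similarity(h1, h2):
    """Normalized min-max similarity between two label histograms."""
    keys = set(h1) | set(h2)
    overlap = sum(min(h1[k], h2[k]) for k in keys)
    union = sum(max(h1[k], h2[k]) for k in keys)
    return overlap / union if union else 1.0


# Toy provenance graphs: a process touching a file that is sent over a socket,
# and a smaller graph without the socket.
graph_a = ({"p": ["f"], "f": ["p", "s"], "s": ["f"]},
           {"p": "process", "f": "file", "s": "socket"})
graph_b = ({"p": ["f"], "f": ["p"]},
           {"p": "process", "f": "file"})

hist_a = neighborhood_histogram(*graph_a)
hist_b = neighborhood_histogram(*graph_b)
print(histogram_similarity(hist_a, hist_b))
```

Graphs with the same local structure produce identical histograms and a similarity of 1.0, while structural differences lower the score, which is what the downstream clustering step relies on.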

The results of the comparison of the different models on the StreamSpot dataset are shown in Table 5:

The data in the table show that the precision of the different models on the StreamSpot dataset varies significantly. The StreamSpot model has a precision of 0.786, so it has some detection capability, but misjudgments and missed detections remain. The precision of the UNICORN model is only 0.679; its processing method may fail to fully extract the data features, resulting in insufficient identification capability. The SeqNet model performs well, with a precision of 0.968, benefiting from the GRU model’s effective extraction of deep-level information in the data. The model proposed in this paper performs best, with a precision of 0.995. Compared with the other three mainstream methods, it detects APT attacks with higher accuracy and reliability, which demonstrates its effectiveness in this task.

On the StreamSpot dataset, the HGNN model took 1617.28 s to complete 10 epochs of training, while the average detection time per epoch was 273 s. The HGNN requires a relatively longer training time than traditional machine learning methods such as Gaussian Naive Bayes, decision trees, and the support vector machine (SVM); however, in practical intrusion detection scenarios, defenders prioritize detection speed and accuracy. By leveraging provenance graph structures to model complex causal relationships, the HGNN can identify APT attacks more effectively, achieving a detection accuracy 21.3–34.7% higher than that of the baseline methods. In addition, detecting the test set of the StreamSpot dataset takes only 23 s on average.

4.3. Experimental Results on the NSL-KDD Dataset

Compared to the StreamSpot dataset, the NSL-KDD dataset covers a wide range of network attack types, namely DoS (denial-of-service), R2L (remote-to-local), U2R (user-to-root), and Probe (probing) attacks. This diversity enables the dataset to model the various security threats that edge devices may encounter, and thus to evaluate the detection capability and generalization performance of the IDS more accurately. Because of its large amount of data and variety of attack types, the NSL-KDD dataset is an ideal platform for testing the performance limits of the IDS for edge devices. Testing on this dataset reveals the system’s capability and limitations in processing large-scale data and recognizing complex attack patterns, which is important for ensuring the data security of edge devices.

To test the performance limits of the IDS for edge devices, we choose KDDTrain+ and KDDTest+ as the training set and test set, respectively. Their details are shown in Table 6 and Table 7:

The attack types are defined as follows:

Denial of Service (DoS): Normal users are prevented from obtaining service because a large number of legitimate requests take up resources.

Probe: The attacker scans the network to obtain system information and look for vulnerabilities.

User to Root (U2R): Local users elevate their privileges to the root user through a system vulnerability.

Remote to Local (R2L): The attacker sends packets remotely to gain access to the local system.
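In practice, each NSL-KDD record carries a specific attack name that is grouped into one of these four categories. The mapping below is a partial sketch based on commonly documented NSL-KDD label names; it is not the paper's preprocessing code, it does not list every label in the dataset, and the helper name `to_category` is hypothetical.

```python
# Partial mapping from NSL-KDD attack names to the four attack categories.
ATTACK_CATEGORY = {
    # Denial of Service
    "neptune": "DoS", "smurf": "DoS", "back": "DoS",
    "teardrop": "DoS", "pod": "DoS", "land": "DoS",
    # Probe
    "ipsweep": "Probe", "portsweep": "Probe", "nmap": "Probe", "satan": "Probe",
    # Remote to Local
    "guess_passwd": "R2L", "ftp_write": "R2L", "imap": "R2L",
    "warezclient": "R2L", "warezmaster": "R2L",
    # User to Root
    "buffer_overflow": "U2R", "rootkit": "U2R", "loadmodule": "U2R", "perl": "U2R",
}


def to_category(label):
    """Map a raw record label to Normal, one of the four categories, or Unknown."""
    if label == "normal":
        return "Normal"
    return ATTACK_CATEGORY.get(label, "Unknown")


print([to_category(name) for name in ["normal", "neptune", "nmap", "rootkit"]])
```

Labels that are missing from the partial mapping fall back to "Unknown" so that they can be reviewed rather than silently assigned to a category.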

In the experimental setup, 125,973 samples from KDDTrain+ are used for training, and 22,544 samples from KDDTest+ are used for testing. The number of training epochs is set to 20, the learning rate to 0.01, the momentum to 0.9, and the dropout rate to 0.4. Every 10 epochs, the learning rate is multiplied by 0.5. We use accuracy, precision, recall, and the F1-score to evaluate the model’s performance on each sub-dataset. The results predicted by the intrusion detection model are shown in Table 8.
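The learning-rate schedule described in the experimental setup (initial rate 0.01, halved every 10 epochs over 20 epochs) corresponds to a step decay, sketched below. The function name is hypothetical, and zero-based epoch indexing is an assumption, since the text does not state whether the first decay applies at the start of the 11th epoch.

```python
def step_decay_lr(epoch, base_lr=0.01, gamma=0.5, step_size=10):
    """Learning rate for a zero-based epoch index under step decay."""
    return base_lr * gamma ** (epoch // step_size)


# Learning rate used in each of the 20 training epochs.
schedule = [step_decay_lr(epoch) for epoch in range(20)]
print(schedule[0], schedule[9], schedule[10], schedule[19])
```

Under this assumption the first 10 epochs train at 0.01 and the last 10 at 0.005.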

The experimental results show that the overall accuracy of the system is 98.13%, indicating that the system distinguishes normal traffic from attack traffic effectively. For DoS attacks, the system exhibits very high precision (99.18%) and high recall (87.06%), with an F1-score of 92.72%. For normal traffic, the system also performs well, with a precision of 98.10%, a recall of 99.98%, and an F1-score of 99.03%, so almost no normal records are misclassified. For Probe attacks, the system maintains high precision (99.05%) and reasonable recall (86.82%), with an F1-score of 92.53%. For R2L and U2R attacks, the recall is 97.85% and 78.50% and the precision is 76.91% and 85.79%, respectively, resulting in lower F1-scores of 86.13% and 81.98%. This indicates that the IDS performs well across multiple attack types, but there is still room for improvement on R2L and U2R attacks.
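As a quick consistency check, the reported F1-scores can be recomputed from the reported precision and recall values using the standard harmonic-mean definition. The snippet below is only a sketch; the dictionary layout and function name are hypothetical, while the numbers are those quoted in the text.

```python
# (precision, recall, reported F1) per class, as quoted in the text.
reported = {
    "DoS": (0.9918, 0.8706, 0.9272),
    "Normal": (0.9810, 0.9998, 0.9903),
    "Probe": (0.9905, 0.8682, 0.9253),
    "R2L": (0.7691, 0.9785, 0.8613),
    "U2R": (0.8579, 0.7850, 0.8198),
}


def f1_score(precision, recall):
    """F1 as the harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


recomputed = {name: f1_score(p, r) for name, (p, r, _) in reported.items()}
for name, (_, _, f1_reported) in reported.items():
    print(f"{name}: recomputed {recomputed[name]:.4f}, reported {f1_reported:.4f}")
```

The recomputed values agree with the reported F1-scores to within rounding.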

However, as Table 6 and Table 7 show for the KDDTrain+ and KDDTest+ datasets, the U2R attack has merely 52 records in KDDTrain+ and 200 records in KDDTest+, and the R2L attack has 995 records in KDDTrain+ and 2654 records in KDDTest+. Because these two attack types have so few training samples, the model may not be able to fully learn their feature patterns. When presented with new U2R and R2L attack samples, the model finds it difficult to identify them accurately, which leads to lower detection accuracy.
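The scale of this imbalance can be made explicit by dividing the record counts quoted above by the sizes of the two sets (125,973 training and 22,544 test samples). The snippet is a small arithmetic sketch; the dictionary layout and function name are hypothetical.

```python
# Record counts quoted in the text.
counts = {
    "KDDTrain+": {"total": 125973, "U2R": 52, "R2L": 995},
    "KDDTest+": {"total": 22544, "U2R": 200, "R2L": 2654},
}


def class_share(split, attack):
    """Fraction of records in a split that belong to the given attack type."""
    return counts[split][attack] / counts[split]["total"]


for split in counts:
    for attack in ("U2R", "R2L"):
        print(f"{split} {attack}: {class_share(split, attack):.2%}")
```

U2R accounts for roughly 0.04% of KDDTrain+ and R2L for about 0.79%, so the model sees very few examples of either class during training.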

To validate the results, we compare our model with other ML-based and DL-based attack detection methods. Table 9 summarizes various ML and DL approaches for the IDS using the NSL-KDD dataset.

The metrics presented in the table show that our heterogeneous graph neural network (HGNN) model, listed in the “Our model” row, performs remarkably well. Specifically, its accuracy of 98.1% exceeds that of most of the other referenced models in the table, such as the ensemble learning models from 2019 and 2024, the DNN from 2018, and the CNN-LSTM from 2022. This high accuracy not only emphasizes the effectiveness of the model but also highlights its potential for network anomaly detection on edge devices.