Retinal OCT Images: Graph-Based Layer Segmentation and Clinical Validation

Abstract

1. Introduction

2. Materials and Methods

2.1. Image Dataset

2.2. Preprocessing

2.2.1. Gaussian Filter-Based Denoising

2.2.2. Wavelet-Based Denoising

2.3. Retinal Layer Segmentation and Thickness Computation

2.4. Efficacy of the Algorithm: Comparative Analysis

2.5. Validation Study

2.5.1. Custom-Built Graphical User Interface to Facilitate Manual Segmentation

2.5.2. Layer Thickness Comparison

2.5.3. Computational Efficiency

3. Results

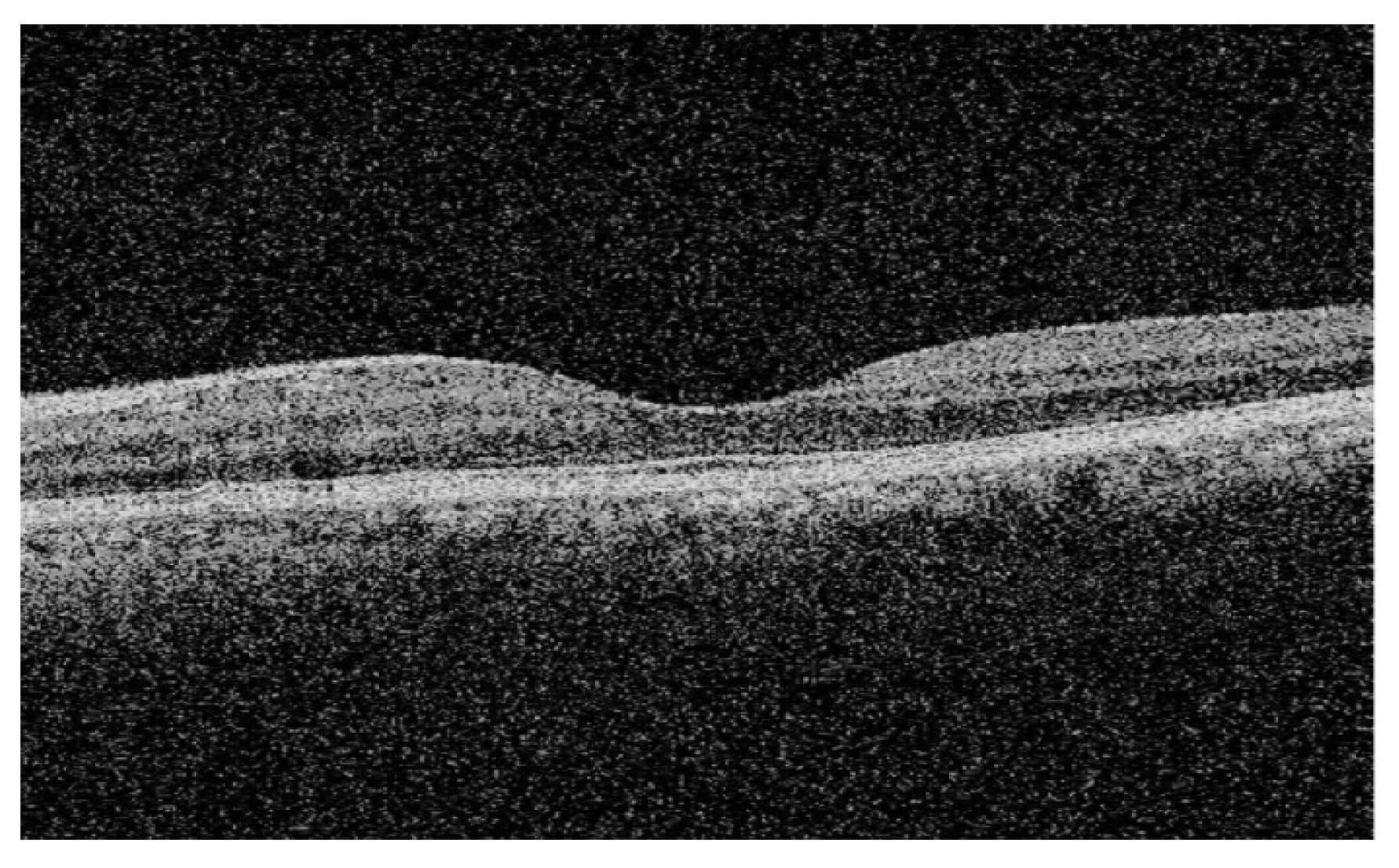

3.1. Retinal Layer Segmentation and Thickness Computation

3.2. Gaussian Filter-Based Denoising Versus Wavelet-Based Denoising and Their Impacts on the Segmentation Results

3.3. Performance Evaluation

3.3.1. Segmentation Accuracy with Respect to Previous Studies

3.3.2. Segmentation Accuracy with Respect to Manual Segmentation

4. Discussion

4.1. Denoising Techniques and Segmentation Accuracy

4.2. Algorithm Performance

4.3. Validation Against Manual Segmentation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| OCT | Optical Coherence Tomography |
| SD-OCT | Spectral Domain Optical Coherence Tomography |
| DR | Diabetic Retinopathy |
| AMD | Age-related Macular Degeneration |
| ROI | Region of Interest |
| BPI | Boundary Point Indices |
| GUI | Graphical User Interface |
| ILM | Internal Limiting Membrane |
| RNFL | Retinal Nerve Fiber Layer |
| GCL | Ganglion Cell Layer |
| IPL | Inner Plexiform Layer |
| INL | Inner Nuclear Layer |
| OPL | Outer Plexiform Layer |
| ONL | Outer Nuclear Layer |
| IS | Inner Segment |
| OS | Outer Segment |
| RPE | Retinal Pigment Epithelium |
| RAM | Random Access Memory |

References

1. Fercher, A.F.; Drexler, W.; Hitzenberger, C.K.; Lasser, T. Optical Coherence Tomography—Principles and Applications. Rep. Prog. Phys. 2003, 66, 239–303.

2. Danesh, H.; Kafieh, R.; Rabbani, H.; Hajizadeh, F. Segmentation of Choroidal Boundary in Enhanced Depth Imaging OCTs Using a Multiresolution Texture Based Modeling in Graph Cuts. Comput. Math. Methods Med. 2014, 2014, 1–9.

3. Huang, D.; Swanson, E.A.; Lin, C.P.; Schuman, J.S.; Stinson, W.G.; Chang, W.; Hee, M.R.; Flotte, T.; Gregory, K.; Puliafito, C.A.; et al. Optical Coherence Tomography. Science 1991, 254, 1178–1181.

4. Hee, M.R.; Izatt, J.A.; Swanson, E.A.; Huang, D.; Schuman, J.S.; Lin, C.P.; Puliafito, C.A.; Fujimoto, J.G. Optical Coherence Tomography of the Human Retina. Arch. Ophthalmol. 1995, 113, 325–332.

5. Schuman, J.S. Spectral Domain Optical Coherence Tomography for Glaucoma (an AOS Thesis). Trans. Am. Ophthalmol. Soc. 2008, 106, 426–458.

6. Shi, F.; Chen, X.; Zhao, H.; Zhu, W.; Xiang, D.; Gao, E.; Sonka, M.; Chen, H. Automated 3-D Retinal Layer Segmentation of Macular Optical Coherence Tomography Images with Serous Pigment Epithelial Detachments. IEEE Trans. Med. Imaging 2015, 34, 441–452.

7. Srinivasan, P.P.; Kim, L.A.; Mettu, P.S.; Cousins, S.W.; Comer, G.M.; Izatt, J.A.; Farsiu, S. Fully Automated Detection of Diabetic Macular Edema and Dry Age-Related Macular Degeneration from Optical Coherence Tomography Images. Biomed. Opt. Express 2014, 5, 3568–3577.

8. Chiu, S.J.; Li, X.T.; Nicholas, P.; Toth, C.A.; Izatt, J.A.; Farsiu, S. Automatic Segmentation of Seven Retinal Layers in SDOCT Images Congruent with Expert Manual Segmentation. Opt. Express 2010, 18, 19413–19428.

9. Srinivasan, P.P.; Heflin, S.J.; Izatt, J.A.; Arshavsky, V.Y.; Farsiu, S. Automatic segmentation of up to ten layer boundaries in SD-OCT images of the mouse retina with and without missing layers due to pathology. Biomed. Opt. Express 2014, 5, 348–365.

10. Tian, J.; Varga, B.; Somfai, G.M.; Lee, W.-H.; Smiddy, W.E.; DeBuc, D.C. Real-Time Automatic Segmentation of Optical Coherence Tomography Volume Data of the Macular Region. PLoS ONE 2015, 10, e0133908.

11. DeBuc, D.C. A Review of Algorithms for Segmentation of Retinal Image Data Using Optical Coherence Tomography. In Image Segmentation; Ho, P.-G., Ed.; Intech Publishers: London, UK, 2011; pp. 15–54.

12. Roy, P.; Lakshminarayanan, V.; Parthasarathy, M.K.; Zelek, J.S.; Gholami, P. Automated Intraretinal Layer Segmentation of Optical Coherence Tomography Images Using Graph-Theoretical Methods. In Proceedings of the Optical Coherence Tomography and Coherence Domain Optical Methods in Biomedicine XXII, San Francisco, CA, USA, 29–31 January 2018.

13. Roy, P. Automated Segmentation of Retinal Optical Coherence Tomography Images. Master’s Thesis, University of Waterloo, Waterloo, Canada, 2018.

14. Vijaya, G.; Vasudevan, V. A Simple Algorithm for Image Denoising Based on MS Segmentation. Int. J. Comput. Appl. 2010, 2, 9–15.

15. Mishra, A.; Wong, A.; Bizheva, K.; Clausi, D.A. Intra-Retinal Layer Segmentation in Optical Coherence Tomography Images. Opt. Express 2009, 17, 23719–23728.

16. Garvin, M.K.; Abramoff, M.D.; Wu, X.; Russell, S.R.; Burns, T.L.; Sonka, M. Automated 3-D Intraretinal Layer Segmentation of Macular Spectral-Domain Optical Coherence Tomography Images. IEEE Trans. Med. Imaging 2009, 28, 1436–1447.

17. Rabbani, H.; Kafieh, R.; Kermani, S. A Review of Algorithms for Segmentation of Optical Coherence Tomography from Retina. J. Med. Signals Sens. 2013, 3, 45–60.

18. Kumar, B.K.S. Image Denoising Based On Gaussian/Bilateral Filter and Its Method Noise Thresholding. Signal Image Video Process. 2013, 7, 1159–1172.

19. Roy, P.; Parthasarathy, M.K.; Zelek, J.; Lakshminarayanan, V. Comparison of Gaussian Filter Versus Wavelet-Based Denoising on Graph-Based Segmentation of Retinal OCT Images. In Proceedings of the Biomedical Applications in Molecular, Structural, and Functional Imaging, Houston, TX, USA, 11–13 February 2018.

20. Teng, P. Caserel—An Open Source Software for Computer-Aided Segmentation of Retinal Layers in Optical Coherence Tomography Images. 2013. Available online: https://github.com/pangyuteng/caserel (accessed on 29 December 2017).

21. Felzenszwalb, P.F.; Huttenlocher, D.P. Efficient Graph-Based Image Segmentation. Int. J. Comput. Vis. 2004, 59, 167–181.

22. Chen, X.; Niemeijer, M.; Zhang, L.; Lee, K.; Abramoff, M.D.; Sonka, M. Three-Dimensional Segmentation of Fluid-Associated Abnormalities in Retinal OCT: Probability Constrained Graph-Search-Graph-Cut. IEEE Trans. Med. Imaging 2012, 31, 1521–1531.

23. Dufour, P.A.; Ceklic, L.; Abdillahi, H.; Schroder, S.; De Dzanet, S.; Wolf-Schnurrbusch, U.; Kowal, J. Graph-Based Multi-Surface Segmentation of OCT Data Using Trained Hard and Soft Constraints. IEEE Trans. Med. Imaging 2013, 32, 531–543.

24. Li, K.; Wu, X.; Chen, D.; Sonka, M. Optimal Surface Segmentation in Volumetric Images—A Graph-Theoretic Approach. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 119–134.

25. Fabijańska, A. Graph Based Image Segmentation. Automatyka/Akademia Górniczo-Hutnicza im. Stanisława Staszica w Krakowie 2011, 15, 93–103.

26. Kafieh, R.; Rabbani, H.; Hajizadeh, F.; Abramoff, M.D.; Sonka, M. Thickness Mapping of Eleven Retinal Layers Segmented Using the Diffusion Maps Method in Normal Eyes. J. Ophthalmol. 2015, 2015, 259123.

27. Agrawal, P.; Karule, P.T. Measurement of retinal thickness for detection of Glaucoma. In Proceedings of the 2014 International Conference on Green Computing Communication and Electrical Engineering (ICGCCEE), Coimbatore, India, 6–8 March 2014.

28. Chan, A.; Duker, J.S.; Ko, T.H.; Fujimoto, J.G.; Schuman, J.S. Normal Macular Thickness Measurements in Healthy Eyes Using Stratus Optical Coherence Tomography. Arch. Ophthalmol. 2006, 124, 193–198.

29. Bagci, A.M.; Shahidi, M.; Ansari, R.; Blair, M.; Blair, N.P.; Zelkha, R. Thickness Profiles of Retinal Layers by Optical Coherence Tomography Image Segmentation. Arch. Ophthalmol. 2008, 146, 679–687.e1.

30. Koozekanani, D.; Boyer, K.; Roberts, C. Retinal Thickness Measurements from Optical Coherence Tomography Using a Markov Boundary Model. IEEE Trans. Med. Imaging 2001, 20, 900–916.

31. Gholami, P.; Roy, P.; Parthasarathy, M.K.; Lakshminarayanan, V. OCTID: Optical Coherence Tomography Image Database. Comput. Electr. Eng. 2020, 81, 106532.

| Layer | Segmented Intra-Retinal Layers (as Shown in Figure 1) | Thickness = Mean ± SD (in Microns) |
|---|---|---|
| 1 | RNFL + GCL | 25.02 ± 3.16 |
| 2 | IPL | 5.40 ± 2.79 |
| 3 | INL | 5.94 ± 1.10 |
| 4 | OPL | 8.45 ± 0.96 |
| 5 | ONL + IS | 16.24 ± 1.76 |
| 6 | OS + RPE | 12.67 ± 6.04 |

| Layer | Segmented Intra-Retinal Layers (as Shown in Figure 1) | Gaussian Filter-Based Denoising: Thickness = Mean ± SD (in Microns) | Gaussian Filter-Based Denoising: Mean Computation Time (in Seconds) | Wavelet-Based Denoising: Thickness = Mean ± SD (in Microns) | Wavelet-Based Denoising: Mean Computation Time (in Seconds) |
|---|---|---|---|---|---|
| 1 | RNFL + GCL | 25.16 ± 2.09 | 1.0535 | 25.29 ± 2.21 | 11.2736 |
| 2 | IPL | 5.38 ± 1.23 | | 5.49 ± 1.18 | |
| 3 | INL | 5.29 ± 0.15 | | 6.15 ± 1.89 | |
| 4 | OPL | 9.01 ± 2.85 | | 8.19 ± 2.51 | |
| 5 | ONL + IS | 16.95 ± 2.24 | | 14.88 ± 2.62 | |
| 6 | OS + RPE | 14.92 ± 2.59 | | 14.96 ± 2.62 | |

| Current Study: Retinal Layer | Current Study: Mean Thickness ± SD (in µm) | Published Results [26]: Retinal Layer | Published Results [26]: Mean Thickness ± SD (in µm) Across 9 Macular Sectors | p-Value | Std. Error of Diff. |
|---|---|---|---|---|---|
| Layer 1 | 25.02 ± 3.16 | Layers (1 + 2) | 25.88 ± 4.48 | 0.36 | 0.94 |
| Layer 2 | 5.40 ± 2.79 | Layer 3 | 5.44 ± 1.74 | 0.92 | 0.43 |
| Layer 3 | 5.94 ± 1.10 | Layer 4 | 6.00 ± 2.59 | 0.91 | 0.53 |
| Layer 4 | 8.45 ± 0.96 | Layer 5 | 7.67 ± 1.93 | 0.04 | 0.40 |
| Layer 5 | 16.24 ± 1.76 | Layers (6 + 7) | 15.00 ± 4.27 | 0.15 | 0.87 |
| Layer 6 | 12.67 ± 6.04 | Layers (8 + 9 + 10 + 11) | 13.22 ± 3.34 | 0.52 | 0.86 |

| Retinal Layer | Manual Segmentation: Mean Thickness ± SD (in µm) | Automated Segmentation: Mean Thickness ± SD (in µm) | Mean Error | Accuracy (%) |
|---|---|---|---|---|
| RNFL + GCL | 22.76 ± 2.52 | 25.02 ± 3.16 | −2.26 ± 1.59 | 90.07 |
| IPL | 7.24 ± 2.81 | 5.40 ± 2.79 | 1.84 ± 1.64 | 74.58 |
| INL | 6.46 ± 1.12 | 5.94 ± 1.10 | 0.52 ± 0.69 | 91.95 |
| OPL | 8.23 ± 0.93 | 8.45 ± 0.96 | −0.22 ± 0.67 | 97.33 |
| ONL + IS | 16.42 ± 1.58 | 16.24 ± 1.76 | 0.18 ± 0.74 | 98.90 |
| OS + RPE | 12.18 ± 4.68 | 12.67 ± 6.04 | −0.49 ± 1.57 | 95.98 |
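The Mean Error and Accuracy columns in the manual-versus-automated comparison can be recomputed from the two mean-thickness columns. The sketch below is illustrative only: the formula accuracy = (1 − |manual − automated| / manual) × 100 is an assumption inferred from the tabulated numbers, not a definition taken from the text, and the names `THICKNESS_UM`, `mean_error`, and `accuracy_percent` are hypothetical. Under that assumption the computed values agree with the reported accuracies to within 0.01 percentage points. The ± spread on the mean error cannot be recovered from the means alone and is not reproduced.

```python
# Mean layer thickness (manual, automated), copied from the comparison table.
# Units follow the table (µm).
THICKNESS_UM = {
    "RNFL + GCL": (22.76, 25.02),
    "IPL": (7.24, 5.40),
    "INL": (6.46, 5.94),
    "OPL": (8.23, 8.45),
    "ONL + IS": (16.42, 16.24),
    "OS + RPE": (12.18, 12.67),
}


def mean_error(manual: float, automated: float) -> float:
    """Signed difference, manual minus automated (sign convention of the table)."""
    return manual - automated


def accuracy_percent(manual: float, automated: float) -> float:
    """ASSUMED formula (inferred from the table, not stated in the source):
    100 * (1 - |manual - automated| / manual)."""
    return (1.0 - abs(manual - automated) / manual) * 100.0


if __name__ == "__main__":
    for layer, (manual, automated) in THICKNESS_UM.items():
        err = mean_error(manual, automated)
        acc = accuracy_percent(manual, automated)
        print(f"{layer:<11} mean error = {err:+.2f}  accuracy = {acc:.2f}%")
```

Because the accuracy is normalized by the manual thickness, thin layers such as the IPL are penalized more heavily for the same absolute error, which is worth keeping in mind when comparing rows.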

| Segmentation Technique | Mean Computation Time (in Seconds) |
|---|---|
| Automated | 4.93 |
| Manual | 578.05 |
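As a rough worked figure, the ratio of the two mean computation times above can be written out. This ratio is derived here from the tabulated values and is not itself reported in the table:

$$
\frac{t_{\text{manual}}}{t_{\text{automated}}} = \frac{578.05\ \text{s}}{4.93\ \text{s}} \approx 117
$$

That is, under these measurements the automated segmentation took roughly two orders of magnitude less time than manual segmentation.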

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Roy, P.; Parthasarathy, M.K.; Lakshminarayanan, V. Retinal OCT Images: Graph-Based Layer Segmentation and Clinical Validation. Appl. Sci. 2025, 15, 8783. https://doi.org/10.3390/app15168783
