Steel Surface Defect Detection Algorithm Based on Improved YOLOv8 Modeling

Abstract

1. Introduction

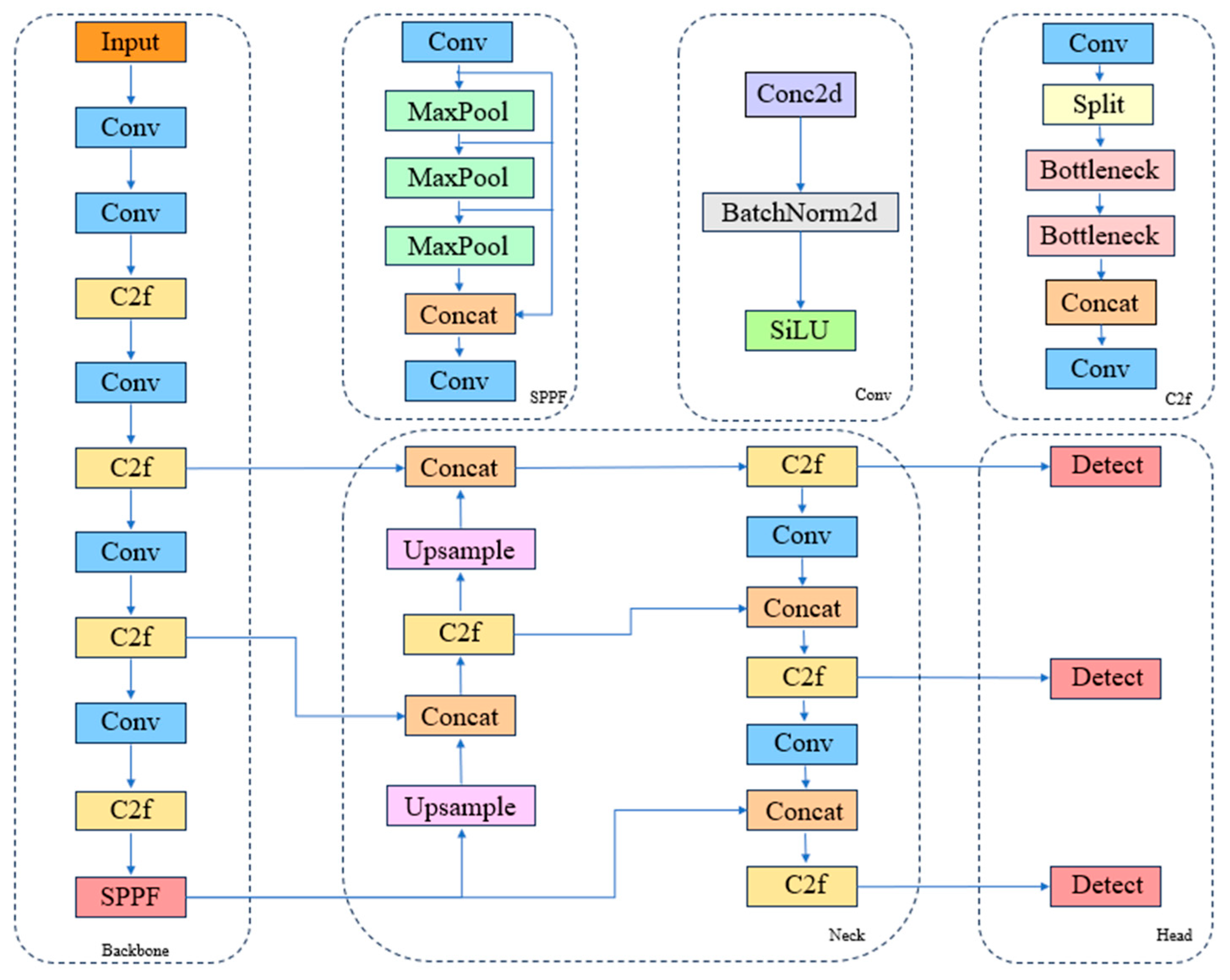

2. YOLOv8

3. Construction of EB-YOLOv8

3.1. Model Architecture

3.2. Embedding Multi-Scale Attention Mechanisms to Strengthen Feature Attention

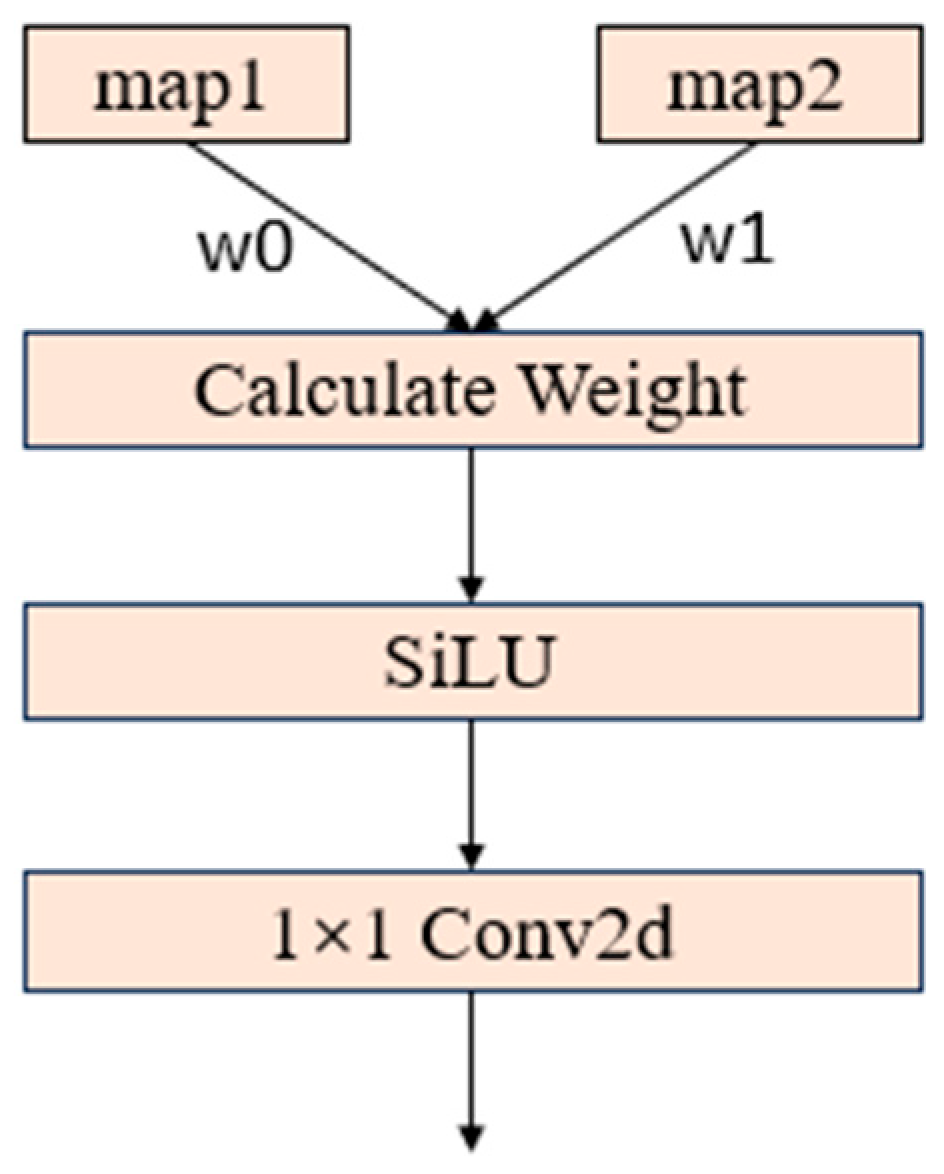

3.3. Constructing Weighted Fusion Splicing Module to Realize Multi-Scale Feature Fusion

4. Experimentation

4.1. Dataset

4.2. Experimental Environment

4.3. Experimental Evaluation Indicators

4.4. Ablation Experiment

4.5. Comparison Experiment

4.6. Visual Result Analysis

4.7. Visualization Result Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Model | AP% (Cr) | AP% (In) | AP% (Pa) | AP% (Ps) | AP% (Rs) | AP% (Sc) | P% | R% | mAP% | Param |
|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv8 | 39.9 | 83.2 | 92.4 | 87.9 | 66.2 | 93.3 | 69.0 | 74.6 | 77.1 | 3006818 |
| YOLOv8+E | 38.1 | 80.9 | 93.9 | 87.0 | 76.4 | 95.7 | 72.4 | 72.5 | 78.7 | 3006874 |
| YOLOv8+B | 38.7 | 81.2 | 93.9 | 86.9 | 72.3 | 94.7 | 70.7 | 74.6 | 77.9 | 3006827 |
| Ours | 42.2 | 82.5 | 92.4 | 88.5 | 80.0 | 95.7 | 75.1 | 72.7 | 80.2 | 3006880 |
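
The mAP column in the table above can be cross-checked against the six per-class AP values. The sketch below assumes that mAP here is the unweighted mean of the per-class APs (the usual definition; the table itself does not state it), and the names `ap_by_model`, `reported_map`, and `mean_ap` are illustrative only. Because the per-class values are already rounded to one decimal, the check allows a tolerance of 0.1 percentage points.

```python
# Per-class AP values (%) copied from the table above, in the order
# Cr, In, Pa, Ps, Rs, Sc.
ap_by_model = {
    "YOLOv8":   [39.9, 83.2, 92.4, 87.9, 66.2, 93.3],
    "YOLOv8+E": [38.1, 80.9, 93.9, 87.0, 76.4, 95.7],
    "YOLOv8+B": [38.7, 81.2, 93.9, 86.9, 72.3, 94.7],
    "Ours":     [42.2, 82.5, 92.4, 88.5, 80.0, 95.7],
}

# mAP values (%) as reported in the same table.
reported_map = {"YOLOv8": 77.1, "YOLOv8+E": 78.7, "YOLOv8+B": 77.9, "Ours": 80.2}


def mean_ap(per_class_ap):
    """Unweighted mean of per-class AP (assumed definition of mAP)."""
    return sum(per_class_ap) / len(per_class_ap)


for model, aps in ap_by_model.items():
    computed = mean_ap(aps)
    gap = abs(computed - reported_map[model])
    # Inputs are rounded to one decimal, so a gap below 0.1 counts as consistent.
    status = "consistent" if gap < 0.1 else "mismatch"
    print(f"{model}: computed {computed:.2f}, reported {reported_map[model]} ({status})")
```

Under this assumption, all four rows agree with the reported mAP to within rounding.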

| Model | AP% (Class-0) | AP% (Class-1) | AP% (Class-2) | AP% (Class-3) | R% | P% | mAP% | Param |
|---|---|---|---|---|---|---|---|---|
| YOLOv8 | 61.7 | 53.5 | 76.2 | 71.3 | 61.4 | 64.7 | 65.7 | 3006428 |
| YOLOv8+E | 60.1 | 55.4 | 76.7 | 71.1 | 62.3 | 66.9 | 65.8 | 3006484 |
| YOLOv8+B | 61.7 | 63.4 | 76.8 | 71.7 | 60.5 | 72.8 | 68.4 | 3006437 |
| Ours | 60.9 | 56.7 | 77.4 | 69.7 | 60.5 | 69.7 | 66.2 | 3006490 |
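
To read the four-class table above as an ablation, it can help to express each variant as a change relative to the YOLOv8 baseline row. The following is a minimal sketch that uses only the mAP and Param columns copied from that table; `rows` and `delta_vs_baseline` are hypothetical names introduced for illustration.

```python
# (mAP %, parameter count) copied from the four-class table above.
rows = {
    "YOLOv8":   (65.7, 3006428),
    "YOLOv8+E": (65.8, 3006484),
    "YOLOv8+B": (68.4, 3006437),
    "Ours":     (66.2, 3006490),
}


def delta_vs_baseline(table, baseline="YOLOv8"):
    """Return (mAP change in points, parameter change) for each non-baseline row."""
    base_map, base_params = table[baseline]
    return {
        name: (round(m - base_map, 1), p - base_params)
        for name, (m, p) in table.items()
        if name != baseline
    }


deltas = delta_vs_baseline(rows)
for name, (d_map, d_params) in deltas.items():
    print(f"{name}: mAP {d_map:+.1f} points, {d_params:+d} parameters")
```

The parameter differences are tens of weights on a model of about three million parameters, so every variant in this table is effectively the same size as the baseline.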

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Peng, M.; Bai, S.; Lu, Y. Steel Surface Defect Detection Algorithm Based on Improved YOLOv8 Modeling. Appl. Sci. 2025, 15, 8759. https://doi.org/10.3390/app15158759