Physics-Informed Surrogate Modelling in Fire Safety Engineering: A Systematic Review

Abstract

1. Introduction: Addressing Challenges in Fire Safety Engineering with Surrogate Modelling

1.1. Surrogate Modelling Definition and Motivation

1.2. Surrogate Modelling Algorithms

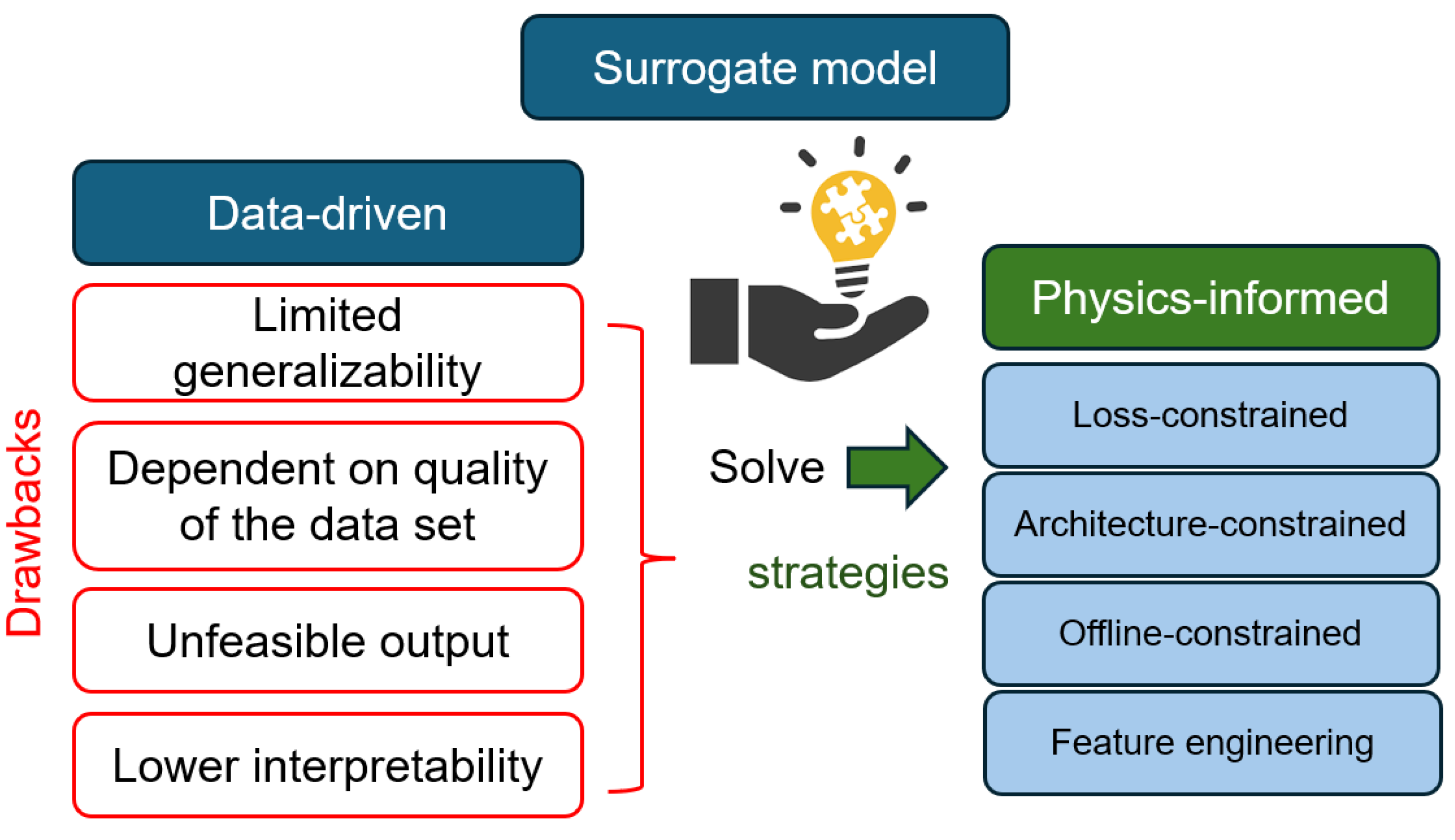

1.3. Data-Driven vs. Physics-Informed Surrogate Models

1.4. Strategies for Implementing Physics in Surrogate Models

- Loss-constrained technique (LCT): These models integrate physical knowledge by introducing physical constraints directly into the training process through the loss function. This is typically achieved by incorporating penalty terms that quantify the violation of known physical laws (e.g., conservation of mass, momentum, and energy, or governing partial differential equations). During training, the model is penalised for making predictions that do not satisfy these constraints. While this approach encourages the model to learn physically consistent solutions, it does not entirely prevent the possibility of non-physical predictions, especially in regions with limited data or complex physics. The strength of the penalty term influences the degree to which the model adheres to the physical constraints.

- Architecture-constrained technique (ACT): This strategy embeds physical laws directly into the architecture of the surrogate model, for instance through purpose-designed activation functions, network layers, or entire model structures that inherently respect fundamental physical properties. As an example, consider modelling the cooling of a steel section at uniform temperature after fire exposure. The basic physics of cooling requires that the temperature decays over time and never increases once the heat source is removed. The architecture can enforce this by using exponentially decaying functions as part of the output layer, which guarantees that predicted temperatures decay monotonically over time, reflecting the natural cooling behaviour of materials post-fire. This is a strong form of physics integration because predictions that violate the implemented physical laws become mathematically impossible. However, designing such architectures can be complex.

- Offline-constrained technique (OCT): These models apply physical constraints after the surrogate model has been trained, refining predictions during the inference phase. One approach is a “sanity check” that verifies whether the model’s output falls within physically plausible ranges or adheres to basic physical principles. Another is to use the output of the trained model as input to a separate, well-established physical correlation or set of physical equations, which imposes more complex physical relationships on the predictions and yields a physically refined output as an additional final step. The latter approach is closely linked to the ACT approach to PISM: the global surrogate model is split into a data-driven model and a physical model, with the former providing input to the latter.

- Feature engineering technique (FET): The variables describing the training data are engineered to align with physical insight, for example by using non-dimensional parameters. This avoids, for instance, non-physical combined effects of parameters within the trained model, and thus incorporates physics at the preprocessing stage.
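The ACT cooling example above can be sketched in a few lines of Python. This is a minimal illustration, not an implementation from any reviewed study: it assumes a section hotter than ambient, a single unconstrained learnable parameter (`raw_rate`, which in a full network would be produced by the preceding layers), and an ambient temperature of 20 °C. The names `softplus` and `cooling_output_layer` are hypothetical.

```python
import math
from typing import List, Sequence


def softplus(x: float) -> float:
    """Map any real number to a non-negative value (stable form of log(1 + e^x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def cooling_output_layer(
    times_s: Sequence[float],
    raw_rate: float,
    initial_temp_c: float,
    ambient_temp_c: float = 20.0,  # assumed ambient temperature
) -> List[float]:
    """Architecture-constrained output: T(t) = T_amb + (T_0 - T_amb) * exp(-k t).

    raw_rate is an unconstrained (hypothetical) learnable parameter. softplus
    turns it into a decay rate k >= 0 in 1/s, so for T_0 >= T_amb the
    prediction can never rise over time, whatever value training assigns.
    """
    rate = softplus(raw_rate)
    excess = initial_temp_c - ambient_temp_c
    return [ambient_temp_c + excess * math.exp(-rate * t) for t in times_s]


# Any raw_rate, positive or negative, yields a monotonically decaying curve.
times = [0.0, 600.0, 1200.0, 1800.0]
for raw in (-8.0, -5.0, 0.0):
    temps = cooling_output_layer(times, raw, initial_temp_c=600.0)
    print(raw, [round(temp, 1) for temp in temps])
```

The constraint lives in the functional form rather than in the training data or the loss, which is why no amount of sparse or noisy data can produce a reheating prediction from this output stage.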

1.5. Research Scope and Objectives

- What are the reported applications of PISM across various domains within FSE?

- What distinct strategies for integrating fundamental physical principles into machine learning models (i.e., feature engineering techniques, loss-constrained techniques, architecture-constrained techniques, and offline-constrained techniques) are employed in PISM studies within FSE?

- What are the current challenges and limitations associated with the development and application of PISM in FSE?

- Based on the synthesis of existing literature, what best practices can be identified, and what stepwise framework can be proposed for the systematic creation of PISMs in FSE?

2. Materials and Methods

2.1. Literature Review

2.1.1. Fire Dynamics

2.1.2. Wildfire

2.1.3. Structural Fire Engineering

| Article | Output of the Model | Model Algorithm | Physics-Informed Strategy | Implementation of the Physics-Informed Strategy |
|---|---|---|---|---|
| Esteghamati et al. [95] | Fire resistance of timber columns | Regression-based, partition-based, neural network-based | FET | Features were selected through data processing and prior knowledge. |
| Qiu et al. [100] | Thermo-mechanical response | Neural network-based | LCT, ACT | Physics embedded in the loss functions, with different networks for different physics. |
| Harandi et al. [102] | Thermo-mechanical response | Neural network-based | LCT, ACT | A mixed PINN architecture for coupled thermo-mechanical analysis is used together with physical loss functions. |
| Li et al. [97] | Fire resistance of composite columns | Neural network-based | FET, OCT | Frequency analysis is used for feature selection; a physical link for mechanical evaluation is also used. |
| Naser et al. [101] | Fire resistance of reinforced concrete columns | Regression-based (causal analysis) | ACT | Links between parameters are modified based on prior knowledge and causal analysis. |
| Raj et al. [99] | Thermo-mechanical response | Neural network-based | LCT | Loss functions incorporate the thermo-elastic partial differential equation together with the degradation of the material. |
| Wang et al. [96] | Fire resistance of concrete columns | Regression-based | FET | Frequency analysis and correlation analysis. |

2.1.4. Material Behaviour

2.1.5. Heat Transfer

| Article | Output of the Model | Model Algorithm | Physics-Informed Strategy | Implementation of the Physics-Informed Strategy |
|---|---|---|---|---|
| Cai et al. [135] | Temperature field | Neural network-based | LCT | Physics-based loss terms (from the PDE) combined with a data-driven loss term. |
| Gao et al. [136] | Pore pressure in the heated porous medium | Neural network-based | ACT, LCT | Two coupled networks with loss terms defined by PDEs. |
| Koric et al. [137] | Temperature field solver | Neural network-based | LCT | Loss terms evaluate the deviation from the PDEs. |
| Niaki et al. [138] | Temperature field | Neural network-based | LCT | Loss terms evaluate the deviation from the PDEs (convection at the boundary only). |
| Raissi [133] | PDE solver | Neural network-based | LCT | Loss terms linked to the residual of the physical equation. |
| Sirignano et al. [134] | PDE solver | Neural network-based | LCT | Loss terms linked to the residual of the physical equation. |
| Zobeiry et al. [14] | Temperature field | Neural network-based | LCT, ACT | Loss terms from the PDE; selection of activation functions and network configuration. |

2.2. Discussion and Conclusions

3. Implementing a Physics-Informed Surrogate Model

3.1. Framework

3.2. Integrating Physics with Surrogate Models

1. Understanding physics
2. Database development (feature engineering technique, FET)
3. Model selection (architecture-constrained technique, ACT)
4. Objective level (loss-constrained technique, LCT)
5. Optimiser level
6. Inference level (offline-constrained technique, OCT)

3.3. Understanding Physics

3.3.1. Identifying Sub-Models

3.3.2. Identifying Physical Constraints

3.3.3. Identifying Known Solution Types

3.4. Injecting Physics into Models

3.4.1. Database Development (Feature Engineering Technique—FET)

- Visual Analysis: Plotting output values as a function of each input parameter allows for an intuitive assessment of trends (see, for example, [96]).

- Statistical Analysis: Quantitative measures, such as Pearson correlation coefficients (PCCs) [141] for linear dependencies and maximal information coefficient (MIC) [142] for nonlinear correlations, can help determine parameter significance. Advanced techniques, such as partial dependence plots or feature importance rankings from tree-based models, provide additional insight.

- Expert Judgment: Domain expertise is incorporated to assess the practical significance of observed sensitivities.
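As a minimal sketch of the statistical screening step, the Python snippet below ranks candidate input features by the absolute value of their Pearson correlation with the output. The function names (`pearson`, `rank_features`) and the numbers are hypothetical and purely synthetic; they are not taken from any reviewed study. The second feature is chosen deliberately: the output equals its square, yet its Pearson coefficient is zero, which illustrates why a measure for nonlinear dependence such as MIC is listed alongside PCC.

```python
import math
from typing import List, Mapping, Sequence, Tuple


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equally long samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("samples must have the same length (at least 2)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0.0 or var_y == 0.0:
        return 0.0  # a constant sample carries no linear information
    return cov / math.sqrt(var_x * var_y)


def rank_features(
    features: Mapping[str, Sequence[float]], output: Sequence[float]
) -> List[Tuple[str, float]]:
    """Sort features by |PCC| with the output, strongest linear link first."""
    scores = [(name, pearson(values, output)) for name, values in features.items()]
    return sorted(scores, key=lambda item: abs(item[1]), reverse=True)


# Synthetic, illustrative data only.
output = [4.0, 1.0, 0.0, 1.0, 4.0]
inputs = {
    "squared_input": [-2.0, -1.0, 0.0, 1.0, 2.0],  # output = value ** 2 exactly
    "noisy_copy": [4.5, 0.5, 0.5, 1.5, 3.0],       # roughly tracks the output
}

for name, score in rank_features(inputs, output):
    print(f"{name}: PCC = {score:+.3f}")
```

A purely PCC-based screening would discard `squared_input` here even though it fully determines the output, so the ranking is best read together with the visual analysis and expert judgment described above.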

3.4.2. Model Selection (Architecture-Constrained Technique—ACT)

Regression-Based Models

- x: the feature vector

- h(.): the regression function

Neural Networks-Based Models

3.4.3. Objective Level (Loss-Constrained Techniques—LCT)

Data-Driven Objective

Physics-Informed Objective (Loss-Constrained Technique—LCT)
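A loss-constrained objective of this kind can be sketched as a data-fit term plus a weighted penalty on the residual of a governing equation. The Python example below is an illustration under stated assumptions: it uses Newton's law of cooling, dT/dt = -k (T - T_amb), as a stand-in governing equation, and a central finite difference in place of the automatic differentiation that PINN frameworks normally provide. All function and parameter names are hypothetical.

```python
import math
from typing import Callable, Sequence

Model = Callable[[float], float]  # maps time [s] to predicted temperature [deg C]


def data_loss(model: Model, times_s: Sequence[float], temps_c: Sequence[float]) -> float:
    """Mean squared error against observed temperatures."""
    return sum((model(t) - obs) ** 2 for t, obs in zip(times_s, temps_c)) / len(times_s)


def physics_loss(
    model: Model,
    collocation_times_s: Sequence[float],
    rate_per_s: float,
    ambient_temp_c: float,
    dt_s: float = 1e-2,
) -> float:
    """Mean squared residual of dT/dt + k (T - T_amb) = 0 at collocation times."""
    total = 0.0
    for t in collocation_times_s:
        # Central difference approximates the time derivative of the prediction.
        dT_dt = (model(t + dt_s) - model(t - dt_s)) / (2.0 * dt_s)
        residual = dT_dt + rate_per_s * (model(t) - ambient_temp_c)
        total += residual ** 2
    return total / len(collocation_times_s)


def total_loss(
    model: Model,
    times_s: Sequence[float],
    temps_c: Sequence[float],
    collocation_times_s: Sequence[float],
    rate_per_s: float,
    ambient_temp_c: float,
    physics_weight: float = 1.0,
) -> float:
    """Data term plus weighted physics penalty; the weight sets how strongly
    violations of the assumed cooling law are penalised."""
    return data_loss(model, times_s, temps_c) + physics_weight * physics_loss(
        model, collocation_times_s, rate_per_s, ambient_temp_c
    )


# A physically consistent candidate and one that keeps heating up.
consistent = lambda t: 20.0 + 580.0 * math.exp(-0.01 * t)
heating = lambda t: 600.0 + 0.5 * t
points = [0.0, 50.0, 100.0, 200.0]
print(physics_loss(consistent, points, 0.01, 20.0))
print(physics_loss(heating, points, 0.01, 20.0))
```

The penalty discourages the heating candidate but does not forbid it: with a small `physics_weight` or few collocation points, a non-physical model can still achieve a low total loss, which is the limitation of loss-based constraints compared with architectural ones.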

3.4.4. Optimiser Level

- Loss function on which the model is trained.

- Weighted loss function for each physics-informed loss component.

- Gradient-Based Reweighting: The model rescales loss weights based on the magnitude of their gradients, ensuring that all terms contribute equally to optimisation [153].

- Gradient Normalisation: Rescales gradients to balance optimisation across all terms, preventing any single loss component from dominating [157].

- Hybrid training scheme: Training starts with a first-order optimiser such as Adam and is followed by a second-order optimisation stage [86].
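The idea behind gradient-based reweighting can be illustrated with a short Python sketch. This is a generic illustration and not the specific algorithm of the cited works: it assumes the training framework supplies one gradient vector per loss term, and it scales each term so that its gradient norm matches the largest one. The helper names and the smoothing step are hypothetical choices.

```python
import math
from typing import Dict, Mapping, Sequence


def gradient_norm(gradient: Sequence[float]) -> float:
    """Euclidean norm of a flattened gradient vector."""
    return math.sqrt(sum(g * g for g in gradient))


def balanced_weights(
    gradients: Mapping[str, Sequence[float]], eps: float = 1e-12
) -> Dict[str, float]:
    """Weights that lift every loss term's gradient norm to the largest one."""
    norms = {name: gradient_norm(g) for name, g in gradients.items()}
    reference = max(norms.values())
    # eps guards against division by zero for a vanishing gradient.
    return {name: reference / (norm + eps) for name, norm in norms.items()}


def smooth_update(
    old: Mapping[str, float], new: Mapping[str, float], momentum: float = 0.9
) -> Dict[str, float]:
    """Exponential moving average so weights do not jump between iterations."""
    return {name: momentum * old[name] + (1.0 - momentum) * new[name] for name in old}


# Illustrative gradients: the PDE term is 100 times weaker than the data term.
grads = {"data": [3.0, 4.0], "pde": [0.03, 0.04]}
weights = balanced_weights(grads)
print(weights)
print(smooth_update({"data": 1.0, "pde": 1.0}, weights))
```

After reweighting, both terms pull on the parameters with comparable strength, so the weaker physics term is no longer drowned out by the data term during optimisation.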

3.4.5. Inference Level (Offline-Constrained Techniques—OCT)
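A simple inference-level check can be sketched as follows. The example assumes, for illustration only, that a passively heated member without internal heat generation cannot be colder than ambient or hotter than the peak gas temperature of the fire; the function and type names are hypothetical.

```python
from typing import NamedTuple


class CheckedPrediction(NamedTuple):
    value_c: float   # temperature after enforcing the bounds
    corrected: bool  # True if the raw prediction was outside the bounds


def enforce_temperature_bounds(
    predicted_c: float, ambient_c: float, peak_gas_c: float
) -> CheckedPrediction:
    """Offline sanity check applied to a trained surrogate's output."""
    if ambient_c > peak_gas_c:
        raise ValueError("ambient temperature exceeds peak gas temperature")
    bounded = min(max(predicted_c, ambient_c), peak_gas_c)
    return CheckedPrediction(bounded, bounded != predicted_c)


# Raw surrogate outputs (illustrative values) passed through the check.
for raw in (-15.0, 450.0, 1200.0):
    print(raw, enforce_temperature_bounds(raw, ambient_c=20.0, peak_gas_c=900.0))
```

Returning the `corrected` flag alongside the bounded value keeps a record of how often the surrogate leaves the plausible range, which can itself indicate regions where the training data are too sparse.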

4. Challenges and Future Directions

5. Conclusions

- Feature Engineering Technique (FET): Incorporates physics-based variables and transformations into the dataset before training.

- Loss-Constrained Technique (LCT): Embeds physics-based constraints directly into the loss function to guide optimisation.

- Architecture-Constrained Technique (ACT): Modifies the model structure to enforce physical constraints (e.g., sub-models and activation functions for bounded outputs).

- Offline-Constrained Technique (OCT): Applies physics-based corrections in post-processing, refining model predictions after training.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ACT | Architecture-constrained technique |
| AI | Artificial intelligence |
| ANN | Artificial neural network |
| CFD | Computational fluid dynamics |
| CNN | Convolutional neural network |
| DL | Deep learning |
| DNN | Deep neural network |
| DT | Decision tree |
| FE | Finite element model |
| FET | Feature engineering technique |
| GAN | Generative adversarial network |
| GMM | Gaussian mixture models |
| GPR | Gaussian process regression |
| HMM | Hidden Markov models |
| LCT | Loss-constrained technique |
| LightGBM | Light gradient boosting algorithm |
| LR | Logistic regression |
| LSTM | Long short-term memory |
| MLR | Multiple linear regression |
| NGBoost | Natural gradient boosting |
| OCT | Offline-constrained technique |
| ODE | Ordinary differential equation |
| PDE | Partial differential equation |
| PIML | Physics-informed machine learning |
| PINN | Physics-informed neural network |
| PISM | Physics-informed surrogate model |
| RF | Random forest |
| ROM | Reduced-order modelling |
| SVM | Support vector machine |
| TCNN | Transverse convolutional neural network |
| XGBoost | Extreme gradient boosting algorithm |

References

- Kodur, V.K.R.; Garlock, M.; Iwankiw, N. Structures in Fire: State-of-the-Art, Research and Training Needs. Fire Technol. 2012, 48, 825–839.

- Liu, J.-C.; Tan, K.H.; Yao, Y. A new perspective on nature of fire-induced spalling in concrete. Constr. Build. Mater. 2018, 184, 581–590.

- Samadian, D.; Muhit, I.B.; Dawood, N. Application of Data-Driven Surrogate Models in Structural Engineering: A Literature Review. Arch. Comput. Methods Eng. 2024, 32, 735–784.

- Koziel, S.; Pietrenko-Dabrowska, A. Physics-Based Surrogate Modeling. In Performance-Driven Surrogate Modeling of High-Frequency Structures; Springer International Publishing: Cham, Switzerland, 2020; pp. 59–128.

- Forrester, A.I.J.; Sóbester, A.; Keane, A.J. Engineering Design via Surrogate Modelling; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2008.

- Zhou, Y.; Lu, Z. An enhanced Kriging surrogate modeling technique for high-dimensional problems. Mech. Syst. Signal Process. 2020, 140, 106687.

- Shang, X.; Su, L.; Fang, H.; Zeng, B.; Zhang, Z. An efficient multi-fidelity Kriging surrogate model-based method for global sensitivity analysis. Reliab. Eng. Syst. Saf. 2022, 229, 108858.

- Robinson, T.D.; Eldred, M.S.; Willcox, K.E.; Haimes, R. Surrogate-based optimization using multifidelity models with variable parameterization and corrected space mapping. AIAA J. 2008, 46, 2814–2822.

- Kroetz, H.; Moustapha, M.; Beck, A.; Sudret, B. A Two-Level Kriging-Based Approach with Active Learning for Solving Time-Variant Risk Optimization Problems. Reliab. Eng. Syst. Saf. 2020, 203, 107033.

- Sahin, E.; Lattimer, B.; Allaf, M.A.; Duarte, J.P. Uncertainty quantification of unconfined spill fire data by coupling Monte Carlo and artificial neural networks. J. Nucl. Sci. Technol. 2024, 61, 1218–1231.

- Kim, J.; Wang, Z.; Song, J. Adaptive active subspace-based metamodeling for high-dimensional reliability analysis. Struct. Saf. 2024, 106, 102404.

- Chaudhary, R.K.; Van Coile, R.; Gernay, T. Potential of Surrogate Modelling for Probabilistic Fire Analysis of Structures. Fire Technol. 2021, 57, 3151–3177.

- Jakeman, J.D.; Kouri, D.P.; Huerta, J.G. Surrogate modeling for efficiently, accurately and conservatively estimating measures of risk. Reliab. Eng. Syst. Saf. 2022, 221, 108280.

- Zobeiry, N.; Humfeld, K.D. A physics-informed machine learning approach for solving heat transfer equation in advanced manufacturing and engineering applications. Eng. Appl. Artif. Intell. 2021, 101, 104232.

- Mainini, L.; Willcox, K.E. A surrogate modeling approach to support real-time structural assessment and decision-making. In Proceedings of the 10th AIAA Multidisciplinary Design Optimization Conference, American Institute of Aeronautics and Astronautics, Reston, VA, USA, 13–17 January 2014.

- Yondo, R.; Bobrowski, K.; Andrés, E.; Valero, E. A Review of Surrogate Modeling Techniques for Aerodynamic Analysis and Optimization: Current Limitations and Future Challenges in Industry. In Advances in Evolutionary and Deterministic Methods for Design, Optimization and Control in Engineering and Sciences; Springer International Publishing: Cham, Switzerland, 2019; pp. 19–33.

- Barcenas, O.U.E.; Pioquinto, J.G.Q.; Kurkina, E.; Lukyanov, O. Surrogate Aerodynamic Wing Modeling Based on a Multilayer Perceptron. Aerospace 2023, 10, 149.

- Sakurada, K.; Ishikawa, T. Synthesis of causal and surrogate models by non-equilibrium thermodynamics in biological systems. Sci. Rep. 2024, 14, 1001.

- Winz, J.; Nentwich, C.; Engell, S. Surrogate Modeling of Thermodynamic Equilibria: Applications, Sampling and Optimization. Chem. Ing. Tech. 2021, 93, 1898–1906.

- Wang, X.; Xiao, Y.; Li, W.; Wang, M.; Zhou, Y.; Chen, Y.; Li, Z. Kriging-based surrogate data-enriching artificial neural network prediction of strength and permeability of permeable cement-stabilized base. Nat. Commun. 2024, 15, 4891.

- Nguyen, B.D.; Potapenko, P.; Demirci, A.; Govind, K.; Bompas, S.; Sandfeld, S. Efficient surrogate models for materials science simulations: Machine learning-based prediction of microstructure properties. Mach. Learn. Appl. 2024, 16, 100544.

- Mobasheri, F.; Hosseinpoor, M.; Yahia, A.; Pourkamali-Anaraki, F. Machine Learning as an Innovative Engineering Tool for Controlling Concrete Performance: A Comprehensive Review. Arch. Comput. Methods Eng. 2025.

- Naser, M.Z. Mechanistically Informed Machine Learning and Artificial Intelligence in Fire Engineering and Sciences. Fire Technol. 2021, 57, 2741–2784.

- Naser, M.; Kodur, V. Explainable machine learning using real, synthetic and augmented fire tests to predict fire resistance and spalling of RC columns. Eng. Struct. 2022, 253, 113824.

- Chaudhary, R.K.; Van Coile, R.; Gernay, T. Fragility Curves for Fire Exposed Structural Elements Through Application of Regression Techniques. In Lecture Notes in Civil Engineering; Springer Science and Business Media Deutschland GmbH: Berlin, Germany, 2021; pp. 379–390.

- Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning: Data Mining, Inference, and Prediction; Springer Science & Business Media: New York, NY, USA, 2009.

- Zhang, H.T.; Gao, M.X. The Application of Support Vector Machine (SVM) Regression Method in Tunnel Fires. Procedia Eng. 2018, 211, 1004–1011.

- Nasrabadi, N.M. Pattern recognition and machine learning. J. Electron. Imaging 2007, 16, 049901.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning. 2016. Available online: https://mitpress.mit.edu/9780262035613/deep-learning/ (accessed on 1 February 2025).

- Mitchell, T.; Buchanan, B.; DeJong, G.; Dietterich, T.; Rosenbloom, P.; Waibel, A. Machine Learning. Annu. Rev. Comput. Sci. 1990, 4, 417–433.

- Breiman, L.; Friedman, J.H.; Olshen, R.A.; Stone, C.J. Classification and Regression Trees; Routledge: Boca Raton, FL, USA, 2017.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Bhadoria, R.S.; Pandey, M.K.; Kundu, P. RVFR: Random vector forest regression model for integrated & enhanced approach in forest fires predictions. Ecol. Inform. 2021, 66, 101471.

- Sun, Y.; Zhang, F.; Lin, H.; Xu, S. A Forest Fire Susceptibility Modeling Approach Based on Light Gradient Boosting Machine Algorithm. Remote Sens. 2022, 14, 4362.

- Wan, A.; Du, C.; Gong, W.; Wei, C.; Al-Bukhaiti, K.; Ji, Y.; Ma, S.; Yao, F.; Ao, L. Using Transfer Learning and XGBoost for Early Detection of Fires in Offshore Wind Turbine Units. Energies 2024, 17, 2330.

- Wang, J.; Cui, G.; Kong, X.; Lu, K.; Jiang, X. Flame height and axial plume temperature profile of bounded fires in aircraft cargo compartment with low-pressure. Case Stud. Therm. Eng. 2022, 33, 101918.

- Heskestad, G. Fire plumes, flame height, and air entrainment. In SFPE Handbook of Fire Protection Engineering, 5th ed.; SFPE: Washington, DC, USA, 2016; pp. 396–428.

- Kaymaz, I. Application of kriging method to structural reliability problems. Struct. Saf. 2005, 27, 133–151.

- Lee, C.S.; Jeon, J. Phenomenological hysteretic model for superelastic NiTi shape memory alloys accounting for functional degradation. Earthq. Eng. Struct. Dyn. 2022, 51, 277–309.

- Naser, M. From failure to fusion: A survey on learning from bad machine learning models. Inf. Fusion 2025, 120, 103122.

- Raissi, M.; Perdikaris, P.; Karniadakis, G.E. Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J. Comput. Phys. 2019, 378, 686–707.

- Hao, Z.; Liu, S.; Zhang, Y.; Ying, C.; Feng, Y.; Su, H.; Zhu, J. Physics-Informed Machine Learning: A Survey on Problems, Methods and Applications. arXiv 2022, arXiv:2211.08064.

- Quarteroni, A.; Gervasio, P.; Regazzoni, F. Combining physics-based and data-driven models: Advancing the frontiers of research with scientific machine learning. arXiv 2025, arXiv:2501.18708.

- Karpatne, A.; Atluri, G.; Faghmous, J.H.; Steinbach, M.; Banerjee, A.; Ganguly, A.; Shekhar, S.; Samatova, N.; Kumar, V. Theory-Guided Data Science: A New Paradigm for Scientific Discovery from Data. IEEE Trans. Knowl. Data Eng. 2016, 29, 2318–2331.

- Singh, V.; Harursampath, D.; Dhawan, S.; Sahni, M.; Saxena, S.; Mallick, R. Physics-Informed Neural Network for Solving a One-Dimensional Solid Mechanics Problem. Modelling 2024, 5, 1532–1549.

- Tronci, E.M.; Downey, A.R.J.; Mehrjoo, A.; Chowdhury, P.; Coble, D. Physics-Informed Machine Learning Part I: Different Strategies to Incorporate Physics into Engineering Problems; Springer: Cham, Switzerland, 2025; pp. 1–6.

- Shaban, W.M.; Elbaz, K.; Zhou, A.; Shen, S.-L. Physics-informed deep neural network for modeling the chloride diffusion in concrete. Eng. Appl. Artif. Intell. 2023, 125, 106691.

- Toscano, J.D.; Oommen, V.; Varghese, A.J.; Zou, Z.; Daryakenari, N.A.; Wu, C.; Karniadakis, G.E. From PINNs to PIKANs: Recent advances in physics-informed machine learning. Mach. Learn. Comput. Sci. Eng. 2025, 1, 1–43.

- Zanetta, F.; Nerini, D.; Beucler, T.; Liniger, M.A. Physics-Constrained Deep Learning Postprocessing of Temperature and Humidity. Artif. Intell. Earth Syst. 2023, 2, e220089.

- Kashinath, K.; Mustafa, M.; Albert, A.; Wu, J.L.; Jiang, C.; Esmaeilzadeh, S.; Azizzadenesheli, K.; Wang, R.; Chattopadhyay, A.; Singh, A.; et al. Physics-informed machine learning: Case studies for weather and climate modelling. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 2021, 379, 20200093.

- Page, M.J.; Moher, D.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. PRISMA 2020 explanation and elaboration: Updated guidance and exemplars for reporting systematic reviews. BMJ 2021, 372, n160.

- Leiteritz, R.; Buchfink, P.; Haasdonk, B.; Pflüger, D. Surrogate-data-enriched Physics-Aware Neural Networks. Proc. North. Light. Deep. Learn. Work. 2021, 3, 1–8.

- McKay, M.D.; Beckman, R.J.; Conover, W.J. A Comparison of Three Methods for Selecting Values of Input Variables in the Analysis of Output from a Computer Code. Technometrics 1979, 21, 239–245.

- Yeoh, G.H.; Yuen, K.K. Computational Fluid Dynamics in Fire Engineering; Elsevier: Amsterdam, The Netherlands, 2009.

- Drysdale, D. An Introduction to Fire Dynamics, 3rd ed.; John Wiley: New York, NY, USA, 2011; pp. 1–551.

- Zhang, L.; Mo, L.; Fan, C.; Zhou, H.; Zhao, Y. Data-Driven Prediction Methods for Real-Time Indoor Fire Scenario Inferences. Fire 2023, 6, 401.

- Zhang, T.; Wang, Z.; Wong, H.Y.; Tam, W.C.; Huang, X.; Xiao, F. Real-time forecast of compartment fire and flashover based on deep learning. Fire Saf. J. 2022, 130, 103579.

- Wang, J.; Tam, W.C.; Jia, Y.; Peacock, R.; Reneke, P.; Fu, E.Y.; Cleary, T. P-Flash—A machine learning-based model for flashover prediction using recovered temperature data. Fire Saf. J. 2021, 122, 103341.

- Yun, K.; Bustos, J.; Lu, T. Predicting Rapid Fire Growth (Flashover) Using Conditional Generative Adversarial Networks. arXiv 2018, arXiv:1801.09804.

- Tam, W.C.; Fu, E.Y.; Li, J.; Huang, X.; Chen, J.; Huang, M.X. A spatial temporal graph neural network model for predicting flashover in arbitrary building floorplans. Eng. Appl. Artif. Intell. 2022, 115, 105258.

- Fan, L.; Tam, W.C.; Tong, Q.; Fu, E.Y.; Liang, T. An explainable machine learning based flashover prediction model using dimension-wise class activation map. Fire Saf. J. 2023, 140, 103849.

- Lattimer, B.Y.; Hodges, J.L.; Lattimer, A.M. Using machine learning in physics-based simulation of fire. Fire Saf. J. 2020, 114, 102991.

- Nguyen, H.T.; Abu-Zidan, Y.; Zhang, G.; Nguyen, K.T. Machine learning-based surrogate model for calibrating fire source properties in FDS models of façade fire tests. Fire Saf. J. 2022, 130, 103591.

- Coen, J.L.; Cameron, M.; Michalakes, J.; Patton, E.G.; Riggan, P.J.; Yedinak, K.M. WRF-Fire: Coupled Weather–Wildland Fire Modeling with the Weather Research and Forecasting Model. J. Appl. Meteorol. Clim. 2013, 52, 16–38.

- Coen, J. Some Requirements for Simulating Wildland Fire Behavior Using Insight from Coupled Weather—Wildland Fire Models. Fire 2018, 1, 6.

- Shaik, R.U.; Alipour, M.; Shamsaei, K.; Rowell, E.; Balaji, B.; Watts, A.; Kosovic, B.; Ebrahimian, H.; Taciroglu, E. Wildfire Fuels Mapping through Artificial Intelligence-based Methods: A Review. Earth-Sci. Rev. 2025, 262, 105064.

- Jain, P.; Coogan, S.C.P.; Subramanian, S.G.; Crowley, M.; Taylor, S.W.; Flannigan, M.D. A review of machine learning applications in wildfire science and management. Environ. Rev. 2020, 28, 478–505.

- Bot, K.; Borges, J.G. A Systematic Review of Applications of Machine Learning Techniques for Wildfire Management Decision Support. Inventions 2022, 7, 15.

- Santos, F.L.; Couto, F.T.; Dias, S.S.; Ribeiro, N.d.A.; Salgado, R. Vegetation fuel characterization using machine learning approach over southern Portugal. Remote Sens. Appl. Soc. Environ. 2023, 32, 101017.

- Pierce, A.D.; Farris, C.A.; Taylor, A.H. Use of random forests for modeling and mapping forest canopy fuels for fire behavior analysis in Lassen Volcanic National Park, California, USA. For. Ecol. Manag. 2012, 279, 77–89.

- Andrianarivony, H.S.; Akhloufi, M.A. Machine Learning and Deep Learning for Wildfire Spread Prediction: A Review. Fire 2024, 7, 482.

- Vasconcelos, R.N.; Rocha, W.J.S.F.; Costa, D.P.; Duverger, S.G.; de Santana, M.M.M.; Cambui, E.C.B.; Ferreira-Ferreira, J.; Oliveira, M.; Barbosa, L.d.S.; Cordeiro, C.L. Fire Detection with Deep Learning: A Comprehensive Review. Land 2024, 13, 1696.

- Angayarkkani, K.; Radhakrishnan, N. An Intelligent System for Effective Forest Fire Detection Using Spatial Data. Journal of Computer Science. arXiv 2010, arXiv:1002.2199v1.

- Al-Rawi, K.R.; Casanova, J.L.; Calle, A. Burned area mapping system and fire detection system, based on neural networks and NOAA-AVHRR imagery. Int. J. Remote Sens. 2001, 22, 2015–2032.

- Akhloufi, M.A.; Tokime, R.B.; Elassady, H.; Alam, M.S. Wildland fires detection and segmentation using deep learning. In Pattern Recognition and Tracking XXIX; SPIE: Orlando, FL, USA, 2018; p. 11.

- Moradi, S.; Hafezi, M.; Sheikhi, A. Early wildfire detection using different machine learning algorithms. Remote Sens. Appl. Soc. Environ. 2024, 36, 101346.

- Sathishkumar, V.E.; Cho, J.; Subramanian, M.; Naren, O.S. Forest fire and smoke detection using deep learning-based learning without forgetting. Fire Ecol. 2023, 19, 9.

- Bhowmik, R.T.; Jung, Y.S.; Aguilera, J.A.; Prunicki, M.; Nadeau, K. A multi-modal wildfire prediction and early-warning system based on a novel machine learning framework. J. Environ. Manag. 2023, 341, 117908.

- Burge, J.; Bonanni, M.R.; Hu, R.L.; Ihme, M. Recurrent Convolutional Deep Neural Networks for Modeling Time-Resolved Wildfire Spread Behavior. Fire Technol. 2023, 59, 3327–3354.

- Hodges, J.L.; Lattimer, B.Y. Wildland Fire Spread Modeling Using Convolutional Neural Networks. Fire Technol. 2019, 55, 2115–2142.

- Fan, D.; Biswas, A.; Ahrens, J.P. Explainable AI Integrated Feature Engineering for Wildfire Prediction. arXiv 2024, arXiv:2404.01487v1.

- Michail, D.; Panagiotou, L.-I.; Davalas, C.; Prapas, I.; Kondylatos, S.; Bountos, N.I.; Papoutsis, I. Seasonal Fire Prediction using Spatio-Temporal Deep Neural Networks. arXiv 2024, arXiv:2404.06437v1.

- Shaddy, B.; Ray, D.; Farguell, A.; Calaza, V.; Mandel, J.; Haley, J.; Hilburn, K.; Mallia, D.V.; Kochanski, A.; Oberai, A. Generative Algorithms for Fusion of Physics-Based Wildfire Spread Models with Satellite Data for Initializing Wildfire Forecasts. Artif. Intell. Earth Syst. 2024, 3, e230087.

- Jadouli, A.; El Amrani, C. Physics-Embedded Deep Learning for Wildfire Risk Assessment: Integrating Statistical Mechanics into Neural Networks for Interpretable Environmental Modeling. 2025. Available online: https://www.researchsquare.com/article/rs-6404320/v1 (accessed on 15 February 2025).

- Bottero, L.; Calisto, F.; Graziano, G.; Pagliarino, V.; Scauda, M.; Tiengo, S.; Azeglio, S. Physics-Informed Machine Learning Simulator for Wildfire Propagation. CEUR Workshop Proc. 2964. arXiv 2020, arXiv:2012.06825v1.

- Vogiatzoglou, K.; Papadimitriou, C.; Bontozoglou, V.; Ampountolas, K. Physics-informed neural networks for parameter learning of wildfire spreading. Comput. Methods Appl. Mech. Eng. 2024, 434, 117545.

- Lattimer, A.M.; Lattimer, B.Y.; Gugercin, S.; Borggaard, J.T.; Luxbacher, K.D. High Fidelity Reduced Order Models for Wildland Fires. In Proceedings of the 5th International Fire Behavior and Fuels, Portland, Australia, 11–15 April 2016; Available online: https://www.researchgate.net/profile/Alan-Lat-tim-er/publication/309235783_High_Fidelity_Reduced_Order_Models_for_Wildland_Fires/links/58066d0b08ae0075d82c736e/High-Fidelity-Reduced-Order-Models-for-Wildland-Fires.pdf (accessed on 15 February 2025).

- Lattimer, A.; Borggaard, J.; Gugercin, S.; Luxbacher, K. Computationally Efficient Wildland Fire Spread Models. In Proceedings of the 14th International Fire Science & Engineering, Egham, UK, 4–6 July 2016; Available online: https://www.researchgate.net/profile/Alan-Lat-tim-er/publication/309230882_Computationally_Efficient_Wildland_Fire_Spread_Models/links/58063d0d08ae0075d82c42df/Computationally-Efficient-Wildland-Fire-Spread-Models.pdf (accessed on 10 February 2025).

- Naser, M. Fire resistance evaluation through artificial intelligence—A case for timber structures. Fire Saf. J. 2019, 105, 1–18.

- Panev, Y.; Kotsovinos, P.; Deeny, S.; Flint, G. The Use of Machine Learning for the Prediction of fire Resistance of Composite Shallow Floor Systems. Fire Technol. 2021, 57, 3079–3100.

- Norsk, D.; Sauca, A.; Livkiss, K. Fire resistance evaluation of gypsum plasterboard walls using machine learning method. Fire Saf. J. 2022, 130, 103597.

- Liu, K.; Yu, M.; Liu, Y.; Chen, W.; Fang, Z.; Lim, J.B. Fire resistance time prediction and optimization of cold-formed steel walls based on machine learning. Thin-Walled Struct. 2024, 203, 112207.

- Song, Z.; Zhang, C.; Lu, Y. The methodology for evaluating the fire resistance performance of concrete-filled steel tube columns by integrating conditional tabular generative adversarial networks and random oversampling. J. Build. Eng. 2024, 97, 110824.

- Mei, Y.; Sun, Y.; Li, F.; Xu, X.; Zhang, A.; Shen, J. Probabilistic prediction model of steel to concrete bond failure under high temperature by machine learning. Eng. Fail. Anal. 2022, 142, 106786.

- Esteghamati, M.Z.; Gernay, T.; Banerji, S. Evaluating fire resistance of timber columns using explainable machine learning models. Eng. Struct. 2023, 296, 116910.

- Wang, Y.; Liu, Z.; Zhang, X.; Qu, S.; Xu, T. Fire resistance of reinforced concrete columns: State of the art, analysis and prediction. J. Build. Eng. 2024, 96, 110690.

- Li, S.; Liew, J.R.; Xiong, M.X. Prediction of fire resistance of concrete encased steel composite columns using artificial neural network. Eng. Struct. 2021, 245, 112877.

- Bazmara, M.; Silani, M.; Mianroodi, M.; Sheibanian, M. Physics-informed neural networks for nonlinear bending of 3D functionally graded beam. Structures 2023, 49, 152–162.

- Raj, M.; Kumbhar, P.; Annabattula, R.K. Physics-informed neural networks for solving thermo-mechanics problems of functionally graded material. arXiv 2022, arXiv:2111.10751.

- Qiu, L.; Wang, Y.; Gu, Y.; Qin, Q.-H.; Wang, F. Adaptive physics-informed neural networks for dynamic coupled thermo-mechanical problems in large-size-ratio functionally graded materials. Appl. Math. Model. 2024, 140, 115906.

- Naser, M.Z.; Çiftçioğlu, A.Ö. Causal discovery and inference for evaluating fire resistance of structural members through causal learning and domain knowledge. Struct. Concr. 2023, 24, 3314–3328.

- Harandi, A.; Moeineddin, A.; Kaliske, M.; Reese, S.; Rezaei, S. Mixed formulation of physics-informed neural networks for thermo-mechanically coupled systems and heterogeneous domains. Int. J. Numer. Methods Eng. 2023, 125, e7388.

- Asteris, P.G.; Skentou, A.D.; Bardhan, A.; Samui, P.; Pilakoutas, K. Predicting concrete compressive strength using hybrid ensembling of surrogate machine learning models. Cem. Concr. Res. 2021, 145, 106449.

- Emad, W.; Mohammed, A.S.; Kurda, R.; Ghafor, K.; Cavaleri, L.; Qaidi, S.M.A.; Hassan, A.; Asteris, P.G. Prediction of concrete materials compressive strength using surrogate models. Structures 2022, 46, 1243–1267. [Google Scholar] [CrossRef]

- Nunez, I.; Marani, A.; Flah, M.; Nehdi, M.L. Estimating compressive strength of modern concrete mixtures using computational intelligence: A systematic review. Constr. Build. Mater. 2021, 310, 125279. [Google Scholar] [CrossRef]

- Li, F.; Rana, S.; Qurashi, M.A. Advanced machine learning techniques for predicting concrete mechanical properties: A comprehensive review of models and methodologies. Multiscale Multidiscip. Model. Exp. Des. 2024, 8, 110. [Google Scholar] [CrossRef]

- Ben Chaabene, W.; Flah, M.; Nehdi, M.L. Machine learning prediction of mechanical properties of concrete: Critical review. Constr. Build. Mater. 2020, 260, 119889. [Google Scholar] [CrossRef]

- Han, T.; Huang, J.; Sant, G.; Neithalath, N.; Kumar, A. Predicting mechanical properties of ultrahigh temperature ceramics using machine learning. J. Am. Ceram. Soc. 2022, 105, 6851–6863. [Google Scholar] [CrossRef]

- Narayana, P.; Lee, S.W.; Park, C.H.; Yeom, J.-T.; Hong, J.-K.; Maurya, A.; Reddy, N.S. Modeling high-temperature mechanical properties of austenitic stainless steels by neural networks. Comput. Mater. Sci. 2020, 179, 109617. [Google Scholar] [CrossRef]

- Shaheen, M.A.; Presswood, R.; Afshan, S. Application of Machine Learning to predict the mechanical properties of high strength steel at elevated temperatures based on the chemical composition. Structures 2023, 52, 17–29. [Google Scholar] [CrossRef]

- Yazici, C.; Domínguez-Gutiérrez, F. Machine learning techniques for estimating high–temperature mechanical behavior of high strength steels. Results Eng. 2025, 25, 104242. [Google Scholar] [CrossRef]

- Rajczakowska, M.; Szeląg, M.; Habermehl-Cwirzen, K.; Hedlund, H.; Cwirzen, A. Interpretable Machine Learning for Prediction of Post-Fire Self-Healing of Concrete. Materials 2023, 16, 1273. [Google Scholar] [CrossRef]

- Tanhadoust, A.; Yang, T.; Dabbaghi, F.; Chai, H.; Mohseni, M.; Emadi, S.; Nasrollahpour, S. Predicting stress-strain behavior of normal weight and lightweight aggregate concrete exposed to high temperature using LSTM recurrent neural network. Constr. Build. Mater. 2023, 362, 129703. [Google Scholar] [CrossRef]

- Ramzi, S.; Moradi, M.J.; Hajiloo, H. Artificial Neural Network in Predicting the Residual Compressive Strength of Concrete after High Temperatures. SSRN Electron. J. 2022. [Google Scholar] [CrossRef]

- Najm, H.M.; Nanayakkara, O.; Ahmad, M.; Sabri, M.M.S. Mechanical Properties, Crack Width, and Propagation of Waste Ceramic Concrete Subjected to Elevated Temperatures: A Comprehensive Study. Materials 2022, 15, 2371. [Google Scholar] [CrossRef]

- Ahmad, A.; Ostrowski, K.A.; Maślak, M.; Farooq, F.; Mehmood, I.; Nafees, A. Comparative Study of Supervised Machine Learning Algorithms for Predicting the Compressive Strength of Concrete at High Temperature. Materials 2021, 14, 4222. [Google Scholar] [CrossRef]

- Alkayem, N.F.; Shen, L.; Mayya, A.; Asteris, P.G.; Fu, R.; Di Luzio, G.; Strauss, A.; Cao, M. Prediction of concrete and FRC properties at high temperature using machine and deep learning: A review of recent advances and future perspectives. J. Build. Eng. 2024, 83, 108369. [Google Scholar] [CrossRef]

- Barth, H.; Banerji, S.; Adams, M.P.; Esteghamati, M.Z. A Data-Driven Approach to Evaluate the Compressive Strength of Recycled Aggregate Concrete. In Proceedings of the ASCE Inspire 2023: Infrastructure Innovation and Adaptation for a Sustainable and Resilient World—Selected Papers from ASCE Inspire 2023, Arlington, VA, USA, 16–18 November 2023; pp. 433–441. [Google Scholar]

- Uysal, M.; Tanyildizi, H. Estimation of compressive strength of self compacting concrete containing polypropylene fiber and mineral additives exposed to high temperature using artificial neural network. Constr. Build. Mater. 2012, 27, 404–414. [Google Scholar] [CrossRef]

- Tanyildizi, H.; Vipulanandan, C. Prediction of the strength properties of carbon fiber-reinforced lightweight concrete exposed to the high temperature using artificial neural network and support vector machine. Adv. Civ. Eng. 2018, 2018, 5140610. [Google Scholar] [CrossRef]

- Ashteyat, A.M.; Ismeik, M. Predicting residual compressive strength of self-compacted concrete under various temperatures and relative humidity conditions by artificial neural networks. Comput. Concr. 2018, 21, 47–54. [Google Scholar] [CrossRef]

- Çolak, A.B.; Akçaözoğlu, K.; Akçaözoğlu, S.; Beller, G. Artificial Intelligence Approach in Predicting the Effect of Elevated Temperature on the Mechanical Properties of PET Aggregate Mortars: An Experimental Study. Arab. J. Sci. Eng. 2021, 46, 4867–4881. [Google Scholar] [CrossRef]

- Chen, H.; Yang, J.; Chen, X. A convolution-based deep learning approach for estimating compressive strength of fiber reinforced concrete at elevated temperatures. Constr. Build. Mater. 2021, 313, 125437. [Google Scholar] [CrossRef]

- Yarmohammadian, R.; Felicetti, R.; Robert, F.; Mohaine, S.; Izoret, L. Crack instability of concrete in fire: A new small-scale screening test for spalling. Cem. Concr. Compos. 2024, 153, 105739. [Google Scholar] [CrossRef]

- Felicetti, R.; Yarmohammadian, R.; Pont, S.D.; Tengattini, A. Fast Vapour Migration Next to a Depressurizing Interface: A Possible Driving Mechanism of Explosive Spalling Revealed by Neutron Imaging. Cem. Concr. Res. 2024, 180, 107508. [Google Scholar] [CrossRef]

- al-Bashiti, M.K.; Naser, M.Z. A sensitivity analysis of machine learning models on fire-induced spalling of concrete: Revealing the impact of data manipulation on accuracy and explainability. Comput. Concr. 2024, 33, 409–423. [Google Scholar] [CrossRef]

- Sirisena, G.; Jayasinghe, T.; Gunawardena, T.; Zhang, L.; Mendis, P.; Mangalathu, S. Machine learning-based framework for predicting the fire-induced spalling in concrete tunnel linings. Tunn. Undergr. Space Technol. 2024, 153, 106000. [Google Scholar] [CrossRef]

- Naser, M.Z. Observational Analysis of Fire-Induced Spalling of Concrete through Ensemble Machine Learning and Surrogate Modeling. J. Mater. Civ. Eng. 2021, 33, 04020428. [Google Scholar] [CrossRef]

- Ho, T.N.-T.; Nguyen, T.-P.; Truong, G.T. Concrete Spalling Identification and Fire Resistance Prediction for Fired RC Columns Using Machine Learning-Based Approaches. Fire Technol. 2024, 60, 1823–1866. [Google Scholar] [CrossRef]

- Peng, J.; Yamamoto, Y.; Hawk, J.A.; Lara-Curzio, E.; Shin, D. Coupling physics in machine learning to predict properties of high-temperatures alloys. npj Comput. Mater. 2020, 6, 141. [Google Scholar] [CrossRef]

- Liu, J.; Han, X.; Pan, Y.; Cui, K.; Xiao, Q. Physics-assisted machine learning methods for predicting the splitting tensile strength of recycled aggregate concrete. Sci. Rep. 2023, 13, 9078. [Google Scholar] [CrossRef]

- Onyelowe, K.C.; Kamchoom, V.; Hanandeh, S.; Kumar, S.A.; Vizuete, R.F.Z.; Murillo, R.O.S.; Polo, S.M.Z.; Castillo, R.M.T.; Ebid, A.M.; Awoyera, P.; et al. Physics-informed modeling of splitting tensile strength of recycled aggregate concrete using advanced machine learning. Sci. Rep. 2025, 15, 7135. [Google Scholar] [CrossRef]

- Raissi, M. Deep Hidden Physics Models: Deep Learning of Nonlinear Partial Differential Equations. 2018. Available online: http://jmlr.org/papers/v19/18-046.html (accessed on 15 February 2025).

- Sirignano, J.; Spiliopoulos, K. DGM: A deep learning algorithm for solving partial differential equations. J. Comput. Phys. 2018, 375, 1339–1364. [Google Scholar] [CrossRef]

- Cai, S.; Wang, Z.; Wang, S.; Perdikaris, P.; Karniadakis, G.E. Physics-informed neural networks for heat transfer problems. J. Heat Transf. 2021, 143, 060801. [Google Scholar] [CrossRef]

- Gao, Z.; Fu, Z.; Wen, M.; Guo, Y.; Zhang, Y. Physical informed neural network for thermo-hydral analysis of fire-loaded concrete. Eng. Anal. Bound. Elem. 2023, 158, 252–261. [Google Scholar] [CrossRef]

- Koric, S.; Abueidda, D.W. Data-driven and physics-informed deep learning operators for solution of heat conduction equation with parametric heat source. Int. J. Heat Mass Transf. 2023, 203, 123809. [Google Scholar] [CrossRef]

- Niaki, S.A.; Haghighat, E.; Campbell, T.; Poursartip, A.; Vaziri, R. Physics-informed neural network for modelling the thermochemical curing process of composite-tool systems during manufacture. Comput. Methods Appl. Mech. Eng. 2021, 384, 113959. [Google Scholar] [CrossRef]

- Amirante, D.; Ganine, V.; Hills, N.J.; Adami, P. A Coupling Framework for Multi-Domain Modelling and Multi-Physics Simulations. Entropy 2021, 23, 758. [Google Scholar] [CrossRef]

- Willard, J.; Jia, X.; Xu, S.; Steinbach, M.; Kumar, V. Integrating Scientific Knowledge with Machine Learning for Engineering and Environmental Systems. ACM Comput. Surv. 2022, 55, 1–37. [Google Scholar] [CrossRef]

- Sedgwick, P. Pearson’s correlation coefficient. BMJ 2012, 345, e4483. [Google Scholar] [CrossRef]

- Reshef, D.N.; Reshef, Y.A.; Finucane, H.K.; Grossman, S.R.; McVean, G.; Turnbaugh, P.J.; Lander, E.S.; Mitzenmacher, M.; Sabeti, P.C. Detecting novel associations in large data sets. Science 2011, 334, 1518–1524. [Google Scholar] [CrossRef]

- Yarmohammadian, R.; Jovanović, B.; Van Coile, R. Sigmoid-Based Regression for Physically Informed Temperature Prediction Of Fire-Exposed Protected Steel Sections. In Proceedings of the ICOSSAR’25: 14th International Conference on Structural Safety and Reliability, Los Angeles, CA, USA, 1–6 June 2025; Available online: https://www.scipedia.com/wd/images/4/46/Draft_content_623658576I250381.pdf (accessed on 10 June 2025).

- Maas, A.L.; Hannun, A.Y.; Ng, A.Y. Rectifier Nonlinearities Improve Neural Network Acoustic Models. 2013. Available online: https://www.semanticscholar.org/paper/Rectifier-Nonlinearities-Improve-Neural-Network-Maas/367f2c63a6f6a10b3b64b8729d601e69337ee3cc (accessed on 10 February 2025).

- Huber, P.J. Robust Estimation of a Location Parameter. Ann. Math. Stat. 1964, 35, 73–101. [Google Scholar] [CrossRef]

- Tibshirani, R. Regression Shrinkage and Selection Via the Lasso. J. R. Stat. Soc. Ser. B Stat. Methodol. 1996, 58, 267–288. [Google Scholar] [CrossRef]

- Hoerl, A.E.; Kennard, R.W. Ridge Regression: Applications to Nonorthogonal Problems. Technometrics 1970, 12, 69–82. [Google Scholar] [CrossRef]

- Clifton, G.C.; Abu, A.; Gillies, A.G.; Mago, N.; Cowie, K. Fire engineering design of composite floor systems for two way response in severe fires. In Applications of Fire Engineering; CRC Press: Boca Raton, FL, USA, 2017; pp. 367–377. [Google Scholar] [CrossRef]

- Han, J.; Jentzen, A.; E, W. Solving high-dimensional partial differential equations using deep learning. Proc. Natl. Acad. Sci. USA 2018, 115, 8505–8510. [Google Scholar] [CrossRef]

- Rathore, P.; Lei, W.; Frangella, Z.; Lu, L.; Udell, M. Challenges in Training PINNs: A Loss Landscape Perspective. 2024, pp. 42159–42191. Available online: https://proceedings.mlr.press/v235/rathore24a.html (accessed on 28 March 2025).

- Urbán, J.F.; Stefanou, P.; Pons, J.A. Unveiling the optimization process of physics informed neural networks: How accurate and competitive can PINNs be? J. Comput. Phys. 2025, 523, 113656. [Google Scholar] [CrossRef]

- Wang, S.; Teng, Y.; Perdikaris, P. Understanding and mitigating gradient flow pathologies in physics-informed neural networks. SIAM J. Sci. Comput. 2021, 43, A3055–A3081. [Google Scholar] [CrossRef]

- van der Meer, R.; Oosterlee, C.W.; Borovykh, A. Optimally weighted loss functions for solving PDEs with Neural Networks. J. Comput. Appl. Math. 2022, 405, 113887. [Google Scholar] [CrossRef]

- McClenny, L.D.; Braga-Neto, U.M. Self-adaptive physics-informed neural networks. J. Comput. Phys. 2022, 474, 111722. [Google Scholar] [CrossRef]

- Lu, B.; Moya, C.; Lin, G. NSGA-PINN: A Multi-Objective Optimization Method for Physics-Informed Neural Network Training. Algorithms 2023, 16, 194. [Google Scholar] [CrossRef]

- Bischof, R.; Kraus, M.A. Multi-Objective Loss Balancing for Physics-Informed Deep Learning. 2021. [CrossRef]

- Wang, S.; Bhartari, A.K.; Li, B.; Perdikaris, P. Gradient Alignment in Physics-informed Neural Networks: A Second-Order Optimization Perspective. arXiv 2025, arXiv:2502.00604. [Google Scholar]

- Zhang, Y.; Yang, Q. A Survey on Multi-Task Learning. IEEE Trans. Knowl. Data Eng. 2022, 34, 5586–5609. [Google Scholar] [CrossRef]

- Tang, K.; Wan, X.; Yang, C. DAS-PINNs: A deep adaptive sampling method for solving high-dimensional partial differential equations. J. Comput. Phys. 2023, 476, 111868. [Google Scholar] [CrossRef]

- Maddu, S.M.; Sturm, D.; Müller, C.L.; Sbalzarini, I.F. Inverse Dirichlet weighting enables reliable training of physics informed neural networks. Mach. Learn. Sci. Technol. 2022, 3, 015026. [Google Scholar] [CrossRef]

- Penwarden, M.; Zhe, S.; Narayan, A.; Kirby, R.M. A metalearning approach for Physics-Informed Neural Networks (PINNs): Application to parameterized PDEs. J. Comput. Phys. 2023, 477, 111912. [Google Scholar] [CrossRef]

- Yu, J.; Lu, L.; Meng, X.; Karniadakis, G.E. Gradient-enhanced physics-informed neural networks for forward and inverse PDE problems. Comput. Methods Appl. Mech. Eng. 2022, 393, 114823. [Google Scholar] [CrossRef]

- Xiu, D.; Karniadakis, G.E. The Wiener-Askey Polynomial Chaos for Stochastic Differential Equations. SIAM J. Sci. Comput. 2002, 24, 619–644. [Google Scholar] [CrossRef]

- Jagtap, A.D.; Kawaguchi, K.; Karniadakis, G.E. Adaptive activation functions accelerate convergence in deep and physics-informed neural networks. J. Comput. Phys. 2020, 404, 109136. [Google Scholar] [CrossRef]

- Nabian, M.A.; Gladstone, R.J.; Meidani, H. Efficient training of physics-informed neural networks via importance sampling. arXiv 2021, arXiv:2104.12325. [Google Scholar] [CrossRef]

- Sobol, I. On the distribution of points in a cube and the approximate evaluation of integrals. USSR Comput. Math. Math. Phys. 1967, 7, 86–112. [Google Scholar] [CrossRef]

- Chen, Y.; Xiao, H.; Teng, X.; Liu, W.; Lan, L. Enhancing accuracy of physically informed neural networks for nonlinear Schrödinger equations through multi-view transfer learning. Inf. Fusion 2024, 102, 102041. [Google Scholar] [CrossRef]

- Desai, S.; Mattheakis, M.; Joy, H.; Protopapas, P.; Roberts, S. One-Shot Transfer Learning of Physics-Informed Neural Networks. 2021. Available online: https://arxiv.org/abs/2110.11286v2 (accessed on 4 February 2025).

- Chakraborty, S. Transfer learning based multi-fidelity physics informed deep neural network. J. Comput. Phys. 2021, 426, 109942. [Google Scholar] [CrossRef]

- Jnini, A.; Vella, F. Dual Natural Gradient Descent for Scalable Training of Physics-Informed Neural Networks. arXiv 2025, arXiv:2505.21404. [Google Scholar] [CrossRef]

- Kiyani, E.; Shukla, K.; Urbán, J.F.; Darbon, J.; Karniadakis, G.E. Which Optimizer Works Best for Physics-Informed Neural Networks and Kolmogorov-Arnold Networks? 2025. Available online: https://arxiv.org/pdf/2501.16371 (accessed on 28 July 2025).

- Naser, M.Z.; Alavi, A.H. Error Metrics and Performance Fitness Indicators for Artificial Intelligence and Machine Learning in Engineering and Sciences. Arch. Struct. Constr. 2021, 3, 499–517. [Google Scholar] [CrossRef]

- Naser, M.Z. Intuitive tests to validate machine learning models against physics and domain knowledge. Digit. Eng. 2025, 7, 100057. [Google Scholar] [CrossRef]

| Article | Output of the Model | Model Algorithm | Physics-Informed Strategy | Implementation of the Physics-Informed Strategy |
|---|---|---|---|---|
| Fan et al. [61] | Flashover time | Neural network-based | FET | Using a soft constraint on the data by introducing a temperature cut-off to account for the working temperature limit of detectors. |
| Lattimer et al. [62] | Temperature, velocity fields | Neural network based on CNN | FET | Applying a ROM to the data beforehand as a physical link, reducing the complexity of the problem while retaining the physics. |
| Nguyen et al. [63] | Temperature fields, flow fields… (CFD output) | ANN | OCT | A two-step calculation is performed whereby fire source properties generated by a data-driven model are implemented in a physical (CFD) calculation. |
| Tam et al. [60] | Flashover time | Neural network-based | FET | Using a soft constraint on the data by introducing a temperature cut-off to account for the working temperature limit of detectors. |

| Article | Output of the Model | Model Algorithm | Physics-Informed Strategy | Implementation of the Physics-Informed Strategy |
|---|---|---|---|---|
| Bottero et al. [85] | Fire spread map | Neural-network-based | LCT | Loss terms for the PDE residual, the initial condition, and the boundary condition were included in the total loss used for model training. |
| Lattimer et al. [87,88] | Spatiotemporal evolution of fire | Regression-based (ROM, DEIM) | ACT | Mathematically reduced form for solving a complex system |
| Vogiatzoglou et al. [86] | Spatiotemporal evolution of fire, wind velocity and heat transfer coefficient | Neural-network-based | LCT | A loss function was adopted that penalises deviations from the governing PDEs |
| Jadouli et al. [84] | Wildfire risk score | Neural-network-based | ACT | The physics-embedded entropy layer uses the Boltzmann–Gibbs entropy equation from statistical mechanics, enabling the architecture to inherently compute a physically meaningful quantity |
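
The LCT entries in the table above combine a PDE-residual term with initial- and boundary-condition terms in a single training loss. A minimal sketch of that composition is given here, assuming a 1D heat equation u_t = α u_xx on x ∈ [0, 1] rather than the wildfire PDEs of the cited works. The names `ALPHA`, `pde_residual`, `lct_loss`, the weights `w_pde`, `w_ic`, `w_bc`, and the use of finite differences instead of automatic differentiation are all illustrative assumptions, not taken from any reviewed paper.

```python
import math

ALPHA = 0.1  # assumed thermal diffusivity (illustrative value only)

def pde_residual(u, x, t, h=1e-3):
    """Central finite-difference residual of u_t - ALPHA * u_xx at (x, t)."""
    u_t = (u(x, t + h) - u(x, t - h)) / (2 * h)
    u_xx = (u(x + h, t) - 2 * u(x, t) + u(x - h, t)) / h**2
    return u_t - ALPHA * u_xx

def lct_loss(u, w_pde=1.0, w_ic=1.0, w_bc=1.0, n=11):
    """Weighted sum of mean-squared PDE, initial- and boundary-condition errors."""
    xs = [i / (n - 1) for i in range(n)]        # collocation points, x in [0, 1]
    ts = [0.5 * i / (n - 1) for i in range(n)]  # collocation points, t in [0, 0.5]
    interior = [(x, t) for x in xs[1:-1] for t in ts[1:]]
    l_pde = sum(pde_residual(u, x, t) ** 2 for x, t in interior) / len(interior)
    # Assumed initial condition: u(x, 0) = sin(pi * x)
    l_ic = sum((u(x, 0.0) - math.sin(math.pi * x)) ** 2 for x in xs) / n
    # Assumed boundary conditions: u(0, t) = u(1, t) = 0
    l_bc = sum(u(0.0, t) ** 2 + u(1.0, t) ** 2 for t in ts) / (2 * n)
    return w_pde * l_pde + w_ic * l_ic + w_bc * l_bc

def exact(x, t):
    """Analytical solution of the assumed problem; should incur almost no penalty."""
    return math.exp(-ALPHA * math.pi**2 * t) * math.sin(math.pi * x)

def non_physical(x, t):
    """Matches the initial and boundary conditions but never cools down."""
    return math.sin(math.pi * x)

loss_exact = lct_loss(exact)
loss_bad = lct_loss(non_physical)
print(f"physical candidate: {loss_exact:.2e}, non-physical candidate: {loss_bad:.2e}")
```

In this sketch the non-physical candidate satisfies the initial and boundary conditions exactly, so only the PDE term penalises it. In a trained surrogate, `u` would be the model prediction, and the relative weights would control how strongly each physical constraint is enforced.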

| Article | Output of the Model | Model Algorithm | Physics-Informed Strategy | Implementation of the Physics-Informed Strategy |
|---|---|---|---|---|
| Liu et al. [131] | Splitting tensile strength of recycled aggregate concrete | Partition-based and regression-based | FET | Using known fracture models (prior knowledge) for feature selection |
| Onyelowe et al. [132] | Tensile strength of recycled aggregate concrete | Partition-based and regression-based | FET | Key features were determined through prior domain knowledge, and sensitivity analyses were used to reduce features. |
| Peng et al. [130] | Yield strength of alloys | Regression-based and partition-based | FET | Correlation analysis, maximal information coefficient, and synthetic physically linked features were used |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yarmohammadian, R.; Put, F.; Van Coile, R. Physics-Informed Surrogate Modelling in Fire Safety Engineering: A Systematic Review. Appl. Sci. 2025, 15, 8740. https://doi.org/10.3390/app15158740
