1. Introduction

With the widespread use of the internet, the size of emerging datasets has increased significantly. This rapid growth leads to challenges such as difficulty in extracting relevant information in real-world applications, longer processing times, hardware limitations, and higher computational complexity. Consequently, feature selection methods, which hold an important place in data mining, are gaining importance [1]. Feature selection methods aim to identify relevant features before models are trained on real-world datasets that contain many features. Relevant features are those directly related to the target concept, being neither irrelevant nor redundant; irrelevant features do not affect the target concept, whereas redundant features carry duplicate or unnecessary information [2]. Given a dataset with N features, there exist $2^N$ possible feature subsets. As the number of features increases, the search space grows exponentially, making it increasingly difficult to find the optimal subset. Removing unnecessary features reduces both training time and memory requirements during model training [2]. Moreover, reducing the dataset size facilitates simpler representation, visualization, and interpretation of the data [3]. In line with these advantages, various feature selection techniques have been developed in the literature. These methods are generally classified into three main categories: filter methods, wrapper methods, and hybrid methods [4].
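As a quick illustration of this growth (an illustrative sketch; the helper name `all_subsets` is hypothetical and not part of the study), the Python snippet below enumerates every subset for a small N and prints 2^N for a few larger values:

```python
from itertools import combinations


def all_subsets(n_features):
    """Yield every feature subset as a tuple of selected (0-based) feature indices."""
    for size in range(n_features + 1):
        yield from combinations(range(n_features), size)


# Exhaustive enumeration is still feasible for a handful of features.
print(sum(1 for _ in all_subsets(4)))  # 16 subsets, i.e. 2**4

# Each additional feature doubles the search space.
for n in (10, 20, 30):
    print(n, 2 ** n)
```

At N = 30, exhaustive search would already require more than a billion subset evaluations, each of which involves training and testing a model.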

Filter methods operate independently of the learning algorithm: features are scored according to statistical criteria, and selection is performed based on these scores [5]. These methods are generally faster than wrapper methods. However, because it is difficult to determine the threshold that separates relevant from irrelevant features, filter methods are often considered less effective than wrapper methods [3].
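A filter method can be sketched in a few lines. The Python example below is illustrative only: it uses the absolute Pearson correlation between each feature and the class label as the statistical criterion, and the threshold of 0.6 is an arbitrary assumption, which also shows how the outcome depends on the threshold choice discussed above. The helper names `pearson` and `filter_select` are hypothetical.

```python
from statistics import mean


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return 0.0 if sx == 0 or sy == 0 else cov / (sx * sy)


def filter_select(X, y, threshold):
    """Score each feature without any classifier and keep those scoring >= threshold."""
    n_features = len(X[0])
    scores = [abs(pearson([row[j] for row in X], y)) for j in range(n_features)]
    selected = [j for j, s in enumerate(scores) if s >= threshold]
    return selected, scores


X = [[1.0, 5.0, 0.3], [2.0, 3.0, 0.1], [3.0, 4.0, 0.4], [4.0, 1.0, 0.2]]
y = [0, 0, 1, 1]
selected, scores = filter_select(X, y, threshold=0.6)
print(selected)  # [0]
```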

Wrapper methods comprise a feature evaluation criterion, a search strategy, and a stopping criterion. Unlike filter methods, wrapper methods use the performance of the learning algorithm as the evaluation metric and select the feature subset that achieves the best performance [6]. Based on their search strategies, wrapper methods can be categorized into three main groups: sequential, bio-inspired, and iterative methods. Sequential search methods are easy to implement and fast. Bio-inspired methods incorporate randomness into the search process to avoid getting trapped in local minima, while iterative methods aim to avoid combinatorial search [4].
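The three wrapper components can be made concrete with sequential forward selection, one of the sequential strategies mentioned above. In this hypothetical sketch, `evaluate` plays the role of the evaluation criterion (in practice, for example, the validation accuracy of a classifier trained on the subset), greedy addition is the search strategy, and the loop stops when no candidate improves the score. The toy scoring function is an assumption for demonstration only.

```python
def sequential_forward_selection(n_features, evaluate, max_features=None):
    """Greedy wrapper search: repeatedly add the feature that most improves the score.

    `evaluate(subset)` returns a performance score (higher is better).
    """
    max_features = n_features if max_features is None else max_features
    selected, best_score = [], float("-inf")
    while len(selected) < max_features:
        candidates = [j for j in range(n_features) if j not in selected]
        # Search strategy: try every remaining feature; ties go to the larger index.
        score, j = max((evaluate(selected + [j]), j) for j in candidates)
        if score <= best_score:  # stopping criterion: no improvement
            break
        selected.append(j)
        best_score = score
    return selected, best_score


# Toy criterion: features 1 and 3 are useful; every feature costs 0.1.
useful = {1, 3}


def toy_score(subset):
    return len(useful & set(subset)) - 0.1 * len(subset)


selected, score = sequential_forward_selection(5, toy_score)
print(selected, round(score, 2))  # [3, 1] 1.8
```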

Hybrid methods have been developed to overcome the disadvantages of filter and wrapper methods. These approaches incorporate both a feature selection algorithm and a classification algorithm and perform both processes simultaneously [7].

Feature selection methods aim to determine which features should be selected from a dataset. The resulting solutions take binary values of 0 or 1, indicating whether each feature is excluded or included. Obtaining an exact solution requires evaluating all possible subsets, which significantly increases the computational cost. As datasets grow, heuristic methods that seek near-optimal solutions have become increasingly common [8]. Continuous optimization algorithms must be discretized before they can be applied to such decision problems, and numerous discretization methods have been proposed in the literature for this purpose. Discrete versions of these algorithms have been developed by defining a re-update rule, as in the discrete Jaya algorithm (DJAYA) [9], by using the XOR operator, as in the Jaya-based binary optimization algorithm (JAYAX) [10], or by using transfer functions [11, 12]. Although these algorithms do not always guarantee the best solution, they can produce acceptable solutions within a reasonable time. Despite the many optimization methods available in the literature, researchers continue to develop new approaches specifically for feature selection because of unresolved issues such as entrapment in local minima and premature convergence [11].

The WSO is an optimization method inspired by the hunting behavior of white sharks [13]. Developed in 2022, the WSO algorithm was designed to solve continuous optimization problems. It has been recommended for further development because of its applicability to high-dimensional engineering problems and its ability to produce fast and accurate solutions [13].

Motivation and Contribution of the Study

The increasing size and complexity of real-world data have made feature selection a critical step in data mining and machine learning processes. As the number of features grows, the search space required to identify the optimal subset expands exponentially; this situation increases computational complexity and adversely affects model performance. Therefore, developing efficient and scalable feature selection methods is of great importance.

Although many metaheuristic algorithms have been proposed for feature selection, most of these algorithms face challenges such as premature convergence, imbalance between exploration and exploitation, or insufficient adaptation to binary search spaces. The WSO, originally developed for continuous problem domains, has strong exploration capabilities and, inspired by the predatory behavior of sharks, provides a promising foundation for binary optimization problems.

In this study, to overcome the aforementioned limitations and leverage WSO’s potential in binary problem domains, a binary version of WSO (bWSO) specifically adapted for feature selection tasks is proposed. The contributions of this study to the literature can be summarized as follows:

A novel logarithmic transfer function offering a flexible and adaptive mechanism for converting continuous values into binary decisions is proposed.

The original WSO algorithm is adapted to binary problem solving by incorporating the proposed logarithmic transfer function along with eight other commonly used transfer functions, resulting in the bWSO algorithm.

The performance of the bWSO-log algorithm is evaluated on nineteen benchmark datasets, and it is observed to outperform other well-known metaheuristic algorithms in the context of feature selection.

In terms of classification accuracy, the algorithm is comprehensively compared with the Artificial Algae Algorithm (AAA) [11], Bat Optimization Algorithm (BAT) [14], Firefly Algorithm (FA) [15], Grey Wolf Optimizer (GWO) [16], Moth Flame Optimizer (MFO) [17], Multi-Verse Optimizer (MVO) [18], Particle Swarm Optimization (PSO) [19], and Whale Optimization Algorithm (WOA) [20].

A simple yet effective feature selection framework combining WSO’s powerful search mechanism with adaptive discretization techniques is presented.

The remaining sections of the paper are organized as follows:

Section 2 provides a brief overview of related work.

Section 3 introduces the original WSO algorithm.

Section 4 presents the details of the proposed bWSO approach.

Section 5 describes the experimental setup.

Section 6 presents the results along with a discussion.

Section 7 offers conclusions and suggestions for future work.

2. Related Works

Swarm intelligence-based metaheuristic optimization algorithms have recently been widely used not only for solving continuous-valued problems but also for addressing feature selection problems effectively [21]. This section examines feature selection approaches based on swarm intelligence optimization algorithms.

Various methods have been employed to discretize swarm intelligence-based optimization algorithms. These include the approaches in [8, 10], threshold-based transformation approaches [22], transfer functions [9, 23, 24], entropy-based methods [25], similarity-based approaches [26, 27], and hybrid methods [28].

In most metaheuristic algorithms, an initial set of solutions (i.e., a population) is generated randomly, and these solutions are evaluated using a fitness function [26]. New generations are then produced iteratively until a termination criterion is met, and at each step the solutions are re-evaluated according to the fitness function [29, 30].

The WSO algorithm is a bio-inspired optimization method based on swarm intelligence. Therefore, this study focuses on the effects of swarm intelligence-based optimization algorithms on feature selection within the context of the literature.

Table 1 presents some of the methods used for this purpose.

3. Original White Shark Optimizer (WSO)

The WSO is a nature-inspired metaheuristic optimization algorithm developed by Braik et al. in 2022 [

13]. The fundamental principle of the algorithm is based on the dynamic and directional movements of white sharks during the hunting process. These creatures prefer to detect their prey using their sense of smell and hearing rather than directly chasing it. Their advanced hearing abilities enable them to explore the search space effectively. As illustrated in

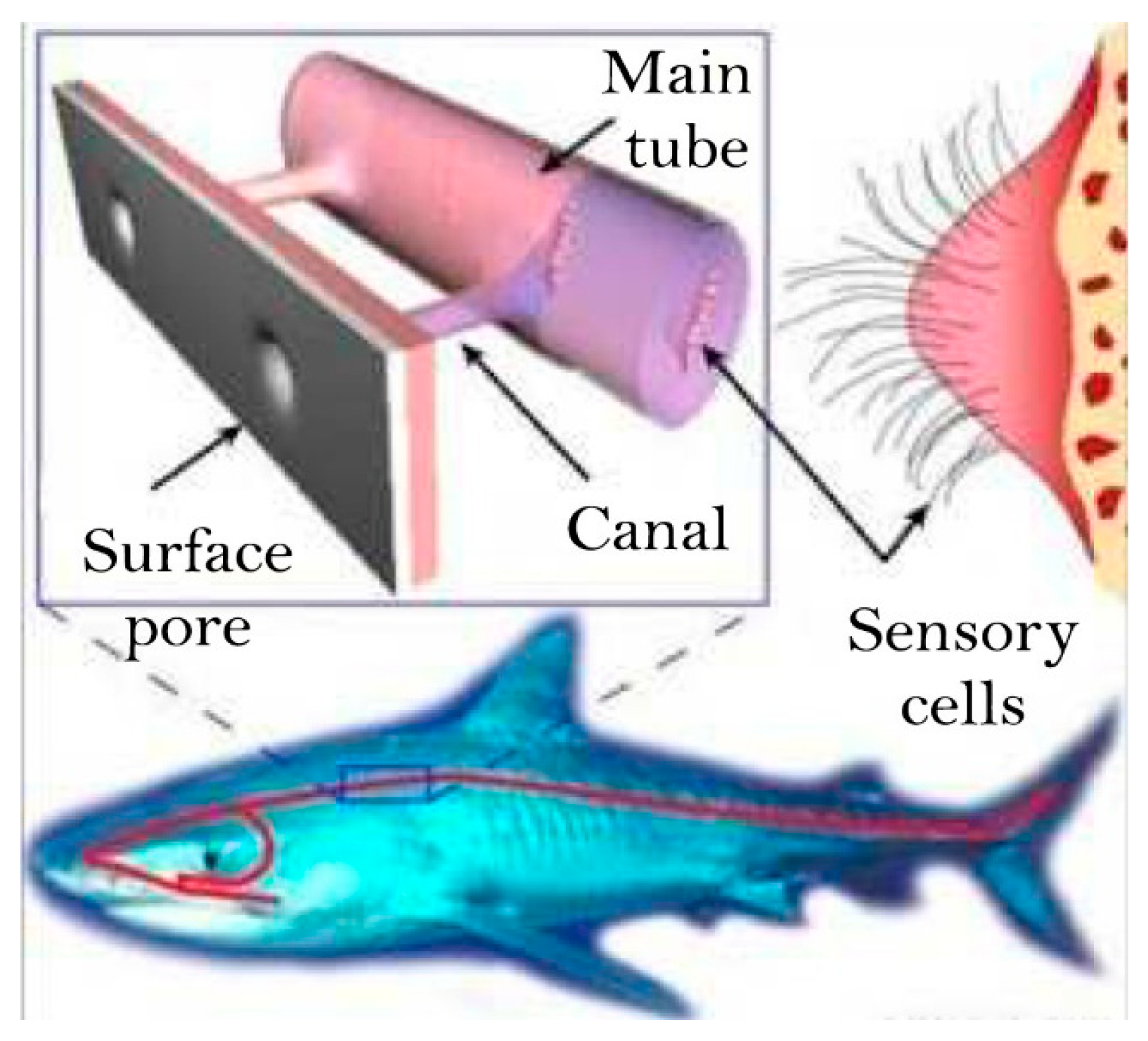

Figure 1, white sharks can detect changes in water pressure, electromagnetic waves, and wave frequencies through the lateral lines extending along both sides of their bodies, allowing them to locate the prey.

The structure of the algorithm is designed to model exploration and exploitation behaviors in the solution space based on these biological features. WSO begins with a randomly generated initial population, where each individual is represented by a d-dimensional solution vector corresponding to the problem’s dimensionality. The initial positions of individuals are randomly selected within predefined lower and upper bounds. The quality of each solution is evaluated using a fitness function.

The WSO algorithm operates based on three main behavioral models:

Moving towards prey: White sharks estimate the approximate position of their prey by sensing wave trails and odor signals. This orientation is modeled through velocity and position updates, allowing individuals to explore better regions in the solution space.

Moving towards optimal prey: Similarly to fish swarms, white sharks perform random exploratory behaviors to locate their prey. This mechanism enables a broader coverage of the solution space and reduces the risk of premature convergence.

Movement towards the best white shark: Individuals move toward the position of the best-performing shark (closest to the prey) and tend to cluster around it. This behavior simulates collective movement and enhances information sharing, leading to faster convergence to better solutions.

In each iteration, the population is updated according to these three strategies, and the best-found solution is preserved. Additionally, the tendency of individuals to move closer to one another reinforces dense convergence within the solution space. The overall functioning of the algorithm is summarized in the pseudo-code presented in Algorithm 1.

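| Algorithm 1 | Pseudo-code of WSO [13] |
|---|---|
| 1: | Initialize the parameters of the problem |
| 2: | Initialize the parameters of WSO |
| 3: | Randomly generate the initial positions of WSO |
| 4: | Initialize the velocity of the initial population |
| 5: | Evaluate the positions of the initial population |
| 6: | while $k <$ maximum number of iterations do |
| 7: | Update the WSO control parameters (including $mv$ and $s_s$) using their corresponding update rules defined in the algorithm. |
| 8: | for $i = 1$ to $n$ do |
| 9: | Update the velocity $v_{k+1}^{i}$ of the $i$-th white shark (moving towards prey) |
| 10: | end for |
| 11: | for $i = 1$ to $n$ do |
| 12: | if $rand < mv$ then |
| 13: | Update the position $w_{k+1}^{i}$ by random exploration (moving towards optimal prey) |
| 14: | else |
| 15: | Update the position $w_{k+1}^{i}$ using the velocity $v_{k}^{i}$ |
| 16: | end if |
| 17: | end for |
| 18: | for $i = 1$ to $n$ do |
| 19: | if $rand \le s_s$ then |
| 20: | Compute the distance $D_w$ between the best white shark and the $i$-th white shark |
| 21: | if $i = 1$ then |
| 22: | Update $w_{k+1}^{i}$ around the best white shark using $D_w$ |
| 23: | else |
| 24: | Compute a candidate position $w'^{i}_{k+1}$ around the best white shark using $D_w$ |
| 25: | Update $w_{k+1}^{i}$ by combining $w_{k}^{i}$ and $w'^{i}_{k+1}$ (movement towards the best white shark) |
| 26: | end if |
| 27: | end if |
| 28: | end for |
| 29: | Adjust the positions of the white sharks that proceed beyond the boundary |
| 30: | Evaluate and update the new positions |
| 31: | Increment the iteration counter: $k = k + 1$ |
| 32: | end while |
| 33: | Return the optimal solution obtained so far |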

Beyond the biological and behavioral modeling of the algorithm's structure, there are also methodological reasons for choosing WSO for the feature selection problem among the numerous optimization algorithms proposed in the literature. First, WSO provides an effective balance between the exploration and exploitation phases, which is particularly beneficial for complex, multimodal problems such as feature selection. Second, WSO has a low computational cost and has demonstrated competitive performance on various high-dimensional benchmark problems. Finally, its search mechanism, inspired by the predatory behavior of white sharks, offers flexible adaptation capabilities that align well with the structure of binary search spaces. These characteristics make WSO a suitable and potentially powerful candidate for the feature selection problem addressed in this study.

4. The Proposed Binary White Shark Optimizer

The WSO algorithm is designed to find optimal solutions in continuous search spaces; applying it to feature selection problems therefore requires a discretization process. This section presents the bWSO-log algorithm developed specifically for feature selection, together with its variants obtained using different discretization strategies.

bWSO-log: The logarithmic transfer function described in Section 4.2 is applied in each position update step of the original WSO algorithm.

bWSO-0: In this version, discretization is performed using a direct thresholding method without employing any transfer function. The obtained continuous value is compared with a randomly selected threshold; if it is greater, it is assigned 1, otherwise 0.

bWSO_s: Instead of the logarithmic function, the S-Shape transfer function is used.

bWSO_v: Instead of the logarithmic function, the V-Shape transfer function is used.

To rigorously assess the performance contribution of the proposed logarithmic transfer function, a baseline variant without any transfer function, denoted as bWSO-0, was also included in the evaluation. Accordingly, the effectiveness of the logarithmic function was empirically analyzed by comparing its optimization performance against the S-Shape, V-Shape, and transfer-function-free (bWSO-0) counterparts.

This section is organized as follows:

Section 4.1 explains the Problem Formulation and Solution Representation.

Section 4.2 discusses the binarization strategy applied to the original WSO algorithm.

4.1. Problem Formulation and Solution Representation

In this study, the feature selection problem is addressed as a binary optimization task. The primary objective is to identify the optimal subset of features that maximizes classification accuracy while minimizing the number of selected features.

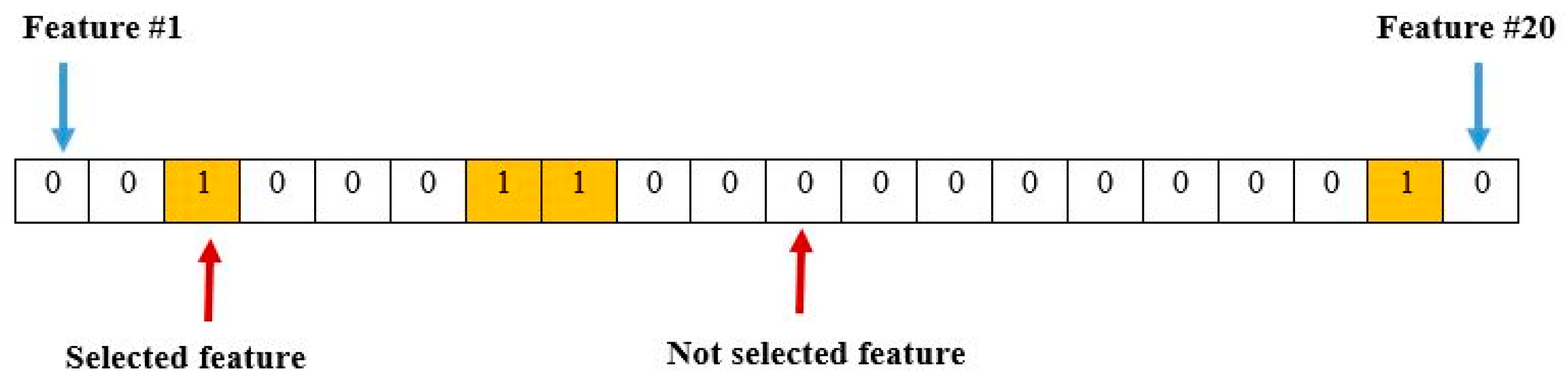

Each candidate solution is represented as a binary vector whose length equals the number of features in the dataset, as shown in Equation (1).

$$X = [x_1, x_2, \ldots, x_N], \quad x_j \in \{0, 1\} \tag{1}$$

where $N$ represents the total number of features; a value of $x_j = 1$ indicates that the $j$-th feature is selected, while $x_j = 0$ indicates that the $j$-th feature is not selected. An example of the solution representation is shown in Figure 2.

Figure 2 illustrates the binary vector representation of a candidate solution, where each element corresponds to a feature in the dataset. A value of 1 indicates that the corresponding feature is selected, whereas a value of 0 means the feature is not selected. For example, in the given illustration, features 3, 7, 8 and 19 are included in the selected subset.
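Decoding a candidate solution into the list of selected features is straightforward. The sketch below assumes a hypothetical 20-feature dataset (the total length in Figure 2 is not stated in the text) and reproduces the selected positions 3, 7, 8 and 19 from the example, using 1-based numbering as in the figure description. The function name is hypothetical.

```python
def selected_features(mask):
    """Return 1-based indices of the features whose bit is 1 in a binary solution vector."""
    return [j + 1 for j, bit in enumerate(mask) if bit == 1]


mask = [0] * 20              # hypothetical 20-feature dataset
for j in (3, 7, 8, 19):      # 1-based positions from the Figure 2 example
    mask[j - 1] = 1
print(selected_features(mask))  # [3, 7, 8, 19]
```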

Fitness Function: In feature selection problems, the goal is to identify an ideal subset by minimizing the number of selected features while maximizing classification performance. The features selected by each search agent in the algorithm are evaluated using a fitness function based on the K-nearest neighbor (KNN) classifier to estimate classification accuracy. The fitness function is used by optimization algorithms to measure the performance of solution vectors. The fitness function used in this study is shown in Equation (2).

$$\text{Fitness} = \alpha \cdot \gamma_R(D) + \beta \cdot \frac{|R|}{N} \tag{2}$$

where Fitness represents the fitness value of a white shark, $\gamma_R(D)$ denotes the classification error rate, $|R|$ is the number of selected features, and $N$ is the total number of features. The parameters α and β weight the classification error rate and the ratio of selected features, respectively. In the literature, they are commonly chosen such that $\beta = 1 - \alpha$ [11, 28, 33, 38]. In this study, α was set to 0.99 and β to 0.01.
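The weighted-sum fitness can be sketched as follows, assuming the common form Fitness = α · (error rate) + β · (selected features / total features), which is minimized, with α = 0.99 weighting the error rate and β = 0.01 weighting the feature ratio as in this study. The function name is hypothetical; in practice the error rate would come from the K-NN classifier described next.

```python
def fitness(error_rate, n_selected, n_total, alpha=0.99, beta=0.01):
    """Weighted-sum fitness (lower is better): classification error plus feature-ratio penalty."""
    if n_total <= 0:
        raise ValueError("n_total must be positive")
    return alpha * error_rate + beta * (n_selected / n_total)


# Example: 10% error with 5 of 20 features selected.
print(round(fitness(0.10, 5, 20), 4))  # 0.1015
```

Because α is much larger than β, a lower error rate almost always dominates, and the feature-ratio term mainly breaks ties between subsets with similar accuracy.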

Classification Process: The K-nearest neighbor (K-NN) classifier is commonly used in feature selection problems [11, 28, 33]. The K-NN classifier uses the Euclidean distance given in Equation (3), and K was set to 5 when evaluating candidate feature subsets.

$$d(p, q) = \sqrt{\sum_{j=1}^{N} \left(p_j - q_j\right)^2} \tag{3}$$

where $p$ and $q$ denote two instances represented as $N$-dimensional feature vectors, with $N$ the total number of features. The term $p_j$ refers to the value of the $j$-th feature of instance $p$, and similarly $q_j$ corresponds to the $j$-th feature of instance $q$.
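A from-scratch sketch of the K-NN evaluation with the Euclidean distance, restricted to the features selected by a binary mask, is shown below. It is illustrative only: the study itself used the EvolopyFS library, whose internal implementation is not reproduced here, and the helper names and toy data are hypothetical. Vote ties are resolved by `collections.Counter.most_common`, which is an assumption of this sketch.

```python
import math
from collections import Counter


def masked_euclidean(p, q, mask):
    """Euclidean distance computed only over features whose mask bit is 1."""
    return math.sqrt(sum((a - b) ** 2 for a, b, m in zip(p, q, mask) if m))


def knn_predict(train_X, train_y, x, mask, k=5):
    """Predict the label of x by majority vote among its k nearest training instances."""
    nearest = sorted(range(len(train_X)),
                     key=lambda i: masked_euclidean(train_X[i], x, mask))[:k]
    votes = Counter(train_y[i] for i in nearest)
    return votes.most_common(1)[0][0]


def knn_error_rate(train_X, train_y, test_X, test_y, mask, k=5):
    """Fraction of test instances misclassified when only the selected features are used."""
    wrong = sum(knn_predict(train_X, train_y, x, mask, k) != y
                for x, y in zip(test_X, test_y))
    return wrong / len(test_X)


# Toy data: features 0 and 1 separate the classes, feature 2 is pure noise.
train_X = [[0, 0, 50], [1, 0, -50], [0, 1, 30], [1, 1, -20], [0, 0.5, 10],
           [10, 10, 0], [9, 10, 5], [10, 9, -5], [9, 9, 40], [10, 10.5, -40]]
train_y = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
test_X = [[0.5, 0.5, 100], [9.5, 9.5, -100]]
test_y = [0, 1]
print(knn_error_rate(train_X, train_y, test_X, test_y, mask=[1, 1, 0]))  # 0.0
```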

4.2. Discretization Strategy

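In the literature, discretization is performed using various transfer functions, and different thresholding approaches are employed to convert their outputs into binary values (0 or 1). The thresholding method used in this study is given in Equation (4).

$$x_{k+1}^{i,d} = \begin{cases} 1, & \text{if } T\left(w_{k+1}^{i,d}\right) > rand \\ 0, & \text{otherwise} \end{cases} \tag{4}$$

where $w_{k+1}^{i,d}$ is the continuous position of the $i$-th white shark in dimension $d$, $x_{k+1}^{i,d}$ is the corresponding binary value, $T(\cdot)$ is the transfer function (for bWSO-0, no transfer function is applied), and $rand$ is a random number uniformly distributed in the range [0, 1]. In other words, if the transformed position value exceeds the randomly generated value, the bit is set to 1; otherwise, it is set to 0.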
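The thresholding rule can be sketched as follows. This is a minimal illustration, assuming that the transfer-function output is compared with a uniform random number drawn from [0, 1); with the default identity transfer it corresponds to the transfer-function-free bWSO-0 variant. The name `binarize` is hypothetical.

```python
import random


def binarize(position, transfer=lambda w: w, rng=None):
    """Threshold rule: bit = 1 if transfer(w) > rand, else 0, for each dimension.

    The default identity transfer mimics bWSO-0 (direct comparison with a random number).
    """
    if rng is None:
        rng = random.Random()
    return [1 if transfer(w) > rng.random() else 0 for w in position]


rng = random.Random(7)  # seeded only to make the draw reproducible
print(binarize([0.9, 0.1, 0.5, 2.0, -1.0], rng=rng))
```

Values of 1 or more always map to 1 and values of 0 or less always map to 0; values in between are set to 1 with probability equal to the value itself.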

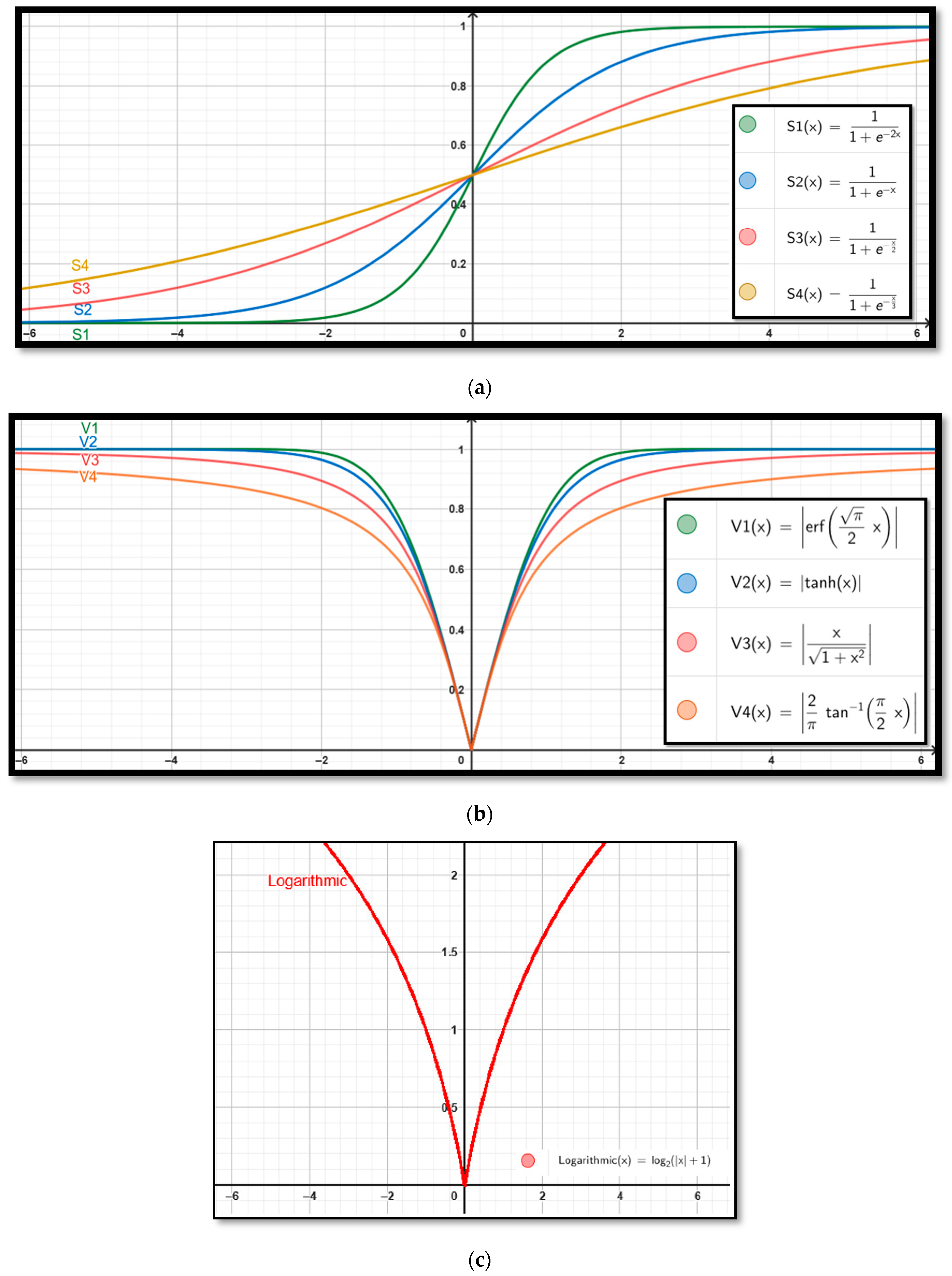

In this study, to evaluate the impact of discretization functions, the bWSO-0 algorithm was first implemented without any transfer function. Experiments were then conducted using the S-shaped, V-shaped, and proposed logarithmic transfer functions. The transfer functions used in all proposed bWSO variants are presented in Table 2 and Figure 3.

Figure 3 presents the graphical representation of the transfer functions used in the bWSO variants, including S-shaped, V-shaped, and the proposed logarithmic transfer function. These curves illustrate how the continuous values generated by the optimization algorithm are converted into binary values (0 or 1) during the discretization process.

The logarithmic transfer function provides a more flexible and gradual probability distribution along the transformation curve than the traditional S-shaped and V-shaped functions. This characteristic is intended to help the algorithm maintain a better balance between exploration and exploitation during the search, reducing the risk of entrapment in local minima and improving solution quality. In addition, the monotonic structure of the logarithmic function offers a more controlled binary conversion mechanism in the solution space.
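For comparison, two common members of the S-shaped and V-shaped families are sketched below: the standard sigmoid and |tanh(x)|. Whether these coincide with the specific S- and V-shaped functions listed in Table 2 is an assumption, and the proposed logarithmic function is not reproduced here because its definition appears only in Table 2. Either function could be passed as the transfer function of a thresholding rule such as Equation (4).

```python
import math


def s_shaped(x):
    """Standard sigmoid 1 / (1 + e^-x), written so that it cannot overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def v_shaped(x):
    """|tanh(x)|: symmetric about 0 and approaching 1 as |x| grows."""
    return abs(math.tanh(x))


for x in (-4.0, -1.0, 0.0, 1.0, 4.0):
    print(f"x={x:+.1f}  S={s_shaped(x):.3f}  V={v_shaped(x):.3f}")
```

The S-shaped curve maps large negative positions to probabilities near 0, whereas the V-shaped curve depends only on the magnitude of the position, so large negative and large positive values both yield probabilities near 1.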

The pseudocode of the proposed bWSO algorithm is provided in Algorithm 2.

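| Algorithm 2 | Pseudo-code of binary WSO |
|---|---|
| 1: | Initialize the parameters of the problem |
| 2: | Initialize the parameters of WSO |
| 3: | Randomly generate the initial positions of WSO |
| 4: | Initialize the velocity of the initial population |
| 5: | Evaluate the positions of the initial population |
| 6: | while $k <$ maximum number of iterations do |
| 7: | Update the WSO control parameters (including $mv$ and $s_s$) using their corresponding update rules defined in the algorithm. |
| 8: | for $i = 1$ to $n$ do |
| 9: | Update the velocity $v_{k+1}^{i}$ of the $i$-th white shark |
| 10: | end for |
| 11: | for $i = 1$ to $n$ do |
| 12: | if $rand < mv$ then |
| 13: | Update the position $w_{k+1}^{i}$ by random exploration (moving towards optimal prey) |
| 14: | Binarize $w_{k+1}^{i}$ using the transfer function and Equation (4) |
| 15: | else |
| 16: | Update the position $w_{k+1}^{i}$ using the velocity $v_{k}^{i}$ |
| 17: | Binarize $w_{k+1}^{i}$ using the transfer function and Equation (4) |
| 18: | end if |
| 19: | end for |
| 20: | for $i = 1$ to $n$ do |
| 21: | if $rand \le s_s$ then |
| 22: | Compute the distance $D_w$ between the best white shark and the $i$-th white shark |
| 23: | if $i = 1$ then |
| 24: | Update $w_{k+1}^{i}$ around the best white shark using $D_w$ |
| 25: | Binarize $w_{k+1}^{i}$ using the transfer function and Equation (4) |
| 26: | else |
| 27: | Compute a candidate position $w'^{i}_{k+1}$ around the best white shark using $D_w$ |
| 28: | Update $w_{k+1}^{i}$ by combining $w_{k}^{i}$ and $w'^{i}_{k+1}$ |
| 29: | Binarize $w_{k+1}^{i}$ using the transfer function and Equation (4) |
| 30: | end if |
| 31: | end if |
| 32: | end for |
| 33: | Adjust the positions of the white sharks that proceed beyond the boundary |
| 34: | Evaluate and update the new positions |
| 35: | Increment the iteration counter: $k = k + 1$ |
| 36: | end while |
| 37: | Return the optimal solution obtained so far |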

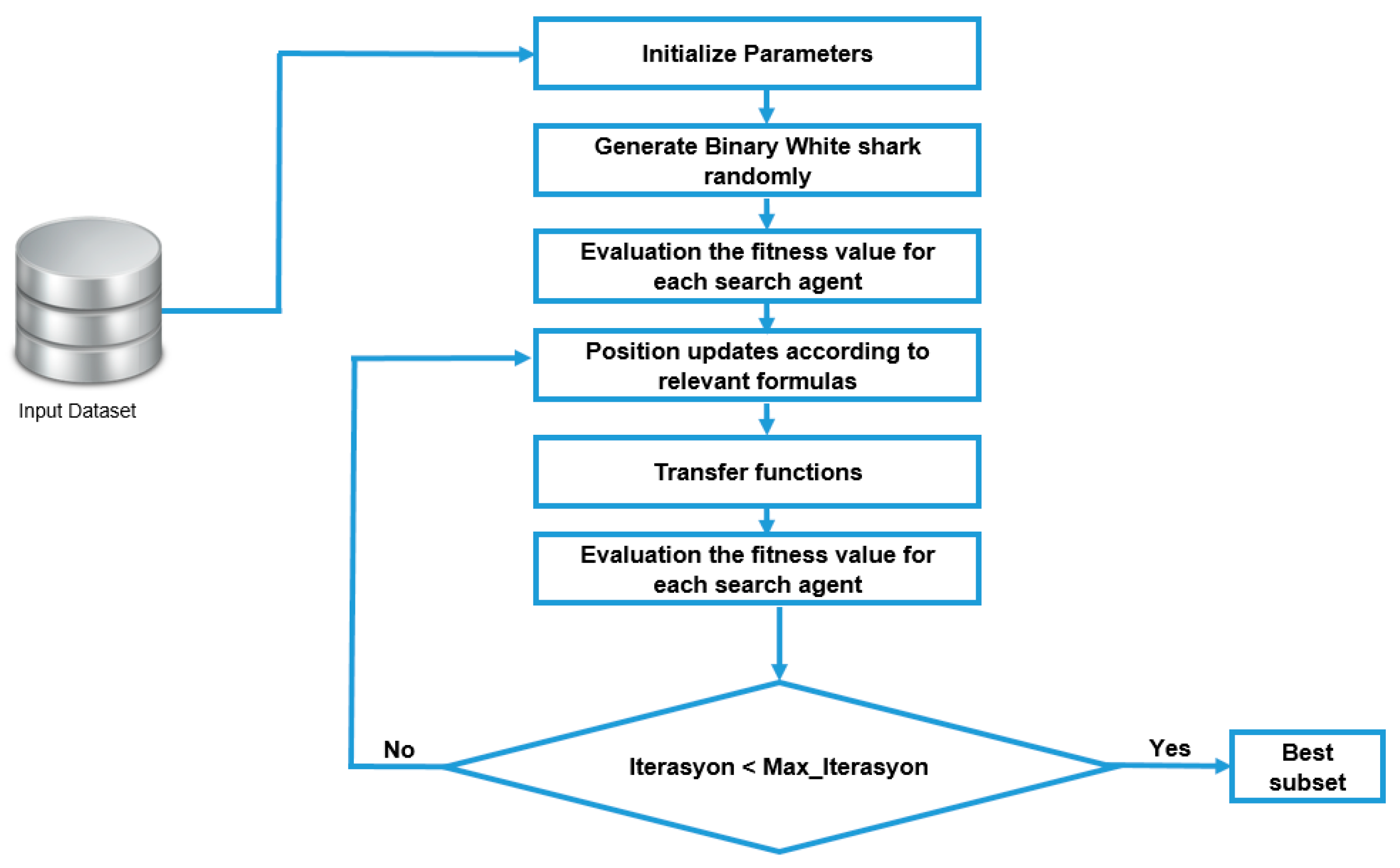

Figure 4 presents a block diagram of the step-by-step workflow of the proposed bWSO algorithm. The process begins by feeding the dataset into the algorithm. Next, the algorithm parameters are initialized and binary white sharks are generated randomly. Each search agent (white shark) is evaluated using the fitness function. The positions of the agents are then updated using the relevant formulas, and transfer functions are applied for discretization. The fitness values are recalculated for the new positions. This loop continues until the maximum number of iterations is reached, after which the best feature subset is returned as the output. The diagram thus shows how the components of the algorithm operate systematically.
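The loop in Figure 4 can be outlined as a generic binary search skeleton. This is a hedged sketch, not the bWSO implementation: the position update is a hypothetical Gaussian perturbation standing in for the WSO update rules, a sigmoid is assumed as the transfer function, and `evaluate` is any user-supplied fitness of a binary mask (for example, Equation (2) computed with K-NN). Only the control flow (initialize, evaluate, update, clip to bounds, discretize, re-evaluate, keep the best) mirrors the diagram.

```python
import math
import random


def sigmoid(w):
    """S-shaped transfer function; safe here because positions are clipped to [lower, upper]."""
    return 1.0 / (1.0 + math.exp(-w))


def run_binary_optimizer(n_features, evaluate, n_agents=30, max_iter=500,
                         lower=-6.0, upper=6.0, seed=0):
    """Generic loop mirroring Figure 4 (minimization). NOT the WSO update rules."""
    rng = random.Random(seed)

    def discretize(position):
        # Transfer function followed by a random threshold, as in Equation (4).
        return [1 if sigmoid(w) > rng.random() else 0 for w in position]

    # Initialization: continuous positions and the corresponding binary white sharks.
    positions = [[rng.uniform(lower, upper) for _ in range(n_features)]
                 for _ in range(n_agents)]
    masks = [discretize(p) for p in positions]
    fits = [evaluate(m) for m in masks]
    best_i = min(range(n_agents), key=fits.__getitem__)
    best_mask, best_fit = masks[best_i][:], fits[best_i]

    for _ in range(max_iter):
        for i in range(n_agents):
            # Placeholder update (hypothetical Gaussian step, not the WSO equations).
            moved = [w + rng.gauss(0.0, 0.5) for w in positions[i]]
            # Boundary handling: clip positions that leave [lower, upper].
            positions[i] = [min(max(w, lower), upper) for w in moved]
            masks[i] = discretize(positions[i])
            fits[i] = evaluate(masks[i])
            if fits[i] < best_fit:  # keep the best solution found so far
                best_mask, best_fit = masks[i][:], fits[i]
    return best_mask, best_fit


# Toy fitness: Hamming distance to a hypothetical "ideal" feature mask.
target = [1, 0, 1, 1, 0, 0]


def hamming(mask):
    return sum(b != t for b, t in zip(mask, target))


print(run_binary_optimizer(6, hamming, n_agents=10, max_iter=50, seed=1))
```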

5. Experiments

This section describes the experimental details. The datasets used in this study were selected because they are openly available [42], have varying levels of difficulty [28], and have been used in other studies in the literature [11, 28, 31, 37]. Detailed information about the datasets is provided in Table 3. All datasets listed in Table 3 were obtained from the UC Irvine Machine Learning Repository [42]. Each dataset was divided into training and testing sets, with ratios of 80% and 20%, respectively.
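A simple hold-out split with the 80%/20% ratio can be sketched as below. It assumes a plain random (non-stratified) shuffle with a fixed seed, since the text does not state whether stratification was used. `holdout_split` is a hypothetical helper, not the scikit-learn function.

```python
import random


def holdout_split(X, y, test_ratio=0.2, seed=None):
    """Shuffle instance indices and hold out `test_ratio` of them for testing."""
    if len(X) != len(y):
        raise ValueError("X and y must have the same length")
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)
    n_test = round(len(X) * test_ratio)
    test, train = idx[:n_test], idx[n_test:]
    return ([X[i] for i in train], [y[i] for i in train],
            [X[i] for i in test], [y[i] for i in test])


X = list(range(100))
y = [v % 2 for v in X]
train_X, train_y, test_X, test_y = holdout_split(X, y, test_ratio=0.2, seed=3)
print(len(train_X), len(test_X))  # 80 20
```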

In the experimental study, the proposed bWSO algorithm was compared with eight population-based algorithms widely used in the literature [11, 24, 28, 31, 37]: AAA [11], BAT [14], FA [15], GWO [16], MFO [17], MVO [18], PSO [19], and WOA [20]. To ensure a fair comparison, all nine algorithms (AAA, BAT, FA, GWO, MFO, MVO, PSO, WOA, and bWSO) were executed on the same platform. The experiments were conducted on a Windows 10 system equipped with an Intel(R) Core(TM) i7-10750H CPU @ 2.60 GHz (6 cores, 12 logical processors) and 16 GB of RAM. The code was written in Python 3.7.3 (64-bit) using the Visual Studio Code editor (version 1.92.2). The EvolopyFS library, a Python library containing frequently used metaheuristic optimization methods, was used in the experiments [43]. All experiments used a population size of 30 and 500 iterations, and each algorithm was run 30 times independently.

The hyperparameter values of all algorithms were set to default values that are commonly used in the relevant literature and have been shown to be effective in previous studies. In particular, the parameters of the WSO algorithm are those recommended in the original study [13], where they were selected after comprehensive parameter analyses. This approach reduces the need for additional parameter tuning and ensures a fair comparison among the algorithms, so that the experiments focus on their fundamental performance. The hyperparameter values of all algorithms are presented in Table 4.

Evaluation Criteria

The performance of the proposed method was analyzed and compared using various evaluation metrics. In this context, the obtained results were evaluated in terms of accuracy, recall, and F1-Score.

Accuracy: The ratio of correct predictions to all predictions, given in Equation (5). A value close to 1 indicates a highly accurate classification model.

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{5}$$

where

TP (True Positive): actually positive and predicted positive.

TN (True Negative): actually negative and predicted negative.

FP (False Positive): actually negative but predicted positive.

FN (False Negative): actually positive but predicted negative.

Recall: The ratio of correctly predicted positives to all actual positives, given in Equation (6). A value close to 1 indicates a model with high recall.

$$\text{Recall} = \frac{TP}{TP + FN} \tag{6}$$

F1-Score: The harmonic mean of Precision and Recall, given in Equation (7), where $\text{Precision} = TP / (TP + FP)$. A value close to 1 indicates a model with a high F1-Score.

$$\text{F1-Score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{7}$$
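Accuracy, Recall, and F1-Score (together with the Precision term used by F1-Score) follow directly from the confusion-matrix counts. The sketch below assumes binary counts; for multi-class datasets the counts would be computed per class and averaged, and the averaging scheme used in the study is not stated. The function name and example counts are hypothetical.

```python
def classification_metrics(tp, tn, fp, fn):
    """Accuracy, Recall, Precision and F1-Score from confusion-matrix counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    f1 = 2 * precision * recall / (precision + recall) if (precision + recall) else 0.0
    return {"accuracy": accuracy, "recall": recall, "precision": precision, "f1": f1}


m = classification_metrics(tp=40, tn=45, fp=5, fn=10)
print(round(m["accuracy"], 3), round(m["recall"], 3), round(m["f1"], 3))  # 0.85 0.8 0.842
```

Equivalently, F1-Score equals 2TP / (2TP + FP + FN), which here gives 80 / 95.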

Wilcoxon signed-rank test: This non-parametric test is frequently used in the literature to determine whether the difference between the paired results of two algorithms is statistically significant, particularly when the results are very close to each other. If the obtained p-value is greater than the significance level, the null hypothesis (H0) cannot be rejected, indicating no significant difference between the two methods. If the p-value is less than the significance level, H0 is rejected in favor of the alternative hypothesis (H1), which states that there is a significant difference. In this study, the significance level was set to 0.05 [44].
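For reference, the test can be sketched with the large-sample normal approximation. This is a simplified, hypothetical implementation (no continuity or tie correction) intended only to show the mechanics; `scipy.stats.wilcoxon` provides a complete implementation. The paired accuracy values below are invented for illustration.

```python
import math


def wilcoxon_signed_rank(x, y):
    """Two-sided Wilcoxon signed-rank test via the normal approximation.

    Zero differences are discarded; tied absolute differences get average ranks.
    Returns (W, p) where W = min(W+, W-).
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for t in range(i, j + 1):
            ranks[order[t]] = (i + j) / 2 + 1  # average of 1-based ranks i+1..j+1
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w = min(w_plus, w_minus)
    mean_w = n * (n + 1) / 4
    sd_w = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return w, p


# Hypothetical accuracies of two algorithms over 30 paired runs.
a = [0.90 + 0.001 * i for i in range(30)]
b = [0.88 + 0.0005 * i for i in range(30)]
w, p = wilcoxon_signed_rank(a, b)
print("reject H0" if p < 0.05 else "cannot reject H0")
```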

6. Results and Discussion

In this section, the proposed bWSO-log algorithm and the other algorithms were executed on the relevant datasets, and the experimental results were analyzed comprehensively. First, ten different versions of the bWSO algorithm were evaluated. The results obtained on the 19 datasets listed in Table 3 were compared, and performance comparisons between the bWSO versions and the other algorithms were based on the mean and standard deviation values from 30 independent runs of each algorithm.
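The per-algorithm summaries reported in the tables (mean, standard deviation, best, and worst over 30 runs) can be computed as below. The sketch assumes accuracy values, so "best" is the maximum, and uses the sample standard deviation; the text does not state which standard deviation was used. The function name is hypothetical.

```python
from statistics import mean, stdev


def summarize_runs(accuracies):
    """Mean, sample standard deviation, best (max) and worst (min) over independent runs."""
    return {
        "mean": mean(accuracies),
        "std": stdev(accuracies) if len(accuracies) > 1 else 0.0,
        "best": max(accuracies),
        "worst": min(accuracies),
    }


print(summarize_runs([0.8, 0.9, 1.0]))
```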

6.1. Comparison Between Versions of bWSO

Table 5 gives the mean, best, standard deviation, and worst accuracy values of the bWSO versions over 30 independent runs, with the best results highlighted in bold. In terms of mean accuracy, comparing bWSO-0 with the other bWSO versions shows that using an appropriate transfer function increases the success rate. The bWSO versions using V-shaped transfer functions are more successful than those using S-shaped transfer functions. In terms of overall average rank, the proposed logarithmic transfer function ranks first. Among the bWSO versions whose Recall values are given in Table 6, the proposed bWSO-log method achieves the best results on nine of the nineteen datasets. Among the bWSO versions whose F1-Score values are given in Table 7, the proposed bWSO-log method performs best on ten of the nineteen datasets.
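The average ranking mentioned above can be computed by ranking the methods on each dataset and averaging the ranks. The sketch below assumes the usual convention (rank 1 = highest mean accuracy, tied methods share the average rank); the exact ranking procedure behind Table 5 is not specified in the text, and the dataset names and values are hypothetical.

```python
def rank_with_ties(values, higher_is_better=True):
    """Rank values (1 = best); tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=higher_is_better)
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for t in range(i, j + 1):
            ranks[order[t]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def average_ranks(results):
    """results maps dataset -> mean accuracies, one per method in a fixed method order."""
    per_dataset = [rank_with_ties(scores) for scores in results.values()]
    n_methods = len(per_dataset[0])
    return [sum(r[m] for r in per_dataset) / len(per_dataset) for m in range(n_methods)]


results = {"D1": [0.90, 0.80, 0.70], "D2": [0.85, 0.85, 0.60]}
print(average_ranks(results))  # [1.25, 1.75, 3.0]
```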

6.2. Comparison with the State of the Art Approaches

In the second part of the experimental study, the proposed binary bWSO-log method was compared with algorithms widely used in the literature. All other algorithms included in the comparison were discretized using the S1 transfer function, which is frequently preferred in the literature. These algorithms are as follows: bAAA, bBAT, bFA, bGWO, bMFO, bMVO, bPSO, and bWOA.

Table 8 presents the classification accuracy of bWSO-log and the other algorithms. According to the results, bWSO-log achieved the best performance on eleven of the nineteen datasets, that is, on approximately 58% of them.

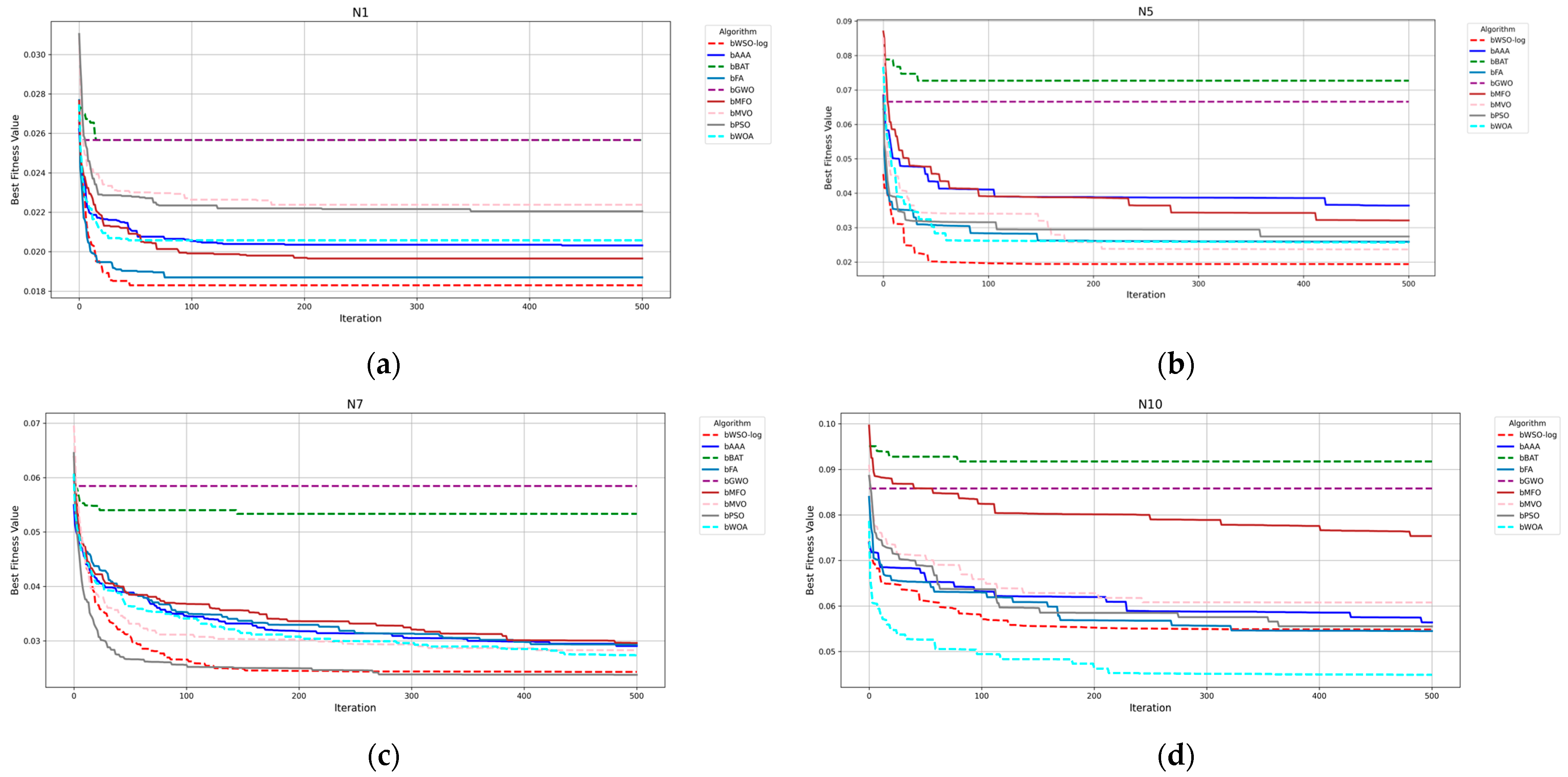

Figure 5 compares the convergence behavior of the proposed bWSO-log algorithm with that of the other algorithms on the N1, N5, N7, and N10 datasets. Only these four datasets are shown as examples to keep the figure legible; including all datasets would make it overly complex and difficult to interpret. The same procedure, applied to the full feature set, was used for every dataset. As the convergence curves show, the proposed algorithm achieves the best results on the N1 and N5 datasets and results close to the best on the N7 and N10 datasets.

Table 9 compares the accuracy rates of the proposed bWSO-log method with those of the other algorithms using the p-values of the Wilcoxon signed-rank test. In Table 9, p-values less than 0.05 are highlighted in bold; these indicate that the null hypothesis (H0) is rejected and the alternative hypothesis (H1) is accepted, that is, a statistically significant difference exists. Examination of Table 9 shows that the proposed bWSO-log method differs significantly from the other algorithms.

The mean, standard deviation, best, and worst F1-Score values are given in Table 10. The proposed bWSO-log method achieves better F1-Score values than the other algorithms.

7. Conclusions and Future Works

In this study, a novel approach called bWSO-log was proposed for addressing feature selection problems. The WSO, one of the swarm intelligence-based optimization algorithms, was adapted into a binary form using various discretization strategies, and the resulting bWSO-log method was evaluated on nineteen datasets with varying levels of complexity. In addition to commonly used algorithms in the literature (bAAA, bBAT, bFA, bGWO, bMFO, bMVO, bPSO, and bWOA), comparative analyses were conducted with bWSO variants employing S-Shape and V-Shape transfer functions.

To more clearly assess the contribution of the proposed logarithmic transfer function, a version of the algorithm without any transfer function, referred to as bWSO-0, was also included in the experiments. The results demonstrated that the logarithmic function not only significantly enhanced optimization performance but also offered a strong alternative to the widely used S-Shape and V-Shape transfer functions.

The findings indicate that the bWSO-log method is an effective and competitive solution for feature selection problems. However, this study also has certain limitations. First, the proposed algorithm was tested on a limited number of datasets; applying the method to a broader range of datasets from diverse domains would allow for a more comprehensive assessment of its generalizability. Furthermore, the performance of the algorithm is highly dependent on the structure of the logarithmic transfer function and the proper tuning of algorithm parameters, making parameter selection a critical factor.

For future research, different variants or parametric versions of the logarithmic function can be explored to examine their impact on performance, and the proposed algorithm can be compared with recently developed optimization algorithms. Additionally, the bWSO algorithm can be integrated with other feature selection strategies or deep learning-based models to develop more robust and comprehensive hybrid approaches.