1. Introduction

Unmanned drilling rigs are widely adopted because they integrate real-time monitoring systems that enable environmental awareness and dynamic risk alerts during drilling. In risk prediction, the motion characteristics of drill cuttings serve as key indicators of the drill bit’s working condition, the bottom-hole flow field, and other drilling dynamics. Accurate detection and tracking of drill cuttings can thus provide valuable insights into borehole conditions, offering significant engineering benefits.

With the rapid advancement of unmanned drilling technology over the past decade, numerous industrial methods for drill-cutting detection have emerged. Ref. [1] proposed an acoustic detection approach, analyzing the sound signals generated by drill cuttings impacting the pipe wall or moving through drilling fluids to infer their velocity and distribution. While suitable for downhole environments without requiring optical equipment, this method is highly susceptible to environmental noise and fluid property variations, limiting its measurement accuracy. With the increasing adoption of radar and laser technologies, some systems utilize Laser Doppler Velocimetry (LDV) to measure the velocity and particle size distribution of drill cuttings [2], while systems requiring high-precision real-time monitoring have employed millimeter-wave (MMW) radar for flow velocity and distribution measurements [3]. Although these methods offer high detection accuracy, they suffer from high costs and vulnerability to dust and electromagnetic noise under harsh conditions. Pressure sensors, due to their low cost and simple installation [4], are widely favored. By installing pressure sensors near the drill string or drill bit, the flow behavior of drill cuttings can be inferred from fluctuations in drilling fluid pressure. However, pressure-based methods cannot directly measure particle size or velocity, and downhole conditions such as high temperature, high pressure, and severe vibrations often lead to sensor zero drift or signal distortion, thereby compromising measurement stability.

Leveraging computer vision, drill-cutting monitoring can achieve low-cost, highly stable, all-weather, non-contact, real-time perception by simply addressing the issue of insufficient downhole illumination. As numerous solutions to the illumination challenge already exist, this study focuses on two core technologies critical for drill-cutting velocity measurement: object detection [5] and multi-object tracking (MOT) [6]. Object detection is employed to identify drill cuttings and provide precise localization, while the MOT algorithm ensures consistent target association across frames, thereby supporting accurate velocity computation.

Current object detection techniques can be broadly categorized into one-stage [7] and two-stage approaches [8]. The YOLO series [9], representing one-stage methods, excels in scenarios with strict real-time requirements due to its end-to-end training and rapid inference capabilities. In contrast, two-stage methods, such as the Region-Based Convolutional Neural Network (R-CNN), Fast R-CNN, and Faster R-CNN proposed by [10,11,12], enhance detection accuracy by generating candidate regions followed by classification and regression. However, their high computational complexity limits their applicability in real-time industrial monitoring.

Effective detection of flying drill cuttings requires the deployment of multi-object tracking (MOT) algorithms. Based on the independence of detection and tracking stages, MOT approaches can be divided into Detection-Based Tracking (DBT) [13] and Joint Detection and Tracking (JDT) [14]. JDT methods require manual initialization, involve high computational overhead, struggle to recover from target loss, and exhibit limited adaptability to the appearance variations in drill cuttings, rendering them unsuitable for the harsh operational environments of unmanned drilling rigs. In contrast, DBT methods automatically detect drill cuttings, exhibit superior robustness and computational efficiency, adapt more effectively to target appearance changes, and can leverage deep learning detection advances to enhance tracking performance and stability. ByteTrack [15], a representative DBT method, improves target trajectory consistency and reduces ID switching by optimizing data association and refining the matching of low-confidence detections, thus achieving high accuracy and robustness in real-world scenarios. Consequently, this study adopts ByteTrack as the tracking framework and introduces a velocity estimation module tailored to the motion characteristics of drill cuttings, achieving high-precision velocity measurement by mapping pixel displacement to physical displacement.

The primary contributions of this study are as follows:

- 1.

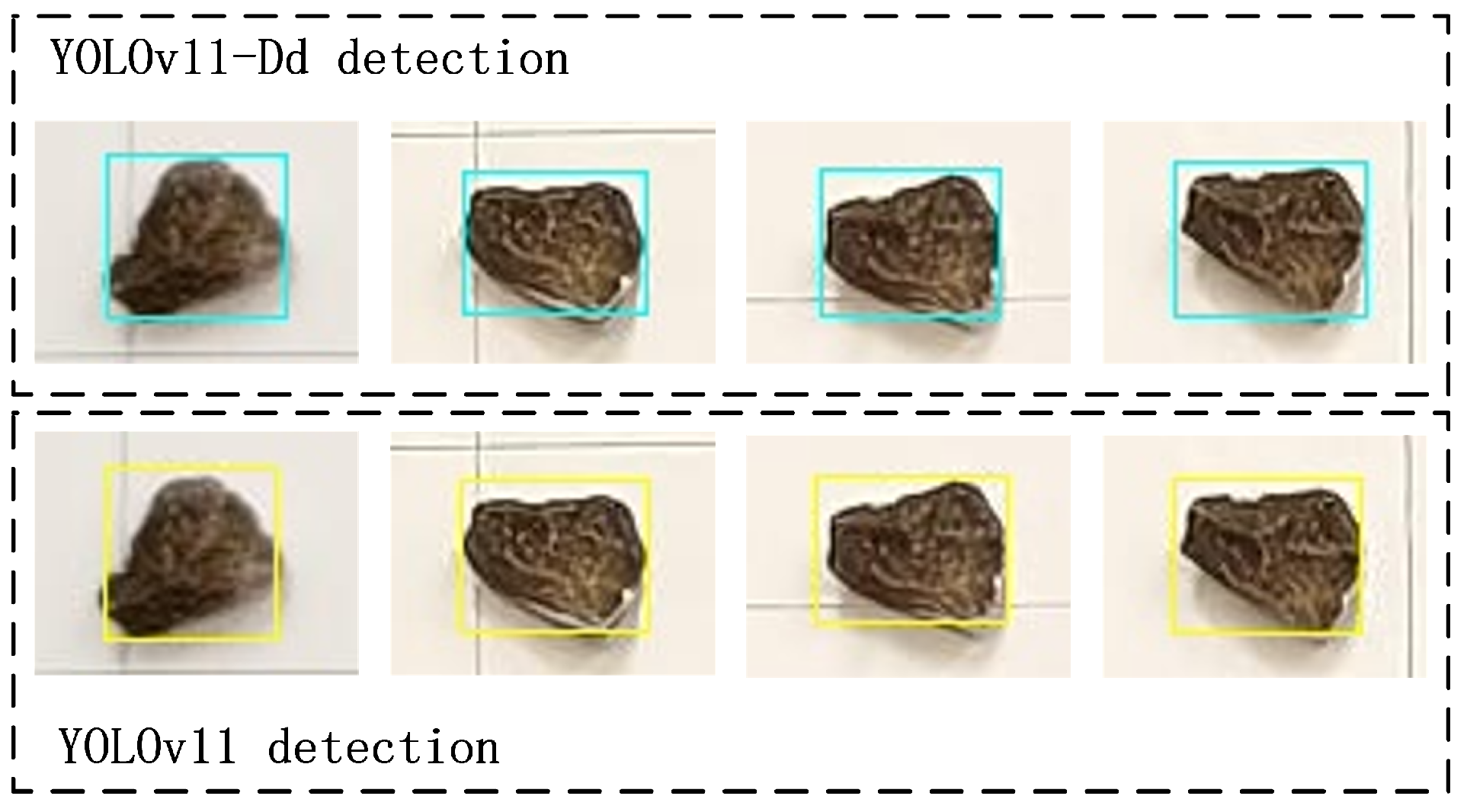

Enhancement of the YOLOv11 detection head. We optimize the multi-scale feature fusion capability of the YOLOv11 detection head by integrating the lightweight feature extraction module Ghost Conv and the feature-aligned fusion module FA-Concat, resulting in an improved model named YOLOv11-Dd (drilling debris).

- 2.

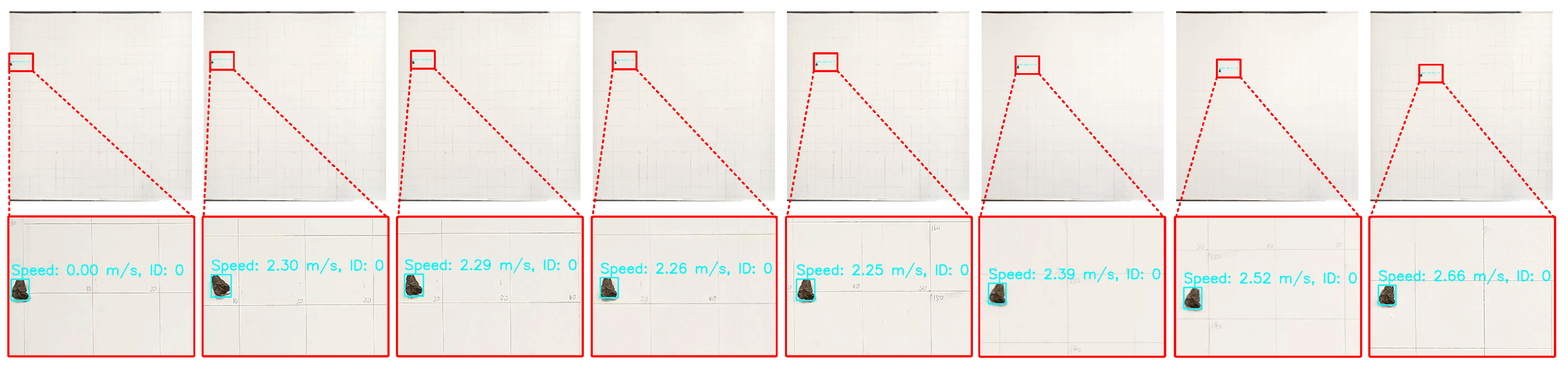

Development of a speed detection module. Leveraging the robust tracking capabilities of ByteTrack, we design a high-precision speed detection module that calculates the real-time movement speed of drilling debris by mapping the pixel displacement of detection box centers between consecutive frames to physical space.

- 3.

Construction of a custom dataset and comprehensive experimental validation. We build a drilling debris splashing simulation dataset comprising 3787 images, covering various ejection angles, speeds, and material conditions. The experimental results demonstrate that our YOLOv11-Dd detection head achieves a 4.65% improvement in detection performance compared to YOLOv11, with an mAP@80 of 76.04%. The proposed drilling debris speed measurement method achieves an accuracy of 92.12% in the speed detection task.

The remainder of this paper is organized as follows: Section 2 reviews the related work; Section 3 discusses the optimization of the detection head and the design of the speed detection module; Section 4 presents the experimental validation and analysis based on the custom drilling debris splashing simulation dataset; and Section 5 concludes the paper.

2. Related Work

This literature review covers studies published between 2017 and 2024, focusing on object detection, multi-object tracking, and velocity estimation. Relevant sources were retrieved from academic databases including IEEE Xplore, ScienceDirect, and SpringerLink. The main keywords used in the search included “object detection”, “small-object detection”, “multi-object tracking”, and “cutting recognition”. Priority was given to works with strong relevance to industrial applications, fine-grained target detection, and real-time processing techniques. Studies focused solely on static 2D images or tasks unrelated to our problem domain were excluded.

2.1. Object Detection

In recent years, single-stage object detectors have emerged as the mainstream choice for real-time applications, owing to their simple architectures and fast inference speeds. The SSD (Single Shot MultiBox Detector), proposed by [16], utilizes multi-scale feature maps for bounding box regression and classification. Several improved versions have subsequently been developed, such as DSSD [17], which incorporates a deconvolution module to enhance contextual information and improve small-object detection performance; FSSD [18], which adds a feature fusion module to generate richer pyramid features; and PSSD [19], which introduces an efficient feature enhancement module to strengthen local detection capabilities and semantic expressiveness. RetinaNet [20] uses Focal Loss to address the imbalance between foreground and background classes, reaching accuracy close to two-stage methods while retaining high inference speed. RTMDet [21] enhances detection accuracy through the integration of an efficient Transformer module, demonstrating superior performance particularly in small-object detection and under complex background conditions.

The YOLO series, as a hallmark of single-stage detectors, started with anchor-free detection in YOLOv1 and has undergone multiple structural evolutions. YOLOv3 [22] integrates ResNet and FPN to improve multi-scale perception; YOLOv4 [23] enhances feature reuse and path aggregation efficiency through CSPNet and PANet; YOLOv5 [24] optimizes the network with lightweight designs, achieving simultaneous improvements in both accuracy and speed, making it ideal for resource-constrained environments; YOLOv8 [25] introduces the C2f module to optimize semantic feature extraction, further enhancing detection performance. The latest YOLOv11 [26] builds upon YOLOv8 by introducing the more efficient C3k2 module and the SPPF (Spatial Pyramid Pooling - Fast) structure, along with the C2PSA module that integrates spatial attention mechanisms, significantly strengthening the model’s multi-scale perception and directional robustness.

However, although YOLOv5 performs well in speed and model size, its simplistic feature fusion limits its precision on fast and small targets. YOLOv8 improves semantic features but struggles in multi-scale detection accuracy. YOLOv11 further enhances backbone extraction, yet coarse concatenation of multi-level features hinders edge precision for small objects. In contrast, YOLOv11-Dd maintains efficiency while incorporating Ghost Conv to reduce complexity and FA-Concat to align cross-level features. These enhancements improve detection precision and robustness for small fast-moving objects in challenging environments.

2.2. Multi-Object Tracking

Current multi-object tracking (MOT) methods predominantly follow the Tracking-By-Detection (TbD) paradigm, wherein targets are first localized using an object detector and subsequently associated across frames via a tracking algorithm [27,28]. Within this framework, two mainstream strategies have emerged: Joint Detection and Embedding (JDE) and Separate Detection and Embedding (SDE). JDE methods [29,30] simultaneously perform object detection and feature extraction within a single deep network, offering improved computational efficiency. However, because detection and embedding share the same feature extraction backbone, JDE methods are prone to feature confusion in high-density scenes. This is particularly true in unmanned drilling operations, where debris particles are densely packed, small in size, and weak in texture, which leads to frequent mismatches and ID switches. Additionally, JDE methods face accuracy bottlenecks when handling small objects, compromising tracking stability.

In contrast, SDE methods utilize separate object detectors and re-identification (Re-ID) models, allowing independent optimization of detection and feature extraction. This significantly enhances detection precision and tracking robustness. ByteTrack, an SDE-based approach, further improves tracking stability by retaining low-confidence detections and employing an IoU-based matching strategy; however, the model does not natively support velocity estimation.

In summary, YOLOv11-Dd as the detection head and ByteTrack as the tracker form an effective solution for unmanned drilling environments, yet challenges remain in further improving detection accuracy and enabling real-time velocity measurement.

3. Research Methods

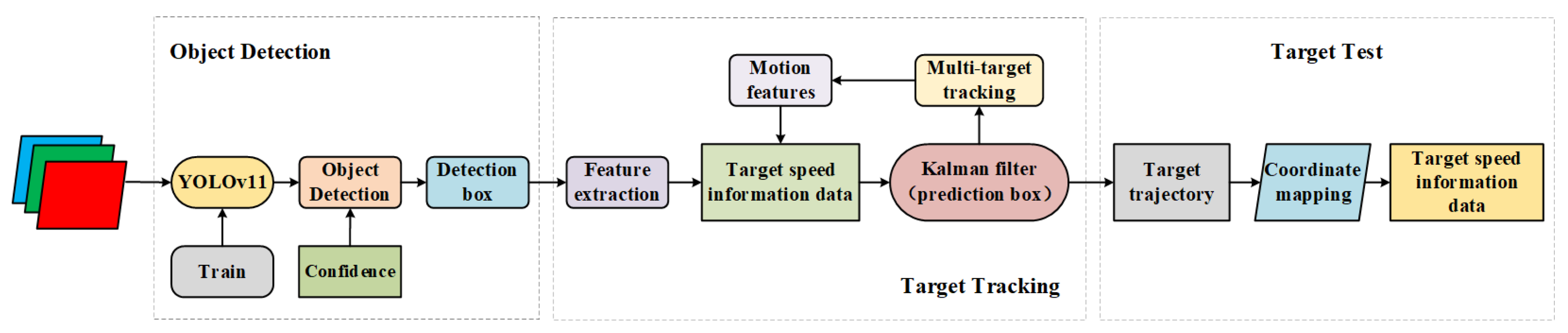

Compared to earlier versions, the YOLOv11 algorithm demonstrates superior performance in detecting small and overlapping objects. However, there is still room for improvement in how features extracted at different levels are fused. Additionally, the ByteTrack algorithm does not natively provide velocity measurement, which is required for drilling debris tracking and speed measurement. Therefore, this paper enhances both algorithms to better meet the performance demands of practical applications. The specific workflow for tracking and speed estimation is shown in Figure 1.

3.1. YOLOv11-Dd Model

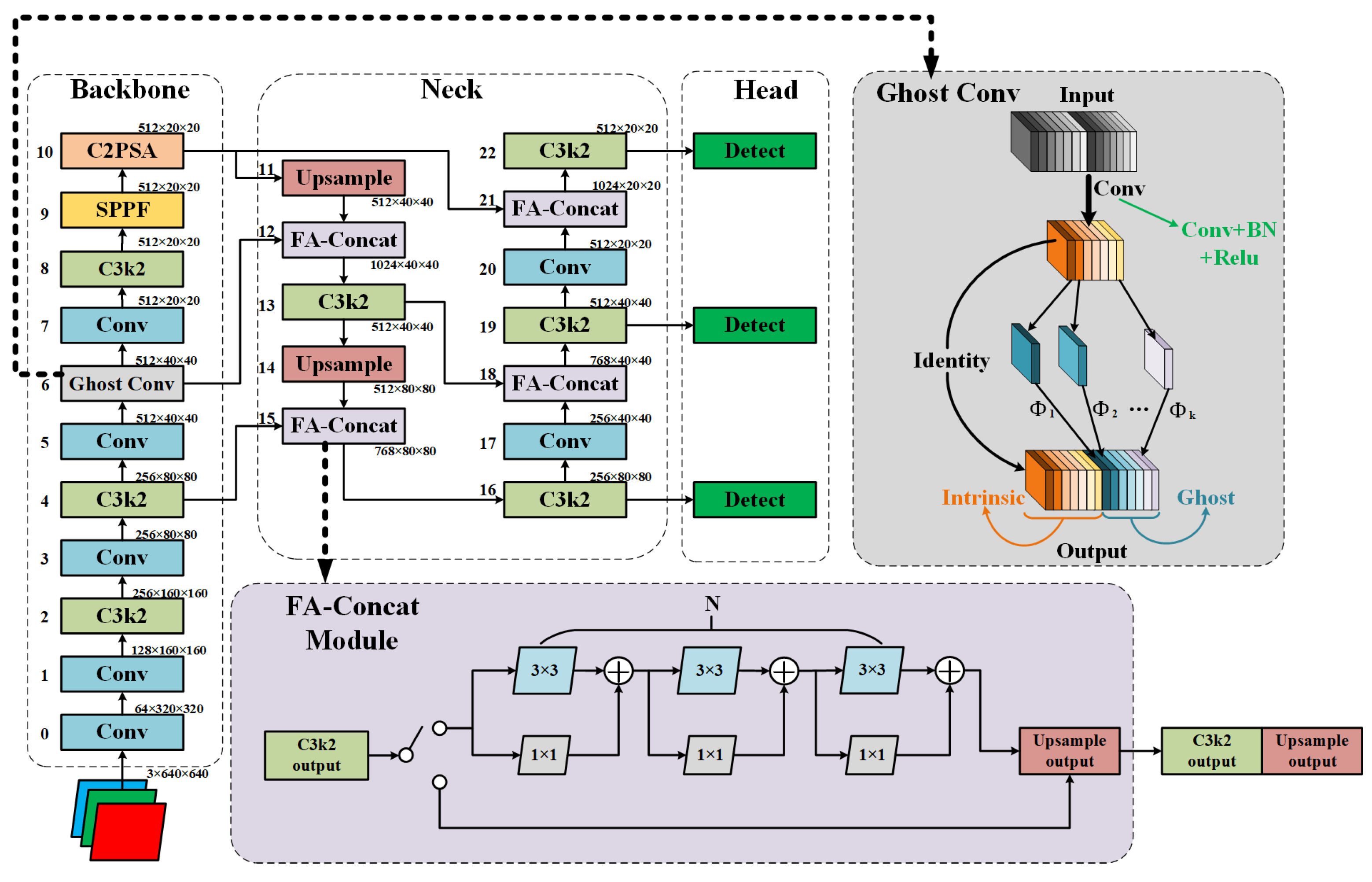

To further improve the accuracy and small-object detection capabilities of YOLOv11, we propose an enhanced detection model, YOLOv11-Dd, based on the original YOLOv11 architecture. This model optimizes the network structure in two key areas: first by replacing certain modules in the backbone network to reduce computational costs while enhancing feature representation and second by refining the feature fusion strategy to improve the synergy among multi-scale features.

First, in the sixth layer of the YOLOv11 backbone network, we replace the original C3k2 structure with the Ghost Conv module. Ghost Conv is a lightweight convolution technique that significantly reduces computation while preserving the model’s expressive power. It extracts a small number of primary features via standard convolution, applies inexpensive linear transformations to those features to generate additional Ghost features, and concatenates both sets into the final output map. The Ghost Conv module is illustrated in Figure 2.
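To give a rough sense of why the primary-plus-cheap-features design saves computation, the following sketch compares weight counts for a standard convolution and a Ghost-style block. The function names, the channel sizes, the ratio of 2, and the 5 x 5 depthwise kernel for the cheap operation are illustrative assumptions, not values taken from the YOLOv11-Dd configuration.

```python
def standard_conv_params(c_in, c_out, k):
    """Weight count of a k x k standard convolution (bias ignored)."""
    return c_in * c_out * k * k


def ghost_conv_params(c_in, c_out, k, ratio=2, cheap_k=5):
    """Weight count of a Ghost-style block (bias ignored).

    A standard convolution produces c_out / ratio primary channels; each
    primary channel then yields (ratio - 1) ghost channels through a
    depthwise cheap_k x cheap_k convolution. All values are assumptions.
    """
    primary_out = c_out // ratio
    primary = c_in * primary_out * k * k
    cheap = primary_out * (ratio - 1) * cheap_k * cheap_k
    return primary + cheap


# Hypothetical layer: 128 input channels, 256 output channels, 3 x 3 kernel.
std = standard_conv_params(128, 256, 3)
ghost = ghost_conv_params(128, 256, 3)
reduction = std / ghost
print(std, ghost, round(reduction, 2))
```

Under these assumed settings the Ghost-style block needs roughly half the weights of the standard convolution, which is the effect the ratio parameter is meant to control.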

Second, we introduce an improved feature concatenation method through the FA-Concat (Feature Alignment Concat) module. The original Concat module in YOLOv11 simply concatenates feature maps. However, because features differ significantly across layers, such straightforward concatenation can degrade performance. To address this, we connect the feature map from the C3k2 layer to the Upsample layer through a residual convolutional layer chain, as shown in Figure 2, rather than directly concatenating them. By assessing the feature differences between layers, we adjust the number of convolution layers in the chain, which reduces the feature discrepancies and enhances the model’s ability to fuse features effectively.

3.2. Tracking and Speed Measurement Central-ByteTrack Module

ByteTrack is an efficient multi-object tracking (MOT) algorithm. Its core concept is to generate candidate targets through an accurate detector and then use a Kalman filter to predict each target’s state, such as position and velocity. The algorithm evaluates the matching degree between detection boxes and existing trajectories, primarily through the IoU between predicted and detected boxes, and associates them in two stages: high-confidence detections are matched first, and the remaining trajectories are then matched against low-confidence detections. The Hungarian algorithm is used to solve each frame-to-frame assignment. This method significantly enhances both tracking stability and target association accuracy, making it highly effective for multi-target tracking in complex dynamic environments.
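The two-stage idea of retaining low-confidence detections can be sketched as follows. This is a simplified illustration and not the ByteTrack implementation: it omits the Kalman filter and track lifecycle, and it uses a dependency-free greedy matcher where a full tracker would typically use an optimal assignment solver. The function names and all thresholds are assumptions chosen for the example.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_match(tracks, boxes, iou_thresh):
    """Pair track ids with box indices in descending IoU order (greedy)."""
    pairs = sorted(
        ((iou(tb, db), tid, di) for tid, tb in tracks.items()
         for di, db in enumerate(boxes)),
        reverse=True,
    )
    matches, used_boxes = {}, set()
    for score, tid, di in pairs:
        if score < iou_thresh:
            break  # all remaining pairs overlap even less
        if tid in matches or di in used_boxes:
            continue
        matches[tid] = di
        used_boxes.add(di)
    return matches


def associate(tracks, detections, high=0.5, low=0.1, iou_thresh=0.3):
    """tracks: {track_id: predicted_box}; detections: list of (box, score)."""
    high_boxes = [box for box, score in detections if score >= high]
    low_boxes = [box for box, score in detections if low <= score < high]

    # Stage 1: match every track against high-confidence detections.
    first = greedy_match(tracks, high_boxes, iou_thresh)
    result = {tid: high_boxes[di] for tid, di in first.items()}

    # Stage 2: tracks left unmatched get a second chance with low-confidence boxes.
    remaining = {tid: box for tid, box in tracks.items() if tid not in first}
    second = greedy_match(remaining, low_boxes, iou_thresh)
    result.update({tid: low_boxes[di] for tid, di in second.items()})
    return result


tracks = {1: (0, 0, 10, 10), 2: (20, 20, 30, 30)}
detections = [((1, 1, 11, 11), 0.9), ((21, 21, 31, 31), 0.2), ((50, 50, 60, 60), 0.05)]
matched = associate(tracks, detections)
print(matched)
```

In this toy input, track 2 is only recovered in the second stage, because its detection has a confidence of 0.2 and would be discarded by a tracker that keeps high-confidence boxes only.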

In this research, to accurately measure the speed of fast-moving targets such as splashing drilling debris, we propose a speed measurement module based on the center point displacement of the detection box, built upon the ByteTrack framework. This module extracts the center point coordinates of the detection box for each target across consecutive frames, calculates its displacement in pixel space, and uses camera parameters along with the calibration distance between the target’s movement plane and the camera. The pixel displacement is then converted into a real-world speed value, enabling precise estimation of the target’s instantaneous velocity. The design principles, calculation methods, and related equations of this module are elaborated below.

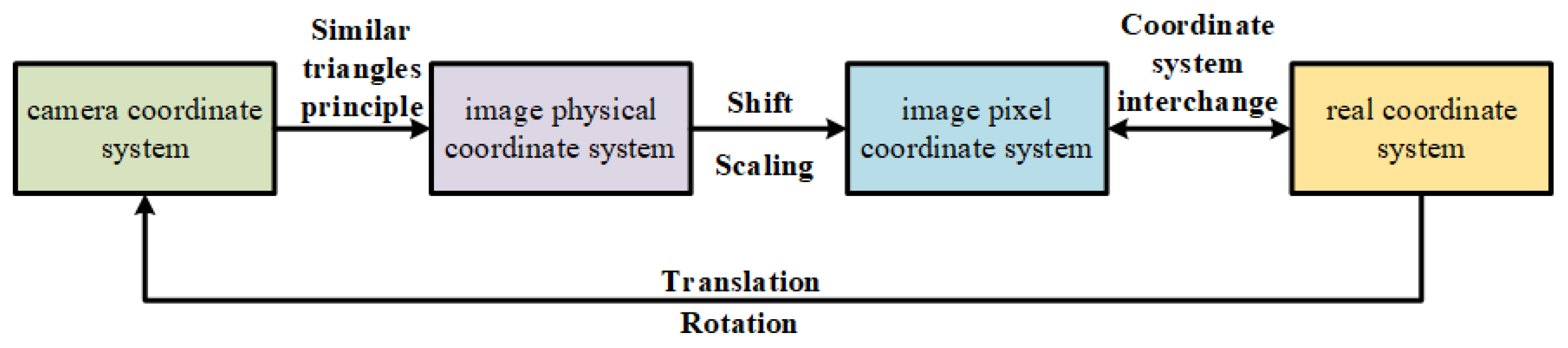

3.2.1. Transformation Between the World Coordinate System and the Image Coordinate System

In the task of detecting the velocity of drilling debris, it is essential to accurately map the motion trajectory of the debris presented in the image to the physical space in order to achieve precise estimation of its true velocity. To address this, this paper establishes a mapping relationship between the image coordinate system and the real-world (world) coordinate system. The image coordinate system represents the two-dimensional pixel position of the target on the camera’s sensing plane, while the world coordinate system describes the physical position of the target in the actual three-dimensional space. Considering the stability of the unmanned drilling machine operation environment and the controllability of camera parameters, this paper utilizes fixed industrial cameras, along with parameters such as field of view, camera height, lens focal length, and image resolution, to map pixel points in the image to physical space. Specifically, by calibrating the size and position of a reference object, a conversion relationship between pixels and real-world distance is established, allowing for accurate conversion of debris displacement in the image to real-world physical displacement.
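As a minimal sketch of the reference-object calibration described above, the pixel-to-distance conversion factor can be obtained by dividing the known physical length of a reference object by its measured length in the image. The function name, the marker endpoints, and the 0.50 m length are hypothetical values for illustration, and the sketch assumes the reference object lies in the same plane as the debris motion.

```python
import math


def metres_per_pixel(ref_p1, ref_p2, ref_length_m):
    """Scale factor from a reference object of known length.

    ref_p1, ref_p2: pixel coordinates (u, v) of the object's two endpoints.
    ref_length_m: physical length of the object in metres.
    """
    pixel_length = math.dist(ref_p1, ref_p2)
    if pixel_length == 0:
        raise ValueError("reference endpoints must be distinct")
    return ref_length_m / pixel_length


# Hypothetical calibration: a 0.50 m marker spans 400 px horizontally.
scale = metres_per_pixel((200, 300), (600, 300), 0.50)
displacement_m = 24 * scale  # a 24 px shift on the same plane
print(scale, displacement_m)
```

A single scale factor of this kind is only valid near the calibrated plane; displacements at other depths would need their own calibration.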

Figure 3 illustrates the process of transforming between different coordinate systems, visually demonstrating how a three-dimensional spatial point is mapped to a two-dimensional image plane through various coordinate systems.

As shown in Figure 4, during the actual camera calibration process, the range of the drilling machine’s working surface is relatively limited, and the vertical height difference can be considered negligible. As a result, the drilling debris ejection surface can be approximated as a plane, with minimal error introduced by this approximation. Therefore, the core task primarily focuses on the two-dimensional coordinate transformation between the image pixel coordinate system and the working surface coordinate system.

As shown in Figure 5, this paper systematically outlines the transformation relationships between the four coordinate systems and rigorously derives the transformation process using mathematical equations, ultimately establishing the mapping relationship between the image pixel coordinate system and the world coordinate system. The specific transformation equations are as follows:

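(1) The translation relationship between the physical image coordinate system and the image pixel coordinate system, written in the standard pinhole-camera form:

$$u = \frac{X}{dx} + u_0, \qquad v = \frac{Y}{dy} + v_0 \tag{1}$$

$$\begin{bmatrix} u \\ v \\ 1 \end{bmatrix} = \begin{bmatrix} \dfrac{1}{dx} & 0 & u_0 \\ 0 & \dfrac{1}{dy} & v_0 \\ 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} X \\ Y \\ 1 \end{bmatrix} \tag{2}$$

In the equations, $(u_0, v_0)$ denotes the origin of the image pixel coordinate system, while $dx$ and $dy$ represent the size of each pixel along the x and y axes in the pixel plane, and $X$ and $Y$ correspond to the values of the point in the image physical coordinate system.

(2) The relationship between the image physical coordinate system, the camera coordinate system, and the world coordinate system:

$$z_c \begin{bmatrix} X \\ Y \\ 1 \end{bmatrix} = \begin{bmatrix} f & 0 & 0 & 0 \\ 0 & f & 0 & 0 \\ 0 & 0 & 1 & 0 \end{bmatrix} \begin{bmatrix} x_c \\ y_c \\ z_c \\ 1 \end{bmatrix} \tag{3}$$

$$\begin{bmatrix} x_c \\ y_c \\ z_c \\ 1 \end{bmatrix} = \begin{bmatrix} R & t \\ 0^{T} & 1 \end{bmatrix} \begin{bmatrix} x_w \\ y_w \\ z_w \\ 1 \end{bmatrix} \tag{4}$$

In the equations, the coordinates of point p are $(x_c, y_c, z_c)$ in the camera coordinate system, $(X, Y)$ in the image physical coordinate system, and $(x_w, y_w, z_w)$ in the world coordinate system, with the focal length being $f$. The point $O_w$ represents the origin of the world coordinate system, with $R$ and $t$ being the rotation matrix and translation vector between the two, and K is a constant.

(3) The transformation relationship between the image pixel coordinate system and the world coordinate system:

$$z_c \begin{bmatrix} u \\ v \\ 1 \end{bmatrix} = \begin{bmatrix} \dfrac{1}{dx} & 0 & u_0 \\ 0 & \dfrac{1}{dy} & v_0 \\ 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} f & 0 & 0 & 0 \\ 0 & f & 0 & 0 \\ 0 & 0 & 1 & 0 \end{bmatrix} \begin{bmatrix} R & t \\ 0^{T} & 1 \end{bmatrix} \begin{bmatrix} x_w \\ y_w \\ z_w \\ 1 \end{bmatrix} \tag{5}$$

This equation is obtained by combining Equations (1)–(4).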

This conversion mechanism serves as a crucial foundation for drilling debris speed detection, enabling the reconstruction of debris trajectories, obtained using the ByteTrack algorithm, in the real-world space. By incorporating time information, it calculates the actual movement speed of the debris, thereby significantly enhancing the accuracy and practicality of the unmanned drilling rig’s intelligent monitoring system.
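For the planar working surface discussed above, the mapping from a pixel back to physical coordinates reduces to a simple scaling. The sketch below illustrates this under strong assumptions that are not stated in the paper: the camera's optical axis is perpendicular to the working plane, the world axes are aligned with the camera axes (identity rotation, zero translation), and lens distortion is ignored. The function name and all numeric values are hypothetical.

```python
def pixel_to_plane(u, v, fx, fy, u0, v0, depth_m):
    """Back-project pixel (u, v) onto a plane parallel to the image plane.

    fx, fy: focal length in pixel units (f / dx and f / dy).
    u0, v0: principal point in pixels.
    depth_m: distance from the camera to the working plane in metres.
    Returns (x, y) in metres, measured from the optical axis.
    """
    x = (u - u0) * depth_m / fx
    y = (v - v0) * depth_m / fy
    return x, y


# Hypothetical camera: 1280 x 720 image, 1000 px focal length, plane at 2 m.
camera = dict(fx=1000.0, fy=1000.0, u0=640.0, v0=360.0, depth_m=2.0)

# Pixel centres of one tracked fragment over three frames (made-up values).
track_px = [(640, 360), (740, 360), (840, 410)]
track_m = [pixel_to_plane(u, v, **camera) for u, v in track_px]
print(track_m)
```

When the camera is tilted relative to the working surface, this scaling no longer holds and a full plane-to-image mapping estimated from calibration points would be needed instead.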

3.2.2. Speed Estimation Based on Pixel Displacement

First, the pixel displacement of the object’s center point is computed based on the detection boxes across consecutive frames. Let the center coordinates of the target in frame $t$ and frame $t+1$ be $(x_t, y_t)$ and $(x_{t+1}, y_{t+1})$, respectively. The center point’s pixel displacement $\Delta d_{\mathrm{pixel}}$ can then be calculated using the following equation:

$$\Delta d_{\mathrm{pixel}} = \sqrt{(x_{t+1} - x_t)^2 + (y_{t+1} - y_t)^2}$$

where $(x_t, y_t)$ and $(x_{t+1}, y_{t+1})$ denote the center coordinates of the target in frames $t$ and $t+1$, respectively, and $\Delta d_{\mathrm{pixel}}$ indicates the pixel-level displacement between two consecutive frames.

The pixel displacement is subsequently mapped to real-world displacement. Assuming that the actual distance from the camera to the object is $D$, the real-world displacement corresponding to the pixel shift can be derived using the camera’s focal length $f$ and the principle of similar triangles. Accordingly, the physical displacement $\Delta d_{\mathrm{real}}$ can be calculated using the following equation:

$$\Delta d_{\mathrm{real}} = \frac{\Delta d_{\mathrm{pixel}} \cdot D}{f}$$

In this equation, $\Delta d_{\mathrm{real}}$ denotes the real-world displacement of the object between two consecutive frames, $D$ refers to the actual distance between the camera and the object, and $f$ represents the focal length of the camera, expressed in pixel units so that the result carries the units of $D$.

Once the actual displacement of the object is obtained, the subsequent step involves calculating the object’s real-world velocity based on the time interval between frames. Assuming a frame rate of $F$ frames per second, the time interval $\Delta t$ between two consecutive frames can be determined by

$$\Delta t = \frac{1}{F}$$

Based on the displacement–time relationship, the object’s real-world velocity $v$ can be computed using the following equation:

$$v = \frac{\Delta d_{\mathrm{real}}}{\Delta t}$$

In the equation, $v$ denotes the estimated real-world velocity of the object.

By following the above procedure, the velocity estimation based on the displacement of the detection box center is accomplished. This method determines the target’s motion speed by calculating its displacement across consecutive frames and translating it into real-world velocity according to the frame interval.
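The procedure above can be sketched in a few lines. This is an illustrative implementation under stated assumptions rather than the authors' code: the focal length is taken in pixel units, the camera-to-object distance is treated as constant, and the function name and all numeric values are hypothetical.

```python
import math


def estimate_speed(center_prev, center_curr, distance_m, focal_px, fps):
    """Estimate speed in m/s from box centres in two consecutive frames.

    center_prev, center_curr: (x, y) pixel centres in frames t and t + 1.
    distance_m: camera-to-object distance in metres (assumed constant).
    focal_px: focal length in pixels (assumption: already divided by pixel size).
    fps: camera frame rate in frames per second.
    """
    # Pixel displacement of the detection-box centre.
    d_pixel = math.hypot(center_curr[0] - center_prev[0],
                         center_curr[1] - center_prev[1])
    # Similar triangles: scale the pixel shift by distance over focal length.
    d_real = d_pixel * distance_m / focal_px
    # Time between two consecutive frames.
    dt = 1.0 / fps
    return d_real / dt


# Made-up example: a 5 px shift, object 2 m away, f = 1000 px, 30 fps.
speed = estimate_speed((100, 100), (103, 104), 2.0, 1000.0, 30.0)
print(f"{speed:.3f} m/s")
```

Because the estimate uses a single frame pair, it is sensitive to jitter in the detection-box centre; averaging over several consecutive frames of the same track is one possible way to smooth it.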

5. Conclusions

In response to the need for cutting-motion monitoring in unmanned drilling operations, this paper proposes a high-precision detection approach that integrates small-object detection with speed estimation. The method is built upon an improved YOLOv11 detection algorithm and establishes an end-to-end solution for cutting-speed measurement by leveraging the mapping relationship between image pixel displacement and actual physical displacement. First, to address challenges such as the small size and weak features of cuttings, we optimize the YOLOv11 detection head by introducing a multi-scale feature alignment and fusion strategy, significantly enhancing the small-object detection capabilities. The experimental results demonstrate that the proposed improvements boost detection performance by 4.65% compared to the original model, achieving a final mAP@80 of 76.04%. Second, during the object tracking phase, ByteTrack ensures stable tracking, while the speed estimation module maps pixel displacement to real-world coordinates using camera calibration, enabling precise velocity measurement. The proposed model was trained and evaluated on a custom-built dataset. The experimental results show that, while detection accuracy decreases under low-speed motion with high frame rates compared to high-speed motion with low frame rates, the method still achieves an average speed estimation accuracy of 92.12%. It also outperforms the baseline model in detection accuracy, tracking stability, and computational efficiency. However, the dataset used in this study was generated under idealized conditions and does not fully represent the complexity of real-world environments. Future research will focus on expanding and enriching the dataset to cover more realistic and challenging working conditions, enabling more targeted validation. In addition, we plan to further optimize the YOLOv11-Dd detection architecture by reducing model complexity while maintaining detection accuracy. Dedicated improvements will also be made for low-speed target scenarios to enhance the system’s overall robustness and adaptability.