Abstract

Existing heuristic algorithms are built on their sources of inspiration rather than on optimization principles for mining and utilizing historical information, which may lead to low efficiency, accuracy, and stability. To solve this problem, we propose a personalized-template-guided intelligent evolutionary algorithm named PTG. The core idea of PTG is to generate personalized templates to guide particle optimization. We also find that high-quality templates, which guide both the exploration and exploitation of particles, can be generated from three sources: the information of the population particles when the optimal value remains unchanged, the knowledge of population distribution changes, and the dimensional distribution properties of the particles themselves. Through an ablation study and comparative experiments on the challenging CEC2022 and CEC2005 test functions, we validate the effectiveness of our method and conclude that the stability and accuracy of the solutions obtained by PTG are superior to those of the compared algorithms. Finally, we further verify the effectiveness of PTG on four engineering problems.

1. Introduction

Traditional optimization methods, including linear programming, quadratic programming, nonlinear programming, penalty function methods, and Newton’s method, rely on precise mathematical models to address diverse real-world problems. However, when dealing with large-scale non-convex optimization problems, these conventional approaches not only demand substantial computational resources but also tend to converge to local optima [1]. In contrast, heuristic algorithms leverage historical information while incorporating stochastic elements for exploration. This approach not only reduces the computational burden but also enhances the ability to escape local optima, making it particularly suitable for complex problems where exact solutions are difficult to obtain, notably NP-hard problems [2]. Heuristic algorithms have been widely used to solve different problems, such as portfolio optimization [3], path planning [4], electrical system control [5], industrial internet of things service requests [6], water resources management [7], urban planning [8], and wireless sensor point coverage [9].

Most existing heuristic algorithms can be classified by their inspiration sources into four categories: evolutionary algorithms (EA), physics-based methods (PM), human behavior models (HM), and swarm intelligence (SI).

(1) Evolutionary algorithms. Evolutionary algorithms draw their inspiration from the theory of biological evolution, and their most notable feature is the simulation of biological inheritance and variation to produce new individuals. The Genetic Algorithm (GA) [10] explores the solution space by recombining the genetic information of parents through crossover. It also uses mutation to explore areas not covered by crossover in the early stage and to refine the solution in the later stage. Other algorithms in the EA category include the Differential Evolution (DE) algorithm [11], whose mutation strategy differs from that of GA. DE multiplies the difference between two randomly selected individuals by a scaling factor to set the mutation distance, moves the individual by that distance, and then enters the crossover stage. During crossover, each dimension is replaced by the mutated value with a user-defined crossover probability, which helps retain good dimension values and combine good genetic characteristics into new individuals. The Genetic Programming (GP) algorithm [12] follows the same flow as GA, but the individuals it operates on are usually programs represented as tree structures, and its main purpose is to generate or optimize computer programs. Other evolutionary algorithms include Evolutionary Programming (EP) [13], Evolution Strategy (ES) [14], and the Biogeography-Based Optimizer (BBO) [15].
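As an illustration of the DE mutation and crossover steps described above, the following minimal Python sketch builds one trial vector using the common DE/rand/1/bin variant. The function name `de_rand_1_bin`, the default values of the scaling factor `F` (the multiplier applied to the difference) and the crossover probability `CR`, and the choice of this particular variant are assumptions made for illustration and are not taken from [11].

```python
import numpy as np


def de_rand_1_bin(pop, i, F=0.5, CR=0.9, rng=None):
    """Build one DE trial vector for individual i (DE/rand/1/bin, illustrative)."""
    rng = np.random.default_rng() if rng is None else rng
    n, d = pop.shape
    # Pick three distinct individuals, none of them equal to i (needs n >= 4).
    candidates = [k for k in range(n) if k != i]
    r1, r2, r3 = rng.choice(candidates, size=3, replace=False)
    # Mutation: scale the difference of two individuals and add it to a third.
    mutant = pop[r1] + F * (pop[r2] - pop[r3])
    # Binomial crossover: each dimension takes the mutant value with probability CR.
    mask = rng.random(d) < CR
    mask[rng.integers(d)] = True  # guarantee at least one mutant dimension
    return np.where(mask, mutant, pop[i])
```

With `CR` close to 0 the trial vector keeps almost all dimension values of the current individual, whereas with `CR` close to 1 it takes almost all of them from the mutant.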

(2) Physics-based methods. These methods use physical phenomena to model changes in the solution space and use physical principles to balance exploration and exploitation during the search. For example, the Gravitational Search Algorithm (GSA) [16] uses the fitness value of a particle to represent its mass, and the velocity used to update the particle position is calculated by Newton’s law of universal gravitation and Newton’s second law of motion. The Simulated Annealing algorithm (SA) [17] imitates the annealing process in physics, in which controlling the rate of temperature drop helps a material reach a more stable structure. In optimization, SA balances exploration and exploitation by controlling the diversity of the offspring solutions. Big-Bang Big-Crunch (BB-BC) optimization [18] simulates the expansion and contraction of the universe, that is, it controls the distance between particles. Specifically, in the big bang stage, a global search is carried out by increasing the distance between particles, and in the big crunch stage, a local search is carried out by reducing it. The electromagnetic algorithm [19] searches the solution space by simulating the attraction and repulsion between charged particles; it uses the fitness value to represent the charge intensity and updates particle positions using Coulomb’s law, Newton’s second law, and kinematic equations. Other physics-based methods include Quantum-Inspired metaheuristic algorithms (QI) [20], Central Force Optimization (CFO) [21], the Charged System Search algorithm (CSS) [22], and the Ray Optimization (RO) algorithm [23].

(3) Human behavior models. In the Teaching-Learning-Based Optimization algorithm (TLBO) [24], each particle represents a student, and the particle position is updated by simulating two stages, teaching and learning. In the teaching stage, each particle learns from the optimal and average values of the population. In the learning stage, particles learn from each other, and particles with higher fitness values guide particles with lower fitness values. In the Imperialist Competitive Algorithm (ICA) [25], each particle represents a country, and the particles are divided into imperialist countries and colonies according to their fitness values. The imperialist competition process determines the likelihood of being occupied by other empires, and in the assimilation process the colonies update their solutions according to the positions of the imperialist countries. In the Educational Competition Optimization (ECO) algorithm [26], schools represent the evolution of the population, and students represent the particles in the population. The algorithm is divided into three stages. In the primary school stage, schools represent the average position of the population; in the middle school stage, schools represent the average and optimal positions of the population, and students choose nearby schools as their moving direction. In the high school stage, schools represent the average, optimal, and worst positions of the population, while students choose the optimal position as their moving direction. In the Human Learning Optimization (HLO) algorithm [27], each dimension value of each particle is generated by one of three learning operators chosen according to probability. The random learning operator uses random number generation to simulate extensive human learning, the individual learning operator stores and uses the individual’s best historical experience to generate solutions, and the social learning operator generates new values through population knowledge sharing. Other algorithms in the HM category include Driving Training-Based Optimization (DTBO) [28], Supply–Demand-Based Optimization (SDO) [29], the Student Psychology Based Optimization (SPBO) algorithm [30], the Poor and Rich Optimization (PRO) algorithm [31], Fans Optimization (FO) [32], and the Political Optimizer (PO) [33].

(4) Swarm intelligence. The most commonly used algorithm is the Particle Swarm Optimization (PSO) algorithm [34]. In PSO, each bird represents a candidate solution, and positions are updated by simulating the foraging behavior of birds and recording the best historical position found by each bird and by the whole flock. The Moth-Flame Optimization algorithm (MFO) [35] simulates the way moths find and approach a light source at night to carry out function optimization. The Whale Optimization Algorithm (WOA) [36] treats each candidate solution as a humpback whale and updates its position through the random search, encircling, and spiraling behaviors of humpback whales when hunting. In the Harris Hawks Optimization algorithm (HHO) [37], each hawk represents a potential solution, and the position of the candidate solution is updated by simulating the siege, scattered search, and direct attack of hawks during hunting. In the Coati Optimization Algorithm (COA) [38], each coati represents a candidate solution, and the solution space is searched by simulating how coatis hunt and escape from predators. The Tunicate Swarm Algorithm (TSA) [39] uses tunicates to represent candidate solutions, and its position update strategy is modeled on the jet propulsion and group cooperation behavior of the tunicate swarm during navigation and foraging. In the Reptile Search Algorithm (RSA) [40], each reptile represents a candidate solution, and the algorithm is modeled on the two stages of reptile hunting to carry out global and local search. Other algorithms in the SI category include the Zebra Optimization Algorithm (ZOA) [41], the Artificial Bee Colony (ABC) algorithm [42], the Spotted Hyena Optimizer (SHO) [43], the Hermit Crab Optimization Algorithm (HCOA) [44], and the Grasshopper Optimization Algorithm (GOA) [45].

However, existing heuristic algorithms derive their optimization mechanism from a source of inspiration rather than building an optimization paradigm from the optimization mechanism itself, which weakens both their explanatory power and their stability. They also make poor use of historical stagnation information and of the distribution of each particle’s own dimension values, so they tend to miss opportunities to evolve toward good dimension values and have difficulty converging to the global optimum.

To address these problems, we establish a paradigm that balances exploration and exploitation. Instead of making particles interact directly with the population and historical solutions through adjusted step sizes, we aim to make the most of historical information. We first create a template based on particle characteristics and population history information and then use the template to guide particle optimization. The algorithm requires no user-defined parameters.

The main contributions of this paper are as follows:

- We propose a PTG algorithm based on an optimization principle. In the template generation stage, a personalized template containing key exploration areas is generated. In the template guidance stage, the particle exploits key areas under the guidance of the template. By gradually narrowing and locking the optimal value area, this paradigm solves the difficult problems of setting the search step size and defining parameters.

- We find that historical stagnation information can enhance the exploitation ability of the particle, so we extract this information as the base points of the history-retrace template. Historical stagnation information refers to the information of the population particles recorded when the optimal value of the population is unchanged. Using this information promptly guides particles to mine the region around historical optimal solutions and avoids missing the global optimum.

- From the perspective of historical distribution information, the template interval expansion strategy is proposed to extract the distribution change knowledge of population offspring, expand the particle exploration space, and enhance the particle’s exploration ability. We find that the particle’s own dimension distribution attribute can provide effective information to speed up particle optimization, so we propose the personalized template generation strategy based on particle dimensional distribution to generate a personalized template for each particle.

The rest of this paper is organized as follows. Section 1 introduces the existing heuristic algorithms. Section 2 introduces the proposed PTG algorithm. Section 3 presents the experiments and analyzes the results. Section 4 summarizes the paper. To describe newly generated individuals, population individuals, and populations uniformly and vividly, this paper borrows the terminology of the PSO algorithm and refers to individuals as particles. However, the proposed method is otherwise unrelated to the PSO algorithm.

2. Methods

The personalized-template-guided intelligent evolutionary algorithm consists of a template generation stage and a template guidance stage. The purpose of the template generation stage is to generate a guiding particle, that is, a personalized template, for the current particle. First, each particle randomly chooses whether to generate a value-driven template or a history-retrace template, which increases the diversity of exploration. Next, the selection strategy of the template base point set determines the base point set of the template. Then, the template interval expansion strategy expands the template interval enclosed by the base points. Finally, the personalized template generation strategy based on particle dimensional distribution generates a personalized template in the corresponding interval dimension. In the template guidance stage, the template-guided knowledge transfer strategy is used to update the position of the particle. See Algorithm 1 for the specific process.

The detailed flowchart of the algorithm is shown in Figure 1. First, initialize the population and parameter values, and calculate the fitness value. Use the template interval expansion strategy to get the value , and then enter the loop. Calculate the value of the i-th particle, and decide whether to generate the history-retrace template or the value-driven template according to the value of the random integer L. When , enter the left half of the process to generate a history-retrace template, and use the selection strategy of the template base point set to calculate the base point of the history-retrace template by Equation (1). Then, the template generation strategy is adopted, and the is calculated by Equation (12), which determines whether to use the personalized template generation strategy based on particle dimensional distribution. When , the dimension to be learned is obtained by Equation (13), and then the history-retrace template is generated by Equations (14)–(19) in combination with the interval extension value . When , only the template generation strategy is used, and the history-retrace template is generated by Equations (14)–(19) in combination with the interval extension value . When , enter the right half of the process to generate a value-driven template. Calculate the base point of the value-driven template by Equation (2), and calculate the value by Equation (12). When , the dimension to be learned is obtained by Equation (13), and then the value-driven template is generated by combining the interval spacing value with Equations (20) and (21). When , is directly generated by combining the interval spacing value with Equations (20) and (21). After the template is generated, the algorithm enters the template guidance stage. First, a value of the random number is calculated. When , a new solution is obtained by Equations (22) and (23), and when , a new solution is obtained by Equation (24). Update the M and of the i-th particle. 
After N new solutions are generated by the above steps for N particles, the fitness value of the population is calculated, and the values of and are updated. When , continue the above cycle. Otherwise, jump out of the loop, return to the optimal solution value and its position, and end the algorithm.
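The end-of-iteration update can be sketched as follows, assuming a minimization problem. Following the definitions given in Section 2.1.1, the sketch merges the parent and offspring populations, keeps the N best individuals, sets the optimal value signal to 1 when the best value improves and to 0 otherwise, and, when the best value is unchanged, stores a random individual of the merged population in a stagnation archive. The names `elite_select`, `prev_best`, and `stagnation_archive` are hypothetical, and the code is an illustration under these assumptions rather than the reference implementation of PTG.

```python
import numpy as np


def elite_select(parents, offspring, fitness_fn, prev_best, rng, stagnation_archive):
    """Keep the N best of parents + offspring and update the optimal value signal."""
    n = parents.shape[0]
    merged = np.vstack([parents, offspring])
    fit = np.array([fitness_fn(x) for x in merged])
    order = np.argsort(fit)              # ascending: best (smallest) first
    survivors = merged[order[:n]]
    best = fit[order[0]]
    # Signal is 1 when the population's optimal value changed (improved), else 0.
    signal = 1 if best < prev_best else 0
    if signal == 0:
        # Stagnation: remember one random individual of the merged population.
        stagnation_archive.append(merged[rng.integers(len(merged))].copy())
    return survivors, fit[order[:n]], best, signal
```

Passing the archive in as a plain list keeps the sketch stateless; a real implementation might bound its size or store it alongside the population.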

Algorithm 1. Pseudocode of PTG.

Figure 1. The flowchart of the PTG.

2.1. Template Generation Stage

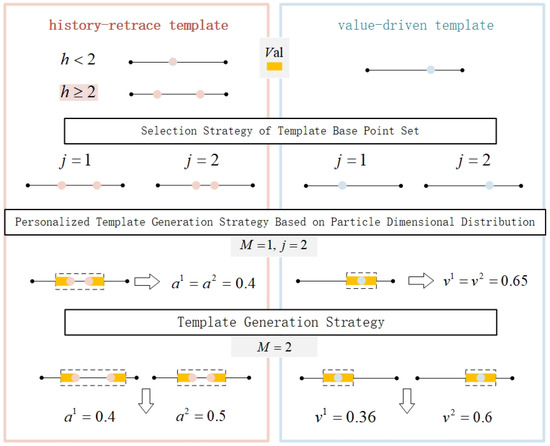

Firstly, an example is given to illustrate the brief process of template generation. Suppose there is a particle that has two dimensions. Figure 2 shows a brief process of generating a personalized template for this particle, and the same applies to the other particles. For specific details, please refer to the following content of this section. The pink part on the left of the figure represents the generation process of the history-retrace template, while the part on the right shows the generation process of the value-driven template. The length of the yellow square is the interval expansion value obtained through the interval expansion strategy. The two small dots on the left and right of the black line segment in the figure represent the upper and lower bounds of the particle dimension value. j represents the dimension of the particle. a refers to the generated history-retrace template, and v represents the generated value-driven template.

Figure 2. Graph of the template generation stage.

If the particle chooses to generate a historical backtracking template, there are two possible cases for the selection of template base points. When , there is only one template base point. When , there are two template base points. In the case of , the selection strategy of the template base point set for the history-retrace template is given. According to this strategy, two basic points are obtained in each dimension of the particle. Next, if , the personalized template generation strategy based on particle dimensional distribution is implemented. Suppose the learning object selected by the particle is dimension 2; then, on the basis of the template base point , the interval range is expanded with the , and the value obtained within the dashed box range is used as the value of the personalized template dimensions 1 and 2. If , the template generation strategy is executed. Based on the base points of particle dimensions 1 and 2, add to expand the interval range, and then generate new values within their respective ranges as the generated templates.

If the particle chooses to generate a value-driven template, its template has only one base point. Under the selection strategy of the template base point set, one base point is obtained in each of the particle’s two dimensions. The subsequent steps mirror those of the history-retrace template, although the specific principles and formulas of the two differ. For details, please refer to the rest of this section.

2.1.1. Selection Strategy of Template Base Point Set

The choice of base point set is very important because it determines what area the template guides the particle to explore. In particular, this algorithm stores a random particle in the merged population when the merit of each generation is unchanged, which helps the algorithm to review the previous solutions and extract historical stagnation information from them. In the specific algorithm steps, each particle randomly chooses to generate a history-retrace template or a value-driven template, which increases the diversity of exploration. In order to balance exploration and exploitation, the corresponding template base point set selection strategy is adopted under the guidance of the optimal value signal . This strategy realizes the collaborative exploration of the population; some particles review the previous limited historical area, and some particles exploit near the optimal value.

Specifically, when the signal indicates that the optimal value changed in the previous generation, the base points of the history-retrace template are the random particles of the merged population that were stored in the last two generations in which the optimal value was unchanged, while the value-driven template randomly selects one of the top three elite particles of the parent population as its base point. This realizes collaborative exploration of the population: some particles revisit the local points recorded during earlier stagnation, and some particles exploit the neighborhood of the optimal value, jointly promoting further optimization of the solution. When the signal indicates that the optimal value of the previous generation was unchanged, the history-retrace template’s base point is selected from historical particles, and the value-driven template’s base point is a random particle of the parent population. This expands the exploration scope of the particles, helps them escape local optima, and lets them explore new regions.

where is the base point set of the history-retrace template of the i-th particle. In each iteration, randomly select one of the top N elite individuals in the merged population and store it in C. t is the current iteration number, , and T is the total iteration number. Where and are random integers of . represents the individual in C. When the optimal value of the t-iteration population remains unchanged, a random individual of the t-iteration merged population will be stored in Q. h is the number of individuals stored in Q. N is the number of individuals in the population. is the location of the optimal solution of the population. is the optimal value signal; when the optimal value of the population changes, is 1, otherwise it is 0.

where is the value-driven template’s base point set of the i-th particle, P is the parent population, and is a random integer of . is a random integer of .
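The base point selection for the value-driven template, as described in the text above, can be sketched as follows. The sketch assumes a minimization problem and follows only the prose description: one of the top three elite parents when the optimal value signal is 1, and a random parent when it is 0. The function name `value_driven_base_point` and its arguments are hypothetical, and the history-retrace case is omitted because it depends on details of Equation (1).

```python
import numpy as np


def value_driven_base_point(parents, fitness, signal, rng):
    """Pick the base point of a value-driven template from the parent population."""
    if signal == 1:
        # Optimal value changed: exploit near one of the three best parents.
        top3 = np.argsort(fitness)[:3]
        idx = rng.choice(top3)
    else:
        # Optimal value unchanged: widen the search with a random parent.
        idx = rng.integers(len(parents))
    return parents[idx].copy()
```

Returning a copy keeps later template construction from modifying the parent population in place.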

2.1.2. Template Interval Expansion Strategy

The selection strategy of the template base point set is only based on historical information. If the particles are guided to explore the template interval surrounded by the basic points they choose, the algorithm will easily fall into local optimization, especially in the late convergence stage. In order to solve this problem, we use Equations (3)–(7) to calculate the interval value .

When the optimal value of the population changes, the offspring population, the parent population, and the merged population all contain valid information about the distribution of optimal values. It should be noted that is the spacing of the optimal particle population after the elite selection strategy, which lacks diversity compared with . Therefore, we set the parameter to balance the diversity and convergence of interval values. In the early stage, when the optimal value area is uncertain, the weight of decreases gradually, while increases gradually, which makes the randomness of the template interval expansion value increase gradually with iteration to help the particles jump out of the local optimization. After the midterm iteration, the weight of gradually decreases, and the weight of gradually increases, which makes the randomness of the template interval expansion value gradually decrease to help the particles gradually converge.

When the optimal value of the population is unchanged, the merged population may still contain new distribution knowledge, because some offspring particles may be better than some parent particles. For this reason, we set the interval expansion range to the absolute difference between two random particles in the merged population to help the particle escape the local optimum.

where is the interval value in the j-th dimension of the current iteration, , and D is the maximum dimension value. is the j-th dimension value of the i-th individual in the offspring population. is the j-th dimension value of the i-th individual of the parent population. is the mean difference between the offspring population and the parent population in the j-th dimension. is the collection of the top N outstanding individuals in the contemporary merged population. is the difference between the average of the top 50% of individuals and the average of the bottom 50% of individuals in . is the adjustment parameter. is the absolute value of the difference between random individuals in in the j-th dimension. is a random integer of . is a random integer of . And is not equal to .
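The two cases of the template interval expansion strategy can be sketched as follows. When the optimal value changes, the sketch blends the mean parent-to-offspring shift with the gap between the better and worse halves of the elite set; when it is unchanged, it takes the absolute difference between two distinct random individuals of the merged population. The function name `interval_expansion`, the convex-combination form with weight `w`, the use of absolute values in the first case, and the use of one random pair for all dimensions are assumptions made for illustration; the actual weighting schedule is given by Equations (3)–(7).

```python
import numpy as np


def interval_expansion(parents, offspring, elites, merged, signal, w, rng):
    """Return a per-dimension interval expansion value (illustrative sketch)."""
    if signal == 1:
        # Mean per-dimension shift between offspring and parent populations.
        shift = np.abs(offspring - parents).mean(axis=0)
        # Gap between the mean of the top half and the bottom half of the
        # elite set, which is assumed to be sorted best first.
        half = elites.shape[0] // 2
        spread = np.abs(elites[:half].mean(axis=0) - elites[half:].mean(axis=0))
        # Hypothetical blend; w in [0, 1] stands in for the adjustment parameter.
        return w * shift + (1.0 - w) * spread
    # Optimal value unchanged: difference of two distinct random individuals.
    r1, r2 = rng.choice(merged.shape[0], size=2, replace=False)
    return np.abs(merged[r1] - merged[r2])
```

A larger `w` in this sketch favors the more diverse offspring-versus-parent term, while a smaller `w` favors the elite-set term.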

2.1.3. Personalized Template Generation Strategy Based on Particle Dimensional Distribution

There are three kinds of dimensional distribution of a particle: the first is concentrated distribution, the second is similar to normal distribution, and the third is random distribution. In the first two cases, just finding the mean point of the dimensional distribution can help the particle converge quickly. Therefore, we put forward the particle dimensional concentration degree to judge the particle dimensional distribution. For the particle that meets one of the first two conditions, all dimension values of the personalized template are the same, so as to guide the particle to mine near this dimension value, and then get the mean point of particle dimension distribution through the elite selection strategy.

We use the parameter to regulate the value of . At the initial stage of optimization, the particle dimension distribution is not stable, so should be small. With the progress of iteration, under the elite selection strategy, the dimension distribution of particles will gradually stabilize. At this time, the personalized guidance template can be made for the particle according to its dimensional distribution to help mine their potential knowledge. At the later stage, the dimension distribution of the particle itself has been particularly stable. At this time, each dimension should be optimized and converged separately, so the value of should be reduced.

where is the particle dimension concentration, is the i-th particle, is the concentration control parameter, and is a new particle obtained by disrupting the dimension order in .

When is large, it is more likely to generate templates with the same dimension values. In addition, the dimension value the template chooses for knowledge guidance is the selection of the i-th particle of the parent in the corresponding template block with a certain probability. If the historical particle selection value is empty, it will be generated randomly. See Equations (12) and (13) below for the specific dimension value selection.

where represents the dimension learning mode selected when the i-th particle generates the template L. If , a template with the same dimension value is generated. We set judgment condition 2 in our expression to be superior to condition 1. Where r is a random number between 0 and 1. represents the mode of dimension learning selected when the i-th parent particle generates the template L. L is a random integer of 1 or 2. The history-retrace template is selected when , and the value-driven template is selected when .

where represents the dimension selected by the i-th particle when generating the L-type template. represents the dimension selected by the i-th parent particle when generating the L-type template. is a random integer from 1 to D.

- ① Generate a history-retrace template

When , Equations (14)–(16) are used to generate the history-retrace template, where is the history-retrace template generated for the i-th particle, is the interval lower bound, and is the interval upper bound. represents the first value in , and represents the second value in .

When , the history-retrace template is generated by calculating Equations (17)–(19), where is the lower bound of the interval, and is the upper bound of the interval.

- ② Generate a value-driven template

When the optimal value changes, the change in the dimension values of the optimal particle between the previous and current generations carries information about the optimization trend, and following this trend may help the particle find the optimal value.

The particle selects the optimal value template generation method through a random number . When , the optimal value template generation method follows the changing law of the optimal value, and when , the optimal value template is generated randomly. is used to randomly select whether the template value is increased or decreased.

Where and are both random integers of 1 or 2. When the random number , and in the corresponding selected dimension , or the random number and , the following Equation (20) is used to generate the value-driven template.

When and or and , the following Equation (21) is used to generate the value-driven template.

where is the value-driven template generated for the i-th individual in the j-th dimension.

2.2. Template Guidance Stage

Particle optimization needs to explore the right spatial range at the right time node. After the template generation stage, we obtained the space that needs to be explored emphatically. Therefore, in the template guidance stage, we adopt the template-guided knowledge transfer strategy. The strategy, aimed at improving the optimization efficiency of particles, further controls the step size of particles based on the current iteration’s time node, guiding them to search for key optimal points within the focused exploration space. is a random integer of 1 or 2. In order to increase randomness, the particle uses to randomly select particle-led knowledge transfer or template-led knowledge transfer. When , all dimensions use the same random number. In order to balance the ability to optimize exploration and development, it is not necessary to constrain step size and direction through time when the optimal value changes.

When , the above Equations (22) and (23) generate new particles through knowledge transfer. is the regulatory parameter, and is the template selected by the i-th individual. When , , and when , .

When the optimal value is unchanged, the knowledge transfer strategy that takes the template as the main learning object gradually moves closer to the particle’s direction as the iterations proceed, and the step size gradually increases.

When , Equation (24) generates new particles through knowledge transfer. is a random number in the range .

When the optimal value is unchanged, the direction of the knowledge transfer strategy centered on the particle itself is random, and the particle’s step size toward the template gradually decreases as the iterations proceed.

2.3. Computation Complexity

This section analyzes the time complexity and algorithm running time of PTG. Suppose the population size is N, the number of iterations is T, and the problem dimension is D. The main loop of the algorithm executes T iterations, and in each iteration, the algorithm needs to generate particles through the template generation stage and the template guidance stage. In the template generation stage, the dimension analysis value of each particle needs to be calculated, so the time complexity is . In the template interval expansion strategy, the value of each particle is the same in the current iteration, so the computational complexity is . The time complexity of generating history-retrace templates or value-driven templates for particles is . In the template guidance stage, the time complexity of both boot modes is . Therefore, the overall time complexity of the algorithm is . Since the constant term can be ignored, it can be reduced to .

3. Results

3.1. Experimental Setup

- (1) Benchmark functions: The CEC2005 [46] test suite, containing 23 benchmark functions, is adopted. F1–F13 are high-dimensional problems: F1–F5 are unimodal, F6 is a step function, F7 is a noisy quartic function, and F8–F13 are multimodal. F14–F23 are low-dimensional functions with only a few local minima. The CEC2022 [47] test suite, which poses more complex optimization problems, is also used to evaluate the performance of the algorithm. CEC2022 consists of 12 benchmark functions, including unimodal, multimodal, hybrid, and composition functions: F1 is unimodal, F2–F5 are multimodal, F6–F8 are hybrid functions, which can be unimodal or multimodal, and F9–F12 are composition functions and multimodal.

- (2) Comparison algorithms: We compare the proposed algorithm with four classical algorithms: the Moth-Flame Optimization algorithm (MFO) [35], the Whale Optimization Algorithm (WOA) [36], the Harris Hawks Optimization algorithm (HHO) [37], and the Tunicate Swarm Algorithm (TSA) [39]. We also compare it with recent state-of-the-art optimization algorithms: Educational Competitive Optimization (ECO) [26] (Q2, 2024), the Coati Optimization Algorithm (COA) [38] (Q1, 2023), the Reptile Search Algorithm (RSA) [40] (Q1, 2022), the Fata Morgana Algorithm (FATA) [48] (Q2, 2024), and the Hippopotamus Optimization algorithm (HO) [49] (Q2, 2024). See Table 1 for the detailed settings of the control parameters. For all algorithms, the population size is set to 30, the number of iterations is 1000, and the maximum number of function evaluations is 30,000.

Table 1. Algorithm parameters and their values.

- (3) Experimental environment: All experiments were carried out in MATLAB R2022a on the 64-bit version of the Windows 11 operating system, using an Intel Core processor with 8 logical cores, a base frequency of 1.00 GHz, and a maximum turbo frequency of 1.2 GHz.

3.2. Ablation Experiment

To verify the validity of our proposed framework, we set up the ablation study. The specific results are shown in Table 2.

Table 2. Ablation experiment of PTG on CEC2022.

- (1) PTG-noQ means that the base point set of the history-retrace template does not extract the stagnant historical value Q in Equation (1). The table shows that the PTG solutions are more stable and more accurate than those of PTG-noQ, which indicates that timely backtracking and mining of historically stagnant optimal values helps the algorithm refine the search and reduces time-consuming oscillation and misdirected searches.

- (2) PTG-noPDD refers to adopting a template generation strategy that is not based on the particle’s dimension distribution, specifically, . PTG is superior to PTG-noPDD in all 11 functions, especially F1, F6, and F11, which indicates that the dimensional distribution information of the particle itself is very important for solving unimodal function problems, mixed function problems, and complex and multimodal function problems. It also shows that the personalized template generation strategy based on particle dimensional distribution can promptly adjust the dimension values of the template according to the dimensional distribution of the particle itself and guide the particle to search efficiently.

- (3) PTG-noIE removes the template interval expansion strategy. PTG is superior to PTG-noIE on 12 functions, especially on complex functions such as F5, F6, and F10. This shows that the template interval expansion strategy helps particles escape local optima and enhances the exploration ability of the algorithm.

It can also be seen from the ablation experiment that the function value rarely changes by an order of magnitude after a key strategy is removed, which shows the effectiveness of the algorithm framework guided by the figure-of-merit signal.

3.3. Comparison of PTG with Other Algorithms

3.3.1. Analysis of Experimental Results of CEC2005 Test Function

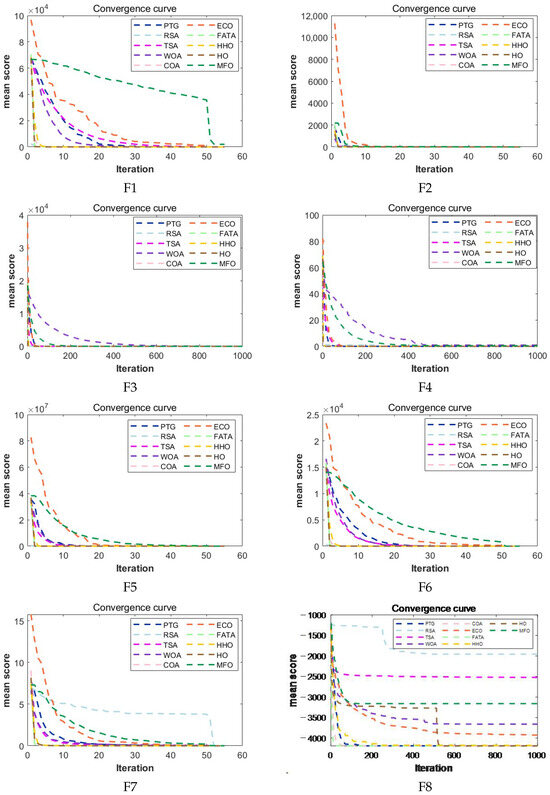

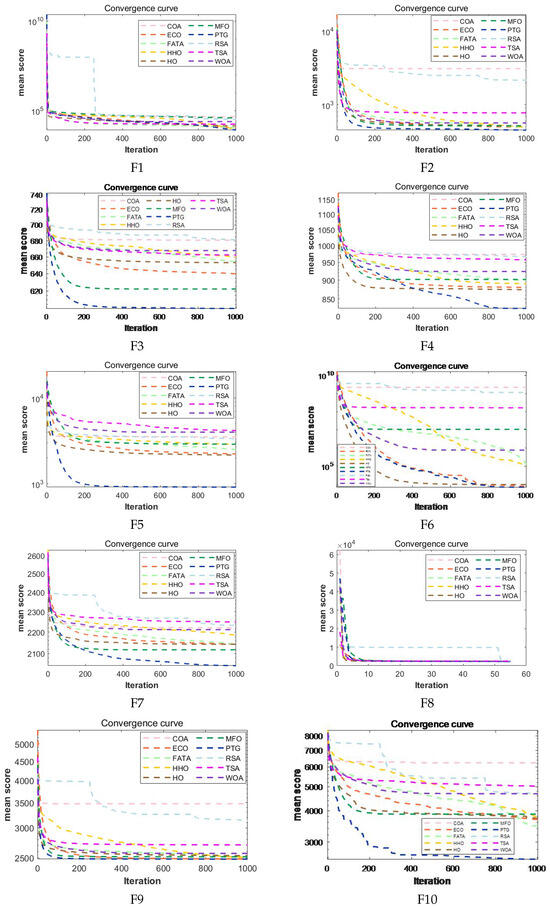

The comparison results between PTG and the comparison algorithms on the CEC2005 test set are shown in Table 3 and Table 4. In the tables, the mean of an algorithm is the average of the optimal solutions it obtained in 30 independent runs. The standard deviation of these 30 solutions measures how far the data points deviate from the mean on average; the larger the value, the more dispersed the data distribution. The 10 algorithms are ranked based on their standard deviations and means, and a smaller ranking value indicates better performance. The convergence of the algorithms is shown in Figure 3, where the vertical axis represents the mean value of the algorithm at the same iteration across the 30 independent runs. For example, the value plotted at iteration 1 on the horizontal axis is the mean of the 30 values obtained at iteration 1 in the 30 independent runs. Because the algorithm converges quickly on some functions, to show the convergence trend more clearly, for functions F1, F2, F5, F6, F7, F11, F12, F13, F14, F15, F16, F17, and F18 only the first 50 of the 1000 mean points and the solution values corresponding to the 200th, 400th, 600th, 800th, and 1000th iterations are plotted.
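The statistics described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the paper does not say whether the sample or population standard deviation is used, nor exactly how mean and standard deviation are combined into a rank, so the sketch assumes the sample form and ranks by mean first and standard deviation second. The function names and the demo data are hypothetical.

```python
import statistics

def summarize_runs(best_values):
    """Return (mean, std) of the best objective values from independent runs."""
    mean = statistics.fmean(best_values)
    # Assumption: sample standard deviation (n - 1 denominator).
    std = statistics.stdev(best_values)
    return mean, std

def rank_algorithms(results):
    """Rank algorithms on one function; rank 1 is best.

    `results` maps an algorithm name to its list of per-run best values.
    Assumption: order by smaller mean, then by smaller std; exact ties
    share the same rank.
    """
    stats = {name: summarize_runs(vals) for name, vals in results.items()}
    ordered = sorted(stats, key=lambda name: stats[name])
    ranks = {}
    for position, name in enumerate(ordered, start=1):
        previous = ordered[position - 2] if position > 1 else None
        if previous is not None and stats[name] == stats[previous]:
            ranks[name] = ranks[previous]
        else:
            ranks[name] = position
    return ranks

# Hypothetical data: three algorithms, five runs each (the paper uses 30 runs).
demo = {
    "A": [0.0, 0.0, 0.0, 0.0, 0.0],
    "B": [1.0, 2.0, 3.0, 2.0, 2.0],
    "C": [0.0, 0.0, 0.0, 0.0, 0.0],
}
demo_ranks = rank_algorithms(demo)
```

In this toy example, algorithms A and C tie for first place and B is placed third, which mirrors the convention that a smaller ranking value indicates better performance.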

Table 3. Performance metrics for algorithms (PTG, WOA, HHO, COA, TSA) on CEC2005 (F1–F23).

Table 4. Performance metrics for algorithms (RSA, FATA, MFO, ECO, HO) on CEC2005 (F1–F23).

Figure 3. Convergence curves of PTG and 9 optimization algorithms in F1–F23 on CEC2005.

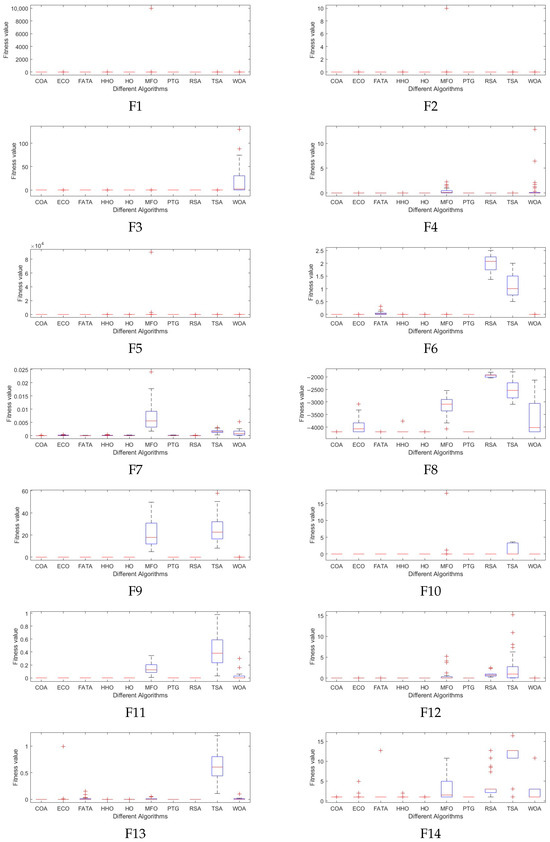

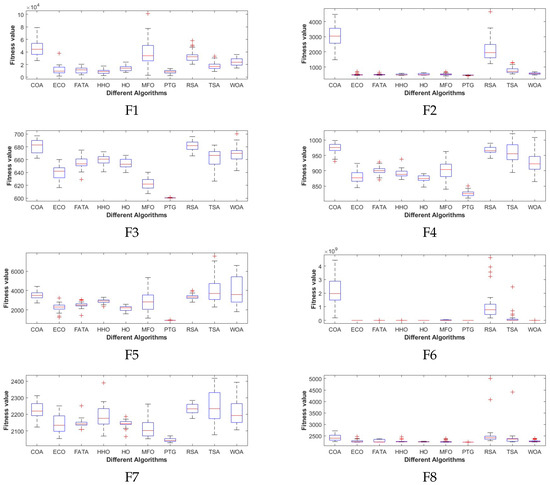

As can be seen from Table 3 and Table 4, in the comparison on unimodal benchmark functions, PTG ranks first on F1–F4 and F6, and on F5 its final result is far superior to that of every other algorithm except COA, with a standard deviation of 0 and a rank of second. Figure 3 shows that the convergence speed of PTG is not always the fastest on F1–F4 and F6, but it converges to a solution better than or equal to that of the other algorithms within about 50 iterations. On F4, although PTG converges more slowly than four of the algorithms, it reaches better function values than they do, so PTG is able to maintain diversity while converging. As can be seen from Figure 4, on F1–F7 the results of the 30 independent runs of PTG are concentrated and, unlike those of the other algorithms, contain no outliers. A “+” in the figure indicates an outlier. In summary, the algorithm is effective in solving unimodal benchmark problems.

Figure 4. Box plots of PTG and 9 optimization algorithms in CEC2005 (F1–F23).

The performance of the algorithm on the multi-dimensional, multimodal test functions F8–F13 of CEC2005 is analyzed as follows. As can be seen from Table 3 and Table 4, PTG always ranks first, and its standard deviation is always the smallest. Figure 3 shows that PTG always converges to a solution better than or equal to that of the other algorithms before the 200th iteration. Figure 4 shows that the PTG results are the most concentrated and contain no outliers. In summary, the algorithm has excellent global search ability.

The algorithm is evaluated on the multimodal benchmark functions F14–F23 as follows. As can be seen from Table 3 and Table 4, the algorithm ranks first on seven functions, the exceptions being F15, F20, and F21, and on F20 it is second only to HO. Figure 3 shows that on F22 the algorithm found a better solution again after converging to a good value and holding it for a number of iterations, indicating that the algorithm has a strong ability to balance exploration and exploitation.

3.3.2. Analysis of Experimental Results of CEC2022 Test Function

The comparison results of PTG and the other algorithms on CEC2022 are shown in Table 5 and Table 6. In the tables, the mean of an algorithm is the average of the optimal solutions it obtained in 30 independent runs. The standard deviation of these 30 solutions measures how far the data points deviate from the mean on average; the larger the value, the more dispersed the data distribution. The 10 algorithms are ranked based on their standard deviations and means. The higher the ranking, the better the performance of the algorithm. The tables show that PTG ranks first among all the compared algorithms and has the highest solution accuracy. Compared with the other algorithms, PTG has the smallest standard deviation and the best stability. On the unimodal function F1, PTG is significantly superior to the comparison algorithms, which indicates that it has a strong global search ability. On the multimodal function F5, PTG is one order of magnitude better than the other algorithms, which means that it can traverse multi-peak terrain so that the population continuously finds better areas. On the mixed function F6, the result of PTG is significantly better than that of the other algorithms, indicating that it is able to solve complex problems.

Table 5. Performance Comparison of Algorithms (PTG, WOA, HHO, COA, TSA) on CEC2022.

Table 6. Performance Comparison of Algorithms (RSA, FATA, MFO, ECO, HO) on CEC2022.

Since CEC2022 contains a variety of test functions, we record the time required for each algorithm to run once on each function in Table 5 and Table 6. As can be seen from the tables, PTG has a long running time: it is faster only than HO and takes more than 2 s longer than the other algorithms. Our analysis shows that most of the time is spent in the template generation stage. At this stage, after the base point and the extended interval of the template are determined, the dimension information of the particles is integrated to generate the template. The ablation experiment indicates that this design is reasonable and effective. The generated template integrates historical information to guide particles to optimize efficiently during the template guidance stage, while eliminating the need for user-defined parameters and thus the difficulty of setting them. The experiments in the next section also show that the algorithm has high accuracy and high stability.

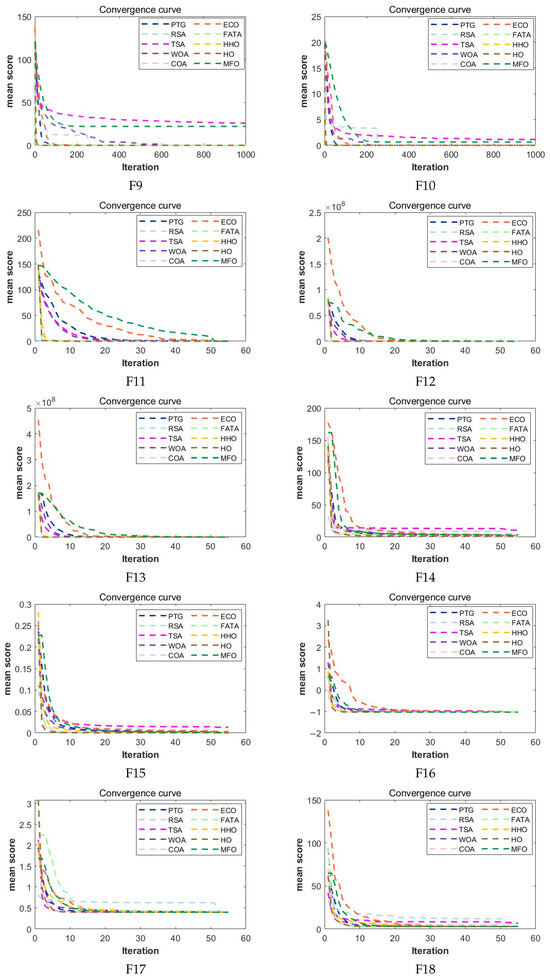

The convergence curves of PTG and the 9 comparison algorithms on CEC2022 are shown in Figure 5, where the vertical axis represents the mean value of the algorithm at the same iteration across the 30 independent runs. Since the algorithm converges very quickly on function F8, to show the convergence trend more clearly, only the first 50 of the 1000 mean points and the solution values corresponding to the 200th, 400th, 600th, 800th, and 1000th iterations are plotted for F8. As can be seen from the figure, on F2, F3, F9, F10, and F12, PTG converges faster than the other algorithms. On F10 in particular, the algorithm had not stagnated by the 200th, 400th, and 600th iterations and could still find better solutions, demonstrating its excellent exploitation capability. On F4, F5, F6, and F7, PTG converges more slowly than some algorithms in the early stage but gradually speeds up in subsequent iterations. When the other algorithms stagnated, PTG caught up with and surpassed them, obtaining the best solution.
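The way the plotted curve is built, averaging the runs at each iteration and then keeping only the first 50 points plus five checkpoints, can be sketched as follows. The function names and the toy histories are hypothetical, and the sketch assumes that each run stores one best-so-far value per iteration.

```python
def mean_convergence_curve(histories):
    """Average the best-so-far values across runs at each iteration.

    `histories` is a list of runs; each run is a list with one best-so-far
    value per iteration, and all runs are assumed to have the same length.
    """
    n_runs = len(histories)
    return [sum(values) / n_runs for values in zip(*histories)]

def plotted_iterations(total=1000, head=50,
                       checkpoints=(200, 400, 600, 800, 1000)):
    """1-based iteration numbers kept when plotting fast-converging functions."""
    kept = list(range(1, head + 1))
    kept += [c for c in checkpoints if head < c <= total]
    return kept

# Hypothetical example: two runs of four iterations each.
curve = mean_convergence_curve([[4.0, 2.0, 1.0, 1.0], [6.0, 4.0, 1.0, 0.0]])
xs = plotted_iterations()
```

With the default arguments, `xs` holds 55 iteration numbers, so the early part of the curve is shown at full resolution while the long flat tail is represented by only five points.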

Figure 5. Convergence curves (F1–F12) of PTG and 9 optimization algorithms on CEC2022.

The box plots of the optimization algorithms on the CEC2022 benchmark functions are shown in Figure 6, where a “+” indicates an outlier. Each box plot is drawn from the 30 optimal solutions obtained in 30 independent runs of an algorithm and helps us analyze the dispersion and outliers of the data. As can be seen from the figure, PTG has a more concentrated distribution than the comparison algorithms on F1, F2, F3, F5, F6, F8, F9, F10, F11, and F12. On every function except F10, PTG produces at most one outlier, which indicates relatively high stability.
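For readers who want to reproduce the outlier marks, the following Python sketch flags outliers with the common 1.5 × IQR whisker rule. The paper does not state which rule or quartile method was used to draw the figures, so both the whisker factor and the quartile interpolation here are assumptions, and the run values are made up for illustration.

```python
import statistics

def find_outliers(values, whisker=1.5):
    """Return the values lying beyond the box-plot whiskers.

    Assumption: whiskers extend `whisker` times the interquartile range
    beyond the first and third quartiles.
    """
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - whisker * iqr, q3 + whisker * iqr
    return [v for v in values if v < low or v > high]

# Hypothetical best values from eight runs; one run is clearly worse.
runs = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 1.0, 5.0]
outliers = find_outliers(runs)
```

A set of runs that all reach the same value has an interquartile range of zero and therefore no outliers under this rule, which is the situation described for PTG on most functions.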

Figure 6. Box plots (F1–F12) of optimization algorithms on CEC2022 benchmark functions.

3.3.3. Statistical Testing

The Friedman test [50] is a nonparametric method that uses ranks to test whether there are significant differences among multiple population distributions. The test results are shown in Table 7. As can be seen from the table, on both the CEC2005 and CEC2022 test functions the proposed algorithm ranks first in the Friedman rank test, from which we conclude that PTG is clearly superior to the other algorithms.
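As an illustration of how Friedman mean ranks are obtained, the sketch below ranks the algorithms on each test function, averages the ranks per algorithm, and computes the chi-square form of the Friedman statistic without a tie correction. The score matrix is hypothetical, and the sketch is not the authors' script.

```python
def average_ranks(row):
    """Rank one row of scores (smaller is better); ties get the average rank."""
    order = sorted(range(len(row)), key=lambda i: row[i])
    ranks = [0.0] * len(row)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and row[order[end + 1]] == row[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # mean of the 1-based positions in the tie
        for k in range(start, end + 1):
            ranks[order[k]] = shared
        start = end + 1
    return ranks

def friedman(scores):
    """scores[i][j] is the mean result of algorithm j on test function i.

    Returns (mean_ranks, statistic), where statistic is
    12 n / (k (k + 1)) * sum_j (mean_rank_j - (k + 1) / 2) ** 2.
    """
    n, k = len(scores), len(scores[0])
    rank_rows = [average_ranks(row) for row in scores]
    mean_ranks = [sum(r[j] for r in rank_rows) / n for j in range(k)]
    statistic = 12 * n / (k * (k + 1)) * sum(
        (r - (k + 1) / 2) ** 2 for r in mean_ranks
    )
    return mean_ranks, statistic

# Hypothetical scores: 4 test functions (rows) x 3 algorithms (columns).
demo_scores = [[1, 2, 3], [1, 3, 2], [1, 2, 3], [2, 1, 3]]
mean_ranks, stat = friedman(demo_scores)
```

The algorithm with the smallest mean rank is the one reported as ranking first; the statistic is then compared against a chi-square distribution with k − 1 degrees of freedom to judge significance.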

Table 7. Results of the Friedman rank test.

3.4. Engineering Problem Experiment

We applied PTG to four engineering problems, using 30 independent runs, with 30 individuals in the population and 1000 iterations.

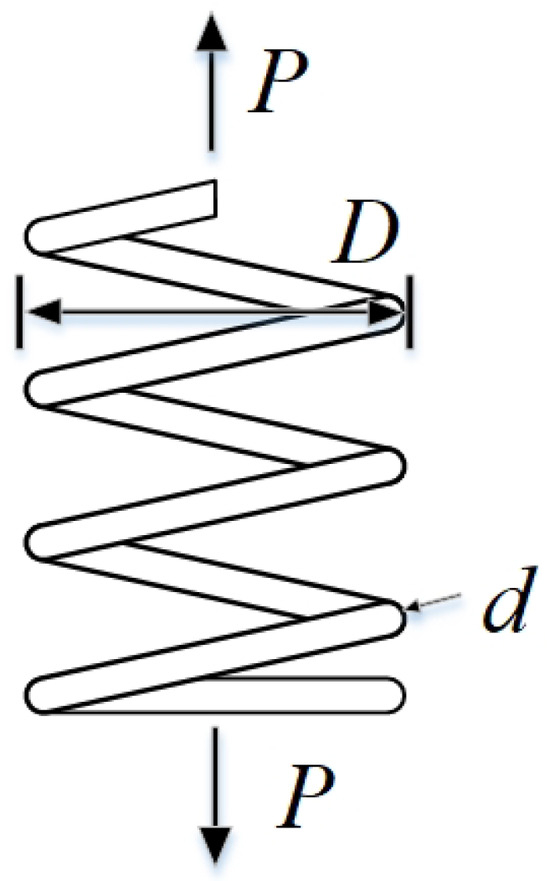

3.4.1. The Problem of Compression Spring Design

This problem involves three parameters: the diameter of the spring wire, the average diameter of the spring coil, and the number of active coils. The goal is to minimize the weight of the spring. When the spring is subjected to an external force, several quantities are constrained: the maximum allowable deformation at its end or at a designated position, the ratio of the shear force generated in the material by the external force to the material’s cross-sectional area, and the number of vibrations per unit time under a periodic external force. For specific definitions, see Equations (25)–(27); the problem diagram is shown in Figure 7.

Figure 7. Schematic diagram of design problems of compression spring.

Definition:

Minimization function:

Set of constraint function:
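For orientation, the sketch below encodes the formulation of the tension/compression spring benchmark that is commonly used in the metaheuristics literature. It is an assumption that the paper's Equations (25)–(27) match this form; the constants, the variable names `d`, `D`, and `N`, and the static penalty wrapper are illustrative and are not taken from the paper.

```python
def spring_weight(d, D, N):
    """Objective: spring weight for wire diameter d, coil diameter D, N coils."""
    return (N + 2.0) * D * d ** 2

def spring_constraints(d, D, N):
    """Constraint values g_i(x); the design is feasible when every g_i <= 0."""
    g1 = 1.0 - (D ** 3 * N) / (71785.0 * d ** 4)             # minimum deflection
    g2 = ((4.0 * D ** 2 - d * D) / (12566.0 * (D * d ** 3 - d ** 4))
          + 1.0 / (5108.0 * d ** 2) - 1.0)                    # shear stress
    g3 = 1.0 - 140.45 * d / (D ** 2 * N)                      # surge frequency
    g4 = (d + D) / 1.5 - 1.0                                  # outer diameter
    return [g1, g2, g3, g4]

def penalized_weight(x, penalty=1e6):
    """Hypothetical static-penalty wrapper so an unconstrained optimizer can be used."""
    d, D, N = x
    violation = sum(max(0.0, g) ** 2 for g in spring_constraints(d, D, N))
    return spring_weight(d, D, N) + penalty * violation

# A clearly feasible design and a design that violates the shear-stress limit.
feasible_design = (0.06, 0.5, 10.0)
infeasible_design = (0.05, 1.0, 2.0)
```

A population-based optimizer would then minimize `penalized_weight` within the variable bounds, so that infeasible designs are pushed out of the population by their large penalized values.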

The solution results of PTG and the comparison algorithms are shown in Table 8. Compared with the other algorithms, PTG obtains the optimal value, and its mean and mean square error over 30 independent runs are the smallest, so its results are the most stable. Its performance on compression spring design is therefore better than that of the competing algorithms.

Table 8. Comparison results of PTG and advanced algorithms in compression spring design.

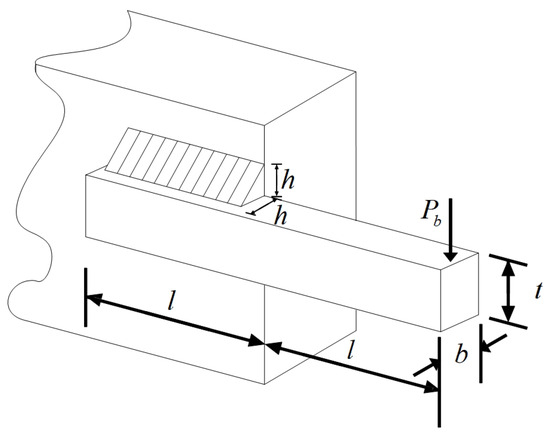

3.4.2. Welded Beam Structure Problem

The structural design of a welded beam involves four parameters: weld thickness h, bar clamping length l, bar height t, and bar thickness b. The objective is to minimize the manufacturing cost of the welded beam while satisfying constraints on the shear stress, the bending stress in the beam, the bending load of the beam, and the deflection of the beam end. The problem is illustrated in Figure 8. For specific definitions, see Equations (28)–(30).

Figure 8. Schematic diagram of structural design problems of welded beams.

Definition of the problem:

The objective function:

Constraint setting:

The solution results of PTG and the comparison algorithms are shown in Table 9. The optimal solution found by PTG is , and the corresponding value of the objective function is 1.724399. Compared with the other algorithms, PTG obtains the optimal value, and its mean and mean square error over 30 independent runs are the smallest, so its results are the most stable. Its performance on welded beam structure design is therefore better than that of the competing algorithms.

Table 9. Comparison results of PTG and advanced algorithms in structural design of welded beams.

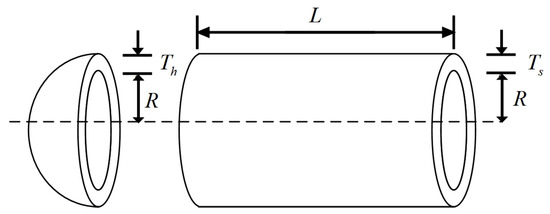

3.4.3. Pressure Vessel Design Problem

The goal of pressure vessel design is to minimize the manufacturing cost of a pressure vessel (pairing, forming, and welding). Both ends of the vessel are capped, and the head is hemispherical. The wall thickness of the cylinder and the wall thickness of the head are integer multiples of 0.625, and the manufacturing cost of the pressure vessel is minimized subject to the constraints. The design of the pressure vessel is shown in Figure 9. For specific definitions, see Equations (31)–(33).

Figure 9. Schematic diagram of pressure vessel design problems.

Definition of the problem:

The objective function:

Constraint setting:

The solution results of PTG and the comparison algorithms are shown in Table 10. The L value obtained by all the algorithms is 200. The optimal solution found by PTG is , , , , and the corresponding value of the objective function is 753.5014127298541. The table shows that the optimal value of PTG is second only to those of MFO and ECO and better than those of all the other algorithms. The mean square deviation of PTG over 30 independent runs is the smallest, so its results are the most stable, and both its mean and its mean square deviation are better than those of ECO.

Table 10. Comparison results of PTG and advanced algorithms in pressure vessel design.

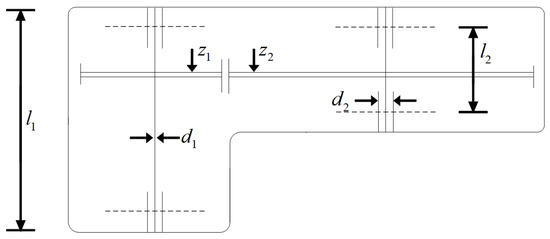

3.4.4. Reducer Design Problem

Seven parameters are involved in the design of the reducer: the width b, the module of the teeth m, the number of gear teeth z, the lengths of the first and second shafts between bearings, and the diameters of the first and second shafts. The weight of the reducer is minimized by optimizing the weight of the gear and the axial deformation of the shaft. The diagram of the problem is shown in Figure 10. For specific definitions, see Equations (34)–(36).

Figure 10. Schematic diagram of reducer design problems.

Definition of the problem:

The objective function:

Constraint setting:

The solution results of PTG and the comparison algorithms are shown in Table 11. , , , . The optimal value of PTG is better than that of FATA and equal to that of the other two comparison algorithms, but its mean and mean square error over 30 independent runs are the smallest among all algorithms, so its results are the most stable. Its performance on the reducer design problem is therefore better than that of the competing algorithms.

Table 11. Comparison results of PTG and advanced algorithms in reducer design.

4. Conclusions

Existing heuristic algorithms are mostly based on inspiration sources, which makes them random and unstable. Therefore, based on optimization principles, this paper proposes a highly stable algorithm named PTG. In particular, PTG effectively uses knowledge of each particle’s own dimension distribution to accelerate convergence, and it uses knowledge of the population distribution to help particles escape local optima. Unlike the comparison algorithms, PTG preserves and uses random particles of the population recorded while the figure of merit remains constant, which helps the algorithm retrace previously visited points and avoid missing good solutions.

The experimental results show that, compared with the other nine algorithms, PTG performs best on the 23 classical benchmark functions and the CEC2022 benchmark functions, which shows that PTG is suitable for solving both multimodal problems and unimodal benchmark functions with global convergence. PTG also solved four engineering problems (compression spring design, welded beam structure, pressure vessel, and reducer design), which demonstrates its practical application value. In addition, PTG ranks first in the Friedman test, which shows that it has clear advantages on two different types of test functions. Although PTG has many advantages, it still has several limitations. Firstly, when dealing with high-dimensional data, the performance of PTG is similar to that of the comparison algorithms, which indicates that the dimension distribution strategy requires a more comprehensive design to handle high-dimensional complex problems. Secondly, real-world problems are intricate, and PTG may perform poorly on problems with complex constraints. Both limitations need to be addressed in future work. We also plan to extend PTG to multi-objective optimization problems and to use it to optimize large deep models.

Author Contributions

Conceptualization, D.H.; Data curation, D.H.; Formal analysis, D.H.; Investigation, D.H.; Methodology, D.H.; Project administration, X.H.; Resources, D.H.; Software, D.H.; Supervision, X.H.; Validation, D.H.; Visualization, D.H.; Writing—original draft, D.H.; Writing—review and editing, D.H., X.H., M.G., Y.C. and T.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research is supported by the National Social Science Foundation of China (24BTJ041).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are contained in this paper. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Li, S.; Chen, H.; Wang, M.; Heidari, A.A.; Mirjalili, S. Slime mould algorithm: A new method for stochastic optimization. Future Gener. Comput. Syst. 2020, 111, 300–323.

2. Mi, Z.; Yang, B. Heuristic Algorithms. Cornell University Computational Optimization Open Textbook. 2024. Available online: https://optimization.cbe.cornell.edu/index.php?title=Heuristic_algorithms (accessed on 15 December 2024).

3. Yu, D.M.; Cheng, B.R.; Cheng, H.; Wu, L.; Li, X.Y. A quantum portfolio optimization algorithm based on hard constraint and warm starting. J. Univ. Electron. Sci. Technol. China 2025, 54, 116–124.

4. Zhang, X.; Yu, L.; Li, S.; Zhang, A.; Zhang, B. Research Progress of Metaheuristic Algorithm in Path Planning of Plant Protection UAV. J. Agric. Mech. Res. 2025, 47, 1–9.

5. Roni, M.H.K.; Rana, M.; Pota, H.; Hasan, M.M.; Hussain, M.S. Recent trends in bio-inspired meta-heuristic optimization techniques in control applications for electrical systems: A review. Int. J. Dyn. Control. 2022, 10, 999–1011.

6. Natesha, B.; Guddeti, R.M.R. Meta-heuristic based hybrid service placement strategies for two-level fog computing architecture. J. Netw. Syst. Manag. 2022, 30, 47.

7. Bhavya, R.; Elango, L. Ant-inspired metaheuristic algorithms for combinatorial optimization problems in water resources management. Water 2023, 15, 1712.

8. Alghamdi, M. Smart city urban planning using an evolutionary deep learning model. Soft Comput. 2024, 28, 447–459.

9. Yang, J.; Xia, Y. Coverage and routing optimization of wireless sensor networks using improved cuckoo algorithm. IEEE Access 2024, 12, 39564–39577.

10. Woodcock, A.; Zhang, L. Genetic Algorithm with Reinforcement Learning based Parameter Optimisation. In Proceedings of the 2024 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Kuching, Malaysia, 6–10 October 2024; pp. 4833–4839.

11. Xu, Q.; Meng, Z. Differential Evolution with multi-stage parameter adaptation and diversity enhancement mechanism for numerical optimization. Swarm Evol. Comput. 2025, 92, 101829.

12. Zhong, J.; Dong, J.; Liu, W.L.; Feng, L.; Zhang, J. Multiform Genetic Programming Framework for Symbolic Regression Problems. IEEE Trans. Evol. Comput. 2025, 29, 429–443.

13. Zhang, Y.; Zhang, H. Enhancing robot path planning through a twin-reinforced chimp optimization algorithm and evolutionary programming algorithm. IEEE Access 2023, 12, 170057–170078.

14. Hussien, A.G.; Heidari, A.A.; Ye, X.; Liang, G.; Chen, H.; Pan, Z. Boosting whale optimization with evolution strategy and Gaussian random walks: An image segmentation method. Eng. Comput. 2023, 39, 1935–1979.

15. Zhang, Z.; Gao, Y.; Liu, Y.; Zuo, W. A hybrid biogeography-based optimization algorithm to solve high-dimensional optimization problems and real-world engineering problems. Appl. Soft Comput. 2023, 144, 110514.

16. Su, F.; Wang, Y.; Yang, S.; Yao, Y. A Manifold-Guided Gravitational Search Algorithm for High-Dimensional Global Optimization Problems. Int. J. Intell. Syst. 2024, 2024, 5806437.

17. Wei, J.; Zhang, Y.; Wei, W. Augmenting Particle Swarm Optimization with Simulated Annealing and Dimensional Learning for UAVs Path Planning. In Proceedings of the 2024 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Kuching, Malaysia, 6–10 October 2024; pp. 3721–3726.

18. Abirami, A.; Palanikumar, S. BBBC-DDRL: A hybrid big-bang big-crunch optimization and deliberated deep reinforced learning mechanisms for cyber-attack detection. Comput. Electr. Eng. 2023, 109, 108773.

19. Zhu, C.; Zhang, Y.; Wang, M.; Deng, J.; Cai, Y.; Wei, W.; Guo, M. Optimization, validation and analyses of a hybrid PV-battery-diesel power system using enhanced electromagnetic field optimization algorithm and ε-constraint. Energy Rep. 2024, 11, 5335–5349.

20. Gharehchopogh, F.S. Quantum-inspired metaheuristic algorithms: Comprehensive survey and classification. Artif. Intell. Rev. 2023, 56, 5479–5543.

21. Charest, T.; Green, R.C. Implementing Central Force optimization on the Intel Xeon Phi. In Proceedings of the 2020 IEEE International Parallel and Distributed Processing Symposium Workshops (IPDPSW), New Orleans, LA, USA, 18–22 May 2020; IEEE: New York, NY, USA, 2020; pp. 502–511.

22. Talatahari, S.; Azizi, M. An extensive review of charged system search algorithm for engineering optimization applications. In Nature-Inspired Metaheuristic Algorithms for Engineering Optimization Applications; Springer: Singapore, 2021; pp. 309–334.

23. Kaveh, A.; Kaveh, A. Ray optimization algorithm. In Advances in Metaheuristic Algorithms for Optimal Design of Structures; Springer: Singapore, 2017; pp. 237–280.

24. Bao, Y.Y.; Xing, C.; Wang, J.S.; Zhao, X.R.; Zhang, X.Y.; Zheng, Y. Improved teaching–learning-based optimization algorithm with Cauchy mutation and chaotic operators. Appl. Intell. 2023, 53, 21362–21389.

25. Tang, Y.; Zhou, F. An improved imperialist competition algorithm with adaptive differential mutation assimilation strategy for function optimization. Expert Syst. Appl. 2023, 211, 118686.

26. Lian, J.; Zhu, T.; Ma, L.; Wu, X.; Heidari, A.A.; Chen, Y.; Chen, H.; Hui, G. The educational competition optimizer. Int. J. Syst. Sci. 2024, 55, 3185–3222.

27. Du, J.; Wen, Y.; Wang, L.; Zhang, P.; Fei, M.; Pardalos, P.M. An adaptive human learning optimization with enhanced exploration–exploitation balance. Ann. Math. Artif. Intell. 2023, 91, 177–216.

28. Rehman, H.; Sajid, I.; Sarwar, A.; Tariq, M.; Bakhsh, F.I.; Ahmad, S.; Mahmoud, H.A.; Aziz, A. Driving training-based optimization (DTBO) for global maximum power point tracking for a photovoltaic system under partial shading condition. IET Renew. Power Gener. 2023, 17, 2542–2562.

29. Daqaq, F.; Hassan, M.H.; Kamel, S.; Hussien, A.G. A leader supply-demand-based optimization for large scale optimal power flow problem considering renewable energy generations. Sci. Rep. 2023, 13, 14591.

30. Bao, Y.Y.; Wang, J.S.; Liu, J.X.; Zhao, X.R.; Yang, Q.D.; Zhang, S.H. Student psychology based optimization algorithm integrating differential evolution and hierarchical learning for solving data clustering problems. Evol. Intell. 2025, 18, 1–23.

31. Wang, Y.; Zhou, S. An improved poor and rich optimization algorithm. PLoS ONE 2023, 18, e0267633.

32. Wang, X.; Xu, J.; Huang, C. Fans Optimizer: A human-inspired optimizer for mechanical design problems optimization. Expert Syst. Appl. 2023, 228, 120242.

33. Bashkandi, A.H.; Sadoughi, K.; Aflaki, F.; Alkhazaleh, H.A.; Mohammadi, H.; Jimenez, G. Combination of political optimizer, particle swarm optimizer, and convolutional neural network for brain tumor detection. Biomed. Signal Process. Control. 2023, 81, 104434.

34. Elleuch, S.; Brahmi, I.; Hamdi, M.; Zarai, F. Efficient PSO Coupled with a Local Search Heuristic for Radio Resource Allocation in V2X Communications. In Proceedings of the 2024 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Kuching, Malaysia, 6–10 October 2024; pp. 2507–2512.

35. Zamani, H.; Nadimi-Shahraki, M.H.; Mirjalili, S.; Soleimanian Gharehchopogh, F.; Oliva, D. A critical review of moth-flame optimization algorithm and its variants: Structural reviewing, performance evaluation, and statistical analysis. Arch. Comput. Methods Eng. 2024, 31, 2177–2225.

36. Amiriebrahimabadi, M.; Mansouri, N. A comprehensive survey of feature selection techniques based on whale optimization algorithm. Multimed. Tools Appl. 2024, 83, 47775–47846.

37. Akl, D.T.; Saafan, M.M.; Haikal, A.Y.; El-Gendy, E.M. IHHO: An improved Harris Hawks optimization algorithm for solving engineering problems. Neural Comput. Appl. 2024, 36, 12185–12298.

38. Dehghani, M.; Montazeri, Z.; Trojovská, E.; Trojovskỳ, P. Coati Optimization Algorithm: A new bio-inspired metaheuristic algorithm for solving optimization problems. Knowl.-Based Syst. 2023, 259, 110011.

39. Jumakhan, H.; Abouelnour, S.; Redhaei, A.A.; Makhadmeh, S.N.; Al-Betar, M.A. Recent Versions and Applications of Tunicate Swarm Algorithm. Arch. Comput. Methods Eng. 2025, 1–30.

40. Abualigah, L.; Abd Elaziz, M.; Sumari, P.; Geem, Z.W.; Gandomi, A.H. Reptile Search Algorithm (RSA): A nature-inspired meta-heuristic optimizer. Expert Syst. Appl. 2022, 191, 116158.

41. Punia, P.; Raj, A.; Kumar, P. Enhanced zebra optimization algorithm for reliability redundancy allocation and engineering optimization problems. Clust. Comput. 2025, 28, 267.

42. Zhu, S.; Pun, C.M.; Zhu, H.; Li, S.; Huang, X.; Gao, H. An artificial bee colony algorithm with a balance strategy for wireless sensor network. Appl. Soft Comput. 2023, 136, 110083.

43. Sharma, N.; Gupta, V.; Johri, P.; Elngar, A.A. SHO-CH: Spotted hyena optimization for cluster head selection to optimize energy in wireless sensor network. Peer-to-Peer Netw. Appl. 2025, 18, 1–18.

44. Guo, J.; Zhou, G.; Yan, K.; Shi, B.; Di, Y.; Sato, Y. A novel hermit crab optimization algorithm. Sci. Rep. 2023, 13, 9934.

45. Alirezapour, H.; Mansouri, N.; Mohammad Hasani Zade, B. A comprehensive survey on feature selection with grasshopper optimization algorithm. Neural Process. Lett. 2024, 56, 28.

46. Yao, X.; Liu, Y.; Lin, G. Evolutionary programming made faster. IEEE Trans. Evol. Comput. 1999, 3, 82–102.

47. Kumar, A.; Price, K.V.; Mohamed, A.W.; Hadi, A.A.; Suganthan, P.N. Problem Definitions and Evaluation Criteria for the 2022 Special Session and Competition on Single Objective Bound Constrained Numerical Optimization; Technical Report; Nanyang Technological University: Singapore, 2021.

48. Qi, A.; Zhao, D.; Heidari, A.A.; Liu, L.; Chen, Y.; Chen, H. FATA: An efficient optimization method based on geophysics. Neurocomputing 2024, 607, 128289.

49. Amiri, M.H.; Hashjin, N.M.; Montazeri, M.; Mirjalili, S.; Khodadadi, N. Hippopotamus optimization algorithm: A novel nature-inspired optimization algorithm. Sci. Rep. 2024, 14, 5032.

50. Watanabe, K. Current status of the position on labor progress prediction for contemporary pregnant women using Friedman curves: An updated review. J. Obstet. Gynaecol. Res. 2024, 50, 313–321.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).