Abstract

Lithology identification is one of the most important research areas in petroleum engineering, underpinning reservoir characterization, formation evaluation, and reservoir modeling. In the second member of the Lucaogou Formation in the Santanghu Basin, the complex structural setting, the diverse lithofacies types, and the differences between the recording standards of logging data and core data cause the logging responses of different lithologies to overlap significantly. Machine learning methods have strong nonlinear modeling capabilities, which give them a clear advantage in handling complex nonlinear relationships in data. In this paper, the lithologies in the study area are classified, in order of decreasing felsic content, into four categories: tuff, dolomitic tuff, tuffaceous dolomite, and dolomite. We also select logging attributes that are sensitive to lithology, such as natural gamma ray, acoustic transit time, neutron, and compensated density. Three machine learning methods, XGBoost, random forest, and support vector regression (SVR), were then used for lithology identification and favorable reservoir prediction. The results show that when trained with 80% of the samples, all three models improved to varying degrees. Random forest performed best in predicting felsic content, with an MAE of 0.11, an MSE of 0.020, an RMSE of 0.14, and an R2 of 0.43. XGBoost ranked second, with an MAE of 0.12, an MSE of 0.022, an RMSE of 0.15, and an R2 of 0.42. SVR performed the poorest. A comparison between the actual core data and the predicted data shows that the predictions are relatively close to the XRD results, indicating that the prediction accuracy is high.

1. Introduction

In the process of well logging reservoir evaluation, lithology identification plays a crucial role. Coring in drilling is the most reliable technique for lithology identification. However, due to the high costs and limitations on core recovery rates, it is generally not feasible to core all intervals of a well. In this case, relying on well logging data for lithology analysis has become a critical technical approach. Currently, traditional methods and intelligent recognition methods are the primary analytical tools [1].

Traditional methods include the chart method, qualitative interpretation based on logging curves, and quantitative interpretation based on response equations. These methods largely rely on linear relationships and have limited ability to capture the nonlinear relationships between logging parameters and lithology, especially under complex geological conditions. For instance, lithology identification models based on probabilistic and statistical analysis often depend heavily on prior information and are highly sensitive to assumptions about the data distribution. Similarly, the crossplot method analyzes two- or three-dimensional crossplots of logging parameters, but it relies strongly on the empirical judgment of geological experts and performs poorly in complex lithological transition zones. In short, these methods have significant limitations when identifying complex carbonate lithologies, and most can distinguish only a few lithologies, such as limestone and dolomite [2,3,4].

Due to the rapid development of machine learning technology, intelligent machine learning methods have been widely applied in lithology identification. Many scholars have used support vector machines [5,6], neural networks [7,8,9], fuzzy recognition [10,11], and traditional decision tree methods [12,13,14] to classify and identify complex carbonate rock lithology and carbonate rock diagenetic facies. These methods demonstrate advantages in handling large amounts of data and recognizing complex patterns, which helps improve the accuracy and efficiency of lithology identification [15]. Therefore, combining traditional logging interpretation methods with machine learning techniques can more effectively identify complex carbonate rock lithology, providing more accurate information for logging reservoir evaluation.

Zhao Jian et al. (2003) [16] utilized density, resistivity, and gamma-ray logging curves to identify lithology in an operational area by analyzing the corresponding logging curve response characteristics in their study of the Songliao Basin in China. Subsequently, Zhang Daquan et al. [17], through core analysis, selected optimal logging curves and established crossplots, which greatly enhanced the identification of lithology in the Junggar area. Following this, other scholars have also applied similar methods in their research. For example, Pang et al. [18] observed core samples, conducted classification, and then used logging curve crossplots, ultimately achieving lithology identification.

The crossplot method is an important technique in geophysical logging, primarily used for lithology identification and interpretation. This method involves using two or more logging parameters (natural gamma ray, resistivity, acoustic transit time, etc.) as the axes to plot a crossplot on a plane. This allows for a visual representation of the characteristics and differences in logging responses for different lithologies or formations [19]. Therefore, the crossplot method is one of the most commonly used methods in lithology identification.

The crossplot method is effective when the lithology is relatively simple and the corresponding features of the logging curves show significant differences. However, when the lithology becomes complex and the differences in the logging curve characteristics of the rocks are not pronounced, it becomes difficult to achieve effective results. In order to deal with this issue, some scholars have proposed using statistical methods for lithology identification. This approach involves organizing and summarizing observational data, followed by classification, to overcome the limitations of the crossplot method. The mathematical and statistical method was first proposed by Delfiner [20], and later, J.M. Busch and others applied this method in practical production, thoroughly demonstrating its feasibility and effectiveness. This method was later introduced to China, where domestic scholars conducted extensive research. Liu Ziyun et al. [21] designed a corresponding program based on this method for lithology identification in the North China region. In 2007, Liu et al. [22] developed a lithology identification model suitable for the study area using multivariate statistical methods. Practical verification has revealed that the multiple discriminant method can effectively identify lithology in most cases. However, it has significant errors when identifying lithology in transitional layers. Therefore, this method is not suitable when the geological features and lithology are complex. Based on the research progress of scholars both domestically and internationally, the mathematical and statistical method still relies heavily on expert experience. However, with the continuous development of computer technology, artificial intelligence has become increasingly complementary to this approach. Combining mathematical and statistical methods with AI for lithology identification has also become a popular research area [23].

In 2013, Wang et al. [24] compared the application effects of neural network and support vector machine models and concluded that combining machine learning methods with other optimization algorithms could achieve lower computational costs and better stability. Later, Bhattacharya et al. [25] compared the performance of support vector machines, artificial neural networks, self-organizing maps, and multi-resolution graph-based clustering algorithms in shale lithology identification and showed that the support vector machine outperformed the other methods in lithology classification and identification.

In a global machine learning competition organized by Brendon Hall [26], participating teams used various machine learning algorithms for lithology identification. The comparison revealed that the XGBoost algorithm achieved the highest accuracy in lithology classification and identification. Zhou et al. [27] applied the rough set–random forest algorithm to train logging data and sample data, establishing a corresponding discriminant model. This approach was proven to have high accuracy in lithology identification. In 2018, An Peng et al. [28] based their work on the TensorFlow deep learning framework, integrating various techniques such as the Adagrad optimization algorithm, ReLU activation function, and Softmax regression layer into a neural network model. After training with sample data, this model demonstrated extremely high accuracy in conventional lithology identification. In 2019, Yadigar Imamverdiyev et al. [29] developed a one-dimensional convolutional neural network model. Compared to other machine learning methods, this model provided more accurate lithology classification predictions and also performed well in areas with complex geological structures. Chen et al. [30] applied convolutional neural networks to a specific study area and ultimately achieved very promising results. This paper utilizes machine learning methods, employing artificial intelligence for nonlinear prediction, to predict felsic content using logging data. Through research and analysis, three suitable machine learning methods were selected: XGBoost, random forest, and support vector regression. The predicted felsic content, along with the existing TOC content, was used for lithology identification and to predict favorable reservoirs.

2. Model Principles

2.1. Decision Tree and Random Forest Algorithm Principles

A decision tree consists of several key elements: the root node, which is the starting point of the tree structure; internal decision nodes; branches, which represent the decision paths; and leaf nodes, which hold the final prediction outcomes. Each internal node tests one feature of the sample (for example, whether a logging value exceeds a threshold), and each branch leads from that test to a new decision node or to a leaf node. Each leaf node stores the predicted value for the samples that reach it. Prediction starts at the root node and moves downward step by step: at each node the test is evaluated, and the path continues along the branch that matches the outcome until a leaf node is reached. This process embodies the core of the decision tree algorithm, which uses multiple variables to perform classification or regression on the target. Figure 1 illustrates a simple conceptual diagram of the decision tree principle.

Figure 1.

Schematic diagram of the decision tree principle.
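The root-to-leaf traversal described above can be sketched in a few lines of Python. This is a minimal illustration only: the `Node` class, the use of GR and DEN as split variables, and every threshold and leaf value are hypothetical, not a tree fitted to the study data.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """A regression-tree node (hypothetical structure for illustration)."""
    feature: Optional[str] = None   # feature tested at an internal node
    threshold: float = 0.0          # split threshold for that feature
    left: Optional["Node"] = None   # branch taken when value <= threshold
    right: Optional["Node"] = None  # branch taken when value > threshold
    value: Optional[float] = None   # prediction stored at a leaf


def predict(node: Node, sample: dict) -> float:
    """Start at the root and follow the branch chosen by each test until a leaf."""
    while node.value is None:
        if sample[node.feature] <= node.threshold:
            node = node.left
        else:
            node = node.right
    return node.value


# Hypothetical two-level tree on normalized GR and DEN values.
tree = Node(
    feature="GR", threshold=0.5,
    left=Node(feature="DEN", threshold=0.4,
              left=Node(value=0.30), right=Node(value=0.45)),
    right=Node(value=0.70),
)

print(predict(tree, {"GR": 0.3, "DEN": 0.6}))
```

Here the sample goes left at the GR test and right at the DEN test, so it ends at the leaf holding 0.45.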

Random forest (RF) is an ensemble learning method that improves prediction accuracy and stability by constructing multiple decision trees and integrating their results. This method introduces two main types of randomness when building each decision tree: first, by using bootstrap sampling to generate multiple different training subsets from the original dataset, it ensures that each tree is trained on a different subset of data; second, at each split node, a random subset of features is selected as candidate features, and the best feature is chosen from this subset to perform the split. This further increases diversity among the trees [31]. The final prediction of the random forest is obtained by aggregating the results of multiple decision trees. For classification problems, the majority voting method is typically used; for regression problems, the average of all the trees’ predicted results is calculated as the final output. The advantage of this method lies in its ability to provide high prediction accuracy while effectively resisting overfitting.
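The two sources of randomness and the averaging step can be illustrated with a short pure-Python sketch. To keep it brief, each "tree" here is a depth-1 regression tree (a stump) rather than a full tree, and all function names are hypothetical. The study's own implementation is not specified in the text, so this shows the technique, not the authors' code.

```python
import random
from statistics import mean


def fit_stump(X, y, features):
    """Fit a depth-1 regression tree, searching only the candidate features."""
    best = None  # (sse, feature, threshold, left_mean, right_mean)
    for f in features:
        for t in sorted({row[f] for row in X}):
            left = [yi for row, yi in zip(X, y) if row[f] <= t]
            right = [yi for row, yi in zip(X, y) if row[f] > t]
            if not left or not right:
                continue
            lm, rm = mean(left), mean(right)
            sse = sum((v - lm) ** 2 for v in left) + sum((v - rm) ** 2 for v in right)
            if best is None or sse < best[0]:
                best = (sse, f, t, lm, rm)
    if best is None:
        # No valid split in this bootstrap sample: predict its mean everywhere.
        m = mean(y)
        return (0, float("inf"), m, m)
    return best[1:]


def fit_forest(X, y, n_trees=25, max_features=2, seed=42):
    """Each tree sees a bootstrap sample and a random subset of candidate features."""
    rng = random.Random(seed)
    n, p = len(X), len(X[0])
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # bootstrap: rows drawn with replacement
        Xb, yb = [X[i] for i in idx], [y[i] for i in idx]
        feats = rng.sample(range(p), max_features)  # random candidate features for the split
        forest.append(fit_stump(Xb, yb, feats))
    return forest


def predict_forest(forest, row):
    """Regression output: the average of all trees' predictions."""
    return mean(lm if row[f] <= t else rm for f, t, lm, rm in forest)


# Toy data: the target depends only on feature 0; features 1 and 2 are scrambled.
X = [[i / 20, (i * 7 % 20) / 20, (i * 13 % 20) / 20] for i in range(20)]
y = [1.0 if row[0] > 0.5 else 0.0 for row in X]
forest = fit_forest(X, y)
print(predict_forest(forest, [0.9, 0.5, 0.5]), predict_forest(forest, [0.1, 0.5, 0.5]))
```

Because the two query rows differ only in feature 0, the forest's higher prediction for the first row comes entirely from the trees whose random feature subset included that feature.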

2.2. Support Vector Machine Algorithm Principles

Support vector machine (SVM) is a powerful supervised learning model primarily used for classification problems. Its core principle is to find an optimal hyperplane in a high-dimensional space that maximizes the margin between the data points of different classes. The support vectors are the data points closest to this hyperplane, and they determine the position and orientation of the hyperplane [32].

When selecting an appropriate kernel function, the following empirical guidelines are typically followed: If the dataset has a large number of features with high dimensionality, and the data points can be linearly separated, a linear kernel or polynomial kernel is a suitable choice. For such datasets, especially when the number of features far exceeds the number of samples, a linear or polynomial kernel often yields good results. Additionally, these kernels generally perform faster during training compared to other nonlinear kernels. Conversely, when the input data has lower dimensionality and a small sample size, and the data exhibits significant nonlinear characteristics, the Gaussian kernel (a type of radial basis function) is particularly well suited for handling nonlinear problems. Similarly, the sigmoid kernel function is also applicable to datasets with nonlinear characteristics. Although it is not as flexible as the Gaussian kernel, the sigmoid kernel tends to perform better in terms of generalization on unseen data, which helps prevent the model from overfitting [33].

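Commonly used kernel functions include the linear, polynomial, Gaussian (radial basis function, RBF), and sigmoid kernels, which take the following standard forms:

$$K(x_i, x_j) = x_i^{\top} x_j$$

$$K(x_i, x_j) = \left(\gamma\, x_i^{\top} x_j + r\right)^{d}$$

$$K(x_i, x_j) = \exp\left(-\gamma \left\lVert x_i - x_j \right\rVert^{2}\right)$$

$$K(x_i, x_j) = \tanh\left(\gamma\, x_i^{\top} x_j + r\right)$$

where $x_i$ and $x_j$ are input samples, $\gamma > 0$ is a scale parameter, $r$ is a constant offset, and $d$ is the polynomial degree.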

When applied to regression, the variant of the support vector machine (SVM) is called support vector regression (SVR). The goal of SVR is to find a function whose predicted values stay as close as possible to the actual data points, within a given tolerance ε. The main difference between SVR and SVM is that SVR does not classify samples. Instead of finding the optimal hyperplane that maximizes the margin between classes, as in SVM, SVR seeks a hyperplane that keeps the sample points as close to it as possible. Ideally, the sample points lie inside a tube bounded by two lines at a distance ε from the hyperplane: points inside the tube incur no loss, and only points outside it contribute to the regression error. Minimizing this error while keeping the function as flat as possible plays the same role as margin maximization in SVM [34].
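The ε-tube and the RBF kernel can be made concrete with a minimal pure-Python sketch. In the study, SVR used the RBF kernel with C = 12.53 and epsilon = 0.31 (Section 3.4). The sketch reuses that epsilon as a default. The function names, `gamma`, and the support vectors and dual coefficients passed to `svr_predict` are hypothetical placeholders: fitting them requires solving the SVR optimization problem, which is not shown here.

```python
import math


def rbf_kernel(x, z, gamma=1.0):
    """Gaussian (RBF) kernel K(x, z) = exp(-gamma * ||x - z||^2)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, z)))


def eps_insensitive_loss(y_true, y_pred, epsilon=0.31):
    """Zero inside the epsilon tube; grows linearly with the distance outside it."""
    return max(0.0, abs(y_true - y_pred) - epsilon)


def svr_predict(support_vectors, dual_coefs, bias, x, gamma=1.0):
    """Kernel SVR prediction f(x) = sum_i (alpha_i - alpha_i*) K(x_i, x) + b."""
    return sum(c * rbf_kernel(sv, x, gamma)
               for sv, c in zip(support_vectors, dual_coefs)) + bias


print(eps_insensitive_loss(1.0, 0.8))  # inside the tube: no loss
print(eps_insensitive_loss(1.0, 0.5))  # 0.5 - 0.31 outside the tube
```

During training, C weights the sum of these losses against the flatness of the function, so a larger C tolerates fewer points outside the tube.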

2.3. XGBoost Algorithm Principles

XGBoost (eXtreme Gradient Boosting) is essentially a gradient-boosting decision tree (GBDT) method with enhancements in speed and efficiency, hence the term “extreme”. Its core ideas are the introduction of a regularization term into the objective and the optimization of the loss function using both first-order and second-order derivatives. XGBoost supports various types of base learners, allows row and column subsampling, and can handle missing values. As an iterative decision tree algorithm, it belongs to the family of ensemble learning algorithms. In each iteration, it calculates the residuals between the model’s predicted values and the actual values and trains a new tree with these residuals as the target. Through continuous iteration, the weak learners are combined into a strong learner, and as the number of iterations increases, the predictions of the ensemble become more accurate [35,36]. By iteratively adding CART trees, XGBoost gradually reduces prediction errors and improves prediction accuracy. The XGBoost algorithm is illustrated in Figure 2.

Figure 2.

Algorithm diagram of XGBoost.
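The boosting loop can be illustrated with a minimal pure-Python sketch. This is not the XGBoost library. It assumes squared-error loss, for which the first-order gradient of ½(y − ŷ)² is g = ŷ − y and the second-order gradient is h = 1. Depth-1 trees stand in for CART trees, and each leaf weight uses the second-order formula w = −G/(H + λ), where G and H are the sums of g and h in the leaf. The hypothetical parameters `eta` and `lam` play the roles of XGBoost's learning rate and L2 regularization term. The L1 term (alpha) used in the study, column subsampling, and missing-value handling are omitted.

```python
def fit_boosted_stumps(X, y, n_rounds=200, eta=0.1, lam=1.0):
    """Gradient boosting with depth-1 trees and XGBoost-style second-order leaf weights."""
    base = sum(y) / len(y)
    pred = [base] * len(y)
    trees = []
    for _ in range(n_rounds):
        # Squared error: g = yhat - y (negative residual), h = 1 for every sample.
        g = [p - t for p, t in zip(pred, y)]
        h = [1.0] * len(y)
        G, H = sum(g), sum(h)
        best = None  # (gain, feature, threshold, left_weight, right_weight)
        for f in range(len(X[0])):
            for t in sorted({row[f] for row in X})[:-1]:  # keep both sides non-empty
                GL = sum(gi for row, gi in zip(X, g) if row[f] <= t)
                HL = sum(hi for row, hi in zip(X, h) if row[f] <= t)
                GR, HR = G - GL, H - HL
                # Split gain without the per-leaf penalty gamma.
                gain = 0.5 * (GL**2 / (HL + lam) + GR**2 / (HR + lam) - G**2 / (H + lam))
                if best is None or gain > best[0]:
                    best = (gain, f, t, -GL / (HL + lam), -GR / (HR + lam))
        if best is None:
            break
        _, f, t, wl, wr = best
        trees.append((f, t, wl, wr))
        # Shrink the new tree by the learning rate before adding it to the ensemble.
        pred = [p + eta * (wl if row[f] <= t else wr) for p, row in zip(pred, X)]
    return base, eta, trees


def predict_boosted(model, row):
    """Sum the base score and the shrunken outputs of all trees."""
    base, eta, trees = model
    return base + eta * sum(wl if row[f] <= t else wr for f, t, wl, wr in trees)


X_toy = [[i] for i in range(10)]
y_toy = [0.0] * 5 + [1.0] * 5
model = fit_boosted_stumps(X_toy, y_toy)
print(predict_boosted(model, [2]), predict_boosted(model, [7]))
```

On this toy step function, each round removes a fixed fraction of the remaining residual, so the predictions converge toward 0 and 1 as rounds are added.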

3. Data Selection and Practical Application

3.1. Analysis of the Relationship Between Logging Parameters and Felsic Content

- (1)

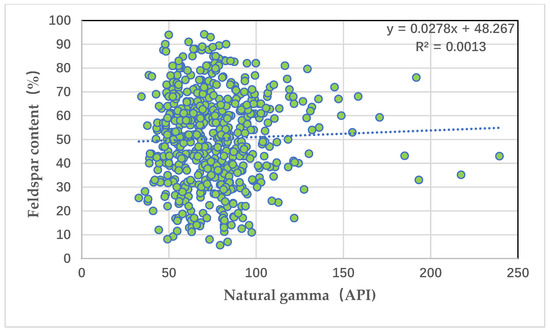

- Intersection plot of natural gamma and feldspar content

Figure 3 depicts an intersection graph of natural gamma and feldspar content. It can be seen from the graph that natural gamma is positively correlated with feldspar content, but the correlation is extremely poor, with a correlation coefficient of only 0.001.

Figure 3.

Intersection plot of natural gamma and feldspar content.

- (2)

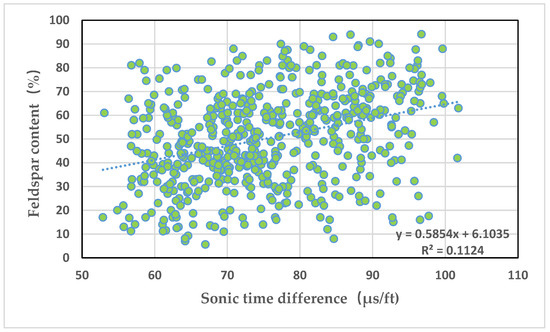

- Intersection plot of acoustic time difference and long quartz content

Figure 4 depicts an intersection graph of the time difference between sound waves and long quartz content. It can be seen from the graph that the time difference of sound waves is positively correlated with the long quartz content, but the correlation is poor, with a correlation coefficient of 0.112.

Figure 4.

Intersection graph of acoustic time difference and long quartz content.

- (3)

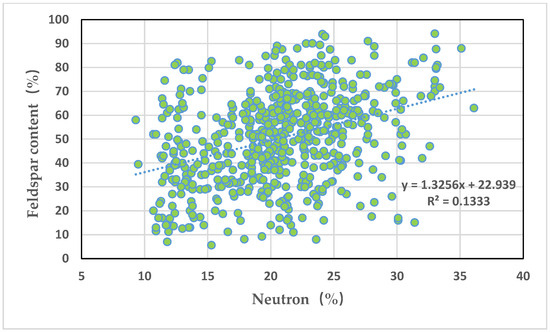

- Intersection plot of neutron and long quartz content

Figure 5 depicts an intersection graph of neutron and long quartz content. It can be seen from the graph that neutron and long quartz content are positively correlated, but the correlation is poor, with a correlation coefficient of 0.133.

Figure 5.

Intersection diagram of neutron and long quartz content.

- (4)

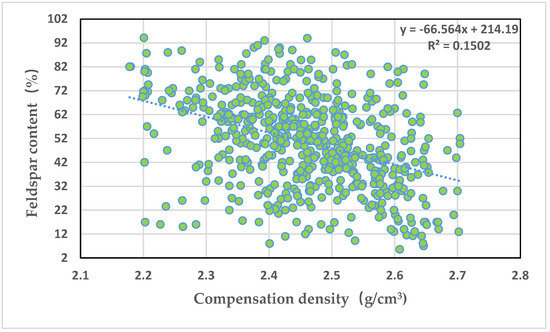

- Intersection plot of compensation density and feldspar content

Figure 6 depicts an intersection graph of compensation density and feldspar content. It can be seen from the graph that the compensation density is positively correlated with the feldspar content, but the correlation is poor, with a correlation coefficient of 0.15.

Figure 6.

Intersection graph of compensation density and feldspar content.

- (5)

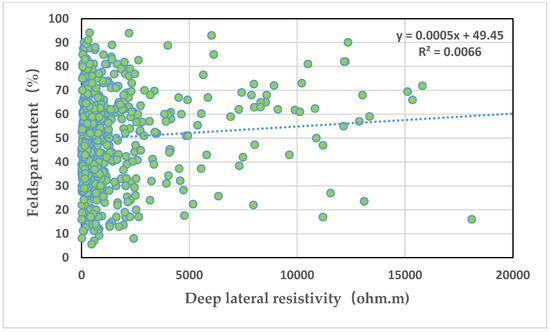

- Intersection plot of deep lateral resistivity and feldspar content

Figure 7 depicts an intersection graph of deep lateral resistivity and long quartz content. It can be seen from the graph that deep lateral resistivity is positively correlated with long quartz content, but the correlation is extremely poor, with a correlation coefficient of 0.007.

Figure 7.

Intersection plot of deep lateral resistivity and feldspar content.

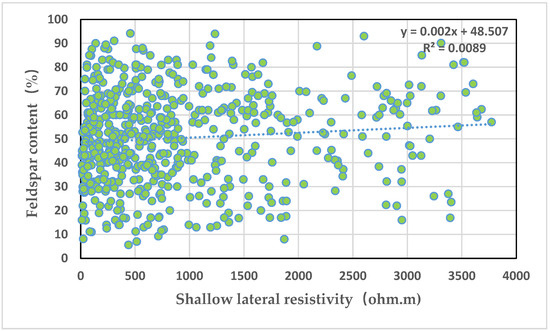

- (6)

- Intersection plot of shallow lateral resistivity and feldspar content

Figure 8 depicts an intersection graph of the shallow lateral resistivity and long quartz content. It can be seen from the graph that the deep lateral resistivity is positively correlated with the long quartz content, but the correlation is extremely poor, with a correlation coefficient of 0.009.

Figure 8.

Intersection plot of shallow lateral resistivity and feldspar content.

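Each logging curve shows only a weak linear correlation with felsic content, so no single curve can predict felsic content on its own. This supports our decision to adopt machine learning algorithms that combine multiple curves, capture nonlinear relationships, and can handle moderate multicollinearity among the inputs.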
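The figures in this subsection report a correlation coefficient for each crossplot without stating whether it is Pearson's r or the coefficient of determination of a fitted trend line. The sketch below computes r and, for comparison, its square, assuming one of these is meant. The function name is hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Toy values only; replace with a logging curve and the measured felsic content.
r = pearson_r([1.0, 2.0, 3.0, 4.0], [1.0, -1.0, -1.0, 1.0])
print(r, r ** 2)
```

Values of r or r² near 0, such as those in Figures 3 to 8, mean that a straight line through the crossplot explains almost none of the scatter. That does not rule out a nonlinear relationship.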

3.2. Data Preparation

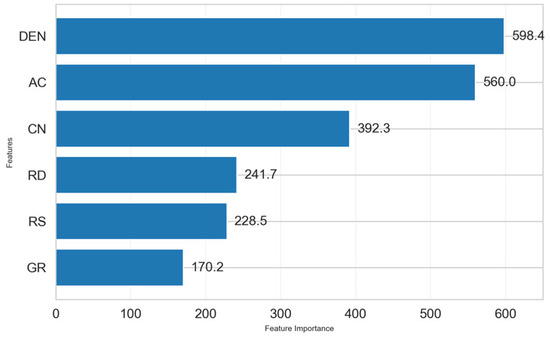

The original felsic dataset of the study area contains 834 sample points; after data preprocessing, 493 sample points remained. Two data splits were used: 80% of the data as the training set and 20% as the test set, and conversely, 20% as the training set and 80% as the test set. The model input features were determined through principal component analysis (PCA) and XGBoost feature importance analysis. The PCA results show that the first three principal components cumulatively explain approximately 97.67% of the total variance (Table 1). However, because different features carry different geological information, XGBoost feature importance analysis was also used to confirm that each feature makes a unique contribution to the model (Figure 9). On this basis, six input features were selected: GR, AC, CN, DEN, RS, and RD (natural gamma ray, acoustic transit time, neutron, density, and shallow and deep lateral resistivity). Because these logging curves differ in dimensions and value ranges, the data were min–max normalized before model training:

$$Y = \frac{X - X_{\min}}{X_{\max} - X_{\min}}$$

Table 1.

Total variance interpretation table.

Figure 9.

Feature importance.

In the min–max normalization formula, Y is the normalized value, X is the original value, and Xmax and Xmin are the maximum and minimum values of the logging parameter, respectively. After normalization, every curve lies in the range [0, 1], so no curve dominates model training merely because of its units or magnitude.
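A minimal sketch of the normalization step follows. The text does not say whether Xmax and Xmin were taken from the training set alone. The sketch assumes they are, and it lets the caller pass the training extremes when scaling test data, a common way to keep test information out of training. The function name and the GR values are hypothetical.

```python
def min_max_normalize(values, x_min=None, x_max=None):
    """Scale values with Y = (X - Xmin) / (Xmax - Xmin)."""
    x_min = min(values) if x_min is None else x_min
    x_max = max(values) if x_max is None else x_max
    span = x_max - x_min
    if span == 0:  # constant curve: avoid division by zero
        return [0.0 for _ in values]
    return [(v - x_min) / span for v in values]


# Hypothetical GR readings (API units), not data from the study.
gr_train = [60.0, 90.0, 120.0]
gr_test = [75.0]
print(min_max_normalize(gr_train))
print(min_max_normalize(gr_test, min(gr_train), max(gr_train)))
```

With training extremes reused, test values outside the training range fall slightly outside [0, 1]. This is expected and usually harmless.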

3.3. Model Evaluation

To compare the predictive performance of different models, this paper introduces several evaluation metrics, including Mean Absolute Error (MAE), Mean Squared Error (MSE), Root Mean Squared Error (RMSE), and the Coefficient of Determination (R-squared). These metrics are used to assess and contrast the accuracy and effectiveness of each model.

(1) Mean Absolute Error (MAE): Also known as the L1 loss, MAE is obtained by calculating the absolute difference between the predicted value and the actual value for each sample, summing these absolute differences, and then taking the average, as shown in Equation (6):

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right| \tag{6}$$

where $n$ is the number of samples, $y_i$ is the actual value, and $\hat{y}_i$ is the predicted value of the $i$-th sample. MAE is the simplest evaluation metric for regression problems and is primarily used to assess how close the predicted values are to the actual values. A lower MAE indicates better regression performance.

(2) Mean Squared Error (MSE): Also known as the L2 loss, MSE is calculated by squaring the difference between each predicted value and the actual value, summing the squared differences, and then taking the average, as shown in Equation (7):

$$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^{2} \tag{7}$$

A lower MSE indicates better regression performance. Because the errors are squared, MSE penalizes large errors more heavily than MAE does.

(3) Root Mean Squared Error (RMSE): RMSE is the square root of the MSE, as shown in Equation (8):

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^{2}} \tag{8}$$

As with MSE, a lower RMSE indicates better regression performance. Unlike MSE, which is expressed in squared units, RMSE has the same unit as the target variable, but it remains sensitive to outliers.

(4) Coefficient of Determination (R2): The coefficient of determination, also known as the R2 score, reflects the proportion of the variance in the dependent variable that is predictable from the independent variables. As the goodness of fit of the regression model increases, the independent variables explain more of the dependent variable, and the actual observation points cluster more closely around the regression line. R2 is calculated with Equation (9):

$$R^{2} = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^{2}}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^{2}} \tag{9}$$

where $\bar{y}$ is the mean of the actual values. R2 usually ranges from 0 to 1. A value closer to 1 indicates better predictive performance, and a value closer to 0 indicates poorer performance. R2 can also be negative, which means the model predicts worse than simply using the mean of the actual values. As the number of input features increases, R2 tends to level off, after which adding more input features has little further effect on prediction accuracy.
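The four metrics in Equations (6) to (9) can be computed together with a short pure-Python function. The name `regression_metrics` is hypothetical, and the toy values in the usage line are not study data.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return MAE, MSE, RMSE and R2 as defined in Equations (6)-(9)."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n        # Equation (6)
    mse = sum(e * e for e in errors) / n         # Equation (7)
    rmse = math.sqrt(mse)                        # Equation (8)
    mean_y = sum(y_true) / n
    ss_res = sum(e * e for e in errors)
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    r2 = 1.0 - ss_res / ss_tot                   # Equation (9)
    return {"MAE": mae, "MSE": mse, "RMSE": rmse, "R2": r2}


print(regression_metrics([1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0, 5.0]))
```

For any set of predictions, MAE cannot exceed RMSE. This gives a quick consistency check when tabulating results.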

3.4. Model Construction

This study uses three machine learning algorithms, random forest, support vector regression, and XGBoost, to predict felsic content in the study area. Two training proportions, 20% and 80% of the samples (predictor variables paired with measured felsic content), were used. After each model was trained, the remaining samples were used as the test set: their predictor variables were input into the trained models, and felsic content was predicted with each of the three machine learning methods.
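The two training proportions can be illustrated with a sketch that shuffles sample indices and cuts them at 20% or 80%. The text does not state how samples were assigned to the two sets. A reproducible random shuffle with seed 42, borrowed from the random forest settings, is an assumption here. For depth-ordered log data, a contiguous split by depth interval is a plausible alternative. The function name is hypothetical.

```python
import random


def train_test_split_indices(n_samples, train_fraction, seed=42):
    """Shuffle sample indices and cut them into a training part and a test part."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # reproducible shuffle
    n_train = round(train_fraction * n_samples)
    return indices[:n_train], indices[n_train:]


# The two splits described in the text, applied to the 493 preprocessed samples.
for fraction in (0.2, 0.8):
    train_idx, test_idx = train_test_split_indices(493, fraction)
    print(fraction, len(train_idx), len(test_idx))
```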

For each machine learning algorithm adopted in this study, grid search was used to determine the model hyperparameters. For the XGBoost model, the grid covered the learning rate [0.01, 0.1, 0.2], the maximum tree depth [3, 5, 7], the number of estimators [50, 100, 200], the column sampling rate [0.3, 0.5, 0.8], and the L1 regularization coefficient alpha [1, 5, 10]. The hyperparameters of the random forest and support vector regression models were optimized in the same way. The final support vector regression model uses the radial basis function (RBF) kernel, with the regularization parameter C set to 12.53 and epsilon set to 0.31. The final XGBoost model limits the maximum tree depth to 3 to prevent overfitting, uses a learning rate of 0.01 and an ensemble of 200 decision trees, randomly samples 50% of the features for each tree, and applies an L1 regularization coefficient of 5. The final random forest regression model consists of 179 decision trees, each limited to a maximum depth of five levels, with a minimum of four samples required to split an internal node; the random seed is set to 42.
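The XGBoost grid above contains 3^5 = 243 combinations, and grid search evaluates every one of them. The sketch below shows exhaustive enumeration in pure Python. The dictionary keys are assumed to match the parameter names of XGBoost's scikit-learn wrapper (`XGBRegressor`). The text does not name the library, and the study's validation score is not specified, so `toy_score` is a hypothetical stand-in that only demonstrates the search mechanics.

```python
from itertools import product

# Grid reported in the text; key names assume XGBoost's scikit-learn parameter names.
XGB_GRID = {
    "learning_rate": [0.01, 0.1, 0.2],
    "max_depth": [3, 5, 7],
    "n_estimators": [50, 100, 200],
    "colsample_bytree": [0.3, 0.5, 0.8],
    "reg_alpha": [1, 5, 10],
}


def grid_search(grid, score_fn):
    """Evaluate every combination in the grid and keep the highest-scoring one."""
    names = list(grid)
    best_params, best_score = None, float("-inf")
    for combo in product(*(grid[name] for name in names)):
        params = dict(zip(names, combo))
        score = score_fn(params)  # e.g. a cross-validated score in practice
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Final XGBoost settings reported in the text, used here only as a toy target.
reported = {"learning_rate": 0.01, "max_depth": 3, "n_estimators": 200,
            "colsample_bytree": 0.5, "reg_alpha": 5}


def toy_score(params):
    """Hypothetical stand-in for a validation score (higher is better)."""
    return -sum(abs(params[k] - reported[k]) for k in reported)


best_params, best_score = grid_search(XGB_GRID, toy_score)
print(best_params == reported)
```

In practice, `score_fn` would train a model with `params` on the training set and return a validation score, such as the negative MSE. The cost grows multiplicatively with each added hyperparameter.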

3.4.1. XGBoost Prediction of Felsic Content

First, 20% of the samples were used for training. After the best training results were achieved, the predictor variables of the test set were input into the trained model to obtain the predicted results. The evaluation metrics of the predicted results are shown in Table 2.

Table 2.

Evaluation metrics of the XGBoost model predicting felsic content in Well Lu 1 (20% training samples).

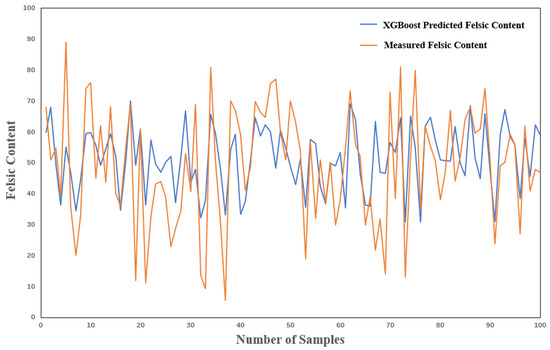

As shown in Table 2, when trained with 20% of the samples, the XGBoost model has an MAE of 0.19, an MSE of 0.023, an RMSE of 0.15, and an R2 of 0.36. These indicators reflect the initial predictive ability of the model. An R2 of 0.36 indicates that the model explains approximately 36% of the variance in felsic content, which is a medium predictive level. An MAE of 0.19 indicates that the average absolute error between the predicted and actual values is approximately 0.19, leaving considerable room for improvement in the prediction of felsic content. The prediction results are shown in Figure 10.

Figure 10.

Felsic content in Well Lu 1 predicted by the XGBoost model (20% training samples).

As shown in Figure 10, the values predicted by the XGBoost model generally follow the fluctuations of the actual values, although there are significant differences between the predicted and actual values at some peaks and troughs. Subsequently, 80% of the samples were used for training. After the best training results were achieved, the predictor variables of the test set were input into the trained model to obtain the predicted results. The evaluation metrics of the predicted results are shown in Table 3.

Table 3.

Evaluation metrics of the XGBoost model predicting felsic content in Well Lu 1 (80% training samples).

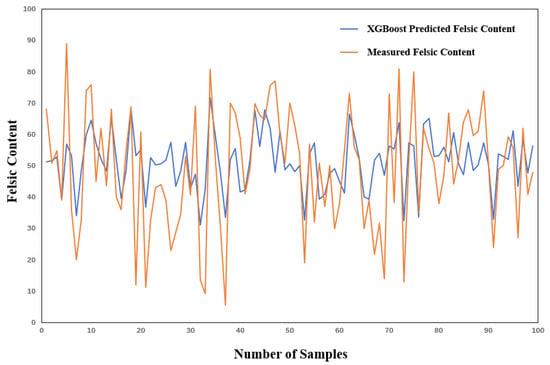

When the proportion of training samples increased to 80% (Table 3), the performance of the XGBoost model improved significantly: the MAE decreased to 0.12, the MSE dropped slightly to 0.022, and the R2 increased to 0.42. This improvement indicates that the XGBoost algorithm is relatively sensitive to the training sample size and that adding training data can effectively improve its prediction accuracy. The marked decline in MAE is particularly noteworthy. It means a smaller average absolute gap between the predicted and actual values, which is crucial for precise classification of lithological types, because in this study felsic content is the key indicator for distinguishing tuff, dolomitic tuff, tuffaceous dolomite, and dolomite. The prediction results are shown in Figure 11.

Figure 11.

Felsic content in Well Lu 1 predicted by the XGBoost model (80% training samples).

3.4.2. SVR Regression Prediction of Felsic Content

First, 20% of the samples were used for training. After the best training results were achieved, the predictor variables of the test set were input into the trained model to obtain the predicted results. The evaluation metrics of the predicted results are shown in Table 4.

Table 4.

Evaluation metrics of the SVR model predicting felsic content in Well Lu 1 (20% training samples).

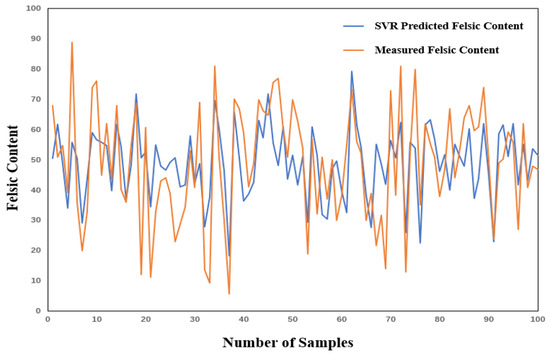

The support vector regression (SVR) model performs well when using 20% of the training samples, as shown in Table 4. Its MAE is 0.12, MSE is 0.021, RMSE is 0.14, and R2 is 0.39. Compared with the XGBoost model under the same proportion of training samples, the MAE of SVR decreased by 36.8%, the MSE decreased by 8.7%, and the R2 increased by 8.3%. This indicates that under the condition of small samples, the SVR model has a better generalization ability, which may be attributable to its characteristics based on the principle of structural risk minimization, as well as the advantages of the kernel function (the Gaussian kernel used in this study) when dealing with nonlinear relationships.

As shown in Figure 12, the values predicted by the SVR model generally follow the fluctuations of the actual values, although there are significant differences between the predicted and actual values at some peaks and troughs. Subsequently, 80% of the samples were used for training. After the best training results were achieved, the predictor variables of the test set were input into the trained model to obtain the predicted results. The evaluation metrics of the predicted results are shown in Table 5.

Figure 12.

Felsic content in Well Lu 1 predicted by the SVR model (20% training samples).

Table 5.

Evaluation metrics of the SVR model predicting felsic content in Well Lu 1 (80% training samples).

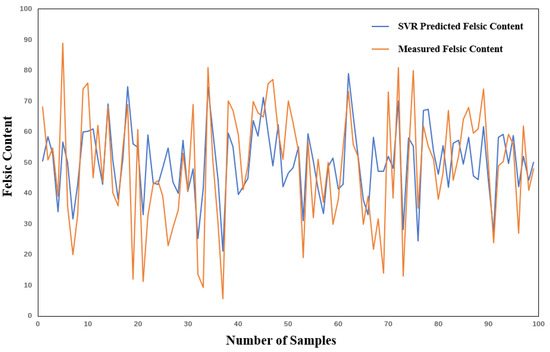

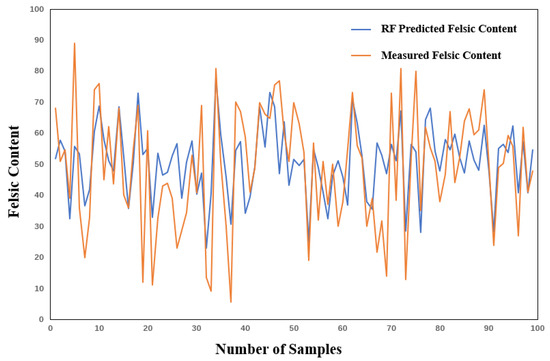

When the training samples increased to 80% (Table 5), the performance of the SVR model was further improved, with MAE dropping to 0.11 and R2 increasing to 0.41. However, compared with the XGBoost model, the performance improvement of the SVR model after increasing the training samples was relatively small (the MAE only decreased by 8.3%, and the R2 increased by 5.1%). This phenomenon indicates that the SVR model was able to capture the patterns in the data relatively well under the condition of small samples, and increasing the sample size has a relatively small marginal effect on the improvement of its performance. From the perspective of practical applications, when the available training data is limited, SVR may be a more suitable choice. The prediction results are shown in Figure 13.

Figure 13.

Felsic content in Well Lu 1 predicted by the SVR model (80% training samples).
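The percentage differences quoted for the SVR model in Section 3.4.2 are relative changes computed from the rounded metric values in Tables 2, 4, and 5. The sketch below reproduces them with a minimal helper. The function name is hypothetical.

```python
def percent_change(old, new):
    """Relative change from old to new, in percent."""
    return (new - old) / old * 100.0


# Metric values quoted in the text.
comparisons = {
    "SVR vs XGBoost, MAE (20%)": (0.19, 0.12),
    "SVR vs XGBoost, MSE (20%)": (0.023, 0.021),
    "SVR vs XGBoost, R2 (20%)": (0.36, 0.39),
    "SVR 20% -> 80%, MAE": (0.12, 0.11),
    "SVR 20% -> 80%, R2": (0.39, 0.41),
}
for label, (old, new) in comparisons.items():
    print(f"{label}: {percent_change(old, new):+.1f}%")
```

Because the inputs are already rounded to two decimals, these percentages carry that rounding error. Small differences, such as the 5.1% rise in R2, are sensitive to it.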

3.4.3. Random Forest Prediction of Felsic Content

First, 20% of the samples were used for training. After the best training results were achieved, the predictor variables of the test set were input into the trained model to obtain the predicted results. The evaluation metrics of the predicted results are shown in Table 6.

Table 6.

Evaluation metrics of the random forest model predicting felsic content in Well Lu 1 (20% training samples).

The random forest model performs similarly to SVR under the condition of 20% training samples (Table 6), with an MAE of 0.12, an MSE of 0.022, an RMSE of 0.15, and an R2 of 0.39. Although its MSE and RMSE are slightly higher than those of SVR, the MAE and R2 of the two are basically the same, indicating that random forest and SVR have similar predictive abilities under the condition of small samples. The prediction results are shown in Figure 14.

Figure 14.

Felsic content in Well Lu 1 predicted by the random forest model (20% training samples).

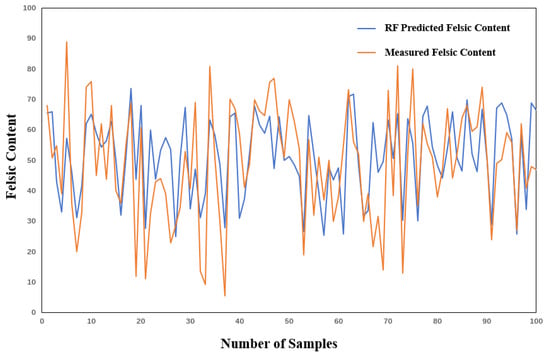

As shown in Table 7, when trained with 80% of the samples, the random forest model has an MAE of 0.11, an MSE of 0.020, an RMSE of 0.14, and an R2 of 0.43. Compared with training on 20% of the samples, the MAE, MSE, and RMSE all decreased slightly, and the R2 increased slightly. The prediction results are shown in Figure 15.

Table 7.

Evaluation metrics for the random forest prediction of felsic content in Well Lu 1 with 80% of the samples used for training.

Figure 15.

Random forest prediction of felsic content in Well Lu 1 with 80% of the samples used for training.

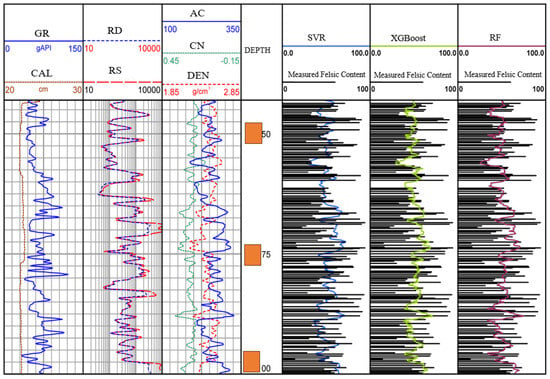

3.5. Analysis of Example Results

Well Lu 1 was selected for a practical application, and its felsic content was predicted with all three models. As shown in Figure 16, all three models give good predictions: the predicted values generally match the measured values, and the predicted curves have similar overall shapes, differing only slightly in some intervals. Comparing the evaluation metrics of the three methods shows that random forest gives the most accurate prediction of felsic content.

Figure 16.

Felsic content predicted by the three models.

The analysis indicates that the strong performance of the random forest model stems mainly from how well its algorithmic design matches the characteristics of petrophysical data. As an ensemble learning method, random forest mitigates overfitting by aggregating the predictions of many decision trees, which is particularly important for complex formation data with a limited sample size. In identifying the complex lithologies of the Lucaogou Formation, random forest can capture the nonlinear relationship between logging parameters and felsic content and naturally handles petrophysical data with multimodal distributions. In contrast, although support vector regression performs well in finding the optimal hyperplane, it is less flexible than random forest in handling nonlinear relationships.
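The ensemble idea described above (bootstrap sampling, a random subset of features at each split, and averaging the outputs of many trees) can be illustrated with the compact, library-free sketch below. It is a toy for exposition, not the model used in this study: the tree depth, number of trees, minimum leaf size, and the one-third feature-sampling rule are assumptions, and the class and function names are hypothetical. In practice a library implementation such as scikit-learn's `RandomForestRegressor` would be used.

```python
import random
from statistics import fmean
from typing import List, Optional, Sequence


class Node:
    """Regression-tree node: a leaf if `feature` is None, otherwise a split."""

    def __init__(self, value: float):
        self.value = value                  # mean target of the rows in this node
        self.feature: Optional[int] = None  # index of the split feature
        self.threshold = 0.0                # rows with x[feature] <= threshold go left
        self.left: Optional["Node"] = None
        self.right: Optional["Node"] = None


def sse(ys: Sequence[float]) -> float:
    """Sum of squared deviations from the mean (the split criterion)."""
    m = fmean(ys)
    return sum((y - m) ** 2 for y in ys)


def build_tree(X, y, depth, max_depth, min_leaf, n_feat, rng) -> Node:
    node = Node(fmean(y))
    if depth >= max_depth or len(y) < 2 * min_leaf:
        return node
    best = None  # (score, feature, threshold)
    for f in rng.sample(range(len(X[0])), n_feat):  # random feature subset
        for thr in sorted({row[f] for row in X}):
            left = [t for row, t in zip(X, y) if row[f] <= thr]
            right = [t for row, t in zip(X, y) if row[f] > thr]
            if len(left) < min_leaf or len(right) < min_leaf:
                continue
            score = sse(left) + sse(right)
            if best is None or score < best[0]:
                best = (score, f, thr)
    if best is None:
        return node
    _, node.feature, node.threshold = best
    go_left = [row[node.feature] <= node.threshold for row in X]
    args = (depth + 1, max_depth, min_leaf, n_feat, rng)
    node.left = build_tree([r for r, g in zip(X, go_left) if g],
                           [t for t, g in zip(y, go_left) if g], *args)
    node.right = build_tree([r for r, g in zip(X, go_left) if not g],
                            [t for t, g in zip(y, go_left) if not g], *args)
    return node


def predict_tree(node: Node, row: Sequence[float]) -> float:
    while node.feature is not None:
        node = node.left if row[node.feature] <= node.threshold else node.right
    return node.value


class ToyRandomForest:
    """Bagged regression trees with random feature subsets (illustration only)."""

    def __init__(self, n_trees=25, max_depth=4, min_leaf=2, seed=0):
        self.n_trees, self.max_depth, self.min_leaf = n_trees, max_depth, min_leaf
        self.rng = random.Random(seed)
        self.trees: List[Node] = []

    def fit(self, X, y):
        n, p = len(X), len(X[0])
        n_feat = max(1, p // 3)  # assumed subset size: about one third of the features
        self.trees = []
        for _ in range(self.n_trees):
            idx = [self.rng.randrange(n) for _ in range(n)]  # bootstrap sample
            self.trees.append(build_tree([X[i] for i in idx], [y[i] for i in idx],
                                         0, self.max_depth, self.min_leaf, n_feat, self.rng))
        return self

    def predict(self, X):
        # Averaging many de-correlated trees is what reduces the variance.
        return [fmean([predict_tree(t, row) for t in self.trees]) for row in X]


# Synthetic example: six inputs, and the target depends nonlinearly on input 0 only.
gen = random.Random(1)
X = [[gen.random() for _ in range(6)] for _ in range(80)]
y = [0.8 if row[0] > 0.5 else 0.2 for row in X]
model = ToyRandomForest(n_trees=30, max_depth=3, seed=0).fit(X, y)
preds = model.predict([[0.9] + [0.5] * 5, [0.1] + [0.5] * 5])
print([round(p, 2) for p in preds])
```

Each individual tree sees a different bootstrap sample and different candidate features, so their errors are partly independent; averaging them smooths out the overfitting of any single deep tree.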

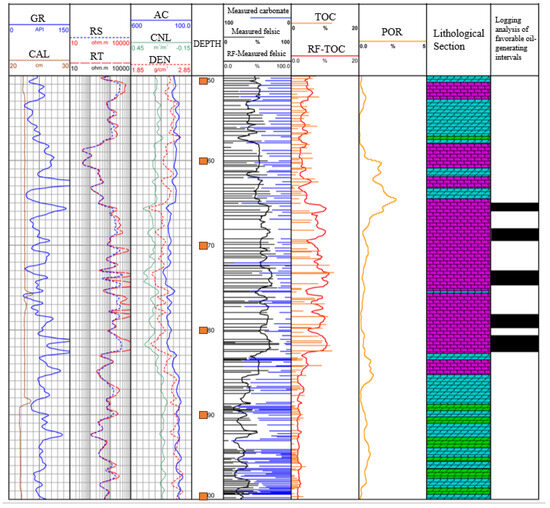

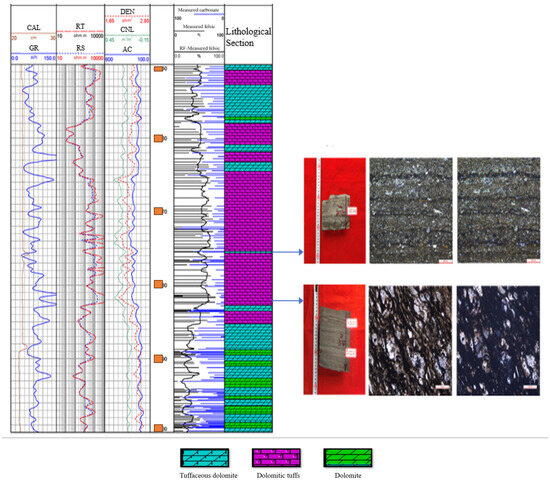

3.6. Comprehensive Lithology Identification Analysis

The random forest results, which had the best predictive performance, were used to identify lithology and favorable reservoirs. Lithology was classified by felsic content: less than 25% is dolomite, 25–50% is tuffaceous dolomite, 50–75% is dolomitic tuff, and greater than 75% is tuff. Favorable reservoirs were identified from the TOC data available in this study. The results are shown in Figure 17. Comparison with the NMR movable-fluid porosity curve indicates that the favorable oil-producing layers are identified relatively accurately.
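The threshold rule above maps a felsic content value to one of the four lithologies. In the minimal sketch below, two details are assumptions not stated in the text: a value lying exactly on 25%, 50%, or 75% is assigned to the higher-felsic class, and model outputs are taken to be fractions (as the MAE values of about 0.11 suggest) that must be converted to percent first. The function name is hypothetical.

```python
def classify_lithology(felsic_pct: float) -> str:
    """Map felsic content (percent, 0-100) to a lithology class.

    Boundary handling is an assumption: a value of exactly 25, 50 or 75
    is assigned to the higher-felsic class.
    """
    if not 0.0 <= felsic_pct <= 100.0:
        raise ValueError("felsic content must be between 0 and 100 percent")
    if felsic_pct < 25.0:
        return "dolomite"
    if felsic_pct < 50.0:
        return "tuffaceous dolomite"
    if felsic_pct < 75.0:
        return "dolomitic tuff"
    return "tuff"


# Assumed fractional model outputs are converted to percent before classification.
for fraction in (0.10, 0.4714, 0.60, 0.90):
    print(f"{fraction:.4f} -> {classify_lithology(fraction * 100)}")
```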

Figure 17.

Comprehensive lithology interpretation of Well Lu 1.

As shown in Figure 18, the lithology interpretation log of Well Lu 1 indicates that the XRD-measured felsic content of the core is close to the predicted felsic content, which shows that lithology identification based on predicted felsic content performs well. At a depth of 3175.5 m, the core photograph shows tuffaceous dolomite. The XRD data show that the main minerals are felsic minerals and dolomite, with a felsic content of 43.1% and a dolomite content of 54.7%; the random forest model predicted a felsic content of 47.14%, close to the XRD result. At a depth of 3182.65 m, the core photograph shows dolomitic tuff. The XRD data show a felsic content of 57.1% and a dolomite content of 39.2%; the random forest model predicted 60%, again close to the XRD result. At both depths the prediction is within about 4 percentage points of the XRD value and falls in the same lithology class as the core description, confirming that the predictions agree well with the core data.
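The agreement at the two cored depths can be quantified directly from the values quoted above. The snippet uses only those reported numbers and computes the signed difference between prediction and XRD (in percentage points) and the same difference relative to the XRD value.

```python
# (depth in m, XRD felsic content %, random forest prediction %) as reported above.
checks = [(3175.5, 43.1, 47.14), (3182.65, 57.1, 60.0)]

for depth, xrd, pred in checks:
    diff = pred - xrd            # percentage points (positive = overestimate)
    rel = diff / xrd * 100       # percent of the XRD value
    print(f"{depth:.2f} m: {diff:+.2f} points ({rel:.1f}% relative)")
```

Both predictions slightly overestimate the XRD values, by roughly 4.0 and 2.9 percentage points.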

Figure 18.

Lithology interpretation diagram for Well Lu 1.


4. Conclusions

This study focuses on the second member of the Lucaogou Formation in the Santanghu Basin, using three machine learning methods to classify and identify lithology in the study area based on conventional logging curves and core data. The specific conclusions of this study are as follows:

- By analyzing the correlations between logging curves, the most informative curves for training were selected: GR, AC, CN, DEN, RS, and RD were used as input features. Three methods (XGBoost, random forest, and support vector regression) were used to predict felsic content, and their predictive performance was evaluated with four metrics. With 80% of the data used for training, and ranking by R2, random forest performed best (R2 = 0.43, MAE = 0.11, MSE = 0.020, RMSE = 0.14), followed by XGBoost (R2 = 0.42, MAE = 0.12, MSE = 0.022, RMSE = 0.15) and then support vector regression (R2 = 0.41, MAE = 0.11, MSE = 0.021, RMSE = 0.14). Although these R2 values indicate moderate predictive capability, they represent a significant improvement over traditional methods in this complex geological setting. Comparing the predicted felsic content with the core-measured felsic content at specific depths showed that lithology identification based on predicted felsic content is sufficiently accurate for practical reservoir characterization.

- In this study, three machine learning methods were used to identify the complex lithologies of the Lucaogou Formation. Compared with previous studies, our random forest model achieved better predictions of felsic content through optimized feature selection. Although the R2 of the model is moderate, it is comparable to previous results under similarly complex geological conditions and strikes a good balance between computational efficiency and accuracy. Validation against core data shows that lithology identification based on predicted felsic content has practical value, providing a reliable technical approach to complex lithology identification and a reference for reservoir evaluation in similar areas.

- Although the prediction model performed well for lithology identification in the Lucaogou Formation, several limitations and uncertainties remain. First, the logging data contain various sources of noise and uncertainty; despite preprocessing, residual noise may still affect prediction accuracy. Second, the number of XRD samples available for training is limited, which may prevent the model from fully capturing the geological complexity of the region. Third, sampling bias is possible: some core samples may not represent all of the lithological variation in the formation. To reduce these uncertainties, future work should expand the training dataset, incorporate additional logging curves where available, and adopt more rigorous model validation techniques.

- Although this research has achieved useful results, there is still room for improvement, and future research could proceed in two directions. First, given sufficient data, deep learning methods such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs) could be used to predict complex rock types, as they are well suited to capturing subtle differences between rock types. Second, other data types such as nuclear magnetic resonance (NMR) logs could be incorporated to improve the model's ability to discriminate lithology where conventional logging responses overlap.

Author Contributions

L.C.: writing – review and editing, supervision, and validation; L.H.: writing – original draft, and writing – review and editing; J.X.: data curation; Q.H.: methodology and supervision; J.F.: investigation, supervision, writing – review and editing, and visualization; Y.L.: supervision; Z.C.: validation and supervision. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are available on request from the corresponding author due to privacy restrictions. The data contain sensitive information that cannot be shared publicly but may be made available to qualified researchers upon reasonable request.

Conflicts of Interest

Authors Liangyu Chen, Qiuyuan Hou, Yonggui Li and Zhi Chen were employed by the company China National Logging Corporation. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| GR | Natural gamma ray (API) |
| AC | Acoustic travel time (μs/ft) |
| CN | Compensated neutron (v/v) |
| DEN | Compensated density (g/cm³) |
| RS | Shallow resistivity (Ω·m) |
| RD | Deep resistivity (Ω·m) |
| CYZ | Felsic content (%) |
| TOC | Total organic carbon (%) |
| XRD | X-ray diffraction |
| SVR | Support vector regression |
| SVM | Support vector machine |
| RF | Random forest |
| XGBoost | Extreme gradient boosting |

References

1. Jiang, K.; Wang, S.; Hu, Y.; Pu, S.; Duan, H.; Wang, Z. Lithology identification model based on Boosting Tree algorithm. Well Logging Technol. 2018, 42, 396.

2. Liu, Q.; Gao, J.H.; Dong, X. Nonlinear inversion of the reservoir prediction. Lithol. Reserv. 2007, 1, 81–85.

3. Chen, G. Lithology Identification and Drilling Rate Prediction in Sedimentary Basins Using Machine Learning and Logging Data: A Case Study of the Erlian Basin and Surat Basin. Ph.D. Thesis, East China University of Technology, Shanghai, China, 2024.

4. Fan, Y.; Huang, L.; Dai, S. Application of Crossplot Technique to the Determination of Lithology Composition and Fracture Identification of Igneous Rock. Well Logging Technol. 1999, 23, 53–56.

5. Zhang, X.; Xiao, X.; Yan, L.; Hu, W. Lithology identification based on fuzzy support vector machine method. J. Oil Gas (J. Jianghan Pet. Inst.) 2009, 31, 115–118.

6. Zhong, Y.; Li, R. Lithology identification method based on principal component analysis and least squares support vector machine. Well Logging Technol. 2009, 33, 425–429.

7. Zhao, Z.; Huang, Q.; Shi, L.; Wang, X.; Shan, J. Lithology identification of tight sandstone reservoirs in Sulige gas field based on BP neural network algorithm. Well Logging Technol. 2015, 39, 363–367.

8. Fan, C.; Liang, Z.; Qin, Q.; Zhao, L.; Zuo, J. Lithology identification of volcanic rocks based on well logging parameters using genetic BP neural network: A case study of Carboniferous volcanic rocks in the Zhongguai uplift, northwestern margin of the Junggar Basin. J. Pet. Nat. Gas 2012, 34, 68–71.

9. Wang, Z.; Zhang, C.; Gao, S. Lithology identification of complex carbonate rocks using decision tree method: A case study of the Sudong 41–33 block in the Sulige gas field. Oil Gas Geol. Recovery 2017, 24, 25–33.

10. Zhong, H.; Cheng, Y.; Lin, M.; Gao, S.; Zhong, T. Lithology identification of complex carbonate rocks based on SOM and fuzzy recognition. Lithol. Oil Gas Reserv. 2019, 31, 84–91.

11. Ma, Z.; Zhang, C.; Gao, S. Application of principal component analysis and fuzzy recognition in lithology identification. Lithol. Oil Gas Reserv. 2017, 29, 127–133.

12. Song, Y.; Wang, T.; Fu, J.; Deng, X. Lithology identification method of sandy conglomerate reservoirs in the Lei 64 block. J. Harbin Univ. Commer. (Nat. Sci. Ed.) 2015, 31, 73–78.

13. Li, H.; Tan, F.; Xu, C.; Wang, X.; Peng, S. Lithology identification of conglomerate reservoirs using decision tree method: A case study of the Karamay oil field’s Kexia group reservoirs. J. Oil Gas (J. Jianghan Pet. Inst.) 2010, 32, 73–79.

14. Li, B.; Zhang, X.; Wang, Q.; Guo, B.; Guo, Y.; Shang, X.; Cheng, H.; Lu, J.; Zhao, X. Diagenetic facies analysis and well logging identification of low and ultra-low permeability dolomite reservoirs: A case study of the Ma 5 member on the Yishan slope. Lithol. Oil Gas Reserv. 2019, 31, 70–83.

15. Sun, Y.; Huang, Y.; Liang, T.; Ji, H.; Xiang, P.; Xu, X. Well logging identification of complex carbonate lithology based on XGBoost algorithm. Lithol. Oil Gas Reserv. 2020, 32, 98–106.

16. Zhao, J.; Gao, F. Application of crossplot method in volcanic lithology identification using well logging data. World Geol. 2003, 22, 136–140.

17. Zhang, D.; Zou, N.; Jiang, Y.; Ma, C.; Zhang, S.; Du, S. Research on volcanic lithology identification methods using well logging: A case study of volcanic rocks in the Junggar Basin. Lithol. Oil Gas Reserv. 2015, 27, 108–114.

18. Pang, S.; Li, M.; Peng, J.; Zhou, Y.; Shi, D.; Wang, R. Characteristics and well logging identification of volcanic rocks in the Sihongtu Formation, Chagan Depression. Fault Block Oil Gas Field 2019, 26, 314–318.

19. Wu, L.; Xu, H.; Ji, H. Application of neural network technology in volcanic rock identification using crossplots and multivariate statistics. Oil Geophys. Explor. 2006, 41, 81–86.

20. Delfiner, P.C.; Peyret, O.; Serra, O. Automatic determination of lithology from well logs. SPE Form. Eval. 1984, 2, 303–310.

21. Liu, Z.; Wang, X. Determination of lithology using probability statistics methods. J. Jianghan Pet. Inst. 1989, 11, 35–40.

22. Liu, X.; Chen, C.; Zeng, C.; Lan, L. Multivariate statistical methods for lithology identification using well logging data. Geol. Sci. Technol. Inf. 2007, 26, 109–112.

23. Feixue, Y. Research on Lithology Identification Methods Based on Deep Neural Networks. Master’s Thesis, China University of Petroleum, Beijing, China, 2023.

24. Wang, G.; Carr, T.R.; Ju, Y.; Li, C. Identifying organic-rich Marcellus Shale lithofacies by support vector machine classifier in the Appalachian Basin. Comput. Geosci. 2014, 64, 52–60.

25. Baldwin, J.L.; Otte, D.N.; Wheatley, C.L. Computer emulation of human mental processes: Application of neural network simulators to problems in well log interpretation. In Proceedings of the SPE Annual Technical Conference and Exhibition, San Antonio, TX, USA, 8–11 October 1989; pp. 8–11.

26. Hall, B. Facies classification using machine learning. Lead. Edge 2016, 35, 906–909.

27. Zhou, X.; Zhang, Z.; Zhang, C.; Nie, X.; Zhu, L.; Zhang, H. Lithology identification of complex lithologies using a rough set and Random Forest algorithm. Daqing Pet. Geol. Dev. 2017, 36, 127–133.

28. An, P.; Cao, D. Research and application of well logging lithology identification method based on deep learning. Adv. Geophys. 2018, 33, 1029–1034.

29. Imamverdiyev, Y.; Sukhostat, L. Lithological facies classification using deep convolutional neural network. J. Pet. Sci. Eng. 2018, 174, 216–228.

30. Chen, G.; Liang, S.; Wang, J.; Sui, S. Application of convolutional neural network in lithology identification. Well Logging Technol. 2019, 43, 129–134.

31. Svetnik, V.; Liaw, A.; Tong, C.J.; Culberson, P.; Sheridan, R.P.; Feuston, B. Random forest: A classification and regression tool for compound classification and QSAR modeling. J. Chem. Inf. Comput. Sci. 2003, 43, 1947.

32. Xiao, J.; Yu, L.; Bai, Y. A review of kernel function and hyperparameter selection methods in support vector regression. J. Southwest Jiaotong Univ. 2008, 43, 297–303.

33. Zhu, Y. Research on Reservoir Parameter Prediction Method Based on Support Vector Machine. Master’s Thesis, Southwest Petroleum University, Sichuan, China, 2012.

34. Liao, M. Research on Several Algorithms of Weighted Support Vector Machines and Their Applications. Master’s Thesis, Hunan University, Hunan, China, 2011.

35. Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System; ACM: New York, NY, USA, 2016.

36. Li, H. Statistical Learning Methods; Tsinghua University Press: Beijing, China, 2012.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).