Embedding Security Awareness in IoT Systems: A Framework for Providing Change Impact Insights

Abstract

1. Introduction

- We develop a procedural approach to assign an Importance Factor (IF) to each system component based on its significance to the system’s overall operation.

- We enhance the security profile by incorporating IF values and extend the security awareness framework to generate enriched interpretations that provide insights into the affected components, their functionalities, and their operational significance.

2. Background

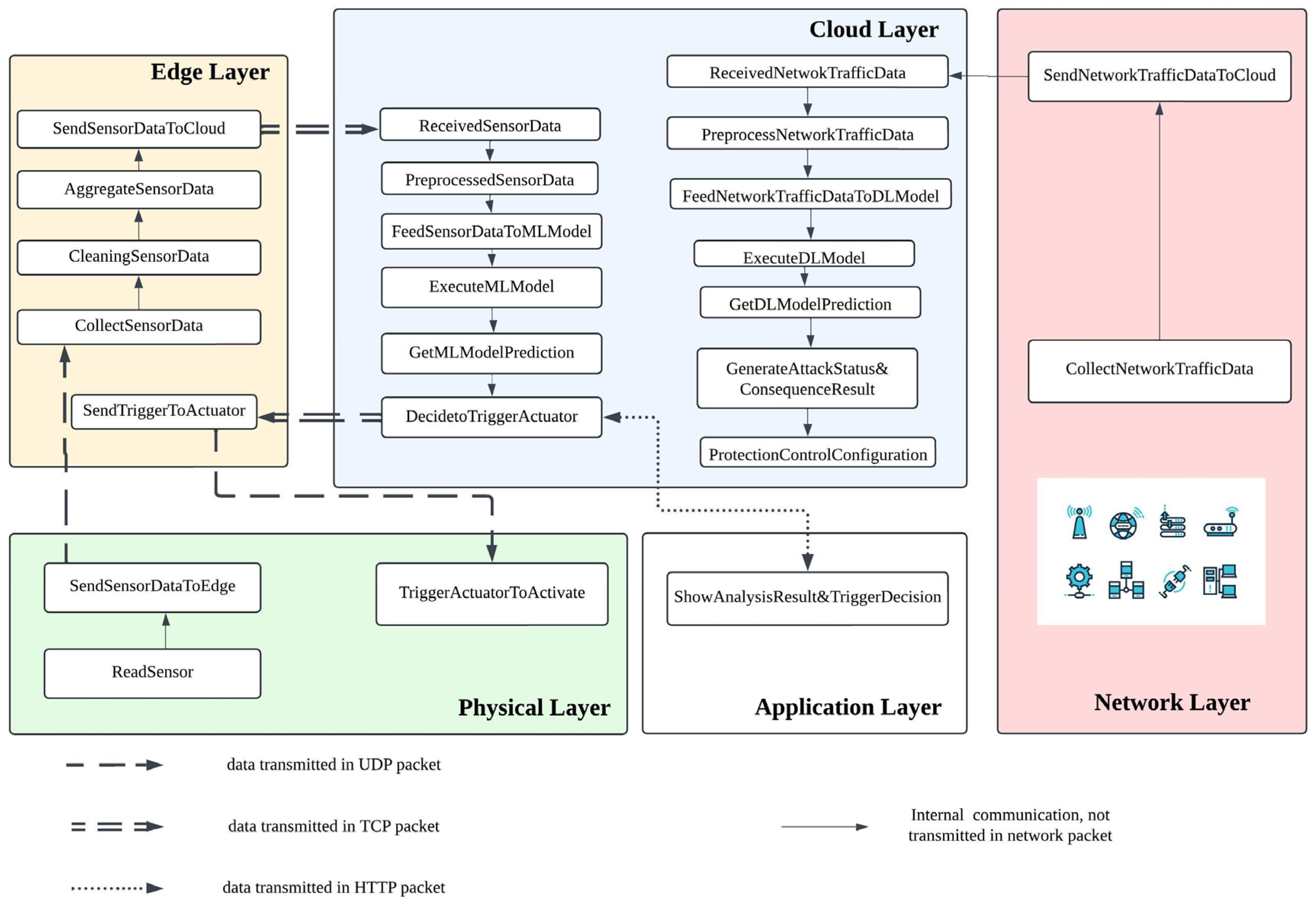

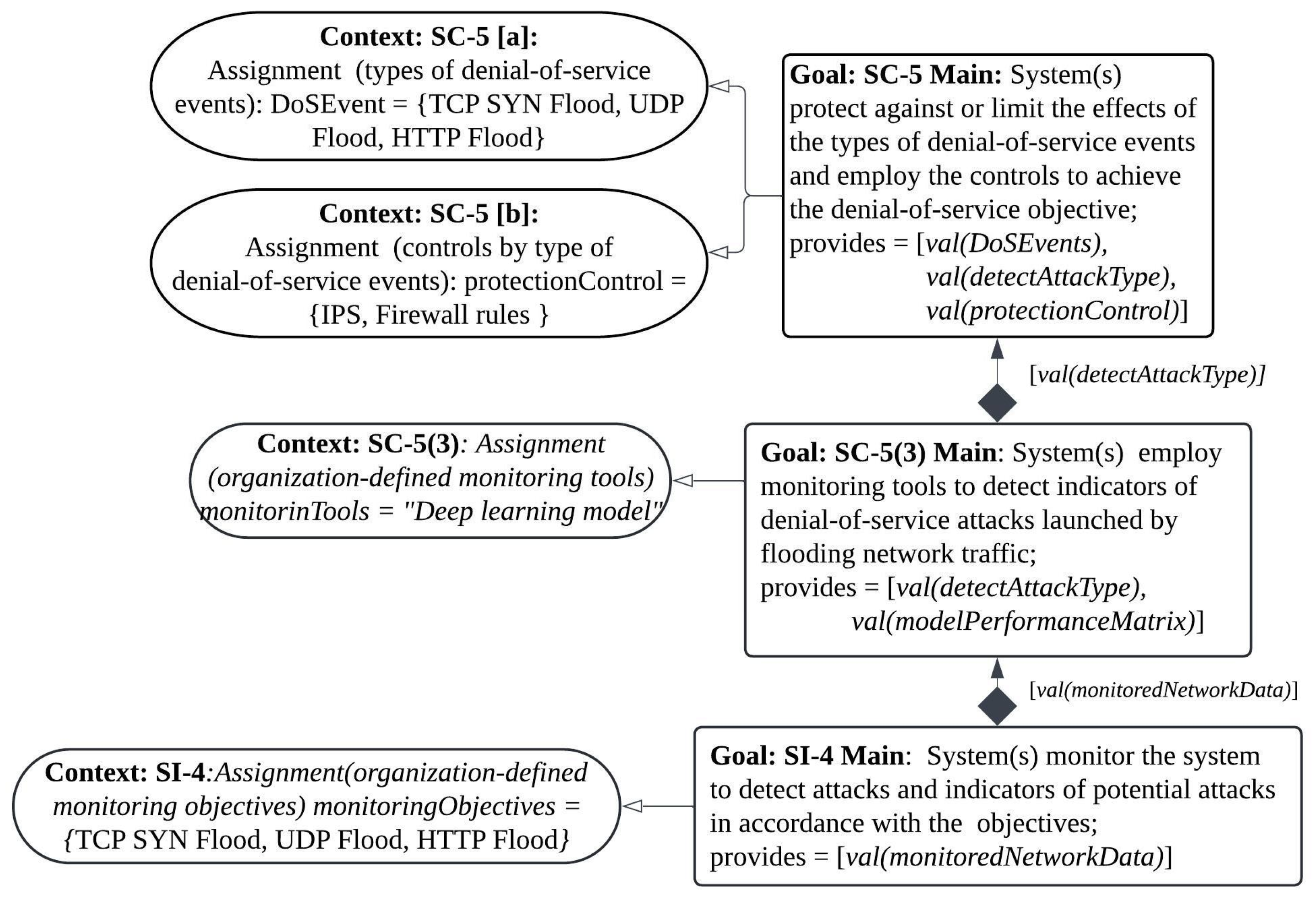

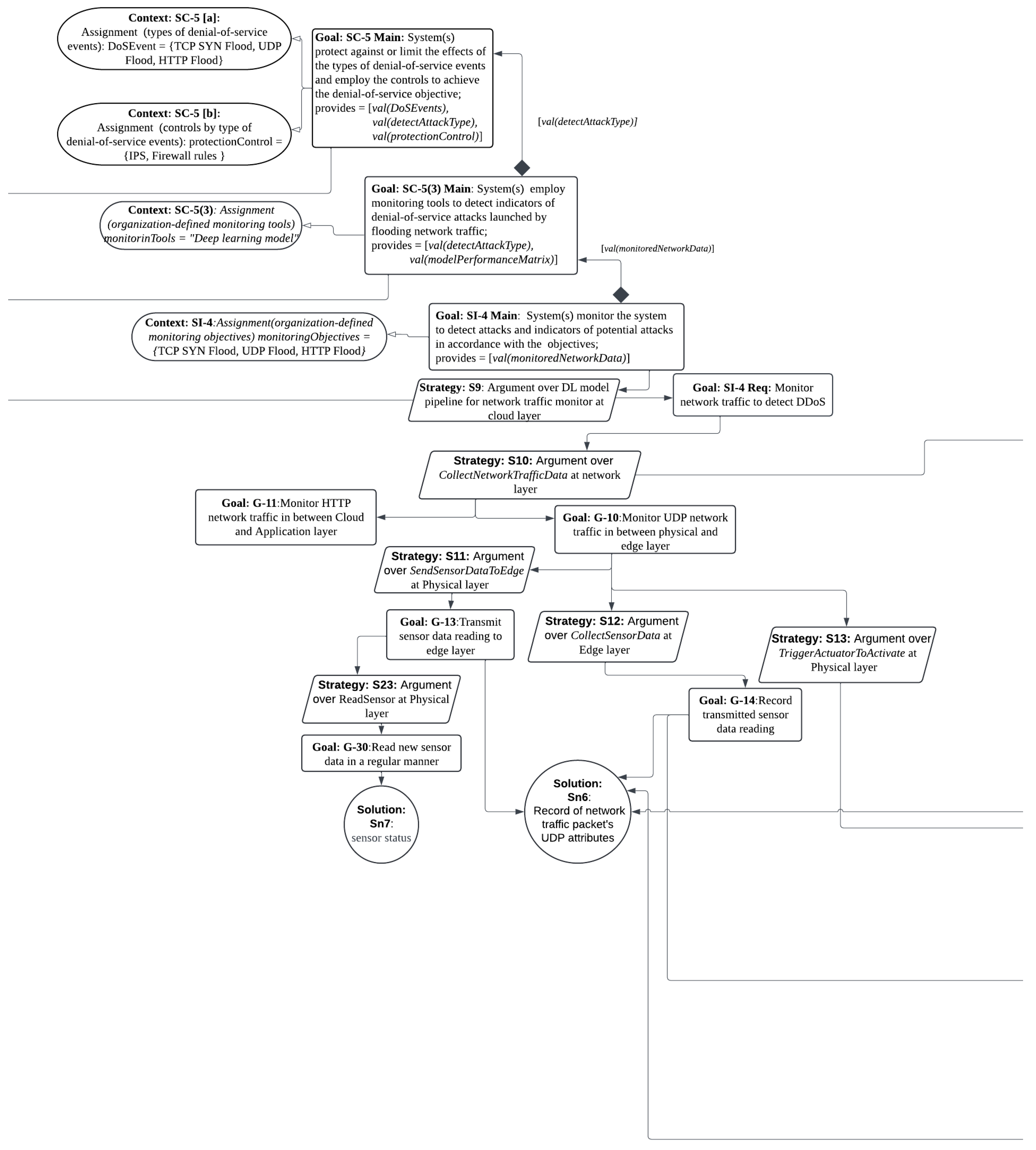

- Perception (or Physical) Layer: Houses sensing devices and collects raw data.

- Edge/Fog Layer: Offers localized computing resources to reduce latency and offload processing from the cloud.

- Cloud Layer: Manages large-scale data processing, analysis, and storage using heterogeneous cloud services [31].

- Network (or Transport) Layer: Ensures data transmission between layers.

- Application Layer: Delivers services and interfaces to end users.

3. Approach

3.1. IoT Based Smart Irrigation System Testbed

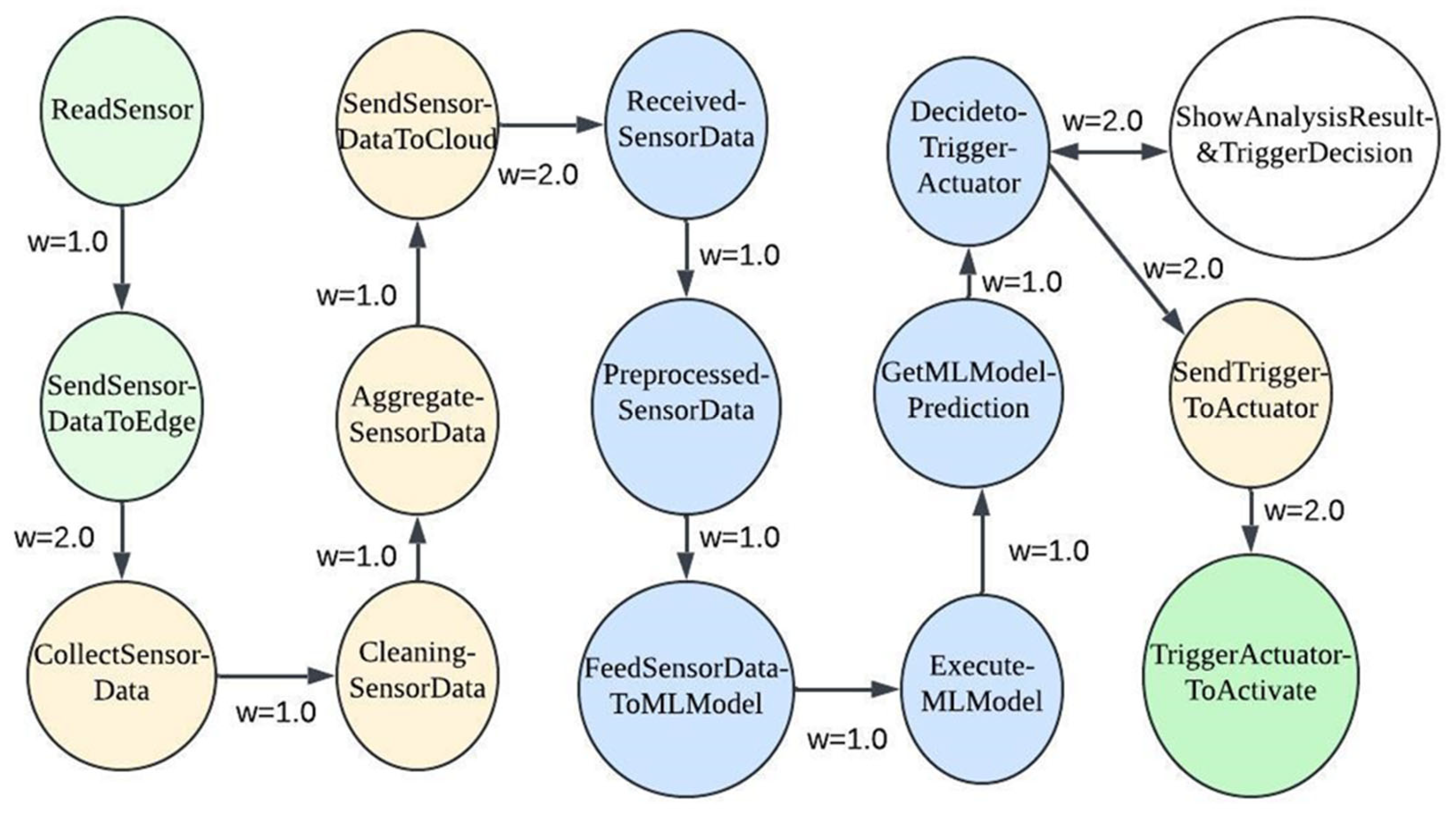

- To ShowAnalysisResult&TriggerDecision at the Application Layer, where the user is notified of the prediction and the actuator decision.

- To the Edge Layer, where the SendTriggerToActuator process relays the command to the Physical Layer. There, the TriggerActuatorToActivate process activates the actuator, which the testbed simulates with a buzzer representing the irrigation valve.
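The two routes a decision takes can be sketched as a small dispatch routine. This is a minimal illustration, not the testbed's code. The `Decision` record, the `EventLog` class and the snake_case function names are hypothetical stand-ins for the processes named above, each layer is reduced to an entry in an event log, and the assumption that the buzzer fires only when activation was decided is ours.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Decision:
    prediction: str          # hypothetical ML-model output label
    activate_actuator: bool  # hypothetical result of the trigger decision


@dataclass
class EventLog:
    # (layer, message) pairs recording which layer handled each step
    events: List[Tuple[str, str]] = field(default_factory=list)


def show_analysis_result_and_trigger_decision(log: EventLog, d: Decision) -> None:
    # Application Layer: notify the user of the prediction and the decision.
    log.events.append(("Application", f"prediction={d.prediction}, activate={d.activate_actuator}"))


def trigger_actuator_to_activate(log: EventLog) -> None:
    # Physical Layer: the testbed uses a buzzer in place of the irrigation valve.
    log.events.append(("Physical", "buzzer on"))


def send_trigger_to_actuator(log: EventLog, d: Decision) -> None:
    # Edge Layer: relay the command; the actuator fires only if activation was decided.
    log.events.append(("Edge", "relay command"))
    if d.activate_actuator:
        trigger_actuator_to_activate(log)


def dispatch_decision(log: EventLog, d: Decision) -> None:
    # A single decision fans out to both destinations.
    show_analysis_result_and_trigger_decision(log, d)
    send_trigger_to_actuator(log, d)


log = EventLog()
dispatch_decision(log, Decision("irrigate", True))
print([layer for layer, _ in log.events])  # ['Application', 'Edge', 'Physical']
```

Keeping the user notification and the actuator path as separate calls mirrors the fact that they terminate in different layers.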

3.2. IoT DDoS Dataset and DNN Model

3.3. Local Explanation of the DNN Model and Information Extraction

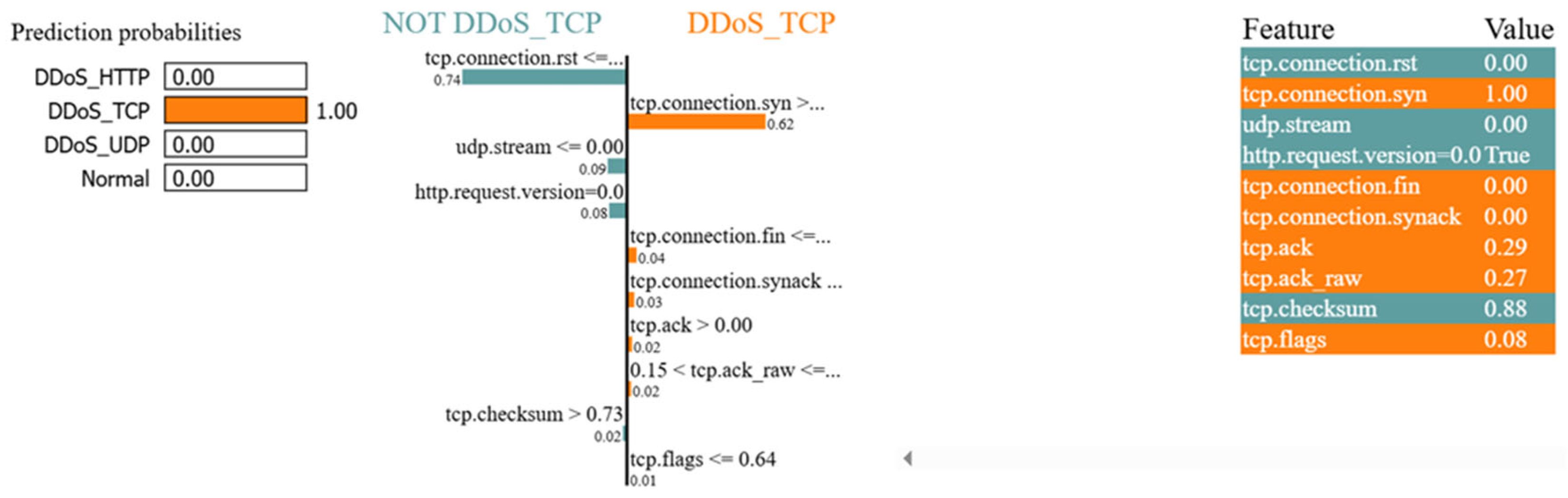

- Left Side (NOT DDoS_UDP): Displays attributes that contributed to the prediction that the instance is not a DDoS_UDP attack.

- Right Side (DDoS_UDP): Highlights attributes that pushed the prediction toward a DDoS_UDP classification.
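The two sides of the LIME plot correspond to the sign of each feature weight. A minimal sketch follows, assuming the weights are available as `(feature_condition, weight)` pairs, which is the shape returned by the `lime` package's `Explanation.as_list()`. The feature conditions and weight values in the example are hypothetical.

```python
from typing import List, Tuple

Weight = Tuple[str, float]


def split_lime_weights(weights: List[Weight]) -> Tuple[List[Weight], List[Weight]]:
    """Split LIME (feature, weight) pairs into the two sides of the explanation plot.

    Positive weights push toward the explained class (e.g. DDoS_UDP); negative
    weights push toward its complement (NOT DDoS_UDP). Zero weights are dropped.
    Each side is ordered by decreasing influence (absolute weight).
    """
    toward = sorted((p for p in weights if p[1] > 0), key=lambda p: p[1], reverse=True)
    against = sorted((p for p in weights if p[1] < 0), key=lambda p: p[1])
    return toward, against


# Hypothetical weights, shaped like the list returned by Explanation.as_list().
weights = [
    ("udp.time_delta <= 0.00", 0.21),
    ("tcp.flags > 0.00", -0.15),
    ("tcp.len <= 0.00", 0.12),
    ("mqtt.len > 0.00", -0.08),
]
toward, against = split_lime_weights(weights)
```

The positive side is the natural input for the later mapping step, since those are the attributes that drove the attack classification.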

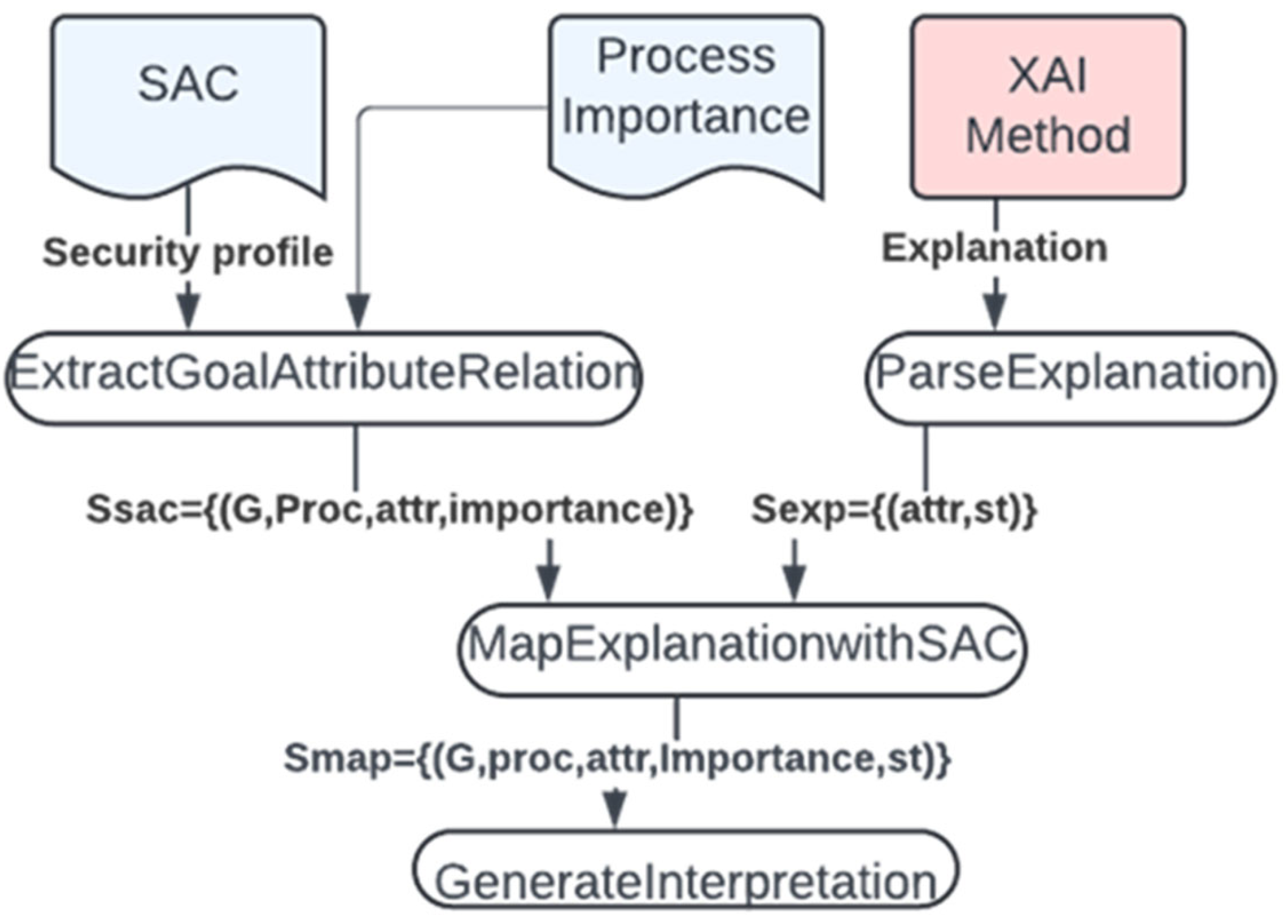

- is the system process responsible for fulfilling that goal,

- is the set of attributes linked to the goal via solution nodes in the SAC and used within ,

- is the Importance Factor (IF) assigned to each process based on its contribution to the system’s overall operation. Details on how the IF is computed are provided in Section 3.4.
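Each entry in the profile links a goal, the process that fulfils it, the attributes tied to the goal through SAC solution nodes, and the process IF. The sketch below models such an entry as a record and looks up the entries affected by a set of flagged attributes, ranked by IF. The class name, field names, goal texts and attribute-to-goal links are hypothetical; only the process names and IF values are taken from the Importance Factor table.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List


@dataclass(frozen=True)
class SACMapping:
    """One goal-to-process entry of the security profile (hypothetical field names)."""
    goal: str                   # security goal from the SAC
    process: str                # system process responsible for the goal
    attributes: FrozenSet[str]  # attributes linked to the goal via solution nodes
    importance_factor: float    # IF of the process


def impacted_mappings(profile: Iterable[SACMapping], affected: Iterable[str]) -> List[SACMapping]:
    """Return entries whose attributes overlap the affected set, highest IF first."""
    affected_set = set(affected)
    hits = [m for m in profile if m.attributes & affected_set]
    return sorted(hits, key=lambda m: m.importance_factor, reverse=True)


profile = [
    SACMapping("Sensor data reaches the edge", "SendSensorDataToEdge",
               frozenset({"udp.port", "udp.time_delta"}), 0.016),
    SACMapping("Actuator commands are delivered", "SendTriggerToActuator",
               frozenset({"tcp.flags", "tcp.seq"}), 0.086),
    SACMapping("Irrigation decision is made", "DecidetoTriggerActuator",
               frozenset({"tcp.flags"}), 0.156),
]
hits = impacted_mappings(profile, {"tcp.flags"})
```

Sorting by IF puts the operationally most significant affected process at the top of the interpretation.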

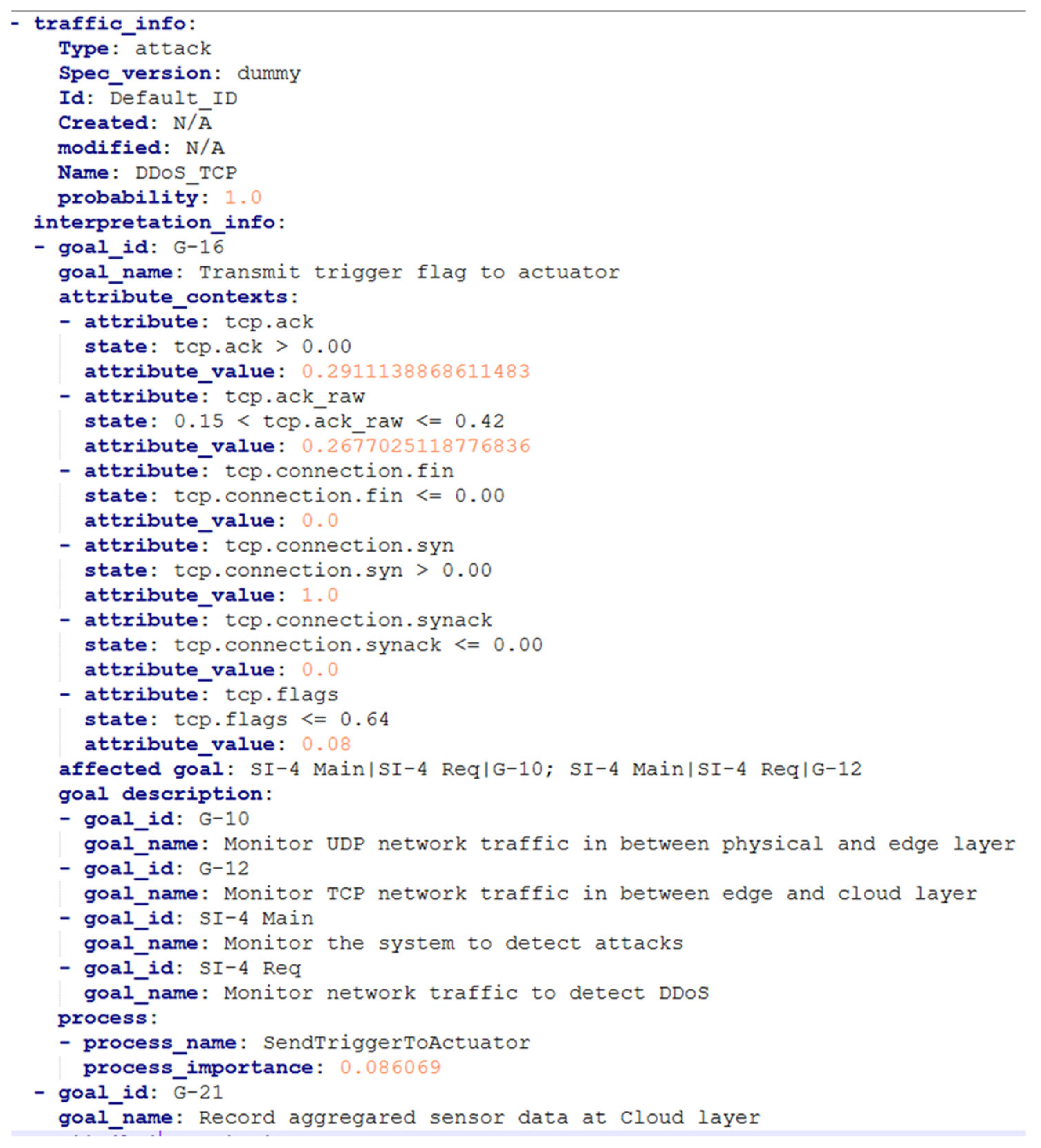

- interpretation_info: A nested object outlining affected attributes and their states, associated processes, corresponding goals, and IFs.

- traffic_info: A summary of the model’s prediction (e.g., DDoS_UDP with 99% confidence) and the relevant attack context.
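A report with these two top-level sections can be assembled as a plain dictionary and serialized to JSON. This is a minimal sketch, assuming `interpretation_info` is keyed by attribute and nests the linked processes, goals and IFs under each one. The function name, the key names other than the two top-level sections, and the example attribute state are hypothetical.

```python
import json
from typing import Dict, List, Tuple

Link = Tuple[str, str, str, float]  # (attribute, process, goal, importance_factor)


def build_report(prediction: str, confidence: float,
                 attribute_states: Dict[str, str], links: List[Link]) -> dict:
    """Assemble the interpretation_info and traffic_info sections (hypothetical layout)."""
    interpretation_info = {}
    for attr, state in attribute_states.items():
        interpretation_info[attr] = {
            "state": state,
            # every process/goal pair linked to this attribute, with its IF
            "processes": [
                {"process": p, "goal": g, "importance_factor": imp}
                for a, p, g, imp in links if a == attr
            ],
        }
    traffic_info = {
        "prediction": prediction,
        "confidence": confidence,
        "context": f"Instance classified as {prediction} with {confidence:.0%} confidence",
    }
    return {"interpretation_info": interpretation_info, "traffic_info": traffic_info}


report = build_report(
    "DDoS_UDP", 0.99,
    {"udp.time_delta": "<= 0.00"},
    [("udp.time_delta", "SendSensorDataToEdge", "Sensor data reaches the edge", 0.016)],
)
print(json.dumps(report, indent=2))
```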

3.4. Generate System-Call Dependency Graph (ScD Graph) and Calculate Importance Factor (IF) Assigned to Each Process
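The IF of a process reflects its position in the system-call dependency (ScD) graph. The reference list cites PageRank and other centrality measures, so the sketch below assumes a PageRank-style centrality computed by power iteration, with edges pointing in the direction of data flow. It illustrates the centrality idea only and does not reproduce the IF values in the Importance Factor table. The edge list is a hypothetical fragment of the testbed pipeline.

```python
from typing import Dict, List, Tuple


def pagerank(edges: List[Tuple[str, str]], damping: float = 0.85,
             tol: float = 1e-10, max_iter: int = 1000) -> Dict[str, float]:
    """PageRank by power iteration; dangling nodes spread their rank uniformly."""
    nodes = sorted({n for edge in edges for n in edge})
    out: Dict[str, List[str]] = {n: [] for n in nodes}
    for src, dst in edges:
        out[src].append(dst)
    n = len(nodes)
    rank = {v: 1.0 / n for v in nodes}
    for _ in range(max_iter):
        # rank held by nodes without outgoing edges is redistributed to all nodes
        dangling = sum(rank[v] for v in nodes if not out[v])
        new = {v: (1.0 - damping) / n + damping * dangling / n for v in nodes}
        for v in nodes:
            for w in out[v]:
                new[w] += damping * rank[v] / len(out[v])
        delta = sum(abs(new[v] - rank[v]) for v in nodes)
        rank = new
        if delta < tol:
            break
    return rank


# Hypothetical ScD-graph fragment: each edge follows the sensor data downstream.
edges = [
    ("ReadSensor", "CollectSensorData"),
    ("CollectSensorData", "SendSensorDataToEdge"),
    ("SendSensorDataToEdge", "ReceivedSensorData"),
]
scores = pagerank(edges)
```

Because the scores sum to 1, they can be read directly as relative importance; in a chain like this one, processes further downstream accumulate more rank.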

4. Experiment and Result

5. Performance Analysis

5.1. Execution Time

5.2. Fidelity Assessment

- DDoS_TCP explanations exhibited the highest fidelity, with a cosine similarity of approximately 0.65. This relatively strong alignment is likely due to the richer and more diverse set of TCP-specific features in the ground truth, which increases the chance of overlap with LIME-selected features. The model appears to have captured meaningful protocol-level patterns consistent with known attack behaviors.

- DDoS_HTTP explanations achieved moderate fidelity, with a cosine similarity of approximately 0.47. This reflects partial alignment, likely influenced by the limited number of HTTP-specific features in the ground truth; some LIME-identified features were only weakly relevant or attributable to noise.

- DDoS_UDP explanations demonstrated the lowest fidelity, with a cosine similarity of approximately 0.31. Their low precision (0.19) indicates that most LIME-selected features fell outside the UDP ground truth, suggesting that the explanations either focused on less relevant features or failed to capture the protocol’s few but critical attributes. The relative simplicity and limited feature diversity of UDP traffic may have contributed to this outcome.
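The fidelity scores compare the features LIME selects with a ground-truth feature set for each attack type. A minimal sketch follows, assuming both sets are encoded as binary indicator vectors over a shared feature list. The function name and the two example feature sets are hypothetical; the feature list reuses the TCP attributes table.

```python
import math
from typing import Iterable, Sequence, Tuple


def explanation_fidelity(lime_features: Iterable[str], ground_truth: Iterable[str],
                         all_features: Sequence[str]) -> Tuple[float, float, float, float]:
    """Precision, recall, F1 and cosine similarity of two binary feature vectors."""
    lime_set, truth_set = set(lime_features), set(ground_truth)
    a = [1 if f in lime_set else 0 for f in all_features]
    b = [1 if f in truth_set else 0 for f in all_features]
    tp = sum(x * y for x, y in zip(a, b))  # features selected by LIME and in the ground truth
    precision = tp / sum(a) if sum(a) else 0.0
    recall = tp / sum(b) if sum(b) else 0.0
    f1 = 2 * precision * recall / (precision + recall) if tp else 0.0
    norm = math.sqrt(sum(a)) * math.sqrt(sum(b))
    cosine = tp / norm if norm else 0.0
    return precision, recall, f1, cosine


tcp_features = ["tcp.flags", "tcp.ack", "tcp.ack_raw", "tcp.checksum", "tcp.seq",
                "tcp.flags.ack", "tcp.len", "tcp.connection.syn", "tcp.connection.rst",
                "tcp.connection.fin", "tcp.connection.synack"]
truth = {"tcp.flags", "tcp.seq", "tcp.ack", "tcp.connection.syn"}  # hypothetical
lime_sel = {"tcp.flags", "tcp.seq", "tcp.len", "tcp.checksum"}     # hypothetical
p, r, f1, cos = explanation_fidelity(lime_sel, truth, tcp_features)
```

With two of four selected features matching, all four scores come out at 0.5, and the cosine equals the square root of precision times recall.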

5.3. Robustness of the Framework

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Reggio, G.; Leotta, M.; Cerioli, M.; Spalazzese, R.; Alkhabbas, F. What are IoT systems for real? An experts’ survey on software engineering aspects. Internet Things 2020, 12, 100313.

- Gubbi, J.; Buyya, R.; Marusic, S.; Palaniswami, M. Internet of Things (IoT): A vision, architectural elements, and future directions. Future Gener. Comput. Syst. 2013, 29, 1645–1660.

- Taivalsaari, A.; Mikkonen, T. On the development of IoT systems. In Proceedings of the Third International Conference on Fog and Mobile Edge Computing (FMEC), Barcelona, Spain, 23–26 April 2018; IEEE: New York, NY, USA, 2018; pp. 13–19.

- Tawalbeh, L.A.; Muheidat, F.; Tawalbeh, M.; Quwaider, M. IoT Privacy and security: Challenges and solutions. Appl. Sci. 2020, 10, 4102.

- Alaba, F.A.; Othman, M.; Hashem, I.A.T.; Alotaibi, F. Internet of Things security: A survey. J. Netw. Comput. Appl. 2017, 88, 10–28.

- Jahan, S.; Alqahtani, S.; Gamble, R.F.; Bayesh, M. Automated Extraction of Security Profile Information from XAI Outcomes. In Proceedings of the 2023 IEEE International Conference on Autonomic Computing and Self-Organizing Systems Companion (ACSOS-C), Toronto, ON, Canada, 25–29 September 2023; IEEE: New York, NY, USA, 2023; pp. 110–115.

- Petrovska, A. Self-Awareness as a Prerequisite for Self-Adaptivity in Computing Systems. In Proceedings of the 2021 IEEE International Conference on Autonomic Computing and Self-Organizing Systems Companion (ACSOS-C), Washington, DC, USA, 27 September–1 October 2021; IEEE: New York, NY, USA, 2021; pp. 146–149.

- Chattopadhyay, A.; Lam, K.Y.; Tavva, Y. Autonomous vehicle: Security by design. IEEE Trans. Intell. Transp. Syst. 2020, 22, 7015–7029.

- Li, J.; Yi, X.; Wei, S. A study of network security situational awareness in Internet of Things. In Proceedings of the 2020 International Wireless Communications and Mobile Computing (IWCMC), Limassol, Cyprus, 15–19 June 2020; IEEE: New York, NY, USA, 2020; pp. 1624–1629.

- Lei, W.; Wen, H.; Hou, W.; Xu, X. New security state awareness model for IoT devices with edge intelligence. IEEE Access 2021, 9, 69756–69765.

- Hemmati, A.; Rahmani, A.M. The Internet of Autonomous Things applications: A taxonomy, technologies, and future directions. Internet Things 2022, 20, 100635.

- Xu, R.; Nagothu, D.; Chen, Y.; Aved, A.; Ardiles-Cruz, E.; Blasch, E. A Secure Interconnected Autonomous System Architecture for Multi-Domain IoT Ecosystems. IEEE Commun. Mag. 2024, 62, 52–57.

- Salehie, M.; Tahvildari, L. Self-adaptive software: Landscape and research challenges. ACM Trans. Auton. Adapt. Syst. (TAAS) 2009, 4, 1–42.

- Hezavehi, S.M.; Weyns, D.; Avgeriou, P.; Calinescu, R.; Mirandola, R.; Perez-Palacin, D. Uncertainty in self-adaptive systems: A research community perspective. ACM Trans. Auton. Adapt. Syst. (TAAS) 2021, 15, 1–36.

- Jahan, S.; Riley, I.; Gamble, R.F. Assessing adaptations based on change impacts. In Proceedings of the 2020 IEEE International Conference on Autonomic Computing and Self-Organizing Systems (ACSOS), Washington, DC, USA, 17–21 August 2020; IEEE: New York, NY, USA, 2020; pp. 48–54.

- Jahan, S. An Adaptation Assessment Framework for Runtime Security Assurance Case Evolution. Ph.D. Dissertation, The University of Tulsa, Tulsa, OK, USA, 2021. Available online: https://www.proquest.com/docview/2637547876?pq-origsite=gscholar&fromopenview=true&sourcetype=Dissertations%20&%20Theses (accessed on 25 May 2025).

- Gheibi, O.; Weyns, D.; Quin, F. Applying machine learning in self-adaptive systems: A systematic literature review. ACM Trans. Auton. Adapt. Syst. (TAAS) 2021, 15, 1–37.

- Janiesch, C.; Zschech, P.; Heinrich, K. Machine learning and deep learning. Electron. Mark. 2021, 31, 685–695.

- Vilone, G.; Longo, L. Explainable artificial intelligence: A systematic review. arXiv 2020, arXiv:2006.00093.

- Molnar, C. Interpretable Machine Learning; Lulu.com: Morrisville, NC, USA, 2020; Available online: https://books.google.com/books?hl=en&lr=&id=jBm3DwAAQBAJ&oi=fnd&pg=PP1&dq=Interpretable+machine+learning&ots=EhvWYjHDSY&sig=fJlg8xyZsauRhLjOYF_xUqr8khQ#v=onepage&q=Interpretable%20machine%20learning&f=false (accessed on 25 May 2025).

- Alexander, R.; Hawkins, R.; Kelly, T. Security Assurance Cases: Motivation and the State of the Art; High Integrity Systems Engineering, Department of Computer Science, University of York: York, UK, 2011; Available online: https://citeseerx.ist.psu.edu/document?repid=rep1&type=pdf&doi=623bb1c1ded3860ed1307d45a2a01823b13abff6 (accessed on 25 May 2025).

- Kelly, T.; Weaver, R. The goal structuring notation–a safety argument notation. In Dependable Systems and Networks 2004 Workshop on Assurance Cases; Citeseer: Princeton, NJ, USA, 2004; Volume 6, Available online: http://dslab.konkuk.ac.kr/class/2012/12SIonSE/Key%20Papers/The%20Goal%20Structuring%20Notation%20_%20A%20Safety%20Argument%20Notation.pdf (accessed on 25 May 2025).

- Averbukh, V.L.; Bakhterev, M.O.; Manakov, D.V. Evaluations of visualization metaphors and views in the context of execution traces and call graphs. Sci. Vis. 2017, 9, 1–18. Available online: https://www.researchgate.net/profile/Vladimir-Averbukh/publication/322070685_Evaluations_of_Visualization_Metaphors_and_Views_in_the_Context_of_Execution_Traces_and_Call_Graphs/links/5a4287690f7e9ba868a47bd5/Evaluations-of-Visualization-Metaphors-and-Views-in-the-Context-of-Execution-Traces-and-Call-Graphs.pdf (accessed on 25 May 2025).

- Salis, V.; Sotiropoulos, T.; Louridas, P.; Spinellis, D.; Mitropoulos, D. PyCG: Practical call graph generation in Python. In Proceedings of the 2021 IEEE/ACM 43rd International Conference on Software Engineering (ICSE), Madrid, Spain, 25–28 May 2021; IEEE: New York, NY, USA, 2021; pp. 1646–1657.

- Zhang, P.; Wang, T.; Yan, J. PageRank centrality and algorithms for weighted, directed networks. Phys. A Stat. Mech. Its Appl. 2022, 586, 126438.

- Gómez, S. Centrality in networks: Finding the most important nodes. In Business and Consumer Analytics: New Ideas; Moscato, P., de Vries, N., Eds.; Springer: Cham, Switzerland, 2019.

- Freeman, L.C. Centrality in social networks: Conceptual clarification. In Social Network: Critical Concepts in Sociology; Routledge: London, UK, 2002; Volume 1, pp. 238–263. Available online: https://books.google.com/books?hl=en&lr=&id=fy3m_EixWOsC&oi=fnd&pg=PA238&dq=Centrality+in+social+networks+conceptual+clarification&ots=unHaJzR81U&sig=hG9bkrrpA_B-kQ0r2iy7ISsLvts#v=onepage&q=Centrality%20in%20social%20networks%20conceptual%20clarification&f=false (accessed on 25 May 2025).

- Brin, S.; Page, L. The anatomy of a large-scale hypertextual web search engine. Comput. Netw. ISDN Syst. 1998, 30, 107–117.

- Sarker, I.H.; Khan, A.I.; Abushark, Y.B.; Alsolami, F. Internet of things (IoT) security intelligence: A comprehensive overview, machine learning solutions and research directions. Mob. Netw. Appl. 2023, 28, 296–312.

- Bouaouad, A.E.; Cherradi, A.; Assoul, S.; Souissi, N. The key layers of IoT architecture. In Proceedings of the 2020 5th International Conference on Cloud Computing and Artificial Intelligence: Technologies and Applications (CloudTech), Marrakesh, Morocco, 24–26 November 2020; IEEE: New York, NY, USA, 2020; pp. 1–4.

- Mrabet, H.; Belguith, S.; Alhomoud, A.; Jemai, A. A survey of IoT security based on a layered architecture of sensing and data analysis. Sensors 2020, 20, 3625.

- Tukur, Y.M.; Thakker, D.; Awan, I.U. Multi-layer approach to internet of things (IoT) security. In Proceedings of the 2019 7th International Conference on Future Internet of Things and Cloud (FiCloud), Istanbul, Turkey, 26–28 August 2019; IEEE: New York, NY, USA, 2019; pp. 109–116.

- Hassija, V.; Chamola, V.; Saxena, V.; Jain, D.; Goyal, P.; Sikdar, B. A survey on IoT security: Application areas, security threats, and solution architectures. IEEE Access 2019, 7, 82721–82743.

- Dargaoui, S.; Azrour, M.; El Allaoui, A.; Amounas, F.; Guezzaz, A.; Attou, H.; Hazman, C.; Benkirane, S.; Bouazza, S.H. An overview of the security challenges in IoT environment. Adv. Technol. Smart Environ. Energy 2023, 151–160.

- Koohang, A.; Sargent, C.S.; Nord, J.H.; Paliszkiewicz, J. Internet of Things (IoT): From awareness to continued use. Int. J. Inf. Manag. 2022, 62, 102442.

- Tariq, U.; Aseeri, A.O.; Alkatheiri, M.S.; Zhuang, Y. Context-aware autonomous security assertion for industrial IoT. IEEE Access 2020, 8, 191785–191794.

- Jaigirdar, F.T.; Rudolph, C.; Bain, C. Prov-IoT: A security-aware IoT provenance model. In Proceedings of the 2020 IEEE 19th International Conference on Trust, Security and Privacy in Computing and Communications (TrustCom), Guangzhou, China, 29 December 2020–1 January 2021; pp. 1360–1367.

- Chen, B.; Qiao, S.; Zhao, J.; Liu, D.; Shi, X.; Lyu, M.; Chen, H.; Lu, H.; Zhai, Y. A security awareness and protection system for 5G smart healthcare based on zero-trust architecture. IEEE Internet Things J. 2020, 8, 10248–10263.

- Darias, J.M.; Díaz-Agudo, B.; Recio-Garcia, J.A. A Systematic Review on Model-agnostic XAI Libraries. In Proceedings of the ICCBR Workshops, Online, 13–16 September 2021; pp. 28–39.

- Lipton, Z.C. The mythos of model interpretability: In machine learning, the concept of interpretability is both important and slippery. Queue 2018, 16, 31–57.

- Ribeiro, M.T.; Singh, S.; Guestrin, C. Why should I trust you? Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 1135–1144.

- Ng, C.H.; Abuwala, H.S.; Lim, C.H. Towards more stable LIME for explainable AI. In Proceedings of the 2022 International Symposium on Intelligent Signal Processing and Communication Systems (ISPACS), Penang, Malaysia, 22–25 November 2022; IEEE: New York, NY, USA, 2022; pp. 1–4.

- Dieber, J.; Kirrane, S. Why model why? Assessing the strengths and limitations of LIME. arXiv 2020, arXiv:2012.00093.

- Lundberg, S.M.; Lee, S.I. A unified approach to interpreting model predictions. Adv. Neural Inf. Process. Syst. 2017, 30, 4765–4774.

- Li, M.; Sun, H.; Huang, Y.; Chen, H. Shapley value: From cooperative game to explainable artificial intelligence. Auton. Intell. Syst. 2024, 4, 2.

- Miller, T. Explainable AI is dead, long live explainable AI! Hypothesis-driven decision support using evaluative AI. In Proceedings of the 2023 ACM Conference on Fairness, Accountability, and Transparency, Chicago, IL, USA, 12–15 June 2023; pp. 333–342.

- Saputri, T.R.D.; Lee, S.W. The application of machine learning in self-adaptive systems: A systematic literature review. IEEE Access 2020, 8, 205948–205967.

- Bahmani, B.; Kumar, R.; Mahdian, M.; Upfal, E. PageRank on an evolving graph. In Proceedings of the 18th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Beijing, China, 12–16 August 2012; pp. 24–32.

- Sallinen, S.; Luo, J.; Ripeanu, M. Real-time PageRank on dynamic graphs. In Proceedings of the 32nd International Symposium on High-Performance Parallel and Distributed Computing, Orlando, FL, USA, 20–23 June 2023; pp. 239–251.

- Zhang, J.; Luo, Y. Degree centrality, betweenness centrality, and closeness centrality in social network. In Proceedings of the 2017 2nd International Conference on Modelling, Simulation and Applied Mathematics (MSAM2017), Bangkok, Thailand, 26–27 March 2017; pp. 300–303.

- Nesa, N.; Banerjee, I. SensorRank: An energy efficient sensor activation algorithm for sensor data fusion in wireless networks. IEEE Internet Things J. 2018, 6, 2532–2539.

- Sun, Z.; Zeng, G.; Ding, C. Towards PageRank update in a streaming graph by incremental random walk. IEEE Access 2022, 10, 15805–15817.

- Rangra, A.; Sehgal, V.K. Social Internet of Things: Their Trustworthiness, Node Rank, and Embeddings Management. Ph.D. Dissertation, Jaypee University of Information Technology, Solan, India, 2021.

- Ferrag, M.A. Edge-IIoTset Cyber Security Dataset of IoT IIoT. 2023. Available online: https://www.kaggle.com/datasets/mohamedamineferrag/edgeiiotset-cyber-security-dataset-of-iot-iiot (accessed on 25 May 2025).

- Ferrag, M.A.; Friha, O.; Hamouda, D.; Maglaras, L.; Janicke, H. Edge-IIoTset: A new comprehensive realistic cyber security dataset of IoT and IIoT applications for centralized and federated learning. IEEE Access 2022, 10, 40281–40306.

- Saheed, Y.K.; Abdulganiyu, O.H.; Majikumna, K.U.; Mustapha, M.; Workneh, A.D. ResNet50-1D-CNN: A new lightweight ResNet50-One-dimensional convolution neural network transfer learning-based approach for improved intrusion detection in cyber-physical systems. Int. J. Crit. Infrastruct. Prot. 2024, 45, 100674.

- Joint Task Force. Security and Privacy Controls for Federal Information Systems; NIST Special Publication 800-53, Revision 5; NIST: Gaithersburg, MD, USA, 2020.

- Reif, J.H. Depth-first search is inherently sequential. Inf. Process. Lett. 1985, 20, 229–234.

- Dantas Silva, F.S.; Silva, E.; Neto, E.P.; Lemos, M.; Venancio Neto, A.J.; Esposito, F. A taxonomy of DDoS attack mitigation approaches featured by SDN technologies in IoT scenarios. Sensors 2020, 20, 3078.

- Guidotti, R. Evaluating local explanation methods on ground truth. Artif. Intell. 2021, 291, 103428.

- Miró-Nicolau, M.; Jaume-i-Capó, A.; Moyà-Alcover, G. Assessing fidelity in XAI post-hoc techniques: A comparative study with ground truth explanations datasets. Artif. Intell. 2024, 335, 104179.

- Canadian Institute for Cybersecurity. IDS 2018 Intrusion CSVs (CSE-CIC-IDS2018) [Data Set]; University of New Brunswick: Fredericton, NB, Canada, 2018; Available online: https://www.kaggle.com/datasets/solarmainframe/ids-intrusion-csv/data?select=02-20-2018.csv (accessed on 22 June 2025).

| TCP Attributes | Description |
|---|---|
| tcp.flags | Flags |
| tcp.ack | Acknowledgment number |
| tcp.ack_raw | Acknowledgment number (raw) |
| tcp.checksum | Checksum |
| tcp.seq | Sequence number |
| tcp.flags.ack | Acknowledgment |
| tcp.len | TCP segment length |
| tcp.connection.syn | Connection establish request (SYN) |
| tcp.connection.rst | Connection reset (RST) |
| tcp.connection.fin | Connection finish (FIN) |
| tcp.connection.synack | Connection establish acknowledge (SYN + ACK) |

| Attack Type | Description |
|---|---|
| TCP SYN flood DDoS attack | Makes the victim’s server unavailable to legitimate requests |
| UDP flood DDoS attack | Overwhelms the processing and response capabilities of victim devices |
| HTTP flood DDoS attack | Exploits seemingly legitimate HTTP GET or POST requests to attack IoT applications |

| Process Name | Importance Factor |
|---|---|
| DecidetoTriggerActuator | 0.156 |
| TriggerActuatorToActivate | 0.094 |
| GetMLModelPrediction | 0.09 |
| ShowAnalysisResult&TriggerDecision | 0.086 |
| SendTriggerToActuator | 0.086 |
| ExecuteMLModel | 0.082 |
| FeedSensorDataToMLModel | 0.074 |
| PreprocessedSensorData | 0.066 |
| ReceivedSensorData | 0.057 |
| SendSensorDataToCloud | 0.049 |
| AggregateSensorData | 0.041 |
| CleaningSensorData | 0.033 |
| CollectSensorData | 0.025 |
| SendSensorDataToEdge | 0.016 |
| ReadSensor | 0.008 |

| Traffic Type | Process Name | Average Execution Time (s) |
|---|---|---|
| Overall | Model Prediction | 0.154893 |
| Overall | Generate Explanation | 8.426568 |
| Overall | Mapping and Generate Report | 0.151432 |
| DDoS_UDP | Model Prediction | 0.159036 |
| DDoS_UDP | Generate Explanation | 8.082033 |
| DDoS_UDP | Mapping and Generate Report | 0.153765 |
| DDoS_TCP | Model Prediction | 0.152603 |
| DDoS_TCP | Generate Explanation | 8.802392 |
| DDoS_TCP | Mapping and Generate Report | 0.149118 |
| DDoS_HTTP | Model Prediction | 0.153175 |
| DDoS_HTTP | Generate Explanation | 8.373173 |
| DDoS_HTTP | Mapping and Generate Report | 0.151548 |
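Summing the three "Overall" stage times and dividing each stage by the total shows where the pipeline spends its time. The short calculation below uses only the values in the execution-time table. Explanation generation accounts for roughly 96.5% of the approximately 8.73 s total, while prediction and report mapping take well under 0.2 s each. The helper name `stage_shares` is illustrative.

```python
from typing import Dict, Tuple

# Average per-instance stage times in seconds, from the "Overall" rows of the table.
overall = {
    "Model Prediction": 0.154893,
    "Generate Explanation": 8.426568,
    "Mapping and Generate Report": 0.151432,
}


def stage_shares(times: Dict[str, float]) -> Tuple[float, Dict[str, float]]:
    """Return the total time and each stage's fraction of it."""
    total = sum(times.values())
    return total, {stage: t / total for stage, t in times.items()}


total, shares = stage_shares(overall)
for stage, share in shares.items():
    print(f"{stage}: {share:.1%}")
```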

| Attack Type | Precision | Recall | F1-Score | Cosine Similarity |
|---|---|---|---|---|
| DDoS_TCP | 0.85 | 0.51 | 0.63 | 0.65 |
| DDoS_UDP | 0.19 | 0.50 | 0.27 | 0.31 |
| DDoS_HTTP | 0.44 | 0.50 | 0.47 | 0.47 |
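For binary feature-indicator vectors, cosine similarity reduces to the geometric mean of precision and recall. Here A is the set of LIME-selected features, B is the ground-truth set, and P and R are precision and recall. Assuming the comparison uses such binary vectors (the table does not state this), the reported cosine values agree with the precision and recall columns to within rounding of those inputs:

```latex
\cos(\mathbf{a},\mathbf{b})
  = \frac{\mathbf{a}\cdot\mathbf{b}}{\lVert\mathbf{a}\rVert\,\lVert\mathbf{b}\rVert}
  = \frac{|A \cap B|}{\sqrt{|A|}\,\sqrt{|B|}}
  = \sqrt{\frac{|A \cap B|}{|A|}\cdot\frac{|A \cap B|}{|B|}}
  = \sqrt{P \cdot R}

\text{DDoS\_TCP: } \sqrt{0.85 \times 0.51} \approx 0.658, \quad
\text{DDoS\_UDP: } \sqrt{0.19 \times 0.50} \approx 0.308, \quad
\text{DDoS\_HTTP: } \sqrt{0.44 \times 0.50} \approx 0.469
```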

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bayesh, M.; Jahan, S. Embedding Security Awareness in IoT Systems: A Framework for Providing Change Impact Insights. Appl. Sci. 2025, 15, 7871. https://doi.org/10.3390/app15147871
