Abstract

Speech emotion recognition has become increasingly important in a wide range of applications, driven by the development of large transformer-based natural language processing models. However, the large size of these architectures limits their usability, which has led to a growing interest in smaller models. In this paper, we evaluate nineteen of the most popular small language models for the text and audio modalities on the speech emotion recognition task using the IEMOCAP dataset. Based on their cross-validation accuracy, the best architectures were selected to create ensemble models to evaluate the effect of combining audio and text, as well as the effect of incorporating contextual information on model performance. The experiments conducted showed a significant increase in accuracy with the inclusion of contextual information and the combination of modalities. The proposed ensemble model achieved an accuracy of 82.12% on the IEMOCAP dataset, outperforming several recent approaches. These results demonstrate the effectiveness of ensemble methods for improving speech emotion recognition performance, and highlight the feasibility of training multiple small language models on consumer-grade computers.

1. Introduction

Speech can be defined as the expression of articulated sounds for the purpose of communicating thoughts, ideas, opinions, etc. By analyzing speech, humans are able to interpret the speaker’s intent, which enables effective communication. To understand speech, emotions play a crucial role; tone, rhythm, or word choice are changed according to the emotion the speaker feels or intends to convey. In the last two decades, speech emotion recognition (SER) [1] has gained increasing popularity in several research areas such as human–computer interaction [2], human–robot interaction [3], healthcare [4,5], or education [6,7]. During this period, handcrafted audio features such as energy, duration, harmonic-to-noise ratio, and classical feature extraction methods such as Mel-Frequency Cepstral Coefficients (MFCCs) combined with traditional machine-learning (ML) classifiers such as support vector machines (SVMs) have been successfully used for SER. However, in recent years, deep-learning (DL) methods have been increasingly adopted and gradually outperform traditional methods [8]. Deep-learning, and especially transformer-based architectures, achieve state-of-the-art accuracy on most popular SER datasets [9].

Part of the success of these approaches lies in their general-purpose design. Unlike traditional methods, whose knowledge capability is usually problem-specific, knowledge from a deep model can be easily transferred to perform a different task. This capability allows DL models to learn not only from small, manually labeled SER datasets, but also from much larger datasets focused on a different task, or even from unlabeled data [10].

The year 2018 marked a turning point in NLP, with the presentation of bidirectional encoder representations from transformers (BERT) [11] and generative pre-training (GPT) [12]. These transformer-based models demonstrated a great ability to capture longer-term dependencies in language in various NLP tasks, such as text generation, translation, summarization, question answering, or sentiment analysis. With 110 and 117 million parameters, respectively, the BERT and GPT architectures were originally considered large language models (LLMs). Since then, there has been significant progress in the development of much larger models, with architectures of billions or even hundreds of billions of parameters becoming standard [13]. As a result, models with just over 100 million parameters, previously considered large, are now referred to in the scientific community as small language models (SLMs) [14,15,16].

In general, LLMs report higher performance than SLMs in a wide range of NLP tasks [17]. However, their large size limits the usability of this type of model due to the large amount of computational resources required for both training and inference. On the other hand, SLMs can be fine-tuned relatively quickly on consumer-grade computers. Moreover, using techniques such as quantization [18] or low-rank adaptation (LoRA) [19], SLMs can be deployed on low-end devices, greatly increasing the number of potential applications for this type of model. The relatively light weight of SLMs facilitates the assembly of multiple models, which can significantly increase system performance in SER, especially when combining models of different modalities such as audio and text [20].

Several review articles published in recent years highlight the growing number of approaches using language models for SER [9,21,22], mostly models based on BERT for text processing or Wav2Vec2 [23] for audio processing. However, these models are often used as the backbone within more complex architectures, and research often focuses on the proposed architecture, sometimes neglecting the importance of the choice of language model.

In this paper, the performance of some of the most popular SLMs for text and audio modalities on the SER task is evaluated using a public benchmark dataset. All models are evaluated based on their accuracy and computational efficiency, with the aim of determining the most suitable architectures for the proposed task for each modality. Subsequently, the selected models are combined into an ensemble architecture to investigate the effect of combining models of the same modality but from different families on system performance, as well as the effect of combining text and audio modality models. In this paper, the terms context and contextual information are used to refer specifically to the immediately preceding utterance.

The key contributions of this paper are the following:

- The performance of popular fine-tuned SLMs for speaker-independent SER is evaluated on a public benchmark dataset.

- The effect of incorporating contextual information (previous utterance) on model performance is investigated.

- Ensemble architectures for unimodal and bimodal SER are developed and tested.

The rest of the paper is organized as follows. Section 2 provides a brief overview of related works. Section 3 describes the materials and methods used. Section 4 details the results and provides a comparison with other relevant works. Section 5 discusses the findings and outlines the limitations of the study, and Section 6 concludes the paper.

2. Related Work

In this section, an overview of relevant research on SER using SLMs is given. The section is divided into two parts: one presents different types of SLMs tested in this work, while the second examines recent work using language models for SER.

2.1. Architectures of SLMs

Based on their architecture, transformer-based models can be classified into three main categories: encoder-only, decoder-only, and encoder-decoder [9].

2.1.1. Encoder-Only Models

These models generate contextual representations for each token and are capable of understanding the interconnections between all input tokens. They are particularly well suited for natural language understanding tasks, where language features are extracted for subsequent applications such as text classification. However, several experiments show that the accuracy of this type of model is comparable to other models of similar size with encoder-decoder or decoder-only architectures in various text classification tasks such as financial sentiment analysis [24], fake news detection [25], or empathy detection in text [26].

In the text modality, BERT [11] is one of the first encoder-only models, and probably the most representative. BERT is a bidirectional language model designed to take into account the context of the surrounding tokens, both upstream and downstream of a given token. BERT’s architecture consists of multiple encoders arranged sequentially in a feed-forward structure, where the output of each encoder is used as the input of the next encoder. As a result of the great popularity of BERT since its release, several models based on its architecture have been introduced that have demonstrated better performance than the original model in several NLP tasks. The main improvements over the base model are briefly described below:

- RoBERTa [27]: Uses an optimized BERT training procedure by adjusting the hyperparameters, eliminating the next sentence prediction task, and using larger mini-batches and higher learning rates during training.

- ERNIE 2.0 [28]: Improves language understanding by integrating external knowledge sources during training and using knowledge masking to improve semantic understanding.

- ALBERT v2 [29]: Splits the embedding matrix into two smaller matrices and uses repeated layers split into groups to reduce the number of trainable parameters and increase training speed.

- DeBERTaV3 [30]: Uses disentangled attention, an improved mask decoder, and ELECTRA-style pre-training with gradient disentangled embedding sharing for improved performance over BERT and RoBERTa.

On the other hand, the BERT architecture has also served as a basis for the creation of audio models that apply similar principles of learning contextual representations to acoustic data. For example, Wav2Vec2 masks the speech input in latent space using a convolutional neural network (CNN) and solves a contrastive task defined over a quantization of the latent representations jointly learned in a BERT-like transformer. Similarly, HuBERT [31] uses the same blocks as Wav2Vec2, but introduces an offline clustering operation to provide aligned target labels for a BERT-like prediction. WavLM [32], in turn, is based on the HuBERT framework with an emphasis on modeling spoken content and preserving speaker identity. This model extends the transformer architecture by incorporating a gated relative position bias, which improves its performance in recognition tasks. Additionally, squeezed and efficient Wav2Vec (SEW) [33] has been proposed as a lightweight alternative to this type of architecture, which modifies the CNN feature extractor and squeezes the input to the transformer for improved efficiency.

2.1.2. Decoder-Only Models

Decoder-only models are characterized by their auto-regressive approach, where each new token is predicted based only on the elements previously generated in the sequence. These models are particularly well suited for text generation tasks, but can be used for any NLP task. Although decoder-only architectures have been very successful in text processing, they are not typically used for audio classification. Within this category, GPT [12] and its variants are the most well-known family of SLMs. Just as BERT inspired the development of several encoder-only models, GPT has inspired the emergence of several notable decoder-only models, including:

- GPT-2 [34]: Second version of OpenAI’s GPT model. The model’s architecture is built by stacking multiple transformer decoder blocks composed of self-attention and linear layers.

- GPT Neo [35]: Trained on a larger dataset, it uses a similar architecture to GPT-2, but differs by using local attention in every other layer with a window size of 256 tokens.

- Pythia [36]: Uses a similar architecture to the previous ones, optimized for scalability. This model features changes from GPT-2, such as the use of rotary embeddings instead of positional embeddings.

- OPT [37]: Uses an architecture similar to GPT, but optimized for efficiency. Some versions of this model achieve accuracy comparable to GPT-3 on various NLP tasks, with a substantially lower carbon footprint during training.

- XLNet [38]: Introduces a permutation approach to an auto-regressive model, allowing it to learn bidirectional context without the need to mask tokens during training, as is done in BERT-like models.
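The auto-regressive constraint shared by these models, and the local attention window mentioned for GPT Neo, can be illustrated with a small attention mask. This is a minimal sketch for illustration only: the function name is hypothetical, and the convention that the window includes the current token is an assumption rather than a description of any specific implementation.

```python
def local_causal_mask(seq_len: int, window: int) -> list[list[bool]]:
    """Return mask[i][j], True when position i may attend to position j.

    A position never sees later positions (causal constraint) and only sees
    the `window` most recent positions, itself included (assumed convention).
    """
    return [
        [0 <= i - j < window for j in range(seq_len)]
        for i in range(seq_len)
    ]


# Toy sizes chosen for readability; GPT Neo is described with a 256-token window.
mask = local_causal_mask(seq_len=5, window=2)
for row in mask:
    print("".join("x" if allowed else "." for allowed in row))
```

Setting `window` equal to `seq_len` recovers the ordinary causal mask, in which every position attends to all earlier positions.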

2.1.3. Encoder-Decoder Models

These models use a classical transformer structure, where an encoder converts the input sequence into a fixed-length contextual representation that feeds the decoder, which converts it back into a natural language sequence. Within this category, the T5 [39] and BART [40] models stand out for text processing tasks. Both models use a similar approach and have demonstrated similar performance for text summarization [41,42]. However, a recent study by Rahman et al. [43] showed that BART outperformed T5 in accuracy for depression emotion classification, despite having significantly fewer trainable parameters.

In the field of audio processing, several approaches have been presented, mostly focused on automatic speech recognition. In this type of architecture, the encoder part is used to extract audio features and the decoder part is used to generate text from these features. For audio classification tasks, however, only the encoder part is usually used. Some of the most popular models are SpeechT5 [44], S2T [45], and Whisper [46]. Inspired by the popular T5 model, SpeechT5’s architecture consists of a shared encoder-decoder network and six modality-specific pre/post networks. It accepts both text and audio as input and is capable of generating both text and audio as output. On the other hand, S2T incorporates a convolutional downsampler to reduce the length of speech inputs by 3/4 before feeding them to the encoder. The model was trained with an auto-regressive cross-entropy loss and generates transcriptions or translations in an auto-regressive manner. Finally, Whisper uses a similar architecture to S2T, but it has been trained on a huge audio dataset with transcriptions, which makes this model stand out in transfer learning applications, presenting a high zero-shot performance.

2.2. SLMs for SER

Since the presentation of the BERT model in 2018, a large number of research papers have been published using this architecture or its variants as the backbone for SER from audio transcription, often combined with acoustic processing models to create multimodal systems. For example, a trimodal model was developed by merging text features extracted with RoBERTa, visual features extracted with DenseNet, and audio features extracted with OpenSmile through a long short-term memory (LSTM) and a multimodal attention module [47]. Another proposal fused MFCC features together with BERT embeddings in a recurrent neural network (RNN) with different attention mechanisms [48]. In the same vein, the effect of the location of the merging layer in a hybrid model combining BERT together with MFCC and other audio features has been explored [49]. The results of this work reflect an increase in the efficiency of the model. However, they point out a small influence of the location of the merging layer on the results.

Similarly, a BERT model was combined with an attention-based gated recurrent unit (GRU) for processing audio features from MFCC and different types of spectrograms [50]. The same approach was later used by replacing the BERT encoder with ALBERT and performing data augmentation using a generative adversarial network (GAN), which significantly improved the accuracy of the model [51]. On the other hand, a multihead attention module was used to fuse MFCC and other audio features with features extracted using a RoBERTa-based model, along with electroencephalogram (EEG) features, to create a multimodal brain-computer fusion network for human intention recognition [52].

In addition, a considerable amount of research has been done on architectures that combine BERT-type encoders for text feature extraction with CNNs for audio feature extraction from spectrograms or MFCCs. A visual geometry group (VGG)-type architecture with MEL spectrograms as input has been used in some works [53,54]. A modified AlexNet was used for feature extraction from audio spectrograms [55], and a similar approach but using three-dimensional spectrograms was also adopted [56]. A customized CNN incorporating RNN layers has also been proposed [57]. Finally, another customized CNN, but followed with a channel-wise global head-pooling, has been introduced [58].

On the other hand, although auto-regressive models are not commonly used in classification tasks, several proposals using this approach for SER have been presented recently. A model based on XLNet with bidirectional GRU and an attention mechanism has been proposed [59]. This model showed superior performance compared to BERT and the standard version of XLNet. Also, a generative framework for sentiment analysis designed to learn generic sentiment knowledge among different sentiment analysis subtasks was introduced [60]. The approach was evaluated on three different backbone architectures: BART, T5, and GPT-2. The results of the study indicated that BART outperformed the other two models. In addition, a trimodal approach using GPT for text, WaveRNN for audio, and FaceNet for images was used [61]. The results of this work indicate a superior accuracy of the text modality over the other two. However, the highest performance was achieved with the combination of the three modalities. A variational autoencoder combining RoBERTa as an encoder and the BART decoder for emotion recognition in conversation was developed [62]. In this approach, the decoder was used to train the utterance reconstruction model, which was shown to improve emotion recognition performance.

On the other hand, several studies using audio language models as the backbone of different architectures for SER have been published in recent years. For example, the output of Wav2Vec2 has been processed with CNNs, achieving improved accuracy over the base model in emotion recognition [63,64]. Similarly, the HuBERT and WavLM architectures were tested with different output blocks such as multilayer perceptron, CNN, or transformer [65]. Also, Wav2Vec2 features were fused with prosody features using a multi-headed attentional method [66,67]. Finally, a multi-modal autoencoder was proposed to combine features extracted with Wav2Vec2, BERT, and OpenFace [68].

Finally, several approaches that combine text and audio modalities have recently been presented [69]. Audio and text transformer features can be easily fused using attention-based methods to improve the overall SER accuracy. For example, Yi et al. [70] proposed a transformer encoder and dual modal cross-attention. Their approach achieved superior performance over existing models.

3. Materials and Methods

3.1. Dataset

There are dozens of public datasets that can be used for SER. According to a very recent systematic review on automatic speech emotion recognition [71], the most widely used of all available datasets is the Interactive Emotional Dyadic Motion Capture (IEMOCAP) [72]. In addition to its considerable popularity, this dataset has three key features that make it suitable for evaluating the models described in this paper. First, the dataset is divided into 5 sessions, with two different speakers in each session, which allows speaker-independent cross-validation of the models. Second, unlike other datasets that contain only a limited number of utterances spoken with different intonations, this dataset contains thousands of different utterances, allowing predictions based on text alone. Finally, it contains both audio and full transcriptions of these audio utterances, which makes it possible to study the effect of incorporating contextual information on model performance.

The dataset contains 12 h of recordings of two types of scenarios, emotional scripts and improvised scenarios. The dataset is divided into utterances, and each utterance is classified into one of the following emotion categories: neutral, happiness, sadness, anger, surprise, fear, disgust, frustration, excitement, and other. Most work on SER uses only four emotions: happiness, neutral, anger, and sadness. In our study, these four emotions are used to facilitate comparability of results with previous research.
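The session structure and the four-emotion subset described above lend themselves to a leave-one-session-out split. The following is a minimal sketch under stated assumptions: the tuple layout, the utterance identifiers, and the function name are hypothetical and do not reflect the actual file format of the dataset.

```python
from collections import defaultdict

TARGET_EMOTIONS = {"happiness", "neutral", "anger", "sadness"}


def leave_one_session_out(samples):
    """Yield (held_out_session, train_ids, val_ids) for every session.

    `samples` is an iterable of (utterance_id, session, emotion) tuples.
    Utterances outside the four target emotions are dropped.
    """
    by_session = defaultdict(list)
    for utt_id, session, emotion in samples:
        if emotion in TARGET_EMOTIONS:
            by_session[session].append(utt_id)
    for held_out in sorted(by_session):
        train = [u for s in sorted(by_session) if s != held_out for u in by_session[s]]
        yield held_out, train, list(by_session[held_out])


# Hypothetical toy data: three utterances per session, one with a discarded label.
toy = [
    (f"s{s}_u{i}", s, e)
    for s in range(1, 6)
    for i, e in enumerate(["neutral", "anger", "fear"])
]
folds = list(leave_one_session_out(toy))
for held_out, train, val in folds:
    print(held_out, len(train), len(val))
```

Because each session has its own pair of speakers, holding out a whole session keeps the validation speakers unseen during training.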

3.2. Language Models

A selection of architectures with a similar number of trainable parameters and computational complexity was made. Table 1 lists all the baseline architectures evaluated, along with the number of trainable parameters for each model and the number of giga-floating point operations (GFLOPs) required to process one utterance. Parameter count is a common proxy for model complexity, but it does not always correlate with actual computational cost. GFLOPs provide a more direct measure of the operations required during inference. For instance, ALBERT reduces parameter count through techniques such as cross-layer parameter sharing, yet maintains similar GFLOPs to BERT. This highlights the relevance of GFLOPs when comparing the efficiency of different architectures. A fixed length of 100 tokens for text and 10 s for audio was used for each utterance as approximately 99% of the text sequences and over 90% of the audio sequences fell within these limits. The difference in coverage percentages is justified by the heavier computational demands of audio processing and the unique characteristics of prosody in audio data, which enable the prediction of emotional content even in incomplete dialogue segments. The value of GFLOPs was estimated using the fvcore [73] library developed by Facebook AI Research (https://ai.meta.com/research/, accessed on 2 February 2025). Models listed with (encoder-only) next to their name are encoder-decoder models where only the encoder part is used. In such a case, the values in Table 1 refer to the part of the model used, not the entire architecture.
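The distinction between parameter count and computational cost can be made concrete with a back-of-the-envelope calculation. The sketch below is a rough approximation, not the fvcore measurement used for Table 1: it ignores embeddings, biases, layer normalization, and the attention-score computation, and it assumes BERT-base-like dimensions (12 layers, hidden size 768, feed-forward size 3072). The function names are hypothetical.

```python
def encoder_layer_weights(d_model: int, d_ff: int) -> int:
    """Weight count of one transformer encoder layer (biases and LayerNorm ignored)."""
    attention = 4 * d_model * d_model   # query, key, value and output projections
    feed_forward = 2 * d_model * d_ff   # two linear layers
    return attention + feed_forward


def encoder_stats(n_layers, d_model, d_ff, seq_len, share_layers):
    """Return (stored_weights, multiply_accumulates) for one input sequence."""
    per_layer = encoder_layer_weights(d_model, d_ff)
    stored = per_layer if share_layers else per_layer * n_layers
    # Every layer is still executed for every token, shared weights or not.
    macs = per_layer * n_layers * seq_len
    return stored, macs


# 100 tokens matches the fixed text length stated in the paper.
unshared = encoder_stats(12, 768, 3072, seq_len=100, share_layers=False)
shared = encoder_stats(12, 768, 3072, seq_len=100, share_layers=True)
for name, (weights, macs) in [("unshared", unshared), ("shared", shared)]:
    print(f"{name}: {weights / 1e6:.1f} M weights, {macs / 1e9:.2f} G multiply-accumulates")
```

Under these simplifications, sharing the weights across layers divides the stored weights by the number of layers while leaving the operation count unchanged, which is the effect described for ALBERT.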

Table 1.

Number of trainable parameters (millions) and GFLOPs for the baseline architectures used in the study.

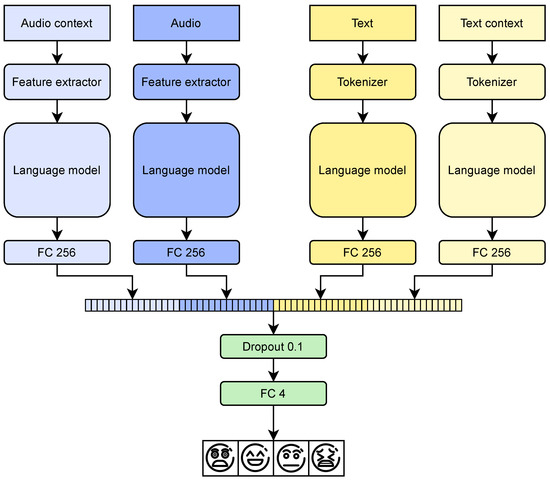

The baseline models were used as feature extractors. A 0.1 dropout layer was added to the output of the models to prevent overfitting, followed by a linear layer used as a classifier. The combination of different SLMs was also investigated. In this case, the output of each SLM was passed through a linear layer to equalize the output dimensionality of the different models before merging their features. The output features were then fused by concatenation. Figure 1 shows the proposed multimodal ensemble architecture, which represents the combination of four models. The same structure was followed when only two models were combined.
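The fusion step described above can be sketched in PyTorch as follows. This is an illustrative sketch, not the authors' implementation: the class name, the input feature sizes, and the placement of dropout after concatenation are assumptions, and the pooled feature vectors that each SLM would produce are replaced here by random tensors.

```python
import torch
from torch import nn


class FusionHead(nn.Module):
    """Project each model's features to a common size, concatenate, classify."""

    def __init__(self, feature_dims, common_dim=256, n_classes=4, p_drop=0.1):
        super().__init__()
        # One linear projection per backbone to equalize dimensionality.
        self.projections = nn.ModuleList(
            [nn.Linear(dim, common_dim) for dim in feature_dims]
        )
        self.dropout = nn.Dropout(p_drop)
        self.classifier = nn.Linear(common_dim * len(feature_dims), n_classes)

    def forward(self, features):
        projected = [proj(x) for proj, x in zip(self.projections, features)]
        fused = torch.cat(projected, dim=-1)  # fusion by concatenation
        return self.classifier(self.dropout(fused))


# Hypothetical feature sizes for four backbones and a batch of 12 utterances.
dims = [768, 768, 512, 512]
head = FusionHead(dims)
logits = head([torch.randn(12, d) for d in dims])
print(logits.shape)
```

The same head covers the two-model case by passing two feature sizes instead of four.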

Figure 1.

Multimodal ensemble architecture. Feature vectors are projected to a common dimension using a fully connected layer (FC 256), followed by a dropout layer with probability 0.1 (Dropout 0.1), and a final classification layer with four output units (FC 4).

3.3. Experimental Setup

Training and validation of the different models was done by 5-fold cross-validation, where 4 sessions in each fold were used for training and the remaining session was used for validation. All SLMs were initialized with the pre-trained weights available from Hugging Face (https://huggingface.co/, accessed on 12 January 2025). Given that these models are pre-trained, a low learning rate () was chosen. In addition, a small batch size of 12 was chosen and an early stopping mechanism was applied to prevent overfitting. This mechanism consisted of stopping the training process if there was no improvement in the validation unweighted accuracy (UA) after 3 epochs. Furthermore, a maximum of 20 epochs was set for the training duration. In addition, the loss function was weighted by class weights (inversely proportional to class size) to compensate for the effect of class imbalance of the IEMOCAP dataset.
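The class weighting and the early stopping rule described above can be sketched as follows. Two points are assumptions rather than details given in the text: the weights are normalized with the common "balanced" scheme, since the paper only states that they are inversely proportional to class size, and "no improvement after 3 epochs" is read as a patience of 3 epochs. The function names and the validation values are hypothetical.

```python
from collections import Counter


def class_weights(labels):
    """Weights inversely proportional to class frequency ("balanced" normalization)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {c: n_samples / (n_classes * counts[c]) for c in counts}


def early_stopping(ua_per_epoch, patience=3, max_epochs=20):
    """Return (best_epoch, stop_epoch), both 1-indexed.

    Training stops once `patience` epochs pass without a new best validation UA,
    or when `max_epochs` is reached.
    """
    best_ua, best_epoch = float("-inf"), 0
    last_epoch = 0
    for last_epoch, ua in enumerate(ua_per_epoch[:max_epochs], start=1):
        if ua > best_ua:
            best_ua, best_epoch = ua, last_epoch
        elif last_epoch - best_epoch >= patience:
            break
    return best_epoch, last_epoch


weights = class_weights(["neutral"] * 3 + ["anger"])
# Made-up validation UA values, one per epoch.
result = early_stopping([0.700, 0.720, 0.710, 0.715, 0.700, 0.690])
print(weights, result)
```

With a patience of 3, a run whose best epoch is the first one still trains for 4 epochs before stopping.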

The audio models were trained using 10-s sequences, with padding or truncation applied as necessary to adjust them to that duration. In the case of Whisper, which is configured to support only 30-s sequences by default, the positional embedding layer was truncated to work with 10-s sequences. This change was made to allow a fair comparison with the other architectures. However, this truncation was not strictly necessary, as Whisper could have been trained by padding the audio inputs with 20 s of silence and applying an attention mask to prevent the model from attending to these silent sections. This would hypothetically yield similar results to those obtained here, but at a significantly higher computational cost.
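Padding or truncating a waveform to a fixed duration, together with the corresponding attention mask, can be sketched as below. The 16 kHz sample rate, the zero padding value, and the function name are assumptions made for illustration; the paper does not state them.

```python
def pad_or_truncate(waveform, sample_rate=16_000, seconds=10.0, pad_value=0.0):
    """Return (samples, attention_mask), both of length sample_rate * seconds.

    The mask is 1 for real audio samples and 0 for padded positions.
    """
    target = int(sample_rate * seconds)
    clipped = list(waveform[:target])   # truncation if the clip is too long
    n_pad = target - len(clipped)       # zero when no padding is needed
    samples = clipped + [pad_value] * n_pad
    attention_mask = [1] * len(clipped) + [0] * n_pad
    return samples, attention_mask


# Tiny toy values (4 samples per "second") so the result is easy to inspect.
short_clip = pad_or_truncate([0.1, 0.2], sample_rate=4, seconds=1.0)
long_clip = pad_or_truncate([0.1] * 10, sample_rate=4, seconds=1.0)
print(short_clip)
print(long_clip)
```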

All individual and text ensemble models were trained on a workstation with an NVIDIA RTX 2070 Super GPU with 8 GB of VRAM, while the audio ensemble and multimodal models were trained on a workstation with an NVIDIA P100 GPU with 16 GB of VRAM.

The evaluation of the models was performed on the basis of weighted accuracy (WA) and UA, with the latter metric being used to select the best model. Different definitions of WA and UA exist in the literature, making it difficult to compare results. In this paper, one of the most widespread definitions is used, where WA is considered as the percentage of correct predictions, while UA is defined as the average of the recall metric for each class:

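$$\mathrm{WA} = \frac{\sum_{i=1}^{K} TP_i}{\sum_{i=1}^{K} \left( TP_i + FN_i \right)}, \qquad \mathrm{UA} = \frac{1}{K} \sum_{i=1}^{K} \frac{TP_i}{TP_i + FN_i}$$

where $K$ is the number of classes, $TP_i$ represents the true positives for class $i$, and $FN_i$ represents the false negatives for class $i$.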

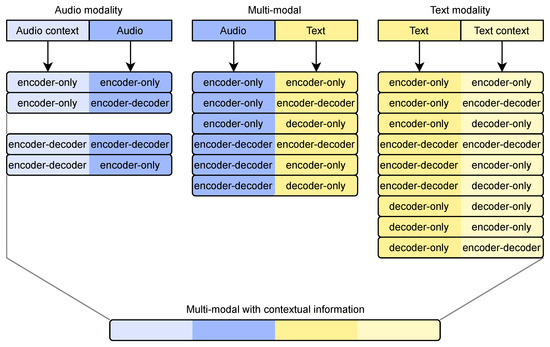
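The two metrics, as defined in the text (WA as the fraction of correct predictions, UA as the mean per-class recall), can be computed as in the following minimal sketch. The function name and the toy labels are hypothetical.

```python
def weighted_and_unweighted_accuracy(y_true, y_pred):
    """Return (WA, UA): overall accuracy and mean per-class recall."""
    classes = sorted(set(y_true))
    true_positives = {c: 0 for c in classes}
    support = {c: 0 for c in classes}   # TP + FN for each class
    for truth, prediction in zip(y_true, y_pred):
        support[truth] += 1
        if truth == prediction:
            true_positives[truth] += 1
    wa = sum(true_positives.values()) / len(y_true)
    ua = sum(true_positives[c] / support[c] for c in classes) / len(classes)
    return wa, ua


# Imbalanced toy case: always predicting the majority class.
y_true = ["neutral"] * 4 + ["sadness"]
y_pred = ["neutral"] * 5
wa, ua = weighted_and_unweighted_accuracy(y_true, y_pred)
print(wa, ua)  # 0.8 0.5
```

The toy case shows why UA is the more demanding metric on an imbalanced dataset: ignoring the minority class leaves WA at 0.8 but drops UA to 0.5.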

After training the baseline models, the model with the best validation UA in the text modality was selected for each category. In the audio modality, where no decoder-only model was used, the best encoder-only model and the best encoder-decoder model (using only the encoder part in this experiment) were selected. With the selected models, the effect of adding contextual information was investigated by combining different models or by using two copies of the same model, one for the utterance and one for the contextual information. In addition, the combination of models from different modalities was explored. Based on the results of this experiment, a multimodal model was assembled that included contextual information (see Figure 2). The decision to compare only models of different architectures and modalities was made for two main reasons. First, the number of combinations that would result from testing the 19 base models would be prohibitively large. Second, ensemble models often tend to perform better when combining features that are significantly different from each other, as diversity among classifiers has been shown to be a key factor in ensemble performance [74].

Figure 2.

Combinations of selected architectures from text and audio modalities, including models with and without contextual information, used to build and evaluate multimodal ensembles.
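Since context is defined in this paper as the immediately preceding utterance, the inputs for the context-aware models can be prepared by pairing each utterance with its predecessor. The sketch below rests on assumptions not stated in the text: the first utterance of a dialogue receives an empty context, and the predecessor is taken regardless of which speaker produced it. The function name and the example dialogue are hypothetical.

```python
def with_previous_utterance(dialogue, empty_context=""):
    """Pair each utterance with the one immediately before it in the dialogue."""
    pairs = []
    previous = empty_context   # assumed context for the first utterance
    for utterance in dialogue:
        pairs.append({"context": previous, "utterance": utterance})
        previous = utterance
    return pairs


dialogue = ["Did you get the letter?", "Yes.", "And?"]
pairs = with_previous_utterance(dialogue)
for pair in pairs:
    print(pair)
```

In a two-model ensemble, the `utterance` field would feed one model and the `context` field the other; the same pairing applies to audio by storing waveforms instead of strings.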

4. Results

4.1. Baseline Architectures

Table 2 shows the cross-validation results of the baseline architectures. The “Architecture” column refers to the original model architecture. Note, however, that in many encoder-decoder architectures only the encoder part was used. The best text classification results were obtained with the BERT model, closely followed by DeBERTa and RoBERTa. Among the encoder-decoder models, BART clearly stands out with 70.23% UA, while among the decoder-only models, OPT stands out with a UA of 69.73%. On the other hand, in the audio modality, Whisper achieves the highest UA, beating the second best model (Wav2Vec2) by more than 4 points and the best text model by more than 2 points.

Table 2.

Cross-validation results of the baseline models, showing the average accuracy over 5 folds.

4.2. Ensemble Models

Based on these results, the best model in each category was selected to investigate the effect of adding contextual information on SER accuracy. For each model, its performance was evaluated using a copy of the same model to process the contextual information, as well as using the other models of the same modality to process the contextual information (see Figure 2). Table 3 summarizes the results of the different combinations studied.

Table 3.

Cross-validation results for unimodal ensemble models using contextual information.

In all combinations analyzed, a significant improvement in accuracy was observed over the individual models. However, the combination of different models did not lead to an improvement compared to using the same architecture twice. In the text modality, accuracy improvements of more than 9 points were achieved in both WA and UA. Conversely, in the audio modality, the improvements were more modest. The use of two Wav2Vec2 models with contextual information only resulted in an increase in UA of about 2 points, while WA remained almost the same. On the other hand, the use of two Whisper models resulted in an increase of almost 4 points in both WA and UA.

In addition, the impact of combining text and audio models was evaluated to determine how the integration of different modalities affects overall performance, as shown in Table 4. The best multimodal classification results were obtained with the combination of Whisper and OPT. However, all the combinations studied achieved accuracies higher than those reported by the baseline models (see Table 2).

Table 4.

Cross-validation results for multimodal ensemble model without contextual information.

Considering that Whisper and OPT showed the best performance in both unimodal and multimodal ensemble architectures, these models were selected to build a multimodal ensemble architecture that supports contextual information according to the scheme shown in Figure 1. The ensemble model is composed of four SLMs, namely two Whisper models to process the audio with contextual information, and two OPT models to process the audio transcripts, also with contextual information.

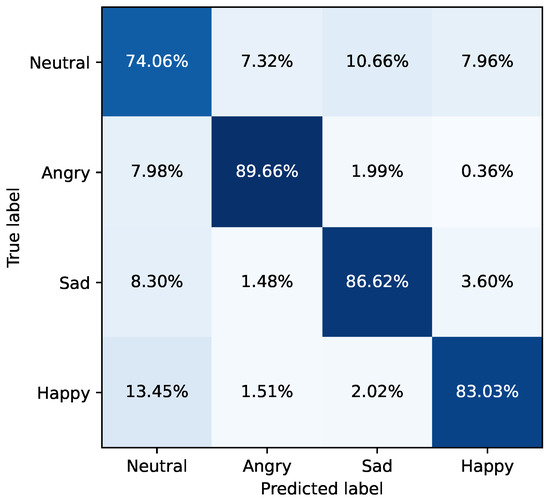

The proposed ensemble model showed a WA of 82.12% and a UA of 83.34%. Figure 3 shows the confusion matrix combined from the 5 folds, where the lowest accuracy corresponds to the neutral class (74.06%) and the highest to the angry class (89.66%). The model showed rapid convergence, reaching its maximum validation accuracy after an average of only 2.2 epochs. In addition, it achieved a WA of 80.11% and a UA of 81.68% after only one epoch. Furthermore, it is important to highlight the stability of the results: WA remained above 80% and UA above 81.5% during the first 4 epochs. Due to the early stopping criterion used, results are only available for the first 4 epochs in all 5 folds. However, in cases where training extended beyond this range, such as the fold that used session 1 as the validation set, where training stopped at epoch 7, both WA and UA metrics remained stable over the 7 epochs, with standard deviations of 1.4% for both metrics.

Figure 3.

Normalized confusion matrix for the final proposed ensemble model (Whisper + OPT with context) from 5-fold cross-validation.

To better understand the contribution of each component in the final model, we conducted an ablation study by progressively removing individual elements from the full ensemble (see Table 5). The results showed that removing the audio context led to a slight decrease in performance (UA dropped from 83.34% to 82.76%), while removing the text context caused a more pronounced decline (UA decreased to 81.11%). Excluding the entire text modality resulted in a significant drop in accuracy (UA fell to 76.31%). Similarly, eliminating both audio context and text context reduced performance considerably. These findings demonstrated that both modalities and their contextual information contributed meaningfully to the model’s effectiveness.

Table 5.

Ablation study of the proposed model. WA (%) and UA (%) after removing each component.

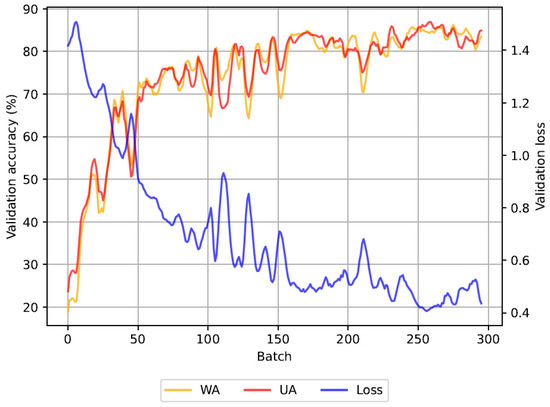

Figure 4 shows the learning curve of the proposed model during the first epoch, using session 5 as the validation set. The model achieved an accuracy of more than 80% after training with fewer than 150 batches, which represents 50% of the total dataset. Although some instability is observed at the beginning, both accuracy and loss tend to stabilize towards the end of the epoch.

Figure 4.

Learning curve of the proposed ensemble model (Whisper + OPT with context) during the first epoch.

4.3. Comparison with Previous Works

Table 6 compares the results of this work with those reported in previous studies. The comparison only includes works that performed a cross-validation similar to ours, which guarantees that the models were evaluated independently of the speaker. Some studies validate their results using a single session out of the five into which the dataset is divided, without cross-validation. While this ensures speaker independence, it does not allow for a fair comparison, because the choice of validation session can significantly affect accuracy: in our experiments, accuracy was significantly higher when session 2 was used as the validation set than when any other session was used. This variability highlights the importance of using a cross-validation strategy to ensure a more reliable and fair assessment.
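The session-based cross-validation discussed above can be sketched as a leave-one-session-out split. This is a minimal illustration under stated assumptions, not the code used in this study: the utterance identifiers, the `Utterance` type, and the function name are hypothetical.

```python
from typing import Iterator, List, Tuple

# Hypothetical utterance index: (utterance_id, session_number).
# IEMOCAP is divided into five sessions; holding out a whole session keeps
# the validation speakers out of the training set.
Utterance = Tuple[str, int]


def leave_one_session_out(
    utterances: List[Utterance], sessions: List[int]
) -> Iterator[Tuple[int, List[str], List[str]]]:
    """Yield (held_out_session, train_ids, val_ids), one fold per session."""
    for held_out in sessions:
        train_ids = [uid for uid, sess in utterances if sess != held_out]
        val_ids = [uid for uid, sess in utterances if sess == held_out]
        yield held_out, train_ids, val_ids


if __name__ == "__main__":
    # Toy index with three made-up utterances per session.
    toy = [(f"Ses{s:02d}_utt{k}", s) for s in range(1, 6) for k in range(3)]
    for held_out, train_ids, val_ids in leave_one_session_out(toy, [1, 2, 3, 4, 5]):
        print(f"validate on session {held_out}: "
              f"{len(train_ids)} train / {len(val_ids)} validation utterances")
```

Reporting the average over all five folds, rather than the score of one favourable fold, removes the dependence on which session happens to be held out.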

Table 6.

Comparison with previous research.

As shown in Table 6, while the proposed ensemble architecture outperforms several recent approaches, it falls below the current state-of-the-art. However, it is crucial to consider the methodology used during the training process when interpreting the results. In our approach, training was deliberately kept very short and no hyperparameter tuning was performed, in order to avoid overfitting.

In contrast, many of the current approaches use much longer training, sometimes without early stopping criteria, combined with exhaustive hyperparameter search. In this sense, three of the approaches with better WA and UA [82,91,93] performed meticulous hyperparameter tuning. In addition, another outstanding work [90], which like ours relied on an ensemble of pre-trained models, trained for 100 epochs and reported the results of the best epoch. Similarly, the other approach with high WA and UA [92] trained for 200 epochs with a scheduled learning rate decay but without early stopping. While these procedures are valid and common in the literature, they can sometimes produce better results on a given benchmark at the cost of reducing the model’s ability to generalize to new data.

On the other hand, the fast convergence of our proposal is a notable advantage over other recent approaches. Some architectures outperform our model by resorting to data augmentation techniques, without which their accuracy drops by more than 5 points [93]. In contrast, our model is able to generalize after processing only half of the dataset once.

5. Discussion

Speech emotion recognition is a complex task that is usually addressed with purpose-built models, which often combine different feature extraction methods or rely on complex attention mechanisms. The most common approach, as evidenced by the works reviewed in Section 2.2, employs one or more SLMs as a backbone and then optimizes them by means of various strategies such as exhaustive hyperparameter tuning [52], self-labeling [76], advanced attention mechanisms [75], or customized loss functions [85].

In this paper, we have proposed a different approach. We fine-tuned 19 different general-purpose SLMs for a limited number of epochs, adding only a dropout layer and a linear layer to perform classification. We deliberately refrained from data augmentation, exhaustive hyperparameter tuning, or any other technique that could improve the accuracy of the analyzed models. Given that the architectures analyzed are typically used as the backbone of more complex models, our experiments aimed to establish a baseline that can serve as a reference for future research, providing valuable information about the architectures and their combinations that yield the best performance with minimal modifications.

The classification results of the baseline models highlight the importance of the choice of architecture for SER, as differences of more than 5 points were obtained between different architectures, despite having a similar number of trainable parameters. Encoder-only models are usually considered the most suitable for classification tasks and are the most widely used in the literature. In our study, these models were the most accurate for text classification. However, BART (encoder-decoder) and OPT (decoder-only) reported accuracies similar to those of the encoder-only architectures. Furthermore, when used in ensemble architectures with contextual information, BART and OPT slightly outperformed BERT.

The “Neutral” class was the most frequently misclassified across both text and audio models, likely because neutral utterances often lack strong prosodic variations or distinctive lexical markers that facilitate the recognition of other emotions. Additionally, in a subject-independent evaluation, the neutral tone of one speaker may resemble the slightly emotive tone of another, increasing classification difficulty. This pattern aligns with prior studies on the same dataset, where neutral is also the worst-classified class [82,83,91,92,95,96,97].

In the case of audio models, although encoder-only models are the most commonly used in the literature, the experiments conducted showed that Whisper, an encoder-decoder model (only the encoder part was used in this work), produced the best classification results. This is particularly remarkable considering that Whisper is the architecture with the lowest number of trainable parameters and the lowest computational complexity. These results challenge the widely accepted notion that encoder-only models are the best performers in classification tasks, and invite reflection on the potential of other types of architectures.

The observed performance differences across models highlight the significant influence of pre-training data and objectives alongside architectural design. For instance, Whisper outperforms other audio models like Wav2Vec2 despite having fewer parameters and lower complexity, which likely reflects its extensive pre-training on a much larger and more diverse dataset. Similarly, among text models sharing similar architectures, variations in accuracy (e.g., OPT vs. GPT-2) suggest that pre-training corpus size and diversity, as well as the specific tasks used during pre-training, critically affect the models’ capacity to capture relevant emotional cues. Given that large-scale pre-training requires vast computational and data resources often unavailable to most researchers, our analysis considers architecture and pre-training jointly. This approach better reflects practical conditions and the overall impact on SER performance. The importance of dataset size and diversity in pre-training has been highlighted in several studies [39], where increased data scale yields substantial improvements beyond architectural changes.

On the other hand, combining models into ensembles, either to add contextual information or to combine modalities, was found to significantly increase the accuracy of SER. In this work, contextual information was incorporated by combining two models, sometimes using the same architecture twice, although it could have been done with a single model. This choice was made for two main reasons. First, using two models makes it possible to combine different architectures and evaluate their performance, while an ensemble composed of two copies of the same architecture provides a fair point of comparison. Second, the number of GFLOPs at inference of an ensemble model built with two copies of a model is practically the same as if a single model were used to process a sequence twice as long.
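The second reason can be checked with a back-of-the-envelope estimate. The sketch below relies on a common approximation for the forward pass of one transformer layer, 24·n·d² + 4·n²·d FLOPs for sequence length n and hidden size d, assuming a feed-forward block of width 4d and counting a multiply-add as 2 FLOPs. It ignores embeddings, normalization, softmax, and any convolutional front end, and the values n = 128, d = 768, and 12 layers are illustrative assumptions rather than the configurations measured in this work.

```python
from typing import Tuple


def layer_flops(seq_len: int, hidden: int) -> int:
    """Approximate forward FLOPs of one transformer layer.

    24*n*d^2 covers the Q/K/V/output projections and a 4d-wide feed-forward
    block; 4*n^2*d covers the attention scores and the weighted sum of values.
    """
    return 24 * seq_len * hidden**2 + 4 * seq_len**2 * hidden


def model_flops(seq_len: int, hidden: int, layers: int) -> int:
    """Approximate forward FLOPs of a stack of identical layers."""
    return layers * layer_flops(seq_len, hidden)


def ensemble_vs_single(seq_len: int, hidden: int, layers: int) -> Tuple[int, int, float]:
    """Compare two copies at length n with one model at length 2n."""
    two_copies = 2 * model_flops(seq_len, hidden, layers)
    one_long = model_flops(2 * seq_len, hidden, layers)
    return two_copies, one_long, one_long / two_copies


if __name__ == "__main__":
    two_copies, one_long, ratio = ensemble_vs_single(seq_len=128, hidden=768, layers=12)
    print(f"two copies at n:  {two_copies / 1e9:.2f} GFLOPs")
    print(f"one model at 2n:  {one_long / 1e9:.2f} GFLOPs")
    print(f"ratio:            {ratio:.3f}")
```

Under these assumptions the single model processing the doubled sequence costs just under 3% more than the two-copy ensemble. Only the attention term is quadratic in n, so the gap widens for longer sequences.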

Finally, the combination of contextual and multimodal information produced the best classification results, outperforming many recent proposals. Although it falls below other approaches (e.g., [82,93]), the results obtained are particularly valuable because they were achieved without resorting to complex classifiers or advanced feature fusion techniques. The fact that these results were obtained by simply concatenating the feature vectors and using only a linear layer as the classification head suggests that significantly better results could potentially be obtained if more advanced fusion techniques were employed. For example, a recent work proposed a feature fusion module based on multi-head cross-attention with two auxiliary tasks that significantly increased the accuracy of an ensemble model combining Wav2Vec2 and BERT compared to feature fusion by concatenation [81]. Although our results do not surpass the state-of-the-art, it is important to note that this work is not focused on achieving maximum accuracy, but on the backbone architectures and their possible combinations. By employing a minimalist setup with a simple linear classifier, we demonstrate the great potential of these architectures. The simplicity of our approach highlights their adaptability and efficiency, making them ideal candidates for integration into more sophisticated models with advanced classifiers.
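To make the fusion step concrete, the following plain-Python sketch concatenates several feature vectors and passes the result through a single linear layer, then picks the class with the highest logit. It is a toy illustration only: the feature dimensions, the random weights, the number of classes, and the function names are assumptions, and the dropout layer is omitted because it is inactive at inference.

```python
import random
from typing import List, Sequence

Vector = List[float]


def concat(features: Sequence[Vector]) -> Vector:
    """Fusion by concatenation: join the per-model feature vectors end to end."""
    fused: Vector = []
    for vec in features:
        fused.extend(vec)
    return fused


def linear(x: Vector, weight: List[Vector], bias: Vector) -> Vector:
    """Single linear layer: one row of weights (plus a bias) per output class."""
    return [
        sum(w_i * x_i for w_i, x_i in zip(row, x)) + b
        for row, b in zip(weight, bias)
    ]


def classify(features: Sequence[Vector], weight: List[Vector], bias: Vector) -> int:
    """Return the index of the class with the highest logit."""
    logits = linear(concat(features), weight, bias)
    return max(range(len(logits)), key=logits.__getitem__)


if __name__ == "__main__":
    rng = random.Random(0)
    # Toy stand-ins for pooled backbone outputs (e.g., audio, audio context,
    # text, text context); real feature vectors are far larger.
    dims = [4, 4, 3, 3]
    features = [[rng.uniform(-1, 1) for _ in range(d)] for d in dims]
    num_classes = 4  # assumed number of emotion classes
    weight = [[rng.uniform(-1, 1) for _ in range(sum(dims))] for _ in range(num_classes)]
    bias = [0.0] * num_classes
    print("fused length:", len(concat(features)))
    print("predicted class index:", classify(features, weight, bias))
```

The classification head has no interaction terms between modalities beyond what a single weight matrix can express, which is why more elaborate fusion modules leave room for improvement.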

It may also be of interest for future work to perform feature fusion at an intermediate point in the SLM, which has been shown in other emotion classification tasks to improve performance without drastically increasing model complexity [99].

In addition, the rapid convergence of the proposed architecture is a major advantage over other approaches, as it allows the model to be retrained quickly on new data, enabling its deployment in dynamic environments.

Limitations

An important limitation of this study is that the baseline models used were pre-trained on different datasets, which means that the comparison between different models is not completely fair. However, it is important to note that pre-training this type of model requires a large amount of computational resources due to the enormous size of the datasets used. This makes pre-training these architectures unaffordable for many researchers. For this reason, it is common to consider the model and its pre-training as a whole when making comparisons.

Another limitation is the size of the models used, which, although considered small for transformer-based models, still have a significant number of parameters. They are therefore heavier than many of the models used in SER. However, it is important to note that all the ensemble models analyzed can be trained on a single GPU with 16 GB of VRAM, using a batch size of 12 and float32 precision. It would even be possible to train them on more modest hardware, using a reduced batch size with gradient accumulation, or using float16 precision.
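The reason gradient accumulation can stand in for a larger batch is that, for a loss averaged over samples, the mean of the gradients of equal-size micro-batches equals the gradient of the full batch. The sketch below verifies this on a one-parameter least-squares model in plain Python. The model, the data, and the split into micro-batches of 3 (four accumulation steps for an effective batch of 12) are illustrative assumptions, not the training code of this study.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (input x, target y)


def mse_grad(w: float, batch: List[Sample]) -> float:
    """Gradient of the mean squared error of y ~ w * x with respect to w."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)


def accumulated_grad(w: float, batch: List[Sample], micro_size: int) -> float:
    """Average the gradients of equal-size micro-batches, one at a time."""
    assert len(batch) % micro_size == 0, "micro-batches must be the same size"
    steps = len(batch) // micro_size
    grad = 0.0
    for k in range(steps):
        micro = batch[k * micro_size:(k + 1) * micro_size]
        grad += mse_grad(w, micro) / steps  # scale so the running sum is a mean
    return grad


if __name__ == "__main__":
    data = [(float(i), 2.0 * i + 1.0) for i in range(12)]
    print("full batch of 12:    ", mse_grad(0.5, data))
    print("4 micro-batches of 3:", accumulated_grad(0.5, data, micro_size=3))
```

Only one micro-batch needs to be held in memory at a time, so peak memory follows the micro-batch size while the optimizer step still reflects the full batch.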

In addition, the evaluation of the models on a single dataset is another limitation. However, IEMOCAP is probably the most widely used dataset in SER and, unlike other popular datasets, it contains full transcripts of all audio recordings. This makes it easier to assess the impact of adding multimodal contextual information without relying on automatic speech recognition tools, which could alter the results in favor of one of the architectures. In light of the above considerations, we believe that evaluating the models on a single dataset is justified.

6. Conclusions

The aim of this paper was to evaluate the effectiveness of different general-purpose SLMs for the SER task using a consistent methodology, as well as to study the impact of adding contextual information and combining modalities on the performance of the models. The experiments conducted have confirmed the suitability of these types of architectures for the proposed task. The results show a significant increase in accuracy when combining audio and text models, as well as when combining models of the same modality to process context. In particular, models such as Whisper and OPT, which have been used much less frequently in the literature than encoder-only models, showed superior performance in this study. This finding challenges the prevailing notion that encoder-only architectures are always the best choice for classification tasks such as SER.

Although the proposed solution is not state-of-the-art, it was not designed to maximize the performance of a model on a specific problem, but rather to demonstrate the potential of SLMs in SER and to provide a useful starting point for future research. The proposed approach can be of great benefit to researchers and developers due to its ease of implementation and fast convergence. The evaluated architectures can easily be used as the backbone of more complex models. Future research could explore the use of these architectures together with fusion techniques based on attention mechanisms, advanced loss functions, or novel optimizers, which could substantially improve their performance. Considering that the analyzed models are not specifically designed for emotion recognition, our findings may be useful for future research in other NLP areas beyond SER.

Author Contributions

Conceptualization, J.L.G.-S. and F.L.d.l.R.; methodology, J.L.G.-S.; software, J.L.G.-S.; validation, F.L.d.l.R., D.S.-R., R.S.-R. and A.F.-C.; writing—original draft preparation, J.L.G.-S.; writing—review and editing, A.F.-C.; funding acquisition, A.F.-C. All authors have read and agreed to the published version of the manuscript.

Funding

Grant PID2023-149753OB-C21 funded by Spanish MCIU/AEI/10.13039/501100011033/ERDF, EU. Grant 2022-GRIN-34436 funded by Universidad de Castilla-La Mancha and by “ERDF A way to make Europe”. Grant BES-2021-097834 funded by MICIU/AEI/10.13039/501100011033 and by “ESF Investing in your future”. Grant 2023-PRED-21291 funded by Universidad de Castilla-La Mancha and by “ESF Investing in your future”. This work was also partially supported by Programa Iberoamericano de Ciencia y Tecnología para el Desarrollo (CYTED) (through Red [225RT0169]) and by CIBERSAM of the Instituto de Salud Carlos III, MICIU.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The IEMOCAP dataset can be requested from the authors at https://sail.usc.edu/iemocap/iemocap_release.htm, accessed on 12 January 2025. The source code used in this study will be made available upon request to the corresponding author.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

- Majkowski, A.; Kołodziej, M. Emotion Recognition from Speech in a Subject-Independent Approach. Appl. Sci. 2025, 15, 6958. [Google Scholar] [CrossRef]

- Thiripurasundari, D.; Bhangale, K.; Aashritha, V.; Mondreti, S.; Kothandaraman, M. Speech emotion recognition for human–computer interaction. Int. J. Speech Technol. 2024, 27, 817–830. [Google Scholar] [CrossRef]

- Grágeda, N.; Busso, C.; Alvarado, E.; García, R.; Mahu, R.; Huenupan, F.; Yoma, N.B. Speech emotion recognition in real static and dynamic human-robot interaction scenarios. Comput. Speech Lang. 2025, 89, 101666. [Google Scholar] [CrossRef]

- Sigona, F.; Radicioni, D.P.; Gili Fivela, B.; Colla, D.; Delsanto, M.; Mensa, E.; Bolioli, A.; Vigorelli, P. A computational analysis of transcribed speech of people living with dementia: The Anchise 2022 Corpus. Comput. Speech Lang. 2025, 89, 101691. [Google Scholar] [CrossRef]

- Wang, Y.; Pan, K.; Shao, Y.; Ma, J.; Li, X. Applying a Convolutional Vision Transformer for Emotion Recognition in Children with Autism: Fusion of Facial Expressions and Speech Features. Appl. Sci. 2025, 15, 3083. [Google Scholar] [CrossRef]

- Xie, Y.; Yang, L.; Zhang, M.; Chen, S.; Li, J. A Review of Multimodal Interaction in Remote Education: Technologies, Applications, and Challenges. Appl. Sci. 2025, 15, 3937. [Google Scholar] [CrossRef]

- Vyakaranam, A.; Maul, T.; Ramayah, B. A review on speech emotion recognition for late deafened educators in online education. Int. J. Speech Technol. 2024, 27, 29–52. [Google Scholar] [CrossRef]

- Ülgen Sönmez, Y.; Varol, A. In-depth investigation of speech emotion recognition studies from past to present –The importance of emotion recognition from speech signal for AI–. Intell. Syst. Appl. 2024, 22, 200351. [Google Scholar] [CrossRef]

- Hazmoune, S.; Bougamouza, F. Using transformers for multimodal emotion recognition: Taxonomies and state of the art review. Eng. Appl. Artif. Intell. 2024, 133, 108339. [Google Scholar] [CrossRef]

- Mares, A.; Diaz-Arango, G.; Perez-Jacome-Friscione, J.; Vazquez-Leal, H.; Hernandez-Martinez, L.; Huerta-Chua, J.; Jaramillo-Alvarado, A.F.; Dominguez-Chavez, A. Advancing Spanish Speech Emotion Recognition: A Comprehensive Benchmark of Pre-Trained Models. Appl. Sci. 2025, 15, 4340. [Google Scholar] [CrossRef]

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv 2018, arXiv:1810.04805. [Google Scholar] [CrossRef]

- Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving Language Understanding by Generative Pre-Training. 2018. Available online: https://cdn.openai.com/research-covers/language-unsupervised/language_understanding_paper.pdf (accessed on 15 December 2024).

- Raiaan, M.A.K.; Mukta, M.S.H.; Fatema, K.; Fahad, N.M.; Sakib, S.; Mim, M.M.J.; Ahmad, J.; Ali, M.E.; Azam, S. A Review on Large Language Models: Architectures, Applications, Taxonomies, Open Issues and Challenges. IEEE Access 2024, 12, 26839–26874. [Google Scholar] [CrossRef]

- Zhu, X.; Li, J.; Liu, Y.; Ma, C.; Wang, W. Distilling mathematical reasoning capabilities into Small Language Models. Neural Netw. 2024, 179, 106594. [Google Scholar] [CrossRef]

- Roh, J.; Kim, M.; Bae, K. Towards a small language model powered chain-of-reasoning for open-domain question answering. ETRI J. 2024, 46, 11–21. [Google Scholar] [CrossRef]

- Jovanović, M.; Campbell, M. Compacting AI: In Search of the Small Language Model. Computer 2024, 57, 96–100. [Google Scholar] [CrossRef]

- Oralbekova, D.; Mamyrbayev, O.; Othman, M.; Kassymova, D.; Mukhsina, K. Contemporary Approaches in Evolving Language Models. Appl. Sci. 2023, 13, 12901. [Google Scholar] [CrossRef]

- Zafrir, O.; Boudoukh, G.; Izsak, P.; Wasserblat, M. Q8BERT: Quantized 8Bit BERT. In Proceedings of the Fifth Workshop on Energy Efficient Machine and Cognitive Computing-NeurIPS Edition (EMC2-NIPS), Vancouver, BC, Canada, 13 December 2019; pp. 36–39. [Google Scholar] [CrossRef]

- Goswami, J.; Prajapati, K.K.; Saha, A.; Saha, A.K. Parameter-efficient fine-tuning large language model approach for hospital discharge paper summarization. Appl. Soft Comput. 2024, 157, 111531. [Google Scholar] [CrossRef]

- Pan, B.; Hirota, K.; Jia, Z.; Dai, Y. A review of multimodal emotion recognition from datasets, preprocessing, features, and fusion methods. Neurocomputing 2023, 561, 126866. [Google Scholar] [CrossRef]

- Atmaja, B.T.; Sasou, A.; Akagi, M. Survey on bimodal speech emotion recognition from acoustic and linguistic information fusion. Speech Commun. 2022, 140, 11–28. [Google Scholar] [CrossRef]

- Gan, C.; Zheng, J.; Zhu, Q.; Cao, Y.; Zhu, Y. A survey of dialogic emotion analysis: Developments, approaches and perspectives. Pattern Recognit. 2024, 156, 110794. [Google Scholar] [CrossRef]

- Baevski, A.; Zhou, H.; Mohamed, A.; Auli, M. Wav2vec 2.0: A Framework for Self-Supervised Learning of Speech Representations. arXiv 2020, arXiv:2006.11477. [Google Scholar] [CrossRef]

- Qian, T.; Xie, A.; Bruckmann, C. Sensitivity Analysis on Transferred Neural Architectures of BERT and GPT-2 for Financial Sentiment Analysis. arXiv 2022, arXiv:2207.03037. [Google Scholar] [CrossRef]

- Dhiman, P.; Kaur, A.; Gupta, D.; Juneja, S.; Nauman, A.; Muhammad, G. GBERT: A hybrid deep learning model based on GPT-BERT for fake news detection. Heliyon 2024, 10, e35865. [Google Scholar] [CrossRef]

- Devathasan, K.; Arony, N.N.; Gama, K.; Damian, D. Deciphering Empathy in Developer Responses: A Hybrid Approach Utilizing the Perception Action Model and Automated Classification. In Proceedings of the IEEE 32nd International Requirements Engineering Conference Workshops (REW), Reykjavik, Iceland, 24–25 June 2024; pp. 88–94. [Google Scholar] [CrossRef]

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A Robustly Optimized BERT Pretraining Approach. arXiv 2019, arXiv:1907.11692. [Google Scholar] [CrossRef]

- Sun, Y.; Wang, S.; Li, Y.; Feng, S.; Tian, H.; Wu, H.; Wang, H. ERNIE 2.0: A Continual Pre-training Framework for Language Understanding. arXiv 2019, arXiv:1907.12412. [Google Scholar] [CrossRef]

- Lan, Z.; Chen, M.; Goodman, S.; Gimpel, K.; Sharma, P.; Soricut, R. ALBERT: A Lite BERT for Self-supervised Learning of Language Representations. arXiv 2019, arXiv:1909.11942. [Google Scholar] [CrossRef]

- He, P.; Gao, J.; Chen, W. DeBERTaV3: Improving DeBERTa using ELECTRA-Style Pre-Training with Gradient-Disentangled Embedding Sharing. arXiv 2021, arXiv:2111.09543. [Google Scholar] [CrossRef]

- Hsu, W.N.; Bolte, B.; Tsai, Y.H.H.; Lakhotia, K.; Salakhutdinov, R.; Mohamed, A. HuBERT: Self-Supervised Speech Representation Learning by Masked Prediction of Hidden Units. arXiv 2021, arXiv:2106.07447. [Google Scholar] [CrossRef]

- Chen, S.; Wang, C.; Chen, Z.; Wu, Y.; Liu, S.; Chen, Z.; Li, J.; Kanda, N.; Yoshioka, T.; Xiao, X.; et al. WavLM: Large-Scale Self-Supervised Pre-Training for Full Stack Speech Processing. arXiv 2021, arXiv:2110.13900. [Google Scholar] [CrossRef]

- Wu, F.; Kim, K.; Pan, J.; Han, K.; Weinberger, K.Q.; Artzi, Y. Performance-Efficiency Trade-offs in Unsupervised Pre-training for Speech Recognition. arXiv 2021, arXiv:2109.06870. [Google Scholar] [CrossRef]

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language Models Are Unsupervised Multitask Learners. 2019. Available online: https://cdn.openai.com/better-language-models/language_models_are_unsupervised_multitask_learners.pdf (accessed on 15 December 2024).

- Black, S.; Gao, L.; Wang, P.; Leahy, C.; Biderman, S. GPT-Neo: Large Scale Autoregressive Language Modeling with Meshtensorflow; Version v1.1.1; Zenodo: Geneva, Switzerland, 2021. [Google Scholar] [CrossRef]

- Biderman, S.; Schoelkopf, H.; Anthony, Q.; Bradley, H.; O’Brien, K.; Hallahan, E.; Khan, M.A.; Purohit, S.; Prashanth, U.S.; Raff, E.; et al. Pythia: A Suite for Analyzing Large Language Models Across Training and Scaling. arXiv 2023, arXiv:2304.01373. [Google Scholar] [CrossRef]

- Zhang, S.; Roller, S.; Goyal, N.; Artetxe, M.; Chen, M.; Chen, S.; Dewan, C.; Diab, M.; Li, X.; Lin, X.V.; et al. OPT: Open Pre-trained Transformer Language Models. arXiv 2022, arXiv:2205.01068. [Google Scholar] [CrossRef]

- Yang, Z.; Dai, Z.; Yang, Y.; Carbonell, J.; Salakhutdinov, R.; Le, Q.V. XLNet: Generalized Autoregressive Pretraining for Language Understanding. arXiv 2019, arXiv:1906.08237. [Google Scholar] [CrossRef]

- Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer. arXiv 2019, arXiv:1910.10683. [Google Scholar] [CrossRef]

- Lewis, M.; Liu, Y.; Goyal, N.; Ghazvininejad, M.; Mohamed, A.; Levy, O.; Stoyanov, V.; Zettlemoyer, L. BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and Comprehension. arXiv 2019, arXiv:1910.13461. [Google Scholar] [CrossRef]

- Ghadimi, A.; Beigy, H. Hybrid multi-document summarization using pre-trained language models. Expert Syst. Appl. 2022, 192, 116292. [Google Scholar] [CrossRef]

- Dharrao, D.; Mishra, M.; Kazi, A.; Pangavhane, M.; Pise, P.; Bongale, A.M. Summarizing Business News: Evaluating BART, T5, and PEGASUS for Effective Information Extraction. Rev. Intell. Artif. 2024, 38, 847–855. [Google Scholar] [CrossRef]

- Rahman, A.B.S.; Ta, H.T.; Najjar, L.; Azadmanesh, A.; Gönul, A.S. DepressionEmo: A novel dataset for multilabel classification of depression emotions. J. Affect. Disord. 2024, 366, 445–458. [Google Scholar] [CrossRef]

- Ao, J.; Wang, R.; Zhou, L.; Wang, C.; Ren, S.; Wu, Y.; Liu, S.; Ko, T.; Li, Q.; Zhang, Y.; et al. SpeechT5: Unified-Modal Encoder-Decoder Pre-Training for Spoken Language Processing. arXiv 2021, arXiv:2110.07205. [Google Scholar] [CrossRef]

- Wang, C.; Tang, Y.; Ma, X.; Wu, A.; Popuri, S.; Okhonko, D.; Pino, J. fairseq S2T: Fast Speech-to-Text Modeling with fairseq. arXiv 2020, arXiv:2010.05171. [Google Scholar] [CrossRef]

- Radford, A.; Kim, J.W.; Xu, T.; Brockman, G.; McLeavey, C.; Sutskever, I. Robust Speech Recognition via Large-Scale Weak Supervision. arXiv 2022, arXiv:2212.04356. [Google Scholar] [CrossRef]

- Zou, S.; Huang, X.; Shen, X.; Liu, H. Improving multimodal fusion with Main Modal Transformer for emotion recognition in conversation. Knowl.-Based Syst. 2022, 258, 109978. [Google Scholar] [CrossRef]

- Ho, N.H.; Yang, H.J.; Kim, S.H.; Lee, G. Multimodal Approach of Speech Emotion Recognition Using Multi-Level Multi-Head Fusion Attention-Based Recurrent Neural Network. IEEE Access 2020, 8, 61672–61686. [Google Scholar] [CrossRef]

- Pepino, L.; Riera, P.; Ferrer, L.; Gravano, A. Fusion Approaches for Emotion Recognition from Speech Using Acoustic and Text-Based Features. In Proceedings of the ICASSP 2020—2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 6484–6488. [Google Scholar] [CrossRef]

- Kumar, P.; Kaushik, V.; Raman, B. Towards the Explainability of Multimodal Speech Emotion Recognition. In Proceedings of the 22nd Annual Conference of the International Speech Communication Association, Interspeech 2021, Brno, Czech Republic, 30 August–3 September 2021; pp. 1748–1752. [Google Scholar] [CrossRef]

- Setyono, J.C.; Zahra, A. Data augmentation and enhancement for multimodal speech emotion recognition. Bul. Electr. Eng. Inform. 2023, 12, 3008–3015. [Google Scholar] [CrossRef]

- Li, Z.; Zhang, G.; Okada, S.; Wang, L.; Zhao, B.; Dang, J. MBCFNet: A Multimodal Brain–Computer Fusion Network for human intention recognition. Knowl.-Based Syst. 2024, 296, 111826. [Google Scholar] [CrossRef]

- Furutani, J.; Kang, X.; Kiuchi, K.; Nishimura, R.; Sasayama, M.; Matsumoto, K. Learning a Bimodal Emotion Recognition System Based on Small Amount of Speech Data. In Proceedings of the 8th International Conference on Systems and Informatics (ICSAI), Kunming, China, 10–12 December 2022; Volume E602, pp. 1–5. [Google Scholar] [CrossRef]

- Triantafyllopoulos, A.; Reichel, U.; Liu, S.; Huber, S.; Eyben, F.; Schuller, B.W. Multistage linguistic conditioning of convolutional layers for speech emotion recognition. Front. Comput. Sci. 2023, 5, 107247. [Google Scholar] [CrossRef]

- Tan, L.; Yu, K.; Lin, L.; Cheng, X.; Srivastava, G.; Lin, J.C.W.; Wei, W. Speech Emotion Recognition Enhanced Traffic Efficiency Solution for Autonomous Vehicles in a 5G-Enabled Space–Air–Ground Integrated Intelligent Transportation System. IEEE Trans. Intell. Transp. Syst. 2022, 23, 2830–2842. [Google Scholar] [CrossRef]

- Asiya, U.A.; Kiran, V.K. A Novel Multimodal Speech Emotion Recognition System. In Proceedings of the Third International Conference on Intelligent Computing Instrumentation and Control Technologies (ICICICT), Kannur, India, 11–12 August 2022; pp. 327–332. [Google Scholar] [CrossRef]

- Braunschweiler, N.; Doddipatla, R.; Keizer, S.; Stoyanchev, S. Factors in Emotion Recognition With Deep Learning Models Using Speech and Text on Multiple Corpora. IEEE Signal Process. Lett. 2022, 29, 722–726. [Google Scholar] [CrossRef]

- Chauhan, K.; Sharma, K.K.; Varma, T. Multimodal Emotion Recognition Using Contextualized Audio Information and Ground Transcripts on Multiple Datasets. Arab. J. Sci. Eng. 2023, 49, 11871–11881. [Google Scholar] [CrossRef]

- Han, T.; Zhang, Z.; Ren, M.; Dong, C.; Jiang, X.; Zhuang, Q. Text Emotion Recognition Based on XLNet-BiGRU-Att. Electronics 2023, 12, 2704. [Google Scholar] [CrossRef]

- Li, Z.; Lin, T.E.; Wu, Y.; Liu, M.; Tang, F.; Zhao, M.; Li, Y. UniSA: Unified Generative Framework for Sentiment Analysis. In Proceedings of the MM ’23: 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; Volume 30, pp. 6132–6142. [Google Scholar] [CrossRef]

- Xie, B.; Sidulova, M.; Park, C.H. Robust Multimodal Emotion Recognition from Conversation with Transformer-Based Crossmodality Fusion. Sensors 2021, 21, 4913. [Google Scholar] [CrossRef] [PubMed]

- Yang, K.; Zhang, T.; Ananiadou, S. Disentangled Variational Autoencoder for Emotion Recognition in Conversations. IEEE Trans. Affect. Comput. 2024, 15, 508–518. [Google Scholar] [CrossRef]

- Sun, C.; Zhou, Y.; Huang, X.; Yang, J.; Hou, X. Combining wav2vec 2.0 Fine-Tuning and ConLearnNet for Speech Emotion Recognition. Electronics 2024, 13, 1103. [Google Scholar] [CrossRef]

- Jo, J.; Kim, S.K.; Yoon, Y.-c. Text and Sound-Based Feature Extraction and Speech Emotion Classification for Korean. Int. J. Adv. Sci. Eng. Inf. Technol. 2024, 14, 873–879. [Google Scholar] [CrossRef]

- Pastor, M.A.; Ribas, D.; Ortega, A.; Miguel, A.; Lleida, E. Cross-Corpus Training Strategy for Speech Emotion Recognition Using Self-Supervised Representations. Appl. Sci. 2023, 13, 9062. [Google Scholar] [CrossRef]

- Naderi, N.; Nasersharif, B. Cross Corpus Speech Emotion Recognition using transfer learning and attention-based fusion of Wav2Vec2 and prosody features. Knowl.-Based Syst. 2023, 277, 110814. [Google Scholar] [CrossRef]

- Bautista, J.L.; Shin, H.S. Speech Emotion Recognition Model Based on Joint Modeling of Discrete and Dimensional Emotion Representation. Appl. Sci 2025, 15, 623. [Google Scholar] [CrossRef]

- Gladys A., A.; Vetriselvi, V. Sentiment analysis on a low-resource language dataset using multimodal representation learning and cross-lingual transfer learning. Appl. Soft Comput. 2024, 157, 111553. [Google Scholar] [CrossRef]

- O’Shaughnessy, D. Review of Automatic Estimation of Emotions in Speech. Appl. Sci. 2025, 15, 5731. [Google Scholar] [CrossRef]

- Yi, M.H.; Kwak, K.C.; Shin, J.H. HyFusER: Hybrid Multimodal Transformer for Emotion Recognition Using Dual Cross Modal Attention. Appl. Sci. 2025, 15, 1053. [Google Scholar] [CrossRef]

- Mustafa, H.H.; Darwish, N.R.; Hefny, H.A. Automatic Speech Emotion Recognition: A Systematic Literature Review. Int. J. Speech Technol. 2024, 27, 267–285. [Google Scholar] [CrossRef]

- Busso, C.; Bulut, M.; Lee, C.C.; Kazemzadeh, A.; Mower, E.; Kim, S.; Chang, J.N.; Lee, S.; Narayanan, S.S. IEMOCAP: Interactive emotional dyadic motion capture database. Lang. Resour. Eval. 2008, 42, 335–359. [Google Scholar] [CrossRef]

- Facebook AI Research. Fvcore Library. Available online: https://github.com/facebookresearch/fvcore/ (accessed on 2 February 2025).

- Kuncheva, L.I.; Whitaker, C.J. Measures of Diversity in Classifier Ensembles and Their Relationship with the Ensemble Accuracy. Mach. Learn. 2003, 51, 181–207. [Google Scholar] [CrossRef]

- Yan, Y.; Shen, X. Research on Speech Emotion Recognition Based on AA-CBGRU Network. Electronics 2022, 11, 1409. [Google Scholar] [CrossRef]

- Wen, G.; Liao, H.; Li, H.; Wen, P.; Zhang, T.; Gao, S.; Wang, B. Self-labeling with feature transfer for speech emotion recognition. Knowl.-Based Syst. 2022, 254, 109589. [Google Scholar] [CrossRef]

- Xu, X.; Li, D.; Zhou, Y.; Wang, Z. Multi-type features separating fusion learning for Speech Emotion Recognition. Appl. Soft Comput. 2022, 130, 109648. [Google Scholar] [CrossRef]

- Lu, C.; Zheng, W.; Lian, H.; Zong, Y.; Tang, C.; Li, S.; Zhao, Y. Speech Emotion Recognition via an Attentive Time–Frequency Neural Network. IEEE Trans. Comput. Soc. Syst. 2023, 10, 3159–3168. [Google Scholar] [CrossRef]

- Tellai, M.; Gao, L.; Mao, Q.; Abdelaziz, M. A novel conversational hierarchical attention network for speech emotion recognition in dyadic conversation. Multimed. Tools Appl. 2023, 83, 59699–59723. [Google Scholar] [CrossRef]

- Adel, O.; Fathalla, K.M.; Abo ElFarag, A. MM-EMOR: Multi-Modal Emotion Recognition of Social Media Using Concatenated Deep Learning Networks. Big Data Cogn. Comput. 2023, 7, 164.

- Sun, D.; He, Y.; Han, J. Using Auxiliary Tasks In Multimodal Fusion of Wav2vec 2.0 And Bert for Multimodal Emotion Recognition. In Proceedings of the ICASSP 2023—2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; Volume 30, pp. 1–5.

- Tellai, M.; Mao, Q. CCTG-NET: Contextualized Convolutional Transformer-GRU Network for speech emotion recognition. Int. J. Speech Technol. 2023, 26, 1099–1116.

- Hyeon, J.; Oh, Y.H.; Lee, Y.J.; Choi, H.J. Improving speech emotion recognition by fusing self-supervised learning and spectral features via mixture of experts. Data Knowl. Eng. 2024, 150, 102262.

- Qu, L.; Li, T.; Weber, C.; Pekarek-Rosin, T.; Ren, F.; Wermter, S. Disentangling Prosody Representations With Unsupervised Speech Reconstruction. IEEE/ACM Trans. Audio Speech Lang. Process. 2024, 32, 39–54.

- Chen, W.; Xing, X.; Chen, P.; Xu, X. Vesper: A Compact and Effective Pretrained Model for Speech Emotion Recognition. IEEE Trans. Affect. Comput. 2024, 15, 1711–1724.

- Zhang, S.; Feng, Y.; Ren, Y.; Guo, Z.; Yu, R.; Li, R.; Xing, P. Multi-Modal Emotion Recognition Based on Wavelet Transform and BERT-RoBERTa: An Innovative Approach Combining Enhanced BiLSTM and Focus Loss Function. Electronics 2024, 13, 3262.

- Liu, K.; Wei, J.; Zou, J.; Wang, P.; Yang, Y.; Shen, H.T. Improving Pre-trained Model-based Speech Emotion Recognition from a Low-level Speech Feature Perspective. IEEE Trans. Multimed. 2024, 26, 10623–10636.

- Lei, J.; Wang, J.; Wang, Y. Multi-level attention fusion network assisted by relative entropy alignment for multimodal speech emotion recognition. Appl. Intell. 2024, 54, 8478–8490.

- Khan, M.; Gueaieb, W.; El Saddik, A.; Kwon, S. MSER: Multimodal speech emotion recognition using cross-attention with deep fusion. Expert Syst. Appl. 2024, 245, 122946.

- Wu, Y.; Zhang, S.; Li, P. Improvement of Multimodal Emotion Recognition Based on Temporal-Aware Bi-Direction Multi-Scale Network and Multi-Head Attention Mechanisms. Appl. Sci. 2024, 14, 3276.

- Song, Y.; Zhou, Q. Bi-Modal Bi-Task Emotion Recognition Based on Transformer Architecture. Appl. Artif. Intell. 2024, 38, 2356992.

- Saleem, N.; Elmannai, H.; Bourouis, S.; Trigui, A. Squeeze-and-excitation 3D convolutional attention recurrent network for end-to-end speech emotion recognition. Appl. Soft Comput. 2024, 161, 111735.

- Liu, Y.; Chen, X.; Song, Y.; Li, Y.; Wang, S.; Yuan, W.; Li, Y.; Zhao, Z. Discriminative feature learning based on multi-view attention network with diffusion joint loss for speech emotion recognition. Eng. Appl. Artif. Intell. 2024, 137, 109219.

- Lian, H.; Lu, C.; Chang, H.; Zhao, Y.; Li, S.; Li, Y.; Zong, Y. AMGCN: An adaptive multi-graph convolutional network for speech emotion recognition. Speech Commun. 2025, 168, 103184.

- Qi, X.; Song, Q.; Chen, G.; Zhang, P.; Fu, Y. Acoustic Feature Excitation-and-Aggregation Network Based on Multi-Task Learning for Speech Emotion Recognition. Electronics 2025, 14, 844.

- Jin, G.; Xu, Y.; Kang, H.; Wang, J.; Miao, B. DSTM: A transformer-based model with dynamic-static feature fusion in speech emotion recognition. Comput. Speech Lang. 2025, 90, 101733.

- Khan, M.; Tran, P.N.; Pham, N.T.; El Saddik, A.; Othmani, A. MemoCMT: Multimodal emotion recognition using cross-modal transformer-based feature fusion. Sci. Rep. 2025, 15, 5473.

- Chen, Z.; Liu, C.; Wang, Z.; Zhao, C.; Lin, M.; Zheng, Q. MTLSER: Multi-task learning enhanced speech emotion recognition with pre-trained acoustic model. Expert Syst. Appl. 2025, 273, 126855.

- Gómez-Sirvent, J.L.; López de la Rosa, F.; López, M.T.; Fernández-Caballero, A. Facial Expression Recognition in the Wild for Low-Resolution Images Using Voting Residual Network. Electronics 2023, 12, 3837.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).