Mind, Machine, and Meaning: Cognitive Ergonomics and Adaptive Interfaces in the Age of Industry 5.0

Abstract

1. Introduction

1.1. Importance of the Human–Machine Interaction in Automated Industrial Environments

1.2. Main Objectives of This Research

- To identify recent studies and trends in the field of HMIs, with a focus on applications within Industry 4.0 and 5.0;

- To analyze ergonomic techniques applied in optimizing the design of interfaces and the interactions between operators and automated systems;

- To explore interaction models and the cognitive, psychological, and physiological factors that influence operator performance, efficiency, and safety;

- To define future research directions, emphasizing the development of intelligent, adaptive, and sustainable HMI solutions.

2. Human–Machine Interaction: Definitions and Key Concepts

2.1. Evolution of the Human–Machine Interaction

2.1.1. From Mechanization to Intelligent Automation

2.1.2. The Roles of Artificial Intelligence and Autonomous Systems

2.1.3. Industrial Examples and Use Cases

2.2. Models of the Human–Machine Interaction

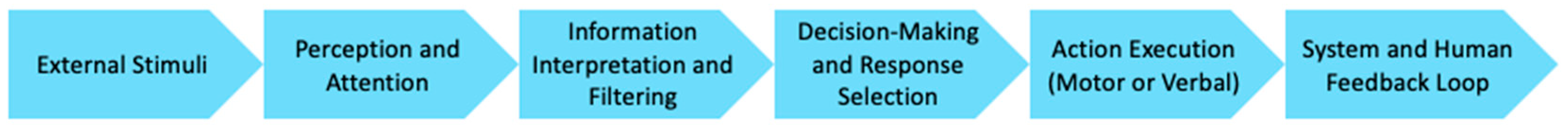

2.2.1. Classic Models of the Human–Machine Interaction

2.2.2. Modern Models Based on Intelligent Technologies: AI and Machine Learning Applications

2.2.3. User Interface and User Experience in Industrial Environments

2.3. Factors Influencing Human–Machine Interactions

2.3.1. Cognitive Factors

2.3.2. Physiological and Biomechanical Factors

2.3.3. Psychological Factors

2.3.4. Environmental and Organizational Factors

3. Ergonomic Techniques in Human–Machine Interactions

3.1. Principles of Ergonomics Applied to Human–Machine Interactions

3.1.1. Physical Ergonomics (Posture, Fatigue, and Physical Effort)

3.1.2. Cognitive Ergonomics

3.1.3. Organizational Ergonomics: Workplace Design and Collaboration

3.2. Methods Used in Ergonomic Evaluations

3.2.1. Rapid Upper Limb Assessment (RULA): Methodological Framework and Applications

- Group A—upper arm, lower arm, and wrist (Figure 2).

- One for 20° extension to 20° of flexion;

- Two for extension greater than 20° or 20–45° of flexion;

- Three for 45–90° of flexion;

- Four for 90° or more of flexion.

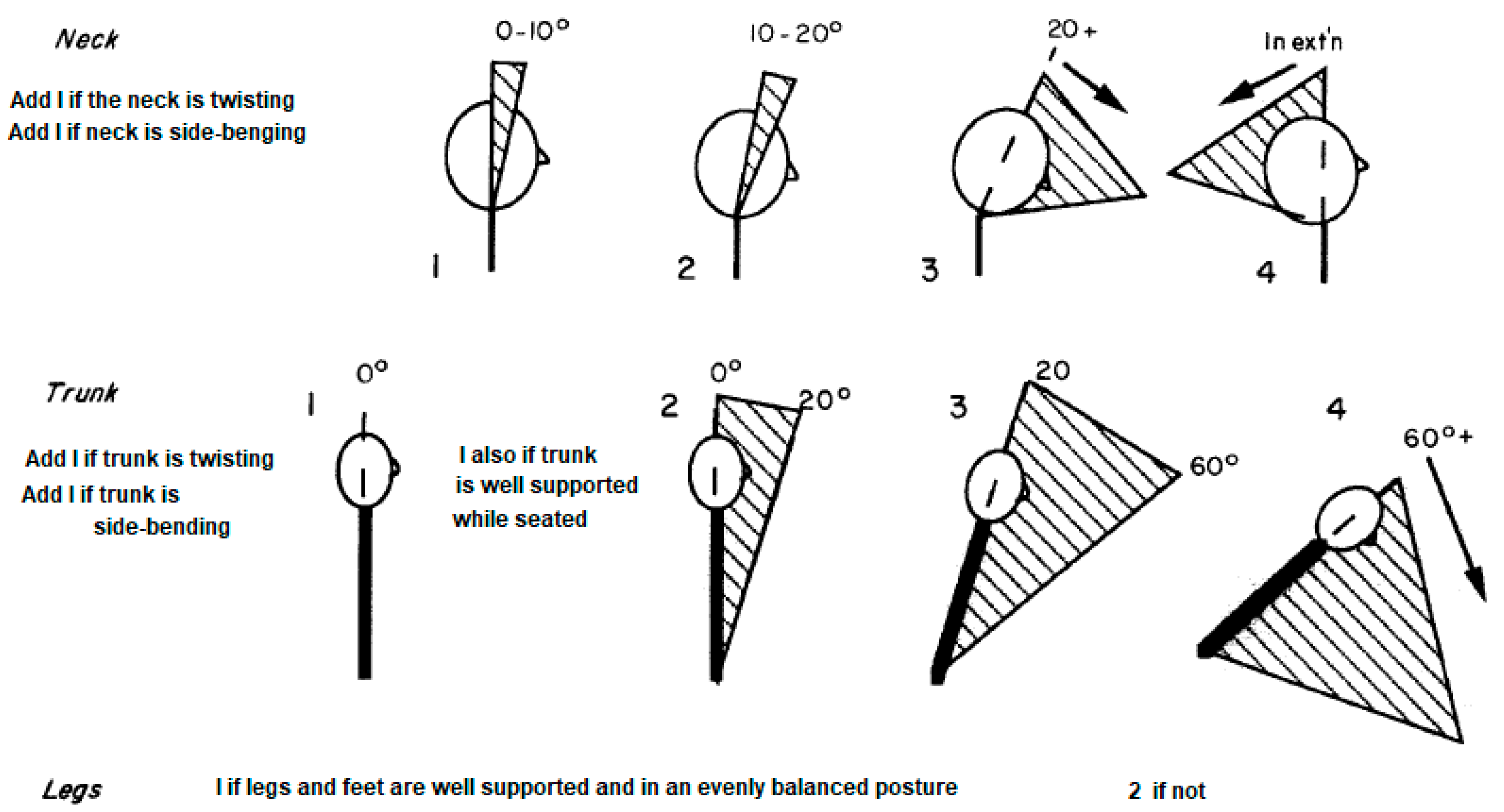

- Group B—neck, trunk, and legs (Figure 3).

- One for 0–10° flexion;

- Two for 10–20° flexion;

- Three for 20° or more flexion;

- Four if in extension.
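
The angle bands listed above for the upper arm (Group A) and the neck (Group B) can be expressed as simple threshold functions. The following is a minimal sketch in Python, not a full RULA implementation: the function names, the sign convention (flexion positive, extension negative), and the handling of shared boundary angles are assumptions made for illustration, and the third neck band is taken to begin at 20°. The complete method also applies posture adjustments and lookup tables that are not reproduced here.

```python
def upper_arm_score(angle_deg: float) -> int:
    """Base upper-arm score from the shoulder angle in degrees.

    Assumed convention: flexion is positive, extension is negative.
    Assumed tie-break: a shared boundary (20 or 45 degrees) goes to the
    lower band, while "90 degrees or more" is treated as inclusive.
    """
    if angle_deg >= 90:
        return 4
    if angle_deg > 45:
        return 3
    if angle_deg > 20 or angle_deg < -20:
        return 2
    return 1


def neck_score(angle_deg: float) -> int:
    """Base neck score from the neck angle in degrees (same convention)."""
    if angle_deg < 0:      # any extension
        return 4
    if angle_deg >= 20:    # "20 degrees or more" treated as inclusive
        return 3
    if angle_deg > 10:
        return 2
    return 1


if __name__ == "__main__":
    # Hypothetical posture: 60 degrees shoulder flexion, 15 degrees neck flexion.
    print(upper_arm_score(60), neck_score(15))
```

These functions return only the base scores for two body segments; a practical tool would also need the remaining segments and the combination step of the method.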

Applied Industrial Case Studies

- Wire Harness Assembly with Cobots: Navas-Reascos et al. applied RULA to assess ergonomic improvements in a wire harness assembly when a collaborative robot (cobot) was introduced. In the original manual process, high scores of six and seven were recorded, primarily due to upper arm elevation and wrist twisting. After implementation, these scores dropped to three, confirming a significant reduction in risk exposure [62].

- Three-Dimensional Pose Estimation in Manufacturing: Paudel et al. developed a computer vision-based RULA evaluation framework using 3D human pose estimation. Their system showed 93% alignment with expert RULA ratings in non-occluded views and 82% accuracy in occluded postures. This method proves valuable in automating large-scale ergonomic assessments, especially in settings with numerous workers or real-time monitoring needs [59].

- Inertial Sensor-Based RULA Automation: Gómez-Galán et al. proposed a wearable inertial sensor system that automates RULA and REBA scoring. Their system achieved over 88% classification accuracy compared to expert assessments. This method is suitable for high-risk, dynamic tasks where visual scoring is impractical, such as in heavy industry or confined workspaces [58].

- Agriculture Sector: Paudel et al. conducted a bibliometric review showing that agriculture, healthcare, and manufacturing are the top domains for RULA use. In agricultural contexts, such as fruit harvesting or rubber tapping, high RULA scores have led to equipment redesign and optimized workflows. The authors noted RULA’s consistent tendency to detect high risk in repetitive, bent-forward upper-limb postures [59,60].

Effectiveness and Reliability

- Statistical Reliability: Intraclass correlation coefficients (ICCs) often exceed 0.80 in well-trained evaluators, though variability remains for complex tasks [58].

- Comparative Sensitivity: RULA was shown to yield higher risk ratings than OWAS and REBA in static or upper-limb-focused tasks, but it was less sensitive in dynamic whole-body postures [63].

3.2.2. Ovako Working Posture Analysis System (OWAS): Ergonomic Assessment Methodology and Applications

Method Description and Scoring Principles

- -

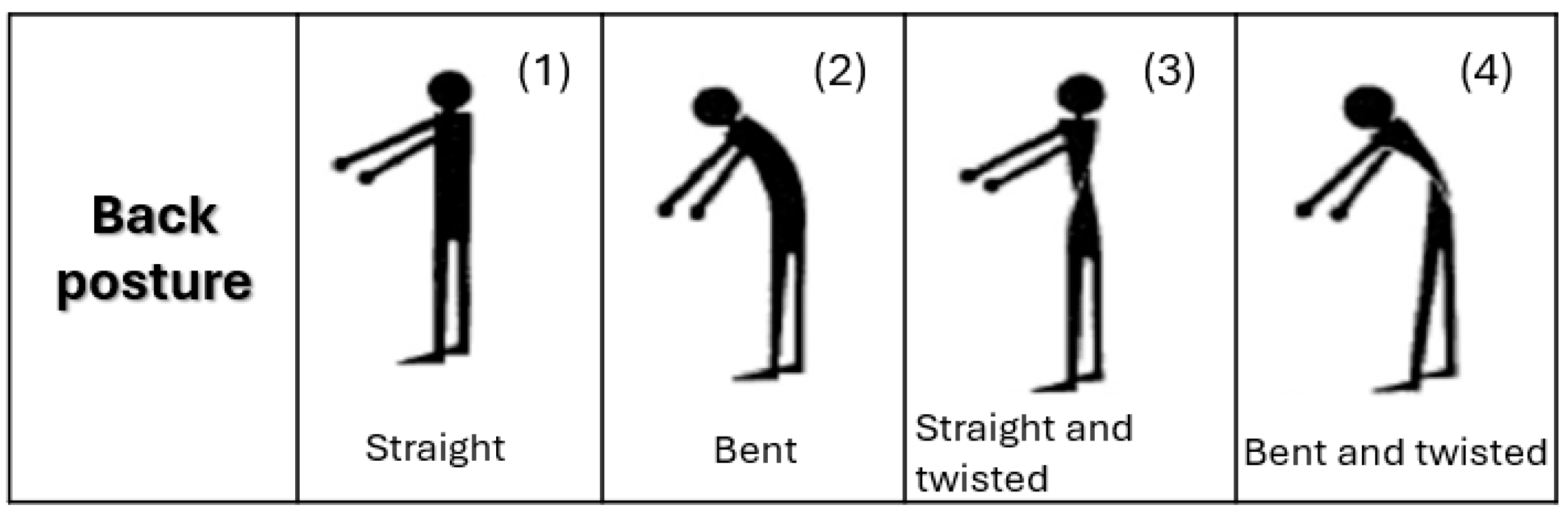

- Back posture (four categories) (Figure 4);

- -

- Arm postures (three categories) (Figure 5);

- -

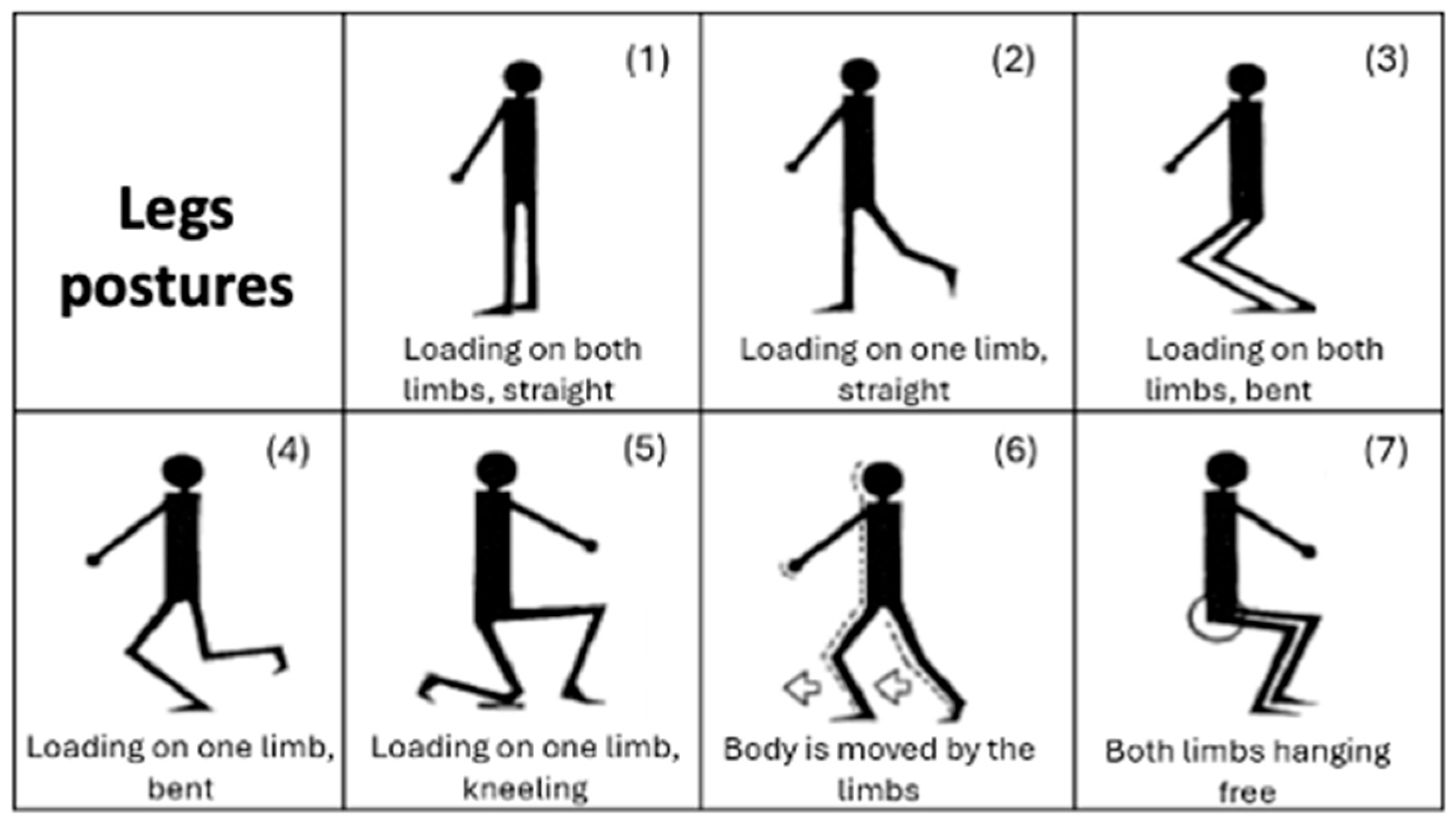

- Leg postures (seven categories) (Figure 6);

- -

- Load/force handled (three categories) (Figure 7).
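
An OWAS observation is commonly recorded as a four-digit code with one digit per category in the order listed above, as in the code 4232 cited in the automotive case study in this section. The sketch below, with hypothetical names, validates such a code against the category counts given here. It deliberately does not derive the action category (AC1 to AC4), since that requires the OWAS lookup table, which is not reproduced in this text.

```python
# Number of categories per body region, as listed in this section.
# The digit order (back, arms, legs, load) is assumed to follow that list.
OWAS_CATEGORIES = {"back": 4, "arms": 3, "legs": 7, "load": 3}


def parse_owas_code(code: str) -> dict:
    """Split a four-digit OWAS posture code into named category values."""
    if len(code) != len(OWAS_CATEGORIES) or not code.isdecimal():
        raise ValueError(f"expected {len(OWAS_CATEGORIES)} digits, got {code!r}")
    parsed = {}
    for (region, n_categories), digit in zip(OWAS_CATEGORIES.items(), code):
        value = int(digit)
        if not 1 <= value <= n_categories:
            raise ValueError(f"{region} must be in 1..{n_categories}, got {value}")
        parsed[region] = value
    return parsed


if __name__ == "__main__":
    print(parse_owas_code("4232"))
```

Keeping the category counts in one mapping makes the validation rule explicit and easy to adjust if a variant of the method is used.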

- Applicable in real time with no specialized equipment;

- Entire body evaluation, unlike RULA or AULA, which are more focused on upper limbs;

- Suitable for physical work in logistics, agriculture, construction, and retail sectors.

- Does not account for the duration or repetition of a posture;

- Limited ability to differentiate between subtle variations in joint angles;

- Less sensitive to dynamic tasks involving frequent movement transitions.

Case Studies and Industry Applications

- Automotive Manual Assembly: Loske et al. applied OWAS to evaluate postures during manual seat assembly in the automotive sector [65]. The study showed that workers performing repetitive overhead and forward-bending tasks accumulated high-risk OWAS postures (code 4232, AC4). The use of AR-based training and ergonomic redesign reduced exposure to harmful postures and significantly improved cerebral oxygenation, indicating reduced fatigue.

- Retail Intralogistics: Loske et al. (2021) analyzed logistics workers in warehouse picking and packaging using OWAS and CUELA (a digital strain analysis system) [65]. In four standard task configurations, OWAS identified a high frequency of AC3 and AC4 scores, primarily associated with trunk flexion and static leg postures. Recommendations included redesigning shelf heights and rotating tasks more frequently to reduce the lumbar load.

- Agriculture Sector: In a comparative study by Choi et al. (2020), OWAS was used alongside RULA and REBA to evaluate 196 farm tasks [63]. While OWAS was less sensitive to the upper-limb load than RULA, it proved effective at identifying risky full-body postures, particularly during squatting and bent-knee tasks such as planting and harvesting.

- Manufacturing Simulations: Ojstersek et al. (2020) employed OWAS within a digital human modeling platform (Tecnomatix Jack) to simulate ergonomic scenarios using data from a 360° spherical camera [67]. Postural risk profiles derived through OWAS were used to assess improvements achieved by collaborative workstations. The integration of OWAS in simulated environments validated its applicability in Industry 4.0 contexts.

Digital Integration and Future Directions

- Combining OWAS with cognitive workload indicators;

- Real-time risk alerts through wearable motion sensors;

- Integration in digital twin environments and augmented reality simulations.

3.2.3. Human Reliability Analysis (HRA): Principles, Techniques, and Industrial Applications

Methodological Frameworks in HRA

- HEART (Human Error Assessment and Reduction Technique)—assigns error-producing conditions to specific tasks to estimate human error probability (HEP) [70];

- SHERPA (Systematic Human Error Reduction and Prediction Approach)—developed by Embrey, this method is regarded as a highly effective approach for examining human reliability in task execution, with a focus on the cognitive factors behind human error [71];

- CREAM (Cognitive Reliability and Error Analysis Method), introduced by E. Hollnagel in 1998, builds upon a cognitive process framework, the Contextual Control Model (COCOM), which describes the relationships between cognitive processes occurring in the human brain [72].
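
The quantification step of HEART can be illustrated numerically. In the commonly cited formulation, a nominal error probability for the generic task type is multiplied by one factor per error-producing condition (EPC), each computed as (maximum effect − 1) × assessed proportion of affect + 1. The sketch below follows that formulation; the function name and all numeric values are hypothetical and are not taken from the HEART tables or from the studies cited here.

```python
from math import prod


def heart_hep(nominal_hep: float, epcs: list) -> float:
    """Estimate a human error probability in the style of HEART.

    nominal_hep: nominal unreliability of the generic task type.
    epcs: list of (max_effect, assessed_proportion) pairs, one per EPC,
          where max_effect >= 1 and 0 <= assessed_proportion <= 1.
    """
    factors = []
    for max_effect, proportion in epcs:
        if max_effect < 1 or not 0.0 <= proportion <= 1.0:
            raise ValueError("invalid EPC parameters")
        factors.append((max_effect - 1.0) * proportion + 1.0)
    # A probability cannot exceed 1, so the product is capped.
    return min(1.0, nominal_hep * prod(factors))


if __name__ == "__main__":
    # Hypothetical task: nominal HEP of 0.003 with two EPCs.
    # The two factors are 5.0 and 2.0, giving a result of about 0.03.
    print(heart_hep(0.003, [(11.0, 0.4), (3.0, 0.5)]))
```

Because the assessed proportions are analyst judgments, the same task can yield different estimates for different analysts, which is the subjectivity issue noted for traditional HRA methods.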

HRA in Manufacturing: Types of Human Error

HRA Applications: Industrial Case Studies

- Aircraft Maintenance (Yu et al., 2025): A biomechanical model incorporating motion capture data was proposed for aircraft maintenance tasks [68]. By integrating inverse trigonometric algorithms, the model quantifies joint stress and task difficulty, directly supporting HRA metrics. The model proved capable of real-time feedback, enabling ergonomic and reliability-based scheduling.

- Assembly Line Guidance (Torres et al., 2021): In a manual bracket installation process, the HEART method predicted the highest HEP values where operators had to choose among geometrically similar components [73]. Visual guidance systems and color-coded interfaces reduced the probability of action errors significantly.

- Human–Robot Collaboration (Barosz et al., 2020): In simulation scenarios comparing human vs. robot operator reliability, HRA-informed scenarios demonstrated that mixed-mode (human and robot) setups outperformed human-only cells in stability and quality under high-volume settings [74]. The insights allowed for process redesign where robots handled repetitive tasks and humans were assigned tasks requiring judgment.

Limitations and Future Directions

- Subjectivity—traditional HRA methods depend on expert judgment, leading to inter-analyst variability;

- Data gaps—the lack of granular error data in real industrial settings limits HRA precision;

- Complex environments—as systems grow more complex, mapping error pathways becomes harder.

- Integration with AI and ML to create dynamic human error prediction models;

- The use of digital twins to emulate and correct operator behaviors;

- Enhanced human-in-the-loop interfaces for predictive workload and reliability modeling.

3.2.4. Eye-Tracking and EEG-Based Techniques in Ergonomic and Cognitive Research

Overview of EEG Technologies

Overview of Eye-Tracking Technologies

Integrated EEG and Eye-Tracking Research

- Detect emotional and cognitive states in real time;

- Quantify mental workload during complex tasks;

- Improve HCIs by customizing feedback based on user states.

Applications in Ergonomics and Industrial Design

Limitations and Challenges

- EEG preparation time and movement sensitivity may hinder field deployment;

- Eye-tracking accuracy can be affected by lighting, screen size, or user posture;

- Multidisciplinary expertise in signal processing, design, and psychology is required;

- Data may be misinterpreted if not triangulated with behavioral or contextual inputs.

Nonetheless, newer wearable EEG devices and mobile eye-tracking systems are overcoming such barriers, promoting use in real-world environments.

3.2.5. Summary of Key Differences

3.3. Modern Solutions for Improving Human–Machine Interactions

3.3.1. Exoskeletons and Assistive Devices

Technological Components

Applications

- Medical Rehabilitation: Exoskeletons like Lokomat and ReWalk have transformed gait rehabilitation for individuals recovering from strokes, spinal cord injuries, or cerebral palsy. These devices enable repetitive, task-specific training that is often superior in consistency and intensity compared to manual therapy sessions [81].

- Industrial Ergonomics: Industrial exoskeletons, especially those designed for lumbar support, help reduce strain during lifting or overhead tasks. Studies show reductions of up to 30% in muscle activation during assisted lifting tasks. However, user comfort and adaptability remain challenges, necessitating further design personalization [82,85].

- Human Intention Recognition: Human–exoskeleton cooperation relies on accurate motion intention recognition. Surface electromyography (sEMG) is currently the most widely used modality, despite variability among users due to physiology and electrode placement. Deep learning techniques are being developed to improve the generalization and robustness of control models [83,84,86].

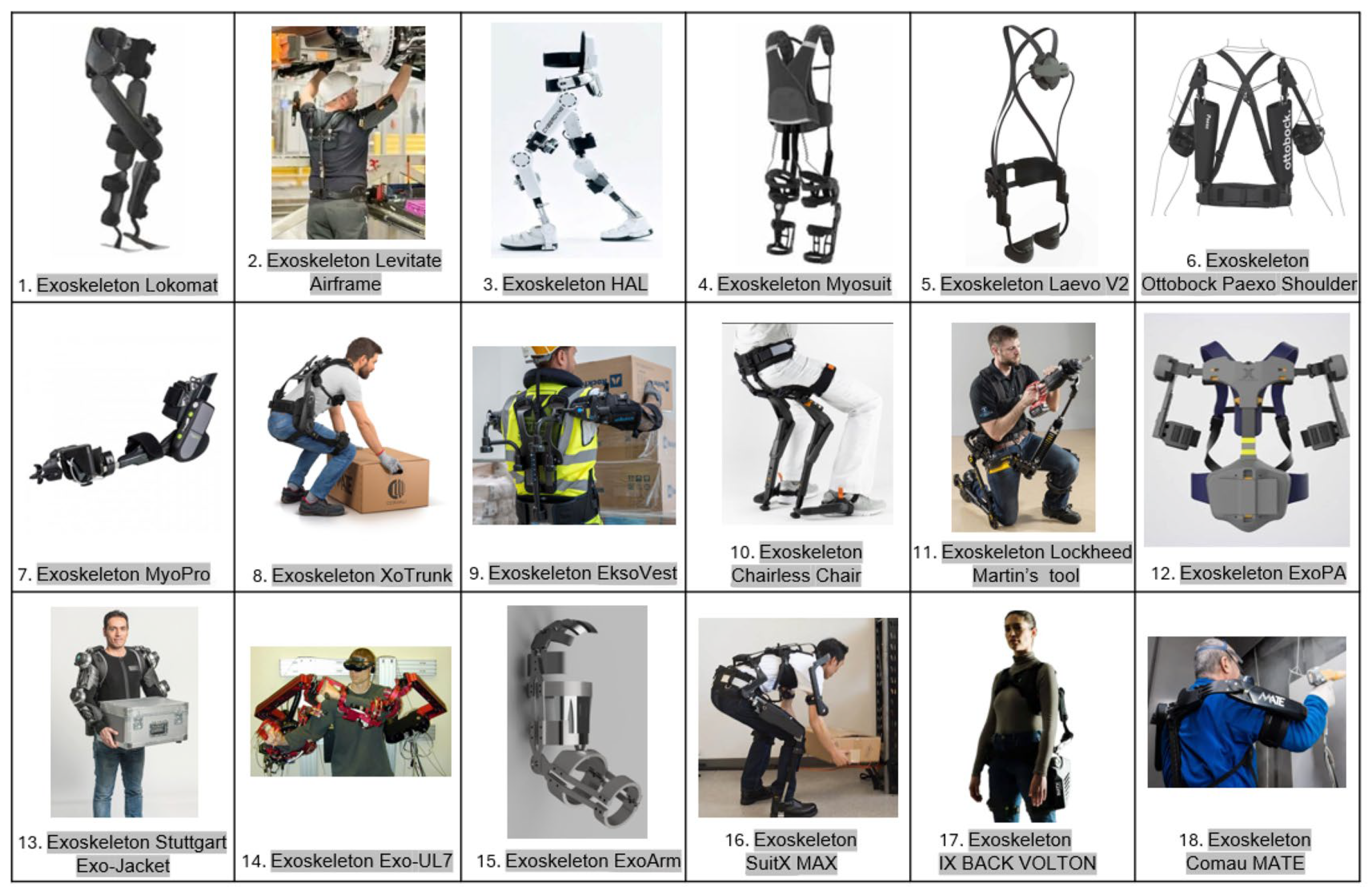

Illustrative Examples of Exoskeletons

Comparative Analysis of Industrial Exoskeletons in Automotive and Ergonomic Applications

3.3.2. Collaborative Robots and Human Assistance in Industry 5.0

Classification and Modes of Human–Robot Interactions (HRIs)

- Power and force limiting—low-powered and with rounded edges to ensure safety;

- Hand-guided teaching—robots that learn motions by manual demonstration;

- Speed and separation monitoring—cobots that slow or stop based on human proximity;

- Safety-rated monitored stop—temporary stops when humans enter the workspace.
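
The speed and separation monitoring mode listed above, in which a cobot slows or stops based on human proximity, can be sketched as a distance-to-speed mapping. This is an illustrative toy model only: the function name, the linear ramp, and every threshold value are assumptions, and it is neither a safety-rated controller nor the separation distance calculation defined in the relevant safety standards.

```python
def allowed_speed(distance_m: float,
                  stop_distance_m: float = 0.5,
                  full_speed_distance_m: float = 2.0,
                  max_speed_m_s: float = 1.0) -> float:
    """Return a speed limit for the robot given the human's distance.

    Hypothetical policy: full stop at or inside stop_distance_m, full
    speed at or beyond full_speed_distance_m, linear ramp in between.
    """
    if stop_distance_m >= full_speed_distance_m:
        raise ValueError("stop distance must be below full-speed distance")
    if distance_m <= stop_distance_m:
        return 0.0
    if distance_m >= full_speed_distance_m:
        return max_speed_m_s
    ramp = (distance_m - stop_distance_m) / (full_speed_distance_m - stop_distance_m)
    return max_speed_m_s * ramp


if __name__ == "__main__":
    for d in (0.3, 1.25, 3.0):
        print(d, allowed_speed(d))
```

Setting the two distances equal would reduce the ramp to a pure stop/go rule, which is closer in spirit to the safety-rated monitored stop mode; the sketch rejects that configuration to keep the two modes distinct.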

Design Principles and Methods

- Joint Cognitive Systems (JCS): A JCS is formed when humans and machines function as co-agents to achieve shared goals. Rather than treating the human and robot as isolated systems, the focus is on coordination patterns, adaptability, and co-agency [6]. Woods and Hollnagel emphasize that these systems should be resilient and functionally adaptive in real-time [108].

- Actor–Network Theory (ANT): ANT views both humans and machines as actors (“actants”) in a sociotechnical network. It offers tools to map how dynamic roles evolve in human–machine teams, treating machines not merely as tools but as integral agents [109].

- Concept of Operations (ConOps): ConOps provides a framework to define how humans and robots interact, collaborate, and share tasks within a system, specifying roles, responsibilities, operational scenarios, and performance expectations throughout the system’s lifecycle [110].

- Human System Integration (HSI): HSI focuses on aligning system functionality, design, and operation with human capabilities, limitations, and work contexts by systematically integrating human factors throughout all stages of the system’s lifecycle [111].

Applications and Case Studies

Human-Centric Design Challenges

- Cognitive load and mental models play a crucial role in human-centric design, as they influence how users perceive, interpret, and interact with automated systems. Effective human–machine collaboration requires minimizing unnecessary cognitive effort while supporting the development of accurate mental representations of the system’s behavior, capabilities, and intentions [112];

- Resilience and role flexibility are fundamental to human-centric design, as they enable systems to adapt dynamically to unexpected changes, distribute tasks flexibly between human and machine agents, and maintain performance under varying conditions [113]. As highlighted by Madni et al. (2018), such adaptive architectures help reduce cognitive load, support the formation of shared mental models, and increase the overall robustness and effectiveness of human–machine collaboration [113].

- Ethical and social concerns in human-centric system design become especially relevant when evaluating how cognitive load influences users’ decision-making. As highlighted in the study by Zheng et al. (2025), increased cognitive effort significantly alters moral judgments, making individuals more likely to adopt utilitarian reasoning and prioritize collective over individual outcomes under mental strain [114]. This raises concerns about the fairness and reliability of decisions made in cognitively demanding human–machine interaction scenarios. Designers must therefore ensure that system architectures minimize unnecessary cognitive load and preserve users’ ability to make ethically balanced decisions, avoiding unintended biases and safeguarding individual autonomy in collaborative settings [114].

Future Directions and Industry 5.0 Implications

- AI-driven Personalization: In the context of Industry 5.0, artificial intelligence is increasingly tailored to human needs, enabling cobots to learn from human behavior and dynamically adapt to operator preferences. This leads to more personalized and inclusive human–robot collaboration, supporting worker well-being and task efficiency [115].

- Digital Twins: Digital twins, particularly those driven by AI, provide a synchronized virtual representation of physical processes. They enhance predictive maintenance, optimize performance, and allow immersive human-in-the-loop simulations, which are crucial for training and decision support in dynamic environments [116,117].

- Augmented Reality (AR): AR technologies contribute to improved human–machine interactions by visualizing robot trajectories, task sequences, and real-time guidance. This transparency fosters trust, reduces cognitive load, and improves accuracy during collaborative operations [118].

- Edge Computing and 5G: The deployment of 5G networks and edge computing technologies enables ultra-low-latency and high-bandwidth communication between cobots and control systems. This infrastructure is essential for real-time decision-making and safe human–robot collaboration in smart factories [119,120].

Real-World Examples of Collaborative Robots

3.3.3. Augmented and Virtual Reality in Operator Training: Applications, Methods, and Examples

Technological Foundations and Classifications

- Augmented Reality (AR): AR displays digital content within a real-world context, providing real-time assistance during training or operations. Devices include tablets, smartphones, and head-mounted displays (HMDs), such as Microsoft HoloLens and Vuzix Blade. AR allows step-by-step task guidance, superimposed safety alerts, and dynamic visualizations of internal machine components. For instance, a maintenance technician using AR can access exploded 3D diagrams and service checklists directly in their field of view, reducing the dependency on paper manuals [139,142].

- Virtual Reality (VR): VR offers an environment that entirely surrounds the user, where users operate digital replicas of machinery and systems using devices like the HTC Vive Pro, Oculus Rift, or Valve Index. It isolates trainees from distractions and enables the safe exploration of dangerous or high-stakes environments. In chemical emergency response training, for example, VR simulations allow operators to rehearse emergency shutdown procedures without putting equipment or lives at risk. Some VR systems also integrate haptic feedback to simulate resistance, vibration, or material texture [140,143,144].

- Mixed and Extended Reality (MR/XR): Mixed reality (MR) combines real and virtual elements, enabling interactions with both simultaneously. Devices like Microsoft HoloLens 2 or Magic Leap provide spatial anchoring, object occlusion, and shared workspaces for collaborative tasks. Extended reality (XR) encompasses the full spectrum of immersive technologies (AR, VR, and MR), providing flexible deployment options based on context. CAVE (Cave Automatic Virtual Environment) systems, which project imagery onto the walls and floor of a room, are valuable for multi-user training and simulation but have high setup costs and space demands [142,144].

Methods and Implementation Strategies

- Simulation-Based Training: VR simulation offers risk-free environments to rehearse both routine and emergency operations. In a Slovak study, trainees performed electrical plug assembly in VR using HTC Vive Pro, improving their speed and reducing errors. Training scenarios can be adapted for stress induction, multitasking, or fault diagnosis. Gamified modules enhance motivation, and performance metrics such as time-on-task or error frequency can be logged automatically [139,144].

- Augmented Task Guidance: AR can deliver contextual assistance directly within the operator’s field of vision. In industrial maintenance, AR headsets provide overlays with torque values, replacement instructions, or real-time sensor readings. For example, a worker replacing a valve may see animated instructions guiding hand motion, reducing the training time. Studies show that AR reduces the cognitive load by offloading memorization and minimizing task-switching [139].

- Mixed Reality Collaboration: MR supports collaborative, spatially aware training. Trainees can work in synchronized environments, manipulating virtual objects anchored in physical space. In a shipbuilding case, multi-user AR setups reduced communication latency and improved spatial awareness during structural assembly [139]. CAVE systems and MR telepresence also allow remote trainers to provide real-time corrections.

- Deep Learning with 3D Models: AR guidance systems can be enhanced using convolutional neural networks (CNNs) trained to recognize parts from CAD models. For instance, small parts like bolts or washers, which are often difficult to track visually, can be identified and labeled in real time. The automated generation of 2D datasets from 3D models streamlines the CNN training process, reducing the manual annotation effort [146].

Application Areas and Case Studies

- Industrial Assembly and Maintenance: AR/VR tools are widely used in manufacturing for assembly training and predictive maintenance. In the aerospace industry, Boeing uses AR to assist technicians in wiring aircraft, reducing error rates by 90%. In electronics, operators can rehearse fine-motor tasks repeatedly before touching physical parts, reducing scrap rates and downtime [141,142].

- Chemical Industry Accident Simulation: Vidal-Balea developed an Operator Training Simulator (OTS) for chemical accidents using a combination of VR and AR. The simulation connected operators in the field and control room through a Distributed Control System (DCS). Operators reported a 4.5× improvement in procedural familiarity and reaction time compared to traditional tabletop exercises [139].

- Shipbuilding Collaborative AR: Navantia and Universidade da Coruña deployed Microsoft HoloLens (Microsoft, Redmond, WA, USA) in shipyards to facilitate multi-user AR training. Participants experienced synchronized visualizations of complex assemblies, significantly reducing spatial misalignment and communication delays in team operations [139].

- Engineering and University Education: The University of Žilina implemented AR/VR in their Digital Factory curriculum. Students trained with virtual replicas of machinery using Unity 3D and Autodesk Maya models. Time studies (chronometry) and error tracking revealed substantial improvements in performance, demonstrating AR/VR’s value for technical education [144].

- Object Recognition for Assembly: CNNs trained on synthetic image datasets allowed AR systems to recognize components and suggest actions during assembly. This method is ideal for small-batch production, where frequent part changes make traditional automation costly [146].

- Construction Safety and Risk Training: Afolabi et al. highlighted AR/VR’s potential in improving safety awareness in construction. Simulations recreated fall risks, structural collapse, and equipment failure. While immersive tech increased engagement, implementation was limited by high costs, a lack of training, and initial resistance from the workforce [141].

- XR in Collaborative Education: Mourtzis and Angelopoulos introduced an XR platform for engineering education. Students collaborated in real-time across locations to perform virtual repairs and maintenance, enhancing teamwork, spatial understanding, and technical skill retention [142].

Evaluation and Effectiveness

- Cost—high upfront investments in hardware, software licenses, and content creation;

- Scalability—difficulties in deploying across geographically distributed teams;

- Resistance to change—especially among older employees unfamiliar with digital tools;

- Technical limitations—VR sickness, display resolution, latency issues, and limited field of view;

- Privacy and security—the use of cameras and sensors raises concerns in sensitive environments;

- Content maintenance—updating 3D models and procedures as processes evolve requires dedicated resources.

4. Challenges and Future Directions in Cognitive Ergonomics for HMIs

4.1. Major Challenges in Cognitive Ergonomics for HMIs

- Increasing System Complexity: Modern interfaces integrate vast amounts of data and functionality, potentially overwhelming users. Cognitive overload can reduce task performance, increase error rates, and impact mental well-being [149]. For instance, smart manufacturing systems in Industry 4.0 often require users to interpret complex data visualizations and make real-time decisions [150].

- Multimodal and Adaptive Interfaces: These interfaces increasingly incorporate voice, gesture, haptic, and biometric inputs. Designing intuitive, seamless, and cognitively ergonomic multimodal systems remains a major challenge. Zheng et al. [151] reviewed EMG-, FMG-, and EIT-based biosensors, highlighting the difficulty in harmonizing input accuracy and user comfort.

- Situational Awareness in Shared Control Environments: Maintaining shared control between the human and machine is critical, especially in safety-sensitive domains like autonomous driving. Brill et al. [152] emphasized the importance of external HMIs (eHMIs) for communicating AV intentions to vulnerable road users (VRUs) in shared spaces.

- Latency and Tactile Feedback in Real-Time Systems: Real-time applications such as teleoperation and VR/AR demand ultra-low latency. Mourtzis et al. [150] describe how 5G and the tactile internet promise to enhance cognitive ergonomics by enabling haptic feedback and low-latency control, while also raising new cognitive demands and safety concerns.

4.2. Emerging Trends and Research Directions

- Personalization through Adaptive Cognitive Models: Cognitive ergonomics is moving toward adaptive systems that respond to individual users’ cognitive states. Biosensors and physiological monitoring (e.g., EEG and EMG) can be used to detect workload and adjust interface complexity dynamically [151].

- Standardization of External Human–Machine Interfaces: Brill et al. [152] emphasize the need for standardizing eHMI designs to ensure consistent communication between AVs and VRUs. This is critical for developing shared cognitive models and reducing ambiguity.

- Integration of Wearable and Embedded Biosensors: The integration of EMG, FMG, and EIT-based wearables allows for more natural human–machine interactions, such as gesture recognition and prosthetic control [151]. Future research should focus on improving robustness, data fusion, and interpretability.

- Ethical and Societal Implications: As HMIs increasingly mediate decisions and actions, ethical issues arise regarding autonomy, consent, and surveillance. Cognitive ergonomics must integrate ethical foresight into design methodologies [153].

- Cross-Disciplinary Methodologies: Future research will benefit from combining insights from psychology, neuroscience, data science, and design. New evaluation methodologies should consider both subjective experience and objective performance metrics [154].
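
The first trend in this list, detecting workload and adjusting interface complexity dynamically, can be sketched as a small control loop. The example below is a hedged illustration under stated assumptions: the workload index is assumed to be already normalized to the range 0 to 1 by some upstream estimator (for example, one based on EEG or EMG features), and the class name, smoothing factor, and thresholds are all hypothetical. It uses exponential smoothing plus two thresholds (hysteresis) so that the interface does not flicker between modes on noisy input.

```python
class AdaptiveDetailController:
    """Chooses an interface detail level from a smoothed workload index."""

    def __init__(self, alpha: float = 0.3, high: float = 0.7, low: float = 0.4):
        if not 0.0 < alpha <= 1.0 or not low < high:
            raise ValueError("require 0 < alpha <= 1 and low < high")
        self.alpha = alpha      # smoothing factor (hypothetical value)
        self.high = high        # switch to reduced detail above this level
        self.low = low          # switch back to full detail below this level
        self.smoothed = None    # no samples seen yet
        self.mode = "full"

    def update(self, workload: float) -> str:
        """Feed one workload sample in [0, 1]; return 'full' or 'reduced'."""
        if self.smoothed is None:
            self.smoothed = workload
        else:
            self.smoothed = (self.alpha * workload
                             + (1.0 - self.alpha) * self.smoothed)
        if self.mode == "full" and self.smoothed > self.high:
            self.mode = "reduced"
        elif self.mode == "reduced" and self.smoothed < self.low:
            self.mode = "full"
        return self.mode


if __name__ == "__main__":
    controller = AdaptiveDetailController()
    for sample in (0.2, 0.9, 0.9, 0.9, 0.9, 0.5):
        print(sample, controller.update(sample))
```

The gap between the two thresholds is a design choice: a wider gap gives a more stable interface at the cost of slower recovery to full detail once workload drops.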

5. Conclusions and Recommendations

5.1. Summary of the Findings

- The transition from mechanized control to intelligent, adaptive HMI systems has been driven by advancements in AI, machine learning, and multimodal interfaces;

- Cognitive, physiological, psychological, and organizational factors play a critical role in shaping operator performance, safety, and satisfaction;

- Ergonomic methodologies such as RULA, OWAS, and Human Reliability Analysis (HRA) are essential tools for assessing physical and cognitive strain in industrial environments;

- Emerging technologies such as exoskeletons, eye-tracking, EEG, and digital twins are reshaping HMI design, enabling real-time feedback and predictive interventions.

5.2. Contributions of This Study

- A multidisciplinary synthesis of ergonomic, cognitive, and psychological elements that influence HMI effectiveness;

- A comparative analysis of evaluation methodologies (e.g., RULA, OWAS, HRA) in industrial settings, including their strengths, limitations, and digital integrations;

- The integration of modern assistive technologies and sensing tools as pathways for improving safety, usability, and adaptability in smart factories;

- The proposal of a human-centric paradigm in alignment with the Industry 5.0 goals of sustainability, personalization, and operator empowerment.

5.3. Practical Implications

- Design guidelines—ergonomic and cognitive principles should inform the design of user interfaces, collaborative robotics, and wearable systems to enhance operator performance and reduce injury risks;

- Risk mitigation—the systematic application of RULA, OWAS, and HRA can identify high-risk tasks and guide targeted interventions, especially in repetitive, awkward, or high-load work;

- Technological integration—industries should embrace neuroergonomic tools (e.g., EEG and eye-tracking), digital simulations, and AI-based monitoring to enable adaptive and responsive HMI;

- Workplace culture—organizational ergonomics must support user acceptance, trust, and well-being, fostering a resilient and engaged workforce in technologically intensive environments.

5.4. Final Remarks

5.5. Future Research Directions

- Multimodal cognitive adaptation: Further research is needed on how to adapt interfaces dynamically to real-time cognitive states estimated through multi-sensor fusion (e.g., combining EEG, eye-tracking, and galvanic skin responses). Understanding how these inputs influence task performance in real-world environments can lead to more intuitive and adaptive HMI systems.

- Trust calibration and emotional AI—The development of emotionally responsive systems capable of interpreting and responding to operator stress, fatigue, and trust levels remains in its infancy. Future studies could explore how emotional AI can foster safer and more reliable human–machine collaboration.

- Longitudinal ergonomic impacts—Most ergonomic assessments, including those using RULA or OWAS, are applied in short-term studies. Long-term studies are necessary to measure the potential health benefits or risks linked to human–machine interactions in repetitive or physically demanding industrial tasks.

- Human–digital twin co-simulation—Integrating human digital twins into cyber–physical systems opens new avenues for predictive ergonomics and task optimization. Research can focus on how digital representations of human behavior can be used to pre-validate workplace design and training scenarios.

- AI-Enhanced Human Reliability Analysis—Expanding Human Reliability Analysis (HRA) frameworks to include machine learning models for error prediction and prevention can significantly improve safety in high-risk environments. Future research should explore how AI can reduce inter-analyst subjectivity and improve real-time reliability monitoring.

- Inclusivity and personalization—As Industry 5.0 emphasizes human centrality, more studies are required on how HMI can be personalized for diverse user populations, including aging workers, people with disabilities, and those with varying cognitive styles.

Author Contributions

Funding

Conflicts of Interest

References

- Parasuraman, R.; Sheridan, T.B.; Wickens, C.D. A model for types and levels of human interaction with automation. IEEE Trans. Syst. Man, Cybern. Part A Syst. Humans 2000, 30, 286–297. [Google Scholar] [CrossRef]

- Romero, D.; Stahre, J.; Wuest, T.; Noran, O.; Bernus, P.; Vast-Berglund, Å.F.; Gorecky, D. Towards an Operator 4.0 Typology: A Human-Centric Perspective on the Fourth Industrial Revolution Technologies. In Proceedings of the International Conference on Computers & Industrial Engineering (CIE46), Tianjin, China, 29–31 October 2016; pp. 29–31. [Google Scholar]

- Nahavandi, S. Industry 5.0—A human-centric solution. Sustainability 2019, 11, 4371.

- Zhang, J.; Walji, M.F. TURF: Toward a unified framework of EHR usability. J. Biomed. Inform. 2011, 44, 1056–1067.

- Stanton, N.A.; Salmon, P.M.; Walker, G.H.; Baber, C.; Jenkins, D.P. Human Factors Methods; CRC Press: Boca Raton, FL, USA, 2017.

- Breque, M.; De Nul, L.; Petridis, A. Industry 5.0: Towards a Sustainable, Human-Centric and Resilient European Industry; Publications Office of the European Union: Luxembourg, 2021.

- Weyer, S.; Schmitt, M.; Ohmer, M.; Gorecky, D. Towards industry 4.0—Standardization as the crucial challenge for highly modular, multi-vendor production systems. IFAC-PapersOnLine 2015, 48, 579–584.

- Trstenjak, M.; Gregurić, P.; Janić, Ž.; Salaj, D. Integrated Multilevel Production Planning Solution According to Industry 5.0 Principles. Appl. Sci. 2023, 14, 160.

- Rani, S.; Jining, D.; Shoukat, K.; Shoukat, M.U.; Nawaz, S.A. A Human–Machine Interaction Mechanism: Additive Manufacturing for Industry 5.0—Design and Management. Sustainability 2024, 16, 4158.

- Solanes, J.E.; Gracia, L.; Miro, J.V. Advances in Human–Machine Interaction, Artificial Intelligence, and Robotics. Electronics 2024, 13, 3856.

- Villalba-Diez, J.; Ordieres-Meré, J. Human–Machine integration in processes within industry 4.0 management. Sensors 2021, 21, 5928.

- Yu, H.; Du, S.; Kurien, A.; van Wyk, B.J.; Liu, Q. The Sense of Agency in Human–Machine Interaction Systems. Appl. Sci. 2024, 14, 7327.

- Jiang, J.; Xiao, Y.; Zhan, W.; Jiang, C.; Yang, D.; Xi, L.; Zhang, L.; Hu, H.; Zou, Y.; Liu, J. An HRA Model Based on the Cognitive Stages for a Human-Computer Interface in a Spacecraft Cabin. Symmetry 2022, 14, 1756.

- Li, Y.; Zhu, L. Failure Modes Analysis Related to User Experience in Interactive System Design Through a Fuzzy Failure Mode and Effect Analysis-Based Hybrid Approach. Appl. Sci. 2025, 15, 2954.

- Sapienza, A.; Cantucci, F.; Falcone, R. Modeling Interaction in Human–Machine Systems: A Trust and Trustworthiness Approach. Automation 2022, 3, 242–257.

- Bántay, L.; Abonyi, J. Machine Learning-Supported Designing of Human–Machine Interfaces. Appl. Sci. 2024, 14, 1564.

- Khamaisi, R.K.; Prati, E.; Peruzzini, M.; Raffaeli, R.; Pellicciari, M. UX in AR-supported industrial human–robot collaborative tasks: A systematic review. Appl. Sci. 2021, 11, 10448.

- Grobelna, I.; Mailland, D.; Horwat, M. Design of Automotive HMI: New Challenges in Enhancing User Experience, Safety, and Security. Appl. Sci. 2025, 15, 5572.

- Tufano, F.; Bahadure, S.W.; Tufo, M.; Novella, L.; Fiengo, G.; Santini, S. An Optimization Framework for Information Management in Adaptive Automotive Human–Machine Interfaces. Appl. Sci. 2023, 13, 10687.

- Planke, L.J.; Lim, Y.; Gardi, A.; Sabatini, R.; Kistan, T.; Ezer, N. A cyber-physical-human system for one-to-many UAS operations: Cognitive load analysis. Sensors 2020, 20, 5467.

- Sun, Y.; Sun, Y.; Zhang, J.; Ran, F. Sensor-Based Assessment of Mental Fatigue Effects on Postural Stability and Multi-Sensory Integration. Sensors 2025, 25, 1470.

- Ren, L.; Wu, L.; Feng, T.; Liu, X. A New Method for Inducing Mental Fatigue: A High Mental Workload Task Paradigm Based on Complex Cognitive Abilities and Time Pressure. Brain Sci. 2025, 15, 541.

- Gardner, M.; Castillo, C.S.M.; Wilson, S.; Farina, D.; Burdet, E.; Khoo, B.C.; Atashzar, S.F.; Vaidyanathan, R. A multimodal intention detection sensor suite for shared autonomy of upper-limb robotic prostheses. Sensors 2020, 20, 6097.

- Enjalbert, S.; Gandini, L.M.; Baños, A.P.; Ricci, S.; Vanderhaegen, F. Human–machine interface in transport systems: An industrial overview for more extended rail applications. Machines 2021, 9, 36.

- Onofrejova, D.; Andrejiova, M.; Porubcanova, D.; Pacaiova, H.; Sobotova, L. A Case Study of Ergonomic Risk Assessment in Slovakia with Respect to EU Standard. Int. J. Environ. Res. Public Health 2024, 21, 666.

- da Silva, A.G.; Gomes, M.V.M.; Winkler, I. Virtual Reality and Digital Human Modeling for Ergonomic Assessment in Industrial Product Development: A Patent and Literature Review. Appl. Sci. 2022, 12, 1084.

- Hovanec, M.; Korba, P.; Al-Rabeei, S.; Vencel, M.; Racek, B. Digital Ergonomics—The Reliability of the Human Factor and Its Impact on the Maintenance of Aircraft Brakes and Wheels. Machines 2024, 12, 203.

- Lorenzini, M.; Lagomarsino, M.; Fortini, L.; Gholami, S.; Ajoudani, A. Ergonomic human-robot collaboration in industry: A review. Front. Robot. AI 2023, 9, 813907.

- Antonaci, F.G.; Olivetti, E.C.; Marcolin, F.; Jimenez, I.A.C.; Eynard, B.; Vezzetti, E.; Moos, S. Workplace Well-Being in Industry 5.0: A Worker-Centered Systematic Review. Sensors 2024, 24, 5473.

- Trstenjak, M.; Benešova, A.; Opetuk, T.; Cajner, H. Human Factors and Ergonomics in Industry 5.0—A Systematic Literature Review. Appl. Sci. 2025, 15, 2123.

- Aguiñaga, A.R.; Realyvásquez-Vargas, A.; Lopez Ramirez, M.A.; Quezada, A. Cognitive ergonomics evaluation assisted by an intelligent emotion recognition technique. Appl. Sci. 2020, 10, 1736.

- Papetti, A.; Ciccarelli, M.; Manni, A.; Caroppo, A.; Rescio, G. Investigating the Use of Electrooculography Sensors to Detect Stress During Working Activities. Sensors 2025, 25, 3015.

- Komeijani, M.; Ryen, E.G.; Babbitt, C.W. Bridging the Gap between Eco-Design and the Human Thinking System. Challenges 2016, 7, 5.

- Yan, M.; Rampino, L.; Caruso, G. Comparing User Acceptance in Human–Machine Interfaces Assessments of Shared Autonomous Vehicles: A Standardized Test Procedure. Appl. Sci. 2024, 15, 45.

- Rettenmaier, M.; Schulze, J.; Bengler, K. How much space is required? Effect of distance, content, and color on external human–machine interface size. Information 2020, 11, 346.

- Cardoso, A.; Colim, A.; Bicho, E.; Braga, A.C.; Menozzi, M.; Arezes, P. Ergonomics and Human factors as a requirement to implement safer collaborative robotic workstations: A literature review. Safety 2021, 7, 71.

- Gualtieri, L.; Palomba, I.; Merati, F.A.; Rauch, E.; Vidoni, R. Design of human-centered collaborative assembly workstations for the improvement of operators’ physical ergonomics and production efficiency: A case study. Sustainability 2020, 12, 3606.

- Donisi, L.; Cesarelli, G.; Pisani, N.; Ponsiglione, A.M.; Ricciardi, C.; Capodaglio, E. Wearable Sensors and Artificial Intelligence for Physical Ergonomics: A Systematic Review of Literature. Diagnostics 2022, 12, 3048.

- Stefana, E.; Marciano, F.; Rossi, D.; Cocca, P.; Tomasoni, G. Wearable devices for ergonomics: A systematic literature review. Sensors 2021, 21, 777.

- Lind, C.M.; Diaz-Olivares, J.A.; Lindecrantz, K.; Eklund, J. A wearable sensor system for physical ergonomics interventions using haptic feedback. Sensors 2020, 20, 6010.

- Colim, A.; Faria, C.; Cunha, J.; Oliveira, J.; Sousa, N.; Rocha, L.A. Physical Ergonomic Improvement and Safe Design of an Assembly Workstation through Collaborative Robotics. Safety 2021, 7, 14.

- Maruyama, T.; Ueshiba, T.; Tada, M.; Toda, H.; Endo, Y.; Domae, Y.; Nakabo, Y.; Mori, T.; Suita, K. Digital twin-driven human robot collaboration using a digital human. Sensors 2021, 21, 8266.

- Bläsing, D.; Bornewasser, M. Influence of Increasing Task Complexity and Use of Informational Assistance Systems on Mental Workload. Brain Sci. 2021, 11, 102.

- Brunzini, A.; Peruzzini, M.; Grandi, F.; Khamaisi, R.K.; Pellicciari, M. A preliminary experimental study on the workers’ workload assessment to design industrial products and processes. Appl. Sci. 2021, 11, 12066.

- Méndez, G.M.; Velázquez, F.d.C. Adaptive Augmented Reality Architecture for Optimising Assistance and Safety in Industry 4.0. Big Data Cogn. Comput. 2025, 9, 133.

- Murata, A.; Nakamura, T.; Karwowski, W. Influence of cognitive biases in distorting decision making and leading to critical unfavorable incidents. Safety 2015, 1, 44–58.

- Kistan, T.; Gardi, A.; Sabatini, R. Machine learning and cognitive ergonomics in air traffic management: Recent developments and considerations for certification. Aerospace 2018, 5, 103.

- Hasanain, B. The Role of Ergonomic and Human Factors in Sustainable Manufacturing: A Review. Machines 2024, 12, 159.

- Diego-Mas, J.A. Designing cyclic job rotations to reduce the exposure to ergonomics risk factors. Int. J. Environ. Res. Public Health 2020, 17, 1073.

- Faez, E.; Zakerian, S.A.; Azam, K.; Hancock, K.; Rosecrance, J. An assessment of ergonomics climate and its association with self-reported pain, organizational performance and employee well-being. Int. J. Environ. Res. Public Health 2021, 18, 2610.

- Sorensen, G.; Peters, S.; Nielsen, K.; Nagler, E.; Karapanos, M.; Wallace, L.; Burke, L.; Dennerlein, J.T.; Wagner, G.R. Improving working conditions to promote worker safety, health, and wellbeing for low-wage workers: The workplace organizational health study. Int. J. Environ. Res. Public Health 2019, 16, 1449.

- Salisu, S.; Ruhaiyem, N.I.R.; Eisa, T.A.E.; Nasser, M.; Saeed, F.; Younis, H.A. Motion Capture Technologies for Ergonomics: A Systematic Literature Review. Diagnostics 2023, 13, 2593.

- Bennett, S.T.; Han, W.; Mahmud, D.; Adamczyk, P.G.; Dai, F.; Wehner, M.; Veeramani, D.; Zhu, Z. Usability and Biomechanical Testing of Passive Exoskeletons for Construction Workers: A Field-Based Pilot Study. Buildings 2023, 13, 822.

- de Souza, D.F.; Sousa, S.; Kristjuhan-Ling, K.; Dunajeva, O.; Roosileht, M.; Pentel, A.; Mõttus, M.; Özdemir, M.C.; Gratšjova, Ž. Trust and Trustworthiness from Human-Centered Perspective in Human–Robot Interaction (HRI)—A Systematic Literature Review. Electronics 2025, 14, 1557.

- Sun, Z.; Zhu, M.; Lee, C. Progress in the Triboelectric Human–Machine Interfaces (HMIs)-Moving from Smart Gloves to AI/Haptic Enabled HMI in the 5G/IoT Era. Nanoenergy Adv. 2021, 1, 81–120.

- Prati, E.; Villani, V.; Peruzzini, M.; Sabattini, L. An approach based on VR to design industrial human-robot collaborative workstations. Appl. Sci. 2021, 11, 11773.

- McAtamney, L.; Corlett, E.N. RULA: A survey method for the investigation of work-related upper limb disorders. Appl. Ergon. 1993, 24, 91–99.

- Gómez-Galán, M.; Callejón-Ferre, Á.-J.; Pérez-Alonso, J.; Díaz-Pérez, M.; Carrillo-Castrillo, J.-A. Musculoskeletal risks: RULA bibliometric review. Int. J. Environ. Res. Public Health 2020, 17, 4354.

- Paudel, P.; Kwon, Y.-J.; Kim, D.-H.; Choi, K.-H. Industrial Ergonomics Risk Analysis Based on 3D-Human Pose Estimation. Electronics 2022, 11, 3403.

- Huang, C.; Kim, W.; Zhang, Y.; Xiong, S. Development and validation of a wearable inertial sensors-based automated system for assessing work-related musculoskeletal disorders in the workspace. Int. J. Environ. Res. Public Health 2020, 17, 1–15.

- Kwon, Y.-J.; Kim, D.-H.; Son, B.-C.; Choi, K.-H.; Kwak, S.; Kim, T. A Work-Related Musculoskeletal Disorders (WMSDs) Risk-Assessment System Using a Single-View Pose Estimation Model. Int. J. Environ. Res. Public Health 2022, 19, 9803.

- Navas-Reascos, G.E.; Romero, D.; Rodriguez, C.A.; Guedea, F.; Stahre, J. Wire Harness Assembly Process Supported by a Collaborative Robot: A Case Study Focus on Ergonomics. Robotics 2022, 11, 131.

- Choi, K.-H.; Kim, D.-M.; Cho, M.-U.; Park, C.-W.; Kim, S.-Y.; Kim, M.-J.; Kong, Y.-K. Application of AULA risk assessment tool by comparison with other ergonomic risk assessment tools. Int. J. Environ. Res. Public Health 2020, 17, 1–9.

- Mao, W.; Yang, X.; Wang, C.; Hu, Y.; Gao, T. A Physical Fatigue Evaluation Method for Automotive Manual Assembly: An Experiment of Cerebral Oxygenation with ARE Platform. Sensors 2023, 23, 9410.

- Loske, D.; Klumpp, M.; Keil, M.; Neukirchen, T. Logistics Work, Ergonomics and Social Sustainability: Empirical Musculoskeletal System Strain Assessment in Retail Intralogistics. Logistics 2021, 5, 89.

- Al-Zuheri, A.; Ketan, H.S. Correcting Working Postures in Water Pump Assembly Tasks using the OVAKO Work Analysis System (OWAS). Al-Khwarizmi Eng. J. 2008, 4, 8–17.

- Ojstersek, R.; Buchmeister, B.; Herzog, N.V. Use of data-driven simulation modeling and visual computing methods for workplace evaluation. Appl. Sci. 2020, 10, 1–17.

- Yu, M.; Zhao, D.; Zhang, Y.; Chen, J.; Shan, G.; Cao, Y.; Ye, J. Development of a Novel Biomechanical Framework for Quantifying Dynamic Risks in Motor Behaviors During Aircraft Maintenance. Appl. Sci. 2025, 15, 5390.

- Young, C.; Hamilton-Wright, A.; Oliver, M.L.; Gordon, K.D. Predicting Wrist Posture during Occupational Tasks Using Inertial Sensors and Convolutional Neural Networks. Sensors 2023, 23, 942.

- Swain, A.D.; Guttmann, H.E. Handbook of Human-Reliability Analysis with Emphasis on Nuclear Power Plant Applications; Final Report (No. NUREG/CR-1278; SAND-80-0200); Sandia National Labs.: Albuquerque, NM, USA, 1983.

- Fargnoli, M.; Lombardi, M.; Puri, D. Applying hierarchical task analysis to depict human safety errors during pesticide use in vineyard cultivation. Agriculture 2019, 9, 158.

- Żywiec, J.; Tchórzewska-Cieślak, B.; Sokolan, K. Assessment of Human Errors in the Operation of the Water Treatment Plant. Water 2024, 16, 2399.

- Torres, Y.; Nadeau, S.; Landau, K. Classification and quantification of human error in manufacturing: A case study in complex manual assembly. Appl. Sci. 2021, 11, 1–23.

- Barosz, P.; Gołda, G.; Kampa, A. Efficiency analysis of manufacturing line with industrial robots and human operators. Appl. Sci. 2020, 10, 2862.

- Zhu, L.; Lv, J. Review of Studies on User Research Based on EEG and Eye Tracking. Appl. Sci. 2023, 13, 6502.

- Ricci, A.; Ronca, V.; Capotorto, R.; Giorgi, A.; Vozzi, A.; Germano, D.; Borghini, G.; Di Flumeri, G.; Babiloni, F.; Aricò, P. Understanding the Unexplored: A Review on the Gap in Human Factors Characterization for Industry 5.0. Appl. Sci. 2025, 15, 1822.

- Keskin, M.; Ooms, K.; Dogru, A.O.; De Maeyer, P. Exploring the Cognitive Load of Expert and Novice Map Users Using EEG and Eye Tracking. ISPRS Int. J. Geo-Inf. 2020, 9, 429.

- Yu, R.; Schubert, G.; Gu, N. Biometric Analysis in Design Cognition Studies: A Systematic Literature Review. Buildings 2023, 13, 630.

- Slanzi, G.; Balazs, J.; Velasquez, J.D. Predicting Web User Click Intention Using Pupil Dilation and Electroencephalogram Analysis. In Proceedings of the 2016 IEEE/WIC/ACM International Conference on Web Intelligence, Omaha, NE, USA, 13–16 October 2016; pp. 417–420.

- Paing, M.P.; Juhong, A.; Pintavirooj, C. Design and Development of an Assistive System Based on Eye Tracking. Electronics 2022, 11, 535.

- Tiboni, M.; Borboni, A.; Vérité, F.; Bregoli, C.; Amici, C. Sensors and Actuation Technologies in Exoskeletons: A Review. Sensors 2022, 22, 884.

- Pesenti, M.; Antonietti, A.; Gandolla, M.; Pedrocchi, A. Towards a functional performance validation standard for industrial low-back exoskeletons: State of the art review. Sensors 2021, 21, 808.

- Zhang, X.; Qu, Y.; Zhang, G.; Wang, Z.; Chen, C.; Xu, X. Review of sEMG for Exoskeleton Robots: Motion Intention Recognition Techniques and Applications. Sensors 2025, 25, 2448.

- Song, Z.; Zhao, P.; Wu, X.; Yang, R.; Gao, X. An Active Control Method for a Lower Limb Rehabilitation Robot with Human Motion Intention Recognition. Sensors 2025, 25, 713.

- Kian, A.; Widanapathirana, G.; Joseph, A.M.; Lai, D.T.H.; Begg, R. Application of Wearable Sensors in Actuation and Control of Powered Ankle Exoskeletons: A Comprehensive Review. Sensors 2022, 22, 2244.

- Lobov, S.; Krilova, N.; Kastalskiy, I.; Kazantsev, V.; Makarov, V.A. Latent factors limiting the performance of sEMG-interfaces. Sensors 2018, 18, 1122.

- Lokomat—Exoskeleton Report. Available online: https://exoskeletonreport.com/product/lokomat/ (accessed on 6 May 2025).

- Szondy, D. Lockheed Martin’s Fortis Tool Arm takes the load to cut fatigue. Available online: https://newatlas.com/lockheed-martin-fortis-tool-arm/49137/ (accessed on 6 May 2025).

- HAL Senses Bio-electrical Signals and Complete Intended Motion of Wearers. Cyberdyne Care Robotics GmbH. Available online: https://www.cyberdyne.eu/en/products/medical-device/hal-motion-principal/ (accessed on 6 May 2025).

- Myosuit. Available online: https://exoskeletonreport.com/product/myosuit/ (accessed on 6 May 2025).

- Laevo V2—Exoskeleton Report. Available online: https://exoskeletonreport.com/product/laevo/ (accessed on 6 May 2025).

- Ottobock Paexo Shoulder. Available online: https://corporate.ottobock.com/en/company/newsroom/media-information/exoskeletons (accessed on 6 May 2025).

- MyoPro—Exoskeleton Report. Available online: https://exoskeletonreport.com/product/myopro/ (accessed on 6 May 2025).

- Kuber, P.M.; Rashedi, E. Training and Familiarization with Industrial Exoskeletons: A Review of Considerations, Protocols, and Approaches for Effective Implementation. Biomimetics 2024, 9, 520.

- Willmott Dixon Trials High-Tech Robotic Vest That Could Revolutionise Construction. Willmott Dixon. Available online: https://www.willmottdixon.co.uk/news/willmott-dixon-trials-high-tech-robotic-vest-that-could-revolutionise-construction (accessed on 6 May 2025).

- Noonee’s Wearable Chairless Chair 2.0 Boasts Improved Comfort. Available online: https://www.homecrux.com/second-generation-wearable-chairless-chair/135899/ (accessed on 6 May 2025).

- EXOPA. Available online: https://www.ergonomiesite.be/exoskeleton-potential-assessment-tool-exopa/ (accessed on 6 May 2025).

- Digitalisierung: Technik Für die Arbeitswelt von Morgen. Available online: https://industrieanzeiger.industrie.de/technik/entwicklung/technik-fuer-die-arbeitswelt-von-morgen/ (accessed on 6 May 2025).

- Exoskeletons: State-of-the-Art, Design Challenges, and Future Directions—Scientific Figure on ResearchGate. Available online: https://www.researchgate.net/figure/Upper-limb-exoskeletons-for-rehabilitation-and-assistive-purposes-A-ARMin-III-B_fig1_330631170 (accessed on 6 May 2025).

- Exoskeleton Arm 3D Model 3D Printable Rigged. CGTrader. Available online: https://www.cgtrader.com/3d-print-models/science/engineering/exoarm (accessed on 6 May 2025).

- SUITX MAX. Available online: https://www.industrytap.com/suitx-selected-winner-chairmans-choice-annual-big-innovation-awards/40856 (accessed on 6 May 2025).

- IX BACK VOLTON. Available online: https://exoskeletonreport.com/product/ix-back/ (accessed on 6 May 2025).

- MATE-XB Exoskeleton—Comau. Available online: https://www.comau.com/en/our-offer/products-and-solutions/wearable-robotics-exoskeletons/mate-xb-exoskeleton/ (accessed on 6 May 2025).

- Kaasinen, E.; Anttila, A.-H.; Heikkilä, P.; Laarni, J.; Koskinen, H.; Väätänen, A. Smooth and Resilient Human–Machine Teamwork as an Industry 5.0 Design Challenge. Sustainability 2022, 14, 2773.

- Alves, J.; Lima, T.M.; Gaspar, P.D. Is Industry 5.0 a Human-Centred Approach? A Systematic Review. Processes 2023, 11, 193.

- Matheson, E.; Minto, R.; Zampieri, E.G.G.; Faccio, M.; Rosati, G. Human–robot collaboration in manufacturing applications: A review. Robotics 2019, 8, 100.

- Matthias, B. ISO/TS 15066-Collaborative Robots Present Status. In Proceedings of the European Robotics Forum 2015, Vienna, Austria, 11–13 March 2015.

- Hollnagel, E.; Woods, D.D. Resilience Engineering: Concepts and Precepts; CRC Press: Boca Raton, FL, USA, 2006.

- Belliger, A.; Krieger, D.J. Organizing Networks: An Actor-Network Theory of Organizations; Transcript Verlag: Bielefeld, Germany, 2016.

- Purcărea, A.; Albulescu, S.; Negoiță, O.D.; Dănălache, F.; Corocăescu, M. Modeling the human resource development process in the automotive industry services. UPB Sci. Bull. Ser. D Mech. Eng. 2016, 78, 263–275.

- Schirmer, F.; Kranz, P.; Rose, C.G.; Schmitt, J.; Kaupp, T. Towards Dynamic Human–Robot Collaboration: A Holistic Framework for Assembly Planning. Electronics 2025, 14, 190.

- Boschetti, G.; Minto, R.; Trevisani, A. Experimental investigation of a cable robot recovery strategy. Robotics 2021, 10, 35.

- Madni, A.M.; Madni, C.C. Architectural framework for exploring adaptive human-machine teaming options in simulated dynamic environments. Systems 2018, 6, 44.

- Zheng, M.; Wang, L.; Tian, Y. Does Cognitive Load Influence Moral Judgments? The Role of Action–Omission and Collective Interests. Behav. Sci. 2025, 15, 361.

- Martini, B.; Bellisario, D.; Coletti, P. Human-Centered and Sustainable Artificial Intelligence in Industry 5.0: Challenges and Perspectives. Sustainability 2024, 16, 5448.

- Alfaro-Viquez, D.; Zamora-Hernandez, M.; Fernandez-Vega, M.; Garcia-Rodriguez, J.; Azorin-Lopez, J. A Comprehensive Review of AI-Based Digital Twin Applications in Manufacturing: Integration Across Operator, Product, and Process Dimensions. Electronics 2025, 14, 646.

- Krupas, M.; Kajati, E.; Liu, C.; Zolotova, I. Towards a Human-Centric Digital Twin for Human–Machine Collaboration: A Review on Enabling Technologies and Methods. Sensors 2024, 24, 2232.

- Alojaiman, B. Technological Modernizations in the Industry 5.0 Era: A Descriptive Analysis and Future Research Directions. Processes 2023, 11, 1318.

- Fraga-Lamas, P.; Barros, D.; Lopes, S.I.; Fernández-Caramés, T.M. Mist and Edge Computing Cyber-Physical Human-Centered Systems for Industry 5.0: A Cost-Effective IoT Thermal Imaging Safety System. Sensors 2022, 22, 8500.

- Hassan, M.A.; Zardari, S.; Farooq, M.U.; Alansari, M.M.; Nagro, S.A. Systematic Analysis of Risks in Industry 5.0 Architecture. Appl. Sci. 2024, 14, 1466.

- Universal Robots—UR5e Case Studies. Available online: https://www.universal-robots.com/products/ur5e/ (accessed on 14 May 2025).

- KUKA—LBR iiwa in Automotive. Available online: https://www.kuka.com/ (accessed on 14 May 2025).

- FANUC—CRX Collaborative Robots. Available online: https://www.fanuc.eu/ (accessed on 14 May 2025).

- Rethink Robotics—Sawyer Applications. Available online: https://robotsguide.com/robots/sawyer (accessed on 14 May 2025).

- ABB Robotics—YuMi Collaborative Robot. Available online: https://new.abb.com/products/robotics/robots/collaborative-robots/yumi (accessed on 14 May 2025).

- Universal Robots—UR10e Applications. Available online: https://www.universal-robots.com/products/ur10e/ (accessed on 14 May 2025).

- ABB—GoFa CRB 15000. Available online: https://new.abb.com/products/robotics/robots/collaborative-robots/crb-15000 (accessed on 14 May 2025).

- FANUC—CR-35iA Collaborative Robot. Available online: https://www.fanucamerica.com/products/robots/series/collaborative-robot (accessed on 14 May 2025).

- Techman Robot—TM5 Series. Available online: https://www.tm-robot.com/ (accessed on 14 May 2025).

- Yaskawa Motoman—HC10. Available online: https://www.motoman.com/ (accessed on 14 May 2025).

- Doosan Robotics—M Series. Available online: https://www.doosanrobotics.com/ (accessed on 14 May 2025).

- AUBO Robotics—AUBO-i5. Available online: https://www.aubo-robotics.com/ (accessed on 14 May 2025).

- Kinova Robotics—Link 6. Available online: https://www.kinovarobotics.com/ (accessed on 14 May 2025).

- AKKA Technologies—Air-Cobot. Available online: https://www.akka-technologies.com/en/innovation/projects/aircobot (accessed on 14 May 2025).

- Dobot—Magician Robot. Available online: https://www.dobot.cc/ (accessed on 14 May 2025).

- Rethink Robotics—Baxter. Available online: https://robotsguide.com/robots/baxter (accessed on 14 May 2025).

- Neura Robotics—Product Portfolio. Available online: https://neura-robotics.com/products/ (accessed on 14 May 2025).

- Siasun Robotics—SR Series. Available online: https://www.siasun.com/ (accessed on 14 May 2025).

- Vidal-Balea, A.; Blanco-Novoa, O.; Fraga-Lamas, P.; Vilar-Montesinos, M.; Fernández-Caramés, T.M. Creating collaborative augmented reality experiences for industry 4.0 training and assistance applications: Performance evaluation in the shipyard of the future. Appl. Sci. 2020, 10, 9073.

- Lee, J.; Ma, B. An Operator Training Simulator to Enable Responses to Chemical Accidents through Mutual Cooperation between the Participants. Appl. Sci. 2023, 13, 1382.

- Afolabi, A.O.; Nnaji, C.; Okoro, C. Immersive Technology Implementation in the Construction Industry: Modeling Paths of Risk. Buildings 2022, 12, 363.

- Mourtzis, D.; Angelopoulos, J. Development of an Extended Reality-Based Collaborative Platform for Engineering Education: Operator 5.0. Electronics 2023, 12, 3663.

- Badia, S.B.i.; Silva, P.A.; Branco, D.; Pinto, A.; Carvalho, C.; Menezes, P.; Almeida, J.; Pilacinski, A. Virtual Reality for Safe Testing and Development in Collaborative Robotics: Challenges and Perspectives. Electronics 2022, 11, 1726.

- Gabajová, G.; Furmannová, B.; Medvecká, I.; Grznár, P.; Krajčovič, M.; Furmann, R. Virtual training application by use of augmented and virtual reality under university technology enhanced learning in Slovakia. Sustainability 2019, 11, 6677.

- Zhao, H.; Zhao, Q.H.; Ślusarczyk, B. Sustainability and digitalization of corporate management based on augmented/virtual reality tools usage: China and other world IT companies’ experience. Sustainability 2019, 11, 4717.

- Židek, K.; Lazorík, P.; Piteľ, J.; Hošovský, A. An automated training of deep learning networks by 3D virtual models for object recognition. Symmetry 2019, 11, 496.

- Bahubalendruni, M.V.A.R.; Putta, B. Assembly Sequence Validation with Feasibility Testing for Augmented Reality Assisted Assembly Visualization. Processes 2023, 11, 2094.

- Lanyi, C.S.; Withers, J.D.A. Striving for a safer and more ergonomic workplace: Acceptability and human factors related to the adoption of AR/VR glasses in industry 4.0. Smart Cities 2020, 3, 289–307.

- Othman, U.; Yang, E. Human–Robot Collaborations in Smart Manufacturing Environments: Review and Outlook. Sensors 2023, 23, 5663.

- Mourtzis, D.; Angelopoulos, J.; Panopoulos, N. Smart manufacturing and tactile internet based on 5G in industry 4.0: Challenges, Applications and New Trends. Electronics 2021, 10, 3175.

- Zheng, Z.; Wu, Z.; Zhao, R.; Ni, Y.; Jing, X.; Gao, S. A Review of EMG-, FMG-, and EIT-Based Biosensors and Relevant Human–Machine Interactivities and Biomedical Applications. Biosensors 2022, 12, 516.

- Brill, S.; Payre, W.; Debnath, A.; Horan, B.; Birrell, S. External Human–Machine Interfaces for Automated Vehicles in Shared Spaces: A Review of the Human–Computer Interaction Literature. Sensors 2023, 23, 4454.

- Panchetti, T.; Pietrantoni, L.; Puzzo, G.; Gualtieri, L.; Fraboni, F. Assessing the Relationship between Cognitive Workload, Workstation Design, User Acceptance and Trust in Collaborative Robots. Appl. Sci. 2023, 13, 1720.

- Iarlori, S.; Perpetuini, D.; Tritto, M.; Cardone, D.; Tiberio, A.; Chinthakindi, M.; Filippini, C.; Cavanini, L.; Freddi, A.; Ferracuti, F.; et al. An Overview of Approaches and Methods for the Cognitive Workload Estimation in Human–Machine Interaction Scenarios through Wearables Sensors. BioMedInformatics 2024, 4, 1155–1173.

| Factor | Description | Impact on HMI |
|---|---|---|
| Working Posture | Misaligned or static body positions during tasks | Increases musculoskeletal strain and task inaccuracy |
| Muscle Fatigue | Local or systemic fatigue due to repetitive or high-load movements | Reduces performance and increases risk of errors |
| Physical Load | Manual handling, lifting, and force exertion | Leads to overexertion and decreased task tolerance |
| Wearable Sensors | IMU, EMG, and pressure-based feedback for real-time assessments | Enables posture monitoring and ergonomic corrections |
| Haptic Feedback | Vibrotactile cues guiding movement correction | Supports self-training and ergonomic behavior learning |
| Cobots and HRC | Collaborative robotics used to reduce the biomechanical workload | Prevents WMSDs and increases safety and comfort |

| Grand Score | Action Level | Interpretation |
|---|---|---|
| 1–2 | Level 1 | Acceptable posture |
| 3–4 | Level 2 | Further investigation needed |
| 5–6 | Level 3 | Investigation and changes required soon |
| 7 | Level 4 | Immediate investigation and changes |
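
The RULA action-level table above amounts to a simple threshold lookup, which can be sketched in a few lines. This is only an illustrative sketch: the function name `rula_action_level` is hypothetical, and the code assumes that the grand score has already been computed from the RULA scoring sheets and lies between 1 and 7.

```python
from typing import Tuple


def rula_action_level(grand_score: int) -> Tuple[int, str]:
    """Map a RULA grand score (1-7) to (action level, interpretation).

    Hypothetical helper: thresholds and wording follow the table above;
    computing the grand score itself is outside the scope of this sketch.
    """
    if not 1 <= grand_score <= 7:
        raise ValueError("RULA grand score must be between 1 and 7")
    if grand_score <= 2:
        return 1, "Acceptable posture"
    if grand_score <= 4:
        return 2, "Further investigation needed"
    if grand_score <= 6:
        return 3, "Investigation and changes required soon"
    return 4, "Immediate investigation and changes"


# Example: a grand score of 5 falls into action level 3.
level, meaning = rula_action_level(5)
print(f"Level {level}: {meaning}")
```

Rejecting out-of-range scores explicitly, rather than clamping them, keeps a scoring mistake upstream from being silently reported as an action level.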

| Action Category | Interpretation | Required Action |
|---|---|---|
| 1 | Normal posture, no action needed | None |
| 2 | Slightly risky body position | Correct in the near future |
| 3 | High-risk body position | Correct as soon as possible |
| 4 | Severely harmful posture | Urgent correction is needed |

| Band | Frequency (Hz) | Associated State |
|---|---|---|
| Delta | <4 | Deep sleep |
| Theta | 4–8 | Drowsiness, cognitive effort |
| Alpha | 8–12 | Relaxation, alertness |
| Beta | 13–30 | Active concentration |
| Gamma | >30 | High-level cognition |
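
As a rough illustration of how the band limits above could be applied to a dominant EEG frequency, the following sketch assigns a frequency in Hz to a band name. The function name `eeg_band` is hypothetical, and the boundary handling is an assumption rather than part of the table: 8 Hz is assigned to Alpha, and the unlisted 12–13 Hz interval is also treated as Alpha.

```python
def eeg_band(frequency_hz: float) -> str:
    """Return the EEG band name for a frequency in Hz.

    Hypothetical helper. Boundary choices are assumptions: intervals are
    treated as half-open, 8 Hz counts as Alpha, and the 12-13 Hz gap left
    open by the table is assigned to Alpha.
    """
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    if frequency_hz < 4:
        return "Delta"
    if frequency_hz < 8:
        return "Theta"
    if frequency_hz < 13:
        return "Alpha"
    if frequency_hz <= 30:
        return "Beta"
    return "Gamma"


# Example: label a few dominant frequencies.
for f in (2.0, 6.0, 10.0, 20.0, 40.0):
    print(f, eeg_band(f))
```

In practice, workload studies usually work with band power ratios computed from a spectrum rather than a single frequency, so this lookup only fixes the band boundaries that such an analysis would integrate over.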

| Metric | Significance |
|---|---|
| Fixation Duration | Indicates cognitive processing [77] |
| Number of Fixations | Related to the attention distribution [77] |
| Saccade Velocity/Amplitude | Associated with cognitive effort or stress [77] |
| Heat Maps and AOIs | Highlight key areas of visual attention [77] |
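
Two of the metrics above, the number of fixations and the fixation duration, together with a per-AOI dwell time, can be derived from a list of detected fixations. The sketch below is illustrative only: the `Fixation` record, its field names, and the AOI labels are hypothetical, and it assumes fixations have already been detected by the eye tracker's own software.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Fixation:
    """One detected fixation (hypothetical record layout)."""
    start_ms: float
    end_ms: float
    aoi: str  # label of the area of interest the gaze fell on


def summarize_fixations(fixations: List[Fixation]) -> Dict[str, object]:
    """Compute fixation count, mean fixation duration, and dwell time per AOI."""
    durations = [f.end_ms - f.start_ms for f in fixations]
    dwell: Dict[str, float] = defaultdict(float)
    for fixation, duration in zip(fixations, durations):
        dwell[fixation.aoi] += duration
    count = len(durations)
    mean_duration = sum(durations) / count if count else 0.0
    return {
        "count": count,
        "mean_duration_ms": mean_duration,
        "dwell_ms_by_aoi": dict(dwell),
    }


if __name__ == "__main__":
    sample = [
        Fixation(0, 200, "alarm_panel"),
        Fixation(250, 550, "pressure_gauge"),
        Fixation(600, 700, "alarm_panel"),
    ]
    print(summarize_fixations(sample))
```

Summed dwell time per AOI is the kind of quantity a heat map visualizes; saccade velocity and amplitude need raw gaze samples and are not covered here.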

| Name | Type | Application | Operating Principle Description |
|---|---|---|---|
| Lokomat | Lower limb, active | Gait rehabilitation | Treadmill-based system with actuated hip/knee joints for repeated motion training. [87] |
| Levitate Airframe | Upper limb, passive | Overhead work fatigue reduction | A passive exoskeleton that uses a pulley-based mechanism to transfer the weight of the arms to the hips, relieving strain on the shoulders and upper back. [88] |
| HAL | Full body, active | Rehabilitation and industrial support | Uses sEMG to predict user motion, driving actuators accordingly. [89] |
| Myosuit | Lower limb, soft | Mobility assistance | Textile suit with cable-driven actuation for lower-limb extension. [90] |
| Laevo V2 | Trunk, passive | Industrial lumbar support | Spring-based lumbar support that redistributes the load during bending. [91] |
| Ottobock Paexo Shoulder | Upper limb, passive | Overhead industrial work | Spring-assisted support that reduces strain on the shoulders by transferring the load to the hips. [92] |
| MyoPro | Upper limb, active | Stroke rehab/assistance | EMG-based elbow and hand orthosis, assists with volitional movement. [93] |
| MATE-XB | Trunk, passive | Postural stabilization | Passive support structure targeting lower back stability. [94] |
| EksoVest | Upper limb, passive | Overhead work reduction | Reduces arm fatigue via passive spring/hinge mechanisms. [95] |
| Chairless Chair | Lower limb, passive | Industrial: fatigue reduction for standing workers | A passive leg-worn exoskeleton that locks at the knees to provide seated support anywhere, reducing fatigue by shifting weight to the heels. [96] |
| Lockheed Martin’s Fortis Tool Arm | Upper limb, passive | Industrial: tool support and fatigue reduction | A passive, waist-mounted arm support that redirects the weight of heavy tools (up to 50 lbs) to the ground, reducing user fatigue and increasing productivity. [88] |
| ExoPA | Assessment tool | Evaluating exoskeleton suitability for overhead tasks | Analyzes task demands to assess the ergonomic benefit of using an exoskeleton. [97] |
| Stuttgart Exo-Jacket | Upper limb, hybrid | Industrial cable installation | Active shoulder/elbow support with a gas spring and force grounding. [98] |
| Exo-UL7 | Upper limb, active | General haptic assistance | Electric actuators on shoulder/elbow; suitable for ADL support. [99] |
| ExoArm | Upper limb, active | Assistive/industrial use | Pneumatically actuated shoulder and elbow joints. [100] |
| SuitX MAX | Modular industrial, passive | Lifting, overhead work, injury prevention | Combines back, shoulder, and leg support modules to reduce strain and fatigue during physically demanding tasks. [101] |
| IX BACK VOLTON | Lower back, active | Dynamic heavy load handling | AI-driven, provides up to 17 kg of support and 8 h of battery life. [102] |
| Comau MATE | Upper limb, passive | Overhead work, assembly tasks | A passive upper-limb exoskeleton that reduces fatigue and improves posture during repetitive tasks. [103] |

| Type of Interaction | Description |
|---|---|
| Coexistence | The human and robot operate in the same area but without overlapping tasks. [106] |
| Synchronization | Shared space, but tasks are performed at different times. [106] |
| Cooperation | Simultaneous task execution in a shared space with different goals. [106] |
| Collaboration | Joint execution of shared tasks, requiring real-time interaction. [106] |

| Use Case | Industry | Description |
|---|---|---|
| Assembly Line Cobots | Automotive | Cobots assist in part fitting and inspection alongside human workers [104]. |
| Precision Welding | Aerospace | Human–robot shared control ensures accuracy and adaptability [105]. |
| Warehouse Sorting | Logistics | Cobots carry out repetitive lifting tasks to reduce worker fatigue [106]. |
| Human–AI Teams | Smart Factories | Cognitive agents assist humans in real-time decision-making [108]. |

| Cobot Name | Manufacturer | Application Area | Key Functions | Sustainability in Industry 5.0 | Documented Benefits |
|---|---|---|---|---|---|
| UR5e | Universal Robots | Electronic assembly | Screwdriving, quality inspection | Energy-efficient, reduces scrap through precision | 30% cycle time reduction and high repeatability [121] |
| LBR iiwa | KUKA | Automotive manufacturing | Precision joining, welding, HRC | Supports lightweight, precise processes; lower emissions | Enhanced precision, improved worker safety [122] |
| CRX-10iA | FANUC | Logistics, warehousing | Box handling, sorting | Optimizes logistics for energy efficiency | Reduced operator fatigue, incident-free deployment [123] |
| Sawyer | Rethink Robotics | Packaging, plastics | Machine tending, injection molding | Reduces plastic waste through precise handling | ROI in under 12 months, flexible deployment [124] |
| YuMi | ABB | Small part assembly | Dual-arm pick-and-place, testing | Supports lean production and waste reduction | Ideal for close collaboration, compact design [125] |
| UR10e | Universal Robots | Palletizing, welding | Heavy-load handling, MIG welding | Increases welding efficiency, reduces material waste | Up to 50% productivity increase, reduced errors [126] |
| GoFa CRB 15000 | ABB | Assembly, logistics | Material handling, quality inspection | Enhances process optimization, reduces transportation impact | High operation speed, easy to program [127] |
| CR-35iA | FANUC | Heavy-duty industry | Large part manipulation | Enables safe, efficient heavy tasks, reducing energy use | 35 kg payload, certified safety [128] |
| TM5 | Techman Robot | Visual inspection | Integrated camera, pick-and-place | Reduces inspection waste and improves defect detection | Reduced inspection costs, fast deployment [129] |
| HC10 | Yaskawa Motoman | Assembly, material handling | Manual guidance, flexible workspaces | Improves the reusability of production lines | Easy reprogramming, compact [130] |
| Doosan M0609 | Doosan Robotics | Electronics, packaging | Soldering, gluing, box packing | Promotes precise soldering, minimizing waste | High precision, intuitive interface [131] |
| AUBO-i5 | AUBO Robotics | Research, education | Pick-and-place, small part assembly | Encourages local, small-scale innovation and reuse | Low cost, ideal for prototyping and training [132] |
| Kinova Link 6 | Kinova Robotics | Assembly, inspection | Accurate handling, visual inspection | Low energy use, promotes a modular, flexible design | Compact, easy integration [133] |
| Air-Cobot | AKKA Technologies | Aerospace | Aircraft visual inspection | Supports predictive maintenance, reduces travel emissions | Time-saving, improved accuracy [134] |
| Dobot Magician | Dobot | Education, prototyping | 3D printing, laser engraving, pick-and-place | Enables circular economy practices via prototyping | Versatile, great for labs and training [135] |
| Baxter | Rethink Robotics | Light assembly, education | Guided teaching, dual-arm collaboration | Flexible for multiple tasks, supports reuse | Simple programming, safe around humans [136] |
| LARA | Neura Robotics | Manufacturing, logistics | Material handling, adaptive HRC | Facilitates adaptive production, reduces energy waste | AI-integrated, highly responsive [137] |
| SR6C | Siasun Robotics | Precision assembly | High-precision tasks, inspection | High precision minimizes material loss and defects | Compact and accurate design [138] |

| Method | Hardware Used | Benefits | Measured Outcomes | Reference |
|---|---|---|---|---|
| VR Simulator | HTC Vive Pro | Safe, immersive learning | Reduced errors, faster completion | [142] |
| AR Step Guidance | Microsoft HoloLens | Hands-free, real-time support | Improved accuracy and efficiency | [142] |
| MR Collaborative | CAVE System | Multi-user training scenarios | Enhanced coordination | [144] |
| CNN Object Detection | Epson Moverio AR | Automated guidance, fast training | Reliable identification in AR task | [144] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ioniță, A.-R.; Anghel, D.-C.; Boudouh, T. Mind, Machine, and Meaning: Cognitive Ergonomics and Adaptive Interfaces in the Age of Industry 5.0. Appl. Sci. 2025, 15, 7703. https://doi.org/10.3390/app15147703