Computer Vision Meets Generative Models in Agriculture: Technological Advances, Challenges and Opportunities

Abstract

1. Introduction

2. Review Methodology

- Core Themes: This dimension defined the study’s broader context, including terms such as “Precision Agriculture”, “Smart Farming”, and “Digital Agriculture”.

- Core Technologies: This dimension focused on the key technical methods central to this review, with search terms like “Artificial Intelligence (AI)”, “Computer Vision”, “Deep Learning”, “Machine Learning”, “Generative AI”, “Generative Adversarial Networks (GANs)”, “Foundation Models”, “Convolutional Neural Networks (CNNs)”, and “Transformer”.

- Application Tasks: This dimension covered specific application scenarios of AI and computer vision in agriculture, with keywords such as “Disease Detection”, “Pest Detection”, “Weed Detection”, “Yield Prediction”, “Fruit Grading”, “Maturity Detection”, “Behaviour Recognition”, “Robotic Harvesting”, and “Autonomous Navigation”.

- Enabling Systems and Sensing Technologies: This dimension covered the hardware platforms and data acquisition technologies that support these applications, for instance “Unmanned Aerial Vehicle (UAV)/Drone”, “Internet of Things (IoT)”, “Remote Sensing”, “Hyperspectral Imaging”, “Multispectral Imaging”, “Stereo Vision”, and “Edge Computing”.
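The four dimensions above lend themselves to a Boolean search string. The following is a minimal sketch of how such a string could be assembled, assuming terms are joined with OR inside a dimension and with AND across dimensions. That combination rule, the helper name `build_query`, the dictionary keys, and the simplified spelling of some terms (for example, splitting “Unmanned Aerial Vehicle (UAV)/Drone” into three terms) are illustrative assumptions and are not taken from the review.

```python
# Keyword dimensions as listed in the review; abbreviations in parentheses are
# dropped and compound entries are split, which is an editorial simplification.
SEARCH_DIMENSIONS = {
    "core_themes": [
        "Precision Agriculture", "Smart Farming", "Digital Agriculture",
    ],
    "core_technologies": [
        "Artificial Intelligence", "Computer Vision", "Deep Learning",
        "Machine Learning", "Generative AI", "Generative Adversarial Networks",
        "Foundation Models", "Convolutional Neural Networks", "Transformer",
    ],
    "application_tasks": [
        "Disease Detection", "Pest Detection", "Weed Detection",
        "Yield Prediction", "Fruit Grading", "Maturity Detection",
        "Behaviour Recognition", "Robotic Harvesting", "Autonomous Navigation",
    ],
    "enabling_systems": [
        "Unmanned Aerial Vehicle", "UAV", "Drone", "Internet of Things",
        "Remote Sensing", "Hyperspectral Imaging", "Multispectral Imaging",
        "Stereo Vision", "Edge Computing",
    ],
}


def build_query(dimensions, required=("core_themes", "core_technologies")):
    """Join terms with OR inside each dimension and AND across dimensions.

    The AND/OR structure is an assumption; the review does not state the
    exact search string that was submitted to the databases.
    """
    groups = []
    for name in required:
        terms = " OR ".join(f'"{term}"' for term in dimensions[name])
        groups.append(f"({terms})")
    return " AND ".join(groups)


# Default: broad context combined with core technologies.
query = build_query(SEARCH_DIMENSIONS)
print(query)
```

Passing `required=tuple(SEARCH_DIMENSIONS)` would require a match in all four dimensions, which narrows the result set considerably; whether the dimensions were combined in this strict way is not stated in the text.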

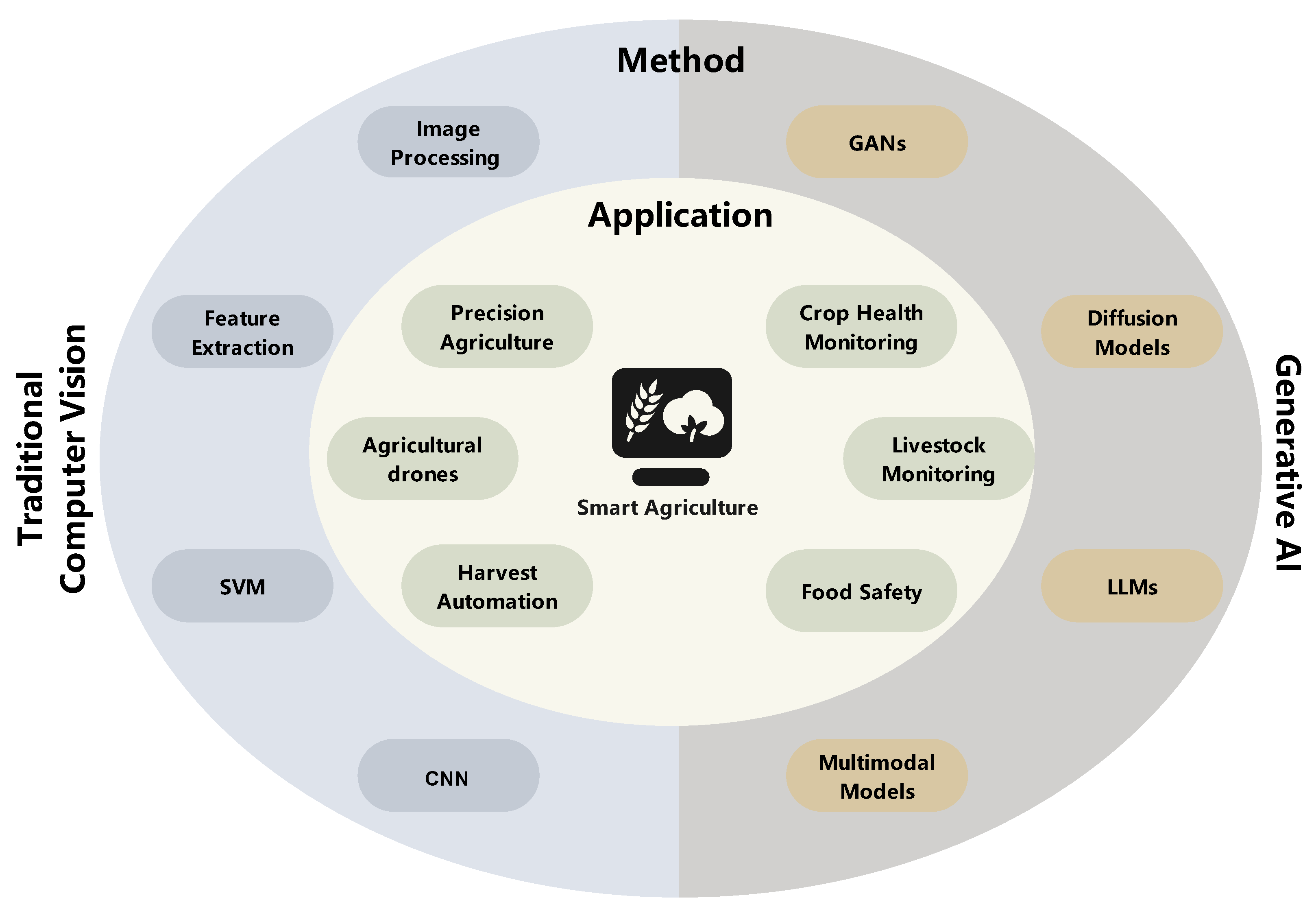

3. An Overview of GenAI-Driven CV Frameworks

3.1. Traditional CV Technologies in Smart Agriculture

3.2. A Paradigm Shift from Traditional CV to Generative AI

3.3. GenAI-Driven CV Framework with Agricultural IoT and Edge Computing Systems

- Data Acquisition and Edge Processing (IoT and Edge): An autonomous drone, acting as an agricultural IoT device, captures multispectral imagery of the field. Simultaneously, in-ground sensors report real-time soil moisture and temperature. Instead of transmitting vast amounts of raw data to the cloud, this information is processed locally on an edge computing device. This ensures a low-latency response, which is critical for guiding immediate in-field operations.

- The Data and Domain Challenge: The central problem is that a pretrained, generic CV model would likely fail. It lacks sufficient real-world examples of this specific crop variety’s nutrient deficiency under today’s lighting, atmospheric, and growth-stage conditions. This is a classic domain shift problem, in which a model trained in one context performs poorly in another.

- Real-time, Physics-Informed Synthesis (GenAI and IoT): This is the step where GenAI contributes most. A lightweight generative model (e.g., a diffusion model) running on the edge device takes the real-time, multimodal IoT inputs, such as ambient light conditions from the camera and soil data, as conditional parameters [83,91]. Leveraging physics-informed neural rendering, the model synthesises a small, bespoke dataset of photorealistic images that depict how the early signs of deficiency should appear under the current field conditions, thereby narrowing the domain gap.

- Adaptive Perception (CV and GenAI): The onboard CV model is then fine-tuned on these newly generated, context-specific synthetic images. The perception model is thereby adapted to the current environment and can more accurately identify the subtle spectral and textural anomalies on the corn leaves that indicate deficiency.

- Actionable Insight for the Practitioner: Finally, the system moves beyond mere detection. It generates a precise, intuitive heat map of the affected areas, which is relayed to the farmer’s tablet or a variable-rate tractor. This enables a targeted application of fertiliser only where needed, optimising resource use and maximising crop health.
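The data flow of the five steps above can be illustrated with a deliberately small toy example. It is a sketch under strong assumptions, not the framework itself: the conditional generator is replaced by a random sampler of a one-dimensional greenness index, fine-tuning is replaced by recalibrating a single threshold, and soil data are omitted as a conditioning input for brevity. All names (`FieldContext`, `synthesise`, `adapt_threshold`, `deficiency_map`, `prescription`), the class means, and the 40 kg/ha maximum rate are hypothetical.

```python
import random
from dataclasses import dataclass
from statistics import mean


@dataclass
class FieldContext:
    # Conditioning signal from the IoT layer (assumed scale: 1.0 = reference light).
    ambient_light: float


def synthesise(ctx, n, seed=0):
    """Toy stand-in for the conditional generative model.

    Returns (greenness_index, is_deficient) pairs whose values scale with the
    current ambient light. A real system would sample images from a
    conditional diffusion model or GAN; this sampler only mimics the idea
    that synthetic data follow today's conditions.
    """
    rng = random.Random(seed)
    samples = []
    for i in range(n):
        deficient = i % 2 == 1
        base = 0.45 if deficient else 0.70  # illustrative class means
        samples.append((base * ctx.ambient_light + rng.gauss(0.0, 0.02), deficient))
    return samples


def adapt_threshold(samples):
    """Stand-in for fine-tuning: midpoint between the synthetic class means."""
    healthy = mean(v for v, d in samples if not d)
    deficient = mean(v for v, d in samples if d)
    return (healthy + deficient) / 2.0


def deficiency_map(grid, threshold):
    """Per-cell score in [0, 1]; 0 for cells at or above the threshold."""
    return [[max(0.0, min(1.0, (threshold - v) / threshold)) for v in row]
            for row in grid]


def prescription(scores, max_rate_kg_ha=40.0):
    """Variable-rate map: fertiliser is prescribed only where the score is positive."""
    return [[round(s * max_rate_kg_ha, 1) for s in row] for row in scores]


# Greenness per grid cell, observed under dimmer light than the reference.
observed = [[0.56, 0.55, 0.36],
            [0.56, 0.33, 0.30]]

# Generic model: calibrated for reference light, so it suffers from domain shift.
generic_threshold = adapt_threshold(synthesise(FieldContext(ambient_light=1.0), n=200))
generic_rates = prescription(deficiency_map(observed, generic_threshold))

# Adapted model: recalibrated on samples conditioned on the current light level.
threshold = adapt_threshold(synthesise(FieldContext(ambient_light=0.8), n=200))
rates = prescription(deficiency_map(observed, threshold))

print("generic :", generic_rates)
print("adapted :", rates)
```

In this toy setting the generic threshold assigns a small fertiliser rate even to the healthy cells, because it was calibrated for brighter conditions, whereas the threshold recalibrated on context-conditioned samples prescribes fertiliser only for the three low-greenness cells. The example says nothing about how well real generative models close the domain gap; it only makes the sequence of conditioning, synthesis, adaptation, heat map, and prescription concrete.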

4. Applications of GenAI-Driven CV in Smart Agriculture

4.1. Crop Health Monitoring and Pest Detection

4.2. Fruit and Vegetable Maturity Detection and Harvesting Automation

4.3. Precision Agriculture and Field Management

4.4. Agricultural Drones and Remote Sensing Image Analysis

4.5. Livestock and Aquaculture Monitoring

4.5.1. Livestock Monitoring

4.5.2. Aquaculture Monitoring

4.6. Agricultural Product Quality Control and Food Safety

5. Challenges of CV in Smart Agriculture

5.1. Data Scarcity and Quality

5.2. Model Generalisation and Regional Adaptation

5.3. Edge Deployment Limitations

5.4. Environmental Variability and Robustness

5.5. Trustworthiness and Explainability

5.6. Socio-Technical and Economic Barriers

6. Future Trends and Outlook

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Ghazal, S.; Munir, A.; Qureshi, W.S. Computer Vision in Smart Agriculture and Precision Farming: Techniques and Applications. Artif. Intell. Agric. 2024, 13, 64–83. [Google Scholar]

- Kumar, K.V.; Jayasankar, T. An Identification of Crop Disease Using Image Segmentation. Int. J. Pharm. Sci. Res. 2019, 10, 1054–1064. [Google Scholar]

- Granwehr, A.; Hofer, V. Analysis on Digital Image Processing for Plant Health Monitoring. J. Comput. Nat. Sci. 2021, 1, 5–8. [Google Scholar]

- Putra Laksamana, A.D.; Fakhrurroja, H.; Pramesti, D. Developing a Labeled Dataset for Chili Plant Health Monitoring: A Multispectral Image Segmentation Approach with YOLOv8. In Proceedings of the 2024 International Conference on Computer, Control, Informatics and its Applications (IC3INA), Bandung, Indonesia, 9–10 October 2024; pp. 440–445. [Google Scholar]

- Doll, O.; Loos, A. Comparison of Object Detection Algorithms for Livestock Monitoring of Sheep in UAV Images. In Proceedings of the International Workshop Camera Traps, AI, and Ecology, Jena, Germany, 7–8 September 2023. [Google Scholar]

- Ahmad, M.; Abbas, S.; Fatima, A.; Ghazal, T.M.; Alharbi, M.; Khan, M.A.; Elmitwally, N.S. AI-Driven Livestock Identification and Insurance Management System. Egypt. Inform. J. 2023, 24, 100390. [Google Scholar]

- Li, G.; Sun, J.; Guan, M.; Sun, S.; Shi, G.; Zhu, C. A New Method for Non-Destructive Identification and Tracking of Multi-Object Behaviors in Beef Cattle Based on Deep Learning. Animals 2024, 14, 2464. [Google Scholar]

- Kirongo, A.C.; Maitethia, D.; Mworia, E.; Muketha, G.M. Application of Real-Time Deep Learning in Integrated Surveillance of Maize and Tomato Pests and Bacterial Diseases. J. Kenya Natl. Comm. UNESCO 2024, 4, 1–13. [Google Scholar]

- K, S.R.; Sannakashappanavar, B.S.; Kumar, M.; Hegde, G.S.; Megha, M. Intelligent Surveillance and Protection System for Farmlands from Animals. In Proceedings of the 2024 IEEE International Conference on Contemporary Computing and Communications (InC4), Bangalore, India, 15–16 March 2024; IEEE: Piscataway, NJ, USA, 2024; Volume 1, pp. 1–6. [Google Scholar]

- Jiang, G.; Grafton, M.; Pearson, D.; Bretherton, M.; Holmes, A. Predicting Spatiotemporal Yield Variability to Aid Arable Precision Agriculture in New Zealand: A Case Study of Maize-Grain Crop Production in the Waikato Region. N. Z. J. Crop Hortic. Sci. 2021, 49, 41–62. [Google Scholar]

- Asadollah, S.B.H.S.; Jodar-Abellan, A.; Pardo, M.Á. Optimizing Machine Learning for Agricultural Productivity: A Novel Approach with RScv and Remote Sensing Data over Europe. Agric. Syst. 2024, 218, 103955. [Google Scholar]

- Sompal; Singh, R. To Identify a ML and CV Method for Monitoring and Recording the Variables that Impact on Crop Output. In Proceedings of the International Conference on Cognitive Computing and Cyber Physical Systems, Delhi, India, 1–2 December 2023; Springer: Singapore, 2025; pp. 371–382. [Google Scholar]

- Fadhaeel, T.; H, P.C.; Al Ahdal, A.; Rakhra, M.; Singh, D. Design and Development an Agriculture Robot for Seed Sowing, Water Spray and Fertigation. In Proceedings of the 2022 International Conference on Computational Intelligence and Sustainable Engineering Solutions (CISES), Greater Noida, India, 20–21 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 148–153. [Google Scholar]

- Yeshmukhametov, A.; Dauletiya, D.; Zhassuzak, M.; Buribayev, Z. Development of Mobile Robot with Autonomous Mobile Robot Weeding and Weed Recognition by Using Computer Vision. In Proceedings of the 2023 23rd International Conference on Control, Automation and Systems (ICCAS), Yeosu, Republic of Korea, 17–20 October 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1407–1412. [Google Scholar]

- Maimaitijiang, M.; Sagan, V.; Sidike, P.; Hartling, S.; Esposito, F.; Fritschi, F.B. Soybean Yield Prediction from UAV Using Multimodal Data Fusion and Deep Learning. Remote Sens. Environ. 2020, 237, 111599. [Google Scholar]

- Zhou, H.; Yang, J.; Lou, W.; Sheng, L.; Li, D.; Hu, H. Improving Grain Yield Prediction through Fusion of Multi-Temporal Spectral Features and Agronomic Trait Parameters Derived from UAV Imagery. Front. Plant Sci. 2023, 14, 1217448. [Google Scholar]

- Su, X.; Nian, Y.; Shaghaleh, H.; Hamad, A.; Yue, H.; Zhu, Y.; Li, J.; Wang, W.; Wang, H.; Ma, Q.; et al. Combining Features Selection Strategy and Features Fusion Strategy for SPAD Estimation of Winter Wheat Based on UAV Multispectral Imagery. Front. Plant Sci. 2024, 15, 1404238. [Google Scholar]

- Ismail, N.; Malik, O.A. Real-Time Visual Inspection System for Grading Fruits Using Computer Vision and Deep Learning Techniques. Inf. Process. Agric. 2022, 9, 24–37. [Google Scholar]

- Hemamalini, V.; Rajarajeswari, S.; Nachiyappan, S.; Sambath, M.; Devi, T.; Singh, B.K.; Raghuvanshi, A. Food Quality Inspection and Grading Using Efficient Image Segmentation and Machine Learning-Based System. J. Food Qual. 2022, 2022, 5262294. [Google Scholar]

- Gururaj, N.; Vinod, V.; Vijayakumar, K. Deep Grading of Mangoes Using Convolutional Neural Network and Computer Vision. Multimed. Tools Appl. 2023, 82, 39525–39550. [Google Scholar]

- Dönmez, D.; Isak, M.A.; İzgü, T.; Şimşek, Ö. Green Horizons: Navigating the Future of Agriculture through Sustainable Practices. Sustainability 2024, 16, 3505. [Google Scholar] [CrossRef]

- Gai, J.; Xiang, L.; Tang, L. Using a Depth Camera for Crop Row Detection and Mapping for Under-Canopy Navigation of Agricultural Robotic Vehicle. Comput. Electron. Agric. 2021, 188, 106301. [Google Scholar]

- Yan, Y.; Zhang, B.; Zhou, J.; Zhang, Y.; Liu, X. Real-Time Localization and Mapping Utilizing Multi-Sensor Fusion and Visual–IMU–Wheel Odometry for Agricultural Robots in Unstructured, Dynamic and GPS-Denied Greenhouse Environments. Agronomy 2022, 12, 1740. [Google Scholar]

- Nakaguchi, V.M.; Abeyrathna, R.R.D.; Liu, Z.; Noguchi, R.; Ahamed, T. Development of a Machine Stereo Vision-Based Autonomous Navigation System for Orchard Speed Sprayers. Comput. Electron. Agric. 2024, 227, 109669. [Google Scholar]

- Li, M.; Shamshiri, R.R.; Weltzien, C.; Schirrmann, M. Crop Monitoring Using Sentinel-2 and UAV Multispectral Imagery: A Comparison Case Study in Northeastern Germany. Remote Sens. 2022, 14, 4426. [Google Scholar]

- Yang, Q.; Shi, L.; Han, J.; Chen, Z.; Yu, J. A VI-Based Phenology Adaptation Approach for Rice Crop Monitoring Using UAV Multispectral Images. Field Crops Res. 2022, 277, 108419. [Google Scholar]

- Shammi, S.A.; Huang, Y.; Feng, G.; Tewolde, H.; Zhang, X.; Jenkins, J.; Shankle, M. Application of UAV Multispectral Imaging to Monitor Soybean Growth with Yield Prediction through Machine Learning. Agronomy 2024, 14, 672. [Google Scholar] [CrossRef]

- Coito, T.; Firme, B.; Martins, M.S.E.; Vieira, S.M.; Figueiredo, J.; Sousa, J.M.C. Intelligent Sensors for Real-Time Decision-Making. Automation 2021, 2, 62–82. [Google Scholar] [CrossRef]

- Al-Jarrah, M.A.; Yaseen, M.A.; Al-Dweik, A.; Dobre, O.A.; Alsusa, E. Decision Fusion for IoT-Based Wireless Sensor Networks. IEEE Internet Things J. 2020, 7, 1313–1326. [Google Scholar]

- Kumar, P.; Motia, S.; Reddy, S. Integrating Wireless Sensing and Decision Support Technologies for Real-Time Farmland Monitoring and Support for Effective Decision Making: Designing and Deployment of WSN and DSS for Sustainable Growth of Indian Agriculture. Int. J. Inf. Technol. 2023, 15, 1081–1099. [Google Scholar]

- Almadani, B.; Mostafa, S.M. IIoT Based Multimodal Communication Model for Agriculture and Agro-Industries. IEEE Access 2021, 9, 10070–10088. [Google Scholar]

- Suciu, G.; Marcu, I.; Balaceanu, C.; Dobrea, M.; Botezat, E. Efficient IoT System for Precision Agriculture. In Proceedings of the 2019 15th International Conference on Engineering of Modern Electric Systems (EMES), Oradea, Romania, 13–14 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 173–176. [Google Scholar]

- Beldek, C.; Cunningham, J.; Aydin, M.; Sariyildiz, E.; Phung, S.; Alici, G. Sensing-Based Robustness Challenges in Agricultural Robotic Harvesting. In Proceedings of the 2025 IEEE International Conference on Mechatronics (ICM), Wollongong, Australia, 28 February–2 March 2025; IEEE: Piscataway, NJ, USA, 2025; pp. 1–6. [Google Scholar]

- Luo, J.; Li, B.; Leung, C. A Survey of Computer Vision Technologies in Urban and Controlled-Environment Agriculture. ACM Comput. Surv. 2023, 56, 1–39. [Google Scholar]

- Zhang, X.; Cao, Z.; Dong, W. Overview of Edge Computing in the Agricultural Internet of Things: Key Technologies, Applications, Challenges. IEEE Access 2020, 8, 141748–141761. [Google Scholar]

- Williamson, H.F.; Brettschneider, J.; Caccamo, M.; Davey, R.P.; Goble, C.; Kersey, P.J.; May, S.; Morris, R.J.; Ostler, R.; Pridmore, T.; et al. Data Management Challenges for Artificial Intelligence in Plant and Agricultural Research. F1000Research 2023, 10, 324. [Google Scholar]

- Paulus, S.; Leiding, B. Can Distributed Ledgers Help to Overcome the Need of Labeled Data for Agricultural Machine Learning Tasks? Plant Phenomics 2023, 5, 0070. [Google Scholar]

- Culman, M.; Delalieux, S.; Beusen, B.; Somers, B. Automatic Labeling to Overcome the Limitations of Deep Learning in Applications with Insufficient Training Data: A Case Study on Fruit Detection in Pear Orchards. Comput. Electron. Agric. 2023, 213, 108196. [Google Scholar]

- Idoje, G.; Dagiuklas, T.; Iqbal, M. Survey for Smart Farming Technologies: Challenges and Issues. Comput. Electr. Eng. 2021, 92, 107104. [Google Scholar]

- Sai, S.; Kumar, S.; Gaur, A.; Goyal, S.; Chamola, V.; Hussain, A. Unleashing the Power of Generative AI in Agriculture 4.0 for Smart and Sustainable Farming. Cogn. Comput. 2025, 17, 63. [Google Scholar]

- Ho, J.; Jain, A.; Abbeel, P. Denoising Diffusion Probabilistic Models. In Advances in Neural Information Processing Systems; Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M., Lin, H., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2020; Volume 33, pp. 6840–6851. [Google Scholar]

- Radford, A.; Metz, L.; Chintala, S. Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks. arXiv 2015, arXiv:1511.06434. [Google Scholar]

- Fawakherji, M.; Potena, C.; Prevedello, I.; Pretto, A.; Bloisi, D.D.; Nardi, D. Data Augmentation Using GANs for Crop/Weed Segmentation in Precision Farming. In Proceedings of the 2020 IEEE Conference on Control Technology and Applications (CCTA), Montreal, QC, Canada, 24–26 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 279–284. [Google Scholar]

- Pasaribu, F.B.; Dewi, L.J.E.; Aryanto, K.Y.E.; Seputra, K.A.; Varnakovida, P.; Kertiasih, N.K. Generating Synthetic Data on Agricultural Crops with DCGAN. In Proceedings of the 2024 11th International Conference on Advanced Informatics: Concept, Theory and Application (ICAICTA), Singapore, 28–30 September 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–6. [Google Scholar]

- Zhao, K.; Nguyen, M.; Yan, W. Evaluating Accuracy and Efficiency of Fruit Image Generation Using Generative AI Diffusion Models for Agricultural Robotics. In Proceedings of the 2024 39th International Conference on Image and Vision Computing New Zealand (IVCNZ), Christchurch, New Zealand, 4–6 December 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–6. [Google Scholar]

- Yu, Z.; Ye, J.; Liufu, S.; Lu, D.; Zhou, H. TasselLFANetV2: Exploring Vision Models Adaptation in Cross-Domain. IEEE Geosci. Remote Sens. Lett. 2024, 21, 2504105. [Google Scholar]

- Hinton, G.; Vinyals, O.; Dean, J. Distilling the Knowledge in a Neural Network. arXiv 2015, arXiv:1503.02531. [Google Scholar]

- Gu, Y.; Dong, L.; Wei, F.; Huang, M. MiniLLM: Knowledge Distillation of Large Language Models. In Proceedings of the Twelfth International Conference on Learning Representations, Vienna, Austria, 7–11 May 2024. [Google Scholar]

- Huang, Q.; Wu, X.; Wang, Q.; Dong, X.; Qin, Y.; Wu, X.; Gao, Y.; Hao, G. Knowledge Distillation Facilitates the Lightweight and Efficient Plant Diseases Detection Model. Plant Phenomics 2023, 5, 0062. [Google Scholar]

- Haddaway, N.R.; Page, M.J.; Pritchard, C.C.; McGuinness, L.A. PRISMA2020: An R Package and Shiny App for Producing PRISMA 2020-Compliant Flow Diagrams, with Interactivity for Optimised Digital Transparency and Open Synthesis. Campbell Syst. Rev. 2022, 18, e1230. [Google Scholar]

- Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440. [Google Scholar]

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587. [Google Scholar]

- Sharif, M.; Khan, M.A.; Iqbal, Z.; Azam, M.F.; Lali, M.I.U.; Javed, M.Y. Detection and Classification of Citrus Diseases in Agriculture Based on Optimized Weighted Segmentation and Feature Selection. Comput. Electron. Agric. 2018, 150, 220–234. [Google Scholar]

- Aygün, S.; Güneş, E.O. A Benchmarking: Feature Extraction and Classification of Agricultural Textures Using LBP, GLCM, RBO, Neural Networks, k-NN, and Random Forest. In Proceedings of the 2017 6th International Conference on Agro-Geoinformatics, Fairfax, VA, USA, 7–10 August 2017; pp. 1–4. [Google Scholar]

- Dutta, K.; Talukdar, D.; Bora, S.S. Segmentation of Unhealthy Leaves in Cruciferous Crops for Early Disease Detection Using Vegetative Indices and Otsu Thresholding of Aerial Images. Measurement 2022, 189, 110478. [Google Scholar]

- Li, Z.; Sun, J.; Shen, Y.; Yang, Y.; Wang, X.; Wang, X.; Tian, P.; Qian, Y. Deep Migration Learning-Based Recognition of Diseases and Insect Pests in Yunnan Tea under Complex Environments. Plant Methods 2024, 20, 101. [Google Scholar]

- Heider, N.; Gunreben, L.; Zürner, S.; Schieck, M. A Survey of Datasets for Computer Vision in Agriculture. arXiv 2025, arXiv:2502.16950. [Google Scholar]

- Xu, R.; Li, C.; Paterson, A.H. Multispectral Imaging and Unmanned Aerial Systems for Cotton Plant Phenotyping. PLoS ONE 2019, 14, e0205083. [Google Scholar]

- Sarić, R.; Nguyen, V.D.; Burge, T.; Berkowitz, O.; Trtílek, M.; Whelan, J.; Lewsey, M.G.; Čustović, E. Applications of Hyperspectral Imaging in Plant Phenotyping. Trends Plant Sci. 2022, 27, 301–315. [Google Scholar] [PubMed]

- Detring, J.; Barreto, A.; Mahlein, A.K.; Paulus, S. Quality Assurance of Hyperspectral Imaging Systems for Neural Network Supported Plant Phenotyping. Plant Methods 2024, 20, 189. [Google Scholar]

- Tong, L.; Fang, J.; Wang, X.; Zhao, Y. Research on Cattle Behavior Recognition and Multi-Object Tracking Algorithm Based on YOLO-BoT. Animals 2024, 14, 2993. [Google Scholar] [CrossRef] [PubMed]

- Qiao, Y.; Guo, Y.; He, D. Cattle Body Detection Based on YOLOv5-ASFF for Precision Livestock Farming. Comput. Electron. Agric. 2023, 204, 107579. [Google Scholar]

- Vogg, R.; Lüddecke, T.; Henrich, J.; Dey, S.; Nuske, M.; Hassler, V.; Murphy, D.; Fischer, J.; Ostner, J.; Schülke, O.; et al. Computer Vision for Primate Behavior Analysis in the Wild. Nat. Methods 2025, 22, 1154–1166. [Google Scholar]

- Gaso, D.V.; de Wit, A.; Berger, A.G.; Kooistra, L. Predicting within-Field Soybean Yield Variability by Coupling Sentinel-2 Leaf Area Index with a Crop Growth Model. Agric. For. Meteorol. 2021, 308, 108553. [Google Scholar]

- Raj, R.; Walker, J.P.; Pingale, R.; Nandan, R.; Naik, B.; Jagarlapudi, A. Leaf Area Index Estimation Using Top-of-Canopy Airborne RGB Images. Int. J. Appl. Earth Obs. Geoinf. 2021, 96, 102282. [Google Scholar]

- Das Menon H, K.; Mishra, D.; Deepa, D. Automation and Integration of Growth Monitoring in Plants (with Disease Prediction) and Crop Prediction. Mater. Today Proc. 2021, 43, 3922–3927. [Google Scholar]

- Marcu, I.; Drăgulinescu, A.M.; Oprea, C.; Suciu, G.; Bălăceanu, C. Predictive Analysis and Wine-Grapes Disease Risk Assessment Based on Atmospheric Parameters and Precision Agriculture Platform. Sustainability 2022, 14, 11487. [Google Scholar] [CrossRef]

- Donmez, C.; Villi, O.; Berberoglu, S.; Cilek, A. Computer Vision-Based Citrus Tree Detection in a Cultivated Environment Using UAV Imagery. Comput. Electron. Agric. 2021, 187, 106273. [Google Scholar]

- Vergara, B.; Toledo, K.; Fernández-Campusano, C.; Santamaria, A.R. Drone and Computer Vision-Based Detection of Aleurothrixus Floccosus in Citrus Crops. In Proceedings of the 2024 IEEE International Conference on Automation/XXVI Congress of the Chilean Association of Automatic Control (ICA-ACCA), Santiago, Chile, 20–23 October 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–6. [Google Scholar]

- Ali, H.; Nidzamuddin, S.; Elshaikh, M. Smart Irrigation System Based IoT for Indoor Housing Farming. In AIP Conference Proceedings; AIP Publishing: Melville, NY, USA, 2024; Volume 2898. [Google Scholar]

- Manikandan, J.; Saran, J.; Samitha, S.; Rhikshitha, K. An Effective Study on the Machine Vision-Based Automatic Control and Monitoring in Furrow Irrigation and Precision Irrigation. In Computer Vision in Smart Agriculture and Crop Management; Scrivener Publishing LLC: Beverly, MA, USA, 2025; pp. 323–342. [Google Scholar]

- Banh, L.; Strobel, G. Generative Artificial Intelligence. Electron. Mark. 2023, 33, 63. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Advances in Neural Information Processing Systems; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30. [Google Scholar]

- Hu, X.; Gan, Z.; Wang, J.; Yang, Z.; Liu, Z.; Lu, Y.; Wang, L. Scaling Up Vision-Language Pre-Training for Image Captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 17980–17989. [Google Scholar]

- Sapkota, R.; Karkee, M. Creating Image Datasets in Agricultural Environments Using DALL.E: Generative AI-Powered Large Language Model. arXiv 2023, arXiv:2307.08789. [Google Scholar]

- Chou, C.B.; Lee, C.H. Generative Neural Network-Based Online Domain Adaptation (GNN-ODA) Approach for Incomplete Target Domain Data. IEEE Trans. Instrum. Meas. 2023, 72, 3508110. [Google Scholar]

- Mildenhall, B.; Srinivasan, P.P.; Tancik, M.; Barron, J.T.; Ramamoorthi, R.; Ng, R. NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis. Commun. ACM 2021, 65, 99–106. [Google Scholar]

- Modak, S.; Stein, A. Synthesizing Training Data for Intelligent Weed Control Systems Using Generative AI. In Proceedings of the International Conference on Architecture of Computing Systems, Potsdam, Germany, 13–15 May 2024; Springer: Cham, Switzerland, 2024; pp. 112–126. [Google Scholar]

- Bhugra, S.; Srivastava, S.; Kaushik, V.; Mukherjee, P.; Lall, B. Plant Data Generation with Generative AI: An Application to Plant Phenotyping. In Applications of Generative AI; Springer: Cham, Switzerland, 2024; pp. 503–535. [Google Scholar]

- Madsen, S.L.; Dyrmann, M.; Jørgensen, R.N.; Karstoft, H. Generating Artificial Images of Plant Seedlings Using Generative Adversarial Networks. Biosyst. Eng. 2019, 187, 147–159. [Google Scholar]

- Miranda, M.; Drees, L.; Roscher, R. Controlled Multi-Modal Image Generation for Plant Growth Modeling. In Proceedings of the 2022 26th International Conference on Pattern Recognition (ICPR), Montreal, QC, Canada, 21–25 August 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 5118–5124. [Google Scholar]

- Dhumale, Y.; Bamnote, G.; Kale, R.; Sawale, G.; Chaudhari, A.; Karwa, R. Generative Modeling Techniques for Simulating Rare Agricultural Events on Prediction of Wheat Yield Production. In Proceedings of the 2024 2nd DMIHER International Conference on Artificial Intelligence in Healthcare, Education and Industry (IDICAIEI), Wardha, India, 29–30 November 2024; pp. 1–5. [Google Scholar]

- Klair, Y.S.; Agrawal, K.; Kumar, A. Impact of Generative AI in Diagnosing Diseases in Agriculture. In Proceedings of the 2024 2nd International Conference on Disruptive Technologies (ICDT), Greater Noida, India, 15–16 March 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 870–875. [Google Scholar]

- Klein, J.; Waller, R.; Pirk, S.; Pałubicki, W.; Tester, M.; Michels, D.L. Synthetic Data at Scale: A Development Model to Efficiently Leverage Machine Learning in Agriculture. Front. Plant Sci. 2024, 15, 1360113. [Google Scholar]

- Saraceni, L.; Motoi, I.M.; Nardi, D.; Ciarfuglia, T.A. Self-Supervised Data Generation for Precision Agriculture: Blending Simulated Environments with Real Imagery. In Proceedings of the 2024 IEEE 20th International Conference on Automation Science and Engineering (CASE), Bari, Italy, 28 August–1 September 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 71–77. [Google Scholar]

- Lohar, R.; Mathur, H.; Patil, V.R. Estimation of Crop Recommendation Using Generative Adversarial Network with Optimized Machine Learning Model. Cuest. Fisioter. 2025, 54, 328–341. [Google Scholar]

- Yoon, S.; Cho, Y.; Shin, M.; Lin, M.Y.; Kim, D.; Ahn, T.I. Melon Fruit Detection and Quality Assessment Using Generative AI-Based Image Data Augmentation. J. Bio-Environ. Control 2024, 33, 352–360. [Google Scholar]

- Yoon, S.; Lee, Y.; Jung, E.; Ahn, T.I. Agricultural Applicability of AI Based Image Generation. J. Bio-Environ. Control 2024, 33, 120–128. [Google Scholar]

- Duguma, A.; Bai, X. Contribution of Internet of Things (IoT) in Improving Agricultural Systems. Int. J. Environ. Sci. Technol. 2024, 21, 2195–2208. [Google Scholar]

- David, P.E.; Chelliah, P.R.; Anandhakumar, P. Reshaping Agriculture Using Intelligent Edge Computing. In Advances in Computers; Elsevier: Amsterdam, The Netherlands, 2024; Volume 132, pp. 167–204. [Google Scholar]

- Majumder, S.; Khandelwal, Y.; Sornalakshmi, K. Computer Vision and Generative AI for Yield Prediction in Digital Agriculture. In Proceedings of the 2024 2nd International Conference on Networking and Communications (ICNWC), Chennai, India, 2–4 April 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–6. [Google Scholar]

- Dey, A.; Bhoumik, D.; Dey, K.N. Automatic Detection of Whitefly Pest Using Statistical Feature Extraction and Image Classification Methods. Int. Res. J. Eng. Technol. 2016, 3, 950–959. [Google Scholar]

- Islam, A.; Islam, R.; Haque, S.R.; Islam, S.M.; Khan, M.A.I. Rice Leaf Disease Recognition using Local Threshold Based Segmentation and Deep CNN. Int. J. Intell. Syst. Appl. 2021, 10, 35. [Google Scholar]

- Duan, Y.; Han, W.; Guo, P.; Wei, X. YOLOv8-GDCI: Research on the Phytophthora Blight Detection Method of Different Parts of Chili Based on Improved YOLOv8 Model. Agronomy 2024, 14, 2734. [Google Scholar] [CrossRef]

- Ma, J.; Zhao, Y.; Fan, W.; Liu, J. An Improved YOLOv8 Model for Lotus Seedpod Instance Segmentation in the Lotus Pond Environment. Agronomy 2024, 14, 1325. [Google Scholar] [CrossRef]

- Yang, N.; Chang, K.; Dong, S.; Tang, J.; Wang, A.; Huang, R.; Jia, Y. Rapid Image Detection and Recognition of Rice False Smut Based on Mobile Smart Devices with Anti-Light Features from Cloud Database. Biosyst. Eng. 2022, 218, 229–244. [Google Scholar]

- Mahmood ur Rehman, M.; Liu, J.; Nijabat, A.; Faheem, M.; Wang, W.; Zhao, S. Leveraging Convolutional Neural Networks for Disease Detection in Vegetables: A Comprehensive Review. Agronomy 2024, 14, 2231. [Google Scholar] [CrossRef]

- Verma, S.; Chug, A.; Singh, A.P.; Singh, D. Plant Disease Detection and Severity Assessment Using Image Processing and Deep Learning Techniques. SN Comput. Sci. 2023, 5, 83. [Google Scholar]

- Eunice, J.; Popescu, D.E.; Chowdary, M.K.; Hemanth, J. Deep Learning-Based Leaf Disease Detection in Crops using Images for Agricultural Applications. Agronomy 2022, 12, 2395. [Google Scholar] [CrossRef]

- Xu, Q.; Cai, J.R.; Zhang, W.; Bai, J.W.; Li, Z.Q.; Tan, B.; Sun, L. Detection of Citrus Huanglongbing (HLB) Based on the HLB-Induced Leaf Starch Accumulation Using a Home-Made Computer Vision System. Biosyst. Eng. 2022, 218, 163–174. [Google Scholar]

- Jia, Y.; Shi, Y.; Luo, J.; Sun, H. Y-Net: Identification of Typical Diseases of Corn Leaves Using a 3D-2D Hybrid CNN Model Combined with a Hyperspectral Image Band Selection Module. Sensors 2023, 23, 1494. [Google Scholar] [PubMed]

- Zhu, W.; Sun, J.; Wang, S.; Shen, J.; Yang, K.; Zhou, X. Identifying Field Crop Diseases Using Transformer-Embedded Convolutional Neural Network. Agriculture 2022, 12, 1083. [Google Scholar] [CrossRef]

- Kuswidiyanto, L.W.; Wang, P.; Noh, H.H.; Jung, H.Y.; Jung, D.H.; Han, X. Airborne Hyperspectral Imaging for Early Diagnosis of Kimchi Cabbage Downy Mildew using 3D-ResNet and Leaf Segmentation. Comput. Electron. Agric. 2023, 214, 108312. [Google Scholar]

- Wang, Y.; Li, T.; Chen, T.; Zhang, X.; Taha, M.F.; Yang, N.; Mao, H.; Shi, Q. Cucumber Downy Mildew Disease Prediction Using a CNN-LSTM Approach. Agriculture 2024, 14, 1155. [Google Scholar] [CrossRef]

- Bhargava, A.; Shukla, A.; Goswami, O.P.; Alsharif, M.H.; Uthansakul, P.; Uthansakul, M. Plant Leaf Disease Detection, Classification, and Diagnosis Using Computer Vision and Artificial Intelligence: A Review. IEEE Access 2024, 12, 37443–37469. [Google Scholar]

- Hu, Y.; Zhang, Y.; Liu, S.; Zhou, G.; Li, M.; Hu, Y.; Li, J.; Sun, L. DMFGAN: A Multifeature Data Augmentation Method for Grape Leaf Disease Identification. Plant J. 2024, 120, 1278–1303. [Google Scholar]

- Min, B.; Kim, T.; Shin, D.; Shin, D. Data Augmentation Method for Plant Leaf Disease Recognition. Appl. Sci. 2023, 13, 1465. [Google Scholar]

- Zhao, Y.; Chen, Z.; Gao, X.; Song, W.; Xiong, Q.; Hu, J.; Zhang, Z. Plant Disease Detection Using Generated Leaves Based on DoubleGAN. IEEE-ACM Trans. Comput. Biol. Bioinform. 2022, 19, 1817–1826. [Google Scholar]

- Abbas, A.; Jain, S.; Gour, M.; Vankudothu, S. Tomato Plant Disease Detection Using Transfer Learning with C-GAN Synthetic Images. Comput. Electron. Agric. 2021, 187, 106279. [Google Scholar]

- Cheng, W.; Ma, T.; Wang, X.; Wang, G. Anomaly Detection for Internet of Things Time Series Data Using Generative Adversarial Networks With Attention Mechanism in Smart Agriculture. Front. Plant Sci. 2022, 13, 890563. [Google Scholar]

- Zhang, Y.; Wa, S.; Zhang, L.; Lv, C. Automatic Plant Disease Detection Based on Tranvolution Detection Network With GAN Modules Using Leaf Images. Front. Plant Sci. 2022, 13, 875693. [Google Scholar]

- Cui, X.; Ying, Y.; Chen, Z. CycleGAN Based Confusion Model for Cross-Species Plant Disease Image Migration. J. Intell. Fuzzy Syst. 2021, 41, 6685–6696. [Google Scholar]

- Drees, L.; Demie, D.T.; Paul, M.R.; Leonhardt, J.; Seidel, S.J.; Doering, T.F.; Roscher, R. Data-Driven Crop Growth Simulation on Time-Varying Generated Images using Multi-Conditional Generative Adversarial Networks. Plant Methods 2024, 20, 93. [Google Scholar]

- Lopes, F.A.; Sagan, V.; Esposito, F. PlantPlotGAN: A Physics-Informed Generative Adversarial Network for Plant Disease Prediction. In Proceedings of the 2024 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 7051–7060. [Google Scholar]

- Fawakherji, M.; Potena, C.; Pretto, A.; Bloisi, D.D.; Nardi, D. Multi-Spectral Image Synthesis for Crop/Weed Segmentation in Precision Farming. Robot. Auton. Syst. 2021, 146, 103861. [Google Scholar]

- Ismail; Budiman, D.; Asri, E.; Aidha, Z.R. The Smart Agriculture based on Reconstructed Thermal Image. In Proceedings of the 2022 2nd International Conference on Intelligent Technologies (CONIT), Hubli, India, 24–26 June 2022; pp. 1–6. [Google Scholar]

- Saxena, D.; Cao, J. Generative adversarial networks (GANs) challenges, solutions, and future directions. ACM Comput. Surv. (CSUR) 2021, 54, 1–42. [Google Scholar]

- Pothapragada, I.S.; R, S. GANs for data augmentation with stacked CNN models and XAI for interpretable maize yield prediction. Smart Agric. Technol. 2025, 11, 100992. [Google Scholar]

- Lin, W.; Adetomi, A.; Arslan, T. Low-Power Ultra-Small Edge AI Accelerators for Image Recognition with Convolution Neural Networks: Analysis and Future Directions. Electronics 2021, 10, 2048. [Google Scholar]

- Zhao, Y.; Li, C.; Yu, P.; Gao, J.; Chen, C. Feature Quantization Improves GAN Training. arXiv 2020, arXiv:2004.02088. [Google Scholar]

- Kudo, S.; Kagiwada, S.; Iyatomi, H. Few-shot Metric Domain Adaptation: Practical Learning Strategies for an Automated Plant Disease Diagnosis. arXiv 2024, arXiv:2412.18859. [Google Scholar]

- Chang, Y.C.; Stewart, A.J.; Bastani, F.; Wolters, P.; Kannan, S.; Huber, G.R.; Wang, J.; Banerjee, A. On the Generalizability of Foundation Models for Crop Type Mapping. arXiv 2025, arXiv:2409.09451. [Google Scholar]

- Awais, M.; Alharthi, A.H.S.A.; Kumar, A.; Cholakkal, H.; Anwer, R.M. AgroGPT: Efficient Agricultural Vision-Language Model with Expert Tuning. In Proceedings of the 2025 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Tucson, AZ, USA, 26 February–6 March 2025. [Google Scholar]

- Roumeliotis, K.I.; Sapkota, R.; Karkee, M.; Tselikas, N.D.; Nasiopoulos, D.K. Plant Disease Detection through Multimodal Large Language Models and Convolutional Neural Networks. arXiv 2025, arXiv:2504.20419. [Google Scholar]

- Joshi, H. Edge-AI for Agriculture: Lightweight Vision Models for Disease Detection in Resource-Limited Settings. arXiv 2024, arXiv:2412.18635. [Google Scholar]

- Chen, S.; Xiong, J.; Jiao, J.; Xie, Z.; Huo, Z.; Hu, W. Citrus fruits maturity detection in natural environments based on convolutional neural networks and visual saliency map. Precis. Agric. 2022, 23, 1515–1531. [Google Scholar]

- Terdwongworakul, A.; Chaiyapong, S.; Jarimopas, B.; Meeklangsaen, W. Physical properties of fresh young Thai coconut for maturity sorting. Biosyst. Eng. 2009, 103, 208–216. [Google Scholar]

- Jiang, L.; Wang, Y.; Wu, C.; Wu, H. Fruit Distribution Density Estimation in YOLO-Detected Strawberry Images: A Kernel Density and Nearest Neighbor Analysis Approach. Agriculture 2024, 14, 1848. [Google Scholar] [CrossRef]

- Ji, W.; Gao, X.; Xu, B.; Pan, Y.; Zhang, Z.; Zhao, D. Apple Target Recognition Method in Complex Environment Based on Improved YOLOv4. J. Food Process Eng. 2021, 44, e13866. [Google Scholar]

- Yan, B.; Fan, P.; Lei, X.; Liu, Z.; Yang, F. A Real-Time Apple Targets Detection Method for Picking Robot Based on Improved YOLOv5. Remote Sens. 2021, 13, 1619. [Google Scholar]

- Hu, T.; Wang, W.; Gu, J.; Xia, Z.; Zhang, J.; Wang, B. Research on Apple Object Detection and Localization Method Based on Improved YOLOX and RGB-D Images. Agronomy 2023, 13, 1816. [Google Scholar] [CrossRef]

- Chen, Y.; Xu, H.; Chang, P.; Huang, Y.; Zhong, F.; Jia, Q.; Chen, L.; Zhong, H.; Liu, S. CES-YOLOv8: Strawberry Maturity Detection Based on the Improved YOLOv8. Agronomy 2024, 14, 1353. [Google Scholar] [CrossRef]

- Wang, M.; Li, F. Real-Time Accurate Apple Detection Based on Improved YOLOv8n in Complex Natural Environments. Plants 2025, 14, 365. [Google Scholar] [PubMed]

- Zhang, F.; Chen, Z.; Ali, S.; Yang, N.; Fu, S.; Zhang, Y. Multi-Class Detection of Cherry Tomatoes Using Improved Yolov4-Tiny Model. Int. J. Agric. Biol. Eng. 2023, 16, 225–231. [Google Scholar]

- Zhang, Q.; Chen, Q.; Xu, W.; Xu, L.; Lu, E. Prediction of Feed Quantity for Wheat Combine Harvester Based on Improved YOLOv5s and Weight of Single Wheat Plant without Stubble. Agriculture 2024, 14, 1251. [Google Scholar] [CrossRef]

- Li, J.; Lammers, K.; Yin, X.; Yin, X.; He, L.; Lu, R.; Li, Z. MetaFruit Meets Foundation Models: Leveraging a Comprehensive Multi-Fruit Dataset for Advancing Agricultural Foundation Models. Comput. Electron. Agric. 2025, 231, 109908. [Google Scholar]

- Eccles, B.J.; Rodgers, P.; Kilpatrick, P.; Spence, I.; Varghese, B. DNNShifter: An efficient DNN pruning system for edge computing. Future Gener. Comput. Syst. 2024, 152, 43–54. [Google Scholar]

- Zuo, Z.; Gao, S.; Peng, H.; Xue, Y.; Han, L.; Ma, G.; Mao, H. Lightweight Detection of Broccoli Heads in Complex Field Environments Based on LBDC-YOLO. Agronomy 2024, 14, 2359. [Google Scholar] [CrossRef]

- Li, Y.; Xu, X.; Wu, W.; Zhu, Y.; Yang, G.; Yang, X.; Meng, Y.; Jiang, X.; Xue, H. Hyperspectral Estimation of Chlorophyll Content in Grape Leaves Based on Fractional-Order Differentiation and Random Forest Algorithm. Remote Sens. 2024, 16, 2174. [Google Scholar]

- Sun, M.; Zhang, D.; Liu, L.; Wang, Z. How to Predict the Sugariness and Hardness of Melons: A Near-Infrared Hyperspectral Imaging Method. Food Chem. 2017, 218, 413–421. [Google Scholar]

- Sun, Q.; Chai, X.; Zeng, Z.; Zhou, G.; Sun, T. Noise-Tolerant RGB-D Feature Fusion Network for Outdoor Fruit Detection. Comput. Electron. Agric. 2022, 198, 107034. [Google Scholar]

- Lu, P.; Zheng, W.; Lv, X.; Xu, J.; Zhang, S.; Li, Y.; Zhangzhong, L. An Extended Method Based on the Geometric Position of Salient Image Features: Solving the Dataset Imbalance Problem in Greenhouse Tomato Growing Scenarios. Agriculture 2024, 14, 1893. [Google Scholar] [CrossRef]

- Huo, Y.; Liu, Y.; He, P.; Hu, L.; Gao, W.; Gu, L. Identifying Tomato Growth Stages in Protected Agriculture with StyleGAN3-Synthetic Images and Vision Transformer. Agriculture 2025, 15, 120. [Google Scholar]

- Hirahara, K.; Nakane, C.; Ebisawa, H.; Kuroda, T.; Iwaki, Y.; Utsumi, T.; Nomura, Y.; Koike, M.; Mineno, H. D4: Text-Guided Diffusion Model-Based Domain Adaptive Data Augmentation for Vineyard Shoot Detection. Comput. Electron. Agric. 2025, 230, 109849. [Google Scholar]

- Kierdorf, J.; Weber, I.; Kicherer, A.; Zabawa, L.; Drees, L.; Roscher, R. Behind the Leaves: Estimation of Occluded Grapevine Berries with Conditional Generative Adversarial Networks. Front. Artif. Intell. 2022, 5, 830026. [Google Scholar]

- Jin, Y.; Liu, J.; Xu, Z.; Yuan, S.; Li, P.; Wang, J. Development Status and Trend of Agricultural Robot Technology. Int. J. Agric. Biol. Eng. 2021, 14, 1–19. [Google Scholar]

- Luo, Y.; Wei, L.; Xu, L.; Zhang, Q.; Liu, J.; Cai, Q.; Zhang, W. Stereo-Vision-Based Multi-Crop Harvesting Edge Detection for Precise Automatic Steering of Combine Harvester. Biosyst. Eng. 2022, 215, 115–128. [Google Scholar]

- Chu, P.; Li, Z.; Zhang, K.; Chen, D.; Lammers, K.; Lu, R. O2RNet: Occluder-Occludee Relational Network for Robust Apple Detection in Clustered Orchard Environments. Smart Agric. Technol. 2023, 5, 100284. [Google Scholar]

- Jo, Y.; Park, Y.; Son, H.I. A Suction Cup-Based Soft Robotic Gripper for Cucumber Harvesting: Design and Validation. Biosyst. Eng. 2024, 238, 143–156. [Google Scholar]

- Zhou, H.; Ahmed, A.; Liu, T.; Romeo, M.; Beh, T.; Pan, Y.; Kang, H.; Chen, C. Finger Vision Enabled Real-Time Defect Detection in Robotic Harvesting. Comput. Electron. Agric. 2025, 234, 110222. [Google Scholar]

- Zhou, X.; Chen, W.; Wei, X. Improved Field Obstacle Detection Algorithm Based on YOLOv8. Agriculture 2024, 14, 2263. [Google Scholar] [CrossRef]

- Mortazavi, M.; Cappelleri, D.J.; Ehsani, R. RoMu4o: A Robotic Manipulation Unit For Orchard Operations Automating Proximal Hyperspectral Leaf Sensing. arXiv 2025, arXiv:2501.10621. [Google Scholar]

- Wang, Z.; Walsh, K.; Koirala, A. Mango Fruit Load Estimation using a Video Based MangoYOLO—Kalman Filter—Hungarian Algorithm Method. Sensors 2019, 19, 2742. [Google Scholar] [PubMed]

- Manoj, T.; Makkithaya, K.; Narendra, V. A Blockchain-Assisted Trusted Federated Learning for Smart Agriculture. SN Comput. Sci. 2025, 6, 221. [Google Scholar]

- Bai, J.; Mei, Y.; He, F.; Long, F.; Liao, Y.; Gao, H.; Huang, Y. Rapid and Non-Destructive Quality Grade Assessment of Hanyuan Zanthoxylum Bungeanum Fruit Using a Smartphone Application Integrating Computer Vision Systems and Convolutional Neural Networks. Food Control 2025, 168, 110844. [Google Scholar]

- Nyborg, J.; Pelletier, C.; Lefèvre, S.; Assent, I. TimeMatch: Unsupervised Cross-Region Adaptation by Temporal Shift Estimation. ISPRS J. Photogramm. Remote Sens. 2022, 188, 301–313. [Google Scholar]

- Cieslak, M.; Govindarajan, U.; Garcia, A.; Chandrashekar, A.; Hadrich, T.; Mendoza-Drosik, A.; Michels, D.L.; Pirk, S.; Fu, C.C.; Palubicki, W. Generating Diverse Agricultural Data for Vision-Based Farming Applications. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 5422–5431. [Google Scholar]

- Alexander, C.S.; Yarborough, M.; Smith, A. Who is Responsible for ‘Responsible AI’?: Navigating Challenges to Build Trust in AI Agriculture and Food System Technology. Precis. Agric. 2024, 25, 146–185. [Google Scholar]

- Saranya, T.; Deisy, C.; Sridevi, S.; Anbananthen, K.S.M. A Comparative Study of Deep Learning and Internet of Things for Precision Agriculture. Eng. Appl. Artif. Intell. 2023, 122, 106034. [Google Scholar]

- Radoglou-Grammatikis, P.; Sarigiannidis, P.; Lagkas, T.; Moscholios, I. A Compilation of UAV Applications for Precision Agriculture. Comput. Netw. 2020, 172, 107148. [Google Scholar]

- Ampatzidis, Y.; Partel, V.; Costa, L. Agroview: Cloud-Based Application to Process, Analyze and Visualize UAV-Collected Data for Precision Agriculture Applications Utilizing Artificial Intelligence. Comput. Electron. Agric. 2020, 174, 105457. [Google Scholar]

- Anderegg, J.; Tschurr, F.; Kirchgessner, N.; Treier, S.; Schmucki, M.; Streit, B.; Walter, A. On-Farm Evaluation of UAV-Based Aerial Imagery for Season-Long Weed Monitoring under Contrasting Management and Pedoclimatic Conditions in Wheat. Comput. Electron. Agric. 2023, 204, 107558. [Google Scholar]

- Zhang, Y.; Yan, Z.; Gao, J.; Shen, Y.; Zhou, H.; Tang, W.; Lu, Y.; Yang, Y. UAV Imaging Hyperspectral for Barnyard Identification and Spatial Distribution in Paddy Fields. Expert Syst. Appl. 2024, 255, 124771. [Google Scholar]

- Velusamy, P.; Rajendran, S.; Mahendran, R.K.; Naseer, S.; Shafiq, M.; Choi, J.G. Unmanned Aerial Vehicles (UAV) in Precision Agriculture: Applications and Challenges. Energies 2021, 15, 217. [Google Scholar] [CrossRef]

- Punithavathi, R.; Rani, A.D.C.; Sughashini, K.; Kurangi, C.; Nirmala, M.; Ahmed, H.F.T.; Balamurugan, S. Computer Vision and Deep Learning-Enabled Weed Detection Model for Precision Agriculture. Comput. Syst. Sci. Eng. 2023, 44, 2759–2774. [Google Scholar]

- Khan, S.; Tufail, M.; Khan, M.T.; Khan, Z.A.; Anwar, S. Deep Learning-Based Identification System of Weeds and Crops in Strawberry and Pea Fields for a Precision Agriculture Sprayer. Precis. Agric. 2021, 22, 1711–1727. [Google Scholar]

- Ma, C.; Chi, G.; Ju, X.; Zhang, J.; Yan, C. YOLO-CWD: A Novel Model for Crop and Weed Detection Based on Improved YOLOv8. Crop Prot. 2025, 192, 107169. [Google Scholar]

- Sharma, V.; Tripathi, A.K.; Mittal, H.; Parmar, A.; Soni, A.; Amarwal, R. Weedgan: A Novel Generative Adversarial Network for Cotton Weed Identification. Vis. Comput. 2023, 39, 6503–6519. [Google Scholar]

- Li, Y.; Guo, Z.; Sun, Y.; Chen, X.; Cao, Y. Weed Detection Algorithms in Rice Fields Based on Improved YOLOv10n. Agriculture 2024, 14, 2066. [Google Scholar] [CrossRef]

- Deng, L.; Miao, Z.; Zhao, X.; Yang, S.; Gao, Y.; Zhai, C.; Zhao, C. HAD-YOLO: An Accurate and Effective Weed Detection Model Based on Improved YOLOV5 Network. Agronomy 2025, 15, 57. [Google Scholar]

- Tao, T.; Wei, X. STBNA-YOLOv5: An Improved YOLOv5 Network for Weed Detection in Rapeseed Field. Agriculture 2024, 15, 22. [Google Scholar] [CrossRef]

- Pei, H.; Sun, Y.; Huang, H.; Zhang, W.; Sheng, J.; Zhang, Z. Weed Detection in Maize Fields by UAV Images Based on Crop Row Preprocessing and Improved YOLOv4. Agriculture 2022, 12, 975. [Google Scholar] [CrossRef]

- Hu, C.; Thomasson, J.A.; Bagavathiannan, M.V. A Powerful Image Synthesis and Semi-Supervised Learning Pipeline for Site-Specific Weed Detection. Comput. Electron. Agric. 2021, 190, 106423. [Google Scholar]

- Pérez-Ortiz, M.; Peña, J.; Gutiérrez, P.A.; Torres-Sánchez, J.; Hervás-Martínez, C.; López-Granados, F. A Semi-Supervised System for Weed Mapping in Sunflower Crops Using Unmanned Aerial Vehicles and a Crop Row Detection Method. Appl. Soft Comput. 2015, 37, 533–544. [Google Scholar]

- Teimouri, N.; Jørgensen, R.N.; Green, O. Novel Assessment of Region-Based CNNs for Detecting Monocot/Dicot Weeds in Dense Field Environments. Agronomy 2022, 12, 1167. [Google Scholar]

- Liu, J.; Abbas, I.; Noor, R.S. Development of Deep Learning-Based Variable Rate Agrochemical Spraying System for Targeted Weeds Control in Strawberry Crop. Agronomy 2021, 11, 1480. [Google Scholar] [CrossRef]

- Lakhiar, I.A.; Yan, H.; Zhang, C.; Wang, G.; He, B.; Hao, B.; Han, Y.; Wang, B.; Bao, R.; Syed, T.N.; et al. A Review of Precision Irrigation Water-Saving Technology under Changing Climate for Enhancing Water Use Efficiency, Crop Yield, and Environmental Footprints. Agriculture 2024, 14, 1141. [Google Scholar] [CrossRef]

- Chauhdary, J.N.; Li, H.; Jiang, Y.; Pan, X.; Hussain, Z.; Javaid, M.; Rizwan, M. Advances in Sprinkler Irrigation: A Review in the Context of Precision Irrigation for Crop Production. Agronomy 2023, 14, 47. [Google Scholar] [CrossRef]

- Elbeltagi, A.; Srivastava, A.; Deng, J.; Li, Z.; Raza, A.; Khadke, L.; Yu, Z.; El-Rawy, M. Forecasting Vapor Pressure Deficit for Agricultural Water Management Using Machine Learning in Semi-Arid Environments. Agric. Water Manag. 2023, 283, 108302. [Google Scholar]

- Zhu, X.; Chikangaise, P.; Shi, W.; Chen, W.H.; Yuan, S. Review of Intelligent Sprinkler Irrigation Technologies for Remote Autonomous System. Int. J. Agric. Biol. Eng. 2018, 11, 23–30. [Google Scholar]

- Kang, C.; Mu, X.; Seffrin, A.N.; Di Gioia, F.; He, L. A Recursive Segmentation Model for Bok Choy Growth Monitoring with Internet of Things (IoT) Technology in Controlled Environment Agriculture. Comput. Electron. Agric. 2025, 230, 109866. [Google Scholar]

- Chen, T.; Yin, H. Camera-Based Plant Growth Monitoring for Automated Plant Cultivation with Controlled Environment Agriculture. Smart Agric. Technol. 2024, 8, 100449. [Google Scholar]

- Li, C.; Adhikari, R.; Yao, Y.; Miller, A.G.; Kalbaugh, K.; Li, D.; Nemali, K. Measuring Plant Growth Characteristics Using Smartphone Based Image Analysis Technique in Controlled Environment Agriculture. Comput. Electron. Agric. 2020, 168, 105123. [Google Scholar]

- Zhang, T.; Zhou, J.; Liu, W.; Yue, R.; Yao, M.; Shi, J.; Hu, J. Seedling-YOLO: High-Efficiency Target Detection Algorithm for Field Broccoli Seedling Transplanting Quality Based on YOLOv7-Tiny. Agronomy 2024, 14, 931. [Google Scholar]

- Farooque, A.A.; Afzaal, H.; Benlamri, R.; Al-Naemi, S.; MacDonald, E.; Abbas, F.; MacLeod, K.; Ali, H. Red-Green-Blue to Normalized Difference Vegetation Index Translation: A Robust and Inexpensive Approach for Vegetation Monitoring Using Machine Vision and Generative Adversarial Networks. Precis. Agric. 2023, 24, 1097–1115. [Google Scholar]

- Fawakherji, M.; Suriani, V.; Nardi, D.; Bloisi, D.D. Shape and Style GAN-Based Multispectral Data Augmentation for Crop/Weed Segmentation in Precision Farming. Crop Prot. 2024, 184, 106848. [Google Scholar]

- Kong, J.; Ryu, Y.; Jeong, S.; Zhong, Z.; Choi, W.; Kim, J.; Lee, K.; Lim, J.; Jang, K.; Chun, J.; et al. Super Resolution of Historic Landsat Imagery Using a Dual Generative Adversarial Network (GAN) Model with CubeSat Constellation Imagery for Spatially Enhanced Long-Term Vegetation Monitoring. ISPRS J. Photogramm. Remote Sens. 2023, 200, 1–23. [Google Scholar]

- Li, X.; Li, X.; Zhang, M.; Dong, Q.; Zhang, G.; Wang, Z.; Wei, P. SugarcaneGAN: A Novel Dataset Generating Approach for Sugarcane Leaf Diseases Based on Lightweight Hybrid CNN-Transformer Network. Comput. Electron. Agric. 2024, 219, 108762. [Google Scholar]

- Mohamed, E.S.; Belal, A.; Abd-Elmabod, S.K.; El-Shirbeny, M.A.; Gad, A.; Zahran, M.B. Smart Farming for Improving Agricultural Management. Egypt. J. Remote Sens. Space Sci. 2021, 24, 971–981. [Google Scholar]

- Ganatra, N.; Patel, A. Deep Learning Methods and Applications for Precision Agriculture. In Machine Learning for Predictive Analysis; Springer: Singapore, 2020; pp. 515–527. [Google Scholar]

- Murindanyi, S.; Nakatumba-Nabende, J.; Sanya, R.; Nakibuule, R.; Katumba, A. Enhanced Infield Agriculture with Interpretable Machine Learning Approaches for Crop Classification. arXiv 2024, arXiv:2408.12426. [Google Scholar]

- Li, L.; Li, J.; Chen, D.; Pu, L.; Yao, H.; Huang, Y. VLLFL: A Vision-Language Model Based Lightweight Federated Learning Framework for Smart Agriculture. arXiv 2025, arXiv:2504.13365. [Google Scholar]

- Zhang, R.; Li, X. Edge Computing Driven Data Sensing Strategy in the Entire Crop Lifecycle for Smart Agriculture. Sensors 2021, 21, 7502. [Google Scholar] [CrossRef]

- Deka, S.A.; Phodapol, S.; Gimenez, A.M.; Fernandez-Ayala, V.N.; Wong, R.; Yu, P.; Tan, X.; Dimarogonas, D.V. Enhancing Precision Agriculture Through Human-in-the-Loop Planning and Control. In Proceedings of the 2024 IEEE 20th International Conference on Automation Science and Engineering (CASE), CASE 2024, Bari, Italy, 28 August–1 September 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 78–83. [Google Scholar]

- Zhang, H.; Wang, L.; Tian, T.; Yin, J. A Review of Unmanned Aerial Vehicle Low-Altitude Remote Sensing (UAV-LARS) Use in Agricultural Monitoring in China. Remote Sens. 2021, 13, 1221. [Google Scholar]

- Sishodia, R.P.; Ray, R.L.; Singh, S.K. Applications of Remote Sensing in Precision Agriculture: A Review. Remote Sens. 2020, 12, 3136. [Google Scholar]

- Mammarella, M.; Comba, L.; Biglia, A.; Dabbene, F.; Gay, P. Cooperation of Unmanned Systems for Agricultural Applications: A Theoretical Framework. Biosyst. Eng. 2022, 223, 61–80. [Google Scholar]

- Delavarpour, N.; Koparan, C.; Nowatzki, J.; Bajwa, S.; Sun, X. A Technical Study on UAV Characteristics for Precision Agriculture Applications and Associated Practical Challenges. Remote Sens. 2021, 13, 1204. [Google Scholar]

- Matese, A.; Czarnecki, J.M.P.; Samiappan, S.; Moorhead, R. Are Unmanned Aerial Vehicle-Based Hyperspectral Imaging and Machine Learning Advancing Crop Science? Trends Plant Sci. 2024, 29, 196–209. [Google Scholar] [PubMed]

- Moriya, É.A.S.; Imai, N.N.; Tommaselli, A.M.G.; Berveglieri, A.; Santos, G.H.; Soares, M.A.; Marino, M.; Reis, T.T. Detection and Mapping of Trees Infected with Citrus Gummosis Using UAV Hyperspectral Data. Comput. Electron. Agric. 2021, 188, 106298. [Google Scholar]

- Zhang, J.; Zhang, D.; Liu, J.; Zhou, Y.; Cui, X.; Fan, X. DSCONV-GAN: A UAV-BASED Model for Verticillium Wilt Disease Detection in Chinese Cabbage in Complex Growing Environments. Plant Methods 2024, 20, 186. [Google Scholar]

- Hu, G.; Ye, R.; Wan, M.; Bao, W.; Zhang, Y.; Zeng, W. Detection of Tea Leaf Blight in Low-Resolution UAV Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5601218. [Google Scholar]

- Yeh, J.F.; Lin, K.M.; Yuan, L.C.; Hsu, J.M. Automatic Counting and Location Labeling of Rice Seedlings from Unmanned Aerial Vehicle Images. Electronics 2024, 13, 273. [Google Scholar] [CrossRef]

- Feng, Z.; Cai, J.; Wu, K.; Li, Y.; Yuan, X.; Duan, J.; He, L.; Feng, W. Enhancing the Accuracy of Monitoring Effective Tiller Counts of Wheat Using Multi-Source Data and Machine Learning Derived from Consumer Drones. Comput. Electron. Agric. 2025, 232, 110120. [Google Scholar]

- Yu, H.; Weng, L.; Wu, S.; He, J.; Yuan, Y.; Wang, J.; Xu, X.; Feng, X. Time-Series Field Phenotyping of Soybean Growth Analysis by Combining Multimodal Deep Learning and Dynamic modeling. Plant Phenomics 2024, 6, 0158. [Google Scholar]

- Zhao, J.; Li, H.; Chen, C.; Pang, Y.; Zhu, X. Detection of Water Content in Lettuce Canopies Based on Hyperspectral Imaging Technology under Outdoor Conditions. Agriculture 2022, 12, 1796. [Google Scholar] [CrossRef]

- Fareed, N.; Das, A.K.; Flores, J.P.; Mathew, J.J.; Mukaila, T.; Numata, I.; Janjua, U.U.R. UAS Quality Control and Crop Three-Dimensional Characterization Framework Using Multi-Temporal LiDAR Data. Remote Sens. 2024, 16, 699. [Google Scholar]

- Burchard-Levine, V.; Guerra, J.G.; Borra-Serrano, I.; Nieto, H.; Mesias-Ruiz, G.; Dorado, J.; de Castro, A.I.; Herrezuelo, M.; Mary, B.; Aguirre, E.P.; et al. Evaluating the Utility of Combining High Resolution Thermal, Multispectral and 3D Imagery from Unmanned Aerial Vehicles to Monitor Water Stress in Vineyards. Precis. Agric. 2024, 25, 2447–2476. [Google Scholar]

- Wang, H.; Chen, X.; Zhang, T.; Xu, Z.; Li, J. CCTNet: Coupled CNN and Transformer Network for Crop Segmentation of Remote Sensing Images. Remote Sens. 2022, 14, 1956. [Google Scholar]

- Prasad, A.; Mehta, N.; Horak, M.; Bae, W.D. A Two-Step Machine Learning Approach for Crop Disease Detection Using GAN and UAV Technology. Remote Sens. 2022, 14, 4765. [Google Scholar]

- Niu, B.; Feng, Q.; Chen, B.; Ou, C.; Liu, Y.; Yang, J. HSI-TransUNet: A Transformer Based Semantic Segmentation Model for Crop Mapping from UAV Hyperspectral Imagery. Comput. Electron. Agric. 2022, 201, 107297. [Google Scholar]

- Wu, H.; Zhou, H.; Wang, A.; Iwahori, Y. Precise Crop Classification of Hyperspectral Images Using Multi-Branch Feature Fusion and Dilation-Based MLP. Remote Sens. 2022, 14, 2713. [Google Scholar]

- Hu, X.; Wang, X.; Zhong, Y.; Zhang, L. S3ANet: Spectral-Spatial-Scale Attention Network for End-to-End Precise Crop Classification Based on UAV-Borne H2 Imagery. ISPRS J. Photogramm. Remote Sens. 2022, 183, 147–163. [Google Scholar]

- Reddy, K.K.; Daduvy, A.; Mohana, R.M.; Assiri, B.; Shuaib, M.; Alam, S.; Sheneamer, A. Enhancing Precision Agriculture and Land Cover Classification: A Self-Attention 3D Convolutional Neural Network Approach for Hyperspectral Image Analysis. IEEE Access 2024, 12, 125592–125608. [Google Scholar]

- Rai, N.; Zhang, Y.; Villamil, M.; Howatt, K.; Ostlie, M.; Sun, X. Agricultural Weed Identification in Images and Videos by Integrating Optimized Feep Learning Architecture on an Edge Computing Technology. Comput. Electron. Agric. 2024, 216, 108442. [Google Scholar]

- Zhang, K.; Yuan, D.; Yang, H.; Zhao, J.; Li, N. Synergy of Sentinel-1 and Sentinel-2 Imagery for Crop Classification Based on DC-CNN. Remote Sens. 2023, 15, 2727. [Google Scholar]

- Ma, X.; Li, L.; Wu, Y. Deep-Learning-Based Method for the Identification of Typical Crops Using Dual-Polarimetric Synthetic Aperture Radar and High-Resolution Optical Images. Remote Sens. 2025, 17, 148. [Google Scholar]

- Wang, R.; Zhao, J.; Yang, H.; Li, N. Inversion of Soil Moisture on Farmland Areas Based on SSA-CNN Using Multi-Source Remote Sensing Data. Remote Sens. 2023, 15, 2515. [Google Scholar]

- Fu, H.; Lu, J.; Li, J.; Zou, W.; Tang, X.; Ning, X.; Sun, Y. Winter Wheat Yield Prediction Using Satellite Remote Sensing Data and Deep Learning Models. Agronomy 2025, 15, 205. [Google Scholar] [CrossRef]

- Ong, P.; Chen, S.; Tsai, C.Y.; Wu, Y.J.; Shen, Y.T. A Non-Destructive Methodology for Determination of Cantaloupe Sugar Content using Machine Vision and Deep Learning. J. Sci. Food Agric. 2022, 102, 6586–6595. [Google Scholar] [PubMed]

- Islam, T.; Islam, R.; Uddin, P.; Ulhaq, A. Spectrally Segmented-Enhanced Neural network for Precise Land Cover Object Classification in Hyperspectral Imagery. Remote Sens. 2024, 16, 807. [Google Scholar]

- Dericquebourg, E.; Hafiane, A.; Canals, R. Generative-Model-Based Data Labeling for Deep Network Regression: Application to Seed Maturity Estimation from UAV Multispectral Images. Remote Sens. 2022, 14, 5238. [Google Scholar]

- Ma, Z.; Yang, S.; Li, J.; Qi, J. Research on Slam Localization Algorithm for Orchard Dynamic Vision Based on YOLOD-SLAM2. Agriculture 2024, 14, 1622. [Google Scholar] [CrossRef]

- Wang, J.; Gao, Z.; Zhang, Y.; Zhou, J.; Wu, J.; Li, P. Real-Time Detection and Location of Potted Flowers Based on a ZED Camera and a YOLO V4-Tiny Deep Learning Algorithm. Horticulturae 2021, 8, 21. [Google Scholar]

- Mwitta, C.; Rains, G.C.; Prostko, E. Evaluation of Inference Performance of Deep Learning Models for Real-Time Weed Detection in an Embedded Computer. Sensors 2024, 24, 514. [Google Scholar] [CrossRef]

- Khater, O.H.; Siddiqui, A.J.; Hossain, M.S. EcoWeedNet: A Lightweight and Automated Weed Detection Method for Sustainable Next-Generation Agricultural Consumer Electronics. arXiv 2025, arXiv:2502.00205. [Google Scholar]

- Zhang, T.; Hu, D.; Wu, C.; Liu, Y.; Yang, J.; Tang, K. Large-Scale Apple Orchard Mapping from Multi-Source Data Using the Semantic Segmentation Model with Image- to- Image Translation and Transfer Learning. Comput. Electron. Agric. 2023, 213, 108204. [Google Scholar]

- Galodha, A.; Vashisht, R.; Nidamanuri, R.R.; Ramiya, A.M. Convolutional Neural Network (CNN) for Crop-Classification of Drone Acquired Hyperspectral Imagery. In Proceedings of the IGARSS 2022–2022 IEEE International Geoscience and Remote Sensing Symposium, Brisbane, Australia, 3–8 August 2025; IEEE: Piscataway, NJ, USA, 2022; pp. 7741–7744. [Google Scholar]

- Luo, P.; Niu, Y.; Tang, D.; Huang, W.; Luo, X.; Mu, J. A Computer Vision Solution for Behavioral Recognition in Red Pandas. Sci. Rep. 2025, 15, 9201. [Google Scholar]

- Kuncheva, L. Animal ReIdentification Using Restricted Set Classification. Ecol. Inform. 2021, 62, 101225. [Google Scholar]

- Hsieh, Y.Z.; Lee, P.Y. Analysis of Oplegnathus Punctatus Body Parameters Using Underwater Stereo Vision. IEEE Trans. Emerg. Top. Comput. Intell. 2023, 8, 879–891. [Google Scholar]

- Chen, C.; Zhu, W.; Norton, T. Behaviour Recognition of Pigs and Cattle: Journey from Computer Vision to Deep Learning. Comput. Electron. Agric. 2021, 187, 106255. [Google Scholar]

- Huang, X.; Hu, Z.; Qiao, Y.; Sukkarieh, S. Deep Learning-Based Cow Tail Detection and Tracking for Precision Livestock Farming. IEEE/ASME Trans. Mechatron. 2022, 28, 1213–1221. [Google Scholar]

- Lee, J.h.; Choi, Y.H.; Lee, H.s.; Park, H.J.; Hong, J.S.; Lee, J.H.; Sa, S.J.; Kim, Y.M.; Kim, J.E.; Jeong, Y.D.; et al. Enhanced Swine Behavior Detection with YOLOs and a Mixed Efficient Layer Aggregation Network in Real Time. Animals 2024, 14, 3375. [Google Scholar] [CrossRef]

- Alameer, A.; Buijs, S.; O’Connell, N.; Dalton, L.; Larsen, M.; Pedersen, L.; Kyriazakis, I. Automated Detection and Quantification of Contact Behaviour in Pigs Using Deep Learning. Biosyst. Eng. 2022, 224, 118–130. [Google Scholar]

- Zheng, Z.; Qin, L. PrunedYOLO-Tracker: An Efficient Multi-Cows Basic Behavior Recognition and Tracking Technique. Comput. Electron. Agric. 2023, 213, 108172. [Google Scholar]

- Pan, Y.; Jin, H.; Gao, J.; Rauf, H.T. Identification of Buffalo Breeds Using Self-Activated-Based Improved Convolutional Neural Networks. Agriculture 2022, 12, 1386. [Google Scholar]

- Nguyen, A.H.; Holt, J.P.; Knauer, M.T.; Abner, V.A.; Lobaton, E.J.; Young, S.N. Towards Rapid Weight Assessment of Finishing Pigs Using a Handheld, Mobile RGB-D Camera. Biosyst. Eng. 2023, 226, 155–168. [Google Scholar]

- Li, L.; Shi, G.; Jiang, T. Fish Detection Method Based on Improved YOLOv5. Aquac. Int. 2023, 31, 2513–2530. [Google Scholar]

- Chang, C.C.; Ubina, N.A.; Cheng, S.C.; Lan, H.Y.; Chen, K.C.; Huang, C.C. A Two-Mode Underwater Smart Sensor Object for Precision Aquaculture Based on AIoT Technology. Sensors 2022, 22, 7603. [Google Scholar]

- Chieza, K.; Brown, D.; Connan, J.; Salie, D. Automated Fish Detection in Underwater Environments: Performance Analysis of YOLOv8 and YOLO-NAS. In Communications in Computer and Information Science, Proceedings of the 5th Southern African Conference for Artificial Intelligence Research, SACAIR 2024, Bloemfontein, South Africa, 2–6 December 2024; Gerber, A., Maritz, J., Pillay, A., Eds.; Springer: Cham, Switzerland, 2025; Volume 2326, pp. 334–351. [Google Scholar]

- Zhao, Z.; Liu, Y.; Sun, X.; Liu, J.; Yang, X.; Zhou, C. Composited FishNet: Fish Detection and Species Recognition from Low-Quality Underwater Videos. IEEE Trans. Image Process. 2021, 30, 4719–4734. [Google Scholar]

- Yu, X.; Wang, Y.; An, D.; Wei, Y. Identification Methodology of Special Behaviors for Fish School Based on Spatial Behavior Characteristics. Comput. Electron. Agric. 2021, 185, 106169. [Google Scholar]

- Cai, K.; Yang, Z.; Gao, T.; Liang, M.; Liu, P.; Zhou, S.; Pang, H.; Liu, Y. Efficient Recognition of Fish Feeding Behavior: A Novel Two-Stage Framework Pioneering Intelligent Aquaculture Strategies. Comput. Electron. Agric. 2024, 224, 109129. [Google Scholar]

- Zhao, T.; Zhang, G.; Zhong, P.; Shen, Z. DMDnet: A Decoupled Multi-Scale Discriminant Model for Cross-Domain Fish Detection. Biosyst. Eng. 2023, 234, 32–45. [Google Scholar]

- Sarkar, P.; De, S.; Gurung, S.; Dey, P. UICE-MIRNet Guided Image Enhancement for Underwater Object Detection. Sci. Rep. 2024, 14, 22448. [Google Scholar]

- Sanhueza, M.I.; Montes, C.S.; Sanhueza, I.; Montoya-Gallardo, N.; Escalona, F.; Luarte, D.; Escribano, R.; Torres, S.; Godoy, S.E.; Amigo, J.M.; et al. VIS-NIR Hyperspectral Imaging and Multivariate Analysis for Direct Characterization of Pelagic Fish Species. Spectrochim. Acta Part Mol. Biomol. Spectrosc. 2025, 328, 125451. [Google Scholar]

- Xu, X.; Li, W.; Duan, Q. Transfer Learning and SE-ResNet152 Networks-Based for Small-Scale Unbalanced Fish Species Identification. Comput. Electron. Agric. 2021, 180, 105878. [Google Scholar]

- Bohara, K.; Joshi, P.; Acharya, K.P.; Ramena, G. Emerging Technologies Revolutionising Disease Diagnosis and Monitoring in Aquatic Animal Health. Rev. Aquac. 2024, 16, 836–854. [Google Scholar]

- Zhou, C.; Wang, C.; Sun, D.; Hu, J.; Ye, H. An Automated Lightweight Approach for Detecting Dead Fish in a Recirculating Aquaculture System. Aquaculture 2025, 594, 741433. [Google Scholar]

- Li, Y.; Tan, H.; Deng, Y.; Zhou, D.; Zhu, M. Hypoxia Monitoring of Fish in Intensive Aquaculture Based on Underwater Multi-Target Tracking. Comput. Electron. Agric. 2025, 232, 110127. [Google Scholar]

- Hu, X.; Liu, Y.; Zhao, Z.; Liu, J.; Yang, X.; Sun, C.; Chen, S.; Li, B.; Zhou, C. Real-Time Detection of Uneaten Feed Pellets in Underwater Images for Aquaculture Using an Improved YOLO-V4 Network. Comput. Electron. Agric. 2021, 185, 106135. [Google Scholar]

- Schellewald, C.; Saad, A.; Stahl, A. Mouth Opening Frequency of Salmon from Underwater Video Exploiting Computer Vision. IFAC-PapersOnLine 2024, 58, 313–318. [Google Scholar]

- Yu, H.; Song, H.; Xu, L.; Li, D.; Chen, Y. SED-RCNN-BE: A SE-Dual Channel RCNN Network Optimized Binocular Estimation Model for Automatic Size Estimation of Free Swimming Fish in Aquaculture. Expert Syst. Appl. 2024, 255, 124519. [Google Scholar]

- Xun, Z.; Wang, X.; Xue, H.; Zhang, Q.; Yang, W.; Zhang, H.; Li, M.; Jia, S.; Qu, J.; Wang, X. Deep Machine Learning Identified Fish Flesh Using Multispectral Imaging. Curr. Res. Food Sci. 2024, 9, 100784. [Google Scholar]

- Yang, Y.; Li, D.; Zhao, S. A Novel Approach for Underwater Fish Segmentation in Complex Scenes Based on Multi-Levels Triangular Atrous Convolution. Aquac. Int. 2024, 32, 5215–5240. [Google Scholar]

- An, S.; Wang, L.; Wang, L. MINM: Marine Intelligent Netting Monitoring Using Multi-Scattering Model and Multi-Space Transformation. ISA Trans. 2024, 150, 278–297. [Google Scholar]

- Riego del Castillo, V.; Sánchez-González, L.; Campazas-Vega, A.; Strisciuglio, N. Vision-Based Module for Herding with a Sheepdog Robot. Sensors 2022, 22, 5321. [Google Scholar] [CrossRef] [PubMed]

- Martin, K.E.; Blewett, T.A.; Burnett, M.; Rubinger, K.; Standen, E.M.; Taylor, D.S.; Trueman, J.; Turko, A.J.; Weir, L.; West, C.M.; et al. The Importance of Familiarity, Relatedness, and Vision in Social Recognition in Wild and Laboratory Populations of a Selfing, Hermaphroditic Mangrove Fish. Behav. Ecol. Sociobiol. 2022, 76, 34. [Google Scholar]

- Desgarnier, L.; Mouillot, D.; Vigliola, L.; Chaumont, M.; Mannocci, L. Putting Eagle Rays on the Map by Coupling Aerial Video-Surveys and Deep Learning. Biol. Conserv. 2022, 267, 109494. [Google Scholar]

- Shreesha, S.; Pai, M.M.; Pai, R.M.; Verma, U. Pattern Detection and Prediction Using Deep Learning for Intelligent Decision Support to Identify Fish Behaviour in Aquaculture. Ecol. Inform. 2023, 78, 102287. [Google Scholar]

- Li, G.; Huang, Y.; Chen, Z.; Chesser, G.D., Jr.; Purswell, J.L.; Linhoss, J.; Zhao, Y. Practices and Applications of Convolutional Neural Network-Based Computer Vision Systems in Animal Farming: A Review. Sensors 2021, 21, 1492. [Google Scholar] [CrossRef]

- Zhang, C.; Liu, C.; Zeng, S.; Yang, W.; Chen, Y. Hyperspectral Imaging Coupled with Deep Learning Model for Visualization and Detection of Early Bruises on Apples. J. Food Compos. Anal. 2024, 134, 106489. [Google Scholar]

- Cheng, J.; Sun, J.; Yao, K.; Xu, M.; Dai, C. Multi-Task Convolutional Neural Network for Simultaneous Monitoring of Lipid and Protein Oxidative Damage in Frozen-Thawed Pork Using Hyperspectral Imaging. Meat Sci. 2023, 201, 109196. [Google Scholar]

- Tan, H.; Ma, B.; Xu, Y.; Dang, F.; Yu, G.; Bian, H. An Innovative Variant Based on Generative Adversarial Network (GAN): Regression GAN Combined with Hyperspectral Imaging to Predict Pesticide Residue Content of Hami Melon. Spectrochim. Acta Part A-Mol. Biomol. Spectrosc. 2025, 325, 125086. [Google Scholar]

- Stasenko, N.; Shukhratov, I.; Savinov, M.; Shadrin, D.; Somov, A. Deep Learning in Precision Agriculture: Artificially Generated VNIR Images Segmentation for Early Postharvest Decay Prediction in Apples. Entropy 2023, 25, 987. [Google Scholar] [CrossRef]

- Li, X.; Xue, S.; Li, Z.; Fang, X.; Zhu, T.; Ni, C. A Candy Defect Detection Method Based on StyleGAN2 and Improved YOLOv7 for Imbalanced Data. Foods 2024, 13, 3343. [Google Scholar] [CrossRef]

- Xu, B.; Cui, X.; Ji, W.; Yuan, H.; Wang, J. Apple Grading Method Design and Implementation for Automatic Grader Based on Improved YOLOv5. Agriculture 2023, 13, 124. [Google Scholar] [CrossRef]

- Li, A.; Wang, C.; Ji, T.; Wang, Q.; Zhang, T. D3-YOLOv10: Improved YOLOv10-Based Lightweight Tomato Detection Algorithm Under Facility Scenario. Agriculture 2024, 14, 2268. [Google Scholar]

- Zhu, L.; Spachos, P. Support Vector Machine and YOLO for a Mobile Food Grading System. Internet Things 2021, 13, 100359. [Google Scholar]

- Han, F.; Huang, X.; Aheto, J.H.; Zhang, D.; Feng, F. Detection of Beef Adulterated with Pork Using a Low-Cost Electronic Nose Based on Colorimetric Sensors. Foods 2020, 9, 193. [Google Scholar] [CrossRef]

- Huang, X.; Li, Z.; Xiaobo, Z.; Shi, J.; Tahir, H.E.; Xu, Y.; Zhai, X.; Hu, X. Geographical Origin Discrimination of Edible Bird’s Nests Using Smart Handheld Device Based on Colorimetric Sensor Array. J. Food Meas. Charact. 2020, 14, 514–526. [Google Scholar]

- Arslan, M.; Zareef, M.; Tahir, H.E.; Guo, Z.; Rakha, A.; Xuetao, H.; Shi, J.; Zhihua, L.; Xiaobo, Z.; Khan, M.R. Discrimination of Rice Varieties Using Smartphone-Based Colorimetric Sensor Arrays and Gas Chromatography Techniques. Food Chem. 2022, 368, 130783. [Google Scholar] [PubMed]

- Guo, Z.; Zou, Y.; Sun, C.; Jayan, H.; Jiang, S.; El-Seedi, H.R.; Zou, X. Nondestructive Determination of Edible Quality and Watercore Degree of Apples by Portable Vis/NIR Transmittance System Combined with CARS-CNN. J. Food Meas. Charact. 2024, 18, 4058–4073. [Google Scholar]

- Srivastava, S.; Sadistap, S. Data Fusion for Fruit Quality Authentication: Combining Non-Destructive Sensing Techniques to Predict Quality Parameters of Citrus Cultivars. J. Food Meas. Charact. 2022, 16, 344–365. [Google Scholar]

- Cai, X.; Zhu, Y.; Liu, S.; Yu, Z.; Xu, Y. FastSegFormer: A Knowledge Distillation-Based Method for Real-Time Semantic Segmentation of Surface Defects in Navel Oranges. Comput. Electron. Agric. 2024, 217, 108604. [Google Scholar]

- Qiu, D.; Guo, T.; Yu, S.; Liu, W.; Li, L.; Sun, Z.; Peng, H.; Hu, D. Classification of Apple Color and Deformity Using Machine Vision Combined with CNN. Agriculture 2024, 14, 978. [Google Scholar] [CrossRef]

- Patel, K.K.; Kar, A.; Khan, M.A. Monochrome Computer Vision for Detecting Common External Defects of Mango. J. Food Sci. Technol. 2021, 58, 4550–4557. [Google Scholar]

- Yang, Z.; Zhai, X.; Zou, X.; Shi, J.; Huang, X.; Li, Z.; Gong, Y.; Holmes, M.; Povey, M.; Xiao, J. Bilayer pH-Sensitive Colorimetric Films with Light-Blocking Ability and Electrochemical Writing Property: Application in Monitoring Crucian Spoilage in Smart Packaging. Food Chem. 2021, 336, 127634. [Google Scholar]

- Xu, Y.; Zhang, W.; Shi, J.; Li, Z.; Huang, X.; Zou, X.; Tan, W.; Zhang, X.; Hu, X.; Wang, X.; et al. Impedimetric Aptasensor Based on Highly Porous Gold for Sensitive Detection of Acetamiprid in Fruits and Vegetables. Food Chem. 2020, 322, 126762. [Google Scholar]

- Wu, Y.; Li, P.; Xie, T.; Yang, R.; Zhu, R.; Liu, Y.; Zhang, S.; Weng, S. Enhanced Quasi-Meshing Hotspot Effect Integrated Embedded Attention Residual Network for Culture-Free SERS Accurate Determination of Fusarium Spores. Biosens. Bioelectron. 2025, 271, 117053. [Google Scholar]

- de Moraes, I.A.; Junior, S.B.; Barbin, D.F. Interpretation and Explanation of Computer Vision Classification of Carambola (Averrhoa carambola L.) according to Maturity Stage. Food Res. Int. 2024, 192, 114836. [Google Scholar]

- Wang, F.; Lv, C.; Dong, L.; Li, X.; Guo, P.; Zhao, B. Development of Effective Model for Non-Destructive Detection of Defective Kiwifruit Based on Graded Lines. Front. Plant Sci. 2023, 14, 1170221. [Google Scholar]

- Sarkar, T.; Choudhury, T.; Bansal, N.; Arunachalaeshwaran, V.; Khayrullin, M.; Shariati, M.A.; Lorenzo, J.M. Artificial Intelligence Aided Adulteration Detection and Quantification for Red Chilli Powder. Food Anal. Methods 2023, 16, 721–748. [Google Scholar]

- Nguyen, N.M.T.; Liou, N.S. Detecting Surface Defects of Achacha Fruit (Garcinia humilis) with Hyperspectral Images. Horticulturae 2023, 9, 869. [Google Scholar] [CrossRef]

- Wu, H.; Xie, R.; Hao, Y.; Pang, J.; Gao, H.; Qu, F.; Tian, M.; Guo, C.; Mao, B.; Chai, F. Portable Smartphone-Integrated AuAg Nanoclusters Electrospun Membranes for Multivariate Fluorescent Sensing of Hg²⁺, Cu²⁺ and l-histidine in Water and Food Samples. Food Chem. 2023, 418, 135961. [Google Scholar]

- Sharma, S.; Sirisomboon, P.; K.C, S.; Terdwongworakul, A.; Phetpan, K.; Kshetri, T.B.; Sangwanangkul, P. Near-Infrared Hyperspectral Imaging Combined with Machine Learning for Physicochemical-Based Quality Evaluation of Durian Pulp. Postharvest Biol. Technol. 2023, 200, 112334. [Google Scholar]

- Ji, W.; Zhai, K.; Xu, B.; Wu, J. Green Apple Detection Method Based on Multidimensional Feature Extraction Network Model and Transformer Module. J. Food Prot. 2025, 88, 100397. [Google Scholar]

- Ji, W.; Wang, J.; Xu, B.; Zhang, T. Apple Grading Based on Multi-Dimensional View Processing and Deep Learning. Foods 2023, 12, 2117. [Google Scholar] [CrossRef] [PubMed]

- Nabil, P.; Mohamed, K.; Atia, A. Automating Fruit Quality Inspection Through Transfer Learning and GANs. In Proceedings of the 2024 International Mobile, Intelligent, and Ubiquitous Computing Conference (MIUCC), Cairo, Egypt, 13–14 November 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 401–406. [Google Scholar]

- Hao, J.; Zhao, Y.; Peng, Q. A Specular Highlight Removal Algorithm for Quality Inspection of Fresh Fruits. Remote Sens. 2022, 14, 3215. [Google Scholar]

- Fakhrou, A.; Kunhoth, J.; Al Maadeed, S. Smartphone-Based Food Recognition System Using Multiple Deep CNN Models. Multimed. Tools Appl. 2021, 80, 33011–33032. [Google Scholar]

- Zhao, Y.; Qin, H.; Xu, L.; Yu, H.; Chen, Y. A Review of Deep Learning-Based Stereo Vision Techniques for Phenotype Feature and Behavioral Analysis of Fish in Aquaculture. Artif. Intell. Rev. 2025, 58, 7. [Google Scholar]

- Zha, J. Artificial Intelligence in Agriculture. In Journal of Physics: Conference Series; IOP Publishing: Bristol, UK, 2020; Volume 1693, p. 012058. [Google Scholar]

- Salman, Z.; Muhammad, A.; Piran, M.J.; Han, D. Crop-Saving with AI: Latest Trends in Deep Learning Techniques for Plant Pathology. Front. Plant Sci. 2023, 14, 1224709. [Google Scholar]

- Sharma, R. Artificial Intelligence in Agriculture: A Review. In Proceedings of the 2021 5th International Conference on Intelligent Computing and Control Systems (ICICCS), Madurai, India, 6–8 May 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 937–942. [Google Scholar]

- Coulibaly, S.; Kamsu-Foguem, B.; Kamissoko, D.; Traore, D. Deep Learning for Precision Agriculture: A Bibliometric Analysis. Intell. Syst. Appl. 2022, 16, 200102. [Google Scholar]

- Subeesh, A.; Mehta, C. Automation and Digitization of Agriculture Using Artificial Intelligence and Internet of Things. Artif. Intell. Agric. 2021, 5, 278–291. [Google Scholar]

- Tian, H.; Wang, T.; Liu, Y.; Qiao, X.; Li, Y. Computer Vision Technology in Agricultural Automation—A Review. Inf. Process. Agric. 2020, 7, 1–19. [Google Scholar]

- Khan, A.A.; Laghari, A.A.; Awan, S.A. Machine Learning in Computer Vision: A Review. EAI Endorsed Trans. Scalable Inf. Syst. 2021, 8, e4. [Google Scholar]

- Ding, W.; Abdel-Basset, M.; Alrashdi, I.; Hawash, H. Next Generation of Computer Vision for Plant Disease Monitoring in Precision Agriculture: A Contemporary Survey, Taxonomy, Experiments, and Future Direction. Inf. Sci. 2024, 665, 120338. [Google Scholar]

- Zhang, J.; Kang, N.; Qu, Q.; Zhou, L.; Zhang, H. Automatic Fruit Picking Technology: A Comprehensive Review of Research Advances. Artif. Intell. Rev. 2024, 57, 54. [Google Scholar]

- Kassim, M.R.M. IoT Applications in Smart Agriculture: Issues and Challenges. In Proceedings of the 2020 IEEE Conference on Open Systems (ICOS), Kota Kinabalu, Malaysia, 17–19 November 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 19–24. [Google Scholar]

- Wang, T.; Chen, B.; Zhang, Z.; Li, H.; Zhang, M. Applications of Machine Vision in Agricultural Robot Navigation: A Review. Comput. Electron. Agric. 2022, 198, 107085. [Google Scholar]

- Akhter, R.; Sofi, S.A. Precision Agriculture Using IoT Data Analytics and Machine Learning. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 5602–5618. [Google Scholar]

- Memon, M.S.; Chen, S.; Shen, B.; Liang, R.; Tang, Z.; Wang, S.; Zhou, W.; Memon, N. Automatic Visual Recognition, Detection and Classification of Weeds in Cotton Fields Based on Machine Vision. Crop Prot. 2025, 187, 106966. [Google Scholar]

- Tzachor, A.; Devare, M.; King, B.; Avin, S.; Ó hÉigeartaigh, S. Responsible Artificial Intelligence in Agriculture Requires Systemic Understanding of Risks and Externalities. Nat. Mach. Intell. 2022, 4, 104–109. [Google Scholar]

- Karunathilake, E.; Le, A.T.; Heo, S.; Chung, Y.S.; Mansoor, S. The Path to Smart Farming: Innovations and Opportunities in Precision Agriculture. Agriculture 2023, 13, 1593. [Google Scholar] [CrossRef]

- Zhang, Z.; Lu, Y.; Zhao, Y.; Pan, Q.; Jin, K.; Xu, G.; Hu, Y. TS-YOLO: An All-Day and Lightweight Tea Canopy Shoots Detection Model. Agronomy 2023, 13, 1411. [Google Scholar]

- Lowder, S.K.; Sánchez, M.V.; Bertini, R. Which Farms Feed the World and Has Farmland Become More Concentrated? World Dev. 2021, 142, 105455. [Google Scholar]

| Study | Technique | Methods | Advantages | Limitations |
|---|---|---|---|---|
| [92] | Classical CV | Colour segmentation, thresholding, morphological operations | Simple, computationally inexpensive, intuitive feature extraction | Sensitive to illumination, requires manual features, lacks scalability |
| [94,101,104] | Deep Learning | ResNet, VGGNet, Inception, YOLO variants, Hybrid CNN models, 3D-CNN, CNN-LSTM | High accuracy, automatic feature extraction, suitable for complex patterns | Requires extensive datasets, performance degrades with unseen conditions |
| [109,113] | Generative Adversarial Networks | DCGAN, CycleGAN, spatiotemporal GANs | Addresses data scarcity and class imbalance, enhances dataset diversity, facilitates cross-domain adaptation | Training instability, potential mode collapse, semantic consistency challenges |
| [115,116] | Multimodal Integration | Fusion of hyperspectral, thermal, and RGB imagery, conditional GANs for modality translation | Improved resilience to sensor variability, enriched feature representation | Complex data alignment, sensor integration challenges |
| [118,120] | Explainable and Edge AI | SHAP, LIME, edge AI accelerators, quantised GAN architectures | Interpretability, real-time deployment, reduced computational overhead | Limited semantic consistency, stability concerns |
| [121,123] | Foundation Models and VLMs | Few-shot learning, GPT-Vision, dialogue-based advisory systems | Improved generalisation, accessibility, enhanced human interaction | Data privacy, resource-intensive pretraining |

| Study | Methodology | Core Technology | Typical Applications |
|---|---|---|---|
| [126,127] | Classical CV | Colour thresholding, texture analysis, visual saliency, morphology | Citrus ripeness, coconut maturity assessment |
| [128,129,132,133,134,135] | Deep Learning | CNNs, YOLO variants | Fruit detection (apple, strawberry, tomato), harvester feed prediction |
| [139,140,141] | Multimodal Sensing | Hyperspectral, thermal, and RGB data fusion; hyperspectral imaging | Grape/melon biochemical maturity (chlorophyll, sugar); robust sensing |
| [136] | Transformer-based Models | VFMs, transformers, attention | Overlapping fruit resolution, occlusion handling in dense clusters |