Abstract

The recent advancements and emergence of rapidly evolving AI models, such as large language models (LLMs), have sparked interest among researchers and professionals. These models are ubiquitously being fine-tuned and applied across various fields such as healthcare, customer service and support, education, automated driving, and smart factories. This often leads to an increased level of complexity and challenges concerning the trustworthiness of these models, such as the generation of toxic content and hallucinations with high confidence leading to serious consequences. The European Union Artificial Intelligence Act (AI Act) is a regulation concerning artificial intelligence. The EU AI Act has proposed a comprehensive set of guidelines to ensure the responsible usage and development of general-purpose AI systems (such as LLMs) that may pose potential risks. The need arises for strengthened efforts to ensure that these high-performing LLMs adhere to the seven trustworthiness aspects (data governance, record-keeping, transparency, human-oversight, accuracy, robustness, and cybersecurity) recommended by the AI Act. Our study systematically maps research, focusing on identifying the key trends in developing LLMs across different application domains to address the aspects of AI Act-based trustworthiness. Our study reveals the recent trends that indicate a growing interest in emerging models such as LLaMa and BARD, reflecting a shift in research priorities. GPT and BERT remain the most studied models, and newer alternatives like Mistral and Claude remain underexplored. Trustworthiness aspects like accuracy and transparency dominate the research landscape, while cybersecurity and record-keeping remain significantly underexamined. Our findings highlight the urgent need for a more balanced, interdisciplinary research approach to ensure LLM trustworthiness across diverse applications. 
Expanding studies into underexplored, high-risk domains and fostering cross-sector collaboration can bridge existing gaps. Furthermore, this study also reveals domains (like telecommunication) which are underrepresented, presenting considerable research gaps and indicating a potential direction for the way forward.

Keywords:

large language models (LLMs); trustworthiness; EU AI Act; GPT; BERT; LLaMa; BARD; transparency; accuracy; systematic mapping study

1. Introduction

The development of large language models (LLMs) has been driven by advancements in deep learning [1,2], the availability of vast public datasets [3,4,5], and powerful resources capable of processing big data with complex algorithms. These models have significantly enhanced machines’ ability to process and understand context in long sequences of text, allowing them to grasp nuances and generate human-like responses [6]. In particular, Google’s Bidirectional Encoder Representations from Transformers (BERT) [7] and OpenAI’s Generative Pre-trained Transformer (GPT) series have revolutionized natural language processing by excelling at a wide range of language tasks across applications [8]. Moreover, fine-tuning LLMs on specific downstream tasks has led to their widespread adoption in industries like customer service [9], healthcare, education [10], and finance [11].

Despite their remarkable capabilities, the increasing deployment of these models in high-stakes domains has raised significant concerns regarding their trustworthiness, primarily due to their propensity for hallucinations and inherent biases. As a result, there is a growing emphasis on establishing principles for the responsible development and deployment of LLMs. The EU Trustworthy AI framework [12] outlines core principles such as fairness, transparency, accountability, and safety. Complementing this, the EU AI Act [13], which came into force in August 2024, is considered the first-ever legal framework on AI; it defines seven key attributes of trustworthiness: human oversight, record-keeping, data and data governance, transparency, accuracy, robustness, and cybersecurity. This Act categorizes AI systems by risk levels, imposing strict requirements on high-risk applications to protect human rights and safety. Moreover, to facilitate the implementation of the law, the European Commission has authorized the CEN and CENELEC Joint Technical Committee 21 (JTC 21) to develop harmonized standards that will provide companies with a legal presumption of conformity [14]. Unlike existing strategies around AI governance by principal players (United States, China, Canada, United Kingdom, and the G7), the EU AI Act introduces clear, enforceable obligations for high-risk AI systems and a sophisticated product safety framework in a hierarchical structure [15]. Through harmonized standards, the AI Act codifies the EU’s rigorous requirements for trustworthy AI, providing a unified framework that serves as a global benchmark in the field. Furthermore, Ref. [15] discusses the EU AI Act, emphasizing the importance of interdisciplinary approaches, public trust, and ethical considerations in the development and regulation of AI systems to ensure their responsible deployment and societal benefits.

However, many LLMs do not meet these standards due to limitations in tailored methodologies or data availability [16]. For example, while proprietary models often outperform open source ones in certain dimensions, such as safety, they still fall short in areas such as bias mitigation and transparency [16]. Moreover, innovative approaches like the Think–Solve–Verify (TSV) framework have demonstrated improvements in reasoning tasks but highlight persistent challenges in introspective self-awareness and collaborative reasoning [17]. Investigating limitations and shortcomings of LLMs is crucial for understanding where and what trustworthiness measures are most needed [18,19]. For instance, untrustworthy models in telecommunications could lead to operational failures or privacy breaches, erroneous problem-solving, resource misallocation, etc. Similarly, in healthcare or education, biased or unreliable outputs could undermine public confidence or exacerbate inequalities.

The primary objective of this study is to perform a detailed analysis to assess the extent to which large language models adhere to the trustworthiness principles set forth in the EU AI Act [13]. This study also analyzes various application domains where LLMs are utilized to solve tasks with human-level performance. To achieve this, we used a systematic mapping process [20] to construct a structured map of relevant studies in the literature. This process ultimately uncovers underexplored areas and provides a foundation for future studies aimed at addressing critical challenges in ensuring the trustworthiness of LLMs.

The main contributions of this study are as follows:

- A systematic assessment of LLMs, examining both the current state and the most studied trustworthiness aspects across various high-impact domains in the light of the EU AI Act’s trustworthiness dimensions;

- An exploration of emerging trends in the domain-specific LLM applications, highlighting existing gaps and underexplored areas in the development of trustworthy LLMs;

- A systematic review of the applied methods and techniques to identify the type of research contributions presented in studies on LLM trustworthiness.

The rest of this paper is organized as follows: Section 2 outlines the related work, emphasizing the trends in analyzing the trustworthiness aspects of LLMs. Section 3 describes the three-step process (planning, conducting, and documentation) followed for this systematic mapping study. Section 4 elaborates on the six classification schemes adopted for mapping the relevant research studies to the respective research questions. Section 5 presents the analysis of the trends and answers the research questions identified in Section 3.1.1 through visualization. Section 6 highlights the four types of issues that may threaten the validity of the research. Finally, the key takeaways from this study and directions for future research are presented in Section 7.

2. Related Works

Rapid advancements in LLMs in the field of artificial intelligence (AI) have sparked substantial academic and industry interest. A growing body of literature addresses the opportunities and challenges inherent in LLMs, focusing on regulatory frameworks, technical hurdles, domain specialization, and trustworthiness [15].

LLMs have transformed natural language processing (NLP) by achieving state-of-the-art (SOTA) performance on diverse tasks. The work of [21] provides an overview of this transition from task-specific architectures to general-purpose models capable of adapting across domains, emphasizing the role of the pre-training objectives, benchmarks, and transfer learning mechanisms of these models. Expanding on this, Ref. [4] analyzes the historical evolution, training techniques, and applications of LLMs in domains such as healthcare, education, and agriculture; Ref. [22] delves deeper into the mechanisms that enable LLMs to perform complex tasks. They highlight ongoing challenges related to their reasoning abilities, computational demands, and scalability, which remain critical. The work on domain-specialized LLMs presented in [23] introduces a taxonomy of domain-specialization techniques, application areas, and associated challenges, advocating customized approaches to harness the potential of LLMs in specialized fields. These findings align with [24], which analyzes research trends from 2017 to early 2023 in LLMs, analyzing 5000 publications to provide researchers, practitioners, and policy makers with a broad overview of the evolving landscape of LLMs, particularly in the fields of medicine, engineering, social science, and the humanities. These studies provide valuable insights into the advancements in LLMs, yet they fall short of systematically exploring more inclusive applications of LLMs across high-impact domains, such as the communication technologies, automotive, and robotics domains.

Trustworthiness in LLMs is a key focus in the recent literature, with multiple studies addressing associated risks and mitigation strategies. In the work of [25], the authors review debiasing techniques and approaches to mitigate hallucinations, highlighting the importance of ethical oversight and evaluation through a comprehensive taxonomy of mitigation methods, complementing the work of [26] which emphasizes the importance of robust mechanisms to improve transparency and controllability in mitigating bias. Extending the discussion on trust assessment, Ref. [18] advocates for interdisciplinary collaboration to address societal bias, value alignment, and environmental sustainability concerns. In [27], the authors investigate the relationship between model compression and trustworthiness, finding that moderate quantization (e.g., 4-bit) maintains trustworthiness while improving efficiency, whereas excessive compression (e.g., 3-bit) degrades it. Unlike [25], which touches upon several aspects of data handling and pre-processing that relate to improving data quality, this study links algorithmic efficiency to ethical outcomes, showing how quantization choices affect fairness and ethics metrics. Furthermore, the work of [15] discusses varying approaches to AI regulation across different global regions, while our key focus lies on the EU AI Act. In addition to that, the discussion covers LLMs under General Purpose AI Models (GPAI) and details certain obligations imposed on them. Compared to our work, these studies collectively highlight crucial ethical risks and societal implications but lack a detailed examination of LLM trustworthiness based on the specific trustworthiness dimensions outlined in the recent regulatory framework, the EU AI Act.

Regulatory frameworks for AI have been rapidly evolving with technological advancements and ever-growing public concerns. The EU AI Act is one of the first legally binding, comprehensive, and horizontally applied regulatory frameworks for AI systems. AI regulations in other regions of the world (U.S., China, Canada, and South Korea) reflect diverse national and state-level policies and legal traditions and differ in implementation, scope, and enforcement, both among themselves and in comparison to the EU AI Act. The state and federal guidelines of the U.S. offer a more fragmented, industry-specific, and decentralized approach. Federal actions include executive orders (such as on trustworthy AI), the CHIPS and Science Act, and agency guidelines. The states of Colorado and California have proposed or enacted laws for AI accountability, transparency, and anti-discrimination. Colorado has been known to focus on high-risk systems in high-impact sectors such as healthcare and recruitment [28]. Canada’s AI and Data Act (AIDA) [29] emphasizes safety, human rights, responsible AI practices, oversight, and public sector directives. This regulation also follows risk-based tiers, imposing stricter rules for AI systems in high-impact sectors. China’s New Generation AI Development Plan [30] is a national strategy with sector-specific regulations for AI algorithms, generative AI, and deep synthesis. The Draft AI Law (2024) would impose legal requirements on high-risk and critical AI systems. China encourages innovation but maintains strict regulatory oversight, especially for public-facing AI services. A key limitation is the interpretability of the original legal document, which is available only in the Chinese language. South Korea’s Basic Act on AI Advancement and Trust [31] focuses on trust, advancement, and transparency for high-impact systems in healthcare, energy, and public services.
Risk-based classification of AI systems, requirements for transparency and accountability, emphasis on ethics and human rights, and sector-specific rules are the common themes across the several regulatory frameworks. Unlike the other frameworks, the EU AI Act follows strict high-risk rules with high fines, while covering seven different trustworthiness aspects—data governance, record-keeping, transparency, human-oversight, accuracy, robustness, and cybersecurity.

Research on LLM evaluation methods, such as [32], addresses key questions about what, where, and how to assess performance. Further, the work of [17] introduces the Think–Solve–Verify (TSV) framework to enhance reasoning accuracy and trustworthiness through introspective reasoning and self-consistency. Together, these studies shed light on the importance of comprehensive evaluation frameworks and interdisciplinary approaches to ensure the responsible development and deployment of LLMs. Our study, however, takes a distinct perspective from the previous works by conceptualizing trustworthiness within the context of domain-specific LLM applications. It focuses particularly on advanced cellular generations, non-cellular technologies, and a broader range of high-impact application domains extending beyond telecommunications. By examining trustworthiness within both regulatory and technological frameworks, we provide insights crucial for the responsible implementation of LLMs with ensured safety in critical infrastructures.

3. Study Method

In this study, we follow the guidelines from [20,33] to structure the overall process into three classic phases commonly used in systematic mapping studies: A. planning, B. conducting, and C. documentation. Each phase is divided into several steps, as illustrated in Figure 1 based on [20,33] and explained in detail below.

Figure 1. Systematic mapping process phases.

- (1) Planning

  - 1. Justify the need to conduct a mapping study on the trustworthiness aspects of the LLM algorithms.

  - 2. Establish the key research questions.

  - 3. Develop the search string and identify the scientific online digital libraries for carrying out the systematic mapping study.

- (2) Conducting

  - 1. Study retrieval: The finalized search string is applied to the selected academic search databases. This process produces a comprehensive list of all potential studies found in digital libraries.

  - 2. Study selection: Duplicate entries are removed from the list of candidate studies. The remaining entries are then filtered on the basis of inclusion and exclusion criteria, followed by applying the Title–Abstract–Keywords (T-A-K) criteria to finalize a list of relevant studies for further analysis [20,33].

  - 3. Classification scheme definition: The next step involves determining how the relevant studies will be categorized. The classification scheme is designed to collect data necessary to answer the research questions defined in the planning phase.

  - 4. Data extraction: Each selected study is reviewed, and relevant information is extracted. A data extraction form is used to organize and record this information for analysis in the next step.

  - 5. Data analysis: This final step of the conducting phase involves a thorough analysis of the extracted data, which is represented through various maps, such as radial bar charts, histogram charts, pie charts, and line graphs.

- (3) Documentation

  - 1. A comprehensive analysis of the information gathered in the prior phase.

  - 2. Detailed explanations of the results.

  - 3. An evaluation of potential threats to validity.

The details of the process followed in each phase of this study are elucidated in Section 3.1 and Section 3.2.

3.1. Planning

3.1.1. Definition of Research Questions

This study aims to evaluate the current state of LLM trustworthiness, analyze research gaps, and identify emerging trends, with a particular focus on high-impact application domains. The purpose is to establish a foundation for future research and development that will enhance the reliability, transparency, and overall trustworthiness of LLMs across industries [33].

The research questions and their objectives are listed below.

- RQ1: What is the current state of trustworthiness in LLMs?

  - Objective: Identify the current state of trustworthiness in LLMs.

- RQ1a: Which LLMs are most studied for trustworthiness?

  - Objective: Identify specific LLMs that have been researched with a focus on trustworthiness.

- RQ1b: Which aspects of trustworthiness are explored in LLMs?

  - Objective: Explore specific dimensions of trustworthiness (e.g., based on EU Trustworthy AI guidelines) that researchers focus on within the LLM domain.

- RQ2: What are the current research gaps in the study of LLM trustworthiness in popular application domains?

  - Objective: Identify areas in LLM trustworthiness research that need further exploration, especially within high-impact application domains.

- RQ3: What are the emerging trends and developments in addressing trustworthiness in LLMs?

  - Objective: Track trends over time, identifying shifts in focus or methodology regarding LLM trustworthiness.

- RQ4: What types of research contributions are primarily presented in studies on LLM trustworthiness?

  - Objective: Classify the nature of contributions, such as frameworks, methodologies, tools, or empirical studies, made by researchers in this area.

- RQ5: Which research methodologies are employed in the studies on LLM trustworthiness?

  - Objective: Understand the research approaches and methodologies that are commonly used to investigate LLM trustworthiness.

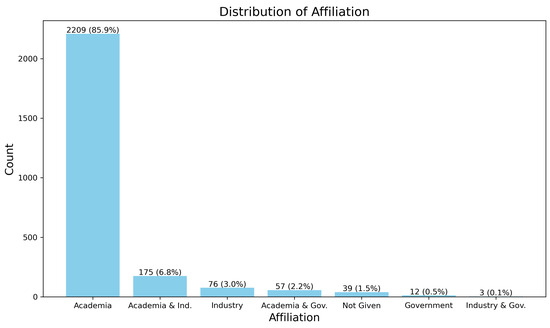

- RQ6: What is the distribution of publications in terms of academic and industrial affiliation concerning LLM trustworthiness?

  - Objective: Assess the level of interest and involvement from academic institutions and industry players in LLM trustworthiness.

3.1.2. Search String and Source Selection

This section focuses on defining the search string and selecting appropriate database sources to ensure comprehensive coverage of existing research while maintaining a manageable number of studies for analysis [20,34]. The search string is a logical expression created by combining keywords and is used to query research databases. A well-constructed search string should retrieve studies relevant to the research questions (RQs) of this work. Similarly, selecting appropriate research databases is crucial for broad coverage. To achieve this, databases relevant to both engineering and computer science were chosen. The subsections below provide details on the formulation of the search string and the selection of database sources.

3.1.3. Search String Formulation

The Population, Intervention, Comparison, and Outcomes (PICO) framework, proposed by [35], was utilized to define the search string. PICO involves the following criteria:

- Population: Refers to the category, application domain, or specific role that encompasses the scope of this systematic mapping study.

- Intervention: Represents the methodology, tool, or technology applied within the study’s context.

- Comparison: Identifies the methodology, tool, or technology used for comparative analysis in the study.

- Outcome: Denotes the expected results or findings of the study.

3.1.4. PICO Definition for This Study

For this systematic mapping study, the PICO framework was defined as follows:

- Population: Types of LLM.

- Intervention: Trustworthiness aspect.

- Comparison: No empirical comparison is made, therefore not applicable.

- Outcome: A classification of the primary studies, indicating the trustworthiness aspect of LLM.

3.1.5. Keyword Analysis and Synonym Sets

Analyzing the Population and Intervention categories, the following relevant keywords were identified: “LLM” and “trustworthy”. From these keywords, the following sets were derived, grouping synonyms and related terms:

- Set 1: Terms related to the LLM field.

- Set 2: Terms related to trustworthy [16,36], focusing on the following:

- Human oversight;

- Record-keeping;

- Data and data governance;

- Transparency;

- Accuracy;

- Robustness;

- Cybersecurity;

- Trustworthy.

Four of these aspects are not included in the search string under their own names:

- Record-keeping: It is covered under traceability.

- Accuracy: It is a commonly used metric for evaluating AI models beyond just LLMs. To prevent a significant increase in the number of retrieved papers, we covered it under faithfulness which refers to how accurately an explanation represents a model’s reasoning process.

- Data and data governance: It is addressed as part of ethics and bias.

- Cybersecurity: It is covered under safety and resilience against unauthorized third parties.

The final search string used to retrieve the studies was derived by combining the Population and Intervention search strings identified during the PICO process. The resulting search string, presented in Table 1, is constructed by associating three logical expressions separated by the AND operator. Each expression represents the fields of LLM and trustworthy.

Table 1. Final search string for study retrieval.
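As an illustration of how such a search string can be assembled, the following minimal sketch joins the synonyms within each set with OR and then joins the sets with AND. The term lists are abbreviated placeholders chosen for illustration, not the actual contents of Table 1, and the helper names `or_group` and `build_query` are hypothetical.

```python
def or_group(terms):
    """Join the synonyms of one set with OR, quoting multi-word phrases."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(*term_sets):
    """Combine several synonym sets into one expression using AND."""
    return " AND ".join(or_group(s) for s in term_sets)


# Placeholder term sets (assumptions, abbreviated for illustration only).
llm_terms = ["LLM", "large language model"]
trust_terms = ["trustworthy", "transparency", "robustness", "human oversight"]

query = build_query(llm_terms, trust_terms)
print(query)
```

Each digital library has its own query syntax, so a string built this way would still need the per-library adjustments described in Table 2.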

3.1.6. Source Selection and the Scope of This Study

For this topic, four scientific online digital libraries were chosen: IEEE Xplore Digital Library (IEEE Xplore Digital Library: https://ieeexplore.ieee.org/ (accessed on 27 June 2024)), ACM Digital Library (ACM Digital Library: https://dl.acm.org/ (accessed on 27 June 2024)), Scopus (Scopus: https://www.scopus.com/ (accessed on 27 June 2024)), and Web of Science (Web of Science: https://www.webofscience.com/ (accessed on 27 June 2024)) as listed in Table 2. According to [37], these libraries are known to be valuable in the fields of engineering and technology when performing literature reviews or systematic mapping studies. Additionally, these libraries exhibit high accessibility and support the export of search results to computationally manageable formats.

Table 2. Digital library search methods.

We limited the search to studies published from 2019 to June 2024, aligning with the timeframe of our research. The 2019 starting point was chosen for two main reasons: (1) the transformer, which forms the backbone of most LLMs, was introduced in the 2017 paper titled “Attention Is All You Need” [38]; (2) we found no relevant articles from 2017 to 2018, likely because LLMs were still an emerging topic and the concept of trustworthy LLMs developed later. This ensures a relevant and focused analysis for our systematic mapping study, specifically covering the period when LLMs became more advanced, widely adopted, and increasingly researched from the perspective of trustworthiness.

3.2. Conducting

The key steps of the conducting phase are detailed in the following subsections.

3.2.1. Study Retrieval

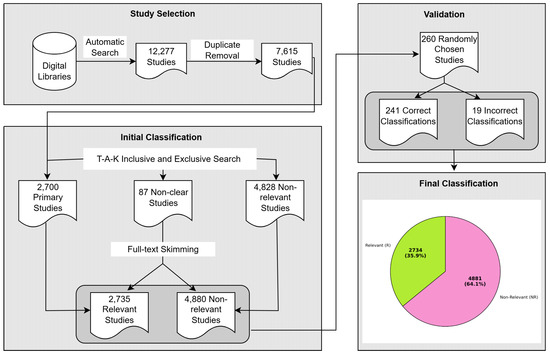

The initial step in the conducting phase involves utilizing the finalized search string to retrieve studies from the selected digital libraries, with minor adjustments made to its syntax as required. Table 2 outlines the specific modifications applied to the search string for each online digital library. The query yielded a total of 12,277 potential candidate studies, predominantly sourced from the Scopus and Web of Science databases. Table 3 provides a breakdown of the number of studies retrieved from each database.

Table 3. Number of studies retrieved from each database and the total number of potential candidate studies.

3.2.2. Study Selection

This step is used to determine the relevant studies that match the objective of the systematic mapping study. The results of automatic searches from digital libraries might have inconsistencies due to the presence of ambiguities and lack of detail in the retrieved studies. Therefore, relevant studies must be extracted by following a selection process. The first step is to remove duplicates, as the same studies can be retrieved from different sources. To identify these duplicates, we matched the study titles, years, and Digital Object Identifiers (DOIs). This step eliminated 4662 studies, leaving 7615 studies for initial classification. Additionally, querying digital libraries allowed us to obtain the study’s language and page count during the initial selection process.
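The duplicate-removal step can be sketched as follows. This is a minimal illustration, not the procedure actually used: it assumes each exported record is a dictionary with hypothetical `title`, `year`, and `doi` fields, prefers the DOI as the match key when one is present, and otherwise falls back to a normalized title plus year.

```python
def normalize_title(title):
    """Lower-case a title and collapse whitespace so trivial variants match."""
    return " ".join(title.lower().split())


def dedup_key(record):
    """Use the DOI when available, otherwise normalized title plus year."""
    doi = (record.get("doi") or "").strip().lower()
    if doi:
        return ("doi", doi)
    return ("title-year", normalize_title(record["title"]), record["year"])


def remove_duplicates(records):
    """Keep the first occurrence of each record, preserving input order."""
    seen = set()
    unique = []
    for rec in records:
        key = dedup_key(rec)
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique


# Made-up records for illustration only.
records = [
    {"title": "Trustworthy LLMs", "year": 2023, "doi": "10.1000/abc"},
    {"title": "Trustworthy  LLMs", "year": 2023, "doi": "10.1000/ABC"},
    {"title": "Robust LLMs in Healthcare", "year": 2024, "doi": None},
]
unique = remove_duplicates(records)

# Consistency check on the counts reported in the text.
remaining = 12277 - 4662
print(len(unique), remaining)
```

A record exported with a DOI from one library and without it from another would not be matched by this simple key, so a manual check of near-duplicates would still be needed in practice.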

The selection process continues with the criteria definition and selection of relevant studies. Details are provided as follows.

Criteria for Study Selection

The study selection criteria step is used to determine the relevant studies that match the goal of the systematic mapping study according to a set of well-defined inclusion (IC) and exclusion criteria (EC). To classify a study as relevant, it should satisfy all inclusion criteria at once and none of the exclusion ones. The applied inclusion and exclusion criteria are presented in Table 4.

Table 4. Inclusion and exclusion criteria for study selection.

Evaluation of Study Relevance

These inclusion and exclusion criteria were first applied to the Title–Abstract–Keywords (T-A-K) fields of each study to determine its relevance to the RQs. The studies were classified according to the following terminology:

- A study is marked as relevant (R) if it meets all the inclusion criteria and none of the exclusion criteria.

- A study is marked as not-relevant (NR) if it fails at least one of the inclusion criteria or meets at least one of the exclusion criteria.

- A study is marked as not-clear (NC) if there are uncertainties from the T–A–K analysis.
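The three-way decision rule above can be expressed compactly in code. The sketch below is an illustration under stated assumptions: each criterion is encoded as `True` (met), `False` (not met), or `None` (cannot be decided from the T-A-K fields), and a definite failure takes precedence over an uncertainty. That precedence is our assumption, since the text does not state it, and the names `Relevance` and `classify` are hypothetical.

```python
from enum import Enum


class Relevance(Enum):
    R = "relevant"
    NR = "not-relevant"
    NC = "not-clear"


def classify(inclusion, exclusion):
    """Classify one study from its criterion outcomes.

    inclusion / exclusion: lists of True, False, or None, where None means
    the criterion could not be judged from title, abstract, and keywords.
    """
    # Any failed inclusion criterion or any met exclusion criterion -> NR.
    if any(v is False for v in inclusion) or any(v is True for v in exclusion):
        return Relevance.NR
    # No definite failure, but at least one criterion is undecided -> NC.
    if any(v is None for v in inclusion) or any(v is None for v in exclusion):
        return Relevance.NC
    # All inclusion criteria met and no exclusion criterion met -> R.
    return Relevance.R


print(classify([True, True], [False, False]))
print(classify([True, None], [False]))
```

Studies that come out as NC are the ones that would go on to full-text skimming.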

The process of identifying relevant studies involved a thorough review of information in the Title–Abstract–Keywords (T-A-K) fields, followed by full-text skimming (including title, abstract, keywords, all sections, and any appendices) to determine inclusion in the final set of relevant studies. Out of 87 studies initially categorized as needing closer evaluation (NC), 35 were determined to be relevant (R) after full-text skimming. This brought the total number of relevant studies included in the mapping study to 2735.

To provide a clearer understanding of the selection process, Figure 2 illustrates the detailed workflow, showing the number of studies at each phase of the process.

Figure 2. Workflow of the search and selection process.

Snowballing

One of the substeps of the selection process described in the guidelines is snowball sampling. According to [39], snowballing is a method of identifying additional relevant studies, after the primary ones have been obtained, by following the references or citations of the studies that have already been found. This technique is particularly useful when there is limited literature directly addressing the selected research questions or when the population of the study is hard to access. Due to the large number of papers (7615) retrieved using the criteria outlined above, it was decided not to employ snowballing in this systematic mapping study.

3.2.3. Classification Schemes Definition

This step in the systematic mapping study outlines how the relevant studies are categorized to address the research questions presented earlier. To achieve this, we first define a classification scheme, which is based on six primary facets, each associated with one or more of the research questions.

The first two facets, type of research contribution and type of research methodology, are adopted directly from the categorization proposed in [34,40]. To develop additional facets for our classification, two methods were employed: initial categorization and keywording. The initial categorization was based on discussions with experts in the respective fields, which provided an initial set of facets grounded in their domain knowledge. Several meetings were held to refine these categories further. The last four facets (LLM classification, trustworthiness aspect, communication technology, and application domain classification) were selected from the drop-down menu list following the initial categorization process.

Subsequently, a systematic keywording method, as proposed in [34], was applied to refine the categories. This method was implemented in two main steps:

- Reading: The abstracts and keywords of the selected relevant studies were reviewed again, focusing on identifying a set of keywords representing the application domains, cellular and non-cellular communication types, the trustworthiness aspect, and the LLMs that are studied for trustworthiness.

- Clustering: The obtained keywords were grouped into clusters, resulting in a set of representative keyword clusters, which were then used for classifying the studies.
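The clustering step can be pictured as mapping each extracted keyword to a representative class. The sketch below is purely illustrative: the cluster names and vocabularies in `CLUSTERS` are hypothetical placeholders, and the keywording in this study was a manual, expert-driven process rather than an automated lookup.

```python
from collections import defaultdict

# Hypothetical cluster vocabulary (an assumption, not the study's actual classes).
CLUSTERS = {
    "healthcare": {"medical", "clinical", "healthcare"},
    "education": {"education", "teaching", "student"},
}


def cluster_keywords(keywords):
    """Group normalized keywords under every cluster whose vocabulary contains them."""
    grouped = defaultdict(set)
    for kw in keywords:
        token = kw.strip().lower()
        for cluster, vocab in CLUSTERS.items():
            if token in vocab:
                grouped[cluster].add(token)
    return dict(grouped)


grouped = cluster_keywords(["Clinical", "student ", "5G"])
print(grouped)
```

Keywords that match no cluster, such as "5G" here, are simply left out; in a manual process they would prompt a discussion about whether a new class is needed.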

Both the initial categorization and keywording methods yielded a set of clusters (classes) for each of the targeted facets.

3.2.4. Data Extraction

To extract and organize data from the selected relevant studies, a well-structured data extraction form was created. This form is based on the classification scheme and research questions (RQs). The data extraction form is shown in Table 5, containing the data items and their corresponding values, as well as the RQs from which they were derived.

Table 5. Data extraction form.

For this stage of the systematic mapping study, Microsoft Excel was used to store and manage the extracted information from each relevant study for later analysis. During this process, it was observed that some studies could be classified into more than one category according to our previously defined classification scheme. These studies were thus assigned to all relevant categories.
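Because a study may belong to several classes of the same facet, per-class counts can add up to more than the number of studies. The following minimal sketch shows this kind of multi-label tally. The study records, the field name `trust_aspects`, and the helper `count_facet` are hypothetical and only stand in for rows of the extraction form.

```python
from collections import Counter

# Made-up extraction rows for illustration only.
studies = [
    {"id": "S1", "trust_aspects": ["accuracy", "transparency"]},
    {"id": "S2", "trust_aspects": ["accuracy"]},
    {"id": "S3", "trust_aspects": ["robustness", "accuracy"]},
]


def count_facet(studies, facet):
    """Count each class once per study; a study may contribute to several classes."""
    counts = Counter()
    for study in studies:
        counts.update(set(study[facet]))
    return counts


counts = count_facet(studies, "trust_aspects")
print(counts.most_common())
print(sum(counts.values()), "class assignments for", len(studies), "studies")
```

When reading the resulting maps, percentages computed from such counts refer to class assignments, not to distinct studies.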

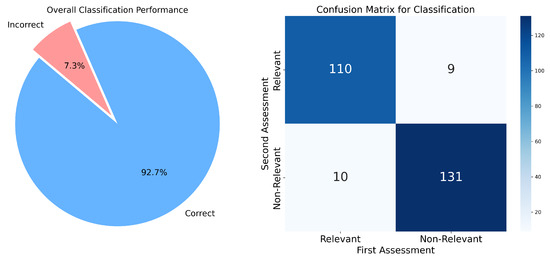

To validate our data extraction process, 260 of the 7615 studies were randomly selected and re-assessed by a second researcher, independently of the researcher who performed the initial data extraction. The overall agreement over the 260 studies was 92.69% (241 studies out of 260 were classified the same in the second assessment, as shown in Table 6). Of the 19 disagreements (7.31%), 9 were found to be relevant instead of non-relevant, and 10 were found to be non-relevant instead of relevant.

Table 6.

Validation results from the total studies.

This means that 241 times (92.69%), the rating decision remained the same when repeated, indicating a high level of consistency in the data extraction process.
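The agreement figure can be reproduced from the reported counts. The following is a minimal sketch; the function and variable names are our own, and only the numbers 260, 9, and 10 come from the text.

```python
def percent_agreement(total, disagreements):
    """Share of re-checked studies whose classification was unchanged, in percent."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * (total - disagreements) / total


# Figures reported in the text: 260 sampled studies, 9 + 10 changed decisions.
sample_size = 260
changed_to_relevant = 9
changed_to_non_relevant = 10
disagreements = changed_to_relevant + changed_to_non_relevant

agreement = percent_agreement(sample_size, disagreements)
print(f"{agreement:.2f}% agreement, {100 - agreement:.2f}% disagreement")
```

Percent agreement does not correct for agreement expected by chance. A chance-corrected statistic such as Cohen's kappa would additionally require the split of the 241 agreeing cases into relevant and non-relevant, which is not reproduced in this section.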

The output of this step was a completed data extraction form covering all relevant studies, categorized into the identified classes and validated. Figure 3 shows the overall classification performance (pie chart) and the confusion matrix for the classification.

Figure 3.

Validation results.

3.2.5. Data Analysis

Data analysis represents the final step in the systematic mapping study process. In this phase, the field map is created based on the classification of the studies. Using the maps, a comprehensive analysis of the studies is conducted to answer the research questions outlined in Section 3.1.1. A Python 3.8.10 script was employed to generate the mapping results, including radial bar charts, histogram charts, pie charts, and line graphs. The generated maps and the resulting analysis are presented in Section 5.
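As an illustration of how such a script might tally the extraction form before plotting, the sketch below counts category assignments for one facet. The record layout, field names, and sample values are hypothetical assumptions; the actual script and spreadsheet structure are not described in this paper.

```python
from collections import Counter

# Hypothetical extraction records; field names and values are illustrative only.
records = [
    {"id": "S1", "year": 2023, "domains": ["Health Care", "Education"]},
    {"id": "S2", "year": 2023, "domains": ["Linguistic"]},
    {"id": "S3", "year": 2024, "domains": ["Health Care"]},
]


def tally(records, facet):
    """Count assignments for one facet; a multi-category study counts once per category."""
    counts = Counter(value for record in records for value in record[facet])
    total = sum(counts.values())
    return {name: (n, round(100.0 * n / total, 2)) for name, n in counts.most_common()}


domain_map = tally(records, "domains")
print(domain_map)
```

Because a study assigned to several categories is counted once per category in this kind of tally, the sum over categories can exceed the number of studies.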

4. Classification Scheme

As a crucial step in conducting the systematic mapping study (outlined in Section 3), the following six classification schemes were defined: types of LLM algorithms, types of trustworthiness aspects based on the seven dimensions outlined in the EU AI Act, types of communication technologies, types of application domain classifications, types of research contributions, and types of research.

4.1. LLM Classification

The following classes were developed based on an initial categorization and keywording. Each LLM is described briefly according to its main function or application, as follows:

- ALPACA: A lightweight, instruction-following model by Stanford for task-oriented AI interactions [41].

- BARD: Google’s conversational AI optimized for creative content and open-ended user queries [42].

- BART: Facebook’s transformer model for text generation and transformation tasks like summarization [43].

- BERT: Google’s transformer-based model that excels in understanding context for tasks like sentiment analysis and question answering [7].

- BingAI: Microsoft’s integration of AI with Bing search for real-time web-based responses [44].

- BLOOM: Open-source multilingual text generation model designed for cross-lingual applications [45].

- ChatGLM: General-purpose conversational AI optimized for natural, coherent dialogues [46].

- CLAUDE: Anthropic’s ethical AI focused on safe, unbiased conversational interactions [47].

- Cohere: AI model for text generation and summarization, widely used for content creation [48].

- Copilot: OpenAI and GitHub’s CLI 1.0 code assistant that provides real-time code suggestions and completions [49].

- CodeGen: Model designed specifically for generating functional code across multiple programming languages [50].

- DALL-E: OpenAI’s image generation model that creates images from textual descriptions [51].

- ERNIE: Baidu’s model that enhances language understanding by integrating knowledge graphs [52].

- FALCON: AI model focused on generating coherent, context-aware dialogues in real-time [53].

- FLAN: Google’s fine-tuned T5 variant optimized for specific NLP tasks like summarization and question answering [54].

- GEMINI: A versatile AI model by Google for scalable and multi-purpose NLP tasks [55].

- GEMMA: Multilingual AI model for text generation and translation, supporting diverse languages [56].

- GPT: OpenAI’s general-purpose language model excelling in generating text and language understanding [57].

- LAMDA: Google’s conversational AI for maintaining fluid and natural open-ended conversations [58].

- LLaMa: Meta’s efficient and open-source language model optimized for natural language understanding [59].

- LLM: A broad class of large language models designed for various NLP tasks like text generation [3].

- T5: Google’s unified model for handling diverse NLP tasks by framing them as text-to-text problems [4].

- MISTRAL: A multi-modal model combining language and vision capabilities for complex tasks [60].

- MT5: A multilingual extension of T5, designed to handle text-to-text tasks across multiple languages [5].

- MPT: Memory-augmented AI model focused on improving learning efficiency and long-term retention [61].

- ORCA: Conversational AI optimized for generating coherent, context-aware dialogues [62].

- PaLM: Google’s Pathways Language Model designed for complex reasoning and large-scale NLP tasks [63].

- Phi: AI model focusing on ethical decision-making and reducing biases in responses [64].

- StableLM: A robust language model designed for stable and predictable AI-generated text [65].

- StarCoder: AI model designed specifically for coding assistance and code generation tasks [66].

- Vicuna: A safe, high-quality conversational AI model focused on ethical interactions [67].

- XLNet: An autoregressive model built to capture flexible contextual dependencies in language understanding [68].

- Zephyr: Lightweight AI model designed for efficient performance in resource-constrained environments [69].

4.2. Trustworthiness Aspects

We chose the EU AI Act due to its comprehensive scope, advanced regulatory approach, and strong foundational principles compared with other AI regulations. This is particularly relevant for LLMs, as they are deployed across various domains. These principles address the human oversight, data governance, accuracy, robustness, cybersecurity, transparency, and record-keeping necessary to ensure that AI systems operate ethically and safely [36].

- Human Oversight: The system shall be designed with appropriate human–machine interface tools to ensure adequate oversight. It must aim to prevent or minimize risks to health, safety, and fundamental rights. The level of oversight shall be commensurate with the system’s autonomy, risks, and context of use. Additionally, the system must be verified and confirmed by at least two qualified natural persons with the necessary competence, training, and authority. Risk management measures should also address potential biases, including those originating from users’ cognitive biases, automation bias, and algorithmic bias.

- Record-Keeping: To ensure traceability and accountability, the system shall have logging capabilities to record relevant events. These logs should be sufficient for the intended purpose of the system, facilitating proper tracking of system functionality and decisions.

- Data and Data Governance: The datasets used for training, validation, and testing must be carefully chosen and reflect the relevant design choices and data collection processes. The origin of data, data-preparation operations, and the formulation of assumptions should be clearly documented. A comprehensive assessment of the data’s availability, quantity, suitability, and potential biases should be conducted, especially considering potential risks to health and safety, fundamental rights, or discrimination as outlined under applicable laws (e.g., Union law).

- Transparency: The system shall be transparent enough to allow deployers and users to interpret its outputs (explainability) and use them effectively. This includes providing clear, concise, and accurate instructions for use, available in a digital format or otherwise. These instructions should be comprehensive, correct, and easily understood by the system’s users.

- Trustworthy: This class covers studies that address trustworthiness in general without mentioning any specific aspect. The general requirement is that the system’s design and operation must adhere to best practices for ethical AI use, ensuring it meets the aforementioned criteria.

- Accuracy: The system must be developed to achieve an appropriate level of accuracy, maintaining consistent performance throughout its lifecycle. The expected levels of accuracy, along with relevant accuracy metrics, should be clearly declared in the accompanying instructions for use.

- Robustness: The system must be designed to be robust, capable of maintaining consistent performance even in the presence of errors, faults, or inconsistencies. Robustness can be achieved through technical redundancy solutions, including backup systems or fail-safe mechanisms, ensuring that the system can continue functioning reliably in adverse conditions.

- Cybersecurity: The system must be developed with a strong focus on cybersecurity to prevent unauthorized access or manipulation by third parties. This includes measures to safeguard against data poisoning, model poisoning, adversarial examples, and confidentiality attacks. The system should include solutions to prevent, detect, respond to, resolve, and control attacks that could compromise its integrity, including manipulating the training dataset or exploiting model vulnerabilities.

4.3. Classification of Communication Technology

In our mapping study on the trustworthiness of large language models (LLMs) in communication technology, we examined advanced cellular generations along with non-cellular technologies. Based on the initial categorization for communication and the process of keywording, the following classes were extracted:

- Cellular;

- Non-cellular;

- Not Mentioned.

Although we initially considered all cellular generations, including 2G and 3G, we ultimately concentrated on 4G and beyond due to their compatibility with AI-driven applications. LLMs are particularly relevant to 4G, 5G, and 6G, which support advanced data processing and real-time decision-making [70].

- 4G—With significantly higher data rates, 4G enabled new applications such as IoT and industrial automation. LTE is another common term used for 4G.

- 5G—Offering low-latency communication and very high data rates, 5G broadens the range of use cases across diverse sectors.

- 6G—Expected to offer ultra-low latency, terabit-per-second data rates, and AI-driven network optimization, 6G aims to enable futuristic applications such as holographic communications, pervasive intelligence, and seamless global connectivity.

- Cellular (not-specified)—This category includes studies that do not specify which particular cellular technology is employed in the T-A-K fields.

Moreover, non-cellular technologies play a crucial role in applications where LLMs can enhance security, automation, and efficiency [71].

- WiFi—Encompasses technologies based on the IEEE 802.11 standard, including vehicular ad hoc networks (VANET) and dedicated short-range communication (DSRC).

- Radio-frequency Identification (RFID)—A short-range communication method using electromagnetic fields to transmit data.

- Bluetooth—A technology designed for low power consumption, short-range communication, and low data transfer rates.

- Satellite—A long-range communication method that is sensitive to interference and relies on a clear line of sight.

- Non-cellular (not-specified)—Includes studies that do not specify the non-cellular technology used in the T-A-K fields.

4.4. Application Domain Classification

Based on keywording, the following classification scheme was derived for domains where AI, particularly LLMs, are applied to ensure trustworthiness:

- Air Space—Ensuring that LLMs provide accurate, reliable, and unbiased information for aerospace applications, such as navigation systems and communication protocols.

- Automotive—Maintaining safety and reliability in LLM-driven automotive technologies, including autonomous driving systems and in-vehicle assistants.

- Construction—Ensuring precision and safety in LLM applications for construction project planning, risk assessments, and management.

- Cybersecurity—Enhancing security measures and threat detection systems in the cybersecurity domain using LLMs.

- Defence—Ensuring the ethical application, security, and accuracy of LLMs in defense scenarios, such as threat analysis and decision support systems.

- Education—Promoting fairness, accuracy, and bias reduction in LLM-based educational tools for personalized learning and academic assistance.

- Environment—Ensuring LLM-driven technologies support environmental monitoring and sustainability efforts.

- Finance—Ensuring transparency, bias reduction, and data protection in LLM applications for the fintech industry.

- History—Supporting transparent and reliable LLM usage in the analysis and preservation of cultural heritage.

- Human–Computer Interaction (HCI)—Enhancing interactions between humans and AI through improved dialogues, negotiations, and task delegation.

- Information Verification—Utilizing LLMs in automated fake news detection, fact-checking processes, and cross-domain analysis for verifying information credibility.

- Smart Factory—Maintaining operational integrity, reliability, and efficiency in LLM applications for automated manufacturing and production systems.

- Health Care—Ensuring privacy, accuracy, and ethical practices in LLM applications for medical diagnostics, patient care, and health data management.

- Internet of Things (IoT)—Ensuring precision, reliability, and ethical behavior in LLM-guided IoT systems across diverse applications.

- Law—Ensuring transparency, data integrity, and fairness in the use of LLMs for legal purposes.

- Linguistic—Promoting linguistic accuracy, cultural sensitivity, and reducing bias in LLM applications for translation, text generation, and language learning.

- Marine—Ensuring the safety and reliability of LLM applications in marine navigation, communication, and environmental monitoring.

- Mining—Enhancing operational safety and efficiency in LLM applications for resource extraction, site management, and predictive maintenance.

- News/Media—Leveraging LLMs for ethical reporting, fact-checking, and content creation in the media industry.

- Robotics—Ensuring the ethical behavior, precision, and reliability of LLM-powered robotic systems in both industrial and domestic settings.

- Smart System/Coding—Utilizing LLMs to improve software development processes, automate code generation, and ensure robust, efficient, and secure software systems.

- Smart Business—Harnessing LLMs to foster innovative business solutions, enhance operational efficiency, and provide data-driven insights for strategic decision-making.

- Social Scoring—Promoting fairness, transparency, and objectivity in social scoring systems by identifying and mitigating biases related to politics and social context.

- Surveillance—Ensuring the ethical use of LLMs in surveillance systems for monitoring, threat detection, and security analysis, while safeguarding privacy.

- Telecommunication—Ensuring security, reliability, and unbiased communication in LLM applications used for network management, customer service, and data analysis.

- Not Specified—This category is used for studies that do not specify the application domain.

- Other—This category includes studies working on application domains not listed here.

4.5. Research Contribution Classification

The classification framework for research contributions allows researchers to identify potential areas for developing new methods, techniques, or tools aimed at enhancing safety. The classification scheme used for this facet is based on the one proposed by Petersen et al. in [34], which is widely adopted in systematic mapping studies [72]. This facet is categorized into the following data items:

- Model—Represents conceptual frameworks or abstract structures addressing key principles and theoretical aspects in AI-based safety implementations. These frameworks are typically idea-focused and aim to guide and ensure trustworthiness in large language models (LLMs).

- Method—Describes procedural steps and actionable guidelines designed to tackle specific challenges in trustworthiness in large language models (LLMs). These are practical, action-oriented processes, such as steps to evaluate the accuracy of LLMs.

- Metric—Pertains to measurable indicators or quantitative criteria used to evaluate the properties and performance of large language models (LLMs) in trustworthiness aspects.

- Tool—Refers to software, prototypes, or applications that support or operationalize the models and methods discussed.

- Open Item—Includes studies that do not conform to the categories of models, methods, metrics, or tools, covering unique aspects outside the defined classifications.

4.6. Research Types Classification

The classification of research types follows the scheme proposed by Wieringa et al. in [40], which includes the following categories:

- Validation Research—Investigates new techniques that have not yet been applied in practice, typically through experiments, prototyping, and simulations.

- Evaluation Research—Assesses implemented solutions in practice, considering both the advantages and drawbacks. This includes case studies, field experiments, and similar approaches.

- Solution Proposal—Proposes a novel solution for an existing problem or a significant enhancement to an existing solution.

- Conceptual Proposal—Provides a new perspective on a topic by organizing it into a conceptual framework or taxonomy.

- Experience Paper—Shares the experiences of the authors, describing the practical application or implementation of a concept.

- Opinion Paper—Presents the personal opinions of the authors on specific methods or approaches.

5. Results and Discussion

This section presents the analysis conducted on the relevant studies. The structure is organized as follows:

- Each subsection addresses one or more research questions outlined in Section 3.1.1.

- Each research question is analyzed and supported with a corresponding chart to provide a comprehensive answer.

5.1. Results of RQ1(a–b)

The first research question focuses on the current state of trustworthiness in large language models (LLMs), specifically analyzing two sub-questions: (1) identifying the most studied LLMs in terms of trustworthiness and (2) exploring the specific aspects of trustworthiness addressed in the research.

5.1.1. RQ1a: Which LLMs Are Most Studied for Trustworthiness?

Objective: Identify specific LLMs that have been researched with a focus on trustworthiness.

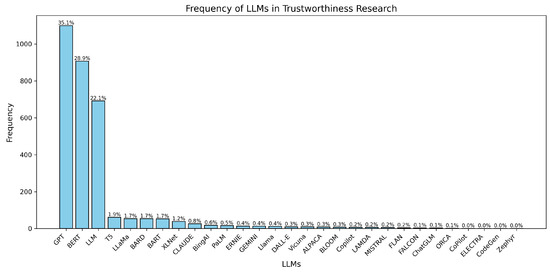

Results: Figure 4 presents a bar chart summarizing the frequency of studies on LLMs in relation to trustworthiness. The analysis reveals that GPT models are the most extensively explored, with 1099 studies (35.1%), followed by BERT with 906 studies (28.9%) and general references to LLMs with 691 studies (22.1%). Other notable models include T5 (61 studies, 1.9%), LLaMa (54 studies, 1.7%), and BARD (54 studies, 1.7%).

Figure 4.

Frequency of studies exploring trustworthiness aspects for specific LLMs.

Relatively fewer studies focus on emerging or specialized models such as XLNet (39 studies, 1.2%), Claude (26 studies, 0.8%), and BingAI (18 studies, 0.6%). The least explored models include Mistral (6 studies, 0.2%), FLAN (5 studies, 0.2%), and ChatGLM (4 studies, 0.1%). Notably, only a single study each was identified for models such as Copilot, ELECTRA, CodeGen, and Zephyr, each contributing less than 0.1% of the total.

These results suggest that research on trustworthiness primarily focuses on widely adopted and versatile LLMs like GPT and BERT, while newer or less commonly used models remain underexplored. This highlights potential opportunities for further research on the trustworthiness of emerging and niche LLMs.

5.1.2. RQ1b: Which Aspects of Trustworthiness Are Explored in LLMs?

Objective: Explore specific dimensions of trustworthiness that researchers focus on.

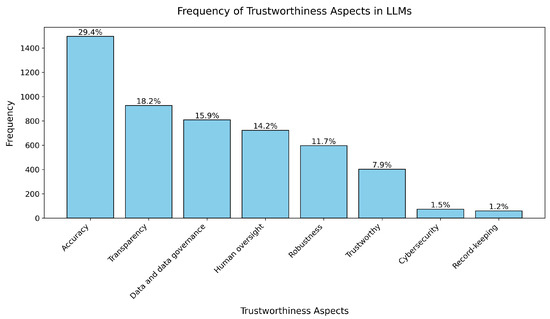

Results: Figure 5 presents a bar chart illustrating the frequency and percentages of trustworthiness aspects considered in LLM research. The most frequently addressed dimension is accuracy, which accounts for 1496 studies (29.40%). This is followed by transparency, with 927 studies (18.22%), and data and data governance, representing 809 studies (15.90%).

Figure 5.

Trustworthiness aspects considered in LLM research.

Other notable dimensions include human oversight (723 studies, 14.21%) and robustness (597 studies, 11.73%). In contrast, less explored aspects are cybersecurity (74 studies, 1.45%) and record-keeping (60 studies, 1.18%). A broader focus on trustworthiness was observed in 403 studies (7.92%).

Major Trend: The predominance of accuracy and transparency underscores their critical role in ensuring the reliability and interpretability of LLMs. However, the relatively lower emphasis on dimensions such as cybersecurity and record-keeping suggests underexplored areas that warrant greater attention in future research. This trend highlights a potential gap in addressing comprehensive trustworthiness within the LLM domain, particularly in aspects that contribute to systemic security and compliance.
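The reported shares can be recomputed from the study counts given above. This is a minimal sketch; the label "Trustworthiness (general)" is our own name for the 403 studies with a broader focus, and the percentages are taken relative to the sum of all aspect assignments.

```python
# Study counts per trustworthiness aspect, as reported in the text for Figure 5.
# "Trustworthiness (general)" is an assumed label for the broader-focus studies.
aspect_counts = {
    "Accuracy": 1496,
    "Transparency": 927,
    "Data and data governance": 809,
    "Human oversight": 723,
    "Robustness": 597,
    "Trustworthiness (general)": 403,
    "Cybersecurity": 74,
    "Record-keeping": 60,
}

total = sum(aspect_counts.values())
shares = {name: round(100.0 * n / total, 2) for name, n in aspect_counts.items()}

# Print aspects from most to least studied.
for name, share in sorted(shares.items(), key=lambda item: item[1], reverse=True):
    print(f"{name:<28}{aspect_counts[name]:>6}{share:>8.2f}%")
```

Sorting by share makes the gap visible: the two least-studied aspects, cybersecurity and record-keeping, together account for under 3% of all aspect assignments.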

5.2. Results of RQ2

Research Question: What are the current research gaps in the study of LLM trustworthiness in popular application domains?

Objective: Identify areas in LLM trustworthiness research that need further exploration, especially within high-impact application domains.

Analysis and Results

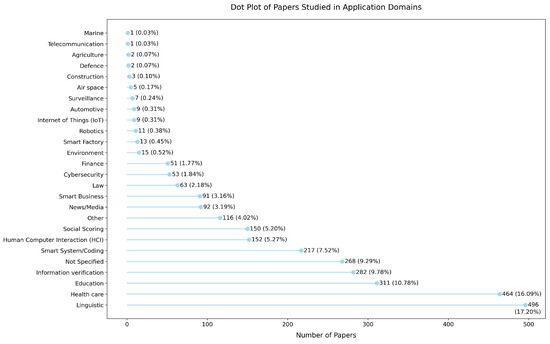

Figure 6 illustrates the general distribution of studies exploring LLM trustworthiness across various application domains based on selected primary studies. The analysis reveals several key insights into the current state of research and highlights potential gaps:

Figure 6.

Explored LLM trustworthiness across different application domains.

- Linguistic Applications: This domain accounts for the largest share of studies, with 496 papers (17.20%). This reflects the significant interest in improving language comprehension, generation, and translation tasks in LLMs. However, the focus on linguistic applications may overshadow trustworthiness considerations in other high-impact domains.

- Healthcare: With 464 papers (16.09%), healthcare is the second-most explored domain, demonstrating the growing reliance on LLMs in medical decision-making, diagnostics, and patient interaction. Despite this focus, challenges in data governance, robustness, and transparency remain underexplored.

- Education: Trustworthiness in educational applications has been explored in 311 papers (10.78%). The increasing use of LLMs in personalized learning and content creation raises concerns about accuracy, bias, and human oversight.

- Information Verification: This domain has 282 papers (9.78%), reflecting the growing importance of LLMs in verifying information, particularly in the context of news, media, and social media content. Despite its relevance, further research into transparency and robustness in this area is necessary.

- Smart System/Coding: This domain has 217 papers (7.52%), showcasing the role of LLMs in software development and automation. Trustworthiness in coding tasks requires ensuring reliability, security, and the ability to handle ambiguous situations.

- Underexplored Domains: Several critical domains such as cybersecurity (53 papers, 1.84%), finance (51 papers, 1.77%), and environment (15 papers, 0.52%) have received limited attention. These domains involve high stakes, where trustworthy AI is essential, highlighting a significant research gap.

- Emerging and Niche Domains: Application areas such as smart factory (13 papers, 0.45%), robotics (11 papers, 0.38%), Internet of Things (IoT) (9 papers, 0.31%), automotive (9 papers, 0.31%), surveillance (7 papers, 0.24%), air space (5 papers, 0.17%), construction (3 papers, 0.10%), defense (2 papers, 0.07%), agriculture (2 papers, 0.07%), telecommunication (1 paper, 0.03%), and marine (1 paper, 0.03%) remain largely unexplored. These domains demand robust and transparent LLMs due to their direct impact on safety, security, and privacy.

- General Observations: A significant portion of studies (268 papers, 9.29%) did not specify a domain, indicating a lack of targeted research. Furthermore, domains like smart business (91 papers, 3.16%) and social scoring (150 papers, 5.20%) also demonstrate a notable gap in research focused on trustworthiness.

These findings suggest that while certain domains such as linguistics, healthcare, and education have seen substantial research, there is an urgent need to extend trustworthiness studies to underexplored and high-stakes application areas. Addressing these gaps will enhance the applicability of LLMs across diverse fields while ensuring their responsible and reliable use.

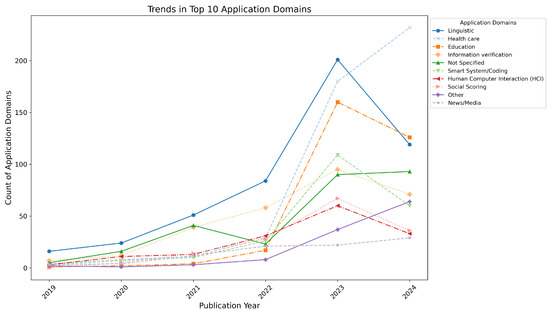

Furthermore, Figure 7 provides a closer look into the trends in the top 10 domains of applications assessed for LLM trustworthiness over a six-year period. The data reveals significant growth in certain fields, particularly in the healthcare and education sectors.

Figure 7.

Trends in top 10 application domains from 2019 to June 2024.

- Linguistic applications started with 16 papers in 2019 and experienced steady growth, peaking at 201 papers in 2023 before slightly declining to 119 papers in 2024. The sharp increase from 2020 to 2023 highlights the growing attention to linguistic trustworthiness.

- Healthcare exhibited the most remarkable growth, starting with 4 papers in 2019 and skyrocketing to 232 papers in 2024, with a sharp increase observed between 2022 and 2024.

- Education grew from 1 paper in 2019 to a peak of 160 papers in 2023, followed by 126 papers in 2024.

- Information verification rose from 7 papers in 2019 to a peak of 95 papers in 2023, followed by 71 papers in 2024.

- Not specified domain-related papers started with 5 papers in 2019, rose to 90 papers in 2023, and reached 93 papers in 2024.

- Smart system/coding applications increased significantly, starting with 3 papers in 2019 and peaking at 109 papers in 2023 before declining to 60 papers in 2024.

- Human–computer interaction (HCI) grew from 3 papers in 2019 to 33 papers in 2024, with notable peaks in 2022 (31 papers) and 2023 (60 papers).

- Social scoring saw rapid growth from 5 papers in 2020 to 67 papers in 2023, followed by a decline to 36 papers in 2024.

- Other applications started with 2 papers in 2019 and grew significantly to 64 papers in 2024, with substantial increases in 2023 and 2024.

- News/Media applications steadily increased from 3 papers in 2019 to 29 papers in 2024, showing moderate growth from 2023 onwards.

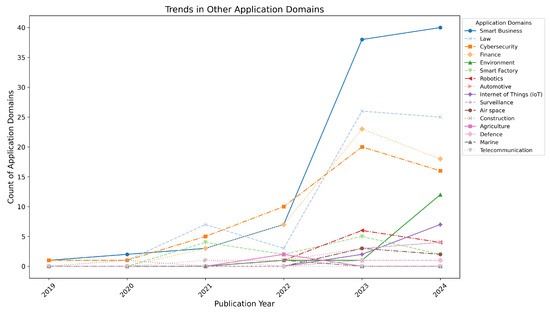

- Other Application Domains

The trends across other domains of LLM trustworthiness are presented in Figure 8. While these domains were less prominent compared to the top 10, they exhibited notable growth:

Figure 8.

Trends in other application domains from 2019 to June 2024.

- Smart business started with 1 paper in 2019 and grew significantly, reaching 40 papers in 2024, with a sharp rise in 2023 (38 papers).

- Law grew from 1 paper in 2019 to 25 papers in 2024, with a peak of 26 papers in 2023.

- Cybersecurity started with 1 paper in 2019 and grew steadily to 16 papers in 2024, peaking at 20 papers in 2023.

- Finance grew from 3 papers in 2021 to 18 papers in 2024, with a peak of 23 papers in 2023.

- Environment emerged as a focus area in 2022 with 1 paper, growing to 12 papers in 2024.

- Smart factory showed modest growth, peaking at five papers in 2023 and declining to two papers in 2024.

- Robotics emerged in 2022 with one paper, growing to six papers in 2023 and stabilizing at four papers in 2024.

- Automotive remained minor, with one paper in 2021 and 2022, increasing slightly to four papers in 2024.

- Internet of Things (IoT) saw an increase from two papers in 2023 to seven papers in 2024.

- Surveillance grew from three papers in 2023 to four papers in 2024.

- Air space declined slightly, from three papers in 2023 to two papers in 2024.

- Domains such as construction, agriculture, defense, marine, and telecommunication maintained a minimal presence over the years, with little to no growth. For instance, construction had one paper annually in 2020, 2023, and 2024; agriculture emerged in 2022 with two papers; and defense, marine, and telecommunication exhibited sporadic publications with only one paper per year in specific instances.

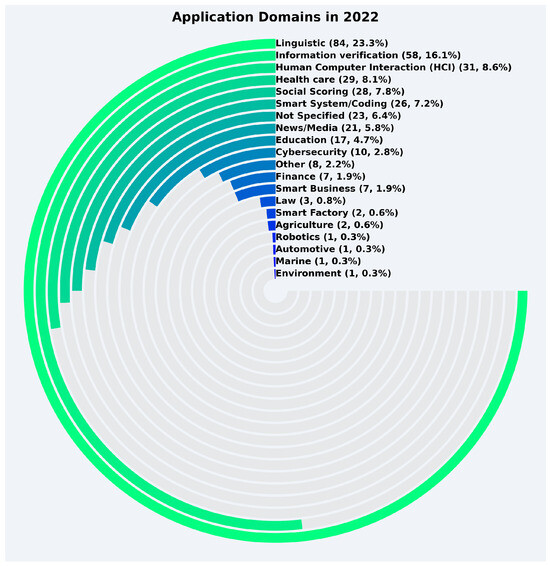

Given the number of application domains examined in this study, the following analysis presents detailed year-by-year trends. The application domains in 2022 are presented in Figure 9. As shown, the majority of LLM papers were concentrated in a few domains, with linguistic applications dominating the field at 23.3%, totaling 84 papers. Other prominent domains included information verification (16.1%), human–computer interaction (HCI) (8.6%), and health care (8.1%). These domains represented the core areas of LLM application during this year. Social scoring (7.8%) and smart system/coding (7.2%) also held significant shares. On the other hand, domains such as agriculture, robotics, smart factory, and marine were considerably less represented, each with less than 1% of the total papers. The total number of records in 2022 was 360.

Figure 9.

Year-wise trend for application domains in 2022.

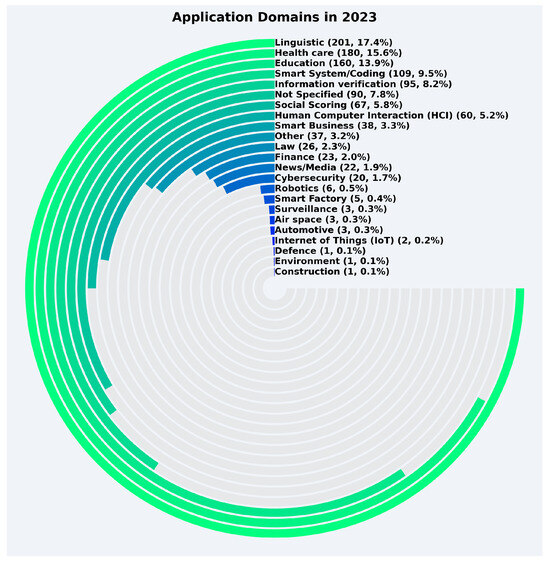

The trends in 2023 show significant changes in the application domain distribution, as shown in Figure 10. The number of records rose dramatically to 1,153, with linguistic (17.4%), health care (15.6%), and education (13.9%) maintaining their prominence. Smart system/coding also saw notable growth, with 9.5% of the total records, up from 7.2% in 2022. The domain of information verification accounted for 8.2%, while not specified increased significantly, reaching 7.8%. Social scoring maintained a substantial share of 5.8%, and human–computer interaction (HCI) contributed 5.2%. On the other hand, smaller domains like robotics, smart factory, and automotive remained low, each comprising less than 1% of the total records. Notably, the emergence of new application domains such as air space and Internet of Things (IoT) began to contribute, though their shares remained minimal.

Figure 10.

Year-wise trend for application domains in 2023.

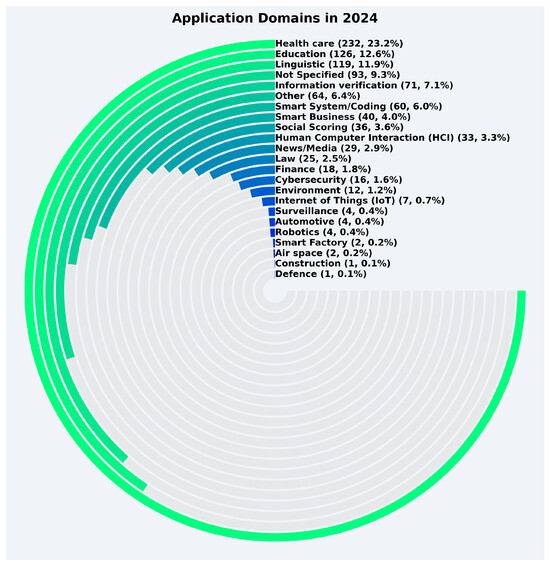

The distribution of LLM applications in 2024 is shown in Figure 11. Health care became the most significant domain, representing 23.2% of the total records, with 232 papers published. Education (12.6%) and linguistic (11.9%) followed as major domains, though their shares declined compared to 2023 (from 13.9% and 17.4%, respectively). Not specified maintained a notable percentage at 9.3%, up from 7.8% in 2023. The smart system/coding domain declined to 6.0% from 9.5% in 2023, while the smart business domain grew to 4.0%. Smaller domains like cybersecurity (1.6%) and robotics (0.4%) persisted with relatively low shares. Additionally, emerging application areas like environment (1.2%) and Internet of Things (IoT) (0.7%) continued to contribute modestly. The total records for 2024 amounted to 999, lower than in 2023 because only publications up to June 2024 were considered.

Figure 11.

Year-wise trend for application domains in 2024 (up to June).

5.3. Results of RQ3

Research Question: What are the emerging trends and developments in addressing trustworthiness in LLMs?

Objective: Track trends over time, identifying shifts in focus or methodology regarding LLM trustworthiness.

Analysis and Results

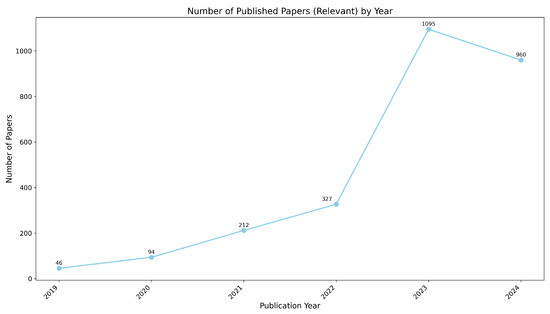

Figure 12 shows the number of papers published on LLM trustworthiness from 2019 to June 2024.

Figure 12.

Number of papers on LLM trustworthiness from 2019 to June 2024.

- In 2019, there were 46 papers, indicating early research interest in LLM trustworthiness.

- This number grew to 94 papers in 2020, showing increased attention to the topic.

- In 2021, the publications sharply rose to 212 papers, and by 2022, this number further increased to 327 papers.

- The trend peaked in 2023 with 1095 papers, reflecting a significant surge in research on LLM trustworthiness.

- In 2024, the number stood at 960 papers. This count covers only publications up to June 2024, which explains why it is lower than the 2023 peak. Even as a half-year figure, it is close to the 2023 total, indicating strong and ongoing interest in the area.

These trends indicate that research in LLM trustworthiness has grown significantly over the years, with a marked increase in interest around 2021 and a peak in 2023.
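The year-over-year growth behind these counts can be made explicit with a short calculation. The following is a minimal sketch that uses only the yearly totals listed above; the function name `growth_factors` and the doubling of the half-year 2024 figure are illustrative assumptions, not part of the study's method.

```python
# Yearly paper counts as reported above; the 2024 value covers January-June only.
PAPERS_PER_YEAR = {2019: 46, 2020: 94, 2021: 212, 2022: 327, 2023: 1095, 2024: 960}


def growth_factors(counts):
    """Return {year: count / previous year's count} for consecutive years."""
    years = sorted(counts)
    return {curr: counts[curr] / counts[prev] for prev, curr in zip(years, years[1:])}


factors = growth_factors(PAPERS_PER_YEAR)
for year, factor in factors.items():
    print(f"{year}: {factor:.2f}x the {year - 1} count")

# Hypothetical extrapolation (an assumption, not a result of the study):
# if the second half of 2024 matched the first, the year would end at 2 * 960.
naive_full_year_2024 = 2 * PAPERS_PER_YEAR[2024]
print(f"Naive full-year 2024 estimate: {naive_full_year_2024}")
```

Under these numbers, the largest single-year jump is from 2022 to 2023, and the 2024 factor falls below 1 only because the count covers half a year.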

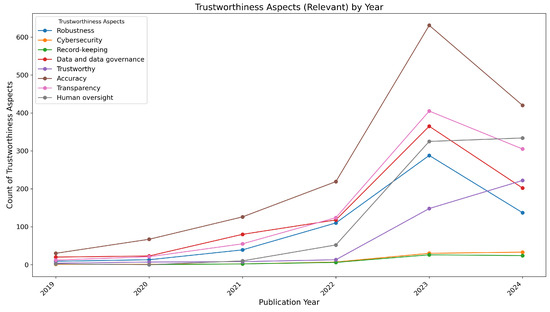

Figure 13 shows the distribution of trustworthiness aspects in LLM research.

Figure 13.

Distribution of trustworthiness aspects in LLM research from 2019 to June 2024.

- In 2019, the focus was on accuracy with 30 papers, followed by data and data governance (20 papers) and transparency (12 papers).

- In 2020, accuracy rose to 67 papers, and transparency increased to 21 papers, reflecting growing interest in these areas.

- In 2021, accuracy saw a significant jump to 126 papers, with robustness reaching 39 papers, highlighting the growing emphasis on these aspects.

- The trend continued in 2022, with accuracy at 219 papers and transparency reaching 124 papers, alongside a notable increase in human oversight (52 papers).

- In 2023, accuracy surged to 631 papers, robustness to 288 papers, and transparency to 405 papers, marking the highest level of research attention.

- In 2024, for which we considered papers only up to June, accuracy accounted for 420 papers and robustness for 137 papers. There was also a strong focus on human oversight (334 papers) and data governance (202 papers).

These trends show a significant increase in research on accuracy, transparency, and robustness, with growing attention to human oversight in recent years.

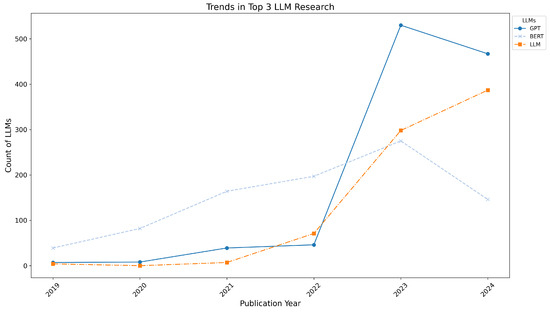

Figure 14 illustrates yearly trends for the top 3 most relevant LLMs in terms of occurrences related to trustworthiness research: GPT, BERT, and LLM.

Figure 14.

Trends for GPT, BERT, and LLM research on trustworthiness from 2019 to June 2024.

- GPT: Research on GPT’s trustworthiness has grown significantly, starting with 7 papers in 2019 and rising to 530 papers in 2023, before declining to 467 papers in 2024 (data up to June only).

- BERT: Interest in BERT began earlier, with 39 papers in 2019, and reached its peak in 2023 with 275 papers, followed by a decline to 146 papers in 2024.

- LLM: Research on the general LLM category had a slow start with 4 papers in 2019, but grew rapidly from 71 papers in 2022 to 298 papers in 2023, and rose further to 387 papers in 2024.

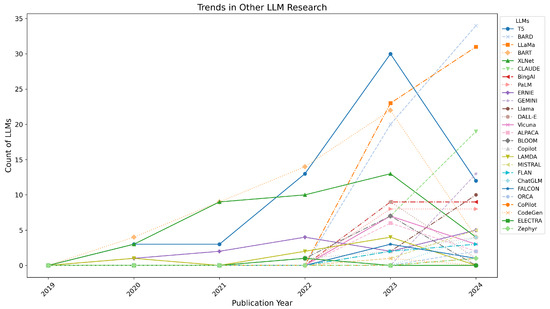

Figure 15 shows trends for additional LLMs such as T5, BARD, LLaMa, and others.

Figure 15.

Trends for other LLMs (T5, BARD, LLaMa, etc.) research on trustworthiness from 2019 to June 2024.

- T5: Research on T5 started in 2020 with 3 papers and peaked at 30 papers in 2023, but saw a decline to 12 papers in 2024.

- BARD: Research on BARD began only in 2023 with 20 papers, increasing to 34 papers in 2024, reflecting growing interest.

- LLaMa: LLaMa-related trustworthiness research commenced in 2023 with 23 papers, rising to 31 papers in 2024.

- Other LLMs:

  - BART: From 4 papers in 2020 to 22 papers in 2023, followed by a sharp drop to 4 papers in 2024.

  - XLNet: Showed moderate growth, with 3 papers in 2020, peaking at 13 papers in 2023, and dropping to 4 papers in 2024.

  - CLAUDE: Research began in 2023 with 7 papers, rising to 19 papers in 2024.

  - BingAI and PaLM: Both had consistent yet modest interest, with nine and eight papers, respectively, in 2023 and 2024.

  - Emerging Models: Models like GEMINI and Llama saw limited but increasing interest in 2024, e.g., GEMINI with 13 papers and Llama with 10 papers.

The trends highlight a sustained focus on GPT and BERT, with the general LLM category gaining momentum in recent years. Emerging models such as BARD, LLaMa, and CLAUDE have also gained traction, signaling a diversification in research priorities. Models like T5 and XLNet show fluctuating interest, while others, including GEMINI and MISTRAL, are witnessing initial growth. These shifts emphasize the evolving landscape of trustworthiness research in LLMs.

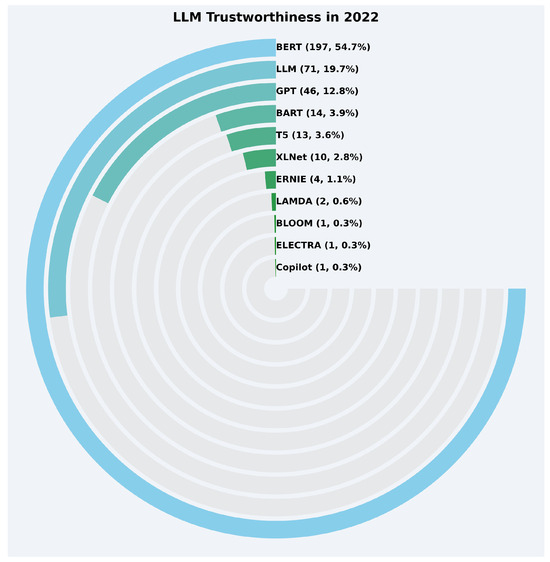

Trends in LLM trustworthiness research from 2022 to June 2024 reveal interesting dynamics in the evaluation of various language models.

In 2022, as shown in Figure 16, among the top LLMs, BERT dominated with 197 occurrences, accounting for 54.7% of the total focus, followed by LLM with 71 occurrences (19.7%). GPT also received significant attention with 46 occurrences (12.8%). Other notable LLMs include BART (14 occurrences, 3.9%) and T5 (13 occurrences, 3.6%). Smaller contributions were made by XLNet (10 occurrences, 2.8%), ERNIE (4 occurrences, 1.1%), LAMDA (2 occurrences, 0.6%), and other models like BLOOM, ELECTRA, and Copilot, each accounting for less than 0.5%.

Figure 16.

LLM trustworthiness distribution in 2022.

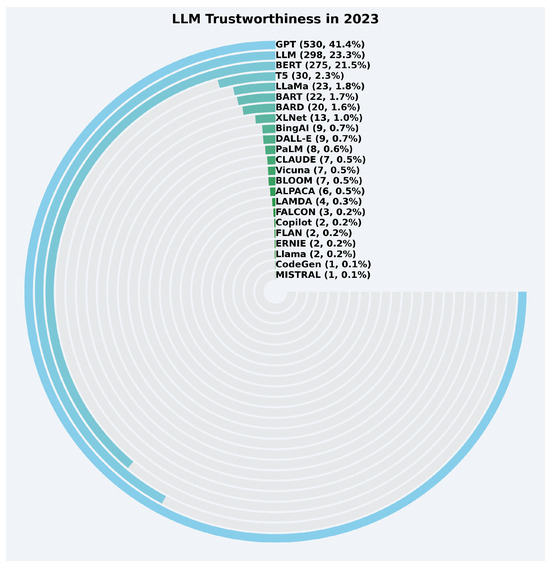

The trends shifted significantly in 2023, as depicted in Figure 17. The most notable change was the substantial increase in the focus on GPT, which surged to 530 occurrences, constituting 41.4% of the total interest. LLM also experienced notable growth, reaching 298 occurrences (23.3%). BERT maintained its relevance with 275 occurrences (21.5%). Other LLMs included T5 (30 occurrences, 2.3%), LLaMa (23 occurrences, 1.8%), BART (22 occurrences, 1.7%), and BARD (20 occurrences, 1.6%). Emerging models like CLAUDE, PaLM, and BingAI had modest shares (ranging from 0.5% to 0.7%). Models such as MISTRAL, CodeGen, and Llama accounted for less than 0.3% each.

Figure 17.

LLM trustworthiness distribution in 2023.

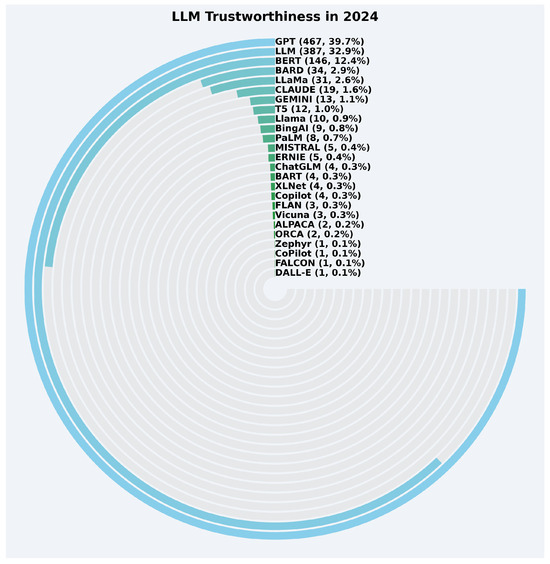

In 2024, as shown in Figure 18, GPT continued to lead with 467 occurrences, making up 39.7% of the total focus. LLM rose to 387 occurrences (32.9%). However, BERT experienced a notable decline, with 146 occurrences (12.4%). Other LLMs such as BARD (34 occurrences, 2.9%), LLaMa (31 occurrences, 2.6%), and CLAUDE (19 occurrences, 1.6%) played smaller but growing roles. Emerging models such as GEMINI (13 occurrences, 1.1%), Llama (10 occurrences, 0.9%), and PaLM (8 occurrences, 0.7%) reflect a diversifying research focus. Models like DALL-E, MISTRAL, and FALCON each accounted for 0.1% to 0.4%, indicating initial but limited attention.

Figure 18.

LLM trustworthiness distribution in 2024 (up to June).

Across the three years, GPT, LLM, and BERT account for most of the occurrences, although their relative contributions vary over time. BERT led in 2022 (54.7%), while GPT led in 2023 and 2024 after growing from 46 to 530 occurrences between 2022 and 2023. LLM also gained momentum, rising from 19.7% to 32.9%. BERT, despite its early dominance, saw its share decline steadily to 12.4% in 2024. Emerging models such as LLaMa, BARD, CLAUDE, and GEMINI reflect the growing diversity of LLMs. Smaller models like T5, BART, and XLNet retained some relevance, while newer entrants such as MISTRAL and ChatGLM highlight evolving trends in the LLM trustworthiness ecosystem.
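The shift in relative focus can be expressed as a change in percentage points between 2022 and 2024. The sketch below uses only the shares reported for Figures 16 to 18; the helper name `share_change` is a hypothetical choice for illustration, and the 2024 shares reflect data up to June only.

```python
# Reported shares (in percent) of the three most studied model categories.
SHARES = {
    "GPT": {2022: 12.8, 2023: 41.4, 2024: 39.7},
    "LLM": {2022: 19.7, 2023: 23.3, 2024: 32.9},
    "BERT": {2022: 54.7, 2023: 21.5, 2024: 12.4},
}


def share_change(series, start, end):
    """Change in share between two years, in percentage points."""
    return round(series[end] - series[start], 1)


changes = {model: share_change(series, 2022, 2024) for model, series in SHARES.items()}
for model, delta in changes.items():
    print(f"{model}: {delta:+.1f} percentage points from 2022 to 2024")
```

Read this way, the decline of BERT is a decline in share rather than in absolute counts alone, since its occurrences still rose from 197 to 275 between 2022 and 2023.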

5.4. Results of RQ4

Research Question: What types of research contributions are primarily presented in studies on LLM trustworthiness?

Objective: To classify the nature of research contributions, such as frameworks, methodologies, tools, or empirical studies, made by researchers in this area.

Analysis and Results

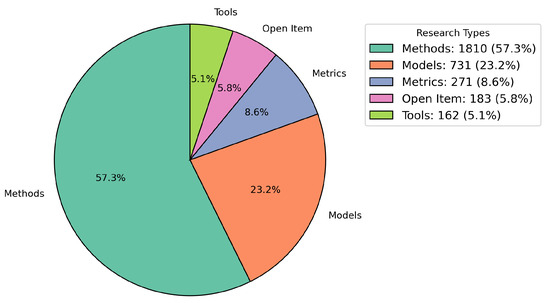

Figure 19 illustrates the distribution of the types of research contributions in studies on LLM trustworthiness. The analysis reveals the following key insights:

Figure 19.

Distribution of research contributions in LLM trustworthiness studies.

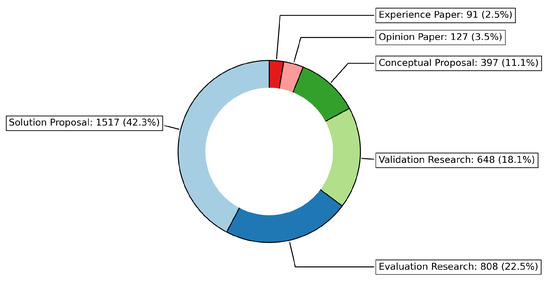

- Solution Proposal: The largest share of research types falls under solution proposals, with 1517 papers (42.3%). This indicates a strong focus on proposing new solutions and approaches for enhancing the trustworthiness of LLMs.

- Evaluation Research: Research on evaluation constitutes 808 papers (22.5%), highlighting a significant interest in assessing and evaluating the effectiveness of LLMs in ensuring their trustworthiness.

- Validation Research: Research focused on validation accounts for 648 papers (18.1%), emphasizing the importance of validating the methods, models, and solutions used to evaluate LLM trustworthiness.

- Conceptual Proposal: A total of 397 papers (11.1%) were categorized under conceptual proposals, suggesting that there is ongoing work to define new concepts and frameworks related to LLM trustworthiness.

- Opinion Paper: There were 127 papers (3.5%) related to opinion papers, indicating a smaller focus on providing subjective viewpoints or discussions related to LLM trustworthiness.

- Experience Paper: Experience papers constituted 91 papers (2.5%), reflecting a relatively smaller body of work focused on sharing practical experiences related to LLM trustworthiness.

These findings demonstrate that the majority of research in this area is focused on solution proposals and evaluation, with a notable amount of validation research. While conceptual, opinion, and experience papers are valuable, they make up a relatively smaller proportion of the overall research types.
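The percentages above follow directly from the paper counts. As a minimal sketch, assuming each share is the category count divided by the sum of all six categories (the function name `percentage_shares` is illustrative), the calculation can be reproduced as follows:

```python
# Paper counts per contribution type, as listed above.
CONTRIBUTION_COUNTS = {
    "Solution Proposal": 1517,
    "Evaluation Research": 808,
    "Validation Research": 648,
    "Conceptual Proposal": 397,
    "Opinion Paper": 127,
    "Experience Paper": 91,
}


def percentage_shares(counts):
    """Return each category's share of the total, in percent, to one decimal."""
    total = sum(counts.values())
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


total_papers = sum(CONTRIBUTION_COUNTS.values())
shares = percentage_shares(CONTRIBUTION_COUNTS)
for label, share in shares.items():
    print(f"{label}: {share}% of {total_papers}")
```

The same function can be applied to any of the other count breakdowns in this section to check that the reported shares and counts agree.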

5.5. Results of RQ5

Research Question: Which research methodologies are employed in the studies on LLM trustworthiness?

Objective: To understand the research approaches and methodologies that are commonly used to investigate LLM trustworthiness.

Analysis and Results

Figure 20 illustrates the distribution of research methodologies employed in studies on LLM trustworthiness. The analysis reveals the following key insights:

Figure 20.

Distribution of research methodologies to investigate LLM trustworthiness.

- Solution Proposal: The largest share of research methodologies is in the form of solution proposals, with 1520 papers (42.3%). This indicates a predominant focus on presenting novel solutions or frameworks aimed at enhancing the trustworthiness of LLMs.

- Evaluation Research: Evaluation research accounts for 810 papers (22.5%), highlighting the importance of assessing the effectiveness and trustworthiness of proposed models, tools, or methods.

- Validation Research: With 649 papers (18.0%), validation research plays a crucial role in confirming the reliability and robustness of LLMs within specific application contexts.

- Conceptual Proposal: 400 papers (11.1%) are categorized as conceptual proposals, which involve theoretical contributions aimed at advancing the understanding of LLM trustworthiness.

- Opinion Paper: Opinion papers make up 127 contributions (3.5%), representing subjective perspectives or expert views on LLM trustworthiness.

- Experience Paper: Experience papers, accounting for 91 contributions (2.5%), focus on practical experiences, lessons learned, or case studies related to LLM trustworthiness.

These findings suggest that most of the research on the trustworthiness of LLMs revolves around proposing solutions and evaluating their effectiveness. Validation research also plays a significant role, while conceptual contributions and experience papers are relatively less frequent.

5.6. Results of RQ6

Research Question: What is the distribution of publications in terms of academic and industrial affiliation concerning LLM trustworthiness?

Objective: To assess the level of interest and involvement from academic institutions and industry players in LLM trustworthiness.

Analysis and Results

Figure 21 illustrates the distribution of publications based on academic and industrial affiliation concerning LLM trustworthiness. The analysis reveals the following insights:

Figure 21.

Distribution of publications based on academic and industrial affiliation in LLM trustworthiness studies.

- Academia: The overwhelming majority of publications come from academic institutions, with 2209 papers (85.9%). This indicates that academic research is the primary driver of studies on LLM trustworthiness.

- Academia and Industry: A smaller share of 175 papers (6.8%) involve both academia and industry, reflecting some level of collaboration between the two sectors in this field.

- Industry: Industry-only contributions account for 76 papers (3.0%), suggesting that while industry is involved, it is less active in the publication of research focused on LLM trustworthiness.

- Academia and Government: Publications from academia and government combined make up 57 papers (2.2%), indicating a smaller involvement from government bodies in this research area.

- Not Given: In 39 papers (1.5%), the affiliation is not specified, leaving their academic or industrial origin unclear.

- Government: Research contributions from government institutions alone represent 12 papers (0.5%), showing a minimal role for government in this research domain.

- Industry and Government: Only three papers (0.1%) involve both industry and government, suggesting a very limited level of collaboration between these sectors in LLM trustworthiness research.

These findings highlight that academia is the dominant player in the research on LLM trustworthiness, with industry and government institutions having a relatively smaller, though growing, involvement.
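Because several categories are combinations of sectors, it can help to aggregate them into the share of papers with any involvement from a given sector. The following sketch derives these aggregates from the counts above; the aggregation itself and the name `involvement_share` are assumptions made for illustration and are not reported in the study. Papers with unspecified affiliation are kept in the denominator.

```python
# Paper counts per affiliation category, keyed by the sectors involved.
AFFILIATION_COUNTS = {
    ("academia",): 2209,
    ("academia", "industry"): 175,
    ("industry",): 76,
    ("academia", "government"): 57,
    ("not given",): 39,
    ("government",): 12,
    ("industry", "government"): 3,
}


def involvement_share(counts, sector):
    """Percent of all papers whose affiliation includes the given sector."""
    total = sum(counts.values())
    involved = sum(n for sectors, n in counts.items() if sector in sectors)
    return round(100 * involved / total, 1)


for sector in ("academia", "industry", "government"):
    print(f"{sector}: {involvement_share(AFFILIATION_COUNTS, sector)}% of papers")
```

The three values do not sum to 100% because collaborative papers are counted once for each sector involved.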

6. Threats to the Validity of the Results

When performing a systematic mapping study, various issues can arise that may threaten the validity of the research. This section outlines the approach for addressing these validity threats, based on the guidelines provided in [73].