1. Introduction

Hand gesture recognition plays a vital role in facilitating interaction and manipulation of virtual objects in virtual reality (VR) and augmented reality (AR) environments. In the physical world, humans depend significantly on sensory inputs such as touch and vision to perceive and interact with their surroundings [1]. Translating natural hand interactions into VR and AR environments presents significant challenges, especially in accurately detecting gestures and sensing virtual objects. To tackle these challenges, researchers have developed various techniques, with one of the most notable being gesture recognition combined with haptic feedback. This approach aims to improve training effectiveness and enhance the sense of realism in VR simulations. These methods provide significant advantages in performing complex tasks, indicating that haptic feedback plays a crucial role in successful interaction recognition [2]. When haptic feedback is absent, users often report decreased satisfaction due to the lack of tactile confirmation, which undermines their sense of action validation and reduces overall engagement [3]. For example, surgeons have pointed out that the absence of tactile feedback is a significant limitation in virtual reality (VR) training systems. They often prioritize haptic input over visual cues, particularly when navigating delicate and concealed anatomical structures, such as those found in the brain [4].

Extensive studies have focused on exploring hand gestures and interactions with objects in VR and AR platforms, intending to improve control capabilities. Furthermore, advancements in haptic device technology have made it possible to recognize virtual objects through various sensory inputs, including motion, rotation, and other complex interactions. This evolution in haptic interfaces has significantly advanced human–computer interaction and, more generally, human–machine interaction, bridging the gap between the virtual and physical worlds by enriching tactile experiences [5]. Lippi et al. in [6] proposed a robotic manipulator with off-the-shelf vision-based tactile sensors on the gripper and a controller that delivers vibration feedback. Low-computation image processing converts tactile data into vibrations, allowing users to perceive applied pressure. Simultaneously, the controller translates linear and angular velocities into end-effector commands, ensuring a reactive and intuitive interface. In another study, Yentl et al. [7] integrated Prime II Haptic gloves from Manus with an Oculus VR headset. Since the gloves are not recognized as hands, they require attached Oculus controllers, making optical hand tracking impossible. The controllers send hand location data to the PC via the HMD, while the gloves transmit hand shape data via wireless connection. When a fingertip touches a virtual model, the computer triggers a vibration response, allowing users to touch, grab, and interact directly with virtual objects. Pezent et al. [8] used a compact bracelet device from Tasbi to simulate discrete events such as hand collisions, impacts, and high-frequency feedback. Their approach utilized these events to inform users about interactions with virtual objects. In addition, they employed proportional squeezing to convey continuous interaction forces.

Another group, Sun et al. [9], developed haptic feedback patterns using ATH-Rings for virtual reality (VR) applications, providing vibrotactile and thermo-haptic feedback. The tactile sensor, equipped with a nichrome (NiCr) heater, is mounted on the inner surface of the ring, ensuring direct contact with the finger’s skin. This design allows for precise monitoring of muscle swelling during finger bending while also delivering thermo-haptic feedback to the skin. In another study, Kim et al. [10] introduced a wearable feedback interface to enhance ergonomics in heavy or repetitive industrial tasks. Wireless vibrotactile displays were created to alert users about excessive strain on their body joints by delivering appropriate tactile stimuli that guide them toward safer physical movements. Arbeláez et al. [11] demonstrated the importance of vibrotactile feedback in improving spatial awareness by using a wristband to assist users in solving 2D puzzles. Their system analyzed the object’s position and rotation relative to its target placement, delivering feedback to guide necessary adjustments. In another study, Chiu et al. [12] developed a haptics-based rehabilitation system using Novint Falcon gloves to enhance pinch skills and finger strength. They tracked the 3D positions of two devices, synchronized the data, and fed it into a game engine to create virtual balls. A force mechanics model calculated fingertip forces based on the spatial relationship with a target cube, generating precise haptic feedback for direction and strength. These haptic feedback technologies, when integrated with mixed reality, depend fundamentally on hand gesture recognition in the virtual environment. This is because humans use their hands frequently when communicating or interacting with their surroundings [13].

Despite the importance of hand gesture recognition in mixed reality, prolonged and large-scale mid-air gestures tend to cause muscle fatigue [14]. Other problems arise with rapid movement, where users may lose the interaction without notification when their hands leave the camera field of view of the head-mounted display (HMD). Another problem that a user may face in HMDs like HoloLens 2 (HL2) is related to near-interaction actions, when the user’s hand is actually on the bounding box that surrounds the object but the contact is not recognized. Moreover, there are many applications with haptic feedback for VR and robotics but only a few examples for AR. In particular for AR, perfect finger tracking would be required to offer the user a realistic visual interaction perception, which cannot currently be guaranteed with AR devices like the HL2. In the case of a VR application, the virtual representation of the hand and fingers visually informs the user about their pose relative to the virtual objects, so that the user can clearly understand their collisions and therefore the possibility of activating some gestures, such as pinching. This approach remains effective even if the tracking is not entirely accurate. Users can adjust the position of their hands and fingers to achieve the desired virtual pose, even if there is some misalignment between their actual pose and the virtual representation. The VR system only requires coherent tracking regarding the direction of movement (up–down, left–right, etc.), and it can tolerate misalignment of up to several centimeters. In AR applications, tracking errors can lead to incorrect interactions with virtual objects. For example, a user may see their fingertips near an object but fail to pinch it because the tracked fingers have not reached the required threshold distance.

Haptic feedback helps notify users of gesture recognition and compensates for misalignment between visual perception and actual contact. For example, a user knows pinching is feasible after a haptic notification, even if fingers do not yet appear in contact with the object. Simple devices, such as vibrating bracelets, can be beneficial [11], while delivering haptic feedback directly to the fingers feels more natural and intuitive. Glove-like devices may interfere with hand and finger tracking, particularly with video-based tracking systems like those on the Microsoft HL2. Therefore, simpler devices that have less demanding wearability requirements may be a better option. For this reason, the goal of this paper is to compare simple vibrotactile rings with a full glove device in terms of how well they support the recognition of a fundamental gesture like pinching, which leads to smoother and faster fine movement of objects with the Microsoft HL2. Pinching and fine object movement, particularly using the pincer grasp, are essential fine motor skills. The pincer grasp involves using the thumb and index finger to pick up and manipulate small objects. This skill is crucial for activities like self-feeding, holding pencils, and buttoning clothes. Developing these skills allows for precise hand movements and manipulation of small objects, which is vital for various daily tasks and activities.

2. Materials and Methods

2.1. Pinching Gestures Detection

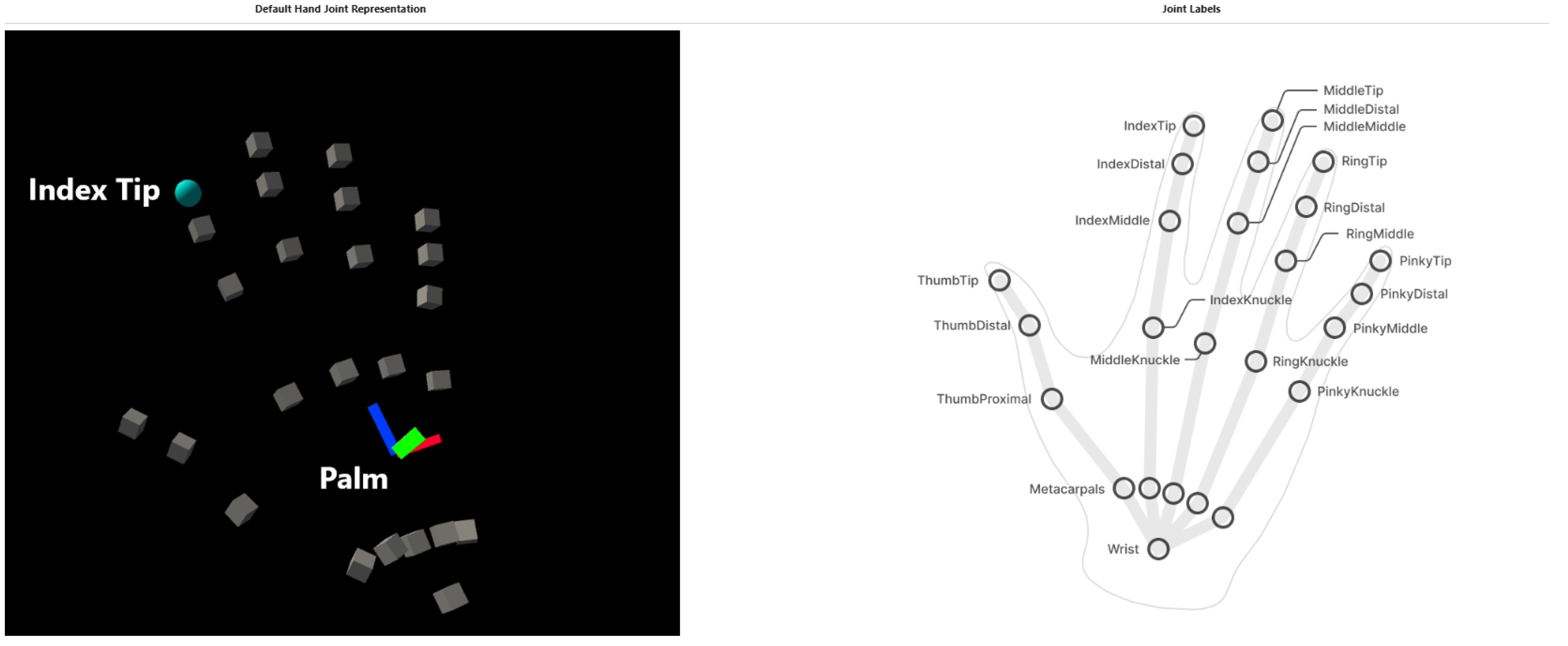

HL2 is a head-mounted display (HMD), manufactured by Microsoft and MicroVision Inc., USA, with onboard computation and tracking that projects digital information into real physical space. By integrating it with the Mixed Reality Toolkit (MRTK 2), users can interact with 3D virtual models coherently displayed in their environment. The device offers many functionalities, including hand tracking that captures 26 joints for each hand, such as the palm and the tip of the index finger, allowing for precise tracking of hand movements [15] (see Figure 1). By leveraging these capabilities, the pose of the user’s index finger is detected and mapped as a rigid body. This enables the recognition of primary gestures, such as touching, through collision detection with virtual objects. Assigning mass properties to the index finger’s rigid body enables users to simulate physical interactions, such as pushing and moving virtual objects. As detailed in the introduction, one notable gesture to simulate is the act of pinching an object between the index finger and thumb, commonly referred to as the pincer grasp. This gesture enables precise movements of objects in six degrees of freedom (6-DOF), as during precision assembly tasks.

MRTK recognizes a pinch based on the bounding box around the objects, but our goal is to notify the pinch only when the object is between the index finger and the thumb, as in real life. For this reason, we detect the gesture by monitoring both the position and the curling motion of the index finger toward the thumb, based on the relative positions of its three phalangeal joints. When the fingers meet at a defined threshold, the application recognizes this as a pinch event, enabling more accurate and controlled interactions. We have defined a value range from 0 to 1, where 0 indicates a fully curled finger and 1 signifies a fully open and extended finger. Through practical experimentation, we found that a value between 0.6 and 0.8 effectively captures the pinch event. We have chosen a threshold of 0.75, as it strikes a good balance between accuracy and reliability [16,17,18]. The threshold value of 0.8 has been used by the Leap Motion company (Ultraleap) for their hand-tracking device to detect pinch gestures [19]. In our experiments, we found that using a threshold close to 0.8 reduces the likelihood of accidental pinch detections, making the system more reliable.

While it is technically possible to lower the threshold to around 0.6 for more precise detection, this often leads to inconsistencies, especially when users wear haptic gloves that cover their entire hands. Through our empirical tests, we determined that a threshold value of 0.75 provides the most precise and reliable results for pinch detection.
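To make the thresholding step concrete, the following minimal sketch computes an openness value for the index finger from four tracked points and compares it with the 0.75 threshold. It is not the application's implementation: the openness proxy (straight-line knuckle-to-tip distance divided by the summed segment lengths), the function names, and the comparison direction (a pinch is reported when the value falls to or below the threshold, following the 0 = curled, 1 = open convention) are all assumptions made for illustration. The thumb-proximity check mentioned in the text is omitted.

```python
import math

PINCH_THRESHOLD = 0.75  # threshold value reported in the text


def index_openness(knuckle, middle_joint, distal_joint, tip):
    """Hypothetical openness proxy for the index finger.

    Returns a value close to 1 when the finger is straight and a smaller
    value as it curls. Inputs are 3D points (x, y, z) in meters.
    """
    path_length = (
        math.dist(knuckle, middle_joint)
        + math.dist(middle_joint, distal_joint)
        + math.dist(distal_joint, tip)
    )
    if path_length == 0.0:
        raise ValueError("degenerate finger: all joints coincide")
    return math.dist(knuckle, tip) / path_length


def is_pinching(openness, threshold=PINCH_THRESHOLD):
    """Assumed rule: smaller values mean more curl, so pinch at or below the threshold."""
    return openness <= threshold


# Illustrative (made-up) joint positions for a straight and a curled finger.
straight = [(0.0, 0.0, 0.0), (0.04, 0.0, 0.0), (0.065, 0.0, 0.0), (0.085, 0.0, 0.0)]
curled = [(0.0, 0.0, 0.0), (0.04, 0.0, 0.0), (0.04, -0.025, 0.0), (0.02, -0.025, 0.0)]

print(is_pinching(index_openness(*straight)))  # straight finger
print(is_pinching(index_openness(*curled)))    # curled finger
```

A real detector would also need the index-to-thumb distance and probably some hysteresis around the threshold to avoid flickering between states.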

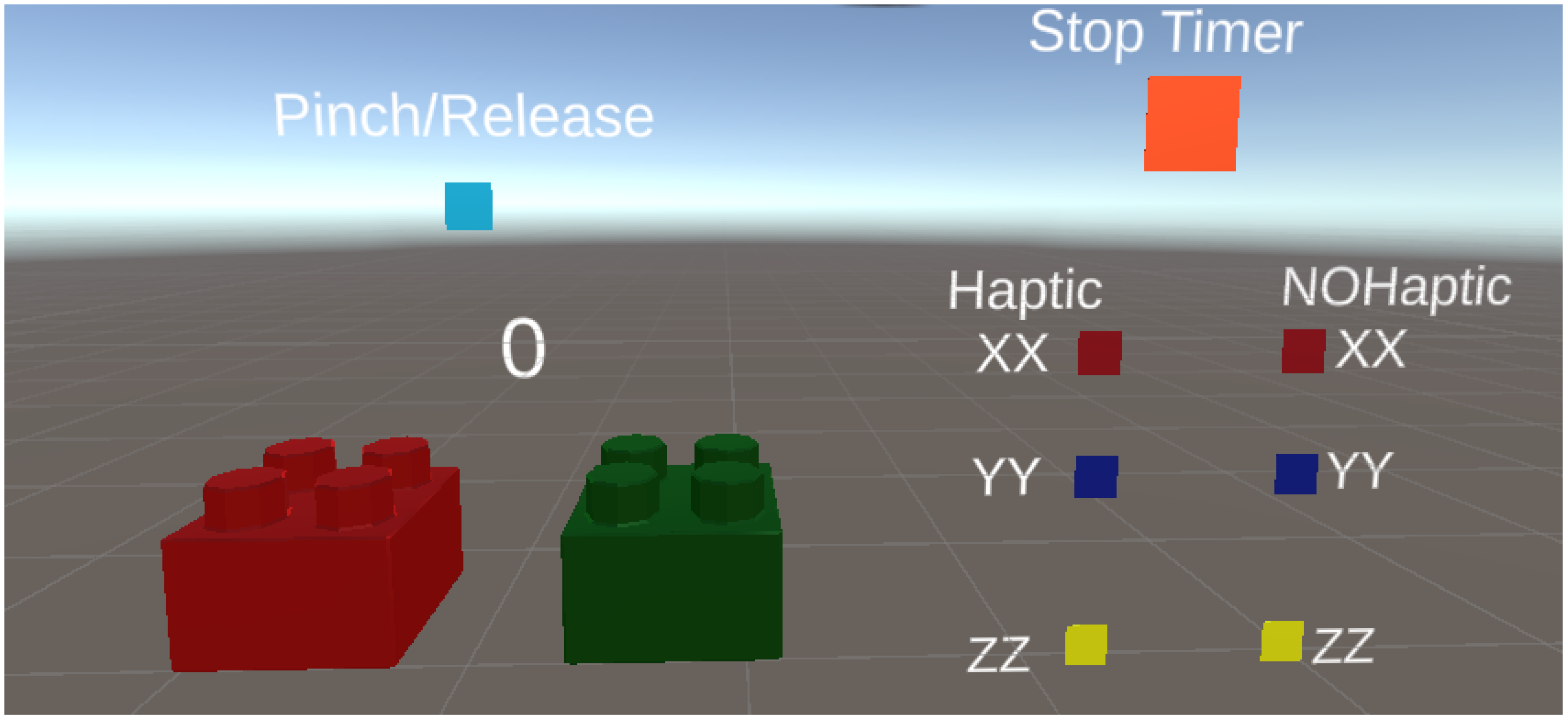

2.2. Pinching and Fine Object Moving Application

The application simulates the delicate task of assembling or picking and placing small, fragile objects in the real world by displaying two virtual LEGO cubes, one red and one green. These cubes help guide the user toward achieving the final goal with familiar objects. Users have to pinch the red cube and move it close to the green one while avoiding collisions as much as possible. Once the pinch gesture is activated, the position and orientation of the entire hand dictate the 6-DOF movements of the red cube. This simulation mimics the action of grasping an object, like a cup or a pen, between the thumb and index finger in real life. If the fingertip touches the cube without activating the pinch, a collision is recognized and the cube is moved by the physics engine, simulating what happens in reality when we try to pinch a small object but instead nudge it. Whenever the pinch is deactivated, the 6-DOF movement of the virtual object with the entire hand is also deactivated, as in the real world when we decide to release an object or when we involuntarily lose our grip. Gravity was turned off, as if the task were being performed in space. The application allows the entire task to be simulated along specific axes, as follows:

1. X-axis: the red and green cubes are placed side by side.

2. Y-axis: the red cube starts below the green one.

3. Z-axis: the red cube is positioned behind the green, roughly aligned with the user’s line of sight.

This scene also features a 3 × 2 grid of buttons (see Figure 2). The first column contains three buttons to run the tests with haptic feedback on the X, Y, or Z axes, while the second column is for tests without haptics. A timer starts when any of the buttons in the grid is pressed, and it is stopped by the user when they complete the task.

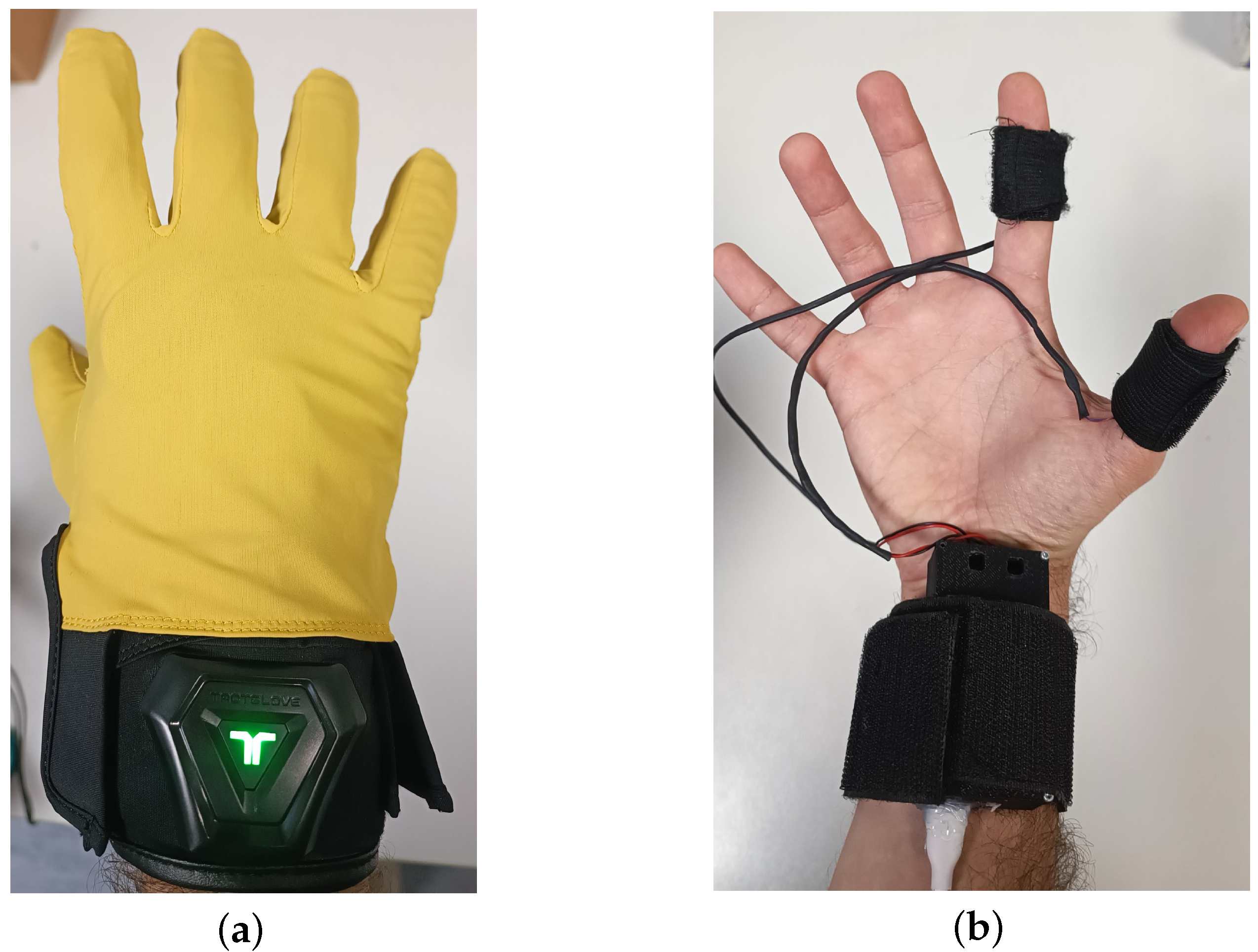

2.3. Haptic Feedback

Two haptic devices were used in this experiment. The first was a full vibrotactile glove developed by the company bHaptics. It consists of full black gloves with a yellow cover to improve hand tracking, combined with six vibrotactile motors (five on the fingertips and one on the wrist) (see Figure 3a). The vibration patterns can be customized through the company’s portal website. Connecting the gloves to the HL2 involves two steps: first, installing the bHaptics package from the Unity Store into the Unity application, and second, downloading their companion application on an Android device. This application communicates with the HL2 over the same Wi-Fi network and sends events to the gloves via Bluetooth, enabling them to vibrate in the desired pattern [20]. The second device is a pair of vibrotactile rings designed by the team at the University of Pisa. It is driven by an electronic board (ESP8266, manufactured by Espressif Systems, Shanghai, China) with custom code written in the Arduino IDE, while the vibration motors are FIT0774 units from DFRobot, Shanghai, China (see Figure 3b).

This device is integrated with the HL2 through UDP sender/receiver packets. The device includes a receiver for the UDP packets, allowing the HL2 to send messages with the desired vibration patterns directly to the device via Wi-Fi, without the need for communication with a PC, mobile device, or any additional hardware. Both devices are designed to offer fast response times and extremely low latency. They also offered a variety of vibration patterns tailored to different user actions. Three distinct patterns were selected for the bHaptics glove, each corresponding to different gesture intensities as follows:

Low intensity on a finger (30% of the provided intensity): vibration on the index finger or on the thumb, used during a simple touch of an object to notify the user that a collision was identified but no pinch was detected.

Medium intensity on both fingers (40% of the provided intensity): vibration on both the index finger and thumb, triggered during a pinching action. This vibration is maintained for as long as the pinch is active.

High intensity (80% of the provided intensity): full-glove vibration, including all fingers and the wrist, used to signal task completion and indicate that no further interaction is needed. The task is considered completed when the distance error becomes lower than 1 mm.
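The three glove patterns above amount to a small decision rule over the interaction state. The sketch below shows one way to express it. The intensity percentages and the 1 mm completion threshold come from the text, while the function name, the actuator labels, and the priority order (completion first, then pinch, then touch) are assumptions for illustration and are not the bHaptics SDK interface.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

COMPLETION_ERROR_MM = 1.0  # task is complete below this distance error (from the text)
ALL_ACTUATORS = ("thumb", "index", "middle", "ring", "pinky", "wrist")  # hypothetical labels


@dataclass(frozen=True)
class Pattern:
    actuators: Tuple[str, ...]
    intensity_percent: int


def select_glove_pattern(
    touching_finger: Optional[str], pinching: bool, distance_error_mm: float
) -> Optional[Pattern]:
    """Pick the vibration pattern for the current state (assumed priority order)."""
    if distance_error_mm < COMPLETION_ERROR_MM:
        return Pattern(ALL_ACTUATORS, 80)       # high: full-glove vibration
    if pinching:
        return Pattern(("index", "thumb"), 40)  # medium: both fingers while pinching
    if touching_finger in ("index", "thumb"):
        return Pattern((touching_finger,), 30)  # low: touch without pinch
    return None                                 # no contact, no vibration


print(select_glove_pattern("index", False, 25.0))
print(select_glove_pattern("index", True, 25.0))
print(select_glove_pattern(None, True, 0.4))
```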

The vibrotactile rings developed by the University of Pisa offer safe vibration patterns with a high degree of customization. Vibration patterns can be tailored by adjusting two key variables: frequency, which affects the intensity of the vibrations, and pulse-width modulation (PWM). The PWM operates at a frequency of 5 kHz and controls the duration of each pulse within a cycle by rapidly turning the vibration motor on and off. This allows for the creation of various customized patterns for both continuous and discrete vibration effects. We set these intensities so that the user’s perception matches that of the bHaptics glove:

Low intensity on a finger: 20% of the PWM cycle.

Medium intensity on both fingers: 35% of the PWM cycle.

High intensity, applied to both fingers when the task is completed and further interaction is unnecessary: 50% of the PWM cycle.

The only difference between the haptic patterns of the two devices is in how task completion is signaled: full-glove vibration for the bHaptics glove vs. index and thumb vibration for the rings.
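As a rough illustration of the ring settings, the sketch below converts the duty cycles listed above into pulse on-times at 5 kHz (a 200 µs period) and packs a vibration command into a UDP datagram. The duty cycles and PWM frequency are taken from the text. The JSON message layout, the event names, and the address used in the usage comment are hypothetical, since the actual packet format of the rings is not described here.

```python
import json
import socket

PWM_FREQUENCY_HZ = 5_000  # PWM frequency reported in the text
RING_DUTY = {"touch": 0.20, "pinch": 0.35, "complete": 0.50}  # duty cycles from the text


def pulse_on_time_us(duty):
    """On-time of one PWM pulse in microseconds for a duty cycle in [0, 1]."""
    if not 0.0 <= duty <= 1.0:
        raise ValueError("duty cycle must be between 0 and 1")
    period_us = 1_000_000 / PWM_FREQUENCY_HZ  # 200 microseconds at 5 kHz
    return duty * period_us


def build_message(event, fingers):
    """Encode a command as JSON bytes (hypothetical format, not the rings' protocol)."""
    payload = {"fingers": list(fingers), "duty": RING_DUTY[event]}
    return json.dumps(payload).encode("utf-8")


def send_pattern(sock, address, event, fingers):
    """Send one command datagram to the ring controller at (host, port)."""
    sock.sendto(build_message(event, fingers), address)


for name, duty in RING_DUTY.items():
    print(name, round(pulse_on_time_us(duty), 1), "us on-time per cycle")

# Usage sketch (address is a placeholder):
# with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
#     send_pattern(sock, ("192.168.0.50", 4210), "pinch", ["index", "thumb"])
```

UDP fits this use because each command is small and self-contained, and a lost packet is quickly superseded by the next state update.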

2.4. Experimental Trials

First, we evaluated the haptic latency with both devices by measuring the response time after triggering the vibrotactile feedback. To visually evaluate these vibration delays, we attached a strip of paper to the haptic device, which moved during the vibration, and we changed the color of the LEGO block after each touch detection. With both devices, we recorded a 60 Hz video using an Android phone camera through the HL2 display.
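When a delay is read off a video, its resolution is limited by the frame interval, which is about 16.7 ms at 60 Hz. The short sketch below shows the conversion from frame indices to milliseconds. The function names and the frame numbers are illustrative assumptions and do not reproduce the measurements of this study.

```python
def frame_interval_ms(fps):
    """Duration of one video frame in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def delay_from_frames(visual_frame, haptic_frame, fps=60.0):
    """Delay between the frame showing the color change and the frame
    where the paper strip first moves, in milliseconds."""
    return abs(haptic_frame - visual_frame) * frame_interval_ms(fps)


# Made-up frame indices, for illustration only.
print(round(frame_interval_ms(60.0), 2))
print(round(delay_from_frames(120, 122), 2))
```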

Twelve users (8 males and 4 females) participated in the experiment. All of them had previous experience with the HL2. Eleven users are biomedical engineers at the University of Pisa, working full-time or part-time in the EndoCAS lab. Four of them have not yet graduated with their master’s degrees, while the others hold positions as PhD students, postdoctoral researchers, or technicians. Additionally, one participant is in the army but has previously conducted several experiments in our lab. The study was conducted in accordance with the Declaration of Helsinki and approved by the Bioethics Committee of the University of Pisa (resolution n. 51/2024 of 27 September 2024). All enrolled participants signed an informed consent form that also included consent to publish any media (photos and videos) from the trial. Before starting the experiment, they received both verbal and visual instructions on a PC running the same application uploaded to the HL2, outlining the tasks and the user interface functionalities. Once the experiment began with the HL2, no additional guidance was given. All participants performed the experiments using three different modalities: the bHaptics glove, the haptic rings, and bare hands. The starting order of the modalities was randomized, as was the execution order along the axes. As noted above, the timer started when a participant clicked on the button corresponding to the desired axis in the button array. The application recorded all user interactions, including timestamps, the number of trial actions required to complete the task (i.e., the number of pinching activations), and the distance error between the final position of the red cube and the optimal one, and saved these data into an Excel file.

Upon finishing the experiment, the participants completed a questionnaire consisting of 16 items based on a seven-point Likert scale ranging from −3 to +3, with 0 indicating a neutral response. The questionnaire was adapted from the ISO NORM 9241/110 standard for software usability evaluation [21]. To facilitate a more effective analysis, the responses were categorized into eight groups: Software Usability and Effectiveness, User Interface, User Support, Learning Time, User Flexibility, Troubleshooting, Error Handling, and Adaptability. We also asked the users for their written opinion about both the application and the devices.

Statistical analyses were performed using IBM’s SPSS software 22 on the collected responses. This included calculating the mean, median, standard error (SE), standard deviation (SD), and interquartile range (IQR). Additionally, the Shapiro–Wilk test was used to assess the normality of the data distribution. None of the experimental result datasets satisfied the normality assumption since their p-values were below 0.05. Consequently, we used a non-parametric test, specifically the Friedman test, to compare the three modalities (bHaptics glove, haptic rings, and bare hand) across three metrics (number of trials, time, and distance error) to check for any significant differences. This approach was appropriate since our experimental design involved the same subjects testing the same application on different devices [22]. If the resulting p-value of a group test was below 0.05, a Wilcoxon Signed-Rank test was applied to identify where the significant differences lay between pairs of modalities (Rings vs. bHaptics, Rings vs. Bare Hand, and bHaptics vs. Bare Hand). Furthermore, to control the false discovery rate (FDR) under arbitrary dependence, we applied the Benjamini–Yekutieli (BY) correction to adjust the p-values using MATLAB 2025a by MathWorks.
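For readers unfamiliar with the Benjamini–Yekutieli step, the following sketch shows the standard adjustment in plain Python. It is a stand-in for illustration, not the MATLAB code used in the study, and the three input p-values are made up rather than taken from the results. Each sorted p-value p(i) is multiplied by m·c(m)/i, where m is the number of tests and c(m) is the harmonic sum 1 + 1/2 + ... + 1/m, and monotonicity is then enforced from the largest p-value downward.

```python
def benjamini_yekutieli(p_values):
    """Return BY-adjusted p-values in the same order as the input."""
    m = len(p_values)
    if m == 0:
        return []
    c_m = sum(1.0 / k for k in range(1, m + 1))  # harmonic correction factor
    order = sorted(range(m), key=lambda i: p_values[i])  # indices by ascending p
    adjusted = [0.0] * m
    running_min = 1.0  # also caps adjusted values at 1
    for rank in range(m, 0, -1):  # walk from the largest p-value down
        idx = order[rank - 1]
        candidate = p_values[idx] * m * c_m / rank
        running_min = min(running_min, candidate)
        adjusted[idx] = running_min
    return adjusted


# Hypothetical p-values for three pairwise comparisons.
raw = [0.01, 0.04, 0.03]
print([round(p, 4) for p in benjamini_yekutieli(raw)])
```

With three pairwise comparisons, c(3) = 11/6, so the smallest p-value is multiplied by 5.5 before it is compared with 0.05.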

3. Results

We estimated a delay between the haptic and visual events of 32 ms with both devices. Table 1, Table 2 and Table 3 report the experimental results obtained with the bHaptics glove, the haptic rings, and the bare hand, respectively. The median error along the combined three axes with the bare hand was 10.3 mm (IQR = 13.1 mm), while the median time for task completion was 10.0 s (IQR = 4.0 s); with the bHaptics glove, it was 2.4 mm (IQR = 5.2 mm) in a median time of 8.0 s (IQR = 6.0 s), and with the haptic rings, it was 2.0 mm (IQR = 2.0 mm) in a median time of 6.0 s (IQR = 5.0 s). Trials represent the number of pinch actions.

Table 2 shows the results of the users while wearing the haptic rings.

Table 3 shows the bare-hand results.

The Friedman test showed no significant difference between the modalities in terms of number of trials (pinches), as the p-value was 0.1133. For the distance error, the p-value was 9.3113 × 10⁻⁶, indicating a significant difference between at least two modalities, and the same held for the time, where the p-value was 0.0016.

Table 4 shows the SPSS results for the combined data from each axis of each factor and compares the significant difference after applying a non-parametric (Wilcoxon Signed-Rank) test, corrected by the Benjamini–Yekutieli correction value using MATLAB 2025a.

Finally, based on the analysis of participants’ questionnaire responses and after organizing the questions into relevant categories, Table 5 presents the corresponding metric values for each category.

4. Discussion

Our experiment integrated haptic feedback with augmented reality (AR) to provide users with an immersive experience similar to interacting with real-world objects. The results indicated that user performance, measured by distance error, improved with the use of haptic devices (see Table 1 and Table 2) compared with using bare hands (refer to Table 3). This improvement was observed across all three axes and was evident in both the mean and median values. Additionally, the haptic devices were associated with lower standard deviation values, suggesting greater consistency in performance. The interquartile range (IQR) was also reduced when haptic feedback was utilized, further reinforcing the reliability and precision of the haptic devices. The results presented in Table 4 highlight the statistical differences in distance error between the bare-hand condition and both the glove and ring conditions.

The haptic devices provide informative feedback that helps users understand that the task is complete when the distance error is minimized. Furthermore, user performance in terms of execution time is significantly better with the haptic rings compared with the bare hand (see Table 2 vs. Table 3), which is also supported by the statistical differences shown in Table 4. In contrast, there are no statistically significant differences when comparing the bare hand to the bHaptics glove. The differences in execution time between the two devices become particularly clear when directly compared (see Table 1 vs. Table 2), with the results favoring the haptic rings being statistically significant (as shown in Table 4). The slower performance observed with the bHaptics glove can be attributed to the higher number of trials required by users, particularly along the X direction (see Table 2 compared with Table 3). Because each trial corresponds to a pinching activation, a higher number of trials means repeated pinch attempts and therefore a longer execution time. This phenomenon may occur due to a loss of tracking during pinching, which can result from the altered shape and appearance of the hand while wearing the full glove. However, statistically speaking, there was no significant difference in the number of trials conducted using the two haptic devices, as presented in Table 4.

According to the questionnaire answers, users praised the application for its intuitiveness and its ability to facilitate task comprehension, with the highest score achieved for Learning Time (mean = 2.1 ± 0.31). This was further reflected in the other questionnaire metrics: Software Usability and Effectiveness (mean = 1.5 ± 0.26), User Interface (mean = 1.5 ± 0.30), and User Flexibility (mean = 1.4 ± 0.44) all received high scores, as shown in Table 5. Nonetheless, the study also identified areas for improvement. User Support received a lower mean score (0.47 ± 0.35), as did Troubleshooting (0.75 ± 0.37) and Adaptability (0.85 ± 0.52). These results indicate that enhancements could be made by incorporating system-generated messages or prompts. For example, notifying users when a task is active or reminding them if they accidentally initiate a new task before completing the current one could be beneficial. Participants’ feedback echoed these suggestions. The lowest score recorded was for Error Handling (mean = −0.10 ± 0.48). We attribute this to the lack of prior training in recognizing and avoiding incorrect or unintended actions, as pointed out by several users in their responses.

The almost entirely positive responses obtained for all questions confirm the quality of the application and the significance of the experiments, which were not influenced by intrinsic limitations of the application itself. These results showed that most categories of the questionnaire reflected an improved user experience during the experiment. However, the categories related to software functionality, graphic interface, and learning time had the lowest significance values. Despite this, they were still rated positively on average, with users noting that they found the software easy to learn. Conversely, users perceived that the software’s error handling could be unclear or inconsistent. Additionally, there was some disagreement regarding the application’s adaptability. We attribute these two results to the lack of visual guidance, such as notifications or error messages, that some users experienced. Since none of the participants had prior experience with haptic feedback, it was important for us to understand the challenges that typical users might face in order to enhance our application. In the written opinions, many users highlighted the benefits of haptic feedback in general. However, a few pointed out its drawbacks, primarily the loss of hand tracking when using the bHaptics gloves. This confirms the potential hand-tracking issues associated with wearing the gloves, which we also observed in the increased number of trials.

5. Conclusions

Pinching and fine movement of objects, particularly the use of the pincer grasp, are essential fine motor skills. Integration of haptic feedback with augmented reality can be useful to notify the user when this gesture is recognized. Additionally, this feedback helps compensate for any misalignment in visual perception between the tracked fingertip and virtual objects, leading to improved spatial precision. Our results show that the median distance error (with IQR) on all three axes varied significantly across different conditions. The bare-hand condition had the highest median distance error, of 10.3 mm (IQR = 13.1 mm), and the slowest execution time of 10.0 s (IQR = 4.0 s). The bHaptics glove improved performance, resulting in a lower median error of 2.4 mm (IQR = 5.2 mm) and an execution time of 8.0 s (IQR = 6.0 s). The haptic rings achieved the highest precision, with the lowest error of 2.0 mm (IQR = 2.0 mm) and the fastest execution time of 6.0 s (IQR = 5.0 s). Based on our experiments, it is possible to improve performance in terms of execution time without sacrificing spatial accuracy. However, the results may vary depending on the haptic device used. Simpler devices, such as the described haptic rings, can outperform more complex devices like the bHaptics gloves, offering enhanced accuracy and faster execution times. The haptic gloves may face challenges with tracking the hand and fingers, particularly when relying on the video-based tracking provided by the Microsoft HoloLens 2. Moreover, the haptic rings are also superior in terms of wearability. This study holds significant promise for future research and practical deployments, especially in fields that require high levels of precision and depth perception. One of the most impactful areas can be surgical training and guidance, where haptic feedback can help prevent critical errors, such as penetrating incorrect tissues or organs. Additionally, it can assist users in comprehending the spatial depth of their environment, an essential factor in various professional applications beyond healthcare, including robotics, industrial design, and remote manipulation. The experimental approaches described in this paper can also be applied in the future to other gestures, such as power grasping.