Multi-Agentic LLMs for Personalizing STEM Texts

Abstract

1. Introduction

2. Related Work

2.1. Intelligent Tutoring Systems in Education

2.2. Multi-Agent Large Language Models

3. Methods

3.1. Study Design

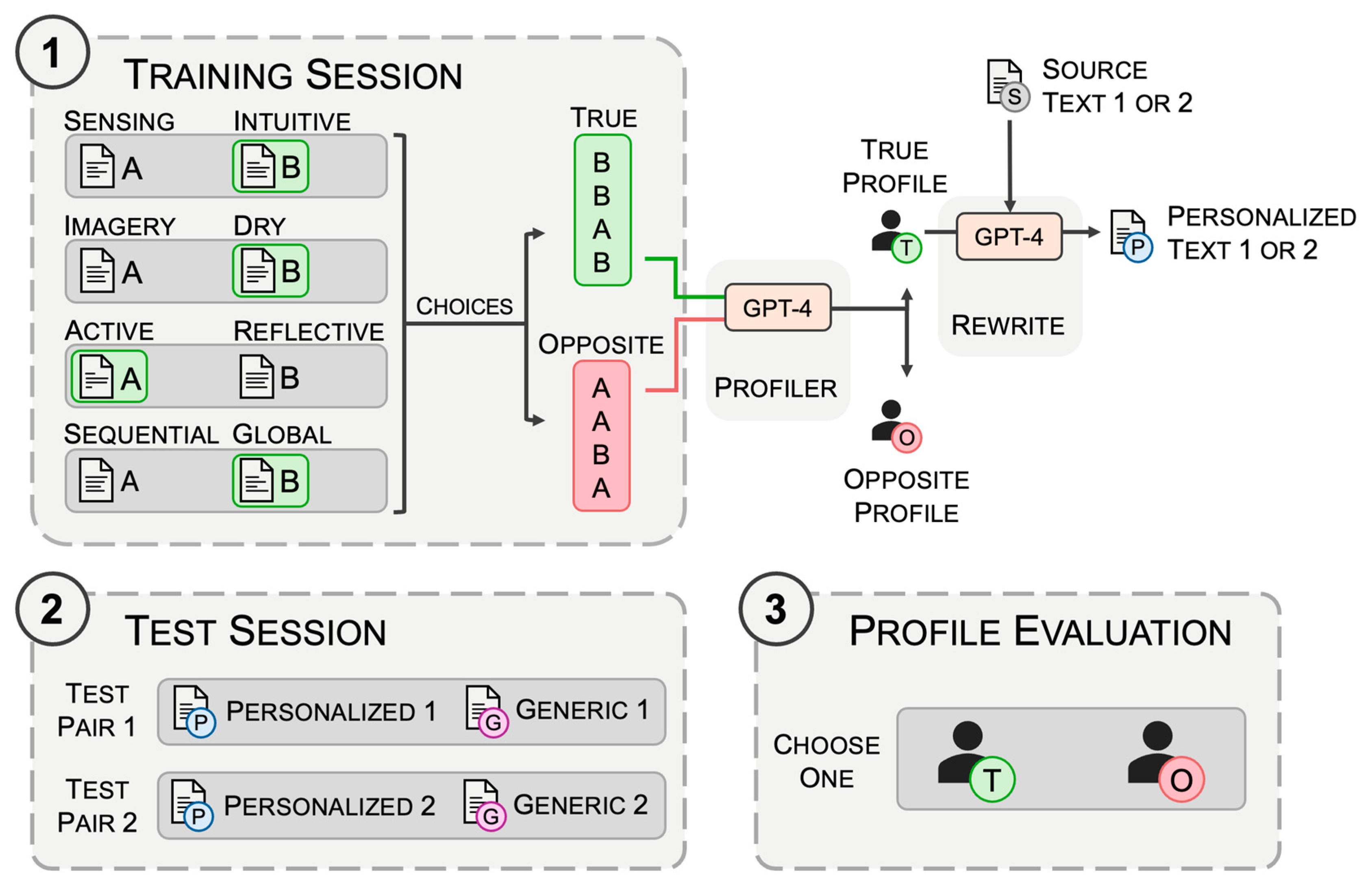

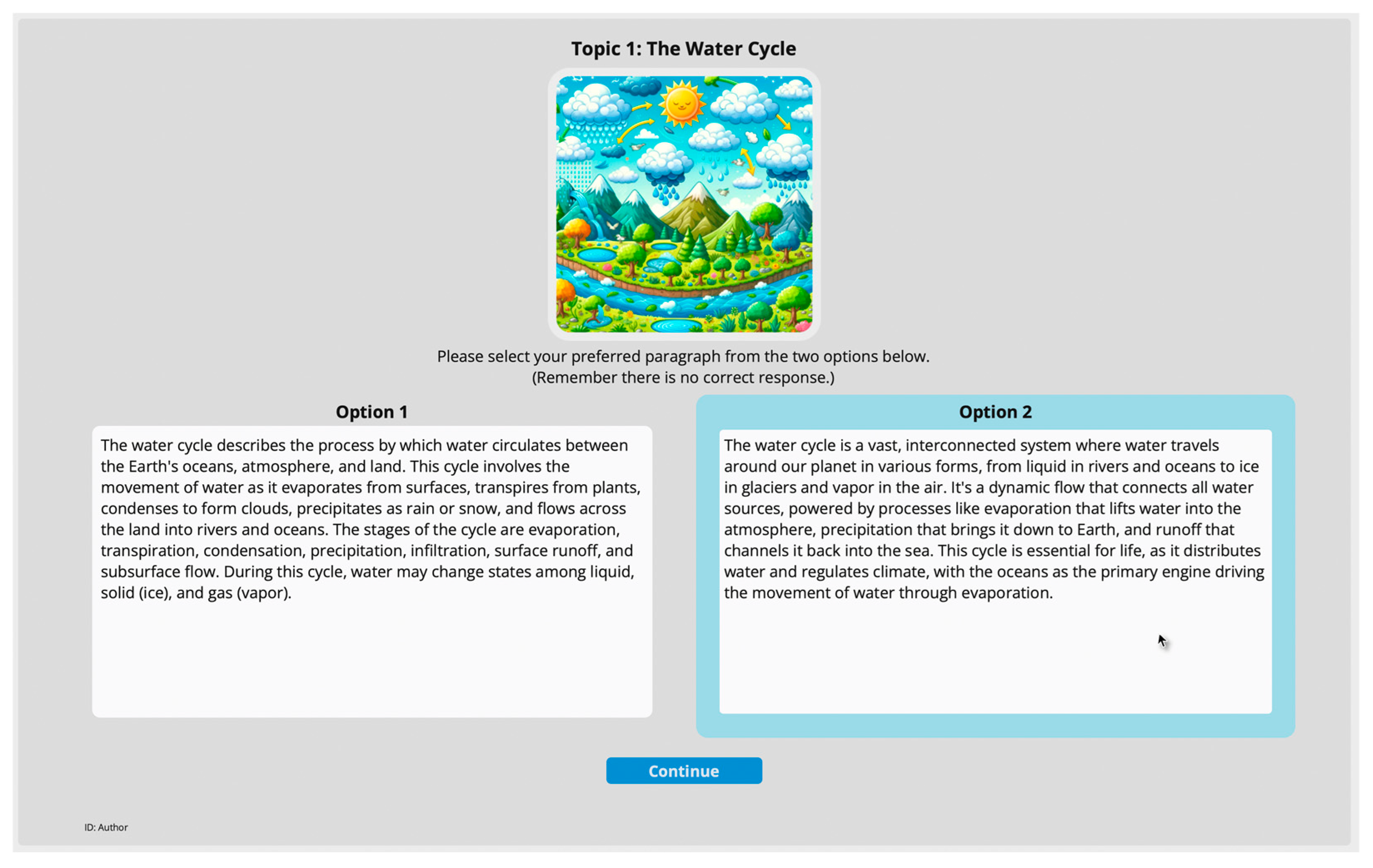

3.1.1. Component 1: Training Session

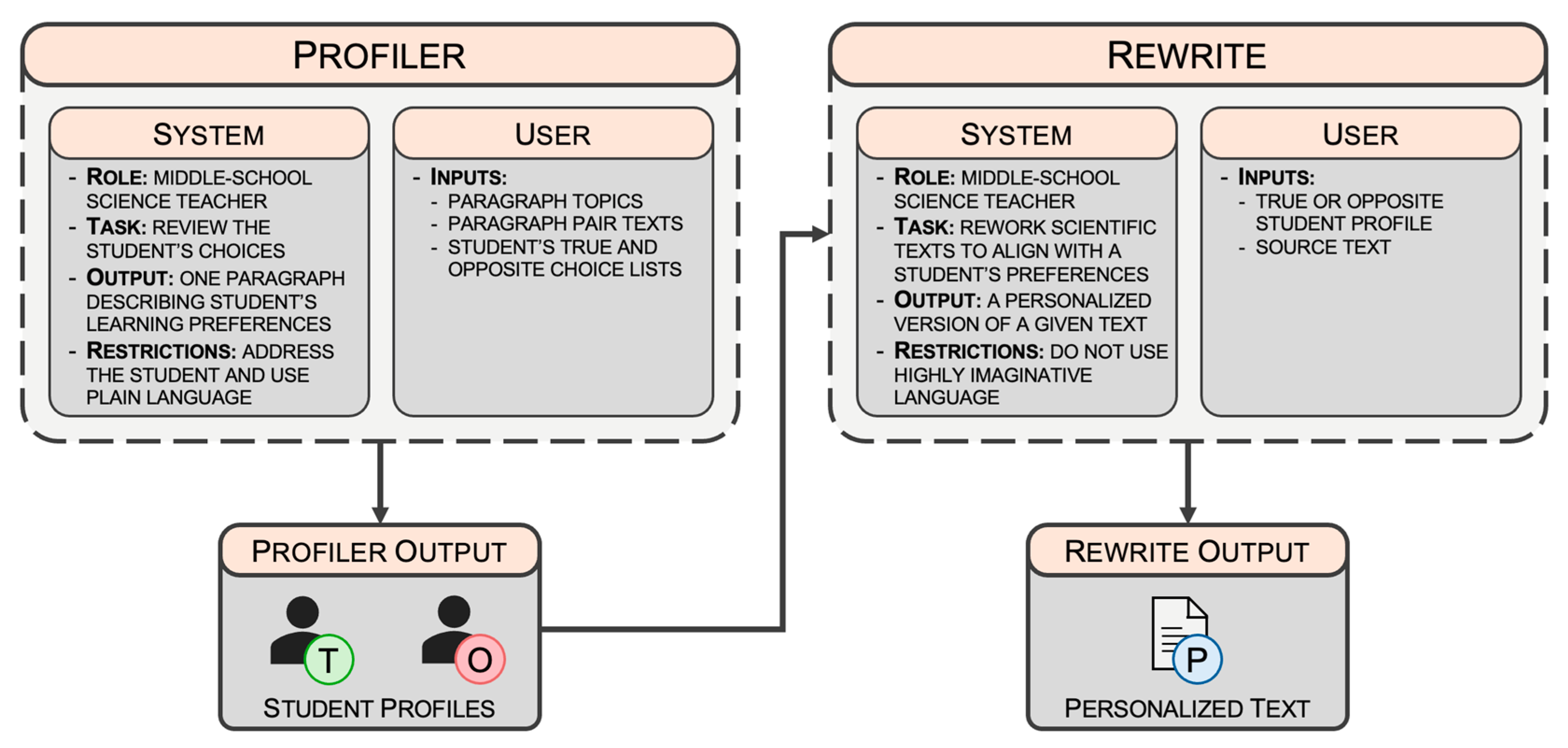

3.1.2. GPT-4 Agents and Component 2: Test Session

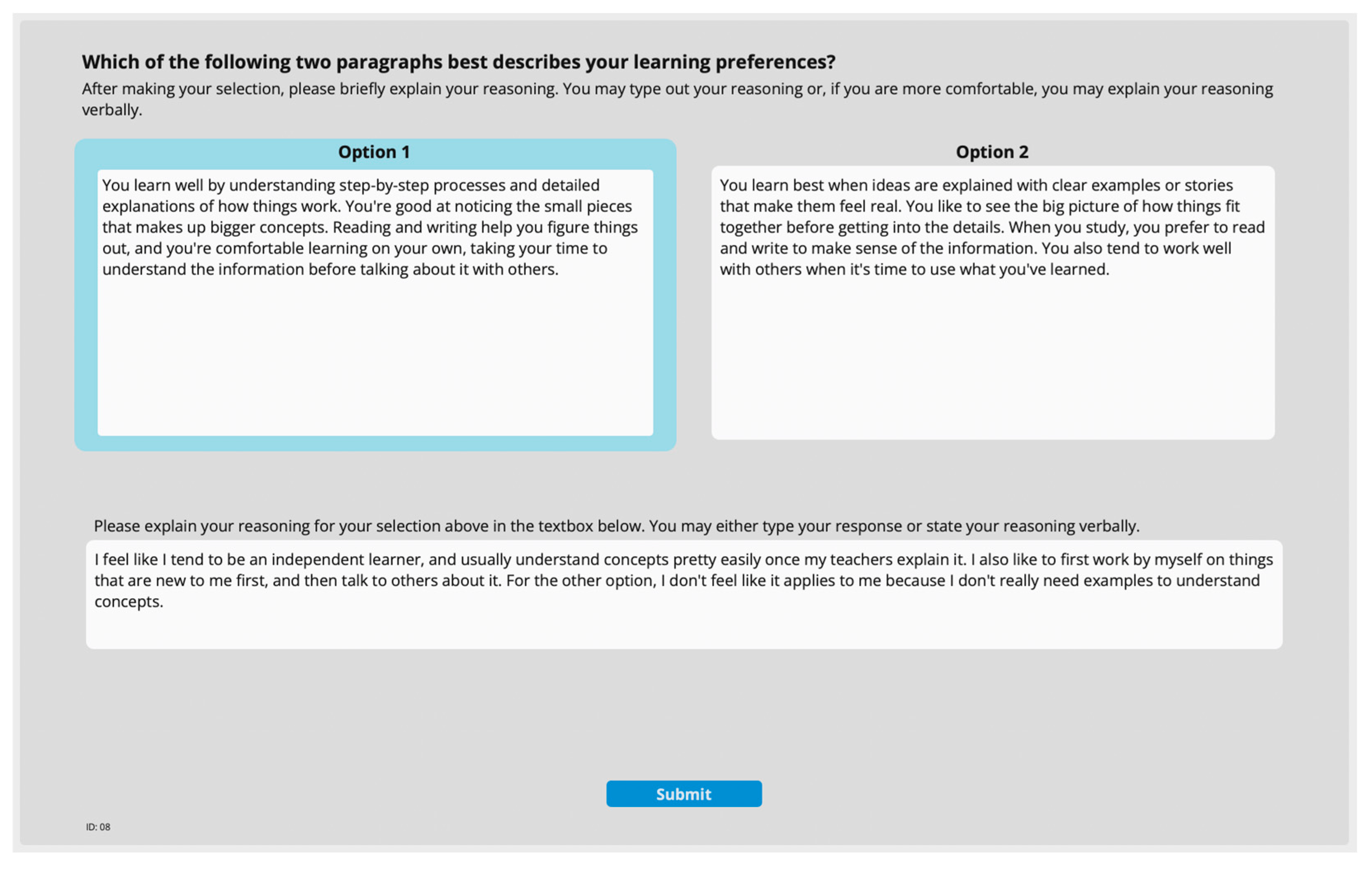

3.1.3. Component 3: Profile Evaluation

3.1.4. Control Group and Summary

3.2. Participants

3.3. Research Procedure

4. Results

5. Discussion

5.1. Limitations and Future Research

5.2. Ethical Considerations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial intelligence |
| API | Application programming interface |
| CI | Confidence interval |
| GPT | Generative pre-trained transformer |
| GUI | Graphical user interface |
| ITS | Intelligent tutoring system |
| LLM | Large language model |
| MAS | Multi-agent system |
| NGSS | Next Generation Science Standards |
| PL | Personalized learning |

References

1. Wooldridge, M. An Introduction to Multiagent Systems, 2nd ed.; John Wiley & Sons Ltd.: West Sussex, UK, 2009.

2. Havrylov, S.; Titov, I. Emergence of Language with Multi-Agent Games: Learning to Communicate with Sequences of Symbols. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Guyon, I., Von Luxburg, U., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.

3. Han, S.; Zhang, Q.; Yao, Y.; Jin, W.; Xu, Z. LLM Multi-Agent Systems: Challenges and Open Problems. arXiv 2024, arXiv:2402.03578.

4. Li, G.; Al Kadeer Hammoud, H.A.; Itani, H.; Khizbullin, D. CAMEL: Communicative Agents for “Mind” Exploration of Large Language Model Society. In Proceedings of the Advances in Neural Information Processing Systems 36, New Orleans, LA, USA, 10–16 December 2023.

5. Al-Dahdooh, R.; Marouf, A.; Ghali, M.J.A.; Mahdi, A.O.; Abu-Nasser, B.S.; Abu-Naser, S.S. Explainable AI (XAI). Int. J. Acad. Eng. Res. 2024, 8, 65–70.

6. Dockterman, D. Insights from 200+ Years of Personalized Learning. NPJ Sci. Learn. 2018, 3, 15.

7. National Academy of Engineering. Grand Challenges for Engineering: Advance Personalized Learning. Available online: http://www.engineeringchallenges.org/challenges/learning.aspx (accessed on 7 June 2024).

8. Shemshack, A.; Spector, J.M. A Systematic Literature Review of Personalized Learning Terms. Smart Learn. Environ. 2020, 7, 33.

9. Walkington, C.; Bernacki, M.L. Appraising Research on Personalized Learning: Definitions, Theoretical Alignment, Advancements, and Future Directions. J. Res. Technol. Educ. 2020, 52, 235–252.

10. Bernacki, M.L.; Greene, M.J.; Lobczowski, N.G. A Systematic Review of Research on Personalized Learning: Personalized by Whom, to What, How, and for What Purpose(s)? Educ. Psychol. Rev. 2021, 33, 1675–1715.

11. Zhang, L.; Basham, J.D.; Yang, S. Understanding the Implementation of Personalized Learning: A Research Synthesis. Educ. Res. Rev. 2020, 31, 100339.

12. U.S. Department of Education. National Education Technology Plan Update; U.S. Department of Education: Washington, DC, USA, 2017.

13. Zhang, L.; Carter, R.A.; Bernacki, M.L.; Greene, J.A. Personalization, Individualization, and Differentiation: What Do They Mean and How Do They Differ for Students with Disabilities? Exceptionality 2024.

14. Adelman, H.S.; Taylor, L.L. Addressing Barriers to Learning: In the Classroom and Schoolwide; University of California, Los Angeles: Los Angeles, CA, USA, 2018.

15. Arnaiz Sánchez, P.; de Haro Rodríguez, R.; Maldonado Martínez, R.M. Barriers to Student Learning and Participation in an Inclusive School as Perceived by Future Education Professionals. J. New Approaches Educ. Res. 2019, 8, 18–24.

16. Tetzlaff, L.; Schmiedek, F.; Brod, G. Developing Personalized Education: A Dynamic Framework. Educ. Psychol. Rev. 2021, 33, 863–882.

17. Kulik, J.A.; Fletcher, J.D. Effectiveness of Intelligent Tutoring Systems: A Meta-Analytic Review. Rev. Educ. Res. 2016, 86, 42–78.

18. Cardoso, J.; Bittencourt, G.; Frigo, L.B.; Pozzebon, E.; Postal, A. Mathtutor: A Multi-Agent Intelligent Tutoring System. In Artificial Intelligence Applications and Innovations; Bramer, M., Devedzic, V., Eds.; Springer: Boston, MA, USA, 2004; Volume 154, pp. 231–242.

19. Giuffra, P.; Cecelia, E.; Silveria, R. A Multi-Agent System Model to Integrate Virtual Learning Environments and Intelligent Tutoring Systems. Int. J. Interact. Multimed. Artif. Intell. 2013, 2, 51–58.

20. Tweedale, J.; Ichalkaranje, N.; Sioutis, C.; Jarvis, B.; Consoli, A.; Phillips-Wren, G. Innovations in Multi-Agent Systems. J. Netw. Comput. Appl. 2007, 30, 1089–1115.

21. Dorri, A.; Kanhere, S.S.; Jurdak, R. Multi-Agent Systems: A Survey. IEEE Access 2018, 6, 28573–28593.

22. Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A. Language Models Are Few-Shot Learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

23. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Guyon, I., Von Luxburg, U., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.

24. Chen, J.; Liu, Z.; Huang, X.; Wu, C.; Liu, Q.; Jiang, G.; Pu, Y.; Lei, Y.; Chen, X.; Wang, X. When Large Language Models Meet Personalization: Perspectives of Challenges and Opportunities. World Wide Web 2024, 27, 42.

25. Henkel, O.; Hills, L.; Boxer, A.; Roberts, B.; Levonian, Z. Can Large Language Models Make the Grade? An Empirical Study Evaluating LLMs Ability To Mark Short Answer Questions in K-12 Education. In Proceedings of the Eleventh ACM Conference on Learning @ Scale, Atlanta, GA, USA, 18–20 July 2024; Association for Computing Machinery: New York, NY, USA, 2024; pp. 300–304.

26. Nunes, D.; Primi, R.; Pires, R.; Lotufo, R.; Nogueira, R. Evaluating GPT-3.5 and GPT-4 Models on Brazilian University Admission Exams. arXiv 2023.

27. Friday, M.; Vaccaro, M.; Zaghi, A. Leveraging Large Language Models for Early Study Optimization in Educational Research. In Proceedings of the 2025 ASEE Annual Conference & Exposition, Montreal, QC, Canada, 22–25 June 2025.

28. Maltese, A.V.; Melki, C.S.; Wiebke, H.L. The Nature of Experiences Responsible for the Generation and Maintenance of Interest in STEM. Sci. Educ. 2014, 98, 937–962.

29. Mendez, S.L.; Yoo, M.S.; Rury, J.L. A Brief History of Public Education in the United States. In The Wiley Handbook of School Choice; Fox, R.A., Buchanan, N.K., Eds.; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2017; pp. 13–27.

30. Bartolomé, A.; Castañeda, L.; Adell, J. Personalisation in Educational Technology: The Absence of Underlying Pedagogies. Int. J. Educ. Technol. High. Educ. 2018, 15, 14.

31. Hooshyar, D.; Pedaste, M.; Yang, Y.; Malva, L.; Hwang, G.-J.; Wang, M.; Lim, H.; Delev, D. From Gaming to Computational Thinking: An Adaptive Educational Computer Game-Based Learning Approach. J. Educ. Comput. Res. 2021, 59, 383–409.

32. Huang, X.; Craig, S.D.; Xie, J.; Graesser, A.; Hu, X. Intelligent Tutoring Systems Work as a Math Gap Reducer in 6th Grade After-School Program. Learn. Individ. Differ. 2016, 47, 258–265.

33. Liu, M.; McKelroy, E.; Corliss, S.B.; Carrigan, J. Investigating the Effect of an Adaptive Learning Intervention on Students’ Learning. Educ. Technol. Res. Dev. 2017, 65, 1605–1625.

34. Price, T.W.; Dong, Y.; Lipovac, D. iSnap: Towards Intelligent Tutoring in Novice Programming Environments. In Proceedings of the 2017 ACM SIGCSE Technical Symposium on Computer Science Education, Seattle, WA, USA, 8–11 March 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 483–488.

35. Sibley, L.; Lachner, A.; Plicht, C.; Fabian, A.; Backfisch, I.; Scheiter, K.; Bohl, T. Feasibility of Adaptive Teaching with Technology: Which Implementation Conditions Matter? Comput. Educ. 2024, 219, 105108.

36. Shute, V.J.; Psotka, J. Intelligent Tutoring Systems: Past, Present, and Future. In Handbook of Research for Educational Communications and Technology; Macmillan: New York, NY, USA, 1994; pp. 570–600.

37. Reiser, B.J.; Anderson, J.R.; Farrell, R.G. Dynamic Student Modelling in an Intelligent Tutor for Lisp Programming. In Proceedings of the IJCAI 1985, Los Angeles, CA, USA, 18–23 August 1985.

38. Ford, L. A New Intelligent Tutoring System. Br. J. Educ. Technol. 2008, 39, 311–318.

39. Heffernan, N.T.; Koedinger, K.R. An Intelligent Tutoring System Incorporating a Model of an Experienced Human Tutor. In International Conference on Intelligent Tutoring Systems; Springer: Berlin/Heidelberg, Germany, 2002; pp. 596–608.

40. Keleş, A.; Ocak, R.; Keleş, A.; Gülcü, A. ZOSMAT: Web-Based Intelligent Tutoring System for Teaching–Learning Process. Expert Syst. Appl. 2009, 36, 1229–1239.

41. VanLehn, K. The Relative Effectiveness of Human Tutoring, Intelligent Tutoring Systems, and Other Tutoring Systems. Educ. Psychol. 2011, 46, 197–221.

42. Xu, Z.; Wijekumar, K.; Ramirez, G.; Hu, X.; Irey, R. The Effectiveness of Intelligent Tutoring Systems on K-12 Students’ Reading Comprehension: A Meta-analysis. Br. J. Educ. Technol. 2019, 50, 3119–3137.

43. Contrino, M.F.; Reyes-Millán, M.; Vázquez-Villegas, P.; Membrillo-Hernández, J. Using an Adaptive Learning Tool to Improve Student Performance and Satisfaction in Online and Face-to-Face Education for a More Personalized Approach. Smart Learn. Environ. 2024, 11, 6.

44. McCarthy, K.S.; Watanabe, M.; Dai, J.; McNamara, D.S. Personalized Learning in iSTART: Past Modifications and Future Design. J. Res. Technol. Educ. 2020, 52, 301–321.

45. Bulger, M. Personalized Learning: The Conversations We’re Not Having. Data Soc. 2016, 22, 1–29.

46. Chen, E.; Lee, J.-E.; Lin, J.; Koedinger, K. GPTutor: Great Personalized Tutor with Large Language Models for Personalized Learning Content Generation. In Proceedings of the Eleventh ACM Conference on Learning @ Scale, Atlanta, GA, USA, 18–20 July 2024; Association for Computing Machinery: New York, NY, USA, 2024; pp. 539–541.

47. Kärger, P.; Olmedilla, D.; Abel, F.; Herder, E.; Siberski, W. What Do You Prefer? Using Preferences to Enhance Learning Technology. IEEE Trans. Learn. Technol. 2008, 1, 20–33.

48. Dhingra, S.; Singh, M.; Vaisakh, S.B.; Malviya, N.; Gill, S.S. Mind Meets Machine: Unravelling GPT-4’s Cognitive Psychology. BenchCouncil Trans. Benchmarks Stand. Eval. 2023, 3, 100139.

49. Lee, D.H.; Chung, C.K. Enhancing Neural Decoding with Large Language Models: A GPT-Based Approach. In Proceedings of the 12th International Winter Conference on Brain-Computer Interface (BCI), Gangwon, Republic of Korea, 26–28 February 2024; IEEE: Piscataway, NJ, USA, 2024.

50. Waisberg, E.; Ong, J.; Masalkhi, M.; Kamran, S.A.; Zaman, N.; Sarker, P.; Lee, A.G.; Tavakkoli, A. GPT-4: A New Era of Artificial Intelligence in Medicine. Ir. J. Med. Sci. 2023, 192, 3197–3200.

51. OpenAI. GPT-4. Available online: https://openai.com/index/gpt-4-research/ (accessed on 27 June 2024).

52. Bansal, G.; Chamola, V.; Hussain, A.; Guizani, M.; Niyato, D. Transforming Conversations with AI: A Comprehensive Study of ChatGPT. Cognit. Comput. 2024, 16, 2487–2510.

53. Katz, D.M.; Bommarito, M.J.; Gao, S.; Arredondo, P. GPT-4 Passes the Bar Exam. Philos. Trans. R. Soc. A 2024, 382, 20230254.

54. Roy, S.; Khatua, A.; Ghoochani, F.; Hadler, U.; Nejdl, W.; Ganguly, N. Beyond Accuracy: Investigating Error Types in GPT-4 Responses to USMLE Questions. In Proceedings of the SIGIR ’24, 47th International ACM SIGIR Conference on Research and Development in Information Retrieval, Washington, DC, USA, 14–18 July 2024; Association for Computing Machinery: New York, NY, USA, 2024; pp. 1073–1082.

55. Kortemeyer, G. Toward AI Grading of Student Problem Solutions in Introductory Physics: A Feasibility Study. Phys. Rev. Phys. Educ. Res. 2023, 19, 020163.

56. Doughty, J.; Wan, Z.; Bompelli, A.; Qayum, J.; Wang, T.; Zhang, J.; Zheng, Y.; Doyle, A.; Sridhar, P.; Agarwal, A.; et al. A Comparative Study of AI-Generated (GPT-4) and Human-Crafted MCQs in Programming Education. In Proceedings of the 26th Australasian Computing Education Conference, Sydney, NSW, Australia, 29 January–2 February 2024; pp. 114–123.

57. Guo, Q.; Zhen, J.; Wu, F.; He, Y.; Qiao, C. Can Students Make STEM Progress With the Large Language Models (LLMs)? An Empirical Study of LLMs Integration Within Middle School Science and Engineering Practice. J. Educ. Comput. Res. 2025, 63, 372–405.

58. Mohammed, I.A.; Bello, A.; Ayuba, B. Effect of Large Language Models Artificial Intelligence ChatGPT Chatbot on Achievement of Computer Education Students. Educ. Inf. Technol. 2025, 30, 11863–11888.

59. Mizrahi, G. Understanding Prompting and Prompt Techniques. In Unlocking the Secrets of Prompt Engineering: Master the Art of Creative Language Generation to Accelerate Your Journey from Novice to Pro; Packt Publishing Limited: Birmingham, UK, 2024; ISBN 978-1-83508-383-3.

60. Heston, T.F.; Khun, C. Prompt Engineering in Medical Education. Int. Med. Educ. 2023, 2, 198–205.

61. Lee, A.V.Y.; Teo, C.L.; Tan, S.C. Prompt Engineering for Knowledge Creation: Using Chain-of-Thought to Support Students’ Improvable Ideas. AI 2024, 5, 1446–1461.

62. Leinonen, J.; Denny, P.; MacNeil, S.; Sarsa, S.; Bernstein, S.; Kim, J.; Tran, A.; Hellas, A. Comparing Code Explanations Created by Students and Large Language Models. In Proceedings of the 2023 Conference on Innovation and Technology in Computer Science Education V. 1 (ITiCSE 2023), Turku, Finland, 10–12 July 2023; pp. 124–130.

63. Zhang, P.; Tur, G. A Systematic Review of ChatGPT Use in K-12 Education. Eur. J. Educ. 2024, 59, e12599.

64. Chu, Z.; Wang, S.; Xie, J.; Zhu, T.; Yan, Y.; Ye, J.; Zhong, A.; Hu, X.; Liang, J.; Yu, P.S.; et al. LLM Agents for Education: Advances and Applications. arXiv 2025, arXiv:2503.11733.

65. Jauhiainen, J.S.; Guerra, A.G. Generative AI and ChatGPT in School Children’s Education: Evidence from a School Lesson. Sustainability 2023, 15, 14025.

66. Wang, T.; Zhan, Y.; Lian, J.; Hu, Z.; Yuan, N.J.; Zhang, Q.; Xie, X.; Xiong, H. LLM-Powered Multi-Agent Framework for Goal-Oriented Learning in Intelligent Tutoring System. In Proceedings of the Companion ACM on Web Conference 2025, Sydney, NSW, Australia, 28 April–2 May 2025; ACM: New York, NY, USA, 2025; pp. 510–519.

67. OpenAI. Prompt Engineering. Available online: https://platform.openai.com/docs/guides/prompt-engineering (accessed on 31 May 2024).

68. Lee, U.; Jung, H.; Jeon, Y.; Sohn, Y.; Hwang, W.; Moon, J.; Kim, H. Few-Shot Is Enough: Exploring ChatGPT Prompt Engineering Method for Automatic Question Generation in English Education. Educ. Inf. Technol. 2024, 29, 11483–11515.

69. Felder, R.M.; Silverman, L.K. Learning and Teaching Styles in Engineering Education. Eng. Educ. 1988, 78, 674–681.

70. Kirschner, P.A. Stop Propagating the Learning Styles Myth. Comput. Educ. 2017, 106, 166–171.

71. NGSS Lead States. Next Generation Science Standards: For States, By States; National Academies Press: Washington, DC, USA, 2013.

72. Vaccaro, M.; Friday, M.; Zaghi, A. LLMs and Personalized Learning. Available online: https://github.com/m-vaccaro/LLMs-and-Personalized-Learning (accessed on 18 March 2025).

73. Wikipedia Contributors. Electricity. Available online: https://en.wikipedia.org/w/index.php?title=Electricity&oldid=1194309762 (accessed on 11 January 2024).

74. Schimansky, T. CustomTkinter. Available online: https://customtkinter.tomschimansky.com/ (accessed on 5 June 2024).

75. Choi, D.; Lee, S.; Kim, S.-I.; Lee, K.; Yoo, H.J.; Lee, S.; Hong, H. Unlock Life with a Chat(GPT): Integrating Conversational AI with Large Language Models into Everyday Lives of Autistic Individuals. In Proceedings of the CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 11–16 May 2024; Floyd Mueller, F., Kyburz, P., Williamson, J.R., Sas, C., Wilson, M.L., Toups Dugas, P., Shklovski, I., Eds.; Association for Computing Machinery: New York, NY, USA, 2024; p. 72.

76. Rogers, M.P.; Hillberg, H.M.; Groves, C.L. Attitudes Towards the Use (and Misuse) of ChatGPT: A Preliminary Study. In Proceedings of the 55th ACM Technical Symposium on Computer Science Education V. 1, Portland, OR, USA, 20–23 March 2024; Association for Computing Machinery: New York, NY, USA, 2024; pp. 1147–1153.

77. Meyer, J.; Jansen, T.; Schiller, R.; Liebenow, L.W.; Steinbach, M.; Horbach, A.; Fleckenstein, J. Using LLMs to Bring Evidence-Based Feedback into the Classroom: AI-Generated Feedback Increases Secondary Students’ Text Revision, Motivation, and Positive Emotions. Comput. Educ. Artif. Intell. 2024, 6, 100199.

78. Chin, C. Student-Generated Questions: What They Tell Us about Students’ Thinking. In Proceedings of the Annual Meeting of the American Educational Research Association, Seattle, WA, USA, 10–14 April 2001.

79. Elyoseph, Z.; Hadar-Shoval, D.; Asraf, K.; Lvovsky, M. ChatGPT Outperforms Humans in Emotional Awareness Evaluations. Front. Psychol. 2023, 14, 1199058.

80. Addy, T.; Kang, T.; Laquintano, T.; Dietrich, V. Who Benefits and Who Is Excluded?: Transformative Learning, Equity, and Generative Artificial Intelligence. J. Transform. Learn. 2023, 10, 92–103.

81. Chrysochoou, M.; Zaghi, A.E.; Syharat, C.M. Reframing Neurodiversity in Engineering Education. Front. Educ. 2022, 7, 995865.

82. Maya, J.; Luesia, J.F.; Pérez-Padilla, J. The Relationship between Learning Styles and Academic Performance: Consistency among Multiple Assessment Methods in Psychology and Education Students. Sustainability 2021, 13, 3341.

83. Kasneci, E.; Seßler, K.; Küchemann, S.; Bannert, M.; Dementieva, D.; Fischer, F.; Gasser, U.; Groh, G.; Günnemann, S.; Hüllermeier, E. ChatGPT for Good? On Opportunities and Challenges of Large Language Models for Education. Learn. Individ. Differ. 2023, 103, 102274.

84. Kop, R.; Fournier, H.; Durand, G. A Critical Perspective on Learning Analytics and Educational Data Mining. In Handbook of Learning Analytics; Lang, C., Siemens, G., Wise, A., Gašević, D., Eds.; Society for Learning Analytics Research (SoLAR): Beaumont, AB, Canada, 2017; Volume 319, pp. 319–326.

85. Yağcı, M. Educational Data Mining: Prediction of Students’ Academic Performance Using Machine Learning Algorithms. Smart Learn. Environ. 2022, 9, 11.

86. Wu, X.; Duan, R.; Ni, J. Unveiling Security, Privacy, and Ethical Concerns of ChatGPT. J. Inf. Intell. 2024, 2, 102–115.

87. Hu, T.; Kyrychenko, Y.; Rathje, S.; Collier, N.; van der Linden, S.; Roozenbeek, J. Generative Language Models Exhibit Social Identity Biases. Nat. Comput. Sci. 2024, 5, 65–75.

88. Navigli, R.; Conia, S.; Ross, B. Biases in Large Language Models: Origins, Inventory, and Discussion. J. Data Inf. Qual. 2023, 15, 10.

89. UNESCO. Recommendation on the Ethics of Artificial Intelligence; UNESCO: Paris, France, 2022.

90. Potgieter, I. Privacy Concerns in Educational Data Mining and Learning Analytics. Int. Rev. Inf. Ethics 2020, 28.

91. OpenAI. Creating Video from Text. Available online: https://openai.com/index/sora/ (accessed on 18 June 2024).

| Type | Text |
|---|---|
| True Profile | You learn best when information is given to you in a clear and organized way. You prefer details and facts, and like steps and sequences in what you’re learning. You also appreciate learning with pictures and diagrams. When it comes to working with new information, you often like to work with others and talk about the ideas. |
| Opposite Profile | You like to see the big picture and understand how ideas are connected in a broad sense. You enjoy when learning includes examples and stories that make a topic real and lively. When reading and writing, you’re good at taking in lots of information and like different viewpoints. It’s also fun for you to work in groups where you can share ideas and talk about what you’re learning. |
| Original Text (source: [73]) | The movement of electric charge is known as an electric current, the intensity of which is usually measured in amperes. Electric current can flow through some things, electrical conductors, but will not flow through an electrical insulator. By historical convention, a positive current is defined as having the same direction of flow as any positive charge it contains, or to flow from the most positive part of a circuit to the most negative part. Current defined in this manner is called conventional current. |
| Personalized Text | Electric current is like a stream of tiny charged particles moving through a wire, and we measure its strength in units called amperes. It can only travel through materials that let it pass, called conductors. It can’t move through insulators, which block the flow. We say that electric current flows from the positive side to the negative side of a circuit, even though it’s really the electrons moving the other way. This kind of current is called conventional current because that’s the way scientists have agreed to talk about it. |

| Group | Grade 7 | Grade 8 | Age 11 | Age 12 | Age 13 | Gender ¹: M | Gender ¹: F | Gender ¹: NB | Ethnicity ¹: White | Ethnicity ¹: Asian | Ethnicity ¹: Other ² |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Experimental | 5 | 7 | 0 | 4 | 8 | 7 | 4 | 1 | 8 | 3 | 1 |
| Control | 4 | 7 | 1 | 3 | 7 | 7 | 4 | 0 | 6 | 3 | 2 |
| Total | 9 | 14 | 1 | 7 | 15 | 14 | 8 | 1 | 14 | 6 | 3 |
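
The Total row and the group sizes (n = 12 experimental, n = 11 control) follow from column sums over the demographics table. As an illustrative check, not the authors' analysis code, the Python sketch below transcribes the counts into a hypothetical nested dictionary (`demographics`), confirms that every column family (grade, age, gender, ethnicity) sums to the same group size, and re-derives the Total row. The dictionary layout and the function names are our own assumptions.

```python
# Counts transcribed from the demographics table, grouped by column family.
# The nested layout and all names here are illustrative, not from the study.
demographics = {
    "Experimental": {
        "grade": {"7": 5, "8": 7},
        "age": {"11": 0, "12": 4, "13": 8},
        "gender": {"M": 7, "F": 4, "NB": 1},
        "ethnicity": {"White": 8, "Asian": 3, "Other": 1},
    },
    "Control": {
        "grade": {"7": 4, "8": 7},
        "age": {"11": 1, "12": 3, "13": 7},
        "gender": {"M": 7, "F": 4, "NB": 0},
        "ethnicity": {"White": 6, "Asian": 3, "Other": 2},
    },
}


def group_sizes(table):
    """Return {group: n}; raise if the column families disagree on n."""
    sizes = {}
    for group, families in table.items():
        totals = {name: sum(counts.values()) for name, counts in families.items()}
        if len(set(totals.values())) != 1:
            raise ValueError(f"{group}: inconsistent family totals {totals}")
        sizes[group] = next(iter(totals.values()))
    return sizes


def total_row(table):
    """Sum each cell across groups, reproducing the table's Total row."""
    total = {}
    for families in table.values():
        for name, counts in families.items():
            family_total = total.setdefault(name, {})
            for key, value in counts.items():
                family_total[key] = family_total.get(key, 0) + value
    return total


if __name__ == "__main__":
    print(group_sizes(demographics))
    print(total_row(demographics))
```

Because each demographic dimension is a complete partition of the same participants, a mismatch between family totals would flag a transcription error rather than a real difference between groups.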

| Study Group | Personalized Paragraphs Selected: 0 | Personalized Paragraphs Selected: 1 | Personalized Paragraphs Selected: 2 | Mean | Std. Dev. |
|---|---|---|---|---|---|
| Experimental | 1 | 7 | 4 | 1.25 | 0.622 |
| Control | 5 | 4 | 2 | 0.73 | 0.786 |
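
The Mean and Std. Dev. columns can be recomputed from the three frequency columns, treating each participant's score as the number of personalized paragraphs they selected (0, 1, or 2). In the sketch below, which is our reconstruction rather than the authors' code (`selections` and `grouped_mean_sd` are hypothetical names), the reported values are reproduced when the standard deviation uses the sample (n − 1) denominator; this suggests, but does not confirm, that this is the estimator the study used.

```python
import math

# Participant counts keyed by how many personalized paragraphs (0, 1, 2) each
# participant selected, transcribed from the table. Names are illustrative.
selections = {
    "Experimental": {0: 1, 1: 7, 2: 4},
    "Control": {0: 5, 1: 4, 2: 2},
}


def grouped_mean_sd(freq):
    """Mean and sample (n - 1) standard deviation of a frequency table."""
    n = sum(freq.values())
    mean = sum(value * count for value, count in freq.items()) / n
    # Squared deviations, weighted by how many participants share each value.
    ss = sum(count * (value - mean) ** 2 for value, count in freq.items())
    return mean, math.sqrt(ss / (n - 1))


for group, freq in selections.items():
    mean, sd = grouped_mean_sd(freq)
    print(f"{group}: mean = {mean:.2f}, SD = {sd:.3f}")
```

Running it prints a mean of 1.25 and an SD of 0.622 for the experimental group, and 0.73 and 0.786 for the control group, matching the table. Dividing by n instead of n − 1 would give noticeably smaller SDs for groups this small.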

| Group | Selected Profile ¹: True Profile | Selected Profile ¹: Opposite Profile |
|---|---|---|
| Experimental (n = 12) | 9 (0.75) | 3 (0.25) |
| Control (n = 11) | 5 (0.455) | 6 (0.545) |
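
Each parenthesized value in the profile-selection table is the count divided by its group size (for example, 9/12 = 0.75 and 5/11 ≈ 0.455). A minimal sketch, assuming three-decimal rounding for display (inferred from the table, not stated in it) and using hypothetical names:

```python
# Counts of participants who picked their true or opposite profile,
# transcribed from the table. Names are illustrative, not from the study.
profile_choices = {
    "Experimental": {"True": 9, "Opposite": 3},
    "Control": {"True": 5, "Opposite": 6},
}


def proportions(counts, digits=3):
    """Convert raw counts to proportions of the group total, rounded for display."""
    n = sum(counts.values())
    return {label: round(count / n, digits) for label, count in counts.items()}


for group, counts in profile_choices.items():
    print(group, proportions(counts))
```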

| Type | Text |
|---|---|
| True Profile | You seem to like learning in a way that connects ideas together, showing how things work in the big picture. You enjoy explanations that make you feel involved in a story, bringing science to life in a way that is less about lists and more about the flow of ideas. You also appreciate clear, straightforward facts that show how things work step by step at times. |
| Opposite Profile | You seem to learn best when facts and steps are laid out clearly for you. You like to see information presented in a sequence or process that you can follow from beginning to end. You often prefer descriptions that are detailed, explaining the how and why of things in a straightforward way. However, sometimes you also appreciate a clear demonstration of concepts, where you can see how things change or behave under different conditions. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Vaccaro, M., Jr.; Friday, M.; Zaghi, A. Multi-Agentic LLMs for Personalizing STEM Texts. Appl. Sci. 2025, 15, 7579. https://doi.org/10.3390/app15137579

Vaccaro M Jr., Friday M, Zaghi A. Multi-Agentic LLMs for Personalizing STEM Texts. Applied Sciences. 2025; 15(13):7579. https://doi.org/10.3390/app15137579

Chicago/Turabian Style: Vaccaro, Michael, Jr., Mikayla Friday, and Arash Zaghi. 2025. "Multi-Agentic LLMs for Personalizing STEM Texts" Applied Sciences 15, no. 13: 7579. https://doi.org/10.3390/app15137579

APA Style: Vaccaro, M., Jr., Friday, M., & Zaghi, A. (2025). Multi-Agentic LLMs for Personalizing STEM Texts. Applied Sciences, 15(13), 7579. https://doi.org/10.3390/app15137579