Critical Factors in Young People’s Use and Non-Use of AI Technology for Emotion Regulation: A Pilot Study

Abstract

1. Introduction

2. Materials and Methods

2.1. Research Methods

2.2. Interview Questions

2.3. Procedure

2.4. Participants

2.5. Analysis

3. Results

3.1. Overview of the Data Coding and Analysis

3.2. Attitudes Toward AI Technology for Emotion Regulation

3.3. Reasons for Using AI Technology for Emotion Regulation

3.4. Reasons for Not Using AI Technology for Emotion Regulation

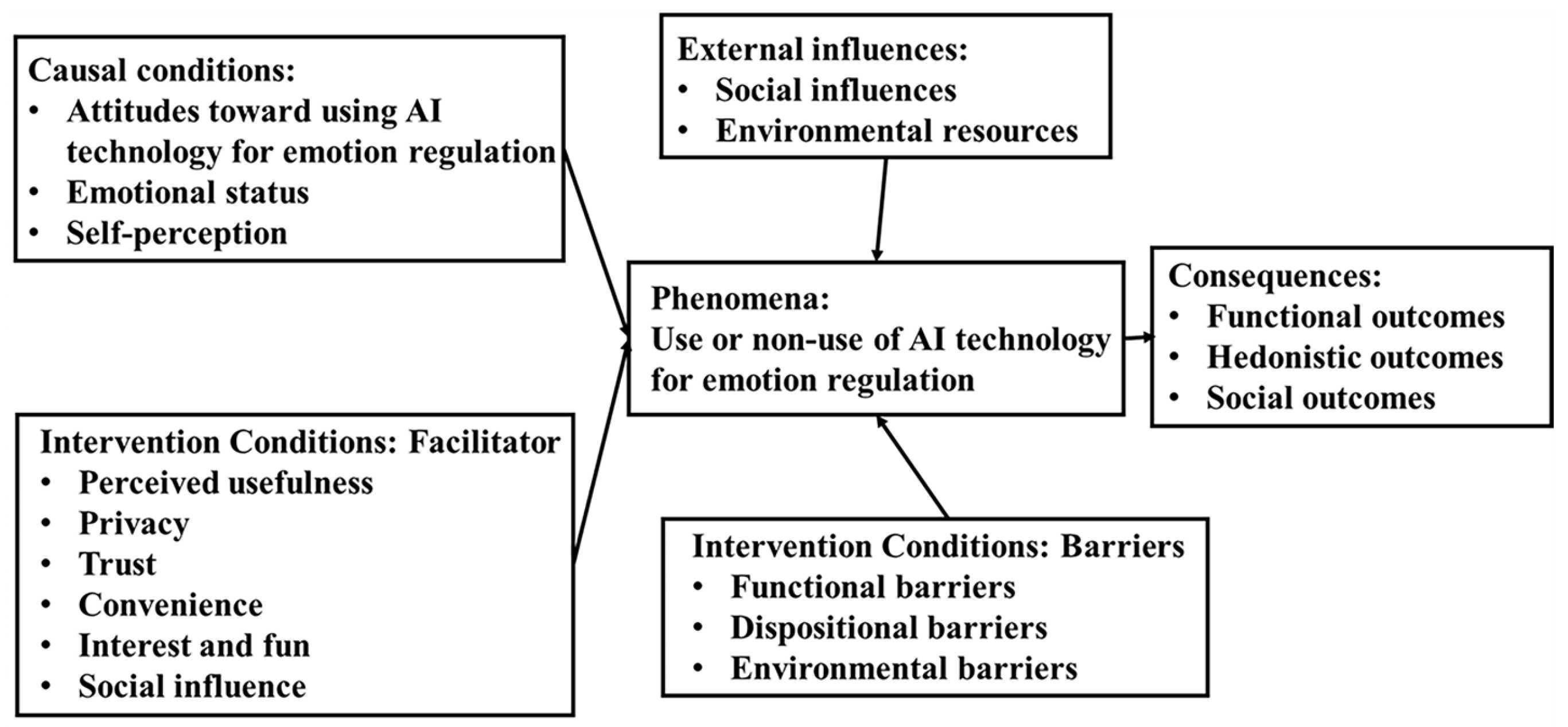

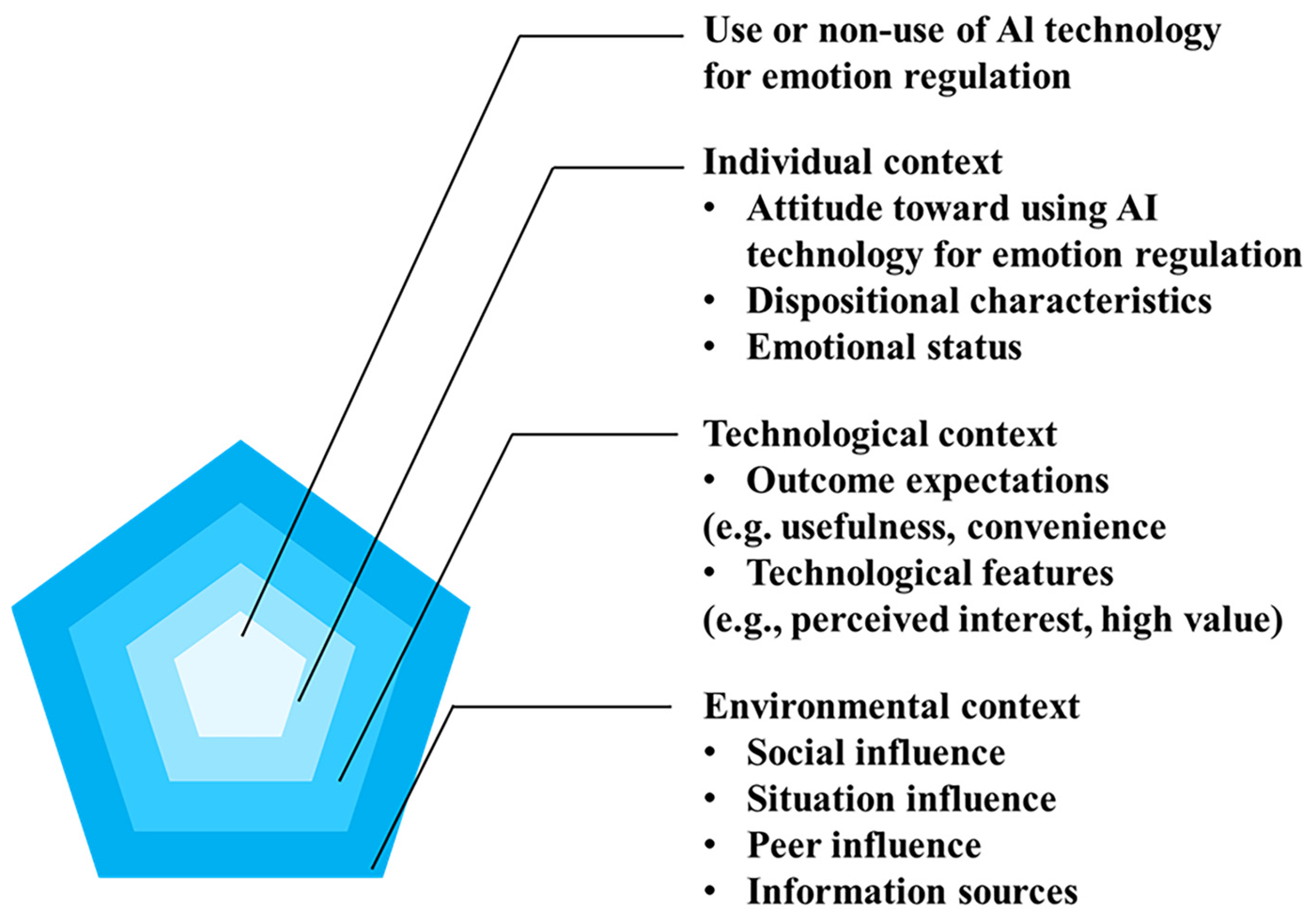

3.5. Grounded Theory

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | artificial intelligence |

| Categories | Subcategories | Definition | Codes (percentage) |
|---|---|---|---|
| Attitudes toward using AI technology for emotion regulation (16.25%) | Positive attitudes (47.24%) | A person’s favourable evaluation of using AI technology for emotion regulation | Regulating emotional states (44.15%); Functional values (38.96%); Raising emotional awareness (12.99%); Addressing the source problem (3.90%) |
| | Neutral attitudes (12.88%) | A person’s neither favourable nor unfavourable evaluation of using AI technology for emotion regulation | Usage environment (52.38%); No disadvantages (19.04%); Low positive impact (14.29%); Single form (14.29%) |
| | Negative attitudes (39.88%) | A person’s unfavourable evaluation of using AI technology for emotion regulation | Negative effects (55.39%); Unresolved issues (15.38%); Inconvenience (12.31%); Poor quality-price ratio (7.69%); Complexity (4.62%); Addiction (3.07%); Security and privacy risks (1.54%) |
| Reasons for the use of AI technology for emotion regulation (56.07%) | Functional outcomes (76.48%) | The extent to which using AI technology for emotion regulation is perceived to be instrumental in achieving valued outcomes | Usefulness (23.57%); Privacy (21.66%); Trust (15.29%); Convenience (12.74%); Intelligent (12.74%); High value (5.1%); Accessibility (4.44%); Functionality (2.55%); Interactive feedback (1.91%) |
| | Hedonic outcomes (22.08%) | The individual’s level of curiosity during the interaction and the perception that the interaction is intrinsically enjoyable | Interest and fun (93.75%); Beautiful interface (4.17%); Emotional experience (2.08%) |
| | Social influence (1.44%) | The extent to which social values and members of a social network influence the user behaviour | Peer influence (66.67%); Surrounding environment influence (33.33%) |
| Reasons for the non-use of AI technology for emotion regulation (27.68%) | Functional barriers (55.94%) | The extent to which the non-use of AI technology for emotion regulation is perceived to be instrumental in achieving valued outcomes | Low value (51.76%); Uselessness (27.50%); Lack of trust (16.25%); Inadequate functionality (1.99%); Emotions hard to understand (1.25%); Low accuracy (1.25%) |
| | Dispositional barriers (41.96%) | Personal factors associated with individuals’ attitudes and self-perceptions about oneself as a user | Inconvenience (36.67%); Difficulty with learning (33.33%); No interest (30%) |
| | Environmental barriers (2.1%) | Factors that are beyond one’s control and are related to the individual’s life situation or environment at a particular time | No relevant products (56.69%); No professional platform or channel (30.75%); Lack of information (12.56%) |
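The percentages in the table appear to be nested: within each category the subcategory shares sum to 100%, and within each subcategory the code shares do as well. Assuming each figure is a share of its parent level (an inference from those sums, not something stated in the table), the overall share of a subcategory or code can be approximated by multiplying down the hierarchy. The helper name `overall_share` below is hypothetical and used only for illustration.

```python
def overall_share(*nested_percentages: float) -> float:
    """Combine nested within-group percentages into one overall percentage.

    Assumption: each percentage is a share of its parent level, so the
    overall share is the product of the fractions along the path.
    """
    share = 1.0
    for pct in nested_percentages:
        share *= pct / 100.0
    return share * 100.0


# Path taken from the table:
# Attitudes (16.25%) -> Positive attitudes (47.24%) -> Regulating emotional states (44.15%)
positive_attitudes = overall_share(16.25, 47.24)
regulating_states = overall_share(16.25, 47.24, 44.15)

print(f"Positive attitudes, share of all coded data: {positive_attitudes:.2f}%")
print(f"Regulating emotional states, share of all coded data: {regulating_states:.2f}%")
```

Read this way, a code with a high within-subcategory percentage can still be a small part of the whole data set when its parent subcategory or category is small.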

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, J.; Tang, H.; Man, S.-S.; Chen, Y.; Zhou, S.; Chan, H.-S. Critical Factors in Young People’s Use and Non-Use of AI Technology for Emotion Regulation: A Pilot Study. Appl. Sci. 2025, 15, 7476. https://doi.org/10.3390/app15137476
