A LiDAR-Driven Approach for Crop Row Detection and Navigation Line Extraction in Soybean–Maize Intercropping Systems

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Data Collection and Processing

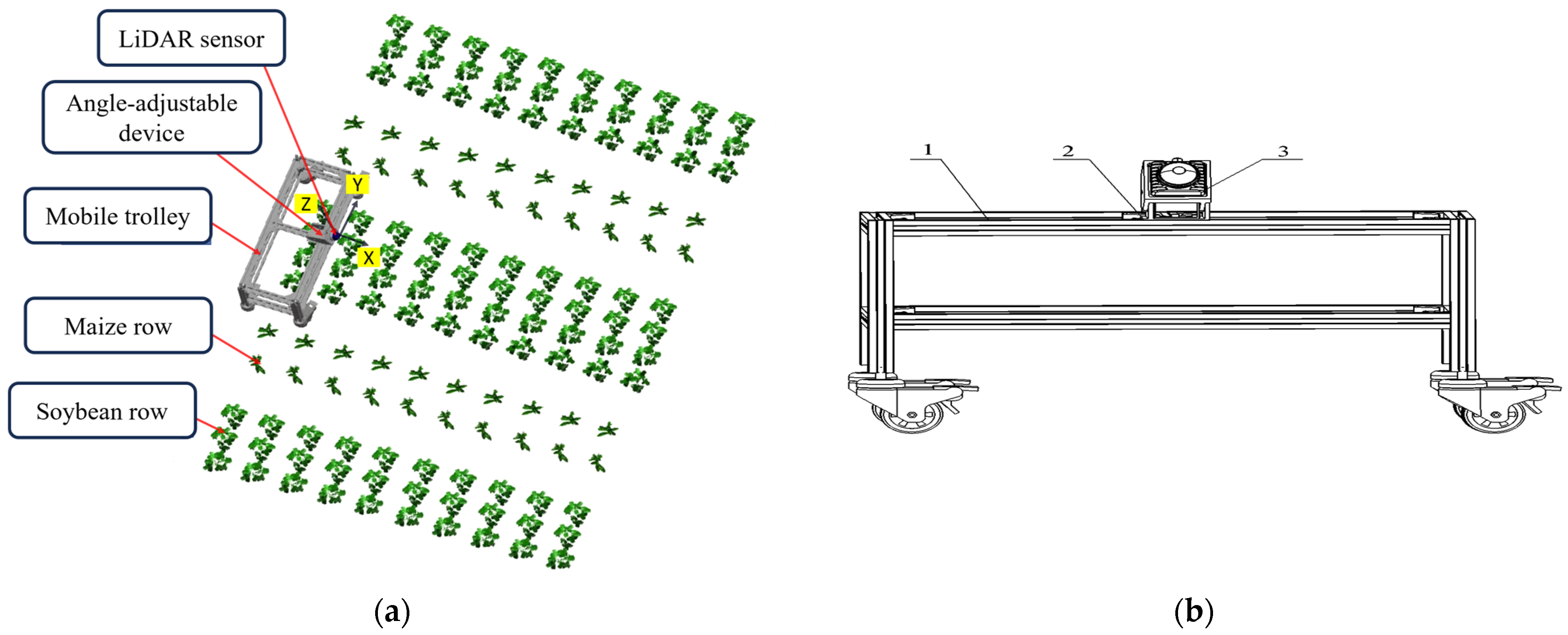

2.1.1. Field Point Cloud Data Collection

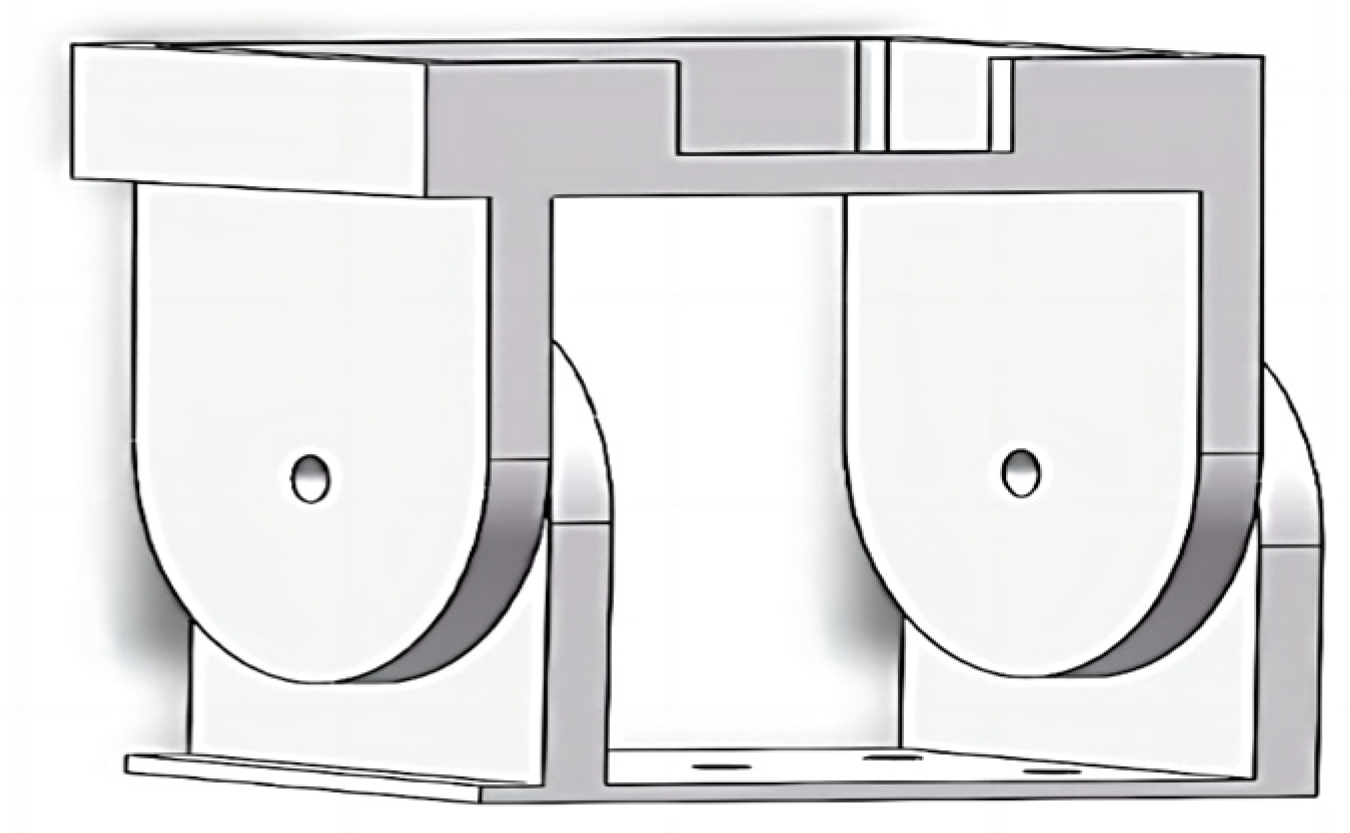

2.1.2. Key Component Design

2.2. Crop Row Recognition and Navigation Line Extraction

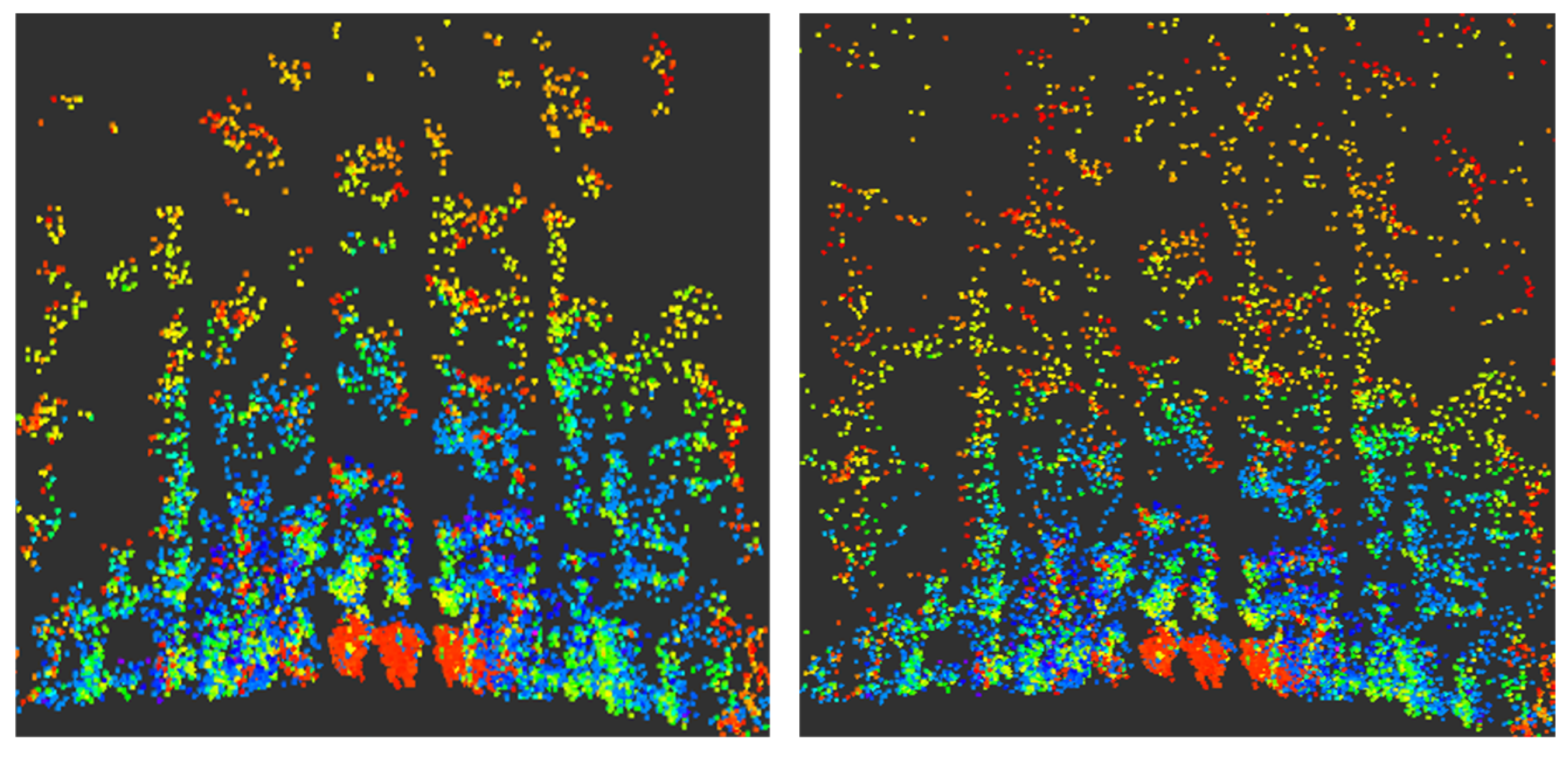

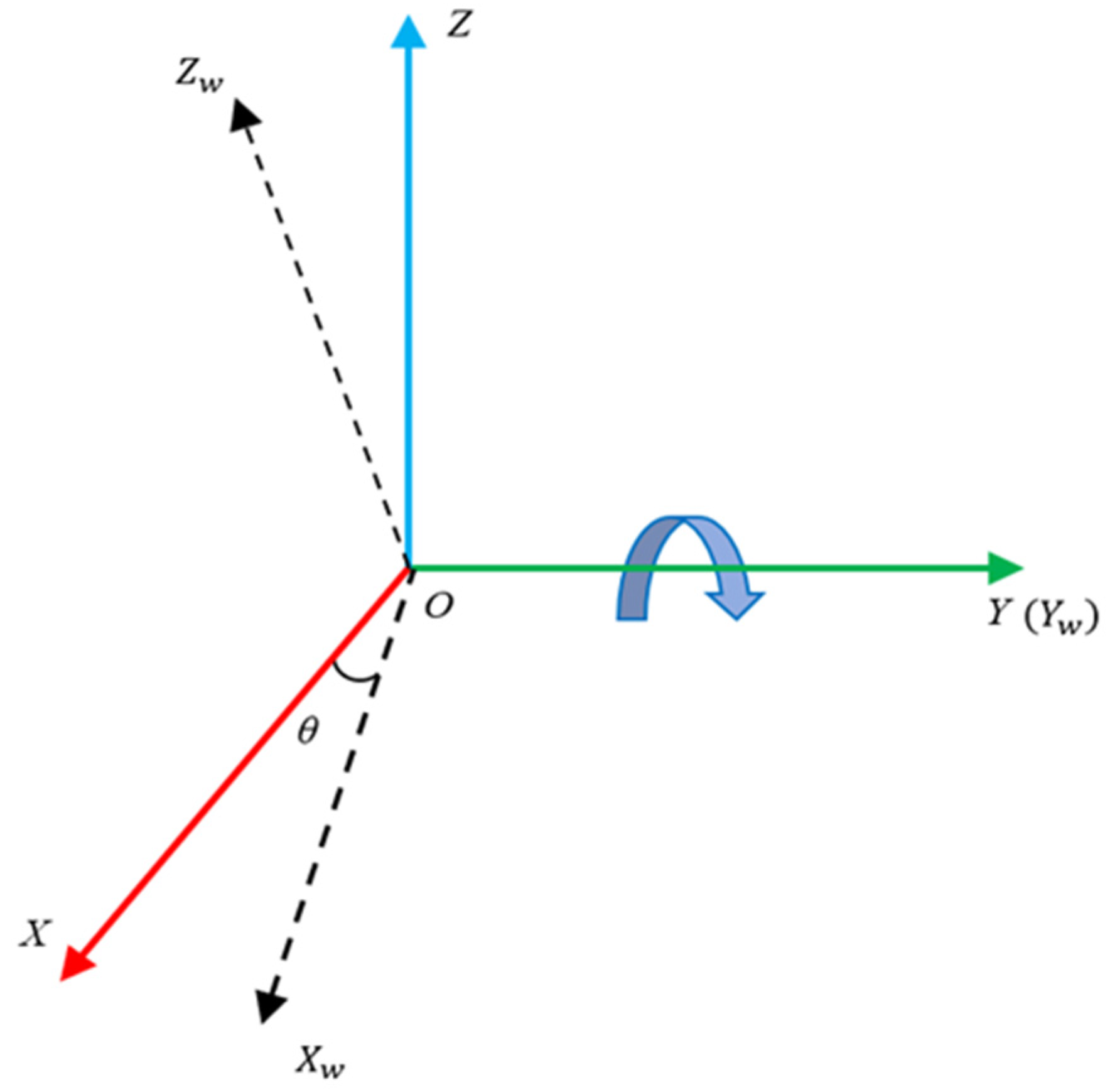

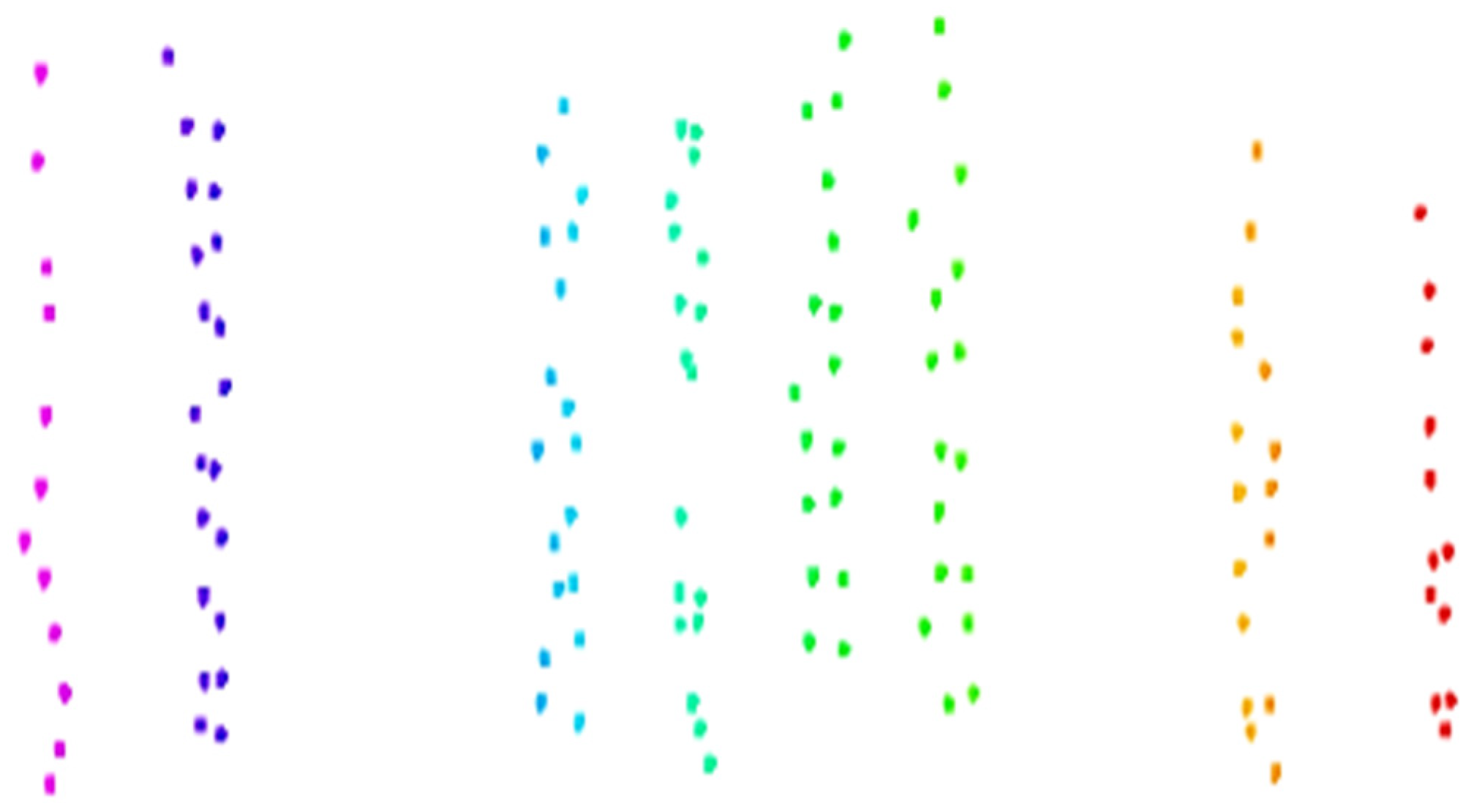

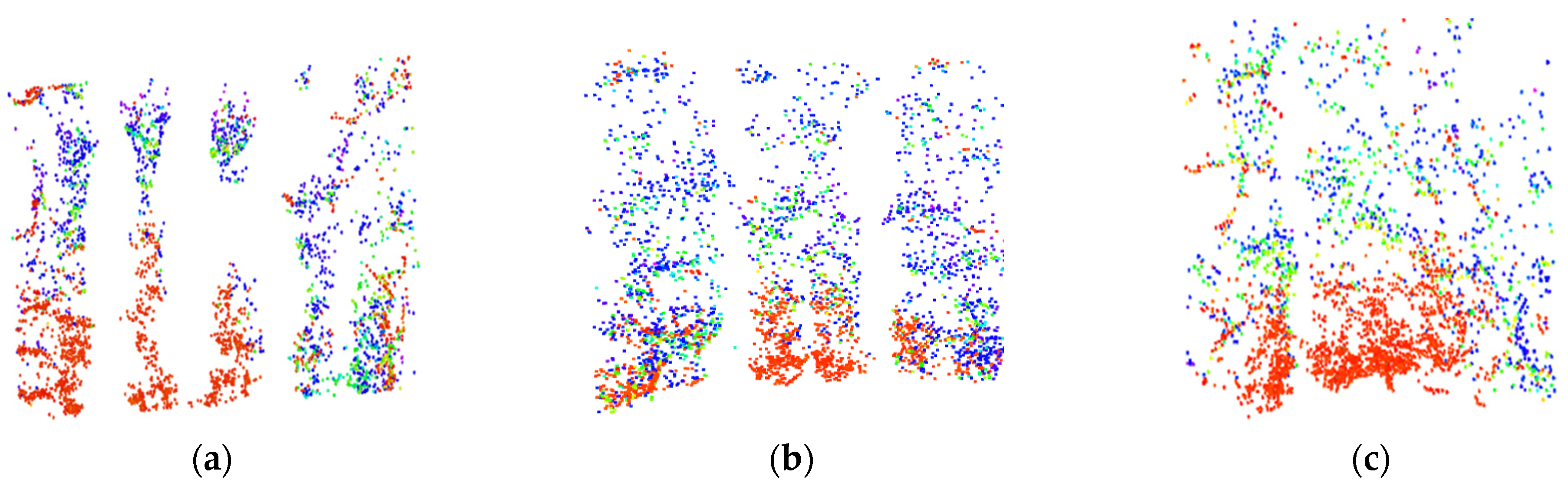

2.2.1. Point Cloud Filtering and Coordinate Transformation

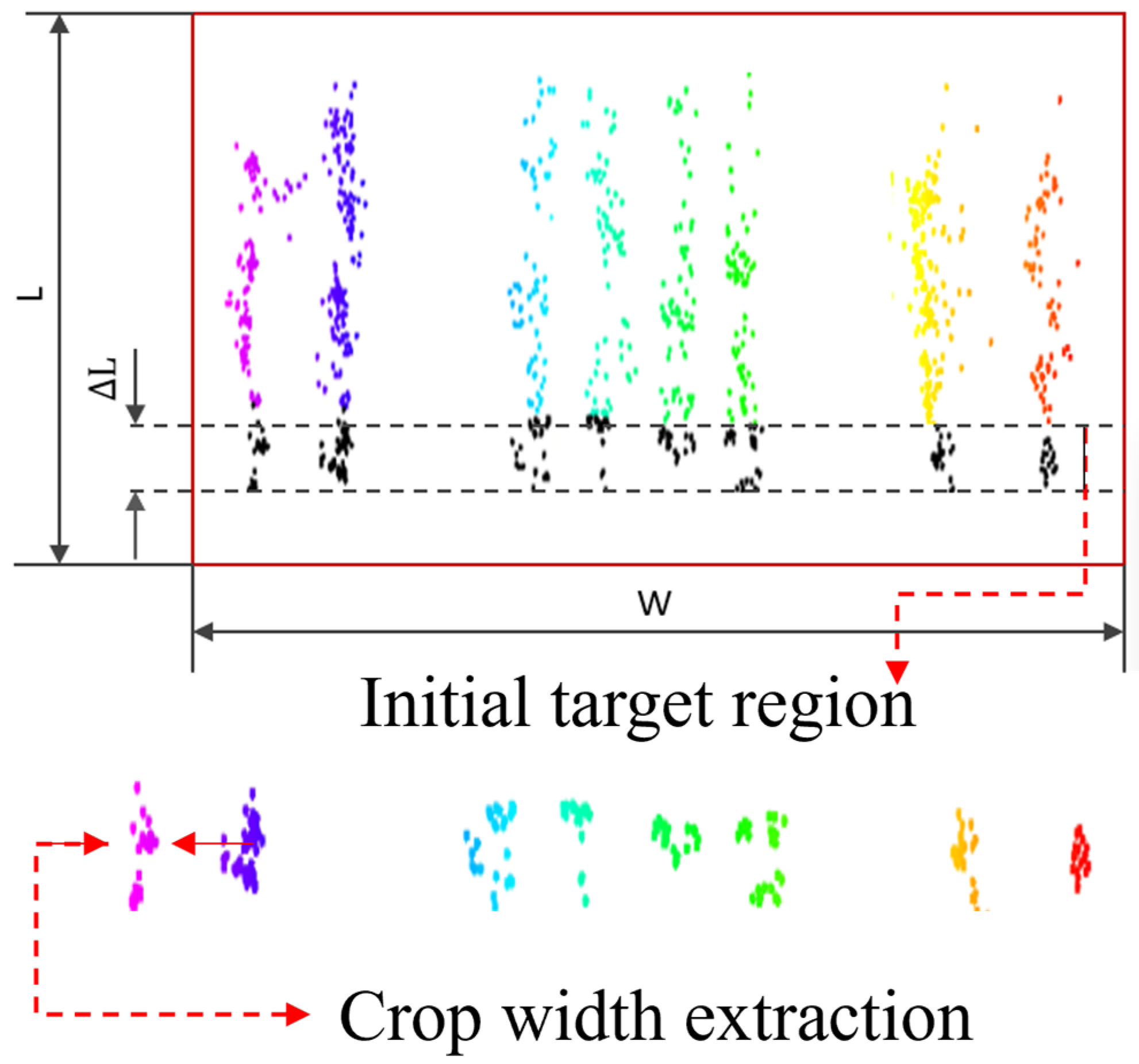
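As a generic illustration of this step, and not the authors' implementation, the sketch below assumes a LiDAR tilted downward by a known pitch angle about its lateral axis and mounted at a known height above flat ground. It rotates each point into a hypothetical vehicle frame (x forward, y lateral, z height above ground) and then applies a pass-through (axis-aligned box) filter that discards near-ground returns and points beyond a look-ahead distance. The function names, frame convention, and the parameters `pitch_deg`, `mount_height`, and the box bounds are all assumptions.

```python
import math
from typing import List, Tuple

# Hypothetical sketch: frame convention and parameters are assumptions, not the paper's.
Point = Tuple[float, float, float]  # (x forward, y lateral, z up), metres


def lidar_to_vehicle(points: List[Point], pitch_deg: float,
                     mount_height: float) -> List[Point]:
    """Rotate sensor-frame points by the assumed downward pitch about the
    y-axis, then shift z so that it measures height above flat ground."""
    t = math.radians(pitch_deg)
    c, s = math.cos(t), math.sin(t)
    out = []
    for x, y, z in points:
        # Rotation about y: [c 0 s; 0 1 0; -s 0 c]. A positive pitch means the
        # sensor's forward axis points down towards the ground.
        xv = c * x + s * z
        zv = -s * x + c * z + mount_height
        out.append((xv, y, zv))
    return out


def pass_through(points: List[Point], x_range: Tuple[float, float],
                 y_half_width: float, z_range: Tuple[float, float]) -> List[Point]:
    """Keep only points inside an axis-aligned box in the vehicle frame."""
    return [p for p in points
            if x_range[0] <= p[0] <= x_range[1]
            and abs(p[1]) <= y_half_width
            and z_range[0] <= p[2] <= z_range[1]]


# Usage with made-up sensor-frame points: a crop return, a near-ground
# return, and a canopy return beyond the look-ahead distance.
raw = [(2.0, 0.3, 0.0), (2.0, 0.3, -0.57), (6.0, 0.0, 3.0)]
veh = lidar_to_vehicle(raw, pitch_deg=30.0, mount_height=1.5)
kept = pass_through(veh, x_range=(0.5, 5.0), y_half_width=1.0, z_range=(0.05, 2.0))
```

Only the first point survives: the second lands about 6 mm above the assumed ground plane and the third lies beyond the 5 m look-ahead. Real pipelines often add a statistical outlier-removal pass after the box filter.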

2.2.2. Determination of the Crop Row Target Region

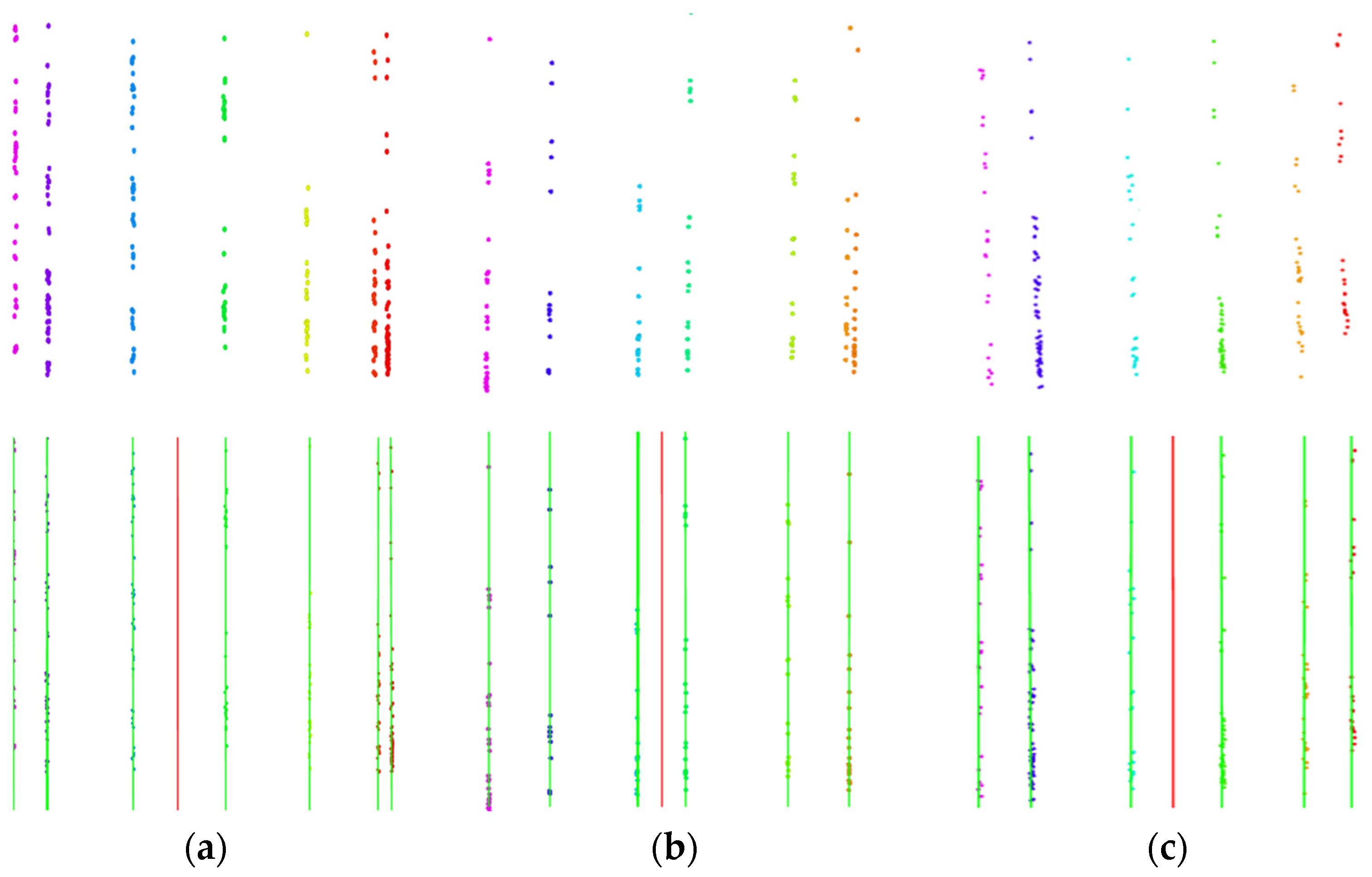
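One common way to locate crop row target regions in a filtered point cloud, shown here as an assumed illustration rather than the method of this subsection, is a lateral point-density histogram: project points onto the lateral (y) axis, count them in fixed-width bins, treat local maxima above a count threshold as row positions, and take a band of fixed half-width around each one as that row's target region. `bin_width`, `min_count`, and `half_width` are hypothetical tuning parameters.

```python
import math
from typing import List, Tuple

# Hypothetical sketch of histogram-based row localisation (not the paper's method).
Point = Tuple[float, float, float]  # vehicle frame: (x, y, z) in metres


def lateral_histogram(points: List[Point], y_min: float, y_max: float,
                      bin_width: float) -> List[int]:
    """Count points per lateral (y) bin over [y_min, y_max)."""
    n_bins = int(math.ceil((y_max - y_min) / bin_width))
    counts = [0] * n_bins
    for _, y, _ in points:
        if y_min <= y < y_max:
            # Clamp guards against floating-point rounding at the upper edge.
            idx = min(int((y - y_min) // bin_width), n_bins - 1)
            counts[idx] += 1
    return counts


def row_centers(counts: List[int], y_min: float, bin_width: float,
                min_count: int) -> List[float]:
    """Return centres of bins that are local maxima with at least min_count points."""
    centers = []
    for i, c in enumerate(counts):
        left = counts[i - 1] if i > 0 else 0
        right = counts[i + 1] if i + 1 < len(counts) else 0
        # '>=' on the left and '>' on the right keeps one bin per plateau.
        if c >= min_count and c >= left and c > right:
            centers.append(y_min + (i + 0.5) * bin_width)
    return centers


def target_regions(centers: List[float], half_width: float) -> List[Tuple[float, float]]:
    """Lateral band [c - half_width, c + half_width] around each row centre."""
    return [(c - half_width, c + half_width) for c in centers]


def points_in_region(points: List[Point], region: Tuple[float, float]) -> List[Point]:
    """Select the points whose lateral coordinate falls inside one band."""
    lo, hi = region
    return [p for p in points if lo <= p[1] <= hi]


# Usage with invented points: two dense rows and one stray return.
pts = [(1.0, -0.25, 0.3)] * 10 + [(1.0, 0.25, 0.4)] * 8 + [(1.0, 0.05, 0.2)]
counts = lateral_histogram(pts, -0.6, 0.6, 0.1)
centers = row_centers(counts, -0.6, 0.1, min_count=3)
regions = target_regions(centers, half_width=0.1)
```

The stray point at y = 0.05 m forms a bin below `min_count`, so only the two dense rows near y = -0.25 m and y = 0.25 m become target regions.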

2.2.3. Crop Row Centerline Fitting
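A minimal sketch of centerline fitting, assuming ordinary least squares on the points of one target region. The line is written as y = a·x + b, that is, lateral offset as a function of forward distance, because rows run roughly along the travel direction and fitting x as a function of y would be ill-conditioned. Least squares is an assumption here; robust alternatives such as RANSAC or Hough-transform line fitting are also used for crop rows, and the paper's choice is not reproduced. `fit_centerline` is a hypothetical name.

```python
from typing import Sequence, Tuple


def fit_centerline(points: Sequence[Tuple[float, float, float]]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = a*x + b to one row's points.

    x is the forward (travel) axis and y the lateral axis, so a slope near
    zero means the row is parallel to the vehicle heading. z is ignored.
    """
    n = len(points)
    if n < 2:
        raise ValueError("need at least two points to fit a line")
    sx = sum(p[0] for p in points)
    sy = sum(p[1] for p in points)
    sxx = sum(p[0] * p[0] for p in points)
    sxy = sum(p[0] * p[1] for p in points)
    denom = n * sxx - sx * sx
    if abs(denom) < 1e-12:
        # All points share (almost) the same x, so the slope is undefined.
        raise ValueError("degenerate point set: x values do not vary")
    a = (n * sxy - sx * sy) / denom
    b = (sy - a * sx) / n
    return a, b


# Usage: invented points lying exactly on y = 0.05*x + 0.4.
row = [(x, 0.05 * x + 0.4, 0.3) for x in (0.5, 1.0, 1.5, 2.0, 2.5)]
a, b = fit_centerline(row)
```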

2.2.4. Crop Row Navigation Line Extraction
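The sketch below assumes, purely for illustration, that the navigation line is the lateral midline between the two fitted centerlines bounding the travel path; the paper may define it differently for the intercropping strip layout. Taking the midpoint of the two row lines at every x reduces to averaging their slopes and intercepts. The navigation angle is the heading of that line relative to the vehicle's forward axis, which is the quantity reported in degrees in the results, and the lateral offset is the perpendicular distance from the sensor origin to the line. All function names are hypothetical.

```python
import math
from typing import Tuple

# Hypothetical sketch: midline definition and sign conventions are assumptions.
Line = Tuple[float, float]  # (slope a, intercept b) of y = a*x + b


def navigation_line(left: Line, right: Line) -> Line:
    """Midline between two row centerlines: at every x its y is the mean of the two."""
    return ((left[0] + right[0]) / 2.0, (left[1] + right[1]) / 2.0)


def navigation_angle_deg(nav: Line) -> float:
    """Angle between the navigation line and the vehicle's forward (x) axis,
    in degrees; positive when the line veers towards +y."""
    return math.degrees(math.atan(nav[0]))


def lateral_offset(nav: Line) -> float:
    """Perpendicular distance from the sensor origin (0, 0) to the line,
    carrying the sign of the intercept b."""
    a, b = nav
    return b / math.sqrt(1.0 + a * a)


# Usage with invented centerlines (slopes dimensionless, intercepts in metres).
nav = navigation_line((0.02, 0.40), (0.04, -0.36))
angle = navigation_angle_deg(nav)
offset = lateral_offset(nav)
```

Here the midline has slope 0.03, giving a navigation angle of roughly 1.72°, and lies about 2 cm to the +y side of the sensor.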

3. Results and Discussion

3.1. Crop Row Detection and Navigation Line Extraction

3.2. Effect of the Filtering Algorithm on Navigation Angle Under Multiple Scenarios

3.3. Influence of Multi-Scenario Conditions on the Navigation Line Extraction Algorithm

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Test Scenario | Average Soybean Plant Height, Tested Value/cm | Average Soybean Plant Height, Actual Value/cm | Average Maize Plant Height, Tested Value/cm | Average Maize Plant Height, Actual Value/cm | Average Accuracy/% |
|---|---|---|---|---|---|
| S1 | 10 | 14.86 | 20 | 22.67 | 81 |
| S2 | 30 | 31.71 | 50 | 45.14 | 90 |
| S3 | 40 | 44.94 | 75 | 77.69 | 84 |

| Test Scenario | Average Navigation Angle/° | Average Processing Time ± SD/ms |
|---|---|---|
| S1 | 0.28 | 42.36 (±20.56) |
| S2 | 0.17 | 46.96 (±19.38) |
| S3 | 0.18 | 75.62 (±21.70) |

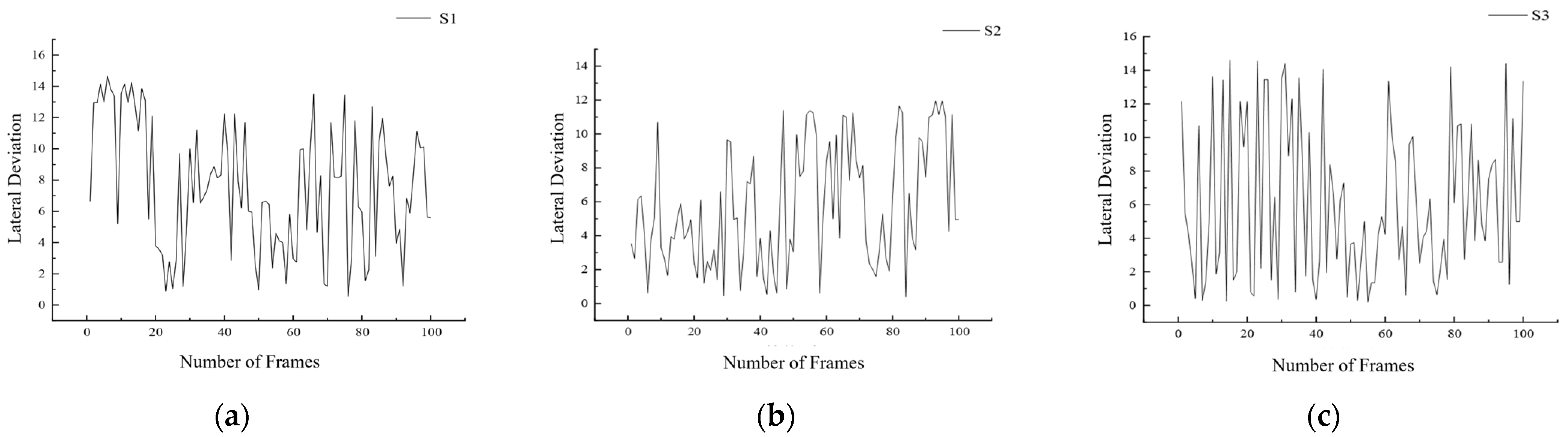

| Test Scenario | Maximum Lateral Deviation/cm | Minimum Lateral Deviation/cm | Average Lateral Deviation/cm | Standard Deviation/cm |
|---|---|---|---|---|
| S1 | 14.65 | 0.55 | 7.55 | 4.07 |
| S2 | 11.95 | 0.40 | 5.76 | 3.56 |
| S3 | 14.60 | 0.20 | 6.34 | 4.66 |
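The maximum, minimum, average, and standard deviation columns in the lateral deviation table are summary statistics over per-sample lateral deviations in each scenario. Since the raw measurements are not listed, and it is not stated whether a sample or population standard deviation was used, the sketch below assumes absolute deviations and the sample standard deviation (`statistics.stdev`). The input values are invented, and `deviation_summary` is a hypothetical helper.

```python
import statistics
from typing import Dict, Sequence


def deviation_summary(deviations_cm: Sequence[float]) -> Dict[str, float]:
    """Max, min, mean and sample standard deviation of absolute lateral deviations (cm)."""
    mags = [abs(d) for d in deviations_cm]  # assumption: left/right sign is ignored
    return {
        "max": max(mags),
        "min": min(mags),
        "mean": statistics.mean(mags),
        "sd": statistics.stdev(mags),  # sample SD (n - 1 divisor); needs >= 2 values
    }


# Usage with invented deviations (cm), not data from this study.
summary = deviation_summary([1.0, -2.0, 3.0, -4.0])
```

Switching to `statistics.pstdev` would give the population standard deviation instead, which is slightly smaller for the same data.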

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ou, M.; Ye, R.; Wang, Y.; Gu, Y.; Wang, M.; Dong, X.; Jia, W. A LiDAR-Driven Approach for Crop Row Detection and Navigation Line Extraction in Soybean–Maize Intercropping Systems. Appl. Sci. 2025, 15, 7439. https://doi.org/10.3390/app15137439
