1. Introduction

Wheat is one of the most widely cultivated cereal crops globally due to its strong adaptability and high yield potential. As a fundamental dietary staple, it plays a vital role in ensuring global food security. Approximately 20% of the world’s wheat is produced in China, where wheat production plays a crucial role in ensuring national food security [1]. However, the frequent occurrence of wheat diseases has become a significant issue affecting wheat production [1]. In particular, the spread of wheat yellow dwarf disease and wheat rust poses a severe threat, as these diseases are highly contagious and can cause significant damage to wheat leaves and roots, weakening photosynthesis and disrupting growth and development [2]. As a result, wheat yield and quality are significantly reduced. To address this challenge, developing a cost-effective and timely method for the large-scale, coarse-grained identification of wheat disease-affected areas is of paramount importance for enhancing disease control efficiency, safeguarding wheat yields, and ensuring national food security.

Conventional plant disease detection predominantly depends on visual assessments conducted by farmers or experts, which are inherently subjective, highly reliant on individual experience, time-consuming, labor-intensive, and economically inefficient. With the advancement of deep learning, an increasing number of deep learning approaches have been applied to computer vision [3,4,5,6,7], thus advancing the development of smart agriculture [8]. Goyal et al. introduced an enhanced deep convolutional model for wheat disease classification, achieving an accuracy of 97.88% across 10 disease classes [9]. Nachtigall et al. developed a CNN-based model for the automatic detection and classification of apple tree disorders, achieving 97.3% accuracy [10]. Furthermore, the development of object detection techniques in deep learning has further propelled research into fine-grained plant disease detection [11]. For instance, Sun et al. proposed MEAN-SSD, a lightweight CNN model for real-time apple leaf disease detection on mobile devices, achieving 83.12% mAP and 12.53 FPS [12]. Qi et al. improved the SE-YOLOv5 model for tomato virus disease recognition, achieving an accuracy of 91.07% and a mean average precision of 94.10%, outperforming other models [13]. However, existing studies predominantly rely on RGB single-source images captured by ground-based handheld devices. While these methods perform well in fine-grained detection at the leaf or lesion level, they are often unsuitable for large-scale field scenarios, particularly when it comes to detecting disease-affected areas.

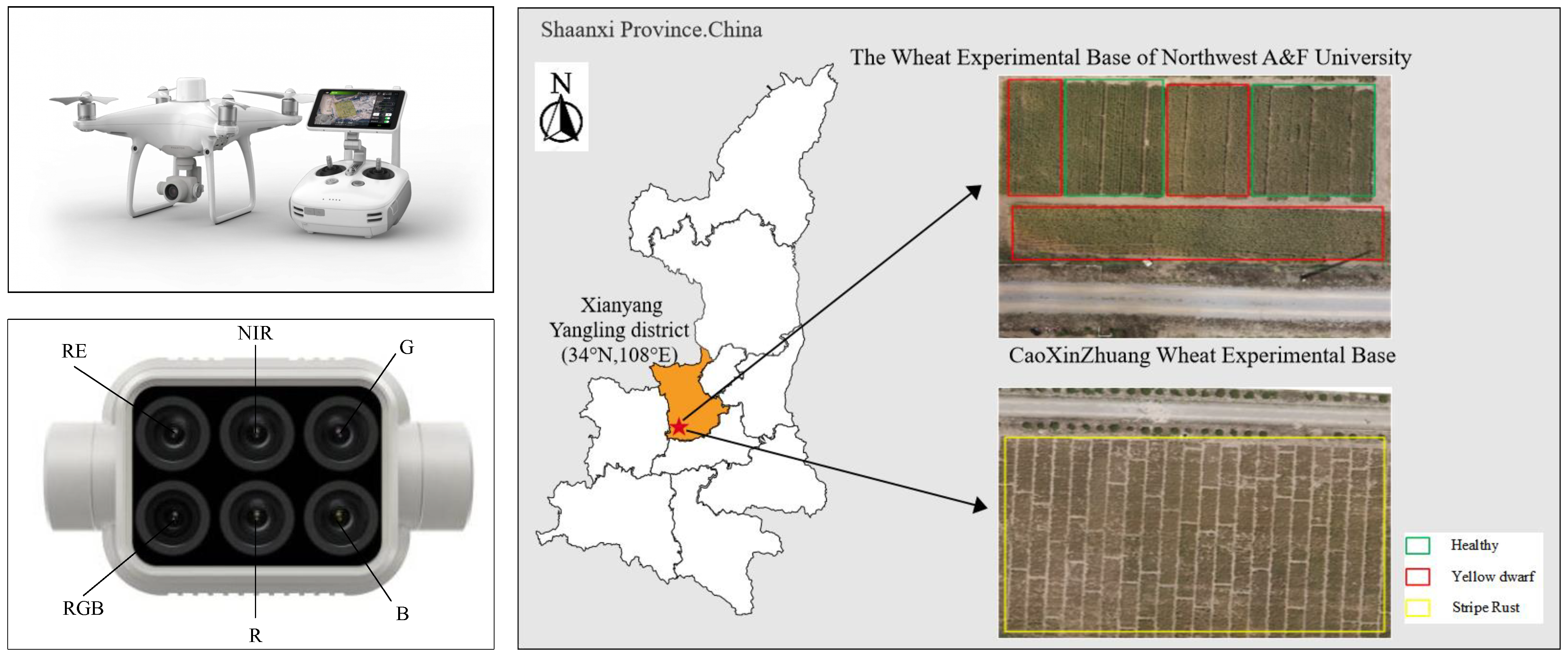

The development of UAV-based low-altitude remote sensing technology has provided significant opportunities for precision agriculture [14,15]. Due to its advantages, including minimal meteorological and landing site constraints, wide application range, low operational costs, and ability to capture high-resolution imagery, UAV remote sensing has been widely applied in crop yield estimation [16,17,18], disease diagnosis [19,20,21], and crop growth monitoring [22,23,24]. When crops are affected by diseases, their spectral responses at specific wavelengths exhibit marked alterations. By effectively capturing these spectral variations, accurate disease diagnosis can be achieved. For example, the red band can be used to detect potato late blight [25], the red-edge band is suitable for detecting oil palm orange spot disease [26], and infrared bands have also been widely applied in crop disease detection [27]. The above studies mainly focus on using a single band or detecting a single disease, neglecting the comprehensive use of multi-band information. UAVs equipped with multispectral cameras can capture images across various bands when crop diseases occur, enabling the extraction of spectral features for different diseases at different bands. Recently, an increasing number of studies have employed multispectral remote sensing technology for plant disease detection [28,29]. For instance, Li et al. applied multi-source image fusion using RGB and multispectral UAV images for apple disease and pest classification, achieving a subset accuracy of 92.92% [30]. Hao et al. developed DBFormer, a dual-branch multiscale model for detecting wheat yellow dwarf disease from UAV multispectral images, achieving a mean intersection over union of 88.51% and a mean pixel accuracy of 93.73% [31]. Silva et al. combined convolutional neural networks and Vision Transformers for multispectral plant disease detection, achieving a precision of 90.1% and an accuracy of 83.3% [32]. These studies highlight the promising potential of multispectral remote sensing technology for plant disease detection.

Despite the considerable body of research applying remote sensing technologies for plant disease detection, most studies have focused on image segmentation and object detection, with insufficient attention given to disease area classification. Additionally, existing multi-source image fusion methods for disease regional classification tend to be simplistic and struggle to effectively integrate information from different data sources. Moreover, due to the low spectral resolution of multispectral images and the use of relatively simple image classification models in current studies, spectral semantic information is often underutilized and poorly fused. To address the above challenges, a novel MSFNet was proposed in this paper, which effectively integrates multi-source data and leverages the concept of a visual pyramid to enhance the extraction and fusion of spectral semantic information at different levels, thereby improving the accuracy and performance of disease regional classification. The main contributions of this paper are as follows:

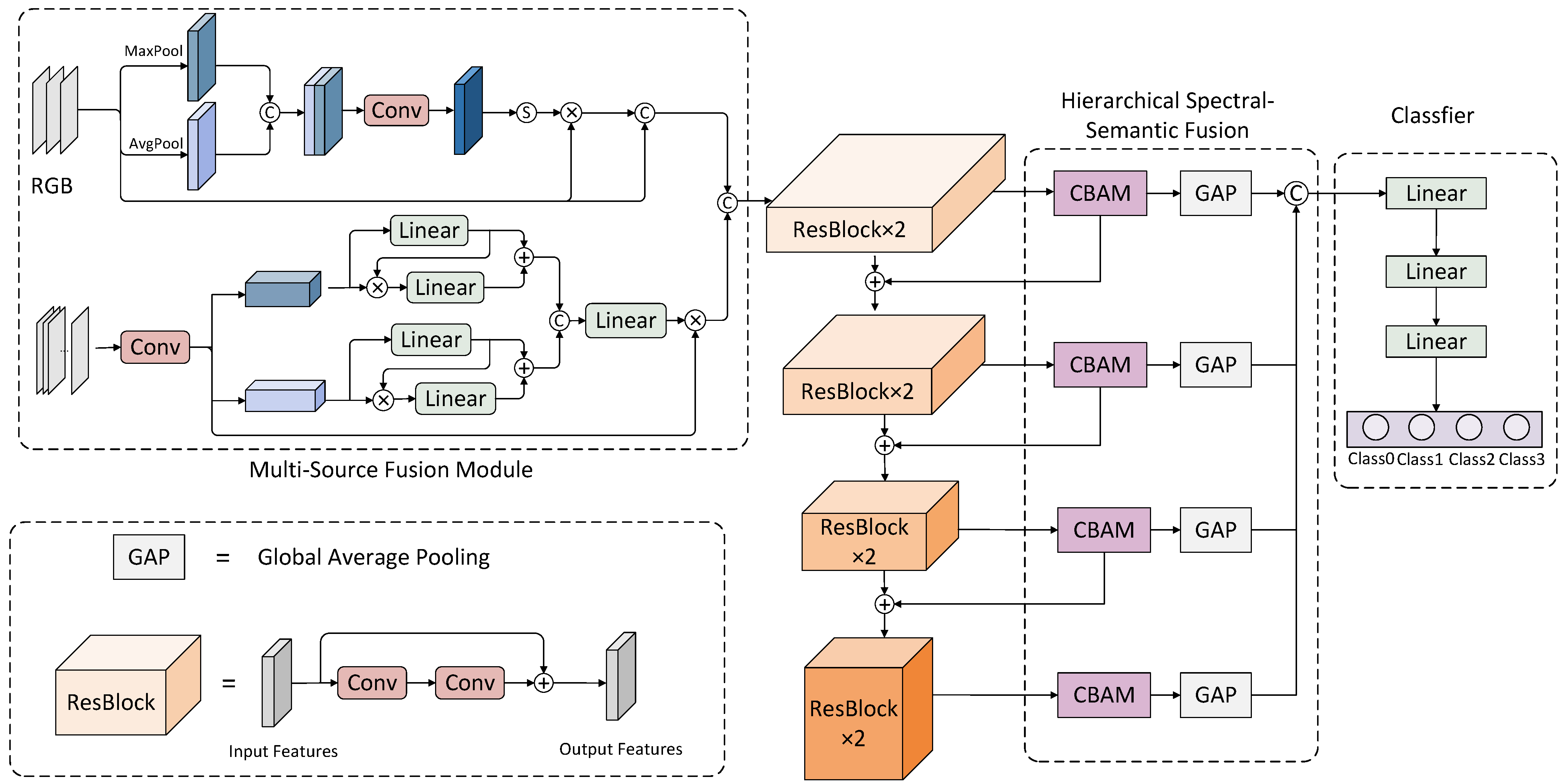

A novel MSFNet was proposed for the identification of wheat disease-affected regions from multispectral UAV images, which integrates multi-source features via a multi-source fusion module and enhances spectral variation perception through a hierarchical spectral semantic fusion module.

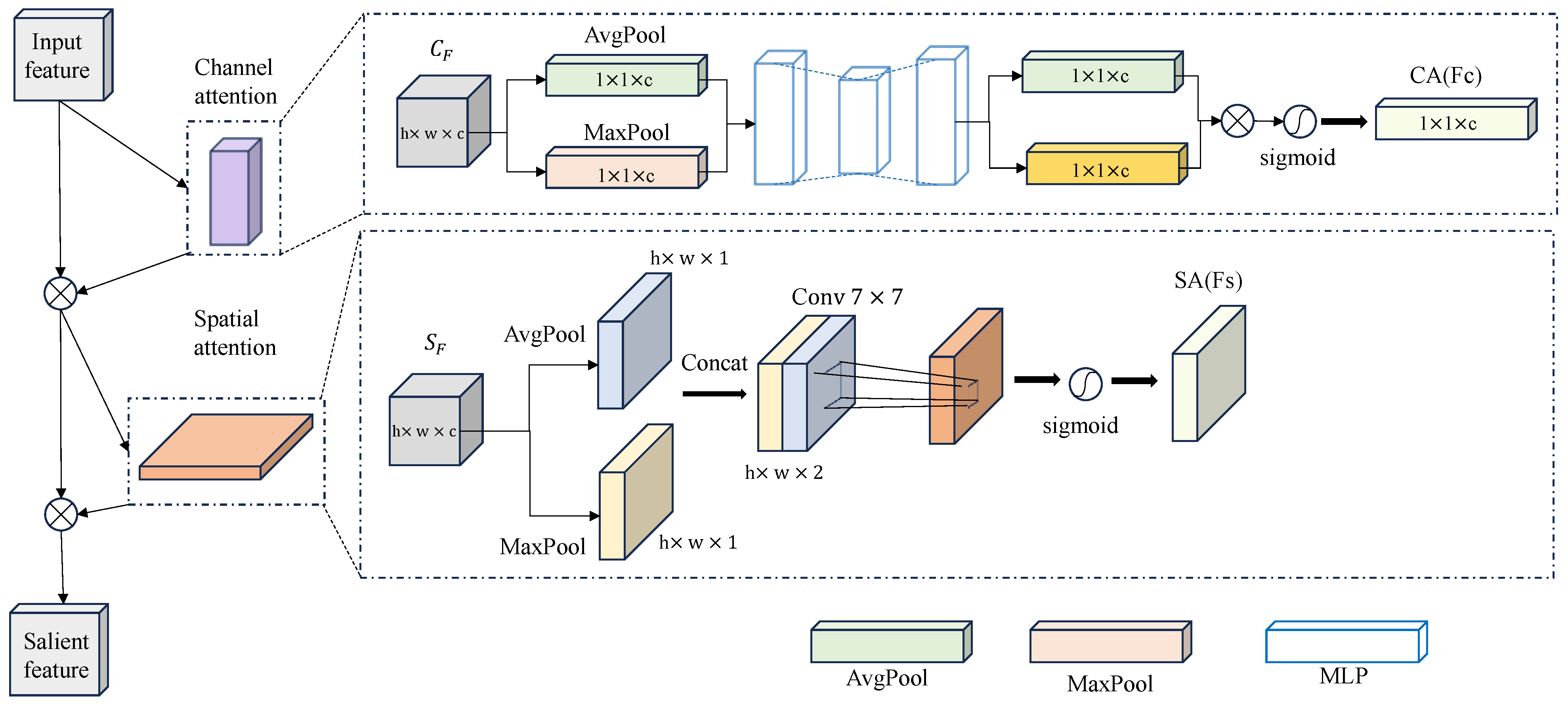

A novel multi-source fusion module (MSFM) was proposed, utilizing a dual-branch structure to separately process RGB and multispectral vegetation index images, thereby enhancing spatial–spectral semantics and effectively integrating the complementary features of multi-source data.

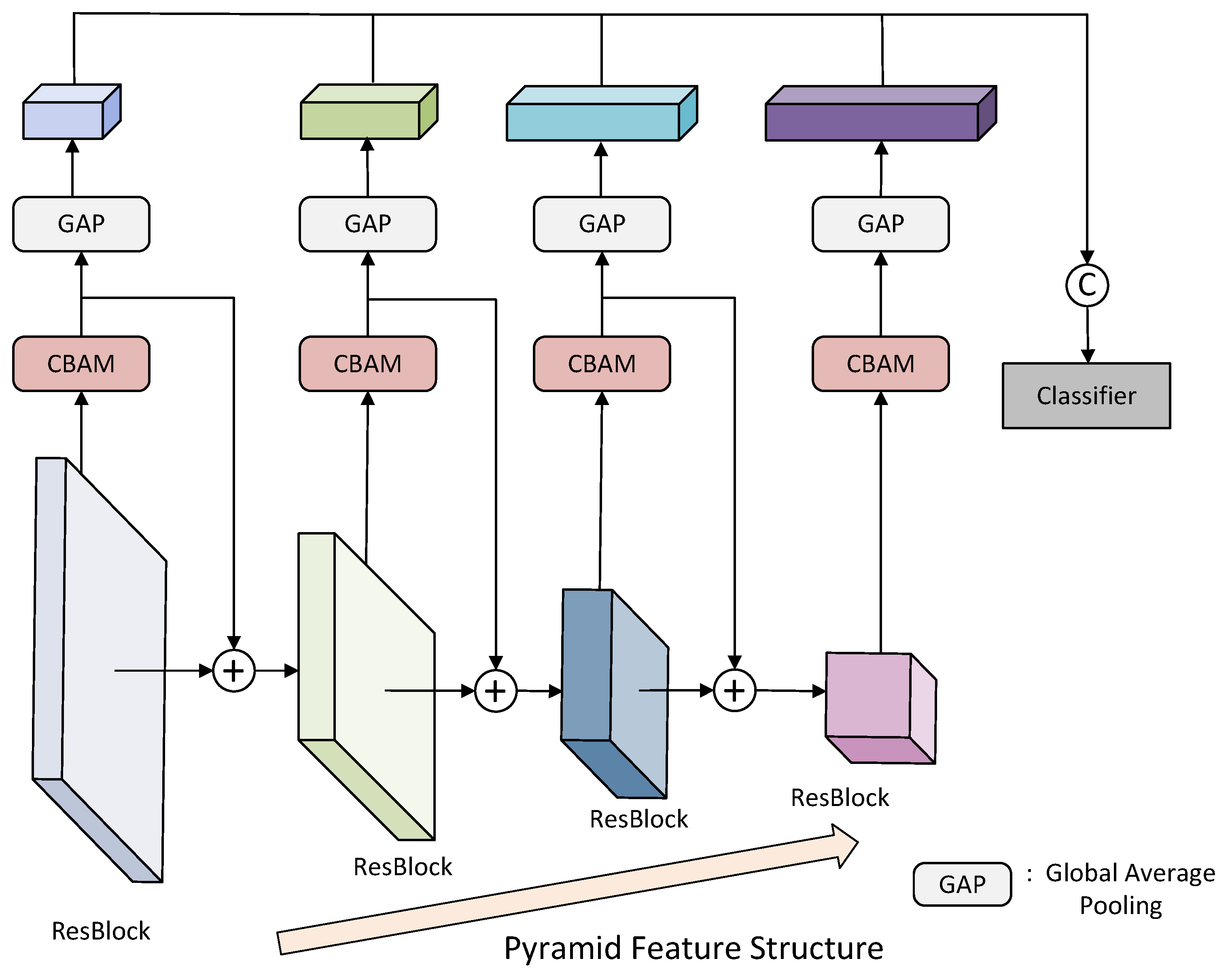

A novel hierarchical spectral semantic fusion module (HSSFM) was presented, which adopts a pyramid architecture and incorporates an attention mechanism to fuse hierarchical spectral semantics, thereby expanding the model’s receptive field for spectral variations.

3. Results

3.1. The Experiment Settings

As shown in Table 3, experiments were performed on a cloud server equipped with an RTX 3080 Ti GPU with 12 GB of graphics memory, running Ubuntu 20.04 LTS (64-bit). The programming environment was set up using Python 3.8, alongside CUDA 11.3 to support GPU acceleration. The model was implemented using the PyTorch deep learning framework, version 1.10.0.

The training strategy employed in this study involved training the model from scratch without using pre-trained weights. A dropout layer was applied in the classifier to enhance generalization. Several dropout rates (0.1, 0.2, and 0.3), initial learning rates (e.g., 0.001 and 0.0005), and learning rate decay schedules were evaluated using 5-fold cross-validation. The best-performing combination (a dropout rate of 0.2, an initial learning rate of 0.001, and a step decay factor of 0.1 every 30 epochs) was selected based on the average validation accuracy across folds. The final model was trained for 150 epochs with a batch size of 16 using the selected settings. The Adam optimizer was adopted for parameter updates with its default parameters (β1 = 0.9 and β2 = 0.999), which have demonstrated stable performance across a wide range of deep learning tasks and were found effective in our setup. Furthermore, the cross-entropy loss was used as the objective function. The final model was selected based on the highest accuracy achieved on the validation set.
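The step decay described above can be illustrated with a minimal sketch. It assumes the decay is applied at 0-indexed epoch boundaries (30, 60, 90, 120), which mirrors the behavior of PyTorch's `torch.optim.lr_scheduler.StepLR` with `step_size=30` and `gamma=0.1`; whether the authors used that scheduler class is an assumption, and the function name `step_decay_lr` is hypothetical.

```python
def step_decay_lr(epoch, initial_lr=0.001, decay_factor=0.1, step_size=30):
    """Learning rate at a 0-indexed epoch under step decay.

    The rate is multiplied by `decay_factor` once every `step_size` epochs.
    Defaults follow the selected settings reported in the text; the exact
    epoch at which each decay takes effect is an assumption.
    """
    return initial_lr * decay_factor ** (epoch // step_size)


# Print the learning rate at the start of each decay stage over 150 epochs.
for epoch in range(0, 150, 30):
    print(f"epoch {epoch:3d}: lr = {step_decay_lr(epoch):.0e}")
```

Under these assumptions the learning rate passes through five stages during the 150-epoch run, dropping by a factor of 10 at each boundary.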

3.2. Evaluation Metrics

In order to comprehensively and objectively evaluate the performance of MSFNet, four indicators, namely, accuracy, precision, recall, and F1-score, are selected for model evaluation. The specific calculation formulas are shown in Formulas (4)–(7).

Accuracy measures the proportion of correctly classified samples, reflecting overall model performance. Precision represents the ratio of correctly predicted positives to all predicted positives, indicating the reliability of positive predictions. Recall (sensitivity) quantifies the proportion of correctly predicted positives among all actual positives, highlighting the model’s ability to identify positive instances. Additionally, the F1-score, as the harmonic mean of precision and recall, offers a balanced evaluation, especially in imbalanced datasets.

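$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \quad (4)$$

$$\mathrm{Precision} = \frac{TP}{TP + FP} \quad (5)$$

$$\mathrm{Recall} = \frac{TP}{TP + FN} \quad (6)$$

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \quad (7)$$

where TP denotes the number of positive samples correctly predicted by the model, TN denotes the number of negative samples correctly predicted, FP represents the number of negative samples incorrectly predicted as positive, and FN indicates the number of positive samples incorrectly predicted as negative.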
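As an illustration of how these four indicators can be computed for one class of a multi-class problem, the following sketch derives TP, FP, FN, and TN from a confusion matrix using the standard one-vs-rest convention. The function name `per_class_metrics` and the toy counts are hypothetical and not taken from the paper; treating each class one-vs-rest is an assumption about how the per-class values were obtained.

```python
def per_class_metrics(confusion, class_index):
    """One-vs-rest metrics for one class of a square confusion matrix.

    confusion[i][j] is the number of samples whose true class is i and
    whose predicted class is j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    tp = confusion[class_index][class_index]
    fn = sum(confusion[class_index]) - tp                       # positives missed
    fp = sum(confusion[i][class_index] for i in range(n)) - tp  # negatives flagged
    tn = total - tp - fn - fp
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical 3-class counts (rows: true class, columns: predicted class).
toy = [
    [90, 5, 5],
    [10, 80, 10],
    [0, 10, 90],
]
print(per_class_metrics(toy, 0))
```

Overall scores could then be obtained by averaging the per-class values, although the averaging scheme used in the paper is not stated in this section.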

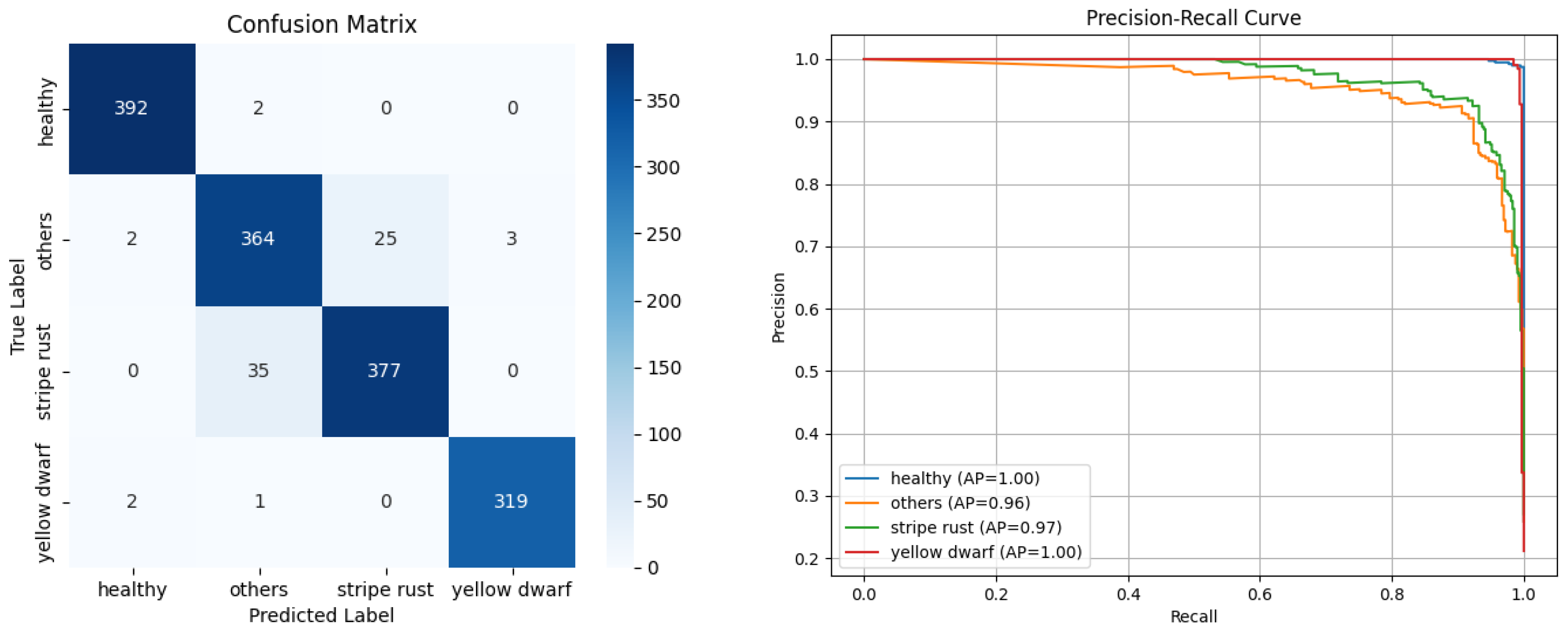

3.3. Analysis of Prediction Results of MSFNet

To comprehensively assess the model’s performance, we computed the accuracy, precision, recall, and F1-score of each category, as well as the overall classification results. Additionally, PR curves were plotted for each class, and a confusion matrix was generated to provide deeper insights into the model’s classification behavior. The PR curves illustrate the balance between precision and recall across varying decision thresholds, while the confusion matrix offers a detailed analysis of misclassifications, highlighting both the model’s strengths and areas for improvement. These evaluations serve as a crucial basis for the further refinement and optimization of the model.

As shown in Table 4, all classification metrics exceed 90% across categories. However, the accuracy for others and stripe rust is relatively lower, at 92.4% and 91.5%, respectively. The confusion matrix (Figure 7) indicates a pronounced misclassification between these two categories, with 35 others samples misclassified as stripe rust, and 25 stripe rust samples misclassified as others. The others category encompasses diverse regions, including soil, buildings, and low-density weed areas, some of which (such as shadowed soil or sparsely vegetated backgrounds) may exhibit texture and spectral characteristics similar to the canopy of wheat stripe rust regions, thereby increasing the difficulty of accurate discrimination. This feature overlap is likely a key factor contributing to misclassification and highlights a potential direction for improving the model’s discriminative capability in future research. Furthermore, Figure 7 presents the P-R curves for each category, where all AP values exceed 0.95, indicating that the model maintains a good balance between precision and recall, ensuring the robustness of the detection results. Notably, the AP values for healthy and yellow dwarf reach 1.0, demonstrating the model’s exceptional discriminative ability for these categories and further validating its superior classification performance.
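To make the AP values concrete, the sketch below computes average precision for a single class as the area under the step-wise precision-recall curve obtained by sweeping the decision threshold over the ranked scores. This is one common definition; whether it matches the exact procedure used for the reported AP values is an assumption, and the function name `average_precision` and the example scores are hypothetical.

```python
def average_precision(scores, labels):
    """Area under the step-wise precision-recall curve for one class.

    scores: predicted confidence for the class, one value per sample.
    labels: 1 if the sample truly belongs to the class, otherwise 0.
    Assumes at least one positive label and no tied scores.
    """
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    n_pos = sum(labels)
    tp = 0
    ap = 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            tp += 1
            # Each retrieved positive raises recall by 1 / n_pos; that recall
            # step is weighted by the precision at the current rank.
            ap += (tp / rank) / n_pos
    return ap


# All positives ranked above all negatives: a perfectly separated class.
print(average_precision([0.9, 0.8, 0.7, 0.6], [1, 1, 0, 0]))
# One negative ranked between the two positives.
print(average_precision([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 0]))
```

An AP of 1.0 therefore means that every sample of the class received a higher score than every sample outside it.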

3.4. Comparison with SOTA Methods

To further assess the effectiveness of the proposed method, we conducted comparative experiments on MSWDD2024 using various state-of-the-art (SOTA) image classification models, including Transformer-based models (Vision Transformer [38] and Swin Transformer [39]) and CNN-based models (MobileNetV3-s [40], ShuffleNetV2 [41], SENet [42], ResNet [5], ResNeXt [43], and EfficientNetV2 [44]). All inference speed (FPS) measurements were conducted on the same hardware platform described in Section 3.1 to ensure consistency. As shown in Table 5, the proposed MSFNet outperformed all baseline models across all evaluation metrics, demonstrating its superior performance and validating its effectiveness in wheat disease region classification.

Among the baseline models, the Transformer-based models (e.g., ViT and Swin Transformer) achieve lower accuracy, possibly due to their lack of explicit channel modeling and reliance on larger input sizes, which may limit their ability to capture fine-grained spectral–spatial patterns in small 64 × 64 patches. Among the CNN-based baseline models, SENet achieves the best performance, with an accuracy of 91.5%, primarily due to its channel attention mechanism, which effectively enhances the model’s ability to focus on discriminative spectral features. EfficientNetV2 also demonstrates strong classification performance (90.1%), benefiting from its compound scaling strategy, which strikes a balance between network depth, width, and resolution. Traditional ResNet models, while stable, slightly lag behind the aforementioned models, with ResNet18, ResNet50, and ResNet101 achieving accuracies of 89.4%, 88.7%, and 89.2%, respectively. Although deeper networks such as ResNet101 can in principle extract richer feature representations, they do not improve on ResNet18 here, likely because the increased model complexity fails to effectively integrate refined spectral–spatial feature expressions, thus limiting performance gains.

Notably, the proposed MSFNet significantly outperforms all baseline models across all evaluation metrics, achieving the best performance, with an accuracy of 95.4%, a precision of 95.6%, a recall of 95.6%, and an F1-score of 95.6%. Moreover, MSFNet achieves a high inference speed of 62.18 FPS, which is lower than that of the fastest baseline ResNet18 (79.54 FPS) but significantly higher than that of most other models, including Transformer-based architectures. In addition, MSFNet contains only 14.2 M parameters, indicating lower memory and storage demands than the other methods. These results demonstrate that MSFNet not only delivers superior accuracy but also meets the computational and memory constraints required for real-time UAV deployment.
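For readers who want to reproduce throughput figures of this kind, the following is a minimal timing sketch. It assumes FPS means images processed per second, averaged over repeated calls after a short warm-up; the paper's exact measurement protocol is not given in this section. The names `measure_fps` and `dummy_infer` are hypothetical, and `dummy_infer` is only a stand-in for a model forward pass.

```python
import time


def measure_fps(infer, batch, n_iters=100, n_warmup=10):
    """Return images per second for `infer` called repeatedly on `batch`."""
    # Warm-up calls are excluded from timing so one-off setup costs
    # (caching, lazy initialization) do not distort the average.
    for _ in range(n_warmup):
        infer(batch)
    start = time.perf_counter()
    for _ in range(n_iters):
        infer(batch)
    elapsed = time.perf_counter() - start
    return n_iters * len(batch) / elapsed


def dummy_infer(batch):
    """Hypothetical stand-in for a forward pass over a batch of inputs."""
    return [sum(x) for x in batch]


batch = [[0.0] * 64 for _ in range(16)]
print(f"{measure_fps(dummy_infer, batch):.1f} images/s")
```

When timing a real model on a GPU, the device would also need to be synchronized before reading the clock, since GPU kernels are launched asynchronously.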

The superior performance of MSFNet is primarily attributed to its efficient data fusion strategy and multi-level spectral feature extraction mechanism. Specifically, the MSFM effectively uncovers complementary features between RGB and MS-VIs, enhancing the synergy of multi-source information and improving the effectiveness of data fusion. The HSSFM further integrates hierarchical spectral semantic information, overcoming the limitations of single-level feature extraction, while the attention mechanism optimizes feature representation, enhancing the model’s ability to discriminate disease regions. Overall, MSFNet excels in wheat disease region classification by deeply integrating multi-source data and hierarchical feature extraction, fully exploiting the potential of spectral information, and demonstrating exceptional robustness and generalization capabilities.

3.5. Ablative Experiments

The above comparative experiments demonstrate the superior performance of our proposed method. To further analyze the contribution of different data sources and validate the effectiveness of key components, we conducted an ablation experiment by selecting different data source combinations, the MSFM, and the HSSFM as ablation components.

As shown in Table 6, the experimental results indicate that using only RGB or MS-VIs as input leads to suboptimal performance across all evaluation metrics. This suggests that a single data source fails to provide sufficient feature representation, thereby limiting the model’s classification capability. When RGB and MS-VIs are combined, accuracy improves by 6.7% and 9.0% compared to using RGB or MS-VIs alone, respectively, while precision increases by 7.2% and 12.5%. These findings highlight the complementary nature of RGB and MS-VIs, demonstrating that multi-source data fusion significantly enhances regional classification accuracy.

Building upon multi-source data fusion, we further evaluate the impact of different modules. When the MSFM is incorporated, model performance improves considerably, with precision and recall increasing by 4.7% compared to the baseline model. This indicates that the MSFM effectively captures complementary spatial–spectral information and key features, thereby enhancing multi-source feature fusion. Furthermore, when the HSSFM is introduced, accuracy and precision improve by 4.8% and 4.7%, respectively, compared to the baseline, demonstrating that the HSSFM efficiently extracts and integrates multi-level spectral features, outperforming models that rely solely on single-level features.

Ultimately, when both the MSFM and HSSFM are integrated, the model achieves the highest performance across all evaluation metrics, surpassing both the baseline and single-module configurations. Specifically, accuracy and precision increase by 6.0%, further validating the effectiveness of the MSFM and HSSFM in multi-source data fusion and hierarchical spectral feature extraction.

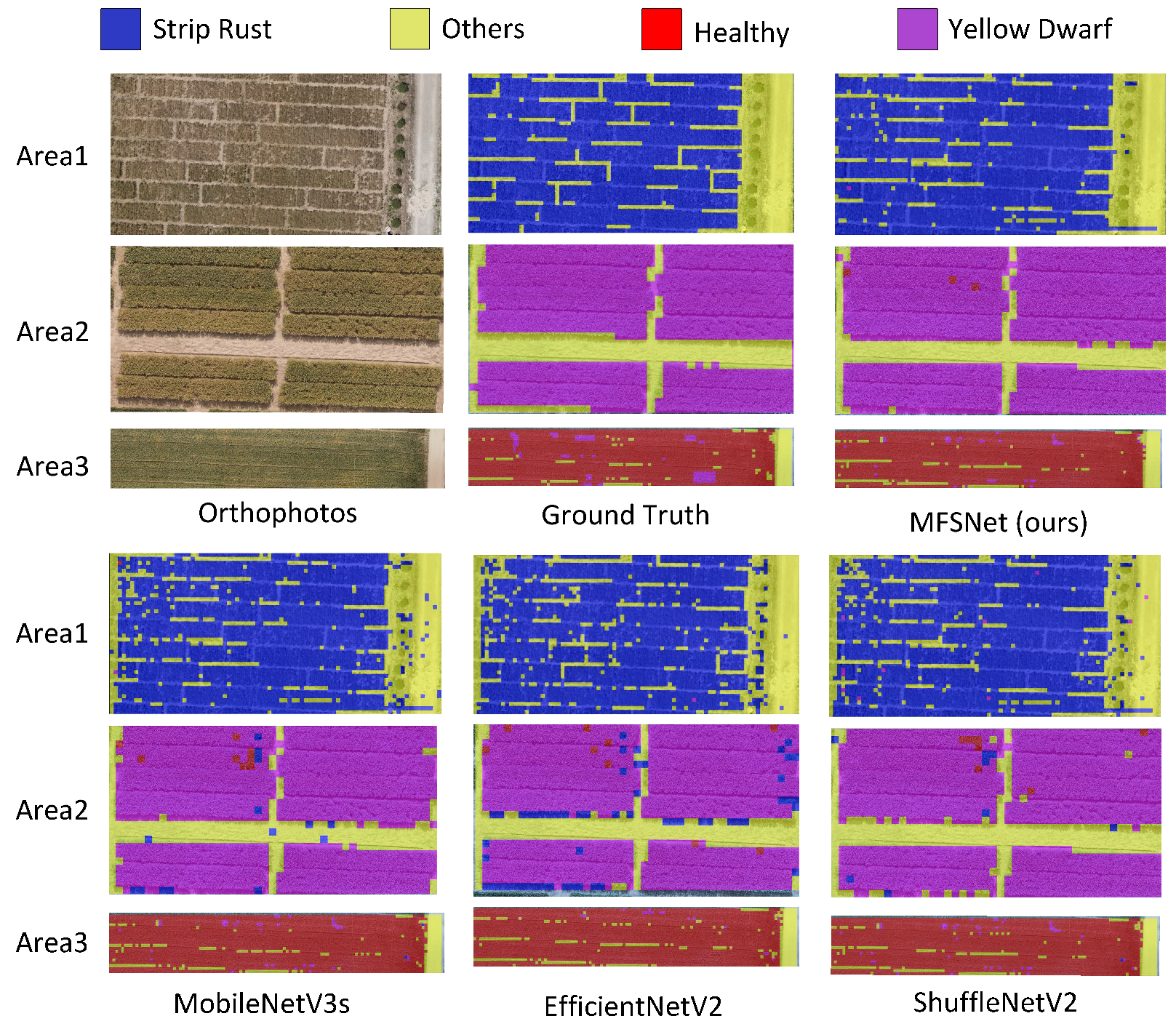

3.6. The Detection Results of the Different Methods

To better evaluate the model’s performance, three test regions were selected from the orthorectified imagery, and the recognition results of different models in these regions were post-processed for visualization, as shown in Figure 8.

Among them, Area 1 primarily consists of stripe rust, Area 2 is dominated by yellow dwarf disease, and Area 3 mainly contains healthy vegetation, with some regions affected by yellow dwarf disease. The results demonstrate that MSFNet achieves high recognition accuracy across all regions, with only a few misclassifications. In contrast, ShuffleNetV2 exhibits significant mutual misclassification between stripe rust and yellow dwarf disease in Area 1, while EfficientNetV2 erroneously classifies roadside trees as stripe rust in the same region. Moreover, MobileNetV3 suffers from both types of misclassification. Additionally, ShuffleNetV2, EfficientNetV2, and MobileNetV3 frequently misidentify wheat field shadows along the edges of Area 2 as disease-affected regions.

These misclassifications can be primarily attributed to the spatial similarity between different categories. For instance, wheat shadows share similar texture patterns with stripe rust and yellow dwarf disease. However, the comparative models have limited capabilities in capturing spectral variations, making them more susceptible to interference from spatially similar features, leading to misclassification. In contrast, MSFNet effectively integrates spatial and spectral information, enabling it to distinguish regions with similar spatial features but distinct spectral characteristics. This further validates the superiority of MSFNet in wheat disease detection.

3.7. Validation of the Effectiveness of the Proposed MSFM

To validate the effectiveness of the proposed MSFM in multi-source feature fusion and to assess its generalization across different network architectures, we designed and conducted a comparative experiment. Specifically, we selected several state-of-the-art deep learning models, including ShuffleNetV2, ResNeXt, MobileNetV3-s, SENet, ResNet50, and ResNet101. Each model was evaluated using two fusion strategies, namely, direct data concatenation (data fusion) and MSFM-based feature fusion, to analyze their impact on classification performance.

As shown in Table 7, the MSFM consistently improved classification performance across different network architectures, achieving a higher accuracy, precision, recall, and F1-score than conventional data fusion methods. This demonstrates that the MSFM effectively captures complementary features from multi-source data and optimizes feature fusion strategies, thereby enhancing the model’s discriminative capability. Notably, for deeper networks such as ResNeXt, ResNet50, and ResNet101, the MSFM achieved substantial performance gains, improving accuracy by 3.9%, 4.3%, and 3.4%, respectively. These results indicate that, in the context of this study, feature-level fusion outperforms direct data concatenation by better leveraging complementary information from multi-source inputs, ultimately enhancing the model’s ability to identify diseased regions.

3.8. Validation of the Effectiveness of the Proposed HSSFM

The previous ablation experiment demonstrated the advantage of the HSSFM in integrating hierarchical spectral semantic information. To further evaluate the effectiveness and generalization capability of the HSSFM’s hierarchical spectral semantic modeling for classification, we conducted comparative experiments on several state-of-the-art deep learning models, including ResNeXt, MobileNetV3-s, SENet, ResNet50, and ResNet101. In the single-level setting, the final feature layer of each model is used directly for classification after pooling or flattening. In the hierarchical setting, multi-scale feature layers are extracted from the model and the HSSFM integrates their hierarchical spectral semantics, generating more discriminative features for classification.

The experimental results (Table 8) demonstrate that the HSSFM effectively enhances classification performance across all network architectures. Compared to methods that rely solely on the final feature layer for classification, the HSSFM integrates multi-scale spectral semantic information, achieving superior performance in terms of accuracy, precision, recall, and F1-score. Notably, in deeper networks such as ResNet50 and ResNet101, the HSSFM improves classification accuracy by 5.1% and 5.0%, respectively. This improvement can be attributed to the more structured hierarchical features in deeper networks, allowing the HSSFM to more effectively capture and integrate multi-scale spectral features, thereby enhancing the model’s discriminative capability.

Overall, this experiment demonstrates the effectiveness and generalization capability of the HSSFM in multi-scale feature fusion. By deeply integrating hierarchical semantic information, the HSSFM enhances the model’s ability to identify diseased regions, further highlighting the significance of hierarchical spectral information in disease classification tasks.

3.9. Influence of Noise on Model Performance

To further evaluate the robustness of the proposed model under noisy conditions, we introduced synthetic noise into the test set images to simulate sensor interference and transmission errors. Two common noise types were considered: Gaussian noise and salt-and-pepper noise. Gaussian noise with a standard deviation of 0.2 was added to both RGB and multispectral channels to simulate random sensor disturbances. In addition, salt-and-pepper noise was introduced at a density of 0.1 to mimic image corruption caused by bit errors or hardware faults.
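The two corruptions can be sketched as follows for a single flattened image band. The sketch assumes pixel values normalized to [0, 1], a Gaussian standard deviation of 0.2 on that scale, clipping back into range after noise is added, and an even split between salt (1.0) and pepper (0.0) pixels; none of these details are stated in the text, and the function names are hypothetical.

```python
import random


def add_gaussian_noise(pixels, sigma=0.2, rng=None):
    """Add zero-mean Gaussian noise to each pixel and clip to [0, 1]."""
    if rng is None:
        rng = random.Random()
    return [min(1.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in pixels]


def add_salt_and_pepper(pixels, density=0.1, rng=None):
    """Replace roughly a `density` fraction of pixels with 0.0 or 1.0."""
    if rng is None:
        rng = random.Random()
    noisy = list(pixels)
    for i in range(len(noisy)):
        if rng.random() < density:
            # Assumed even split between salt (1.0) and pepper (0.0).
            noisy[i] = 1.0 if rng.random() < 0.5 else 0.0
    return noisy


rng = random.Random(0)         # fixed seed so the corruption is repeatable
band = [0.5] * (64 * 64)       # hypothetical flat 64 x 64 band, mid-gray
gauss = add_gaussian_noise(band, rng=rng)
sp = add_salt_and_pepper(band, rng=rng)
corrupted = sum(1 for p in sp if p != 0.5) / len(sp)
print(f"salt-and-pepper fraction: {corrupted:.3f}")
```

Applying the same functions to every RGB and multispectral channel of a test image would yield a noisy copy of the test set along the lines described above.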

After generating the noisy dataset, we evaluated both the baseline ResNet50 and the proposed MSFNet models on the same set. As shown in Table 9, MSFNet outperformed ResNet50 across all categories in terms of classification accuracy, with all metrics exceeding 94%.

Furthermore, when comparing the results to those obtained on the clean test set (see Table 9), we observed that ResNet50 experienced a notable performance drop, with precision and recall decreasing by 2.5% and 3.4%, respectively. In contrast, MSFNet showed much smaller performance degradation under noise, with all metric drops remaining within 1%. These results demonstrate that MSFNet maintains high classification accuracy even in the presence of noise, indicating its strong robustness and practical applicability in real-world scenarios with imperfect imaging conditions.

3.10. Cross-Region Evaluation of Model Generalization

To further evaluate the generalization capability of MSFNet under different geographic conditions, we conducted a cross-region test using images collected from a wheat field located in a different area from that of the training set. The additional dataset was collected from the Experimental Base of the Shaanxi Hybrid Rapeseed Research Center (as shown in Figure 9), which is geographically distinct from the area used for the original MSWDD2024 dataset. Data collection and processing followed the procedure described in Section 2.1.

As shown in Table 10, MSFNet continued to exhibit excellent performance on the new test set collected from different fields. Specifically, the overall accuracy, precision, recall, and F1-score reached 94.7%, 94.6%, 95.0%, and 94.7%, respectively. Among the four classes, the model performed best on the healthy and stripe rust categories, with both exceeding 97% in all evaluation metrics. The remaining categories also maintained metrics above 90%, demonstrating the model’s strong robustness and generalization capability across different geographic conditions.

Compared to the results obtained on the test set from the original field (as shown in Table 5), the performance drop was marginal, with most metrics decreasing by less than 1%. These results suggest that MSFNet maintains a reliable prediction accuracy even when applied to regions beyond the training domain, confirming its potential for practical deployment in varied agricultural environments.

4. Discussion

The strong performance of MSFNet can be attributed to its architecture, which was specifically designed for multi-source fusion and hierarchical spectral feature modeling. Unlike traditional CNN- or Transformer-based approaches that process single-modality inputs, MSFNet adopts a dual-branch structure to jointly leverage RGB and MS-VI data. Within the MSFM, the spatial semantic enhancement branch captures detailed texture and structural patterns from RGB images, while the spectral enhancement branch focuses on the physiological variations captured by MS-VIs. In addition, the proposed HSSFM incorporates a pyramid structure and attention mechanism to integrate spectral semantics across multiple levels. This design enhances the model’s ability to capture fine-grained, scale-aware spectral variations, contributing to more accurate disease localization and classification.

Compared to existing methods, MSFNet exhibits significant advantages in all metrics. RGB-based models are prone to background interference due to the absence of spectral information, whereas MS-VI-based models, despite their spectral sensitivity, lack a sufficient spatial resolution to effectively capture structural details. By combining both modalities, MSFNet strikes an effective balance between spatial detail and spectral sensitivity. In particular, the introduction of the HSSFM enables deeper spectral reasoning across scales, a capability rarely addressed in previous works.

Despite its promising performance, the model has several limitations. First, all samples used in this study were collected during disease stages with visible symptoms, and the dataset lacks early-stage samples in which wheat plants exhibit no apparent signs of infection. This may hinder the model’s effectiveness in early disease detection, which is crucial for timely intervention in real-world agricultural scenarios. Additionally, the current model supports only the diagnosis of stripe rust and yellow dwarf diseases, along with the classification of healthy wheat and background regions. The number of supported disease categories remains narrow.

In future work, we aim to extend the model’s capability to recognize a broader range of wheat diseases and improve its diagnostic accuracy during the early stages of infection. We will also focus on enhancing the model’s robustness and generalization across various disease types, growth stages, and regional environments.

5. Conclusions

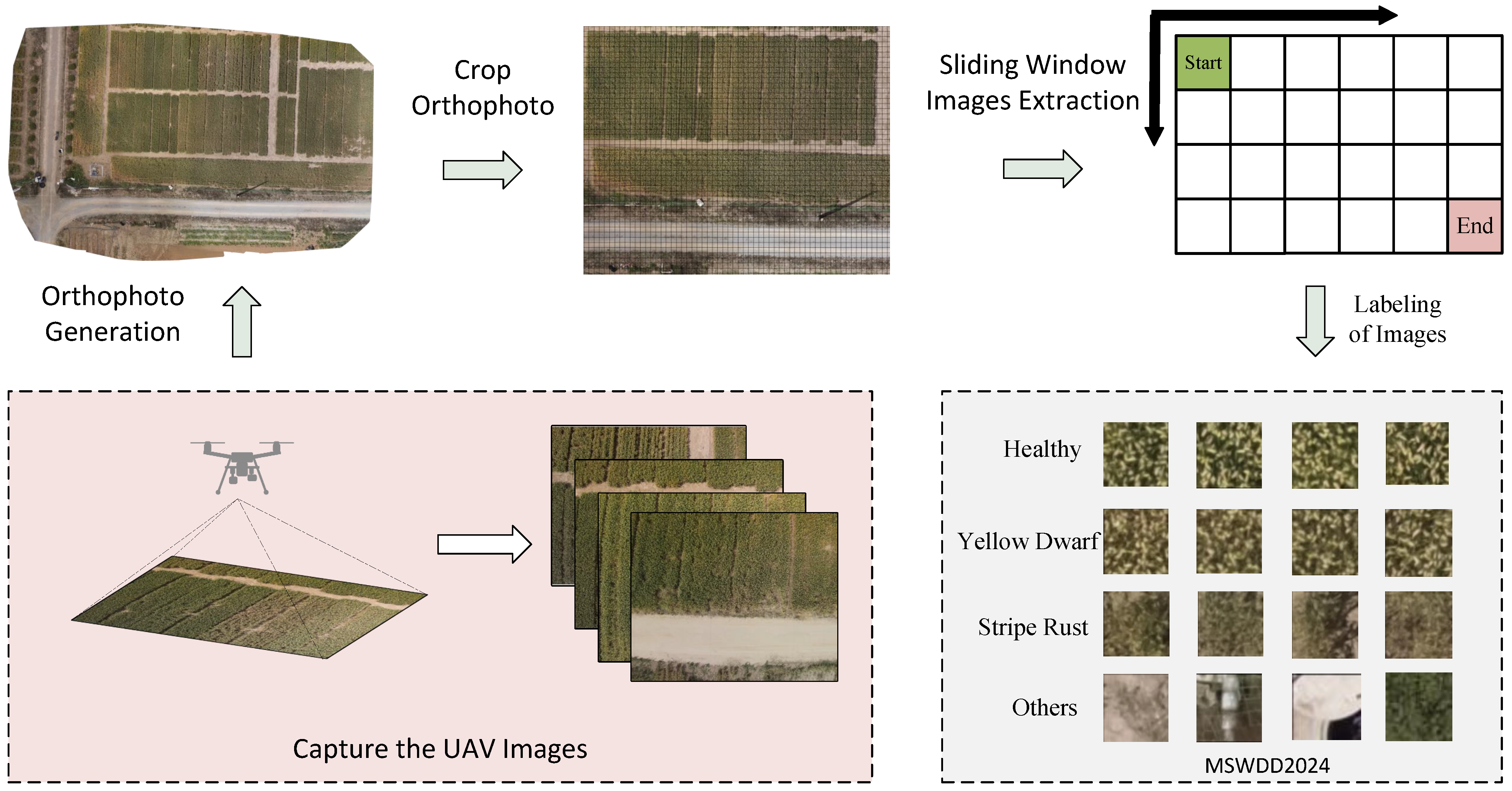

This paper proposes MSFNet, an ultra-efficient model tailored for the precise diagnosis of wheat disease-affected regions. Given the scarcity of publicly available remote sensing datasets for wheat disease regional classification, this study leverages a multispectral UAV platform to acquire both RGB and multispectral images of wheat canopies, constructing a high-quality multispectral wheat disease dataset named MSWDD2024. To effectively integrate multimodal data, a dual-branch multi-source fusion module is designed, incorporating a parallel spatial–spectral semantic enhancement branch to deeply extract spatial texture features from RGB images and spectral response characteristics from MS-VI images, thereby fully utilizing the complementary information of multi-source data. Furthermore, to enhance the model’s capability in perceiving spectral information at different levels, a novel hierarchical spectral semantic fusion module is proposed. By integrating attention mechanisms and a pyramid structure, this module effectively fuses spectral semantics across multiple levels, expanding the receptive field and improving spectral feature extraction. Experimental results on the MSWDD2024 dataset demonstrate that MSFNet achieves state-of-the-art performance, attaining an accuracy of 95.4%, a precision of 95.6%, a recall of 95.6%, and an F1-score of 95.6%. Notably, MSFNet outperforms single-source RGB-based methods by 12.7% in accuracy and 13.0% in precision, surpasses MS-VI-based models by 15.0% in accuracy and 18.3% in precision, and exceeds the ResNet18 baseline by 6.0% in accuracy, highlighting its robust multi-source fusion capabilities. These results strongly validate the effectiveness of MSFNet in wheat disease regional diagnosis, providing technical support for precision agriculture disease monitoring. 
Moreover, to extend the applicability of this approach to disease diagnosis in other crops and to enhance the generalizability of remote sensing-based plant disease detection, future research will focus on improving the model’s generalization ability and mitigating the noise caused by spectral and spatial variations across different plant species.